Cracking a skill-specific interview, like one for Digital Workflow Integration, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Digital Workflow Integration Interview

Q 1. Explain your experience with different integration patterns (e.g., message queues, REST APIs, SOAP).

Integration patterns are the architectural blueprints for connecting different systems. I have extensive experience with various patterns, each suited for different scenarios. Let’s explore three key ones:

- REST APIs (Representational State Transfer): These are lightweight, stateless APIs that use standard HTTP methods (GET, POST, PUT, DELETE) to interact with resources. They’re excellent for web-based integrations and offer good scalability. For instance, I integrated a CRM system with a marketing automation platform using REST APIs to seamlessly transfer customer data. We used JSON for data exchange, ensuring interoperability.

- SOAP (Simple Object Access Protocol): A stricter, contract-driven messaging protocol, SOAP uses XML for data exchange and is often preferred for enterprise-level integrations with formal security and reliability requirements. It’s more complex to implement than REST but comes with standardized extensions such as WS-Security for message-level security and WS-AtomicTransaction for transactions. I used SOAP in a project involving the integration of financial systems where strict data integrity and security were paramount.

- Message Queues (e.g., RabbitMQ, Kafka): These provide asynchronous communication, decoupling systems and improving resilience. Messages are placed in a queue, and systems consume them when ready. This is crucial for handling high volumes of transactions or when systems have different processing speeds. A project I worked on used Kafka to manage a high-throughput stream of sensor data, ensuring that no data was lost even during periods of peak load.

My experience encompasses choosing the optimal pattern based on factors such as security requirements, scalability needs, data volume, and the capabilities of the involved systems. It’s not a one-size-fits-all approach; understanding the strengths and weaknesses of each pattern is vital for successful integration.
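
To make the decoupling idea behind message queues concrete, here is a minimal sketch that uses Python’s standard-library queue as a stand-in for a broker such as RabbitMQ or Kafka. The function name run_pipeline and the doubling step are illustrative assumptions, not taken from any project described above.

```python
import queue
import threading


def run_pipeline(readings):
    """Push readings through an in-process queue and return the consumer's results."""
    buffer = queue.Queue()      # stands in for a real message broker
    sentinel = object()         # unique marker that signals the end of the stream
    processed = []

    def consumer():
        while True:
            item = buffer.get()             # blocks until a message is available
            if item is sentinel:
                break
            processed.append(item * 2)      # placeholder for real processing

    worker = threading.Thread(target=consumer)
    worker.start()

    # The producer only enqueues; it never waits for an item to be processed.
    for reading in readings:
        buffer.put(reading)
    buffer.put(sentinel)

    worker.join()
    return processed
```

With a single consumer the queue preserves order, so run_pipeline([1, 2, 3]) yields the processed items in the order they were sent. A real broker adds persistence and delivery guarantees that this in-memory sketch does not have.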

Q 2. Describe your experience with ETL (Extract, Transform, Load) processes.

ETL (Extract, Transform, Load) is a core process in data integration, responsible for moving data from source systems to a target data warehouse or data lake. My experience covers the entire ETL lifecycle:

- Extract: This phase involves retrieving data from diverse sources – databases, flat files, APIs, etc. I’ve utilized various tools and techniques, including database connectors, scripting languages like Python, and specialized ETL tools.

- Transform: This is often the most complex phase, involving data cleaning, validation, transformation, and enrichment. I employ techniques such as data mapping, data cleansing rules, and data normalization to ensure data quality and consistency. For instance, I once addressed inconsistent data formats in customer addresses using regular expressions and custom transformation logic.

- Load: This involves loading the transformed data into the target system. I’ve worked with various target systems, including relational databases, NoSQL databases, and cloud-based data warehouses. I ensure efficient loading using techniques like bulk loading and optimized database queries.

I’ve successfully implemented ETL processes for projects involving data warehousing, data migration, and business intelligence reporting. My approach emphasizes robust error handling, logging, and monitoring to ensure data integrity and operational efficiency. I also utilize testing and validation throughout the ETL process to minimize errors and ensure data quality.
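
The three phases can be sketched in a few lines of Python using only the standard library. The sample CSV, the customers table, and the cleaning rules below are assumptions chosen for illustration; a production pipeline would add logging and a proper reject store.

```python
import csv
import io
import sqlite3

# Hypothetical source data with the kinds of defects a transform step has to handle.
RAW_CSV = """id,name,email
1, Ada Lovelace ,ADA@EXAMPLE.COM
2,Alan Turing,alan@example.com
3,,missing-name@example.com
"""


def extract(text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim whitespace, lowercase emails, reject rows without a name."""
    clean, rejected = [], []
    for row in rows:
        name = row["name"].strip()
        if not name:
            rejected.append(row)
            continue
        clean.append((int(row["id"]), name, row["email"].strip().lower()))
    return clean, rejected


def load(conn, records):
    """Load: bulk-insert the cleaned records inside one transaction."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, email TEXT)"
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)


def run_etl(text, conn):
    """Run all three phases and report (loaded, rejected) counts."""
    clean, rejected = transform(extract(text))
    load(conn, clean)
    return len(clean), len(rejected)
```

Returning the rejected rows separately, rather than silently dropping them, is what makes later reconciliation and error reporting possible.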

Q 3. How do you approach troubleshooting integration issues?

Troubleshooting integration issues requires a systematic approach. My strategy involves:

- Reproducing the issue: This often involves gathering logs, reviewing error messages, and recreating the scenario to isolate the problem.

- Analyzing logs and error messages: Careful examination of logs from both the source and target systems provides clues about the root cause. I use various log management tools and techniques for this.

- Testing and validating individual components: Isolating the problem to a specific component helps focus the investigation and simplifies debugging. I employ unit testing and integration testing to verify the functionality of each part of the integration process.

- Using monitoring tools: Monitoring tools provide real-time insights into system performance and help identify bottlenecks or anomalies that may indicate integration issues.

- Collaboration and communication: Open communication with involved teams is critical, especially when the issue spans multiple systems or involves external dependencies.

For example, in one project, a seemingly simple API integration was failing. By meticulously analyzing logs, I identified a mismatch in data formats between the two systems. A simple data transformation resolved the issue.

Q 4. What are the key performance indicators (KPIs) you use to measure the success of a workflow integration project?

Measuring the success of a workflow integration project requires a set of key performance indicators (KPIs). These KPIs depend on the project’s goals, but common ones include:

- Data Accuracy: The percentage of correctly integrated data, measured by validation checks and data comparison.

- Integration Success Rate: The percentage of successful integration attempts compared to the total number of attempts.

- Data Latency: The time it takes for data to move from source to target. Lower latency is generally better.

- Throughput: The volume of data integrated per unit of time. Higher throughput indicates better efficiency.

- System Uptime: The percentage of time the integrated system is operational and available. High uptime minimizes disruptions.

- Error Rate: The frequency of errors occurring during integration. Lower error rates indicate better stability.

- Cost of Integration: The overall cost of implementing and maintaining the integration solution.

These KPIs are tracked using monitoring tools and dashboards, providing insights into the performance and effectiveness of the integration solution. Regular monitoring and reporting are essential for identifying areas of improvement and ensuring ongoing success.
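
As a rough illustration, several of these KPIs can be derived from per-run records. The RunRecord fields and the summarize function below are hypothetical names; in practice the numbers would come from whatever the monitoring tool exports.

```python
from dataclasses import dataclass


@dataclass
class RunRecord:
    succeeded: bool          # did this integration attempt complete?
    records: int             # number of records moved in this attempt
    latency_seconds: float   # time from source read to target write


def summarize(runs, window_seconds):
    """Compute success rate, error rate, throughput, and average latency."""
    if not runs:
        raise ValueError("at least one run is required")
    total = len(runs)
    successes = sum(1 for run in runs if run.succeeded)
    moved = sum(run.records for run in runs if run.succeeded)
    return {
        "success_rate": successes / total,
        "error_rate": (total - successes) / total,
        "throughput_per_second": moved / window_seconds,
        "avg_latency_seconds": sum(run.latency_seconds for run in runs) / total,
    }
```

Only records from successful runs count toward throughput here, which is one possible convention; a team might just as reasonably count partial loads.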

Q 5. What experience do you have with different integration platforms (e.g., MuleSoft, Boomi)?

I have significant experience with several leading integration platforms:

- MuleSoft: I’ve extensively used MuleSoft Anypoint Platform for building robust and scalable integration solutions. Its Anypoint Studio provides a user-friendly environment for developing and deploying integrations, and its connectors streamline connectivity to various systems. I’ve leveraged MuleSoft’s features like API management, message transformation, and error handling in multiple projects.

- Boomi: I’m proficient in using Boomi’s (formerly Dell Boomi) low-code platform for rapid integration development. Its visual interface simplifies the design and deployment process. Boomi’s AtomSphere platform offers excellent scalability and cloud-based deployment options. I utilized Boomi to create several integrations between cloud-based applications.

My experience encompasses selecting the appropriate platform based on project requirements, considering factors such as scalability needs, development speed, existing infrastructure, and cost. Each platform has its strengths and weaknesses; choosing the right tool is crucial for project success.

Q 6. How do you ensure data integrity during workflow integration?

Maintaining data integrity during workflow integration is paramount. My approach involves several key strategies:

- Data Validation: Implementing rigorous data validation rules at each stage of the integration process. This includes checks for data types, formats, ranges, and consistency.

- Data Transformation and Cleansing: Applying transformations to standardize data formats, clean up inconsistencies, and handle missing values. This ensures that data entering the target system is accurate and consistent.

- Error Handling and Logging: Implementing comprehensive error handling mechanisms to capture and log errors during the integration process. This facilitates troubleshooting and ensures that data errors are identified and addressed.

- Data Reconciliation: Regularly comparing data in source and target systems to identify and resolve any discrepancies. This ensures data consistency and completeness.

- Version Control and Auditing: Using version control for integration code and maintaining an audit trail of data changes. This enables tracking data modifications and facilitates rollback if necessary.

For instance, in a recent project, I implemented checksum validation to ensure data integrity during file transfers, guaranteeing that data wasn’t corrupted during transmission.
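
A checksum check of this kind might look like the sketch below. It assumes SHA-256 and a sender that publishes the expected digest alongside the file; the original project’s algorithm is not stated, so treat both as assumptions.

```python
import hashlib
import io


def sha256_of(stream, chunk_size=65536):
    """Hash a binary stream in chunks so large files never sit fully in memory."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def verify_transfer(stream, expected_checksum):
    """Return True when the received bytes match the sender's published checksum."""
    return sha256_of(stream) == expected_checksum.lower()
```

For a file on disk, the stream would be opened in binary mode, for example open(path, "rb"). A checksum like this detects accidental corruption; detecting deliberate tampering requires a keyed construction such as an HMAC or a digital signature.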

Q 7. Explain your understanding of API security best practices in the context of workflow integration.

API security is crucial in workflow integration. My approach aligns with industry best practices:

- Authentication and Authorization: Verifying the identity of clients before they reach an API, for example with OAuth 2.0 access tokens, which are often issued as JWTs. Authorization controls then ensure that each authenticated client can access only the resources it is permitted to use.

- Input Validation and Sanitization: Validating and sanitizing all input data received through APIs to prevent injection attacks (e.g., SQL injection, cross-site scripting).

- Data Encryption: Encrypting sensitive data both in transit (e.g., with TLS) and at rest (e.g., with AES), using current, industry-standard protocols and algorithms.

- Rate Limiting: Implementing rate limiting to prevent denial-of-service attacks by limiting the number of requests from a single client within a given time period.

- API Security Testing: Performing regular security testing (e.g., penetration testing, vulnerability scanning) to identify and address potential security vulnerabilities.

- Security Logging and Monitoring: Implementing comprehensive security logging and monitoring to detect and respond to security incidents promptly.

I always prioritize secure coding practices and follow OWASP guidelines to mitigate security risks. In one instance, I helped a client integrate a payment gateway securely by implementing OAuth 2.0 for authentication and HTTPS with TLS encryption for secure communication.
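
Rate limiting is the easiest of these practices to show in a few lines. The following is a minimal in-memory sliding-window sketch; the class name and parameters are assumptions, and an API gateway or a shared store would normally enforce the limit across multiple servers.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within any window_seconds span."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock                  # injectable so tests can control time
        self._hits = defaultdict(deque)     # client id -> timestamps of recent requests

    def allow(self, client_id):
        now = self.clock()
        hits = self._hits[client_id]
        # Discard timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False                    # caller would typically respond with HTTP 429
        hits.append(now)
        return True
```

Each client is tracked independently, so one noisy caller exhausts only its own allowance.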

Q 8. How do you handle conflicts between different systems’ data formats?

Data format conflicts are a common challenge in digital workflow integration. Different systems often use varying formats like XML, JSON, CSV, or proprietary structures. Handling these conflicts requires a multi-pronged approach.

- Data Transformation: This is the most common solution. We use tools like ETL (Extract, Transform, Load) processes or message transformation engines within integration platforms (e.g., MuleSoft’s DataWeave, Azure Logic Apps’ mapping) to convert data from one format to another that is compatible with the target system. For example, transforming an XML feed into a JSON structure expected by a REST API.

- Data Mapping: Creating a clear mapping between fields from the source and target systems helps ensure data integrity during transformation. This often involves specifying how to handle missing values, data type conversions, and potential data inconsistencies. Spreadsheet mapping tools or visual mapping interfaces within integration platforms are commonly used.

- Data Standardization: Where feasible, standardizing data formats across systems proactively eliminates conflict points. Adopting common data models or utilizing industry standards (e.g., HL7 for healthcare) simplifies integration and reduces maintenance overhead. This strategy requires collaboration across different teams and systems.

- Error Handling and Exception Management: Robust error handling is crucial. The integration process needs to gracefully handle situations where data transformation fails, for instance, due to invalid data or format issues. This might involve logging errors, retrying failed operations, or routing the malformed data to a separate error queue for manual review and correction.

For instance, I once worked on a project integrating a legacy system using a proprietary flat file format with a modern cloud-based CRM. We used a custom ETL script to parse the flat file, transform it into JSON, and then upload it to the CRM’s API.
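
The XML-to-JSON case mentioned above can be sketched with a small field map that covers renaming, type conversion, and missing values in one place. The tag names, target keys, and FIELD_MAP structure are invented for illustration.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical mapping: source XML tag -> (target JSON key, type converter)
FIELD_MAP = {
    "CustId": ("customerId", int),
    "FullName": ("name", str),
    "Total": ("orderTotal", float),
}


def xml_to_json(xml_text):
    """Convert one XML record into the JSON shape a target API might expect."""
    root = ET.fromstring(xml_text)
    payload = {}
    for source_tag, (target_key, convert) in FIELD_MAP.items():
        node = root.find(source_tag)
        if node is None or node.text is None:
            payload[target_key] = None      # explicit null for missing fields
        else:
            payload[target_key] = convert(node.text.strip())
    return json.dumps(payload, sort_keys=True)
```

A value that cannot be converted (for example, text in a numeric field) raises ValueError, which is the point where a real pipeline would route the record to an error queue.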

Q 9. What strategies do you use for version control in workflow integration projects?

Version control is paramount for managing complexity and ensuring traceability in workflow integration projects. We typically keep code, configuration files, and data transformation scripts in Git, hosted on a platform like GitLab or GitHub. This allows for collaborative development, rollback capabilities, and effective branching strategies.

- Branching Strategies: We utilize feature branches to develop and test new integrations independently. This prevents conflicts and allows for parallel development. Once tested thoroughly, features are merged into the main branch.

- Continuous Integration/Continuous Deployment (CI/CD): Automating the build, testing, and deployment process through CI/CD pipelines ensures rapid iteration and early detection of issues. This includes automated testing of data transformations and integration workflows.

- Configuration Management: We use configuration management tools to store and version-control integration configurations (e.g., API endpoints, data mappings), while credentials are kept in a secrets manager and only referenced from configuration. This separates configuration data from code, enhancing maintainability and security.

- Documentation: Clear and up-to-date documentation is essential. We use tools like Confluence or Notion to maintain detailed documentation about the integration process, data flows, and versions.

Imagine needing to revert to a previous working version of an integration after a deployment error. Our version control system enables a quick and safe rollback, minimizing downtime and preventing data loss.

Q 10. Describe your experience with monitoring and logging in integration environments.

Effective monitoring and logging are crucial for maintaining the health and stability of integration environments. We utilize a combination of approaches:

- Centralized Logging: We leverage centralized logging platforms (e.g., Elasticsearch, Splunk, Graylog) to aggregate logs from different systems and components involved in the integration. This enables comprehensive monitoring and facilitates troubleshooting.

- Real-time Monitoring Dashboards: Dashboards provide real-time visibility into key integration metrics like message throughput, error rates, and latency. This allows for proactive identification of performance bottlenecks or errors.

- Alerting Systems: We set up alerting systems (e.g., PagerDuty, Opsgenie) to trigger notifications based on predefined thresholds (e.g., high error rates, long processing times). This ensures timely response to critical issues.

- Application Performance Monitoring (APM): APM tools (e.g., Datadog, New Relic) provide insights into the performance of individual integration components, allowing granular analysis of bottlenecks and optimization opportunities.

In a recent project, our monitoring system alerted us to a spike in error rates in our payment gateway integration. Through the detailed logs, we quickly identified a problem with a newly deployed data transformation script that was failing to handle invalid data formats. We immediately rolled back the change and deployed a corrected version, preventing any major service disruption.

Q 11. How do you balance the need for speed of integration with the need for robustness and security?

Balancing speed, robustness, and security is a critical aspect of integration design. A purely speed-focused approach can compromise security and long-term maintainability, while an overly cautious approach might hinder agility. We address this through a phased approach.

- Prioritization: We identify critical integration aspects and prioritize them based on business needs. Critical paths might require more rigorous testing and security checks, while less critical integrations can be implemented more quickly.

- Modular Design: Building modular integrations allows for independent development, testing, and deployment. This means changes in one part of the integration do not necessarily require a complete re-deployment.

- Automated Testing: Thorough automated testing is critical for speed and robustness. Unit testing, integration testing, and performance testing help to identify and address issues early.

- Security Best Practices: We incorporate security best practices throughout the integration lifecycle, including secure coding, data encryption, access control, and regular security audits. This includes using secure protocols like HTTPS for all communication and implementing robust authentication and authorization mechanisms.

For instance, in one project, we prioritized a quick integration of a marketing automation platform for a new campaign. To keep that speed from introducing vulnerabilities, we still enforced strict access controls on customer data and added a data validation layer before launch.

Q 12. What methodologies do you use for designing and implementing workflow integrations?

We employ a combination of methodologies for designing and implementing workflow integrations, adapting our approach based on project complexity and requirements. Commonly used approaches include:

- Agile Methodologies: We use agile methodologies like Scrum or Kanban to iterate quickly, respond to changing requirements, and deliver value incrementally. This is especially useful for complex integration projects where requirements might evolve during development.

- Enterprise Integration Patterns (EIP): EIP provides a catalog of reusable design patterns for solving common integration problems. This leads to more robust, maintainable, and predictable integrations. For example, using the Message Router pattern to route messages to different systems based on content.

- API-led Connectivity: Using APIs as the primary means of communication between systems promotes reusability and modularity. APIs allow systems to communicate with each other without tight coupling.

- Microservices Architecture: Breaking down complex integrations into smaller, independent microservices enhances scalability, maintainability, and resilience. Changes in one microservice do not necessarily impact others.

In one recent project, we used an API-led approach to integrate several legacy systems with a new cloud-based platform. This allowed us to reuse APIs across multiple integrations and made the overall system more flexible and maintainable.
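
The Message Router pattern mentioned above reduces to a list of predicate and handler pairs. This is a minimal sketch; route_message and the message fields are hypothetical, and integration platforms provide the same idea as a built-in component.

```python
def route_message(message, routes, default):
    """Deliver a message to the first handler whose predicate matches its content.

    routes is a list of (predicate, handler) pairs checked in order;
    default receives anything that no route claims.
    """
    for predicate, handler in routes:
        if predicate(message):
            return handler(message)
    return default(message)


def by_type(expected):
    """Build a predicate that matches messages on their 'type' field."""
    return lambda message: message.get("type") == expected
```

In use, the handlers would forward to real channels; plain lists are enough to see the behavior, for example routes = [(by_type("invoice"), billing.append), (by_type("shipment"), shipping.append)] with an unmatched-messages list as the default.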

Q 13. How do you ensure scalability and maintainability of your integration solutions?

Ensuring scalability and maintainability is crucial for long-term success. We achieve this through:

- Scalable Architecture: Designing integrations that can handle increased load and data volume is important. Cloud-based solutions make it easier to add capacity on demand, but even on-premise solutions benefit from architecture designs that anticipate growth. This might include techniques like message queues, load balancing, and horizontal scaling.

- Loose Coupling: Minimizing dependencies between different components promotes independence and reduces the impact of changes. This means components can be updated or replaced without affecting other parts of the integration.

- Modular Design: Breaking down integrations into smaller, self-contained modules enhances maintainability. Changes or updates to a module don’t affect the entire system.

- Automated Testing: Comprehensive automated testing, including regression testing, ensures that changes do not introduce new bugs and maintains the stability of the integration.

- Comprehensive Documentation: Clear, well-maintained documentation is essential for understanding and maintaining integrations over time. This includes API specifications, data flow diagrams, and detailed explanations of the integration logic.

For instance, by using a message queue in one project, we were able to handle peak loads without performance degradation, ensuring reliable data processing even during high traffic periods.

Q 14. What experience do you have with cloud-based integration platforms?

I have extensive experience with various cloud-based integration platforms, including:

- MuleSoft Anypoint Platform: I have used MuleSoft for building and deploying enterprise-grade integrations, leveraging its robust features for data transformation, API management, and monitoring. Its visual development environment makes it relatively user-friendly.

- Azure Logic Apps: I’ve utilized Azure Logic Apps for building serverless integrations within the Microsoft Azure ecosystem. Its ease of use and integration with other Azure services make it suitable for simpler integrations.

- AWS Integration Services (e.g., Amazon MSK, SQS, API Gateway): I have experience using Amazon’s suite of integration services for building and managing scalable and resilient integrations within the AWS cloud environment.

- Boomi: I’ve worked with Boomi’s iPaaS (Integration Platform as a Service) solution for integrating cloud and on-premise applications. Its visual interface and connectors simplify the integration process.

Cloud platforms offer advantages like scalability, elasticity, and reduced infrastructure management overhead. The choice of platform often depends on specific project requirements and existing infrastructure. For example, for a project deeply integrated with other Microsoft services, Azure Logic Apps was a natural choice.

Q 15. Describe your experience with testing and validation of workflow integrations.

Testing and validating workflow integrations is crucial for ensuring seamless data flow and operational efficiency. My approach involves a multi-layered strategy encompassing unit, integration, and system testing.

- Unit Testing: I focus on individual components of the workflow, verifying each module’s functionality in isolation. For example, I’d test a specific API call within an integration to confirm it correctly parses data and returns the expected response.

- Integration Testing: This stage involves testing the interaction between different components of the workflow. I use mock data and services to simulate real-world scenarios, identifying issues in data transformation or communication protocols between systems. For instance, I’d test the handoff between a CRM system and an ERP system to verify the accurate transfer of customer order data.

- System Testing: This is the end-to-end testing of the entire workflow, simulating real-world usage scenarios. This validates the entire process from start to finish, including data integrity and performance. A real-world example would be running a full order processing cycle from order placement to shipment confirmation to check for bottlenecks or errors.

I also employ various testing methodologies, including Test-Driven Development (TDD) where tests are written before the code, and Behavior-Driven Development (BDD) using tools like Cucumber for collaborative specification and automated testing. This proactive approach ensures robust and reliable integrations.
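
A unit test of a single API call, isolated from the network, might look like this sketch. The function fetch_order_total, the /orders/ path, and the response shape are assumptions made up for the example; unittest.mock is part of the Python standard library.

```python
from unittest.mock import Mock


def fetch_order_total(http_get, order_id):
    """Call an order endpoint through an injected http_get and total its lines."""
    response = http_get(f"/orders/{order_id}")
    if response["status"] != 200:
        raise LookupError(f"order {order_id} not available")
    lines = response["body"]["lines"]
    return round(sum(line["qty"] * line["price"] for line in lines), 2)


def test_fetch_order_total_sums_lines():
    # The mock replaces the real HTTP client, so the test runs without a network.
    fake_get = Mock(return_value={
        "status": 200,
        "body": {"lines": [{"qty": 2, "price": 9.99}, {"qty": 1, "price": 5.00}]},
    })
    assert fetch_order_total(fake_get, 7) == 24.98
    fake_get.assert_called_once_with("/orders/7")


def test_fetch_order_total_rejects_missing_order():
    fake_get = Mock(return_value={"status": 404, "body": {}})
    try:
        fetch_order_total(fake_get, 7)
    except LookupError:
        return
    raise AssertionError("expected LookupError for a 404 response")
```

Passing the HTTP client in as a parameter is the design choice that makes the component testable in isolation; the same function runs against the real client in integration tests.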

Q 16. How do you communicate technical concepts to non-technical stakeholders?

Communicating technical details to non-technical stakeholders requires clear, concise language and a focus on the business impact. I avoid jargon and technical terms whenever possible. Instead, I use analogies and real-world examples to illustrate complex concepts.

For instance, when explaining API integrations, I might compare the process to ordering food online: you (system A) place an order (the request), the restaurant (system B) prepares it (processes the data), and the meal is delivered back to you (the response). This simple analogy makes the process understandable without getting into the details of HTTP requests or JSON payloads.

Visual aids like flowcharts and diagrams are invaluable tools. They visually depict the workflow and data flow, eliminating any ambiguity. I also focus on the business value and the positive impact the integration will have on the business outcomes – increased efficiency, reduced costs, better customer service, etc.

Q 17. How do you handle unexpected errors or exceptions during workflow execution?

Handling unexpected errors and exceptions requires a robust error handling strategy. This involves implementing comprehensive logging, exception handling mechanisms, and monitoring tools.

- Logging: Detailed logging helps pinpoint the source of errors, providing crucial information for debugging and troubleshooting. I ensure that logs include timestamps, error messages, and relevant context such as data values.

- Exception Handling: I use try-catch blocks and other exception-handling mechanisms in the code to gracefully handle errors without crashing the entire workflow. This often involves redirecting the failed task to a separate error queue or sending email alerts to relevant personnel.

- Monitoring: Real-time monitoring of the workflow using tools that provide dashboards and alerts is crucial. This allows me to quickly identify and respond to exceptions as they occur. Dashboards often display key performance indicators (KPIs) like success rates and processing times.

A retry mechanism with exponential backoff is a common approach for handling transient errors, allowing the system to recover from temporary network issues or service disruptions. For persistent errors, a manual intervention process might be necessary, which usually involves escalation procedures.
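
A retry with exponential backoff can be sketched as follows. TransientError is a hypothetical exception standing in for timeouts or temporary outages, and the default delays are arbitrary; the jitter is added so that many clients do not retry in lockstep.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, brief outages)."""


def call_with_retry(operation, max_attempts=5, base_delay=0.5,
                    max_delay=30.0, sleep=time.sleep):
    """Run operation, retrying transient failures with exponentially growing waits."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise                       # out of attempts: escalate to the caller
            # Delay doubles each attempt: base, 2*base, 4*base, ... capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Random jitter of up to half the delay spreads out concurrent retries.
            sleep(delay + random.uniform(0, delay / 2))
```

Any other exception type propagates immediately, which matches the distinction above: persistent errors are not retried but handed to the escalation path.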

Q 18. What are your preferred tools for designing and documenting workflows?

My preferred tools for designing and documenting workflows depend on the complexity and context of the project. For simple workflows, I often use tools like draw.io or Lucidchart to create visual diagrams.

For more complex scenarios, especially those involving multiple systems and intricate logic, I leverage Business Process Model and Notation (BPMN) tools such as Camunda Modeler or Signavio. BPMN allows for precise modeling of processes, including gateways, events, and tasks, facilitating clear communication and collaborative design.

Regarding documentation, I utilize a combination of tools and approaches. This includes generating documentation automatically from the code (using tools like Swagger for APIs) and creating comprehensive user manuals and technical specifications for the workflow. The goal is to have a readily accessible and understandable documentation set that supports both technical and non-technical audiences.

Q 19. What is your experience with different database technologies and their integration?

I have extensive experience integrating various database technologies, including relational databases like MySQL, PostgreSQL, and SQL Server, as well as NoSQL databases such as MongoDB and Cassandra. The choice of database technology depends heavily on the specific needs of the workflow.

For structured data with relationships between entities, relational databases are usually the best fit. On the other hand, NoSQL databases excel at handling unstructured or semi-structured data, large volumes of data, and high-velocity data streams. I’m proficient in using various techniques for data transformation and mapping to ensure seamless integration between different database systems, frequently using ETL (Extract, Transform, Load) processes.

For example, I’ve worked on projects integrating a CRM system’s relational database (SQL Server) with a real-time data analytics system using a NoSQL database (Cassandra) for high-speed data ingestion and processing. This involved designing and implementing robust ETL pipelines to ensure data consistency and accuracy across the two systems.

Q 20. How do you manage the risks associated with integrating legacy systems?

Integrating legacy systems presents several risks, including data incompatibility, security vulnerabilities, and performance bottlenecks. Managing these risks involves a phased approach focused on thorough assessment, careful planning, and meticulous execution.

- Assessment: A comprehensive assessment of the legacy system’s architecture, data structure, and security protocols is paramount. This helps identify potential challenges and vulnerabilities early on.

- Data Migration: Data migration from a legacy system often involves data cleansing, transformation, and validation to ensure data integrity and compatibility with the new systems. A phased migration approach minimizes disruption.

- API Abstraction: Creating an API layer on top of the legacy system can act as an intermediary, abstracting the complexities of the legacy system from the new integrations. This helps maintain stability while modernizing the integration approach.

- Security Considerations: Addressing security concerns is critical. This includes reviewing access controls, implementing authentication and authorization protocols, and ensuring data encryption both in transit and at rest.

A well-defined communication plan among stakeholders is also essential to manage expectations and ensure smooth collaboration throughout the integration process. The focus should be on minimizing disruption to ongoing operations and maximizing efficiency.
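
The API abstraction idea can be illustrated with a thin adapter that hides a legacy record format behind a clean interface. The fixed-width layout, status codes, and class name below are entirely hypothetical.

```python
class LegacyCustomerAdapter:
    """Expose a legacy fixed-width customer record as a plain dictionary."""

    # Hypothetical layout: id in columns 0-5, name in 6-25, status flag in column 26.
    LAYOUT = (("id", 0, 6), ("name", 6, 26), ("status", 26, 27))
    STATUS = {"A": "active", "I": "inactive"}

    def __init__(self, fetch_record):
        # fetch_record is any callable mapping a customer id to the raw record string.
        self._fetch_record = fetch_record

    def get_customer(self, customer_id):
        raw = self._fetch_record(customer_id)
        fields = {name: raw[start:end].strip() for name, start, end in self.LAYOUT}
        return {
            "id": int(fields["id"]),
            "name": fields["name"],
            "status": self.STATUS.get(fields["status"], "unknown"),
        }
```

New integrations depend only on get_customer, so the legacy format can later be replaced without changing any consumer.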

Q 21. What is your understanding of microservices architecture and its impact on workflow integration?

Microservices architecture significantly impacts workflow integration by breaking down monolithic applications into smaller, independent services. Each service focuses on a specific business function and can be developed, deployed, and scaled independently.

This approach leads to greater agility, improved resilience, and easier maintenance compared to traditional monolithic architectures. However, it also introduces complexities in terms of managing inter-service communication and data consistency across services.

In the context of workflow integration, microservices provide flexibility. Each microservice can be integrated with other services or external systems using lightweight protocols such as REST or message queues. However, managing distributed transactions and ensuring data consistency across multiple microservices requires careful consideration and the use of appropriate design patterns and technologies, such as sagas or event sourcing.

For example, an order fulfillment process might be split into multiple microservices: one for order placement, one for inventory management, one for payment processing, and one for shipping. Each service could be developed and deployed independently, but careful coordination is needed to ensure the entire order fulfillment process flows smoothly.
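
One way to coordinate such a flow without a distributed transaction is the saga pattern: each step has a compensating action that undoes it if a later step fails. The sketch below is a simplified, in-process orchestrator with hypothetical step names; a real saga would also persist its progress so it can resume after a crash.

```python
def run_saga(steps):
    """Run (name, action, compensation) steps in order.

    If a step fails, run the compensations of all completed steps in reverse
    order. Returns (succeeded, log), where log records what happened.
    """
    completed = []
    log = []
    for name, action, compensate in steps:
        try:
            action()
        except Exception:
            log.append(f"failed:{name}")
            # Undo the work already done, most recent step first.
            for done_name, undo in reversed(completed):
                undo()
                log.append(f"undone:{done_name}")
            return False, log
        log.append(f"done:{name}")
        completed.append((name, compensate))
    return True, log
```

If payment fails after the order was placed and inventory reserved, the reservation is released first and the order cancelled second, leaving each service consistent without a shared lock.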

Q 22. Explain your experience with implementing security protocols (e.g., OAuth, SAML) in workflow integrations.

Implementing robust security protocols is paramount in workflow integrations, ensuring data privacy and integrity. My experience encompasses OAuth 2.0 and SAML 2.0, two widely adopted standards: OAuth 2.0 for delegated authorization and SAML 2.0 for federated authentication.

OAuth 2.0 is ideal for granting scoped access to specific resources without sharing credentials, for example when a user allows a third-party application to read their calendar. I’ve used it extensively in projects integrating CRM systems with marketing automation platforms to ensure only authorized users and applications can access and modify customer data.

SAML, on the other hand, is better suited for single sign-on (SSO) across multiple applications within an organization. This drastically improves the user experience by eliminating the need to log into multiple systems separately. In one project, we utilized SAML to integrate our internal project management tool with our HR system, allowing employees to seamlessly access both platforms with a single set of credentials.

In both cases, careful consideration is given to token management, encryption, and access control lists to ensure only authorized parties have access to sensitive information. We frequently employ JSON Web Tokens (JWTs) because they are compact and signed, so a receiving service can verify them without a round trip to the issuer. My approach includes thorough functional testing and penetration testing to proactively identify and mitigate potential vulnerabilities.
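To make the token management point concrete, here is a minimal sketch of how an HS256-signed JWT can be issued and verified using only the Python standard library. It is illustrative rather than production code: a real integration would normally rely on a maintained JWT library and the identity provider's keys. The function names `sign_token` and `verify_token`, the claim values, and the shared secret are all assumptions.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    """Decode base64url text, restoring the stripped padding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_token(claims: dict, secret: bytes) -> str:
    """Build header.payload.signature using HMAC-SHA256 (HS256)."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_token(token: str, secret: bytes, now: float = None) -> dict:
    """Return the claims if signature and expiry are valid, else raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if claims.get("exp", float("inf")) <= current:
        raise ValueError("token expired")
    return claims
```

For example, `verify_token(sign_token({"sub": "user-1", "scope": "crm:read", "exp": 2000}, b"demo-secret"), b"demo-secret", now=1000)` returns the original claims, while the same call with `now=3000` raises `ValueError`. Checking the signature before trusting any field in the payload is the design choice that matters here.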

Q 23. How do you choose the appropriate integration technology for a given project?

Selecting the right integration technology depends heavily on several factors: the systems involved, the volume of data exchanged, the desired level of real-time interaction, security requirements, and budget constraints.

For simple integrations between two systems with relatively low data volume, a lightweight approach like REST APIs with JSON might suffice. This is easily implemented and understood. However, for complex integrations involving multiple systems, real-time data streaming, or high-volume transactions, a more robust Enterprise Service Bus (ESB) or message queueing system (e.g., Kafka, RabbitMQ) might be more appropriate. ESBs provide centralized management, monitoring, and transformation capabilities. Message queues are excellent for asynchronous communication, preventing one system from blocking another. In one project, we integrated a legacy system with a modern cloud-based platform using an ESB to handle the complexity and ensure reliable communication.

The decision process always involves evaluating the pros and cons of each technology in relation to the project’s specific requirements and constraints. I consider factors like scalability, maintainability, and ease of future expansion. Technical feasibility and the availability of skilled resources are also critical factors in my decision-making process.
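The point about message queues preventing one system from blocking another can be illustrated with a small sketch. It uses Python's in-process `queue.Queue` purely as a stand-in for a broker such as RabbitMQ or Kafka; the function name `run_pipeline`, the message shape, and the `"synced"` status are assumptions made for the example.

```python
import queue
import threading
from typing import List


def run_pipeline(orders: List[dict]) -> List[dict]:
    """Publish orders to a queue while a separate worker consumes them."""
    broker: "queue.Queue" = queue.Queue()  # stand-in for a real message broker
    processed: List[dict] = []

    def consumer() -> None:
        # The consumer drains messages at its own pace.
        while True:
            message = broker.get()
            if message is None:  # sentinel: the producer has finished
                break
            processed.append({"order_id": message["order_id"], "status": "synced"})

    worker = threading.Thread(target=consumer)
    worker.start()

    # The producer only enqueues; it never waits for a message to be processed.
    for order in orders:
        broker.put(order)
    broker.put(None)

    worker.join()
    return processed
```

With a single consumer the messages are handled in the order they were published, so `run_pipeline([{"order_id": 1}, {"order_id": 2}])` yields both orders marked as synced. A real broker adds what this sketch lacks: durability, acknowledgements, and delivery across process and machine boundaries.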

Q 24. Describe your experience with Agile development methodologies in the context of workflow integration.

Agile methodologies have become integral to my workflow integration projects. The iterative nature of Agile allows for greater flexibility and adaptability to changing requirements. Using Scrum or Kanban, we break down large integration projects into smaller, manageable sprints. Each sprint focuses on delivering a working increment of the integration. This allows us to receive early and frequent feedback from stakeholders, ensuring the integration aligns with their evolving needs. For instance, in a recent project integrating an e-commerce platform with a warehouse management system, we used two-week sprints. Each sprint delivered a specific functionality, such as order processing or inventory updates, enabling continuous testing and validation. Daily stand-up meetings, sprint reviews, and retrospectives ensure transparency, collaboration, and continuous improvement throughout the project lifecycle. The Agile approach enables efficient risk management and quick adaptation to unforeseen challenges, leading to a more successful and cost-effective integration process.

Q 25. What are your preferred methods for documenting and sharing knowledge related to workflow integrations?

Thorough documentation is crucial for the success and maintainability of workflow integrations. My preferred methods combine various techniques for clarity and accessibility. I begin with a high-level architecture diagram that provides a visual overview of the entire integration landscape. This is supplemented by detailed API specifications written in the OpenAPI format (formerly known as Swagger), which clearly define the endpoints, request/response formats, and authentication mechanisms. I also use sequence diagrams and flowcharts to illustrate the interaction between different systems.

For each integration component, I maintain detailed documentation outlining its functionality, configuration parameters, and error handling procedures. This documentation is stored in a centralized repository (e.g., Confluence or SharePoint), readily accessible to all stakeholders.

Knowledge sharing is also critical. Regular team meetings and workshops ensure that everyone understands the integration’s design and functionality. We also utilize internal wikis and knowledge bases to capture best practices and lessons learned.

Q 26. How do you handle changing requirements during a workflow integration project?

Handling changing requirements is a core aspect of successful workflow integration projects. Agile methodologies provide a robust framework for managing these changes effectively. We actively solicit feedback from stakeholders throughout the project lifecycle. This ensures we’re always building the right product. When a change request arises, we assess its impact on the project scope, timeline, and budget. We use a change control process to formally evaluate and prioritize these requests. This typically involves documenting the change, assessing its feasibility, and estimating the necessary resources and time. Prioritized changes are then integrated into the project’s backlog and addressed in subsequent sprints. Transparency is vital; stakeholders are kept informed of the impact of the change and any adjustments to the project plan. We prioritize changes based on their business value and urgency. Effective communication and collaboration are crucial in minimizing disruption and ensuring that changes are implemented smoothly and efficiently. Tools like Jira or similar project management software are essential for tracking and managing these changes.

Q 27. Describe a challenging integration project you worked on and how you overcame the challenges.

One particularly challenging project involved integrating a legacy mainframe system with a modern cloud-based CRM. The legacy system used a proprietary communication protocol and lacked comprehensive documentation, and few developers with experience on it were available. The initial approach of a direct integration proved highly complex and risky.

To overcome these challenges, we employed a phased approach. Initially, we developed an intermediary layer using an ESB to act as a translator between the legacy system and the CRM. This allowed us to decouple the systems and reduce the risk of impacting the legacy system. We focused on extracting only the essential data needed for the CRM, minimizing the complexity of the integration. We used a combination of custom scripts and pre-built connectors to handle data transformation and mapping. We also created comprehensive test cases and implemented rigorous testing procedures at each phase to identify and resolve any issues early on.

Through meticulous planning, incremental development, and effective communication, we successfully completed the integration, which improved data visibility and increased operational efficiency. The project demonstrated the importance of a well-defined strategy and adaptability when faced with legacy systems and limited resources.
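The translation step handled by the intermediary layer can be sketched as a small mapping function. Everything specific below is hypothetical: the project's proprietary protocol is not described, so the fixed-width layout in `LEGACY_LAYOUT`, the field names, and the CRM payload shape are assumptions chosen only to show the technique of extracting essential fields and normalizing them.

```python
from datetime import datetime
from typing import Dict

# Assumed fixed-width layout: (field name, start offset, end offset).
LEGACY_LAYOUT = [
    ("customer_id", 0, 8),
    ("name", 8, 28),
    ("signup", 28, 36),
    ("balance_cents", 36, 46),
]


def parse_legacy_record(line: str) -> Dict[str, str]:
    """Slice a fixed-width legacy record into raw string fields."""
    return {name: line[start:end].strip() for name, start, end in LEGACY_LAYOUT}


def to_crm_payload(raw: Dict[str, str]) -> dict:
    """Map raw legacy fields onto the (assumed) CRM schema with clean types."""
    return {
        "id": raw["customer_id"].lstrip("0") or "0",
        "fullName": raw["name"].title(),
        "signupDate": datetime.strptime(raw["signup"], "%Y%m%d").date().isoformat(),
        "balance": int(raw["balance_cents"]) / 100,
    }


# Build a sample record that matches the assumed layout exactly.
SAMPLE_RECORD = "00001234" + "JANE DOE".ljust(20) + "20190315" + "0000012550"
```

Here `to_crm_payload(parse_legacy_record(SAMPLE_RECORD))` produces a dictionary with the ID `"1234"`, the name `"Jane Doe"`, an ISO date, and the balance as a decimal amount. Keeping parsing and mapping as separate functions makes each one easy to cover with the kind of phase-by-phase test cases described above.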

Q 28. How do you stay current with the latest trends and technologies in digital workflow integration?

Staying current in the rapidly evolving field of digital workflow integration requires a multifaceted approach. I actively participate in online communities and forums, engaging in discussions with peers and experts. Attending industry conferences and webinars provides exposure to the latest technologies and best practices. Following key influencers and thought leaders on platforms like LinkedIn and Twitter keeps me abreast of emerging trends. I regularly review technical publications, blogs, and research papers, subscribe to relevant newsletters, and take online courses and training programs. I also dedicate time to experimenting with new tools and technologies, often working on personal projects to solidify my understanding. This continuous learning not only enhances my technical skills but also expands my understanding of the broader business context, ultimately leading to more effective and valuable integration solutions.

Key Topics to Learn for Digital Workflow Integration Interview

- Workflow Automation Tools & Platforms: Understanding the capabilities and limitations of various platforms (e.g., RPA tools, BPM suites) is crucial. Consider their strengths in different integration scenarios.

- API Integration & Data Exchange: Mastering concepts like REST APIs, SOAP APIs, and different data formats (JSON, XML) is essential for seamless data flow between systems. Practice designing and implementing integrations.

- Data Mapping & Transformation: Learn how to effectively map data fields between disparate systems and utilize transformation techniques to ensure data consistency and accuracy. Explore ETL processes.

- Security & Compliance: Understanding security protocols (OAuth, SAML) and compliance regulations (GDPR, HIPAA) relevant to data exchange within integrated workflows is vital. Practice designing secure integration solutions.

- Integration Patterns & Architectures: Familiarize yourself with common integration patterns (e.g., message queues, event-driven architectures) and architectural styles (microservices, monolithic) to choose the right approach for different scenarios. Understand the trade-offs involved.

- Troubleshooting & Debugging: Develop strong troubleshooting skills to identify and resolve integration issues. Learn to use logging and monitoring tools effectively.

- Cloud-Based Integration: Gain experience with cloud integration platforms (e.g., AWS, Azure, GCP) and their services for building scalable and reliable integrations.

- Process Optimization & Efficiency: Understand how digital workflow integration improves efficiency, reduces manual effort, and streamlines business processes. Be prepared to discuss real-world examples.

Next Steps

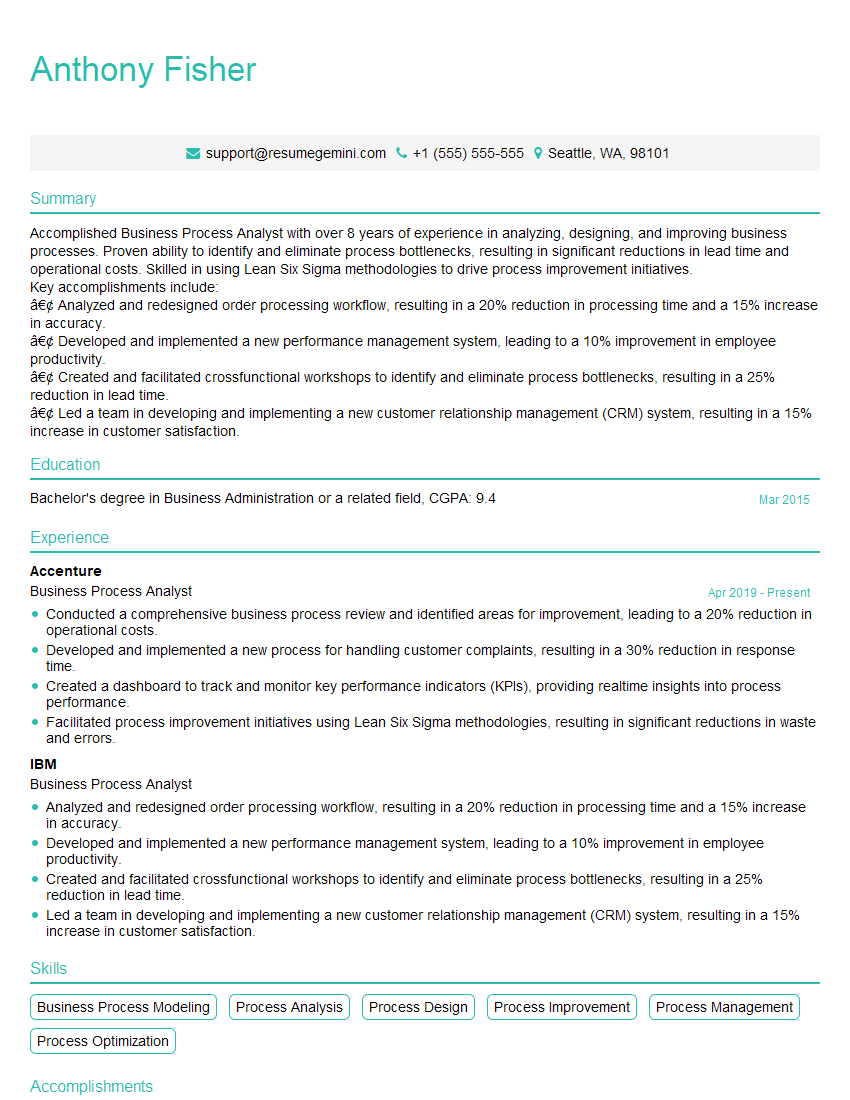

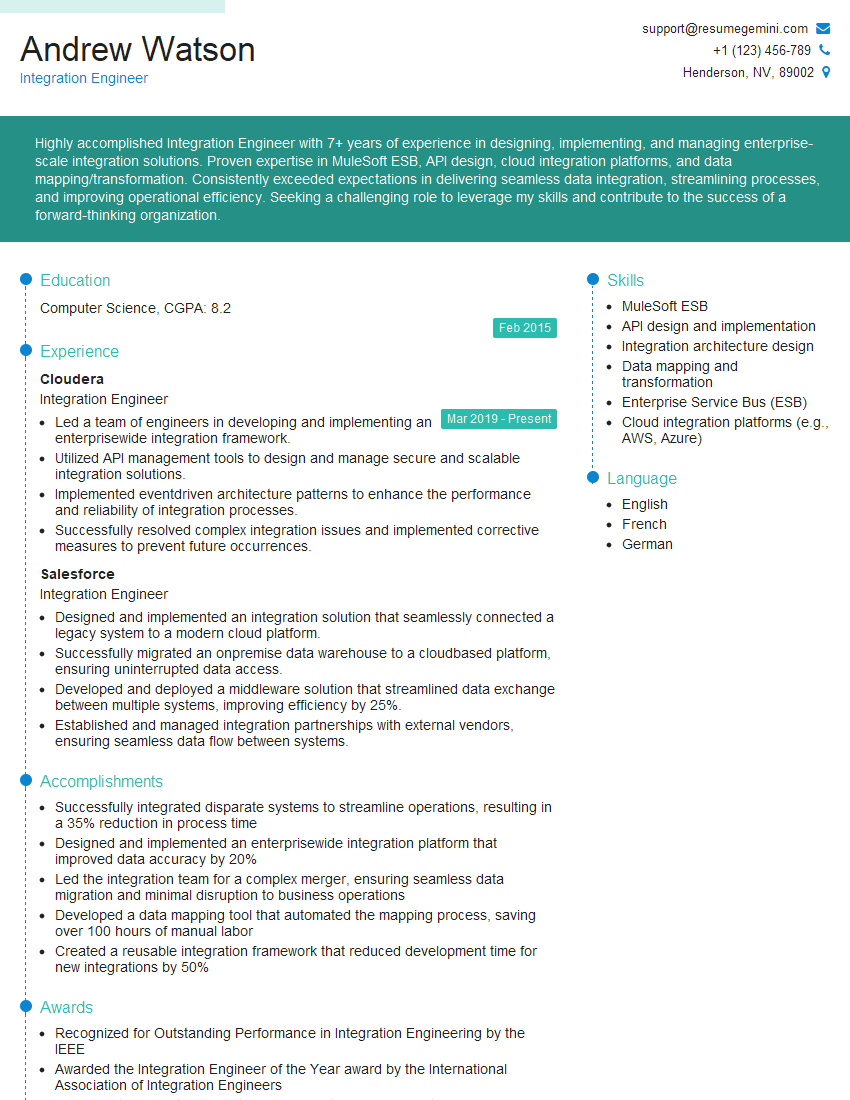

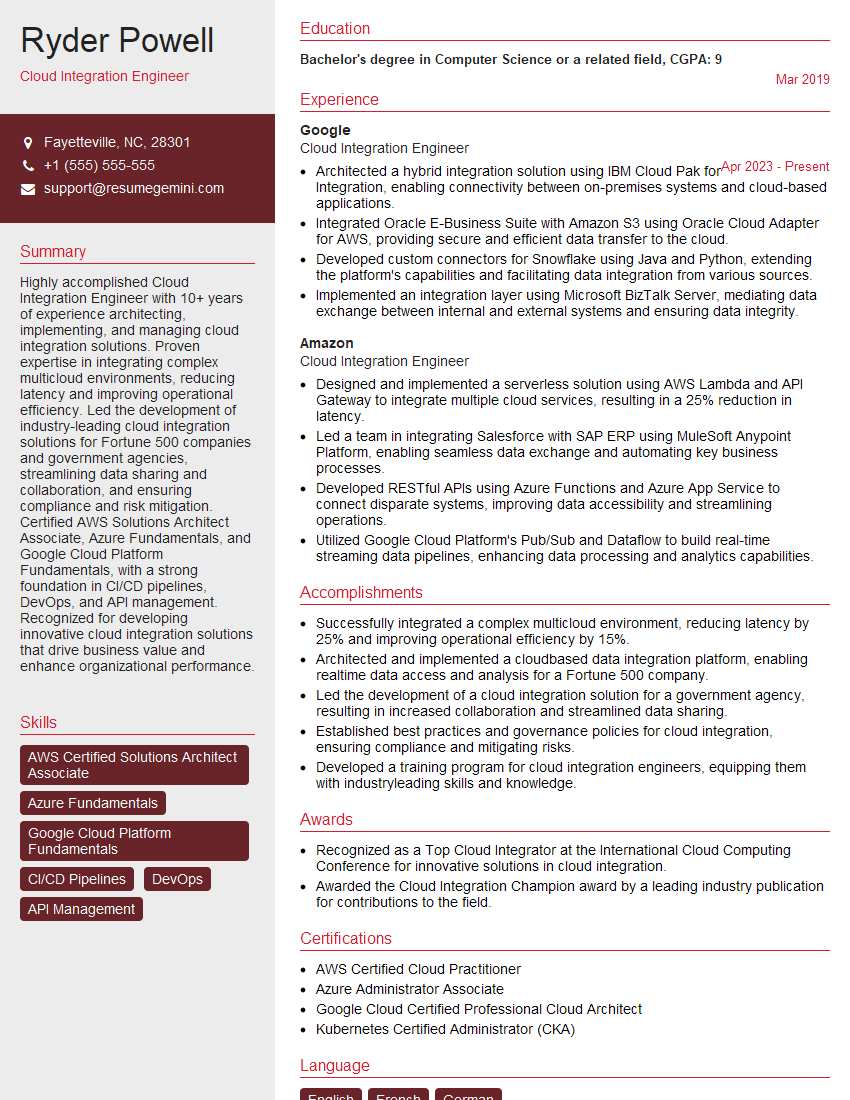

Mastering Digital Workflow Integration opens doors to exciting and high-demand roles, significantly boosting your career prospects. A well-crafted, ATS-friendly resume is your key to unlocking these opportunities. To make your resume stand out, we highly recommend using ResumeGemini. ResumeGemini provides a streamlined process for building professional resumes, and we have examples of resumes tailored specifically to Digital Workflow Integration available to help you showcase your skills effectively. Invest the time – it’s an investment in your future.
