Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Engineering Design and Optimization interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Engineering Design and Optimization Interview

Q 1. Explain your understanding of Design of Experiments (DOE).

Design of Experiments (DOE) is a powerful statistical methodology for efficiently planning experiments, collecting data, and analyzing results to understand the relationships between input factors and output responses. Think of it as a systematic way to explore a design space instead of trying combinations at random. Rather than changing one parameter at a time, DOE varies multiple factors simultaneously in a structured pattern, which reveals interactions between factors that one-factor-at-a-time testing would miss.

A common DOE approach is the factorial design. For example, if we’re optimizing the strength of a composite material, we might have three factors: fiber type (A), resin type (B), and curing temperature (C). A full factorial design would test all possible combinations (e.g., A1B1C1, A1B1C2, A1B2C1, and so on), providing a complete picture of the effects of each factor and their interactions. Analysis of Variance (ANOVA) is then used to determine the statistical significance of each factor’s effect.
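
A minimal sketch of a two-level full factorial, assuming each of the three factors is reduced to a coded low (-1) and high (+1) level. The `response` function is a hypothetical noise-free stand-in for measured strength, so the estimated effects can be checked by hand. With real, replicated data, ANOVA would then test whether these effects are statistically significant.

```python
import itertools
import numpy as np

# Coded levels: -1 = low, +1 = high (e.g., fiber A1/A2, resin B1/B2, cure temp C1/C2).
FACTORS = ["A", "B", "C"]

def full_factorial(n_factors):
    """Return every combination of -1/+1 levels as rows of a design matrix."""
    return np.array(list(itertools.product([-1, 1], repeat=n_factors)))

def response(run):
    """Hypothetical strength model used only for illustration (no noise)."""
    a, b, c = run
    return 10 + 3 * a - 2 * b + 1.5 * a * b

def effect(y, column):
    """Effect = mean response at the +1 level minus mean response at the -1 level."""
    return y[column == 1].mean() - y[column == -1].mean()

design = full_factorial(len(FACTORS))          # 2**3 = 8 runs
y = np.array([response(run) for run in design])

main_effects = {name: effect(y, design[:, i]) for i, name in enumerate(FACTORS)}
ab_interaction = effect(y, design[:, 0] * design[:, 1])   # interaction column = A*B
print(main_effects, ab_interaction)
```

Because the model contains an A×B term, the interaction column picks up a non-zero effect while factor C shows none.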

In practice, fractional factorial designs are often used for more complex scenarios to reduce the number of experiments needed. These designs strategically select a subset of the full factorial design while still allowing for estimation of main effects and some interactions. The choice of the appropriate DOE method depends heavily on the number of factors, resources, and the desired level of detail.
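
As an illustration, here is a half-fraction (2^(3-1)) design built with the generator C = AB. It needs 4 runs instead of 8. The price is aliasing: the C column is identical to the A×B interaction column, so those two effects cannot be separated (the defining relation is I = ABC, a resolution III design). The function name is illustrative only.

```python
import itertools
import numpy as np

def half_fraction_3_factors():
    """2**(3-1) fractional factorial: full factorial in A and B, with C = A*B."""
    ab = np.array(list(itertools.product([-1, 1], repeat=2)))
    c = ab[:, 0] * ab[:, 1]              # design generator C = AB
    return np.column_stack([ab, c])

design = half_fraction_3_factors()
print(design)                            # 4 runs instead of 8

# Consequence of the generator: the C column equals the AB interaction column,
# so the main effect of C is aliased (confounded) with the AB interaction.
aliased = np.array_equal(design[:, 2], design[:, 0] * design[:, 1])
print(aliased)
```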

Q 2. Describe your experience with different optimization algorithms (e.g., genetic algorithms, gradient descent).

I have extensive experience with various optimization algorithms. Genetic algorithms (GAs) are particularly useful for tackling complex, non-linear problems with many variables and constraints where gradient information isn’t readily available. GAs mimic natural selection: they evolve a population of potential solutions iteratively, selecting the ‘fittest’ ones to reproduce and mutate, gradually converging towards a near-optimal solution (a GA does not guarantee the global optimum). I’ve used GAs effectively in optimizing the design of complex mechanical structures where traditional methods struggled.
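
The loop described above can be sketched as a small real-coded GA. This is one common variant, not the only one: tournament selection, blend (arithmetic) crossover, Gaussian mutation, and elitism. The `sphere` test function, the helper names, and all parameter values are illustrative assumptions.

```python
import numpy as np

def sphere(x):
    """Toy objective with a known minimum of 0 at the origin."""
    return float(np.sum(x ** 2))

def tournament(rng, pop, fitness):
    """Return the fitter (lower-objective) of two randomly chosen individuals."""
    i, j = rng.integers(len(pop), size=2)
    return pop[i] if fitness[i] < fitness[j] else pop[j]

def genetic_algorithm(f, bounds, pop_size=40, generations=100,
                      mutation_scale=0.02, seed=0):
    rng = np.random.default_rng(seed)
    lo, hi = np.array(bounds, dtype=float).T
    pop = rng.uniform(lo, hi, size=(pop_size, lo.size))
    fitness = np.array([f(ind) for ind in pop])
    for _ in range(generations):
        children = [pop[np.argmin(fitness)].copy()]      # elitism: keep the best
        while len(children) < pop_size:
            p1 = tournament(rng, pop, fitness)
            p2 = tournament(rng, pop, fitness)
            w = rng.uniform(size=lo.size)                # blend (arithmetic) crossover
            child = w * p1 + (1.0 - w) * p2
            child += rng.normal(0.0, mutation_scale * (hi - lo))  # Gaussian mutation
            children.append(np.clip(child, lo, hi))      # stay inside the bounds
        pop = np.array(children)
        fitness = np.array([f(ind) for ind in pop])
    best = np.argmin(fitness)
    return pop[best], fitness[best]

x_best, f_best = genetic_algorithm(sphere, bounds=[(-5, 5)] * 3)
print(x_best, f_best)
```

Note that no gradient of `sphere` is ever used; only objective values drive selection.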

Gradient descent methods, on the other hand, are very efficient when dealing with smooth, differentiable objective functions. These algorithms iteratively adjust the design parameters in the direction of steepest descent, that is, along the negative gradient of the objective function. I’ve successfully employed gradient descent and its variations, like stochastic gradient descent, in optimizing control systems and in parameter estimation for simulations.
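
A minimal fixed-step gradient descent, assuming a smooth convex quadratic f(x) = ½xᵀAx − bᵀx whose gradient Ax − b is known in closed form. The matrix `A`, vector `b`, and step size are illustrative choices. Convergence with a fixed step requires the step to be smaller than 2 divided by the largest eigenvalue of `A`.

```python
import numpy as np

def gradient_descent(grad, x0, step=0.2, tol=1e-8, max_iter=1000):
    """Fixed-step gradient descent: x <- x - step * grad(x)."""
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) < tol:      # stop when the gradient (nearly) vanishes
            return x, k
        x = x - step * g
    return x, max_iter

# Smooth convex quadratic f(x) = 0.5 x^T A x - b^T x, with gradient A x - b.
A = np.array([[3.0, 1.0], [1.0, 2.0]])
b = np.array([1.0, 1.0])
x_opt, iters = gradient_descent(lambda x: A @ x - b, x0=[0.0, 0.0])
print(x_opt, iters, np.linalg.solve(A, b))   # the two solutions should agree
```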

The choice between GAs and gradient descent, or other methods like simulated annealing or particle swarm optimization, depends heavily on the problem’s characteristics. For instance, GAs are robust to noisy objective functions but can be computationally expensive for high-dimensional problems. Gradient descent methods are fast for smooth functions but can get stuck in local optima.

Q 3. How would you approach optimizing a complex system with multiple conflicting objectives?

Optimizing a complex system with multiple conflicting objectives requires a multi-objective optimization approach. These problems often involve trade-offs: improving one objective might negatively impact another. Instead of finding a single ‘best’ solution, the goal is to find a set of Pareto optimal solutions. A Pareto optimal solution is one where you can’t improve one objective without worsening another.
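
The Pareto optimality definition translates directly into a dominance check. This is a small sketch, assuming every objective is minimized. The designs and their (mass, compliance) scores are hypothetical.

```python
import numpy as np

def pareto_mask(objectives):
    """Boolean mask of non-dominated rows, assuming every objective is minimized.
    Row j dominates row i if it is no worse in all objectives and strictly
    better in at least one."""
    F = np.asarray(objectives, dtype=float)
    mask = np.ones(len(F), dtype=bool)
    for i in range(len(F)):
        no_worse = np.all(F <= F[i], axis=1)
        strictly_better = np.any(F < F[i], axis=1)
        mask[i] = not np.any(no_worse & strictly_better)
    return mask

# Hypothetical designs scored on (mass, compliance); both are to be minimized.
designs = [(1, 5), (2, 3), (3, 4), (4, 1), (5, 5)]
print(np.flatnonzero(pareto_mask(designs)))
```

Here (3, 4) is dominated by (2, 3), and (5, 5) is dominated by (1, 5). The remaining three designs form the Pareto set. This quadratic-time check is fine for small sets. NSGA-II uses a more efficient non-dominated sort.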

Several techniques can be used to handle this. One common approach is the weighted sum method, where each objective is assigned a weight reflecting its relative importance. This transforms the multi-objective problem into a single-objective one, but the choice of weights can heavily influence the result, and when the Pareto front is non-convex, some Pareto optimal solutions cannot be reached by any choice of weights. Another approach is to use Pareto-based methods, such as the Non-dominated Sorting Genetic Algorithm II (NSGA-II), which explicitly identify and maintain a set of Pareto optimal solutions during the optimization process.
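
A sketch of the weighted sum method on two hypothetical, conflicting objectives f1 = x² and f2 = (x − 2)². Each weight gives one single-objective problem, solved here with SciPy’s `minimize_scalar`. For this problem the minimizer is x = 2(1 − w). Because this toy front is convex, sweeping the weight traces it. On a non-convex front, some Pareto points would be unreachable this way.

```python
import numpy as np
from scipy.optimize import minimize_scalar

def f1(x):
    return x ** 2            # hypothetical objective 1

def f2(x):
    return (x - 2.0) ** 2    # hypothetical objective 2, conflicts with f1

def weighted_sum_front(weights):
    """Solve one single-objective problem per weight and collect (x, f1, f2)."""
    points = []
    for w in weights:
        res = minimize_scalar(lambda x: w * f1(x) + (1.0 - w) * f2(x))
        points.append((res.x, f1(res.x), f2(res.x)))
    return points

for x, a, b in weighted_sum_front(np.linspace(0.0, 1.0, 5)):
    print(f"x={x:.3f}  f1={a:.3f}  f2={b:.3f}")
```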

In practice, I often employ a combination of techniques. I start by using a visualization technique like a Pareto front plot to understand the trade-offs between objectives, helping stakeholders identify desirable solutions based on their priorities. Then, I might use a specific multi-objective optimization algorithm to refine the set of solutions within the chosen region of the Pareto front.

Q 4. What are the limitations of using simulation in engineering design optimization?

Simulation is a powerful tool in engineering design optimization, allowing for cost-effective exploration of the design space. However, it’s crucial to be aware of its limitations. Firstly, simulations are inherently approximations of reality. Model fidelity is crucial: a simplified model might not capture all important phenomena, leading to inaccurate predictions. Secondly, simulation models often require significant computational resources, especially for complex systems or high-fidelity simulations. The computational cost can become a bottleneck, limiting the number of design iterations or the complexity of the optimization algorithm.

Another crucial limitation is uncertainty in the input parameters. Real-world parameters are often subject to variability, and these uncertainties should be properly accounted for during simulation. Techniques like Monte Carlo simulations can help quantify these uncertainties, but they increase the computational burden.

Finally, validating the simulation model against experimental data is crucial. A well-validated model increases confidence in the optimization results, while a poorly validated model can lead to inaccurate conclusions. In summary, simulation is valuable, but it’s just one piece of the puzzle, and its results should always be interpreted within the context of its limitations.

Q 5. Explain your experience with Finite Element Analysis (FEA) and its application in optimization.

Finite Element Analysis (FEA) is a numerical technique used to simulate the behavior of structures and systems subjected to various loads and conditions. It’s an indispensable tool in engineering design optimization because it allows for detailed analysis of stress, strain, deformation, and other important properties under different design scenarios. I have extensive experience using FEA software (e.g., ANSYS, Abaqus) to analyze various structures and systems.

In optimization, FEA is often integrated within an optimization loop. The optimization algorithm suggests design changes, FEA is used to analyze the resulting structure’s performance, and the optimization algorithm then uses this analysis to refine the design. This iterative process continues until the desired level of performance is achieved or a termination criterion is met. For instance, I used FEA in a project to minimize the weight of a car chassis while maintaining its structural integrity under various crash scenarios.

The integration of FEA and optimization can be computationally expensive, but the ability to evaluate numerous designs in a virtual environment allows for efficient exploration of design space and significantly reduces the need for expensive physical prototyping.
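
The FEA-in-the-loop idea can be shown at toy scale. This sketch makes several assumptions: the "structure" is a 1D axial bar split into four elements, fixed at one end and loaded at the tip. A hand-written stiffness assembly (`bar_fea`, a hypothetical helper, not a commercial solver) is called on every evaluation. SciPy’s SLSQP then minimizes material volume subject to a tip-displacement limit. All material and load values are illustrative. The analytical optimum is equal areas of P·L/(E·δmax), which gives the result a check.

```python
import numpy as np
from scipy.optimize import minimize

# Assumed data for a toy problem (SI units inside the FEA).
E = 70e9            # Young's modulus [Pa], aluminium-like
P = 10e3            # axial tip load [N]
LENGTH = 1.0        # bar length [m]
N_EL = 4            # number of finite elements
LE = LENGTH / N_EL  # element length [m]
DELTA_MAX = 1e-3    # allowed tip displacement [m]

def bar_fea(areas_m2):
    """Linear 1D bar FEA: node 0 fixed, load P at the last node.
    Assembles the global stiffness matrix and returns nodal displacements."""
    n_nodes = len(areas_m2) + 1
    K = np.zeros((n_nodes, n_nodes))
    for e, area in enumerate(areas_m2):
        k = E * area / LE
        K[e:e + 2, e:e + 2] += k * np.array([[1.0, -1.0], [-1.0, 1.0]])
    F = np.zeros(n_nodes)
    F[-1] = P
    u = np.zeros(n_nodes)
    u[1:] = np.linalg.solve(K[1:, 1:], F[1:])   # impose u[0] = 0
    return u

# Design variables are areas in cm^2 so the optimizer sees well-scaled numbers.
def volume_cm3(a_cm2):
    return float(np.sum(a_cm2) * LE * 100.0)    # cm^2 * cm

def tip_margin_mm(a_cm2):
    u = bar_fea(np.asarray(a_cm2) * 1e-4)       # one FEA run per evaluation
    return (DELTA_MAX - u[-1]) * 1e3            # >= 0 means feasible

res = minimize(volume_cm3, x0=np.full(N_EL, 2.0), method="SLSQP",
               bounds=[(0.1, 10.0)] * N_EL,
               constraints=[{"type": "ineq", "fun": tip_margin_mm}])
print(res.x, res.fun)
```

In a real workflow, `bar_fea` would be replaced by a call to the FEA package. That call dominates run time, which is why the number of evaluations matters.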

Q 6. How do you handle uncertainty and variability in design optimization?

Uncertainty and variability are inherent in engineering systems. Material properties, manufacturing tolerances, and operating conditions can all vary, affecting the performance of a design. Ignoring these uncertainties can lead to suboptimal or even unsafe designs.

To handle uncertainty, I incorporate probabilistic methods into the optimization process. This might involve robust optimization techniques, which aim to find designs that perform well across a range of possible scenarios. Alternatively, I can employ reliability-based design optimization, which explicitly models the probability of failure, typically by optimizing the objective subject to a constraint that keeps this probability below a target value.

Monte Carlo simulation is a valuable tool for assessing uncertainty propagation. By repeatedly running simulations with randomly sampled input parameters, we can generate probability distributions for output variables, providing insights into the design’s sensitivity to uncertainties. This helps in identifying critical parameters and in making informed decisions about design tolerances.
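
A minimal Monte Carlo sketch of uncertainty propagation, assuming a normally distributed load on a member of fixed cross-section and a normally distributed material strength. All distributions are illustrative. Sampling gives the stress distribution and an estimated probability of failure. For this normal-normal case, the classical closed form Pf = Φ(−β) provides a cross-check.

```python
import math
import numpy as np

rng = np.random.default_rng(42)
N = 1_000_000

# Assumed input distributions (illustrative only).
load_N = rng.normal(20_000.0, 2_000.0, N)      # applied load F [N]
area_mm2 = 100.0                                # cross-section A [mm^2], fixed
strength_MPa = rng.normal(300.0, 30.0, N)       # material strength R [MPa]

stress_MPa = load_N / area_mm2                  # propagate: S = F / A (N/mm^2 = MPa)
failures = stress_MPa > strength_MPa
pf_mc = failures.mean()
print(f"stress mean={stress_MPa.mean():.1f} MPa, std={stress_MPa.std():.1f} MPa")

# Cross-check: for normal R and S, Pf = Phi(-beta), beta = (muR - muS) / sqrt(sdR^2 + sdS^2).
beta = (300.0 - 200.0) / math.sqrt(30.0 ** 2 + 20.0 ** 2)
pf_exact = 0.5 * math.erfc(beta / math.sqrt(2.0))
print(f"Pf (Monte Carlo) = {pf_mc:.5f}, Pf (analytical) = {pf_exact:.5f}")
```

Estimating a small failure probability needs many samples, since the standard error scales with sqrt(Pf / N). This is the computational burden mentioned earlier.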

Q 7. Describe your experience with multidisciplinary design optimization (MDO).

Multidisciplinary Design Optimization (MDO) addresses the challenge of optimizing systems involving multiple engineering disciplines (e.g., aerodynamics, structures, controls). In such systems, design variables in one discipline can impact the performance of other disciplines, requiring a coordinated optimization strategy.

I’ve worked on several MDO projects, employing different methodologies. One common approach is to use a collaborative optimization scheme where each discipline has its own optimization model. These models interact through a system-level model that coordinates the optimization process. Other techniques include hierarchical optimization, where disciplines are ordered in a hierarchy, and concurrent optimization, where all disciplines are optimized simultaneously.

The selection of the appropriate MDO method depends on the complexity of the system and the interactions between disciplines. Effective communication and collaboration among the engineering teams involved are also crucial for successful MDO implementation. For example, in the design of an aircraft, MDO helps to coordinate the optimization of aerodynamic performance, structural weight, and control system stability.
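
Every MDO architecture has to resolve the coupling between disciplines. This sketch uses the Sellar problem, a standard two-discipline MDO benchmark, in place of a real aircraft model. It shows only the coupled multidisciplinary analysis step, solved by Gauss-Seidel iteration. It is not a collaborative or hierarchical optimizer, and the function names are illustrative.

```python
import math

def discipline_1(z1, z2, x, y2):
    """Sellar discipline 1: y1 = z1^2 + z2 + x - 0.2*y2."""
    return z1 ** 2 + z2 + x - 0.2 * y2

def discipline_2(z1, z2, y1):
    """Sellar discipline 2: y2 = sqrt(y1) + z1 + z2 (assumes y1 >= 0)."""
    return math.sqrt(y1) + z1 + z2

def gauss_seidel_mda(z1, z2, x, y2=1.0, tol=1e-10, max_iter=100):
    """Iterate the two coupled disciplines until the coupling variables settle."""
    for _ in range(max_iter):
        y1 = discipline_1(z1, z2, x, y2)
        y2_new = discipline_2(z1, z2, y1)
        if abs(y2_new - y2) < tol:
            return y1, y2_new
        y2 = y2_new
    raise RuntimeError("MDA did not converge")

y1, y2 = gauss_seidel_mda(z1=5.0, z2=2.0, x=1.0)
objective = 1.0 ** 2 + 2.0 + y1 + math.exp(-y2)   # Sellar f = x^2 + z2 + y1 + exp(-y2)
print(y1, y2, objective)
```

An optimizer wrapped around `gauss_seidel_mda` would give the simplest "all disciplines analyzed together" formulation. The decomposed schemes described above split this loop across discipline-level optimizers instead.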

Q 8. How do you validate your optimization results?

Validating optimization results is crucial to ensure the solution is both feasible and reliable. It’s not just about finding a solution; it’s about verifying that it’s the right solution. My validation process typically involves several steps:

- Verification of the Optimization Model: First, I meticulously check the accuracy of the mathematical model used. This includes validating the objective function (what we’re trying to optimize) and the constraint functions (limitations on the design). I might compare the model’s predictions to known data or simplified analytical solutions where possible. Any discrepancies need to be investigated and resolved.

- Sensitivity Analysis: I perform a sensitivity analysis to understand how sensitive the optimal solution is to changes in the input parameters or assumptions. This helps determine the robustness of the solution and identifies potential areas of uncertainty.

- Comparison with Alternative Methods: Whenever feasible, I compare the results obtained from my chosen optimization algorithm with those from alternative algorithms or approaches. This provides a cross-check and helps identify potential biases or limitations of the chosen method. For example, comparing a gradient-based method with a genetic algorithm.

- Physical Testing/Simulation: For many engineering applications, it’s vital to validate the optimization results through physical testing or high-fidelity simulations. This step bridges the gap between the theoretical model and the real world. Discrepancies might highlight areas for model improvement or reveal unforeseen issues.

- Documentation and Review: Finally, the entire process, including the model, assumptions, validation methods, and results, is thoroughly documented. This allows for independent verification and facilitates future improvements or modifications.

For example, in optimizing the design of an aircraft wing, validation might involve comparing the computationally optimized wing’s aerodynamic performance against wind tunnel test data or advanced Computational Fluid Dynamics (CFD) simulations.
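
The "comparison with alternative methods" step can be sketched with SciPy. A gradient-based run (L-BFGS-B) and a population-based run (`differential_evolution`, an evolutionary method related to GAs) solve the same bounded problem, and their answers are compared. The Rosenbrock test function and the tolerance are stand-ins for a real design problem.

```python
import numpy as np
from scipy.optimize import minimize, differential_evolution, rosen, rosen_der

bounds = [(-2.0, 2.0), (-2.0, 2.0)]

# Gradient-based run from a single starting point.
local = minimize(rosen, x0=[-1.2, 1.0], jac=rosen_der, method="L-BFGS-B", bounds=bounds)

# Population-based run over the same bounds; seeded for reproducibility.
evo = differential_evolution(rosen, bounds, seed=1)

agree = np.allclose(local.x, evo.x, atol=1e-3)
print(local.x, evo.x, "agree" if agree else "investigate the discrepancy")
```

Agreement does not prove global optimality. Disagreement, however, is a useful warning sign of local optima or a poorly posed model.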

Q 9. What software packages are you proficient in for design optimization (e.g., MATLAB, ANSYS, Python)?

My proficiency in software for design optimization spans several popular packages. I’m highly experienced in using:

- MATLAB: I frequently use MATLAB’s Optimization Toolbox for solving various optimization problems, leveraging its extensive library of algorithms and its powerful scripting capabilities. I find it particularly useful for prototyping and exploring different optimization strategies.

- ANSYS: For finite element analysis (FEA) and multiphysics simulations, ANSYS is my go-to software. I integrate ANSYS simulations within the optimization loop, allowing for the iterative refinement of designs based on the simulation results. This is especially beneficial when dealing with complex stress, thermal, or fluid flow constraints.

- Python: I extensively use Python, often in conjunction with libraries like SciPy (for optimization algorithms), NumPy (for numerical computations), and matplotlib (for visualization). Python offers a highly flexible environment, ideal for custom-designed optimization workflows and integration with other tools and data sources.

My expertise encompasses both commercial and open-source tools, allowing me to select the most appropriate software based on the project requirements and available resources.

Q 10. Explain your experience with different types of constraints in optimization problems.

I have extensive experience working with various constraints in optimization problems. Constraints define the feasible region within which the optimal solution must lie. They’re essential for ensuring that the design meets practical limitations and requirements. Different types of constraints include:

- Equality Constraints: These constraints require a certain relationship to hold exactly, for example x + y = 10. This might represent a material balance in a chemical process.

- Inequality Constraints: These constraints define upper or lower bounds on variables or functions, for example x ≤ 5 or f(x, y) ≥ 0. This could represent limits on stress, weight, or cost.

- Linear Constraints: These constraints are linear functions of the design variables. Problems with only linear constraints are comparatively easy to solve and often appear in linear programming.

- Nonlinear Constraints: These constraints involve nonlinear functions of the design variables. Problems with nonlinear constraints are harder to solve and often require specialized algorithms. For example, geometric constraints or aerodynamic performance requirements often lead to nonlinear constraints.

- Integer Constraints: These constraints restrict the design variables to integer values. This is common when dealing with discrete choices, like the number of components or the selection of materials.

Handling these different constraint types effectively requires expertise in selecting appropriate optimization algorithms and techniques. For instance, linear programming methods are efficient for problems with only linear constraints, while nonlinear programming techniques are necessary for problems with nonlinear constraints.
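
A small sketch of how the equality and inequality examples above map onto SciPy’s `minimize` with the SLSQP method. SciPy expects equality constraints as fun(v) = 0 and inequality constraints as fun(v) ≥ 0, so x ≤ 5 is written as 5 − x ≥ 0. The objective is hypothetical. Without the inequality, the optimum along x + y = 10 would be x = 8.5, y = 1.5. With it, the inequality becomes active and the solution moves to x = 5, y = 5.

```python
import numpy as np
from scipy.optimize import minimize

def objective(v):
    x, y = v
    return (x - 8.0) ** 2 + (y - 1.0) ** 2     # hypothetical smooth objective

constraints = [
    # Equality constraint x + y = 10 (SciPy convention: fun(v) == 0).
    {"type": "eq", "fun": lambda v: v[0] + v[1] - 10.0},
    # Inequality constraint x <= 5, written as 5 - x >= 0 (SciPy convention: fun(v) >= 0).
    {"type": "ineq", "fun": lambda v: 5.0 - v[0]},
]

res = minimize(objective, x0=np.array([0.0, 0.0]), method="SLSQP", constraints=constraints)
print(res.x, res.fun)   # the inequality constraint is active at the optimum
```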

Q 11. How do you balance computational cost with solution accuracy in optimization?

Balancing computational cost and solution accuracy is a constant challenge in optimization. The ideal scenario is to achieve high accuracy with minimal computational expense, but this is often not possible. My approach involves several strategies:

- Algorithm Selection: Choosing the right optimization algorithm is critical. Some algorithms are faster but less accurate, while others are more accurate but computationally expensive. The choice depends on the complexity of the problem, the required accuracy, and the available computational resources. For example, gradient-based methods are fast but may get stuck in local optima, while genetic algorithms are slower but can explore a larger solution space.

- Approximation Techniques: For computationally expensive simulations (like CFD or FEA), response surface methodology (RSM) or surrogate models can be used to create approximations of the objective and constraint functions. These approximations are much faster to evaluate, allowing for more efficient optimization. However, accuracy is compromised, so careful validation is essential.

- Adaptive Strategies: Some algorithms allow for adaptive refinement. The algorithm starts with a coarse approximation and progressively refines the solution as more computational resources become available. This allows for a balance between early exploration and later exploitation of promising solutions.

- Parallel Computing: Leveraging parallel computing can significantly reduce wall-clock time, especially when dealing with expensive simulations or large design spaces, because independent function evaluations (for example, the members of a GA population) can run concurrently. I often use the parallel computing capabilities available in MATLAB or in Python.

- Termination Criteria: Careful selection of termination criteria is essential. These criteria define when the optimization process should stop. The criteria might involve a maximum number of iterations, a tolerance on the objective function improvement, or a combination of both.

The best strategy often involves a combination of these approaches, tailored to the specific problem and resources.
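
The effect of termination criteria on cost and accuracy can be seen directly. This sketch runs SciPy’s Nelder-Mead on the Rosenbrock test function, whose optimum (1, 1) is known. It compares a loose and a tight tolerance on x and f (`xatol`, `fatol`) under a hard cap on function evaluations (`maxfev`). The specific tolerances are illustrative.

```python
import numpy as np
from scipy.optimize import minimize, rosen

x0 = np.array([-1.2, 1.0])

def run(tol):
    """Nelder-Mead with the same tolerance on x and f, plus hard iteration/evaluation caps."""
    return minimize(rosen, x0, method="Nelder-Mead",
                    options={"xatol": tol, "fatol": tol, "maxiter": 5000, "maxfev": 5000})

for tol in (1e-2, 1e-8):
    res = run(tol)
    error = np.linalg.norm(res.x - 1.0)          # distance to the known optimum (1, 1)
    print(f"tol={tol:g}: evaluations={res.nfev}, error={error:.2e}")
```

With an expensive simulation behind the objective, each extra evaluation in the tight run is a full simulation. This is why the stopping rule is a real cost decision.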

Q 12. Describe a challenging design optimization problem you solved and the approach you used.

One challenging optimization problem I tackled involved designing a lightweight yet structurally robust chassis for a high-performance electric vehicle. The objective was to minimize the chassis’s weight while satisfying stringent constraints on stiffness, strength, and crashworthiness. The problem was highly complex due to the nonlinear relationship between the design variables (material thickness, geometry, etc.) and the performance metrics (stiffness, strength). The design space was also vast.

My approach involved a multi-stage process:

- Topology Optimization: I initially used topology optimization to identify the optimal material distribution within the chassis, leading to a significant weight reduction while maintaining adequate structural integrity. This phase provided a starting point for the subsequent detailed design.

- Shape Optimization: Next, I performed shape optimization to refine the geometry based on the topology optimization results. This stage focused on improving the structural efficiency and manufacturability of the design. Finite Element Analysis (FEA) within ANSYS was crucial for evaluating the structural performance.

- Multi-objective Optimization: Since minimizing weight and maximizing stiffness were conflicting objectives, I utilized a multi-objective optimization technique, specifically a Pareto optimization approach. This yielded a set of optimal designs representing the trade-off between weight and performance. The final design choice was made by considering the specific requirements of the vehicle.

- Surrogate Modeling: Due to the computational expense of the FEA simulations, I employed kriging (a type of Gaussian process regression) to build surrogate models. This allowed for faster evaluation of the objective and constraint functions during the optimization process.

The final design achieved a 15% weight reduction compared to the initial design while meeting all the performance requirements. The project highlighted the importance of using a multi-stage approach and employing advanced optimization techniques for solving complex engineering problems.

Q 13. What are the key performance indicators (KPIs) you consider in design optimization?

The key performance indicators (KPIs) I consider in design optimization vary based on the specific project but generally include:

- Objective Function Value: This represents the optimal value achieved for the quantity being minimized or maximized (e.g., weight, cost, performance).

- Constraint Satisfaction: This assesses whether all constraints are met by the optimized design. Any constraint violation means the design is infeasible.

- Computational Time/Cost: The efficiency of the optimization process is crucial. This KPI measures the time and resources required to reach the solution.

- Solution Robustness: This measures how sensitive the optimal solution is to changes in input parameters or uncertainties. A robust solution is less affected by variations.

- Manufacturing Feasibility: The optimized design should be manufacturable using available technologies and processes. This often involves considering factors like tolerances, material availability, and manufacturing costs.

Depending on the context, additional KPIs might include things like lifecycle cost, environmental impact, or user experience.

Q 14. How do you communicate complex technical information about optimization results to non-technical stakeholders?

Communicating complex technical information about optimization results to non-technical stakeholders requires clear, concise, and engaging communication. My strategy involves:

- Visualizations: Charts, graphs, and diagrams are invaluable tools. I use them to visually represent key findings, such as the trade-off between different design parameters or the improvement achieved by optimization.

- Analogies and Metaphors: I often use simple analogies and metaphors to explain complex concepts. For example, I might compare optimization to finding the highest point on a mountain range.

- Focus on Key Messages: I avoid overwhelming the audience with technical details. I focus on the key takeaways and their impact on the overall project goals.

- Storytelling: Framing the optimization process as a narrative helps to make it more engaging and easier to understand. This could involve describing the challenges faced, the approach taken, and the results achieved.

- Interactive Presentations: Using interactive presentations allows stakeholders to explore the results at their own pace and ask questions.

For example, when presenting the results of a chassis optimization to a board of directors, I might focus on the weight reduction achieved, its impact on fuel efficiency and performance, and the minimal increase in manufacturing cost. Technical details would only be presented upon request.

Q 15. What is your experience with robust design optimization?

Robust design optimization focuses on creating designs that perform well even when faced with uncertainties in manufacturing, operating conditions, or material properties. It’s about building resilience into the design, not just finding the optimal solution under ideal conditions. My experience involves employing techniques like Taguchi methods and Monte Carlo simulations to quantify and minimize the impact of these uncertainties. For instance, in designing a car engine, we might use robust design to ensure consistent performance across different fuel qualities and temperatures, or to reduce the sensitivity to variations in manufacturing tolerances.

Specifically, I’ve worked on projects involving the optimization of composite materials for aerospace applications where the variability in fiber orientation and material properties needed to be addressed to ensure structural integrity under various flight conditions. We used a combination of Design of Experiments (DOE) and Response Surface Methodology (RSM) to build a robust design model and identify optimal parameter settings that minimized the variability in performance metrics.
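
Taguchi methods typically rank parameter settings by a signal-to-noise (S/N) ratio computed from repeated measurements under varying noise conditions. A sketch of the three standard forms follows. The measurement values for the two candidate settings are hypothetical.

```python
import numpy as np

def sn_smaller_is_better(y):
    """Taguchi S/N ratio when lower responses are better: -10 log10(mean(y^2))."""
    y = np.asarray(y, dtype=float)
    return -10.0 * np.log10(np.mean(y ** 2))

def sn_larger_is_better(y):
    """Taguchi S/N ratio when higher responses are better: -10 log10(mean(1/y^2))."""
    y = np.asarray(y, dtype=float)
    return -10.0 * np.log10(np.mean(1.0 / y ** 2))

def sn_nominal_is_best(y):
    """Taguchi S/N ratio for hitting a target: 10 log10(mean^2 / variance)."""
    y = np.asarray(y, dtype=float)
    return 10.0 * np.log10(y.mean() ** 2 / y.var(ddof=1))

# Hypothetical repeated measurements for two parameter settings under noise.
setting_a = [9.8, 10.1, 10.0, 10.2]
setting_b = [9.0, 11.0, 10.5, 9.5]
print(sn_nominal_is_best(setting_a), sn_nominal_is_best(setting_b))
```

Both settings average about 10. Setting A scatters far less, so it earns the higher nominal-is-best S/N ratio and would be preferred as the more robust choice.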

Q 16. Explain the difference between local and global optimization.

The key difference between local and global optimization lies in the scope of the search. Local optimization algorithms find a solution that is optimal only within a limited region of the search space. Think of it like finding the highest peak on a small hill – you might find a peak, but there could be much higher peaks on other hills you haven’t explored. Global optimization, on the other hand, aims to find the absolute best solution across the entire search space, even if it involves navigating complex landscapes with multiple local optima. Finding the highest mountain in a range is a global optimization problem.

Imagine you’re trying to minimize a function with many valleys and peaks (local optima). A local optimization algorithm, like gradient descent, might get stuck in a local minimum (a valley) and never reach the global minimum (the deepest valley). A global optimization algorithm, such as a genetic algorithm or simulated annealing, explores more of the search space, increasing the likelihood of finding the global minimum, although it does not guarantee it.
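
A small experiment makes the local/global difference concrete. It uses the 2D Rastrigin function, which has many local minima and a global minimum f(0, 0) = 0. Plain gradient descent started at (3, 3) settles into the nearest valley. SciPy’s `dual_annealing`, a generalized simulated annealing method combined with local search, is given the whole box. The starting point, step size, and seed are illustrative assumptions.

```python
import numpy as np
from scipy.optimize import dual_annealing

def rastrigin(x):
    """Rastrigin function: many local minima, global minimum 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return float(10.0 * x.size + np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x)))

def rastrigin_grad(x):
    return 2.0 * x + 20.0 * np.pi * np.sin(2.0 * np.pi * x)

# Local search: small-step gradient descent from (3, 3) stays in the nearest valley.
x_local = np.array([3.0, 3.0])
for _ in range(500):
    x_local = x_local - 1e-3 * rastrigin_grad(x_local)
f_local = rastrigin(x_local)
print("local :", x_local, f_local)

# Global search: dual annealing explores the full bounds [-5.12, 5.12]^2.
res = dual_annealing(rastrigin, bounds=[(-5.12, 5.12)] * 2, seed=0)
print("global:", res.x, res.fun)
```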

Q 17. How do you select appropriate optimization algorithms for different problem types?

Selecting the right optimization algorithm depends heavily on the characteristics of the problem. Factors to consider include the problem’s dimensionality, the nature of the objective function (smooth, non-smooth, noisy, etc.), the presence of constraints, and computational resources available.

- Gradient-based methods (e.g., steepest descent, Newton’s method) are efficient for smooth, unconstrained problems with readily available gradients. They are fast but can get trapped in local optima.

- Evolutionary algorithms (e.g., genetic algorithms, particle swarm optimization) are robust for non-smooth, noisy, or multimodal problems and are less likely to get stuck in local optima, but they are computationally more expensive, and their cost grows quickly with the number of design variables.

- Interior-point methods are well-suited for problems with numerous constraints.

- Simulated annealing is a probabilistic method that is particularly effective for escaping local optima in complex landscapes.

For example, for a low-dimensional, smooth problem with no constraints, a gradient-based method might be the best choice. However, for a noisy, non-smooth, constrained problem, a genetic algorithm might be more appropriate.

Q 18. What is your experience with surrogate modeling in design optimization?

Surrogate modeling plays a crucial role in design optimization, especially when dealing with computationally expensive simulations. A surrogate model is a simplified approximation of the computationally expensive model. Instead of running the expensive simulation many times during the optimization, we build a surrogate (e.g., a response surface, Kriging, radial basis functions) from a limited set of simulations. The optimization is then performed using the surrogate, significantly reducing computational time.

In my work, I’ve utilized Kriging models for aerodynamic design optimization. The computational fluid dynamics (CFD) simulations required to evaluate the aerodynamic performance of an airfoil are very time-consuming. By building a Kriging model based on a limited set of CFD simulations, we were able to efficiently explore the design space and identify the optimal airfoil shape with minimal computational effort. This accelerated the design process by orders of magnitude.
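
A minimal sketch of the surrogate workflow: a few "expensive" samples, a Kriging-style interpolator, then cheap optimization on the surrogate. Several assumptions apply. The expensive simulation is a hypothetical 1D function. The correlation model is squared-exponential with a fixed length scale and a constant mean taken from the sample average. Production Kriging would estimate these hyperparameters, typically by maximum likelihood.

```python
import numpy as np

def expensive_simulation(x):
    """Stand-in for a costly CFD/FEA run (hypothetical 1D response)."""
    return (x - 0.6) ** 2

def rbf_kernel(a, b, length=0.25):
    """Squared-exponential correlation between two sets of 1D points."""
    return np.exp(-0.5 * ((a[:, None] - b[None, :]) / length) ** 2)

class SimpleKriging:
    """Minimal GP/Kriging interpolator with fixed (assumed) hyperparameters."""

    def __init__(self, x, y, length=0.25, nugget=1e-10):
        self.x, self.length = x, length
        self.mean = y.mean()                       # constant trend from the sample mean
        K = rbf_kernel(x, x, length) + nugget * np.eye(len(x))
        self.weights = np.linalg.solve(K, y - self.mean)

    def predict(self, x_new):
        return self.mean + rbf_kernel(x_new, self.x, self.length) @ self.weights

# Build the surrogate from 7 "expensive" runs, then optimize the cheap surrogate.
x_train = np.linspace(0.0, 1.0, 7)
model = SimpleKriging(x_train, expensive_simulation(x_train))
grid = np.linspace(0.0, 1.0, 1001)
x_best = grid[np.argmin(model.predict(grid))]
print(x_best)
```

The 1001 surrogate evaluations cost almost nothing compared with 1001 real simulations. A promising point would normally be re-checked with the true simulation and added to the training set.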

Q 19. Describe your understanding of sensitivity analysis and its importance in optimization.

Sensitivity analysis is crucial in optimization because it helps us understand how changes in the design variables affect the objective function and constraints. It quantifies the influence of each design parameter on the overall performance. This information is valuable for several reasons: it helps in identifying the most important design variables to focus on, it guides the selection of optimization algorithms, and it helps in making design decisions. For example, if we find that a specific parameter has minimal impact on the performance, we can fix it at a nominal value, simplifying the optimization problem.

Methods such as finite differences and adjoint methods are commonly employed for sensitivity analysis. Finite differences are simple but need extra function evaluations for every design variable, whereas adjoint methods compute the full gradient at a cost that is largely independent of the number of design variables, which makes them attractive when there are many variables. A large sensitivity flags a parameter that warrants closer scrutiny during optimization.
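
A sketch of finite-difference sensitivity analysis, assuming a hypothetical performance model with one strongly and one weakly influential parameter. Central differences approximate each partial derivative with two evaluations per variable. The dimensionless form (xᵢ/f)·∂f/∂xᵢ makes parameters with different units comparable when ranking them. Adjoint methods are not shown here.

```python
import numpy as np

def central_difference_gradient(f, x, h=1e-6):
    """Approximate df/dx_i with central differences (2 evaluations per variable)."""
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = h
        grad[i] = (f(x + step) - f(x - step)) / (2.0 * h)
    return grad

def normalized_sensitivity(f, x, h=1e-6):
    """Dimensionless sensitivity (x_i / f) * df/dx_i, useful for ranking parameters."""
    x = np.asarray(x, dtype=float)
    return central_difference_gradient(f, x, h) * x / f(x)

# Hypothetical performance model with one influential and one weak parameter.
def performance(x):
    return x[0] ** 2 + 0.01 * x[1]

x_nominal = np.array([2.0, 3.0])
grad = central_difference_gradient(performance, x_nominal)   # analytic value: [4.0, 0.01]
sens = normalized_sensitivity(performance, x_nominal)
print(grad, sens)
```

Here the second parameter’s normalized sensitivity is more than two orders of magnitude smaller. That makes it a candidate for fixing at its nominal value.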

Q 20. How do you handle noisy data in optimization problems?

Noisy data, which is often encountered in real-world optimization problems, can significantly affect the performance of optimization algorithms. It can lead to inaccurate gradient estimations, premature convergence to suboptimal solutions, and difficulty in identifying the global optimum. Strategies for handling noisy data include:

- Filtering techniques: Smoothing the noisy data using moving averages or Kalman filters can reduce the noise before applying the optimization algorithm.

- Robust optimization techniques: These methods explicitly account for uncertainty in the data. Examples include stochastic programming and chance-constrained programming, which model the uncertainty and risk associated with noisy data directly.

- Ensemble methods: Combining multiple optimization runs with different initial points and averaging the results can help reduce the influence of noise.

- Using algorithms less sensitive to noise: Evolutionary algorithms generally perform better than gradient-based methods in the presence of noise.

In a project involving optimizing a chemical process, the measured yields were noisy due to variations in experimental conditions. By applying a Kalman filter to pre-process the data and then employing a genetic algorithm, we effectively managed the noise and achieved a successful optimization.
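
A minimal scalar Kalman filter of the kind that could pre-process noisy measurements before optimization. It assumes the underlying yield is a slowly varying level (random-walk process noise `q`) observed with measurement noise variance `r`. The yield value, noise level, and function name are hypothetical. With q = 0 and a very vague prior, the filtered estimate reduces to the running sample mean.

```python
import numpy as np

def kalman_smooth_constant(measurements, r, q=0.0, x0=0.0, p0=1e6):
    """Scalar Kalman filter for a level x_k = x_{k-1} + w (var q),
    observed as z_k = x_k + v (var r). Returns the filtered estimates."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p = p + q                  # predict: level carried forward, uncertainty grows by q
        k = p / (p + r)            # Kalman gain
        x = x + k * (z - x)        # update with the new measurement
        p = (1.0 - k) * p
        estimates.append(x)
    return np.array(estimates)

rng = np.random.default_rng(0)
true_yield = 0.82                                   # hypothetical process yield
z = true_yield + rng.normal(0.0, 0.05, size=100)    # noisy measured yields
est = kalman_smooth_constant(z, r=0.05 ** 2)
print(est[-1], z.std())
```

A positive `q` lets the filter track a drifting level at the cost of less smoothing. Choosing `q` relative to `r` is the main tuning decision.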

Q 21. Explain your experience with topology optimization.

Topology optimization is a powerful technique used to find the optimal distribution of material within a given design space to achieve a desired objective, such as minimizing weight while satisfying structural constraints. Unlike shape optimization, which modifies the shape of a pre-defined structure, topology optimization determines the material layout itself. This makes it well suited to producing innovative designs that might not be readily apparent through conventional design methods.

I have experience using density-based methods, which represent the material distribution with a density field, and level-set methods, which represent the material boundary using a level-set function. A common application I’ve worked on is the lightweight design of mechanical components, like chassis or brackets. Starting from a given design space, the optimization algorithm iteratively removes material from less critical areas, resulting in a structure that is lighter but still meets the required strength and stiffness criteria. The resulting structure often features complex shapes and intricate internal features that are difficult to achieve through traditional design methods. This leads to significant weight savings, reduced material costs, and improved overall performance.

Q 22. What are your strategies for dealing with ill-conditioned optimization problems?

Ill-conditioned optimization problems are those in which the Hessian of the objective (or the Jacobian of the constraints) has a very large condition number, so the objective is far more sensitive to changes along some directions than along others. Small changes in the input parameters can then lead to large changes in the output, making the optimization process unstable and potentially yielding inaccurate results. My strategies for handling these problems involve a multi-pronged approach:

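- Regularization Techniques: These methods add a penalty term to the objective function to improve the conditioning of the problem. For example, L2 (Tikhonov) regularization adds λ‖x‖² to the objective, which raises every eigenvalue of the Hessian by 2λ and therefore lowers its condition number, at the cost of biasing the solution toward zero. In data-fitting problems, L1 and L2 penalties also help prevent overfitting. Think of it like adding a ‘governor’ to a system, preventing extreme swings.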

- Preconditioning: This involves transforming the problem into an equivalent but better-conditioned form. This could involve scaling variables, using different coordinate systems, or applying appropriate transformations to the objective function or constraints. It’s similar to changing the gears in a car to optimize for a specific terrain.

- Robust Optimization: Instead of aiming for a single optimal point, this approach focuses on finding a solution that is less sensitive to variations in the input parameters. This is useful when there is uncertainty in the problem data. This is akin to building a bridge with a large safety margin to account for unexpected loads.

- Alternative Optimization Algorithms: Some algorithms are better suited to ill-conditioned problems than others. Plain gradient descent slows down markedly as the condition number grows. Newton-type and quasi-Newton methods, including the interior-point methods built on Newton steps, use curvature information and are much less sensitive to poor scaling. The algorithm should therefore be selected based on the problem’s characteristics.

- Sensitivity Analysis: Conducting a thorough sensitivity analysis helps identify the parameters that most significantly affect the solution, allowing for focused improvements in the problem formulation.

In a real-world project involving the optimization of a complex chemical process, I encountered ill-conditioning due to highly nonlinear reaction kinetics. By implementing L2 regularization and carefully scaling the input variables, I was able to significantly improve the stability and accuracy of the optimization process.
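A small quadratic shows how conditioning affects a first-order method and how the scaling and L2 regularization strategies above help. This sketch uses a synthetic diagonal Hessian chosen for illustration. The helper names `gd_iterations`, `jacobi_scale`, and `tikhonov` are hypothetical. Diagonal (Jacobi) scaling stands in for more general preconditioners.

```python
import numpy as np

def gd_iterations(H, b, tol=1e-8, max_iter=100_000):
    """Gradient-descent steps needed to minimize f(x) = 0.5 x^T H x - b^T x.

    Uses the fixed step 1/L, where L is the largest eigenvalue of the SPD matrix H.
    The gradient along the smallest-eigenvalue direction shrinks by (1 - 1/kappa)
    per step, so the iteration count grows with the condition number kappa.
    """
    x = np.zeros_like(b)
    step = 1.0 / np.linalg.eigvalsh(H).max()
    for k in range(max_iter):
        g = H @ x - b                  # gradient of the quadratic
        if np.linalg.norm(g) < tol:
            return k
        x = x - step * g
    return max_iter

def jacobi_scale(H, b):
    """Diagonal preconditioning: substitute x = S y with S = diag(H)^(-1/2)."""
    s = 1.0 / np.sqrt(np.diag(H))
    return H * np.outer(s, s), b * s   # S H S and S b

def tikhonov(H, lam):
    """Hessian after adding lam * ||x||^2 to the objective: H + 2*lam*I."""
    return H + 2.0 * lam * np.eye(H.shape[0])

H = np.diag([1000.0, 1.0])             # condition number 1000
b = np.array([1.0, 1.0])
Hs, bs = jacobi_scale(H, b)
print("cond(H):", np.linalg.cond(H), "GD steps:", gd_iterations(H, b))
print("cond(scaled):", np.linalg.cond(Hs), "GD steps:", gd_iterations(Hs, bs))
print("cond(H + 2*0.5*I):", np.linalg.cond(tikhonov(H, 0.5)))
```

The two remedies behave differently. Scaling leaves the minimizer unchanged once the result is mapped back with x = S y. L2 regularization moves the minimizer toward zero, so its weight trades numerical stability against bias.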

Q 23. How do you incorporate manufacturing constraints into the design optimization process?

Incorporating manufacturing constraints is crucial for translating theoretically optimal designs into producible products. Ignoring these constraints often leads to designs that are impossible or prohibitively expensive to manufacture. My approach involves:

- Explicit Constraint Definition: Manufacturing constraints are explicitly defined as mathematical constraints within the optimization problem. This could include limitations on material thickness, tolerances, achievable surface finishes, or the use of specific manufacturing processes (e.g., injection molding, machining).

- Design for Manufacturing (DFM) Guidelines: I integrate DFM principles throughout the design process. This involves considering aspects like assembly complexity, part count, material selection, and ease of handling throughout the entire life cycle of a product.

- Collaboration with Manufacturing Experts: Close collaboration with manufacturing engineers is vital. Their practical experience helps identify potential manufacturing challenges early in the design process, allowing for proactive design adjustments.

- Simulation and Analysis: Using simulation tools to model the manufacturing process helps anticipate and mitigate potential manufacturing issues. Examples include mold flow analysis for injection molding and finite element analysis of process-induced residual stresses and distortion.

- Tolerance Analysis: A thorough tolerance analysis determines how manufacturing tolerances affect the final product’s performance, so the design remains functional across the full range of allowed variation. It also flags designs whose tolerances are too tight to hold economically, or at all, with the intended process.

For instance, in designing a plastic enclosure, I would incorporate constraints on wall thickness and on draft angles. The thickness needs a minimum for structural integrity and reliable mold filling, and a maximum to avoid sink marks and long cooling times. The draft angles allow the part to be ejected from the mold. These constraints would be included in the optimization formulation, ensuring the final design is both optimal and manufacturable.
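A toy version of the enclosure example shows manufacturing rules entering the formulation as ordinary inequality constraints, alongside the performance requirement. Everything here is assumed for illustration: the thickness and draft limits, the t³ stiffness scaling for plate bending, and mass proportional to thickness. A brute-force grid search stands in for a real optimizer. In this toy, draft angle affects only feasibility, not mass.

```python
# Hypothetical numbers for a molded wall panel (illustration only)
T_MIN, T_MAX = 1.0, 3.5     # mm, moldability limits on wall thickness
DRAFT_MIN = 1.0             # degrees, minimum draft for ejection
K_REQUIRED = 6.0            # required bending-stiffness index (arbitrary units)

def stiffness(t):
    """Plate bending stiffness scales with thickness cubed (constant factors dropped)."""
    return t ** 3

def mass(t):
    """Mass scales linearly with wall thickness for a fixed panel area."""
    return t

def constraints(t, draft, t_min=T_MIN, t_max=T_MAX):
    """All constraints in g(x) <= 0 form: one performance, three manufacturing."""
    return [
        K_REQUIRED - stiffness(t),  # stiffness requirement
        t_min - t,                  # minimum wall thickness
        t - t_max,                  # maximum wall thickness
        DRAFT_MIN - draft,          # minimum draft angle
    ]

def lightest_feasible(t_min=T_MIN, t_max=T_MAX):
    """Grid search for the lightest (thickness, draft) pair satisfying every constraint."""
    thicknesses = [round(0.5 + 0.05 * i, 2) for i in range(71)]  # 0.50 .. 4.00 mm
    drafts = [0.0, 0.5, 1.0, 1.5, 2.0]
    feasible = [
        (t, d) for t in thicknesses for d in drafts
        if all(g <= 0.0 for g in constraints(t, d, t_min, t_max))
    ]
    return min(feasible, key=lambda td: mass(td[0]))

print(lightest_feasible())             # stiffness is the active constraint
print(lightest_feasible(t_min=2.0))    # a stricter molding rule becomes active
```

With the default limits, the stiffness requirement sets the thickness. Raising the molding minimum makes the manufacturing constraint active instead, and a formulation like this exposes that kind of shift.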

Q 24. Describe your understanding of Pareto optimality.

Pareto optimality, also known as Pareto efficiency, describes a state in which no objective can be improved without worsening at least one other. In multi-objective optimization, a solution x dominates another solution y if x is at least as good as y in every objective and strictly better in at least one. A Pareto optimal solution is one that no other feasible solution dominates, so it represents a trade-off between conflicting objectives.

Imagine you’re trying to design a car that is both fuel-efficient and fast. These objectives often conflict. The Pareto optimal designs are those for which any further gain in fuel efficiency must come at the cost of speed, and vice versa. No single design is ‘best’. Instead there is a set of Pareto optimal designs, none better than another in every respect, and each represents a different trade-off.

Graphically, Pareto optimal solutions form the Pareto front, a curve or surface representing the best possible compromises among different objectives. The choice of a specific solution from the Pareto front depends on the prioritization of the objectives based on the specific application and user requirements.
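The dominance test behind Pareto optimality is short enough to write directly. This minimal sketch assumes all objectives are minimized, so "fast" is expressed as a low 0-100 km/h time, and it uses made-up design values.

```python
from typing import List, Sequence, Tuple

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b in every objective and strictly better in one.
    All objectives are minimized."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(points: List[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Keep the non-dominated points (O(n^2) pairwise check, fine for small sets)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical car designs: (fuel use in L/100 km, 0-100 km/h time in s)
designs = [(5.0, 12.0), (6.0, 9.0), (7.5, 7.0), (6.5, 10.0), (8.0, 7.5)]
print(pareto_front(designs))
# [(5.0, 12.0), (6.0, 9.0), (7.5, 7.0)]
```

The design (6.5, 10.0) drops out because (6.0, 9.0) is better in both objectives. Similarly, (7.5, 7.0) dominates (8.0, 7.5). The three survivors are the trade-off set a decision maker would choose from.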

Q 25. How do you assess the trade-offs between different design objectives?

Assessing trade-offs between design objectives involves a multi-faceted approach combining quantitative and qualitative methods.

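- Multi-Objective Optimization Algorithms: Techniques such as the weighted sum method, the ε-constraint method, and evolutionary algorithms (e.g., NSGA-II, MOEA/D) are used to find the Pareto optimal set of solutions and explore the trade-off space efficiently. The weighted sum method can only reach solutions on convex portions of the Pareto front. The ε-constraint method and evolutionary algorithms can also capture non-convex regions.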

- Decision Making Tools: Techniques such as AHP (Analytic Hierarchy Process) or utility functions can help rank design objectives by importance and weight them accordingly in the optimization process. These frameworks allow decision makers to incorporate subjective preferences.

- Visualization: Visualizing the Pareto front (through graphs or other means) provides a clear understanding of the achievable trade-offs. This allows stakeholders to understand the design space and make informed decisions.

- Sensitivity Analysis: By analyzing the sensitivity of the design to changes in objective weights or constraint parameters, designers can better understand the robustness of different solutions and the implications of design choices.

- Trade-Off Analysis: This involves explicitly quantifying the cost (or penalty) of improving one objective in terms of the degradation of another. For example, a trade-off analysis might show that a 10% increase in fuel efficiency requires a 5% reduction in speed.

In a project optimizing the design of a wind turbine, I used the NSGA-II algorithm to find the Pareto optimal designs balancing energy generation, structural integrity, and manufacturing cost. Visualization of the Pareto front allowed the client to select the design that best suited their budget and performance goals.
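Two of the scalarization methods listed above, the weighted sum and the ε-constraint, can be compared on a small set of Pareto-optimal candidates. This is a minimal sketch over hypothetical design values with both objectives minimized. It uses min-max normalization for the weighted sum, which is one common choice among several.

```python
from typing import Dict, Optional, Tuple

# Hypothetical Pareto-optimal designs: (fuel use L/100 km, 0-100 km/h time s), both minimized
DESIGNS: Dict[str, Tuple[float, float]] = {
    "A": (5.0, 12.0),
    "B": (6.0, 9.0),
    "C": (7.5, 7.0),
}

def weighted_sum_choice(designs: Dict[str, Tuple[float, float]], w1: float) -> str:
    """Pick the design minimizing w1*f1 + (1 - w1)*f2 after min-max normalization,
    so objectives with different units are comparable."""
    f1 = [v[0] for v in designs.values()]
    f2 = [v[1] for v in designs.values()]

    def norm(x: float, lo: float, hi: float) -> float:
        return (x - lo) / (hi - lo)

    def score(v: Tuple[float, float]) -> float:
        return w1 * norm(v[0], min(f1), max(f1)) + (1 - w1) * norm(v[1], min(f2), max(f2))

    return min(designs, key=lambda name: score(designs[name]))

def epsilon_constraint_choice(designs: Dict[str, Tuple[float, float]], eps2: float) -> Optional[str]:
    """Minimize f1 subject to f2 <= eps2; None if no design satisfies the bound."""
    feasible = [n for n, v in designs.items() if v[1] <= eps2]
    return min(feasible, key=lambda n: designs[n][0]) if feasible else None

for w in (0.1, 0.5, 0.9):
    print(f"weight on fuel use {w}: design {weighted_sum_choice(DESIGNS, w)}")
for eps in (10.0, 7.5, 6.0):
    print(f"0-100 time <= {eps} s: design {epsilon_constraint_choice(DESIGNS, eps)}")
```

The ε-constraint form maps directly onto a stakeholder requirement such as "acceleration must not exceed X seconds". The weighted sum needs weights, which AHP or utility functions could supply.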

Q 26. What is your experience with design for manufacturability (DFM)?

Design for Manufacturability (DFM) is a critical aspect of my work. It’s not merely an afterthought but an integral part of the design process. My experience encompasses:

- Understanding Manufacturing Processes: I have a strong understanding of various manufacturing processes, including machining, casting, molding, and additive manufacturing. This knowledge allows me to design parts that are compatible with the chosen processes and avoid common manufacturing pitfalls.

- Tolerance Analysis: I routinely perform tolerance stack-up analysis to ensure that the dimensional tolerances of individual components allow for successful assembly and proper functionality within the system. This is a key aspect of ensuring manufacturability.

- Material Selection: I choose materials appropriate for the intended manufacturing process and application, considering factors such as cost, strength, machinability, and environmental impact. For instance, for a high-volume housing this could mean specifying an easily molded plastic for injection molding rather than a hard-to-machine metal.

- Simplified Designs: I strive for simplified designs with fewer parts and simpler geometries to reduce manufacturing complexity and costs. This may involve redesigning parts to be symmetrical or using standard components to minimize custom tooling requirements.

- DFM Software: I have utilized DFM software to assess the manufacturability of designs, identifying potential issues early in the development process. This allows me to proactively address challenges before they become expensive problems.
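The tolerance stack-up mentioned above can be illustrated with a one-dimensional linear stack, comparing the worst-case and root-sum-square (RSS) methods. This is a minimal sketch with hypothetical dimensions. Worst-case analysis guarantees assembly for any in-tolerance parts. RSS is a statistical estimate that assumes independent variations centered on nominal, with each tolerance representing the same number of standard deviations.

```python
import math
from typing import List, Tuple

# Each contributor: (nominal mm, symmetric tolerance +/- mm, sensitivity +1 or -1)
Contributor = Tuple[float, float, int]

def stack_up(contributors: List[Contributor]) -> Tuple[float, float, float]:
    """Return (nominal gap, worst-case tolerance, RSS tolerance) for a 1D linear stack."""
    nominal = sum(s * n for n, _, s in contributors)
    worst_case = sum(abs(s) * t for _, t, s in contributors)
    # RSS: assumes independent, centered variations
    rss = math.sqrt(sum((s * t) ** 2 for _, t, s in contributors))
    return nominal, worst_case, rss

# Hypothetical stack: a 40 mm housing cavity containing three stacked parts
stack = [
    (40.0, 0.10, +1),   # housing cavity length adds to the gap
    (12.5, 0.05, -1),   # part 1 consumes gap
    (13.0, 0.05, -1),   # part 2
    (14.0, 0.05, -1),   # part 3
]
nominal, wc, rss = stack_up(stack)
print(f"gap = {nominal:.2f} mm, worst case +/-{wc:.3f} mm, RSS +/-{rss:.3f} mm")
# gap = 0.50 mm, worst case +/-0.250 mm, RSS +/-0.132 mm
```

The gap between the two results is the usual design decision. Worst-case analysis might force tighter, costlier tolerances. RSS accepts a small statistical risk in exchange for looser ones.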

In one project involving the design of a complex medical device, applying DFM principles allowed us to reduce the number of parts by 30%, resulting in significant cost savings and improved assembly efficiency.

Q 27. Explain your experience using optimization techniques to improve product reliability.

Optimization techniques play a crucial role in enhancing product reliability. By optimizing the design for robustness and resilience, we can significantly reduce the likelihood of failures.

- Reliability-Based Optimization (RBO): This method, often called reliability-based design optimization (RBDO), explicitly incorporates reliability constraints into the optimization process. It typically seeks the lowest-cost or lightest design that meets the performance requirements while keeping the probability of failure below a specified target. This might involve optimizing component dimensions to minimize stress concentrations or using reliability models to predict the lifetime of the product.

- Robust Design Optimization: This approach aims to design products that are less sensitive to variations in manufacturing tolerances, environmental conditions, or operating parameters. Techniques such as Taguchi methods or robust design optimization algorithms are used to identify robust design parameters that minimize the impact of uncertainties.

- Simulation and Analysis: Finite element analysis (FEA), computational fluid dynamics (CFD), and other simulation tools are used to assess the structural integrity and performance of the design under various operating conditions. This helps to identify potential weak points and optimize the design for enhanced reliability.

- Failure Mode and Effects Analysis (FMEA): FMEA is a systematic approach to identify potential failure modes and their effects on the system. The optimization process can then be used to mitigate the risks associated with these potential failures.

For example, in the design of a satellite component, I used RBO to optimize the dimensions of the structural members to meet strength requirements while minimizing the probability of fatigue failure under launch conditions. This resulted in a more reliable and robust design.
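A sampling-based form of reliability-based optimization can be sketched for a single tension member: choose the smallest cross-section whose estimated probability of failure stays below a target. This is a simplified illustration, not the satellite analysis described above. Load and strength are assumed independent and normally distributed with made-up statistics. Failure here means static overload rather than fatigue, and a grid search over areas stands in for an RBDO solver. Reusing the same random samples for every candidate area (common random numbers) keeps the estimated failure probability monotone in the area.

```python
import math
import numpy as np

# Hypothetical statistics for illustration only (not from any real project)
LOAD_MEAN, LOAD_SD = 10_000.0, 2_000.0      # axial load, N
STRENGTH_MEAN, STRENGTH_SD = 250.0, 20.0    # material strength, MPa (N/mm^2)
PF_TARGET = 1e-3                            # maximum allowed probability of failure

# Common random numbers: the same samples are reused for every candidate area
rng = np.random.default_rng(seed=42)
N_SAMPLES = 200_000
loads = rng.normal(LOAD_MEAN, LOAD_SD, N_SAMPLES)
strengths = rng.normal(STRENGTH_MEAN, STRENGTH_SD, N_SAMPLES)

def pf_monte_carlo(area_mm2: float) -> float:
    """Estimated P(failure): fraction of samples with limit state g = S - L/A < 0."""
    stress = loads / area_mm2
    return float(np.mean(strengths - stress < 0.0))

def pf_analytic(area_mm2: float) -> float:
    """Exact P(failure) for this model: g is normal, so Pf = Phi(-beta)."""
    mean_g = STRENGTH_MEAN - LOAD_MEAN / area_mm2
    sd_g = math.sqrt(STRENGTH_SD**2 + (LOAD_SD / area_mm2) ** 2)
    beta = mean_g / sd_g                            # reliability index
    return 0.5 * math.erfc(beta / math.sqrt(2.0))   # standard normal CDF at -beta

def lightest_reliable_area(areas, pf_target=PF_TARGET):
    """Smallest (lightest) area whose estimated Pf meets the target, or None."""
    for area in sorted(areas):
        if pf_monte_carlo(area) <= pf_target:
            return area
    return None

best = lightest_reliable_area(range(40, 121))
print(f"area = {best} mm^2, Pf (MC) = {pf_monte_carlo(best):.1e}, "
      f"Pf (exact) = {pf_analytic(best):.1e}")
```

The analytic check only works because this limit state is linear in normal variables. Real RBO problems usually rely on FORM/SORM approximations or surrogate models, because each failure-probability evaluation would otherwise require many expensive simulations.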

Q 28. How do you stay up-to-date with the latest advancements in engineering design optimization?

Staying current in the rapidly evolving field of engineering design optimization requires a proactive and multi-faceted approach.

- Professional Development: I actively participate in conferences, workshops, and seminars related to optimization and design. These events provide opportunities to learn about the latest research and best practices.

- Literature Review: I regularly review relevant journals, publications, and online resources to stay abreast of new algorithms, techniques, and applications. This involves reading research papers in journals such as Optimization and Engineering and the Journal of Mechanical Design.

- Online Courses and Webinars: I leverage online platforms offering courses and webinars on advanced optimization techniques, software tools, and specific applications relevant to my field.

- Software Proficiency: I continuously improve my skills in using various optimization software packages (e.g., MATLAB, ANSYS, OptiStruct) and familiarize myself with new tools and functionalities. This ensures that I can effectively apply the latest optimization techniques in practice.

- Networking: I actively participate in professional organizations and networks to exchange ideas and collaborate with experts in the field. This allows me to learn from the experiences of others and remain updated on emerging trends.

This continuous learning process ensures I’m at the forefront of the latest advancements, allowing me to implement the most effective and efficient optimization strategies in my projects.

Key Topics to Learn for Engineering Design and Optimization Interview

- Design Philosophies and Methodologies: Understand various design approaches like Design Thinking, TRIZ, and Agile methodologies, and their application in engineering projects. Consider their strengths and weaknesses in different contexts.

- Optimization Algorithms and Techniques: Familiarize yourself with linear programming, nonlinear programming, dynamic programming, and metaheuristic algorithms (genetic algorithms, simulated annealing). Be prepared to discuss their applications in real-world scenarios, such as resource allocation or supply chain optimization.

- Statistical Analysis and Data Interpretation: Master statistical methods crucial for design validation and optimization. This includes understanding experimental design, hypothesis testing, regression analysis, and data visualization techniques to interpret results effectively.

- Modeling and Simulation: Develop a strong understanding of creating and using mathematical models (finite element analysis, computational fluid dynamics) and simulations to analyze and optimize designs. Be able to discuss model limitations and validation.

- Design for Manufacturing (DFM) and Design for Assembly (DFA): Understand the principles of DFM and DFA to ensure designs are manufacturable and cost-effective. Be prepared to discuss considerations such as material selection, tolerances, and assembly processes.

- Sustainability and Life Cycle Assessment (LCA): Demonstrate awareness of sustainable design practices and the ability to incorporate LCA principles into the design process. This includes understanding environmental impacts and resource efficiency.

- Case Studies and Portfolio Preparation: Prepare examples from your past projects showcasing your ability to apply these concepts. Highlight the challenges faced, your problem-solving approach, and the results achieved.

Next Steps

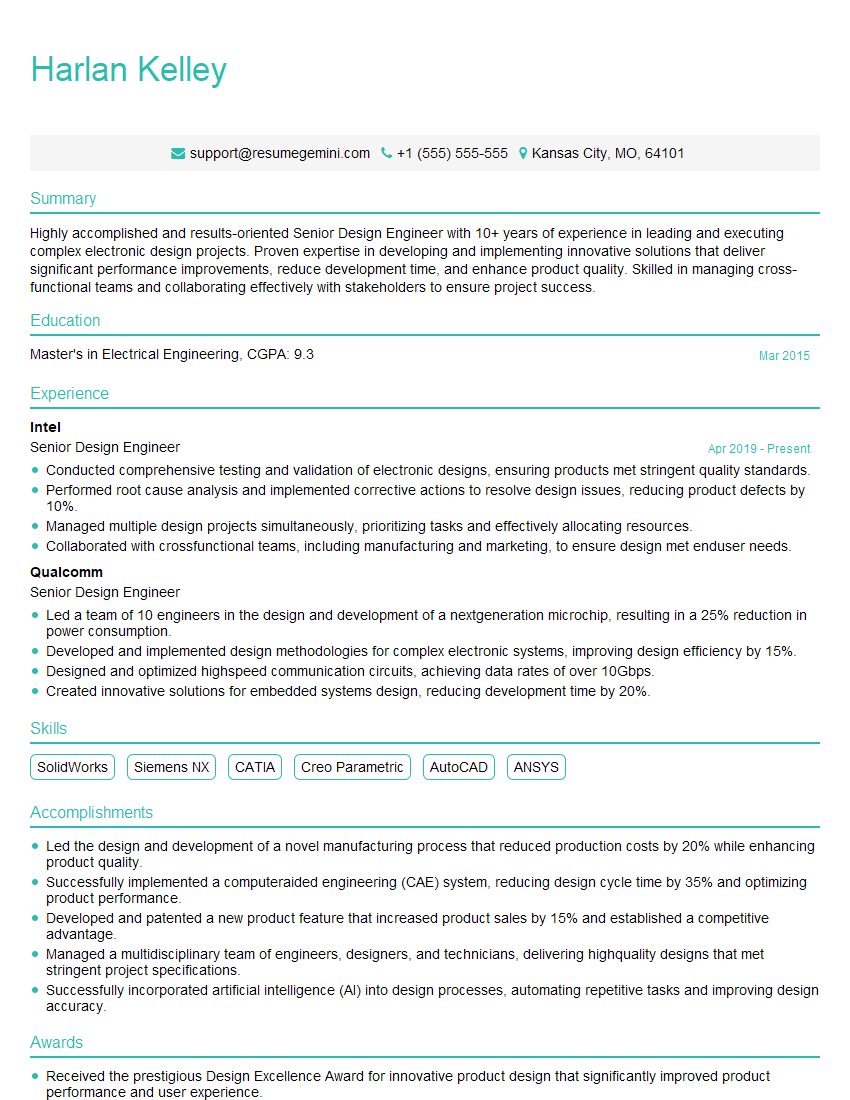

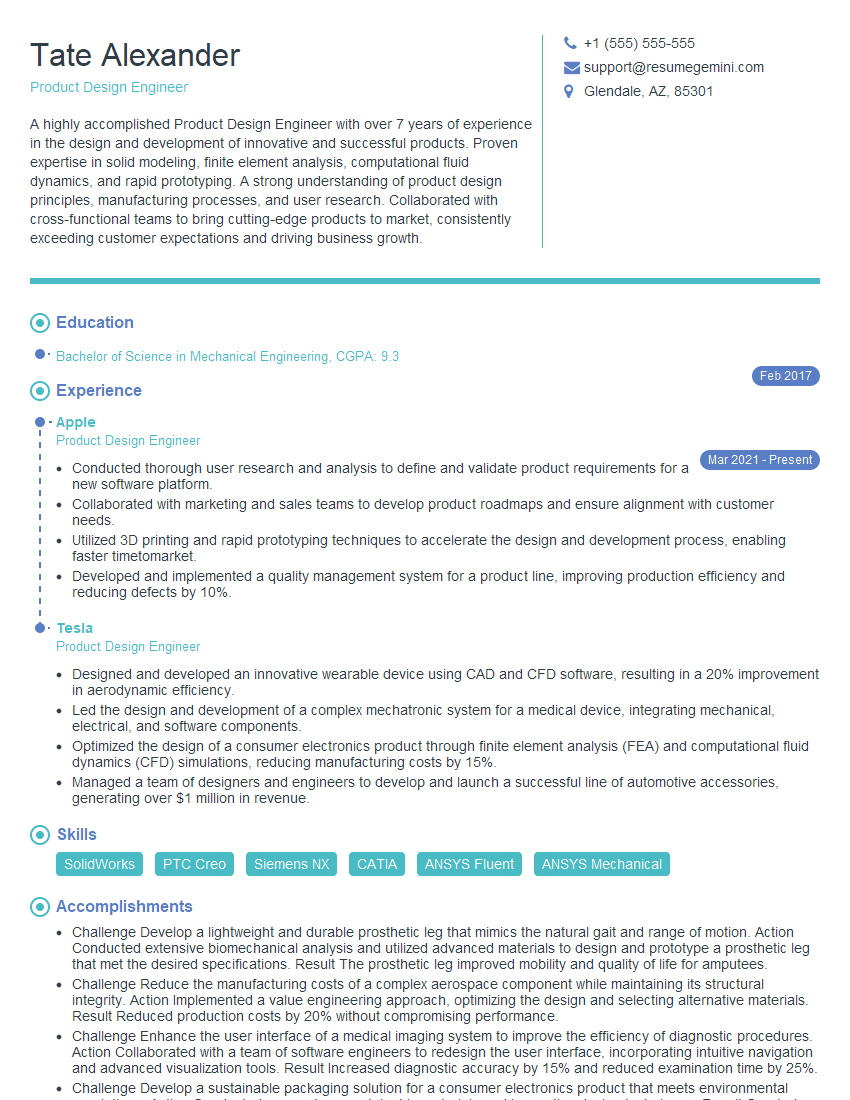

Mastering Engineering Design and Optimization is crucial for accelerating your career. These skills are highly sought after, opening doors to challenging and rewarding roles with significant growth potential. To maximize your job prospects, creating an ATS-friendly resume is essential. A well-structured resume highlights your skills and experience effectively, increasing your chances of landing an interview. We highly recommend using ResumeGemini to build a professional and impactful resume tailored to your specific experience in Engineering Design and Optimization. ResumeGemini provides examples of resumes optimized for this field, helping you present yourself in the best possible light. Take the next step in your career journey – build a winning resume today!
