Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Environmental Data Analysis and Modeling interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Environmental Data Analysis and Modeling Interview

Q 1. Explain the difference between descriptive, predictive, and prescriptive environmental modeling.

Environmental modeling can be broadly categorized into descriptive, predictive, and prescriptive types. Each serves a distinct purpose in understanding and managing our environment.

- Descriptive Modeling: This type focuses on summarizing and visualizing existing environmental data. Think of it as creating a detailed map of current conditions. For example, a descriptive model might map the current distribution of a certain plant species using GIS software and overlay it with climate data to understand its current habitat. It does not predict future changes; it only describes the present state.

- Predictive Modeling: This type uses existing data to forecast future environmental conditions. It answers the question, “What will happen?” A common example is climate change models predicting future temperature increases based on greenhouse gas emission scenarios. These models often use statistical techniques like regression or machine learning algorithms.

- Prescriptive Modeling: This advanced type goes beyond prediction to recommend optimal actions to achieve environmental goals. It answers, “What should we do?” For instance, a prescriptive model could optimize the placement of wind turbines to maximize energy generation while minimizing environmental impact, considering factors such as wind patterns, bird migration routes, and land use.

The difference lies in their objectives: descriptive models describe, predictive models forecast, and prescriptive models recommend solutions. Often, these types are used in a sequence—descriptive modeling informs predictive modeling, which in turn informs prescriptive modeling.

Q 2. Describe your experience with different types of environmental datasets (e.g., raster, vector, time series).

My experience encompasses a wide range of environmental datasets. I’ve worked extensively with:

- Raster Data: This represents data as a grid of cells, each with a value. I’ve used satellite imagery (e.g., Landsat, MODIS) for land cover classification, analyzing deforestation patterns, and assessing changes in vegetation indices. I’m also proficient in processing elevation data (DEMs) for hydrological modeling and terrain analysis.

- Vector Data: This represents data as points, lines, and polygons. I’ve utilized shapefiles containing information like river networks, pollution monitoring sites, and protected areas for spatial analysis. I’ve used this data to map pollutant dispersal, analyze the effectiveness of conservation efforts, and understand species distribution based on habitat characteristics.

- Time Series Data: This records data over time. I’ve worked extensively with meteorological data (temperature, precipitation, wind speed) and water quality data (dissolved oxygen, nutrient levels) to study climate change impacts, identify pollution sources, and predict extreme weather events. I’m experienced with analyzing these time series using methods such as ARIMA modeling to identify trends and seasonality and predict future values.

My expertise involves not just handling these data types individually but also integrating them for comprehensive environmental assessments. For instance, combining raster data (satellite imagery) with vector data (river networks) and time series data (water quality measurements) provides a holistic understanding of water pollution dynamics.
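Vegetation indices such as those mentioned above are computed cell by cell on raster grids. As an illustration only, here is a minimal NumPy sketch of the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red). It assumes the red and near-infrared bands are already loaded as same-shaped reflectance arrays. The function name `ndvi` and the tiny input grids are hypothetical, not real imagery.

```python
import numpy as np

def ndvi(red: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """Compute NDVI = (NIR - Red) / (NIR + Red) cell by cell.

    Cells where NIR + Red == 0 (e.g. nodata) are returned as NaN
    instead of triggering a division-by-zero warning.
    """
    red = red.astype(float)
    nir = nir.astype(float)
    denom = nir + red
    out = np.full(red.shape, np.nan)
    valid = denom != 0
    out[valid] = (nir[valid] - red[valid]) / denom[valid]
    return out

# Hypothetical 2x2 reflectance grids (not real Landsat/MODIS values)
red = np.array([[0.10, 0.05], [0.30, 0.0]])
nir = np.array([[0.50, 0.45], [0.30, 0.0]])
print(ndvi(red, nir))
```

In a real workflow the band arrays would come from a raster reader, and nodata masks and scale factors would be applied before the calculation.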

Q 3. What are the common statistical methods used in environmental data analysis?

Environmental data analysis employs a variety of statistical methods, chosen based on the data type and research question. Some common ones include:

- Regression Analysis: Used to model the relationship between a dependent variable (e.g., species abundance) and one or more independent variables (e.g., temperature, rainfall). Linear regression is a common starting point, but generalized linear models (GLMs), which combine a link function with non-normal error distributions (e.g., Poisson for counts), are frequently needed to account for non-normal data and some non-linear relationships.

- Time Series Analysis: Techniques like ARIMA models are used to analyze data collected over time and to identify trends, seasonality, and autocorrelation. These are crucial for forecasting, such as predicting future water levels or air pollution levels.

- Spatial Statistics: Geostatistical methods such as kriging are used to interpolate values at unsampled locations, essential for creating continuous surfaces from point data. Spatial autocorrelation analysis reveals spatial dependencies in the data, which influence the choice of statistical models.

- Multivariate Analysis: Principal Component Analysis (PCA) reduces dimensionality by combining correlated variables into a few uncorrelated components, while cluster analysis groups similar observations (e.g., monitoring sites). Both help summarize complex datasets with numerous variables into meaningful patterns or groups.

- Bayesian Statistics: Used to incorporate prior knowledge into statistical modeling, particularly useful when dealing with limited data or uncertainty.

The choice of method is heavily dependent on the data characteristics and the nature of the research question. A strong understanding of statistical assumptions and limitations is crucial for valid conclusions.
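As a concrete illustration of the multivariate item, here is a minimal sketch of PCA computed through a singular value decomposition in NumPy. The helper name `pca`, the choice to standardize variables first, and the synthetic water-quality-like data are assumptions for illustration, not a prescribed workflow.

```python
import numpy as np

def pca(X: np.ndarray, n_components: int):
    """Principal Component Analysis via SVD of the standardized data.

    Returns (scores, explained_variance_ratio) for the first n_components.
    Standardizing (z-scores) keeps variables measured in different units,
    e.g. mg/L and degrees C, from dominating just because of their scale.
    """
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    U, S, Vt = np.linalg.svd(Z, full_matrices=False)
    explained = S**2 / np.sum(S**2)          # share of total variance per component
    scores = Z @ Vt[:n_components].T         # project observations onto the components
    return scores, explained[:n_components]

# Synthetic example: 3 variables, two of them strongly (negatively) correlated
rng = np.random.default_rng(0)
n = 200
temp = rng.normal(20, 3, n)
do = 14 - 0.3 * temp + rng.normal(0, 0.2, n)   # dissolved oxygen falls as temperature rises
nitrate = rng.normal(2, 0.5, n)                 # generated independently of the other two
X = np.column_stack([temp, do, nitrate])
scores, ratio = pca(X, n_components=2)
print(scores.shape, ratio.round(2))
```

Because temperature and dissolved oxygen move together here, most of their shared variance collapses into the first component, which is the kind of summarization the bullet above describes.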

Q 4. How do you handle missing data in environmental datasets?

Missing data is a common challenge in environmental datasets due to equipment malfunctions, data loss, or inaccessibility. Handling missing data requires careful consideration to avoid bias and inaccurate results.

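- Deletion: Complete-case (listwise) analysis removes every observation (row) that has a missing value; dropping an entire variable (column) is an alternative when most of its values are missing. Either approach can significantly reduce the information available and can introduce bias if the data are not missing completely at random (MCAR).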

- Imputation: This involves replacing missing values with estimated values. Simple methods include using the mean or median of the available data for that variable. More sophisticated methods include multiple imputation, which generates multiple plausible imputed datasets, accounting for uncertainty in the imputation process. For spatial data, kriging can be used to estimate missing values based on the spatial correlation structure.

- Modeling the Missingness: Advanced techniques model the mechanism behind missing data. If missingness is related to other variables, ignoring it can lead to biased results. Methods like multiple imputation or inverse probability weighting can be used to correct for this bias.

The best approach depends on the extent and pattern of missing data, as well as the specific research question. Careful consideration is needed to minimize bias and ensure the validity of the results. Documentation of the method used to handle missing data is crucial for transparency and reproducibility.
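A minimal NumPy sketch contrasting complete-case deletion and median imputation on a small, made-up array of monitoring data (NaN marks a missing value). The helper names are hypothetical. It also shows a known drawback of single-value imputation: the imputed column's variance shrinks, which is one reason multiple imputation is preferred when uncertainty matters.

```python
import numpy as np

def complete_case(X: np.ndarray) -> np.ndarray:
    """Drop every row (observation) that contains at least one NaN."""
    return X[~np.isnan(X).any(axis=1)]

def median_impute(X: np.ndarray) -> np.ndarray:
    """Replace each NaN with the median of the observed values in its column."""
    X = X.copy()
    col_medians = np.nanmedian(X, axis=0)
    rows, cols = np.where(np.isnan(X))
    X[rows, cols] = col_medians[cols]
    return X

# Hypothetical readings: columns = dissolved oxygen (mg/L), nitrate (mg/L)
X = np.array([
    [8.1, 1.2],
    [7.9, np.nan],
    [np.nan, 1.5],
    [6.5, 2.8],
    [7.2, np.nan],
])

print(complete_case(X))        # only 2 of the 5 rows survive
imputed = median_impute(X)
print(imputed)
# Sample variance of nitrate before (observed values only) and after imputation
print(np.nanvar(X[:, 1], ddof=1), np.var(imputed[:, 1], ddof=1))
```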

Q 5. Explain your experience with spatial autocorrelation and how it affects your analysis.

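Spatial autocorrelation is the correlation of a variable with itself across space: values at nearby locations tend to be more similar (positive autocorrelation) or more dissimilar (negative autocorrelation) than values at distant locations. In environmental data, nearby locations often exhibit similar characteristics (e.g., temperature, soil properties). Ignoring spatial autocorrelation violates the independence assumption of many statistical analyses, particularly regression modeling, and can lead to underestimated standard errors and, in some cases, biased estimates.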

My experience involves detecting and addressing spatial autocorrelation using several methods:

- Moran’s I: A global statistic used to measure the overall spatial autocorrelation in a dataset. A significant positive Moran’s I indicates clustering of similar values.

- Geographically Weighted Regression (GWR): This accounts for spatial non-stationarity by fitting a local regression at each location, with nearby observations weighted more heavily than distant ones. This is particularly useful when the relationship between variables varies across space.

- Spatial Lag and Spatial Error Models: These are extensions of traditional regression models that explicitly incorporate spatial autocorrelation into the model structure. The spatial lag model considers the influence of neighboring values on a particular location, while the spatial error model considers spatial autocorrelation in the model residuals.

Failure to account for spatial autocorrelation inflates the significance of model results, leading to incorrect conclusions about the relationships between variables. Therefore, it’s vital to assess and address spatial autocorrelation using appropriate statistical techniques.

Q 6. What are the limitations of using linear regression in environmental modeling?

Linear regression, while simple and interpretable, has limitations in environmental modeling:

- Non-linear relationships: Many environmental relationships are not linear. For instance, the relationship between pollutant concentration and its effect on aquatic life might be non-linear, with minimal effects at low concentrations and drastic effects at high concentrations. Linear regression would misrepresent this relationship.

- Non-normal data: Linear regression assumes normally distributed residuals. Environmental data often violates this assumption, especially when dealing with count data (e.g., number of species) or skewed data (e.g., heavy metal concentrations).

- Collinearity: When independent variables are highly correlated, it becomes difficult to isolate their individual effects, making interpretation challenging and potentially affecting model stability.

- Spatial autocorrelation: Linear regression assumes independence of observations. Ignoring spatial autocorrelation, which is common in environmental data, typically leads to underestimated standard errors and overstated statistical significance.

- Outliers: Outliers can disproportionately affect the regression line, potentially leading to inaccurate predictions.

Therefore, it’s crucial to assess the appropriateness of linear regression before applying it. When its assumptions are violated, more advanced techniques like Generalized Linear Models (GLMs), Generalized Additive Models (GAMs), or other non-parametric methods are often more suitable.
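To illustrate the GLM alternative for count data, here is a minimal sketch of a Poisson regression with a log link, fitted by iteratively reweighted least squares (IRLS) in plain NumPy. In practice a tested library (for example statsmodels in Python or `glm` in R) would be used. The function name `poisson_glm`, the synthetic species counts, and the habitat covariate are assumptions for illustration.

```python
import numpy as np

def poisson_glm(X: np.ndarray, y: np.ndarray, n_iter: int = 50, tol: float = 1e-10) -> np.ndarray:
    """Fit log(E[y]) = X @ beta by iteratively reweighted least squares.

    X must already include an intercept column. Returns the coefficient vector.
    """
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        eta = X @ beta
        mu = np.exp(eta)                 # inverse of the log link
        z = eta + (y - mu) / mu          # working response
        XtW = X.T * mu                   # Poisson working weights for a log link are mu
        beta_new = np.linalg.solve(XtW @ X, XtW @ z)
        if np.max(np.abs(beta_new - beta)) < tol:
            return beta_new
        beta = beta_new
    return beta

# Synthetic counts: expected richness rises multiplicatively with a standardized habitat covariate
rng = np.random.default_rng(1)
habitat = rng.normal(0, 1, 500)
y = rng.poisson(np.exp(1.0 + 0.5 * habitat))
X = np.column_stack([np.ones_like(habitat), habitat])
print(poisson_glm(X, y))   # estimates should land near the true values [1.0, 0.5]
```

Unlike a straight-line fit to raw counts, this model cannot predict negative counts, and its variance grows with the mean, as it does for many ecological count variables.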

Q 7. Describe your experience with various GIS software (e.g., ArcGIS, QGIS).

I’m proficient in various GIS software packages, with extensive experience in both ArcGIS and QGIS.

- ArcGIS: I have used ArcGIS extensively for spatial data management, analysis, and visualization. My experience includes geoprocessing tasks such as raster and vector data manipulation, spatial analysis tools (overlay analysis, proximity analysis), and creating maps and charts for reporting and presentations. I’ve used ArcGIS extensions such as Spatial Analyst and 3D Analyst for advanced spatial analysis and modeling.

- QGIS: I’m also fluent in QGIS and value its flexibility. I’ve used QGIS for the same kinds of tasks as ArcGIS, including data processing, spatial analysis, and cartography. Because it is open source, it can be customized and integrated with other open-source tools and with programming languages such as Python for more advanced data manipulation and modeling.

My experience with both ArcGIS and QGIS allows me to select the most appropriate software based on project requirements, budget, and data volume. I can leverage the strengths of each platform to effectively manage and analyze spatial environmental data.

Q 8. How do you assess the accuracy and reliability of environmental models?

Assessing the accuracy and reliability of environmental models is crucial for ensuring their usefulness in decision-making. We employ a multifaceted approach, combining statistical measures with expert judgment. Accuracy refers to how close the model’s predictions are to the observed values, while reliability reflects the model’s consistency and predictability over time and across different scenarios.

We use several key metrics. Goodness-of-fit statistics like R-squared, RMSE (Root Mean Square Error), and MAE (Mean Absolute Error) quantify the difference between predicted and observed values. A higher R-squared (closer to 1) indicates better fit, while lower RMSE and MAE values signify less error. However, these metrics alone are insufficient.
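The three goodness-of-fit statistics above can be computed in a few lines. Here is a minimal sketch, where the helper name `fit_metrics` and the streamflow numbers are hypothetical. R-squared is taken as 1 - SS_res / SS_tot, which can become negative when a model does worse than simply predicting the observed mean.

```python
import numpy as np

def fit_metrics(observed, predicted) -> dict:
    """Return R-squared, RMSE and MAE for paired observed/predicted values."""
    obs = np.asarray(observed, dtype=float)
    pred = np.asarray(predicted, dtype=float)
    resid = obs - pred
    ss_res = np.sum(resid ** 2)                 # residual sum of squares
    ss_tot = np.sum((obs - obs.mean()) ** 2)    # total sum of squares around the mean
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "rmse": np.sqrt(np.mean(resid ** 2)),   # penalizes large errors more heavily
        "mae": np.mean(np.abs(resid)),          # average error magnitude
    }

# Hypothetical daily streamflow (m^3/s): observations vs. model output
obs = [12.0, 15.0, 9.0, 20.0, 14.0]
pred = [11.0, 16.0, 10.0, 18.0, 14.0]
print(fit_metrics(obs, pred))   # r2 ~ 0.894, rmse ~ 1.183, mae = 1.0
```

In hydrology the same 1 - SS_res / SS_tot expression is known as the Nash-Sutcliffe efficiency.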

Model validation is equally important. We use techniques such as hold-out testing and cross-validation, which evaluate the model’s performance on data not used to fit it. Sensitivity analysis helps identify the parameters most influential on model output, informing where improvement efforts should focus. Furthermore, we qualitatively assess the model’s output against our understanding of the system’s behavior: does it make sense in the real world? This involves comparing model predictions to expert knowledge, physical constraints, and plausible scenarios. For instance, if a model predicts negative water depths, it clearly indicates a flaw.

Finally, uncertainty analysis, discussed later, provides a critical evaluation of the model’s reliability, acknowledging the inherent limitations and variability in both the data and the model structure itself.

Q 9. Explain your experience with model calibration and validation.

Model calibration and validation are iterative processes essential for developing reliable environmental models. Calibration involves adjusting model parameters to best fit the observed data, while validation assesses the model’s performance on independent datasets. Think of it like this: calibration is fine-tuning a musical instrument, and validation is testing its performance in a concert.

In my experience, I’ve used various calibration techniques including manual adjustment based on visual inspection of model output versus observation plots, and automated methods such as least-squares optimization or more sophisticated techniques like Markov Chain Monte Carlo (MCMC) for more complex models. The choice of method depends heavily on the model’s complexity and data structure.

Validation is equally crucial. I typically employ k-fold cross-validation, dividing the data into k subsets, training the model on k-1 subsets, and validating on the remaining subset. This process is repeated k times, providing a robust estimate of model performance. Alternatively, I might use a separate dataset entirely, held back from the calibration process. A strong validation score, demonstrating good agreement between predicted and observed values, offers confidence in the model’s predictive power. If validation fails to show acceptable agreement, the model might require recalibration or even reformulation.

For instance, in a project modeling groundwater flow, I used a parameter estimation technique to calibrate a numerical groundwater model using historical water level data from monitoring wells. The calibrated model was then validated against independent measurements from wells not used in the calibration process. This allowed for assessment of the model’s predictive capability and identification of potential areas for improvement.
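The k-fold procedure described above can be sketched as follows. This is an illustration under stated assumptions: a straight-line fit stands in for whatever model is actually being calibrated, the helper names are hypothetical, and the rainfall-runoff data are synthetic. Random shuffling assumes observations are independent; for time series or spatially clustered data, blocked or spatial folds are usually more appropriate.

```python
import numpy as np

def kfold_indices(n: int, k: int, seed: int = 0):
    """Yield (train_idx, test_idx) pairs for k roughly equal, shuffled folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n), k)
    for i in range(k):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, test

def cv_rmse(x: np.ndarray, y: np.ndarray, k: int = 5) -> float:
    """k-fold cross-validated RMSE of a straight-line fit y ~ a + b*x."""
    fold_mse = []
    for train, test in kfold_indices(len(x), k):
        b, a = np.polyfit(x[train], y[train], deg=1)   # calibrate on k-1 folds
        pred = a + b * x[test]                          # validate on the held-out fold
        fold_mse.append(np.mean((y[test] - pred) ** 2))
    return float(np.sqrt(np.mean(fold_mse)))           # mean of fold MSEs, then square root

rng = np.random.default_rng(7)
rain = rng.uniform(0, 50, 200)                     # hypothetical daily rainfall, mm
runoff = 0.3 * rain + rng.normal(0, 2.0, 200)      # hypothetical runoff with noise sd 2
print(cv_rmse(rain, runoff, k=5))                  # should be close to the noise level (about 2)
```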

Q 10. What are the key considerations when selecting appropriate environmental models?

Selecting the appropriate environmental model is a critical step, requiring careful consideration of several factors. The most important factor is the specific environmental problem being addressed. This dictates the model’s required functionality and complexity. Other factors include data availability, computational resources, and the desired level of detail. Imagine choosing the right tool for a job—you wouldn’t use a sledgehammer to crack a nut.

- Spatial and Temporal Scales: Does the problem require a highly detailed model at a local scale, or a coarser resolution over a larger area? The choice between a simple regression model and a complex agent-based model depends heavily on this.

- Data Availability: The model’s complexity should be commensurate with the available data. An intricate model cannot be reliably parameterized or validated if suitable data are scarce.

- Computational Resources: Some models are computationally intensive and may require powerful computers and software. Practical limitations need to be considered.

- Model Complexity vs. Simplicity: A simple model is easier to understand, calibrate and validate, but may not capture all the relevant complexities. A complex model might capture more nuances but might be more difficult to interpret.

- Model Purpose: What are the objectives? Is it forecasting, impact assessment, or understanding underlying processes? This should guide model selection.

For instance, if the task is predicting the spread of invasive species over a large geographical area, a spatially explicit individual-based model may be appropriate. However, if the aim is to understand the relationship between precipitation and streamflow in a specific watershed, a simple hydrological model might suffice.

Q 11. Describe your experience with different types of environmental sensors and data acquisition techniques.

My experience encompasses a wide range of environmental sensors and data acquisition techniques. This includes in-situ measurements using various sensors, remote sensing techniques, and the use of existing datasets from government agencies and research institutions.

- In-situ Measurements: This involves direct measurement of environmental parameters at specific locations. Examples include using water quality sensors (measuring pH, dissolved oxygen, turbidity), weather stations (measuring temperature, precipitation, wind speed), and soil moisture sensors. These are often deployed in networks to allow spatial and temporal analysis of variables.

- Remote Sensing: This includes using satellite and aerial imagery (e.g., Landsat, Sentinel) for monitoring land cover, vegetation health, and water quality at larger spatial scales. Data acquisition involves processing imagery to extract relevant information using specialized software.

- Existing Datasets: Leveraging freely available data from organizations like NASA, USGS, NOAA greatly enhances analytical capabilities. However, careful consideration is given to data quality, resolution, and metadata associated with these sources.

Data acquisition also involves careful attention to data quality, including sensor calibration and validation, correction for sensor drift, and ensuring data integrity. I have extensive experience in data preprocessing, including cleaning, filtering, and handling missing data using various techniques. I’m also proficient in configuring data logging and storage protocols to keep long-term records secure and accessible.
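One common way to account for sensor drift is to prorate the error found at the end-of-deployment check back over the record. This assumes drift accumulates linearly from zero at the last calibration, which should be verified for each sensor type. Here is a minimal sketch, where the function name and the logger values are hypothetical.

```python
import numpy as np

def linear_drift_correction(t, readings, drift_at_end):
    """Remove drift assumed to grow linearly from zero at the first timestamp
    to `drift_at_end` (sensor reading minus reference value) at the last one.
    """
    t = np.asarray(t, dtype=float)
    readings = np.asarray(readings, dtype=float)
    fraction = (t - t[0]) / (t[-1] - t[0])   # 0 at deployment, 1 at the end-of-deployment check
    return readings - drift_at_end * fraction

# Hypothetical dissolved-oxygen logger that reads 0.4 mg/L high at the end-of-deployment check
hours = np.array([0, 24, 48, 72, 96])
do_raw = np.array([8.0, 7.9, 8.1, 8.2, 8.4])
print(linear_drift_correction(hours, do_raw, drift_at_end=0.4))
```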

Q 12. How do you visualize and interpret environmental data using different visualization tools?

Visualizing and interpreting environmental data is crucial for effective communication and understanding. I utilize various tools and techniques, selecting the most appropriate method depending on the nature of the data and the intended audience.

- Geographic Information Systems (GIS): GIS software (like ArcGIS or QGIS) is essential for visualizing spatially referenced data, creating maps of environmental variables, and overlaying different datasets. For example, mapping pollutant concentrations alongside population density to identify areas at high risk.

- Data Visualization Libraries (Python): Libraries like Matplotlib, Seaborn, and Plotly make it possible to create various types of plots (scatter plots, histograms, time series plots, heatmaps) to analyze relationships between variables. For example, visualizing trends in air quality over time.

- Interactive Dashboards: Tools like Tableau or Power BI allow creating interactive dashboards to explore environmental data dynamically, allowing stakeholders to interact with the data and generate customized views.

The choice of visualization method depends on the data type and the message to be conveyed. A simple line graph might be suitable for showing temporal trends, while a heatmap might be best for illustrating spatial patterns. Clear labels, legends, and titles are crucial for effective communication.

For example, I once used GIS to visualize the spatial distribution of soil erosion risk factors across a landscape, aiding in targeted conservation planning. Another time, I used time series plots in Python to present changes in water quality over a period of several years to stakeholders, thereby illustrating the effect of a new treatment plant.

Q 13. Explain your understanding of uncertainty analysis in environmental modeling.

Uncertainty analysis in environmental modeling is critical because environmental systems are inherently complex and involve many sources of uncertainty. These include measurement errors in data, incomplete understanding of the processes being modeled, and simplifying assumptions made during model development. Ignoring uncertainty can lead to misleading predictions and flawed decision-making.

Uncertainty is often categorized into aleatoric (random, irreducible uncertainty) and epistemic (reducible uncertainty due to lack of knowledge). Aleatoric uncertainty reflects inherent variability in the system, for example, the stochastic nature of weather events. Epistemic uncertainty arises from imperfect model structure, parameter values, and data quality – things we could potentially address with better data or models.

We assess uncertainty using various techniques. Sensitivity analysis helps identify parameters that significantly influence model output. Monte Carlo simulation involves running the model many times using different parameter values randomly sampled from probability distributions, providing a range of predictions and their associated probabilities. Bayesian methods provide a formal framework to incorporate prior knowledge and update uncertainty as new data becomes available.
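Here is a minimal sketch of Monte Carlo uncertainty propagation. A simple first-order decay model, C(t) = C0 * exp(-k t), stands in for a real environmental model, and the parameter distributions are hypothetical choices for illustration. Each run draws one parameter set, and the spread of outputs summarizes the resulting predictive uncertainty.

```python
import numpy as np

def concentration(c0, k, t):
    """First-order decay model: C(t) = C0 * exp(-k * t)."""
    return c0 * np.exp(-k * t)

rng = np.random.default_rng(42)
n_runs = 10_000

# Hypothetical parameter uncertainty (distributions chosen for illustration only)
c0 = rng.normal(loc=50.0, scale=5.0, size=n_runs)              # initial concentration, mg/L
k = rng.lognormal(mean=np.log(0.1), sigma=0.3, size=n_runs)    # decay rate, 1/day (median 0.1)

c10 = concentration(c0, k, t=10.0)                              # concentration after 10 days, one value per run
low, median, high = np.percentile(c10, [2.5, 50, 97.5])
print(f"median {median:.1f} mg/L, 95% interval {low:.1f} to {high:.1f} mg/L")
```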

Q 14. How do you incorporate uncertainty into your environmental model predictions?

Incorporating uncertainty into model predictions is crucial for producing realistic and reliable results. Ignoring uncertainty can lead to overly confident and potentially inaccurate predictions. Instead of presenting single-point predictions, we focus on providing uncertainty ranges, allowing decision-makers to fully understand the range of possible outcomes.

Several methods incorporate uncertainty into model predictions. Probabilistic forecasting uses Monte Carlo simulations to generate a distribution of predictions, illustrating the likely range of outcomes rather than a single value. We present these results as probability distributions or as intervals, for example the range that contains the central 95% of simulated outcomes.

Ensemble modeling involves running multiple models with different structures or parameter sets, combining the results to improve prediction accuracy and quantify uncertainty. The spread of predictions from different models provides a measure of uncertainty.

For example, in a climate change impact assessment, we might use a probabilistic forecast to predict future sea-level rise, providing a range of potential sea-level rise scenarios with their associated probabilities instead of a single projection. This informs coastal management strategies by illustrating the range of possible impacts and helping plan for a range of outcomes.
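For the ensemble approach described above, here is a minimal sketch of summarizing several models’ projections into a central estimate and a spread. The projection values are made up, and equal weighting of models is an assumption; weighted ensembles are also used.

```python
import numpy as np

# Hypothetical projections (rows = models, columns = successive forecast horizons)
# of the same quantity, e.g. sea-level rise in cm; the numbers are illustrative only.
projections = np.array([
    [10.0, 21.0, 33.0],
    [12.0, 24.0, 38.0],
    [ 9.0, 19.0, 30.0],
    [11.0, 23.0, 36.0],
])

ensemble_mean = projections.mean(axis=0)            # equal-weight central estimate per horizon
ensemble_sd = projections.std(axis=0, ddof=1)       # between-model spread, a simple uncertainty measure
ensemble_range = projections.max(axis=0) - projections.min(axis=0)

for h, (m, s, r) in enumerate(zip(ensemble_mean, ensemble_sd, ensemble_range), start=1):
    print(f"horizon {h}: mean {m:.1f}, sd {s:.1f}, range {r:.1f}")
```

In this toy example the spread widens with the forecast horizon, which is the pattern decision-makers need to see when planning for a range of outcomes.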

Q 15. Describe your experience working with large environmental datasets.

Working with large environmental datasets is a core part of my expertise. It’s not just about the sheer volume of data – terabytes of satellite imagery, sensor readings, or climate model outputs – but also about its complexity: diverse formats, inconsistencies, and the need for efficient processing. My experience involves tackling these challenges using a multi-pronged approach.

For instance, in a project analyzing global deforestation patterns using Landsat imagery, I first streamlined data ingestion by using cloud-based platforms like Google Earth Engine to handle the massive volume of data efficiently. Then, I leveraged parallel processing techniques in Python (with libraries like Dask) to speed up computationally intensive tasks like image classification and change detection. Data cleaning was critical; I developed automated scripts to identify and handle missing values and outliers. This ensured the quality of the downstream analysis, leading to accurate deforestation rate estimations.

Another project involved working with a national-scale air quality monitoring network. Here, the challenge was not only the size of the data but also its heterogeneity, with data coming from various sources with different formats and reporting frequencies. I used data wrangling techniques in R to standardize data formats, address missing values through imputation strategies, and perform quality control checks to ensure data accuracy and reliability. This ultimately facilitated the development of a robust air quality model.


Q 16. What programming languages and statistical software are you proficient in (e.g., R, Python)?

My proficiency spans several key programming languages and statistical software crucial for environmental data analysis and modeling. I’m highly proficient in R and Python. R provides powerful statistical capabilities and dedicated packages for ecological and environmental analysis (e.g., vegan, raster, sp), while Python offers flexibility and scalability, especially for handling large datasets and integrating with other tools through its extensive libraries like NumPy, pandas, scikit-learn, and GeoPandas.

I also have experience with specialized software like ArcGIS and QGIS for geospatial data analysis and visualization. I use these tools for tasks like map creation, spatial statistics, and geoprocessing. The choice of programming language and software depends on the specific project requirements and the nature of the data.

Q 17. Explain your experience with version control systems (e.g., Git).

Version control using Git is an integral part of my workflow. It’s essential for managing code, data, and documentation, especially in collaborative projects. I understand the importance of branching, merging, and resolving conflicts. I utilize platforms like GitHub and GitLab for project hosting and collaboration.

For example, in a recent project involving the development of a hydrological model, using Git allowed multiple team members to work concurrently on different aspects of the model without overwriting each other’s work. Git’s branching system allowed us to explore different model configurations and compare their performance effectively. Furthermore, it provided a complete history of all changes, facilitating traceability and allowing for easy rollback to previous versions if needed.

Q 18. Describe your approach to troubleshooting errors in environmental models.

Troubleshooting errors in environmental models is a systematic process that requires careful investigation and a methodical approach. I employ a multi-step strategy:

- Reproduce the error: First, I ensure I can consistently reproduce the error. This often involves checking the input data, model parameters, and code execution environment.

- Isolate the source: Once I can reproduce it, I try to pinpoint the source. I might use debugging tools to step through the code, examine intermediate results, and check for inconsistencies.

- Examine model assumptions: I carefully review the assumptions underlying the model. Are the data appropriate? Are the model parameters realistic? Are there limitations in the model’s design or its applicability to the specific context?

- Consult documentation and literature: I leverage available documentation for the software or model being used. I also review relevant scientific literature to better understand potential sources of error and explore possible solutions.

- Simplify the model: Sometimes, simplifying the model can help identify the problem. For instance, I might start by testing the model with a smaller, simplified dataset or with reduced complexity.

- Seek external help: If necessary, I’ll consult with colleagues or experts in the field for assistance.

Think of it like diagnosing a car problem – you need to systematically check different parts until you pinpoint the issue.

Q 19. How do you communicate complex environmental data and modeling results to non-technical audiences?

Communicating complex environmental data and modeling results to non-technical audiences requires clear and concise language, avoiding jargon. I utilize a multi-faceted approach:

- Visualizations: I rely heavily on clear and informative visuals: charts, graphs, maps, and infographics. Data visualization makes complex information more accessible and engaging.

- Analogies and storytelling: I use relatable analogies to explain technical concepts, making them easier to understand. I also try to embed results in a compelling narrative. For example, instead of saying ‘the model predicts a 20% increase in flood risk,’ I might say, ‘imagine this neighborhood being flooded 20% more often in the coming years due to changing weather patterns.’

- Simplified summaries: I provide concise summaries of key findings in plain language, avoiding technical terminology.

- Interactive tools and platforms: For larger or more interactive presentations, I leverage interactive dashboards or web applications that allow non-technical users to explore data and results at their own pace.

The goal is to make the information understandable, relevant, and actionable for the audience.

Q 20. What are some ethical considerations in environmental data analysis and modeling?

Ethical considerations are paramount in environmental data analysis and modeling.

- Data integrity and transparency: Maintaining data integrity is crucial. This involves ensuring data accuracy, completeness, and appropriate handling of missing values. Transparency is also vital; the methods used for data collection, analysis, and modeling should be clearly documented and accessible.

- Bias and fairness: It’s essential to be aware of and mitigate biases in data and models. Bias can arise from various sources, including data collection methods, model assumptions, and the selection of variables.

- Environmental justice: The results of environmental analyses and models often have significant implications for environmental justice. Therefore, it’s crucial to examine how the conclusions, and the decisions based on them, affect vulnerable communities, so that those communities do not bear a disproportionate share of environmental burdens.

- Data privacy and security: Protecting the privacy of individuals and ensuring the security of environmental data are also crucial ethical considerations.

- Conflicts of interest: It’s important to avoid conflicts of interest that might compromise the objectivity and integrity of the analysis or modeling process.

Ethical considerations guide my work, ensuring responsible and impactful environmental research.

Q 21. Explain your experience with environmental regulations and compliance.

My experience with environmental regulations and compliance is closely tied to my analytical work. Understanding relevant regulations is crucial for ensuring the validity and applicability of environmental studies.

I have worked on projects that require compliance with regulations like the Clean Water Act (CWA) and the Clean Air Act (CAA) in the US. For example, in a water quality assessment project, I had to ensure that our data collection methods and analytical approaches met the standards and requirements specified by the CWA. This included adhering to specific protocols for water sampling, laboratory analysis, and data reporting. Similarly, in air quality modeling projects, I ensured the models used complied with the relevant CAA requirements and guidance documents. This involved using EPA-approved models and methodologies, incorporating the necessary input data, and making sure the model outputs met the required reporting specifications. My understanding of these regulations is not only crucial for the legal validity of my work but also for the practical interpretation and application of the results.

Q 22. Describe a challenging environmental data analysis project you worked on and how you overcame the challenges.

One particularly challenging project involved predicting the spread of invasive species in a complex estuarine ecosystem. The challenge stemmed from the high dimensionality of the data – we had hydrological data, satellite imagery, water quality parameters, and species distribution records, all with varying resolutions and levels of uncertainty. Furthermore, the interactions between these factors were non-linear and not easily captured by traditional statistical methods.

To overcome this, we employed a multi-faceted approach. First, we pre-processed the data rigorously, dealing with missing values using imputation techniques like k-nearest neighbors and handling different spatial resolutions through resampling and geostatistical methods. We then applied Principal Component Analysis (PCA) to compress the correlated predictors into a smaller set of uncorrelated components, using the component loadings to see which original variables contributed most. This simplified the model without significant information loss.
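The k-nearest-neighbors imputation step can be sketched in plain Python as follows. This is a hypothetical illustration, not the project's code: `knn_impute` is a name introduced here, the station values are invented, and distances are computed on unscaled values over the columns both rows share. In practice variables would usually be standardized first, and a library routine such as scikit-learn's `KNNImputer` would typically be used instead.

```python
import math


def knn_impute(rows, k=2):
    """Fill None entries with the mean of that column among the k nearest rows.

    Distance is the root-mean-square difference over columns that both rows
    have observed. Donor values always come from the original rows, so an
    imputed value is never reused to impute another one.
    """
    def distance(a, b):
        shared = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
        if not shared:
            return math.inf  # no overlap: treat as infinitely far away
        return math.sqrt(sum((x - y) ** 2 for x, y in shared) / len(shared))

    filled = [list(r) for r in rows]
    for i, row in enumerate(rows):
        for j, value in enumerate(row):
            if value is not None:
                continue
            # Candidate donors are the other rows that observed column j.
            donors = [
                (distance(row, other), other[j])
                for idx, other in enumerate(rows)
                if idx != i and other[j] is not None
            ]
            donors.sort(key=lambda d: d[0])
            nearest = [v for _, v in donors[:k]]
            if nearest:
                filled[i][j] = sum(nearest) / len(nearest)
    return filled


# Hypothetical stations: [temperature (°C), salinity (PSU), dissolved oxygen (mg/L)]
stations = [
    [20.0, 30.0, 7.0],
    [20.5, 31.0, None],  # oxygen reading missing
    [21.0, 29.0, 6.0],
    [35.0, 5.0, 2.0],
]
print(knn_impute(stations, k=2)[1])
```

The two stations most similar in temperature and salinity donate their oxygen values, so the gap is filled with their mean rather than with the dataset-wide mean, which the dissimilar fourth station would drag down.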

Finally, we chose a machine learning algorithm well suited to non-linear relationships and interactions, specifically a Random Forest model, keeping in mind that Random Forests do not account for spatial autocorrelation on their own. We validated the model using rigorous cross-validation and compared its performance against simpler models such as generalized linear models. The Random Forest model proved significantly more accurate in predicting the spread of the invasive species, allowing for more targeted management strategies. The key to success was a systematic approach that combined data cleaning and transformation, careful model selection, and thorough validation.
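The answer does not say how the cross-validation folds were built. For spatially autocorrelated data, one common option is spatially blocked cross-validation: points are grouped into grid cells and whole cells are held out together, so test points are not simply near-duplicates of nearby training points. The sketch below shows only the fold assignment. The function names, the square grid, and the round-robin assignment of blocks to folds are illustrative assumptions; the resulting index lists would then be used to fit and score the Random Forest and GLM.

```python
import math
from collections import defaultdict


def spatial_block_folds(coords, block_size, n_folds):
    """Assign each (x, y) point to a fold via the square grid block it falls in.

    All points in the same block share a fold, so a block is either entirely
    in training or entirely in testing.
    """
    blocks = defaultdict(list)
    for idx, (x, y) in enumerate(coords):
        key = (math.floor(x / block_size), math.floor(y / block_size))
        blocks[key].append(idx)
    folds = [0] * len(coords)
    # Deterministic round-robin over sorted block keys; a real workflow might
    # shuffle the blocks with a fixed seed instead.
    for b, key in enumerate(sorted(blocks)):
        for idx in blocks[key]:
            folds[idx] = b % n_folds
    return folds


def train_test_splits(folds, n_folds):
    """Yield (train_indices, test_indices) for each fold."""
    for f in range(n_folds):
        test = [i for i, g in enumerate(folds) if g == f]
        train = [i for i, g in enumerate(folds) if g != f]
        yield train, test


# Hypothetical survey points in kilometres.
points = [(0.1, 0.2), (0.4, 0.9), (1.5, 0.3), (1.7, 0.8), (0.2, 1.6), (2.5, 2.5)]
folds = spatial_block_folds(points, block_size=1.0, n_folds=2)
for train, test in train_test_splits(folds, 2):
    print("train:", train, "test:", test)
```

The block size is the key design choice: it should be at least as large as the distance over which residuals remain correlated, otherwise the blocked estimate will still be optimistic.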

Q 23. How do you stay updated with the latest advancements in environmental data analysis and modeling?

Staying current in this rapidly evolving field requires a multi-pronged approach. I regularly read peer-reviewed journals such as Environmental Modelling & Software, Remote Sensing of Environment, and Ecological Modelling. I also actively participate in online communities and forums, such as those hosted by professional organizations like the Ecological Society of America and the Society of Environmental Toxicology and Chemistry.

Attending conferences and workshops is crucial. They provide opportunities to hear about the latest research, network with other professionals, and learn about new software and techniques. I also actively follow influential researchers and organizations on platforms like Twitter and LinkedIn. Finally, I dedicate time to self-directed learning through online courses and tutorials on platforms like Coursera and edX, focusing on emerging techniques in machine learning and big data analytics applied to environmental problems.

Q 24. What are some emerging trends in environmental data analysis and modeling?

Several exciting trends are shaping the future of environmental data analysis and modeling. One is the increasing use of big data and high-performance computing. The sheer volume of data now available from sensors, satellites, and simulations demands advanced computational tools to process and analyze it effectively. This facilitates more sophisticated models and allows us to address complex environmental challenges at unprecedented scales.

Another significant trend is the integration of machine learning and artificial intelligence. Algorithms such as deep learning are being used to analyze complex patterns in environmental data, predict future scenarios, and optimize resource management. For instance, deep learning is being used to improve components of climate models and to forecast extreme weather events.

Furthermore, there’s a growing emphasis on citizen science and open data initiatives. This approach leverages the contributions of volunteers to collect and analyze environmental data, leading to more comprehensive and geographically diverse datasets. This also fosters greater transparency and public engagement in environmental monitoring and decision-making.

Finally, the development of more sophisticated coupled models, which integrate different aspects of the environment (e.g., hydrology, ecology, climate), is becoming increasingly prevalent, leading to a more holistic and accurate understanding of complex environmental systems.

Q 25. Describe your experience with machine learning techniques in environmental modeling.

I have extensive experience applying machine learning techniques in environmental modeling. My work has involved using various algorithms, including Random Forests, Support Vector Machines (SVMs), and Neural Networks, to address diverse challenges. For example, I’ve used Random Forests to model species distribution, predicting where endangered species might thrive or invasive species might spread. This involves using environmental variables as predictors, such as temperature, precipitation, and land cover.

SVMs have been valuable in classifying land cover types from remotely sensed imagery. The ability of SVMs to handle high-dimensional data and find optimal separating hyperplanes is particularly useful in this context. Neural networks, particularly convolutional neural networks (CNNs), are increasingly being applied to analyze satellite imagery for tasks like detecting deforestation or monitoring water quality. The ability of CNNs to automatically learn hierarchical features from images makes them powerful tools for these tasks.
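To make the "optimal separating hyperplane" idea concrete, here is a toy linear SVM trained by full-batch subgradient descent on the regularized hinge loss. Everything here is a hypothetical illustration: the two-band red/near-infrared reflectance values are invented, `train_linear_svm` and `predict` are names introduced for the example, and the short fixed training run is not tuned to reach the exact maximum-margin solution. Real land-cover work would use a tested implementation such as scikit-learn's `LinearSVC` or a kernel `SVC`.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Minimize lam/2 * ||w||^2 + mean(hinge loss) with full-batch subgradient descent.

    X is a list of feature vectors; labels in y must be +1 or -1.
    """
    n, n_features = len(X), len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # inside the margin or misclassified: hinge loss is active
                for j in range(n_features):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Hypothetical pixels: [red reflectance, near-infrared reflectance]
pixels = [[0.05, 0.45], [0.06, 0.50], [0.04, 0.40],   # vegetation (+1)
          [0.25, 0.30], [0.30, 0.35], [0.28, 0.32]]   # bare soil (-1)
labels = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(pixels, labels)
print(predict(w, b, [0.05, 0.48]), predict(w, b, [0.27, 0.31]))
```

Vegetation's low red and high near-infrared reflectance puts it on one side of the learned hyperplane and bare soil on the other; with many spectral bands the same idea extends to high-dimensional feature spaces.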

A key aspect of my work is not just applying these algorithms but also carefully selecting the appropriate algorithm based on the specific problem and dataset. This includes considerations of data characteristics (e.g., linearity, dimensionality), the computational resources available, and the interpretability required for the model’s output.

Q 26. How do you ensure the reproducibility of your environmental modeling work?

Reproducibility is paramount in environmental modeling. I adhere to strict protocols to ensure that my work can be easily replicated by others. This begins with meticulous documentation of every step in the analysis process. I use version control systems like Git to track changes in code and data. This allows for easy rollback to previous versions if needed and facilitates collaboration.

All code is written in a modular and well-commented fashion, making it understandable and maintainable. I use reproducible research environments like Docker or conda to encapsulate all software dependencies, ensuring that the same software versions used during the analysis are available for replication. Data is stored in organized and well-documented formats, ideally using open formats like CSV or GeoTIFF.

Furthermore, all data processing and modeling steps are clearly defined and documented, often using literate programming techniques, which interleave code and narrative descriptions. Finally, I carefully describe all model parameters, input data, and assumptions in a comprehensive report or manuscript, making it clear how the results were obtained.
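One lightweight practice that complements Git and Docker or conda is writing a "run manifest" next to every model output: the random seed, the model parameters, the interpreter version, and a checksum of each input file, so a later reader can confirm they are re-running on identical data. The sketch below uses only the Python standard library. The manifest layout and the function names are hypothetical choices, not a standard format.

```python
import hashlib
import json
import platform
import random
import sys
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Checksum a file in chunks so large rasters need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_run_manifest(input_paths, params, seed, out_path):
    """Seed Python's RNG and record what is needed to repeat this run."""
    random.seed(seed)  # stochastic steps that use `random` now repeat exactly
    manifest = {
        "python_version": sys.version.split()[0],
        "platform": platform.platform(),
        "seed": seed,
        "parameters": params,
        "inputs": {str(p): sha256_of(p) for p in input_paths},
    }
    Path(out_path).write_text(json.dumps(manifest, indent=2, sort_keys=True))
    return manifest
```

A typical call would be `write_run_manifest(["stations.csv"], {"n_trees": 500}, seed=42, out_path="run_manifest.json")`, with the file names and parameters standing in for whatever the real analysis uses. If a checksum differs on a re-run, the input data changed and the results are not expected to match.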

Q 27. What are the limitations of using remote sensing data in environmental studies?

Remote sensing data offers invaluable insights into environmental processes, but limitations exist. One key limitation is spatial resolution. While high-resolution data is becoming increasingly available, it can be costly and may not always be suitable for large-scale studies. Lower resolution data might miss crucial fine-scale details relevant to ecological processes.

Another limitation is temporal resolution. A sensor's revisit interval can be too long to capture dynamic processes, and for optical sensors, cloud cover frequently obscures scenes, leaving further gaps in the record. In addition, processing some remote sensing data is time-consuming and requires significant technical skill.
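A common workaround for cloud gaps is compositing: combine several cloud-masked scenes of the same area and keep, for each pixel, the median of its clear observations. This trades temporal detail for spatial completeness, and pixels that are cloudy in every scene stay empty. The sketch below assumes the scenes are already co-registered, single-band grids with `None` marking masked pixels. The grids and values are hypothetical.

```python
from statistics import median


def median_composite(scenes):
    """Per-pixel median of clear observations across co-registered scenes.

    Each scene is a 2-D grid (list of rows) for the same area and band, with
    None marking cloud-masked pixels. Pixels with no clear observation stay None.
    """
    n_rows, n_cols = len(scenes[0]), len(scenes[0][0])
    composite = []
    for r in range(n_rows):
        row = []
        for c in range(n_cols):
            clear = [scene[r][c] for scene in scenes if scene[r][c] is not None]
            row.append(median(clear) if clear else None)
        composite.append(row)
    return composite


# Three hypothetical 2x2 NDVI scenes from different dates.
scene_1 = [[0.60, None], [0.20, None]]
scene_2 = [[0.70, 0.50], [None, None]]
scene_3 = [[0.65, 0.55], [0.30, None]]
print(median_composite([scene_1, scene_2, scene_3]))
```

The bottom-right pixel remains `None` because it was cloudy on every date, which is exactly the kind of persistent gap that forces a longer compositing window or a cloud-penetrating sensor.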

Furthermore, atmospheric effects can significantly impact the accuracy of remotely sensed data. Atmospheric conditions can scatter and absorb radiation, affecting the signal received by the sensor. Careful atmospheric correction is crucial, but it’s not always perfect. Finally, data interpretation can be challenging. While remote sensing data provides valuable information, it often requires ground-truthing and integration with other data sources to validate and interpret the results effectively.
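Ground-truthing is usually summarized with an accuracy assessment: compare the mapped class at each field-visited point with what was actually observed there. The sketch below computes overall accuracy, producer's accuracy (how much of each true class the map captured, the complement of omission error), and user's accuracy (how often a mapped class is correct on the ground, the complement of commission error). The class names and points are hypothetical.

```python
from collections import Counter


def accuracy_assessment(reference, predicted):
    """Return overall, producer's, and user's accuracy from paired labels.

    reference: ground-truth classes at validation points.
    predicted: classes the map assigns to the same points.
    """
    classes = sorted(set(reference) | set(predicted))
    pairs = Counter(zip(reference, predicted))  # confusion-matrix cells
    overall = sum(pairs[(c, c)] for c in classes) / len(reference)
    producers, users = {}, {}
    for c in classes:
        ref_total = sum(pairs[(c, p)] for p in classes)  # points truly of class c
        map_total = sum(pairs[(r, c)] for r in classes)  # points mapped as class c
        producers[c] = pairs[(c, c)] / ref_total if ref_total else None
        users[c] = pairs[(c, c)] / map_total if map_total else None
    return overall, producers, users


field = ["forest", "forest", "forest", "water", "water", "urban"]
mapped = ["forest", "forest", "urban", "water", "water", "urban"]
overall, producers, users = accuracy_assessment(field, mapped)
print(overall, producers, users)
```

Here one forest point was mapped as urban, so forest has a producer's accuracy below 1 (omission) while urban has a user's accuracy below 1 (commission), even though overall accuracy looks reasonable.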

Q 28. Explain your understanding of different types of environmental monitoring programs.

Environmental monitoring programs vary widely depending on the specific goals and resources. Broadly, they can be categorized into several types:

- Continuous monitoring involves automated, high-frequency measurements of environmental parameters, such as air quality or water flow. This approach is well suited for detecting real-time changes and immediate threats. Examples include weather stations and automated water quality sensors.

- Periodic monitoring involves taking measurements at regular intervals, such as monthly or annually. This approach is often more cost-effective than continuous monitoring but may miss rapid changes between sampling events. Examples include surveys of plant or animal populations.

- Event-based monitoring is triggered by specific events, such as a natural disaster or industrial accident. This approach focuses on assessing impacts and informing emergency response. An example is water quality monitoring after a flood.

- Long-term monitoring involves tracking environmental changes over extended periods, often decades. This provides valuable context for understanding long-term trends and impacts. Examples are ongoing climate change monitoring programs.

The choice of monitoring program depends on various factors, including the environmental parameter of interest, the spatial and temporal scale of interest, the available resources, and the specific research questions or management objectives. A well-designed program considers these aspects and uses the appropriate combination of methods to achieve its aims.
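The trade-off between continuous and periodic monitoring can be shown with a small simulation: a short pollution pulse that an hourly sensor records is invisible to a once-a-day grab sample taken at the wrong hour. The turbidity values, the alert threshold, and the 09:00 sampling time are all hypothetical.

```python
# Hypothetical hourly turbidity (NTU) over three days at one site:
# a steady baseline with a three-hour storm-runoff pulse on day two.
hourly = [5.0] * 72
for hour in range(30, 33):
    hourly[hour] = 80.0


def sample_periodically(series, interval, offset=0):
    """Keep every `interval`-th reading, starting at index `offset`."""
    return series[offset::interval]


def exceedances(series, threshold):
    """Count readings above an alert threshold."""
    return sum(1 for value in series if value > threshold)


THRESHOLD = 50.0  # hypothetical alert level
daily = sample_periodically(hourly, interval=24, offset=9)  # one grab sample at 09:00 each day

print("continuous:", max(hourly), exceedances(hourly, THRESHOLD))  # continuous: 80.0 3
print("daily grab:", max(daily), exceedances(daily, THRESHOLD))    # daily grab: 5.0 0
```

Shifting the grab sample to 06:00 (`offset=6`) happens to land on the pulse, which illustrates the underlying problem: with sparse sampling, whether a short event is detected depends on the timing rather than on the design.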

Key Topics to Learn for Environmental Data Analysis and Modeling Interview

- Statistical Methods: Regression analysis, time series analysis, hypothesis testing. Practical application: Analyzing trends in air pollution levels and predicting future concentrations.

- Spatial Data Analysis: GIS software (ArcGIS, QGIS), geostatistics, spatial interpolation. Practical application: Mapping pollutant distribution and identifying pollution hotspots.

- Environmental Modeling Techniques: Exposure assessment models, fate and transport models, ecological risk assessment models. Practical application: Simulating the impact of a proposed industrial plant on water quality.

- Data Management and Visualization: Data cleaning, data transformation, data visualization techniques (charts, maps). Practical application: Effectively communicating complex environmental data to stakeholders.

- Programming Languages: R, Python (with relevant libraries like pandas, scikit-learn, geopandas). Practical application: Automating data processing, model building, and analysis workflows.

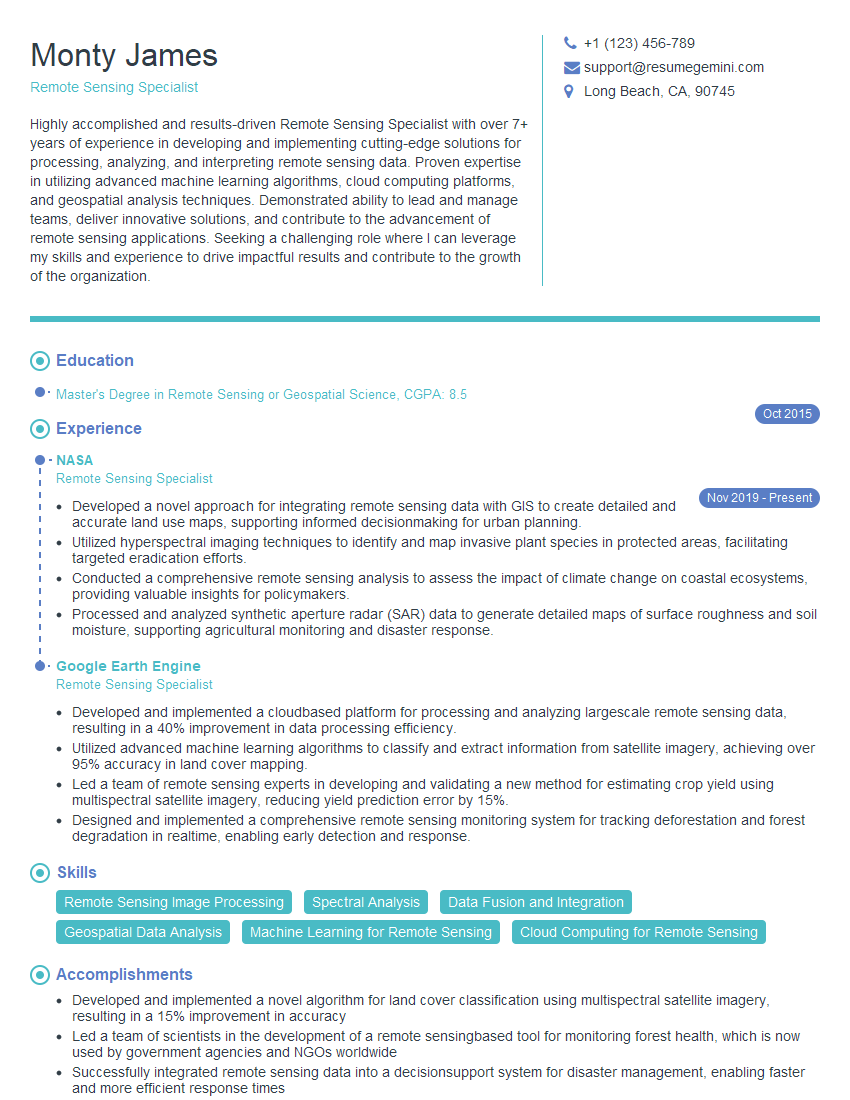

- Remote Sensing and Image Analysis: Processing satellite imagery to extract environmental information. Practical application: Monitoring deforestation rates or assessing the extent of a wildfire.

- Uncertainty and Sensitivity Analysis: Understanding and quantifying uncertainty in model predictions. Practical application: Evaluating the reliability of model outputs and making informed decisions.

- Case Studies and Research: Familiarize yourself with real-world applications of environmental data analysis and modeling. This demonstrates practical understanding beyond theory.
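For the spatial interpolation topic, inverse distance weighting (IDW) is one of the simplest methods to understand and implement: the estimate at an unsampled location is a weighted average of nearby measurements, with weights falling off as 1/d^p. The sketch below is a minimal version with hypothetical PM2.5 monitor locations. Geostatistical methods such as kriging instead model the spatial covariance and also provide prediction uncertainty, which IDW does not.

```python
import math


def idw(stations, target, power=2.0):
    """Inverse-distance-weighted estimate at `target`.

    stations: list of ((x, y), value) pairs; target: (x, y).
    Larger `power` gives nearby stations more influence.
    """
    num = 0.0
    den = 0.0
    for (x, y), value in stations:
        d = math.hypot(x - target[0], y - target[1])
        if d == 0:
            return value  # target coincides with a station: use its measurement
        weight = 1.0 / d ** power
        num += weight * value
        den += weight
    return num / den


# Hypothetical PM2.5 monitors (km coordinates, µg/m³).
monitors = [((0.0, 0.0), 10.0), ((2.0, 0.0), 30.0)]
print(idw(monitors, (1.0, 0.0)))  # midway between the two monitors
print(idw(monitors, (0.5, 0.0)))  # closer to the cleaner monitor
```

Applying `idw` over a regular grid of target points produces the kind of pollutant surface used to identify hotspots.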

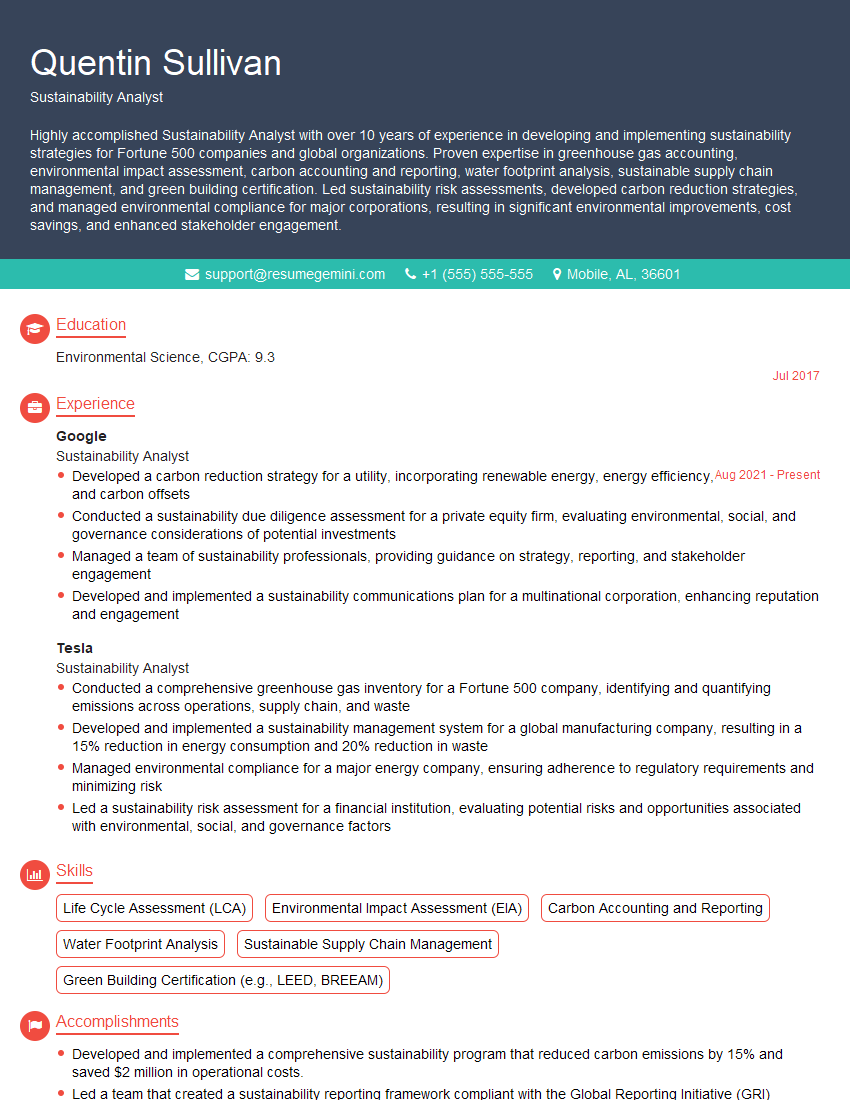

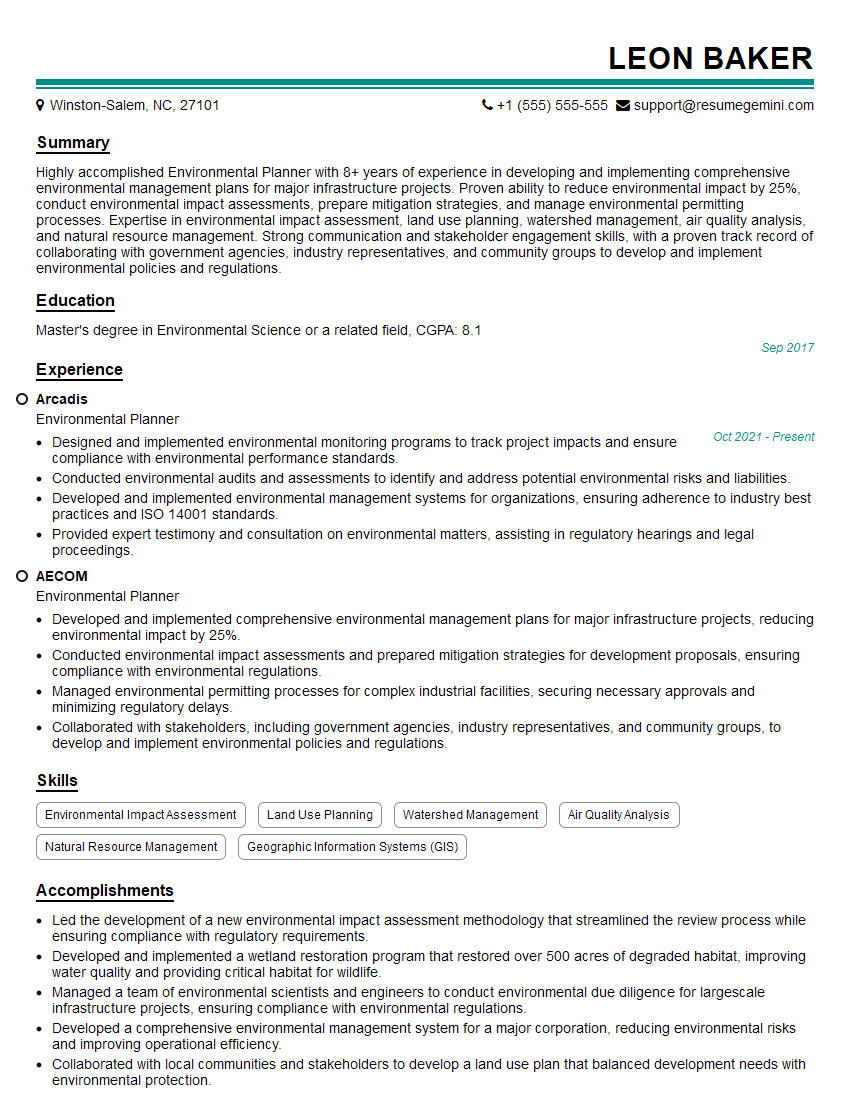
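Uncertainty analysis and fate modeling can be practiced together with a Monte Carlo sketch: draw uncertain inputs many times, run the model for each draw, and report percentiles of the output rather than a single number. The model here is simple first-order decay, C(t) = C0 · exp(-k·t). The input distributions (initial concentration and decay rate) are hypothetical assumptions chosen only for illustration.

```python
import math
import random
from statistics import quantiles


def first_order_decay(c0, k, t):
    """Concentration after t days under first-order decay: C(t) = C0 * exp(-k * t)."""
    return c0 * math.exp(-k * t)


def monte_carlo(n=10_000, t=10.0, seed=1):
    """Propagate input uncertainty; return the 5th, 50th and 95th percentiles of C(t)."""
    rng = random.Random(seed)  # fixed seed so the analysis is reproducible
    results = []
    for _ in range(n):
        c0 = rng.gauss(100.0, 10.0)          # hypothetical initial concentration, mg/L
        k = max(rng.gauss(0.10, 0.02), 0.0)  # hypothetical decay rate, 1/day, clipped at 0
        results.append(first_order_decay(c0, k, t))
    cuts = quantiles(results, n=20)  # 19 cut points at 5% steps
    return cuts[0], cuts[9], cuts[18]


p5, p50, p95 = monte_carlo()
print(f"C(10 days): median {p50:.1f} mg/L, 90% interval {p5:.1f} to {p95:.1f} mg/L")
```

Reporting the interval rather than only the median makes it clear how much the prediction depends on the poorly known decay rate. Rerunning with one input held fixed is a quick one-at-a-time sensitivity check.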

Next Steps

Mastering Environmental Data Analysis and Modeling opens doors to exciting and impactful careers in environmental protection, resource management, and scientific research. To maximize your job prospects, create a compelling, ATS-friendly resume. ResumeGemini is a trusted resource that can help you build a professional and effective resume that highlights your skills and experience in this field. Examples of resumes tailored to Environmental Data Analysis and Modeling are available to provide you with inspiration and guidance. Invest time in crafting a strong resume – it’s your first impression on potential employers.
