Cracking a skill-specific interview, such as one focused on experience with data collection software, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in an Interview on Experience with Data Collection Software

Q 1. Explain your experience with different data collection methodologies.

My experience encompasses a wide range of data collection methodologies, tailored to the specific project needs. I’m proficient in both quantitative and qualitative approaches. For quantitative data, I frequently employ surveys, using tools like Qualtrics or SurveyMonkey to gather structured data on attitudes, behaviors, and demographics. This is ideal when you need results that generalize to a larger population and data that can be analyzed statistically. For example, I recently used Qualtrics to conduct a customer satisfaction survey for a major telecom company, gathering data from over 1,000 respondents.

On the qualitative side, I’ve extensively used in-depth interviews and focus groups to gain rich, nuanced insights. These methods are particularly valuable for exploratory research, uncovering the ‘why’ behind behaviors or uncovering unexpected patterns. For instance, I used semi-structured interviews to understand customer perceptions of a new software product, identifying key areas for improvement before the official launch. I also have experience with observational studies, particularly in ethnographic research settings, where I document and analyze behaviors in natural environments. This type of data collection requires careful planning and a keen eye for detail. Selecting the right methodology hinges critically on the research questions and desired outcomes.

Q 2. What data collection software are you proficient in?

My proficiency spans various data collection software, each suited for different tasks. I’m highly skilled in using Qualtrics and SurveyMonkey for online surveys. These platforms allow for robust questionnaire design, data collection, and analysis. I’m also experienced with specialized software like SPSS for statistical analysis of large datasets and NVivo for qualitative data analysis, allowing me to code and analyze transcripts from interviews or focus groups. For web data, I’ve worked extensively with tools like Octoparse and Import.io to perform web scraping tasks efficiently and reliably. Finally, I have experience utilizing APIs to directly access and collect data from various sources, including social media platforms and e-commerce websites. My choice of software always depends on the project’s specific needs and the type of data being collected.

Q 3. Describe your experience with web scraping techniques and tools.

Web scraping is a crucial skill in my toolkit. I’m adept at using tools like Octoparse and Import.io to extract data from websites. My approach always starts with understanding the website’s structure and identifying the relevant data elements. I then use these tools to build extraction workflows that navigate the website, locate the necessary information, and extract it into a structured format. This includes handling pagination, dynamic content loading, and dealing with potential website changes. For example, I recently used Octoparse to scrape product information, including price, reviews, and descriptions, from an e-commerce platform for a market analysis project. It’s important to always respect a website’s robots.txt file and terms of service to ensure ethical and legal compliance. Furthermore, adding delays between requests is vital to avoid overloading the target website. Rotating proxies can reduce the chance of being blocked, but I use them only where the site’s terms permit scraping.
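As a minimal sketch of the robots.txt and delay points above, the following uses Python’s standard `urllib.robotparser`. The robots.txt content, the `research-bot` user agent, the URLs, and the `polite_crawl` helper are all hypothetical; a real crawler would download robots.txt from the site and plug in an actual HTTP client for `fetch`.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

def build_parser(robots_text):
    """Parse robots.txt text without making a network request."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser

def polite_crawl(urls, parser, user_agent, fetch, sleep=time.sleep, default_delay=1.0):
    """Fetch only the URLs robots.txt allows, pausing after each request."""
    delay = parser.crawl_delay(user_agent) or default_delay
    results = {}
    for url in urls:
        if not parser.can_fetch(user_agent, url):
            continue  # skip paths the site has disallowed
        results[url] = fetch(url)
        sleep(delay)
    return results

parser = build_parser(ROBOTS_TXT)
pauses = []
pages = polite_crawl(
    ["https://example.com/products?page=1", "https://example.com/private/admin"],
    parser,
    "research-bot",
    fetch=lambda url: f"<html>stub for {url}</html>",  # stand-in for a real HTTP call
    sleep=pauses.append,  # record the pauses instead of actually waiting
)
print(list(pages), pauses)
```

Passing `fetch` and `sleep` in as parameters keeps the sketch testable offline; in production the default `time.sleep` would apply the delay for real.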

Q 4. How do you ensure data quality during the collection process?

Ensuring data quality is paramount. My approach is multi-faceted and begins even before data collection. This includes meticulous questionnaire design to minimize ambiguity and maximize clarity. For surveys, pre-testing is crucial to identify and rectify any issues with question wording or flow. During data collection, I employ various validation checks. For example, range checks and consistency checks in surveys prevent the entry of clearly erroneous data points. Data cleaning protocols are integrated into the collection process to handle outliers and missing values. Automated checks and manual reviews are used to identify and correct inconsistencies or anomalies. For instance, I developed a custom Python script to flag potentially erroneous data points in a large dataset based on statistical outliers. Regular quality control checkpoints throughout the process are essential for maintaining high standards.
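The kind of outlier-flagging script mentioned above could look something like this sketch. It is not the original script: it assumes a modified z-score based on the median and the median absolute deviation (MAD), with the commonly used 3.5 cutoff, and the `flag_outliers` name and sample ages are illustrative.

```python
from statistics import median

def flag_outliers(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds the threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # no spread to measure against, so nothing can be flagged
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]

ages = [34, 29, 41, 38, 200, 33, 36]
suspect = flag_outliers(ages)
print([ages[i] for i in suspect])
```

The median-based score is used here because a single extreme value inflates the mean and standard deviation, which can hide that same value from a plain z-score check on a small sample. Flagged rows are candidates for manual review rather than automatic deletion.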

Q 5. What are the ethical considerations involved in data collection?

Ethical considerations are paramount in data collection. Informed consent is crucial, ensuring participants understand the purpose of the research, how their data will be used, and their right to withdraw. Data privacy is strictly adhered to; I follow all relevant regulations like GDPR and CCPA to protect personal information. Anonymization and de-identification techniques are employed whenever possible. Transparency is key; I clearly communicate the data collection methods and any potential risks to participants. Furthermore, I always prioritize data security, employing robust measures to protect collected data from unauthorized access or breaches. My approach is guided by ethical principles to ensure responsible and trustworthy data handling. I consistently review ethical guidelines to stay updated on best practices and potential changes in regulations.

Q 6. Explain your experience with data cleaning and preprocessing.

Data cleaning and preprocessing are integral to my workflow. It’s a multi-step process starting with handling missing data. I use various imputation techniques, such as mean/median imputation or more sophisticated methods like k-nearest neighbors, depending on the nature of the data and the extent of missing values. Outliers are addressed through careful examination; some represent genuine extreme values, while others reflect errors. I identify outliers using box plots, Z-scores, or the IQR (interquartile range) rule, then decide case by case whether to keep, correct, or remove them. Data transformation is another crucial step; this includes standardizing or normalizing data to improve model performance or to make variables comparable. For example, I frequently use techniques like log transformation or scaling to handle skewed data. Inconsistencies in data entry are also addressed through standardization, and coding schemes are carefully developed for qualitative data. This meticulous process ensures the data is reliable and suitable for analysis.
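Three of the steps above (median imputation, the IQR rule, and a log transform) can be sketched with the standard library alone. The function names and the income figures are illustrative, and the 1.5 multiplier is the conventional choice rather than a fixed rule.

```python
import math
from statistics import median, quantiles

def impute_median(values):
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def iqr_bounds(values, k=1.5):
    """Return the (lower, upper) fences of the IQR rule."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def log_transform(values):
    """Compress right-skewed, non-negative data with log(1 + x)."""
    return [math.log1p(v) for v in values]

incomes = [32000, None, 41000, 38000, 250000, 36000]
filled = impute_median(incomes)
low, high = iqr_bounds(filled)
outliers = [v for v in filled if not low <= v <= high]
print(filled, outliers)
```

Whether the flagged income is an error or a genuine extreme value still needs a human decision; the log transform is one way to keep such a value while limiting its influence.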

Q 7. How do you handle incomplete or inconsistent data?

Handling incomplete or inconsistent data requires a thoughtful approach. For incomplete data, I first try to identify the reasons behind the missing values – are they missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR)? Different imputation techniques are suitable depending on the pattern of missingness. For inconsistent data, I examine the discrepancies carefully. Are they due to data entry errors, or do they reflect real variations requiring further investigation? I use various data quality checks like consistency checks and range checks to identify such issues. Data cleaning tools and scripting in languages like Python (with libraries like Pandas) are essential for efficiently managing large datasets. When faced with substantial inconsistencies or missing data, I might revisit the data collection process to understand and potentially resolve the issues. For example, if a significant portion of a specific variable is missing, I may need to consider alternative analysis methods or revisit the questionnaire design in future studies to gather more complete data.

Q 8. How do you choose the appropriate data collection method for a given project?

Choosing the right data collection method is crucial for a successful project. It depends heavily on the research question, target population, budget, and desired level of data quality. Think of it like choosing the right tool for a job – you wouldn’t use a hammer to drive a screw.

- Surveys: Ideal for collecting large amounts of structured data quickly and efficiently from a large sample size. For example, I used online surveys powered by Qualtrics to gather customer feedback on a new product launch, analyzing responses to understand preferences and areas for improvement.

- Interviews: Best for in-depth qualitative data. I once conducted semi-structured interviews with healthcare professionals to understand their experiences with a new software system, gaining valuable insights into usability issues not apparent through quantitative methods.

- Observations: Useful for collecting behavioral data in natural settings. For instance, I observed customer interactions in a retail store to understand shopping patterns and identify potential pain points in the customer journey.

- Experiments (A/B testing): Effective for comparing different versions of a product or service. I’ve used A/B testing to optimize website design, analyzing user clicks and conversions to determine the most effective layout.

- Existing Data Sources (Secondary Data): Leveraging already existing datasets can be highly efficient and cost-effective. This could involve using publicly available datasets or accessing internal company data.

The decision process typically involves weighing the pros and cons of each method considering factors like feasibility, cost, ethical implications, and the type of data needed.

Q 9. Describe your experience with data validation techniques.

Data validation is paramount to ensure data quality and reliability. It’s like proofreading a document before submitting it – you want to catch errors before they cause problems. My approach involves a multi-step process:

- Range Checks: Ensuring data falls within expected boundaries (e.g., age between 0 and 120).

- Consistency Checks: Verifying that data across different fields is consistent (e.g., date of birth matching age).

- Completeness Checks: Making sure all required fields are populated.

- Uniqueness Checks: Confirming that data points are unique (e.g., no duplicate email addresses).

- Data Type Checks: Verifying that data conforms to the expected data type (e.g., an age field contains an integer, a date field contains a valid date).

- Cross-Referencing and Validation using External Databases: Checking data against known reliable sources to verify accuracy.

For example, in a customer database, I would use range checks to ensure ages are reasonable and cross-reference postal codes with a geographical database to detect invalid entries. I also utilize scripting languages like Python with libraries such as pandas to automate much of this validation process.
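The checks listed above can be automated in a few lines. This is a minimal sketch in plain Python rather than pandas, with hypothetical field names (`email`, `age`, `birth_year`) and a one-year tolerance on the age consistency check; cross-referencing against an external database is left out.

```python
from datetime import date

REQUIRED = ("email", "age", "birth_year")  # hypothetical required fields

def validate(records, today=None):
    """Return a list of (record_index, problem) tuples."""
    today = today or date.today()
    errors = []
    seen_emails = set()
    for i, rec in enumerate(records):
        # Completeness check: every required field must be populated.
        missing = [f for f in REQUIRED if rec.get(f) in (None, "")]
        if missing:
            errors.append((i, "missing: " + ", ".join(missing)))
            continue
        # Data type check.
        if not isinstance(rec["age"], int) or not isinstance(rec["birth_year"], int):
            errors.append((i, "age and birth_year must be integers"))
            continue
        # Range check.
        if not 0 <= rec["age"] <= 120:
            errors.append((i, "age out of range"))
        # Consistency check: age should match birth year within one year.
        if abs((today.year - rec["birth_year"]) - rec["age"]) > 1:
            errors.append((i, "age inconsistent with birth_year"))
        # Uniqueness check on the normalized email address.
        email = rec["email"].strip().lower()
        if email in seen_emails:
            errors.append((i, "duplicate email"))
        seen_emails.add(email)
    return errors

customers = [
    {"email": "a@example.com", "age": 30, "birth_year": 1994},
    {"email": "A@example.com ", "age": 200, "birth_year": 1824},
    {"email": "c@example.com", "age": None, "birth_year": 1990},
    {"email": "d@example.com", "age": 25, "birth_year": 1980},
]
problems = validate(customers, today=date(2024, 6, 1))
for index, problem in problems:
    print(index, problem)
```

Returning a list of problems instead of raising on the first one makes it possible to report every issue in a batch at once.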

Q 10. What is your experience with APIs and data integration?

APIs (Application Programming Interfaces) are essential for data integration. They act as bridges, allowing different software systems to communicate and exchange data seamlessly. Think of them as translators between different languages. My experience involves:

- RESTful APIs: I’ve extensively used RESTful APIs to extract data from various sources, such as social media platforms (e.g., Twitter API), weather services, and marketing automation tools.

- GraphQL APIs: I’ve worked with GraphQL APIs to request only the fields I need in a single query, which can reduce over-fetching compared with typical REST endpoints.

- API Authentication and Authorization: I have experience handling API security using OAuth, API keys, and other authentication methods.

- Data Transformation: I’ve used tools and scripting languages like Python to transform data fetched from APIs into formats suitable for my analysis or database.

For example, in a project involving customer behavior analysis, I used the Google Analytics API to extract website usage data, then integrated it with CRM data through a custom-built Python script. This provided a comprehensive view of customer interactions.
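The integration step in that example can be illustrated with a small offline sketch. The JSON payload below is a made-up stand-in for an API response (it is not the Google Analytics API’s actual format), and the CRM dictionary and `merge_usage_with_crm` helper are hypothetical.

```python
import json

# Hypothetical payload standing in for a usage-data API response.
API_RESPONSE = '''
{"rows": [
  {"user_id": "u1", "sessions": 5, "last_visit": "2024-03-01"},
  {"user_id": "u2", "sessions": 2, "last_visit": "2024-02-11"}
]}
'''

# Hypothetical CRM extract keyed by the same user ID.
CRM = {
    "u1": {"name": "Ada", "plan": "pro"},
    "u3": {"name": "Lin", "plan": "free"},
}

def merge_usage_with_crm(api_json, crm):
    """Outer-join API usage rows with CRM records on user_id."""
    usage = {row["user_id"]: row for row in json.loads(api_json)["rows"]}
    merged = []
    for user_id in sorted(usage.keys() | crm.keys()):
        record = {"user_id": user_id, "name": None, "plan": None, "sessions": 0}
        record.update(crm.get(user_id, {}))
        if user_id in usage:
            record["sessions"] = usage[user_id]["sessions"]
        merged.append(record)
    return merged

merged = merge_usage_with_crm(API_RESPONSE, CRM)
for row in merged:
    print(row)
```

An outer join is used so that users present in only one system stay visible, which is often where integration problems surface.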

Q 11. How do you manage large datasets efficiently?

Managing large datasets efficiently requires a strategic approach. It’s like organizing a massive library – you need a system to find what you need quickly. My strategies include:

- Data Sampling: Analyzing a representative subset of the data instead of the entire dataset, significantly reducing processing time and resources. This is particularly helpful during exploratory data analysis.

- Data Compression: Reducing file sizes to save storage space and improve processing speed. Techniques like gzip compression are commonly used.

- Distributed Computing: Leveraging cloud computing platforms (like AWS, Azure, or Google Cloud) to distribute data processing across multiple machines, significantly speeding up analysis.

- Database Optimization: Choosing the right database management system (DBMS) and optimizing its structure (indexing, partitioning) to improve query performance.

- Data Warehousing and Data Lakes: Utilizing these technologies for efficient storage and retrieval of large datasets.

For example, when dealing with terabytes of sensor data, I used Apache Spark on a cloud computing platform to perform distributed processing, allowing for timely analysis of the data.
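For the data sampling strategy, one option when the data is too large to hold in memory is reservoir sampling, which draws a uniform random sample in a single pass. This is an illustrative choice, not something the Spark project above necessarily used; the `reservoir_sample` name and the stand-in stream are assumptions.

```python
import random

def reservoir_sample(stream, k, seed=None):
    """Draw k items uniformly at random from a stream of unknown length."""
    rng = random.Random(seed)
    sample = []
    for i, item in enumerate(stream):
        if i < k:
            sample.append(item)  # fill the reservoir first
        else:
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                sample[j] = item  # replace with probability k / (i + 1)
    return sample

# range() stands in for rows read lazily from a file or database cursor.
readings = reservoir_sample(range(100_000), k=100, seed=42)
print(len(readings))
```

Only `k` items are kept in memory at any time, so the same function works on a generator that reads a large file line by line.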

Q 12. Explain your experience with database management systems.

My experience with database management systems (DBMS) spans various types, including relational (SQL) and NoSQL databases. Understanding DBMS is like understanding the architecture of a well-organized filing cabinet.

- Relational Databases (SQL): Proficient in SQL, I’ve worked extensively with MySQL, PostgreSQL, and SQL Server. I’m skilled in database design, normalization, query optimization, and data integrity management.

- NoSQL Databases: Experienced with MongoDB and Cassandra, I understand the strengths of NoSQL databases for handling large volumes of unstructured or semi-structured data.

- Database Administration: I’ve performed tasks such as database setup, user management, backup and recovery, and performance tuning.

For example, I designed and implemented a relational database for a customer relationship management (CRM) system, ensuring data integrity and efficient retrieval of customer information. In another project, I used MongoDB to store and manage large volumes of social media data.

Q 13. Describe your experience with ETL processes.

ETL (Extract, Transform, Load) processes are crucial for moving data from various sources into a target data warehouse or data lake. It’s like a data pipeline, bringing data from different streams into a central reservoir.

- Extraction: I’ve used various methods to extract data from different sources, including databases, flat files, APIs, and web scraping techniques.

- Transformation: This involves cleaning, transforming, and enriching the data to ensure consistency and quality. Techniques include data cleansing, data type conversion, data aggregation, and data standardization.

- Loading: I’ve loaded the transformed data into the target data warehouse or data lake using tools like Apache Sqoop for bulk transfers between relational databases and Hadoop, Apache Kafka for streaming ingestion, and cloud-based ETL services.

For instance, I built an ETL pipeline to consolidate customer data from various CRM systems and marketing platforms, transforming and cleaning the data before loading it into a central data warehouse for reporting and analytics. This involved using Python, SQL, and cloud-based ETL services.
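A toy version of such a pipeline, using only Python’s standard library, might look like the sketch below. The CSV content, the `customers` table, and the in-memory SQLite database are all stand-ins for real source systems and a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export with messy emails, a missing value, and a duplicate row.
RAW_CSV = """customer_id,email,signup_date,spend
1, Ada@Example.com ,2024-01-05,120.50
2,lin@example.com,2024-02-05,
1,ada@example.com,2024-01-05,120.50
"""

def extract(text):
    """Extract: read CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: convert types, normalize emails, drop duplicate customer IDs."""
    cleaned, seen = [], set()
    for row in rows:
        customer_id = int(row["customer_id"])
        if customer_id in seen:
            continue
        seen.add(customer_id)
        spend = float(row["spend"]) if row["spend"].strip() else None
        cleaned.append(
            (customer_id, row["email"].strip().lower(), row["signup_date"], spend)
        )
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT, spend REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT customer_id, email, spend FROM customers ORDER BY customer_id"
).fetchall()
print(loaded)
```

Keeping the three stages as separate functions makes each one easy to test and to swap out, for example replacing `load` with a warehouse-specific writer.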

Q 14. How do you ensure data security and privacy during collection?

Data security and privacy are paramount. This is like safeguarding valuable assets – you need robust measures to prevent loss or unauthorized access. My approach involves:

- Data Encryption: Encrypting data both in transit and at rest using industry-standard encryption algorithms.

- Access Control: Implementing strict access control measures, granting only authorized personnel access to sensitive data.

- Data Anonymization and Pseudonymization: Removing or masking personally identifiable information (PII) to protect individual privacy.

- Compliance with Regulations: Adhering to relevant data protection regulations such as GDPR and CCPA.

- Secure Data Storage: Utilizing secure storage solutions, including cloud-based storage with appropriate security features.

- Regular Security Audits: Conducting regular security audits and vulnerability assessments to identify and address potential weaknesses.

For instance, when working with health data, I ensured compliance with HIPAA regulations, employing encryption and access control mechanisms to protect patient privacy. I also implemented data anonymization techniques to remove identifying information before sharing data for research purposes.
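Pseudonymization, one of the measures listed above, can be sketched with a keyed hash from the standard library. This assumes HMAC-SHA256 as the technique; the field names, the `subject_id` output field, and the placeholder key are hypothetical, and a real key would come from a secrets manager rather than source code.

```python
import hashlib
import hmac

PII_FIELDS = ("name", "email")  # hypothetical direct identifiers

def pseudonymize(value, secret_key):
    """Return a stable keyed hash of a normalized identifier."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()

def protect_record(record, secret_key):
    """Drop direct identifiers and add a pseudonymous ID derived from the email."""
    safe = {k: v for k, v in record.items() if k not in PII_FIELDS}
    safe["subject_id"] = pseudonymize(record["email"], secret_key)
    return safe

key = b"demo-key-load-from-a-secrets-manager"  # placeholder only
record = {"name": "Ada Smith", "email": "Ada@Example.com", "diagnosis_code": "J10"}
safe = protect_record(record, key)
print(safe)
```

A keyed hash is used instead of a plain hash so that someone without the key cannot rebuild the mapping by hashing a list of known email addresses. The same person still maps to the same `subject_id`, so records can be linked without exposing the identifier.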

Q 15. What are the challenges of collecting data from various sources?

Collecting data from various sources presents a unique set of challenges. The primary difficulty lies in the inherent inconsistencies across different platforms and systems. Each source may employ different data formats, structures, and methodologies. For instance, you might pull data from a CRM system (structured), a social media platform (semi-structured), and open-ended customer survey responses (unstructured). Harmonizing this diverse data for meaningful analysis is a major hurdle.

- Data Format Differences: Some sources might use CSV, others JSON, XML, or even proprietary formats. Converting between these formats can be time-consuming and error-prone.

- Data Integrity Issues: Data quality varies greatly across sources. Some data may be incomplete, inaccurate, or inconsistently formatted. For example, addresses might be formatted differently or dates may use different conventions.

- Data Access Restrictions: Accessing data from different sources often requires navigating various authentication systems and APIs, which can be complex and require specialized knowledge. Some sources may have limitations on the amount of data you can extract or the frequency of access.

- Data Volume and Velocity: The sheer volume of data from multiple sources can be overwhelming, making processing and analysis challenging. The velocity, or speed, at which new data arrives also needs careful management.

Addressing these challenges often requires a robust data integration strategy, involving data transformation, cleansing, and validation processes. Tools like ETL (Extract, Transform, Load) pipelines are crucial in this context.
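The date-convention problem mentioned above is a good small example of a transformation step. The sketch below assumes each source’s date format is known in advance; the source names and formats are hypothetical.

```python
from datetime import datetime

# Hypothetical mapping from each source system to the date format it uses.
SOURCE_DATE_FORMATS = {
    "crm": "%Y-%m-%d",
    "survey": "%d/%m/%Y",
    "web": "%m-%d-%Y",
}

def normalize_date(raw, source):
    """Convert a source-specific date string to ISO 8601 (YYYY-MM-DD)."""
    parsed = datetime.strptime(raw.strip(), SOURCE_DATE_FORMATS[source])
    return parsed.date().isoformat()

print(normalize_date("05/03/2024", "survey"))
print(normalize_date("03-05-2024", "web"))
```

Declaring the format per source, rather than guessing from the value, avoids silently misreading ambiguous dates such as 05/03/2024. An unknown source or a malformed date raises an error instead of producing a wrong value.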

Q 16. How do you measure the success of a data collection project?

Measuring the success of a data collection project requires a multi-faceted approach, going beyond simply collecting the data. It’s about evaluating whether the data collected achieved its intended purpose and provided valuable insights.

- Meeting Objectives: Did the project gather the data needed to answer the specific research questions or business problems it was designed to address? This involves reviewing the completeness and accuracy of the data against pre-defined specifications.

- Data Quality: Was the data accurate, complete, consistent, and timely? Metrics like data completeness (percentage of missing values) and accuracy (comparison against known values) are crucial. Inconsistencies can point to issues in the data collection process that need fixing.

- Cost-Effectiveness: Did the project achieve its objectives within budget and the allocated timeline? This involves evaluating the resources used (personnel, software, etc.) against the value derived from the data.

- Impact and Actionability: Did the data analysis lead to actionable insights and informed decision-making? Did it influence strategy, improve processes, or enhance products/services? This is arguably the most important metric, as it shows the overall return on investment of the project.

For example, a customer satisfaction survey project would be considered successful if it yielded a high response rate, provided reliable insights into customer sentiment, and directly led to improvements in the company’s products or services.

Q 17. What is your experience with data visualization tools?

I have extensive experience with various data visualization tools, including Tableau, Power BI, and Python libraries like Matplotlib and Seaborn. My experience extends beyond simply creating charts; I understand how to select appropriate visualizations based on the type of data and the insights we want to communicate.

For example, I recently used Tableau to create interactive dashboards for a marketing campaign, visualizing key metrics like website traffic, conversion rates, and customer acquisition costs. The interactive nature of the dashboards allowed stakeholders to explore the data, filter by different segments, and gain a deeper understanding of the campaign’s performance. This was far more effective than simply providing a static report.

My proficiency extends to choosing the right chart types. For instance, I’d use a scatter plot to show the correlation between two continuous variables, a bar chart for comparing categorical data, and a line chart for visualizing trends over time. The goal is always to communicate data clearly and effectively, avoiding misleading visuals.

Q 18. Describe your experience with data analysis and reporting.

My data analysis and reporting experience involves a structured approach encompassing data cleaning, exploratory data analysis (EDA), statistical modeling, and report generation. I’m proficient in SQL, R, and Python for data manipulation and analysis. I’m also experienced in creating both technical reports for data scientists and more accessible, business-oriented reports for executives.

In a recent project, I analyzed sales data to identify key trends and patterns using regression analysis. I then created a presentation for senior management, summarizing my findings with clear visualizations and actionable recommendations. The presentation effectively communicated complex statistical insights in a way that facilitated strategic decision-making. The report included not only the findings but also the methodology employed and any limitations.

I also emphasize clear and concise communication. I adapt my reporting style to the audience, whether it’s a detailed technical report for a data science team or a high-level summary for business stakeholders.

Q 19. Explain your experience with data mining techniques.

My experience with data mining techniques includes the application of various algorithms and methodologies to extract useful patterns and insights from large datasets. I’ve worked with both supervised and unsupervised learning techniques.

- Supervised Learning: For instance, I’ve used classification algorithms (like logistic regression or decision trees) to predict customer churn from historical data and to identify fraudulent transactions, and regression models when the target is a continuous value.

- Unsupervised Learning: I’ve used clustering algorithms (like K-means) to segment customers into distinct groups based on their purchasing behavior, and dimensionality reduction techniques (like PCA) to simplify complex datasets.

I’m familiar with various data mining tools and techniques, including association rule mining (market basket analysis), anomaly detection, and sequential pattern mining. The choice of technique depends on the specific business problem and the nature of the data. For example, in a retail setting, association rule mining can reveal which products are frequently purchased together, which can inform product placement strategies.
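To make the market basket example concrete, here is a minimal sketch that computes support, confidence, and lift for item pairs. It only considers pairs (not larger itemsets, as a full Apriori implementation would), and the baskets and the `pair_rules` name are illustrative.

```python
from collections import Counter
from itertools import combinations

def pair_rules(transactions, min_support=0.4):
    """Return association rules between item pairs that meet min_support."""
    n = len(transactions)
    item_counts = Counter()
    pair_counts = Counter()
    for basket in transactions:
        items = sorted(set(basket))
        item_counts.update(items)
        pair_counts.update(combinations(items, 2))
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n  # share of baskets containing both items
        if support < min_support:
            continue
        for x, y in ((a, b), (b, a)):
            confidence = count / item_counts[x]  # P(y in basket | x in basket)
            lift = confidence / (item_counts[y] / n)  # above 1 means positive association
            rules.append(
                {"if": x, "then": y, "support": support,
                 "confidence": confidence, "lift": lift}
            )
    return rules

baskets = [
    ["bread", "butter"],
    ["bread", "butter", "milk"],
    ["bread", "milk"],
    ["butter"],
    ["bread", "butter"],
]
rules = pair_rules(baskets)
for rule in rules:
    print(rule)
```

Lift is worth reporting alongside confidence because a rule can have high confidence simply because the "then" item is popular in every basket.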

Q 20. How do you handle data collection errors and inconsistencies?

Handling data collection errors and inconsistencies requires a multi-step process. It begins even before data collection, with careful planning and design of the collection process to minimize errors. During and after data collection, various techniques are applied to identify and correct problems.

- Data Validation: Implementing data validation rules during data entry helps prevent errors. For instance, you can constrain data entry to specific formats (like email addresses or dates) and set range checks (to ensure values are within acceptable bounds).

- Data Cleaning: This involves identifying and correcting or removing errors in the data. Common tasks include handling missing values (imputation or removal), identifying and correcting outliers, and standardizing inconsistent data formats.

- Data Reconciliation: When data is collected from multiple sources, reconciliation is crucial to identify and resolve discrepancies. This might involve comparing data across sources and applying appropriate rules to resolve conflicts.

- Error Logging and Tracking: Maintaining a log of errors identified and actions taken helps in identifying patterns and potential improvements to the data collection process.

Imagine a survey with a question asking for age. Data validation would prevent non-numeric entries. Data cleaning would then handle outliers (e.g., ages of 200) or missing ages. Logging every instance helps detect patterns – are missing ages skewed towards a specific demographic?

Q 21. Describe your experience with different data formats (CSV, JSON, XML).

I have extensive experience working with various data formats, including CSV, JSON, and XML. Each format has its own strengths and weaknesses, and the best choice depends on the specific application.

- CSV (Comma Separated Values): A simple and widely used format for tabular data. It’s easy to read and write using most programming languages and spreadsheet software. However, it doesn’t handle complex data structures well.

- JSON (JavaScript Object Notation): A lightweight and human-readable format often used for data exchange between web applications. It’s particularly well-suited for representing nested data structures. Libraries in various programming languages are readily available for parsing JSON.

- XML (Extensible Markup Language): A more complex and verbose format used for representing hierarchical data structures. It’s often used for exchanging data between different systems. XML is heavier to parse than JSON, but it offers strong schema definition capabilities (such as XSD), which let you validate a document’s structure before processing it.

I routinely use Python libraries like Pandas to read and manipulate data from these different formats, making the process of converting between them seamless. Understanding the nuances of each format is vital to ensure efficient data processing and avoid errors.
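As a small illustration of moving between these formats, the sketch below uses only Python’s standard library instead of Pandas. The sample data and function names are made up, and the XML conversion assumes the CSV column names are valid XML tag names.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

CSV_TEXT = "id,name,city\n1,Ada,London\n2,Lin,Taipei\n"

def csv_to_records(text):
    """Parse CSV text into a list of dictionaries (all values stay strings)."""
    return list(csv.DictReader(io.StringIO(text)))

def records_to_json(records):
    """Serialize the records as a JSON array of objects."""
    return json.dumps(records, indent=2)

def records_to_xml(records, root_tag="records", row_tag="record"):
    """Serialize the records as XML, one child element per field."""
    root = ET.Element(root_tag)
    for rec in records:
        row = ET.SubElement(root, row_tag)
        for key, value in rec.items():
            ET.SubElement(row, key).text = value  # key must be a valid tag name
    return ET.tostring(root, encoding="unicode")

records = csv_to_records(CSV_TEXT)
as_json = records_to_json(records)
as_xml = records_to_xml(records)
print(as_json)
print(as_xml)
```

Note that CSV carries no type information, so `id` arrives as the string "1"; any type conversion has to be an explicit step.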

Q 22. How do you maintain data integrity throughout the collection process?

Maintaining data integrity during collection is paramount. It’s like building a house – a shaky foundation leads to a shaky structure. We ensure accuracy and consistency through several key strategies:

- Data Validation: Implementing real-time checks within the data collection tool. For example, using dropdown menus instead of free text for categorical variables reduces errors and inconsistencies. If a respondent enters an invalid value, a clear error message is displayed immediately.

- Regular Audits: Periodic reviews of the collected data, both automated and manual. This involves checking for outliers, inconsistencies, and missing values. For instance, if I’m collecting age data and a respondent enters ‘200’, a flag is raised for manual review.

- Version Control: Tracking changes made to questionnaires or data files. This prevents accidental overwriting of crucial information and allows for easy rollback if necessary. Imagine using a version control system like Git for questionnaires – each change is tracked and you can revert to previous versions.

- Data Cleaning Procedures: Establishing a clear process for handling missing data and outliers. This could involve imputation (filling in missing values using statistical methods) or removal of outliers after thorough investigation. It’s important to document the cleaning procedures used to ensure reproducibility.

By combining these methods, we significantly reduce errors and maintain high data quality from the start.

Q 23. What is your experience with data governance policies and procedures?

My experience with data governance involves adhering to strict policies and procedures related to data privacy, security, and ethical considerations. This includes:

- Compliance with Regulations: Ensuring compliance with relevant regulations such as GDPR, CCPA, and HIPAA, depending on the type of data collected and the geographic location of the respondents. This involves implementing appropriate security measures and obtaining informed consent.

- Data Access Control: Limiting access to data based on the principle of least privilege. Only authorized personnel are granted access to the data, and access is logged for auditing purposes. This safeguards sensitive information.

- Data Retention Policies: Adhering to specific data retention policies, determining how long data needs to be kept and establishing secure procedures for archiving and disposal of data. This ensures that data is not stored beyond its necessary lifetime.

- Documentation: Maintaining meticulous documentation of data governance procedures, including data dictionaries, metadata, and processing logs. This allows for traceability and compliance audits.

In a recent project, I implemented strict data encryption and access controls as part of meeting GDPR requirements when collecting sensitive personal information from European Union residents.

Q 24. Explain your experience with different data collection tools (e.g., SurveyMonkey, Qualtrics).

I’ve worked extensively with various data collection tools, each with its strengths and weaknesses. My experience includes:

- SurveyMonkey: Ideal for simpler surveys and quick data collection. I’ve used it for market research projects where the focus was on capturing large sample sizes efficiently. Its ease of use and intuitive interface are beneficial for less technical users.

- Qualtrics: A more sophisticated platform for complex surveys, branching logic, and advanced data analysis. I’ve utilized Qualtrics for academic research projects requiring highly customized questionnaires with embedded logic and detailed respondent tracking.

- Custom-built solutions: For highly specialized data collection needs, I’ve worked on projects requiring custom-built solutions using programming languages like Python and R, integrated with databases like PostgreSQL or MySQL. This offered complete control over the data collection process and allowed for seamless integration with other systems.

The choice of tool depends entirely on the project requirements. Simple surveys might benefit from SurveyMonkey’s ease of use, while complex research studies often require the capabilities of Qualtrics or a custom solution.

Q 25. How do you optimize data collection for efficiency and cost-effectiveness?

Optimizing data collection for efficiency and cost-effectiveness involves a multi-pronged approach:

- Targeted Sampling: Carefully defining the target population and selecting the most appropriate sampling method to minimize the sample size while maintaining representativeness. This reduces survey costs and response time.

- Survey Design: Creating concise and engaging surveys to minimize respondent burden and maximize response rates. Using clear and unambiguous questions reduces the need for follow-up clarifications.

- Automation: Leveraging automation wherever possible. This includes automated data entry, email reminders to non-respondents, and automated data cleaning procedures.

- Technology Selection: Choosing cost-effective data collection tools tailored to the project’s needs. A free tool might suffice for smaller projects, while larger projects may justify the cost of a more powerful platform.

- Data Analysis Planning: Clearly defining the research questions and analysis plan before data collection helps ensure that the data collected is relevant and avoids unnecessary expense.

For example, by using a carefully designed questionnaire and automated email reminders, I achieved a 75% response rate in a recent project. Because more invitees responded, far fewer invitations were needed to reach the target sample size, which significantly reduced recruitment costs.
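The link between response rate and cost can be illustrated with a quick calculation. The sketch below assumes a simple random sample and uses Cochran's formula for estimating a proportion. The 5% margin of error, 95% confidence level (z = 1.96), and the 25% comparison response rate are illustrative assumptions, not figures from the project described above.

```python
import math

def required_sample_size(margin_of_error, z=1.96, proportion=0.5, population=None):
    """Cochran's sample size for a proportion, with an optional
    finite population correction when the population size is known."""
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)

def invitations_needed(target_completes, response_rate):
    """Number of people to invite to expect the target number of completes."""
    return math.ceil(target_completes / response_rate)

completes = required_sample_size(margin_of_error=0.05)
print(completes)                             # 385
print(invitations_needed(completes, 0.75))   # 514
print(invitations_needed(completes, 0.25))   # 1540
```

Under these assumptions, raising the response rate from 25% to 75% cuts the number of invitations to roughly a third, which is where most of the savings in incentives and panel fees would come from.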

Q 26. Describe your experience with data anonymization and de-identification techniques.

Data anonymization and de-identification are crucial for protecting respondent privacy. The difference lies in the level of protection:

- De-identification: Removing direct identifiers like names, addresses, and phone numbers. This reduces the risk of re-identification, but it’s not foolproof: linking the remaining attributes (for example, ZIP code, birth date, and gender) with external datasets can still single out individuals.

- Anonymization: Applying more robust techniques so that re-identification is no longer reasonably feasible. This could involve data perturbation (adding noise to data), generalization (replacing specific values with broader categories), or differential privacy methods (adding carefully calibrated noise to query results).

In a project involving sensitive health data, I employed a combination of de-identification (removing direct identifiers) and generalization (grouping ages into broader age ranges) to protect respondent privacy while retaining the utility of the data for analysis. Careful consideration must always be given to the balance between privacy and data utility.
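A minimal sketch of that combination might look like the following. The field names, the ten-year age bands, and the sample records are hypothetical. The small group-size check at the end is an added illustration of how the remaining re-identification risk could be gauged, not part of the project described above.

```python
from collections import Counter

# Hypothetical names of columns that identify a person directly.
DIRECT_IDENTIFIERS = {"name", "address", "phone"}

def generalize_age(age, width=10):
    """Map an exact age to a band label such as '30-39'."""
    lower = (age // width) * width
    return f"{lower}-{lower + width - 1}"

def deidentify(record):
    """Drop direct identifiers and replace the exact age with an age band."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "age" in cleaned:
        cleaned["age_band"] = generalize_age(cleaned.pop("age"))
    return cleaned

def smallest_group_size(records, quasi_identifiers):
    """Size of the smallest group sharing the same quasi-identifier values.
    A value of 1 means at least one record is unique on those fields."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())

raw = [
    {"name": "Jane Doe", "phone": "555-0100", "age": 34, "diagnosis": "asthma"},
    {"name": "John Roe", "phone": "555-0101", "age": 37, "diagnosis": "diabetes"},
    {"name": "Ann Poe", "phone": "555-0102", "age": 52, "diagnosis": "asthma"},
]
released = [deidentify(r) for r in raw]
print(released[0])                                   # {'diagnosis': 'asthma', 'age_band': '30-39'}
print(smallest_group_size(released, ["age_band"]))   # 1
```

Here the only respondent in the 50-59 band is still unique, so a wider band or suppression of that record would be needed before release. This is the privacy versus utility trade-off in practice.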

Q 27. How do you stay current with the latest trends in data collection and technology?

Staying current in the dynamic field of data collection requires continuous learning. I actively engage in several strategies:

- Professional Development: Attending conferences, webinars, and workshops focused on data science, data privacy, and new technologies in data collection.

- Industry Publications: Regularly reading industry publications and journals to stay abreast of the latest research and trends.

- Online Courses and Certifications: Completing online courses and obtaining certifications in relevant areas such as data privacy and security.

- Networking: Connecting with peers and professionals in the field through online communities and professional organizations.

- Experimentation: Actively experimenting with new data collection tools and techniques to assess their effectiveness and applicability to my work.

Recently, I’ve been exploring the use of AI-powered tools for automated data labeling and analysis, which could significantly improve efficiency in future projects.

Q 28. How do you handle bias in data collection and analysis?

Addressing bias in data collection and analysis is crucial for obtaining valid, trustworthy results. Bias can creep in at various stages:

- Sampling Bias: This occurs when the sample doesn’t accurately represent the population. Careful sample design and the use of appropriate sampling techniques are critical. Stratified sampling helps correct over- or underrepresentation of particular groups.

- Question Bias: Leading questions or poorly worded questions can introduce bias into the responses. Careful questionnaire design and pre-testing are essential to identify and rectify biased questions.

- Response Bias: Respondents may answer questions in a way that they believe is socially desirable or expected. Ensuring anonymity and using neutral language can help mitigate this.

- Confirmation Bias: The tendency to search for or interpret information that confirms pre-existing beliefs. Rigorous analytical methods and transparent reporting are essential to mitigate this.

To counter bias, I employ techniques such as blind analysis (analysts do not know which group each record belongs to), rigorous statistical methods to control for confounding variables, and careful interpretation of results. Transparency in methodology and reporting is key to allowing others to assess the potential impact of bias on the findings.
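As an illustration of the stratified sampling mentioned under sampling bias, here is a minimal sketch using proportional allocation. The `region` field, the 80/20 urban-rural split, and the 10% sampling fraction are hypothetical, and the fixed seed is there only to make the draw repeatable.

```python
import random
from collections import defaultdict

def stratified_sample(units, stratum_of, fraction, seed=0):
    """Draw the same fraction from every stratum so that each group's
    share of the sample matches its share of the population."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in units:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))  # at least one unit per stratum
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical sampling frame: 80 urban and 20 rural customers.
frame = [{"id": i, "region": "urban" if i < 80 else "rural"} for i in range(100)]
sample = stratified_sample(frame, lambda u: u["region"], fraction=0.1)

counts = defaultdict(int)
for unit in sample:
    counts[unit["region"]] += 1
print(dict(counts))  # {'urban': 8, 'rural': 2}
```

A simple random draw of ten customers could easily contain no rural respondents at all. Sampling within each stratum guarantees that both groups appear in proportion to their size.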

Key Topics to Learn for Experience in using Data Collection Software Interviews

- Data Collection Methodologies: Understanding various data collection methods (surveys, interviews, observations, etc.), their strengths, weaknesses, and appropriate applications. Consider how to choose the right method for a given research question or business problem.

- Software Proficiency: Demonstrate familiarity with popular data collection tools (e.g., Qualtrics, SurveyMonkey, Alchemer, custom-built solutions). Be prepared to discuss your experience with specific software, including data entry, cleaning, and export processes. Highlight your ability to adapt to new software quickly.

- Data Integrity and Validation: Explain your approach to ensuring data accuracy and reliability. Discuss techniques for identifying and handling missing data, outliers, and inconsistencies. Showcase your understanding of data validation rules and processes.

- Data Security and Privacy: Discuss ethical considerations related to data collection and storage, including compliance with relevant regulations (e.g., GDPR, HIPAA). Show your awareness of data anonymization and security best practices.

- Data Analysis Fundamentals: While not strictly data *collection*, a basic understanding of descriptive statistics and data visualization will strengthen your candidacy. Be able to talk about how collected data informs decisions or further analysis.

- Problem-Solving with Data Collection: Describe instances where you encountered challenges during data collection (e.g., low response rates, technical difficulties, unexpected data patterns) and how you overcame them. Focus on your proactive approach and ability to adapt to changing circumstances.

Next Steps

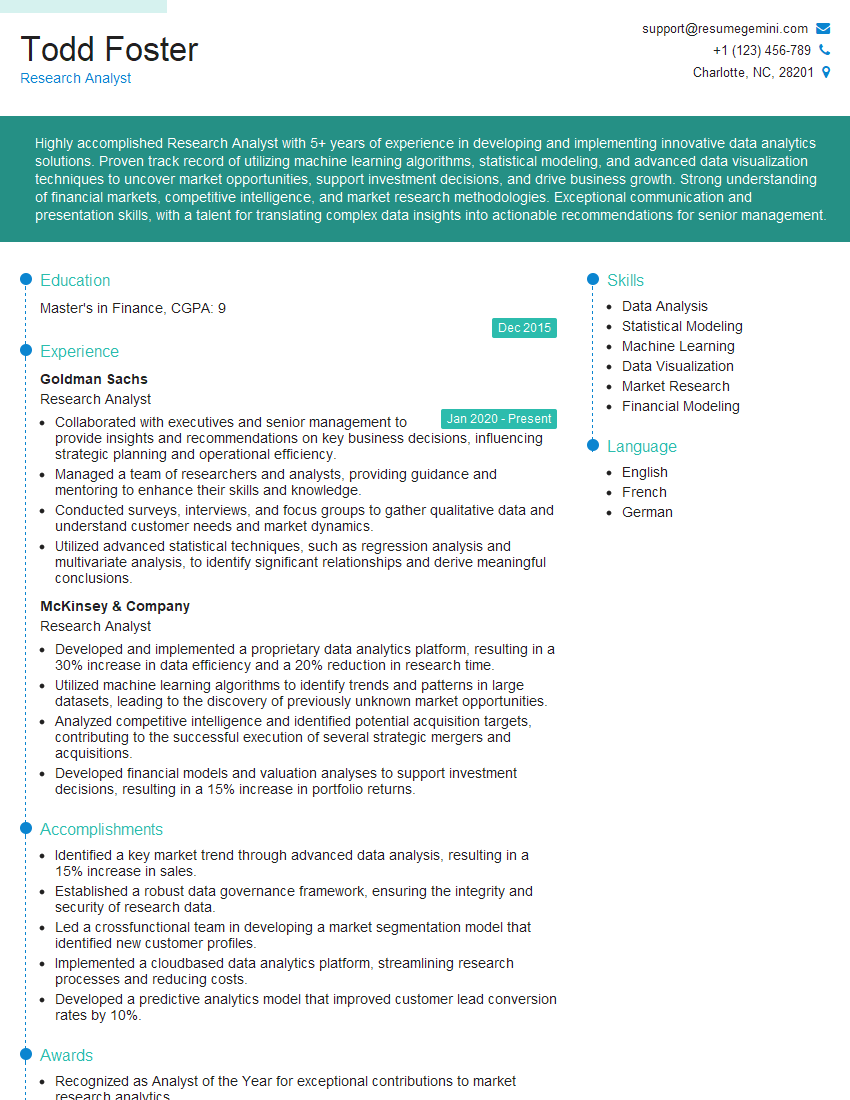

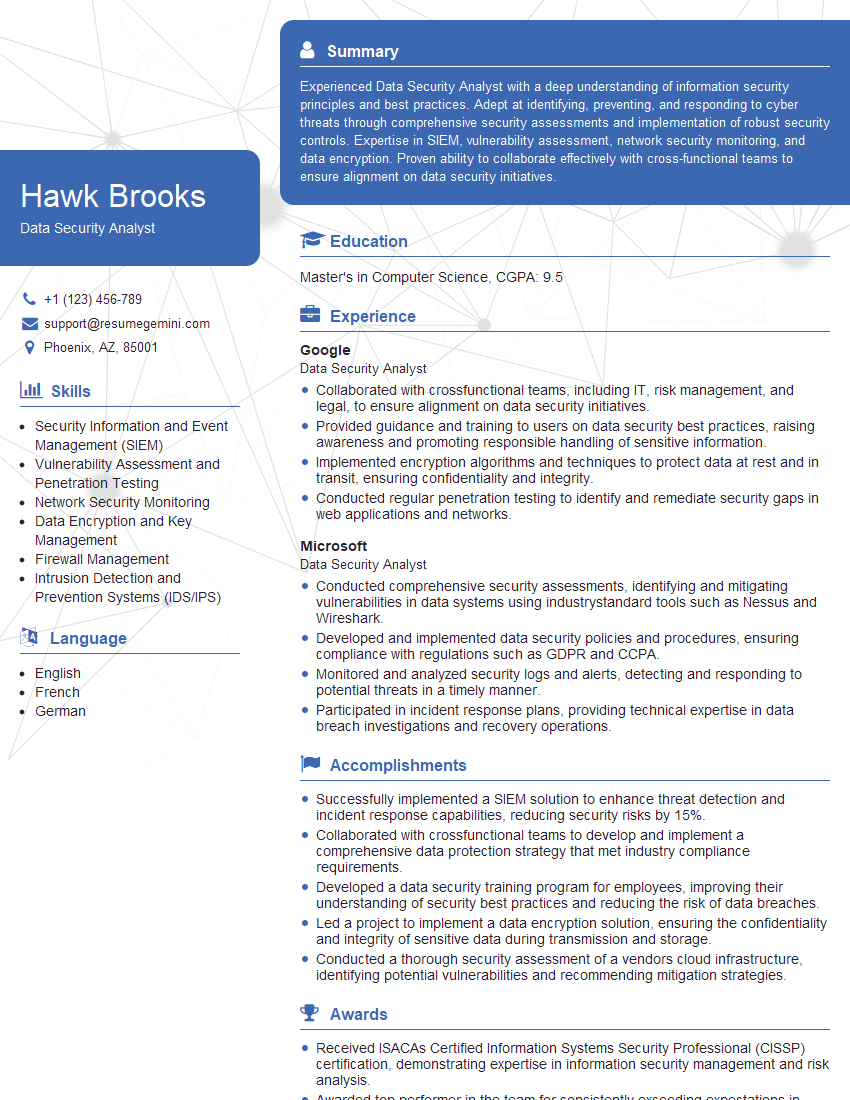

Mastering data collection software skills is crucial for advancing your career in many fields, opening doors to exciting opportunities and higher earning potential. A strong resume is your key to unlocking these opportunities. Creating an ATS-friendly resume is vital to getting noticed by recruiters. We highly recommend using ResumeGemini to craft a compelling resume that showcases your expertise in data collection software. ResumeGemini provides examples of resumes tailored to this specific skillset, helping you present your qualifications effectively and increase your chances of landing your dream job.
