Every successful interview starts with knowing what to expect. In this blog, we’ll take you through the top interview questions on experience with human factors software (e.g., Human Factors Toolkit, Ergotron, UXD), breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Interview Questions on Experience Using Human Factors Software (e.g., Human Factors Toolkit, Ergotron, UXD)

Q 1. Describe your experience using Human Factors Toolkit software.

The Human Factors Toolkit software is a valuable asset for conducting various human factors analyses. I’ve extensively used it for tasks like time-motion studies, where I record and analyze the time taken for specific actions to identify bottlenecks in a workflow. For example, in a recent project involving the design of a new control panel for a medical device, I used the Toolkit to analyze the time surgeons took to perform specific tasks, revealing that the placement of certain controls was slowing down the process. The software’s features for creating and analyzing video recordings were especially helpful in identifying these subtle inefficiencies. Beyond time-motion studies, I’ve also used the Toolkit for creating detailed task analyses, breaking down complex tasks into smaller, manageable components to understand potential areas for human error or improvement. The software’s ability to export data in various formats facilitates seamless integration with other analysis tools.

Q 2. How have you utilized Ergotron products in a human factors project?

Ergotron products are crucial for creating ergonomic workstations. In a recent project designing a call center, we used Ergotron monitor arms and keyboard trays to optimize the workspace setup for each agent. This was particularly important in minimizing repetitive strain injuries and promoting better posture. By utilizing Ergotron’s adjustable-height desks and monitor arms, we allowed for personalized workspace adjustments, catering to individual operator needs and preferences. Before implementation, we conducted usability testing to ensure that the chosen Ergotron products were intuitive and easy to adjust. We also measured worker comfort levels and posture before and after implementation to quantify the impact of the ergonomic improvements.

Q 3. Explain your workflow when using UXD software for usability testing.

My workflow with UXD software for usability testing typically follows these steps: First, I define the testing goals and objectives, outlining the specific aspects of the design I want to evaluate. Then, I create detailed test plans, including the tasks users will perform and the metrics I’ll collect (e.g., task completion time, error rates, subjective satisfaction). Next, I recruit participants representative of the target user group. The UXD software then facilitates the remote or in-person testing sessions, allowing me to record user interactions, screen activity and even facial expressions. Afterward, the software helps me analyze the collected data, including heatmaps to visualize user interaction patterns and session recordings to review individual participant behaviour. Finally, I consolidate my findings into a comprehensive report with recommendations for design improvements. For instance, in a recent e-commerce site redesign, UXD helped us identify navigation issues that were hindering the checkout process. We observed users abandoning their carts due to confusing navigation elements, insights directly obtained from the session recordings and heatmaps generated by the software.

Q 4. What are the key differences between heuristic evaluation and usability testing?

Heuristic evaluation and usability testing are both methods to assess the usability of a design, but they differ significantly in their approach. Heuristic evaluation involves having usability experts review the design against established usability principles (heuristics) to identify potential problems. It’s a relatively quick and cost-effective method, ideal for early-stage design feedback. Usability testing, on the other hand, involves observing actual users interacting with the design. This provides direct evidence of usability problems and offers insights into user behavior. Think of it this way: heuristic evaluation is like a doctor’s preliminary examination, a quick check for obvious problems. Usability testing is like a thorough physical examination with tests and monitoring, providing detailed information on the system’s health. While heuristic evaluation can identify many issues, usability testing confirms real-world impact and reveals unexpected user behaviors that experts may overlook.

Q 5. How do you measure task completion time and error rates during usability testing?

Measuring task completion time and error rates during usability testing is crucial for quantifying usability. Task completion time is simply the time it takes a user to complete a specific task. This can be easily recorded using the built-in timers in tools like UXD, or manually using a stopwatch. Error rates are calculated by counting the number of errors a user makes while performing a task, dividing it by the total number of attempts and multiplying by 100 to express it as a percentage. I typically use data spreadsheets to consolidate and analyze these metrics for each participant and then calculate the average task completion time and error rate across all participants. This provides a quantitative understanding of the design’s efficiency and accuracy. For example, if users frequently fail to find the “add to cart” button, this highlights a significant usability problem that needs to be addressed. Identifying such issues allows for clear improvements based on concrete data.
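As a rough illustration of the arithmetic described above, here is a minimal Python sketch. The record layout and the numbers are hypothetical, invented only to show the calculation; they are not an export format from UXD or any other tool. The error rate follows the definition in the answer: total errors divided by total attempts, times 100.

```python
from statistics import mean

# Hypothetical session records: one entry per participant attempt at a task.
# Field names and values are assumptions made for this illustration.
attempts = [
    {"participant": "P1", "seconds": 42.0, "errors": 0},
    {"participant": "P2", "seconds": 58.5, "errors": 2},
    {"participant": "P3", "seconds": 37.5, "errors": 0},
    {"participant": "P4", "seconds": 62.0, "errors": 1},
]


def average_completion_time(records):
    """Mean task completion time in seconds across all attempts."""
    return mean(r["seconds"] for r in records)


def error_rate_percent(records):
    """Errors divided by attempts, expressed as a percentage."""
    total_errors = sum(r["errors"] for r in records)
    return total_errors / len(records) * 100


if __name__ == "__main__":
    print(f"Average completion time: {average_completion_time(attempts):.1f} s")
    print(f"Error rate: {error_rate_percent(attempts):.1f} %")
```

Because a single attempt can contain several errors, this definition can exceed 100 percent; some teams instead report the share of attempts with at least one error, so it is worth stating which definition a report uses.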

Q 6. What is your experience with A/B testing in a UX context?

A/B testing, in the UX context, is a powerful method to compare two versions of a design (A and B) and determine which performs better. I have extensive experience in designing and implementing A/B tests for websites and applications. For example, in a recent project, we tested two different button designs for a call to action. Version A used a green button, while Version B used a blue button. By randomly assigning users to see either Version A or Version B, we collected data on click-through rates and conversion rates. The results clearly showed that Version B (blue button) had a higher click-through rate and conversion rate, leading to its adoption in the final design. A/B testing provides empirical evidence for design choices, helping to make data-driven decisions and continuously optimize the user experience.
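Whether a difference in click-through rate is more than noise is usually checked with a significance test. The sketch below uses a two-proportion z-test, which is one common choice and not necessarily the method used in the project described above. The function name and the visitor and click counts are hypothetical.

```python
import math


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Return (z, two-sided p-value) for the difference in click-through rates."""
    rate_a = clicks_a / users_a
    rate_b = clicks_b / users_b
    # Pooled rate under the null hypothesis that both versions perform the same.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 2,000 users per version.
    z, p = two_proportion_z_test(clicks_a=120, users_a=2000, clicks_b=156, users_b=2000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A positive z means Version B has the higher rate; a small p-value (conventionally below 0.05) suggests the difference is unlikely to be due to random assignment alone.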

Q 7. How do you analyze user feedback to improve design?

Analyzing user feedback is a crucial step in iterative design. I approach this by first categorizing the feedback into themes or patterns. This often involves using qualitative data analysis techniques like thematic analysis to identify recurring issues or positive experiences. For instance, recurring negative comments about a specific feature could highlight a major usability problem. Then, I prioritize the feedback based on its severity and frequency. High-severity, frequently reported issues are addressed first. Finally, I translate the qualitative feedback into concrete design solutions. User comments are not simply read; they are carefully weighed to inform data-driven modifications to the user interface or user experience, resulting in a more user-friendly and efficient design.

Q 8. What methods do you employ to identify usability issues in software interfaces?

Identifying usability issues in software interfaces involves a multi-faceted approach combining heuristic evaluation, user testing, and data analysis. I leverage a combination of methods to ensure a comprehensive understanding of the user experience.

Heuristic Evaluation: I use established usability heuristics, like Nielsen’s 10 Heuristics, to systematically evaluate the interface for potential problems. This involves experts reviewing the interface against these principles, identifying areas for improvement. For example, a crucial feature that is hidden violates the ‘recognition rather than recall’ heuristic, and one that requires too many clicks works against ‘flexibility and efficiency of use’.

User Testing: I conduct usability testing with representative users, observing their interactions and identifying pain points. This could involve think-aloud protocols, where users verbalize their thoughts while interacting, or more structured tasks with post-test interviews. A recent project revealed users struggled with a specific form field due to unclear labeling – something easily detected through user observation.

Data Analysis: Analyzing user behavior data, such as clickstream data, error rates, and task completion times, provides quantitative insights into usability issues. For instance, a high error rate on a specific screen points to a potential usability problem requiring investigation.

By combining these approaches, I gain a holistic view of usability, encompassing both qualitative (user feedback) and quantitative (data-driven) evidence to make informed design decisions.

Q 9. How do you apply human factors principles in designing user interfaces?

Applying human factors principles in UI design centers around understanding and accommodating the cognitive, physical, and perceptual limitations and capabilities of users. This involves considering factors such as:

Cognitive Load: Designing interfaces that minimize cognitive load is crucial. This involves simplifying tasks, using clear and concise language, and providing effective feedback to users. For example, breaking down complex processes into smaller, manageable steps reduces mental burden.

Perceptual Principles: Utilizing principles of visual perception, like Gestalt principles, helps create intuitive and easily understood layouts. Good use of visual hierarchy, color contrast, and spacing guides the user’s attention and improves comprehension.

Human-Computer Interaction (HCI) Guidelines: Adhering to established HCI guidelines, such as those from ISO or the Nielsen Norman Group, ensures consistency and usability across different interfaces. This ensures the design aligns with user expectations and best practices.

Accessibility: Designing for users with disabilities is paramount. I incorporate WCAG guidelines to ensure the interface is usable by a broad range of users, including those with visual, auditory, or motor impairments.

In essence, I strive to create interfaces that are efficient, effective, engaging, and error-tolerant, reflecting a deep understanding of human capabilities and limitations.

Q 10. Describe your experience creating user personas and journey maps.

Creating user personas and journey maps is an essential step in understanding user needs and behaviors. I utilize a structured approach to develop these valuable artifacts.

User Personas: I create detailed user personas based on user research, encompassing demographic data, goals, motivations, frustrations, and technology proficiency. A recent project involved creating personas for a financial app, distinguishing between young professionals and seasoned investors, each with specific needs and expectations.

Journey Maps: These visually represent the user’s experience across multiple touchpoints. They highlight pain points, opportunities for improvement, and key moments in the user experience. For instance, I map the user’s journey from initial app download through key features, identifying potential friction points like lengthy registration processes.

The combination of personas and journey maps provides a deep understanding of the user’s perspective, informing design decisions to create more intuitive and satisfying experiences. These artifacts are living documents, iteratively refined as new information emerges.

Q 11. How familiar are you with different usability testing methodologies (e.g., think-aloud protocol, eye-tracking)?

I’m proficient in various usability testing methodologies, employing the most suitable approach based on project requirements and research questions.

Think-Aloud Protocol: This involves having users verbalize their thoughts while performing tasks, providing rich qualitative data on their cognitive processes and decision-making. It helps identify areas of confusion or difficulty.

Eye-Tracking: This sophisticated method tracks users’ eye movements to understand their visual attention and focus during interface interaction. Heatmaps generated from eye-tracking data pinpoint areas of interest and potential usability issues, such as areas users consistently overlook.

A/B Testing: This quantitative method compares different versions of an interface to determine which performs better based on key metrics like task completion rates and error rates. It’s particularly useful for making data-driven design decisions.

Heuristic Evaluation: As previously mentioned, expert reviews help identify usability issues early in the design process. Combining this with user testing provides a comprehensive view.

Choosing the right methodology depends on the context; for example, eye-tracking might be overkill for a simple form, while think-aloud would be valuable in understanding complex workflows.
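To make the eye-tracking heatmap idea concrete, the following Python sketch bins gaze samples into a coarse grid and counts how many fall in each cell. It is a simplified illustration under assumed inputs (pixel coordinates, a hypothetical `cell_size`); real eye-tracking suites typically apply fixation filtering and smoothing that are not shown here.

```python
from collections import Counter


def gaze_heatmap(points, cell_size):
    """Count gaze samples per grid cell; keys are (column, row) indices."""
    counts = Counter()
    for x, y in points:
        cell = (int(x // cell_size), int(y // cell_size))
        counts[cell] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical gaze samples in screen pixels.
    samples = [(10, 10), (15, 20), (120, 30), (130, 40), (125, 35)]
    heatmap = gaze_heatmap(samples, cell_size=100)
    for cell, count in heatmap.most_common():
        print(cell, count)
```

Cells with high counts mark areas of interest, while cells that never appear in the result are candidates for content users consistently overlook.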

Q 12. How do you incorporate accessibility guidelines (e.g., WCAG) into your design process?

Incorporating accessibility guidelines, primarily WCAG (Web Content Accessibility Guidelines), is integral to my design process. Accessibility is not an afterthought; it’s built into the foundation of the design.

WCAG Compliance: I ensure adherence to WCAG principles at each stage of the design process, using WCAG Success Criteria as a checklist. This includes considerations like keyboard navigation, alternative text for images, sufficient color contrast, and proper heading structure.

Assistive Technology Testing: I test designs with assistive technologies such as screen readers and switch access devices to verify accessibility. This provides firsthand understanding of how users with disabilities experience the interface.

By proactively addressing accessibility throughout the design cycle, rather than as a separate phase, I create more inclusive and usable interfaces for everyone. This approach is not only ethically correct but also extends the reach of the product to a wider audience.
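The color contrast check mentioned above can be automated. The sketch below follows the relative luminance and contrast ratio definitions from WCAG 2.x and tests against the 4.5:1 threshold for normal-size text at level AA. The function names are my own; colors are assumed to be 8-bit sRGB tuples.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x definition)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    """Relative luminance of an (R, G, B) color with 0-255 channels."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1:1 up to 21:1."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground, background):
    """True if the pair meets the 4.5:1 minimum for normal-size text (level AA)."""
    return contrast_ratio(foreground, background) >= 4.5


if __name__ == "__main__":
    white = (255, 255, 255)
    for gray in [(118, 118, 118), (119, 119, 119)]:
        ratio = contrast_ratio(gray, white)
        print(gray, f"{ratio:.2f}", passes_aa_normal_text(gray, white))
```

A check like this only covers one success criterion; keyboard navigation, alternative text, and heading structure still need manual review and assistive technology testing.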

Q 13. Explain your understanding of cognitive ergonomics and its application in design.

Cognitive ergonomics focuses on optimizing the interaction between humans and their work environment to enhance cognitive performance and reduce mental workload. In design, this involves understanding how users process information, make decisions, and learn. Applying cognitive ergonomics principles leads to more intuitive and efficient interfaces.

Mental Models: I design interfaces that align with users’ existing mental models, their understanding of how a system works. For example, a user will have a pre-existing mental model of an e-commerce website and its checkout process; deviating from this might cause confusion.

Information Architecture: Structuring information logically and intuitively allows users to easily find what they need. Clear navigation, consistent labeling, and effective search functionality are vital aspects of effective information architecture.

Decision Support: Designing interfaces that provide appropriate decision support reduces cognitive load and error. This could involve clear instructions, visual cues, or feedback mechanisms. A good example is showing the impact of user choices in a real-time preview.

By consciously designing for cognitive ease and efficiency, I improve user satisfaction, task completion rates, and reduce error, contributing to a more effective and enjoyable user experience.

Q 14. How do you balance aesthetics with usability when designing a product?

Balancing aesthetics and usability is a crucial aspect of design. It’s not about choosing one over the other, but rather integrating them harmoniously. An aesthetically pleasing interface that is unusable is just as bad as a functional interface that is unattractive.

Iterative Design: I use an iterative approach, incorporating feedback from usability testing to refine both the aesthetic and functional aspects of the design. A beautiful interface might undergo revisions if usability testing reveals significant issues.

User-Centered Design: Prioritizing user needs guides both aesthetic and functional decisions. The aesthetic elements shouldn’t overshadow functionality; instead, they should complement and enhance the overall user experience.

Usability Heuristics and Aesthetics Guidelines: Employing both usability heuristics and design principles to guide decisions ensures both elements are considered throughout the design process. Visual appeal should never sacrifice clarity and ease of use.

Think of it like building a house: a beautiful facade is useless without a well-constructed and functional interior. Similarly, a visually stunning interface needs to be equally usable to be successful.

Q 15. How do you conduct a user interview effectively?

Effective user interviews are the cornerstone of understanding user needs and pain points. They’re not just about asking questions; they’re about creating a comfortable and open dialogue. I typically follow a structured approach, starting with introductory icebreakers to establish rapport. Then, I move into open-ended questions, focusing on the user’s experience rather than leading them to specific answers. For example, instead of asking ‘Did you find the button easy to use?’, I might ask ‘Tell me about your experience finding the button you needed’. This encourages more insightful responses.

Throughout the interview, I actively listen, using techniques like paraphrasing and summarizing to ensure understanding. I also incorporate observational notes, paying attention to body language and tone, which often reveal unspoken frustrations or preferences. After the open-ended questions, I might introduce more targeted questions to clarify specific points or delve deeper into areas of interest. Finally, I always end with a thank-you and an opportunity for the participant to ask any questions they might have. I often record the interviews (with consent, of course) and meticulously transcribe them for thorough analysis later. Analyzing the data allows me to identify trends and patterns in user behavior and feedback, which then guide design iteration.

For example, in a recent project redesigning a medical device interface, initial interviews revealed a significant difficulty in understanding alarm indicators. This led to a complete redesign of the alarm system, making it simpler and less prone to misinterpretations.

Q 16. What is your experience with remote usability testing?

My experience with remote usability testing is extensive. I’ve utilized platforms such as Zoom, UserTesting.com, and even custom-built solutions depending on project requirements. The key to successful remote testing is meticulous planning and execution. This includes ensuring participants have the necessary equipment and a stable internet connection. Clear instructions and a well-defined testing scenario are crucial for consistent results. I always conduct a thorough tech check beforehand to prevent any technical hiccups during the session. Tools like screen recording software (e.g., OBS Studio) and annotation tools (built into many video conferencing software) are essential for capturing user interactions and feedback.

One challenge with remote testing is the lack of direct observation of the user’s physical environment. To mitigate this, I often employ techniques like screen sharing, task-based scenarios, and think-aloud protocols, where the user vocalizes their thoughts while interacting with the system. The think-aloud protocol provides invaluable insight into the user’s cognitive processes. After each test session, I collect quantitative and qualitative data, including completion rates, time on task, error rates, and user feedback. This detailed data contributes to informed design decisions. For example, using remote eye-tracking software, we could observe and measure user attention and engagement in an online application, identifying areas of confusion or disinterest.

Q 17. Describe your experience with data analysis techniques for usability studies.

Data analysis in usability studies is a crucial step in translating raw data into actionable insights. My approach is multifaceted and depends on the type of data collected. For quantitative data, such as task completion times and error rates, I use descriptive statistics (mean, median, standard deviation) to understand the central tendency and dispersion of the data. I might also utilize inferential statistics (t-tests, ANOVA) to compare performance across different user groups or system designs.

Qualitative data, such as interview transcripts and user feedback, require a different approach. Here, I often use thematic analysis to identify recurring themes and patterns in the data. This involves carefully reading and coding the data, identifying key concepts, and organizing them into meaningful themes. Software like NVivo can be very helpful for managing and analyzing large qualitative datasets. I also leverage techniques such as affinity diagramming to visualize relationships between different themes and ideas.

I always strive to triangulate data sources; that is, to compare and contrast findings from different methods (e.g., quantitative metrics and qualitative feedback) to build a more robust and comprehensive understanding of the usability issues. By combining quantitative and qualitative data analysis, I obtain a holistic view of the usability problems, identifying both the frequency and severity of issues.
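For the quantitative side, the descriptive and inferential steps mentioned above can be sketched with the Python standard library. The example computes mean, median, and standard deviation for two groups and then Welch's t statistic, which is one variant of the t-test that does not assume equal variances. The completion times are hypothetical.

```python
import math
from statistics import mean, median, stdev, variance


def describe(values):
    """Descriptive statistics for one group of measurements."""
    return {"mean": mean(values), "median": median(values), "stdev": stdev(values)}


def welch_t(sample_a, sample_b):
    """Welch's t statistic and approximate degrees of freedom for two groups."""
    se2_a = variance(sample_a) / len(sample_a)  # squared standard error, group A
    se2_b = variance(sample_b) / len(sample_b)  # squared standard error, group B
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(sample_a) - 1) + se2_b ** 2 / (len(sample_b) - 1)
    )
    return t, df


if __name__ == "__main__":
    # Hypothetical task completion times in seconds for two designs.
    design_a = [40, 50, 60]
    design_b = [30, 40, 50]
    print(describe(design_a))
    print(describe(design_b))
    t, df = welch_t(design_a, design_b)
    print(f"t = {t:.3f}, df = {df:.1f}")
```

Turning the t statistic into a p-value requires the t distribution, which the standard library does not provide; in practice a statistics package such as SciPy (`scipy.stats.ttest_ind` with `equal_var=False`) would handle the whole test.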

Q 18. How familiar are you with different types of ergonomic assessments?

I’m proficient in various ergonomic assessment techniques. These range from simple checklists and questionnaires to more sophisticated methods involving physical measurements and biomechanical modeling. For example, using the Rapid Upper Limb Assessment (RULA) methodology allows me to evaluate the risk of musculoskeletal disorders associated with specific postures and movements during computer work. RULA involves scoring different body postures based on established criteria, providing a quantitative assessment of risk. Similarly, the Rapid Entire Body Assessment (REBA) extends RULA to evaluate the entire body.

I also have experience conducting workplace walkthroughs to identify potential ergonomic hazards in the physical environment. This might include assessing workstation setup, lighting, and noise levels. For more detailed assessments, I might utilize motion capture technology to analyze worker movements and identify areas of strain or discomfort. Beyond physical assessments, I also incorporate subjective assessments, including questionnaires like the Nordic Musculoskeletal Questionnaire (NMQ) to gauge worker self-reported discomfort and symptoms. These combined methodologies allow for a holistic understanding of the ergonomic challenges present.

Q 19. What software or tools do you use for data visualization of usability test results?

For data visualization, I primarily use tools that allow for both interactive and static visualizations. Microsoft Excel and Google Sheets are commonly used for generating basic charts and graphs (bar charts, pie charts, scatter plots) to represent key metrics from usability studies. However, for more complex visualizations and interactive dashboards, I often turn to tools like Tableau or Power BI. These advanced tools offer extensive customization options and the ability to create dynamic visualizations that can help stakeholders understand the data more easily. For example, I might use a heatmap to visualize user attention patterns on a website or a user flow diagram to display the sequence of steps taken during a task.

Beyond these tools, I frequently utilize specialized software for usability testing platforms, which often include built-in data visualization tools for analysis and report generation. Ultimately, the choice of software depends on the complexity of the data, the desired level of detail in the visualization, and the target audience for the report. The key is to create visualizations that are clear, concise, and effective in communicating findings.

Q 20. How do you handle conflicting requirements from stakeholders during the design process?

Handling conflicting stakeholder requirements is a common challenge in design. My approach involves fostering open communication and collaboration from the outset. I start by clearly documenting all requirements, ensuring everyone understands the scope and objectives of the project. Then, I facilitate workshops or meetings where stakeholders can openly discuss their needs and priorities. This often reveals underlying assumptions or misunderstandings that can be addressed through collaborative problem-solving.

When direct conflict arises, I facilitate prioritization using techniques like MoSCoW analysis (Must have, Should have, Could have, Won’t have). This involves ranking requirements based on their importance and feasibility. I present a prioritized list of requirements to the stakeholders, explaining the rationale behind the prioritization. Sometimes, compromise is necessary. I work with stakeholders to find solutions that meet the most critical needs while mitigating potential trade-offs. Throughout the process, I emphasize user-centered design principles, ensuring that the final design meets the most critical user needs, even if compromises need to be made on less crucial stakeholder requests. Prioritizing user needs over conflicting stakeholder requirements is key to developing a truly usable product.

Q 21. How do you prioritize usability issues based on severity and frequency?

Prioritizing usability issues requires a structured approach. I commonly use a severity and frequency matrix. This involves categorizing each usability issue based on its severity (e.g., catastrophic, critical, major, minor) and its frequency (e.g., always, often, sometimes, rarely). This matrix provides a clear visual representation of the relative importance of each issue. The matrix typically weights severity more heavily, meaning a critical issue occurring infrequently might still get prioritized over a minor issue that occurs very often.

For example, a critical error that prevents users from completing a key task (high severity, high frequency) would be prioritized over a minor cosmetic issue that only affects a few users (low severity, low frequency). I often involve stakeholders in the prioritization process, explaining the rationale behind the severity and frequency ratings. This collaborative approach ensures buy-in and shared understanding of which issues to address first. The matrix allows me to focus design efforts on the most impactful usability improvements, maximizing return on investment.
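A severity and frequency matrix like the one described above can be reduced to a simple score for sorting. In this Python sketch, the numeric scales and the decision to weight severity twice as heavily as frequency are assumptions chosen for illustration, and the issue list is hypothetical; teams typically calibrate their own scales.

```python
# Assumed ordinal scales; the category names follow the examples in the text.
SEVERITY = {"minor": 1, "major": 2, "critical": 3, "catastrophic": 4}
FREQUENCY = {"rarely": 1, "sometimes": 2, "often": 3, "always": 4}
SEVERITY_WEIGHT = 2  # assumption: severity counts twice as much as frequency


def priority_score(issue):
    """Weighted score; higher means the issue should be addressed sooner."""
    return SEVERITY_WEIGHT * SEVERITY[issue["severity"]] + FREQUENCY[issue["frequency"]]


def prioritize(issues):
    """Return issues sorted from highest to lowest priority."""
    return sorted(issues, key=priority_score, reverse=True)


if __name__ == "__main__":
    # Hypothetical issue backlog.
    backlog = [
        {"name": "Low-contrast footer link", "severity": "minor", "frequency": "always"},
        {"name": "Checkout fails on submit", "severity": "critical", "frequency": "often"},
        {"name": "Data loss on timeout", "severity": "critical", "frequency": "rarely"},
    ]
    for issue in prioritize(backlog):
        print(priority_score(issue), issue["name"])
```

With this weighting, a critical issue that occurs rarely (score 7) still ranks above a minor issue that occurs always (score 6), matching the behavior described in the answer.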

Q 22. How do you measure the effectiveness of design changes after implementation?

Measuring the effectiveness of design changes post-implementation is crucial for iterative improvement. We don’t just rely on gut feeling; we employ a multifaceted approach combining quantitative and qualitative data.

- Quantitative Methods: We track key performance indicators (KPIs) like task completion rates, error rates, time on task, and user satisfaction scores (often through surveys or in-app feedback mechanisms). For example, if we redesigned an e-commerce checkout process, we’d compare conversion rates before and after the change. A significant increase would indicate success.

- Qualitative Methods: We conduct post-implementation user testing, often involving usability testing sessions where we observe users interacting with the redesigned interface and gather feedback through interviews or think-aloud protocols. This helps us understand the ‘why’ behind the quantitative data – perhaps a design change improved efficiency but caused confusion elsewhere. We use tools like Human Factors Toolkit to analyze these session recordings.

- A/B Testing: For specific features or changes, A/B testing is extremely valuable. We expose different user groups to the original design (A) and the revised design (B) and compare their performance across our KPIs. This controlled experiment isolates the impact of the change.

By combining these methods, we gain a holistic understanding of the design change’s effectiveness, enabling informed decisions for future iterations. We document all findings in detailed reports, which are shared with stakeholders.

Q 23. Explain your experience with user research methodologies.

My experience with user research methodologies is extensive, encompassing a range of techniques tailored to the specific project needs. I’m proficient in both generative and evaluative research methods.

- Generative Research (understanding user needs): This includes conducting user interviews, contextual inquiries (observing users in their natural environment), focus groups, and ethnographic studies. For example, to design a new mobile banking app, I’d conduct contextual inquiries to observe users managing their finances in their daily lives.

- Evaluative Research (testing designs): This involves usability testing (both moderated and unmoderated), A/B testing, heuristic evaluations (applying established usability principles), and cognitive walkthroughs (simulating user mental processes). I regularly leverage tools like UXD to manage and analyze data from usability tests.

I’m adept at creating research plans, recruiting participants, conducting sessions, analyzing data, and creating reports that clearly communicate findings to stakeholders. My approach is always user-centered, aiming to deeply understand user needs and behaviors to inform design decisions. I also prioritize ethical considerations in all research activities, ensuring informed consent and data privacy.

Q 24. Describe a time you had to advocate for user-centered design in a challenging situation.

In a previous project, we were under immense pressure to launch a new software application quickly. The initial design prioritized speed of development over user experience. The interface was cluttered, navigation was confusing, and crucial functionality was poorly placed.

I advocated for a user-centered approach, presenting data from initial usability tests that showed high error rates and low user satisfaction. I argued that releasing a poorly designed product would not only harm user experience but also damage the company’s reputation and potentially lead to high customer support costs.

I proposed a phased approach, incorporating iterative user testing throughout the development process. This allowed for course correction based on real user feedback. While there was initial resistance due to the tight deadline, I managed to build consensus by demonstrating the long-term benefits of a well-designed product. The final product, while launched slightly later than originally planned, enjoyed significantly higher user satisfaction and much lower support costs, proving my advocacy worthwhile.

Q 25. How do you stay up-to-date with the latest trends in human factors and UX design?

Staying current in human factors and UX design is a continuous process. I utilize several strategies to ensure I’m up-to-date with the latest trends and research.

- Conferences and Workshops: I regularly attend industry conferences (like CHI and UXPA) and workshops to learn about new methodologies, tools, and best practices from leading experts.

- Publications and Journals: I subscribe to relevant journals, such as the International Journal of Human-Computer Interaction, and regularly read the articles and research papers they publish.

- Online Resources: I follow key influencers and thought leaders on platforms like Twitter and LinkedIn, and I subscribe to reputable UX newsletters and blogs.

- Online Courses and Training: I actively participate in online courses and training programs offered by platforms like Coursera and Udemy to deepen my knowledge in specific areas.

By diversifying my learning sources, I ensure that my knowledge base remains current and relevant, enabling me to apply the latest advancements in my work.

Q 26. What are some common usability problems you have encountered and how did you solve them?

Throughout my career, I’ve encountered many common usability problems. Here are a couple of examples and how I addressed them:

- Problem: Inconsistent navigation patterns across a website. Users were confused and frustrated when trying to find information.

Solution: I conducted a comprehensive heuristic evaluation of the website’s information architecture. This revealed inconsistent use of menus, labels, and internal links. I then redesigned the navigation using clear, consistent labeling, intuitive information hierarchy, and standardized link styles. Post-redesign testing showed a significant improvement in task completion rates and user satisfaction.

- Problem: A mobile app’s form design was cluttered, and users were making frequent errors during input.

Solution: Using the principles of progressive disclosure, I redesigned the form, breaking it into smaller, logically grouped sections. We also incorporated clear input validation and error messages to guide users. This reduced error rates and improved overall user experience. The Human Factors Toolkit helped in tracking error rates and analyzing user behavior during form completion.

My approach to solving usability problems always involves a combination of user research, data analysis, and iterative design. I use various tools, such as Ergotron equipment for ergonomic workstation setups and UXD for usability testing, to support my problem-solving process.
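To back up claims like "reduced error rates" or "improved task completion rates" in an interview, it helps to show how you would compute those numbers. The following is a minimal sketch, assuming a hypothetical `Session` record layout and invented sample data; it does not reflect the export format of the Human Factors Toolkit, UXD, or any other tool.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """One participant's attempt at a task (hypothetical record layout)."""
    design: str      # "before" or "after" the redesign
    completed: bool  # whether the participant finished the task
    errors: int      # number of input errors observed


def summarize(sessions, design):
    """Return (completion rate, mean errors per session) for one design."""
    subset = [s for s in sessions if s.design == design]
    if not subset:
        raise ValueError(f"no sessions recorded for design {design!r}")
    completion_rate = sum(s.completed for s in subset) / len(subset)
    mean_errors = mean(s.errors for s in subset)
    return completion_rate, mean_errors


# Made-up illustrative data, not results from any real study.
sessions = [
    Session("before", True, 3),
    Session("before", False, 5),
    Session("before", True, 4),
    Session("before", False, 4),
    Session("after", True, 1),
    Session("after", True, 0),
    Session("after", True, 2),
    Session("after", False, 1),
]

before_rate, before_errors = summarize(sessions, "before")
after_rate, after_errors = summarize(sessions, "after")
print(f"before: {before_rate:.0%} completed, {before_errors:.1f} errors/session")
print(f"after:  {after_rate:.0%} completed, {after_errors:.1f} errors/session")
```

Reporting both metrics side by side for the old and new designs gives stakeholders a concrete before/after comparison instead of a general impression of improvement.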

Q 27. How familiar are you with the principles of Gestalt psychology and their application to UI design?

I am very familiar with the principles of Gestalt psychology and their application to UI design. Gestalt principles describe how humans perceive visual information holistically, rather than as individual elements. Understanding these principles is crucial for creating intuitive and effective user interfaces.

- Proximity: Grouping related elements together visually creates a sense of connection and improves understanding. For example, grouping form fields related to billing information creates visual clarity.

- Similarity: Using consistent visual styles (color, shape, size) for similar elements helps users to perceive them as related. For instance, using the same button style consistently improves navigation predictability.

- Closure: Humans tend to perceive incomplete shapes as complete, allowing for the use of simple, uncluttered designs. A logo that uses negative space effectively relies on this principle.

- Continuity: Elements arranged in a line or curve are perceived as related, promoting smooth visual flow. For example, aligning the steps of a multi-step form along a single line helps users read them as one sequence.

- Figure/Ground: The design should clearly differentiate the focal point (figure) from the background (ground). This principle is fundamental for creating clear visual hierarchy.

By consciously applying these principles, designers can create visually appealing and easy-to-understand interfaces that guide user behavior effectively. Incorporating Gestalt principles helps reduce cognitive load and improves overall usability.

Q 28. Describe your experience working with cross-functional teams on UX projects.

I have extensive experience working with cross-functional teams on UX projects. Effective collaboration is essential for successful outcomes. My approach focuses on fostering clear communication and mutual understanding.

- Collaboration Tools: I utilize various collaboration tools like project management software (Jira, Asana), shared design platforms (Figma, Adobe XD), and communication platforms (Slack, Microsoft Teams) to facilitate seamless teamwork.

- Clear Communication: I prioritize clear and concise communication of user research findings, design decisions, and project progress to all stakeholders. This involves creating easily understandable reports, presentations, and documentation.

- Stakeholder Management: I proactively engage with stakeholders from different disciplines (developers, marketing, product managers) to align on project goals, manage expectations, and address concerns. Regular feedback sessions are key to keeping everyone informed.

- Constructive Feedback: I actively encourage and provide constructive feedback within the team, promoting a culture of continuous improvement. I also know how to effectively present my ideas and incorporate feedback from team members.

My experience demonstrates that successful collaboration requires active listening, empathy, and a strong commitment to a shared vision. I believe that a well-functioning, cross-functional team is the key to creating impactful UX solutions.

Key Topics to Learn for Experience in using human factors software (e.g., Human Factors Toolkit, Ergotron, UXD) Interview

- Understanding Human Factors Principles: Review core human factors concepts like ergonomics, usability, and cognitive psychology. How do these principles inform the design and evaluation of products and systems?

- Software Proficiency: Demonstrate familiarity with the specific software mentioned (Human Factors Toolkit, Ergotron, UXD). Practice using their key features and functionalities. Be prepared to discuss specific projects where you utilized these tools.

- Data Analysis and Interpretation: Human factors often involves data collection and analysis. Review methods for analyzing user data, identifying usability issues, and presenting findings clearly and concisely. Practice interpreting data generated by the software you’ve used.

- Methodology & Research Design: Understand different research methodologies used in human factors, such as heuristic evaluation, usability testing, and cognitive walkthroughs. How would you design a study using these tools and methodologies?

- Problem-Solving and Critical Thinking: Be ready to discuss how you’ve used these software tools to identify and solve problems related to human-computer interaction, workplace ergonomics, or product design. Provide concrete examples of your problem-solving process.

- Collaboration and Communication: Human factors work often involves teamwork. Prepare to discuss your experience collaborating with designers, engineers, and other stakeholders to improve user experience and workplace safety.

Next Steps

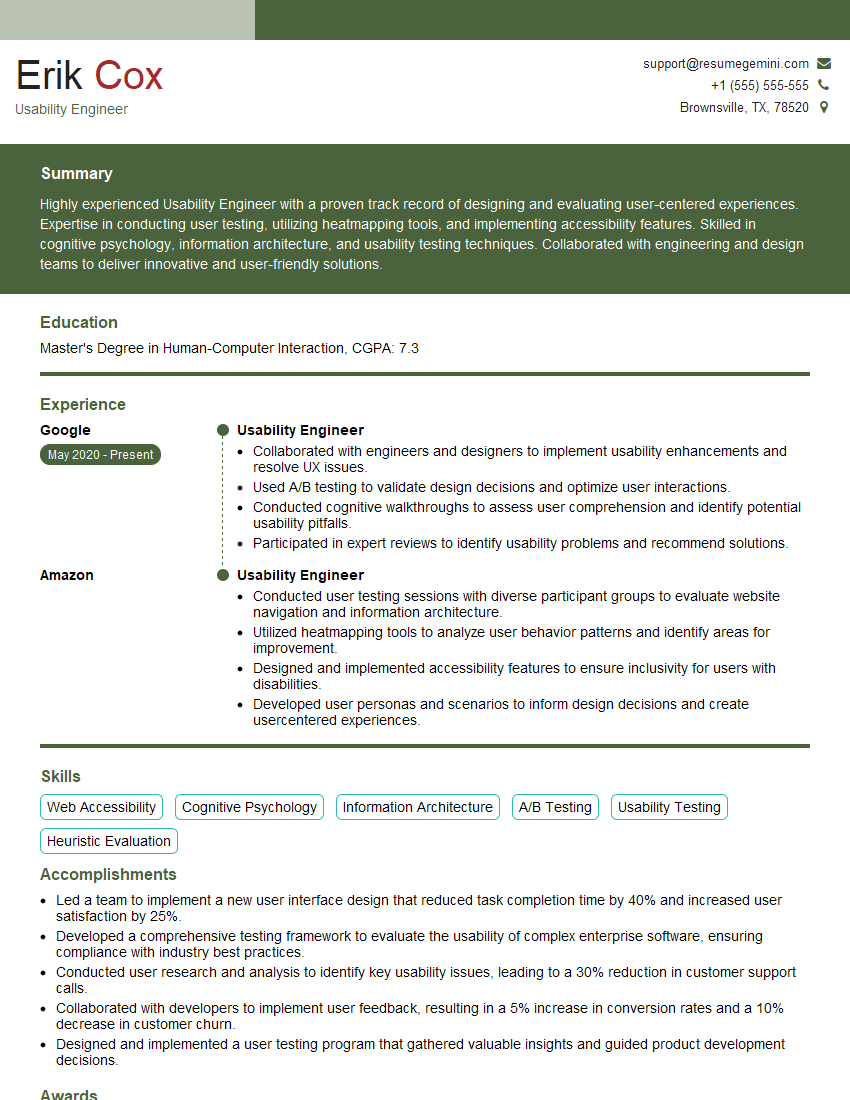

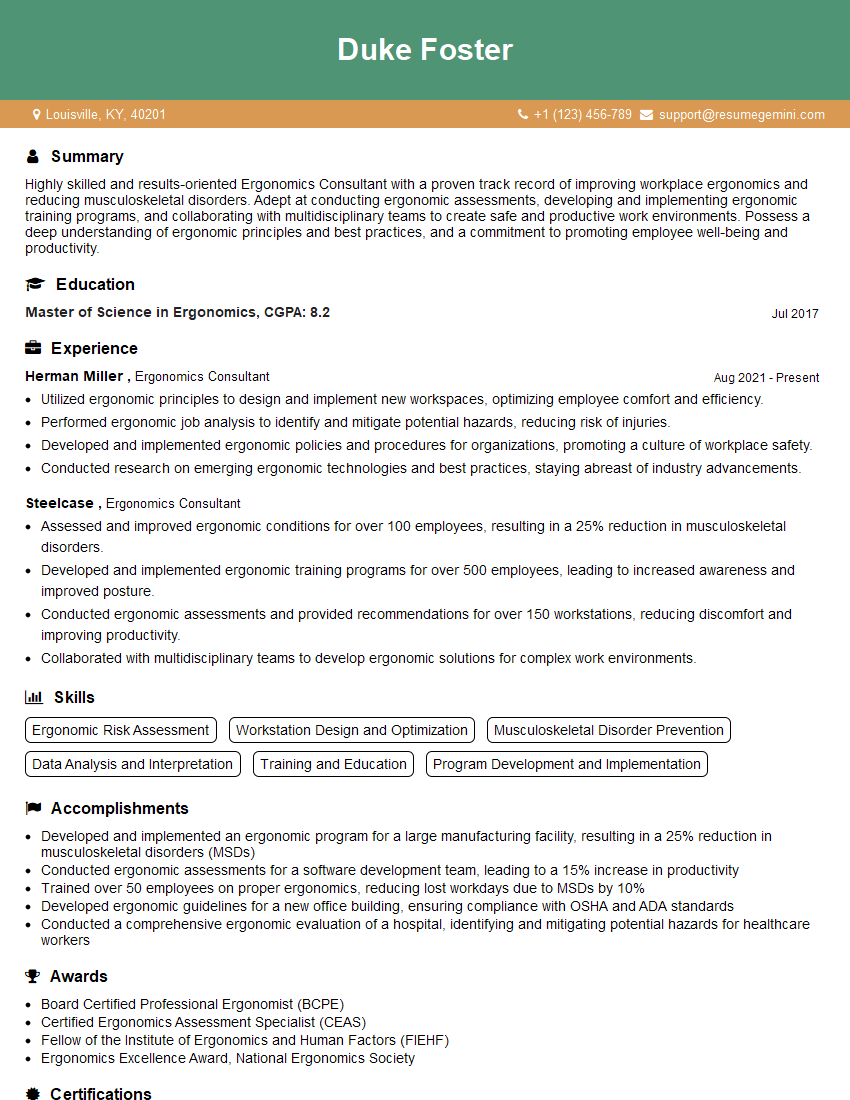

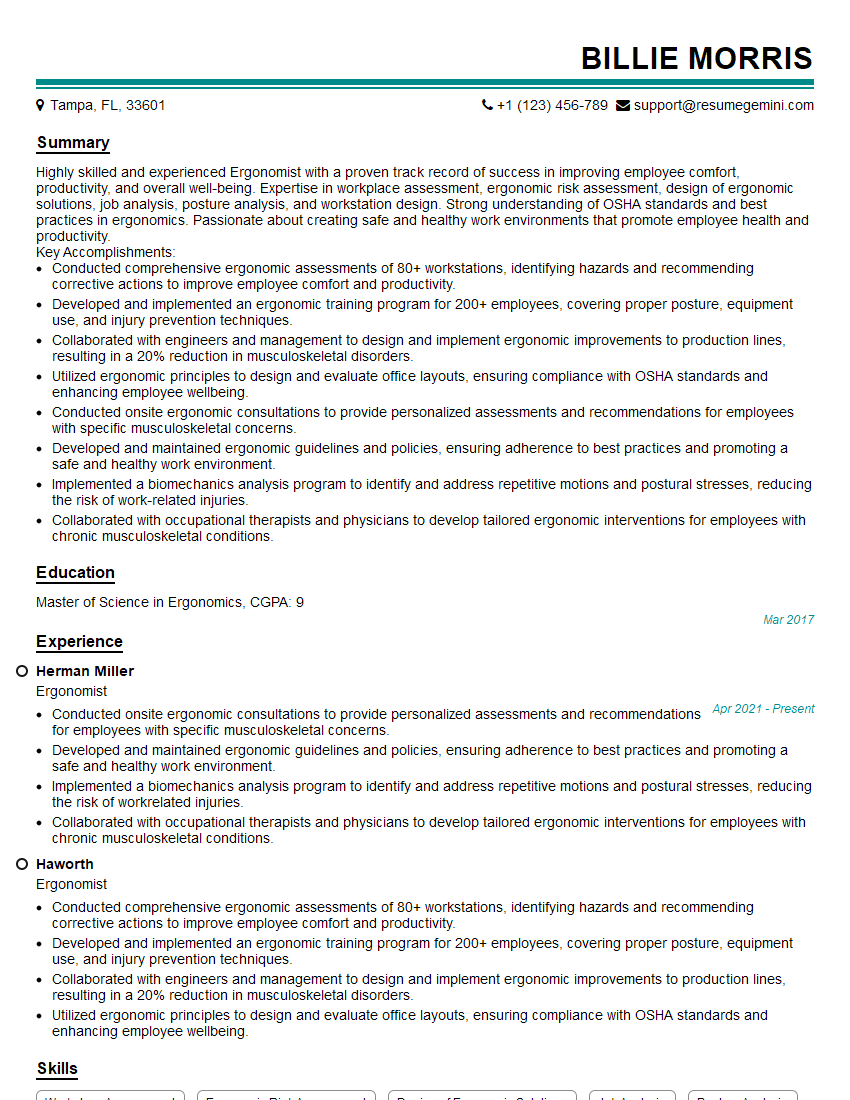

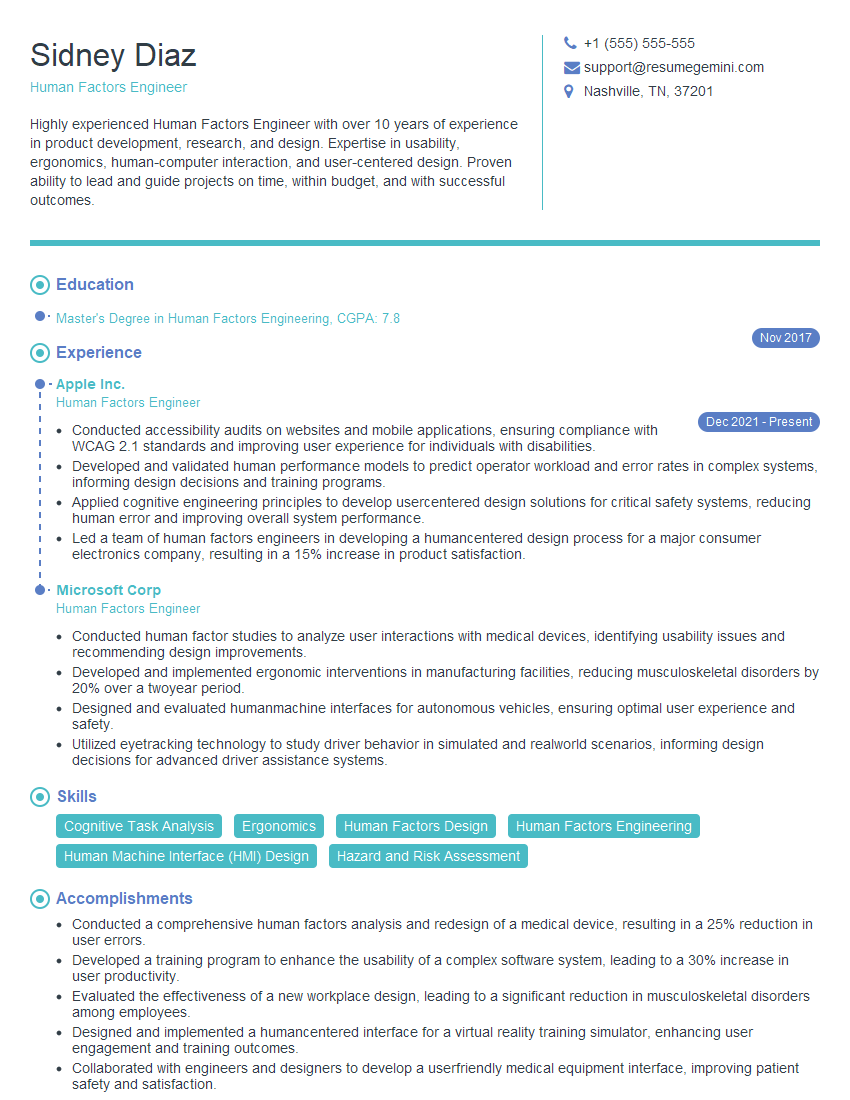

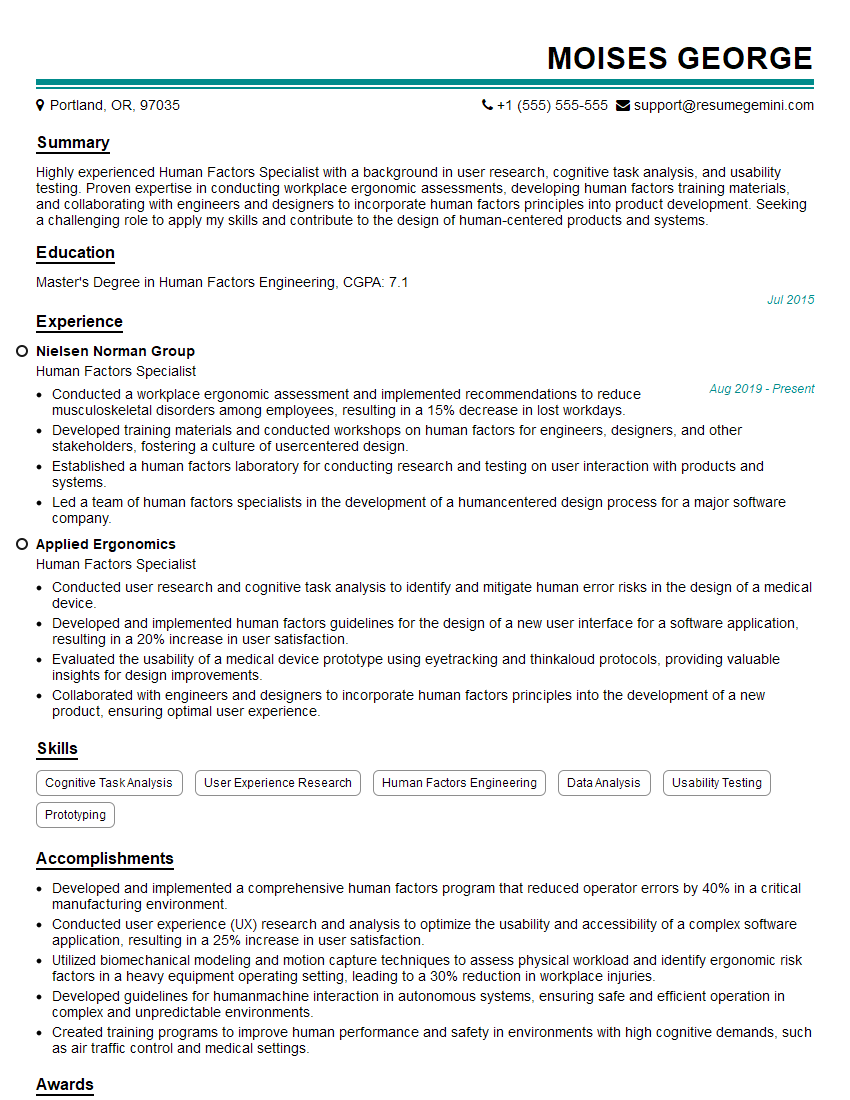

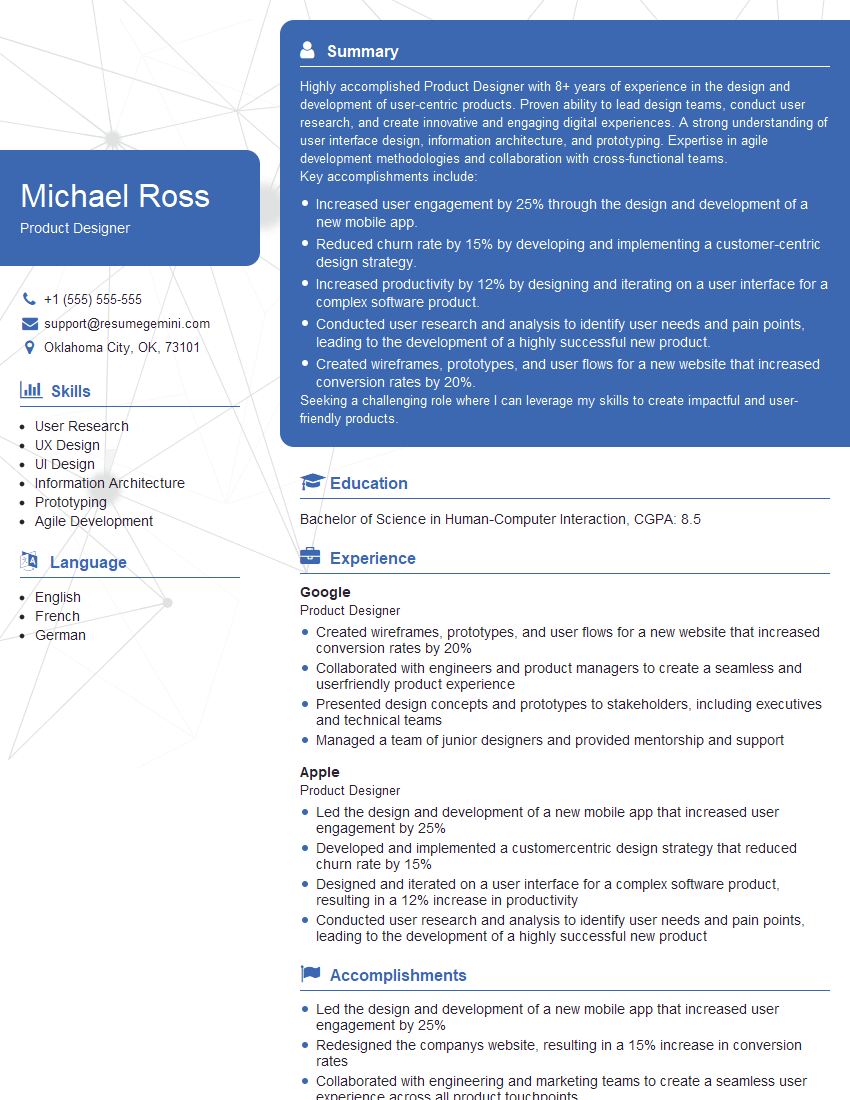

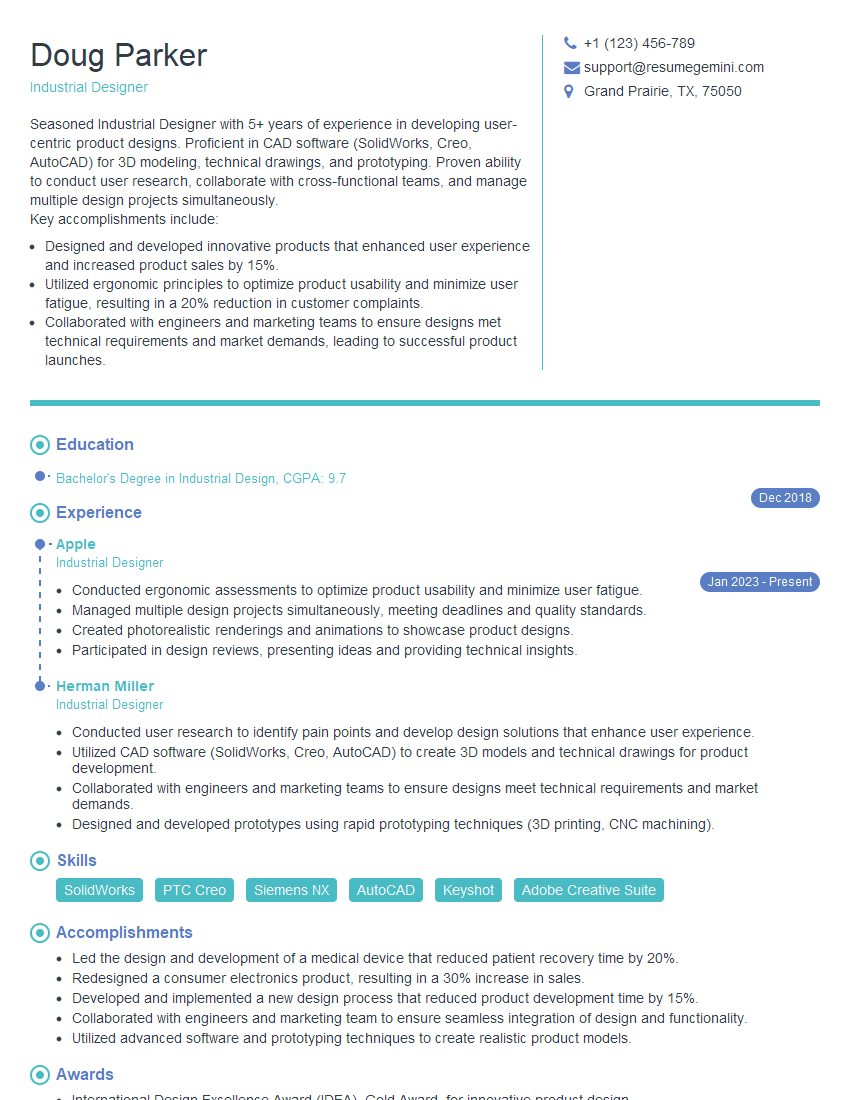

Mastering human factors software is crucial for career advancement in fields demanding user-centered design and ergonomic solutions. A strong understanding of these tools, coupled with a well-structured resume, significantly increases your chances of landing your dream job. Creating an ATS-friendly resume is paramount. To ensure your resume effectively highlights your skills and experience, leverage the power of ResumeGemini. ResumeGemini offers a streamlined approach to resume building, helping you craft a professional document that stands out. Examples of resumes tailored to showcasing experience with Human Factors Toolkit, Ergotron, and UXD are available to guide you.
