Unlock your full potential by mastering the most common interview questions about experience with packet capture and analysis software. This blog offers a deep dive into the critical topics, so you’re prepared not only to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in a Packet Capture and Analysis Software Interview

Q 1. Explain the difference between TCP and UDP.

TCP (Transmission Control Protocol) and UDP (User Datagram Protocol) are both core protocols in the internet protocol suite (IP suite), but they differ significantly in how they handle data transmission. Think of it like sending a package: TCP is like sending a registered package requiring a signature upon delivery – it ensures reliable and ordered delivery. UDP is like sending a postcard – it’s faster but doesn’t guarantee delivery or order.

- TCP: Connection-oriented, reliable, ordered delivery, uses acknowledgements (ACKs) to confirm receipt, handles retransmissions in case of packet loss, slower due to overhead. Excellent for applications requiring high reliability, such as web browsing (HTTP), email (SMTP), and file transfer (FTP).

- UDP: Connectionless, unreliable, unordered delivery, no acknowledgements, faster and more efficient than TCP because it has less overhead. Suitable for applications where speed is prioritized over reliability, such as streaming video (RTP), online gaming, and DNS lookups.

In a nutshell: Choose TCP when reliability is paramount; choose UDP when speed is the priority.
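Part of the overhead difference is visible in the headers themselves. The minimal Python sketch below uses only the standard struct module and the fixed header layouts from the protocol specifications: an 8-byte UDP header and a 20-byte minimum TCP header (no options). The sample port numbers are illustrative.

```python
import struct

# UDP header: source port, destination port, length, checksum (four 16-bit fields).
UDP_HEADER = struct.Struct("!HHHH")
# Minimum TCP header without options: ports, sequence number, acknowledgement number,
# data offset + flags, window, checksum, urgent pointer.
TCP_HEADER = struct.Struct("!HHIIHHHH")


def parse_udp_header(datagram: bytes) -> dict:
    """Decode the fixed 8-byte UDP header at the start of a datagram."""
    src, dst, length, checksum = UDP_HEADER.unpack_from(datagram)
    return {"src_port": src, "dst_port": dst, "length": length, "checksum": checksum}


# Illustrative datagram to port 53: the length field covers header + 12-byte payload.
sample = UDP_HEADER.pack(50000, 53, UDP_HEADER.size + 12, 0) + b"\x00" * 12

print(UDP_HEADER.size, TCP_HEADER.size)  # 8 20
print(parse_udp_header(sample))
```

The extra TCP fields (sequence and acknowledgement numbers, window, flags) are what make ordering and retransmission possible; UDP has none of them and leaves reliability to the application.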

Q 2. What is a packet capture and how does it work?

Packet capture is the process of intercepting and saving network traffic passing through a network interface. It’s like recording a conversation on a phone line, but instead of voices, you’re recording the digital data packets that make up network communication. This allows you to analyze the content and timing of network interactions, identifying potential problems or security breaches.

It works by using software such as Wireshark or tcpdump which, through a capture library like libpcap (or Npcap on Windows), asks the operating system for a copy of each frame the network interface card (NIC) sends or receives. The software can display these packets live or write them to a file for later analysis. During capture the NIC is often put into promiscuous mode, so it passes up every frame it sees on the network segment, not just frames addressed to the capturing machine. On a switched network, however, the switch only forwards frames destined for that port (plus broadcasts), so seeing other hosts’ traffic usually also requires a mirror (SPAN) port or a network tap.
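To show what the capture software receives, here is a minimal Python sketch. Real tools go through libpcap; this sketch instead assumes Linux, where a raw AF_PACKET socket (root or CAP_NET_RAW required) delivers whole Ethernet frames. It does not enable promiscuous mode, and the interface name you pass is your own. The parsing part runs anywhere.

```python
import socket
import struct

ETH_P_ALL = 0x0003  # Linux constant: deliver frames of every EtherType


def format_mac(raw: bytes) -> str:
    """Render 6 raw bytes as a colon-separated MAC address."""
    return ":".join(f"{b:02x}" for b in raw)


def parse_ethernet(frame: bytes) -> tuple:
    """Return (destination MAC, source MAC, EtherType) from an Ethernet II frame."""
    dst, src, ethertype = struct.unpack("!6s6sH", frame[:14])
    return format_mac(dst), format_mac(src), ethertype


def capture_one_frame(interface: str) -> bytes:
    """Linux-only sketch: read one raw frame from `interface` (needs root/CAP_NET_RAW).

    Unlike tcpdump, this does not switch the NIC into promiscuous mode.
    """
    with socket.socket(socket.AF_PACKET, socket.SOCK_RAW, socket.ntohs(ETH_P_ALL)) as sock:
        sock.bind((interface, 0))  # protocol 0 keeps the socket's ETH_P_ALL setting
        frame, _addr = sock.recvfrom(65535)
        return frame


# Parse a hand-built broadcast ARP frame (EtherType 0x0806) without needing privileges.
demo = bytes.fromhex("ffffffffffff" "001122334455" "0806") + b"\x00" * 28
print(parse_ethernet(demo))  # ('ff:ff:ff:ff:ff:ff', '00:11:22:33:44:55', 2054)
```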

Q 3. Describe the functionality of Wireshark or tcpdump.

Wireshark and tcpdump are both powerful packet capture and analysis tools, but they differ in their approach. Think of Wireshark as a sophisticated, graphical recording studio, while tcpdump is more like a powerful, command-line recorder.

- Wireshark: A graphical network protocol analyzer. It provides a user-friendly interface to capture, filter, and analyze network traffic. It decodes various network protocols, providing a comprehensive view of the captured data. Features include real-time capture, filtering, protocol decoding, and advanced analysis capabilities.

- tcpdump: A command-line network packet analyzer. Working entirely from the command line makes it less approachable than Wireshark, but it is lightweight, runs on headless servers, and is often preferred for scripting or automating tasks. It’s excellent for capturing packets that match specific criteria and for recording large amounts of traffic with little overhead.

Both tools are invaluable for network troubleshooting and security analysis, with Wireshark favored for its ease of use and tcpdump preferred for its command-line efficiency and speed. The choice depends on the user’s comfort level and specific needs.
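Because tcpdump is driven purely by arguments, it is easy to wrap in scripts. The Python sketch below only assembles an argument list using well-known tcpdump options (-i, -nn, -w, -c); the interface name, filter, and output file are placeholders, and actually running it is left commented out because it needs tcpdump and capture privileges.

```python
import shlex
from typing import List, Optional


def build_tcpdump_command(interface: str, bpf_filter: str, outfile: str,
                          count: Optional[int] = None) -> List[str]:
    """Assemble an argument list for an unattended tcpdump capture.

    -i  selects the interface
    -nn disables host and port name resolution (faster, avoids DNS lookups)
    -w  writes raw packets to a pcap file instead of printing them
    -c  stops after `count` packets
    The BPF capture filter goes last as a single argument.
    """
    cmd = ["tcpdump", "-i", interface, "-nn", "-w", outfile]
    if count is not None:
        cmd += ["-c", str(count)]
    cmd.append(bpf_filter)
    return cmd


cmd = build_tcpdump_command("eth0", "host 192.168.1.100 and port 443", "web.pcap", count=1000)
print(shlex.join(cmd))  # shell-quoted form, handy for logging what a script will run
# Running it needs tcpdump installed and capture privileges, e.g.:
#   import subprocess; subprocess.run(cmd, check=True)
```

Passing a list (rather than one shell string) avoids quoting problems with filter expressions that contain spaces.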

Q 4. How would you use a packet capture to troubleshoot a network connectivity issue?

Using packet capture to troubleshoot network connectivity issues is a systematic process. Here’s a step-by-step approach:

- Identify the problem: Clearly define the issue. Is it a specific application not connecting, slow performance, or complete network outage?

- Set up the capture: Use Wireshark or tcpdump on a machine involved in the communication path. Include relevant filters (e.g., IP addresses, ports, protocols) to reduce the amount of data captured, making analysis more manageable. For example, if you suspect a problem with a web server on IP 192.168.1.100, you’d filter by that IP.

- Reproduce the issue: Try to repeat the problem while the capture is running. This is crucial to capturing the relevant packets associated with the failure.

- Analyze the capture: Examine the captured packets for signs of problems. Look for dropped packets, incorrect sequence numbers (TCP), timeouts, or other anomalies. Wireshark’s protocol decoding capabilities are incredibly helpful here.

- Identify the cause: Based on the analysis, identify the root cause. Is it a firewall rule blocking traffic, a routing problem, a DNS resolution issue, or something else?

- Implement a solution: Once the root cause is identified, implement the appropriate fix (e.g., adjust firewall rules, reconfigure routing, check DNS settings).

- Verify the solution: After implementing the solution, repeat the packet capture to confirm the issue has been resolved.

For example, if a web page isn’t loading, you might see SYN packets that never receive a SYN-ACK, indicating a firewall or routing problem; an immediate RST instead usually means nothing is listening on that port.
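The SYN/SYN-ACK check from the example above can be sketched in Python. The Segment class is a hypothetical, simplified stand-in for decoded packets (in practice you would export these fields from Wireshark or tshark); the sketch separates silent failures from refused connections.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple

Conn = Tuple[str, int, str, int]  # (client IP, client port, server IP, server port)


@dataclass(frozen=True)
class Segment:
    """Hypothetical, simplified view of one decoded TCP segment."""
    src: str
    sport: int
    dst: str
    dport: int
    flags: FrozenSet[str]  # e.g. frozenset({"SYN"}), frozenset({"SYN", "ACK"})


def failed_handshakes(segments: List[Segment]) -> Tuple[List[Conn], List[Conn]]:
    """Split connection attempts that never got a SYN-ACK into (unanswered, refused).

    unanswered: SYNs met with silence, often a firewall drop or routing problem.
    refused:    SYNs answered with RST, usually nothing listening on that port.
    """
    attempts = {}  # dict keeps first-seen order and ignores SYN retransmissions
    answered, reset = set(), set()
    for s in segments:
        reverse = (s.dst, s.dport, s.src, s.sport)  # 4-tuple from the client's side
        if s.flags == {"SYN"}:
            attempts[(s.src, s.sport, s.dst, s.dport)] = None
        elif {"SYN", "ACK"} <= s.flags:
            answered.add(reverse)
        elif "RST" in s.flags:
            reset.add(reverse)
    pending = [c for c in attempts if c not in answered]
    unanswered = [c for c in pending if c not in reset]
    refused = [c for c in pending if c in reset]
    return unanswered, refused


capture = [
    Segment("10.0.0.5", 51000, "192.168.1.100", 80, frozenset({"SYN"})),
    Segment("10.0.0.5", 51000, "192.168.1.100", 80, frozenset({"SYN"})),  # retransmitted
    Segment("10.0.0.5", 51001, "192.168.1.100", 8080, frozenset({"SYN"})),
    Segment("192.168.1.100", 8080, "10.0.0.5", 51001, frozenset({"RST", "ACK"})),
    Segment("10.0.0.5", 51002, "192.168.1.100", 443, frozenset({"SYN"})),
    Segment("192.168.1.100", 443, "10.0.0.5", 51002, frozenset({"SYN", "ACK"})),
]
print(failed_handshakes(capture))
```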

Q 5. What are the common file formats used for storing packet captures?

Several common file formats are used to store packet captures. The most prevalent ones are:

- pcap (libpcap): The de facto standard, supported by most packet capture and analysis tools including Wireshark and tcpdump. It’s a binary format.

- pcapng (PCAP Next Generation): A newer, more flexible format and Wireshark’s default. It offers features the older pcap format lacks, such as packets from multiple interfaces in one file, per-packet comments, and richer metadata like name-resolution records.

- Other formats: Some tools might use proprietary formats. However, pcap and pcapng are the most widely used and compatible options, ensuring interoperability between different analysis tools.
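To make the classic pcap layout concrete, this Python sketch writes and reads it with the struct module: a 24-byte global header (magic number 0xA1B2C3D4 for microsecond timestamps, version 2.4, snapshot length, link type 1 for Ethernet) followed by a 16-byte header per packet. It handles only the little-endian microsecond variant; pcapng files start with a different block structure and need a separate parser.

```python
import struct

PCAP_MAGIC_USEC = 0xA1B2C3D4  # classic pcap with microsecond timestamps
LINKTYPE_ETHERNET = 1

# Little-endian layouts ("<"); readers detect byte order from how the magic number reads back.
GLOBAL_HEADER = struct.Struct("<IHHiIII")  # magic, major, minor, thiszone, sigfigs, snaplen, linktype
RECORD_HEADER = struct.Struct("<IIII")     # ts_sec, ts_usec, captured length, original length


def pcap_bytes(packets, snaplen=65535):
    """Serialize (timestamp_seconds, frame_bytes) pairs into a classic pcap file image."""
    out = bytearray(GLOBAL_HEADER.pack(PCAP_MAGIC_USEC, 2, 4, 0, 0, snaplen, LINKTYPE_ETHERNET))
    for ts, frame in packets:
        sec, usec = divmod(round(ts * 1_000_000), 1_000_000)
        data = frame[:snaplen]  # frames longer than snaplen are truncated, as a capture tool would
        out += RECORD_HEADER.pack(sec, usec, len(data), len(frame))
        out += data
    return bytes(out)


def read_global_header(blob):
    """Parse the 24-byte global header, rejecting anything that is not this exact variant."""
    magic, major, minor, _zone, _sigfigs, snaplen, linktype = GLOBAL_HEADER.unpack_from(blob)
    if magic != PCAP_MAGIC_USEC:
        raise ValueError("not a little-endian microsecond pcap file (could be pcapng or another variant)")
    return {"version": (major, minor), "snaplen": snaplen, "linktype": linktype}


blob = pcap_bytes([(1.5, b"\x00" * 60)])
print(len(blob), read_global_header(blob))
```

Writing the resulting bytes to a .pcap file should produce something Wireshark or tcpdump -r can open, since they read this classic format.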

Q 6. How do you filter packets in Wireshark or tcpdump based on specific criteria (e.g., port, protocol, IP address)?

Filtering packets is crucial for managing the volume of data in a capture. There are two kinds of filters: capture filters, which tcpdump uses (and Wireshark also accepts when starting a capture), and display filters, which are Wireshark’s own post-capture filtering language.

- Wireshark (Display Filters): These filters are applied to packets that have already been captured (during or *after* a live capture). They only control which packets are displayed; nothing is discarded. Examples include:

  - ip.addr == 192.168.1.100 (show packets to or from IP 192.168.1.100)
  - tcp.port == 80 (show TCP packets on port 80, HTTP)
  - tcp.port == 443 (show TCP packets on port 443, HTTPS)
  - http (show HTTP packets)

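- tcpdump (Capture Filters): These filters are applied *during* the capture, defining which packets are saved to the capture file. This is more efficient because it reduces the amount of data recorded, but anything filtered out is gone for good. The syntax is the Berkeley Packet Filter (BPF) language, which differs from Wireshark’s display filters (for example, host 192.168.1.100 rather than ip.addr == 192.168.1.100).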

  - tcpdump -i eth0 host 192.168.1.100 (capture packets to or from IP 192.168.1.100 on interface eth0)
  - tcpdump -i eth0 port 22 (capture packets on port 22, SSH)

Both approaches utilize a powerful filter language, and the exact syntax and available options might vary slightly between tools and versions. Consulting the documentation is recommended for precise control and advanced filtering techniques.
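One semantic detail worth knowing for interviews: ip.addr == X matches when X is the source or the destination, and tcp.port == N works the same way for ports. The Python sketch below mimics that behaviour on hypothetical pre-decoded packets (plain dicts). It is an illustration of post-capture filtering, not Wireshark’s actual filter engine.

```python
from typing import Callable, Dict, List

Packet = Dict[str, object]  # hypothetical decoded packet (field name -> value)
Predicate = Callable[[Packet], bool]


def ip_addr(addr: str) -> Predicate:
    """Mimics ip.addr == X: matches if X is the source OR the destination."""
    return lambda p: addr in (p.get("ip.src"), p.get("ip.dst"))


def tcp_port(port: int) -> Predicate:
    """Mimics tcp.port == N: TCP packets whose source OR destination port is N."""
    return lambda p: p.get("proto") == "tcp" and port in (p.get("sport"), p.get("dport"))


def all_of(*preds: Predicate) -> Predicate:
    """Logical AND, like && in a display filter."""
    return lambda p: all(pred(p) for pred in preds)


def display_filter(packets: List[Packet], pred: Predicate) -> List[Packet]:
    """Post-capture filtering: the capture is untouched; only the returned view shrinks."""
    return [p for p in packets if pred(p)]


capture = [
    {"ip.src": "10.0.0.5", "ip.dst": "192.168.1.100", "proto": "tcp", "sport": 51000, "dport": 443},
    {"ip.src": "192.168.1.100", "ip.dst": "10.0.0.5", "proto": "tcp", "sport": 443, "dport": 51000},
    {"ip.src": "10.0.0.5", "ip.dst": "10.0.0.53", "proto": "udp", "sport": 53000, "dport": 53},
]
server_https = display_filter(capture, all_of(ip_addr("192.168.1.100"), tcp_port(443)))
print(len(server_https), len(capture))  # 2 3
```

Both directions of the HTTPS conversation match, which is usually what you want when following one server’s traffic.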

Q 7. Explain the concept of a network protocol stack.

The network protocol stack is a layered architecture that defines how network communication is organized. Each layer has specific responsibilities and interacts with the layers above and below it. It’s analogous to a multi-story building, with each floor (layer) having its specific function.

The common model is the TCP/IP model (although other models exist), which comprises four main layers:

- Application Layer: This is the top layer, interacting directly with the user or application. Protocols here include HTTP, FTP, SMTP, DNS.

- Transport Layer: This layer handles end-to-end data transfer. TCP and UDP operate at this layer.

- Network Layer: Responsible for routing packets between networks. IP is the primary protocol at this layer.

- Link Layer: This is the bottom layer, dealing with physical transmission of data over the network media. Ethernet and Wi-Fi operate at this layer.

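Data travels down the stack from the application layer to the link layer on the sending host, and back up the stack from the link layer to the application layer on the receiving host. On the way down, each layer adds its own header (and sometimes a trailer, such as the Ethernet frame check sequence) carrying the information that layer needs; on the way up, each layer strips its header before passing the data to the layer above.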

Understanding the protocol stack is crucial for network troubleshooting. Problems can occur at any layer, and identifying the affected layer is critical for effective resolution.
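Encapsulation is easy to see by building a frame by hand. This Python sketch wraps an application payload in a UDP header, then an IPv4 header (with its checksum computed per RFC 1071), then an Ethernet II header. The ports, documentation-range IP addresses, and MAC addresses are made up, and the optional UDP checksum is left as 0 (allowed over IPv4).

```python
import struct


def ipv4_checksum(header: bytes) -> int:
    """Internet checksum: one's-complement sum of the header's 16-bit words."""
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total > 0xFFFF:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def encapsulate(payload: bytes) -> bytes:
    """Wrap an application payload in UDP, IPv4, and Ethernet headers (illustrative values)."""
    # Transport layer: UDP header; checksum 0 means "not computed", which IPv4 permits.
    udp = struct.pack("!HHHH", 40000, 53, 8 + len(payload), 0) + payload
    # Network layer: IPv4 header (version 4, IHL 5, TTL 64, protocol 17 = UDP).
    src, dst = bytes([192, 0, 2, 1]), bytes([198, 51, 100, 7])
    ip_hdr = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + len(udp), 0, 0, 64, 17, 0, src, dst)
    ip_hdr = ip_hdr[:10] + struct.pack("!H", ipv4_checksum(ip_hdr)) + ip_hdr[12:]
    # Link layer: Ethernet II header, EtherType 0x0800 = IPv4.
    eth = struct.pack("!6s6sH", b"\xaa" * 6, b"\xbb" * 6, 0x0800)
    return eth + ip_hdr + udp


frame = encapsulate(b"hello")
# The receiver peels the layers off in reverse order.
eth, ip_hdr, udp_hdr, payload = frame[:14], frame[14:34], frame[34:42], frame[42:]
print(len(eth), len(ip_hdr), len(udp_hdr), payload)  # 14 20 8 b'hello'
```

This is exactly the nesting that Wireshark’s packet-details pane shows as expandable layers.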

Q 8. What are some common network protocols you’d analyze using packet capture?

Packet capture analysis involves examining the raw data transmitted over a network. Many protocols are commonly analyzed, depending on the investigation’s goals. Some of the most frequent include:

- TCP (Transmission Control Protocol): A connection-oriented protocol providing reliable data delivery. Analyzing TCP packets helps you understand application communication, spot signs of packet loss such as retransmissions and duplicate ACKs (indicating potential network issues), and examine TCP flags (SYN, ACK, FIN, etc.) for insights into connection establishment and termination.

- UDP (User Datagram Protocol): A connectionless protocol offering faster but less reliable data transfer. Analyzing UDP packets is crucial for examining applications like DNS, streaming media (e.g., RTP), and VoIP, where slight data loss is acceptable for speed.

- HTTP (Hypertext Transfer Protocol): The foundation of web communication. Analyzing HTTP packets reveals website activity, including requests, responses, and data exchanged between a client (browser) and a server.

- HTTPS (Hypertext Transfer Protocol Secure): The secure version of HTTP, using TLS (formerly SSL) encryption. While the payload is encrypted, we can still analyze the handshake, identify the server certificate (visible in TLS 1.2 and earlier; TLS 1.3 encrypts it), and detect weak protocol versions or cipher suites.

- DNS (Domain Name System): Resolves domain names to IP addresses. Analyzing DNS packets helps trace website access, identify potential DNS poisoning or spoofing attacks, and map network infrastructure.

- SMTP (Simple Mail Transfer Protocol): Handles email transmission. Examining SMTP packets can unveil email-based attacks, analyze email routing, and identify email server configurations.

- FTP (File Transfer Protocol): Used to transfer files between systems. Analysis can reveal data exfiltration attempts or unauthorized file access.

The specific protocols analyzed depend heavily on the investigation’s context. For instance, if investigating a suspected data breach, focus would be on protocols like HTTPS, FTP, and potentially custom protocols used by the organization’s applications.
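Reading TCP flags is a daily task in capture analysis. The Python sketch below decodes the flags byte, which sits at offset 13 of the TCP header, using the standard bit assignments (FIN 0x01 through CWR 0x80). The sample header is hand-built for illustration.

```python
import struct

# Standard TCP flag bits, lowest bit first.
TCP_FLAGS = [(0x01, "FIN"), (0x02, "SYN"), (0x04, "RST"), (0x08, "PSH"),
             (0x10, "ACK"), (0x20, "URG"), (0x40, "ECE"), (0x80, "CWR")]


def decode_tcp_flags(flags_byte: int) -> list:
    """Names of the flags set in a TCP flags byte."""
    return [name for bit, name in TCP_FLAGS if flags_byte & bit]


def flags_from_tcp_header(header: bytes) -> list:
    """The flags byte is at offset 13 of the TCP header."""
    return decode_tcp_flags(header[13])


# Hand-built 20-byte TCP header for a SYN: ports, seq, ack, data offset (5 words), flags, window...
syn_header = struct.pack("!HHIIBBHHH", 51000, 80, 1, 0, 5 << 4, 0x02, 64240, 0, 0)

print(flags_from_tcp_header(syn_header))  # ['SYN']
print(decode_tcp_flags(0x12))             # ['SYN', 'ACK']  (second step of the handshake)
print(decode_tcp_flags(0x11))             # ['FIN', 'ACK']  (graceful close)
```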

Q 9. How can you identify malware communication in a packet capture?

Identifying malware communication in a packet capture requires a multi-faceted approach. Malware often communicates using various protocols and techniques to avoid detection. Key indicators include:

- Unusual destinations: Malware might communicate with Command and Control (C&C) servers located in unexpected locations (e.g., unusual IP addresses, countries, or domains).

- Obscured communication: Malware may use encryption or tunneling techniques to hide its communication. Analyzing encrypted traffic is difficult but examining the metadata (e.g., source and destination IPs, ports) can still reveal suspicious patterns.

- Unexpected ports: Malware often uses non-standard ports to evade detection by firewalls or intrusion detection systems (IDS).

- High volume of traffic to unknown hosts: A sudden increase in outgoing connections to unfamiliar destinations can indicate an infection.

- Specific protocol patterns: Some malware families exhibit unique patterns in their network traffic. Analyzing these patterns using signature-based detection or machine learning can be crucial.

Tools like Wireshark can facilitate this analysis by allowing filtering based on specific protocols, IP addresses, ports, or keywords. Correlation with other security logs and endpoint detection data is crucial for confirmation.

For instance, observing numerous connections from a workstation to a server in a country known for hosting malicious infrastructure while using unusual ports should raise suspicion and warrant further investigation. It’s important to consider the context – unusual traffic might have benign causes but needs careful examination in relation to network baselines.
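The baseline comparison described above can be sketched in Python. Everything here is an assumption for illustration: the flow tuples, the set of expected ports, the known-host list, and the volume threshold would all come from your own network’s history, and a hit is a lead for further investigation, not a verdict.

```python
from collections import Counter, defaultdict

# Hypothetical baseline values; real ones come from your own network's historical traffic.
EXPECTED_PORTS = {22, 25, 53, 80, 123, 443, 587, 993}


def suspicious_flows(flows, known_hosts, volume_threshold=50):
    """Score outbound connections against a baseline.

    flows: iterable of (src_ip, dst_ip, dst_port), one entry per connection.
    Returns {(src_ip, dst_ip): [reasons]} for pairs that break the baseline.
    """
    flows = list(flows)
    counts = Counter((src, dst) for src, dst, _ in flows)
    ports = defaultdict(set)
    for src, dst, port in flows:
        ports[(src, dst)].add(port)

    findings = {}
    for pair, count in counts.items():
        reasons = []
        if pair[1] not in known_hosts:
            reasons.append("destination not in baseline")
            if count >= volume_threshold:
                reasons.append(f"high volume ({count} connections)")
        unusual = sorted(ports[pair] - EXPECTED_PORTS)
        if unusual:
            reasons.append(f"non-standard ports {unusual}")
        if reasons:
            findings[pair] = reasons
    return findings


flows = [("10.0.0.8", "203.0.113.50", 4444)] * 60 + [("10.0.0.8", "198.51.100.10", 443)]
print(suspicious_flows(flows, known_hosts={"198.51.100.10"}))
```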

Q 10. How do you analyze a packet capture to identify performance bottlenecks?

Analyzing packet captures to identify performance bottlenecks involves scrutinizing various aspects of the network traffic. The goal is to pinpoint where delays or congestion are occurring.

- High latency: Examine the time it takes for packets to travel between points, for example the gap between a request and its response. High latency values indicate delays, and comparing captures taken at different points along the path can pinpoint the network segments or devices causing them.

- Packet loss: Missing packets signify that data was lost during transmission. Identify where the loss occurs and its causes (e.g., network congestion, faulty hardware). Wireshark’s Expert Information view and TCP stream graphs help highlight lost segments.

- Retransmissions: Frequent retransmissions show that TCP is struggling to reliably transmit data due to packet loss or congestion. Analyzing these retransmissions provides clues on bandwidth limitations or network errors.

- Network congestion: High utilization of network links, visualized through metrics like bandwidth utilization, indicates a potential bottleneck. Identify congested network segments to address the underlying cause (e.g., insufficient bandwidth, faulty switches/routers).

- Protocol analysis: Examining protocol-level behavior (e.g., TCP window sizes, zero-window events, slow start) can reveal misconfigured network stacks or a receiving application that cannot keep up with incoming data.

Imagine a scenario with slow application response times. Analyzing the packet capture would reveal if the bottleneck is within the application itself (high latency within application-level interactions), the network (high latency between servers, high packet loss), or the client machine (slow processing of received packets).
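Two of the measurements above, handshake round-trip time and retransmissions, can be sketched in Python for a single TCP connection. The Seg class is a hypothetical stand-in for decoded packets, and the retransmission check is deliberately crude; Wireshark’s own TCP analysis (for example the tcp.analysis.retransmission display filter) is far more thorough. Note that the RTT seen depends on where you capture: near the server, SYN to SYN-ACK reflects mostly server-side delay.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Seg:
    """Hypothetical decoded TCP segment with its capture timestamp (seconds)."""
    ts: float
    src: str
    dst: str
    seq: int
    payload_len: int
    flags: FrozenSet[str]


def handshake_rtt(segs: List[Seg]) -> float:
    """Seconds from the first SYN to the matching SYN-ACK, as seen at the capture point."""
    syn = next(s for s in segs if s.flags == {"SYN"})
    syn_ack = next(s for s in segs
                   if s.flags == {"SYN", "ACK"} and s.src == syn.dst and s.dst == syn.src)
    return syn_ack.ts - syn.ts


def retransmissions(segs: List[Seg]) -> List[Seg]:
    """Data segments repeating a (sender, receiver, seq) already seen: a crude check."""
    seen, repeats = set(), []
    for s in segs:
        if s.payload_len == 0:
            continue  # pure ACKs legitimately reuse sequence numbers
        key = (s.src, s.dst, s.seq)
        if key in seen:
            repeats.append(s)
        seen.add(key)
    return repeats


conn = [
    Seg(0.500, "10.0.0.5", "192.168.1.100", 1000, 0, frozenset({"SYN"})),
    Seg(0.625, "192.168.1.100", "10.0.0.5", 5000, 0, frozenset({"SYN", "ACK"})),
    Seg(0.626, "10.0.0.5", "192.168.1.100", 1001, 0, frozenset({"ACK"})),
    Seg(0.700, "10.0.0.5", "192.168.1.100", 1001, 100, frozenset({"PSH", "ACK"})),
    Seg(1.700, "10.0.0.5", "192.168.1.100", 1001, 100, frozenset({"PSH", "ACK"})),  # resent
]
print(handshake_rtt(conn), len(retransmissions(conn)))
```

A one-second gap before the resend, as in this sample, is the kind of stall users perceive as slowness.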

Q 11. Explain the concept of port scanning and how you would detect it using packet capture.

Port scanning involves probing a target system to identify open ports, which can reveal vulnerabilities. Attackers use this information to exploit weaknesses or gain unauthorized access.

Detecting port scanning in a packet capture hinges on identifying patterns of TCP and UDP packets directed at numerous ports of a single target. Key indicators include:

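- SYN scans (TCP): The attacker sends SYN packets (connection initiation) but never completes the handshake: open ports reply with SYN-ACK, which the scanner typically answers with RST, and closed ports reply with RST. Many SYN packets from one source targeting various ports indicate a potential SYN scan.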

- Connect scans (TCP): The attacker fully establishes TCP connections (SYN, SYN-ACK, ACK) to each port before closing them. These are noisier than SYN scans: a large number of short-lived, completed connections is easy to spot in a capture, and the target’s services may log them as well.

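- UDP scans: The attacker sends UDP packets to various ports. Closed ports typically answer with ICMP port unreachable messages, while open or filtered ports often send nothing back. Many probes to different ports in a short time frame, especially alongside a burst of ICMP port unreachable replies, raise concerns.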

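- Stealth scans (TCP): Attackers might try to evade detection with FIN, NULL (no flags set), or XMAS (FIN, PSH, and URG set) scans. These rely on closed ports replying with RST while open ports stay silent, and such flag combinations do not occur in normal traffic, which makes them stand out in a capture.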

Using Wireshark display filters, you can search for scans. For example, tcp.flags.syn == 1 && tcp.flags.ack == 0 shows every connection attempt (the stricter tcp.flags == 0x02 matches segments with only SYN set). Because legitimate connections also begin with a SYN, context is crucial: a single probe to a port might be normal, while many probes from one source targeting multiple ports are suspicious. Time-based analysis also matters; a sudden burst of probes to a specific IP address is more suspicious than a few sporadic ones.
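The "many distinct ports from one source in a short time" rule can be sketched in Python with a sliding time window. The probe tuples, the 10-second window, and the 20-port threshold are illustrative assumptions to tune against your own traffic; the input would typically be SYN-only segments or UDP probes exported from a capture.

```python
from collections import defaultdict


def detect_port_scans(probes, window=10.0, port_threshold=20):
    """Flag (source, target) pairs probing many distinct ports within a short window.

    probes: (timestamp, src_ip, dst_ip, dst_port) for SYN-only TCP segments or UDP probes.
    Returns {(src_ip, dst_ip): largest number of distinct ports seen in any window}.
    """
    by_pair = defaultdict(list)
    for ts, src, dst, port in probes:
        by_pair[(src, dst)].append((ts, port))

    alerts = {}
    for pair, events in by_pair.items():
        events.sort()
        start = 0
        for end in range(len(events)):
            # Slide the window start forward until it spans at most `window` seconds.
            while events[end][0] - events[start][0] > window:
                start += 1
            distinct = len({port for _, port in events[start:end + 1]})
            if distinct >= port_threshold:
                alerts[pair] = max(alerts.get(pair, 0), distinct)
    return alerts


scan = [(i * 0.01, "203.0.113.9", "10.0.0.5", port) for i, port in enumerate(range(1, 101))]
normal = [(float(t), "10.0.0.7", "10.0.0.5", 443) for t in range(3)]
print(detect_port_scans(scan + normal))
```

Repeated connections to one port (the normal client above) never trigger the rule, because it counts distinct ports rather than packets.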

Q 12. Describe different types of network attacks that can be detected using packet capture.

Packet capture analysis is a critical tool for detecting various network attacks. Here are some examples:

- Man-in-the-middle (MITM) attacks: These attacks involve intercepting communication between two parties. Packet captures can reveal unexpected traffic routing, a MAC address that suddenly changes for a known IP address, or a TLS certificate that differs from the one the server normally presents. Look for inconsistencies in communication flows, indicating an intermediary might be present.

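- SQL injection: This attack injects malicious SQL code into database queries. Over unencrypted HTTP, the injected SQL is often directly visible in request parameters or bodies, and unusual HTTP responses (such as database error messages) may also reveal the attack. Over HTTPS, the payload stays hidden unless the traffic is decrypted.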

- Cross-site scripting (XSS): This attack injects malicious scripts into websites. Similar to SQL injection, analyzing web traffic may show unusual data being sent or received, possibly containing malicious script code.

- Session hijacking: The attacker steals a valid session ID and impersonates the legitimate user. A capture of the network session can reveal the compromised session ID being used by an unauthorized party, for example the same session cookie arriving from a different IP address.

- ARP poisoning: This attack sends forged ARP replies that corrupt the ARP caches of hosts on the local network, causing traffic to be redirected to the attacker. Analyzing ARP packets can reveal unsolicited replies or a single IP address claimed by more than one MAC address.

- DNS poisoning: Attackers redirect DNS queries to malicious servers. Capturing DNS traffic helps reveal unauthorized DNS responses.

The effectiveness of detection depends on the sophistication of the attack and the analyst’s skills. Many attacks use advanced techniques like encryption, making it challenging to identify them through simple packet inspection. Correlating network data with other security logs is extremely valuable.
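The two ARP poisoning indicators (one IP claimed by several MAC addresses, and replies nobody asked for) can be sketched in Python. The input tuples are a hypothetical simplification of fields you would extract from ARP packets; gratuitous ARP is legitimately unsolicited too (e.g. during failover), so hits are leads rather than proof.

```python
def arp_conflicts(arp_replies):
    """IPs claimed by more than one MAC address.

    arp_replies: (sender_ip, sender_mac) pairs taken from ARP reply packets.
    """
    claims = {}
    for ip, mac in arp_replies:
        claims.setdefault(ip, set()).add(mac.lower())
    return {ip: sorted(macs) for ip, macs in claims.items() if len(macs) > 1}


def unsolicited_replies(arp_packets):
    """ARP replies that answer no outstanding request.

    arp_packets: time-ordered (opcode, sender_ip, target_ip); opcode 1 = request, 2 = reply.
    """
    outstanding = set()  # (requester_ip, requested_ip)
    flagged = []
    for opcode, sender_ip, target_ip in arp_packets:
        if opcode == 1:
            outstanding.add((sender_ip, target_ip))
        elif opcode == 2:
            request = (target_ip, sender_ip)  # the request this reply would answer
            if request in outstanding:
                outstanding.discard(request)
            else:
                flagged.append((sender_ip, target_ip))
    return flagged


replies = [("192.168.1.1", "AA:AA:AA:AA:AA:01"),
           ("192.168.1.1", "bb:bb:bb:bb:bb:02"),
           ("192.168.1.20", "cc:cc:cc:cc:cc:03")]
print(arp_conflicts(replies))
```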

Q 13. How can you use packet capture to investigate Denial-of-Service (DoS) attacks?

Investigating Denial-of-Service (DoS) attacks using packet captures focuses on identifying a flood of traffic targeting a specific victim. Key indicators include:

- High volume of traffic: A sudden surge in network traffic directed at a particular IP address or port signals a potential DoS attack. Analyze the bandwidth consumption and traffic patterns to identify the source(s) and type of attack.

- SYN floods: A massive number of SYN packets whose handshakes are never completed (the client’s final ACK never arrives) leaves the victim holding many half-open connections and exhausts its resources, indicating a SYN flood attack.

- UDP floods: A large number of UDP packets targeting various ports overwhelms the victim’s ability to process them.

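- ICMP floods: A flood of ICMP echo (ping) requests consumes the victim’s bandwidth and processing capacity. This differs from the ping of death, a malformed ping whose fragments reassemble to more than 65,535 bytes and which crashed vulnerable older systems.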

- Source IP address analysis: The source IP addresses of the attack traffic can point to numerous compromised systems (botnet) or spoofed addresses (difficult to trace). Analyzing the volume of traffic from various sources provides important insights.

Let’s say a web server experiences an outage. Its packet captures might show an abnormal surge in requests (e.g., HTTP GET requests) overwhelming its resources, potentially from numerous different IP addresses. The nature of the attack traffic helps determine the specific type of DoS attack and guides mitigation.
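The indicators above (peak rate, packet mix, and how many sources are involved) can be summarized with a short Python sketch. The packet tuples and the "kind" labels are hypothetical simplifications of decoded traffic; a real investigation would feed in fields exported from the capture.

```python
from collections import Counter


def traffic_profile(packets, victim, top_n=3):
    """Summarize traffic aimed at `victim`.

    packets: (timestamp, src_ip, dst_ip, kind) with kind like "SYN", "UDP", "ICMP", "HTTP".
    """
    to_victim = [p for p in packets if p[2] == victim]
    per_second = Counter(int(ts) for ts, _, _, _ in to_victim)  # 1-second buckets
    sources = Counter(src for _, src, _, _ in to_victim)
    return {
        "peak_pps": max(per_second.values(), default=0),
        "kinds": Counter(kind for *_, kind in to_victim),
        "distinct_sources": len(sources),  # many sources hints at a botnet or spoofing
        "top_sources": sources.most_common(top_n),
    }


normal = [(float(t), "192.0.2.1", "10.0.0.80", "HTTP") for t in range(1, 6)]
flood = [(10 + i / 1000, f"198.51.100.{i % 250}", "10.0.0.80", "SYN") for i in range(500)]
print(traffic_profile(normal + flood, "10.0.0.80"))
```

A peak of hundreds of SYNs per second spread across hundreds of sources, against a baseline of about one request per second, is the signature of a distributed SYN flood.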

Q 14. What are the ethical considerations when performing packet capture analysis?

Ethical considerations are paramount when performing packet capture analysis. Capturing network traffic involves accessing private communications and potentially sensitive data. Key ethical aspects include:

- Consent: Always obtain explicit consent from all parties involved before capturing their network traffic. This is crucial for legal compliance and ethical conduct.

- Privacy: Protect the privacy of individuals whose communications are captured. Data should be anonymized whenever possible and handled according to relevant data privacy regulations (e.g., GDPR, CCPA).

- Confidentiality: Ensure confidentiality of the captured data. Use appropriate security measures (e.g., encryption, access controls) to protect against unauthorized access and disclosure.

- Purpose limitation: Clearly define the purpose of the packet capture and only use the data for that specific reason. Avoid using data for unintended purposes or purposes outside of the initial consent.

- Data retention: Establish a clear data retention policy. Only keep captured data for as long as necessary and securely dispose of it once no longer needed.

- Legal compliance: Ensure all activities comply with all relevant local, national, and international laws and regulations concerning data protection, privacy, and network security.

In short, treating network data as confidential, protecting privacy, and respecting individual rights are central to responsible and ethical packet capture analysis. Ignoring these aspects can result in legal and ethical repercussions.

Q 15. How do you ensure the integrity of a packet capture?

Ensuring the integrity of a packet capture is paramount for reliable analysis. A compromised capture can lead to inaccurate conclusions and wasted time. This involves several key steps:

- Verify Capture Tool Integrity: Use a reputable and well-maintained packet capture tool. Regularly update the software to patch security vulnerabilities that could compromise data during acquisition.

- Check for Timestamp Consistency: Examine the timestamps of the captured packets. Inconsistent or erratic timestamps suggest a problem with the capture process, potentially indicating data corruption or manipulation. Many tools provide visual representations of timestamps, allowing for easy spotting of anomalies.

- Hashing and Digital Signatures: For high-stakes scenarios, consider generating a cryptographic hash (e.g., SHA-256) of the capture file. This provides a verifiable fingerprint; any changes to the file will result in a different hash. If you need additional security, you could use digital signatures to authenticate the file’s origin and confirm it hasn’t been tampered with.

- Chain of Custody: In legal or forensic contexts, maintaining a strict chain of custody is critical. This documented record tracks every person who has handled the capture file, ensuring its integrity remains unquestionable. Detailed logs of access and modification are crucial elements of this process.

- Regular Backups: Always maintain multiple backups of your packet captures in different physical locations to protect against accidental data loss or hardware failure. A robust backup strategy is a vital part of preserving data integrity.

By diligently following these procedures, you significantly reduce the risk of encountering integrity issues, ensuring your analysis is based on accurate and trustworthy data.
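The hashing and timestamp checks can be sketched with Python’s standard hashlib. The file reads in chunks so large captures never sit in memory at once; the demo file is a stand-in for a real capture. A timestamp that goes backwards is a prompt to investigate, not proof of tampering, since multi-interface captures and clock adjustments can also cause it.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def timestamps_go_backwards(timestamps):
    """Indexes where a packet's timestamp is earlier than its predecessor's."""
    return [i for i in range(1, len(timestamps)) if timestamps[i] < timestamps[i - 1]]


# Usage: record the hash right after capture, then re-check before analysis.
with tempfile.NamedTemporaryFile(delete=False, suffix=".pcap") as tmp:
    tmp.write(b"stand-in bytes for a capture file")
recorded = sha256_of_file(tmp.name)
print(sha256_of_file(tmp.name) == recorded)  # True: file unchanged
os.remove(tmp.name)
```

Store the recorded hash separately from the capture (for example in the chain-of-custody log) so that an attacker who alters the file cannot simply update the hash next to it.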


Q 16. Describe a scenario where you had to analyze a packet capture to solve a problem. What was the problem, your approach, and the outcome?

I once investigated intermittent network outages affecting a critical VoIP system. Users experienced dropped calls and significant latency. The problem was initially suspected to be hardware related.

My approach involved capturing packets on the affected network segments using Wireshark. I focused on the VoIP traffic, using display filters (tcp.port == 5060 || udp.port == 5060 for SIP) to isolate relevant conversations. I also captured traffic on both the network switches and the VoIP server to get a holistic view.

Analysis of the captures revealed periods of high packet loss correlated with specific timeframes when the outages occurred. Further investigation showed that the problem wasn’t with the VoIP hardware itself, but with network congestion caused by a rogue application consuming excessive bandwidth. Identifying the culprit application (a peer-to-peer file-sharing program) through examination of the captured data led to its removal and resolution of the VoIP outages.

The outcome was a swift resolution, avoiding costly hardware replacements and minimizing disruption to the business.

Q 17. What are some limitations of packet capture tools?

Packet capture tools, while powerful, have certain limitations:

- Performance Overhead: Capturing all traffic on a busy network can overload the capture host’s CPU, memory, and disk, and running a capture on a production server can slow the very service you are troubleshooting, potentially introducing more problems than it solves.

- Storage Capacity: Large-scale captures, especially at high speeds, can quickly consume vast amounts of disk space. Managing and storing these files can be challenging.

- Filter Inefficiency: Although filters are crucial for narrowing down the data, complex filters can sometimes be inefficient and slow down analysis.

- Encryption: Packet capture tools alone cannot decipher encrypted traffic (HTTPS, VPNs, etc.). Specialized tools or knowledge of encryption keys are needed.

- Sampling Issues: Selective capture, while crucial for managing large volumes of data, may miss critical events, biasing the analysis if not carefully configured.

- Network Interface Limitations: If the NIC, kernel capture buffers, CPU, or disk cannot keep up with the traffic rate, packets are dropped before they are recorded; tcpdump, for example, reports how many packets were dropped by the kernel when it exits.

Understanding these limitations is crucial for effective planning and deployment of packet capture techniques.
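The storage limitation is simple arithmetic: bits on the wire divided by eight. This Python sketch estimates the size of a full capture; it ignores per-packet record headers (16 bytes each in classic pcap), so real files come out slightly larger.

```python
def full_capture_bytes(link_bits_per_second: float, utilization: float, seconds: float) -> float:
    """Rough disk usage of a full capture: link rate x utilization x duration / 8."""
    return link_bits_per_second * utilization * seconds / 8


# A fully loaded 1 Gbit/s link for one hour:
print(f"{full_capture_bytes(1e9, 1.0, 3600) / 1e9:.0f} GB")  # 450 GB
# The same link at 30% average utilization for a full day:
print(f"{full_capture_bytes(1e9, 0.3, 86400) / 1e12:.2f} TB")
```

Numbers like these are why long-running captures usually rely on filters, rotating files, or both.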

Q 18. Explain the difference between full and selective packet capture.

The difference lies in the amount of traffic captured:

- Full Packet Capture: This method captures every single packet traversing the network interface. It’s ideal for thorough analysis but generates massive files quickly, rapidly exhausting storage capacity and putting a heavy load on the capture host. Imagine recording an entire concert: you have all the details, but it takes a lot of storage.

- Selective Packet Capture: This technique only captures packets that match specific criteria, defined using filters based on protocols, ports, IP addresses, etc. This drastically reduces file size and performance impact. It’s analogous to recording only specific instruments from that concert based on your interest, making analysis more manageable and efficient. You can easily miss important parts if your criteria are incorrect.

Choosing between full and selective capture depends on the specific investigation. A full capture is often necessary for initial exploratory analysis or when the exact nature of the problem is unknown. Selective capture is preferred for focused investigations where the relevant traffic patterns are already identified.

Q 19. How do you deal with large packet capture files?

Dealing with large packet capture files requires a multi-pronged approach:

- Efficient Capture Strategies: Prioritize selective packet capture with well-defined capture filters, recording only the traffic essential to the investigation to minimize the size of the capture file.

- Compression: Compress capture files using appropriate tools to reduce their size. Many packet capture tools and utilities offer compression options.

- Chunking and Filtering: Split large captures into smaller, manageable files, either while capturing (for example, tcpdump’s -C option starts a new file once the current one reaches a given size) or afterwards with post-processing tools such as editcap (whose -c option splits a file by packet count). Process these chunks separately or use software capable of efficiently processing large files.

- Specialized Software: Consider using tools designed for handling large-scale packet captures. These often leverage advanced techniques for efficient data processing.

- Cloud Storage: For extremely large files, cloud storage solutions can provide a scalable and cost-effective way to store and access the data.

- Specialized Analysis Tools: Some analysis tools process a capture as a stream, one packet at a time, instead of loading the whole file into memory. This makes analysis of large files much more efficient and lets you work on a subset of the data.

The key is to minimize the size of the capture file from the start through careful planning and using appropriate tools throughout the analysis process.
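Streaming is straightforward for the classic pcap format: read the 24-byte global header, then one 16-byte record header plus its packet data at a time. This Python generator is a minimal sketch that only handles little-endian, microsecond-resolution pcap (not pcapng), so memory use stays constant no matter how large the file is.

```python
import io
import struct
from typing import BinaryIO, Iterator, Tuple


def iter_pcap_records(fh: BinaryIO) -> Iterator[Tuple[float, int, bytes]]:
    """Yield (timestamp, original_length, captured_bytes) one record at a time."""
    header = fh.read(24)
    if len(header) < 24 or struct.unpack("<I", header[:4])[0] != 0xA1B2C3D4:
        raise ValueError("unsupported or truncated pcap file")
    while True:
        rec = fh.read(16)
        if len(rec) < 16:
            return  # end of file (a partial trailing record header is ignored)
        ts_sec, ts_usec, incl_len, orig_len = struct.unpack("<IIII", rec)
        data = fh.read(incl_len)
        if len(data) < incl_len:
            raise ValueError("truncated packet record")
        yield ts_sec + ts_usec / 1_000_000, orig_len, data


def summarize(path):
    """Count packets and original bytes without loading the whole file."""
    packets = total = 0
    with open(path, "rb") as fh:
        for _ts, orig_len, _data in iter_pcap_records(fh):
            packets += 1
            total += orig_len
    return packets, total


demo = (struct.pack("<IHHiIII", 0xA1B2C3D4, 2, 4, 0, 0, 65535, 1)
        + struct.pack("<IIII", 10, 250000, 4, 60) + b"abcd")
print(list(iter_pcap_records(io.BytesIO(demo))))  # [(10.25, 60, b'abcd')]
```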

Q 20. What are some common challenges encountered when analyzing packet captures?

Common challenges when analyzing packet captures include:

- Data Volume: The sheer volume of data can be overwhelming, so efficient filtering and analysis techniques are crucial. Display filters help here; for example, ip.addr == 192.168.1.100 shows only packets related to a specific IP address.

- Network Complexity: Analyzing complex networks with multiple devices and protocols can be difficult. Visualizations and advanced analysis tools can help make sense of the data.

- Encrypted Traffic: The inability to decode encrypted traffic is a significant limitation, often requiring additional tools or access to decryption keys.

- Protocol Obscurity: Understanding obscure or less-common protocols requires specialized knowledge and research.

- Missing Context: Packet captures provide network data but often lack system-level context such as application logs. Correlating network traffic with system logs provides a more complete picture.

- Error Handling: Incomplete or corrupt captures can lead to inaccurate results. Always validate the integrity of your capture.

Overcoming these challenges requires a combination of technical skills, experience, and the use of appropriate tools and techniques.

Q 21. How do you analyze encrypted traffic using packet capture?

Analyzing encrypted traffic using packet captures is challenging. Packet capture tools can show that encrypted traffic is present but cannot inherently decrypt it. The content remains hidden.

To analyze encrypted traffic, you need:

- Access to Keys/Credentials: If you possess the encryption keys or session keys used to encrypt the traffic, you can decrypt it with appropriate tools. This may require cooperation with the involved parties, such as the network administrator or the owners of the endpoints.

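- Protocol-Specific Decryption: Tools such as Wireshark can decrypt SSL/TLS if you supply the right key material. The server’s private key only works for sessions that use RSA key exchange (TLS 1.2 and earlier, without forward-secret cipher suites); for modern TLS, including all of TLS 1.3, you need the per-session secrets, typically exported by the client or server into a key log file. This process can require considerable technical expertise, depending on the tool and protocol involved.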

- Man-in-the-Middle Attacks (Ethical and Legal Considerations): In controlled environments (and only with proper authorization), a man-in-the-middle attack can be used to decrypt traffic, but this must be performed with utmost care and only after thoroughly considering the ethical and legal implications.

- Focus on Metadata: Even without decryption, you can analyze metadata within the encrypted packets to glean insights. Examining the volume, timing, and destination of encrypted communications can provide valuable contextual information.
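One common way to obtain per-session TLS secrets for clients you control is a key log file. Python’s ssl module can write one through SSLContext.keylog_filename (Python 3.8+ built against OpenSSL 1.1.1 or newer); Wireshark’s TLS protocol preferences accept such a file for decryption. The path below is a placeholder, and this only applies to traffic you are authorized to inspect.

```python
import os
import ssl
import tempfile


def context_with_key_log(keylog_path: str) -> ssl.SSLContext:
    """Client TLS context that appends each session's secrets to `keylog_path`
    in the NSS key log format that Wireshark understands."""
    ctx = ssl.create_default_context()
    ctx.keylog_filename = keylog_path
    return ctx


keylog = os.path.join(tempfile.mkdtemp(), "tls-keys.log")
ctx = context_with_key_log(keylog)
# Any connection made with ctx, e.g. ctx.wrap_socket(sock, server_hostname="..."),
# now logs its secrets; capture the traffic at the same time, then load both into Wireshark.
print(ctx.keylog_filename)
```

Treat the key log like a password file: anyone holding it can decrypt the matching captures.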

It’s crucial to note that attempting to decrypt traffic without proper authorization is illegal and unethical. Always operate within legal and ethical boundaries.

Q 22. Explain the role of timestamps in packet capture analysis.

Timestamps are absolutely crucial in packet capture analysis; they provide the temporal context for each network event. Think of it like a video recording of network traffic: without timestamps, you’d just see a jumbled mess of events, unable to determine the sequence or timing of what happened. With accurate timestamps, we can reconstruct the timeline of events, understand the flow of communication, and identify latency issues or anomalies.

For example, if we see a login attempt followed by a file download a few seconds later, the timestamps establish the order of the two events and the gap between them, which supports (but does not by itself prove) a link between them. Likewise, a series of failed login attempts clustered around a specific time could indicate a brute-force attack. Timestamp precision also matters: microsecond-level accuracy or better is often needed to analyze high-frequency trading or to detect very short-lived network events.

Many packet capture tools let you change how timestamps are displayed (for example, seconds since the epoch, human-readable date and time, seconds since the first packet, or the delta from the previous packet), which makes it easier to correlate events across different parts of a capture.
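The display styles mentioned above can be reproduced in a few lines of Python. This sketch assumes you already have per-packet timestamps as integer nanoseconds since the Unix epoch (capture files store microsecond or nanosecond resolution depending on the format, and integers avoid floating-point rounding); `timestamp_views` is a hypothetical helper.

```python
from datetime import datetime, timezone

NS_PER_S = 1_000_000_000


def timestamp_views(ts_ns):
    """Return common timestamp views for packets given as integer
    nanoseconds since the Unix epoch."""
    rows = []
    for i, t in enumerate(ts_ns):
        secs, frac = divmod(t, NS_PER_S)
        wall = datetime.fromtimestamp(secs, tz=timezone.utc).strftime("%Y-%m-%d %H:%M:%S")
        rows.append(
            {
                "utc": f"{wall}.{frac:09d}",  # human-readable date and time
                "since_first_ns": t - ts_ns[0],  # relative to the first packet
                "since_prev_ns": t - ts_ns[i - 1] if i else 0,  # inter-packet gap
            }
        )
    return rows


for row in timestamp_views([1_700_000_000_000_000_000, 1_700_000_000_000_250_000]):
    print(row)
```

The "since previous" column is usually the quickest way to spot a stall, while "since first" lines events up against an incident timeline.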

Q 23. What are some tools you use besides Wireshark or tcpdump for network analysis?

While Wireshark and tcpdump are industry standards, I use several other tools depending on the task. For instance, tcpflow is excellent for reconstructing TCP streams, which makes it much easier to analyze the data actually being transferred rather than individual packets. This is particularly helpful with HTTP traffic, for examining the content of web pages or API responses; HTTPS payloads remain encrypted in the reconstructed stream unless they have been decrypted first.

For pattern matching within packet payloads, I've used ngrep. Imagine needing to find every packet containing a specific credit card number: ngrep quickly filters out the noise and highlights the relevant packets, provided the payload is unencrypted. For visualizing network traffic, I often combine packet capture data with network monitoring tools such as SolarWinds or PRTG to get a holistic view of network performance and identify potential bottlenecks.

Finally, tshark, the command-line version of Wireshark, is invaluable for scripting and automated analysis of large packet captures. I frequently use it in custom scripts that generate reports or detect anomalies based on predefined rules.
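Why stream reconstruction matters for pattern matching can be shown with a short Python sketch: a string split across TCP segments is missed by a search that looks at one packet at a time, but is found once the stream is rebuilt in sequence order. This illustrates the idea behind tools like tcpflow, not their implementation; `reassemble` is a hypothetical helper, and it assumes one direction of a single connection, payloads already extracted from the capture, and no sequence-number wraparound.

```python
import re


def reassemble(segments, first_seq):
    """Rebuild one direction of a TCP byte stream from (seq, payload) pairs.

    first_seq is the sequence number of the first payload byte (ISN + 1).
    Sequence-number wraparound is ignored to keep the sketch short.
    """
    stream = bytearray()
    expected = first_seq  # next sequence number still needed
    for seq, payload in sorted(segments):
        if seq > expected:
            break  # gap: a segment was never captured, stop at the hole
        overlap = expected - seq  # bytes already present (retransmission)
        if overlap < len(payload):
            stream += payload[overlap:]
            expected = seq + len(payload)
    return bytes(stream)


# Out-of-order, duplicated, and overlapping segments, as a capture may show them.
segments = [(1005, b"1234"), (1000, b"card"), (1004, b"=12"), (1000, b"card")]
stream = reassemble(segments, first_seq=1000)
pattern = re.compile(rb"card=\d{4}")
print(any(pattern.search(p) for _, p in segments))  # False: no single packet matches
print(bool(pattern.search(stream)))  # True: the rebuilt stream matches
```

Stopping at the first gap is a deliberate simplification; a fuller tool would keep buffering later segments until the missing bytes arrive or the connection closes.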

Q 24. How would you analyze a packet capture to identify unauthorized access attempts?

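Identifying unauthorized access attempts involves a multi-step process. First, I would filter the capture for failed login attempts on protocols like SSH, RDP, or HTTP (for web-based authentication). With HTTP, failures are often visible directly, for example as 401 responses (in Wireshark, `http.response.code == 401`); with encrypted protocols like SSH and RDP, the credential exchange is hidden, so failures show up indirectly as many short-lived connections from the same source. I'd look for repeated attempts from unusual IP addresses or geographic locations; attempts outside typical business hours are also a red flag.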

Next, I’d examine the source and destination IP addresses of the failed attempts. Are they coming from known malicious sources? Are they targeting sensitive servers or databases? I would cross-reference the IP addresses against threat intelligence feeds to determine if they have been previously implicated in malicious activities.

Further investigation would involve examining packet payloads wherever they are not encrypted. Are credentials being sent in plain text (a serious security risk)? Are there signs of automation, such as brute-force tools or SQL injection attempts? Finally, I would correlate this information with other logs, such as authentication logs or system event logs, to build a more complete picture of the potential breach.

For example, a sudden surge of failed SSH login attempts from a range of IP addresses outside the company's network during off-peak hours would strongly suggest an organized attempt to gain unauthorized access. Because SSH payloads are encrypted, the connection rate and timing in the capture, together with the server's authentication logs, would help confirm whether this was a brute-force attack or something more sophisticated.
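The "clustered failures" check described above can be sketched in Python as a sliding window per source address. This assumes the capture has already been reduced to a list of failed-attempt events (for example, HTTP 401 responses or short-lived SSH connections); the 60-second window and threshold of 5 are arbitrary illustration values, `flag_bruteforce` is a hypothetical name, and the addresses come from documentation-reserved ranges.

```python
from collections import defaultdict, deque


def flag_bruteforce(events, window_s=60.0, threshold=5):
    """Return source IPs with at least `threshold` failed logins inside any
    `window_s`-second span. `events` holds (timestamp_seconds, source_ip)."""
    recent = defaultdict(deque)  # per-source timestamps still inside the window
    flagged = set()
    for ts, src in sorted(events):
        times = recent[src]
        times.append(ts)
        while ts - times[0] > window_s:
            times.popleft()  # drop failures older than the window
        if len(times) >= threshold:
            flagged.add(src)
    return flagged


failures = [(t, "203.0.113.7") for t in (0, 5, 10, 15, 20)]  # tight burst
failures += [(t, "198.51.100.2") for t in (0, 100, 200, 300, 400)]  # spread out
print(flag_bruteforce(failures))
```

Both sources fail five times, but only the burst is flagged, which is the timing-based distinction the investigation relies on when payloads are encrypted.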

Q 25. Explain your experience with analyzing different network topologies using packet captures.

Analyzing packet captures across different network topologies requires adapting your approach to the network's structure and the protocols in use. In a simple, flat network, analysis is relatively straightforward. In networks segmented with VLANs or connected through VPNs, however, you must account for how traffic is segmented and where it flows.

For instance, when analyzing a network that uses VLANs, I make sure I'm capturing on the relevant VLANs (for example, through a SPAN port or on a trunk link) to isolate specific segments. Understanding the 802.1Q tagging mechanism is essential to interpret the traffic flow correctly. With VPNs, you need to be mindful of encapsulation and encryption: if the VPN traffic is encrypted, you'll need to capture on the tunnel endpoint after decryption or have access to the VPN keys to analyze the underlying data.
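To make the tagging concrete: an 802.1Q tag inserts four bytes after the source MAC address, a 0x8100 tag protocol identifier followed by a tag control field whose low 12 bits are the VLAN ID. The Python sketch below reads that tag from a raw Ethernet frame. It assumes a single tag (stacked 802.1ad/QinQ tags, which use 0x88A8 as the outer identifier, are not handled) and that the tag survived capture, since some NICs and drivers may strip it before the capture tool sees the frame; `vlan_info` is a hypothetical helper. In Wireshark, the comparable display filter is `vlan.id == 100`.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def vlan_info(frame: bytes):
    """Return (vlan_id, priority, inner_ethertype) for an 802.1Q-tagged
    Ethernet frame, or None if the frame carries no 802.1Q tag."""
    if len(frame) < 18:  # 6 dst MAC + 6 src MAC + 4 tag + 2 EtherType
        return None
    (tpid,) = struct.unpack_from("!H", frame, 12)
    if tpid != TPID_8021Q:
        return None
    tci, inner_type = struct.unpack_from("!HH", frame, 14)
    vlan_id = tci & 0x0FFF  # low 12 bits: VLAN identifier
    priority = tci >> 13  # top 3 bits: priority code point (PCP)
    return vlan_id, priority, inner_type


dst, src = b"\xff" * 6, bytes.fromhex("001122334455")
tci = (5 << 13) | 100  # PCP 5, VLAN 100
tagged = dst + src + struct.pack("!HHH", TPID_8021Q, tci, 0x0800) + b"payload"
print(vlan_info(tagged))
```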

In a more complex network, I often use Wireshark's display filters to focus on specific traffic flows and protocols, and I combine the capture data with network diagrams or configuration information to map traffic patterns onto the physical or logical topology. A real-world example is investigating performance issues in a multi-site corporate network built on an MPLS VPN, where I'd analyze the captures to identify bottlenecks or latency introduced by the VPN tunnels or the MPLS infrastructure.

Q 26. What are some key performance indicators (KPIs) you’d monitor in a network using packet capture data?

Using packet capture data, several key performance indicators (KPIs) can be monitored to assess network health and performance. These include:

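- Average latency: The average time it takes for a packet to travel from source to destination. From a single capture point, this is usually measured as round-trip time, for example the interval between a TCP SYN and its SYN-ACK.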

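- Packet loss rate: The percentage of packets lost in transit. In a capture, loss is usually inferred from TCP retransmissions and duplicate ACKs, or from gaps in RTP sequence numbers.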

- Throughput: The amount of data transmitted per unit of time.

- Jitter: The variation in delay between packets.

- Bandwidth utilization: The percentage of available bandwidth being used.

Monitoring these KPIs allows us to proactively identify and address network bottlenecks, performance issues, and traffic anomalies that may have security implications. For instance, a high packet loss rate could indicate a faulty network cable or switch, while consistently high latency might signal congestion or routing problems. By analyzing these metrics over time and correlating them with other network data, we can pinpoint the root cause of performance problems and implement appropriate fixes.
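Two of these KPIs can be computed directly from capture data, as in the Python sketch below. Throughput here is the total bits of all packets divided by the time between the first and last packet, and jitter uses the smoothed estimator defined in RFC 3550 for RTP streams, J += (|D| - J) / 16, where D is the change in transit time between consecutive packets. The function names and input shapes are assumptions for illustration; for jitter, the sketch assumes RTP timestamps have already been converted to the same unit as the arrival times.

```python
def throughput_bps(packets):
    """Average throughput in bits per second: total bits of all packets divided
    by the time between the first and last packet. `packets` holds
    (timestamp_seconds, length_bytes) pairs in capture order."""
    if len(packets) < 2:
        return 0.0
    duration = packets[-1][0] - packets[0][0]
    if duration <= 0:
        return 0.0
    total_bits = 8 * sum(length for _, length in packets)
    return total_bits / duration


def rfc3550_jitter(send_ts, arrival_ts):
    """Smoothed interarrival jitter in the style of RFC 3550.

    Both sequences must use the same unit, e.g. RTP timestamps and capture
    arrival times both converted to milliseconds."""
    jitter = 0.0
    for i in range(1, len(send_ts)):
        # D(i-1, i): how much the transit time changed between consecutive packets
        d = (arrival_ts[i] - arrival_ts[i - 1]) - (send_ts[i] - send_ts[i - 1])
        jitter += (abs(d) - jitter) / 16  # RFC 3550 uses a gain of 1/16
    return jitter


print(throughput_bps([(0.0, 1500), (0.5, 1500), (1.0, 1000)]))  # 32000.0
print(rfc3550_jitter([0, 20, 40], [100, 125, 140]))
```

The 1/16 gain smooths out one-off spikes, so a single late packet nudges the estimate rather than dominating it.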

Q 27. How do you stay updated with the latest trends and techniques in packet capture and analysis?

Staying updated in the dynamic field of packet capture and analysis requires a multi-pronged approach. I regularly attend industry conferences and webinars, which provide insights into new tools, techniques, and emerging threats. This allows me to learn about innovative methods and best practices from leading experts.

Furthermore, I actively follow industry blogs, publications (such as those from the SANS Institute), and online forums. Participating in online communities fosters collaboration and allows me to learn from others' experiences and challenges. New protocols and encryption methods constantly emerge, so continuous learning is essential.

Finally, hands-on experience is paramount. I frequently work on challenging projects involving different network topologies and protocols, allowing me to test and refine my skills in a real-world setting. This continuous practical application keeps my knowledge fresh and relevant.

Key Topics to Learn for Experience with Packet Capture and Analysis Software Interview

- Network Protocols: Understanding TCP/IP, UDP, HTTP, HTTPS, and other relevant protocols is fundamental. Be prepared to discuss their function, differences, and how they appear in packet captures.

- Capture Tools: Familiarize yourself with popular packet capture tools like Wireshark, tcpdump, or others relevant to your experience. Practice using them to capture and filter network traffic.

- Packet Analysis Techniques: Master the art of analyzing captured packets. This includes identifying communication patterns, troubleshooting network issues, and extracting relevant information from headers and payloads.

- Protocol Decoding: Develop your ability to decode packets at different layers of the OSI model, understanding how data is encapsulated and transmitted.

- Troubleshooting Network Problems: Practice applying your packet analysis skills to real-world scenarios, such as identifying network congestion, latency issues, or security breaches.

- Security Implications: Understand how packet capture and analysis can be used for both security monitoring and malicious activities. Discuss security best practices and potential vulnerabilities.

- Performance Optimization: Learn how packet analysis can help identify bottlenecks and optimize network performance.

- Filter and Display Options: Master the various filtering and display options within your chosen capture tools to efficiently isolate relevant information.

- Log Analysis: Become familiar with correlating packet captures with relevant system logs for a more comprehensive understanding of network events.

Next Steps

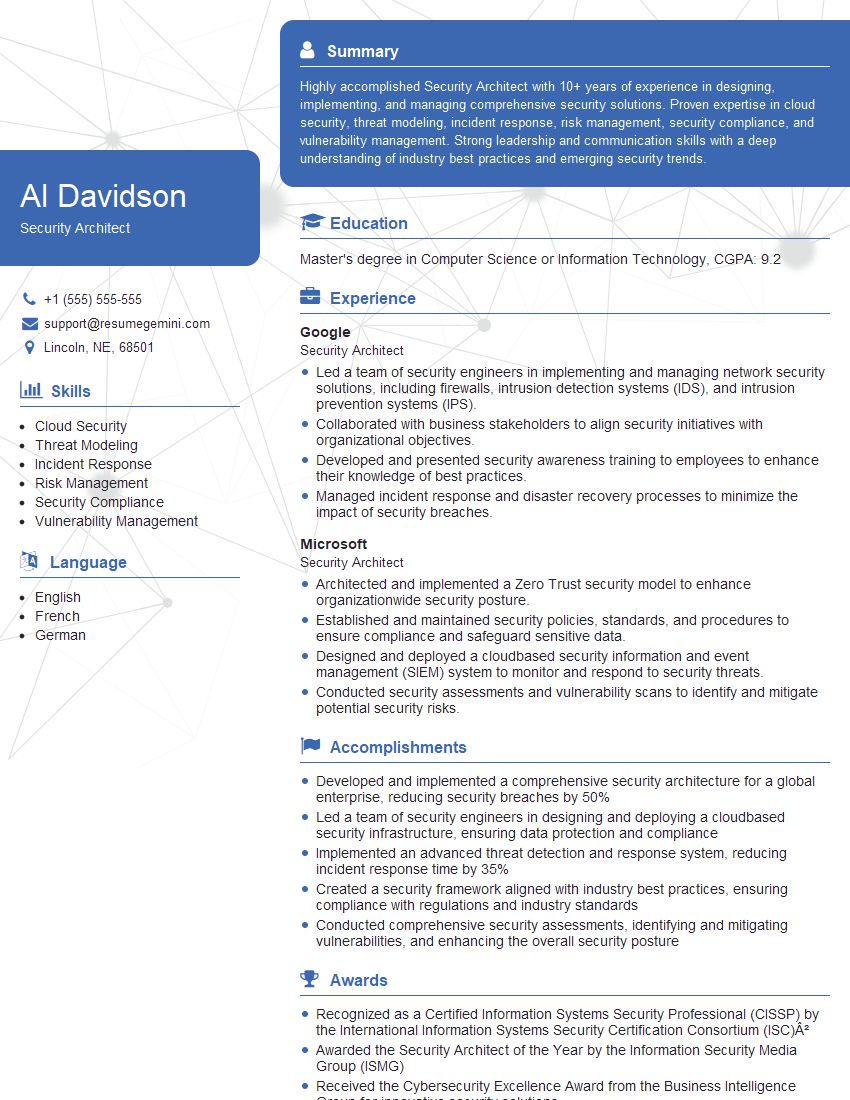

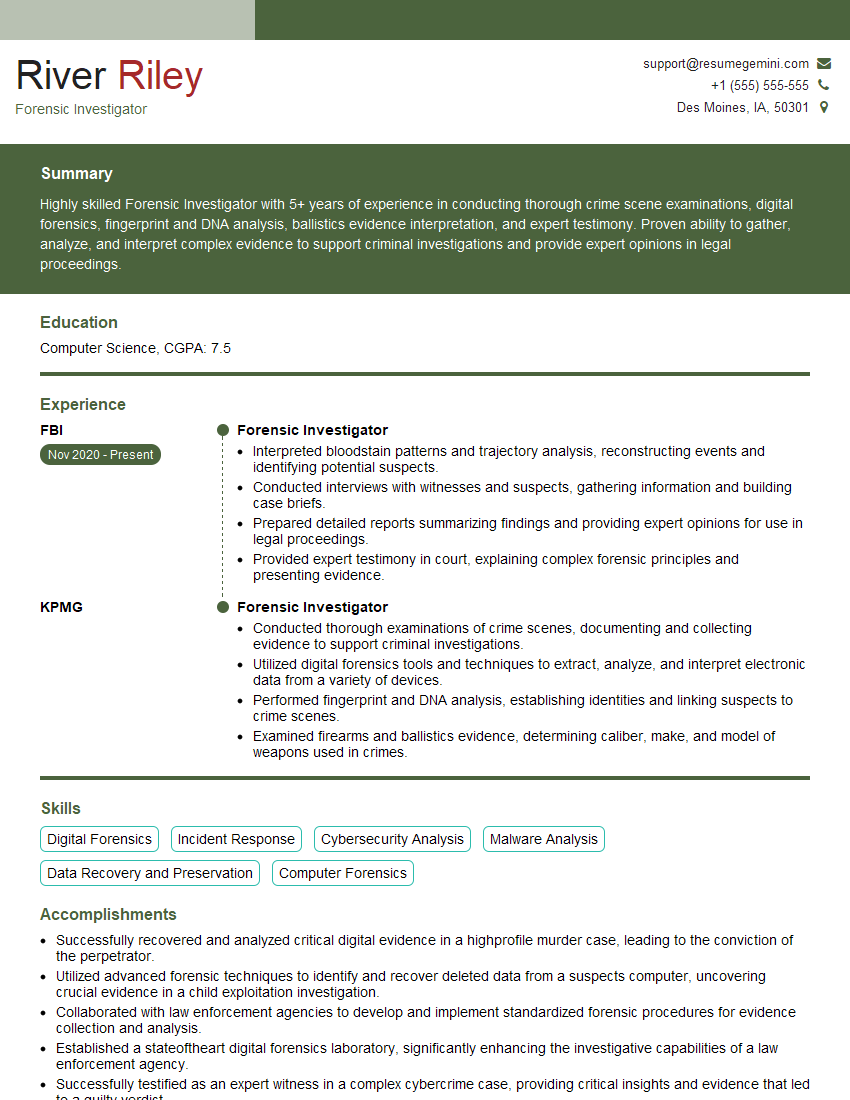

Mastering packet capture and analysis software is crucial for advancement in networking, security, and related fields. It demonstrates a high level of technical proficiency and problem-solving skills highly valued by employers. To significantly boost your job prospects, focus on creating a strong, ATS-friendly resume that clearly showcases your expertise. ResumeGemini is a trusted resource that can help you craft a professional and impactful resume tailored to highlight your skills in packet capture and analysis. Examples of resumes specifically designed for this field are available to guide you in showcasing your abilities effectively.
