Interviews are more than just a Q&A session: they’re a chance to prove your worth. This blog covers essential interview questions on network security threat detection and mitigation, along with expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Network Security Threat Detection and Mitigation Interviews

Q 1. Explain the difference between signature-based and anomaly-based intrusion detection systems.

Signature-based and anomaly-based Intrusion Detection Systems (IDS) represent two distinct approaches to threat detection. Think of it like this: signature-based IDS is like having a photo album of known criminals – it only flags activity matching known malicious patterns. Anomaly-based IDS, on the other hand, is like having a security guard who knows what ‘normal’ behavior looks like and alerts you to anything unusual.

- Signature-based IDS: These systems rely on predefined signatures or patterns, often called ‘rules’, that define known malicious activities. When network traffic matches a signature, the IDS raises an alert. This is effective against known threats but misses novel attacks (zero-day exploits).

- Anomaly-based IDS: These systems establish a baseline of normal network activity by learning traffic patterns over time. Any deviation from this baseline, exceeding pre-defined thresholds, is flagged as an anomaly and could indicate a malicious activity. It’s better at detecting new threats but can generate false positives if the baseline isn’t carefully configured or if network behavior genuinely changes (e.g., during peak hours).

For example, a signature-based IDS might detect a known SQL injection attempt based on specific keywords in the HTTP request, while an anomaly-based IDS might detect a sudden surge in database queries from an unusual IP address, even if the attack method is new.
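To make the contrast concrete, here is a minimal sketch of both approaches. The regex "signature" and the three-standard-deviation threshold are illustrative assumptions, not rules from any real IDS product.

```python
import re
import statistics

# Hypothetical signature: a crude pattern for two well-known SQL injection fragments.
SQLI_SIGNATURE = re.compile(r"\bunion\b.+\bselect\b|\bor\b\s+1\s*=\s*1", re.IGNORECASE)


def signature_alert(http_request: str) -> bool:
    """Signature-based: alert only when the request matches a known-bad pattern."""
    return SQLI_SIGNATURE.search(http_request) is not None


def anomaly_alert(baseline: list[int], observed: int, threshold: float = 3.0) -> bool:
    """Anomaly-based: alert when `observed` is far above the learned baseline.

    `baseline` holds query counts from normal periods (assumed sample data).
    """
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        return observed > mean
    return (observed - mean) / stdev > threshold


# Query counts per minute observed during normal operation (made-up numbers).
NORMAL_QUERY_COUNTS = [100, 110, 95, 105, 90]
```

The signature check catches `id=1 OR 1=1` but knows nothing about volume, while the anomaly check flags a jump to 400 queries per minute without knowing anything about the attack technique.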

Q 2. Describe the incident response lifecycle.

The incident response lifecycle is a structured process for handling security incidents. Following it is crucial for mitigating damage and improving future security posture. Imagine it like a well-orchestrated emergency response team.

- Preparation: This involves developing incident response plans, defining roles and responsibilities, establishing communication channels, and creating a comprehensive inventory of systems and data.

- Identification: This is the detection phase, where security tools and monitoring activities identify a potential security incident. This could be anything from a system log alert to a user report.

- Containment: This phase focuses on isolating the affected systems or networks to prevent further damage. This might involve shutting down a compromised server or blocking suspicious IP addresses.

- Eradication: This involves removing the threat and restoring the affected systems to a secure state. This could involve malware removal, patching vulnerabilities, or replacing compromised hardware.

- Recovery: This focuses on restoring systems and data to their normal operational state. It might involve data recovery from backups and testing system functionality.

- Post-Incident Activity: This phase involves analyzing the incident to understand what happened, identifying vulnerabilities exploited, and implementing preventative measures to avoid similar incidents in the future. This is critical for learning and improvement.

Q 3. What are the common types of network attacks and how can they be mitigated?

There’s a wide range of network attacks, but some common ones include:

- Denial-of-Service (DoS): Overwhelms a system with traffic, making it unavailable to legitimate users. Mitigation involves using firewalls, rate limiting, and DDoS mitigation services.

- Man-in-the-Middle (MitM): Intercepts communication between two parties. Mitigation involves using encryption (HTTPS), VPNs, and strong authentication.

- SQL Injection: Exploits vulnerabilities in database applications. Mitigation involves input validation, parameterized queries, and regularly updating software.

- Phishing: Attempts to trick users into revealing sensitive information. Mitigation involves security awareness training, email filtering, and multi-factor authentication.

- Cross-Site Scripting (XSS): Injects malicious scripts into websites. Mitigation involves input validation, output encoding, and using a web application firewall (WAF).

Mitigation strategies often involve a combination of technical controls (firewalls, IDS/IPS, WAFs) and non-technical controls (security awareness training, strong passwords, access control).
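As an illustration of the parameterized-query mitigation for SQL injection, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table, the user names, and the payload are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "admin"), ("bob", "analyst")],
)


def find_user_unsafe(name: str) -> list[tuple]:
    # Vulnerable: user input is concatenated into the SQL text itself.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str) -> list[tuple]:
    # Parameterized: the driver binds `name` as data, so it cannot alter the query.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


# Classic injection payload that turns the WHERE clause into a tautology.
PAYLOAD = "x' OR '1'='1"
```

With the payload above, the unsafe version returns every row in the table, while the parameterized version treats the whole string as a (non-existent) user name and returns nothing.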

Q 4. How do you identify and respond to a Denial of Service (DoS) attack?

Identifying and responding to a Denial of Service (DoS) attack requires a multi-pronged approach. First, you need to detect the attack using monitoring tools, observing unusual spikes in network traffic or slow response times. Think of it like noticing a sudden, massive crowd trying to flood a building.

- Detect the attack: Use network monitoring tools to identify unusual traffic patterns, such as a large volume of requests from a single IP address or multiple sources flooding a target server. Look for sudden drops in service availability.

- Identify the source(s): Trace the origin of the malicious traffic using tools like packet capture (tcpdump) and network flow analysis. This helps understand the attack vector.

- Mitigate the attack: This might involve temporarily blocking the source IPs at the firewall, implementing rate limiting to restrict the number of requests from a single source, or using a dedicated DDoS mitigation service which handles the traffic scrubbing and redirects legitimate traffic.

- Analyze and report: Once the attack is mitigated, analyze the logs and network traffic to understand the attack method and potential vulnerabilities. Document everything for future incident response.
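The rate-limiting step can be sketched as a per-source sliding window. This is a simplified, in-memory illustration; the class name and limits are assumptions, and a real deployment would usually enforce this at the firewall, load balancer, or a DDoS mitigation service rather than in application code.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per source IP within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # source IP -> timestamps of allowed requests

    def allow(self, source_ip: str, now: float) -> bool:
        hits = self._hits[source_ip]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: drop or challenge the request
        hits.append(now)
        return True
```

Passing `now` explicitly (for example from `time.monotonic()`) keeps the limiter easy to test. Note that per-IP limits help against a single noisy source but do little against a widely distributed attack, which is where upstream scrubbing comes in.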

Q 5. Explain your understanding of firewalls and their role in network security.

Firewalls are essential security devices that control network traffic based on predefined rules. They act like a gatekeeper, allowing only authorized traffic to pass while blocking everything else. Imagine them as a bouncer at a club, only letting in people with the proper credentials.

Firewalls can be implemented at various layers of the network: Network Layer firewalls (packet filtering) inspect packet headers, while Application Layer firewalls (proxy firewalls) inspect the content of the traffic. They play a crucial role in preventing unauthorized access, protecting against malicious traffic, and enforcing security policies.

Common firewall functionalities include packet filtering, stateful inspection, application control, and intrusion prevention. They are a cornerstone of any network security architecture, forming a first line of defense against many types of attacks.
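A stateless packet filter can be thought of as an ordered rule list with a default-deny fallback. The sketch below assumes first-match-wins semantics and a made-up rule set; real firewalls also match on protocol, destination address, and connection state.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str               # "allow" or "deny"
    source: str               # source network in CIDR notation
    dest_port: Optional[int]  # None matches any destination port


def evaluate(rules: list[Rule], src_ip: str, dest_port: int) -> str:
    """Return the action of the first matching rule, or "deny" if none match."""
    for rule in rules:
        in_network = ip_address(src_ip) in ip_network(rule.source)
        port_matches = rule.dest_port in (None, dest_port)
        if in_network and port_matches:
            return rule.action
    return "deny"  # default deny: anything not explicitly allowed is blocked


# Hypothetical policy: block one network outright, expose HTTPS, keep SSH internal.
RULES = [
    Rule("deny", "203.0.113.0/24", None),
    Rule("allow", "0.0.0.0/0", 443),
    Rule("allow", "10.0.0.0/8", 22),
]
```

Rule order matters here: the deny rule for `203.0.113.0/24` sits above the general HTTPS allow rule, so that network is blocked even on port 443.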

Q 6. What are the key components of a Security Information and Event Management (SIEM) system?

A Security Information and Event Management (SIEM) system is a centralized security monitoring solution. It collects and analyzes log data from various sources across the network, providing a comprehensive view of security events. Think of it as a central command center receiving reports from all security systems.

- Log Collection: Gathers security logs from various sources, including firewalls, IDS/IPS, servers, and applications.

- Log Normalization and Correlation: Processes raw log data, standardizes it, and correlates events across different sources to identify patterns and potential threats.

- Security Monitoring and Alerting: Monitors the aggregated data for suspicious activity and generates alerts based on predefined rules or anomalies.

- Reporting and Analytics: Provides dashboards, reports, and analytics on security events, allowing security teams to understand security posture and identify trends.

- Incident Response: Assists in incident response activities by providing context and details about security incidents.

Q 7. Describe your experience with intrusion detection and prevention systems (IDS/IPS).

I have extensive experience with both Intrusion Detection Systems (IDS) and Intrusion Prevention Systems (IPS). I’ve deployed and managed various IDS/IPS solutions, including both signature-based and anomaly-based systems. My experience spans configuring rules, tuning alert thresholds, and analyzing alerts to identify and respond to security incidents. In one project, I implemented an anomaly-based IDS to detect unusual network traffic patterns in our cloud environment. It identified a series of credential stuffing attempts that our signature-based IDS had missed. By analyzing the anomalies, we were able to strengthen our authentication procedures and prevent a data breach.

I am proficient in using tools like Snort, Suricata, and commercial IPS solutions. I understand the importance of properly tuning these systems to minimize false positives while maximizing the detection of malicious activity. In addition, I know how to integrate IDS/IPS with other security tools like SIEMs for improved threat detection and response capabilities.

Q 8. How do you analyze network logs to detect suspicious activity?

Analyzing network logs for suspicious activity involves a multi-step process that combines automated tools and human expertise. Think of it like detective work – we’re looking for patterns and anomalies that suggest malicious behavior. First, we need to identify the right logs to examine. This could include firewall logs, intrusion detection system (IDS) logs, web server logs, and even application logs, depending on the suspected threat.

Next, we use tools like Security Information and Event Management (SIEM) systems to aggregate and correlate these logs. These tools allow us to search for specific events, such as failed login attempts, unusual access patterns, or large data transfers. For example, a sudden surge in failed login attempts from a single IP address could indicate a brute-force attack. We can also use regular expressions (regex) to identify specific patterns in the log data, like suspicious strings or commands.

Beyond automated analysis, human expertise is crucial. We need to interpret the data in context, understanding normal traffic patterns within the organization. An unusual spike in traffic might be harmless if it corresponds to a planned system update, but could be a serious threat otherwise. We develop custom rules and baselines, and apply statistical techniques and machine learning to separate genuine outliers from noise. Finally, it’s essential to validate any suspicion with additional evidence before treating it as a security incident.
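As a small example of the regex-based step, the sketch below counts failed logins per source IP. The log format is an assumption modeled on OpenSSH-style authentication messages, and the sample lines and threshold are made up.

```python
import re
from collections import Counter

# Assumed log format: "... Failed password for [invalid user] <user> from <ip> ..."
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(\S+) from (\d{1,3}(?:\.\d{1,3}){3})"
)


def suspicious_sources(lines: list[str], threshold: int = 3) -> dict[str, int]:
    """Return source IPs with at least `threshold` failed logins."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    return {ip: n for ip, n in counts.items() if n >= threshold}


SAMPLE_LOG = [
    "sshd[101]: Failed password for root from 203.0.113.9 port 4242 ssh2",
    "sshd[102]: Failed password for invalid user admin from 203.0.113.9 port 4243 ssh2",
    "sshd[103]: Failed password for root from 203.0.113.9 port 4244 ssh2",
    "sshd[104]: Failed password for alice from 198.51.100.2 port 5000 ssh2",
    "sshd[105]: Accepted password for alice from 198.51.100.2 port 5001 ssh2",
    "sshd[106]: Failed password for root from 203.0.113.9 port 4245 ssh2",
]
```

A single mistyped password from `198.51.100.2` stays below the threshold, while the repeated failures from `203.0.113.9` stand out as a likely brute-force attempt worth investigating.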

Q 9. What are the key principles of network segmentation and how does it improve security?

Network segmentation is like creating separate compartments within your network, each with its own security policies. Imagine a building divided into different wings – each wing has its own security system and access controls. The key principles are: dividing the network into smaller, isolated zones; applying granular security controls to each zone; limiting access between segments only to what is absolutely necessary; and implementing strong perimeter security for each segment. This is achieved using firewalls, VLANs (Virtual LANs), and other network devices to control traffic flow.

By segmenting the network, you limit the impact of a security breach. If an attacker compromises one segment, they won’t have automatic access to the entire network. For example, a compromised guest Wi-Fi network is less likely to affect your company’s internal servers if they are isolated in a separate segment. Network segmentation enhances security by reducing the attack surface, improving response time to security incidents, and facilitating easier compliance with various regulations.

Q 10. Explain your understanding of vulnerability management.

Vulnerability management is the ongoing cycle of identifying, assessing, prioritizing, and remediating security vulnerabilities across an organization’s systems and applications. It’s like a regular health check-up for your IT infrastructure. It starts with identifying vulnerabilities using vulnerability scanners, penetration testing, and security audits. These tools and techniques reveal weaknesses in software, hardware, and configurations that could be exploited by attackers.

Once identified, we assess the severity and risk associated with each vulnerability. This involves considering factors like the likelihood of exploitation and the potential impact if exploited. Then, we prioritize vulnerabilities based on their risk score, focusing on the most critical ones first. Remediation can involve patching software, configuring systems securely, or implementing security controls. Finally, we monitor the effectiveness of our remediation efforts and repeat the process to maintain a strong security posture.

Q 11. How do you prioritize security vulnerabilities?

Prioritizing security vulnerabilities involves a structured approach, often using a risk-based framework. This isn’t about simply patching everything at once; it’s about focusing on the vulnerabilities that pose the greatest threat to the organization. We typically use a combination of factors to assess the risk posed by a vulnerability. This includes the severity (how bad is the bug itself?), the likelihood of exploit (how easy is it for an attacker to abuse it?), and the impact (how much damage can be done if it’s exploited?).

We often use scoring systems such as the Common Vulnerability Scoring System (CVSS), which rates the severity of a vulnerability on a scale from 0 to 10. High-scoring vulnerabilities are prioritized for immediate remediation, while lower-scoring ones might be addressed later, depending on available resources and business priorities. Because CVSS describes severity rather than risk to a particular organization, the business importance of the affected asset should also be considered. For instance, a vulnerability on a critical server will be prioritized far higher than one on a development system, even if its CVSS score is lower. This ensures that resources are focused on the most impactful vulnerabilities first.
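One simple way to combine these factors is to weight the CVSS score by asset criticality and exploit availability. The formula, the 1-to-5 criticality scale, the 1.5 multiplier, and the findings below are all illustrative assumptions, not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    cvss: float              # CVSS base score, 0.0 to 10.0
    asset_criticality: int   # hypothetical scale: 1 (lab system) to 5 (business critical)
    exploit_available: bool  # is a working exploit known to exist?


def risk_score(finding: Finding) -> float:
    """Weight severity by how much the asset matters and how exploitable it is."""
    score = finding.cvss * finding.asset_criticality
    if finding.exploit_available:
        score *= 1.5  # assumed multiplier for known exploits
    return score


FINDINGS = [
    Finding("dev-box remote code execution", 9.8, 1, False),
    Finding("payments-db authentication bypass", 7.5, 5, True),
    Finding("intranet cross-site scripting", 6.1, 3, False),
]

PRIORITIZED = sorted(FINDINGS, key=risk_score, reverse=True)
```

Here the payments database issue lands at the top of the queue despite having a lower CVSS score than the development box, which matches the reasoning above.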

Q 12. What is the difference between authentication, authorization, and accounting (AAA)?

AAA stands for Authentication, Authorization, and Accounting. Imagine a club with a strict entry policy. Authentication is like showing your ID at the door – it verifies who you are. Authorization is like checking if you’re on the guest list – it determines what you’re allowed to do once inside. Accounting keeps track of everything you do while you’re there – who you spoke to, what drinks you ordered, and how long you stayed. In cybersecurity, these three functions work together to secure access to resources.

- Authentication: Verifying the identity of a user, device, or other entity. This often involves usernames and passwords, multi-factor authentication, or digital certificates.

- Authorization: Determining what a user is allowed to access or do after successful authentication. This is based on roles, permissions, and policies.

- Accounting: Tracking user activity for auditing and security monitoring. This includes logging login times, actions performed, and resources accessed.

AAA is critical for securing any system that requires user access control, from simple logins to complex network access. Using AAA effectively strengthens overall security by preventing unauthorized access and providing valuable audit trails.
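The three functions can be sketched in a few lines. This is a toy, in-memory illustration with a made-up user store and role table; production systems would typically delegate AAA to a directory service or a protocol such as RADIUS or TACACS+.

```python
import hashlib
import hmac
import os

audit_log = []  # Accounting: a record of every decision


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


_salt = os.urandom(16)
# Hypothetical user store and role-to-permission mapping.
USERS = {
    "alice": {"salt": _salt, "hash": hash_password("correct horse", _salt), "role": "analyst"},
}
PERMISSIONS = {"analyst": {"read_alerts"}, "admin": {"read_alerts", "edit_rules"}}


def authenticate(user: str, password: str) -> bool:
    """Authentication: is this really the user they claim to be?"""
    record = USERS.get(user)
    ok = record is not None and hmac.compare_digest(
        record["hash"], hash_password(password, record["salt"])
    )
    audit_log.append(("authenticate", user, ok))
    return ok


def authorize(user: str, action: str) -> bool:
    """Authorization: is this user's role allowed to perform the action?"""
    role = USERS.get(user, {}).get("role")
    ok = action in PERMISSIONS.get(role, set())
    audit_log.append(("authorize", user, action, ok))
    return ok
```

Every call appends to `audit_log`, so failed attempts are recorded as well as successful ones, which is what makes the trail useful for later investigation.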

Q 13. Describe your experience with security auditing and compliance frameworks (e.g., ISO 27001, NIST).

I have extensive experience with security auditing and compliance frameworks such as ISO 27001 and NIST Cybersecurity Framework. Security auditing is the process of systematically examining an organization’s security controls to ensure they are effective and meet regulatory requirements. It’s like a thorough checkup of your organization’s security health. This process typically involves reviewing policies, procedures, configurations, and logs, and sometimes conducting penetration testing and vulnerability assessments.

My work with ISO 27001, for example, involved conducting audits to ensure compliance with the standard’s information security management system (ISMS) requirements. This includes documenting processes, managing risks, and ensuring appropriate controls are in place. Similarly, my experience with the NIST Cybersecurity Framework focuses on aligning organizational security practices with the Framework’s core functions: Identify, Protect, Detect, Respond, and Recover, with version 2.0 adding Govern. This involves mapping organizational capabilities to the framework, identifying gaps in security posture, supporting risk assessment, and measuring the effectiveness of security controls. I am familiar with the reporting methodologies and documentation requirements associated with these frameworks.

Q 14. Explain your experience with various security protocols (e.g., TLS, SSH, IPSec).

I have worked extensively with security protocols like TLS, SSH, and IPSec. These protocols are fundamental for securing network communications. They’re like different locks on different doors, each designed to protect specific types of data.

- TLS (Transport Layer Security): This protocol secures communications over a network, typically used for web traffic (HTTPS). It encrypts data exchanged between a client and a server, preventing eavesdropping. Think of it as securing your online banking transactions.

- SSH (Secure Shell): SSH secures remote logins and other network services. It encrypts the communication between a client and a server, preventing unauthorized access and data interception. It’s like a secure tunnel for managing servers remotely.

- IPSec (Internet Protocol Security): IPSec provides secure communication at the network layer, often used for VPNs (Virtual Private Networks) and secure site-to-site connections. It encrypts and authenticates network packets to protect data in transit. It’s like creating a secure connection between two networks.

My experience involves configuring and troubleshooting these protocols in various environments, ensuring they are properly implemented, and following best practices for key management and certificate lifecycle management.
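As one small example of "properly implemented", here is how a TLS client configuration might look with Python's standard `ssl` module. The choice of TLS 1.2 as the minimum version is an assumption for the sketch; the right floor depends on your own policy.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context with certificate and hostname checks."""
    # create_default_context() enables certificate verification and hostname
    # checking and loads the system's trusted CA certificates.
    ctx = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (assumed policy).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The resulting context can be passed to `ctx.wrap_socket(...)` or to HTTP clients that accept an `SSLContext`. The main point is to start from the secure defaults rather than disabling verification to make an error go away.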

Q 15. What are common malware types and how are they detected and removed?

Malware encompasses a broad range of malicious software designed to damage, disrupt, or gain unauthorized access to computer systems. Common types include viruses, worms, Trojans, ransomware, spyware, and adware. Detection and removal involve a multi-layered approach.

Viruses: Self-replicating programs that attach to other files. Detection relies on antivirus software scanning for known virus signatures or using heuristic analysis to identify suspicious behavior. Removal involves quarantining or deleting the infected files, often requiring system restoration.

Worms: Self-replicating programs that spread across networks without needing a host file. Detection involves network monitoring for unusual traffic patterns and using intrusion detection systems. Removal involves isolating the infected systems and patching vulnerabilities to prevent further spread.

Trojans: Disguised as legitimate software, they grant attackers unauthorized access. Detection is challenging, often relying on behavioral analysis and sandboxing techniques. Removal requires identifying and removing the Trojan, along with any backdoors it created.

Ransomware: Encrypts data and demands a ransom for decryption. Detection involves monitoring for unusual encryption activity and suspicious network connections. Removal may involve decrypting the data (if a key is available) and restoring backups. Prevention through regular backups is crucial.

Spyware: Secretly monitors user activity and collects sensitive information. Detection involves using anti-spyware software and examining system logs for unusual processes. Removal involves uninstalling the spyware and changing passwords for affected accounts.

Adware: Displays unwanted advertisements. Detection is typically through user observation of intrusive ads or through security software analysis. Removal involves uninstalling the adware program.

Effective malware removal often requires a combination of automated tools (antivirus, anti-malware, EDR solutions), manual investigation (log analysis, network monitoring), and system restoration techniques. Regular software updates and security awareness training for users are essential preventative measures.
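Two of the detection ideas above, known-signature matching and spotting unusual encryption activity, can be sketched as follows. The hash set contains a made-up sample, and the 7.5 bits-per-byte entropy threshold is an assumption; high entropy also occurs in compressed files, so it is only a hint, not proof of ransomware.

```python
import hashlib
import math
import os
from collections import Counter

# Hypothetical signature database: SHA-256 hashes of known-bad files.
KNOWN_BAD_HASHES = {hashlib.sha256(b"hypothetical-malware-sample").hexdigest()}


def matches_known_signature(data: bytes) -> bool:
    """Signature check: exact match against known-bad file hashes."""
    return hashlib.sha256(data).hexdigest() in KNOWN_BAD_HASHES


def shannon_entropy(data: bytes) -> float:
    """Entropy in bits per byte: 0.0 for constant data, 8.0 for uniform bytes."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_encrypted(data: bytes, threshold: float = 7.5) -> bool:
    """Heuristic: encrypted content is close to random, so entropy is near 8."""
    return shannon_entropy(data) > threshold
```

A monitoring agent could apply `looks_encrypted` to files that were recently rewritten; many documents suddenly crossing the threshold in a short period is the kind of behavior worth alerting on.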

Q 16. How do you handle security incidents, including escalation procedures?

Handling security incidents requires a structured approach. My process involves these key steps:

Identification: Detect the incident through monitoring systems (SIEM, security logs, alerts) or user reports. This step includes verifying the legitimacy of the alert.

Containment: Isolate the affected system(s) from the network to prevent further damage or spread. This might involve disabling network access, disconnecting infected devices, or implementing network segmentation.

Eradication: Remove the threat from the system(s). This may involve removing malware, patching vulnerabilities, restoring from backups, or reinstalling systems.

Recovery: Restore affected systems and data to a functional state. This includes verifying data integrity and restoring services.

Post-Incident Activity: Analyze the incident to understand its cause, impact, and identify any vulnerabilities exploited. This analysis informs future security improvements. A comprehensive report documenting the entire process is crucial.

Escalation procedures are critical. I would escalate the incident based on severity and my ability to handle it. For example, incidents involving significant data breaches, system-wide outages, or legal ramifications would be immediately escalated to management and potentially external legal and forensic teams. Clear communication throughout the process, with all relevant parties, is paramount.

Q 17. What is your experience with endpoint detection and response (EDR) solutions?

I have extensive experience with various EDR solutions, including CrowdStrike Falcon, Carbon Black, and SentinelOne. EDR solutions provide real-time monitoring of endpoint activity, enabling proactive threat detection and response. My experience encompasses:

Deployment and Configuration: I’ve successfully deployed and configured EDR agents across diverse environments, ensuring optimal performance and minimal impact on system resources. This includes agent integration with existing security tools, such as SIEM and SOAR systems.

Threat Hunting and Investigation: I’ve used EDR’s advanced features to actively hunt for threats within endpoint data, including analyzing memory forensics, identifying malicious processes, and reconstructing attack timelines. EDR’s ability to provide a deep dive into an attack’s behavior is invaluable for incident response.

Alert Management: I’ve developed and refined alert rules to reduce noise and prioritize high-risk events, minimizing false positives while ensuring timely detection of critical threats. Effective alert management directly contributes to faster response times.

Integration with other Security Tools: EDR solutions integrate seamlessly with other security tools, enabling a holistic view of the security posture. This integration facilitates improved threat detection and reduced response time.

My experience demonstrates proficiency in leveraging EDR’s full capabilities for enhanced threat detection, incident response, and overall improvement of security posture.

Q 18. Explain your understanding of cloud security best practices.

Cloud security best practices revolve around the principle of shared responsibility. While cloud providers manage the underlying infrastructure, the customer retains responsibility for securing their data and applications within that infrastructure. Key aspects include:

Identity and Access Management (IAM): Implementing strong IAM controls, using least privilege access, and regularly reviewing user permissions.

Data Encryption: Encrypting data at rest and in transit to protect against unauthorized access.

Network Security: Utilizing virtual private clouds (VPCs), firewalls, and intrusion detection/prevention systems to secure network access and prevent unauthorized connections.

Vulnerability Management: Regularly scanning for vulnerabilities and patching systems promptly.

Security Information and Event Management (SIEM): Centralized logging and monitoring of security events to detect and respond to threats.

Data Loss Prevention (DLP): Implementing measures to prevent sensitive data from leaving the cloud environment without authorization.

Regular Security Audits and Assessments: Conducting periodic assessments to identify weaknesses and ensure compliance with security standards.

Adopting a defense-in-depth approach, combining multiple layers of security controls, is essential for mitigating risks in the cloud. Regular security awareness training for cloud users is also a critical component.

Q 19. What experience do you have with penetration testing methodologies?

My penetration testing experience spans various methodologies, including black box, white box, and gray box testing. I’m proficient in using automated tools and manual techniques to identify vulnerabilities in systems and applications.

Black Box Testing: Simulates an external attacker with limited knowledge of the target system. This approach helps identify vulnerabilities that might be missed in other testing types.

White Box Testing: Provides testers with complete access to the system’s source code and internal documentation. This allows for a more thorough analysis of the system’s architecture and logic, uncovering deeper vulnerabilities.

Gray Box Testing: Offers a balance between black box and white box testing, providing the tester with some limited knowledge of the system. This approach is often used to simulate a more realistic attack scenario.

I utilize various tools and techniques, including vulnerability scanners, exploit frameworks (Metasploit), and manual exploitation methods. I adhere to strict ethical guidelines throughout the testing process, ensuring all testing is authorized and conducted responsibly. My reports provide detailed findings, including vulnerability severity, remediation advice, and potential business impacts. A well-structured penetration test report ensures clear communication and helps prioritize remediation efforts.

Q 20. How do you stay up-to-date with the latest security threats and vulnerabilities?

Staying current with the ever-evolving threat landscape is paramount. I employ several strategies to achieve this:

Threat Intelligence Feeds: I subscribe to reputable threat intelligence feeds from organizations like the SANS Institute and various cybersecurity vendors, and I use the MITRE ATT&CK knowledge base to map adversary tactics and techniques. These sources provide early warnings about emerging threats and vulnerabilities.

Security Conferences and Webinars: I regularly attend industry conferences and webinars to learn about the latest threats, techniques, and best practices from leading experts. Networking with peers at these events is also invaluable.

Industry Publications and Blogs: I actively follow leading cybersecurity publications and blogs, such as KrebsOnSecurity and Threatpost, to stay informed on breaking news and security research.

Vulnerability Databases: I regularly check vulnerability databases like the National Vulnerability Database (NVD) to assess the risk to my organization’s systems and prioritize patching.

Certifications and Training: Maintaining up-to-date certifications such as CISSP or CEH demonstrates ongoing commitment to professional development and keeps my skills sharp.

Continuous learning is an integral part of my role. By staying informed about new threats and vulnerabilities, I can proactively adapt security strategies and protect against emerging risks.

Q 21. Explain your understanding of data loss prevention (DLP) strategies.

Data Loss Prevention (DLP) strategies aim to prevent sensitive data from leaving the organization’s control. This involves a multi-faceted approach:

Data Classification: Identifying and classifying sensitive data based on its value and sensitivity (e.g., PII, financial data, intellectual property). This provides a foundation for implementing appropriate controls.

Data Discovery and Monitoring: Identifying where sensitive data resides, both on premises and in the cloud, and continuously monitoring its access and movement.

Access Control: Implementing strong access controls to restrict access to sensitive data based on the principle of least privilege. Role-based access control (RBAC) is a common mechanism.

Data Encryption: Encrypting sensitive data both at rest and in transit to protect it from unauthorized access, even if it is compromised.

Data Loss Prevention Tools: Utilizing DLP tools that monitor data movement and block unauthorized attempts to transfer sensitive data outside the organization’s control. These tools often involve network-based monitoring and endpoint-based agents.

Employee Training: Educating employees about the importance of data security and providing training on best practices to prevent accidental data loss. This includes educating employees on phishing scams and social engineering attacks, which are often used to compromise sensitive data.

Regular Audits and Reviews: Conducting regular security audits and reviews to ensure that DLP measures are effective and up-to-date.

A comprehensive DLP strategy requires a combination of technical and procedural controls to ensure the confidentiality, integrity, and availability of sensitive data. It’s a continuous process of adaptation and improvement based on evolving threats and organizational needs.
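Content inspection in DLP tools often combines pattern matching with a validation step to cut down false positives. The sketch below looks for payment card numbers using a regex plus the Luhn checksum; the regex and the sample text are assumptions, and commercial DLP products use far richer detectors.

```python
import re

# Candidate pattern: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right and sum."""
    digits = [int(c) for c in number if c.isdigit()]
    checksum = 0
    for i, digit in enumerate(reversed(digits)):
        if i % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        checksum += digit
    return checksum % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings that look like card numbers and pass the Luhn check."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        candidate = re.sub(r"[ -]", "", match.group())
        if luhn_valid(candidate):
            hits.append(candidate)
    return hits


# 4111 1111 1111 1111 is a widely used test card number, not a real account.
SAMPLE_TEXT = "Ticket 1234567890123 was paid with card 4111 1111 1111 1111."
```

The ticket number matches the digit pattern but fails the checksum, so only the card number is reported. An outbound email or upload containing such a hit could then be blocked or sent for review.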

Q 22. Describe your experience with threat intelligence platforms and sources.

Threat intelligence platforms are the central nervous system of a proactive security posture. They aggregate data from various sources to identify emerging threats, vulnerabilities, and potential attacks against your organization. My experience encompasses working with several leading platforms, including commercially available solutions like ThreatConnect and IBM QRadar Advisor with Watson, as well as open-source platforms like MISP (Malware Information Sharing Platform).

These platforms ingest data from diverse sources: external sources such as commercial threat feeds (e.g., VirusTotal, Flashpoint), open-source intelligence (OSINT) gathered from forums and public reports, and vulnerability databases (e.g., CVE); and internal sources such as security information and event management (SIEM) systems, endpoint detection and response (EDR) tools, and network traffic analysis tools. I’m adept at configuring these platforms to prioritize relevant threat data, filter noise, and enrich the information to create actionable intelligence.

For example, I once used ThreatConnect to correlate a newly discovered zero-day exploit targeting a specific version of our CRM software with our internal vulnerability scan results. This allowed us to prioritize patching that specific system before an attacker could exploit it.

Q 23. How do you correlate security events from different sources?

Correlating security events from disparate sources is crucial for accurate threat detection. Imagine security events as puzzle pieces; the more pieces you have, and the better you understand their relationship, the clearer the picture becomes. I employ a multi-faceted approach:

- Timestamp Correlation: Identifying events that occur within a short timeframe often indicates a related attack sequence. For example, a burst of failed login attempts followed by a suspicious network connection from the same IP address suggests a potential brute-force attack.

- Source and Destination IP Address Correlation: Tracking the flow of communication between different systems helps pinpoint the source and target of attacks. This is especially important in identifying lateral movement within a network.

- User and Entity Behavior Analytics (UEBA): This involves analyzing user and system activity to identify deviations from normal behavior. A sudden spike in outbound data transfers by a specific user could indicate data exfiltration from a compromised account.

- Using SIEM capabilities: SIEM systems are specifically designed to correlate events based on predefined rules and custom queries. I leverage these capabilities to create sophisticated correlations, combining various data points to form comprehensive threat narratives.

For instance, I’ve used SIEM dashboards to visualize the relationships between a phishing email (identified by email security), a compromised user account (identified by the authentication logs), and subsequent anomalous network activity (identified by the network intrusion detection system) – all pointing to a successful targeted attack.
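As a rough sketch of the timestamp and IP correlation described above, the following Python groups events by source IP and keeps the groups in which two consecutive events from different log sources fall within a time window. The event fields (`time`, `source`, `src_ip`, `detail`) and the five-minute window are assumptions for illustration; a SIEM would do this with its own rule language.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate_by_ip(events, window=timedelta(minutes=5)):
    """Return {src_ip: events} for IPs with two consecutive events,
    from different log sources, no more than `window` apart."""
    by_ip = defaultdict(list)
    for event in events:
        by_ip[event["src_ip"]].append(event)

    correlated = {}
    for ip, group in by_ip.items():
        group.sort(key=lambda e: e["time"])
        # Compare each event with the next one in time order.
        for first, second in zip(group, group[1:]):
            close_in_time = second["time"] - first["time"] <= window
            if close_in_time and first["source"] != second["source"]:
                correlated[ip] = group
                break
    return correlated


# Hypothetical sample events from two log sources.
t0 = datetime(2024, 1, 1, 9, 0, 0)
events = [
    {"time": t0, "source": "auth", "src_ip": "203.0.113.7",
     "detail": "failed login"},
    {"time": t0 + timedelta(minutes=2), "source": "ids", "src_ip": "203.0.113.7",
     "detail": "suspicious connection"},
    {"time": t0 + timedelta(hours=3), "source": "auth", "src_ip": "198.51.100.4",
     "detail": "failed login"},
]

for ip, related in correlate_by_ip(events).items():
    print(ip, [e["detail"] for e in related])
```

Comparing only consecutive events keeps the sketch short; a production rule would typically use a sliding window over all events for the IP.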

Q 24. What is your experience with SIEM rule creation and tuning?

SIEM rule creation and tuning is a critical aspect of effective security monitoring. It’s like fine-tuning a highly sensitive instrument; you need precision and expertise to avoid missing real threats while minimizing false alarms. My experience includes developing and refining rules for various SIEM platforms like Splunk and QRadar.

I follow a structured approach:

- Understanding the Threat Landscape: Staying updated on current threats allows for the creation of rules that address emerging attack vectors.

- Defining Specific Use Cases: Clear objectives are essential before writing any rules. For instance, one use case might be detecting outbound connections to known malicious IP addresses.

- Leveraging Existing Rule Sets: Many platforms offer pre-built rules that can be adapted and customized to your environment.

- Testing and Tuning: Rules must be rigorously tested against both real and simulated data to ensure accuracy and effectiveness. This iterative process of refining rules based on testing is key to reducing noise.

I’ve implemented rules to detect, for example, unusual spikes in database queries, file exfiltration attempts, and privilege escalation activity. Continuous monitoring and adjustment of these rules are essential to maintain their effectiveness.

Example Splunk rule (simplified): index=* sourcetype=access_log action=failed | stats count by src_ip | where count > 100

This Splunk rule counts failed login attempts from each source IP address and flags those with more than 100 failed attempts, suggesting a potential brute-force attack.
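To make the logic of that rule concrete outside of Splunk, here is a minimal Python sketch of the same count-and-threshold idea. The event dictionaries and the `flag_brute_force` helper are hypothetical; only the field names `src_ip` and `action` are taken from the rule above.

```python
from collections import Counter


def flag_brute_force(events, threshold=100):
    """Count failed attempts per source IP and return those above the threshold."""
    failures = Counter(
        event["src_ip"] for event in events if event.get("action") == "failed"
    )
    return {ip: count for ip, count in failures.items() if count > threshold}


# Hypothetical sample data: one noisy IP and one ordinary user.
events = (
    [{"src_ip": "203.0.113.7", "action": "failed"}] * 150
    + [{"src_ip": "198.51.100.4", "action": "failed"}] * 3
    + [{"src_ip": "198.51.100.4", "action": "success"}]
)

print(flag_brute_force(events))
```

As with the Splunk version, the threshold only makes sense relative to a time range, so a real rule would also bound the search window.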

Q 25. How do you handle false positives in security monitoring systems?

False positives are inevitable in security monitoring, akin to receiving a fire alarm that turns out to be a kitchen mishap. Effectively handling them is about balancing security with operational efficiency. My approach involves:

- Improving Rule Tuning: Revisiting and refining SIEM rules to increase their specificity and reduce the likelihood of triggering alerts for benign activity. This often involves adding additional criteria to the rules.

- Contextual Analysis: Examining the surrounding events to determine whether an alert represents a real threat. A seemingly suspicious event might be justified by legitimate administrative activity.

- Automation: Implementing automated workflows to filter and suppress known false positives based on patterns identified over time. This involves creating workflows that auto-close tickets or alerts matching pre-determined scenarios.

- Machine Learning Techniques: Using machine learning algorithms to analyze patterns and distinguish between normal and abnormal behavior. This can help identify and filter false positives based on established baselines.

- Regular Review and Feedback: Periodically reviewing alerts and adjusting the rules and filtering mechanisms based on feedback from the security team. This continual improvement cycle is key to reducing long-term false positives.

For instance, I once developed a machine learning model that could identify and automatically dismiss alerts generated by a specific application’s legitimate yet unusual high-traffic activity, which had previously generated many false positives.
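A simpler, rule-based version of the automation step can be sketched as follows. This is an illustrative assumption rather than any particular SOAR product's workflow: the `SuppressionRule` type, the alert fields, and the host names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SuppressionRule:
    """A documented, known-benign pattern (hypothetical shape)."""
    rule_name: str
    src_host: Optional[str] = None  # None matches any host
    reason: str = ""


def triage(alerts, suppressions):
    """Split alerts into (to_review, auto_closed) using the suppression rules."""
    to_review, auto_closed = [], []
    for alert in alerts:
        hit = next(
            (
                s for s in suppressions
                if s.rule_name == alert["rule"]
                and s.src_host in (None, alert["src_host"])
            ),
            None,
        )
        if hit is not None:
            # Keep the reason so suppressed alerts remain auditable.
            auto_closed.append({**alert, "closed_reason": hit.reason})
        else:
            to_review.append(alert)
    return to_review, auto_closed


# Hypothetical sample data.
suppressions = [
    SuppressionRule("high_outbound_traffic", "backup-01", "nightly backup job"),
]
alerts = [
    {"rule": "high_outbound_traffic", "src_host": "backup-01"},
    {"rule": "high_outbound_traffic", "src_host": "laptop-17"},
]

to_review, auto_closed = triage(alerts, suppressions)
print(len(to_review), "to review,", len(auto_closed), "auto-closed")
```

Scoping each suppression to a rule and a host, and recording why it was closed, keeps the filter narrow enough that a real attack from another host still reaches an analyst.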

Q 26. Describe your approach to risk assessment and mitigation.

Risk assessment and mitigation is a systematic process of identifying potential threats, evaluating their likelihood and impact, and implementing controls to reduce their potential harm. It’s like building a fortress; you need to know where the weak points are and reinforce them. My approach follows a structured methodology:

- Asset Identification: Determining what assets need protection (data, systems, applications).

- Threat Identification: Listing potential threats (malware, phishing, denial-of-service attacks).

- Vulnerability Assessment: Identifying vulnerabilities in systems and applications (using vulnerability scanners, penetration testing).

- Risk Analysis: Estimating the likelihood and impact of each threat exploiting a specific vulnerability.

- Mitigation Planning: Developing and implementing controls to reduce the risk (e.g., patching vulnerabilities, implementing firewalls, intrusion detection systems).

- Monitoring and Review: Continuously monitoring the effectiveness of implemented controls and updating the risk assessment periodically.

A recent risk assessment I performed involved identifying the risks associated with migrating sensitive data to the cloud. By carefully evaluating the cloud provider’s security controls and implementing additional security measures such as encryption and access control lists, we effectively mitigated the risks involved.
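The risk analysis step is often expressed as a simple likelihood times impact score. The sketch below assumes a 1 to 5 scale for both factors and illustrative cut-offs for low, medium, and high; the register entries and their ratings are hypothetical, not figures from the assessment described above.

```python
def risk_score(likelihood, impact):
    """Score a risk as likelihood x impact, each rated from 1 (low) to 5 (high)."""
    if not (1 <= likelihood <= 5 and 1 <= impact <= 5):
        raise ValueError("likelihood and impact must be between 1 and 5")
    return likelihood * impact


def risk_level(score):
    """Map a score to a level using assumed thresholds."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


# Hypothetical risk register for a cloud migration.
register = [
    {"risk": "Unencrypted data in cloud storage", "likelihood": 3, "impact": 5},
    {"risk": "Overly broad access control lists", "likelihood": 4, "impact": 3},
    {"risk": "Provider outage during migration", "likelihood": 2, "impact": 2},
]

# Highest-scoring risks first, so mitigation planning starts at the top.
ranked = sorted(
    register,
    key=lambda r: risk_score(r["likelihood"], r["impact"]),
    reverse=True,
)

for entry in ranked:
    score = risk_score(entry["likelihood"], entry["impact"])
    print(f"{entry['risk']}: {score} ({risk_level(score)})")
```

The exact scale and thresholds vary by organization; what matters is that they are applied consistently so risks can be ranked against each other.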

Q 27. Explain your understanding of zero-trust security architecture.

Zero-trust security architecture is a paradigm shift from traditional network security models. It assumes no implicit trust, regardless of network location (inside or outside the corporate network). Imagine a fortress where every individual, regardless of their position, needs to prove their identity before gaining access to any resource.

Key principles include:

- Least Privilege Access: Granting users and systems only the minimum necessary access rights to perform their tasks. No more broad network access for all users.

- Microsegmentation: Dividing the network into smaller, isolated segments to limit the impact of a breach. If one segment is compromised, the attackers can’t easily move laterally.

- Continuous Verification: Regularly verifying the identity and trustworthiness of users and devices. This can involve multi-factor authentication (MFA) and regular security checks.

- Data Encryption: Protecting data both in transit and at rest using encryption techniques. This limits what attackers can read if they intercept traffic or obtain stored data without the corresponding keys.

Implementing a zero-trust architecture requires a holistic approach, involving changes in network infrastructure, security policies, and user access management. It’s a long-term investment, but the improved security posture justifies the cost.
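To illustrate how these principles combine in a single access decision, here is a minimal Python sketch. It is an assumption-laden toy model, not a real policy engine: the `AccessRequest` type, the `ENTITLEMENTS` table, and the user and resource names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """One request to one resource (hypothetical shape)."""
    user: str
    mfa_verified: bool
    device_compliant: bool
    resource: str


# Least privilege: each user is mapped only to the resources they need.
ENTITLEMENTS = {
    "alice": {"crm"},
    "bob": {"crm", "billing"},
}


def authorize(request):
    """Decide on identity, device state, and entitlement.
    Network location is deliberately not an input."""
    if not request.mfa_verified:
        return False
    if not request.device_compliant:
        return False
    return request.resource in ENTITLEMENTS.get(request.user, set())


print(authorize(AccessRequest("alice", True, True, "crm")))      # allowed
print(authorize(AccessRequest("alice", True, True, "billing")))  # not entitled
print(authorize(AccessRequest("bob", True, False, "billing")))   # device fails check
```

Because the function is evaluated on every request, a user whose device falls out of compliance loses access immediately, which is the continuous verification idea in miniature.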

Q 28. What are your thoughts on the future of network security?

The future of network security will be defined by several key trends:

- AI and Machine Learning: These technologies will play an increasingly important role in threat detection, response, and incident investigation. AI can analyze vast amounts of data to identify subtle patterns and anomalies that humans might miss.

- Automation and Orchestration: Security tasks will become increasingly automated, improving efficiency and reducing response times to security incidents.

- Cloud Security: As organizations increasingly rely on cloud services, the focus will shift towards securing cloud environments and hybrid cloud architectures.

- DevSecOps: Integrating security into the software development lifecycle will become increasingly crucial to prevent vulnerabilities from reaching production environments.

- Quantum Computing: While still in its early stages, quantum computing poses a significant threat to current public-key encryption techniques such as RSA and elliptic-curve cryptography. The industry needs to prepare by planning a migration to post-quantum cryptography.

- Extended Detection and Response (XDR): The convergence of security data from different sources (endpoints, network, cloud) into a unified platform to improve threat detection and response capabilities.

The future of network security is a dynamic landscape that requires continuous learning and adaptation. The ability to stay ahead of emerging threats and adopt new technologies will be crucial for success.

Key Topics to Learn for Expertise in Network Security Threat Detection and Mitigation Interview

- Network Security Fundamentals: Understanding the TCP/IP model, network protocols, and common network devices (routers, switches, firewalls).

- Threat Detection Techniques: Proficiency in Intrusion Detection Systems (IDS), Intrusion Prevention Systems (IPS), Security Information and Event Management (SIEM) tools, and log analysis.

- Vulnerability Assessment and Penetration Testing: Experience with vulnerability scanners, penetration testing methodologies, and ethical hacking principles.

- Security Monitoring and Incident Response: Knowledge of incident response plans, incident handling procedures, and experience with security orchestration, automation, and response (SOAR) tools.

- Cloud Security: Understanding security considerations within cloud environments (AWS, Azure, GCP) and cloud-native security tools.

- Malware Analysis: Familiarity with malware analysis techniques, reverse engineering, and sandbox environments.

- Threat Intelligence: Understanding threat intelligence feeds, threat actors, and common attack vectors.

- Security Auditing and Compliance: Knowledge of relevant security frameworks (e.g., NIST, ISO 27001) and compliance requirements.

- Practical Application: Discuss real-world scenarios where you’ve applied these concepts to detect and mitigate threats. Prepare examples showcasing your problem-solving skills and technical expertise.

- Problem-Solving Approach: Practice articulating your thought process when faced with a complex security challenge. Highlight your ability to analyze, diagnose, and propose effective solutions.

Next Steps

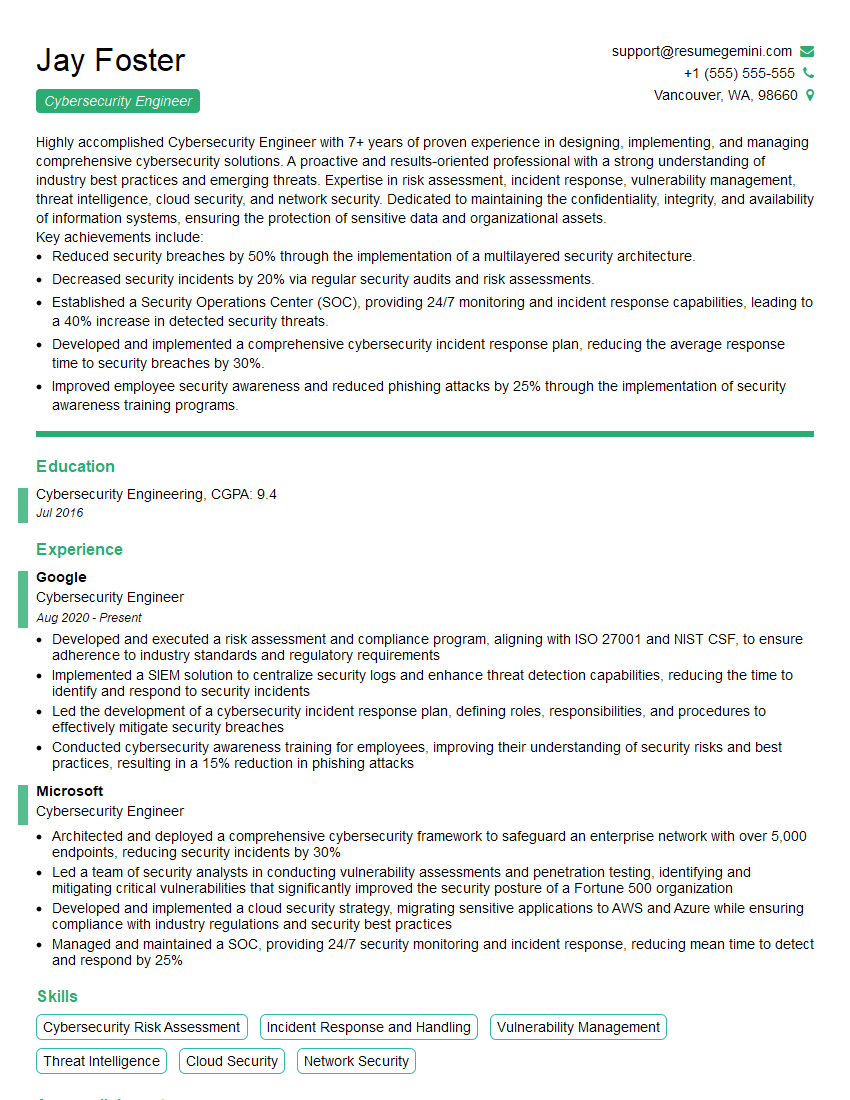

Mastering network security threat detection and mitigation is crucial for a successful and rewarding career in cybersecurity. This field offers continuous learning opportunities and high demand, leading to significant career advancement. To maximize your job prospects, it’s essential to create a strong, ATS-friendly resume that effectively highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume tailored to the specific requirements of cybersecurity roles. Examples of resumes tailored to Expertise in network security threat detection and mitigation are available to help guide your resume creation process.
