Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Failure Analysis and Root Cause Analysis interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Failure Analysis and Root Cause Analysis Interview

Q 1. Describe your experience with various Failure Analysis techniques (e.g., microscopy, spectroscopy, chemical analysis).

My experience with Failure Analysis techniques is extensive, encompassing a wide range of microscopy, spectroscopy, and chemical analysis methods. I’ve utilized optical microscopy for surface examination, identifying cracks, corrosion, and wear patterns. Scanning Electron Microscopy (SEM) and Transmission Electron Microscopy (TEM) have been crucial for high-resolution analysis of microstructural features and compositional variations at a nanoscale level. This allows for the identification of defects like voids, inclusions, and grain boundary issues which often are the root causes of component failure.

Spectroscopy, particularly Energy-Dispersive X-ray Spectroscopy (EDS) integrated with SEM, has been instrumental in determining the elemental composition of materials at various points within a failed component. This helps identify corrosion products, contamination, or diffusion effects. Techniques like X-ray diffraction (XRD) helped me determine the crystallographic phases present, useful in understanding material degradation processes. Furthermore, various chemical analysis techniques like Inductively Coupled Plasma Mass Spectrometry (ICP-MS) and gas chromatography-mass spectrometry (GC-MS) are employed to analyze chemical composition and trace impurities, providing valuable clues about the failure mechanism. For instance, in one project involving a failed turbine blade, SEM coupled with EDS helped pinpoint the presence of excessive sulfur, indicating a problem with the fuel composition, ultimately leading to the failure.

Q 2. Explain the difference between Failure Mode and Effects Analysis (FMEA) and Fault Tree Analysis (FTA).

Failure Mode and Effects Analysis (FMEA) and Fault Tree Analysis (FTA) are both structured risk assessment techniques, but they approach the problem from opposite directions. FMEA is a bottom-up, inductive approach: it systematically identifies potential failure modes for each component or process step and evaluates their effects on the system. It is primarily preventative, aiming to identify and mitigate risks before they happen. Imagine designing a new car; FMEA would evaluate the potential failures of every part, from the engine to the headlights, predicting the consequences of each failure and rating its severity, likelihood of occurrence, and detectability.

Conversely, FTA is a top-down deductive approach. It starts with a specific undesired event (the top event) and works backward to identify the combinations of lower-level events that could cause it. Think of an airplane losing altitude – FTA would trace back potential causes like engine failure, control system malfunction, or wing damage, breaking down how these could lead to the top event. The output visually represents all possible failure pathways in a ‘tree’ like structure.

In short: FMEA focuses on preventing failures by assessing the potential modes of failure for individual components. FTA identifies the potential causes of a specific, undesired system failure. They are often used together for a more comprehensive risk assessment.
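
As a rough illustration of the top-down FTA logic, the sketch below combines basic-event probabilities through AND and OR gates to estimate the probability of a top event. It assumes the basic events are statistically independent, and the event names and probabilities are hypothetical values chosen for illustration, not figures from a real analysis.

```python
from math import prod


def or_gate(probs):
    """P(at least one input event occurs), assuming independent events."""
    return 1.0 - prod(1.0 - p for p in probs)


def and_gate(probs):
    """P(all input events occur together), assuming independent events."""
    return prod(probs)


# Hypothetical basic-event probabilities per flight hour (illustrative only).
p_engine_1 = 1e-4
p_engine_2 = 1e-4
p_control_system = 1e-5
p_wing_damage = 1e-6

# Total loss of thrust requires both engines to fail (AND gate).
p_loss_of_thrust = and_gate([p_engine_1, p_engine_2])

# Any single branch is enough to cause the top event (OR gate).
p_top = or_gate([p_loss_of_thrust, p_control_system, p_wing_damage])

print(f"P(loss of thrust) = {p_loss_of_thrust:.3e}")
print(f"P(top event)      = {p_top:.3e}")
```

In this toy tree the redundant engines contribute very little to the top event, while the single-point control system branch dominates. That kind of insight is what the tree structure is meant to expose.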

Q 3. How would you approach a situation where multiple potential root causes are identified?

When faced with multiple potential root causes, a structured approach is crucial. I would first employ a prioritization matrix, ranking potential causes based on factors like likelihood (probability of occurrence) and impact (severity of the consequences). This involves gathering data – failure rates, environmental conditions, operating parameters – to assign realistic probabilities and severity levels.

Next, I utilize techniques like Design of Experiments (DOE) to isolate the most influential factors. This might involve creating controlled experiments to systematically test the effect of each potential root cause, keeping other factors constant. Statistical methods, such as regression analysis or ANOVA, are applied to analyze the experimental data and determine which factors have the most significant impact on failure.
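
A minimal sketch of the DOE idea, assuming a two-level full factorial design with three hypothetical factors: each factor is run at a low (-1) and high (+1) level, and the main effect of a factor is the average response at its high level minus the average at its low level. The factor names and failure counts are invented for illustration, and the sketch estimates effects only; a significance test such as ANOVA would still be needed before acting on them.

```python
from itertools import product
from statistics import mean

# Hypothetical factors suspected of driving failures.
factors = ["temperature", "humidity", "vibration"]

# Full 2^3 factorial design: every combination of low (-1) and high (+1) levels.
runs = list(product([-1, +1], repeat=len(factors)))

# Hypothetical failure counts observed in each run, in the same order as `runs`.
responses = [4, 5, 6, 7, 12, 13, 15, 18]


def main_effects(runs, responses, names):
    """Main effect per factor: mean response at +1 minus mean response at -1."""
    effects = {}
    for j, name in enumerate(names):
        high = [y for run, y in zip(runs, responses) if run[j] == +1]
        low = [y for run, y in zip(runs, responses) if run[j] == -1]
        effects[name] = mean(high) - mean(low)
    return effects


effects = main_effects(runs, responses, factors)

# Print factors from the largest to the smallest absolute effect.
for name, effect in sorted(effects.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:<12} main effect = {effect:+.2f}")
```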

Finally, I employ the Pareto principle (80/20 rule) to focus on the most critical causes. Often, a relatively small number of root causes contribute to a significant portion of the failures. Addressing these ‘vital few’ will yield the most significant improvement. This iterative process helps narrow down the possibilities and identify the most likely root cause(s), even in complex scenarios with multiple contributing factors.

Q 4. What are some common limitations of Root Cause Analysis methodologies?

Root Cause Analysis methodologies, while powerful, have limitations. One significant limitation is the inherent difficulty of proving causality – correlation does not equal causation. You might find strong correlations between two factors, but that doesn’t guarantee one caused the other. For example, a company might notice an increased failure rate in one city, but that doesn’t necessarily mean the location is the root cause. Another factor like humidity, improper installation or customer use could be the real culprit.

Another limitation is human bias. Investigators might unintentionally favor hypotheses that align with their preconceived notions or initial assumptions, leading to a confirmation bias. Furthermore, incomplete data can hamper the accuracy of RCA. Lack of access to historical data, limited test data, or insufficient investigation resources can lead to incomplete conclusions.

Finally, the complexity of modern systems makes isolating the root cause challenging. Interactions between multiple components and factors can mask the true root cause, creating a ‘black box’ effect that requires more advanced modelling and investigative techniques to unravel.

Q 5. Describe your experience with statistical analysis tools used in Failure Analysis.

My experience with statistical analysis tools in Failure Analysis is extensive. I routinely utilize tools like Minitab, JMP, and R for various statistical analyses. Specifically, I use descriptive statistics to summarize failure data (mean, median, standard deviation, etc.), and inferential statistics to test hypotheses and make predictions about failure rates and mechanisms.

For example, I’ve used control charts (Shewhart, CUSUM) to monitor process stability and detect shifts in failure rates. This helps identify patterns or trends that might indicate underlying issues. Regression analysis is regularly applied to examine the relationship between different factors (e.g., operating temperature and failure rate) to understand which factors have the greatest influence on failures.

Survival analysis techniques, like Kaplan-Meier estimation and Weibull analysis, help me model the lifetime distribution of components and predict failure probabilities. This allows us to determine when maintenance should be scheduled to minimize failure occurrences. Ultimately, my application of these tools enables data-driven decision-making which improves product reliability and safety.
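
To make the Kaplan-Meier idea concrete, here is a small standard-library sketch. It assumes right-censored data, where some units were still running when observation stopped. The function name `kaplan_meier` and the sample durations are hypothetical, and a statistics package would normally be used in practice.

```python
def kaplan_meier(durations, observed):
    """Kaplan-Meier survival estimate.

    durations: time on test for each unit.
    observed:  True if the unit failed at that time, False if right-censored.
    Returns a list of (time, survival_probability) at each distinct failure time.
    """
    data = sorted(zip(durations, observed))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        failures = 0
        removed = 0
        # Group all units (failed or censored) that share the same time.
        while i < len(data) and data[i][0] == t:
            failures += int(data[i][1])
            removed += 1
            i += 1
        if failures:
            survival *= 1.0 - failures / at_risk
            curve.append((t, survival))
        at_risk -= removed
    return curve


# Hypothetical operating hours; False marks units removed before failing.
durations = [100, 150, 150, 200, 250, 300]
observed = [True, True, False, True, False, True]

curve = kaplan_meier(durations, observed)
for t, s in curve:
    print(f"t = {t:>3} h  S(t) = {s:.3f}")
```

Censored units still count in the at-risk population up to the time they leave the study, which is why the estimate differs from a simple fraction of units failed.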

Q 6. How do you determine the significance of a failure mechanism?

Determining the significance of a failure mechanism involves a multi-faceted approach. Firstly, I assess the frequency of the failure mechanism. How often does this mechanism cause failure? This is often determined through historical data analysis, including failure rates and defect counts. Secondly, I evaluate the severity of the consequences when this mechanism causes failure. Does it lead to minor inconveniences, or catastrophic consequences? A simple scoring system or risk matrix can help quantify this.

Thirdly, I analyze the detectability of the mechanism. How easily can this problem be identified during manufacturing or in-service inspections? This involves a consideration of the available inspection methods and their effectiveness. Finally, I combine these factors – frequency, severity, and detectability – to assess the overall significance. A failure mechanism with high frequency, high severity, and low detectability is far more significant than one with low frequency, low severity, and high detectability. A weighted scoring system, incorporating these factors, can help prioritize failure mechanisms and guide corrective actions.
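
One common way to combine these three factors is an FMEA-style Risk Priority Number (RPN), the product of severity, occurrence, and detection ratings. The sketch below assumes 1-10 scales in which a higher detection rating means the problem is harder to detect. The mechanism names and ratings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FailureMechanism:
    name: str
    severity: int    # 1 (negligible) to 10 (catastrophic)
    occurrence: int  # 1 (rare) to 10 (frequent)
    detection: int   # 1 (almost always caught) to 10 (almost never caught)

    @property
    def rpn(self) -> int:
        """Risk Priority Number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


# Hypothetical mechanisms and ratings, for illustration only.
mechanisms = [
    FailureMechanism("solder joint fatigue", severity=7, occurrence=5, detection=6),
    FailureMechanism("connector corrosion", severity=4, occurrence=6, detection=3),
    FailureMechanism("seal wear-out", severity=8, occurrence=2, detection=8),
]

ranked = sorted(mechanisms, key=lambda m: m.rpn, reverse=True)
for m in ranked:
    print(f"{m.name:<22} RPN = {m.rpn}")
```

A plain product treats the three ratings as equally important. Many teams therefore also review any item with a very high severity rating on its own, regardless of its RPN.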

Q 7. Explain the concept of ‘Pareto Analysis’ and its application in RCA.

Pareto Analysis applies the Pareto principle, also known as the 80/20 rule, which states that roughly 80% of effects come from 20% of causes. The exact split varies from one dataset to the next; the point is that a small number of causes usually dominates. In RCA, this principle helps focus efforts on the most significant contributors to failures. Instead of investigating every potential cause equally, it directs resources toward the ‘vital few’ causes that have the largest impact.

To apply Pareto Analysis in RCA, you collect data on the different types of failures, their frequencies, and the associated costs or consequences. Then, organize this data in descending order of frequency or impact. A Pareto chart, a bar graph sorted from the largest category to the smallest with a line showing the cumulative percentage, is a helpful visualization tool. It clearly illustrates which failures account for the majority of problems. In a manufacturing setting, for example, a Pareto chart might reveal that 80% of production downtime stems from just 20% of the identified machine failures. Focusing corrective actions on those 20% will likely deliver the most significant improvements in productivity.
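
The tabulation behind a Pareto chart can be sketched in a few lines. The example assumes a hypothetical log with one entry per failure event; the cause names, counts, and the 80% threshold are illustrative choices.

```python
from collections import Counter

# Hypothetical downtime log: one entry per failure event (illustrative only).
failure_log = (
    ["bearing seizure"] * 42
    + ["belt slip"] * 31
    + ["sensor fault"] * 12
    + ["operator error"] * 8
    + ["power dip"] * 4
    + ["software hang"] * 3
)


def pareto_table(events):
    """Return (cause, count, cumulative_percent) rows sorted by descending count."""
    counts = Counter(events).most_common()
    total = sum(count for _, count in counts)
    table, running = [], 0
    for cause, count in counts:
        running += count
        table.append((cause, count, 100.0 * running / total))
    return table


def vital_few(table, threshold=80.0):
    """Smallest leading set of causes whose cumulative share reaches the threshold."""
    selected = []
    for cause, _, cumulative in table:
        selected.append(cause)
        if cumulative >= threshold:
            break
    return selected


table = pareto_table(failure_log)
for cause, count, cumulative in table:
    print(f"{cause:<16} {count:>3}  cumulative {cumulative:5.1f}%")
print("Vital few:", vital_few(table))
```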

Q 8. How do you differentiate between a systemic failure and a random failure?

The key difference between systemic and random failures lies in their predictability and underlying cause. A systemic failure is caused by a flaw inherent in the design, manufacturing process, or operating environment that affects multiple units. Think of it like a faulty blueprint – every house built from that blueprint will have the same structural weakness. These failures exhibit a pattern and can often be predicted statistically.

Conversely, a random failure is unpredictable and affects individual units due to chance occurrences. This could be a manufacturing defect affecting only one item in a large batch, or a sudden, unexpected external event causing damage. These failures are typically sporadic and don’t reveal a consistent underlying problem within the system itself.

Example: Imagine a batch of computer hard drives. A systemic failure might be a design flaw in the drive’s motor causing premature failure across many drives. A random failure could be a single drive being damaged due to a power surge affecting only that specific unit.

Q 9. Describe your experience with using Weibull analysis in reliability studies.

Weibull analysis is a powerful statistical tool I frequently use to model the failure rate of components or systems. It helps determine the underlying distribution of time-to-failure, allowing us to predict the reliability of a product over its lifespan. The analysis can reveal if failures are primarily due to early-life defects (infant mortality), random failures during the useful life, or wear-out failures towards the end of life.

In practice, I gather failure data, typically the time to failure for a number of units. This data is then plotted on a Weibull probability plot. The slope of the fitted line (the Weibull shape parameter, β) provides insights into the failure mechanism. A β < 1 indicates a decreasing failure rate (infant mortality), β ≈ 1 suggests a constant failure rate (random failures), and β > 1 signifies an increasing failure rate (wear-out). The characteristic life (η) is the time by which about 63.2% of units are expected to have failed, which makes it a convenient scale for the typical life of the population.

For instance, in analyzing the reliability of a specific type of pump, Weibull analysis helped identify a wear-out failure mode characterized by a high shape parameter and allowed us to predict the optimal time for preventative maintenance, ultimately reducing costly unscheduled downtime. The results allowed for strategic improvements in the pump design to increase its overall life.
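
The probability-plot fit described above can be approximated with median rank regression. This is a minimal sketch that assumes complete data (every unit failed, no censoring), uses Bernard's approximation for the median ranks, and regresses the transformed ranks on log time. The failure times are hypothetical, and dedicated reliability software would typically be used for real studies.

```python
import math


def weibull_mrr(failure_times):
    """Estimate Weibull shape (beta) and scale (eta) by median rank regression.

    Assumes complete data: every unit failed and there is no censoring.
    """
    times = sorted(failure_times)
    n = len(times)
    xs = [math.log(t) for t in times]
    # Bernard's approximation to the median rank of the i-th ordered failure.
    ranks = [(i - 0.3) / (n + 0.4) for i in range(1, n + 1)]
    # Linearised Weibull CDF: ln(-ln(1 - F)) = beta * ln(t) - beta * ln(eta).
    ys = [math.log(-math.log(1.0 - f)) for f in ranks]

    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)

    beta = sxy / sxx                   # slope of the fitted line
    intercept = y_bar - beta * x_bar   # equals -beta * ln(eta)
    eta = math.exp(-intercept / beta)
    return beta, eta


def reliability(t, beta, eta):
    """Probability that a unit survives beyond time t."""
    return math.exp(-((t / eta) ** beta))


# Hypothetical pump failure times in operating hours (illustrative only).
pump_hours = [1200, 1500, 1650, 1800, 1950, 2100, 2300, 2600]
beta, eta = weibull_mrr(pump_hours)
print(f"beta = {beta:.2f}, eta = {eta:.0f} h")
print(f"R(1000 h) = {reliability(1000, beta, eta):.3f}")
```

For this tightly clustered sample the fitted β comes out well above 1, which is the wear-out signature described above.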

Q 10. How do you handle situations where insufficient data is available for RCA?

Insufficient data is a common challenge in RCA. When facing this limitation, I employ several strategies. First, I prioritize the available data, focusing on the most relevant information, even if it’s limited. I use techniques like fault tree analysis (FTA) or fishbone diagrams, which can guide investigation even with incomplete datasets. This helps to systematically explore potential causes.

Secondly, I actively seek additional information. This could involve reviewing design specifications, manufacturing records, maintenance logs, or interviewing operators and maintenance personnel. I often use statistical techniques to estimate missing values. For example, if I have partial data on a component’s failure, I might use Bayesian inference to estimate the remaining parameters.
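
As one hedged illustration of the Bayesian idea, the sketch below updates a Beta prior on a component's failure probability with a small amount of field data. The prior (roughly "2 failures in 100", as might be drawn from similar components) and the observed counts are hypothetical assumptions, and the Beta-binomial model is only one of several reasonable choices.

```python
def beta_posterior(prior_alpha, prior_beta, failures, trials):
    """Conjugate Beta-binomial update for a failure probability."""
    return prior_alpha + failures, prior_beta + (trials - failures)


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Hypothetical prior belief, e.g. from experience with similar components.
prior = (2.0, 98.0)

# Hypothetical sparse field data: 1 failure observed in 12 units.
posterior = beta_posterior(*prior, failures=1, trials=12)

print(f"Prior mean failure probability:     {beta_mean(*prior):.4f}")
print(f"Posterior mean failure probability: {beta_mean(*posterior):.4f}")
```

With so few observations the posterior stays close to the prior, so the prior itself should be recorded as an explicit assumption in the report.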

Finally, I carefully document my assumptions and limitations. Transparency in reporting my findings is crucial, especially when data is limited. Acknowledging the uncertainties related to limited data strengthens the credibility of the investigation.

Q 11. Explain the 5 Whys technique and its limitations.

The 5 Whys is a simple yet effective iterative interrogative technique for exploring cause-and-effect relationships. It involves repeatedly asking “Why?” to drill down to the root cause of a problem. Each answer becomes the basis for the next question, leading to deeper understanding.

Example:

- Problem: The machine is not working.

- Why? Because the motor is not turning.

- Why? Because the power supply is faulty.

- Why? Because a wire is disconnected.

- Why? Because the wire was improperly installed during assembly.

The main limitation of the 5 Whys lies in its simplicity. It assumes a relatively straightforward, single cause-and-effect chain, so it can struggle with complex scenarios involving multiple interacting factors or underlying systemic issues. It provides no structured way to handle branching causes, and the outcome is subjective: different investigators can arrive at different answers. The number five is also only a rule of thumb; the example above reaches an actionable cause after four questions, and other problems may need more. More sophisticated techniques are often needed for intricate problems.

Q 12. How do you present your findings from a Failure Analysis investigation to a non-technical audience?

Presenting complex failure analysis findings to a non-technical audience requires careful planning and clear communication. I avoid technical jargon and instead use clear, concise language, aided by visuals like flowcharts, diagrams, and photos. I structure my presentation with a clear narrative: describing the problem, the investigation process, and the key findings. The root cause, and its implications, should be highlighted clearly.

Analogies and real-world examples can help the audience grasp complex concepts. For example, instead of describing fatigue fracture mechanics, I might use the analogy of repeatedly bending a paper clip until it breaks. I also focus on the impact of the failure, conveying its implications in terms of cost, safety, or operational disruption. This helps the audience understand the significance of the findings and the importance of corrective actions.

Finally, I ensure the presentation is interactive, allowing ample time for questions and discussion. This helps to address any misunderstandings and reinforce understanding. Summarizing key findings and recommended actions ensures the audience leaves with actionable information.

Q 13. Describe your experience with different types of failure modes (e.g., fatigue, creep, corrosion).

My experience encompasses a wide range of failure modes. Fatigue is a common culprit, where repeated cyclic loading leads to crack initiation and propagation, ultimately causing failure. I’ve worked on cases involving fatigue failures in aircraft components, where microscopic crack analysis and fractography were crucial in determining the root cause.

Creep, the time-dependent deformation under sustained stress at high temperatures, is another significant area of my expertise. I’ve investigated creep failures in turbine blades, leveraging material characterization techniques and finite element analysis to model the deformation and predict failure times.

Corrosion, both uniform and localized, is a frequent failure mode. I’ve analyzed corrosion damage in pipelines and offshore structures, relying on techniques like electrochemical testing and microscopy to determine the corrosive environment and the mechanisms of attack. Each type of failure requires specialized analytical techniques and a thorough understanding of the materials and operating conditions involved.

Q 14. What is the importance of documentation in Failure Analysis and Root Cause Analysis?

Documentation is paramount in Failure Analysis and RCA. It provides a complete record of the investigation, ensuring traceability, reproducibility, and accountability. A well-maintained record enables efficient communication among team members, avoids repeating mistakes, and supports decision-making. It’s also vital for legal and insurance purposes.

My documentation approach usually includes detailed descriptions of the failed component, the failure event, initial observations, methodology, test results, and conclusions. Photographs, videos, schematics, and data tables are crucial. I use a standardized format to ensure consistency and facilitate efficient retrieval of information. This approach aids future investigations by providing valuable insights and preventing recurrence of similar problems.

Furthermore, thorough documentation strengthens the integrity and credibility of the investigation. It ensures that the findings are well-supported and that the root cause has been properly identified. This is essential for building trust among stakeholders and implementing effective corrective actions.

Q 15. How do you prioritize different potential root causes?

Prioritizing potential root causes is crucial for efficient Failure Analysis. We don’t chase every lead; we focus on the most likely culprits first. I use a combination of methods, including risk assessment and Pareto analysis. Risk assessment considers both the likelihood of a cause and its potential impact. For example, a frequent minor defect with minimal consequences might be ranked lower than a rarer critical defect that could cause a major failure. Pareto analysis, often visualized as a Pareto chart, helps identify the ‘vital few’ causes contributing to the majority of problems. By focusing on these high-impact causes, we maximize our efforts and achieve faster resolution.

In practice, I might build a prioritization matrix, scoring each potential root cause on likelihood and impact (e.g., 1-5 for each). A high score indicates higher priority. I also consider factors such as ease of investigation and the availability of resources. This structured approach ensures that we tackle the most significant issues first, minimizing downtime and maximizing the impact of our analysis.
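
A prioritization matrix of this kind might look like the sketch below. It assumes 1-5 scores, ranks by likelihood multiplied by impact, and uses ease of investigation only as a tie-breaker. The candidate causes and their scores are hypothetical.

```python
# Hypothetical candidates: (cause, likelihood 1-5, impact 1-5, ease of investigation 1-5).
candidates = [
    ("worn spindle bearing", 4, 4, 3),
    ("coolant contamination", 3, 5, 4),
    ("operator setup error", 2, 3, 5),
    ("firmware timing bug", 3, 5, 2),
]


def priority_key(row):
    """Rank by likelihood x impact; break ties in favour of easier investigations."""
    _, likelihood, impact, ease = row
    return (likelihood * impact, ease)


ranked = sorted(candidates, key=priority_key, reverse=True)
for cause, likelihood, impact, ease in ranked:
    print(f"{cause:<22} score = {likelihood * impact:>2}  ease = {ease}")
```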

Q 16. How do you validate the effectiveness of corrective actions implemented after RCA?

Validating corrective actions is essential to ensure that the root cause has indeed been addressed and the problem won’t recur. This involves a multi-step process. Firstly, we define measurable Key Performance Indicators (KPIs) related to the failure mode. For example, if the RCA pointed to a faulty component causing frequent equipment downtime, a relevant KPI might be ‘downtime per month’.

Secondly, we establish a baseline for these KPIs before implementing the corrective actions. After implementing the changes, we monitor these KPIs closely over a predetermined period, comparing the post-implementation data to the baseline. Statistical methods help separate a real improvement from normal variation: control charts show whether the process has shifted, and hypothesis tests indicate whether the difference is statistically significant. If the KPIs show a sustained improvement, it validates the effectiveness of the corrective actions.

Thirdly, we document the entire validation process, including the chosen KPIs, baseline data, post-implementation data, statistical analysis and conclusions. This documentation is critical for continuous improvement and auditing purposes.

For instance, if our corrective action involved replacing a specific component, we would track the failure rate of the new component over several months. If the failure rate remains significantly lower than the baseline, we have strong evidence that the corrective action was successful. However, if the issue persists, it signals that either the wrong root cause was identified, or that the corrective action was not fully effective, requiring further investigation.
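
The baseline-versus-post-implementation comparison can be sketched with an individuals (X) control chart. The example assumes monthly downtime as the KPI, estimates sigma from the average moving range divided by the constant d2 = 1.128, and uses hypothetical downtime figures. It is a screening check, not a full statistical validation.

```python
from statistics import mean


def individuals_limits(baseline):
    """Lower limit, center line, and upper limit for an individuals (X) chart.

    Sigma is estimated as the average moving range divided by d2 = 1.128,
    the standard constant for moving ranges of two consecutive points.
    """
    center = mean(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma = mean(moving_ranges) / 1.128
    return center - 3 * sigma, center, center + 3 * sigma


# Hypothetical downtime in hours per month (illustrative only).
baseline_downtime = [14.0, 16.5, 15.2, 13.8, 17.1, 15.9, 14.6, 16.2]
post_fix_downtime = [9.1, 8.4, 10.2, 8.9]

lcl, center, ucl = individuals_limits(baseline_downtime)
below_lcl = [x for x in post_fix_downtime if x < lcl]

print(f"Baseline center = {center:.2f} h, LCL = {lcl:.2f} h, UCL = {ucl:.2f} h")
print(f"{len(below_lcl)} of {len(post_fix_downtime)} post-fix months fall below the LCL")
```

Points that fall consistently below the baseline lower control limit suggest the process has shifted. New limits would then be calculated from the post-fix data to keep monitoring for recurrence.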

Q 17. Describe your experience with using Fishbone diagrams in RCA.

Fishbone diagrams, also known as Ishikawa diagrams or cause-and-effect diagrams, are a powerful visual tool for brainstorming potential root causes in a structured way. They’re particularly useful in group settings, fostering collaboration and diverse perspectives. I’ve extensively used them across various projects, from analyzing manufacturing defects to investigating software crashes.

The diagram’s structure, resembling a fishbone, helps categorize causes into key areas (main bones) like manpower, machinery, materials, methods, measurements, and environment. Sub-causes branch out from these main categories. For example, if a manufacturing process is yielding defective products, the ‘machinery’ bone might have sub-causes like ‘worn-out tooling,’ ‘incorrect machine settings,’ or ‘inadequate maintenance’.

During a recent investigation into recurring software bugs, we used a Fishbone diagram. Each team member contributed to identifying potential causes under categories such as ‘code design,’ ‘testing procedures,’ and ‘deployment process’. This collaborative approach led to the identification of a previously overlooked coding error in a crucial module as the root cause of the recurring bugs.

Q 18. What are some common pitfalls to avoid in conducting a root cause analysis?

Several pitfalls can derail a root cause analysis, leading to ineffective solutions and repeated failures. One common mistake is jumping to conclusions prematurely. This is often reinforced by confirmation bias: focusing only on evidence that supports a preconceived notion and ignoring contradictory information. Another pitfall is focusing solely on symptoms rather than digging deeper to uncover the underlying causes. Treating symptoms without addressing the root cause is like putting a band-aid on a broken bone: it provides temporary relief but doesn’t address the actual problem.

Another issue is neglecting human factors. Often, procedural errors, inadequate training, or lack of communication contribute significantly to failures. Failing to consider these aspects can result in incomplete analysis. Finally, a lack of data or insufficient investigation can also lead to inaccurate conclusions. It’s crucial to gather comprehensive data from multiple sources and perform thorough investigations before drawing conclusions.

To avoid these pitfalls, it’s essential to maintain a structured approach, apply critical thinking, and involve a diverse team with different perspectives. A thorough review of the findings by independent experts can also help identify potential biases and errors.

Q 19. How do you ensure the objectivity and impartiality of your Failure Analysis investigations?

Objectivity and impartiality are paramount in Failure Analysis. To ensure this, I follow several key principles. First, I establish a clear scope and methodology before starting the investigation. This documented approach ensures a systematic investigation and minimizes biases. Second, I collect data from multiple independent sources, to avoid relying solely on a single perspective or potentially biased information. Third, I meticulously document all findings, including data, analysis, and conclusions, enabling others to review and validate the results. Transparency and traceability are key.

Furthermore, I actively seek diverse opinions and perspectives, including those who might have initially contributed to the issue. Involving individuals from different departments or levels of expertise helps uncover blind spots and biases. I also utilize statistical methods and data analysis techniques to minimize subjective interpretation. Finally, where appropriate, I involve independent external experts to ensure impartiality and bolster credibility.

For example, during an investigation into a manufacturing process failure, I ensured that data was collected from multiple sources—machine logs, operator reports, and quality control records—and analyzed independently by multiple engineers. This approach ensures that the results are not influenced by individual biases.

Q 20. Describe a situation where you had to deal with conflicting data or expert opinions during an RCA.

In one instance, I investigated a series of unexpected shutdowns in a critical manufacturing facility. Initially, the maintenance team suspected a problem with a specific piece of equipment, while the process engineers pointed towards a flaw in the control system software. The data initially seemed to support both arguments—there were instances of equipment malfunction and also instances of software-related errors around the same time. The conflicting data initially created confusion.

To resolve this conflict, I employed a multi-pronged strategy. Firstly, I extended the data collection period to gather more comprehensive data. Secondly, I worked closely with both the maintenance and process engineering teams to understand their individual perspectives and the rationale behind their conclusions. Finally, I used statistical analysis to identify correlations between the identified failure modes and the different potential causes, examining when each appeared and what other operational parameters were active at those times. This process revealed a subtle interaction between a specific equipment parameter and the control software that caused the shutdowns under certain operational conditions.

This case highlights the importance of detailed data collection, interdisciplinary collaboration, and robust data analysis in resolving conflicting information during an RCA. The seemingly contradictory evidence, when carefully investigated, led to a complete understanding of the root cause, preventing future occurrences.

Q 21. How familiar are you with different standards and regulations related to Failure Analysis (e.g., ISO 9001)?

I’m very familiar with various standards and regulations related to Failure Analysis, including ISO 9001, ISO 14001, and industry-specific standards. Understanding these standards is fundamental to conducting thorough and compliant investigations. ISO 9001, for example, emphasizes the importance of a robust quality management system, including processes for identifying, analyzing, and preventing nonconformities, which is directly relevant to failure analysis. Its requirements for nonconformity and corrective action, together with the risk-based thinking that replaced the separate preventive action clause in the 2015 revision, are directly related to my work on RCA and validation of corrective actions.

My experience includes working with aerospace standards (e.g., AS9100) which mandate rigorous failure analysis procedures, especially for safety-critical systems. These standards dictate detailed documentation, traceability, and the use of approved methods for analysis. I’m also conversant with regulatory requirements for specific industries, such as those relating to medical devices or automotive safety, which often have strict guidelines for reporting and investigations of failures. This knowledge is critical in ensuring that our analysis meets both regulatory requirements and industry best practices.

In essence, my understanding of these standards informs my approach, ensuring a systematic and compliant process for all Failure Analysis investigations.

Q 22. Explain the role of preventive maintenance in reducing failures.

Preventive maintenance is proactive; it aims to prevent failures before they occur, rather than reacting to them after the fact. Think of it like regular check-ups for your car – you change the oil, rotate the tires, and inspect various components to avoid a major breakdown down the road. In the context of manufacturing or engineering systems, this involves scheduled inspections, lubrication, cleaning, and part replacements based on predicted wear and tear, or even predetermined intervals.

By implementing a robust preventive maintenance program, you significantly reduce the likelihood of unexpected equipment failures, leading to less downtime, lower repair costs, and increased overall system lifespan. For example, in a chemical plant, regularly inspecting pressure vessels for corrosion prevents catastrophic failures that could result in significant environmental damage and financial losses. The cost of preventive maintenance is typically far lower than the cost of repairing catastrophic failures or dealing with unplanned downtime.

- Reduced Downtime: Fewer unexpected failures mean less time spent on repairs and more time producing.

- Lower Repair Costs: Addressing minor issues before they escalate drastically reduces the expense of major repairs.

- Improved Safety: By catching potential problems early, preventive maintenance mitigates safety hazards associated with equipment failure.

- Increased Equipment Lifespan: Regular maintenance extends the useful life of equipment, delaying the need for expensive replacements.

Q 23. Describe your experience with design for reliability (DFR).

Design for Reliability (DFR) is a crucial aspect of my work. It’s about integrating reliability considerations into the design process from the very beginning, rather than addressing them as an afterthought. My experience with DFR spans several projects, including the development of a new high-speed data acquisition system. We employed several key DFR principles including:

- Failure Mode and Effects Analysis (FMEA): We systematically identified potential failure modes for each component, assessed their severity, probability of occurrence, and detectability, helping prioritize design improvements.

- Reliability Modeling and Prediction: Using software such as Weibull++, we modeled the reliability of the system, predicting its lifetime and expected failure rate under various operating conditions. This helped optimize component selection and system architecture for enhanced reliability.

- Stress-Strength Analysis: We analyzed the stress imposed on components during operation and compared them to the component’s strength to identify potential failure points. This facilitated changes in material selection and design to enhance robustness.

In this particular project, the proactive application of DFR resulted in a system with a significantly higher Mean Time Between Failures (MTBF) compared to our initial projections, minimizing warranty claims and enhancing customer satisfaction.
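
The stress-strength comparison mentioned above is often quantified as an interference calculation. The sketch below assumes stress and strength are independent and normally distributed, in which case reliability is the probability that the safety margin (strength minus stress) is positive. The MPa figures are hypothetical.

```python
from statistics import NormalDist


def interference_reliability(strength_mean, strength_sd, stress_mean, stress_sd):
    """P(strength > stress) for independent, normally distributed stress and strength."""
    margin_mean = strength_mean - stress_mean
    margin_sd = (strength_sd ** 2 + stress_sd ** 2) ** 0.5
    return NormalDist().cdf(margin_mean / margin_sd)


# Hypothetical component: strength 500 +/- 30 MPa, applied stress 380 +/- 40 MPa.
r = interference_reliability(500.0, 30.0, 380.0, 40.0)
print(f"Estimated reliability = {r:.4f}")
```

Under these assumptions, reducing the scatter in either distribution improves reliability just as raising the mean strength does. That is one reason tighter process control can count as a design-for-reliability measure.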

Q 24. How do you use Failure Analysis data to inform future product design and development?

Failure Analysis data is a goldmine for improving future designs. After conducting a thorough failure analysis and identifying the root cause, I use that information to inform several aspects of the product development cycle.

- Design Modifications: For example, if a failure analysis reveals that a component is prone to fatigue under specific operating conditions, we can modify the design to reduce stress on that component, perhaps by using a stronger material, improving the geometry, or changing the operating parameters.

- Material Selection: If a material’s properties were identified as a major contributing factor, we can select a more suitable material with improved strength, corrosion resistance, or temperature tolerance.

- Manufacturing Process Improvements: Failure analyses may reveal flaws in the manufacturing process, such as inconsistencies in welding, inadequate heat treatment, or improper assembly techniques. This data drives improvements in manufacturing protocols to enhance product quality and consistency.

- Testing and Validation: The findings from the failure analysis inform the development of more rigorous testing and validation protocols to identify and eliminate similar failures in future products. We might add new tests to our validation plan to specifically target the identified failure mechanism.

Essentially, we use the lessons learned from failures to improve the reliability and robustness of future products, preventing similar failures and increasing customer satisfaction.

Q 25. What software tools are you proficient in for Failure Analysis and RCA (e.g., Minitab, JMP)?

I’m proficient in several software tools used for Failure Analysis and Root Cause Analysis. My expertise includes:

- Minitab: I use Minitab extensively for statistical analysis of failure data. This includes performing Weibull analysis to model failure rates, capability analysis to assess process performance, and various statistical tests to determine significant factors contributing to failures.

- JMP: JMP is another powerful statistical software package I use for analyzing complex datasets, visualizing relationships between variables, and building predictive models to anticipate future failures.

- MATLAB: I use MATLAB for more advanced simulations and modeling, particularly for analyzing complex systems and predicting their behavior under various stress conditions.

- Specialized FEA software: Depending on the nature of the failure, I might also use Finite Element Analysis (FEA) software like ANSYS or Abaqus to simulate the stress and strain on components and identify potential weak points in the design.
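To show what a Weibull analysis does under the hood, here is a minimal sketch of median rank regression using only the Python standard library. It assumes complete failure data with no censoring, and it is not a description of how Minitab or JMP implement their fits. The function name and the sample failure times are hypothetical.

```python
from math import exp, log


def fit_weibull(failure_times: list[float]) -> tuple[float, float]:
    """Estimate Weibull shape (beta) and scale (eta) by median rank regression.

    Assumes complete (uncensored) failure times. Uses Bernard's
    approximation for median ranks and a least-squares line through
    (ln t, ln(-ln(1 - F))).
    """
    times = sorted(failure_times)
    n = len(times)
    xs, ys = [], []
    for i, t in enumerate(times, start=1):
        median_rank = (i - 0.3) / (n + 0.4)  # Bernard's approximation
        xs.append(log(t))
        ys.append(log(-log(1.0 - median_rank)))

    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))

    beta = sxy / sxx                    # slope is the shape parameter
    intercept = mean_y - beta * mean_x  # intercept equals -beta * ln(eta)
    eta = exp(-intercept / beta)
    return beta, eta


# Hypothetical failure times in hours, for illustration only.
beta, eta = fit_weibull([410.0, 620.0, 790.0, 950.0, 1130.0, 1400.0])
print(f"shape beta = {beta:.2f}, scale eta = {eta:.0f} h")
```

A shape parameter below 1 suggests early-life failures, a value near 1 suggests a roughly constant failure rate, and a value above 1 suggests wear-out, which is usually the first thing to read off such a fit.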

Q 26. How do you balance the cost of investigation with the potential consequences of failure?

Balancing the cost of investigation with the potential consequences of failure is a critical aspect of risk management. Investigating every single failure can be prohibitively expensive, but ignoring potential issues can lead to far greater costs down the line. My approach is a risk-based assessment. I consider:

- Severity of potential failure: A failure that could result in significant injury or environmental damage demands a more thorough and expensive investigation than a minor inconvenience.

- Probability of recurrence: If a failure is likely to happen again, the cost of a thorough investigation is justified to prevent future problems.

- Cost of corrective action: The cost of implementing corrective measures must also be considered. If a simple fix can address a failure, it may not necessitate an extensive investigation.

- Available resources: The budget and available expertise influence the scope of the investigation.

In practice, this might involve employing a tiered approach: a quick initial assessment to determine the urgency of investigation, followed by a more thorough investigation only if warranted by the risks involved. A cost-benefit analysis is key to making informed decisions.
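The tiered approach can be sketched as a simple decision rule that compares the expected loss from recurrence against the cost of each investigation tier. The thresholds, tier names, and the safety override below are illustrative assumptions, not an established standard. A real program would set them with management and safety stakeholders.

```python
def investigation_tier(failure_cost: float, recurrence_probability: float,
                       quick_cost: float, full_cost: float,
                       safety_critical: bool = False) -> str:
    """Pick an investigation tier from a rough cost-benefit comparison.

    expected_loss = failure_cost * recurrence_probability. All inputs
    are estimates, and the tier names are hypothetical.
    """
    if safety_critical:
        # Potential injury or environmental damage overrides cost arguments.
        return "full investigation"

    expected_loss = failure_cost * recurrence_probability
    if expected_loss < quick_cost:
        return "log only"
    if expected_loss < full_cost:
        return "quick assessment"
    return "full investigation"


# Hypothetical cost figures for illustration only.
print(investigation_tier(200, 0.1, quick_cost=500, full_cost=20_000))
print(investigation_tier(50_000, 0.2, quick_cost=500, full_cost=20_000))
print(investigation_tier(1_000_000, 0.3, quick_cost=500, full_cost=20_000))
```

The point of the sketch is the structure of the argument: the investigation budget should scale with the expected loss, with safety-related failures treated as a separate category.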

Q 27. Explain your experience with Failure Reporting, Analysis and Corrective Action Systems (FRACAS).

I have extensive experience with Failure Reporting, Analysis, and Corrective Action Systems (FRACAS). FRACAS is a structured approach to managing failures, from initial reporting to implementing corrective actions and verifying their effectiveness. In my previous role, I was instrumental in implementing and managing a company-wide FRACAS. My responsibilities included:

- Developing and maintaining the FRACAS database: Ensuring accurate and timely recording of all failures, including descriptions, contributing factors, and corrective actions.

- Conducting failure analyses: Determining the root cause of failures using various techniques like fault tree analysis (FTA) and fishbone diagrams.

- Implementing and verifying corrective actions: Ensuring the effectiveness of corrective actions through follow-up analysis and monitoring.

- Generating reports and presenting findings: Communicating findings to management and stakeholders to inform design improvements and process optimization.

- Training personnel: Educating employees on the proper procedures for reporting failures and contributing to the FRACAS process.

Implementing a robust FRACAS drastically reduced the recurrence of failures and improved overall product reliability.
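The closed-loop nature of FRACAS (report, analyze, correct, verify) can be sketched as a record that only moves forward through those stages in order. The class, field names, and status values below are hypothetical and do not reflect any particular FRACAS product or the system described above.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    REPORTED = "reported"
    ANALYZED = "analyzed"
    ACTION_IMPLEMENTED = "action implemented"
    VERIFIED = "verified"


@dataclass
class FailureReport:
    report_id: str
    description: str
    root_cause: str = ""
    corrective_action: str = ""
    status: Status = Status.REPORTED

    def _advance(self, expected: Status, new: Status) -> None:
        """Move to the next stage, rejecting out-of-order transitions."""
        if self.status is not expected:
            raise ValueError(
                f"{self.report_id}: expected status '{expected.value}', "
                f"got '{self.status.value}'"
            )
        self.status = new

    def record_analysis(self, root_cause: str) -> None:
        self._advance(Status.REPORTED, Status.ANALYZED)
        self.root_cause = root_cause

    def implement_action(self, corrective_action: str) -> None:
        self._advance(Status.ANALYZED, Status.ACTION_IMPLEMENTED)
        self.corrective_action = corrective_action

    def verify(self) -> None:
        self._advance(Status.ACTION_IMPLEMENTED, Status.VERIFIED)


# Hypothetical report for illustration only.
report = FailureReport("FR-001", "Intermittent reset of control board")
report.record_analysis("Electrical noise coupling into control circuitry")
report.implement_action("Add shielding and input filtering")
report.verify()
print(report.status.value)
```

Enforcing the order in code mirrors the discipline the process is meant to provide: a report cannot be closed out until a root cause is recorded and the corrective action has been checked.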

Q 28. Describe a time you failed in a Failure Analysis investigation, and what you learned from it.

In one instance, I investigated a recurring failure in a complex electromechanical system. Initially, I focused on the mechanical aspects, overlooking the subtle influence of electrical noise. I spent considerable time and resources meticulously analyzing the mechanical components, performing extensive simulations and even replacing several parts. Despite all efforts, the failure persisted.

It was only after a team member suggested considering the electrical system more closely that we discovered the root cause: electrical noise was causing intermittent malfunctions in the control circuitry. This oversight taught me the importance of considering all potential failure modes, even those outside my initial area of focus. I learned to adopt a more holistic and less biased approach, leveraging teamwork and cross-functional collaboration to ensure thorough investigations. Now, I always make a point to involve individuals with diverse expertise early in the investigation process, to broaden the perspective and reduce the chance of overlooking a critical aspect.

Key Topics to Learn for Failure Analysis and Root Cause Analysis Interview

- Understanding Failure Modes: Learn to identify and categorize different types of failures (e.g., mechanical, electrical, chemical). This includes recognizing the signs and symptoms of various failure mechanisms.

- Data Collection and Analysis Techniques: Master methods for gathering relevant data (visual inspection, testing, data logging) and analyzing it effectively using statistical tools and techniques. Practical application: Analyzing sensor data from a malfunctioning system to pinpoint the root cause.

- Root Cause Analysis Methodologies: Become proficient in various RCA methodologies such as 5 Whys, Fishbone diagrams, Fault Tree Analysis (FTA), and Failure Mode and Effects Analysis (FMEA). Understand their strengths and weaknesses and when to apply each.

- Problem-Solving Frameworks: Familiarize yourself with structured problem-solving approaches like the DMAIC (Define, Measure, Analyze, Improve, Control) methodology. This ensures a systematic and thorough investigation.

- Reporting and Communication: Practice clearly and concisely communicating your findings, including technical details and recommendations, to both technical and non-technical audiences. This includes creating effective presentations and written reports.

- Preventive Measures and Corrective Actions: Learn to develop and implement effective preventive and corrective actions to mitigate future failures and improve overall system reliability. Understand the importance of verification and validation.

- Specific Industry Knowledge (if applicable): Tailor your preparation to the specific industry you’re targeting (e.g., automotive, aerospace, manufacturing). Research common failure modes and analysis techniques relevant to that sector.
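As a study aid for the Fault Tree Analysis topic listed above, the following sketch computes a top-event probability from AND and OR gates. It assumes all basic events are independent, which real systems often violate through common-cause failures. The tree structure and probabilities are hypothetical.

```python
from math import prod


def and_gate(probabilities: list[float]) -> float:
    """Output event occurs only if every input event occurs."""
    return prod(probabilities)


def or_gate(probabilities: list[float]) -> float:
    """Output event occurs if at least one input event occurs."""
    return 1.0 - prod(1.0 - p for p in probabilities)


# Hypothetical tree: the system fails if the pump fails, OR if both the
# primary sensor AND the backup sensor fail.
p_pump = 0.01
p_primary_sensor = 0.05
p_backup_sensor = 0.05

p_both_sensors = and_gate([p_primary_sensor, p_backup_sensor])
p_top = or_gate([p_pump, p_both_sensors])
print(f"Top-event probability: {p_top:.6f}")
```

Working a small tree like this by hand is good preparation for explaining how redundancy (the AND gate) lowers risk while single points of failure (inputs to an OR gate) dominate it.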

Next Steps

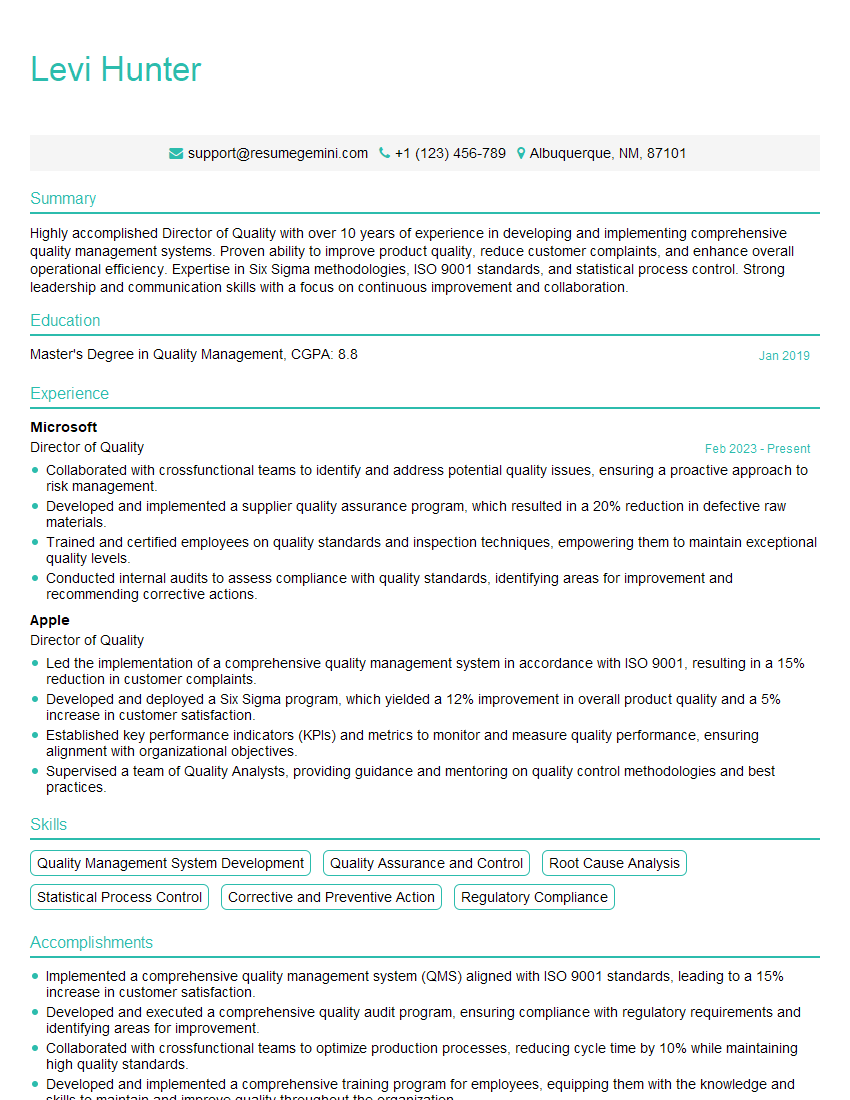

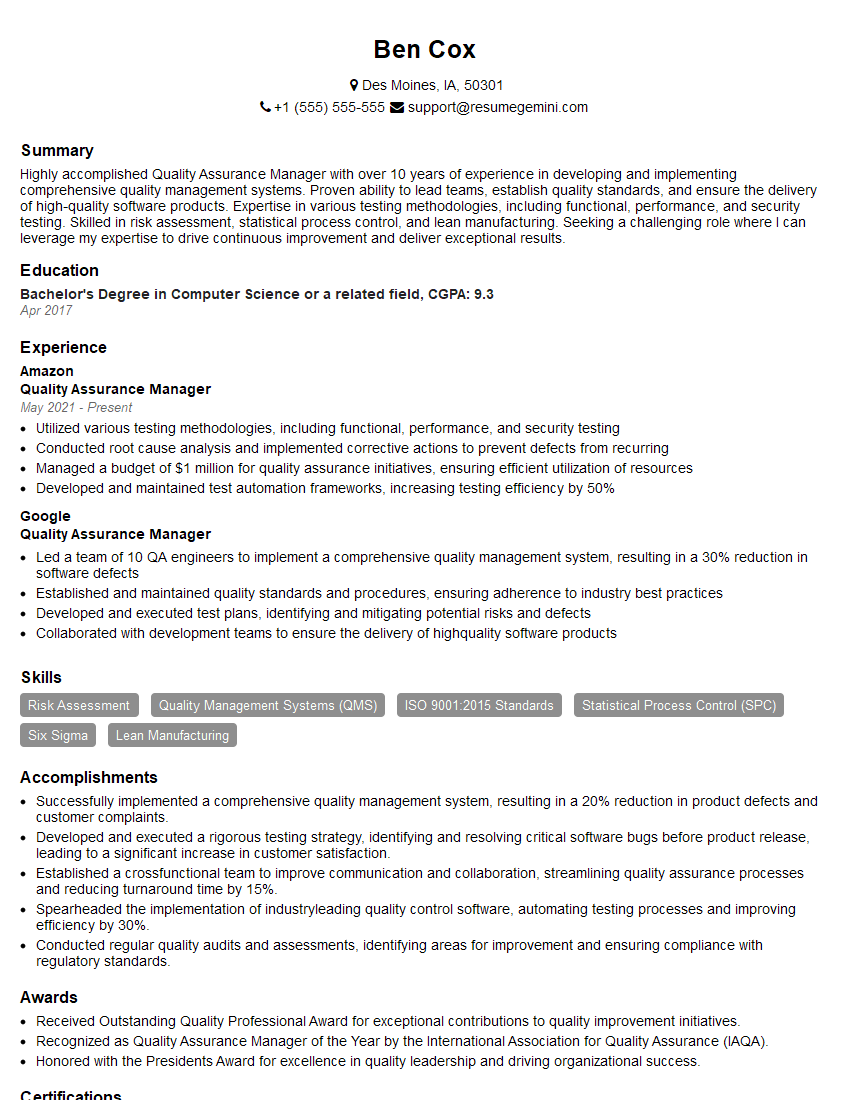

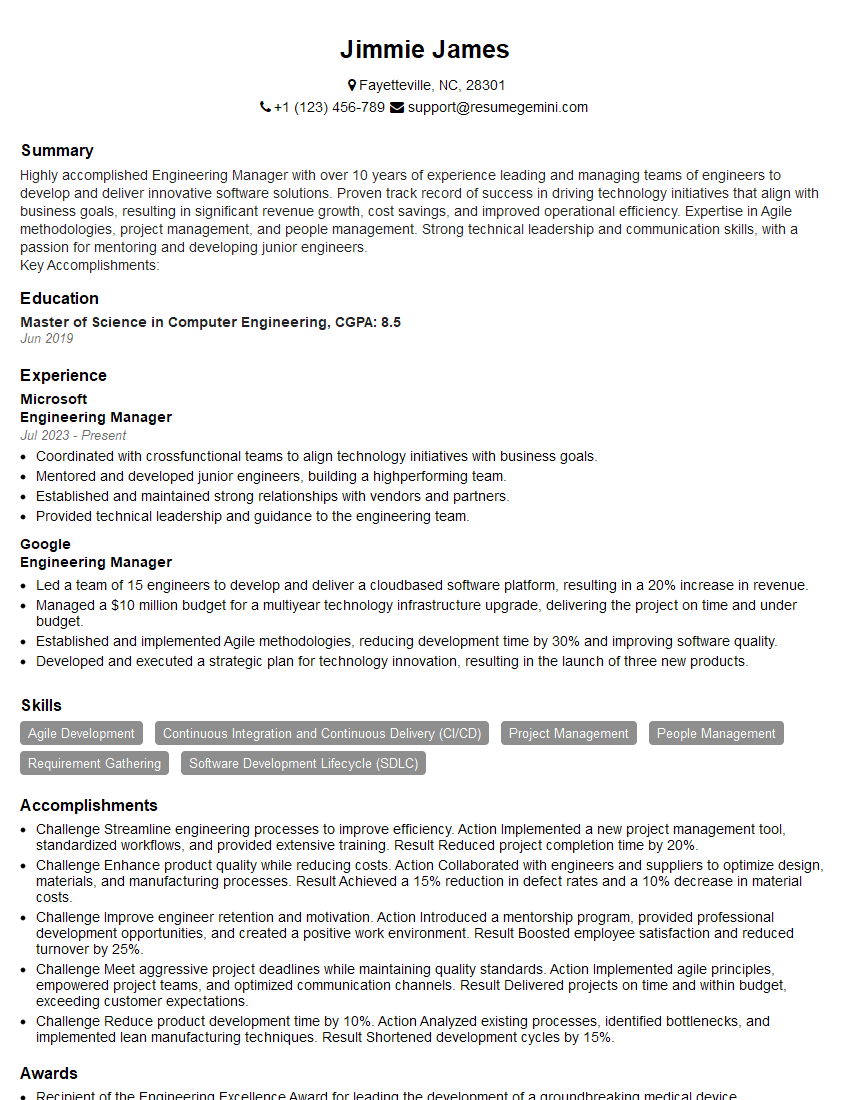

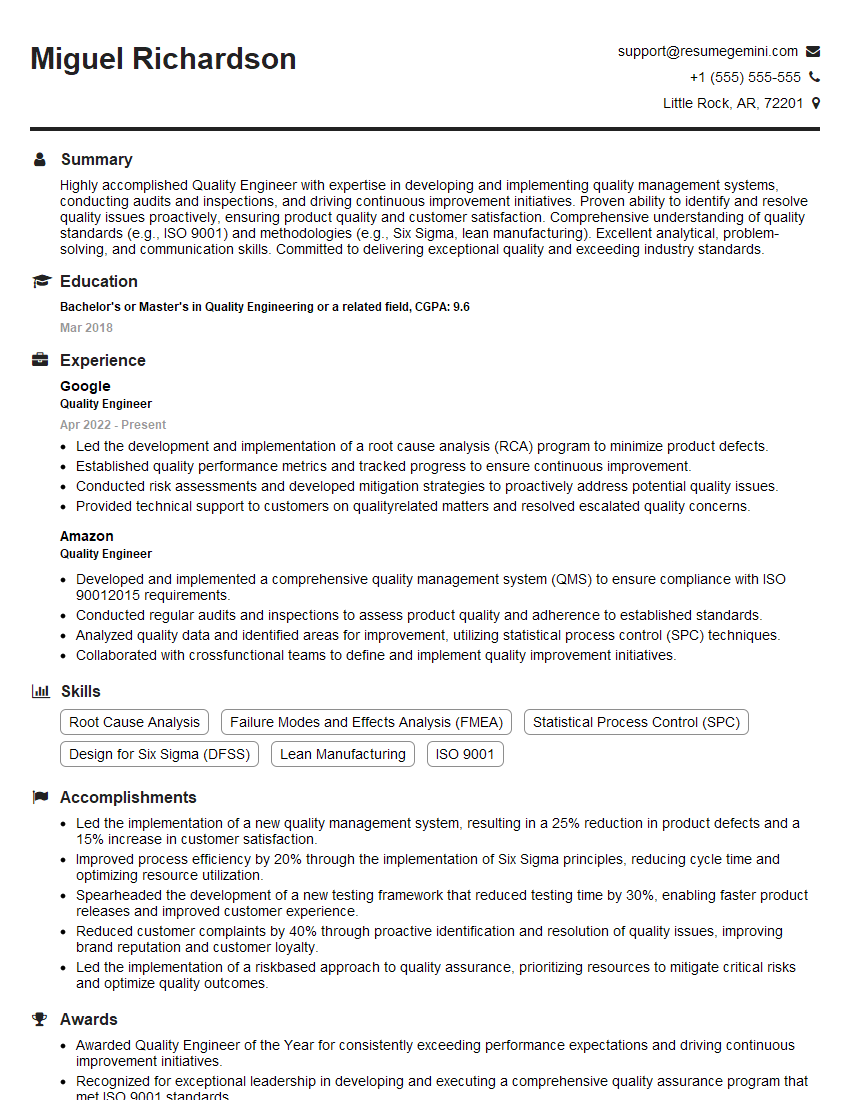

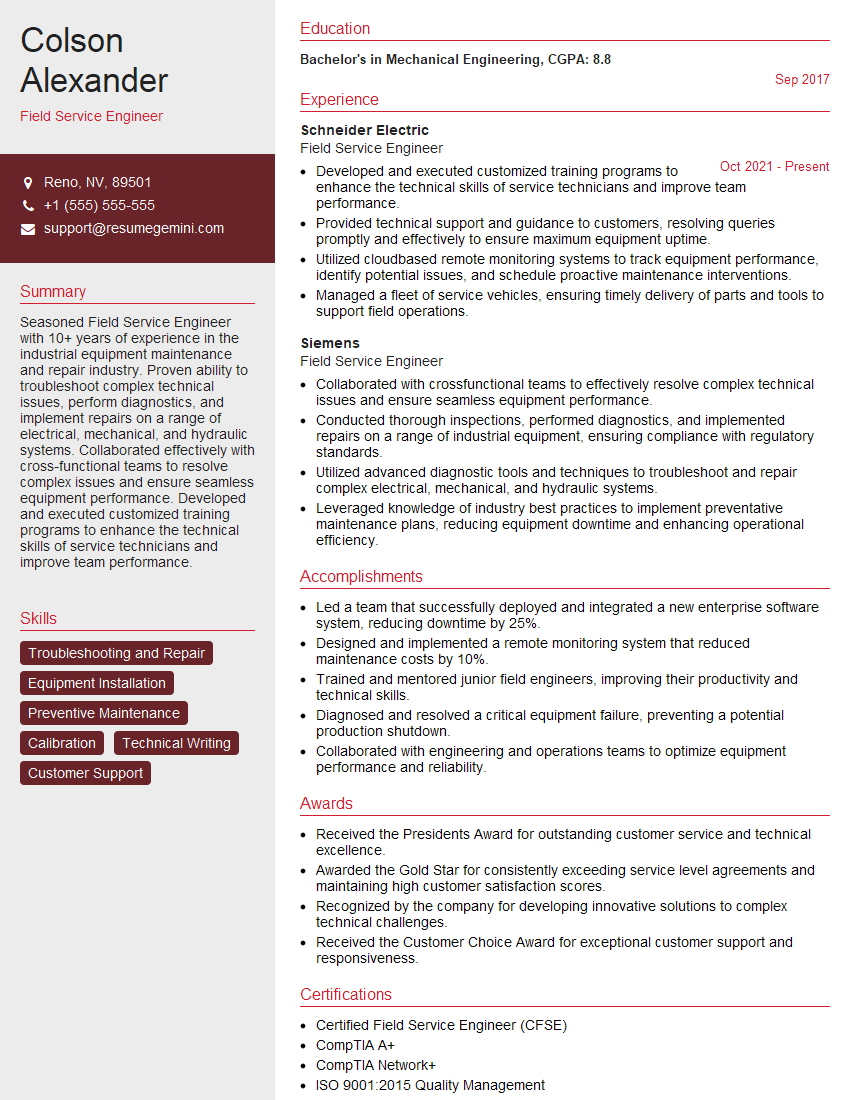

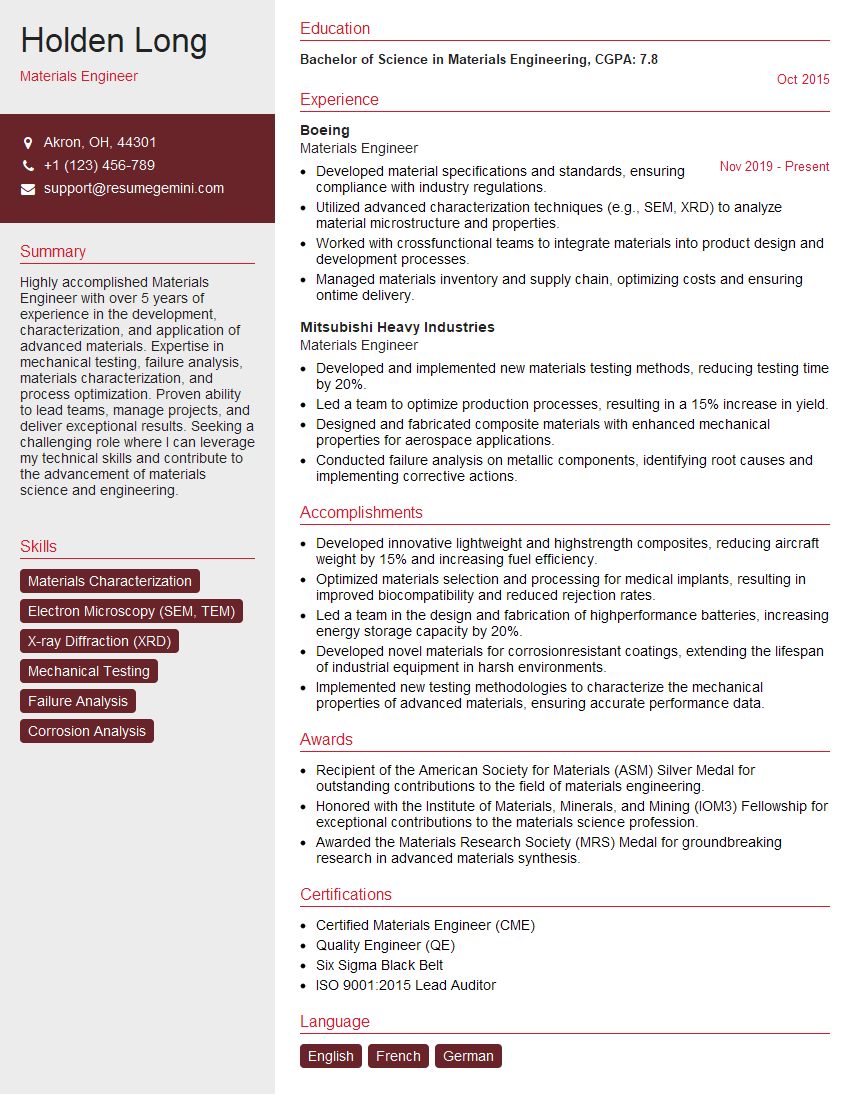

Mastering Failure Analysis and Root Cause Analysis is crucial for career advancement in numerous engineering and technical fields. It demonstrates valuable problem-solving skills and a commitment to quality and reliability. To significantly boost your job prospects, create a strong, ATS-friendly resume. ResumeGemini can help you build a professional and impactful resume that highlights your skills and experience effectively. We provide examples of resumes tailored to Failure Analysis and Root Cause Analysis roles to guide you through the process. Invest in your future: build a resume that gets noticed.
