The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Fair and Impartial Grading interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Fair and Impartial Grading Interview

Q 1. Describe your experience developing or applying a grading rubric.

Developing and applying grading rubrics is crucial for ensuring fair and consistent assessment. A rubric provides a structured framework that outlines specific criteria and performance levels for a given assignment. My experience includes developing rubrics for various assessment types, from essay writing to complex research projects. For example, when creating a rubric for a student presentation, I would define criteria like content accuracy, organization, visual aids, and delivery style, each with clearly defined levels of proficiency (e.g., Excellent, Good, Fair, Poor). Each level would include specific descriptors to avoid ambiguity. This ensures that all students are evaluated against the same standards, promoting fairness and transparency.

In applying a rubric, I ensure that I carefully review each criterion for every student’s work, rating them independently. I use a systematic approach, ensuring consistency in applying the same standards across all submissions. After grading, I frequently review my scoring to ensure consistency and identify potential areas for improvement in rubric design or application. This iterative process continually refines the grading process and improves its reliability.

Q 2. How do you ensure fairness and consistency in your grading?

Fairness and consistency in grading are paramount. I achieve this through several strategies. First, using a well-defined and transparent rubric is key. This prevents subjective biases from creeping into the evaluation process. Second, I always grade assignments anonymously whenever possible, eliminating potential bias based on student names or prior performance. Third, I grade in batches, focusing on a specific criterion across all assignments before moving on to the next. This helps maintain consistency in applying each criterion’s standards. Finally, regular self-reflection on my grading practices and seeking feedback from colleagues are essential. This helps to identify and correct any inconsistencies or unconscious biases in my approach.

Q 3. Explain your understanding of inter-rater reliability.

Inter-rater reliability refers to the degree of agreement between different graders when evaluating the same work. High inter-rater reliability indicates that the grading process is consistent and depends little on who does the grading. To ensure high inter-rater reliability, it’s crucial to have clear and specific grading criteria defined within a rubric. We often employ strategies such as double-blind grading (the grader does not know the student’s identity, and the student does not know the grader’s), training sessions to ensure graders understand the rubric uniformly, and calibration exercises where graders grade the same assignments and discuss discrepancies in their scoring to reach a consensus. Discrepancies are analyzed to identify areas where the rubric needs refinement or where further training might be necessary. Statistical measures like Cohen’s Kappa can be used to quantify the level of agreement between two graders beyond what chance alone would produce.
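To make the statistic concrete, here is a minimal sketch of Cohen’s Kappa for two graders who each assign one rubric level to the same set of submissions. The function name and the sample ratings are illustrative assumptions, not data from a real course.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items (hypothetical helper)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items that received the same label from both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: computed from each rater's own label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Made-up ratings for six presentations.
grader_1 = ["Good", "Good", "Fair", "Excellent", "Poor", "Good"]
grader_2 = ["Good", "Fair", "Fair", "Excellent", "Poor", "Good"]
kappa = cohens_kappa(grader_1, grader_2)
print(f"kappa = {kappa:.2f}")
```

A value of 1 means perfect agreement and a value near 0 means agreement no better than chance, so a low kappa after a calibration exercise would point back to the rubric or to further training.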

Q 4. How would you handle a situation where you disagree with a colleague’s grading decision?

Disagreements with a colleague’s grading decision are addressed professionally and constructively. My first step is to schedule a meeting with the colleague to discuss the specific grading decision in question. I approach the conversation with an open mind, focusing on the specific criteria within the rubric that led to the differing scores. I present my reasoning and ask them to explain theirs, aiming for a shared understanding. We may review the student’s work together, focusing on specific points of disagreement. If we still disagree, we may involve a third party (e.g., a department head) for mediation. The goal is always to reach a fair and consistent resolution, ensuring that the student receives an accurate and justifiable grade.

Q 5. What strategies do you employ to minimize bias in your grading process?

Minimizing bias in grading requires conscious effort and awareness. I use several strategies: First, anonymizing student work whenever possible helps to eliminate bias based on name recognition or prior impressions. Second, I actively look for potential biases in my own thinking, being mindful of implicit biases around gender, race, socioeconomic status, or writing style. I try to focus solely on the criteria outlined in the rubric, not on extraneous factors. Third, using a standardized rubric is crucial; it provides a structured framework that reduces the impact of subjective interpretations. Finally, regular reflection on my own grading practices and seeking feedback from colleagues helps to identify and address potential blind spots.

Q 6. How do you ensure that grading criteria are clear, concise, and objective?

Clear, concise, and objective grading criteria are essential for fair and consistent assessment. I ensure this by using precise language in the rubric, avoiding vague terms like “good” or “adequate.” Instead, I use specific and measurable descriptors such as “accurately cites three scholarly sources” or “effectively integrates evidence to support claims.” The criteria are presented in a hierarchical structure, with clear definitions of each performance level. For instance, a rubric for an essay might include criteria such as thesis statement clarity, argument development, evidence use, and style, each with distinct levels (e.g., Exemplary, Proficient, Developing, Needs Improvement) and accompanying descriptors for each level. This ensures that both the graders and students have a shared understanding of the expectations.

Q 7. Describe your experience with different types of assessment methods (e.g., multiple-choice, essay, performance-based).

I have extensive experience with diverse assessment methods. Multiple-choice questions are efficient for assessing factual knowledge, but they can be limited in capturing deeper understanding or critical thinking skills. Essays allow for a more in-depth exploration of a topic, assessing critical thinking, writing skills, and the ability to synthesize information. However, grading essays can be time-consuming and potentially more susceptible to subjective biases. Performance-based assessments (e.g., presentations, projects, lab work) directly evaluate students’ abilities to apply knowledge and skills in real-world contexts. These methods offer a more holistic evaluation of learning but often require more complex rubrics and grading procedures. For each method, I tailor the assessment and grading rubric to the specific learning objectives and the nature of the assessment task. This ensures that the chosen assessment method aligns with the learning outcomes and provides a fair and accurate measure of student achievement.

Q 8. How do you address potential issues with grading ambiguity?

Grading ambiguity arises when assessment criteria are unclear or inconsistently applied, leading to unfair or inaccurate grading. To address this, I employ a multi-pronged approach. First, I ensure rubrics are meticulously crafted, outlining specific, measurable, achievable, relevant, and time-bound (SMART) criteria for each assessment. These rubrics are shared with students *before* the assessment, providing transparency and minimizing misunderstandings. Second, I use examples of excellent, good, fair, and poor work alongside the rubric to illustrate the expectations. These exemplars bridge the gap between abstract criteria and concrete student performance. Finally, I conduct regular inter-rater reliability checks, comparing my grading with that of a colleague, especially for subjective assignments like essays. Discrepancies are discussed to refine the rubric or clarify interpretations, thereby enhancing consistency.

For instance, if grading essays on a particular historical event, the rubric would specify points for accurate historical details, coherent arguments, proper citation, and clear writing style. Providing examples would demonstrate what constitutes, for example, a ‘coherent argument’ versus a ‘weak argument’. This prevents the arbitrary scoring of essays based on personal preferences.

Q 9. How do you manage a large volume of assessments to ensure timely and accurate grading?

Managing a high volume of assessments efficiently and accurately requires a well-organized system. I leverage technology to streamline the process. This includes using Learning Management Systems (LMS) like Canvas or Blackboard for submission and grading, often utilizing features like automated feedback for objective questions. For larger classes, I prioritize assignments that can be partially automated, such as multiple-choice quizzes. For subjective assessments, I create a structured grading schedule, allocating specific time blocks for different assessment types. I also utilize peer review or self-assessment strategies, where appropriate, to reduce my individual workload while engaging students in the evaluation process. This collaborative approach promotes self-reflection and provides additional feedback data. Regular breaks and prioritization of assignments also help prevent burnout and maintain accuracy.

Q 10. How do you adapt your grading approach to diverse student populations?

Adapting my grading approach to diverse student populations involves recognizing and addressing potential barriers to equitable assessment. This means understanding and acknowledging that students learn and express themselves differently. I begin by designing assessments that allow for diverse methods of demonstrating knowledge. For example, offering choices in assignment formats (essays, presentations, projects) caters to varied learning styles. Further, I consider providing accommodations for students with disabilities, following established guidelines and collaborating with accessibility services. I also strive to use inclusive language and avoid culturally biased questions or examples in my assessments. By creating a welcoming and flexible assessment environment, I ensure that all students have a fair opportunity to showcase their learning.

For instance, instead of only requiring written essays, I might offer students the option to create a video presentation, a podcast, or an infographic – acknowledging that different students excel in different modes of expression.

Q 11. Explain your process for providing constructive feedback.

Constructive feedback is crucial for student learning. My process involves providing both specific praise for strengths and targeted suggestions for improvement. I avoid generic comments and instead focus on concrete examples from the student’s work. I use a combination of quantitative and qualitative feedback. Quantitative feedback might include numerical scores on specific criteria outlined in the rubric. Qualitative feedback includes descriptive comments explaining the ‘why’ behind the score – what worked well and what could be enhanced. Furthermore, I frame feedback positively, focusing on the learning process and encouraging growth rather than dwelling on errors. I also consider offering personalized suggestions based on individual student needs, providing resources for improvement if necessary.

For example, instead of writing ‘Your essay is good’, I might write, ‘Your introduction effectively establishes the main argument. To further strengthen your conclusion, consider summarizing the key findings in a more concise manner. Here’s a helpful resource on writing effective conclusions.’

Q 12. How do you track and analyze grading data to identify trends or areas for improvement?

Tracking and analyzing grading data helps identify patterns and areas needing improvement in both teaching and assessment design. I use the LMS’s built-in reporting features to generate statistics on overall class performance, individual student progress, and performance on specific questions or assessment items. This data reveals trends like consistent difficulties with particular concepts or an overall lack of comprehension in specific areas. I also conduct qualitative analyses of student work, looking for recurring themes or challenges in student responses. Based on this analysis, I might adjust my teaching strategies, modify future assessments, or create additional support materials. This iterative process of assessment and refinement ensures continuous improvement.

For example, if data shows that a significant portion of students struggled with a particular problem on a test, it might indicate a need to review that topic more thoroughly in class or provide additional practice problems.
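The kind of check described above can be sketched as a simple item analysis. This is a minimal illustration that assumes each response has already been marked 1 (correct) or 0 (incorrect); the function names, the 0.5 threshold, and the sample data are hypothetical, and a real LMS export would need its own parsing.

```python
def item_difficulty(responses):
    """Proportion of students who answered each item correctly.

    `responses` maps a student label to a list of 1/0 marks, one per item.
    """
    rows = list(responses.values())
    n_students = len(rows)
    n_items = len(rows[0])
    return [sum(row[i] for row in rows) / n_students for i in range(n_items)]


def flag_hard_items(difficulty, threshold=0.5):
    """Return 1-based item numbers that fewer than `threshold` of students got right."""
    return [i + 1 for i, p in enumerate(difficulty) if p < threshold]


# Made-up marks for four students on a four-item quiz.
responses = {
    "A": [1, 0, 1, 1],
    "B": [1, 0, 1, 0],
    "C": [1, 1, 0, 1],
    "D": [0, 0, 1, 1],
}
difficulty = item_difficulty(responses)
hard_items = flag_hard_items(difficulty)
print(difficulty, hard_items)
```

An item flagged this way is only a prompt for review: the cause may be the teaching, the wording of the question, or an error in the answer key.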

Q 13. What are some common sources of bias in assessment, and how can they be mitigated?

Bias in assessment can stem from various sources. One common source is cultural bias, where questions or examples are unfamiliar or irrelevant to students from particular cultural backgrounds. Gender bias can manifest in assignments that implicitly favor certain gender roles or stereotypes. The halo effect occurs when a grader’s positive impression in one area influences their judgment in others. Severity bias and leniency bias refer to graders being consistently too harsh or too lenient in their scoring. To mitigate bias, I employ several strategies, including: using standardized rubrics, ensuring diversity in assessment materials and examples, being mindful of potential biases in my own grading, engaging in regular calibration with colleagues, and utilizing blind grading techniques where student identities are concealed.

For example, using diverse examples in case studies prevents the implicit favoring of particular demographics. Employing blind grading reduces the potential impact of halo effect or implicit biases based on student names or background information.

Q 14. Describe your experience with software or tools designed for automated grading.

I have experience using various software tools for automated grading, primarily focusing on LMS-integrated features for multiple-choice and short-answer questions. These tools provide immediate feedback to students and free up time for more in-depth evaluation of subjective assignments. However, I recognize the limitations of automated grading for complex assessments. Automated systems can struggle with nuanced responses, creative assignments, and evaluating critical thinking skills. Therefore, I primarily use automation for objective, easily scored tasks. While technology assists in efficiency, I believe careful human review remains essential for accurate and fair grading, especially for more complex, subjective assessments. Automated tools are a supplement, not a replacement, for effective human judgment.

Q 15. How do you maintain confidentiality and security of student data during the grading process?

Maintaining student data confidentiality and security during grading is paramount. It requires a multi-layered approach encompassing physical, technological, and procedural safeguards. Think of it like protecting a valuable treasure – multiple locks and security systems are needed.

Physical Security: Grading should only occur in secure locations with restricted access, preventing unauthorized individuals from accessing student work. This might involve locked offices, secure servers, or even designated grading rooms.

Technological Security: All digital grading platforms should be encrypted and password-protected. Regular software updates and the implementation of firewalls are essential to prevent cyberattacks. Data should be anonymized whenever possible, removing personally identifiable information from documents before sharing with graders (unless absolutely necessary).

Procedural Security: Clear protocols must be established for handling student work, including secure storage, transportation, and disposal. This includes procedures for handling lost or misplaced assignments and securely deleting digital files after grading is complete. Training for all personnel involved in the grading process is vital to ensure everyone understands and adheres to these protocols.

For example, in one instance, I implemented a system where student IDs were replaced with unique alphanumeric codes during the online grading process, ensuring anonymity while still allowing for efficient tracking of individual scores.
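A code-substitution scheme like this one can be sketched in a few lines. The version below is an assumption about how such a system might work, not a description of any particular platform: the function name, the code length, and the sample IDs are all illustrative.

```python
import secrets
import string

ALPHABET = string.ascii_uppercase + string.digits


def assign_codes(student_ids, length=8):
    """Map each student ID to a unique random alphanumeric code (hypothetical helper)."""
    codes = {}
    used = set()
    for student_id in student_ids:
        code = "".join(secrets.choice(ALPHABET) for _ in range(length))
        while code in used:  # regenerate on the rare collision
            code = "".join(secrets.choice(ALPHABET) for _ in range(length))
        used.add(code)
        codes[student_id] = code
    return codes


# The key stays with whoever records final grades; graders see only the codes.
key = assign_codes(["s1001", "s1002", "s1003"])
scores_by_code = {key["s1001"]: 88, key["s1002"]: 74, key["s1003"]: 91}

# After grading, the key is used to map scores back to students.
code_to_student = {code: sid for sid, code in key.items()}
scores_by_student = {code_to_student[c]: s for c, s in scores_by_code.items()}
```

Because the codes are random rather than derived from the IDs, a grader cannot work backwards to a student without the key, so the key itself needs the same protection as the grade book.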

Q 16. How do you balance speed and accuracy in grading?

Balancing speed and accuracy in grading is a constant juggling act. It’s about finding the optimal workflow that ensures fair and reliable assessment without sacrificing efficiency. Imagine it like a skilled chef – they need to be both fast and precise.

Efficient Grading Strategies: Using well-designed rubrics, standardized grading criteria, and effective time management techniques significantly enhance speed without compromising accuracy. This might include prioritizing grading tasks by urgency or complexity.

Technology Integration: Utilizing automated grading tools for objective assessments (like multiple-choice tests) can free up time to focus on the more subjective components of assessment which require careful attention to detail.

Regular Breaks and Quality Checks: Taking regular breaks to prevent grader fatigue minimizes errors. Implementing internal quality checks, such as double-checking a random sample of graded assignments, ensures consistent and accurate evaluations.

In my experience, I found that creating a detailed grading checklist, combined with the use of a spreadsheet for tracking grades, reduced grading time significantly while maintaining high accuracy.
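The random-sample quality check mentioned above can be sketched as follows. The function name, the 10% default, and the submission labels are assumptions for illustration; the seed is there only so that the same sample can be reproduced for an audit.

```python
import random


def pick_spot_check(submission_ids, fraction=0.1, seed=None):
    """Choose a random subset of graded submissions to re-grade (hypothetical helper)."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    ids = list(submission_ids)
    if not ids:
        return []
    # Always re-check at least one submission, even in a very small batch.
    k = max(1, round(len(ids) * fraction))
    rng = random.Random(seed)
    return sorted(rng.sample(ids, k))


graded = [f"sub-{i:03d}" for i in range(1, 41)]
to_recheck = pick_spot_check(graded, fraction=0.1, seed=7)
print(to_recheck)
```

Drawing the sample at random, rather than re-reading the first or last few papers, keeps the check from concentrating on the points in a session where fatigue is least or most likely.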

Q 17. How familiar are you with different assessment methodologies (e.g., criterion-referenced, norm-referenced)?

I’m very familiar with various assessment methodologies. Understanding these different approaches is crucial for choosing the right evaluation method for a particular learning objective and context. Each approach offers unique insights into student learning.

Criterion-Referenced Assessments: These assessments measure student performance against a pre-defined set of criteria or standards. Think of a driving test – you must meet specific criteria to pass, regardless of how other drivers perform. Examples include rubrics for essays or checklists for projects.

Norm-Referenced Assessments: These assessments compare a student’s performance to the performance of a larger group (the norm group). Think of standardized tests – your score is interpreted relative to the scores of other test-takers. These are often used for ranking students or selecting candidates.

The choice between these methodologies depends heavily on the assessment goals. Criterion-referenced assessments are useful for evaluating mastery of specific skills, while norm-referenced assessments are beneficial for comparing performance across a large group.
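The difference between the two interpretations can be shown with one score read both ways. This is a minimal sketch: the cutoff, the norm-group scores, and the function names are made up, and percentile rank is defined here as the percentage of the norm group scoring strictly below the student, which is one of several conventions in use.

```python
def meets_criterion(score, cutoff):
    """Criterion-referenced reading: compare the score with a fixed standard."""
    return score >= cutoff


def percentile_rank(score, norm_group):
    """Norm-referenced reading: percentage of the norm group scoring strictly below."""
    below = sum(s < score for s in norm_group)
    return 100 * below / len(norm_group)


norm_group = [52, 61, 67, 70, 74, 78, 81, 85, 90, 96]
passed = meets_criterion(74, cutoff=70)
rank = percentile_rank(74, norm_group)
print(passed, rank)
```

The same score of 74 passes the fixed standard yet sits in the lower half of this norm group, which is why the two methodologies answer different questions.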

Q 18. Describe your understanding of statistical methods used in assessment.

Statistical methods play a crucial role in ensuring the fairness and reliability of assessments. They help us understand the distribution of scores, identify potential bias, and ultimately ensure that the grades accurately reflect student learning.

Descriptive Statistics: Measures like mean, median, mode, and standard deviation describe the central tendency and variability of scores. This information helps in identifying unusual patterns or potential grading inconsistencies.

Inferential Statistics: Techniques like t-tests and ANOVA can be used to compare the performance of different groups or to analyze the effectiveness of different teaching methods.

Reliability Analysis: Cronbach’s alpha, for example, assesses the internal consistency of a test, ensuring the questions measure the same construct consistently. Inter-rater reliability measures the agreement between multiple graders, highlighting areas for improvement in grading consistency.

For instance, I once used standard deviation to identify a possible anomaly in a set of exam scores, leading to a review of the grading process and the identification of a minor error in the rubric interpretation.
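Two of the measures named above can be sketched with Python’s standard `statistics` module: a standard-deviation screen for unusual scores, and Cronbach’s alpha computed as k/(k-1) × (1 − sum of item variances / variance of total scores). The function names, the cutoff of two standard deviations, and the sample data are illustrative assumptions.

```python
from statistics import mean, stdev, variance


def flag_outliers(scores, z_cutoff=2.0):
    """Return scores more than `z_cutoff` sample standard deviations from the mean."""
    m, s = mean(scores), stdev(scores)
    if s == 0:
        return []  # all scores identical, nothing stands out
    return [x for x in scores if abs(x - m) / s > z_cutoff]


def cronbach_alpha(item_scores):
    """Cronbach's alpha; `item_scores` holds one list per student, one score per item."""
    k = len(item_scores[0])
    item_vars = [variance([row[i] for row in item_scores]) for i in range(k)]
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Made-up exam scores with one value far from the rest.
exam_scores = [72, 75, 78, 74, 76, 73, 77, 20]
unusual = flag_outliers(exam_scores)

# Made-up scores for five students on a three-item test.
item_scores = [[2, 3, 3], [4, 4, 5], [1, 2, 1], [5, 4, 5], [3, 3, 4]]
alpha = cronbach_alpha(item_scores)
print(unusual, round(alpha, 3))
```

A flagged score is a reason to look again at the paper and the grading, not evidence of an error on its own; a genuinely weak or exceptional performance will be flagged in the same way.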

Q 19. How do you address challenges in grading subjective assessments?

Grading subjective assessments presents unique challenges because interpretation can vary between graders. To address this, a structured approach is necessary, focusing on clarity, consistency, and transparency.

Detailed Rubrics: Clearly defined rubrics with specific criteria and scoring guidelines are crucial to minimize subjectivity. The rubric needs to leave little room for individual interpretation.

Multiple Raters/Inter-rater Reliability: Using multiple graders for subjective assignments, such as essays or presentations, and analyzing inter-rater reliability (agreement between graders), enhances fairness and reduces the influence of individual biases.

Training and Calibration: Providing graders with training on the rubric and conducting calibration sessions to ensure consistent application of grading standards are essential.

Blind Grading: If feasible, anonymizing student identifiers during grading can further reduce potential bias.

In a past project, I successfully minimized grading discrepancies by using a detailed analytic rubric and conducting a pre-grading calibration session with the other graders, leading to significantly higher inter-rater reliability.

Q 20. What is your experience with different types of rubrics (e.g., holistic, analytic)?

Different rubrics serve distinct purposes in assessment. The choice of rubric depends on the complexity of the assessment and the level of detail required.

Holistic Rubrics: These provide a single overall score based on a general impression of the student’s work. They are efficient but can lack the detail for targeted feedback. Think of it like a general performance review – an overall rating is given.

Analytic Rubrics: These break down the assessment into specific components, each with its own scoring criteria. This allows for more precise feedback and identification of specific areas of strength and weakness. This is akin to a detailed performance review that analyzes specific aspects of a job.

I’ve extensively used both types of rubrics depending on the assignment. For simple assignments, a holistic rubric might suffice, whereas for more complex projects, an analytic rubric is more appropriate to provide detailed, constructive feedback.
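The structure of an analytic rubric can be sketched as data plus a scoring function. The criteria and level names below are the essay example used earlier in this guide; the weights, the 1 to 4 point scale, and the function name are assumptions added for illustration.

```python
from dataclasses import dataclass

# Point value for each performance level (assumed 1-4 scale).
LEVELS = {"Exemplary": 4, "Proficient": 3, "Developing": 2, "Needs Improvement": 1}


@dataclass(frozen=True)
class Criterion:
    name: str
    weight: float  # share of the total score; weights are assumed to sum to 1


RUBRIC = [
    Criterion("Thesis clarity", 0.25),
    Criterion("Argument development", 0.35),
    Criterion("Evidence use", 0.25),
    Criterion("Style", 0.15),
]


def analytic_score(ratings, rubric=RUBRIC):
    """Return the weighted score and the per-criterion points for one submission."""
    missing = [c.name for c in rubric if c.name not in ratings]
    if missing:
        raise ValueError(f"no rating for: {', '.join(missing)}")
    breakdown = {c.name: LEVELS[ratings[c.name]] for c in rubric}
    total = sum(c.weight * breakdown[c.name] for c in rubric)
    return total, breakdown


ratings = {
    "Thesis clarity": "Exemplary",
    "Argument development": "Proficient",
    "Evidence use": "Proficient",
    "Style": "Developing",
}
total, breakdown = analytic_score(ratings)
print(round(total, 2), breakdown)
```

A holistic rubric would return only the single overall level, whereas the per-criterion breakdown here is what makes targeted feedback possible.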

Q 21. Explain how you would handle a complaint about unfair grading.

Handling complaints about unfair grading requires a fair, transparent, and thorough process. The goal is to address the concerns respectfully, investigate thoroughly, and resolve the issue equitably. Imagine it like a judge reviewing a case – all sides need to be heard, evidence examined, and a just decision reached.

Documentation Review: The first step is to thoroughly review all relevant documentation, including the student’s work, the grading rubric, and any notes from the grader.

Communication and Explanation: Communicate with the student to understand their concerns and explain the grading rationale clearly and professionally. It’s important to listen attentively and acknowledge their perspective.

Re-evaluation (if warranted): If the complaint raises valid concerns, a re-evaluation of the assignment might be necessary, possibly involving an independent grader or a review committee. A well-defined procedure for re-evaluation is crucial.

Documentation of the Process: Maintain detailed documentation of the entire process, including the complaint, the investigation, and the resolution, protecting both the student’s and the grader’s rights.

In one instance, a student challenged their grade. Following the established procedure, a re-evaluation by a second grader confirmed the initial grade, but the detailed explanation of the grading process and the fairness of the procedure assuaged the student’s concern.

Q 22. How do you ensure the validity and reliability of your grading methods?

Ensuring validity and reliability in grading is paramount for fair assessment. Validity refers to whether the grading process accurately measures what it intends to, while reliability signifies the consistency of the results. I achieve this through a multi-pronged approach:

Clearly Defined Rubrics: I use detailed, objective rubrics that specify the criteria for each grade level. This leaves little room for subjective interpretation. For example, an essay rubric might outline specific point values for argumentation, evidence, organization, and style, with clear descriptions for each level (e.g., ‘Excellent,’ ‘Good,’ ‘Fair,’ ‘Poor’).

Multiple Assessment Methods: Relying on a single assignment type can be unreliable. I incorporate diverse methods like essays, projects, presentations, and quizzes to obtain a more holistic and accurate picture of student understanding. This reduces the impact of a single ‘bad day’ or a student’s strength in a specific area.

Inter-rater Reliability Checks: When multiple assessors are involved, we conduct inter-rater reliability checks. This involves having a sample of work graded independently by multiple assessors, then comparing the scores. Significant discrepancies highlight areas needing clarification in the rubric or further assessor training.

Regular Calibration Sessions: Periodic calibration sessions allow assessors to discuss challenging cases and ensure consistent application of the rubric. This process helps identify and address biases or differing interpretations.

Q 23. How do you stay up-to-date on best practices in fair and impartial grading?

Staying current with best practices in fair and impartial grading requires continuous professional development. I achieve this through several avenues:

Professional Organizations: Active membership in organizations like the American Educational Research Association (AERA) or relevant subject-specific associations provides access to research, publications, and conferences on assessment and grading.

Conferences and Workshops: Attending conferences and workshops focused on assessment and grading keeps me abreast of the latest research findings, innovative techniques, and evolving best practices.

Peer Review and Collaboration: I actively participate in peer review of assessment materials and collaborate with colleagues to share strategies, discuss challenges, and refine our approaches to grading.

Online Resources: I regularly explore credible online resources such as journals, articles, and educational websites that publish research and best practices in assessment and grading.

Q 24. What steps would you take to address inconsistencies in grading across multiple assessors?

Inconsistencies in grading across assessors undermine fairness and validity. Addressing these inconsistencies requires a structured approach:

Analyze the Data: First, I’d analyze the grading data to identify specific areas of inconsistency. This might involve calculating inter-rater reliability statistics (e.g., Cohen’s kappa) to quantify the level of agreement between assessors.

Refine the Rubric: The discrepancies may stem from ambiguities or gaps in the rubric. We’d review the rubric together, clarifying unclear criteria, adding examples, and ensuring that all aspects of the rubric are consistently applied.

Conduct Training Sessions: Training sessions are crucial. We would discuss specific examples of student work, focusing on the application of the rubric and addressing any differing interpretations. Role-playing scenarios and collaborative grading sessions can be particularly helpful.

Establish Clear Communication Channels: Open communication is vital. We would establish a system where assessors can discuss challenging cases and seek clarification, ensuring all assessors are on the same page throughout the grading process.

Monitor and Re-evaluate: Post-grading, we continue to monitor for inconsistencies through regular checks and feedback, refining our processes as needed.
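As a complement to an overall agreement statistic, the first and last steps above can be supported by listing the individual submissions on which two assessors differ most. This is a minimal sketch that assumes numeric rubric scores keyed by submission; the function name, the one-point tolerance, and the sample scores are hypothetical.

```python
def flag_discrepancies(scores_a, scores_b, tolerance=1):
    """Return (submission, score_a, score_b) where the scores differ by more than `tolerance`."""
    shared = scores_a.keys() & scores_b.keys()  # only submissions both assessors graded
    return sorted(
        (sub, scores_a[sub], scores_b[sub])
        for sub in shared
        if abs(scores_a[sub] - scores_b[sub]) > tolerance
    )


# Made-up scores on a 1-4 scale from two assessors.
assessor_1 = {"essay-01": 4, "essay-02": 2, "essay-03": 3, "essay-04": 1}
assessor_2 = {"essay-01": 4, "essay-02": 4, "essay-03": 2, "essay-04": 3}
flagged = flag_discrepancies(assessor_1, assessor_2)
print(flagged)
```

The flagged submissions make natural material for a calibration session, since they are the cases where the rubric was read differently.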

Q 25. Describe a time you had to make a difficult grading decision. How did you approach it?

I once had to grade a student’s project that fell just short of the criteria for an ‘A,’ yet demonstrated exceptional creativity and innovative thinking outside the scope of the assignment. It was a difficult decision because the rubric, while robust, didn’t fully account for such unexpected contributions.

My approach involved several steps:

Review the Rubric and Criteria: I carefully re-examined the rubric to ensure I was applying it fairly and consistently.

Consider the Student’s Effort and Learning: I acknowledged the student’s exceptional effort, creativity, and demonstration of learning, even though they didn’t fully meet all the assigned criteria.

Document the Decision-Making Process: I meticulously documented my reasoning in the grading notes, explaining why I assigned the grade I did, given the student’s unique approach.

Consider Alternative Assessment Methods: While I maintained the original grade, I also discussed with the student the potential for alternative avenues to showcase their exceptional abilities in future assessments, perhaps through more open-ended projects.

Q 26. How do you handle appeals or challenges to your grading decisions?

Handling appeals requires a fair, transparent, and consistent process. I typically follow these steps:

Review the Appeal: I carefully review the student’s appeal, examining their arguments and evidence.

Re-examine the Work: I re-examine the student’s work in light of the appeal, taking into account any new information or perspectives presented.

Consult with Colleagues (if necessary): If the appeal raises complex issues or involves significant disagreement, I consult with colleagues to ensure a balanced and informed decision.

Provide a Thorough Response: I provide the student with a written response explaining the decision, clearly outlining the reasoning and any applicable criteria.

Maintain Documentation: I meticulously document the entire appeal process, ensuring transparency and accountability.

Q 27. What are your thoughts on using technology to improve fairness and efficiency in grading?

Technology offers significant potential to enhance fairness and efficiency in grading. However, it’s crucial to use it responsibly:

Automated Grading Tools: Tools that automate objective grading tasks, such as multiple-choice quizzes or basic coding assignments, can free up time for more nuanced tasks that require human judgment. However, it’s vital to choose tools carefully and ensure they don’t introduce bias.

Plagiarism Detection Software: Software to detect plagiarism safeguards academic integrity and enhances the fairness of the grading process.

Learning Management Systems (LMS): LMS platforms provide efficient ways to distribute assignments, collect submissions, and provide feedback. Their features for automated feedback on certain assignment types also aid efficiency.

Intelligent Tutoring Systems: Advanced AI-powered tutoring systems can provide individualized feedback and support, potentially reducing the need for extensive manual grading in certain areas.

However, Caution is Warranted: It’s important to remember that technology should supplement, not replace, human judgment. Over-reliance on automated systems can lead to unintended bias and a lack of consideration for the nuances of student work.
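To make the point about automating objective tasks concrete, here is a minimal Python sketch of scoring a multiple-choice quiz while leaving edge cases to a human. It is an illustration under stated assumptions, not a description of any particular tool: the names `QuizResult` and `grade_quiz`, the dictionary layout, and the anonymized student IDs are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class QuizResult:
    student_id: str     # anonymized ID rather than a name (assumption)
    score: int          # number of correct answers
    total: int          # number of questions in the answer key
    needs_review: bool  # True when a human should check the submission


def grade_quiz(answer_key, submissions):
    """Score multiple-choice answers against a key.

    answer_key: dict mapping question ID -> correct option
    submissions: dict mapping student ID -> dict of question ID -> chosen option
    """
    results = []
    for student_id, answers in submissions.items():
        # Count questions where the chosen option matches the key
        score = sum(
            1 for question, correct in answer_key.items()
            if answers.get(question) == correct
        )
        # Flag anything the key cannot judge on its own:
        # unanswered questions or answers to questions not in the key
        unanswered = any(q not in answers for q in answer_key)
        unexpected = any(q not in answer_key for q in answers)
        results.append(
            QuizResult(student_id, score, len(answer_key), unanswered or unexpected)
        )
    return results


# Example data (made up for illustration)
answer_key = {"q1": "B", "q2": "D", "q3": "A"}
submissions = {
    "S001": {"q1": "B", "q2": "D", "q3": "C"},
    "S002": {"q1": "B", "q2": "D"},  # q3 left blank
}
results = grade_quiz(answer_key, submissions)
for result in results:
    print(result)
```

The sketch applies the same key to every submission, which supports consistency, and it flags incomplete or unexpected submissions instead of silently scoring them, so the final call stays with a person.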

Key Topics to Learn for Fair and Impartial Grading Interview

- Defining Fairness and Impartiality in Grading: Understanding the core principles and their practical implications in various assessment contexts.

- Bias Detection and Mitigation Strategies: Identifying potential sources of bias (e.g., cultural, gender, socioeconomic) in grading rubrics and assessment materials, and developing strategies to minimize their impact.

- Developing Reliable and Valid Assessment Instruments: Creating clear, concise, and objective assessment tools that accurately measure student learning outcomes.

- Implementing Standardized Grading Procedures: Establishing consistent and transparent grading practices across different assessors and assessment methods.

- Utilizing Technology for Fair and Impartial Grading: Exploring the use of technology to enhance objectivity and efficiency in grading processes (e.g., automated scoring tools, plagiarism detection software).

- Addressing Grading Discrepancies and Appeals: Developing protocols for handling grading disputes and ensuring fair and transparent resolution processes.

- Ethical Considerations in Grading: Understanding the ethical responsibilities of assessors and the importance of maintaining confidentiality and integrity in the grading process.

- Data Analysis and Interpretation in Grading: Utilizing data to identify trends and patterns in student performance and inform improvements to instruction and assessment practices.

- Communicating Grading Results Effectively: Providing clear, constructive, and timely feedback to students that promotes learning and growth.

Next Steps

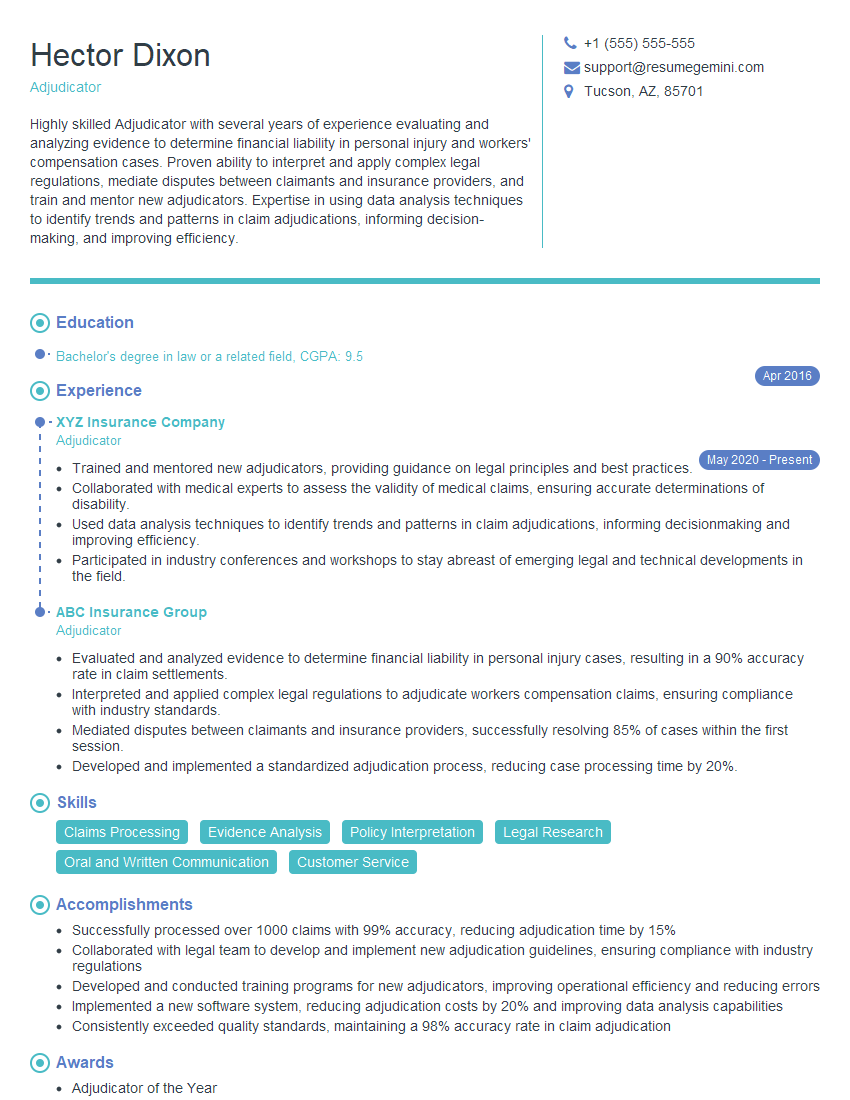

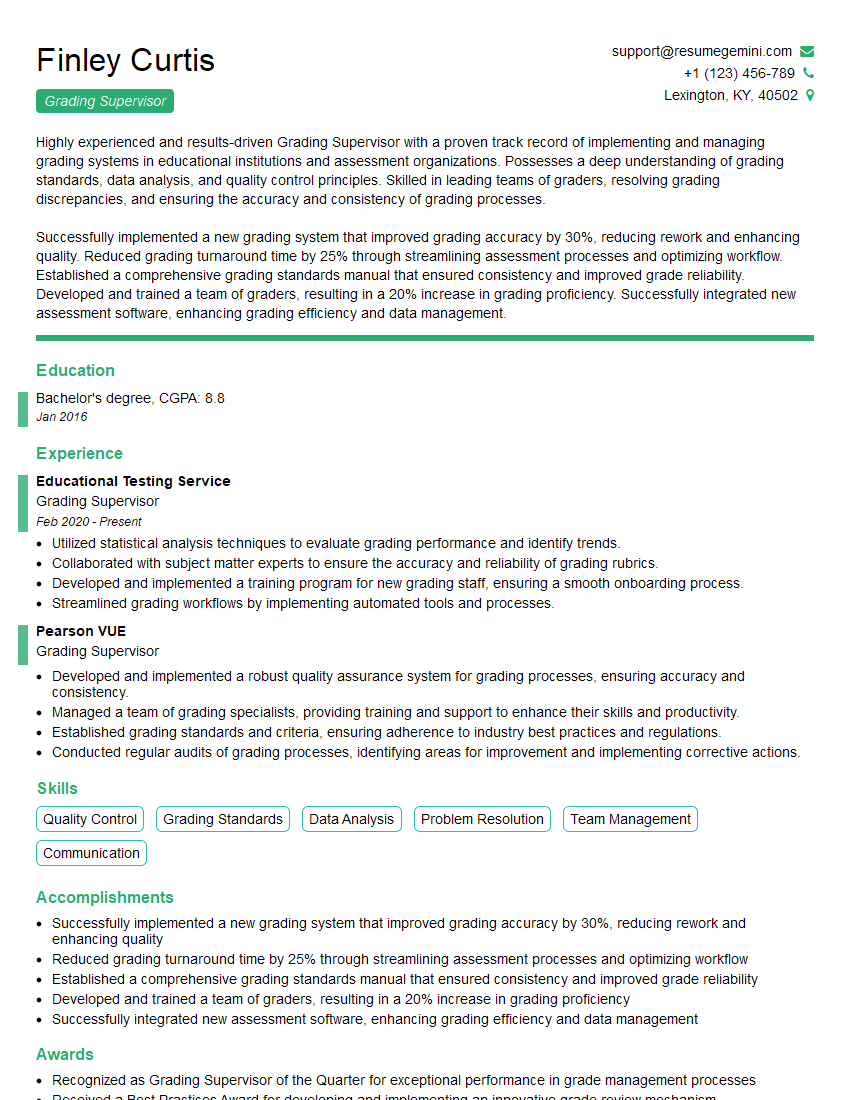

Mastering Fair and Impartial Grading demonstrates a crucial commitment to educational equity and excellence, significantly enhancing your career prospects in education and related fields. A well-crafted resume is essential for showcasing these skills to potential employers. Building an ATS-friendly resume increases your chances of getting noticed by recruiters. We highly recommend using ResumeGemini to build a professional and impactful resume. ResumeGemini provides tools and examples to help you create a resume that highlights your expertise in Fair and Impartial Grading. Examples of resumes tailored to this area are available within the ResumeGemini platform.
