Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Google Cloud Platform Certified Professional Cloud Architect interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Google Cloud Platform Certified Professional Cloud Architect Interview

Q 1. Explain the different types of Google Cloud virtual machine instances and their use cases.

Google Cloud Platform (GCP) offers a wide array of virtual machine (VM) instances, each tailored to specific needs. The choice depends heavily on the application’s computational requirements, memory needs, and budget. Think of it like choosing the right car – you wouldn’t use a sports car for hauling cargo!

- General-purpose machines (e.g., n1-standard): These are versatile VMs suitable for a broad range of workloads, including web servers, databases, and application servers. They offer a balanced CPU, memory, and storage ratio. Imagine these as your reliable family sedan.

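- Compute-optimized machines (e.g., c2-standard): Designed for computationally intensive tasks like scientific computing, video encoding, and gaming servers. They prioritize high per-core performance and clock speeds. High-CPU shapes of the general-purpose families (e.g., n2-highcpu) are a cheaper middle ground when you mainly need more vCPUs per GB of memory. Think of these as high-performance sports cars.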

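- Memory-optimized machines (e.g., m1-megamem, m2-ultramem): Ideal for large in-memory databases, big data analytics, and caching. They provide very large amounts of RAM per vCPU. For moderately memory-heavy workloads, a general-purpose high-memory shape such as n2-highmem is often enough. These are like your cargo vans, capable of carrying a large amount of data.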

- Storage-optimized machines (e.g., z3-highmem): Optimized for applications that require high-throughput local disk I/O, such as scale-out databases and data warehousing. These are like your heavy-duty trucks, optimized for moving large amounts of ‘storage’.

- Accelerator-optimized machines (e.g., a2-highgpu): These VMs come with attached GPUs, perfect for machine learning, artificial intelligence, and high-performance computing. TPUs are offered separately through Cloud TPU rather than as a Compute Engine machine family. Think of these as highly specialized equipment for specific tasks.

For instance, a web application might use general-purpose VMs for its front-end servers and memory-optimized VMs for its database.
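One way to make this choice concrete in an interview is to reason from the memory-per-vCPU ratio. The sketch below is only an illustration: the function name and the cut-off values (below 2 GB per vCPU, above 8 GB per vCPU) are assumptions picked for the example, not official sizing guidance.

```python
def suggest_machine_family(vcpus, memory_gb, needs_gpu=False):
    """Suggest a machine family from a workload's rough shape.

    The thresholds are illustrative assumptions, not GCP guidance.
    """
    if vcpus <= 0 or memory_gb <= 0:
        raise ValueError("vcpus and memory_gb must be positive")
    if needs_gpu:
        return "accelerator-optimized"
    ratio = memory_gb / vcpus  # GB of RAM per vCPU
    if ratio > 8:
        return "memory-optimized"
    if ratio < 2:
        return "compute-optimized"
    return "general-purpose"
```

A web front end asking for 4 vCPUs and 16 GB lands on general-purpose, while a cache asking for 4 vCPUs and 96 GB lands on memory-optimized.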

Q 2. Describe the process of creating and managing a Google Kubernetes Engine (GKE) cluster.

Creating and managing a Google Kubernetes Engine (GKE) cluster involves several steps. First, you need to determine your cluster’s requirements – node size, number of nodes, and networking configuration. Then, you can use the Google Cloud Console, the gcloud command-line tool, or the Kubernetes API to create the cluster. Let’s outline the process using the gcloud command line:

- Initialization: Ensure the gcloud SDK is installed and configured with the correct project.

- Cluster Creation: Use the following command (replace placeholders with your values):

gcloud container clusters create my-cluster --zone us-central1-a --num-nodes 3

This creates a cluster named ‘my-cluster’ in the ‘us-central1-a’ zone with 3 nodes.

- Node Configuration: You can customize node configurations (machine type, disk size, etc.) during creation. For example:

gcloud container clusters create my-cluster --zone us-central1-a --num-nodes 3 --machine-type n1-standard-2

- Networking: GKE handles networking automatically, but you can configure VPC settings. Proper networking is crucial for security and communication.

- Access: You’ll need to configure authentication and authorization to access your cluster. Typically you fetch credentials with `gcloud container clusters get-credentials` and then work with kubectl.

- Management: Once created, you can manage your cluster through the console, gcloud commands, or the Kubernetes API. This includes scaling, upgrading, and monitoring.

- Monitoring: GKE integrates with Cloud Monitoring and Cloud Logging to provide insights into the health and performance of your cluster.

Managing a GKE cluster involves updating nodes, scaling up or down the number of nodes based on demand, and regularly patching and upgrading the Kubernetes components. Proper monitoring is essential to prevent outages and ensure high availability.
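When cluster creation is scripted, it helps to build the command from parameters instead of pasting strings. The following is a minimal sketch that only assembles the same gcloud command shown above; the helper name is hypothetical, and it uses only the flags that appear in this answer.

```python
import shlex


def build_create_cluster_cmd(name, zone, num_nodes, machine_type=None):
    """Return the argv list for the cluster-creation command used above."""
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    cmd = [
        "gcloud", "container", "clusters", "create", name,
        "--zone", zone,
        "--num-nodes", str(num_nodes),
    ]
    if machine_type:
        # Optional node customization, as in the second command above.
        cmd += ["--machine-type", machine_type]
    return cmd


if __name__ == "__main__":
    # Print the command instead of running it, so it can be reviewed first.
    print(shlex.join(build_create_cluster_cmd("my-cluster", "us-central1-a", 3)))
```

Returning a list rather than a string means the result can be passed to `subprocess.run` without shell quoting problems.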

Q 3. How would you design a highly available and scalable architecture on Google Cloud Platform?

Designing a highly available and scalable architecture on GCP requires a multi-pronged approach focusing on redundancy, load balancing, and autoscaling. Imagine building a bridge – you wouldn’t use just one support beam!

- Redundancy: Distribute your application across multiple zones and, for critical workloads, multiple regions. Multi-zone deployment protects against zonal failures, and multi-region deployment protects against regional outages. Run your VMs and databases in more than one zone.

- Load Balancing: Use Google Cloud Load Balancing to distribute traffic across multiple instances of your application. This ensures even distribution and prevents overloading individual servers. Consider both internal and external load balancers depending on your needs.

- Autoscaling: Configure autoscaling on managed instance groups (MIGs) for your VMs, and use the equivalent autoscalers for other resources. This ensures that the number of resources scales up or down based on demand, maintaining optimal performance and cost-efficiency. Think of this like adding more lanes to a highway during rush hour.

- Databases: Use a managed database service like Cloud SQL or Cloud Spanner, which offers built-in high availability and scalability features. These databases provide replication and failover capabilities.

- Storage: Utilize Cloud Storage, which is inherently scalable and durable. For application data on VMs, consider regional Persistent Disks, which replicate data synchronously across two zones in a region.

- Monitoring and Alerting: Implement robust monitoring and alerting using Cloud Monitoring and Cloud Logging. This allows for proactive identification and resolution of issues before they impact users.

For example, a critical application might have its front-end servers distributed across three zones, load balanced using a global HTTP(S) load balancer, and backed by a managed database service with multi-regional replication. This architecture ensures high availability and scalability, mitigating risks associated with any single point of failure.
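The value of spreading across zones can be shown with simple arithmetic. The sketch below assumes zones fail independently and that one healthy zone is enough to serve traffic; both are simplifying assumptions, and the 99% figure in the usage note is an illustrative input rather than a published SLA.

```python
def combined_availability(per_zone, zones):
    """Availability of a service that stays up while at least one zone is up.

    Assumes independent zone failures, which real outages do not always respect.
    """
    if not 0.0 <= per_zone <= 1.0:
        raise ValueError("per_zone must be between 0 and 1")
    if zones < 1:
        raise ValueError("zones must be at least 1")
    # The service is down only when every zone is down at the same time.
    return 1.0 - (1.0 - per_zone) ** zones
```

With a per-zone availability of 0.99, three zones give roughly 0.999999 under these assumptions, which is why the example architecture uses three zones rather than one.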

Q 4. Explain the benefits and tradeoffs of using different Google Cloud storage options (Cloud Storage, Persistent Disk, etc.).

GCP offers various storage options, each with its strengths and weaknesses. The best choice depends on your application’s needs, cost considerations, and performance requirements.

- Cloud Storage: Object storage offering high scalability, durability, and cost-effectiveness. Ideal for storing unstructured data like images, videos, backups, and archival data. It’s like a massive warehouse, perfect for storing lots of things, but retrieval may take a bit more time depending on the class of storage.

- Persistent Disk: Block storage providing persistent storage for VMs. Offers high performance and is ideal for storing data directly accessed by VMs. Think of this as a hard drive directly attached to your computer – fast access, but limited scalability compared to Cloud Storage.

- Cloud SQL (for databases): Managed MySQL, PostgreSQL, and SQL Server databases. Provides high availability and scalability, but comes with a higher cost than other storage options. This is like having a professional database administrator managing your important information.

Tradeoffs: Cloud Storage is cost-effective for large amounts of data but slower access compared to Persistent Disk. Persistent Disk offers high performance but is limited in scalability. Cloud SQL provides managed services for databases with higher costs, but you gain manageability and high availability. The choice involves balancing performance, cost, and manageability based on the specific workload.

Q 5. How would you implement a robust disaster recovery plan for a critical application on GCP?

Implementing a robust disaster recovery (DR) plan for a critical application on GCP involves several key steps. Think of it like having a backup plan for your most important documents.

- Replication: Replicate your application and data to a secondary region. This can involve using managed features like Cloud SQL’s cross-region read replicas or creating a replica of your VMs in a different region.

- Failover Mechanism: Establish a mechanism for automatically failing over to the secondary region in case of a disaster. This could involve using load balancing with regional failover configurations, or employing orchestration tools like Kubernetes.

- Testing: Regularly test your DR plan to ensure that it functions correctly. Periodically conduct failover drills to ensure smooth transitions during an actual event. This is crucial to validating and refining your process.

- Monitoring: Use Cloud Monitoring to monitor the health and availability of both primary and secondary regions. This will give early warnings of potential problems and help with a timely response.

- Recovery Time Objective (RTO) and Recovery Point Objective (RPO): Define your RTO and RPO to determine the acceptable downtime and data loss in case of a disaster. These parameters should guide your DR strategy.

- Documentation: Maintain comprehensive documentation outlining the steps for activating and managing the DR plan. This will reduce confusion and ensure effective response during emergencies.

For example, a critical e-commerce application might replicate its databases to a separate region using Cloud SQL cross-region read replicas, and use a load balancer to automatically switch traffic to the secondary region if the primary region becomes unavailable. Regular drills and thorough documentation help make sure your plan works when it is needed.
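RTO and RPO become useful once they are compared against what a drill actually measured. Here is a minimal sketch of that comparison; the class, field names, and numbers are hypothetical and stand in for whatever your drills record.

```python
from dataclasses import dataclass


@dataclass
class DrillResult:
    rto_minutes: float              # target: maximum acceptable downtime
    rpo_minutes: float              # target: maximum acceptable data-loss window
    failover_minutes: float         # measured: time to serve from the secondary region
    replication_lag_minutes: float  # measured: worst-case lag of the replica


def evaluate_drill(result):
    """Compare measured failover time and replication lag with the targets."""
    return {
        "meets_rto": result.failover_minutes <= result.rto_minutes,
        "meets_rpo": result.replication_lag_minutes <= result.rpo_minutes,
    }
```

A drill with a 45-minute failover against a 30-minute RTO fails the first check even if replication lag is within the RPO, which points the follow-up work at the failover mechanism rather than at replication.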

Q 6. Describe your experience with Google Cloud networking components (VPC, subnets, firewalls, etc.).

Google Cloud networking components are fundamental for creating secure and scalable applications. I have extensive experience working with Virtual Private Clouds (VPCs), subnets, firewalls, and other networking elements.

- VPCs: A virtual network that provides isolation and security for your cloud resources. I’ve designed and managed numerous VPCs, tailoring their configurations to meet the specific security and performance requirements of various applications.

- Subnets: Divisions within a VPC, enabling finer-grained control over network access. I’ve used subnets to segment traffic, implement security zones, and isolate sensitive workloads.

- Firewalls: Critical for controlling network traffic. I’ve configured both network-level firewalls (using Cloud Firewall) and instance-level firewalls to restrict access to resources and enhance security. This includes defining rules based on IP addresses, protocols, and ports.

- Cloud Interconnect: I’ve used Cloud Interconnect to establish a dedicated connection between on-premises networks and GCP, enhancing hybrid cloud deployments.

- Cloud VPN: I’m proficient in setting up and managing Cloud VPN tunnels to securely connect GCP networks to on-premises networks or other clouds.

- Route Management: I’ve worked extensively with routing tables to manage traffic flow within and between networks.

For instance, a recent project involved creating a VPC with separate subnets for web servers, application servers, and databases. We implemented strict firewall rules to control traffic flow and used Cloud VPN to connect the GCP network to the client’s on-premises network. This ensured security and network segmentation.
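Planning the subnet layout for a three-tier VPC like the one described can be sketched with Python’s standard `ipaddress` module. The 10.0.0.0/16 range, the /24 size, and the tier names are assumptions chosen for illustration.

```python
import ipaddress


def carve_subnets(vpc_cidr, tiers, new_prefix=24):
    """Assign one non-overlapping subnet of size /new_prefix to each tier."""
    network = ipaddress.ip_network(vpc_cidr)
    # subnets() yields consecutive, non-overlapping blocks inside the range.
    plan = dict(zip(tiers, network.subnets(new_prefix=new_prefix)))
    if len(plan) != len(tiers):
        raise ValueError("not enough address space (or duplicate tier names)")
    return plan


if __name__ == "__main__":
    for tier, subnet in carve_subnets("10.0.0.0/16", ["web", "app", "db"]).items():
        print(tier, subnet)
```

Doing this on paper first makes it easier to write firewall rules in terms of whole tiers instead of individual addresses.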

Q 7. How do you manage and monitor resources in GCP using Cloud Monitoring and Cloud Logging?

Cloud Monitoring and Cloud Logging are essential for managing and monitoring resources in GCP. They provide comprehensive insights into the health, performance, and security of your infrastructure and applications. Think of them as the dashboard and logs for your entire cloud environment.

- Cloud Monitoring: Offers metrics, dashboards, and alerts for monitoring resource utilization, application performance, and overall system health. I use it to track CPU usage, memory consumption, network latency, and other critical metrics. Setting up alerts helps proactively address potential problems before they escalate.

- Cloud Logging: Provides logs from various GCP services and applications, enabling analysis of events, debugging issues, and tracking application behavior. I leverage Cloud Logging’s powerful filtering and search capabilities to quickly identify and resolve issues.

- Alerting: Both services support alerting, allowing proactive notification of critical events. This ensures rapid response to issues affecting the availability or performance of applications. I configure alerts based on specific thresholds and conditions.

- Metrics and Logs Integration: Cloud Monitoring and Cloud Logging work seamlessly together. For instance, we can correlate metrics like high CPU utilization with specific error logs in Cloud Logging to quickly pinpoint the root cause of a performance bottleneck.

In a recent project, we used Cloud Monitoring to set up alerts for high CPU usage on our web servers. When an alert triggered, we used Cloud Logging to investigate the related error logs and quickly identified and fixed a bug causing an unexpected surge in traffic. This proactive approach prevented a major service disruption.
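Alerting on thresholds works best when a condition must hold for a while before it fires, so that short spikes do not page anyone. The logic can be sketched in plain Python; this is a simplified model of a threshold-plus-duration condition, not the Cloud Monitoring API, and the sample values are invented.

```python
def should_alert(samples, threshold, min_consecutive):
    """Return True if `min_consecutive` samples in a row exceed `threshold`."""
    run = 0
    for value in samples:
        # Extend the current run of breaching samples, or reset it.
        run = run + 1 if value > threshold else 0
        if run >= min_consecutive:
            return True
    return False
```

With an 80% CPU threshold and three consecutive samples required, the series 50, 85, 90, 95 fires, while 85, 90, 50, 95, 99 does not because the dip resets the run.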

Q 8. Explain different Google Cloud pricing models and how to optimize costs.

Google Cloud Platform offers a variety of pricing models, primarily based on usage, commitment, and sustained use discounts. Understanding these is crucial for cost optimization. Let’s break them down:

- Pay-as-you-go: This is the most common model. You only pay for the resources you consume, such as compute time, storage, and network traffic. This is flexible but can lead to unpredictable costs if not carefully monitored.

- Committed Use Discounts (CUDs): By committing to a certain amount of resource usage over a specific period (1 or 3 years), you can get significant discounts. This is ideal for predictable workloads with consistent resource needs. Think of it like a bulk discount at the grocery store – the more you commit to buying, the cheaper each unit becomes.

- Sustained Use Discounts (SUDs): These discounts apply automatically based on your consistent usage of a resource over a month. The more consistently you use a resource, the larger the discount. It’s a great option for applications with consistent, albeit not perfectly predictable, needs.

- Free Tier: GCP provides a generous free tier for many services, allowing you to experiment and learn without incurring costs. It’s perfect for testing and development purposes.

Optimizing Costs:

- Rightsizing Instances: Choose the appropriate instance size for your workload. Avoid over-provisioning. Regularly review and adjust instance types based on actual usage.

- Using Spot VMs (the successor to Preemptible VMs): These are significantly cheaper than regular VMs but can be preempted at any time with about 30 seconds of notice. Suitable for fault-tolerant, non-critical tasks such as batch jobs.

- Auto-Scaling: Configure autoscaling on managed instance groups to automatically adjust the number of instances based on demand. This ensures you only pay for what you need.

- Resource Monitoring and Alerting: Use Cloud Monitoring to track resource usage and set up alerts to notify you of any unusual spikes or anomalies. This allows for proactive cost management.

- Regular Cost Analysis: Use the Cloud Billing console to regularly analyze your spending, identify areas of high consumption, and implement optimization strategies.

- Deletion of Unused Resources: Regularly review and delete any unused resources such as VMs, storage buckets, or databases.

For example, if you’re running a web application with fluctuating traffic, using auto-scaling and sustained use discounts can significantly reduce your costs compared to running a fixed number of large instances.
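To show how sustained use discounts accumulate, the sketch below applies a tiered rate to the fraction of the month an instance ran. The tier table is an assumption based on the incremental rates commonly cited for N1 machine types (100%, 80%, 60%, and 40% of the base rate for each quarter of the month); check the current pricing documentation before relying on it.

```python
# (upper bound of the usage band as a fraction of the month, share of base rate)
# Assumed N1-style tiers; other machine families use different figures.
ASSUMED_SUD_TIERS = [(0.25, 1.0), (0.50, 0.8), (0.75, 0.6), (1.00, 0.4)]


def effective_rate_multiplier(usage_fraction, tiers=ASSUMED_SUD_TIERS):
    """Average share of the base rate paid for the given usage fraction."""
    if not 0 < usage_fraction <= 1:
        raise ValueError("usage_fraction must be in (0, 1]")
    billed = 0.0
    lower = 0.0
    for upper, rate in tiers:
        # Portion of the usage that falls inside this band.
        used_in_band = max(0.0, min(usage_fraction, upper) - lower)
        billed += used_in_band * rate
        lower = upper
    return billed / usage_fraction
```

Under these assumed tiers an instance that runs the whole month pays about 70% of the base rate, while one that runs half the month pays about 90%.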

Q 9. Discuss your experience with implementing security best practices on GCP (IAM, VPC, etc.).

Implementing robust security on GCP requires a multi-layered approach. I have extensive experience leveraging IAM, VPC networking, and other security services to create secure environments. Here’s how:

- IAM (Identity and Access Management): I meticulously define roles and permissions at the granular level. Instead of granting broad access, I use the principle of least privilege, assigning only necessary permissions to users, service accounts, and applications. This minimizes the blast radius in case of a compromise. For example, a database administrator might only have read/write access to the specific database they manage, not the entire GCP project.

- VPC Network Security: I design and implement secure VPC networks with firewall rules to control ingress and egress traffic. I utilize VPC peering for private communication between different VPCs. I leverage Cloud Armor for DDoS protection and for its web application firewall (WAF) rules, which mitigate common web application vulnerabilities.

- Data Encryption: I encrypt data at rest using Cloud Storage encryption and in transit using HTTPS and VPNs. I utilize Cloud Key Management Service (KMS) to manage encryption keys securely.

- Security Scanning and Monitoring: I regularly use Security Health Analytics and other security scanning tools to identify and remediate vulnerabilities. I implement logging and monitoring to detect suspicious activities.

- Vulnerability Management: I leverage tools like Container Threat Detection and Binary Authorization for containerized workloads to maintain security posture.

- Data Loss Prevention (DLP): For sensitive data, I integrate DLP tools to detect and prevent data breaches.

In one project, I migrated an on-premises application to GCP and implemented a multi-layered security approach using these techniques. The outcome was a significant improvement in security posture while maintaining efficiency.
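A least-privilege review can start with a small script over an exported IAM policy. The sketch below walks the `bindings` list of a policy and flags basic roles and public members. The function name is hypothetical, the input is a plain dictionary rather than a live API call, and the email address in the usage note is invented.

```python
BASIC_ROLES = {"roles/owner", "roles/editor", "roles/viewer"}
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def find_risky_bindings(policy):
    """Return (role, reason) pairs for bindings that deserve a closer look."""
    findings = []
    for binding in policy.get("bindings", []):
        role = binding.get("role", "")
        if role in BASIC_ROLES:
            findings.append((role, "basic role grants broad access"))
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                findings.append((role, "granted to public member " + member))
    return findings
```

For a policy that grants `roles/editor` to `user:dev@example.com` and `roles/storage.objectViewer` to `allUsers`, the function reports both bindings, each with a different reason.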

Q 10. How would you design a CI/CD pipeline using Google Cloud Build and other relevant services?

Building a robust CI/CD pipeline on GCP usually involves Cloud Build, along with other services for source code management, artifact repositories, and deployment. Here’s a typical design:

- Source Code Management: I’d start with a version control system like Cloud Source Repositories (or integrate with GitHub, Bitbucket). This acts as the single source of truth for the code.

- Cloud Build Triggers: Cloud Build triggers automate the build process. These are configured to start a build whenever changes are pushed to the source code repository.

- Build Process: The Cloud Build configuration (cloudbuild.yaml) defines the build steps: building the application, running tests, and creating deployable artifacts (Docker images, etc.).

- Artifact Registry: The built artifacts are stored in Artifact Registry, a secure repository for Docker images, Java archives, and other packages. This provides a central location for managing and distributing artifacts.

- Deployment: Depending on the target environment (Kubernetes Engine, App Engine, Cloud Run), I would use appropriate deployment tools. For Kubernetes, I’d typically use tools like Skaffold or Spinnaker. For App Engine or Cloud Run, I’d leverage their respective deployment APIs.

- Testing and Monitoring: Integrate testing at various stages of the pipeline (unit, integration, end-to-end). Cloud Monitoring provides insights into the deployed application’s health and performance.

Example cloudbuild.yaml snippet:

steps:
- name: 'gcr.io/cloud-builders/docker'
  args: ['build', '-t', 'gcr.io/$PROJECT_ID/my-app', '.']
- name: 'gcr.io/cloud-builders/docker'
  args: ['push', 'gcr.io/$PROJECT_ID/my-app']

This snippet shows a simple build process that builds a Docker image and pushes it to a registry. Note that the gcr.io/$PROJECT_ID/my-app path follows Container Registry naming; an Artifact Registry image path has the form LOCATION-docker.pkg.dev/$PROJECT_ID/REPOSITORY/my-app. The entire process is orchestrated and automated by Cloud Build, ensuring a fast and reliable CI/CD pipeline.
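When several services share the same build shape, the configuration can be generated rather than hand-copied. Cloud Build accepts JSON as well as YAML build configs, so this sketch emits the same two docker steps as JSON. The helper name is hypothetical, and the image path is simply the one from the snippet.

```python
import json


def build_config(image):
    """Return a build config with the docker build and push steps."""
    docker_builder = "gcr.io/cloud-builders/docker"
    return {
        "steps": [
            {"name": docker_builder, "args": ["build", "-t", image, "."]},
            {"name": docker_builder, "args": ["push", image]},
        ]
    }


if __name__ == "__main__":
    # $PROJECT_ID is left as-is so Cloud Build can substitute it at build time.
    print(json.dumps(build_config("gcr.io/$PROJECT_ID/my-app"), indent=2))
```

Keeping the steps in one function makes it straightforward to add a test step between build and push for every service at once.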

Q 11. Explain your experience with deploying and managing applications on Google App Engine or Cloud Run.

I have extensive experience deploying and managing applications on both Google App Engine and Cloud Run. They both offer serverless capabilities, but with different characteristics:

- App Engine: App Engine is a fully managed platform as a service (PaaS). It handles scaling, infrastructure, and many operational aspects. It supports various programming languages and frameworks. I’ve used it for applications that need automatic scaling based on request traffic and don’t require fine-grained control over the underlying infrastructure.

- Cloud Run: Cloud Run is a serverless container platform. You deploy containerized applications, and Cloud Run manages their scaling and lifecycle. It offers more control and flexibility than App Engine, especially when working with custom container images and configurations. I’ve utilized it for microservices architectures and applications requiring specific runtime environments or dependencies.

Management aspects: In both cases, I utilize the Google Cloud Console, command-line tools (gcloud), and APIs to manage applications, monitor performance, and handle deployments. For logging and monitoring, I leverage Cloud Logging and Cloud Monitoring.

Example: In a recent project, we used Cloud Run to deploy a microservice that processed image uploads. Its scalability and easy integration with other GCP services made it an ideal choice. We were able to scale the service seamlessly based on demand without worrying about server management.
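The main contract a container has to honor on Cloud Run is listening on the port given in the `PORT` environment variable. Below is a minimal sketch of such a service using only the Python standard library; the handler and its plain-text response are placeholders, not the image-processing service from the project.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def get_port(environ=os.environ):
    """Read the listening port from PORT, falling back to 8080."""
    return int(environ.get("PORT", "8080"))


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = b"ok\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def main():
    # Bind to all interfaces so the platform can route requests to the container.
    HTTPServer(("0.0.0.0", get_port()), Handler).serve_forever()
```

Calling `main()` as the container entrypoint starts the server; a production service would normally sit behind a proper WSGI or ASGI server instead.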

Q 12. How would you migrate a legacy application to Google Cloud Platform?

Migrating a legacy application to GCP involves a well-defined strategy. It’s not a simple lift-and-shift; it often requires careful planning and execution. Here’s a structured approach:

- Assessment and Planning: Thoroughly assess the application’s architecture, dependencies, and functionalities. Identify potential challenges and risks. Define migration goals (e.g., improved scalability, cost reduction, enhanced security).

- Refactoring (Optional but Recommended): For monolithic applications, consider refactoring into microservices for easier deployment and management on GCP. This might involve breaking down the application into smaller, independent units.

- Choosing the Right GCP Services: Select appropriate GCP services based on the application’s requirements. Consider Compute Engine, App Engine, Cloud Run, Kubernetes Engine, or a hybrid approach depending on the application’s needs and infrastructure dependencies.

- Database Migration: Migrate the legacy database to a GCP-managed database service like Cloud SQL or Cloud Spanner. This might involve data conversion, schema changes, and testing.

- Network Configuration: Set up a secure VPC network, configure firewalls, and ensure connectivity between the application and other GCP services.

- Testing and Validation: Conduct thorough testing at each stage of the migration. This includes functional testing, performance testing, and security testing.

- Phased Rollout: Migrate the application in phases, starting with a small subset of users or functionality, and gradually expanding to the full production environment. This minimizes the risk and allows for quick identification and resolution of any issues.

- Monitoring and Optimization: After migration, closely monitor application performance, identify bottlenecks, and optimize resource usage for better cost efficiency.

A phased rollout helps mitigate risk by allowing for iterative testing and improvement before the entire application is moved. This is a crucial aspect of a successful migration.
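One common way to pick the “small subset of users” for a phased rollout is deterministic bucketing, so that the same user always lands on the same side. The sketch below is one possible approach under that assumption; the function name and the use of SHA-256 are illustrative choices, not a GCP feature.

```python
import hashlib


def in_rollout(user_id, percent):
    """Return True if this user falls inside the first `percent` of buckets."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the hash to a stable bucket in the range 0..99.
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < percent
```

Because the bucket is stable, raising the percentage from 10 to 50 only adds users to the migrated group and never moves anyone back to the legacy system.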

Q 13. Describe your understanding of serverless computing on GCP (Cloud Functions, Cloud Run).

Serverless computing on GCP, primarily using Cloud Functions and Cloud Run, offers significant advantages in terms of scalability, cost-effectiveness, and operational simplicity. Let’s compare:

- Cloud Functions: These are event-driven functions triggered by various events such as changes in Cloud Storage, Pub/Sub messages, or HTTP requests. They are ideal for short-lived tasks that don’t require persistent connections or long-running processes. They are highly scalable and cost-effective because you only pay for the compute time used when the function is executed.

- Cloud Run: This is more suited for longer-running processes and containerized applications. You deploy container images, and Cloud Run manages scaling and lifecycle. It offers greater control and flexibility compared to Cloud Functions, allowing you to specify custom runtime environments, dependencies, and resource limits.

Choosing between them: Cloud Functions are best for small, independent tasks triggered by events, while Cloud Run is ideal for longer-running applications, microservices, and scenarios requiring more control over the execution environment.

Example: A common use case for Cloud Functions is image resizing triggered by uploading images to Cloud Storage. Conversely, a REST API or a microservice would be better suited for Cloud Run.
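For the event-driven case, much of the handler code is unwrapping the event. The sketch below decodes a Pub/Sub push-style envelope, where the message data arrives base64-encoded. Treating the payload as JSON is an assumption for the example, as are the function name and the sample fields in the usage note.

```python
import base64
import json


def decode_pubsub_push(envelope):
    """Extract id, attributes, and JSON payload from a push-style envelope."""
    message = envelope["message"]
    raw = base64.b64decode(message.get("data", ""))
    return {
        "id": message.get("messageId"),
        "attributes": message.get("attributes", {}),
        # Assumes publishers send JSON; empty data yields None.
        "payload": json.loads(raw) if raw else None,
    }
```

An upload notification whose data decodes to `{"bucket": "photos", "name": "cat.png"}` comes back as a dictionary the resizing logic can use directly.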

Q 14. How would you implement a data pipeline using Dataflow and BigQuery?

Building a data pipeline using Dataflow and BigQuery involves several steps:

- Data Ingestion: The pipeline begins with data ingestion. Data sources can vary (CSV files in Cloud Storage, databases, streaming data from Pub/Sub). Dataflow can read from these sources.

- Data Transformation (Dataflow): Dataflow is used to process and transform the data. This might involve cleaning, filtering, aggregating, or joining data from different sources. Dataflow’s parallel processing capabilities enable efficient handling of large datasets.

- Data Loading into BigQuery: The transformed data is then loaded into BigQuery, Google’s fully managed, highly scalable, and cost-effective data warehouse. Dataflow provides efficient ways to load data into BigQuery.

- Data Quality and Monitoring: Implement data quality checks within the Dataflow pipeline to ensure data accuracy and consistency. Utilize Cloud Monitoring to track pipeline performance and identify any issues.

- Scheduling and Orchestration: Use Cloud Composer (or other orchestration tools) to schedule and manage the entire data pipeline. This ensures the pipeline runs regularly and reliably.

Example: Let’s say you need to process log data from various sources, aggregate it, and store it in BigQuery for analysis. Dataflow would be used to read the log data, parse and clean it, aggregate relevant metrics, and finally load the processed data into BigQuery. You can schedule this pipeline using Cloud Composer to run daily or hourly, depending on your requirements.
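The parse, clean, and aggregate stages of the log example can be prototyped in plain Python before they are written as Dataflow transforms. This sketch is a stand-in for that logic only: it does not use Apache Beam, and the three-field log format is a hypothetical one invented for the example.

```python
from collections import Counter


def parse(line):
    """Parse 'timestamp service status'; return None for malformed lines."""
    parts = line.split()
    if len(parts) != 3 or not parts[2].isdigit():
        return None
    timestamp, service, status = parts
    return {"timestamp": timestamp, "service": service, "status": int(status)}


def run_pipeline(lines):
    """Parse, drop bad records, and count 5xx responses per service."""
    records = (parse(line) for line in lines)
    valid = (r for r in records if r is not None)
    errors = Counter(r["service"] for r in valid if r["status"] >= 500)
    # Rows shaped like what would be written to a BigQuery table.
    return [{"service": s, "error_count": c} for s, c in sorted(errors.items())]
```

Each function maps naturally onto one pipeline step, and the data quality check is the explicit `None` filter in the middle.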

Q 15. Explain your experience with Google Cloud’s database options (Cloud SQL, Cloud Spanner, Bigtable).

Google Cloud offers a diverse range of database solutions, each catering to specific needs. My experience encompasses Cloud SQL, Cloud Spanner, and Bigtable.

- Cloud SQL: Ideal for relational databases, providing managed instances of MySQL, PostgreSQL, and SQL Server. I’ve used it extensively for applications requiring ACID properties and structured data, like e-commerce platforms needing reliable transaction management. For example, I migrated a client’s legacy MySQL database to Cloud SQL, improving performance and scalability significantly by leveraging its automated backups and high availability options.

- Cloud Spanner: A globally distributed, scalable, and strongly consistent database, perfect for applications demanding low latency across multiple regions. I’ve utilized it in projects requiring worldwide data synchronization, ensuring consistent data access irrespective of location. Imagine building a global social network: Cloud Spanner’s global consistency would be invaluable.

- Bigtable: A NoSQL, wide-column store ideal for large-scale analytical processing and operational data stores. I’ve employed it in projects involving massive datasets, such as analyzing user behavior on a large-scale e-commerce platform, where its ability to handle petabytes of data is crucial.

The choice between these databases hinges on factors like data structure, scalability needs, consistency requirements, and budget.

Q 16. How do you ensure the security and compliance of data stored in Google Cloud?

Data security and compliance are paramount in Google Cloud. My approach is multi-layered and encompasses several strategies:

- Access control: I leverage Identity and Access Management (IAM) to implement the principle of least privilege, granting only necessary permissions to users and services. This prevents unauthorized access and minimizes the impact of potential breaches.

- Network isolation: I utilize Virtual Private Clouds (VPCs) and firewalls to isolate sensitive data and control network access. This ensures that only authorized systems can communicate with the databases and applications storing sensitive information.

- Encryption: I encrypt data both in transit (using TLS) and at rest, managing the encryption keys with Cloud KMS where customer-managed keys are required. This protects data even if a system is compromised.

- Monitoring: I regularly monitor security logs and alerts to detect and respond promptly to any suspicious activity.

- Compliance: I adhere to relevant frameworks like HIPAA, PCI DSS, or ISO 27001, depending on the specific requirements. This involves configuring appropriate controls, implementing regular audits, and maintaining comprehensive documentation.

For instance, I recently implemented a robust security posture for a healthcare client, leveraging Cloud KMS for encryption and employing Security Command Center for threat detection, in support of the client’s HIPAA compliance.

Q 17. Explain your experience with deploying and managing containerized applications on Google Kubernetes Engine (GKE).

I have extensive experience deploying and managing containerized applications on Google Kubernetes Engine (GKE). My workflow typically begins with creating a GKE cluster, carefully selecting node pools and configurations based on application requirements. I then use tools like Helm or kubectl to deploy and manage applications, leveraging Kubernetes’ features for scalability, high availability, and automated rollouts. I’m proficient in utilizing Kubernetes concepts such as Deployments, StatefulSets, and DaemonSets to ensure appropriate application deployment and management. I’m also familiar with implementing advanced features like Horizontal Pod Autoscaling (HPA) and Ingress controllers for efficient resource utilization and traffic management. For example, I recently migrated a monolithic application to a microservices architecture on GKE, significantly improving the application’s scalability and maintainability. This involved creating separate deployments for each microservice, utilizing ConfigMaps and Secrets for configuration management and securing sensitive information. Monitoring and logging are integral parts of my GKE deployments, utilizing tools like Prometheus, Grafana, and the Cloud Logging service to proactively identify and resolve issues.
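It is worth being able to explain how Horizontal Pod Autoscaling arrives at a replica count. The core of the Kubernetes algorithm is the ratio of the current metric to the target metric; the sketch below implements that ratio with min/max clamping and deliberately leaves out details such as the tolerance band and stabilization windows. The default limits are arbitrary example values.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Simplified HPA calculation: scale by the metric ratio, then clamp."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))
```

Four pods averaging 90% CPU against a 60% target scale to six pods, because 4 × 90 / 60 = 6.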

Q 18. How would you implement a microservices architecture on Google Cloud?

Implementing a microservices architecture on Google Cloud leverages many of GCP’s services. I would start by defining clear service boundaries and responsibilities. Each microservice would be a self-contained unit, deployed independently using containers managed by GKE. Cloud Run might also be considered for stateless services. For communication between services, I’d use a service mesh like Istio, offering features like traffic management, security, and observability. Each microservice could have its own database (Cloud SQL, Cloud Spanner, or Cloud Firestore depending on its needs). API Gateway would manage external access to the microservices, securing and routing requests. Cloud Pub/Sub would facilitate asynchronous communication between services. For monitoring and logging, I would integrate with Cloud Monitoring and Cloud Logging. Finally, I would utilize CI/CD pipelines (e.g., Cloud Build) for automated builds, testing, and deployments. This approach provides high availability, fault isolation, and scalability, allowing for independent scaling and updates of individual services. For example, an e-commerce platform could be decomposed into services managing inventory, payments, and user accounts, each deployed and scaled independently.

Q 19. Describe different load balancing options available on GCP and their use cases.

Google Cloud offers several load balancing options, each with specific use cases. The primary types include:

- HTTP(S) Load Balancing: Distributes inbound HTTP and HTTPS traffic across multiple instances of an application. Ideal for web applications, APIs, and other services requiring load balancing at the application layer. This is commonly used for scaling web applications.

- TCP/UDP Load Balancing: Distributes network traffic for TCP and UDP protocols. Suitable for applications that don’t use HTTP, such as gaming servers or custom applications using TCP or UDP.

- Internal TCP/UDP Load Balancing: Handles traffic within the VPC, distributing it between instances on a private network. Ideal for internal microservices communication. Because the load balancer is reachable only through internal IP addresses, it reduces external exposure.

- SSL Proxy Load Balancing: Terminates SSL/TLS connections at the load balancer for non-HTTP(S) TCP traffic, so backend instances do not have to handle encryption and decryption. This offloads TLS processing from the backends and centralizes certificate management.
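The selection logic behind this list can be sketched as a small decision function. This is a simplified illustration covering only the four options named above, not an exhaustive map of Google Cloud's load balancing products (for example, internal HTTP(S) load balancing is ignored). The function name and parameters are assumptions made for the example.

```python
def choose_load_balancer(protocol: str, internal: bool = False,
                         terminate_tls: bool = False) -> str:
    """Map coarse traffic traits to one of the four options listed above."""
    protocol = protocol.upper()
    if protocol in ("HTTP", "HTTPS"):
        return "HTTP(S) Load Balancing"
    if protocol not in ("TCP", "UDP"):
        raise ValueError(f"unsupported protocol: {protocol}")
    if internal:
        # Traffic that never leaves the VPC.
        return "Internal TCP/UDP Load Balancing"
    if protocol == "TCP" and terminate_tls:
        # Non-HTTP TCP traffic with TLS terminated at the load balancer.
        return "SSL Proxy Load Balancing"
    return "TCP/UDP Load Balancing"


if __name__ == "__main__":
    print(choose_load_balancer("udp"))                      # game server
    print(choose_load_balancer("tcp", internal=True))       # internal service
```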

Q 20. How would you troubleshoot common network connectivity issues on GCP?

Troubleshooting network connectivity issues on GCP involves a systematic approach. I would start by checking the instance’s status and ensuring it’s running. Next, I’d verify network configuration, checking firewall rules to ensure that inbound and outbound traffic is allowed. The `gcloud compute firewall-rules list` command is invaluable here. I’d also inspect the instance’s network interfaces and ensure proper IP addressing and routing. Tools like `traceroute` and `ping` can help identify points of failure. If the problem lies within the VPC, I would examine the VPC network configuration, including subnets, routes, and peered networks. Cloud Logging and Monitoring provide crucial information on network activity and potential errors. If the issue involves external connectivity, I would check for any DNS resolution problems, and ensure that the appropriate DNS settings are configured. I would also look at the health checks associated with load balancers, confirming that the backend instances are healthy and responding to requests. For instance, a recent issue was resolved by identifying a misconfigured firewall rule that was blocking inbound traffic to a web server.
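When reasoning about a suspect firewall rule, it helps to remember how VPC firewall rules are evaluated: the matching rule with the lowest priority number wins, a deny beats an allow at equal priority, and ingress that matches nothing is blocked by the implied deny rule. The sketch below models only that ordering. The `IngressRule` class, its fields, and the rule names are hypothetical, and real rules also match on targets, tags, service accounts, and protocols.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    name: str
    priority: int        # 0-65535; lower number is evaluated first
    action: str          # "allow" or "deny"
    source_range: str    # CIDR, e.g. "0.0.0.0/0"
    port: int


def evaluate_ingress(rules, source_ip: str, port: int) -> str:
    """Return '<action>:<rule name>' for the deciding rule."""
    ip = ipaddress.ip_address(source_ip)
    matching = [r for r in rules
                if r.port == port
                and ip in ipaddress.ip_network(r.source_range)]
    if not matching:
        return "deny:implied-deny-ingress"
    # Lowest priority number wins; on a tie, deny beats allow.
    winner = min(matching, key=lambda r: (r.priority, r.action != "deny"))
    return f"{winner.action}:{winner.name}"


if __name__ == "__main__":
    rules = [
        IngressRule("allow-web", 1000, "allow", "0.0.0.0/0", 443),
        IngressRule("block-partner", 900, "deny", "203.0.113.0/24", 443),
    ]
    print(evaluate_ingress(rules, "203.0.113.7", 443))
```

In this example the broad allow rule exists, yet traffic from the 203.0.113.0/24 range is still blocked by the higher-priority deny, which is the kind of misconfiguration described above.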

Q 21. Explain your experience with using Terraform or Cloud Deployment Manager for infrastructure-as-code.

I have significant experience with both Terraform and Cloud Deployment Manager (CDM) for Infrastructure-as-Code (IaC). Terraform’s declarative approach, using HashiCorp Configuration Language (HCL), offers excellent flexibility and portability across multiple cloud providers. I find its community support and extensive module library very useful. I’ve used it for managing everything from simple VMs to complex multi-region deployments. An example project involved creating and managing a global infrastructure using Terraform, ensuring consistent and repeatable deployments across multiple regions. CDM, Google Cloud’s own IaC solution, provides tight integration with GCP services. Its YAML-based configuration is straightforward for deploying resources within GCP. However, its portability is limited to GCP. I’ve successfully used CDM for automating the deployment of complex environments that heavily rely on GCP specific services, such as Cloud Dataflow pipelines. The choice between Terraform and CDM depends on the specific project needs and preferences. If portability is a priority or the project involves multi-cloud environments, Terraform is preferred. For projects solely on GCP and leveraging deeply integrated services, CDM can be a more efficient option.

Q 22. How would you design and implement a solution for data backup and recovery on GCP?

Data backup and recovery on GCP is crucial for business continuity and disaster recovery. My approach would leverage a multi-layered strategy combining native GCP services for optimal efficiency and resilience.

- Compute Engine Instances: For VM instances, I’d use snapshot schedules to take regular persistent disk snapshots. Snapshots are incremental, which keeps storage costs down, and they can be stored in a multi-regional location or in a region other than the source disk’s for geographical redundancy. Batch jobs that export or verify backups can run on preemptible (Spot) VMs to reduce cost, provided they tolerate interruption.

- Cloud SQL: For Cloud SQL instances, I’d enable automated backups and set the retention period and backup window according to the Recovery Time Objective (RTO) and Recovery Point Objective (RPO). Enabling point-in-time recovery (PITR) allows a restore to a specific moment between backups, which is crucial for minimizing data loss.

- Cloud Storage: I’d use lifecycle policies to automatically manage storage class transitions (e.g., moving older backups to Nearline or Coldline storage to optimize costs). Versioning protects against accidental deletion. Data encryption at rest and in transit should be enabled for security.

- Cloud Storage Transfer Service: This service allows for efficient transfer of backups to and from other cloud providers or on-premises locations, fostering a hybrid-cloud backup strategy. It offers scheduling and replication options for automated backups.

- Disaster Recovery: I would design a failover plan using a secondary region, ensuring the application can be restored with minimal downtime. Regular disaster recovery drills are vital to validate the plan’s effectiveness.

Example: For a high-availability e-commerce application, I’d enable Cloud SQL’s automated daily backups with point-in-time recovery and keep backup copies in a geographically separate location. For compute instances, I’d utilize scheduled snapshots and a secondary region for disaster recovery, ensuring RTO and RPO targets are met.
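Whether a backup schedule meets its RPO can be checked with simple arithmetic: the worst-case data loss is the longest gap between consecutive restore points, including the gap from the latest backup to now. The sketch below assumes backups are the only restore points (no point-in-time recovery), and the function names are illustrative.

```python
from datetime import datetime, timedelta


def worst_case_data_loss(backup_times, now):
    """Largest gap between consecutive backups, including the gap up to now."""
    points = sorted(backup_times) + [now]
    return max(later - earlier for earlier, later in zip(points, points[1:]))


def meets_rpo(backup_times, now, rpo: timedelta) -> bool:
    """True when no gap between restore points exceeds the RPO."""
    if not backup_times:
        return False  # no backups at all can never satisfy an RPO
    return worst_case_data_loss(backup_times, now) <= rpo


if __name__ == "__main__":
    start = datetime(2024, 1, 1)
    daily = [start + timedelta(days=d) for d in range(3)]
    now = start + timedelta(days=2, hours=12)
    print(meets_rpo(daily, now, timedelta(hours=24)))  # daily backups, 24 h RPO
    print(meets_rpo(daily, now, timedelta(hours=4)))   # same backups, 4 h RPO
```

A daily schedule satisfies a 24-hour RPO but not a 4-hour one, which is where point-in-time recovery or more frequent snapshots come in.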

Q 23. Describe your approach to monitoring and alerting for critical applications running on GCP.

Monitoring and alerting for critical GCP applications requires a comprehensive strategy utilizing GCP’s monitoring and logging services. My approach is proactive, focusing on early detection and rapid response to potential issues.

- Cloud Monitoring: I’d use Cloud Monitoring to create dashboards visualizing key performance indicators (KPIs) such as CPU utilization, memory usage, network latency, and request response times. Custom metrics can be defined to track application-specific data.

- Cloud Logging: Cloud Logging captures logs from applications and infrastructure, enabling detailed analysis of errors, performance bottlenecks, and security events. Structured logging is crucial for effective searching and analysis.

- Alerting: I’d configure alerts based on predefined thresholds for KPIs and log patterns. Alerts would be sent via email, PagerDuty, or other notification channels, ensuring timely responses to critical events. Alerting rules should be carefully tuned to avoid alert fatigue.

- Trace and Profiler: Using Cloud Trace to analyze the performance of individual requests and Cloud Profiler to identify performance bottlenecks in application code would help in proactive issue identification and resolution.

- Error Tracking: Implement tools like Error Reporting to collect and analyze application errors, allowing for proactive fixes and ensuring high application availability.

Example: If CPU utilization exceeds 80% for 15 minutes, an alert would be triggered, notifying the operations team to investigate potential scaling issues. Similarly, specific error messages in Cloud Logging can trigger alerts, ensuring quick resolution of application bugs.
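The "threshold held for a duration" condition in this example can be sketched as follows. This is a simplified model of the idea, not how Cloud Monitoring evaluates alerting policies internally; it assumes one CPU sample per minute, and the function name and defaults are illustrative.

```python
def should_alert(samples, threshold=80.0, duration_minutes=15):
    """Decide whether a sustained-threshold alert should fire.

    samples: list of (minute, cpu_percent) ordered by time, one per minute.
    Fires only when every sample in the trailing window exceeds the threshold.
    """
    if len(samples) < duration_minutes:
        return False  # not enough history to cover the full window
    window = samples[-duration_minutes:]
    return all(cpu > threshold for _, cpu in window)


if __name__ == "__main__":
    sustained = [(minute, 85.0) for minute in range(15)]
    brief_spike = [(minute, 50.0) for minute in range(14)] + [(14, 95.0)]
    print(should_alert(sustained))    # sustained load
    print(should_alert(brief_spike))  # single spike
```

Requiring the whole window to breach the threshold is one way to avoid paging on short spikes, which helps with the alert fatigue mentioned above.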

Q 24. Explain your understanding of Google Cloud’s identity and access management (IAM).

Google Cloud’s Identity and Access Management (IAM) is a fundamental security service providing granular control over access to resources. It’s based on the principle of least privilege, meaning users only have the permissions necessary to perform their tasks.

- Roles and Permissions: IAM defines roles which are collections of permissions. These roles are assigned to users, groups, and service accounts. This allows for efficient management of access control.

- Hierarchy: IAM employs a hierarchical structure, allowing for inheritance of permissions. This simplifies administration when managing access to multiple resources within an organization.

- Service Accounts: Service accounts are special accounts used by applications to access GCP resources. They offer a secure way for applications to authenticate and authorize without relying on human users.

- Organizations, Folders, and Projects: IAM’s hierarchical structure allows for central management of permissions across multiple projects. Organizations sit at the top level, then folders for grouping projects, and finally individual projects.

- IAM Policies: IAM policies define the access control rules for a given resource. These policies can be managed through the Google Cloud Console, the gcloud command-line tool, or APIs.

Example: A database administrator might be assigned the `roles/cloudsql.admin` role, granting full control of Cloud SQL resources in the project. A read-only user could be assigned the predefined `roles/cloudsql.viewer` role, or a custom role containing only the read permissions they need.
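Policy inheritance can be illustrated with a short sketch: the roles a member effectively holds on a resource are the union of the roles granted on that resource and on every ancestor up to the organization. The resource names, the member, and the data layout below are hypothetical, and the sketch ignores IAM Conditions and deny policies.

```python
def effective_roles(member, resource, parents, bindings):
    """Collect roles granted to `member` on `resource` and all its ancestors.

    parents:  child resource -> parent resource (hypothetical names)
    bindings: resource -> {role: set of members}
    """
    roles = set()
    node = resource
    while node is not None:
        for role, members in bindings.get(node, {}).items():
            if member in members:
                roles.add(role)
        node = parents.get(node)  # None once the top of the hierarchy is reached
    return roles


if __name__ == "__main__":
    parents = {
        "projects/shop-prod": "folders/retail",
        "folders/retail": "organizations/example",
    }
    bindings = {
        "folders/retail": {"roles/viewer": {"user:ana@example.com"}},
        "projects/shop-prod": {"roles/cloudsql.admin": {"user:ana@example.com"}},
    }
    print(sorted(effective_roles("user:ana@example.com",
                                 "projects/shop-prod", parents, bindings)))
```

Because grants made high in the hierarchy flow down to every project beneath them, broad roles at the organization or folder level deserve the most scrutiny under least privilege.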

Q 25. How would you optimize the performance of a database running on GCP?

Optimizing database performance on GCP depends on the specific database system and workload. However, several general strategies apply.

- Right-Sizing Instances: Choose an instance type with sufficient CPU, memory, and storage based on expected workload. Consider using instance machine types optimized for databases.

- Database Tuning: Optimize database configurations, including connection pooling, query optimization, and indexing strategies. Use database-specific tools for performance analysis and tuning.

- Caching: Implement caching mechanisms to reduce database load. Memorystore, Google Cloud’s managed Redis and Memcached service, can be used to cache frequently accessed data.

- Connection Pooling: Efficient connection management through connection pooling reduces overhead associated with establishing database connections.

- Query Optimization: Analyze slow queries and optimize them using techniques like indexing, query rewriting, and using appropriate data types.

- Scaling: Consider scaling the database vertically (larger instances) or horizontally (multiple instances) depending on the workload’s growth. Cloud SQL offers features for both scaling approaches.

- Schema Design: Ensure a well-designed database schema that is normalized and avoids unnecessary joins.

Example: For a high-traffic application, choosing a suitably sized Cloud SQL instance and employing appropriate indexing strategies are crucial for performance.
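The caching strategy above is commonly implemented as the cache-aside pattern: read from the cache first, fall back to the database on a miss, and invalidate on writes. The sketch below uses an in-process dictionary in place of a real cache such as Memorystore; the class name, the `load_from_db` callable, and the TTL are assumptions for illustration.

```python
import time


class CacheAside:
    """Cache-aside helper: check the cache first, fall back to the database."""

    def __init__(self, load_from_db, ttl_seconds=60.0, clock=time.monotonic):
        self._load = load_from_db      # hypothetical callable: key -> value
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}             # key -> (expires_at, value)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is not None and entry[0] > self._clock():
            return entry[1]            # cache hit
        value = self._load(key)        # cache miss or expired entry
        self._entries[key] = (self._clock() + self._ttl, value)
        return value

    def invalidate(self, key):
        """Call after a write so readers do not see stale data."""
        self._entries.pop(key, None)


if __name__ == "__main__":
    queries = []

    def load_product(key):
        queries.append(key)            # stands in for a database query
        return {"id": key}

    cache = CacheAside(load_product)
    cache.get("sku-1")
    cache.get("sku-1")
    print(len(queries))                # the database was queried once
```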

Q 26. Discuss your experience with using Google Cloud’s API management services.

Google Cloud’s API management services, primarily Apigee, provide a comprehensive platform for designing, securing, and managing APIs. My experience involves using Apigee to create robust and scalable API solutions.

- API Design and Development: Apigee supports designing APIs using RAML or OpenAPI specifications and provides tools for testing and debugging APIs.

- Security: Apigee provides robust security features including authentication, authorization, rate limiting, and API key management. This is essential for protecting sensitive data and preventing unauthorized access.

- Monitoring and Analytics: Apigee offers detailed monitoring and analytics dashboards to track API performance, usage, and security events. These insights are crucial for optimizing API performance and troubleshooting issues.

- Deployment and Management: Apigee simplifies deployment and management of APIs through its integrated platform. Versioning and lifecycle management features ensure smooth updates and rollbacks.

- API Gateway: Apigee acts as a gateway, routing API requests to backend services and enforcing security policies.

Example: I’ve used Apigee to create an API for a mobile application, securing it with OAuth 2.0 and implementing rate limiting to prevent abuse. The analytics dashboard provided valuable insights into API usage patterns, allowing for optimization and capacity planning.
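Rate limiting of the kind mentioned in this example is often explained with a token bucket. The sketch below illustrates that general algorithm only; it is not Apigee's implementation or configuration syntax, and the class name and parameters are assumptions.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate_per_second`."""

    def __init__(self, rate_per_second, capacity, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = capacity
        self._clock = clock
        self._tokens = float(capacity)   # start with a full bucket
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1            # spend one token for this request
            return True
        return False                     # caller would typically return HTTP 429


if __name__ == "__main__":
    bucket = TokenBucket(rate_per_second=5, capacity=10)
    accepted = sum(bucket.allow() for _ in range(25))
    print(accepted)                      # roughly the burst capacity
```

The capacity controls how large a burst a client may send, while the refill rate sets the sustained request rate, so the two can be tuned separately per API consumer.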

Q 27. Explain your approach to troubleshooting application performance issues on GCP.

Troubleshooting application performance issues on GCP involves a systematic approach leveraging GCP’s monitoring and diagnostic tools.

- Identify the Problem: Start by identifying symptoms, such as slow response times, high error rates, or resource exhaustion.

- Gather Data: Use Cloud Monitoring, Cloud Logging, and Cloud Trace to collect relevant data, including metrics, logs, and traces. Look for patterns and anomalies.

- Analyze the Data: Correlate data from different sources to identify the root cause of the problem. Use visualization tools to gain insights into application behavior.

- Isolate the Issue: Isolate the problem to a specific component of the application or infrastructure. This might involve analyzing network traffic, database queries, or application code.

- Implement Solutions: Based on the root cause analysis, implement solutions such as code optimization, database tuning, resource scaling, or infrastructure changes.

- Validate Solutions: Monitor the application after implementing the solutions to verify their effectiveness and ensure the problem is resolved.

Example: If a web application is experiencing slow response times, I’d first check Cloud Monitoring for CPU and memory utilization. Then, I’d analyze Cloud Logging for error messages and Cloud Trace for request latency. This systematic approach would help pinpoint the bottleneck (e.g., database query, network issue, or application code) and allow for targeted solutions.
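The "isolate the issue" step often comes down to finding which part of a request dominates its latency. The sketch below works on a simplified trace, represented as a list of (name, duration) pairs, and is not the Cloud Trace API. It assumes the spans run sequentially and do not overlap; the span names are hypothetical.

```python
def slowest_span(spans):
    """Return (name, share_of_total) for the slowest span in one request.

    spans: list of (name, duration_ms), assumed sequential and non-overlapping.
    """
    total = sum(duration for _, duration in spans)
    if total <= 0:
        raise ValueError("trace has no recorded duration")
    name, duration = max(spans, key=lambda span: span[1])
    return name, duration / total


if __name__ == "__main__":
    trace = [("frontend", 20), ("auth", 30), ("db-query", 150)]
    name, share = slowest_span(trace)
    print(f"{name} accounts for {share:.0%} of the request")
```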

Q 28. How would you implement a multi-region deployment strategy for a highly available application on GCP?

Implementing a multi-region deployment strategy for high availability on GCP involves replicating application components across multiple regions to ensure resilience against regional outages.

- Regional Deployment: Deploy application components in multiple regions, ensuring geographic diversity for resilience against regional failures.

- Load Balancing: Use Cloud Load Balancing to distribute traffic across instances in different regions, ensuring high availability and responsiveness.

- Data Replication: Replicate data across regions using services like Cloud SQL cross-region read replicas or Cloud Spanner multi-region configurations for globally distributed databases.

- Global DNS: Configure Cloud DNS routing policies (for example, geolocation routing) to direct users to the closest region, minimizing latency and improving user experience.

- Service Mesh: Utilize a service mesh like Istio for managing service discovery, traffic routing, and fault tolerance across different regions.

- Disaster Recovery Plan: Establish a comprehensive disaster recovery plan, outlining procedures for switching over to a secondary region in case of a primary region failure.

- Monitoring and Alerting: Implement robust monitoring and alerting across all regions to quickly detect and respond to failures.

Example: A globally distributed e-commerce application would have its web servers, application servers, and databases deployed in multiple regions. Cloud Load Balancing would distribute traffic, ensuring high availability. Cloud SQL cross-region replicas (which replicate asynchronously) or Cloud Spanner (which is strongly consistent across regions) would keep data available in each region, enabling failover.
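The routing behaviour described here, sending users to the closest region and failing over when it is unhealthy, can be sketched in a few lines. This models the decision only; in practice it is made by the load balancer or DNS routing policy. The latency figures and health data are hypothetical.

```python
def pick_region(latency_ms, healthy):
    """Return the lowest-latency healthy region, or None if all are down.

    latency_ms: region -> measured latency from the client (hypothetical data)
    healthy:    set of regions currently passing health checks
    """
    candidates = {r: ms for r, ms in latency_ms.items() if r in healthy}
    if not candidates:
        return None
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    latency = {"us-central1": 40, "europe-west1": 110, "asia-east1": 180}
    print(pick_region(latency, set(latency)))                     # normal case
    print(pick_region(latency, {"europe-west1", "asia-east1"}))   # failover
```

Running a disaster recovery drill amounts to removing the primary region from the healthy set and confirming that the next-best region can actually carry the traffic.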

Key Topics to Learn for Google Cloud Platform Certified Professional Cloud Architect Interview

- Compute Engine: Understand instance types, machine types, scheduling options, and autoscaling. Consider practical applications like designing a highly available web application or a cost-effective batch processing system.

- Networking: Master VPC networking, including subnets, firewalls, VPNs, and Cloud Interconnect. Practice designing secure and scalable network architectures for different application needs.

- Storage: Deeply understand Cloud Storage, Persistent Disk, and other storage options. Practice choosing the right storage solution for various data types and access patterns. Consider the implications of different storage classes on cost and performance.

- Databases: Gain proficiency in Cloud SQL, Cloud Spanner, and Firestore (the successor to Cloud Datastore). Be prepared to discuss database selection based on scalability, reliability, and cost considerations for different applications.

- Security: Understand Identity and Access Management (IAM), security best practices, and compliance considerations. Be ready to discuss designing secure cloud architectures and implementing security measures to protect sensitive data.

- Deployment and Management: Familiarize yourself with deployment strategies using tools like Deployment Manager and Kubernetes Engine. Practice automating deployments and managing infrastructure as code.

- Data Analytics: Explore BigQuery, Dataflow, and Dataproc for processing and analyzing large datasets. Be ready to discuss designing data pipelines and building data warehousing solutions.

- Cost Optimization: Learn how to analyze and optimize cloud costs using tools and best practices. This includes understanding pricing models and implementing strategies for cost reduction.

- Monitoring and Logging: Understand how to monitor the health and performance of your cloud infrastructure using Cloud Monitoring and Cloud Logging. Be prepared to discuss setting up alerts and dashboards for proactive issue management.

- Serverless Technologies: Gain experience with Cloud Functions and Cloud Run. Be ready to discuss the advantages and disadvantages of serverless architectures and when to use them.

Next Steps

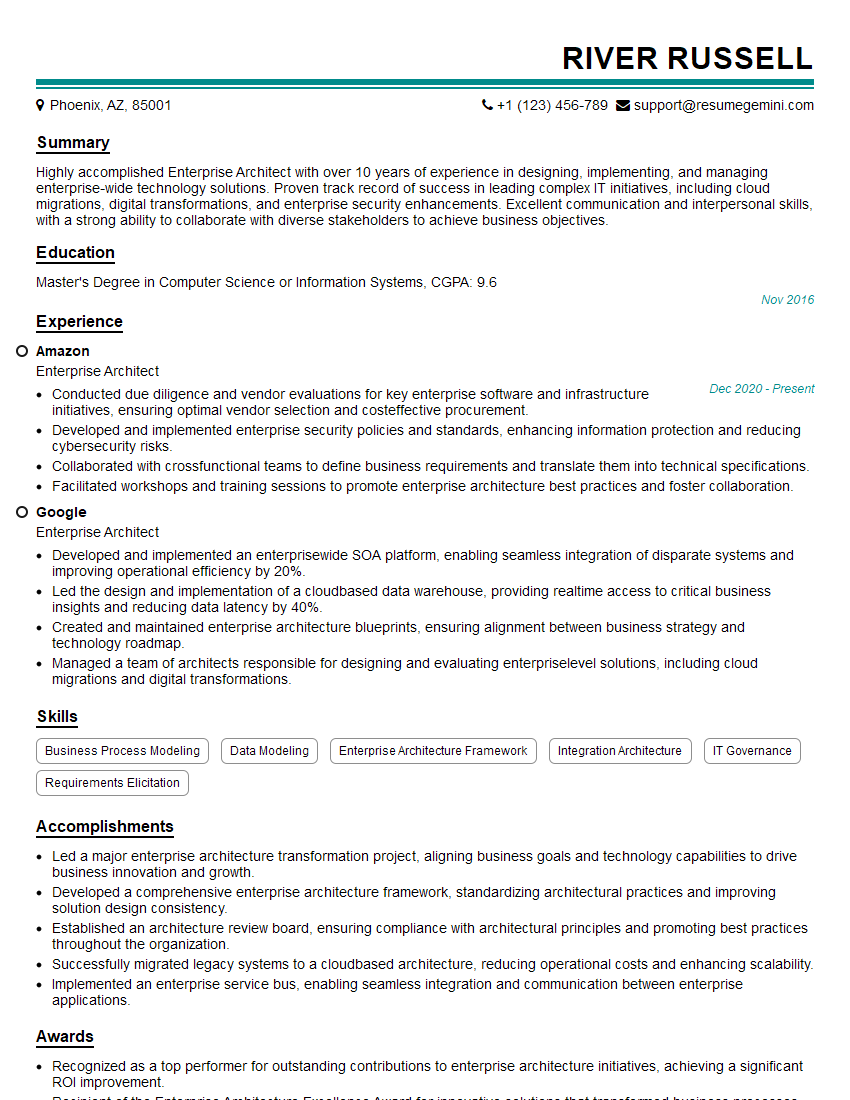

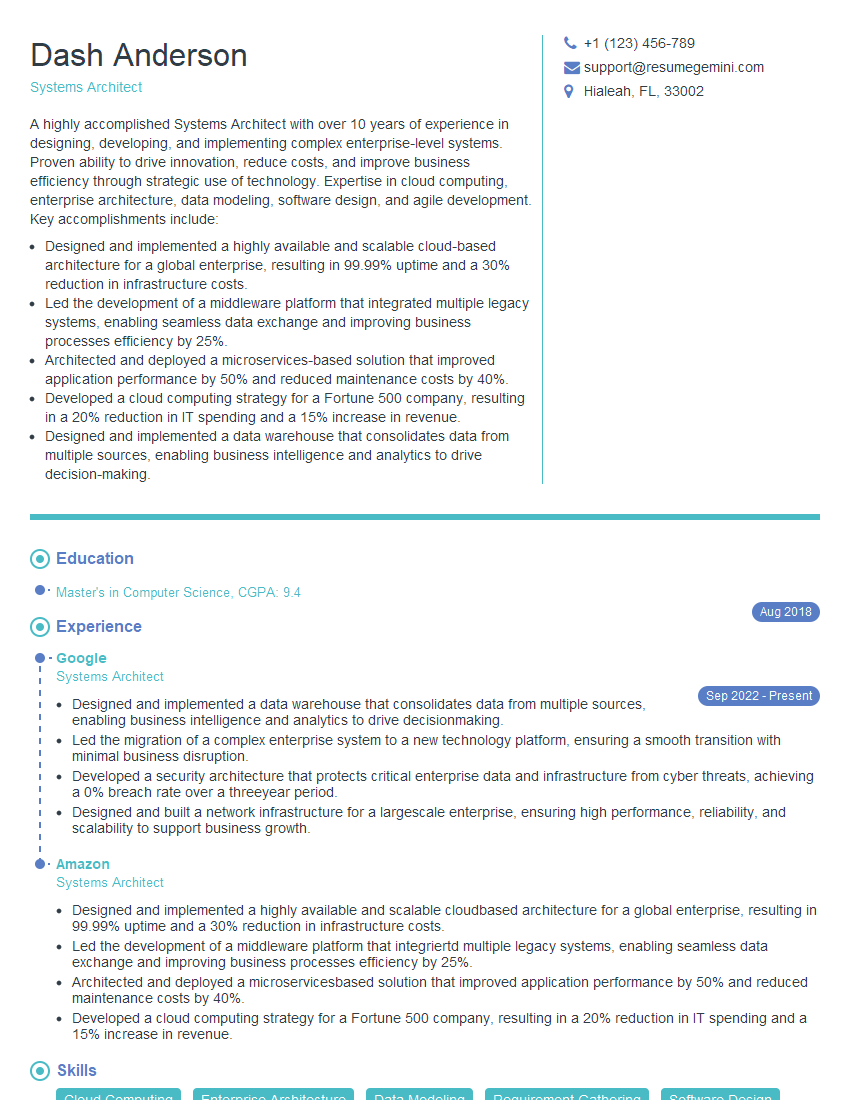

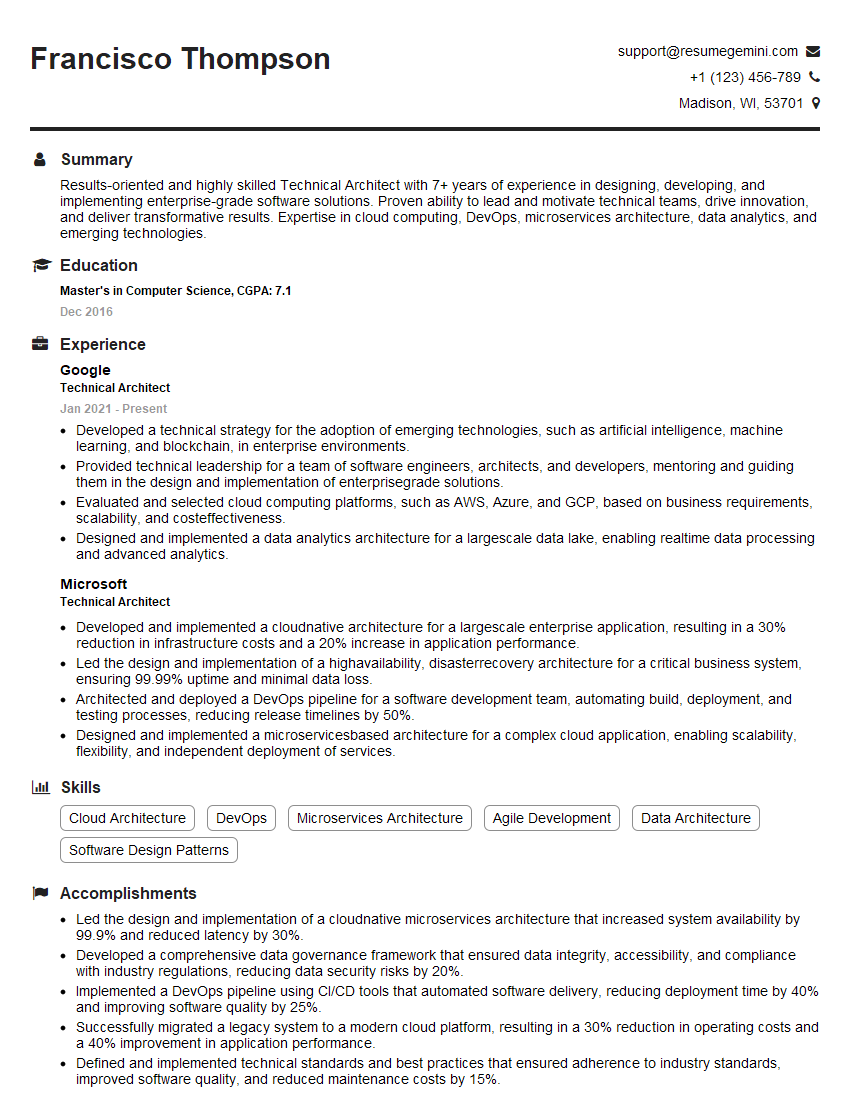

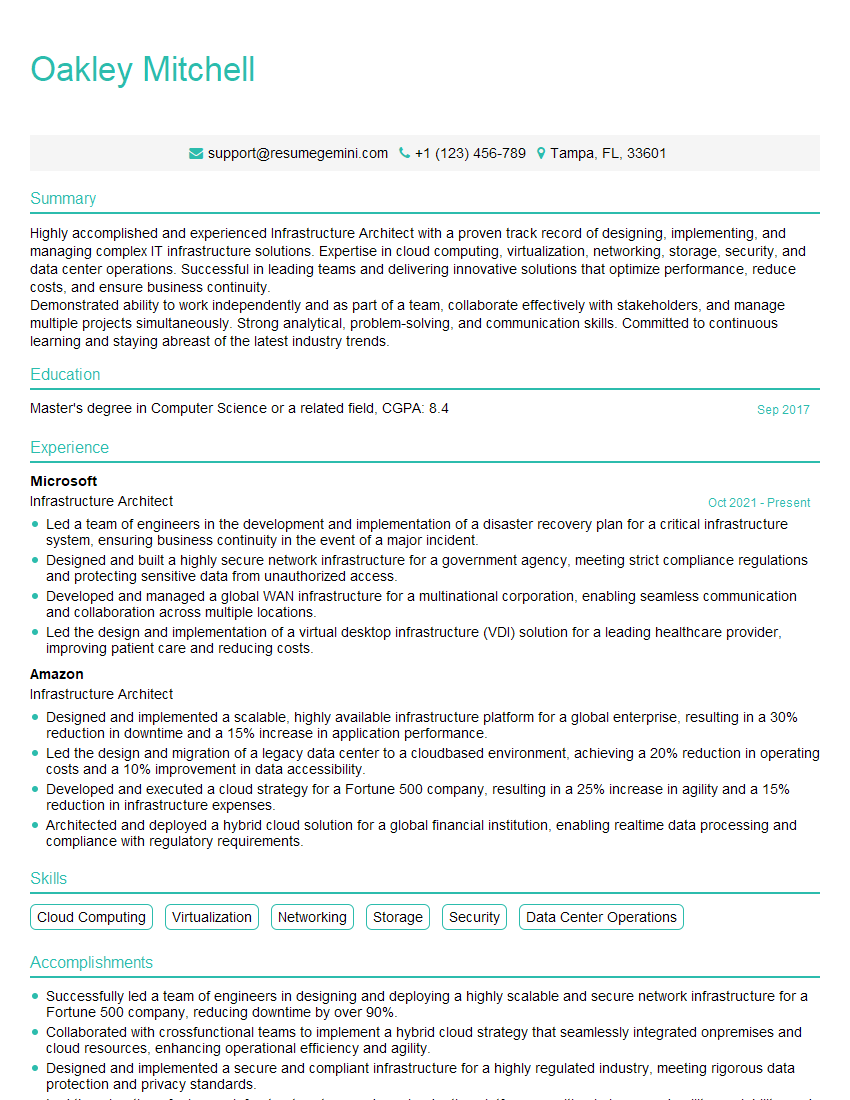

Mastering the Google Cloud Platform Certified Professional Cloud Architect certification significantly boosts your career prospects, opening doors to high-demand roles with substantial compensation. To maximize your job search success, create a compelling and ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource that can help you craft a professional resume tailored to the specific requirements of this certification. Examples of resumes tailored to the Google Cloud Platform Certified Professional Cloud Architect role are available to provide you with inspiration and guidance. Invest the time to build a strong resume – it’s a crucial step in landing your dream job.
