The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to GPS and GIS data collection interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in GPS and GIS Data Collection Interviews

Q 1. Explain the difference between GPS and GIS.

GPS (Global Positioning System) and GIS (Geographic Information System) are closely related but distinct technologies. Think of it like this: GPS provides the where, while GIS provides the what and why.

GPS is a satellite-based navigation system that determines the precise location of a receiver on Earth. It uses signals from multiple satellites to calculate latitude, longitude, and altitude. It’s the technology behind your car’s navigation system and many mapping apps.

GIS, on the other hand, is a system designed to capture, store, manipulate, analyze, manage, and present all types of geographically referenced data. This data could be anything from property boundaries and road networks to environmental data like soil composition or population density. GIS uses GPS data as one of its many data sources to create maps and perform spatial analysis.

In essence, GPS provides the location data, while GIS provides the framework for understanding and using that data within a broader context.

Q 2. Describe the various types of GPS receivers and their applications.

GPS receivers come in a wide variety of types, each with its own strengths and applications:

- Handheld GPS Receivers: These are portable devices, ideal for recreational use, hiking, and basic field reconnaissance. They are relatively inexpensive and typically offer accuracy of a few meters.

- Mapping-grade GPS Receivers: These are more accurate and robust than handheld units. They’re commonly used for GIS data collection and asset mapping, typically offering sub-meter accuracy (decimeter-level with differential correction). Centimeter-level work generally calls for survey-grade receivers.

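- Real-Time Kinematic (RTK) GPS Receivers: These provide the highest accuracy of the types listed here, typically centimeter-level (around 1 to 2 cm horizontally under good conditions). They use carrier-phase measurements and require corrections from a base station (or a network of reference stations) to cancel errors the base and rover share, such as atmospheric delays and satellite orbit and clock errors. RTK is essential for high-precision applications like construction surveying and precision agriculture.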

- Integrated GPS Receivers: These are built into other devices, such as smartphones, tablets, and even drones. The accuracy varies greatly depending on the device and the quality of the integrated GPS chip.

The choice of GPS receiver depends entirely on the accuracy requirements and the application. For example, a handheld unit is sufficient for hiking, while RTK GPS is necessary for precise land surveying.

Q 3. What are the common sources of error in GPS data collection?

GPS data collection is susceptible to several sources of error. These can be broadly categorized as:

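- Atmospheric Effects: The ionosphere and troposphere delay and refract GPS signals, leading to positional errors. These effects are largest for low-elevation satellites, whose signals travel a longer path through the atmosphere, and ionospheric delay grows during periods of high solar activity.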

- Multipath Errors: Signals can bounce off buildings or other objects before reaching the receiver, causing inaccurate position readings. This is common in urban canyons.

- Satellite Geometry (GDOP): The geometric arrangement of satellites affects the accuracy of the position calculation. Poor satellite geometry (high GDOP) leads to lower accuracy.

- Receiver Noise: Internal noise in the receiver can degrade signal quality and increase positional errors. This is less of an issue with higher-end receivers.

- Obstructions: Anything blocking the line of sight to the satellites (trees, buildings, mountains) will reduce the number of visible satellites and decrease accuracy.

Understanding these error sources is crucial for planning GPS data collection and implementing appropriate error mitigation strategies.
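To make the satellite-geometry point concrete, here is a minimal Python sketch (assuming NumPy is available) that computes dilution of precision from the azimuth and elevation of each tracked satellite. It uses the standard linearized geometry matrix with a receiver-clock column; the function name and the example sky plots are illustrative, not taken from any receiver's software.

```python
import numpy as np

def dop_from_sky(az_el_deg):
    """Compute GDOP, PDOP, HDOP and VDOP from satellite azimuth/elevation.

    az_el_deg: list of (azimuth, elevation) pairs in degrees, as seen from
    the receiver in a local east-north-up (ENU) frame.
    """
    rows = []
    for az, el in az_el_deg:
        az_r, el_r = np.radians(az), np.radians(el)
        # Unit line-of-sight vector receiver -> satellite in ENU
        e = np.cos(el_r) * np.sin(az_r)
        n = np.cos(el_r) * np.cos(az_r)
        u = np.sin(el_r)
        # Geometry-matrix row: negated line of sight plus 1 for receiver clock bias
        rows.append([-e, -n, -u, 1.0])
    G = np.array(rows)
    Q = np.linalg.inv(G.T @ G)  # needs at least 4 satellites in non-degenerate geometry
    return {
        "GDOP": float(np.sqrt(np.trace(Q))),
        "PDOP": float(np.sqrt(Q[0, 0] + Q[1, 1] + Q[2, 2])),
        "HDOP": float(np.sqrt(Q[0, 0] + Q[1, 1])),
        "VDOP": float(np.sqrt(Q[2, 2])),
    }

# Hypothetical sky plots: one overhead satellite plus three spread low around
# the horizon, versus five satellites bunched in one part of the sky.
spread = [(0, 90), (0, 20), (120, 20), (240, 20)]
clustered = [(0, 60), (20, 65), (40, 60), (30, 70), (10, 75)]
```

Comparing `dop_from_sky(spread)["GDOP"]` with `dop_from_sky(clustered)["GDOP"]` shows why satellites spread across the sky give a better fix than more satellites bunched together.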

Q 4. How do you handle differential GPS (DGPS) data?

Differential GPS (DGPS) significantly improves the accuracy of GPS data by using a reference (base) station at a precisely known location. The reference station receives the same satellite signals as the rover unit (the receiver taking measurements in the field) and compares what it observes with what it should observe given its known position, usually producing a correction for each satellite’s pseudorange. These corrections are then applied to the rover’s measurements, in real time or afterwards, removing most of the errors the two receivers share.

Handling DGPS data involves:

- Post-Processing: The rover’s raw data is processed using the correction data from the base station. This is often done using specialized software.

- Real-Time Corrections: Corrections are transmitted to the rover in real-time, allowing for immediate, highly accurate positioning. This requires a communication link between the base station and rover (like a radio modem).

- Data Quality Control: Checking the accuracy of the correction data and identifying any outliers or errors in the processed data.

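Using DGPS is essential for applications that need better than stand-alone accuracy, such as GIS mapping and asset inventory; for survey-grade work such as land surveying and construction, its carrier-phase counterpart (RTK or post-processed kinematic) is normally required.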
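The correction step described above can be sketched in Python under simplifying assumptions: corrections are per-satellite pseudorange corrections, satellite positions are already known in ECEF meters, and time tagging, range-rate terms, and the base receiver's clock bias (common to all satellites, so it is absorbed into the rover's own clock estimate) are ignored. The function names are hypothetical, not from any DGPS library or RTCM tool.

```python
import math

def pseudorange_corrections(base_ecef, sat_ecef, base_pseudoranges):
    """Per-satellite corrections computed at a base station with known coordinates.

    base_ecef: (x, y, z) of the base in meters (ECEF).
    sat_ecef: {prn: (x, y, z)} satellite positions at the same epoch.
    base_pseudoranges: {prn: measured pseudorange in meters}.
    """
    corrections = {}
    for prn, measured in base_pseudoranges.items():
        geometric_range = math.dist(base_ecef, sat_ecef[prn])
        # Positive when the measurement was too short, negative when too long
        corrections[prn] = geometric_range - measured
    return corrections

def apply_corrections(rover_pseudoranges, corrections):
    """Add the base-station correction to each rover pseudorange.

    Satellites without a correction are dropped: the rover should only use
    satellites the base also tracked.
    """
    return {prn: pr + corrections[prn]
            for prn, pr in rover_pseudoranges.items() if prn in corrections}
```

The corrected pseudoranges would then go into the rover's normal position solution; this range-domain approach is why the rover and base must track a common set of satellites.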

Q 5. What are the different coordinate systems used in GIS?

GIS uses various coordinate systems to represent locations on the Earth’s surface. The most common are:

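- Geographic Coordinate System (GCS): Uses latitude and longitude, measured in angular units (degrees) on a reference ellipsoid, to define locations. Latitude measures north-south position, and longitude measures east-west position. It’s a global system, but because a degree of longitude shrinks toward the poles, distances and areas can’t be measured directly in degrees, which makes it awkward for local mapping and analysis.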

- Projected Coordinate System (PCS): Projects the curved surface of the Earth onto a flat plane using a mathematical projection, giving planar coordinates in linear units such as meters or feet. Every projection distorts some combination of shape, area, distance, and direction, so the choice of projection matters greatly. Different projections are suited for different regions and purposes (e.g., UTM, State Plane).

- Universal Transverse Mercator (UTM): A common PCS that divides the Earth into 60 longitudinal zones, each 6° wide and mapped with its own transverse Mercator projection. It is widely used in mapping and surveying.

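- State Plane Coordinate System (SPCS): A set of PCSs used in the United States, with zones optimized for individual states or parts of states (larger states are split into several zones). The zones mostly use Transverse Mercator or Lambert Conformal Conic projections, which keeps distortion small within each zone.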

Selecting the appropriate coordinate system is crucial for ensuring accurate spatial analysis and avoiding significant distortions in map representations.
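As a small practical aid to choosing a UTM zone, here is a Python sketch that returns the regular 6° zone and the matching WGS 84 UTM EPSG code (326xx north, 327xx south) for a latitude/longitude. It assumes WGS 84 coordinates and deliberately ignores the special zone boundaries around Norway and Svalbard; the function name is hypothetical.

```python
def utm_epsg(lat, lon):
    """Return (zone, hemisphere, EPSG code) of the WGS 84 UTM zone for a point.

    Uses the regular 6-degree zones and ignores the Norway/Svalbard
    exceptions, so treat results in those areas with care.
    """
    if not -80.0 <= lat <= 84.0:
        raise ValueError("UTM covers 80S to 84N; use UPS near the poles")
    # Normalize longitude to [-180, 180) and find the 6-degree band (1..60)
    lon = (lon + 180.0) % 360.0 - 180.0
    zone = int((lon + 180.0) // 6) + 1
    hemisphere = "N" if lat >= 0 else "S"
    epsg = (32600 if hemisphere == "N" else 32700) + zone
    return zone, hemisphere, epsg
```

For example, a point in New York City (about 40.7°N, 74.0°W) falls in zone 18N, EPSG:32618.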

Q 6. Explain the concept of datum transformation.

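Datum transformation is the process of converting coordinates from one geodetic datum to another. A datum defines a reference ellipsoid (approximating the shape and size of the Earth) and how that ellipsoid is positioned and oriented relative to the Earth, which fixes the origin of its coordinate system. Different datums exist because they were defined at different times, from different observations, and often to best fit a particular region rather than the whole globe.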

For example, NAD83 and WGS84 are two common datums. They are similar, but in North America their coordinates for the same point can differ by a meter or more, so transforming between them is necessary before overlaying or analyzing datasets that use both.

Datum transformations typically apply a mathematical model (for example, a 3- or 7-parameter Helmert transformation, or a grid-based method such as NADCON) with published parameters to move coordinates from one datum to another. This ensures that data originating from different sources, using different datums, can be accurately integrated and analyzed within a GIS environment. The need for these transformations highlights the complexity of representing the Earth’s surface in a digital format.
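To illustrate the parametric approach, here is a minimal Python sketch of a small-angle 7-parameter Helmert transformation on Earth-centered (ECEF) coordinates, using the position-vector rotation convention. The parameter values in any real job must come from an authoritative source such as the EPSG registry; many modern NAD83/WGS84 realizations actually use time-dependent parameters, and production work would normally rely on a library such as PROJ rather than hand-rolled code. The function name is hypothetical.

```python
import math

ARCSEC_TO_RAD = math.pi / (180.0 * 3600.0)

def helmert_7param(xyz, tx, ty, tz, rx_arcsec, ry_arcsec, rz_arcsec, scale_ppm):
    """Apply a small-angle 7-parameter Helmert transformation to ECEF coordinates.

    Translations in meters, rotations in arc-seconds, scale in parts per million.
    Position-vector convention; the coordinate-frame convention flips the
    signs of the three rotations.
    """
    x, y, z = xyz
    rx, ry, rz = (r * ARCSEC_TO_RAD for r in (rx_arcsec, ry_arcsec, rz_arcsec))
    s = 1.0 + scale_ppm * 1e-6
    # X' = T + s * R * X with R = [[1, -rz, ry], [rz, 1, -rx], [-ry, rx, 1]]
    xp = tx + s * (x - rz * y + ry * z)
    yp = ty + s * (rz * x + y - rx * z)
    zp = tz + s * (-ry * x + rx * y + z)
    return xp, yp, zp
```

Even a 1 arc-second rotation moves a point on the equator by roughly 31 m, which is why the rotation sign convention must match the published parameters.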

Q 7. Describe your experience with different data collection methods (e.g., total stations, RTK GPS).

My experience encompasses a wide range of data collection methods, including:

- Total Stations: I’ve extensively used total stations for high-precision surveying projects, measuring distances and angles to create detailed topographic maps and cadastral surveys. Their accuracy makes them ideal for precise measurements of features.

- RTK GPS: I’ve leveraged RTK GPS extensively for real-time, centimeter-level accuracy in various applications, including construction stakeout, precision agriculture mapping, and environmental monitoring. The real-time feedback is invaluable for these applications.

- GNSS (Global Navigation Satellite System): This goes beyond just GPS, encompassing other global satellite navigation systems like GLONASS, Galileo, and BeiDou. Using multi-GNSS receivers increases the number of available satellites, improving the accuracy and reliability of positioning, especially in challenging environments with poor satellite geometry.

- Mobile Mapping Systems (MMS): I’ve worked with MMS which use a combination of GPS, inertial measurement units (IMUs), and laser scanners to create highly accurate and detailed 3D models of infrastructure, roads, and other assets. This provides a more complete picture than simple point data.

Each method offers unique advantages depending on the project’s requirements, budget, and desired level of accuracy. Selecting the optimal method requires careful consideration of the specific needs and constraints of each project. For instance, while RTK GPS provides incredible accuracy, total stations may be more suitable in environments with heavy canopy cover where satellite signals are weak.

Q 8. How do you ensure data accuracy and precision during GPS data collection?

Ensuring data accuracy and precision in GPS data collection is paramount. It involves a multi-pronged approach combining best practices in equipment handling, data processing, and quality control. Think of it like baking a cake – you need the right ingredients (equipment), the correct recipe (procedures), and careful attention to detail (QC) to get a perfect result.

High-Quality GPS Receivers: Using a receiver with a high number of channels and the ability to track multiple satellite constellations (GPS, GLONASS, Galileo, BeiDou) improves signal acquisition, especially in challenging environments with poor satellite visibility (like dense urban areas or heavily forested regions). I always prefer receivers capable of differential GPS (DGPS) or Real-Time Kinematic (RTK) correction, significantly enhancing accuracy.

Proper Survey Techniques: Techniques such as static surveying (for high-accuracy base station establishment) and kinematic surveying (for efficient data collection) are crucial. For kinematic surveying, I use mission-planning tools to schedule sessions when satellite geometry is good (low DOP) and plan routes that minimize signal obstructions. On large-scale projects I also employ multiple base stations, because RTK accuracy degrades as the distance between base and rover grows, to keep accuracy consistent across the entire survey area.

Post-Processing: When real-time corrections are unavailable, or an independent check is needed, post-processing refines the data. This involves applying corrections from base station data to rover data, which cancels most atmospheric and satellite orbit and clock errors, and accounting for other systematic biases. Open-source software like RTKLIB is widely used for this purpose.

Data Validation: After data collection, I perform rigorous data validation. This may involve visual inspection on a map to identify outliers or improbable positions, checking for positional accuracy against known control points and using statistical methods to analyze the data’s overall quality and consistency.
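The check against known control points can be quantified as shown in this minimal Python sketch. It assumes both sets of coordinates are already in the same projected CRS in meters, and it reports the National Standard for Spatial Data Accuracy (NSSDA) horizontal figure, 1.7308 × RMSE_r, which applies when the x and y errors are of similar size. NSSDA recommends at least 20 well-distributed check points; the function name is hypothetical.

```python
import math

def horizontal_accuracy(measured, control):
    """Compare surveyed points with control coordinates (same projected CRS, meters).

    measured, control: dicts {point_id: (easting, northing)}.
    Returns RMSE in x and y, radial RMSE, and the NSSDA horizontal accuracy
    at 95% confidence (1.7308 * RMSE_r, valid when RMSE_x and RMSE_y are similar).
    """
    ids = sorted(set(measured) & set(control))
    if not ids:
        raise ValueError("no common control points")
    dx2 = [(measured[i][0] - control[i][0]) ** 2 for i in ids]
    dy2 = [(measured[i][1] - control[i][1]) ** 2 for i in ids]
    rmse_x = math.sqrt(sum(dx2) / len(ids))
    rmse_y = math.sqrt(sum(dy2) / len(ids))
    rmse_r = math.sqrt(rmse_x ** 2 + rmse_y ** 2)
    return {"rmse_x": rmse_x, "rmse_y": rmse_y, "rmse_r": rmse_r,
            "nssda_95": 1.7308 * rmse_r}
```

Only points present in both dictionaries are compared, so unmatched control points are simply ignored rather than raising an error.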

Q 9. What software packages are you proficient in for GIS data processing and analysis (e.g., ArcGIS, QGIS)?

My GIS software proficiency spans both industry-standard and open-source solutions. I’m highly experienced in ArcGIS, including the ArcMap and ArcGIS Pro desktop applications and extensions such as Spatial Analyst and Geostatistical Analyst. I utilize ArcGIS for everything from geoprocessing and spatial analysis to cartography and data management. I’m also highly skilled in QGIS, appreciating its flexibility and open-source nature. QGIS serves as a powerful alternative, particularly useful for tasks like data visualization, processing large datasets, and plugin integration. In addition, I have experience using other specialized software packages like Global Mapper and Whitebox GAT depending on the project’s needs.

Q 10. How do you handle data quality control and assurance in a GIS project?

Data quality control and assurance (QA/QC) is an integral part of any GIS project. Think of it as a quality check at every step of the process. Neglecting this step can lead to costly errors and inaccurate results. My approach incorporates several crucial steps:

Data Source Assessment: I begin by evaluating the accuracy, completeness, and reliability of all data sources. This includes assessing metadata and verifying data provenance. Knowing the quality of your ‘ingredients’ is as crucial as the recipe itself.

Data Cleaning and Preprocessing: This involves identifying and correcting errors like spatial inconsistencies, attribute errors, and inconsistencies in data formats. This is like prepping your ingredients before you start cooking – making sure everything is ready.

Spatial Validation: I use various techniques like topological checks (identifying overlaps and gaps in polygons), positional accuracy assessment (comparing to known control points), and visual inspection to identify spatial anomalies. This is similar to tasting your cake batter – you need to ensure all the ingredients are properly combined before baking.

Attribute Validation: Ensuring consistency and accuracy of attribute data, including data type checking and range checks. This involves checking for inconsistencies or errors in the data entered.

Documentation: Thorough documentation throughout the entire process, which includes detailing the QA/QC procedures and the results of data checks, is essential to ensure reproducibility and transparency.
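The attribute validation step (type, range, and domain checks) can be sketched in plain Python. The schema format and the road-layer fields below are hypothetical examples, not any GIS package's domain model; in ArcGIS or QGIS the same rules would usually live in attribute domains or field constraints.

```python
def validate_record(record, schema):
    """Return a list of problems found in one attribute record.

    schema maps field name -> dict with a required "type" and optional
    "min"/"max" range limits or an "allowed" set of domain values.
    """
    problems = []
    for field, rules in schema.items():
        value = record.get(field)
        if value is None:
            problems.append(f"{field}: missing")
            continue
        if not isinstance(value, rules["type"]):
            problems.append(f"{field}: expected {rules['type'].__name__}")
            continue
        if "min" in rules and value < rules["min"]:
            problems.append(f"{field}: {value} below {rules['min']}")
        if "max" in rules and value > rules["max"]:
            problems.append(f"{field}: {value} above {rules['max']}")
        if "allowed" in rules and value not in rules["allowed"]:
            problems.append(f"{field}: {value!r} not in domain")
    return problems

# Hypothetical schema for a road layer
ROAD_SCHEMA = {
    "road_name": {"type": str},
    "road_type": {"type": str, "allowed": {"highway", "arterial", "residential"}},
    "speed_limit": {"type": int, "min": 5, "max": 130},
}
```

Returning a list of messages rather than raising on the first error makes it easy to write every problem to a QA/QC log, which feeds directly into the documentation step.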

Q 11. Explain your experience with georeferencing and creating geodatabases.

Georeferencing and creating geodatabases are fundamental aspects of my work. Georeferencing involves assigning real-world coordinates to data that doesn’t have them (like scanned maps or aerial photographs). This requires identifying corresponding points, called ground control points (GCPs), in both the source image and a reference dataset with known coordinates (e.g., an existing map or orthorectified aerial imagery). ArcGIS and QGIS fit a transformation to these GCPs, most often a first-order (affine) polynomial; higher-order polynomials can absorb more distortion but need more GCPs, so I check the residual (RMS) error at each GCP to confirm the fit.
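The first-order polynomial fit behind georeferencing can be sketched with NumPy least squares. This assumes GCPs are given as matching (image x, image y) and (map x, map y) pairs; the function name and the sample GCPs are hypothetical, and the RMS figure is the kind of residual error GIS georeferencing tools report.

```python
import numpy as np

def fit_affine(src_xy, dst_xy):
    """Fit a first-order (affine) polynomial mapping image coords to map coords.

    src_xy, dst_xy: sequences of matching (x, y) GCP pairs, at least 3.
    Returns the 2x3 coefficient matrix [[a, b, c], [d, e, f]] and the RMS
    residual in map units.
    """
    src = np.asarray(src_xy, dtype=float)
    dst = np.asarray(dst_xy, dtype=float)
    # Design matrix [x, y, 1] for X' = a*x + b*y + c and Y' = d*x + e*y + f
    A = np.column_stack([src, np.ones(len(src))])
    coeffs, *_ = np.linalg.lstsq(A, dst, rcond=None)  # shape (3, 2)
    residuals = A @ coeffs - dst
    rms = float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))
    return coeffs.T, rms
```

With exactly three GCPs the fit is exact and the RMS is zero regardless of GCP quality, so using four or more well-spread GCPs is what makes the residual a meaningful check.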

Creating geodatabases involves organizing and storing geographic data in a structured manner. A geodatabase can be considered the ‘house’ where all the geographic data (‘residents’) live. It provides a flexible framework for managing datasets, feature classes (e.g., points, lines, polygons), and their attributes. I utilize file geodatabases (for smaller projects) and enterprise geodatabases (for large, collaborative projects) based on project scale and requirements. This organized structure ensures efficient data management and analysis.

Q 12. Describe your experience working with various map projections.

Working with different map projections is essential for representing geographic data accurately. Map projections are ways to transform the curved surface of the Earth onto a flat map, each projection introducing some distortion. Understanding which projection to use for specific applications and regions is key. I have extensive experience with various map projections including:

UTM (Universal Transverse Mercator): Commonly used for large-scale mapping, minimizing distortion within relatively small zones.

State Plane Coordinate Systems: Optimized for specific states or regions, minimizing distortion for that area.

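Geographic Coordinate System (GCS) – Latitude and Longitude: Strictly a coordinate system rather than a projection; when latitude and longitude are drawn directly as x and y (a plate carrée display), shapes and areas become increasingly distorted toward the poles.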

Albers Equal-Area Conic: Useful for preserving area, often used in thematic mapping.

My selection of a projection depends heavily on the scale and extent of the project, the intended application (e.g., navigation, thematic analysis, land surveying), and the acceptable levels of distortion. Incorrect projection choice can lead to inaccuracies in distances, areas, and shapes, which is why selecting the most suitable projection is crucial.

Q 13. How do you create and edit shapefiles and feature classes?

Shapefiles and feature classes are fundamental data structures in GIS. Shapefiles, though now often superseded by geodatabases, are still widely used. They store vector data as a collection of related files (e.g., .shp, .shx, .dbf). Feature classes are the core components of geodatabases, representing geographic features (points, lines, polygons) with associated attributes.

I create and edit these using both ArcGIS and QGIS. Creation typically involves defining the feature class properties (geometry type, coordinate system, attributes), and then digitizing features directly on the map or importing data from other sources (e.g., CAD files, CSV files). Editing involves adding, modifying, and deleting features, and updating attribute information. For instance, using ArcGIS Pro’s editing tools, I can accurately digitize a polygon representing a building footprint, then add attributes for building ID, address, and construction year. Similar workflows can be done with shapefiles in QGIS. Using tools like the attribute table in QGIS or the Attributes pane in ArcGIS Pro, I manage and modify these attributes.

Q 14. What are your skills in spatial analysis (e.g., buffering, overlay)?

Spatial analysis is a core strength. My skills include a wide range of techniques, including:

Buffering: Creating zones of a specified distance around features (e.g., creating a 500-meter buffer around rivers as a riparian setback zone; true floodplain delineation needs elevation data and hydrological modeling).

Overlay: Combining multiple spatial datasets to extract information (e.g., overlaying a soil map and a land-use map to analyze soil types in different land-use zones).

Spatial Join: Linking attributes from one feature class to another based on spatial relationships (e.g., assigning each address point the ID and population of the census tract polygon it falls within).

Network Analysis: Analyzing networks for shortest paths, optimal routes, and service area calculations (e.g., finding the nearest hospital based on driving distances).

Proximity Analysis: Analyzing distances between features to understand spatial relationships (e.g., determining the distance between a new store and the nearest competitors).

I utilize both the built-in spatial analysis tools in ArcGIS and QGIS, as well as scripting (e.g., Python) for more complex or customized analyses. For example, I have utilized Python scripting to automate a series of overlay operations on a large dataset, significantly reducing processing time compared to manual processing.
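To show what a point-in-polygon spatial join does under the hood, here is a plain-Python sketch using the ray-casting test. It assumes simple polygons without holes, points and polygons in the same CRS, and does not define behavior for points exactly on a boundary. Real GIS tools add spatial indexes so they don't test every polygon; the function names are hypothetical.

```python
def point_in_polygon(x, y, ring):
    """Ray-casting test: is (x, y) inside the polygon ring [(x0, y0), ...]?"""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Does a horizontal ray from (x, y) toward +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def spatial_join(points, polygons):
    """Attach the id of the containing polygon to each point (None if outside).

    points: {point_id: (x, y)}; polygons: {polygon_id: ring}.
    """
    return {
        pid: next((poly_id for poly_id, ring in polygons.items()
                   if point_in_polygon(x, y, ring)), None)
        for pid, (x, y) in points.items()
    }
```

Each crossing of the ray toggles the inside/outside state, so an odd number of crossings means the point lies inside the polygon.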

Q 15. How familiar are you with different data formats (e.g., shapefiles, GeoTIFF, GeoJSON)?

I’m highly proficient in various geospatial data formats. Understanding these formats is crucial for interoperability and efficient data processing. Shapefiles, for instance, are a widely used vector format storing geographic features like points, lines, and polygons, along with their associated attributes, which are kept as one record per feature in a dBASE (.dbf) table. GeoTIFFs, on the other hand, are raster files ideal for imagery data, such as satellite images or aerial photography, with georeferencing information encoded directly within the file. Finally, GeoJSON is a text-based format that’s increasingly popular due to its ease of use and compatibility with web mapping applications. It represents geographic features as JavaScript Object Notation (JSON) objects and, under its current specification (RFC 7946), stores coordinates as WGS 84 longitude/latitude pairs, which suits web-based GIS applications. I routinely work with all three in my projects, often needing to convert between them depending on the task at hand.

For example, I recently worked on a project mapping deforestation in the Amazon. The initial data was provided as a GeoTIFF satellite image showcasing changes in vegetation. I then processed this data to extract specific features – boundaries of deforestation – and saved them in a shapefile to easily perform spatial analysis in GIS software. The final product, an interactive map showing deforestation zones, was created using GeoJSON to facilitate easy web integration.
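For the web-delivery step, a GeoJSON polygon can be assembled with nothing but Python's standard `json` module. This is a minimal sketch: the coordinates and the `zone_id`/`status` attributes are made up for illustration, and coordinates follow the RFC 7946 order of longitude first, then latitude.

```python
import json

def to_geojson_feature(ring_lonlat, properties):
    """Build a GeoJSON Polygon Feature from a ring of (lon, lat) pairs."""
    ring = [list(pt) for pt in ring_lonlat]
    if ring[0] != ring[-1]:
        ring.append(ring[0])  # GeoJSON linear rings must be closed
    return {
        "type": "Feature",
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "properties": properties,
    }

# Made-up coordinates and attributes; exterior ring runs counterclockwise,
# as RFC 7946 recommends.
collection = {
    "type": "FeatureCollection",
    "features": [
        to_geojson_feature(
            [(-60.10, -3.10), (-60.00, -3.10), (-60.00, -3.00), (-60.10, -3.00)],
            {"zone_id": 1, "status": "cleared"},
        )
    ],
}
text = json.dumps(collection)
```

Swapping the coordinate order to latitude first is one of the most common GeoJSON mistakes; it silently places features in the wrong part of the world.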

Q 16. Describe your experience with attribute data management.

Attribute data management is a cornerstone of GIS. It involves organizing and managing the non-spatial information associated with geographic features. Think of it as the ‘who, what, and when’ of your map data, while the geometry supplies the ‘where’. For example, in a shapefile representing roads, attributes might include road name, type (highway, residential), speed limit, and last maintenance date. My experience encompasses data cleaning, validation, and standardization; ensuring data consistency and accuracy is vital. This often involves dealing with missing values, incorrect data types, and inconsistencies in data entry. I’m skilled in using database management systems and GIS software to perform these tasks efficiently.

I once worked on a project involving a large dataset of water quality monitoring points. The data contained numerous errors, including inconsistent units, missing dates, and misspelled locations. I used a combination of scripting (Python with libraries like Pandas and Geopandas) and GIS software to clean and standardize the data. This included automated checks for data type consistency, spatial validation against existing map layers to identify misplaced points, and using statistical techniques to impute missing values. The result was a clean, reliable dataset ready for advanced analysis.
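The unit-standardization part of that cleanup can be sketched without Pandas, which keeps the logic visible. This assumes each record is a dict with hypothetical `site`, `value`, `unit`, and `date` keys and that dates have already been parsed into `datetime.date` objects; the conversion table covers only the units shown, and imputation is left out.

```python
from datetime import date

# Conversion factors to a common unit (mg/L); hypothetical, extend as needed
TO_MG_PER_L = {"mg/L": 1.0, "ug/L": 0.001, "µg/L": 0.001}

def standardize(records):
    """Convert concentrations to mg/L and flag records that need follow-up.

    records: list of dicts with "site", "value", "unit" and "date" keys.
    Returns (clean_records, flagged_records).
    """
    clean, flagged = [], []
    for rec in records:
        unit = (rec.get("unit") or "").strip()
        if unit not in TO_MG_PER_L or rec.get("value") is None:
            flagged.append({**rec, "reason": "unknown unit or missing value"})
            continue
        if not isinstance(rec.get("date"), date):
            flagged.append({**rec, "reason": "missing or unparsed date"})
            continue
        clean.append({**rec, "value": rec["value"] * TO_MG_PER_L[unit], "unit": "mg/L"})
    return clean, flagged
```

Flagging rather than silently dropping bad records keeps an audit trail of what still needs manual review or imputation.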

Q 17. Explain your experience with spatial querying and data retrieval.

Spatial querying and data retrieval are essential for extracting meaningful information from geospatial datasets. It’s like asking specific questions about your data based on location or spatial relationships. I’m proficient in using SQL-based queries within spatial databases, as well as using the query tools built into GIS software like ArcGIS or QGIS. This includes techniques like proximity analysis (finding features within a certain distance), spatial joins (combining data from multiple layers based on spatial location), and overlay analysis (combining data from different layers using set operations).

For instance, imagine needing to find all buildings within a 500-meter radius of a proposed new hospital. I would use a spatial query to select all building features that fall within the specified buffer zone around the hospital’s location. This process allows for efficient identification of buildings that might be affected by the construction or need to be considered in the planning process. I’m confident in my ability to design efficient queries to address a variety of complex spatial scenarios.
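The 500-meter selection can be sketched in Python with a haversine distance filter. This assumes each building is represented by a single WGS 84 latitude/longitude point (for example, its centroid) and uses a spherical Earth, which can be off by a few tenths of a percent; inside a GIS or spatial database the same query would normally use a geodesic or projected-CRS buffer. The feature IDs are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius; spherical approximation

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def within_radius(features, center, radius_m):
    """Return ids of features whose (lat, lon) lies within radius_m of center."""
    clat, clon = center
    return [fid for fid, (lat, lon) in features.items()
            if haversine_m(clat, clon, lat, lon) <= radius_m]
```

For large datasets this brute-force loop would be replaced by an indexed query, but the distance test itself is the same.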

Q 18. How would you address data inconsistencies or errors discovered after data collection?

Data inconsistencies or errors are inevitable in any data collection process. Addressing them effectively requires a multi-step approach. First, thorough data validation checks should be performed during data collection to minimize errors from the start. Once inconsistencies surface, I adopt a systematic process:

- Identification: Employing quality control measures, including visual inspection, statistical analysis, and spatial validation checks (e.g., checking if points fall within expected boundaries).

- Analysis: Determining the nature and extent of the errors. Is it a systematic error (affecting many records in a similar way) or a random error? Understanding the source of the error is key to fixing it.

- Correction: Using appropriate methods for correction. This can involve manual editing, automated scripting (using tools like Python), spatial interpolation for missing data, or a calibration or transformation derived from control data for systematic errors, with error propagation used to quantify the uncertainty that remains.

- Documentation: Meticulously documenting the errors detected, the methods used for correction, and the rationale behind each decision. This is crucial for transparency and accountability.

For example, if GPS data shows points consistently offset by a certain distance, a systematic error, this could be corrected using a transformation matrix calculated from control points.
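In the simplest case, where the offset is a pure translation, the transformation reduces to a mean shift estimated from control points, as in this Python sketch. It assumes observed and control coordinates share a projected CRS; if the residuals after shifting still show rotation or scale, an affine or Helmert fit would be the next step. The function names are hypothetical.

```python
def estimate_shift(observed, control):
    """Mean (dx, dy) offset from observed to control coordinates.

    observed, control: dicts {point_id: (x, y)} in the same projected CRS.
    """
    ids = sorted(set(observed) & set(control))
    if not ids:
        raise ValueError("no shared control points")
    dx = sum(control[i][0] - observed[i][0] for i in ids) / len(ids)
    dy = sum(control[i][1] - observed[i][1] for i in ids) / len(ids)
    return dx, dy

def apply_shift(points, shift):
    """Translate every point by the estimated shift."""
    dx, dy = shift
    return {pid: (x + dx, y + dy) for pid, (x, y) in points.items()}
```

Holding back a few control points from the estimate and checking them after the shift gives an independent measure of how well the correction worked.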

Q 19. What is your experience with map design and cartographic principles?

Effective map design goes beyond simply placing features on a map; it’s about communicating information clearly and effectively. My experience covers the core cartographic principles, from data visualization techniques to symbology, labeling, and map layout. I’m familiar with various map projections and their implications, choosing the appropriate projection based on the geographic area and the purpose of the map. I also understand the importance of creating visually appealing and easy-to-understand maps using appropriate color schemes, fonts, and legends. I consistently aim for maps that are both informative and aesthetically pleasing, maximizing the effectiveness of the information presented.

In a recent project involving the distribution of a specific bird species, I used color ramps and proportional symbols to effectively illustrate population density across the study area. The choice of color scheme was crucial in conveying the density gradient accurately without creating any misleading visual effects. This attention to detail is crucial in ensuring the accuracy and interpretability of the final map product.

Q 20. Describe your experience with GPS data post-processing.

GPS data post-processing is crucial for improving the accuracy of GPS measurements. Raw GPS data often contains errors due to atmospheric conditions, multipath effects (signals bouncing off objects), and receiver limitations. I am experienced in using post-processing software (such as RTKLIB or the post-processing tools in GNSS vendor and GIS software) to correct these errors. This usually involves applying differential corrections from base station data (a stationary GPS receiver at a known position) to remove errors shared by base and rover. Code-based differential correction typically improves accuracy from several meters to sub-meter, while carrier-phase techniques such as post-processed kinematic (PPK), the post-processing counterpart of real-time kinematic (RTK), reach the centimeter level needed for surveying or precision agriculture.

For example, when surveying property boundaries, I would use post-processed kinematic GPS data to determine the coordinates of boundary markers accurately, which is essential for precise land delineation. The accuracy gained through post-processing is critical to the reliability of the final survey results.

Q 21. How do you ensure the security and integrity of geospatial data?

Ensuring the security and integrity of geospatial data is paramount. This involves implementing measures to protect data from unauthorized access, modification, or destruction. This includes physical security measures, such as secure storage of data backups and access control to servers. But equally important are digital security measures: access control lists to restrict access to sensitive datasets; encryption to protect data both in transit and at rest; version control systems to track changes and allow for rollback in case of errors; regular data backups to protect against data loss. Data provenance tracking is crucial for understanding the origin and processing history of a dataset, enabling quality assessment and allowing for identification of potential data manipulation.
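One concrete integrity control is a checksum manifest: record a SHA-256 digest for every file in a dataset folder, then compare manifests later to detect unexpected changes. This Python sketch uses only the standard library; the function names and manifest layout are hypothetical, and it complements rather than replaces access control, encryption, and versioned backups.

```python
import hashlib
from pathlib import Path

def sha256_of(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large rasters needn't fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def build_manifest(folder):
    """Map each file's path (relative to folder) to its SHA-256 digest."""
    folder = Path(folder)
    return {str(p.relative_to(folder)): sha256_of(p)
            for p in sorted(folder.rglob("*")) if p.is_file()}

def changed_files(old_manifest, new_manifest):
    """Files whose digest differs, or that were added or removed."""
    keys = set(old_manifest) | set(new_manifest)
    return sorted(k for k in keys if old_manifest.get(k) != new_manifest.get(k))
```

Storing the manifest alongside the dataset's metadata also supports provenance tracking, since each delivered version can be tied to an exact set of digests.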

Furthermore, metadata standards (such as ISO 19115) ensure that datasets are well-documented, allowing for better understanding and use. Adherence to ethical guidelines for data collection and sharing is vital, respecting privacy concerns and ensuring data is used responsibly and ethically. My experience in all these areas is extensive, and applying these practices helps keep geospatial data under my care secure and trustworthy.

Q 22. Explain your experience with cloud-based GIS platforms (e.g., ArcGIS Online, Google Earth Engine).

My experience with cloud-based GIS platforms like ArcGIS Online and Google Earth Engine is extensive. I’ve leveraged these platforms for various projects, from creating interactive web maps for public engagement to performing complex geospatial analyses on large datasets. ArcGIS Online, for example, excels in its collaborative features; I’ve used its tools to share and manage map projects with colleagues, enabling efficient teamwork. Its geoprocessing capabilities are valuable for automating tasks and streamlining workflows, and I’ve used its spatial analysis tools for tasks such as proximity analysis and overlay operations, which are essential for understanding spatial relationships between different datasets.

Google Earth Engine, on the other hand, provides access to massive satellite imagery archives together with server-side processing. I’ve used it for time-series analysis of deforestation, assessing changes in land cover over decades. Its ability to process terabytes of data allows analyses that would be impractical on a single on-premise workstation. For instance, in a recent project, I used Earth Engine to monitor glacial melt patterns by analyzing a multi-decade Landsat time series, which supported the creation of predictive models for future melt rates.

Q 23. What are your skills in spatial statistics and modeling?

Spatial statistics and modeling are core to my GIS skillset. I’m proficient in applying various techniques to analyze spatial patterns and relationships within geographic data. This includes understanding spatial autocorrelation, using methods like Moran’s I to identify clustering or dispersion. I’m also well-versed in spatial regression techniques like geographically weighted regression (GWR), which allows for the modeling of relationships that vary across space. For example, in a study of crime hotspots, I utilized GWR to account for the varying influence of socioeconomic factors across different neighborhoods, yielding more accurate and localized predictions than traditional regression methods. My experience further extends to spatial interpolation techniques like Kriging, used to estimate values at unsampled locations based on nearby observations. This is particularly valuable when working with sparsely distributed data points, like air quality monitoring stations. Additionally, I have experience with point pattern analysis, using tools like Ripley’s K function to assess the randomness or clustering of spatial events. Finally, I’m comfortable using various statistical software packages such as R and Python, along with GIS software, to perform these analyses and visualize the results.
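Global Moran’s I, mentioned above, is compact enough to sketch directly in NumPy. This assumes you already have a spatial weights matrix (for example, binary contiguity) with a zero diagonal; it computes only the statistic itself, not its significance, which would normally come from a permutation test or a library such as PySAL.

```python
import numpy as np

def morans_i(values, weights):
    """Global Moran's I for values x_i with a spatial weights matrix w_ij.

    I = (n / W) * sum_ij w_ij z_i z_j / sum_i z_i^2, where z = x - mean(x)
    and W is the sum of all weights (the diagonal should be zero).
    """
    x = np.asarray(values, dtype=float)
    w = np.asarray(weights, dtype=float)
    z = x - x.mean()
    n, total_w = len(x), w.sum()
    return float((n / total_w) * (z @ w @ z) / (z @ z))
```

Values well above the expected value under spatial randomness, -1/(n - 1), indicate clustering of similar values; values well below it indicate dispersion, as in a checkerboard pattern.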

Q 24. Describe your experience working with LiDAR or other remote sensing data.

I have considerable experience working with LiDAR and other remote sensing data. LiDAR, in particular, offers incredibly detailed elevation data, which I have utilized for numerous applications, including creating high-resolution digital elevation models (DEMs) for hydrological modeling, identifying areas prone to flooding. From these DEMs, I derive slope, aspect, and curvature maps – critical for a wide range of analyses including landslide susceptibility mapping and habitat suitability modeling. Beyond LiDAR, I’m familiar with processing and analyzing imagery from various satellite platforms, including Landsat, Sentinel, and PlanetScope. This involves pre-processing tasks like atmospheric correction and geometric correction, followed by image classification and change detection techniques. For instance, I used multispectral imagery to monitor agricultural yields by classifying different crop types and assessing their health throughout the growing season. This involved the application of supervised and unsupervised classification methods, such as maximum likelihood classification and k-means clustering.
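Deriving slope from a DEM can be sketched in a few lines of NumPy. This assumes a north-up grid with square cells and elevations in the same units as the cell size; note that `np.gradient` uses central differences (one-sided at the edges), whereas GIS packages often use Horn’s 3×3 method, so results can differ slightly on rough terrain.

```python
import numpy as np

def slope_degrees(dem, cell_size):
    """Slope in degrees from a DEM array with square cells of size cell_size.

    np.gradient returns the rate of change along rows, then columns,
    in elevation units per ground unit when given the cell size.
    """
    dz_drow, dz_dcol = np.gradient(np.asarray(dem, dtype=float), cell_size)
    return np.degrees(np.arctan(np.hypot(dz_dcol, dz_drow)))
```

A surface that rises 10 m across each 10 m cell comes out as a uniform 45° slope, which is a quick sanity check before running the function on real LiDAR-derived DEMs.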

Q 25. How do you manage large datasets in a GIS environment?

Managing large datasets efficiently in a GIS environment is crucial, and I combine several strategies. First, I use geodatabases, which provide a structured and optimized way to store and manage vector data. For raster data, I use the cloud storage and processing capabilities of platforms such as Google Earth Engine or Amazon Web Services to handle datasets too large for local storage. Second, I apply compression to reduce file sizes without significant loss of information. Third, I split large datasets into smaller, manageable tiles or chunks, which allows them to be processed in parallel and significantly shortens analysis times. I also rely on spatial indexes within the database to speed up queries. Finally, I routinely check data quality and clean the data to maintain its integrity and ensure the accuracy of all analyses; this often involves identifying and correcting spatial and attribute errors. Regular backups are also vital to avoid data loss.
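The tiling step can be sketched as a generator of non-overlapping windows that together cover a raster. This is a minimal illustration assuming you know the raster's row and column counts; the `Window` tuple and `tile_windows` function are hypothetical stand-ins, since raster libraries provide their own windowed-read APIs.

```python
from typing import Iterator, NamedTuple


class Window(NamedTuple):
    row_off: int  # first row of the tile
    col_off: int  # first column of the tile
    height: int   # number of rows in the tile
    width: int    # number of columns in the tile


def tile_windows(n_rows: int, n_cols: int, tile_size: int) -> Iterator[Window]:
    """Yield tile windows that cover an n_rows x n_cols raster without overlap.

    Tiles on the right and bottom edges are clipped, so they may be smaller.
    """
    if tile_size <= 0:
        raise ValueError("tile_size must be positive")
    for row_off in range(0, n_rows, tile_size):
        for col_off in range(0, n_cols, tile_size):
            yield Window(
                row_off,
                col_off,
                min(tile_size, n_rows - row_off),
                min(tile_size, n_cols - col_off),
            )


for w in tile_windows(5, 7, 3):
    print(w)
```

Because each window is independent, the tiles can be handed to separate worker processes or cloud jobs. Operations that look at neighbouring cells (such as slope) would additionally need a small overlap, or halo, around each tile.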

Q 26. Explain your problem-solving approach when encountering unexpected issues during data collection.

My approach to unexpected issues during data collection is systematic. First, I examine the problem carefully to isolate the source of the error, which might involve reviewing logs, checking data quality reports, and inspecting the data visually. Second, I test hypotheses that could explain the error, for example by checking equipment settings, repeating data collection procedures, and comparing the problematic data with known good data. Third, I draw on my knowledge of GIS concepts and technologies to develop potential solutions, such as applying spatial filters to remove noise, transforming the data to improve its quality, or switching to a different collection technique. Fourth, I document the solution and any lessons learned so that similar issues can be avoided in future data collection projects. For instance, during a recent GPS survey I encountered significant positional inaccuracies, and my investigation identified multipath errors as the culprit. The solution involved carefully selecting survey points to minimize signal reflections and employing advanced GPS techniques to mitigate the effect of these errors.
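One simple form of the "spatial filter" step is to flag GPS fixes that sit unusually far from the cluster of repeated observations at a point. The sketch below assumes the fixes are already in a projected coordinate system in metres and uses a median/MAD rule with a hypothetical threshold factor `k`; a flagged fix is only a candidate for review (multipath is one possible cause), not proof of an error.

```python
import math
from statistics import median
from typing import List, Sequence, Tuple


def flag_outlier_fixes(fixes: Sequence[Tuple[float, float]], k: float = 3.0) -> List[bool]:
    """Flag fixes unusually far from the median position.

    fixes are (easting, northing) pairs in metres. A fix is flagged when its
    distance from the median position exceeds median distance + k * MAD.
    """
    if not fixes:
        return []
    # Median position is robust to a few bad fixes, unlike the mean.
    cx = median(x for x, _ in fixes)
    cy = median(y for _, y in fixes)
    dists = [math.hypot(x - cx, y - cy) for x, y in fixes]
    med = median(dists)
    mad = median(abs(d - med) for d in dists)  # median absolute deviation
    threshold = med + k * mad
    return [d > threshold for d in dists]


# Five tightly grouped fixes and one that jumped about 25 m away.
fixes = [(0, 0), (0.5, 0), (0, 0.5), (-0.5, 0), (0, -0.5), (20, 15)]
print(flag_outlier_fixes(fixes))
```

Using medians rather than means keeps a single large jump from dragging the reference position toward itself.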

Q 27. Describe your experience with integrating GIS data with other data sources.

Integrating GIS data with other data sources is a routine part of my work. This often involves joining spatial data with tabular attribute data from databases or spreadsheets. I regularly use spatial joins to transfer attributes from one layer to another based on their spatial relationships. For example, I might join census data to a polygon layer representing neighborhoods to analyze the socioeconomic characteristics of each neighborhood. I also have experience integrating GIS data with time-series data from sensors, environmental monitoring stations, and other sources, which typically involves working with databases and building spatio-temporal analysis workflows to assess changes over time. In one project, I combined weather data with crop yield data using spatial and temporal joins and statistical methods to understand the relationship between weather patterns and agricultural productivity. Successful integration requires understanding the data structures, coordinate systems, and formats involved so that the combined data stays consistent and accurate; data cleaning, transformation, and validation are critical to prevent errors along the way.
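At its core, a point-to-polygon spatial join asks which polygon contains each point. The sketch below implements that with an even-odd (ray casting) test in plain Python. It assumes both layers share the same projected coordinate system and that polygons are simple rings without holes; the layer and feature names are hypothetical. GIS software adds spatial indexes, multipart geometries, and explicit rules for points on boundaries.

```python
from typing import Dict, Optional, Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(pt: Point, ring: Sequence[Point]) -> bool:
    """Even-odd test; ring lists the vertices once (first vertex not repeated)."""
    x, y = pt
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Does a ray from pt toward +x cross the edge (x1, y1)-(x2, y2)?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def spatial_join(points: Dict[str, Point],
                 polygons: Dict[str, Sequence[Point]]) -> Dict[str, Optional[str]]:
    """Map each point ID to the first polygon ID that contains it, or None."""
    return {
        pid: next((name for name, ring in polygons.items() if point_in_polygon(pt, ring)), None)
        for pid, pt in points.items()
    }


neighborhoods = {
    "north": [(0, 5), (10, 5), (10, 10), (0, 10)],
    "south": [(0, 0), (10, 0), (10, 5), (0, 5)],
}
sites = {"a": (2, 2), "b": (3, 8), "c": (15, 1)}
print(spatial_join(sites, neighborhoods))
```

Once each point carries a neighborhood ID, the neighborhood's attributes (such as census variables) can be attached with an ordinary table join on that ID.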

Key Topics to Learn for GPS and GIS Data Collection Interview

- GPS Fundamentals: Understanding GPS signal reception, accuracy (including sources of error like multipath and atmospheric effects), and differential GPS techniques. Practical application: Analyzing the impact of different GPS receivers and settings on data quality in a specific field project.

- Data Acquisition Methods: Mastering various data collection techniques using handheld GPS units, mobile mapping systems, and drones. Practical application: Comparing the efficiency and accuracy of different data acquisition methods for a given task (e.g., mapping a forest versus a city street network).

- GIS Software Proficiency: Demonstrating expertise in common GIS software packages (ArcGIS, QGIS) including data import/export, geoprocessing tools (e.g., spatial analysis, data manipulation), and map creation. Practical application: Describing your experience using GIS software to analyze collected GPS data and create insightful visualizations.

- Coordinate Systems and Projections: Understanding different coordinate systems (geographic, projected) and their implications for data accuracy and analysis. Practical application: Explaining the process of choosing the appropriate coordinate system for a specific project and transforming data between systems.

- Data Quality Control and Assurance: Implementing procedures for ensuring data accuracy, completeness, and consistency. Practical application: Describing your approach to identifying and correcting errors in GPS data, including strategies for outlier detection and data validation.

- Spatial Data Models: Understanding vector and raster data models and their appropriate applications. Practical application: Choosing the optimal data model for representing specific geographic features and explaining the tradeoffs involved.

- Problem-Solving and Troubleshooting: Demonstrating the ability to diagnose and resolve technical issues related to GPS and GIS data collection. Practical application: Recounting a situation where you successfully resolved a data collection challenge, highlighting your problem-solving skills.
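For the coordinate systems topic, a common first step is picking a UTM zone for a project area. The sketch below derives the WGS 84 / UTM EPSG code from a longitude and latitude using the standard 6-degree zones. It deliberately ignores the Norway and Svalbard zone exceptions and rejects latitudes outside UTM's 80°S to 84°N range; the function name is hypothetical, and the actual reprojection would be done with a projection library.

```python
import math


def utm_epsg(lon: float, lat: float) -> int:
    """EPSG code of the WGS 84 / UTM zone containing (lon, lat).

    Uses standard 6-degree zones only (no Norway/Svalbard exceptions).
    """
    if not (-180.0 <= lon <= 180.0 and -80.0 <= lat <= 84.0):
        raise ValueError("longitude/latitude outside the UTM domain")
    # Zone 1 starts at 180°W; clamp lon = 180 into zone 60.
    zone = min(int(math.floor((lon + 180.0) / 6.0)) + 1, 60)
    # WGS 84 / UTM north zones are EPSG:32601-32660, south zones EPSG:32701-32760.
    return (32600 if lat >= 0 else 32700) + zone


print(utm_epsg(-122.4, 37.8))   # San Francisco area
print(utm_epsg(151.2, -33.9))   # Sydney area
```

A project that straddles a zone boundary or covers a large area may be better served by a regional projection, which is exactly the kind of trade-off worth explaining in an interview.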

Next Steps

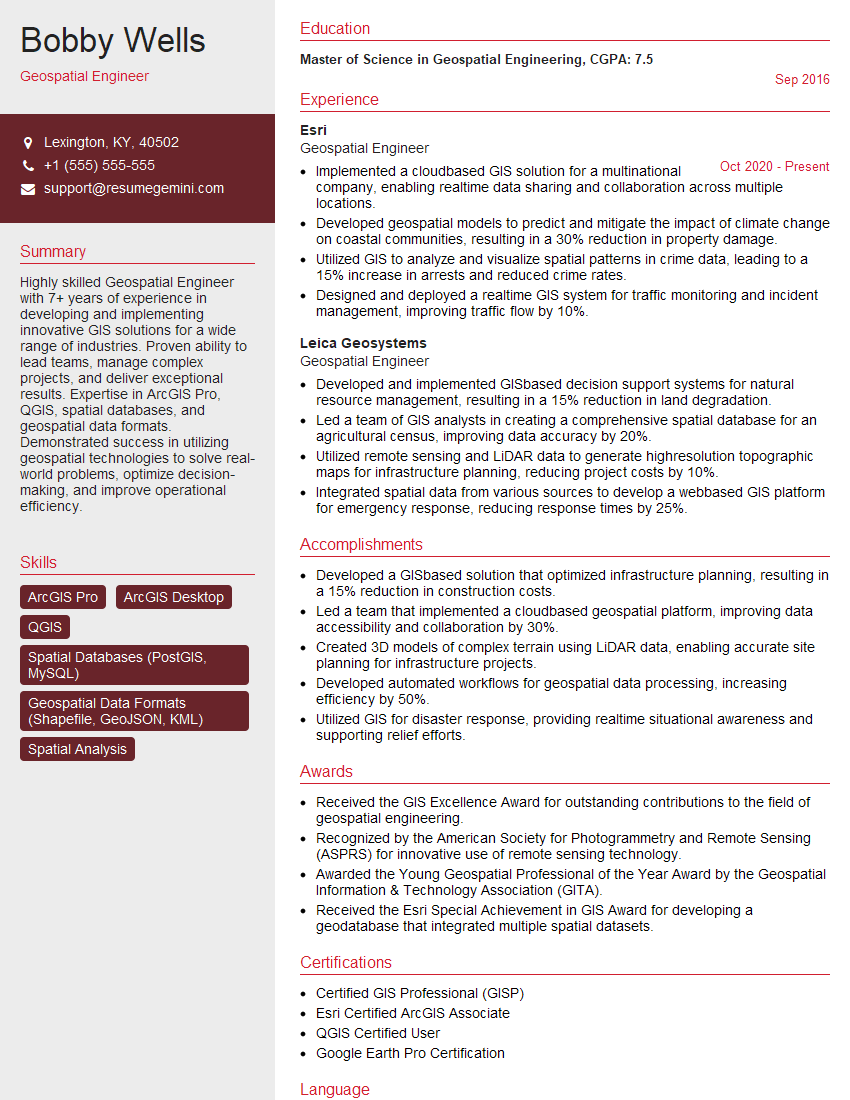

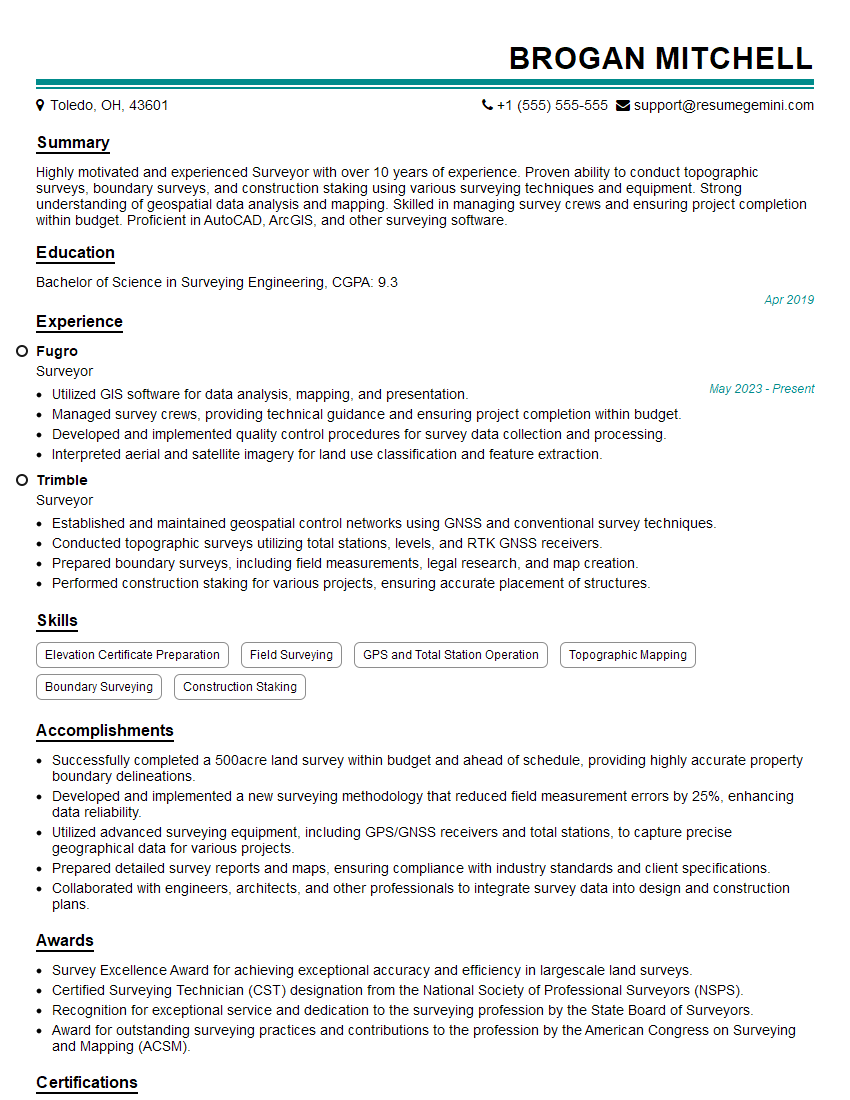

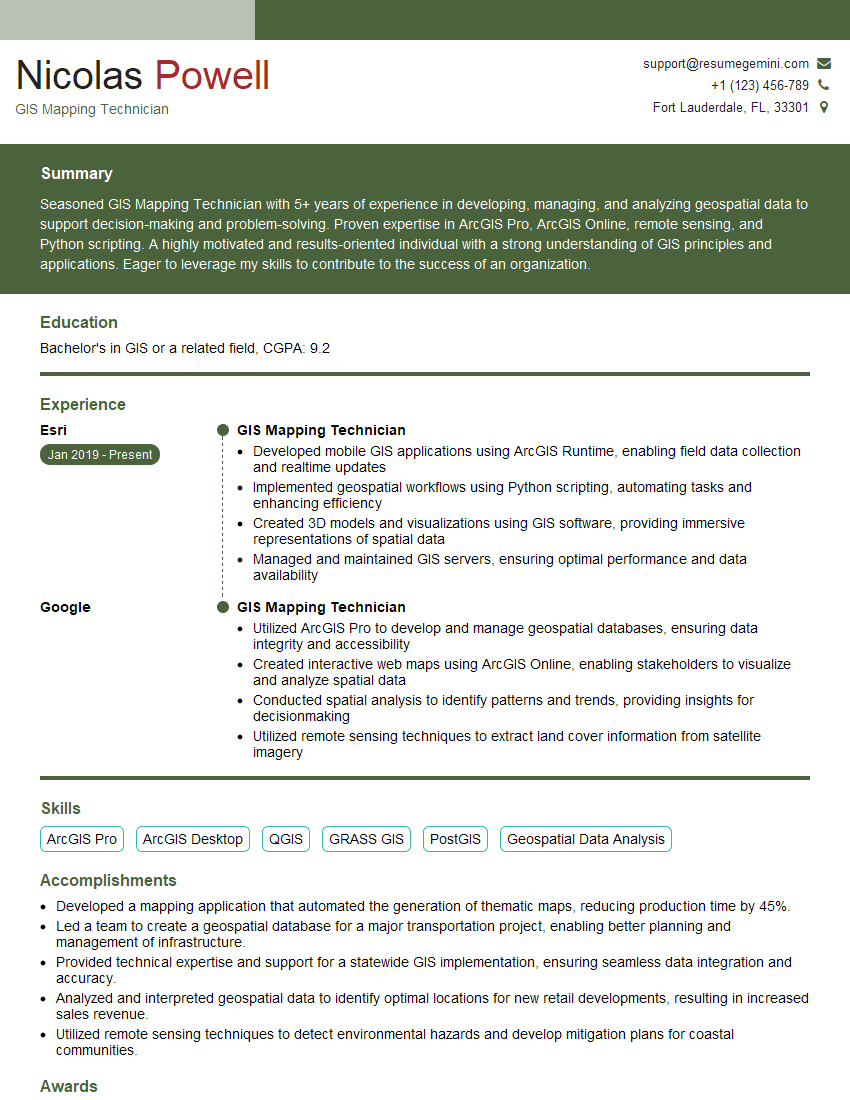

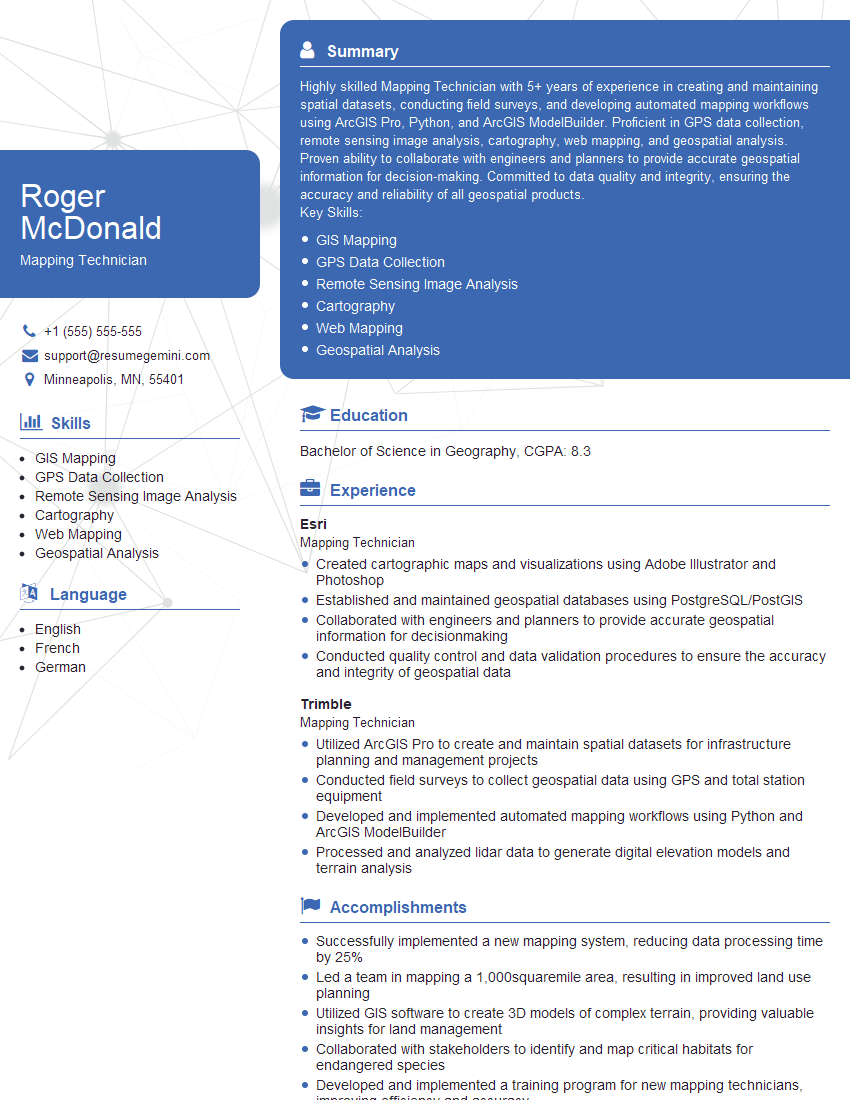

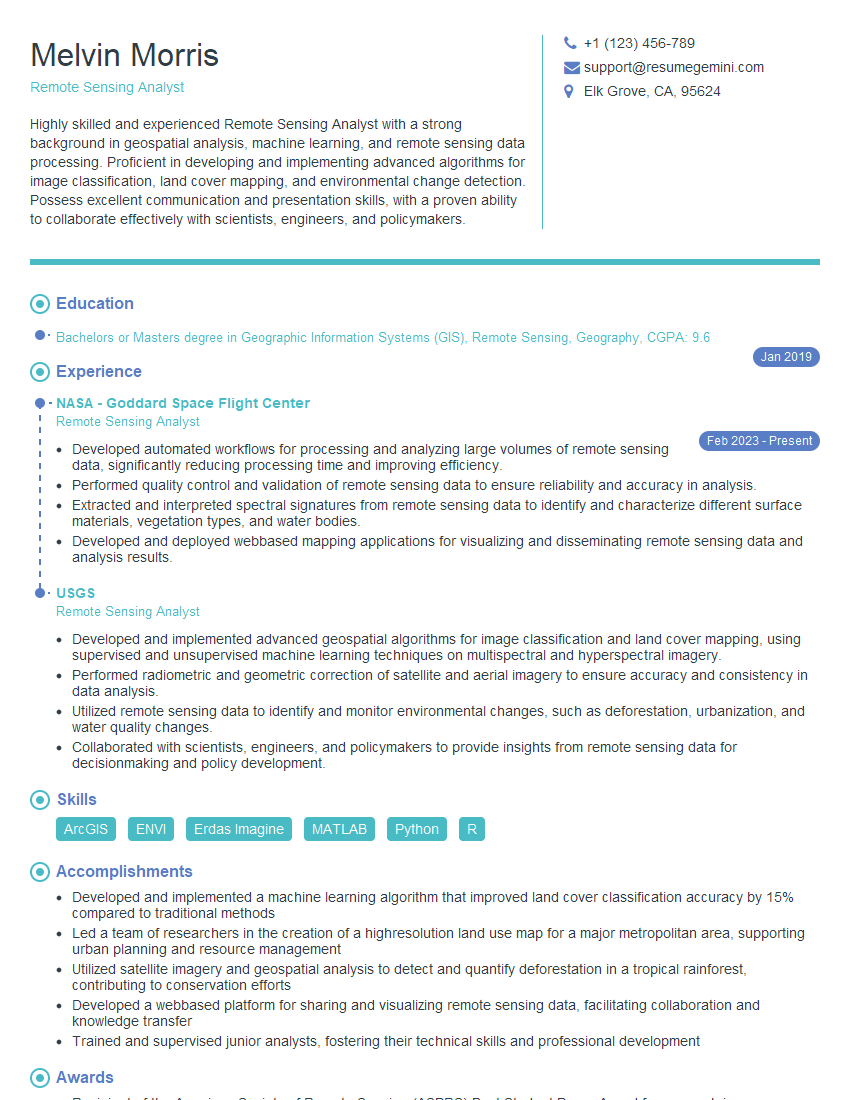

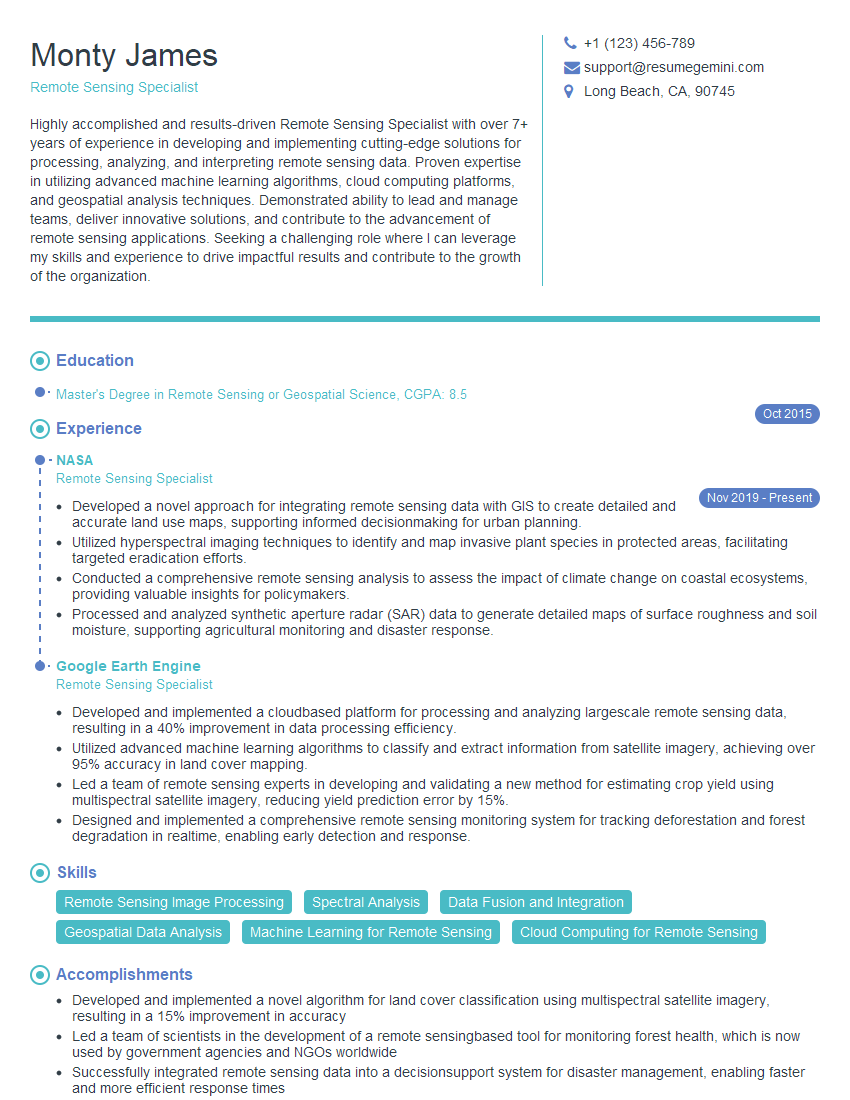

Mastering GPS and GIS data collection opens doors to exciting careers in surveying, environmental science, urban planning, and many more. To maximize your job prospects, it’s crucial to present your skills effectively. An ATS-friendly resume is key to getting your application noticed by recruiters. We strongly encourage you to leverage ResumeGemini to build a professional and impactful resume that showcases your expertise. ResumeGemini provides valuable resources and examples of resumes tailored to GPS and GIS data collection, ensuring your qualifications shine.
