Preparation is the key to success in any interview. In this post, we’ll explore crucial Human Factors Modeling interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Human Factors Modeling Interview

Q 1. Explain the difference between human factors and ergonomics.

Human factors and ergonomics are closely related, and the terms are often used interchangeably; professional bodies such as the International Ergonomics Association treat them as synonyms. In everyday practice, though, they tend to carry different emphases. ‘Human factors’ usually signals the broad study of human capabilities, limitations, and characteristics in relation to the design of systems, products, and environments, covering the cognitive, physical, and social aspects of human interaction. ‘Ergonomics’ is commonly associated with the physical aspects of the human-machine interface, aiming to optimize workplace design to reduce physical strain and improve efficiency and safety. Formally, ergonomics also has cognitive and organizational branches, so the distinction is one of emphasis rather than a strict boundary.

For example, designing a comfortable chair is an ergonomic concern. However, designing an entire cockpit of an airplane, considering pilot workload, display readability, and emergency procedures, falls under the broader umbrella of human factors.

Q 2. Describe your experience with different human factors modeling techniques (e.g., HTA, FMEA, fault tree analysis).

I have extensive experience applying various human factors modeling techniques. Hierarchical Task Analysis (HTA) is a powerful tool for breaking down complex tasks into smaller, manageable subtasks, revealing potential usability bottlenecks. I’ve used HTA in projects involving air traffic control system design, identifying cognitive overload points in the workflow. Failure Modes and Effects Analysis (FMEA) helps predict potential failures and their consequences, allowing for proactive risk mitigation. I utilized FMEA in a medical device project, anticipating potential user errors and designing safeguards. Finally, Fault Tree Analysis (FTA) is a top-down approach used to analyze potential system failures. I’ve applied FTA to analyze the causes of accidents in industrial settings, identifying contributing human factors.

My experience spans various software and hardware applications, and I’m proficient in utilizing these methods both individually and in a combined approach, adapting the methodology to the specific context of the project.
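
One common way to make an FMEA concrete is the risk priority number (RPN), the product of severity, occurrence, and detection ratings. The sketch below is a minimal illustration of that ranking step; the failure modes and their 1-10 ratings are hypothetical values chosen for the example, not data from any project described here.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    description: str
    severity: int    # 1 (negligible) to 10 (catastrophic)
    occurrence: int  # 1 (rare) to 10 (frequent)
    detection: int   # 1 (almost certain to be caught) to 10 (undetectable)

    @property
    def rpn(self) -> int:
        """Risk priority number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


def rank_by_rpn(modes):
    """Return failure modes sorted from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


# Hypothetical user-error failure modes for a medical device.
modes = [
    FailureMode("Dose entered in wrong units", severity=9, occurrence=4, detection=6),
    FailureMode("Alarm silenced and forgotten", severity=7, occurrence=5, detection=3),
    FailureMode("Tubing connected to wrong port", severity=10, occurrence=2, detection=4),
]

for mode in rank_by_rpn(modes):
    print(f"{mode.rpn:4d}  {mode.description}")
```

The ranking only orders candidates for attention. A high-severity mode with a modest RPN may still deserve a safeguard, so the number supports judgment rather than replacing it.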

Q 3. What are the key principles of human-centered design?

Human-centered design prioritizes the needs, capabilities, and limitations of users throughout the entire design process. Key principles include:

- User focus: Understanding user needs, tasks, and context through various research methods (e.g., interviews, usability testing).

- Early user involvement: Integrating user feedback at every stage, from conceptualization to prototyping and evaluation.

- Iterative design: Continuously refining the design based on user feedback and testing results.

- Collaboration: Working closely with designers, engineers, and other stakeholders to ensure a cohesive design.

- Measurable goals: Defining clear usability goals and metrics to evaluate the effectiveness of the design.

Essentially, human-centered design ensures the final product or system is not only functional but also intuitive, efficient, and enjoyable for the intended users.

Q 4. How do you incorporate user feedback into the human factors modeling process?

User feedback is crucial for validating assumptions and identifying usability issues. I incorporate it throughout the modeling process using several techniques:

- Usability testing: Observing users interacting with prototypes or the system to identify pain points and areas for improvement.

- Surveys and questionnaires: Gathering quantitative and qualitative data on user satisfaction and preferences.

- Interviews and focus groups: Conducting in-depth discussions to understand users’ mental models and perspectives.

- Heuristic evaluations: Applying established usability principles to identify potential problems.

The feedback gathered is then analyzed, and design iterations are made to address the identified issues. This iterative process ensures that the final design truly meets user needs.

Q 5. Describe a project where you used human factors modeling to improve a system’s usability.

In a project involving a complex medical device, I employed HTA and FMEA to enhance its usability and safety. The device had a multi-step procedure requiring precise actions from medical professionals under time pressure. Initially, the workflow was cumbersome and prone to errors. Through HTA, we decomposed the procedure into smaller, more manageable steps, highlighting areas of potential confusion. FMEA identified critical steps where errors could have catastrophic consequences. We then redesigned the device’s interface, simplifying the workflow and adding visual cues to guide users. Post-intervention usability testing demonstrated a significant reduction in errors and a marked improvement in user satisfaction.

The success of this project demonstrated the effectiveness of a combined approach, using modeling techniques to proactively address potential human factors issues before they impacted users in real-world clinical settings.

Q 6. What are some common usability heuristics?

Jakob Nielsen’s 10 usability heuristics are widely used guidelines for evaluating and improving interface design. The ten heuristics are:

- Visibility of system status: Keeping users informed about what is going on.

- Match between system and the real world: Speaking the users’ language, using familiar concepts.

- User control and freedom: Providing clear ways to exit or undo actions.

- Consistency and standards: Maintaining consistent language and behavior throughout the system.

- Error prevention: Designing systems to prevent errors from occurring in the first place.

- Recognition rather than recall: Making objects, actions, and options visible.

- Flexibility and efficiency of use: Catering to both novice and expert users.

- Aesthetic and minimalist design: Avoiding unnecessary information.

- Help users recognize, diagnose, and recover from errors: Providing clear and helpful error messages.

- Help and documentation: Providing easy-to-understand help and documentation.

These heuristics provide a framework for identifying potential usability problems and guide the design process towards creating more user-friendly systems.

Q 7. How do you evaluate the effectiveness of a human factors intervention?

Evaluating the effectiveness of a human factors intervention requires a multi-faceted approach. Key methods include:

- Usability testing: Measuring task completion time, error rates, and user satisfaction before and after the intervention.

- Performance metrics: Tracking key performance indicators (KPIs) related to efficiency, safety, and productivity.

- Surveys and questionnaires: Gathering user feedback on the perceived usability improvements.

- Qualitative data analysis: Analyzing interview transcripts and observations to gain deeper insights into user experiences.

- Statistical analysis: Using statistical methods to compare performance and satisfaction data before and after the intervention.

The specific evaluation methods chosen will depend on the goals of the intervention and the nature of the system being evaluated. A comprehensive evaluation should incorporate both quantitative and qualitative data to provide a holistic picture of the intervention’s impact.
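
As a rough illustration of the before-and-after statistical comparison, the sketch below computes a paired t statistic for task completion times measured on the same participants. The data are hypothetical, and the paired t-test is only one possible choice; it assumes the differences are roughly normally distributed.

```python
import math
import statistics


def paired_t_statistic(before, after):
    """Return (mean difference, t statistic, degrees of freedom) for paired samples."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for b, a in zip(before, after)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return mean_diff, t, len(diffs) - 1


# Hypothetical task completion times (seconds) for the same 8 participants.
before = [62, 75, 58, 80, 69, 71, 66, 77]
after = [55, 70, 56, 68, 60, 66, 61, 70]

mean_diff, t, df = paired_t_statistic(before, after)
print(f"mean reduction = {mean_diff:.2f} s, t({df}) = {t:.2f}")
```

The t value is then compared with the critical value for the chosen significance level (about 2.365 for 7 degrees of freedom at a two-tailed 0.05 level). With small samples, reporting the effect size alongside the test result gives stakeholders a clearer picture.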

Q 8. What are some common pitfalls in human factors modeling?

Common pitfalls in human factors modeling often stem from oversimplification, inadequate data, and neglecting the human element. For example, assuming a perfectly rational user is a major flaw; real users make mistakes, get distracted, and have varying levels of expertise.

- Oversimplification of Human Behavior: Models often reduce complex cognitive processes to simple rules, neglecting factors like fatigue, stress, and emotional state, leading to inaccurate predictions.

- Insufficient Data: Models require substantial, high-quality data on human performance. Using limited or biased data leads to unreliable results and flawed conclusions.

- Ignoring Context: Human behavior isn’t isolated; environmental factors, social dynamics, and the specific task context heavily influence performance. Ignoring these aspects leads to unrealistic model outputs.

- Lack of Validation: A model’s usefulness hinges on its ability to accurately reflect reality. Failing to rigorously validate the model through comparison with real-world data or experimental results renders it ineffective.

- Poor Model Selection: Choosing an inappropriate model type for the problem at hand can severely limit its accuracy and usefulness. A simple queuing model might be inadequate for complex decision-making tasks.

To mitigate these pitfalls, it’s crucial to employ iterative model development, including thorough data collection, validation against real-world data, and sensitivity analyses to explore the impact of uncertainties.

Q 9. Explain the concept of human error and its role in system design.

Human error, simply put, is any deviation from the expected or intended behavior that negatively impacts system performance. It’s not simply carelessness; it often stems from systemic issues within the system’s design, operational procedures, or the environment. Understanding human error is vital in system design because it allows us to anticipate potential problems and build safeguards to prevent accidents and improve efficiency.

For instance, consider a pilot accidentally activating the wrong switch during a critical phase of flight. This could be due to poorly designed controls (human-machine interface issues), lack of sufficient training (procedural issues), or excessive workload (environmental issue). By analyzing potential error types (slips, lapses, mistakes), we can improve the design of the cockpit layout, create better training programs, and implement automation strategies to reduce workload and enhance safety.

In system design, we incorporate human error considerations through techniques like error tolerance, fail-safes, and clear communication protocols. For example, designing a system with clear visual cues, redundancy in critical systems, and providing decision support tools can significantly mitigate the impact of human error.

Q 10. How do you account for individual differences in human factors modeling?

Individual differences significantly impact human performance, and ignoring them can lead to inaccurate models. Factors like age, experience, cognitive abilities, and personality all contribute to variability in how people interact with systems. Accounting for these differences is paramount for building robust and inclusive systems.

- Using Distributions: Instead of using single values for parameters such as reaction time or decision-making speed, we use probability distributions to reflect the variability within the population. For example, reaction time is typically right-skewed, so it is often modeled with a distribution such as the log-normal rather than summarized by a single average.

- Agent-Based Modeling: This approach allows us to simulate multiple agents with distinct characteristics and behaviors. Each agent might have unique skills, experience levels, and response styles, leading to a more realistic simulation of team performance.

- Adaptive Models: Models can be designed to adapt to individual performance over time. For example, a model might track a user’s learning curve and adjust the difficulty of tasks accordingly.

- Personalized Models: In some cases, we might create highly personalized models based on individual user data (collected ethically and responsibly), tailoring the system’s interface and support to better suit their needs and capabilities.

By using techniques such as these, we can develop models that are more accurate and less biased, reflecting the true variability in human performance and ensuring better system design for all users.
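
The idea of replacing a single parameter value with a distribution can be sketched as follows. The example draws reaction times from a log-normal distribution, a common choice for right-skewed timing data; the median of 0.25 s and the spread parameter are illustrative assumptions, not empirical values.

```python
import math
import random
import statistics


def sample_reaction_times(n, median_s=0.25, sigma=0.3, seed=None):
    """Draw n reaction times (seconds) from a log-normal distribution.

    median_s and sigma are assumed values for illustration only.
    """
    rng = random.Random(seed)
    mu = math.log(median_s)  # the median of a log-normal is exp(mu)
    return [rng.lognormvariate(mu, sigma) for _ in range(n)]


times = sample_reaction_times(10_000, seed=42)
median = statistics.median(times)
p95 = statistics.quantiles(times, n=20)[-1]  # last of 19 cut points = 95th percentile
print(f"median = {median:.3f} s, 95th percentile = {p95:.3f} s")
```

Looking at an upper percentile rather than the median is one way to check that a design still works for slower responders, not just the typical user.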

Q 11. Describe your experience using human factors modeling software (e.g., AnyLogic, Arena, Simio).

I have extensive experience using AnyLogic for modeling complex human-system interactions. In a recent project involving the design of a new air traffic control system, we used AnyLogic to simulate the behavior of multiple air traffic controllers under varying workload conditions. The model incorporated elements such as individual controller performance variability, communication delays, and the impact of different interface designs.

    // Example AnyLogic code snippet (illustrative). AnyLogic agent types are
    // generated as Java classes, so the sketch is written in Java style.
    class AirTrafficController {
        double reactionTime; // Drawn from a distribution
        // ... other attributes ...

        void handleAircraft() {
            // ... logic for handling aircraft, considering reactionTime ...
        }
    }

The simulation allowed us to evaluate different design options and identify potential bottlenecks in the system before implementation. The visual capabilities of AnyLogic were crucial in communicating the findings to stakeholders who may not have a strong technical background.

While AnyLogic was used in this instance, I’m familiar with Arena and Simio as well. The choice of software depends heavily on the specific requirements of the project, including the complexity of the system, the level of detail required in the model, and the resources available.

Q 12. How do you validate your human factors models?

Model validation is a crucial step, ensuring the model accurately reflects reality. This involves comparing the model’s outputs with real-world data and refining the model until the discrepancies are minimized.

- Data Comparison: We compare model outputs (e.g., task completion times, error rates) to data collected from observational studies, experiments, or existing performance records. Statistical methods, such as regression analysis, are used to assess the goodness of fit.

- Expert Review: Subject matter experts (SMEs) in the relevant field review the model structure, assumptions, and outputs, providing valuable feedback to improve accuracy and identify potential biases.

- Sensitivity Analysis: We systematically vary model parameters to understand their impact on the outputs. This helps identify the most critical parameters and those that introduce the most uncertainty.

- Predictive Validation: Once the model is deemed sufficiently accurate, we test its ability to predict future performance under different scenarios. This predictive capability is a strong indicator of model validity.

A well-validated model increases confidence in the insights derived and allows for more reliable decision-making regarding system design and implementation.
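
The data comparison step can be sketched with two simple goodness-of-fit measures: root-mean-square error (RMSE) and the coefficient of determination. The observed and predicted task completion times below are hypothetical, and these two measures are examples rather than a complete validation procedure.

```python
import math


def rmse(observed, predicted):
    """Root-mean-square error between observed and model-predicted values."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty, equal-length sequences")
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_residual / SS_total."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


# Hypothetical task completion times (seconds): field data vs. model output.
observed = [42.0, 55.0, 61.0, 48.0, 70.0]
predicted = [40.0, 57.0, 60.0, 50.0, 66.0]

print(f"RMSE = {rmse(observed, predicted):.2f} s")
print(f"R^2  = {r_squared(observed, predicted):.3f}")
```

RMSE is expressed in the same units as the data, which makes it easy to discuss with subject matter experts. Whether a given error is acceptable depends on how the model's predictions will be used.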

Q 13. What are the limitations of human factors modeling?

Despite their utility, human factors models have limitations. These arise from the complexity of human behavior and the inherent uncertainties in predicting it.

- Model Complexity: Accurately capturing the intricacies of human cognition and behavior requires highly detailed models, which can become computationally expensive and difficult to manage.

- Data Availability: Obtaining high-quality data on human performance can be challenging and time-consuming, especially for complex or rare events.

- Unpredictability of Human Behavior: Humans aren’t always rational or predictable; unexpected factors like stress, fatigue, or individual differences can significantly impact performance, making accurate prediction challenging.

- Model Assumptions: All models rely on simplifying assumptions, which can limit their applicability and introduce biases. Carefully considering and justifying these assumptions is essential.

- Limited Scope: Models often focus on specific aspects of human-system interaction, neglecting the broader context or interdependencies within the system.

It’s crucial to be aware of these limitations and to interpret model outputs cautiously, considering the inherent uncertainties and assumptions involved. Models are valuable tools, but they are not perfect representations of reality.

Q 14. How do you handle conflicting requirements in human factors design?

Conflicting requirements are common in human factors design. For instance, a system might need to be highly efficient for expert users and also easy to learn for novices, and features that serve one goal can undermine the other. Resolving such conflicts requires a systematic approach.

- Prioritization: Clearly define the relative importance of each requirement. Techniques like weighted scoring or pairwise comparison can help objectively prioritize conflicting needs.

- Trade-off Analysis: Explore the consequences of satisfying one requirement at the expense of another. Quantify the impact on performance, safety, and usability.

- Iterative Design: Develop several design options, each prioritizing different combinations of requirements. Then evaluate these options using quantitative and qualitative methods to identify the best compromise.

- Compromise and Negotiation: Sometimes, it’s necessary to find a compromise that satisfies all stakeholders to a reasonable extent, even if it’s not the ideal solution for any single requirement.

- Human-Centered Design Principles: Throughout the process, emphasize human-centered design principles, ensuring that the final design prioritizes user needs and safety.

Effective communication and collaboration between designers, engineers, and users are vital in resolving conflicting requirements and ensuring that the final design is both functional and user-friendly.
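
The weighted scoring technique mentioned under prioritization can be sketched in a few lines. The criteria, weights, and 1-10 scores below are hypothetical; in practice they would come from stakeholders and from evaluation data.

```python
def weighted_score(scores, weights):
    """Weighted average of criterion scores; weights need not sum to 1."""
    total_weight = sum(weights.values())
    return sum(scores[criterion] * w for criterion, w in weights.items()) / total_weight


# Hypothetical relative importance of each requirement.
weights = {"safety": 5, "efficiency": 3, "learnability": 2}

# Hypothetical 1-10 scores for two design options.
options = {
    "Option A": {"safety": 8, "efficiency": 6, "learnability": 9},
    "Option B": {"safety": 9, "efficiency": 8, "learnability": 5},
}

results = {name: weighted_score(s, weights) for name, s in options.items()}
best = max(results, key=results.get)

for name, score in results.items():
    print(f"{name}: {score:.2f}")
print(f"Highest weighted score: {best}")
```

Because the outcome depends on the weights, it is worth rerunning the comparison with alternative weightings to see whether the preferred option changes.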

Q 15. Describe your experience with human factors in different design stages.

My experience with human factors spans all design stages, from conceptualization to evaluation. Early on, during the conceptual design phase, I focus on understanding user needs and task analysis. This involves techniques like user interviews, contextual inquiry, and task flow analysis to identify potential usability issues before any design is even sketched. For instance, in designing a new medical device, we would interview doctors and nurses to understand their workflow and identify potential points of frustration.

In the design and prototyping stage, I use human factors principles to guide interface design, including considerations for visual clarity, accessibility, and cognitive load. I might use low-fidelity prototypes (e.g., paper prototypes) for early testing and high-fidelity prototypes (e.g., interactive mockups) for more detailed evaluations. We’d ensure button sizes are large enough for users with dexterity issues, place crucial information in a prominent position, and utilize color coding effectively.

Finally, during the evaluation phase, I conduct usability testing, employing methods like heuristic evaluation, cognitive walkthroughs, and A/B testing to identify and refine areas of improvement. For example, we might use eye-tracking to see where users focus their attention and identify areas of confusion. These iterative testing cycles help ensure the final design is both effective and user-friendly.

Q 16. Explain the role of cognitive ergonomics in system design.

Cognitive ergonomics plays a crucial role in system design by focusing on the mental processes involved in human-system interaction. It aims to optimize the cognitive load on the user, minimizing errors and maximizing efficiency and satisfaction. This involves considering factors like attention, memory, perception, decision-making, and problem-solving.

For example, in designing a flight simulator, cognitive ergonomics would guide the layout of information on the instrument panel, ensuring that critical information is easily accessible and perceived accurately under pressure. It would also influence the design of decision-support systems, helping pilots make faster and more accurate judgments in complex situations. Poorly designed interfaces can lead to cognitive overload, resulting in missed warnings, incorrect actions, and even accidents. Conversely, well-designed systems, taking into account cognitive ergonomics principles, allow users to perform efficiently and effectively with minimal mental effort.

Q 17. How do you apply human factors principles to the design of safety-critical systems?

Designing safety-critical systems requires a rigorous application of human factors principles. The goal is to minimize the potential for human error to lead to catastrophic consequences. This involves a multifaceted approach that begins with thorough hazard analysis, identifying potential failure modes and their associated risks.

We use techniques like Failure Mode and Effects Analysis (FMEA) and Human Error Analysis (HEA) to pinpoint areas of vulnerability and design solutions to mitigate risks. This might involve designing redundant systems, incorporating safety nets to catch errors, using clear and unambiguous warnings, and providing adequate training to users. For instance, in the design of a nuclear power plant control system, we would implement multiple layers of checks and balances, ensuring that a single human error doesn’t trigger a chain of events leading to a meltdown.

Furthermore, we focus on designing for human limitations. We understand that people get fatigued, distracted, and make mistakes. The design should anticipate these human frailties and provide support to compensate for them. This may involve using visual and auditory cues to alert users to critical situations or incorporating automated checks and alerts.

Q 18. What are some common human factors issues in software design?

Common human factors issues in software design frequently revolve around usability, accessibility, and cognitive load. Poor navigation, for instance, can leave users frustrated and unable to find the information or functionality they need. Inconsistent design, with different elements behaving in unexpected ways, further exacerbates this problem. Button labels that don’t match their function are a prime example.

Accessibility issues, such as inadequate color contrast or lack of keyboard navigation, can exclude users with disabilities. Cognitive overload, stemming from cluttered interfaces, poorly organized information, and overly complex workflows, often leads to errors and decreased efficiency. For example, an overly complicated user registration process can lead to abandonment. Finally, poor error handling and unhelpful error messages leave users feeling helpless and frustrated.

Q 19. Describe your experience with user research methodologies.

My experience with user research methodologies is extensive, covering a range of qualitative and quantitative techniques. I frequently employ usability testing, both in-person and remote, to observe users interacting with a system and identify usability issues. Heuristic evaluation leverages expert judgment to assess a system against established usability principles. Cognitive walkthroughs simulate user behavior to identify potential points of confusion.

I also utilize user interviews and focus groups to gather in-depth insights into user needs, preferences, and mental models. Surveys provide broader quantitative data, allowing for statistically significant comparisons across user groups. Finally, I’m experienced with eye-tracking and other physiological data collection methods to understand users’ attention and cognitive processes. The choice of methodology always depends on the research question, project goals, and available resources.

Q 20. How do you ensure ethical considerations are addressed when conducting human factors research?

Ethical considerations are paramount in human factors research. Informed consent is crucial; participants must understand the purpose of the study, the procedures involved, and the potential risks and benefits before participating. Their participation should always be voluntary and they should be free to withdraw at any time without penalty.

Data privacy and confidentiality are also critical. Participant data must be protected and anonymized to prevent identification. All research must adhere to relevant ethical guidelines and regulations, ensuring the well-being and rights of participants are always prioritized. For instance, we always obtain approval from an Institutional Review Board (IRB) before conducting any research involving human participants. Transparency in the research process and the dissemination of findings are also key components of responsible ethical practice.

Q 21. Explain the concept of workload and its assessment in human factors.

Workload, in human factors, refers to the mental and physical demands placed on a person by a task or system. It’s a critical concept because excessive workload can lead to errors, decreased performance, and increased stress. Workload assessment aims to quantify these demands and determine whether they are within acceptable limits.

Several methods are used for workload assessment. Subjective measures like NASA-TLX (Task Load Index) involve users rating their perceived workload on various dimensions. Objective measures involve quantifying performance, physiological signals (heart rate, eye movements), or task completion time. For example, in designing a traffic control system, we might measure controller performance (reaction time, accuracy) and their physiological responses (heart rate variability) to assess their workload under different traffic conditions. A high workload indicates the need for design modifications, possibly involving automation or simplifying procedures to reduce the demands on the operator.
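
To show how NASA-TLX ratings turn into a workload score, the sketch below computes both the raw (unweighted) score and the weighted score. It assumes ratings on a 0-100 scale for the six TLX subscales and weights obtained from the 15 pairwise comparisons; the participant's ratings and weights are hypothetical.

```python
TLX_DIMENSIONS = (
    "mental_demand", "physical_demand", "temporal_demand",
    "performance", "effort", "frustration",
)


def raw_tlx(ratings):
    """Raw TLX: unweighted mean of the six subscale ratings (0-100 scale)."""
    return sum(ratings[d] for d in TLX_DIMENSIONS) / len(TLX_DIMENSIONS)


def weighted_tlx(ratings, weights):
    """Weighted TLX: weights count how often each dimension was chosen
    across the 15 pairwise comparisons, so they must sum to 15."""
    if sum(weights[d] for d in TLX_DIMENSIONS) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[d] * weights[d] for d in TLX_DIMENSIONS) / 15


# Hypothetical ratings and weights for one controller in a high-traffic scenario.
ratings = {"mental_demand": 80, "physical_demand": 20, "temporal_demand": 70,
           "performance": 40, "effort": 65, "frustration": 55}
weights = {"mental_demand": 5, "physical_demand": 0, "temporal_demand": 4,
           "performance": 2, "effort": 3, "frustration": 1}

print(f"Raw TLX:      {raw_tlx(ratings):.1f}")
print(f"Weighted TLX: {weighted_tlx(ratings, weights):.1f}")
```

Comparing scores for the same operators across conditions is usually more informative than reading a single score in isolation.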

Q 22. How do you incorporate human factors considerations into risk assessment?

Incorporating human factors into risk assessment is crucial for building safer and more efficient systems. It’s about understanding how human capabilities, limitations, and behaviors interact with the system to influence the likelihood and consequences of hazards. Instead of focusing solely on technical failures, we consider the ‘human in the loop.’

We do this by using a variety of methods. Human Reliability Analysis (HRA) techniques, like THERP (Technique for Human Error Rate Prediction), help quantify the probability of human error contributing to an incident. We also use Fault Tree Analysis (FTA) and Event Tree Analysis (ETA), modifying them to explicitly include human actions and decisions as potential initiating events or mitigating factors. For example, in an FTA for a power plant, we might include a branch analyzing the probability of an operator failing to correctly respond to an alarm due to fatigue or poor training.
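
The way human actions enter a fault tree can be sketched with basic gate arithmetic. The example assumes all basic events are independent, and every probability in it is a hypothetical placeholder rather than a value taken from THERP tables or plant data.

```python
import math


def or_gate(*probs):
    """Probability that at least one independent input event occurs."""
    return 1.0 - math.prod(1.0 - p for p in probs)


def and_gate(*probs):
    """Probability that all independent input events occur."""
    return math.prod(probs)


# Hypothetical basic-event probabilities (per demand).
p_initiating_fault = 0.05    # process fault that raises an alarm
p_alarm_hardware = 0.001     # alarm fails to annunciate
p_miss_fatigue = 0.01        # operator misses the alarm due to fatigue
p_misdiagnosis = 0.02        # operator responds incorrectly due to poor training

# Human branch: the operator fails to respond correctly.
p_operator_fails = or_gate(p_miss_fatigue, p_misdiagnosis)

# The alarm is not acted on if the hardware fails OR the operator fails.
p_no_response = or_gate(p_alarm_hardware, p_operator_fails)

# Top event: a fault occurs AND it is not acted on.
p_top = and_gate(p_initiating_fault, p_no_response)

print(f"P(operator fails)     = {p_operator_fails:.4f}")
print(f"P(alarm not acted on) = {p_no_response:.4f}")
print(f"P(top event)          = {p_top:.6f}")
```

In this toy tree the human branch dominates the hardware branch, which is the kind of insight that points toward workload, training, or interface changes rather than more reliable hardware.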

Furthermore, we use human-centered design principles during the risk assessment process itself, ensuring that the assessment tools and processes are easily understood and usable by the people involved. This reduces the risk of errors in the assessment itself. Ultimately, the goal is to identify areas where human-system interaction might lead to risk, and then propose design changes, training programs, or procedural modifications to mitigate those risks.

Q 23. What is your experience with human factors standards and guidelines (e.g., ISO, ANSI)?

I have extensive experience working with various human factors standards and guidelines, including those from ISO and ANSI. My familiarity extends beyond simply knowing the standards exist; I understand their practical application in real-world projects. For instance, I’ve used ISO 9241-11, which defines usability in terms of effectiveness, efficiency, and satisfaction in a specified context of use, to evaluate the user interface of a medical device, alongside attributes such as learnability, memorability, and error rates.

Similarly, I have drawn on ANSI/HFES standards, such as ANSI/HFES 100 on computer workstations and ANSI/HFES 200 on software user interfaces, when conducting human factors analyses throughout the design life cycle of complex systems. I’m also familiar with specific standards related to workplace design, safety, and human-computer interaction. The key is not just adherence but applying the principles appropriately to the specific situation, considering the context and constraints of the project.

Q 24. Describe a situation where you had to adapt your human factors approach to a specific context.

During a project designing a new control system for a nuclear power plant, I had to adapt my approach significantly. Initially, I planned to conduct a traditional laboratory-based usability study. However, the high-stakes nature of the system and the associated regulatory requirements made this impractical and ethically challenging. The solution involved using a combination of methods:

- High-fidelity simulations: We created a highly realistic simulator replicating the plant’s control room environment. This allowed operators to practice with the new system in a safe environment.

- Cognitive task analysis: We interviewed experienced plant operators to understand their mental models and workflow, identifying potential areas of difficulty with the new system.

- Cognitive walkthroughs: We guided operators through simulated scenarios, observing their actions and thought processes to assess the system’s usability.

This adapted approach allowed us to address the context-specific challenges while still ensuring the system’s usability and safety. It demonstrated flexibility and the ability to select and tailor methods to fit unique project circumstances.

Q 25. How do you communicate your human factors findings to stakeholders?

Communicating human factors findings effectively is crucial for their impact. I tailor my communication strategy to the audience. For technical stakeholders, I provide detailed reports with quantitative data and technical analyses. For management, I focus on the high-level implications and recommendations, using visuals like charts and graphs to highlight key findings.

I often use a storytelling approach, weaving the data into a narrative that demonstrates the importance of human factors. For example, instead of just saying ‘the usability test revealed significant errors,’ I might say ‘during the test, operators made frequent mistakes leading to X, highlighting a critical flaw in Y which could cause Z’. This makes the findings more relatable and memorable. Finally, I always ensure open communication and actively solicit feedback from stakeholders, fostering collaboration and mutual understanding.

Q 26. How do you stay up-to-date with the latest advancements in human factors modeling?

Staying current in human factors modeling involves a multifaceted approach. I actively participate in professional organizations like the Human Factors and Ergonomics Society (HFES), attending conferences and workshops to learn about the latest research and methodologies. I also subscribe to relevant journals and regularly review publications to stay informed on advancements in areas like cognitive modeling, virtual reality applications for training, and new HRA techniques.

Furthermore, I actively network with other human factors professionals through online communities and collaborations on projects, exchanging ideas and insights. This continuous learning ensures my skills and knowledge remain at the forefront of the field, allowing me to leverage the most effective and up-to-date approaches in my work.

Q 27. What are your strengths and weaknesses in human factors modeling?

My strengths lie in my ability to integrate diverse human factors methods into a holistic approach tailored to the specific context of a project. I’m proficient in various HRA techniques, usability testing methods, and cognitive task analysis. I’m also a strong communicator, able to translate complex technical information into accessible language for diverse stakeholders.

An area for continued development is expanding my expertise in advanced modeling techniques like agent-based modeling. While I possess foundational knowledge, I’m committed to broadening my skills in this area to further enhance my ability to address complex, dynamic human-system interactions.

Q 28. What are your salary expectations?

My salary expectations are commensurate with my experience and expertise in human factors modeling, and in line with the market rate for similar roles. I am open to discussing a compensation package that fairly reflects my contributions and the value I bring to the organization. I’m more interested in finding a role that offers significant opportunities for growth and impact than in fixing on a specific number.

Key Topics to Learn for Human Factors Modeling Interview

- Human-Computer Interaction (HCI): Understand the principles of designing user-friendly interfaces and systems, considering cognitive load, usability, and accessibility.

- Cognitive Modeling: Explore different cognitive architectures and their applications in predicting human performance in various tasks. Practical application: Evaluating the effectiveness of a new control panel design through cognitive task analysis.

- Human Error Analysis: Learn techniques for identifying and mitigating human error, including the use of Human Reliability Analysis (HRA) methods. Practical application: Analyzing accident reports to identify contributing factors and propose design improvements.

- Work Analysis and Design: Master methods for analyzing work systems to optimize efficiency, safety, and job satisfaction. Practical application: Designing a more ergonomically sound workspace to reduce musculoskeletal injuries.

- Modeling Human Performance: Gain proficiency in using simulation and modeling software to predict human behavior in complex systems. Explore different modeling approaches (e.g., queuing theory, discrete event simulation).

- Experimental Design and Data Analysis: Understand the principles of experimental design and statistical analysis for evaluating the effectiveness of human factors interventions. This includes familiarity with statistical software packages.

- Usability Testing and Evaluation: Develop skills in conducting usability testing and interpreting the results to improve system design. This includes a range of evaluation methods, from heuristic evaluation to user interviews.

- Safety and Risk Assessment: Learn to conduct safety and risk assessments to identify and mitigate hazards within human-machine systems. Practical application: Contributing to a risk assessment for a new autonomous vehicle system.
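To make the queuing-theory approach listed under Modeling Human Performance more concrete, here is a minimal sketch in Python. It assumes a single operator handling tasks that arrive at random (an M/M/1 queue), which is a simplification chosen for illustration; the function name `mm1_metrics` and the example rates are hypothetical and not taken from any specific tool or project.

```python
def mm1_metrics(arrival_rate: float, service_rate: float) -> dict:
    """Steady-state metrics for an M/M/1 queue.

    Assumption (illustrative only): one operator, Poisson task arrivals,
    exponentially distributed handling times. Rates share one time unit.
    """
    if arrival_rate <= 0 or service_rate <= 0:
        raise ValueError("rates must be positive")

    # Utilization: fraction of time the operator is busy.
    rho = arrival_rate / service_rate
    if rho >= 1:
        # Tasks arrive at least as fast as they are handled: backlog grows without bound.
        raise ValueError("operator is overloaded (utilization >= 1)")

    return {
        "utilization": rho,
        # Mean number of tasks waiting or being handled.
        "mean_tasks_in_system": rho / (1 - rho),
        # Mean time from task arrival to completion.
        "mean_time_in_system": 1 / (service_rate - arrival_rate),
        # Mean time a task waits before the operator starts on it.
        "mean_wait_in_queue": rho / (service_rate - arrival_rate),
    }


if __name__ == "__main__":
    # Hypothetical example: 8 alerts per hour arrive, the operator can clear 10 per hour.
    for name, value in mm1_metrics(8.0, 10.0).items():
        print(f"{name}: {value:.2f}")
```

With these hypothetical rates the operator is busy about 80% of the time and roughly four alerts are in the system on average, which is the kind of figure you might cite when discussing workload limits. Real operators rarely match the M/M/1 assumptions, so discrete event simulation is usually the next step once task types, priorities, or interruptions matter.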

Next Steps

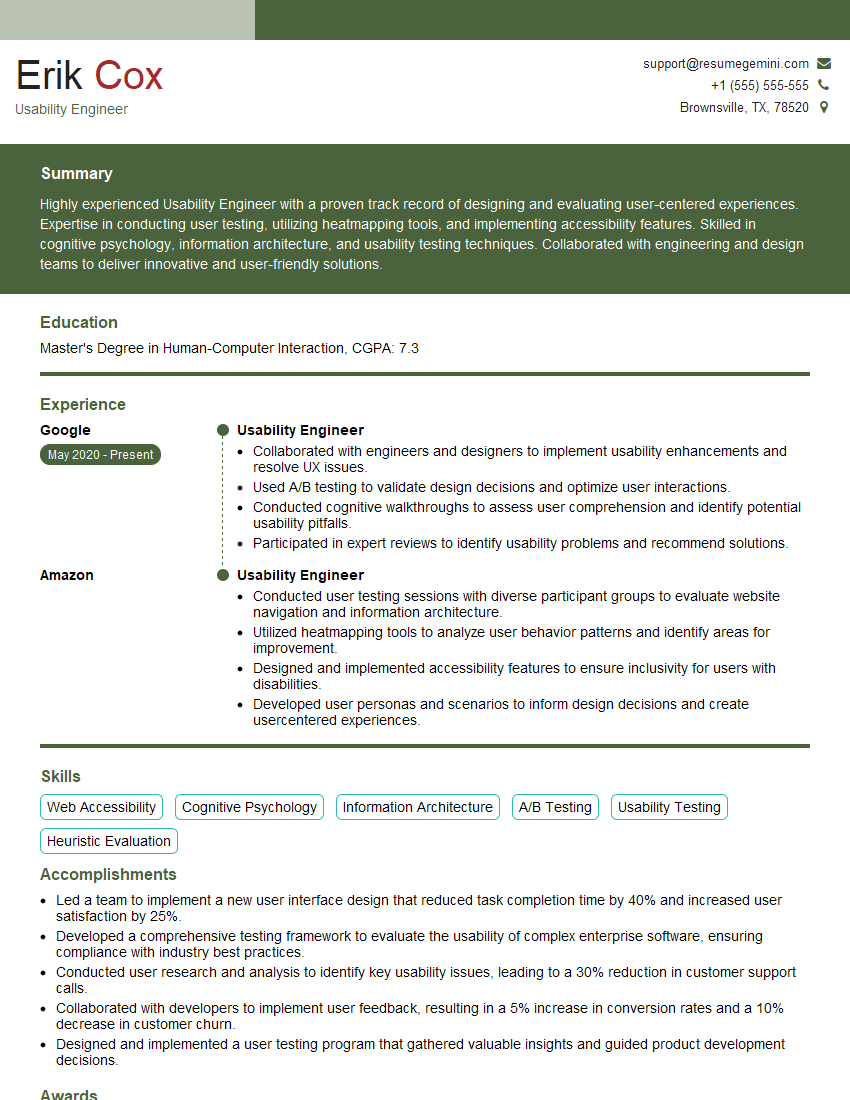

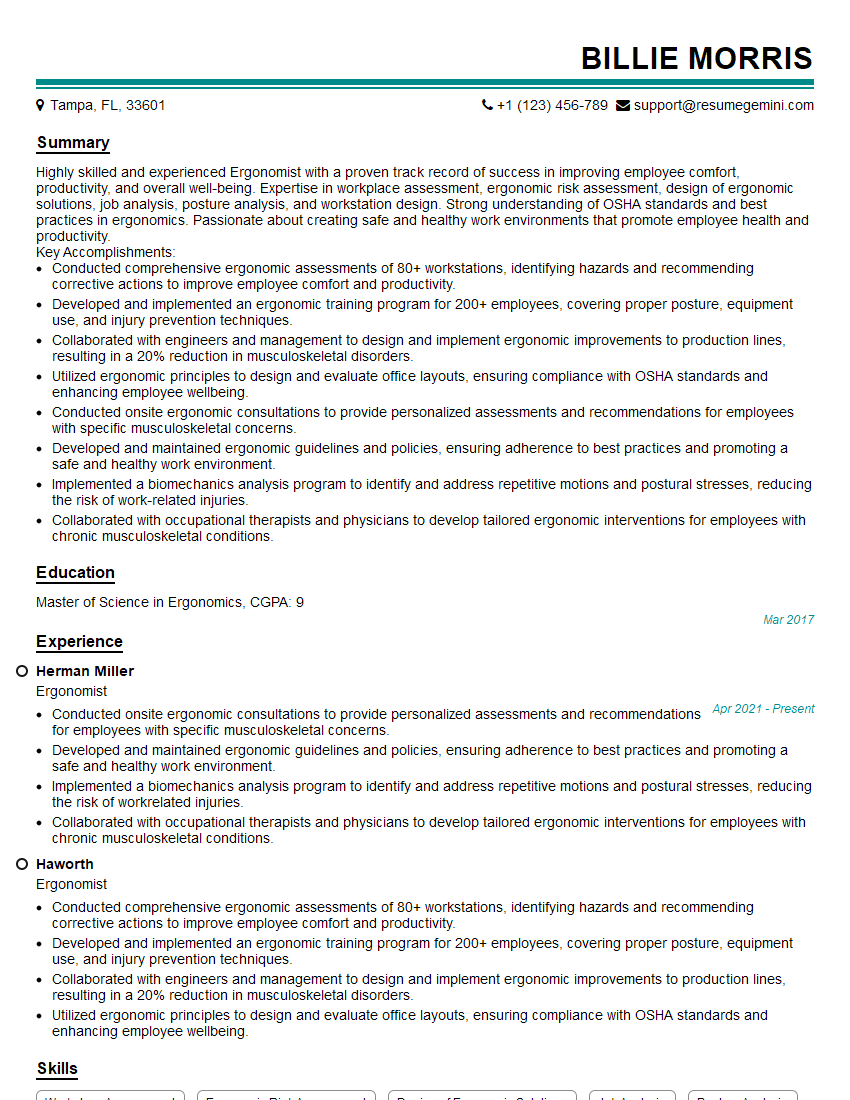

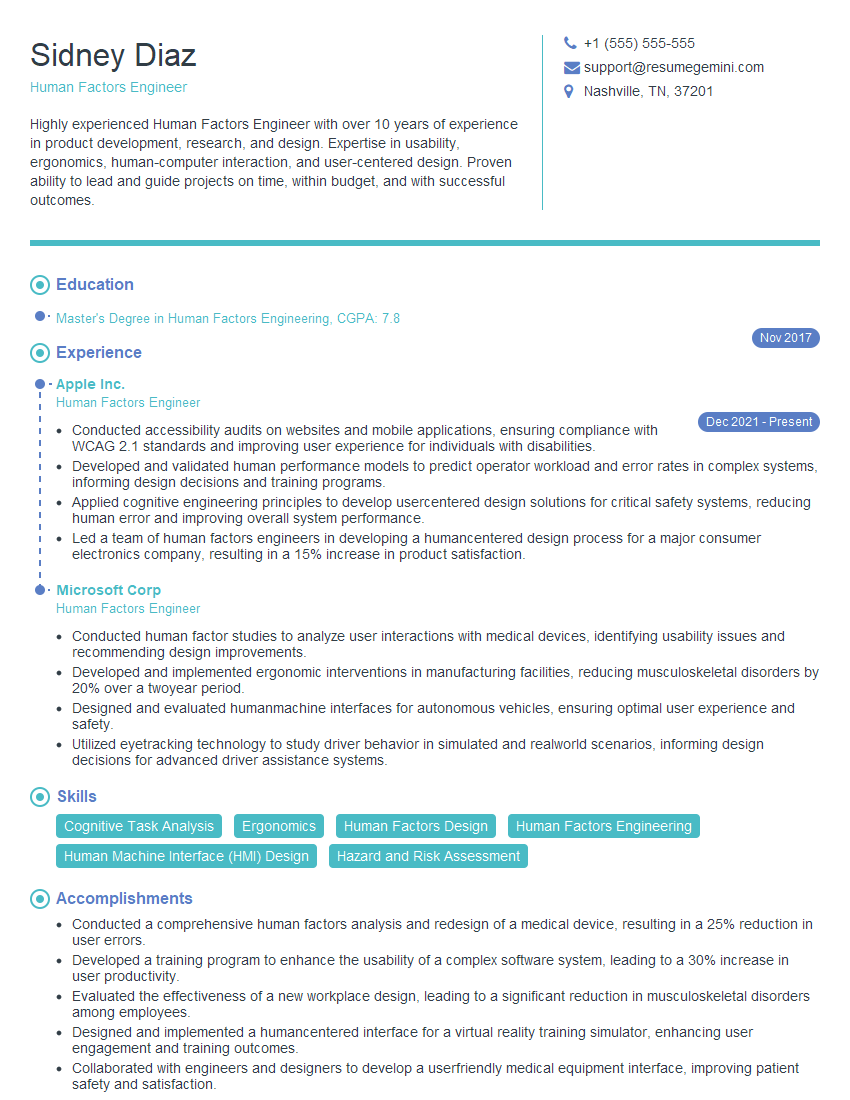

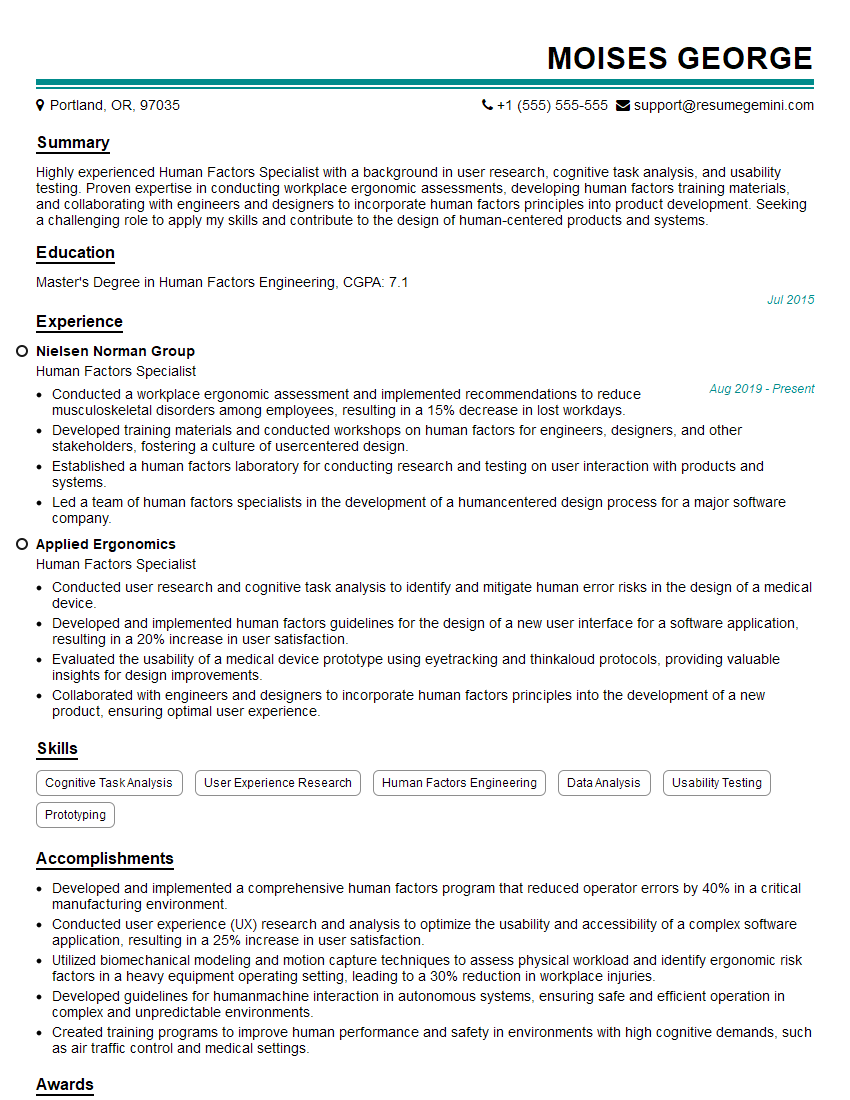

Mastering Human Factors Modeling opens doors to exciting careers in diverse fields, offering opportunities for innovation and impactful contributions to improving safety, efficiency, and user experience. To maximize your job prospects, crafting a compelling and ATS-friendly resume is crucial. ResumeGemini is a trusted resource that can help you build a professional resume tailored to your skills and experience in Human Factors Modeling. We provide examples of resumes specifically designed for this field to give you a head start. Invest time in crafting a strong resume – it’s your first impression on potential employers.
