The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Human Factors Research Methods interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Human Factors Research Methods Interview

Q 1. Explain the difference between formative and summative evaluation in human factors research.

Formative and summative evaluations are two crucial stages in human factors research. Both concern the quality of a design, but they differ significantly in timing and purpose. Think of building a house: formative evaluation is like checking the foundation and walls during construction, while summative evaluation is the final inspection before moving in.

Formative evaluation is conducted during the design process. It involves iterative testing and feedback to identify usability issues early on, allowing for adjustments and improvements before the design is finalized. It’s all about continuous improvement and preventing major problems down the line. For example, during the development of a new medical device, formative evaluation might involve user testing prototypes with healthcare professionals to gather feedback on ease of use, safety features, and workflow integration. Changes are made based on this feedback, leading to a better final product.

Summative evaluation, on the other hand, takes place after the design is complete. Its goal is to assess the final product’s effectiveness and usability against predefined criteria, often through larger-scale studies of user performance and satisfaction. For example, after launching the new medical device, summative evaluation might track its use in real-world clinical settings to measure its impact on patient outcomes and healthcare provider efficiency.

Q 2. Describe three different usability testing methods and their respective strengths and weaknesses.

Usability testing employs various methods to understand how users interact with a system. Here are three common approaches:

- Think-Aloud Protocol: Users verbalize their thoughts and actions while interacting with the system. This provides valuable insights into their cognitive processes and problem-solving strategies. Strength: Offers rich qualitative data and reveals users’ mental models. Weakness: Can be time-consuming and may influence users’ natural behavior.

- Heuristic Evaluation: Experts review the system against established usability heuristics (principles) to identify potential usability problems. Strength: Efficient and cost-effective, especially in early design stages. Weakness: Experts might miss subtle usability issues or lack user perspective.

- A/B Testing: Two versions of a system are compared to determine which performs better based on specific metrics (e.g., task completion rate, error rate). Strength: Provides quantitative data to objectively compare different design options. Weakness: Focuses on specific metrics and may not capture all aspects of usability.

Choosing the right method depends on the project’s goals, resources, and stage of development. Often, a combination of methods provides the most comprehensive understanding of usability.
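When an A/B test compares task completion rates, a two-proportion z-test is one common way to check whether the difference between versions is larger than chance would explain. The sketch below is a minimal Python illustration, assuming binary completion outcomes and reasonably large groups; the counts and the function name `two_proportion_z_test` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, n_a: int, successes_b: int, n_b: int):
    """Compare task completion rates of version A and version B.

    Returns (rate_a, rate_b, z, two_sided_p) using the pooled-variance
    normal approximation.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled completion rate under the null hypothesis that A and B are equal.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_a, p_b, z, p_value


# Hypothetical counts, for illustration only.
rate_a, rate_b, z, p = two_proportion_z_test(120, 200, 145, 200)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p:.4f}")
```

For small samples, an exact test such as Fisher's exact test is a safer choice than the normal approximation.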

Q 3. What are some key ethical considerations in conducting human factors research?

Ethical considerations are paramount in human factors research. Protecting participants’ rights and well-being is crucial. Key considerations include:

- Informed Consent: Participants must understand the study’s purpose, procedures, risks, and benefits before agreeing to participate. This includes the right to withdraw at any time without penalty.

- Confidentiality and Anonymity: Protecting participant data is vital. Researchers should keep participants’ identities confidential and anonymize data whenever possible.

- Minimizing Risk: Researchers should minimize any physical, psychological, or emotional harm to participants. This might involve careful task design or providing debriefing sessions after the study.

- Data Security: Securely storing and handling data to prevent unauthorized access or disclosure.

- Debriefing: Providing participants with information about the study’s findings and addressing any concerns they might have.

Institutional review boards (IRBs) and other research ethics committees play a critical role in ensuring research is conducted ethically. They review research protocols before a study begins to ensure compliance with ethical guidelines.

Q 4. How would you apply heuristic evaluation to assess the usability of a new mobile application?

Heuristic evaluation involves having usability experts assess a system against established usability principles (heuristics) to identify potential problems. To evaluate a new mobile application, I would follow these steps:

- Select Experts: Recruit usability experts with experience in mobile application design and relevant domain knowledge.

- Choose Heuristics: Select a set of established usability heuristics like Nielsen’s 10 Usability Heuristics or those specific to mobile interfaces.

- Individual Evaluations: Each expert independently evaluates the app, noting each usability issue, where it occurs, and which heuristic it violates.

- Consolidate Findings: Gather the findings from all experts into a single list, merging duplicates and identifying problems that several experts found.

- Severity Rating: Assign severity ratings to each consolidated problem, reflecting its likelihood and its impact on user experience, and use these ratings to prioritize the problems.

- Generate Recommendations: Provide concrete recommendations for improving the application’s usability, addressing the identified problems.

This process provides a systematic and efficient way to identify potential usability issues in the app, allowing for design improvements before releasing the app to a larger user base.
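The consolidation and severity-rating steps can be sketched as follows, assuming Nielsen's widely used 0-4 severity scale and that each evaluator rates every consolidated problem independently. The problem list and ratings are hypothetical, and averaging is only one reasonable aggregation rule.

```python
from statistics import mean

# Nielsen's widely used 0-4 severity scale.
SEVERITY_LABELS = {
    0: "not a usability problem",
    1: "cosmetic",
    2: "minor",
    3: "major",
    4: "catastrophe",
}


def prioritize(ratings: dict[str, list[int]]) -> list[tuple[str, float, str]]:
    """Average each problem's ratings across evaluators, most severe first."""
    summary = []
    for problem, scores in ratings.items():
        avg = mean(scores)
        # Round half up to the nearest scale point to pick a label.
        label = SEVERITY_LABELS[int(avg + 0.5)]
        summary.append((problem, avg, label))
    return sorted(summary, key=lambda row: row[1], reverse=True)


# Hypothetical consolidated problems, each rated by three evaluators.
ratings = {
    "No undo after deleting an item": [4, 3, 4],
    "Back button hidden on checkout screen": [3, 4, 3],
    "Inconsistent icon style": [1, 1, 2],
}
for problem, avg, label in prioritize(ratings):
    print(f"{avg:.2f}  {label:<12}  {problem}")
```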

Q 5. Explain the concept of cognitive workload and how it’s measured.

Cognitive workload refers to the mental effort required to perform a task. It’s like the mental “fuel” needed to drive a car. A simple task requires little fuel, while a complex one uses much more. When workload exceeds capacity, performance suffers, leading to errors and stress.

Measuring cognitive workload is crucial to ensure tasks are manageable and user experience is not overly demanding. Several methods exist, categorized as:

- Subjective Measures: Participants rate their perceived mental effort using questionnaires or scales (e.g., NASA-TLX). These are easy to administer but rely on self-report, which can be subjective.

- Objective Physiological Measures: These methods use physiological signals like heart rate, eye tracking, and brain activity (EEG) to assess workload. These are more objective, but can be costly and require specialized equipment.

- Performance-Based Measures: This method measures task performance (e.g., speed, accuracy, error rate) which indirectly indicates workload. Slower speed or more errors might indicate higher workload.

The best approach often combines subjective and objective methods to get a comprehensive understanding of cognitive workload.
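To make the subjective route concrete, the sketch below scores NASA-TLX in its two common forms: raw TLX (the mean of six 0-100 subscale ratings) and weighted TLX, where each subscale's weight is the number of times the participant chose it across 15 pairwise comparisons. The ratings and weights are hypothetical values for one participant.

```python
# The six NASA-TLX subscales, each rated 0-100 with higher meaning more workload
# (the Performance scale is anchored so that higher means poorer perceived performance).
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: dict[str, float]) -> float:
    """Raw TLX: the unweighted mean of the six subscale ratings."""
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


def weighted_tlx(ratings: dict[str, float], weights: dict[str, int]) -> float:
    """Weighted TLX: weights are tallies from the 15 pairwise comparisons."""
    total_weight = sum(weights[s] for s in SUBSCALES)
    if total_weight != 15:
        raise ValueError(f"weights must sum to 15, got {total_weight}")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


# Hypothetical responses from one participant after one task.
ratings = {"mental": 70, "physical": 10, "temporal": 60,
           "performance": 30, "effort": 65, "frustration": 40}
weights = {"mental": 5, "physical": 0, "temporal": 3,
           "performance": 2, "effort": 4, "frustration": 1}
print(f"Raw TLX: {raw_tlx(ratings):.1f}")
print(f"Weighted TLX: {weighted_tlx(ratings, weights):.1f}")
```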

Q 6. What are some common human error models used in human factors analysis?

Human error models help understand the causes of human errors and design systems to mitigate them. Several models exist, each with a different perspective:

- Reason’s Swiss Cheese Model: This model depicts system failures as a consequence of multiple layers of defenses failing simultaneously. Like slices of Swiss cheese, each layer has “holes” representing potential failures. If the holes align, an accident can occur.

- Human Error Classification: This categorizes errors based on their characteristics, such as slips (errors in execution), lapses (errors in memory), mistakes (errors in planning), and violations (deliberate rule-breaking).

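- SHEL Model: This focuses on the interfaces between the human at the center of the system (Liveware, L) and Software (S – procedures, checklists, manuals), Hardware (H – equipment), Environment (E), and other Liveware (L – other people). It considers how mismatches at these interfaces contribute to errors.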

Understanding human error models is crucial in designing systems that anticipate and minimize human error.

Q 7. Describe the principles of human-computer interaction (HCI).

Human-computer interaction (HCI) studies the design and use of computer technology, focusing on interfaces between humans and computers. It aims to create user-friendly and efficient systems.

Key principles of HCI include:

- Usability: Systems should be easy to learn, use, and remember. Usability is an umbrella quality; the next five items are its commonly cited components.

- Learnability: Users should be able to quickly and easily learn how to use the system.

- Efficiency: Users should be able to accomplish tasks quickly and effectively.

- Memorability: Users should be able to easily remember how to use the system after a period of not using it.

- Errors: The system should minimize errors and provide clear, helpful error messages.

- Satisfaction: Users should find the system pleasant and enjoyable to use.

- Accessibility: Systems should be usable by people with disabilities.

Applying these principles leads to systems that are not only functional but also enjoyable and accessible to a broad range of users.

Q 8. How do you conduct a task analysis?

Task analysis is a cornerstone of human factors research, systematically breaking down a task into its constituent parts to understand how humans perform it. It helps identify potential usability issues, inefficiencies, and safety hazards. There are several methods, each with its strengths and weaknesses:

- Hierarchical Task Analysis (HTA): This method decomposes a task into subtasks, then further into sub-subtasks, creating a hierarchical structure. Think of it like an outline for a complex process. For example, analyzing the task of ‘preparing a cup of coffee’ might start with ‘gather materials,’ then branch out to ‘get coffee grounds,’ ‘get water,’ etc.

- Flowcharts: Visual representations that illustrate the sequence of actions within a task. They are especially useful for showing branching paths and decision-making points. Imagine a flowchart for a website checkout process, showcasing different paths depending on payment methods.

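- GOMS (Goals, Operators, Methods, Selection rules): A cognitive model that analyzes skilled task execution in fine detail. It defines the goals the user wants to achieve, the operators (elementary perceptual, motor, and cognitive actions) available to achieve them, the methods (sequences of operators) that accomplish each goal, and the selection rules that determine which method is used when several are available. This is particularly useful for designing user interfaces for software applications, where it can predict how long skilled users will take to complete a task.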

- Cognitive Task Analysis (CTA): This focuses on the mental processes involved in task completion, considering things like memory, attention, and decision-making. A CTA would be beneficial when designing a complex control panel for a spacecraft.

The choice of method depends on the complexity of the task and the research goals. Often, a combination of methods is employed for a comprehensive understanding.
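The GOMS family includes the Keystroke-Level Model (KLM), which predicts expert task time by summing standard operator times. The sketch below assumes the commonly cited operator values from Card, Moran and Newell and a simplified placement of mental-preparation (M) operators; the two save-a-file methods are hypothetical. A selection rule would then decide which method a user applies, for example "use the shortcut if it is remembered".

```python
# Commonly cited KLM operator times in seconds (Card, Moran & Newell).
# K varies with typing skill; 0.20 s corresponds to a good typist.
OPERATOR_TIMES = {
    "K": 0.20,  # keystroke or mouse-button press
    "P": 1.10,  # point with the mouse to a target
    "H": 0.40,  # home hands between keyboard and mouse
    "M": 1.35,  # mental preparation
}


def klm_time(sequence: str) -> float:
    """Predict expert execution time for a space-separated operator sequence."""
    return sum(OPERATOR_TIMES[op] for op in sequence.split())


# Two hypothetical methods for saving a document, starting with hands on the keyboard.
menu_method = "H M P K M P K"  # reach for mouse, open File menu, click Save
shortcut_method = "M K K"      # recall shortcut, press Ctrl, press S
print(f"Menu: {klm_time(menu_method):.2f} s")
print(f"Shortcut: {klm_time(shortcut_method):.2f} s")
```

The predictions hold only for error-free, expert performance, but comparing them is a quick way to justify interface choices before user testing.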

Q 9. Explain the difference between anthropometry and ergonomics.

While both anthropometry and ergonomics are crucial in human factors, they address different aspects of human-system interaction. Anthropometry is the study of human body measurements, including height, weight, reach, and limb dimensions. It provides the foundational data for designing systems that physically accommodate the human body. Think of designing a car seat – anthropometric data ensures it fits a wide range of body sizes comfortably.

Ergonomics, on the other hand, is a broader field that encompasses anthropometry but also considers other factors influencing human performance and well-being in relation to their work environment. It takes into account physiological, psychological, and cognitive aspects. Ergonomics would consider not only the physical dimensions of the car seat but also the driver’s posture, visibility, and the ease of using the controls. Essentially, ergonomics aims to optimize the entire system to match the needs and capabilities of the human user for safety, efficiency, and comfort.
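Anthropometric design often targets a range such as the 5th to 95th percentile of a user population. Assuming a dimension is normally distributed, the value at a given percentile is the mean plus z times the standard deviation, which the sketch computes with Python's `statistics.NormalDist`. The mean and standard deviation are illustrative placeholders, not real population data.

```python
from statistics import NormalDist


def percentile_value(mean: float, sd: float, percentile: float) -> float:
    """Value of a normally distributed body dimension at the given percentile."""
    return NormalDist(mean, sd).inv_cdf(percentile / 100)


# Hypothetical dimension: mean 45.0 cm, SD 2.5 cm (illustrative numbers only).
low = percentile_value(45.0, 2.5, 5)
high = percentile_value(45.0, 2.5, 95)
print(f"Accommodate roughly {low:.1f} cm to {high:.1f} cm")
```

Two cautions apply: some dimensions (body weight, for instance) are skewed rather than normal, and percentiles do not add across dimensions, so a "95th percentile person" does not exist.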

Q 10. What are some common methods for data analysis in human factors research?

Data analysis in human factors research depends heavily on the type of data collected. Common methods include:

- Descriptive Statistics: Calculating measures like mean, median, mode, and standard deviation to summarize the data. This is a starting point for understanding basic trends.

- Inferential Statistics: Using statistical tests (e.g., t-tests, ANOVA, regression analysis) to draw conclusions about populations based on sample data. This helps determine the significance of findings.

- Qualitative Data Analysis: For data like interview transcripts or observation notes, techniques like thematic analysis, content analysis, and grounded theory are used to identify recurring patterns and themes. This helps unveil more nuanced understandings.

- Statistical Software Packages: Tools like SPSS, R, and SAS are essential for conducting more advanced analyses. For example, regression analysis in R could be used to model the relationship between task completion time and system complexity.

The selection of appropriate statistical methods depends on the research question, the type of data (nominal, ordinal, interval, ratio), and the assumptions of the statistical test.
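As a minimal illustration of moving from descriptive to inferential statistics, the sketch below summarizes two conditions and computes Welch's t statistic, a variant of the independent-samples t-test that does not assume equal variances. The completion times are hypothetical. It stops at t and its degrees of freedom; a p-value needs the t distribution, which a package such as SciPy provides (`scipy.stats.ttest_ind(a, b, equal_var=False)` runs the same Welch test).

```python
from math import sqrt
from statistics import mean, median, stdev, variance


def describe(xs: list[float]) -> dict[str, float]:
    """Descriptive statistics for one condition."""
    return {"n": len(xs), "mean": mean(xs), "median": median(xs), "sd": stdev(xs)}


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)  # squared standard error of mean(a)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical task completion times in seconds.
design_a = [42.1, 38.5, 45.0, 50.2, 39.8, 44.3]
design_b = [35.2, 33.9, 40.1, 36.8, 31.5, 38.0]
print("A:", describe(design_a))
print("B:", describe(design_b))
t, df = welch_t(design_a, design_b)
print(f"Welch t = {t:.2f}, df = {df:.1f}")
```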

Q 11. Describe your experience with different types of experimental designs.

My experience encompasses a wide range of experimental designs, including:

- Between-subjects designs: Different groups of participants are assigned to different experimental conditions. For example, comparing the performance of two groups on a driving simulator, one using a new heads-up display and the other using a traditional one.

- Within-subjects designs: The same participants are exposed to all experimental conditions. This reduces individual differences as a source of error. For instance, measuring a participant’s reaction time under various levels of background noise.

- Factorial designs: Incorporating multiple independent variables (factors) and their interactions. A classic example would be testing the effect of both screen size and font size on reading comprehension.

- Repeated measures designs: Similar to within-subjects, but with multiple measurements over time. This can track the effects of training or habituation.

The best design depends on the research question, practical constraints, and ethical considerations. For example, a within-subjects design might be inappropriate if there is a risk of carry-over effects, such as learning or fatigue, between conditions.
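Within-subjects studies usually counterbalance condition order to spread practice and fatigue effects evenly. One standard tool is a balanced Latin square, in which every condition appears once in each position and, across orders, each condition immediately follows every other condition equally often. The sketch below generates one; the four noise-level conditions are hypothetical.

```python
def balanced_latin_square(n: int) -> list[list[int]]:
    """Condition orders (as indices 0..n-1) forming a balanced Latin square.

    For even n, n orders suffice; for odd n, each order is paired with its
    reverse, giving 2n orders.
    """
    # First row follows the pattern 0, 1, n-1, 2, n-2, ...
    first = [0]
    low, high = 1, n - 1
    take_low = True
    while len(first) < n:
        if take_low:
            first.append(low)
            low += 1
        else:
            first.append(high)
            high -= 1
        take_low = not take_low
    # Each later row shifts every entry by the row number (mod n).
    rows = [[(c + r) % n for c in first] for r in range(n)]
    if n % 2 == 1:
        rows += [row[::-1] for row in rows]
    return rows


# Hypothetical within-subjects study with four background-noise conditions.
conditions = ["40 dB", "55 dB", "70 dB", "85 dB"]
orders = balanced_latin_square(len(conditions))
for participant in range(8):
    order = orders[participant % len(orders)]
    print(participant, [conditions[i] for i in order])
```

Counterbalancing spreads carry-over effects across conditions; it does not remove them, so strongly asymmetric carry-over still argues for a between-subjects design.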

Q 12. How do you handle conflicting results from different research methods?

Conflicting results from different research methods necessitate careful consideration. A systematic approach is crucial:

- Examine Methodological Differences: Carefully analyze the differences in the methodologies used. Were the samples comparable? Were the measurement instruments reliable and valid? Did the procedures differ in ways that might explain discrepancies?

- Explore Potential Moderator Variables: Are there factors (e.g., participant demographics, situational contexts) that might moderate the relationship between the independent and dependent variables and explain the differences in findings?

- Qualitative Data Integration: If qualitative data is available, use it to enrich the quantitative findings and explore the ‘why’ behind the inconsistencies. Qualitative insights can help make sense of statistical anomalies.

- Meta-analysis: If multiple studies address the same research question and report comparable effect sizes, a meta-analysis can be conducted to synthesize the findings and quantify how much they vary beyond what sampling error alone would explain.

Often, conflicting results highlight the need for a more nuanced understanding of the phenomenon being studied. They may point towards the inadequacy of any single method to capture the full complexity of human behavior and performance.
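A minimal sketch of the meta-analysis step, assuming each study reports a standardized effect size and its sampling variance: the fixed-effect model weights each study by the inverse of its variance, Cochran's Q measures how much the studies disagree, and I^2 estimates the share of that disagreement due to real between-study differences rather than sampling error. All numbers are hypothetical.

```python
from math import sqrt


def fixed_effect_meta(effects: list[float], variances: list[float]):
    """Inverse-variance fixed-effect pooling with Cochran's Q and I^2."""
    weights = [1 / v for v in variances]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    se = sqrt(1 / sum(weights))
    # Q: weighted squared deviations of each study from the pooled estimate.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variation attributed to between-study heterogeneity.
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, se, q, i2


# Hypothetical standardized effects and variances from a lab experiment,
# a field study, a simulator study, and a survey study.
effects = [0.62, 0.15, 0.48, -0.05]
variances = [0.04, 0.02, 0.09, 0.03]
pooled, se, q, i2 = fixed_effect_meta(effects, variances)
print(f"pooled = {pooled:.2f} (95% CI {pooled - 1.96 * se:.2f} to {pooled + 1.96 * se:.2f})")
print(f"Q = {q:.2f} on {len(effects) - 1} df, I^2 = {i2:.0%}")
```

A high I^2 is a cue to look for moderator variables, and with methodologically diverse studies a random-effects model (such as DerSimonian-Laird) is usually the more defensible choice.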

Q 13. Explain the importance of iterative design in human factors.

Iterative design is fundamental to effective human factors engineering. It involves cyclical processes of design, testing, and refinement, with feedback informing each stage. Think of building a house: you wouldn’t just build it all at once without checking the foundation or walls along the way. Iterative design mimics this approach.

The process typically begins with an initial design concept. This is then tested using appropriate human factors methods (e.g., usability testing, heuristic evaluation). The results are analyzed, leading to improvements in the design, which is then retested. This cycle continues until the design meets the specified performance criteria and user needs. For instance, a software application might undergo several iterations of usability testing, each revealing new usability problems and suggesting improvements to the interface. This continuous feedback loop makes a user-centered, effective, and enjoyable final product far more likely.
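One quantitative argument for several small rounds of testing over a single large one comes from the problem-discovery model popularized by Nielsen and Landauer: if each participant reveals a given problem with probability p, then n participants are expected to reveal a share 1 - (1 - p)^n of the problems. The sketch assumes p = 0.31, the often-quoted average from their work; real values vary widely across products and tasks.

```python
from math import ceil, log


def proportion_found(p: float, n: int) -> float:
    """Expected share of problems found by n participants: 1 - (1 - p)^n."""
    return 1 - (1 - p) ** n


def participants_needed(p: float, target: float) -> int:
    """Smallest n whose expected share of problems found reaches the target."""
    return ceil(log(1 - target) / log(1 - p))


p = 0.31  # assumed per-participant detection probability
for n in (1, 3, 5, 10):
    print(f"{n:>2} participants: {proportion_found(p, n):.0%} of problems")
print("Participants for 85%:", participants_needed(p, 0.85))
```

Because returns diminish quickly, fixing what a handful of participants reveal and then retesting tends to uncover more problems than spending the same participants on one round. The model assumes every problem is equally detectable, which rarely holds exactly.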

Q 14. How do you ensure the generalizability of your research findings?

Generalizability is the extent to which the findings from a study apply to a broader population beyond the specific sample studied. Several strategies enhance generalizability:

- Representative Sampling: Using a sample that accurately reflects the characteristics of the target population. For example, if studying the usability of a website for older adults, the sample should represent the diversity in age, technological proficiency, and health status within this population.

- Appropriate Sample Size: A larger, more diverse sample increases the confidence in generalizing the results. Statistical power analysis is used to determine the appropriate sample size to detect meaningful effects.

- Multiple Contexts and Settings: Conducting studies in various settings (e.g., laboratory, field) can help determine the robustness of findings and their applicability across different environments.

- Replication Studies: Independent researchers repeating the study with different samples and potentially modified procedures can strengthen the reliability and generalizability of the results. If different teams obtain similar findings, the confidence in generalizability increases significantly.

It is essential to acknowledge the limitations of the research and to clearly state the population to which the findings can realistically be generalized. No study can guarantee perfect generalizability, but employing these strategies maximizes the chances of obtaining meaningful and widely applicable results.
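For representative sampling, one simple technique is proportional allocation: divide a fixed sample across strata (here, age bands) in proportion to their share of the target population. The sketch uses largest-remainder rounding so the quotas sum exactly to the sample size; the population counts are hypothetical.

```python
from math import floor


def proportional_quotas(population_counts: dict[str, int], sample_size: int) -> dict[str, int]:
    """Allocate a fixed sample across strata in proportion to population size.

    Uses largest-remainder rounding so the quotas sum exactly to sample_size.
    """
    total = sum(population_counts.values())
    exact = {k: sample_size * v / total for k, v in population_counts.items()}
    quotas = {k: floor(x) for k, x in exact.items()}
    leftover = sample_size - sum(quotas.values())
    # Give the remaining places to the strata with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda k: exact[k] - quotas[k], reverse=True)
    for k in by_remainder[:leftover]:
        quotas[k] += 1
    return quotas


# Hypothetical population counts for age bands among older adults.
population = {"65-74": 550, "75-84": 320, "85+": 130}
print(proportional_quotas(population, sample_size=40))
```

Stratifying on several characteristics at once (age, technological proficiency, health status) multiplies the number of cells, so small studies often stratify on the one or two variables most likely to affect the outcome.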

Q 15. What are some key considerations for designing accessible interfaces?

Designing accessible interfaces means creating products and services that are usable by people with a wide range of abilities and disabilities. This goes beyond simply adhering to accessibility guidelines; it requires a deep understanding of inclusive design principles.

- Consider diverse impairments: Think beyond visual impairments (blindness, low vision). Consider motor impairments (limited dexterity, tremors), cognitive impairments (learning disabilities, memory issues), auditory impairments (deafness, hard of hearing), and neurological conditions (e.g., epilepsy).

- Employ multiple modalities: Don’t rely solely on visual information. Use auditory cues, alternative text for images, and keyboard navigation. For example, providing audio descriptions for videos and clear, concise text alternatives for images improves accessibility for visually impaired users.

- Follow accessibility guidelines: Adhere to standards like WCAG (Web Content Accessibility Guidelines) and Section 508. These provide a framework for creating accessible digital content.

- Usability testing with diverse participants: Incorporate participants with different abilities into usability testing. Observing how they interact with your interface reveals critical usability issues that might otherwise go unnoticed.

- Simple and intuitive design: Avoid complex layouts, jargon, and unnecessary steps. A clear and consistent interface reduces cognitive load for all users.

For example, designing a website with clear visual hierarchy, sufficient color contrast, and keyboard-only navigation greatly enhances its accessibility.
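Sufficient color contrast can be checked numerically. WCAG 2.x defines a contrast ratio from the relative luminance of two colors and, at Level AA, requires at least 4.5:1 for normal text and 3:1 for large text. The sketch below implements that formula for hex colors; the function names are illustrative.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1:1 (no contrast) to 21:1 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


for fg in ("#767676", "#777777"):
    ratio = contrast_ratio(fg, "#FFFFFF")
    verdict = "passes" if ratio >= 4.5 else "fails"
    print(f"{fg} on white: {ratio:.2f}:1, {verdict} AA for normal text")
```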

Q 16. Describe your experience with eye-tracking technology.

I have extensive experience using eye-tracking technology in various human factors research projects. I’ve employed both mobile and stationary eye trackers to understand user attention, visual search patterns, and cognitive load during human-computer interaction. The data provides invaluable insights into where users look, how long they fixate on specific elements, and how their gaze patterns change in response to different interface designs.

In one project, we used eye-tracking to evaluate the effectiveness of a new dashboard design for air traffic controllers. The results revealed that certain critical information was often overlooked, leading to design modifications that improved situational awareness and task performance. In another study, we used eye-tracking to assess the usability of a mobile application for managing medication. We identified areas where users struggled to locate information, prompting improvements to the application’s layout and navigation.

I’m proficient in analyzing eye-tracking data using software like Tobii Studio and SR Research’s EyeLink software. I’m experienced in interpreting heatmaps, fixation durations, saccades, and other metrics to draw meaningful conclusions about user behavior.
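Fixation metrics depend on how raw gaze samples are grouped into fixations. Below is a minimal sketch of one common approach, the velocity-threshold (I-VT) algorithm, assuming gaze positions already converted to degrees of visual angle, a 30°/s threshold (a commonly used default), and no gap filling or noise filtering. Commercial analysis packages implement their own, more elaborate filters.

```python
from dataclasses import dataclass
from math import hypot
from statistics import mean


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x_deg: float  # mean horizontal gaze position, degrees of visual angle
    y_deg: float  # mean vertical gaze position, degrees of visual angle

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def ivt_fixations(t_ms, x_deg, y_deg, velocity_threshold=30.0, min_duration_ms=60.0):
    """Velocity-threshold (I-VT) fixation detection.

    Consecutive samples whose point-to-point velocity stays below
    velocity_threshold (deg/s) form one fixation; a sample arriving at or
    above the threshold ends the current group.
    """
    fixations = []
    start = 0  # index of the first sample in the current group
    for i in range(1, len(t_ms) + 1):
        if i == len(t_ms):
            boundary = True  # end of recording closes the last group
        else:
            dt_s = (t_ms[i] - t_ms[i - 1]) / 1000
            velocity = hypot(x_deg[i] - x_deg[i - 1], y_deg[i] - y_deg[i - 1]) / dt_s
            boundary = velocity >= velocity_threshold
        if boundary:
            end = i - 1
            if t_ms[end] - t_ms[start] >= min_duration_ms:
                idx = range(start, end + 1)
                fixations.append(Fixation(t_ms[start], t_ms[end],
                                          mean(x_deg[j] for j in idx),
                                          mean(y_deg[j] for j in idx)))
            start = i
    return fixations


# Synthetic 100 Hz recording: gaze rests at (0, 0), jumps 10 degrees, rests again.
t = [i * 10.0 for i in range(40)]
x = [0.0] * 20 + [10.0] * 20
y = [0.0] * 40
for f in ivt_fixations(t, x, y):
    print(f"fixation at ({f.x_deg:.1f}, {f.y_deg:.1f}) deg for {f.duration_ms:.0f} ms")
```

Once fixations are detected, metrics such as fixation count, mean fixation duration, or dwell time per area of interest follow from simple aggregation.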

Q 17. How do you incorporate user feedback into the design process?

User feedback is paramount in the design process. It’s the voice of the customer, providing invaluable insights into their needs, preferences, and pain points. I incorporate user feedback throughout the design lifecycle using various methods:

- Usability testing: Observing participants as they interact with the system provides direct feedback on usability issues. I conduct both moderated and unmoderated tests, using think-aloud protocols to understand user thought processes.

- Surveys and questionnaires: These are efficient tools for collecting quantitative and qualitative data on user satisfaction, preferences, and perceived ease of use. I employ both pre- and post-testing surveys to track changes in user attitudes.

- Interviews: In-depth interviews provide rich qualitative data, allowing users to elaborate on their experiences and identify unmet needs. This is particularly useful for understanding the ‘why’ behind user behavior.

- A/B testing: Comparing different design options through A/B testing allows for data-driven decision-making. This method efficiently identifies which design performs better based on user interactions.

- Feedback forms and in-app feedback mechanisms: These allow for continuous feedback collection throughout the system’s lifecycle.

The feedback is analyzed using both qualitative and quantitative methods. For example, I may use thematic analysis to identify recurring patterns in user comments from interviews, while analyzing survey results to identify statistical differences in user satisfaction across different design variations.

Q 18. What are some common software tools used for human factors analysis?

Many software tools facilitate human factors analysis. The choice depends on the specific research question and data type.

- Eye-tracking software: Tobii Studio, SR Research EyeLink, and SMI BeGaze are commonly used for analyzing eye-tracking data.

- Usability testing software: UserTesting, Optimal Workshop, and TryMyUI allow for remote usability testing and data collection.

- Statistical software: SPSS, R, and SAS are used for statistical analysis of quantitative data collected through surveys, experiments, and usability testing.

- Qualitative data analysis software: NVivo and Atlas.ti are used for qualitative data analysis, such as analyzing interview transcripts and user feedback.

- Simulation software: Specialized software for simulating specific environments or tasks is often used, such as flight simulators for aviation studies or driving simulators for automotive studies.

Beyond these dedicated tools, general-purpose spreadsheet software such as Microsoft Excel is often used for basic data management and analysis.

Q 19. Explain your understanding of human factors principles related to safety.

Human factors principles related to safety focus on minimizing human error and maximizing system resilience to prevent accidents and injuries. Key principles include:

- Human error analysis: Understanding the causes of human error, such as slips, lapses, mistakes, and violations, is crucial for designing safer systems. Frameworks like the Human Factors Analysis and Classification System (HFACS) help identify these causes.

- Task analysis: Defining tasks, identifying critical steps, and understanding the human-machine interface are essential for designing systems that are easy to understand and operate safely.

- Workload management: Preventing cognitive overload and ensuring appropriate task allocation are vital for maintaining safety. Techniques like workload assessment and task prioritization are used.

- Automation design: Automation should be designed to augment, not replace, human capabilities. Careful consideration of how automation integrates with human operators is key to maintaining safety.

- Safety culture: A strong safety culture emphasizes proactive risk management, training, and communication. This ensures that safety is a shared value and responsibility within an organization.

For example, designing a user interface with clear visual cues and alerts for critical events reduces the likelihood of human error and enhances safety.

Q 20. How would you design a study to evaluate the effectiveness of a new safety intervention?

To evaluate the effectiveness of a new safety intervention, I would design a study using a rigorous experimental design. A controlled experiment, comparing a group exposed to the intervention with a control group that is not, is ideal.

- Define the intervention: Clearly specify the safety intervention being evaluated.

- Identify outcome measures: Determine the key safety metrics to track. Examples include accident rates, near-miss incidents, and task completion time.

- Recruit participants: Recruit a representative sample of participants and randomly assign them to either the intervention group or the control group. Random assignment balances known and unknown confounding factors across groups on average, so observed differences can more confidently be attributed to the intervention.

- Data collection: Collect data on the outcome measures before and after the intervention. Data might be gathered through observations, accident reports, surveys, or physiological measures.

- Statistical analysis: Analyze the data using appropriate statistical methods to determine if the intervention had a statistically significant effect on the outcome measures.

- Report the findings: Document the study methodology, results, and conclusions clearly and concisely.

For example, if evaluating a new training program for crane operators, I would measure accident rates and near-miss incidents in both the training group and a control group before and after the training to determine its effectiveness. A pre-post design with a control group would allow us to determine if any changes in safety performance were due to the training program itself, rather than other factors.
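The pre-post design with a control group amounts to a difference-in-differences comparison: the change in the trained group minus the change in the control group, which removes trends that affect both groups alike. The sketch below assumes incident counts normalized to a rate per 200,000 hours worked (a common occupational-safety convention); all counts and hours are hypothetical.

```python
def incidence_rate(incidents: int, hours_worked: float, base_hours: float = 200_000) -> float:
    """Incidents per base_hours of exposure (200,000 h ~ 100 full-time workers for a year)."""
    return incidents * base_hours / hours_worked


def difference_in_differences(treat_pre, treat_post, control_pre, control_post) -> float:
    """Change in the trained group minus change in the control group."""
    return (treat_post - treat_pre) - (control_post - control_pre)


# Hypothetical near-miss counts and exposure hours for crane operators.
trained_pre = incidence_rate(12, 300_000)
trained_post = incidence_rate(6, 300_000)
control_pre = incidence_rate(10, 250_000)
control_post = incidence_rate(9, 250_000)
effect = difference_in_differences(trained_pre, trained_post, control_pre, control_post)
print(f"Estimated training effect: {effect:+.1f} near-misses per 200,000 h")
```

This gives a point estimate only and assumes both groups would have followed parallel trends without the training; a significance test would typically come from a count model such as Poisson regression with a group-by-period interaction.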

Q 21. What is the difference between usability and user experience?

While usability and user experience (UX) are closely related, they are distinct concepts. Usability focuses on the ease of use and efficiency of a system, while UX encompasses the overall user satisfaction and emotional response to the system.

- Usability: Concerns the effectiveness, efficiency, and satisfaction with which users can achieve their goals using a system. It’s measurable and often assessed through metrics like task completion rate, error rate, and time on task.

- User Experience (UX): Encompasses the totality of a user’s interaction with a system, including its usability, emotional response, and overall satisfaction. It’s broader and more holistic than usability and includes aspects such as aesthetics, branding, and emotional engagement.

Think of it this way: a system can be usable (easy to use and efficient) but still offer a poor UX if it’s unattractive or boring. A system with excellent UX is usually also highly usable, but high usability doesn’t automatically translate to a positive UX. For instance, a simple calculator might be highly usable but offer a mediocre UX if its design is visually unappealing. Conversely, a social media platform might have stunning visuals and sophisticated animations but poor usability if users struggle to find essential functions, which ultimately drags down its UX.

Q 22. Explain your experience using statistical software (e.g., SPSS, R).

I’m highly proficient in several statistical software packages, most notably SPSS and R. My experience spans the entire analytical process, from data cleaning and manipulation to advanced statistical modeling and visualization. In SPSS, I’m comfortable performing a wide range of analyses, including t-tests, ANOVAs, regression analyses, and factor analysis. I frequently utilize SPSS’s graphical capabilities to create compelling visuals for presentations and reports. My R skills are equally robust. I leverage R’s extensive libraries, such as ggplot2 for data visualization and dplyr for data manipulation, to perform complex statistical modeling and custom analyses. For example, in a recent study on human-computer interaction, I used R to perform a survival analysis to model user task completion times, identifying key factors influencing performance. This allowed us to make data-driven recommendations for interface improvements.

Beyond specific software, my strength lies in understanding the underlying statistical principles. This ensures I choose the appropriate methods and interpret results accurately, avoiding common pitfalls associated with statistical analysis in human factors research.
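Survival analysis suits task completion times because some participants never finish, for example when a session hits its time limit; those observations are censored rather than discarded. A minimal Kaplan-Meier estimator, written in Python purely for illustration (in R, the `survival` package's `survfit` does this), might look like the sketch below; the times and outcomes are hypothetical.

```python
def kaplan_meier(times: list[float], completed: list[bool]) -> list[tuple[float, float]]:
    """Kaplan-Meier estimate of the share of participants still working on a task.

    completed[i] is True if participant i finished at times[i] and False if the
    observation was censored (e.g. the session hit its time limit).
    Returns (time, estimate) pairs at each distinct completion time.
    """
    data = sorted(zip(times, completed))
    at_risk = len(data)
    still_working = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        finished = censored = 0
        # Count everyone whose observation ends at this exact time.
        while i < len(data) and data[i][0] == t:
            if data[i][1]:
                finished += 1
            else:
                censored += 1
            i += 1
        if finished:
            still_working *= 1 - finished / at_risk
            curve.append((t, still_working))
        # Participants who finished or were censored leave the risk set.
        at_risk -= finished + censored
    return curve


# Hypothetical completion times in seconds; False marks a timed-out session.
times = [30, 45, 45, 60, 90, 120]
completed = [True, True, False, True, True, False]
curve = kaplan_meier(times, completed)
median_time = next((t for t, s in curve if s <= 0.5), None)
print(curve)
print("Median completion time:", median_time)
```

Reading off the first time the estimate drops to 0.5 or below gives a median completion time that accounts for censored participants, which a simple average of completed times would not.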

Q 23. How do you determine the appropriate sample size for a human factors study?

Determining the appropriate sample size for a human factors study is crucial for obtaining reliable results with adequate statistical power. There is no one-size-fits-all answer; it requires careful consideration of several factors. The primary considerations are the desired level of power, the expected effect size, the significance level (alpha), and the variability of the data.

Let’s break this down: Power refers to the probability of correctly rejecting the null hypothesis when it’s false. A higher power (typically 80% or higher) means a lower risk of missing a true effect. The effect size represents the magnitude of the difference you expect to observe between groups or conditions. A larger effect size requires a smaller sample size. The significance level (alpha), usually set at 0.05, determines the probability of incorrectly rejecting the null hypothesis (Type I error). Finally, the variability of your data (standard deviation) impacts sample size; higher variability requires a larger sample size.

I typically use power analysis software or online calculators to determine the appropriate sample size. These tools take the above factors as input and provide the necessary sample size. For example, if I’m conducting a study comparing two user interface designs, I’d estimate the effect size based on previous research or pilot studies, input this into a power analysis calculator along with my desired power and alpha, and then obtain the required sample size for each group. Failing to adequately address sample size can lead to inconclusive results and wasted resources.
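For a two-group comparison, the normal-approximation formula n = 2((z_{1-α/2} + z_{1-β}) / d)^2 per group, where d is the standardized effect size (Cohen's d), shows how these inputs interact. The sketch assumes a two-sided test and equal group sizes; the function name is illustrative.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size_d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size per group for a two-sided, two-sample comparison."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = z.inv_cdf(power)           # quantile matching the desired power
    return ceil(2 * ((z_alpha + z_beta) / effect_size_d) ** 2)


for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: {n_per_group(d)} participants per group")
```

With d = 0.5, alpha = 0.05, and 80% power this gives 63 per group; exact t-based calculators (G*Power or R's `power.t.test`) return 64, because the normal approximation slightly underestimates. Halving the expected effect size roughly quadruples the required sample.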

Q 24. Describe a time you had to overcome a challenge in a human factors project.

In a recent project evaluating the usability of a new medical device, we encountered unexpected challenges during user testing. We initially ran the study in a highly controlled laboratory setting, but participants struggled to perform tasks as naturally as they would in a real clinical environment. This undermined the ecological validity of our findings and raised doubts about how well the data reflected real-world use.

To overcome this, we adapted our methodology in three ways. We added contextual inquiry, moved observations into a simulated clinical setting, and gathered user feedback throughout the process. This meant revisiting our research design, modifying our protocols, and extending the project timeline. Although challenging, this pivot yielded far more valuable and realistic results than the original rigid design could have. The experience reinforced that unexpected issues frequently arise in human factors research, and that adaptability and iterative design are key to overcoming them.

Q 25. What are your strengths and weaknesses as a human factors researcher?

My strengths as a human factors researcher include strong analytical ability, a keen eye for detail, and the capacity to translate complex data into actionable insights. I’m proficient in a range of research methods, from experimental designs to qualitative techniques such as interviews and usability testing. I also communicate well, presenting technical information clearly and concisely to both technical and non-technical audiences.

One area I’m working to improve is advanced statistical modeling, specifically Bayesian statistics. I have a foundational understanding, and I am pursuing further training and real-world applications to deepen my expertise. This kind of continuous learning is vital in a constantly evolving field.

Q 26. What are your salary expectations?

My salary expectations are in line with the industry standard for a human factors researcher with my experience and qualifications. I am open to discussing a specific salary range after learning more about the comprehensive compensation package offered for this position.

Q 27. Why are you interested in this specific position?

I’m particularly interested in this position because of [Company Name]’s commitment to [mention specific company values or projects that align with your interests]. The opportunity to contribute to [mention specific projects or tasks] within a team that values [mention specific qualities, e.g., innovation, collaboration] strongly resonates with my professional goals. My skills and experience in [mention specific skills relevant to the position] are directly applicable to the challenges outlined in the job description, and I am confident that I can make significant contributions to your team.

Q 28. Where do you see yourself in five years?

In five years, I see myself as a highly respected senior human factors researcher at [Company Name], making significant contributions to product development and design. I envision myself leading projects, mentoring junior researchers, and advancing human factors principles within the organization. I also hope to publish my research findings in reputable journals and present at international conferences. My goal is not just to be technically proficient but also to be a recognized leader in the field.

Key Topics to Learn for Human Factors Research Methods Interview

- User-Centered Design Principles: Understanding core principles like usability, accessibility, and human error prevention. Practical application: Analyzing user interfaces for potential usability issues and proposing design improvements.

- Experimental Design & Data Analysis: Mastering various experimental designs (e.g., A/B testing, within-subjects, between-subjects) and statistical methods for analyzing human performance data. Practical application: Designing an experiment to evaluate the effectiveness of a new safety feature and interpreting the results.

- Cognitive Ergonomics: Exploring human cognitive processes (attention, memory, decision-making) and their impact on system design. Practical application: Designing a complex control panel that minimizes cognitive workload and maximizes operator performance.

- Human-Computer Interaction (HCI): Understanding the principles of designing effective and efficient interfaces between humans and computers. Practical application: Evaluating the usability of a software application and suggesting improvements based on user feedback.

- Data Collection Methods: Familiarity with various data collection techniques (e.g., questionnaires, interviews, observation, physiological measures). Practical application: Selecting the most appropriate method for collecting data in a specific human factors research study.

- Ethical Considerations in Research: Understanding ethical guidelines and best practices in conducting human factors research. Practical application: Ensuring informed consent and maintaining participant confidentiality in a research study.

- Human Factors in Specific Domains: Exploring the application of human factors principles in specific industries (e.g., aviation, automotive, healthcare). Practical application: Analyzing human error contributing factors in a specific industrial accident and proposing preventative measures.
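The "Experimental Design & Data Analysis" topic above distinguishes within-subjects from between-subjects designs, and that choice changes how the data are analyzed. The minimal sketch below uses only Python's standard library. It computes a paired t statistic, for when the same participants try both designs, and Welch's t statistic, for when the groups are independent. The function names and task-time data are hypothetical. P-values require the t distribution and are left to a statistics package.

```python
import math
import statistics


def paired_t(a, b):
    """t statistic for a within-subjects design: a[i] and b[i] come from
    the same participant, so the test runs on per-participant differences."""
    if len(a) != len(b):
        raise ValueError("paired samples must have the same length")
    diffs = [x - y for x, y in zip(a, b)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / se


def welch_t(a, b):
    """Welch's t statistic for a between-subjects design: a and b come from
    independent groups, and equal variances are not assumed."""
    se = math.sqrt(
        statistics.variance(a) / len(a) + statistics.variance(b) / len(b)
    )
    return (statistics.mean(a) - statistics.mean(b)) / se


# Hypothetical task times (seconds) for interface A and interface B.
a = [12, 15, 11, 14, 13]
b = [10, 14, 9, 13, 11]
print(f"treated as within-subjects:  t = {paired_t(a, b):.2f}")
print(f"treated as between-subjects: t = {welch_t(a, b):.2f}")
```

With these numbers, the paired statistic is much larger (about 6.53 versus 1.37). This is because each participant serves as their own control, which removes between-participant variability. The same data are analyzed both ways here purely for illustration, since a real study fixes its design in advance.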

Next Steps

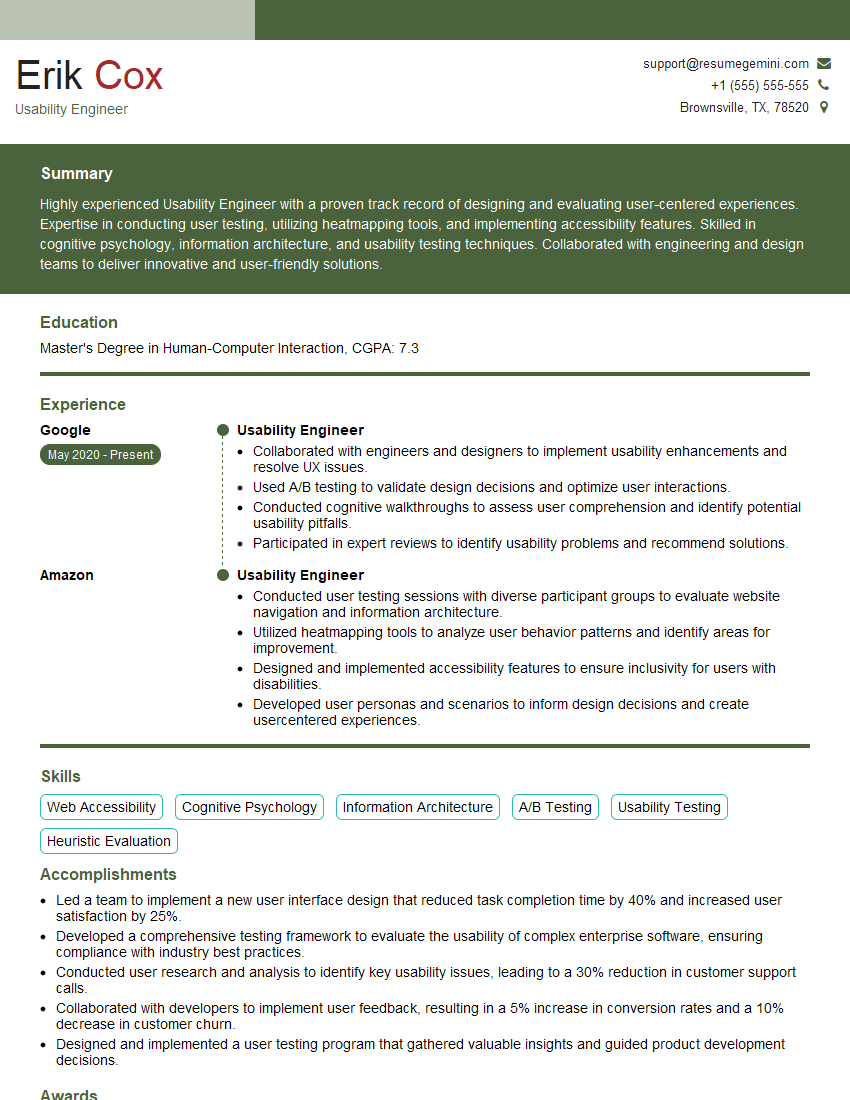

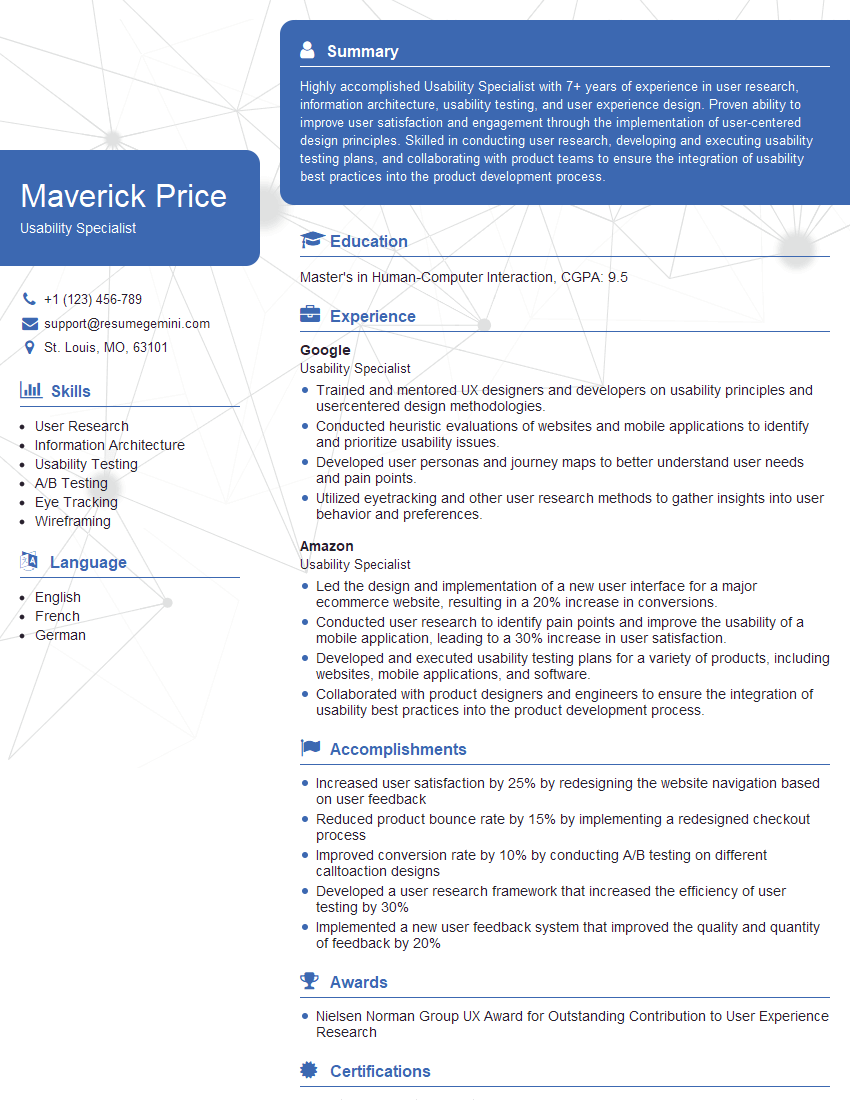

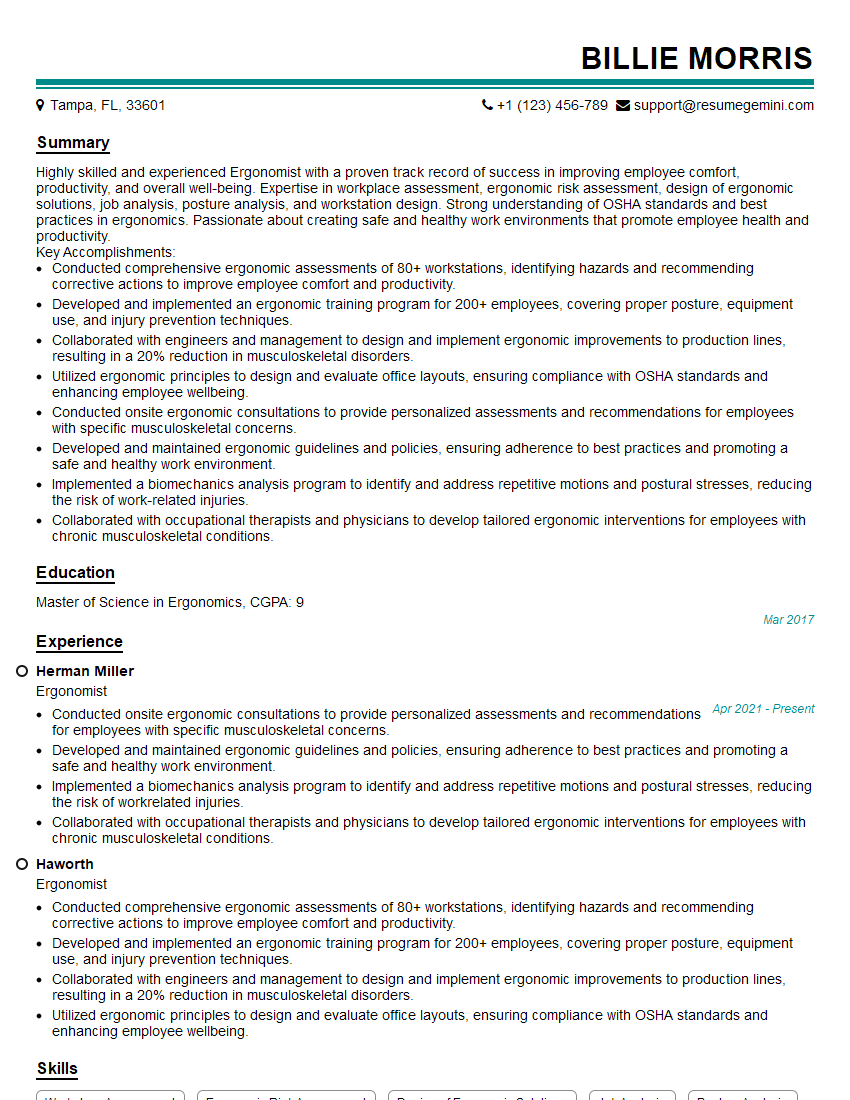

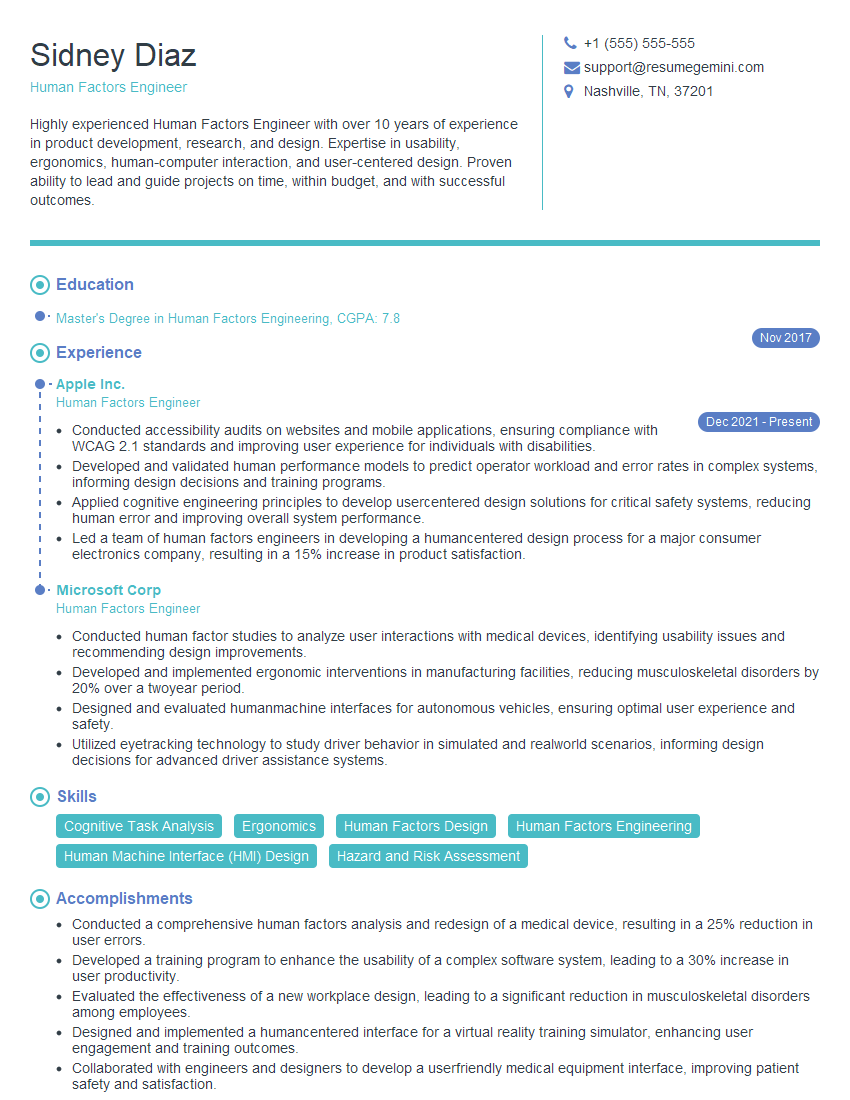

Mastering Human Factors Research Methods is crucial for a successful and fulfilling career. A strong understanding of these principles opens doors to exciting opportunities in various fields. To maximize your job prospects, crafting an ATS-friendly resume is vital. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your skills and experience effectively. Examples of resumes tailored to Human Factors Research Methods are available to guide you through the process. Invest time in crafting a compelling resume – it’s your first impression with potential employers.
