Preparation is the key to success in any interview. In this post, we’ll explore key interview questions on identifying and mitigating network anomalies and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Identify and Mitigate Network Anomalies Interview

Q 1. Explain the difference between a network anomaly and a security incident.

A network anomaly is any deviation from the expected or normal network behavior. Think of it like a hiccup in the smooth operation of your network. It might be unusual traffic patterns, unexpected device activity, or a sudden spike in resource consumption. An anomaly isn’t necessarily malicious; it could be a legitimate event that simply deviates from the established baseline. For example, a sudden increase in network traffic during a company-wide software update is an anomaly but not necessarily a security incident.

A security incident, on the other hand, is a violation of the network’s security policy that could compromise confidentiality, integrity, or availability (the CIA triad). A security incident usually surfaces as an anomaly, but not every anomaly is a security incident. A successful intrusion, a data breach, and a denial-of-service attack are all examples of security incidents. They represent a threat that requires immediate action and remediation.

Q 2. Describe common methods for detecting network anomalies.

Detecting network anomalies involves employing various methods, each with its strengths and weaknesses. These methods include:

- Statistical analysis: This approach uses statistical models to establish a baseline for normal network behavior and then flags any significant deviations from this baseline. This could involve monitoring metrics like bandwidth usage, packet rates, and connection attempts.

- Machine learning (ML): ML algorithms can identify complex patterns and anomalies that might be missed by simpler statistical methods. They learn from historical network data to identify deviations from the norm and flag potential threats.

- Signature-based detection: This traditional approach relies on pre-defined signatures (patterns) of known malicious activities. While effective against known attacks, it’s less effective against zero-day exploits or novel attack techniques.

- Heuristic analysis: This method uses rules and heuristics to identify suspicious behavior, even if it doesn’t match a known signature. This might flag unusual connections to known malicious IP addresses or unusual access attempts.

- Network flow analysis: Examining the flow of network traffic can reveal anomalies, such as unusual communication patterns or unexpected data transfers between network segments.

Often, a combination of these methods is used for comprehensive anomaly detection, providing a multi-layered defense.
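To make the statistical approach concrete, here is a minimal sketch in Python. The packet-rate figures, the function name `zscore_anomalies`, and the cutoff of three standard deviations are illustrative assumptions, not values from a real deployment.

```python
from statistics import mean, stdev

def zscore_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value, z) for observed samples far from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # Flat baseline: any value that differs from it is a deviation.
        return [(i, v, float("inf")) for i, v in enumerate(observed) if v != mu]
    flagged = []
    for i, v in enumerate(observed):
        z = (v - mu) / sigma  # distance from the baseline in standard deviations
        if abs(z) > threshold:
            flagged.append((i, v, z))
    return flagged

# Hypothetical packets-per-second samples: a quiet baseline, then new observations.
baseline_pps = [980, 1010, 995, 1005, 990, 1020, 1000, 985, 1015, 1000]
observed_pps = [1003, 998, 4200, 1011]

for index, value, z in zscore_anomalies(baseline_pps, observed_pps):
    print(f"sample {index}: {value} packets/s (z = {z:.1f})")
```

Only the 4200 packets/s sample is flagged here. In practice the baseline would be recomputed regularly, since a stale baseline is a common source of false alerts.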

Q 3. How do you prioritize network anomalies based on severity and impact?

Prioritizing network anomalies requires a structured approach. We use a framework that considers both severity and impact.

- Severity: This focuses on the inherent risk of the anomaly. A high-severity anomaly might indicate a critical vulnerability or a direct threat to network security, while low severity suggests a minor issue with little impact.

- Impact: This looks at the potential consequences of the anomaly. A high-impact anomaly could result in significant downtime, data loss, financial damage, or reputational harm. A low impact anomaly might be more of an annoyance than a serious threat.

I typically use a matrix combining severity and impact levels to categorize anomalies. High severity and high impact anomalies receive immediate attention; low severity and low impact anomalies might be investigated later. This matrix ensures that resources are focused on the most critical threats.

For example, a denial-of-service attack (high severity, high impact) would take precedence over unusual login attempts from an unknown location (moderate severity, low impact).
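A severity and impact matrix like this can be sketched in a few lines of Python. The three-level scale and the choice to multiply the two levels into one score are assumptions made for illustration; many teams use a lookup table instead.

```python
# Hypothetical three-level scale shared by severity and impact.
LEVELS = {"low": 1, "moderate": 2, "high": 3}

def priority_score(severity, impact):
    """Combine severity and impact into a single sortable score (1 to 9)."""
    return LEVELS[severity] * LEVELS[impact]

def triage(anomalies):
    """Return anomalies ordered from most to least urgent."""
    return sorted(
        anomalies,
        key=lambda a: priority_score(a["severity"], a["impact"]),
        reverse=True,
    )

queue = [
    {"name": "unusual login location", "severity": "moderate", "impact": "low"},
    {"name": "denial-of-service attack", "severity": "high", "impact": "high"},
    {"name": "misconfigured printer broadcast", "severity": "low", "impact": "low"},
]

for anomaly in triage(queue):
    score = priority_score(anomaly["severity"], anomaly["impact"])
    print(score, anomaly["name"])
```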

Q 4. What are the key components of an effective network anomaly response plan?

An effective network anomaly response plan should include the following key components:

- Incident identification and classification: Clear procedures for detecting, identifying, and classifying network anomalies based on their severity and impact.

- Escalation procedures: A defined process for escalating incidents to the appropriate personnel, such as security teams or management, based on the severity of the issue.

- Containment strategies: Methods for isolating affected systems or network segments to prevent further damage or compromise.

- Eradication and remediation: Steps to remove the cause of the anomaly and restore network operations to normal, including patching vulnerabilities, removing malware, and implementing necessary security enhancements.

- Recovery and restoration: Procedures for recovering lost data or restoring network services to their pre-incident state.

- Post-incident review: A process for conducting a thorough review of the incident to identify root causes, lessons learned, and areas for improvement in the network security infrastructure and response plan.

- Documentation: Maintaining comprehensive records of the incident, including all actions taken, timelines, and outcomes.

Regular testing and updates of the response plan are crucial to ensure its effectiveness.

Q 5. Explain your experience with Intrusion Detection Systems (IDS) and Intrusion Prevention Systems (IPS).

I have extensive experience deploying, managing, and configuring both Intrusion Detection Systems (IDS) and Intrusion Prevention Systems (IPS). An IDS passively monitors network traffic for malicious activity, generating alerts when suspicious patterns are detected. An IPS, on the other hand, actively intervenes to block or mitigate threats in real time.

In previous roles, I’ve worked with various IDS/IPS solutions, including Snort, Suricata, and commercial offerings from vendors like Cisco and Palo Alto Networks. My experience encompasses configuring alert rules, fine-tuning detection parameters to minimize false positives, integrating IDS/IPS with SIEM systems for centralized security management, and analyzing logs to identify trends and improve security posture. For instance, I once used Snort to detect and alert on a SQL injection attack attempt, which allowed us to block the attack before any data was compromised. This involved creating custom rules based on the attack patterns and integrating the alerts into our SIEM system for centralized monitoring and analysis.

Q 6. How do you use network monitoring tools to identify anomalies?

Network monitoring tools are indispensable for identifying anomalies. I frequently use tools like SolarWinds, PRTG, Nagios, and Zabbix to monitor network performance and security. These tools allow me to track various metrics, such as bandwidth utilization, latency, packet loss, CPU usage, and memory consumption. Deviations from established baselines can indicate anomalies. For example, a significant increase in bandwidth utilization in a specific network segment might suggest a denial-of-service attack or a malware infection. Similarly, unusually high CPU usage on a server could indicate a resource exhaustion attack or a compromised system.

I also leverage the built-in network monitoring features of many operating systems and network devices, such as Windows Performance Monitor or Cisco’s NetFlow. These tools provide valuable insights into system-level activity that might be indicative of anomalies.

By setting up alerts and thresholds, I am notified immediately whenever a metric exceeds the predefined limit, allowing for quick reaction times to mitigate potential threats.
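The threshold idea can be sketched as follows. The function name `breach_alerts` and the optional `consecutive` parameter (which waits for several breaches in a row before alerting) are assumptions added for illustration; monitoring products expose similar settings under their own names.

```python
def breach_alerts(samples, limit, consecutive=1):
    """Return the indexes at which a run of breaches reaches `consecutive` samples."""
    alerts = []
    run = 0  # length of the current run of samples above the limit
    for index, value in enumerate(samples):
        run = run + 1 if value > limit else 0
        if run == consecutive:
            alerts.append(index)  # fires once per run, not on every sample
    return alerts

# Hypothetical CPU utilization samples (percent), one per polling interval.
cpu_percent = [42, 95, 50, 91, 93, 97, 96, 40]

print(breach_alerts(cpu_percent, limit=90))                 # alert on any breach
print(breach_alerts(cpu_percent, limit=90, consecutive=3))  # alert on sustained breaches
```

Requiring a sustained breach trades a little reaction time for fewer alerts caused by momentary spikes.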

Q 7. Describe your experience with Security Information and Event Management (SIEM) systems.

My experience with Security Information and Event Management (SIEM) systems is extensive. SIEM systems are crucial for centralizing security logs and events from various sources across the network, providing a holistic view of the security posture. I have worked with several SIEM platforms, including Splunk, QRadar, and LogRhythm. These platforms allow for efficient correlation of security events, which is vital in detecting anomalies. For example, a SIEM system can detect unusual login attempts combined with high bandwidth usage from a particular IP address, indicating a potential compromise. The ability to correlate these seemingly disparate events is a key advantage of using SIEM systems.

Beyond anomaly detection, SIEM systems allow us to generate reports, perform forensic analysis, and meet compliance requirements. I’ve used SIEM systems to conduct root cause analysis of security incidents, generate reports that demonstrate compliance with industry regulations, and provide insights that inform our security strategy and improve our network security posture.

Q 8. How do you correlate events from different security tools to identify anomalies?

Correlating events from different security tools is crucial for identifying anomalies that might go unnoticed by individual systems. Think of it like piecing together a puzzle: each security tool provides a piece of the picture. To correlate effectively, we need a centralized Security Information and Event Management (SIEM) system. This system gathers logs from various sources: firewalls, intrusion detection systems (IDS), anti-malware software, and more. The key is to establish relationships between seemingly disparate events. For example, a firewall log showing a surge in connections from an unusual IP address, an IDS alert detecting a port scan from the same IP, and an anti-malware alert on a compromised host trying to connect to that IP address together point toward a potential attack. The SIEM uses rules and algorithms to analyze these events and identify patterns that deviate from the norm. This requires careful configuration of the SIEM to define relevant baselines and to filter out false positives. The process often involves techniques like time correlation, source/destination IP correlation, and protocol analysis.

A practical example would be detecting a sophisticated, multi-stage attack. One tool might detect unusual login attempts (failed logins from unusual locations), while another spots a user accessing unauthorized files soon after. The correlation of these seemingly separate incidents would reveal a coordinated breach attempt, much earlier than if you only looked at the individual security logs.
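As a rough illustration of time and IP correlation, the sketch below groups events by IP address and reports any address that shows up in at least two different tools within a ten-minute window. The event fields, the window length, and the function name `correlate_by_ip` are hypothetical; a real SIEM expresses this as correlation rules rather than hand-written code.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def correlate_by_ip(events, window=timedelta(minutes=10), min_sources=2):
    """Map each IP to the tools that reported it within one time window."""
    by_ip = defaultdict(list)
    for event in events:
        by_ip[event["ip"]].append(event)

    findings = {}
    for ip, ip_events in by_ip.items():
        ip_events.sort(key=lambda e: e["time"])
        for i, first in enumerate(ip_events):
            # Events that start within `window` of this one.
            in_window = [e for e in ip_events[i:] if e["time"] - first["time"] <= window]
            sources = {e["source"] for e in in_window}
            if len(sources) >= min_sources:
                findings[ip] = sorted(sources)
                break
    return findings

# Hypothetical events from three tools, using documentation-range addresses.
events = [
    {"time": datetime(2024, 1, 15, 2, 0), "source": "firewall", "ip": "203.0.113.7"},
    {"time": datetime(2024, 1, 15, 2, 3), "source": "ids", "ip": "203.0.113.7"},
    {"time": datetime(2024, 1, 15, 2, 7), "source": "anti-malware", "ip": "203.0.113.7"},
    {"time": datetime(2024, 1, 15, 2, 5), "source": "firewall", "ip": "198.51.100.20"},
]

print(correlate_by_ip(events))
```

The address seen by only one tool is left out, which is the point of correlation: a single low-confidence signal stays quiet, while agreement across tools raises a finding.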

Q 9. What are some common network attacks that can lead to anomalies?

Many common network attacks manifest as anomalies. Here are some examples:

- Distributed Denial of Service (DDoS) attacks: These attacks flood a network with traffic, causing a dramatic increase in bandwidth consumption and potentially crashing services. This is easily detectable as an anomaly by monitoring network traffic volume.

- Port scans: Malicious actors use port scanning tools to identify open ports on systems, allowing them to pinpoint vulnerabilities. A sudden increase in port scan attempts from various IP addresses constitutes a significant anomaly.

- Malware infections: Infected machines often communicate with Command & Control (C&C) servers, generating unusual network traffic patterns. This could manifest as excessive outgoing connections to unusual IPs or high data transfer rates to specific destinations.

- Man-in-the-middle (MitM) attacks: These attacks intercept communication between two parties. They can result in anomalies like unexpected TLS/SSL errors, certificate mismatches, or tampering with data in transit.

- Data exfiltration: When attackers steal sensitive data, they often transfer large amounts of data over a prolonged period. This manifests as a high volume of outgoing traffic, possibly to a less frequently contacted destination IP address or using unconventional transfer methods.

Detecting these anomalies requires establishing baselines for normal network behavior and using intrusion detection systems to flag deviations.
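As one example of flagging such a deviation, the sketch below detects a likely port scan by counting how many distinct host and port pairs each source contacts. The connection tuples, the limit of 20 targets, and the function name `find_port_scanners` are assumptions for illustration; real IDS rules also consider time windows and failed connections.

```python
from collections import defaultdict

def find_port_scanners(connections, max_targets=20):
    """Return {source_ip: distinct_target_count} for sources above the limit."""
    targets_by_source = defaultdict(set)
    for src_ip, dst_ip, dst_port in connections:
        targets_by_source[src_ip].add((dst_ip, dst_port))
    return {
        src: len(targets)
        for src, targets in targets_by_source.items()
        if len(targets) > max_targets
    }

# Hypothetical flow records: one source probing ports 1-100, one ordinary client.
connections = [("203.0.113.9", "10.0.0.5", port) for port in range(1, 101)]
connections += [("10.0.0.12", "10.0.0.5", 443), ("10.0.0.12", "10.0.0.6", 443)]

print(find_port_scanners(connections))
```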

Q 10. Explain how you would investigate a suspicious network activity.

Investigating suspicious network activity requires a systematic approach. My methodology involves these steps:

- Identify the anomaly: This usually starts with an alert from a security tool or a manual observation of unusual traffic patterns. Document the time, source, destination, and any other relevant details.

- Gather evidence: Collect logs from various sources: firewall, IDS, routers, switches, endpoint security tools, and potentially the affected systems themselves. The more detailed and comprehensive the data, the better.

- Analyze the data: Correlate the logs to understand the sequence of events leading to the anomaly. Use packet capture tools like Wireshark to inspect network traffic in detail. This may involve analyzing DNS queries, HTTP requests, or other protocol-specific data.

- Isolate the affected system(s): If the source is identified, isolate the system(s) to prevent further damage. This might involve temporarily disconnecting them from the network.

- Determine the root cause: Investigate the reason behind the suspicious activity. This could involve examining malware samples, reviewing system configurations, and potentially conducting forensic analysis on affected systems.

- Remediation and prevention: Implement corrective actions, such as patching vulnerabilities, removing malware, and updating security policies. Put measures in place to prevent similar incidents from occurring again.

- Document the incident: Create a comprehensive report detailing the incident, the investigation process, and the remediation steps. This serves as a record and aids in future investigations.

For instance, if a large number of failed login attempts from a specific IP address are detected, the next step would be to review firewall logs, check the involved user accounts for compromised credentials, and potentially analyze network traffic to look for malware communication from the originating IP.

Q 11. How do you determine the root cause of a network anomaly?

Determining the root cause of a network anomaly demands careful and methodical investigation. It’s like detective work, where we piece together clues to find the culprit. The process often involves the following:

- Review logs from multiple security tools: This helps establish a timeline of events and identify the sequence that led to the anomaly. We need to understand what happened *before* the anomaly was detected.

- Analyze network traffic: Packet captures provide granular details of network communication, allowing for deep inspection of suspicious activities. Wireshark or similar tools are essential here.

- Examine system configurations: Check server settings, firewall rules, and network policies for misconfigurations that might have contributed to the anomaly.

- Investigate affected systems: Analyze system logs, registry keys (on Windows systems), and potentially memory dumps to identify malware or other signs of compromise.

- Consult with other teams: This often involves working with system administrators, application developers, or security engineers to gather additional information.

For example, if an anomaly shows a sudden surge in database access from a specific IP, analyzing database logs will help determine the type of queries made, the amount of data accessed, and the user accounts involved. This could reveal a SQL injection attack or unauthorized access.

Q 12. Describe your experience with various network protocols and their vulnerabilities.

My experience with network protocols is extensive, covering both common and less frequently used protocols. I understand the intricacies of TCP/IP, UDP, HTTP, HTTPS, DNS, FTP, SMTP, and many others. Understanding their underlying mechanisms allows me to identify deviations from normal behavior. For instance, I am well-versed in recognizing anomalies related to TCP handshakes, DNS amplification attacks, and vulnerabilities in specific protocol implementations. A thorough grasp of these protocols is critical in network security, as they often become the vectors for attacks.

Regarding vulnerabilities, I’m aware of numerous vulnerabilities across these protocols. Some common examples include:

- TCP SYN floods: A denial-of-service attack exploiting the TCP three-way handshake.

- DNS spoofing: A technique where an attacker forges DNS responses so that clients resolve a legitimate name to an attacker-controlled address.

- Man-in-the-middle attacks targeting SSL/TLS: Exploiting weaknesses in encryption or certificate handling to intercept traffic.

- SQL injection in web applications (delivered over HTTP): An application-layer flaw rather than a weakness in HTTP itself, allowing attackers to manipulate database queries to gain unauthorized access.

My experience enables me to effectively identify and mitigate these vulnerabilities, as well as design network configurations that reduce their impact.
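To illustrate how a SYN flood shows up in handshake data, the sketch below computes the share of connection attempts that sent a SYN but never completed the three-way handshake. The simplified packet tuples and the function name `half_open_ratio` are assumptions; real traffic would come from a packet capture or flow export.

```python
def half_open_ratio(packets):
    """Fraction of SYN attempts that were never followed by the client's ACK.

    `packets` is an iterable of (client_ip, client_port, flag) tuples as seen
    by the server, where flag is "SYN" or "ACK" (a simplified model).
    """
    syn_seen = set()
    completed = set()
    for client_ip, client_port, flag in packets:
        key = (client_ip, client_port)
        if flag == "SYN":
            syn_seen.add(key)
        elif flag == "ACK" and key in syn_seen:
            completed.add(key)
    if not syn_seen:
        return 0.0
    return (len(syn_seen) - len(completed)) / len(syn_seen)

# Two ordinary clients complete the handshake; 98 spoofed sources never do.
packets = [
    ("10.0.0.5", 51000, "SYN"), ("10.0.0.5", 51000, "ACK"),
    ("10.0.0.6", 51001, "SYN"), ("10.0.0.6", 51001, "ACK"),
]
packets += [(f"198.51.100.{i}", 40000 + i, "SYN") for i in range(1, 99)]

print(f"half-open ratio: {half_open_ratio(packets):.2f}")
```

A ratio close to 1 under high SYN volume is a strong hint of a flood, whereas healthy traffic keeps the ratio low.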

Q 13. What are some common network segmentation techniques to mitigate the impact of anomalies?

Network segmentation is a crucial security strategy that limits the impact of anomalies. It involves dividing a network into smaller, isolated segments, limiting the blast radius of any compromise. Imagine a building with firewalls acting as fire doors between different departments – if a fire (security breach) starts in one area, it won’t necessarily spread to the rest.

Common network segmentation techniques include:

- VLANs (Virtual LANs): These logically separate devices on the same physical network, improving security and performance. For example, separating user traffic from server traffic.

- Firewalls: These devices control network traffic flow, blocking unauthorized access between segments.

- DMZs (Demilitarized Zones): These buffer zones isolate publicly accessible servers from the internal network, reducing the risk that an attack from the internet reaches internal systems.

- Micro-segmentation: A granular approach that isolates individual applications or workloads, offering highly targeted security. This limits the impact of a compromised application.

By strategically segmenting the network, we keep a single compromised system from exposing the entire infrastructure. Even if one segment is compromised, the attacker must cross additional controls to reach the others. Effective segmentation is essential to minimizing the damage caused by network anomalies and attacks.
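A segmentation policy can be modeled with Python's standard `ipaddress` module, as in the sketch below. The segment names, address ranges, and allowed flows are hypothetical, and actual enforcement happens in firewalls and switches, not in a script. A model like this is mainly useful for checking observed flows against the intended policy.

```python
import ipaddress

# Hypothetical segments and the flows permitted between them.
SEGMENTS = {
    "users": ipaddress.ip_network("10.10.0.0/16"),
    "servers": ipaddress.ip_network("10.20.0.0/16"),
    "dmz": ipaddress.ip_network("192.0.2.0/24"),
}
ALLOWED_FLOWS = {("users", "servers"), ("users", "dmz")}

def segment_of(ip):
    """Return the name of the segment containing `ip`, or None."""
    address = ipaddress.ip_address(ip)
    for name, network in SEGMENTS.items():
        if address in network:
            return name
    return None

def is_flow_allowed(src_ip, dst_ip):
    """Check a flow against the policy; unknown addresses are denied."""
    src, dst = segment_of(src_ip), segment_of(dst_ip)
    if src is None or dst is None:
        return False
    return src == dst or (src, dst) in ALLOWED_FLOWS

print(is_flow_allowed("10.10.1.5", "10.20.0.8"))   # users -> servers
print(is_flow_allowed("192.0.2.10", "10.20.0.8"))  # dmz -> servers
```

A flow from the DMZ to the server segment is denied under this policy, so seeing one in flow logs would itself be an anomaly worth investigating.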

Q 14. Explain your understanding of network forensics.

Network forensics is the application of forensic science to computer networks. It involves the identification, collection, and analysis of digital evidence from network devices and traffic to investigate security incidents, cybercrimes, or other network-related issues. It’s essentially digital detective work, but focused on the network itself.

The process typically involves:

- Data acquisition: This is the crucial initial step, involving collecting data from various network sources using techniques like network packet capture (using tools like Wireshark or tcpdump), log analysis, and image acquisition from network devices.

- Data analysis: This stage focuses on the examination of acquired data to identify patterns, anomalies, and evidence relevant to the investigation. This often requires deep knowledge of network protocols and security technologies.

- Evidence presentation: The final stage is presenting the findings in a clear, concise, and legally admissible format, typically including reports, timelines, and visual representations of network traffic and events.

Network forensics is essential for understanding the scope and impact of security incidents, identifying attackers, and building a strong case for prosecution. It enables organizations to respond effectively to security breaches, improve their security posture, and comply with legal and regulatory requirements. In essence, network forensics allows us to reconstruct the digital crime scene, answering critical questions like ‘What happened?’, ‘How did it happen?’, and ‘Who is responsible?’.

Q 15. How do you document and report network anomalies?

Documenting and reporting network anomalies is crucial for effective incident response and future threat prevention. My process involves a structured approach, combining automated logging with manual analysis. I begin by meticulously documenting the anomaly’s characteristics, including the timestamp, affected systems or services, observed behavior (e.g., unusually high traffic volume, failed login attempts, unexpected port scans), and any associated alerts from security monitoring tools.

- Automated Logging: I rely heavily on Security Information and Event Management (SIEM) systems to capture detailed logs of network events. These logs are timestamped and include relevant metadata, facilitating efficient analysis. For example, a SIEM system might log every login attempt, failed or successful, recording the source IP address, the username attempted, and the time of the attempt.

- Manual Analysis: Once an anomaly is detected, I perform a deeper analysis, examining network traffic captures (PCAP files) and correlating the information with system logs. This step is essential to understand the anomaly’s root cause and potential impact. For instance, if we see unusual outbound connections to a known malicious IP address, we’d analyze the PCAP file to determine the type of data being transmitted and the volume of traffic.

- Reporting: I create a concise yet comprehensive report that includes the anomaly’s details, my analysis, and recommended mitigation strategies. The report is formatted clearly, using visualizations like charts and graphs where applicable, to improve understanding. The report is distributed to relevant stakeholders, including management and the incident response team, with a clear action plan and timeline.

Imagine a scenario where we detect a sudden spike in traffic from a specific subnet. My report would include the timeframe of the anomaly, the affected subnet, the volume of traffic, any unusual patterns observed, and whether it impacted application performance. The recommended actions might involve temporarily blocking the subnet, investigating the source of the increased traffic, and reinforcing security controls within that subnet.
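A report like this is easier to share and archive when it has a fixed structure. The sketch below uses a Python dataclass serialized to JSON; the field names and all values are hypothetical and would be adapted to the team's own reporting template.

```python
import json
from dataclasses import dataclass, asdict, field

@dataclass
class AnomalyReport:
    """Structured record of a single anomaly (illustrative field names)."""
    start: str
    end: str
    affected_subnet: str
    peak_traffic_mbps: float
    observations: list = field(default_factory=list)
    application_impact: str = "none observed"
    recommended_actions: list = field(default_factory=list)

    def to_json(self):
        return json.dumps(asdict(self), indent=2)

# Hypothetical values for the traffic-spike scenario.
report = AnomalyReport(
    start="2024-01-15T02:00:00Z",
    end="2024-01-15T02:40:00Z",
    affected_subnet="10.30.4.0/24",
    peak_traffic_mbps=940.0,
    observations=["traffic concentrated on a single destination"],
    application_impact="intermittent slowness reported",
    recommended_actions=[
        "temporarily block the subnet",
        "investigate the source of the increased traffic",
        "reinforce security controls within the subnet",
    ],
)

print(report.to_json())
```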

Q 16. How do you handle false positives in network anomaly detection systems?

False positives are an inherent challenge in anomaly detection. My approach to handling them focuses on minimizing their occurrence and effectively triaging them when they do arise. A key aspect is fine-tuning the anomaly detection system’s parameters. This might involve adjusting thresholds, refining rules, and incorporating contextual information. For example, a machine learning model might trigger a false positive if it’s not properly trained on normal network behavior under various conditions like peak usage hours. Proper training using a robust and representative dataset is vital.

- Threshold Adjustments: If the system is too sensitive, leading to frequent false positives, I would adjust the thresholds to make it more tolerant of minor deviations from normal behavior.

- Rule Refinement: I carefully review the rules governing anomaly detection. Sometimes, a poorly defined rule can trigger false positives. I might refine the rule to include additional conditions or refine existing ones, focusing on more meaningful patterns of deviation.

- Contextual Information: Incorporating additional data sources and context can help discriminate true anomalies from false positives. This could involve analyzing logs from other systems, integrating geographical data, or using time-of-day information. For instance, unusually high traffic during normal peak usage times might not warrant an alert, whereas a sudden spike outside peak times would warrant deeper investigation.

- Manual Review: A human-in-the-loop approach is vital for addressing remaining false positives. A dedicated team reviews alerts flagged by the system, validating them against their expertise and understanding of the network environment. Automation can be used for initial filtering, leaving the most ambiguous cases for manual triage.

For instance, if our intrusion detection system (IDS) flags a pattern of network access from a specific IP address that happens to be a new remote worker’s device, it could trigger a false positive. Careful verification by manual review will prevent unwarranted blocks.
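Contextual filtering of this kind can be sketched as a small rule function. The alert fields, the allowlisted address, and the peak-hour range below are hypothetical. Suppressed alerts should still be logged for later review, since an allowlist that is never audited can hide real attacks.

```python
from datetime import datetime

# Hypothetical context: addresses verified as belonging to remote workers,
# and the hours during which heavy traffic is expected.
KNOWN_REMOTE_WORKERS = {"198.51.100.23"}
PEAK_HOURS = range(9, 18)  # 09:00 to 17:59 local time

def should_escalate(alert):
    """Return False for alerts that known context explains, True otherwise."""
    if alert["type"] == "new_source_ip" and alert["ip"] in KNOWN_REMOTE_WORKERS:
        return False  # verified remote worker device
    if alert["type"] == "traffic_spike" and alert["time"].hour in PEAK_HOURS:
        return False  # high traffic is expected during peak hours
    return True

alerts = [
    {"type": "new_source_ip", "ip": "198.51.100.23", "time": datetime(2024, 1, 15, 10, 0)},
    {"type": "traffic_spike", "ip": "10.0.0.8", "time": datetime(2024, 1, 15, 14, 30)},
    {"type": "traffic_spike", "ip": "10.0.0.8", "time": datetime(2024, 1, 15, 3, 15)},
]

for alert in alerts:
    print(alert["type"], alert["time"].strftime("%H:%M"), should_escalate(alert))
```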

Q 17. How do you ensure the accuracy and reliability of your anomaly detection methods?

Ensuring the accuracy and reliability of anomaly detection methods is paramount. My approach involves a multi-faceted strategy. First, I choose appropriate methods based on the specific network environment and the type of anomalies expected. Statistical methods might be suitable for detecting simple deviations, while machine learning approaches can handle more complex patterns. The critical point is that the chosen method should be rigorously tested and validated to ensure its effectiveness.

- Data Quality: Accurate and complete data is crucial. I ensure the quality of data feeding the anomaly detection system by routinely auditing logs, verifying data integrity, and handling missing data appropriately. Inconsistent or incomplete data can lead to inaccurate conclusions.

- Baseline Establishment: A reliable baseline is essential for comparing against observed behavior. I establish a baseline using historical network data, taking into account seasonal variations and other factors that might affect network traffic. This might require maintaining different baselines for different times of the year or day.

- Regular Testing and Validation: I regularly test the accuracy and reliability of the anomaly detection system using synthetic datasets and real-world scenarios. This helps me identify and address any weaknesses or biases in the system. I also conduct regular audits of alert accuracy to refine detection methods.

- Performance Monitoring: Continuous monitoring is essential to ensure the system performs as intended. I monitor key metrics, such as false positive rates, detection rates, and response times. This allows us to identify and address any performance issues promptly.

Think of it like a weather forecast: To be accurate, a weather model needs to be calibrated with accurate historical data, handle incomplete data reasonably and account for seasonal patterns. Similarly, my anomaly detection methods require continuous refinement and validation to maintain accuracy.
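The metrics mentioned above can be computed from a set of alerts that analysts have reviewed and labeled. The sketch below assumes each result is a pair of booleans (was it flagged, was it actually malicious); the counts are invented for illustration.

```python
def detection_metrics(results):
    """Compute detection rate, false positive rate, and precision.

    `results` is an iterable of (flagged, actually_malicious) boolean pairs.
    """
    tp = fp = fn = tn = 0
    for flagged, malicious in results:
        if flagged and malicious:
            tp += 1
        elif flagged and not malicious:
            fp += 1
        elif not flagged and malicious:
            fn += 1
        else:
            tn += 1
    return {
        "detection_rate": tp / (tp + fn) if (tp + fn) else 0.0,
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
    }

# Hypothetical review outcome: 8 caught attacks, 2 missed, 5 false alarms, 85 quiet events.
results = (
    [(True, True)] * 8
    + [(False, True)] * 2
    + [(True, False)] * 5
    + [(False, False)] * 85
)

for name, value in detection_metrics(results).items():
    print(f"{name}: {value:.3f}")
```

Tracking these numbers over time shows whether tuning changes actually help, rather than simply moving errors from one category to another.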

Q 18. What are your experiences with different anomaly detection techniques (e.g., statistical, machine learning)?

I have extensive experience with various anomaly detection techniques, both statistical and machine learning-based. Statistical methods, like threshold-based detection, are useful for simple anomalies, but they struggle with complex, evolving patterns. Machine learning offers more sophisticated approaches, adapting to changing network behaviors. For example, a simple statistical method might detect an unusual increase in login failures, but a machine learning model could learn to detect more subtle variations that a simpler method would miss.

- Statistical Methods: I use statistical methods such as moving averages and standard deviation calculations to detect deviations from the established baseline. These are simple to implement and interpret but lack the adaptability of machine learning.

- Machine Learning Methods: I’ve worked with various machine learning algorithms, including Support Vector Machines (SVMs), neural networks, and clustering algorithms like K-means. These algorithms can learn complex patterns from large datasets, making them suitable for detecting intricate anomalies. For example, a neural network could be trained to identify malicious network traffic patterns based on features like packet sizes, source/destination IP addresses, and application protocols.

- Hybrid Approaches: Often, a hybrid approach combining statistical and machine learning techniques proves the most effective. Statistical methods can be used for initial filtering, and machine learning models can analyze the remaining data to identify more subtle anomalies. In this way, we leverage the simplicity and speed of statistical approaches while exploiting the power of machine learning models.

In a recent project, we initially used a simple statistical method to detect unusually high network traffic. However, this method produced a high number of false positives due to expected network traffic fluctuations. Therefore, we transitioned to a machine learning model that considered network traffic patterns across different times of day and days of the week. This significantly reduced false positives while improving detection accuracy.
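The benefit of time awareness can be shown without a full machine learning model. The sketch below is a simpler stand-in, not the model from the project: it keeps a separate statistical baseline per hour of day, so the same traffic level can be normal at 14:00 and anomalous at 03:00. The traffic figures and function names are assumptions.

```python
from collections import defaultdict
from statistics import mean, stdev

def build_hourly_baseline(history):
    """Build {hour: (mean, stdev)} from (hour_of_day, value) samples."""
    by_hour = defaultdict(list)
    for hour, value in history:
        by_hour[hour].append(value)
    # stdev needs at least two samples, so sparser hours are skipped.
    return {h: (mean(v), stdev(v)) for h, v in by_hour.items() if len(v) >= 2}

def is_anomalous(baseline, hour, value, threshold=3.0):
    """Compare a value against the baseline for its own hour of day."""
    if hour not in baseline:
        return False  # no history for this hour, so no judgment is made
    mu, sigma = baseline[hour]
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical traffic history in Mbps: quiet at 03:00, busy at 14:00.
history = [(3, v) for v in (50, 55, 45, 52, 48)]
history += [(14, v) for v in (800, 850, 780, 820, 810)]
baseline = build_hourly_baseline(history)

print(is_anomalous(baseline, 14, 800))  # typical afternoon load
print(is_anomalous(baseline, 3, 800))   # same load in the middle of the night
```

A single global threshold would treat both observations identically, which is the source of the false positives described above.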

Q 19. Describe your experience with automating anomaly detection and response.

Automating anomaly detection and response is critical for improving efficiency and reducing response times. I’ve worked extensively on automating these processes, focusing on integrating various tools and technologies to create a seamless workflow. Automation reduces human intervention in routine tasks, freeing up human expertise for more complex investigations.

- Automated Alerting and Triaging: I’ve implemented automated systems that trigger alerts when anomalies are detected. These alerts are categorized and prioritized based on severity, helping to focus our attention on the most critical issues. For example, alerts are escalated automatically to on-call teams based on pre-defined severity levels.

- Automated Response Actions: Automation can be extended to automate certain response actions, such as temporarily blocking malicious IP addresses or isolating infected systems. This reduces the time it takes to contain threats and minimizes their potential impact. This capability needs to be carefully designed and tested in order to avoid unintended consequences.

- Orchestration Tools: I’ve used orchestration tools to manage and automate complex workflows involving multiple security tools. These tools help coordinate actions between different components of the security infrastructure, such as SIEM, intrusion detection, and firewalls. This coordination is vital for effective threat response and remediation.

For example, if our system detects a Distributed Denial of Service (DDoS) attack, an automated response system could block traffic from malicious sources, scale up resources to absorb the attack, and send notifications to our team. For well-understood attack patterns, this automated response is typically much faster than manual intervention.
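A response playbook of this kind can be sketched as a function that first builds a plan and only applies it when the caller passes an executor. The action names, alert fields, and the `apply_action` callback are hypothetical. In a real deployment the callback would wrap firewall, cloud, and paging APIs, which are not shown here.

```python
def plan_response(alert):
    """Return the ordered (action, target) steps for an alert."""
    plan = []
    if alert["type"] == "ddos":
        plan += [("block_ip", ip) for ip in alert["sources"]]
        plan.append(("scale_up", alert["service"]))
    plan.append(("notify", "on-call"))  # every alert notifies the team
    return plan

def respond(alert, apply_action=None):
    """Build the plan; execute it only if an executor is supplied (dry run otherwise)."""
    plan = plan_response(alert)
    if apply_action is not None:
        for action, target in plan:
            apply_action(action, target)
    return plan

# Hypothetical DDoS alert using documentation-range addresses.
alert = {
    "type": "ddos",
    "sources": ["203.0.113.50", "203.0.113.51"],
    "service": "web-frontend",
}

# Dry run: inspect what would happen before enabling automation.
for action, target in respond(alert):
    print(f"would run {action} on {target}")
```

Defaulting to a dry run reflects the caution noted earlier: automated blocking should be tested carefully before it is allowed to act on its own.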

Q 20. How do you stay up-to-date with the latest network security threats and vulnerabilities?

Staying current with the latest threats and vulnerabilities is crucial. I employ a multi-pronged approach, combining various sources of information to stay informed. It’s like being a detective – continuously updating your case files and techniques to solve new crimes.

- Threat Intelligence Feeds: I subscribe to several threat intelligence feeds from reputable sources, receiving up-to-date information on emerging threats and vulnerabilities. These feeds provide data on malware, attack techniques, and compromised systems.

- Security Newsletters and Publications: I regularly read security newsletters and publications, keeping myself abreast of the latest security research and trends. This gives me broad context for evaluating current threats.

- Security Conferences and Webinars: Participating in security conferences and webinars allows me to learn from experts in the field and network with other professionals. It’s a great way to learn about the latest methodologies and techniques.

- Vulnerability Scanners and Penetration Testing: Regularly performing vulnerability scans and penetration testing on our network helps to identify potential weaknesses before attackers can exploit them. This is proactive security, preventing vulnerabilities from being exploited.

A recent example is the rapid emergence of new ransomware variants. Through threat intelligence feeds and security newsletters, I stay informed about their capabilities, targets, and associated vulnerabilities to better protect our systems.

Q 21. How do you handle a critical network anomaly during off-hours?

Handling critical network anomalies during off-hours requires a well-defined incident response plan and robust monitoring and alerting systems. This demands preparedness and established procedures to ensure rapid and effective action.

- On-Call Rotation: We have an on-call rotation schedule for our security team, ensuring that someone is available to address urgent issues at any time. Each member is equipped with the tools and information necessary to respond to incidents outside of normal working hours.

- Automated Escalation Procedures: Our automated systems are configured to escalate critical alerts to the on-call team immediately, regardless of the time of day. This immediate notification reduces response times and ensures prompt attention to critical events.

- Remote Access Capabilities: The on-call personnel have secure remote access capabilities, allowing them to diagnose and resolve issues remotely, even if they are not physically present at the office. This is essential for many of our modern IT environments.

- Pre-defined Runbooks: We maintain pre-defined runbooks for common network anomalies. These documents outline clear steps to follow in specific situations, ensuring consistent and effective response procedures. This saves time and reduces confusion during stressful situations.

- Communication Plan: A clear communication plan keeps stakeholders informed during off-hours incidents. It outlines the communication channels, the recipients of the alerts, and the frequency of updates, which maintains transparency and control during critical events.

For example, if a major outage occurs during the night, the on-call engineer is alerted immediately and can remotely access and diagnose the problem using pre-defined runbooks to get the network back online as swiftly as possible. They also follow a communications plan to keep relevant stakeholders such as executives and end-users updated throughout the night.
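An escalation procedure like the one described above is often expressed as a chain of roles with acknowledgement timeouts. The sketch below assumes a hypothetical three-step chain and hypothetical delay values; real on-call tools have their own configuration formats.

```python
from datetime import datetime, timedelta

# Hypothetical escalation chain: (role, minutes after the alert at which
# this role is notified if nobody has acknowledged the alert yet).
ESCALATION_CHAIN = [
    ("primary_on_call", 0),
    ("secondary_on_call", 15),
    ("security_manager", 30),
]


def who_to_notify(raised_at: datetime, now: datetime, acknowledged: bool) -> list:
    """Return the roles that should have been notified by `now`."""
    if acknowledged:
        return []  # Someone owns the incident; stop escalating.
    elapsed = now - raised_at
    return [
        role
        for role, delay_minutes in ESCALATION_CHAIN
        if elapsed >= timedelta(minutes=delay_minutes)
    ]
```

For an alert raised at 02:00 and still unacknowledged at 02:20, this returns the primary and secondary on-call roles, so a missed page does not leave a critical alert unattended overnight.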

Q 22. Describe a situation where you had to identify and mitigate a network anomaly. What was the outcome?

In a previous role, we experienced a significant spike in outbound network traffic originating from a specific server. At first glance it looked like a legitimate increase in activity, but closer examination showed that the traffic was directed to an IP address range associated with known command-and-control servers used in botnet activity. The change was also not gradual; it was a sudden, dramatic surge.

Our mitigation strategy involved several steps. First, we immediately isolated the affected server from the network to prevent further compromise. Next, we performed a full system scan to identify the malware responsible; it turned out to be a crypto-miner that had exploited a known vulnerability in outdated software. We then removed the malware, re-imaged the operating system as a precaution, and updated the software before returning the server to service. Finally, we implemented stronger access controls and deployed an intrusion detection system (IDS) with enhanced real-time monitoring capabilities to prevent similar incidents. The outcome was successful containment of the malware, restoration of network stability, and prevention of data loss or further compromise. We also used this event as a learning experience to improve our patching schedules and security awareness training.
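The detection step in this story, spotting outbound traffic to a known-bad address range, can be approximated with Python's standard `ipaddress` module. This is a sketch under stated assumptions: the `BLOCKLIST` range is a documentation-only network (RFC 5737) standing in for a real threat-intelligence feed, and the record format is hypothetical.

```python
import ipaddress
from collections import defaultdict

# Hypothetical blocklist. 203.0.113.0/24 is a documentation range (RFC 5737),
# used here in place of real threat-intelligence data.
BLOCKLIST = [ipaddress.ip_network("203.0.113.0/24")]


def suspicious_outbound(records):
    """Sum bytes sent by each source to blocklisted destinations.

    records: iterable of (src_ip, dst_ip, bytes_sent) tuples.
    Returns {src_ip: total_bytes_to_blocklisted_destinations}.
    """
    totals = defaultdict(int)
    for src, dst, bytes_sent in records:
        dst_addr = ipaddress.ip_address(dst)
        if any(dst_addr in net for net in BLOCKLIST):
            totals[src] += bytes_sent
    return dict(totals)
```

A server that appears in the result with a large byte count is a candidate for the isolation step described above.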

Q 23. Explain your understanding of different network topologies and their impact on anomaly detection.

Network topology significantly influences anomaly detection. Different structures affect how easily anomalies can be identified and where they might manifest. Think of it like a city’s road system: a single main street (bus topology) is easy to monitor, while a complex web of interconnected highways (mesh topology) can make finding blockages or unusual traffic patterns much harder.

- Bus Topology: Simple, linear structure. Anomalies are relatively easy to spot as they impact the entire network.

- Star Topology: All devices connect to a central hub (e.g., a switch). Monitoring the hub provides a central view, making anomaly detection more efficient.

- Ring Topology: Data flows in a circle. Anomalies can disrupt the entire ring, making them noticeable, though troubleshooting might be more complex.

- Mesh Topology: Highly interconnected; offers redundancy but also complexity in anomaly detection. Localized anomalies might go unnoticed.

- Tree Topology: Hierarchical structure; similar to a star topology but with multiple levels. Detection requires monitoring at each level.

For instance, a Denial-of-Service (DoS) attack will manifest differently depending on the topology. In a bus topology, it will likely bring down the entire network. In a mesh topology, it might impact only a portion of the network, making detection more challenging but also potentially limiting the damage.

Q 24. How do you incorporate network anomaly detection into your overall security strategy?

Network anomaly detection is a critical component of my overall security strategy, acting as a first line of defense against sophisticated attacks and unexpected disruptions. It works in conjunction with other security measures to provide a layered approach.

- Prevention: Implementing strong access controls, firewalls, and intrusion prevention systems (IPS) to minimize the risk of attacks in the first place.

- Detection: Utilizing network anomaly detection tools to identify unusual patterns and behaviors that may indicate an attack or malfunction.

- Response: Having well-defined incident response procedures, including isolation, investigation, and remediation, to handle detected anomalies effectively.

- Recovery: Implementing disaster recovery plans to ensure business continuity in case of major network outages or security breaches.

The data from anomaly detection systems feeds into my Security Information and Event Management (SIEM) system, enabling correlation with other security logs for a holistic view of potential threats. This layered approach allows me to detect and respond to anomalies quickly and effectively, minimizing downtime and damage.
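The correlation step can be illustrated with a small time-window join. This is only a sketch of the idea, not how any particular SIEM implements it: the event dictionaries, their `host` and `time` fields, and the five-minute default window are assumptions made for the example.

```python
from datetime import datetime, timedelta


def correlate(anomalies, log_events, window=timedelta(minutes=5)):
    """Pair each anomaly with log events from the same host within `window`.

    Both inputs are lists of dicts with at least "host" and "time" keys.
    Returns a list of (anomaly, related_events) tuples, skipping anomalies
    that have no related events.
    """
    matches = []
    for anomaly in anomalies:
        related = [
            event
            for event in log_events
            if event["host"] == anomaly["host"]
            and abs(event["time"] - anomaly["time"]) <= window
        ]
        if related:
            matches.append((anomaly, related))
    return matches
```

A traffic spike that coincides with failed logins on the same host is more likely to deserve immediate attention than either signal alone, which is the point of feeding anomaly data into the SIEM.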

Q 25. What are some common challenges in identifying and mitigating network anomalies?

Identifying and mitigating network anomalies presents several challenges:

- High volume of data: Networks generate massive amounts of data, making it difficult to sift through and identify anomalies amidst the noise. This requires efficient data processing and filtering techniques.

- Evolving attack techniques: Attackers constantly develop new techniques, making it difficult for static anomaly detection systems to keep up. This necessitates adaptable and machine-learning-based approaches.

- Defining ‘normal’: Establishing a baseline of normal network behavior can be tricky, especially in dynamic environments. This requires sophisticated algorithms that can adapt to changing conditions.

- False positives: Anomaly detection systems can generate false alarms, diverting resources away from actual threats. Careful tuning and analysis are necessary to minimize these.

- Lack of skilled personnel: Analyzing and interpreting the output of complex anomaly detection systems requires specialized expertise.

These challenges necessitate a combination of robust technology, well-defined processes, and skilled professionals to effectively address network anomalies.
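To make the "defining normal" and false-positive challenges concrete, here is a minimal sketch of an adaptive baseline built on an exponentially weighted moving average. The class name, the smoothing factor `alpha`, the `threshold` of three standard deviations, and the `warmup` length are illustrative assumptions; real deployments tune these per metric.

```python
import math


class AdaptiveBaseline:
    """Tracks a moving mean and variance, flagging large deviations."""

    def __init__(self, alpha=0.1, threshold=3.0, warmup=5):
        self.alpha = alpha          # How quickly the baseline adapts.
        self.threshold = threshold  # Deviations beyond this many std devs are flagged.
        self.warmup = warmup        # Samples to observe before flagging anything.
        self.mean = None
        self.var = 0.0
        self.count = 0

    def update(self, value):
        """Return True if `value` is anomalous, then update the baseline."""
        self.count += 1
        if self.mean is None:
            self.mean = float(value)
            return False
        deviation = value - self.mean
        std = math.sqrt(self.var)
        anomalous = (
            self.count > self.warmup
            and std > 0
            and abs(deviation) > self.threshold * std
        )
        if not anomalous:
            # Learn only from normal samples so a spike does not shift the baseline.
            self.mean += self.alpha * deviation
            self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return anomalous
```

Raising `threshold` reduces false positives at the cost of missing smaller deviations, and raising `alpha` lets the baseline follow a dynamic environment more quickly. That trade-off is exactly the tuning work described in the list above.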

Q 26. Explain your experience with network flow analysis for anomaly detection.

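Network flow analysis is a crucial technique I use for anomaly detection. It involves collecting and analyzing network traffic metadata to identify unusual patterns. Instead of focusing on individual packets, it aggregates data into flows, representing communication sessions between different sources and destinations. Flow records are typically exported by routers and switches using protocols such as NetFlow, sFlow, or IPFIX and then analyzed with a flow collector. Packet-capture tools like tcpdump and Wireshark complement this when a suspicious flow needs packet-level inspection.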

For example, a sudden increase in the number of flows to a particular external IP address, or a significant jump in the amount of data transferred within a short period, could indicate malicious activity. Analyzing flow statistics like packet size distributions, inter-arrival times, and source/destination IP addresses can reveal anomalies that are not easily detectable by looking at individual packets. I often use this data to create visualizations and identify outliers to pinpoint areas needing further investigation.

Example: A sudden surge in flows from internal IP addresses to an address on a threat-intelligence blocklist, such as a known Tor relay, would immediately trigger an alert.
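The aggregation idea behind flow analysis can be sketched as follows. The packet dictionaries and their field names are hypothetical; in a real environment the flow records would come from a NetFlow, sFlow, or IPFIX exporter rather than being built from individual packets in Python.

```python
from collections import defaultdict


def aggregate_flows(packets):
    """Group packets into flows keyed by the classic 5-tuple.

    packets: iterable of dicts with src, dst, sport, dport, proto, size.
    Returns {(src, dst, sport, dport, proto): {"packets": n, "bytes": b}}.
    """
    flows = defaultdict(lambda: {"packets": 0, "bytes": 0})
    for p in packets:
        key = (p["src"], p["dst"], p["sport"], p["dport"], p["proto"])
        flows[key]["packets"] += 1
        flows[key]["bytes"] += p["size"]
    return dict(flows)


def flows_per_destination(flows):
    """Count how many distinct flows target each destination address."""
    counts = defaultdict(int)
    for (_src, dst, _sport, _dport, _proto) in flows:
        counts[dst] += 1
    return dict(counts)
```

A destination whose flow count suddenly jumps relative to its history is the kind of outlier worth investigating further.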

Q 27. How do you measure the effectiveness of your network anomaly mitigation strategies?

Measuring the effectiveness of network anomaly mitigation strategies is vital. I use a combination of metrics to assess performance:

- Reduction in security incidents: Tracking the number of detected and mitigated security incidents before and after implementing the strategy is a key indicator of success.

- Mean Time To Detect (MTTD): Measuring the time taken to identify anomalies is crucial. Lower MTTD reflects improved system responsiveness.

- Mean Time To Respond (MTTR): Evaluating the time taken to contain and remediate anomalies is essential. Lower MTTR indicates efficient incident response.

- False positive rate: Monitoring the number of false alarms helps to fine-tune the system and optimize its accuracy. Lower false positive rates are desirable.

- Network uptime and availability: Tracking network uptime helps to assess the overall impact of the mitigation strategy on system availability and performance.

Regularly reviewing these metrics allows for continuous improvement and adaptation of my strategies to maintain a high level of network security.
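These metrics are straightforward to compute once incident timestamps are recorded consistently. The sketch below assumes a hypothetical incident record with `started`, `detected`, and `resolved` timestamps, measures MTTR from detection to resolution, and approximates the false positive rate as the share of all alerts that turned out to be false alarms.

```python
from datetime import datetime
from statistics import mean


def incident_metrics(incidents, total_alerts, false_alerts):
    """Compute MTTD and MTTR in minutes, plus the share of false alerts.

    incidents: non-empty list of dicts with "started", "detected",
    and "resolved" datetime values.
    """
    detect_minutes = [
        (i["detected"] - i["started"]).total_seconds() / 60 for i in incidents
    ]
    respond_minutes = [
        (i["resolved"] - i["detected"]).total_seconds() / 60 for i in incidents
    ]
    false_ratio = false_alerts / total_alerts if total_alerts else 0.0
    return {
        "mttd_minutes": mean(detect_minutes),
        "mttr_minutes": mean(respond_minutes),
        "false_alert_ratio": false_ratio,
    }
```

Comparing these values for the periods before and after a change to the mitigation strategy gives a simple, repeatable measure of whether the change helped.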

Q 28. What are some emerging technologies used for network anomaly detection?

Several emerging technologies are revolutionizing network anomaly detection:

- Artificial Intelligence (AI) and Machine Learning (ML): AI/ML algorithms can analyze vast datasets, identify complex patterns, and adapt to evolving threats more effectively than traditional methods. They can learn from past incidents to predict and prevent future attacks.

- Deep Packet Inspection (DPI): DPI examines the payload of network packets, allowing for a more in-depth analysis of network traffic. This helps in identifying subtle anomalies that might be missed by shallow inspection methods.

- Blockchain technology: Blockchain can be used to enhance the integrity and security of network data, making it harder for attackers to tamper with logs and creating an immutable record of network activity.

- Network Telemetry: High-fidelity network telemetry provides detailed visibility into network behavior, enabling more accurate anomaly detection and faster response times. Flow-export protocols such as sFlow and NetFlow offer valuable insights.

These technologies are making anomaly detection more accurate, efficient, and adaptable, leading to more robust network security.
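The tamper-evidence property mentioned for blockchain can be illustrated with a simple hash chain, where each log entry stores the hash of the previous one. This is a deliberately simplified sketch: it is not a blockchain (there is no distribution or consensus), and the function names and record format are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # Placeholder "previous hash" for the first entry.


def _entry_hash(prev_hash, record):
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(chain, record):
    """Append a log record, linking it to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "record": record,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, record),
    })


def verify_chain(chain):
    """Return True only if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

If an attacker edits an earlier record to hide their activity, every later hash stops matching, so `verify_chain` returns `False`.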

Key Topics to Learn for Identify and Mitigate Network Anomalies Interview

- Network Monitoring Tools and Techniques: Understand the practical application of various monitoring tools (e.g., Wireshark, tcpdump, Nagios) and their role in identifying anomalies. Explore techniques like log analysis and real-time traffic monitoring.

- Anomaly Detection Methods: Grasp theoretical concepts like statistical anomaly detection, machine learning approaches (e.g., clustering, classification), and signature-based detection. Consider how these methods are applied to practical scenarios like intrusion detection and prevention.

- Network Security Protocols and their Vulnerabilities: Familiarize yourself with common protocols (TCP/IP, UDP, HTTP, HTTPS) and how vulnerabilities within these protocols can lead to network anomalies. Understand how these vulnerabilities are exploited and the resulting impact.

- Mitigation Strategies and Incident Response: Explore various mitigation techniques, including firewall rules, intrusion prevention systems (IPS), and content filtering. Develop a strong understanding of incident response procedures and best practices for containing and remediating network anomalies.

- Network Segmentation and Security Zones: Learn about the importance of network segmentation in limiting the impact of anomalies and the principles behind creating secure network zones. Understand how to design and implement effective network segmentation strategies.

- Root Cause Analysis: Develop your skills in identifying the underlying causes of network anomalies through methodical investigation and troubleshooting. Practice using various diagnostic tools and techniques to pinpoint the source of problems.

Next Steps

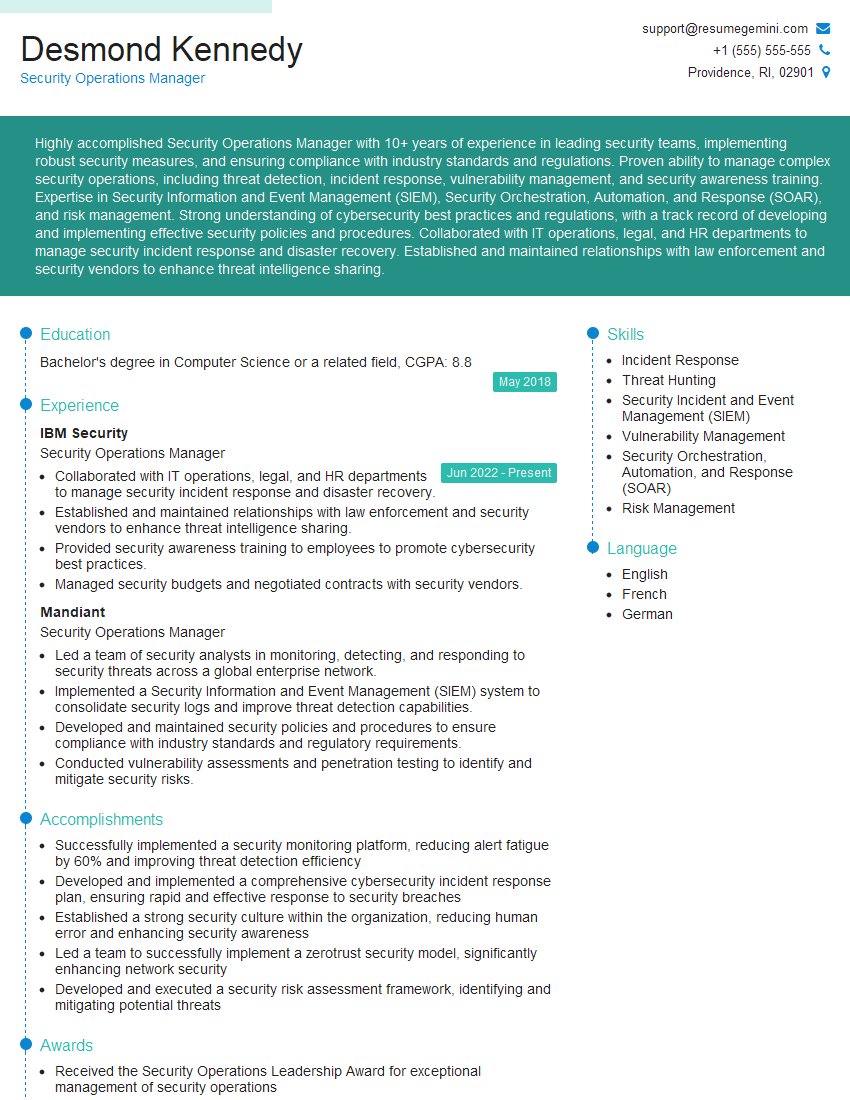

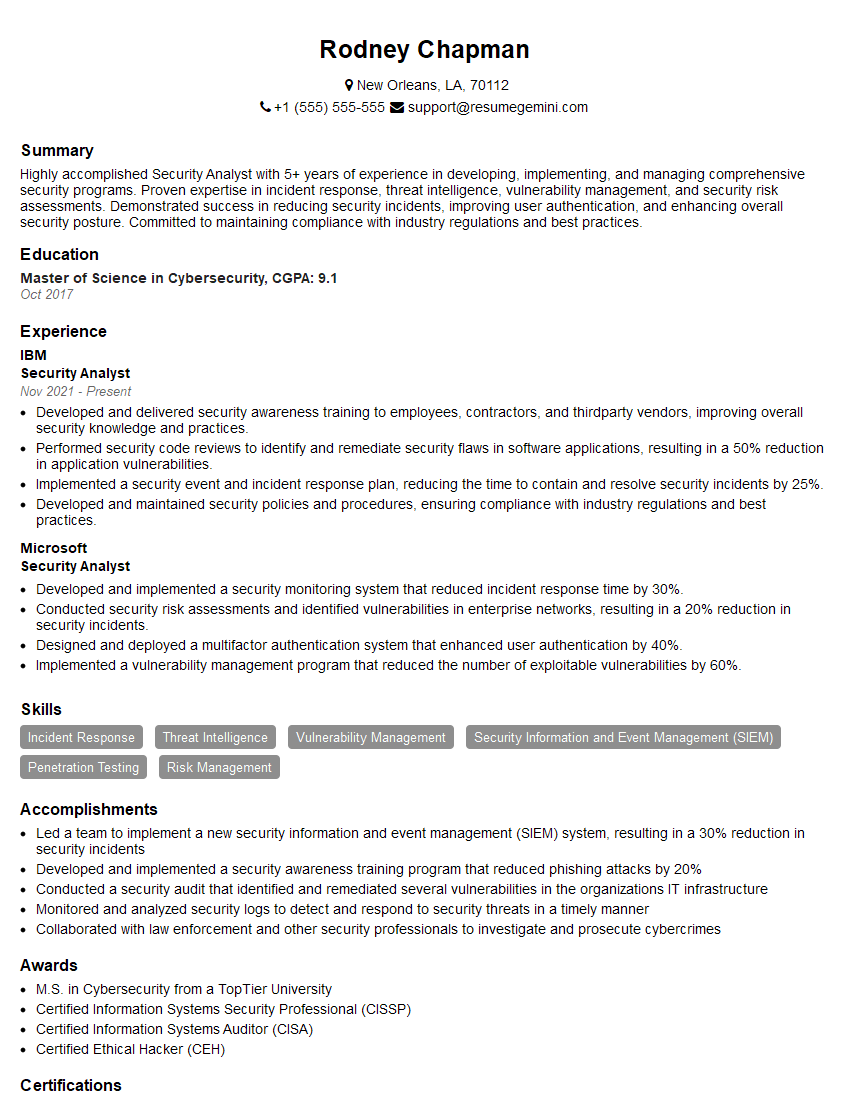

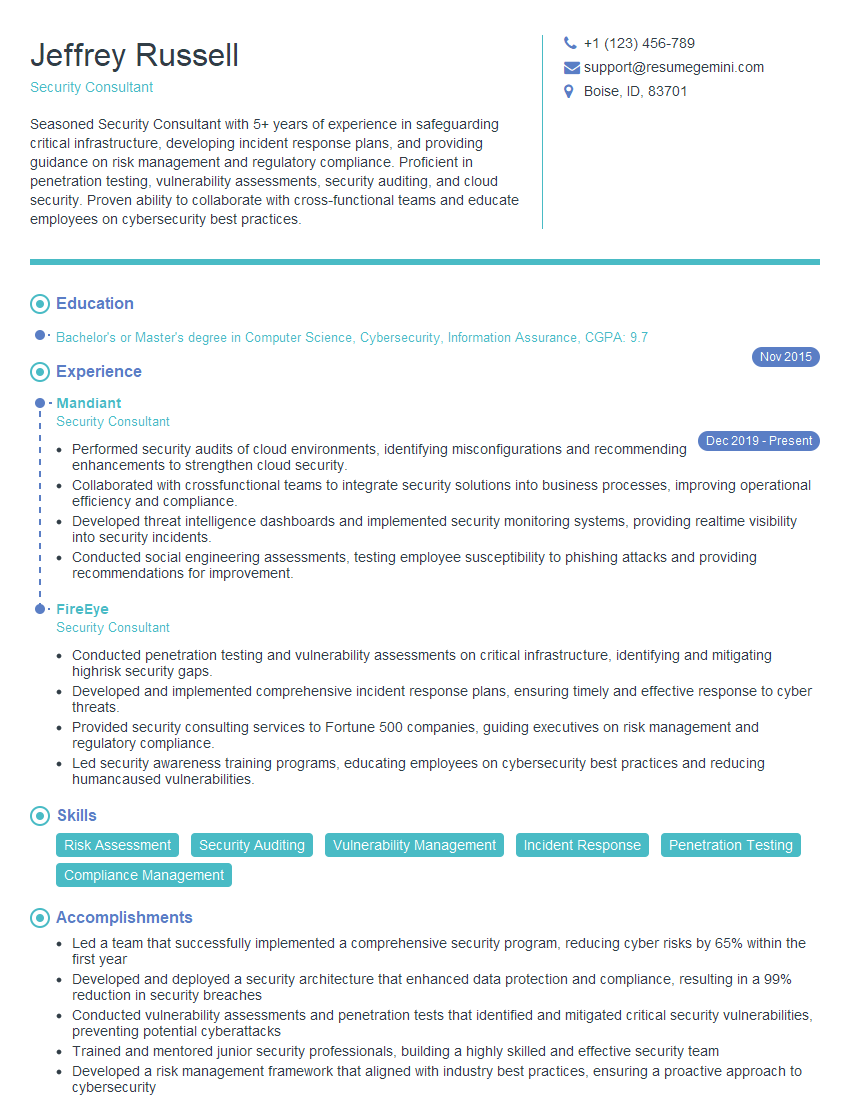

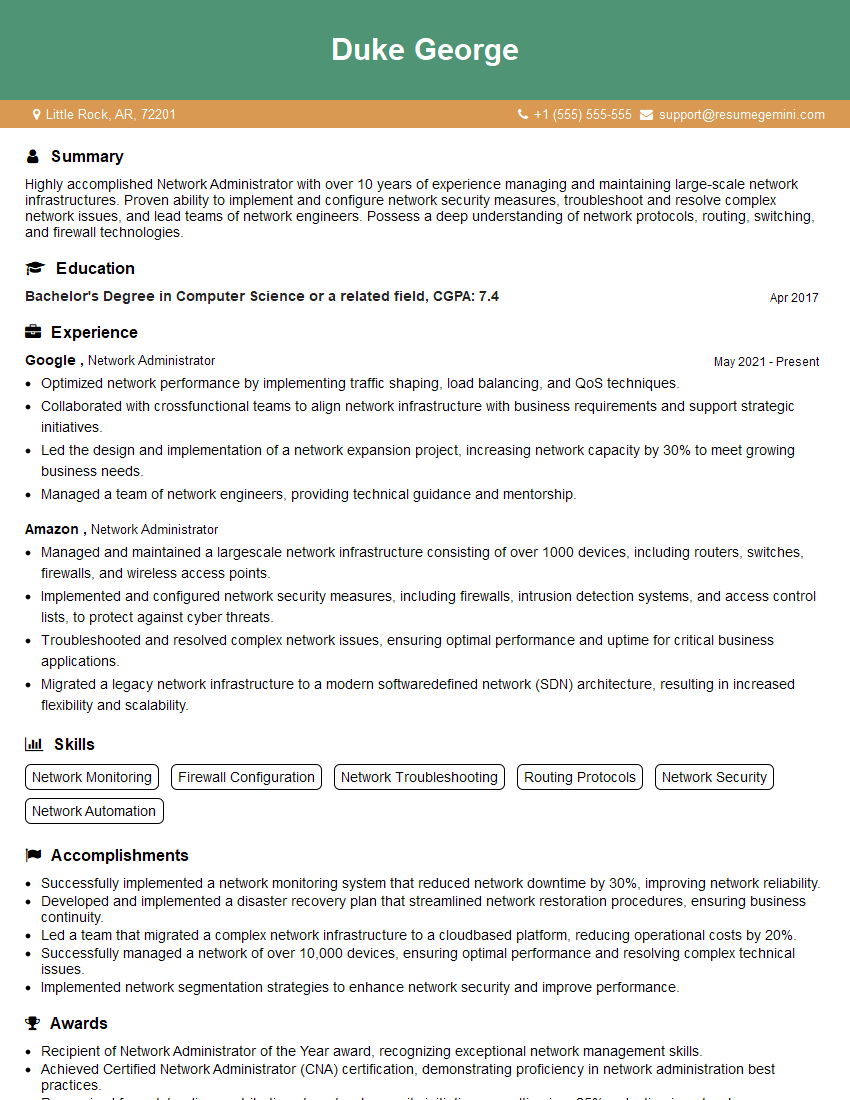

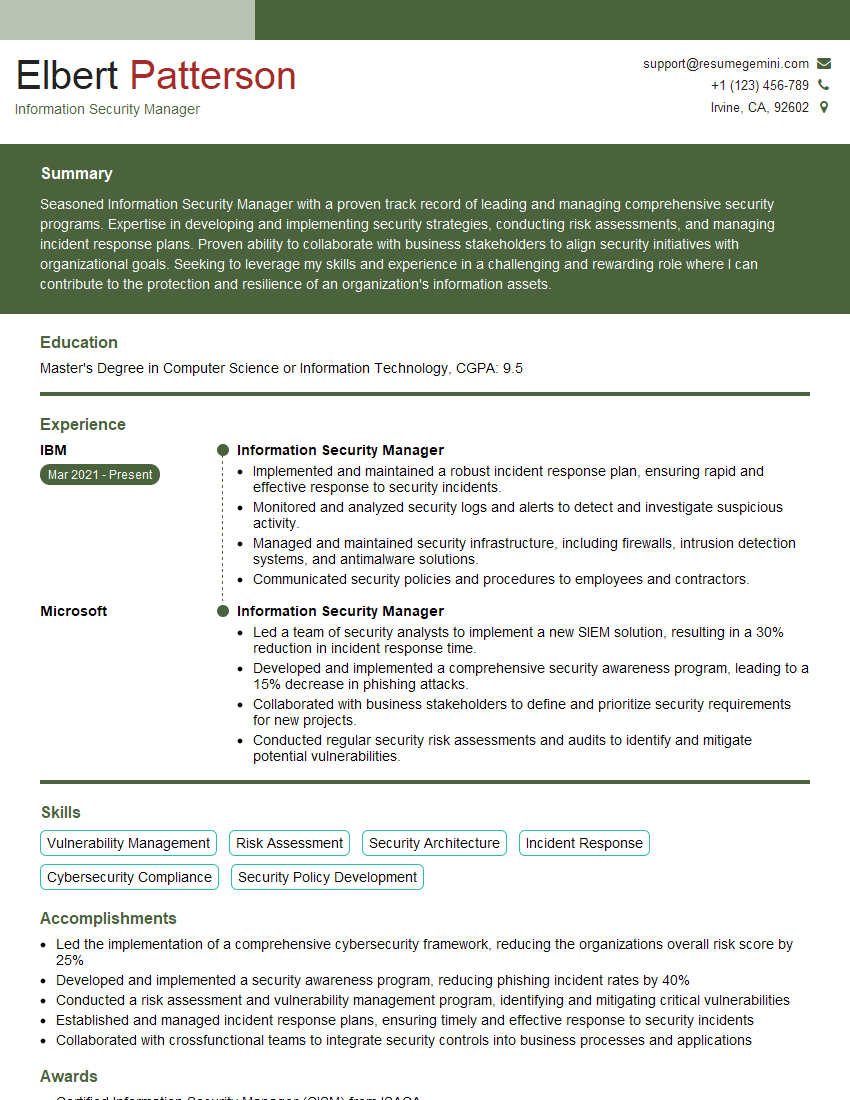

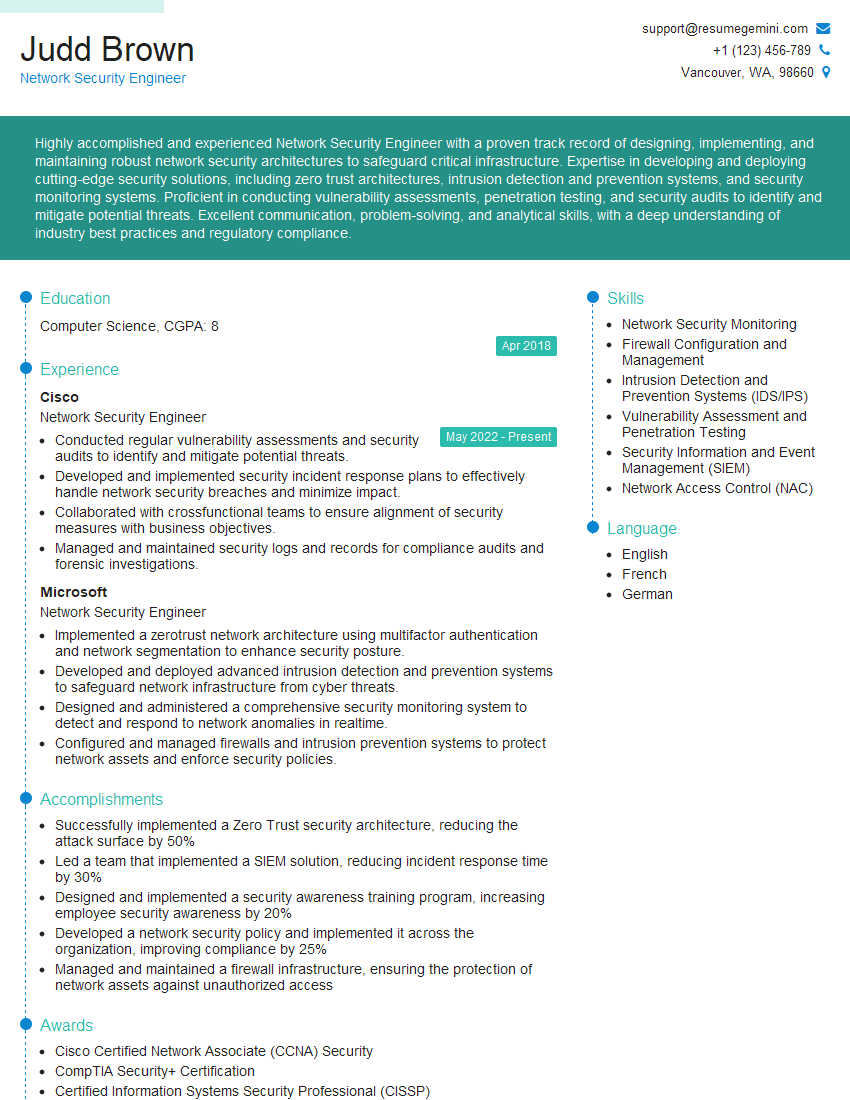

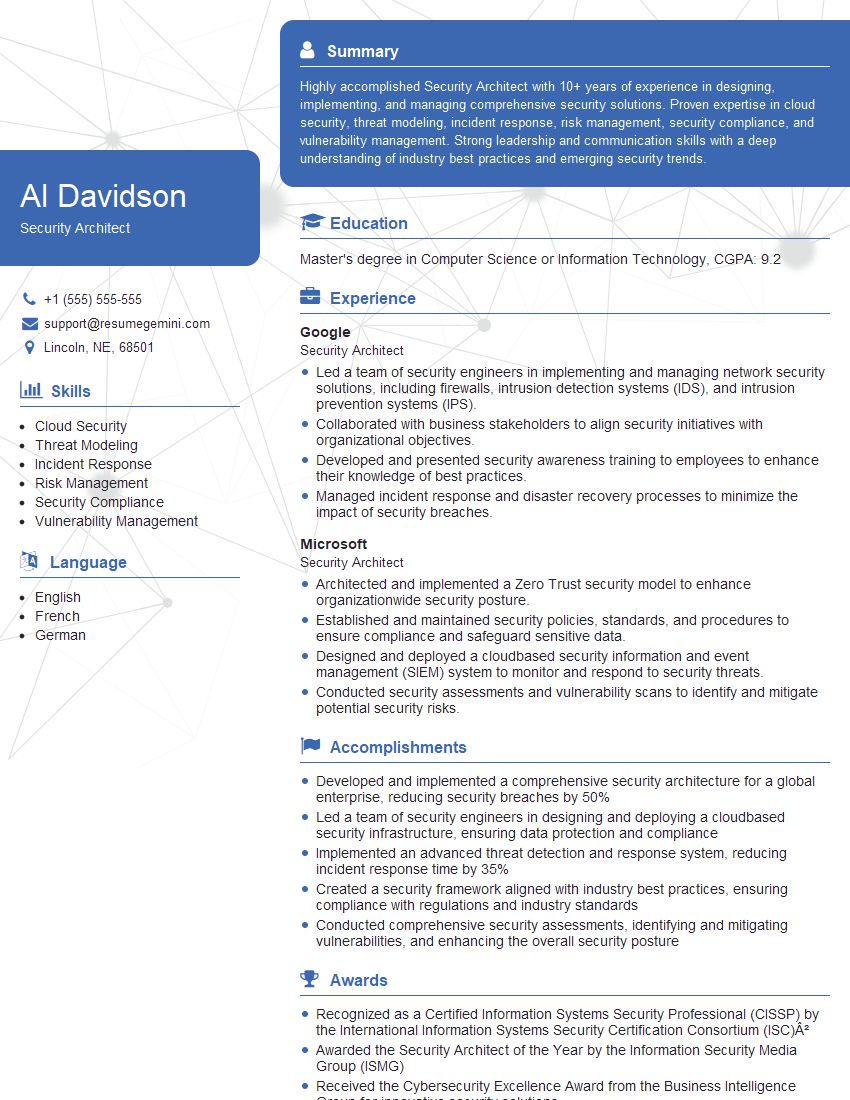

Mastering the identification and mitigation of network anomalies is crucial for career advancement in cybersecurity and networking. A strong understanding of these concepts demonstrates valuable problem-solving skills and a commitment to network security, making you a highly desirable candidate. To enhance your job prospects, focus on creating an ATS-friendly resume that highlights your relevant skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. Examples of resumes tailored to showcasing expertise in Identify and Mitigate Network Anomalies are available within ResumeGemini to help you get started.
