Are you ready to stand out in your next interview? Understanding and preparing for Imagery Analysis and Interpretation interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Imagery Analysis and Interpretation Interview

Q 1. Explain the difference between panchromatic and multispectral imagery.

The key difference between panchromatic and multispectral imagery lies in how they capture light. Panchromatic imagery records light across the entire visible spectrum (and sometimes extending into the near-infrared) as a single grayscale band. Think of it like a black and white photograph: because the single broad band collects more light energy per pixel, the sensor can use smaller pixels and typically delivers finer spatial detail. Multispectral imagery, on the other hand, captures light in several distinct wavelength bands or channels. This allows for the differentiation of features based on their spectral signatures. For example, healthy vegetation reflects strongly in the near-infrared, while stressed vegetation reflects noticeably less, allowing for detailed vegetation health assessments. Imagine it as multiple black and white photographs, each showing the scene through a different color filter, revealing different information about the scene’s composition.

In essence: Panchromatic provides high spatial resolution in one band, while multispectral provides lower spatial resolution across multiple bands, each offering different spectral information.

Q 2. Describe the process of orthorectification.

Orthorectification is the process of geometrically correcting an image to remove distortions caused by terrain relief, sensor position, and Earth curvature. Imagine taking a photograph from an airplane – features on hillsides will appear stretched and distorted compared to flat areas. Orthorectification ‘flattens’ this perspective. The process involves:

- Acquiring elevation data: This is typically done using a Digital Elevation Model (DEM), which provides height information for each point on the ground.

- Geometric modeling: The software uses the DEM, image sensor parameters (like position and orientation), and ground control points (GCPs) – known locations in both the image and on the ground – to build a mathematical model of the image geometry.

- Resampling: The software then applies this model to warp the image pixels, correcting for distortions and projecting the image onto a map projection. Common resampling methods include nearest neighbor, bilinear, and cubic convolution, each offering a different balance between speed and accuracy.

The result is an orthorectified image, where all features are accurately positioned and scaled as if viewed from directly above, facilitating accurate measurements and analysis.
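The resampling step can be illustrated with a minimal sketch. It is not how any particular package implements orthorectification; it only shows how nearest neighbor and bilinear sampling differ when a corrected output pixel maps back to a fractional position in the source image. The function names and the tiny 2x2 image are hypothetical, and the coordinates are assumed to fall inside the image.

```python
import math

def sample_nearest(img, row, col):
    """Return the value of the source pixel closest to a fractional (row, col)."""
    r = min(max(int(round(row)), 0), len(img) - 1)
    c = min(max(int(round(col)), 0), len(img[0]) - 1)
    return img[r][c]

def sample_bilinear(img, row, col):
    """Distance-weighted average of the four pixels surrounding (row, col)."""
    r0 = min(max(int(math.floor(row)), 0), len(img) - 1)
    c0 = min(max(int(math.floor(col)), 0), len(img[0]) - 1)
    r1 = min(r0 + 1, len(img) - 1)
    c1 = min(c0 + 1, len(img[0]) - 1)
    fr, fc = row - r0, col - c0  # fractional offsets inside the cell
    top = img[r0][c0] * (1 - fc) + img[r0][c1] * fc
    bottom = img[r1][c0] * (1 - fc) + img[r1][c1] * fc
    return top * (1 - fr) + bottom * fr

# Hypothetical 2x2 source image
image = [[10, 20],
         [30, 40]]

nearest_value = sample_nearest(image, 0.4, 0.6)    # picks an existing pixel value
bilinear_value = sample_bilinear(image, 0.5, 0.5)  # blends the four neighbors
```

Nearest neighbor never invents new pixel values, which is why it is usually preferred for classified rasters, while bilinear produces smoother results for continuous data.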

Q 3. What are the limitations of using aerial imagery for terrain analysis?

While aerial imagery is invaluable for terrain analysis, it has limitations:

- Cloud cover: Clouds obscure the ground, hindering observation of terrain features.

- Atmospheric effects: Haze, fog, and atmospheric scattering can reduce image clarity and distort color balance.

- Shadowing: Especially in mountainous areas, shadows cast by terrain features can obscure details in low-lying areas. This complicates accurate elevation measurements and feature extraction.

- Image resolution: The resolution may be insufficient to resolve small-scale features or subtle variations in terrain. The level of detail you can see depends heavily on the sensor’s spatial resolution and flying altitude.

- Cost and logistical constraints: Aerial imagery acquisition can be expensive and logistically challenging, particularly in remote or inaccessible areas.

For example, trying to analyze the exact slope of a steep, heavily shadowed mountainside using aerial imagery alone might be very difficult. LiDAR data, often used in conjunction with aerial imagery, can help mitigate some of these issues.

Q 4. How do you identify and correct geometric distortions in imagery?

Geometric distortions in imagery are corrected using several techniques. The most common is geometric rectification, which uses ground control points (GCPs) and a mathematical model to transform the image to a desired map projection. Identifying GCPs requires knowing the coordinates of identifiable features in both the image and a reference map. Software then uses these points to create a transformation model, warping the image to match the reference map’s geometry. We can correct distortions caused by:

- Relief displacement (terrain): Orthorectification (as explained earlier) addresses this.

- Camera tilt and roll: Using sensor orientation data (often included in metadata) and GCPs, the software can account for variations from perfect nadir (straight down) viewing.

- Earth curvature: This is typically accounted for through the use of appropriate map projections.

- Sensor distortions: Modern sensors have minimal distortions, but historical imagery might require correction using sensor-specific models.

In practice, the process often involves iterative refinements, adjusting the transformation model until the GCPs align accurately in the corrected image. Software packages, such as ENVI, ArcGIS, and ERDAS IMAGINE, provide tools to automate much of this process.
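As a rough illustration of the GCP-based transformation model, the sketch below fits a first-order (affine) transform by least squares and reports the RMSE at the control points. It assumes NumPy is available; the GCP coordinates are made up for the example, and real workflows often use higher-order polynomials or rigorous sensor models instead.

```python
import numpy as np

def fit_affine(pixel_xy, map_xy):
    """Least-squares affine transform from pixel (col, row) to map (x, y)."""
    pixel_xy = np.asarray(pixel_xy, dtype=float)
    map_xy = np.asarray(map_xy, dtype=float)
    # Each design-matrix row is [col, row, 1]
    design = np.column_stack([pixel_xy, np.ones(len(pixel_xy))])
    coeffs, *_ = np.linalg.lstsq(design, map_xy, rcond=None)
    return coeffs  # shape (3, 2): one column of coefficients per map axis

def gcp_rmse(coeffs, pixel_xy, map_xy):
    """Root-mean-square positional error of the model at the GCPs."""
    pixel_xy = np.asarray(pixel_xy, dtype=float)
    design = np.column_stack([pixel_xy, np.ones(len(pixel_xy))])
    residuals = design @ coeffs - np.asarray(map_xy, dtype=float)
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))

# Hypothetical GCPs: 10 m pixels, north-up, upper-left corner at (500000, 4100000)
gcp_pixels = [(0, 0), (100, 0), (0, 100), (100, 100)]
gcp_map = [(500000, 4100000), (501000, 4100000),
           (500000, 4099000), (501000, 4099000)]

affine = fit_affine(gcp_pixels, gcp_map)
rmse = gcp_rmse(affine, gcp_pixels, gcp_map)
```

In the iterative refinement described above, a GCP with a large residual would be re-measured or dropped and the model refit until the RMSE is acceptable for the project.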

Q 5. Explain the concept of spatial resolution and its impact on analysis.

Spatial resolution refers to the size of the smallest feature that can be discerned in an image. It’s typically expressed as the ground sample distance (GSD), which is the size of a pixel on the ground. A high spatial resolution image (e.g., GSD of 0.5 meters) shows finer details, like individual trees or cars, whereas a low spatial resolution image (e.g., GSD of 10 meters) only resolves larger features, such as fields or large buildings, which appear as coarse blocks of pixels.

The impact on analysis is significant. High-resolution imagery is crucial for applications requiring detailed information, like urban planning, damage assessment after a natural disaster, or precise mapping of infrastructure. Low-resolution imagery is suitable for broader-scale analyses, such as land cover classification over large regions. Choosing the appropriate spatial resolution depends on the scale and detail required for the specific analysis task. For example, analyzing individual tree health requires much higher resolution than classifying broad forest types.
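For a nadir-looking frame camera, GSD can be estimated from the pixel pitch, focal length, and flying height. The sketch below uses that standard relationship; the camera parameters are hypothetical and the calculation ignores terrain relief and off-nadir viewing.

```python
def ground_sample_distance(pixel_pitch_um, focal_length_mm, altitude_m):
    """Approximate GSD in meters for a nadir-looking frame camera."""
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    return pixel_pitch_m * altitude_m / focal_length_m

# Hypothetical camera: 5 micrometer pixels, 50 mm lens, flown at 1000 m above ground
gsd = ground_sample_distance(5.0, 50.0, 1000.0)

# Rough number of pixels spanning a 5 m long object at that GSD
pixels_across_object = 5.0 / gsd
```

With these assumed values the GSD is about 0.1 m, so a 5 m vehicle would span roughly 50 pixels, which is comfortably enough to identify it.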

Q 6. What are the different types of image enhancement techniques?

Image enhancement techniques aim to improve the visual quality and interpretability of imagery. They can be broadly categorized into:

- Spatial enhancement: These techniques improve the spatial characteristics of an image, such as sharpness and contrast. Examples include:

  - Sharpening: High-pass filtering or unsharp masking techniques improve edge definition.

  - Smoothing: Low-pass filtering reduces noise and evens out homogeneous areas.

- Spectral enhancement: These techniques modify the spectral characteristics, such as brightness and color, to enhance feature identification. Examples include:

  - Contrast stretching: Improves the visibility of features by expanding the range of pixel values.

  - Principal Component Analysis (PCA): Reduces data dimensionality while preserving essential information.

  - Ratioing: Creates ratio images to highlight specific features (e.g., Normalized Difference Vegetation Index (NDVI)).

- Geometric enhancement: This involves geometric corrections, discussed previously.

The choice of enhancement techniques depends on the specific image characteristics, the target features, and the analysis goals. For example, sharpening might be used to highlight roads in an urban area, while NDVI could be used to map vegetation health.
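Contrast stretching is simple enough to sketch directly. The example below is one common variant, a linear percentile stretch, and assumes NumPy; the 2% and 98% cut-offs and the synthetic band are illustrative choices, not fixed rules.

```python
import numpy as np

def percentile_stretch(band, low=2.0, high=98.0):
    """Linearly rescale a band to [0, 1] between two percentile cut-offs."""
    band = np.asarray(band, dtype=float)
    lo, hi = np.percentile(band, [low, high])
    if hi <= lo:
        # Flat band: nothing to stretch
        return np.zeros_like(band)
    return np.clip((band - lo) / (hi - lo), 0.0, 1.0)

# Synthetic low-contrast band whose values only occupy 40..79
rng = np.random.default_rng(0)
dark_band = rng.integers(40, 80, size=(64, 64))

stretched = percentile_stretch(dark_band)
```

Clipping at percentiles rather than at the absolute minimum and maximum keeps a handful of outlier pixels from compressing the rest of the histogram.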

Q 7. How do you interpret changes detected in a time series of satellite images?

Interpreting changes detected in a time series of satellite images involves a systematic approach:

- Image registration and co-registration: Ensure all images are aligned to a common coordinate system so that features can be compared reliably across time.

- Change detection methods: Several methods exist, including image differencing, image ratioing, and post-classification comparison. Image differencing simply subtracts the pixel values of one image from another, highlighting areas with significant changes. Ratioing helps to highlight proportional changes, while post-classification comparison involves classifying the images individually and comparing the resulting maps.

- Visual interpretation: Examine the change detection results visually, identifying areas of change and their nature. This can involve identifying pixels that have changed significantly and relating those pixels to real-world changes. For example, a large number of pixels that changed from a forest class to a bare land class between two time points may indicate deforestation.

- Ground truthing: Validate the detected changes using field data or other sources of information (e.g., aerial photography or historical maps) to ensure accuracy. This is crucial for ensuring the results of your image analysis reflect reality.

- Qualitative and quantitative analysis: Describe the types of changes detected, quantify their extent (e.g., area of deforestation), and analyze the trends over time. Summarize your results with both qualitative descriptions and quantitative measurements.

Interpreting these changes often requires knowledge of the region’s history, geography, and environmental factors. For instance, observing a significant change in land cover near a river might suggest the impact of a flood. Careful analysis, grounded in both the technology and the context, is key to drawing meaningful conclusions.
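Image differencing and the follow-up area calculation can be sketched as below. This is a minimal illustration assuming NumPy, two co-registered single-band images, and a hypothetical 10 m pixel size; the threshold of two standard deviations is a common starting point rather than a rule.

```python
import numpy as np

def difference_change_mask(before, after, k=2.0):
    """Flag pixels whose difference deviates from the mean by more than k std devs."""
    diff = np.asarray(after, dtype=float) - np.asarray(before, dtype=float)
    threshold = k * diff.std()
    return np.abs(diff - diff.mean()) > threshold

# Synthetic scene: uniform brightness, then a 5x5 patch darkens (hypothetical clearing)
before = np.full((50, 50), 100.0)
after = before.copy()
after[10:15, 10:15] = 40.0

mask = difference_change_mask(before, after)
changed_pixels = int(mask.sum())

# Convert the pixel count to area, assuming 10 m x 10 m pixels
pixel_area_m2 = 10.0 * 10.0
changed_area_ha = changed_pixels * pixel_area_m2 / 10_000.0
```

The resulting mask only says where brightness changed; deciding what the change means still relies on the visual interpretation and ground truthing steps listed above.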

Q 8. Describe your experience with different image classification methods.

Image classification involves assigning predefined categories to image pixels or regions. I have extensive experience with various methods, ranging from simple techniques to sophisticated deep learning approaches.

- Traditional methods: These include techniques like thresholding (simple classification based on pixel intensity), k-Nearest Neighbors (KNN) which classifies based on proximity to known samples, and Support Vector Machines (SVM) which find optimal hyperplanes to separate classes. For example, I used KNN to classify land cover types in satellite imagery, achieving good accuracy for straightforward distinctions like water vs. land.

- Object-based image analysis (OBIA): This involves segmenting an image into meaningful objects (e.g., buildings, trees) before classifying them. OBIA often leads to higher accuracy than pixel-based methods, especially for heterogeneous scenes. I’ve employed OBIA with eCognition software for urban planning projects, analyzing aerial photos to differentiate buildings of different types and ages.

- Deep learning methods: Convolutional Neural Networks (CNNs) have revolutionized image classification. They automatically learn hierarchical features from raw image data and achieve state-of-the-art results. I’ve used TensorFlow and PyTorch to train CNNs for tasks like identifying specific types of vegetation from hyperspectral imagery, significantly improving accuracy compared to traditional classifiers.

The choice of method depends on factors such as data availability, computational resources, and desired accuracy.
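To make the KNN idea concrete, here is a small from-scratch sketch using NumPy. The two-band "spectra" and labels are invented for illustration; a production workflow would more likely use an established library implementation and far more training samples.

```python
import numpy as np

def knn_classify(train_x, train_y, query_x, k=3):
    """Label each query sample by majority vote among its k nearest training samples."""
    train_x = np.asarray(train_x, dtype=float)
    train_y = np.asarray(train_y)
    query_x = np.asarray(query_x, dtype=float)
    # Pairwise Euclidean distances, shape (n_query, n_train)
    dists = np.linalg.norm(query_x[:, None, :] - train_x[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    predictions = []
    for neighbor_labels in train_y[nearest]:
        labels, counts = np.unique(neighbor_labels, return_counts=True)
        predictions.append(labels[np.argmax(counts)])
    return np.array(predictions)

# Hypothetical training samples: (red, near-infrared) reflectance
train_spectra = [(0.05, 0.02), (0.06, 0.03), (0.04, 0.01),
                 (0.10, 0.35), (0.12, 0.40), (0.09, 0.30)]
train_labels = ["water", "water", "water", "land", "land", "land"]

predicted = knn_classify(train_spectra, train_labels,
                         [(0.05, 0.02), (0.11, 0.38)])
```

Water absorbs strongly in the near-infrared, so even two bands separate these classes cleanly; harder class distinctions are where SVMs or CNNs tend to earn their extra complexity.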

Q 9. Explain the concept of feature extraction in imagery analysis.

Feature extraction is the process of identifying and quantifying meaningful characteristics from raw imagery data. Think of it as extracting the essence of the image – the information that’s crucial for analysis. Instead of dealing with millions of raw pixel values, we focus on a smaller set of features that represent important patterns and relationships.

- Spectral features: These are derived from the spectral signature of objects, measuring the reflectance or emission at different wavelengths. For example, the amount of near-infrared reflectance can indicate vegetation health.

- Textural features: These describe the spatial arrangement of pixels, like smoothness, coarseness, or directionality. Think about how you distinguish a grassy field from a paved road – the texture is very different.

- Spatial features: These describe the shape, size, and location of objects within an image. For example, the compactness of a building or the distance between objects.

Feature extraction is a critical preprocessing step in many image analysis tasks. Well-chosen features greatly improve the performance of subsequent classification or object detection algorithms. For example, I’ve used Gray-Level Co-occurrence Matrices (GLCMs) to extract texture features from satellite images to improve land cover mapping accuracy. The selection of appropriate features often requires domain expertise and experimentation.
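The GLCM texture idea can be shown with a short sketch. It assumes NumPy and an image already quantized to integer gray levels in the range 0 to levels - 1, builds a non-symmetric co-occurrence matrix for a single offset, and computes only the contrast statistic; libraries such as scikit-image offer fuller implementations.

```python
import numpy as np

def glcm(image, levels, d_row=0, d_col=1):
    """Normalized co-occurrence matrix for one pixel offset (default: right neighbor)."""
    image = np.asarray(image)
    rows, cols = image.shape
    matrix = np.zeros((levels, levels), dtype=float)
    # Visit only pixels whose offset neighbor lies inside the image
    for r in range(max(0, -d_row), min(rows, rows - d_row)):
        for c in range(max(0, -d_col), min(cols, cols - d_col)):
            matrix[image[r, c], image[r + d_row, c + d_col]] += 1
    return matrix / matrix.sum()

def glcm_contrast(p):
    """Contrast: co-occurrence probabilities weighted by squared gray-level difference."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))

smooth = np.zeros((4, 4), dtype=int)             # uniform surface
checker = np.indices((4, 4)).sum(axis=0) % 2     # alternating 0/1 pattern

smooth_contrast = glcm_contrast(glcm(smooth, levels=2))
checker_contrast = glcm_contrast(glcm(checker, levels=2))
```

The uniform patch has zero contrast and the checkerboard has the maximum possible for two gray levels, which is the kind of separation that helps distinguish, say, pavement from rough vegetation.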

Q 10. What are the common file formats used for storing imagery data?

Imagery data comes in various file formats, each with its own strengths and weaknesses. The most common ones include:

- GeoTIFF (.tif, .tiff): A widely used format that stores both raster image data and geospatial metadata, meaning location information is embedded. It’s highly versatile and widely supported by GIS software.

- ERDAS IMAGINE (.img): Proprietary format by Hexagon Geospatial, known for its ability to handle large datasets and various data types. It is efficient but less widely adopted than GeoTIFF.

- JPEG (.jpg, .jpeg): A common image format for photographs but less suitable for scientific imagery due to compression artifacts that can distort information.

- PNG (.png): A lossless compression format offering better image quality than JPEG, making it preferable for some imagery applications.

- HDF (.hdf, .h5): Hierarchical Data Format is suited for storing very large multi-dimensional arrays like hyperspectral imagery.

Choosing the right format depends on the specific application. For geospatial analysis, GeoTIFF is often the best choice due to its metadata support. For very large datasets or hyperspectral data, HDF might be more appropriate.

Q 11. How do you assess the quality of imagery data?

Assessing imagery quality is crucial for reliable analysis. It involves evaluating several factors:

- Spatial resolution: This refers to the size of a pixel on the ground. Higher resolution means more detail, but also larger file sizes. We assess this by considering the level of detail needed for the analysis. A high-resolution image might be necessary to detect small objects, while lower resolution may suffice for larger-scale mapping.

- Spectral resolution: This refers to the number and width of the wavelength bands recorded. Hyperspectral imagery has many narrow bands, providing detailed spectral information, while multispectral imagery has fewer, broader bands. The appropriateness depends on the application; for distinguishing plant species or detecting subtle vegetation stress, hyperspectral data is often the better choice, while for simple land cover mapping, multispectral data usually suffices.

- Radiometric resolution: This relates to the precision of the digital numbers (DNs) representing brightness values. Higher radiometric resolution allows for more subtle distinctions between objects.

- Geometric accuracy: This assesses how well the image aligns with real-world coordinates. Geometric distortions can affect measurements and analysis. We use ground control points and georeferencing techniques to ensure accuracy.

- Atmospheric effects: Haze, clouds, and other atmospheric phenomena can affect image clarity and color. Atmospheric correction techniques are used to mitigate these effects.

I use various quality assessment metrics, visual inspection, and software tools to evaluate these aspects and make informed decisions about the suitability of the data for the analysis.

Q 12. Explain your experience with GIS software and its application to imagery analysis.

GIS software is fundamental to imagery analysis. It provides the tools for georeferencing, spatial analysis, and visualization. My experience includes extensive use of ArcGIS and QGIS.

- Georeferencing: Aligning the imagery to a geographic coordinate system, allowing for integration with other geospatial data.

- Image processing: Enhancing imagery through techniques like atmospheric correction, geometric correction, and orthorectification.

- Spatial analysis: Performing operations like buffer analysis, overlay analysis, and proximity analysis to extract meaningful information from the imagery and related datasets. For example, I analyzed land use changes over time using time-series satellite imagery within a GIS environment by overlaying images from different years and calculating changes in land cover types.

- Data visualization: Creating maps, charts, and other visualizations to communicate results effectively. I frequently utilize GIS for creating compelling visualizations of our findings, which helps stakeholders understand the results clearly.

GIS software is integral to my workflow, allowing me to integrate imagery with other geospatial data (e.g., elevation models, vector data) for comprehensive analysis and decision-making.
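Under the hood, georeferencing a raster often comes down to an affine mapping between pixel and map coordinates. The sketch below follows the six-coefficient geotransform ordering used by GDAL; the function name and the raster parameters are hypothetical.

```python
def pixel_to_map(geotransform, col, row):
    """Convert a pixel (col, row) to map (x, y) with a six-term affine geotransform."""
    origin_x, pixel_w, row_rot, origin_y, col_rot, pixel_h = geotransform
    x = origin_x + col * pixel_w + row * row_rot
    y = origin_y + col * col_rot + row * pixel_h
    return x, y

# Hypothetical north-up raster: 10 m pixels, upper-left corner at (500000, 4100000).
# The pixel height is negative because row numbers increase southward.
gt = (500000.0, 10.0, 0.0, 4100000.0, 0.0, -10.0)

# Adding 0.5 to both indices gives the center of the first pixel rather than its corner
center_of_first_pixel = pixel_to_map(gt, 0.5, 0.5)
```

Knowing this mapping makes it easier to sanity-check overlays: if imagery and vector data are offset by exactly half a pixel, a corner-versus-center convention mismatch is a likely cause.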

Q 13. Describe your familiarity with different sensor systems (e.g., LiDAR, hyperspectral).

I’m familiar with various sensor systems used in imagery acquisition, each offering unique capabilities:

- LiDAR (Light Detection and Ranging): This active remote sensing technology uses laser pulses to measure distances to the Earth’s surface, creating highly accurate 3D point clouds. I’ve used LiDAR data for tasks such as creating Digital Elevation Models (DEMs) and generating high-resolution terrain models for applications like flood risk assessment and infrastructure planning, where accurate elevation data is essential for simulating floods and identifying the areas at highest risk.

- Hyperspectral imagery: This captures images across a wide range of narrow, contiguous spectral bands, providing detailed information about the spectral signature of materials. This is incredibly useful for precise material identification and classification, e.g., distinguishing subtle differences in vegetation types or identifying mineral compositions. I’ve utilized hyperspectral data for precision agriculture, identifying specific crop types and assessing their health with great detail.

- Multispectral imagery: This captures images in a limited number of broad spectral bands (e.g., red, green, blue, near-infrared). Common sources include Landsat and Sentinel satellites. It’s widely used for mapping land cover and monitoring environmental changes. The readily available data from Landsat and Sentinel make it cost-effective for large-area monitoring projects.

Understanding the strengths and limitations of each sensor system is key to selecting the appropriate data for a given task.
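The ranging principle behind LiDAR is a time-of-flight calculation, sketched below. The elevation function is a deliberate simplification that assumes a pulse fired straight down from a known altitude; real systems also account for scan angle, platform attitude, and GNSS/IMU positioning.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def lidar_range_m(round_trip_s):
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

def ground_elevation_m(sensor_altitude_m, round_trip_s):
    """Nadir-only simplification: surface elevation = sensor altitude minus range."""
    return sensor_altitude_m - lidar_range_m(round_trip_s)

# Hypothetical return arriving about 6.671 microseconds after the pulse was emitted
pulse_range = lidar_range_m(6.671e-6)
surface_elevation = ground_elevation_m(1500.0, 6.671e-6)
```

A round trip of a few microseconds corresponds to a range of roughly a kilometer, which shows why LiDAR timing electronics need sub-nanosecond precision to reach centimeter-level accuracy.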

Q 14. How do you handle large volumes of imagery data?

Handling large volumes of imagery data requires efficient strategies and tools. My approach involves:

- Cloud computing: Utilizing cloud platforms like Amazon Web Services (AWS) or Google Cloud Platform (GCP) to store and process imagery data. These platforms scale on demand, which makes it possible to handle datasets that exceed local storage and processing capabilities.

- Data compression and storage optimization: Employing lossless or lossy compression techniques to reduce storage needs, selecting efficient file formats (like GeoTIFF with appropriate compression), and utilizing cloud storage optimized for geospatial data.

- Parallel processing: Distributing computationally intensive tasks across multiple cores or machines, significantly reducing processing time.

- Data tiling and subsetting: Dividing large images into smaller tiles or subsets to reduce memory requirements and to process specific areas one at a time.

- Specialized software: Using software designed for large geospatial datasets (e.g., GDAL, ENVI), which offers functions and algorithms optimized for efficient processing.

A well-planned strategy for data management and processing is crucial for efficient handling of large imagery datasets.
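The tiling strategy can be sketched as a small window generator. The function name, raster dimensions, and tile size below are illustrative; the resulting windows could be handed to whatever reader is in use so that only one tile is in memory at a time.

```python
def tile_windows(width, height, tile_size):
    """Yield (col_off, row_off, tile_width, tile_height) windows covering a raster."""
    for row_off in range(0, height, tile_size):
        for col_off in range(0, width, tile_size):
            yield (col_off, row_off,
                   min(tile_size, width - col_off),    # edge tiles may be narrower
                   min(tile_size, height - row_off))   # edge tiles may be shorter

# Hypothetical 2500 x 1200 pixel raster split into 1024-pixel tiles
windows = list(tile_windows(width=2500, height=1200, tile_size=1024))

# The windows tile the raster exactly, with no gaps or overlap
covered_pixels = sum(w * h for _, _, w, h in windows)
```

Because each window is independent, the same list also works as the unit of work for parallel processing across cores or machines.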

Q 15. Describe your workflow for analyzing a new dataset of imagery.

My workflow for analyzing a new imagery dataset is a systematic process, broken down into distinct phases. It begins with data understanding: I assess the metadata (sensor type, resolution, acquisition date, etc.) to understand the dataset’s characteristics and limitations. This informs the next step, preprocessing, where I address issues like geometric distortions, atmospheric effects (e.g., haze), and radiometric inconsistencies using techniques like orthorectification, atmospheric correction, and histogram matching. Then comes feature extraction, where I identify and quantify relevant features within the images using techniques such as edge detection, texture analysis, and spectral indices (e.g., NDVI for vegetation). Next is classification or analysis, employing methods like supervised or unsupervised machine learning (e.g., support vector machines, random forests, or clustering algorithms), depending on the specific goals. The final phase is post-processing and validation, where I assess the accuracy of the results, refine the model if needed, and generate reports or visualizations to communicate the findings. For instance, in analyzing satellite imagery of a deforestation area, I might use NDVI to identify changes in vegetation cover, followed by object-based image analysis (OBIA) to classify different land cover types.
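The NDVI step mentioned in this workflow is a simple band calculation, (NIR - Red) / (NIR + Red). The sketch below assumes NumPy and reflectance arrays of matching shape; the sample values are invented, and pixels where both bands are zero are set to 0 rather than left undefined.

```python
import numpy as np

def ndvi(nir, red):
    """Normalized Difference Vegetation Index, with zero-sum pixels set to 0."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    total = nir + red
    out = np.zeros_like(total)
    # Divide only where the denominator is non-zero to avoid warnings and NaNs
    np.divide(nir - red, total, out=out, where=total != 0)
    return out

# Hypothetical reflectance values: dense vegetation, sparse vegetation, water, no data
nir_band = np.array([[0.50, 0.30], [0.05, 0.0]])
red_band = np.array([[0.10, 0.20], [0.04, 0.0]])

index = ndvi(nir_band, red_band)
```

Values close to 1 suggest dense green vegetation, while values near 0 or below are typical of bare ground, built-up surfaces, or water; a drop in NDVI between two dates is one simple indicator of vegetation loss.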

Q 16. What are some common challenges in imagery analysis, and how have you overcome them?

Common challenges in imagery analysis include data quality issues like noise, cloud cover, and atmospheric effects; computational complexity dealing with massive datasets; and interpretability of complex algorithms. To overcome these, I employ robust preprocessing techniques as mentioned earlier. For computational complexity, I utilize parallel processing and cloud computing resources. Improving interpretability often involves feature visualization and explainable AI (XAI) methods. For example, when analyzing aerial imagery with significant cloud cover, I use cloud masking techniques and data fusion with other data sources to minimize data loss. If computational resources are limited, I might employ dimensionality reduction techniques to work with smaller feature sets.

Q 17. Explain your understanding of different image processing algorithms.

My understanding of image processing algorithms encompasses a wide range of techniques. Filtering algorithms (e.g., Gaussian, median) are used for noise reduction. Transformation algorithms (e.g., Fourier, wavelet) decompose images into different frequency components for feature extraction. Geometric corrections (e.g., orthorectification) adjust for distortions. Segmentation algorithms (e.g., thresholding, region growing) partition images into meaningful regions. Classification algorithms (e.g., Support Vector Machines, Random Forests) assign labels to image pixels or objects. I’m also proficient in deep learning algorithms like Convolutional Neural Networks (CNNs), which are particularly effective for tasks like object detection and semantic segmentation. For instance, in a project involving the identification of different types of trees in a forest, I would use a CNN trained on labeled imagery to automatically classify each tree based on its visual characteristics.

Q 18. How do you validate the accuracy of your image analysis results?

Validating the accuracy of image analysis results is critical. I use several methods, including ground truthing, where I compare the results with on-site observations or high-accuracy reference data. Accuracy assessment metrics like overall accuracy, producer’s accuracy, and user’s accuracy are used for quantitative evaluation. Confusion matrices provide a detailed breakdown of classification performance. For object detection, I use metrics like precision, recall, and F1-score. Additionally, I perform cross-validation to ensure the model’s generalizability and robustness. For example, in a land cover classification project, I would compare my automatically generated land cover map to a manually created map based on high-resolution aerial photos and field surveys to assess the accuracy of my automated analysis.
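The accuracy metrics named above all derive from the confusion matrix. The following sketch assumes NumPy and the convention that rows are reference (ground truth) classes and columns are mapped classes; some texts use the transpose, so it is worth checking before comparing numbers. The 2x2 matrix is made up.

```python
import numpy as np

def accuracy_report(confusion):
    """Overall, producer's, and user's accuracy from a confusion matrix.

    Rows are reference (ground truth) classes; columns are mapped classes.
    """
    confusion = np.asarray(confusion, dtype=float)
    correct = np.diag(confusion)
    overall = correct.sum() / confusion.sum()
    producers = correct / confusion.sum(axis=1)  # 1 - omission error, per reference class
    users = correct / confusion.sum(axis=0)      # 1 - commission error, per mapped class
    return overall, producers, users

# Hypothetical two-class assessment (e.g., forest vs. non-forest), 100 samples
matrix = [[45, 5],
          [10, 40]]

overall, producers, users = accuracy_report(matrix)
```

Producer's accuracy answers "how much of the real forest did the map find?", while user's accuracy answers "if the map says forest, how often is it right?", and the two can differ noticeably even when overall accuracy looks good.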

Q 19. What software and tools are you proficient in using for image analysis?

I am proficient in several software and tools for image analysis. This includes ENVI and ERDAS IMAGINE for remote sensing data processing, ArcGIS for geospatial analysis, and QGIS for open-source geospatial processing. For programming and algorithm development, I utilize Python with libraries like NumPy, Scikit-learn, OpenCV, and TensorFlow/PyTorch. My expertise extends to using cloud-based platforms like Google Earth Engine for large-scale image processing.

Q 20. Explain your experience with object detection and recognition techniques.

My experience with object detection and recognition techniques is extensive. I’ve applied both traditional computer vision methods (e.g., feature-based approaches using SIFT or SURF) and deep learning-based approaches (e.g., Faster R-CNN, YOLO, SSD) for various applications. Traditional methods are often used for simpler tasks or when labeled data is scarce, while deep learning excels in complex scenarios with abundant data. For example, in a project involving detecting vehicles in satellite imagery, I used a YOLOv5 model, which is known for its speed and accuracy. The model was trained on a large dataset of labeled satellite images, and it successfully identified vehicles with high accuracy. I frequently fine-tune pre-trained models on specific datasets to improve performance and adapt to specific challenges. The choice of algorithm depends on factors like the image resolution, the complexity of the objects, and the availability of training data.
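Evaluating a detector usually starts with intersection over union (IoU), which decides whether a predicted box counts as a match, followed by precision, recall, and F1. The sketch below is a generic illustration with hypothetical boxes and counts, not tied to any specific detection framework.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0

def precision_recall_f1(tp, fp, fn):
    """Detection metrics from true positive, false positive, and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

# Hypothetical predicted vs. reference vehicle boxes, in pixel coordinates
iou = box_iou((0, 0, 10, 10), (5, 5, 15, 15))

# Hypothetical tallies after matching detections at a chosen IoU threshold
precision, recall, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
```

For small objects such as vehicles in satellite imagery, a shift of a pixel or two changes IoU substantially, so the matching threshold is often set lower than the 0.5 commonly used for natural images.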

Q 21. Describe a time you had to interpret ambiguous imagery; what was your approach?

In one project involving analyzing historical aerial photographs for archaeological site identification, I encountered ambiguous imagery due to low resolution and degradation. My approach was multi-faceted. First, I enhanced the image quality using various image processing techniques, including noise reduction and contrast enhancement. Second, I employed contextual information from historical maps and documents to aid interpretation. Third, I used object-based image analysis (OBIA) to segment the image and identify potential features of interest based on shape, texture, and spectral characteristics. Finally, I compared my findings with expert knowledge from archaeologists to validate my interpretations and refine my methodology. This iterative process, combining image processing, contextual information, and expert validation, was essential to make reliable interpretations from the ambiguous imagery.

Q 22. How do you incorporate ground truth data into your analysis?

Ground truth data is essential for validating and calibrating imagery analysis. It’s basically the real-world information that corresponds to what we see in the imagery. Think of it as the ‘answer key’ for our analysis. We use it to train algorithms, assess accuracy, and improve the reliability of our interpretations.

Incorporating ground truth involves several steps:

- Data Collection: This could involve fieldwork to collect measurements (e.g., GPS coordinates of features, soil samples, species identification), or using existing datasets like cadastral maps or survey data.

- Data Alignment: We carefully align the ground truth data with the imagery, often using georeferencing techniques to ensure they correspond to the same geographic location.

- Accuracy Assessment: Once aligned, we compare the information derived from the imagery to the ground truth. This reveals the accuracy of our image classification, object detection, or other analytical methods.

- Model Refinement: The results of this comparison are used to refine our analytical models. For instance, if an algorithm misclassifies forest types, we’ll adjust its parameters based on the ground truth data to improve performance.

Example: Imagine analyzing satellite imagery to map deforestation. Ground truth would involve conducting field surveys to verify the presence or absence of trees at specific locations identified in the imagery. This allows us to measure the accuracy of our automated deforestation mapping process.

Q 23. Explain the concept of scale in imagery and its relevance to analysis.

Scale in imagery refers to the ratio between a distance on the image and the corresponding distance on the ground. It’s crucial because it dictates the level of detail visible and the types of analyses we can perform.

Large scale imagery (e.g., aerial photos taken from a low altitude) shows a small geographic area with high detail. This is great for detailed feature identification, such as individual trees in a forest or cracks in a building.

Small scale imagery (e.g., satellite imagery) shows a large geographic area but with less detail. It is useful for broad-area mapping tasks such as monitoring regional land use change or identifying major infrastructure.

Relevance to Analysis: Choosing the appropriate scale is essential for a successful analysis. If you need to identify individual vehicles in a parking lot, large-scale imagery is required. However, if you are monitoring the spread of a wildfire across a region, small-scale imagery would be more suitable. Scale also affects the resolution of the imagery and therefore the accuracy of the analysis.

Example: A 1:10,000 scale image means that 1 centimeter on the image represents 100 meters on the ground. A 1:1,000,000 scale would show a much larger area, but with much less detail.
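The scale arithmetic in this example can be checked with a few lines of code. The function name is hypothetical; it simply multiplies the image distance by the scale denominator and converts centimeters to meters.

```python
def ground_distance_m(image_distance_cm, scale_denominator):
    """Ground distance in meters for a distance measured on the image in centimeters."""
    return image_distance_cm * scale_denominator / 100.0

# 1 cm measured on a 1:10,000 image and on a 1:1,000,000 image
large_scale_m = ground_distance_m(1.0, 10_000)
small_scale_m = ground_distance_m(1.0, 1_000_000)
```

The same centimeter covers 100 m at 1:10,000 but 10 km at 1:1,000,000, which is why the terms "large scale" and "small scale" run opposite to the size of the area shown.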

Q 24. How familiar are you with different map projections and their impact on imagery?

Map projections are crucial in imagery analysis because they represent the curved surface of the Earth on a flat map. Different projections distort distances, areas, and shapes in different ways. This distortion directly impacts the accuracy and reliability of measurements and analyses performed on the imagery.

I’m familiar with several common map projections, including:

- Mercator Projection: Conformal, so it preserves local angles and lines of constant bearing plot as straight lines, but it distorts areas significantly at higher latitudes. Often used for navigation.

- Lambert Conformal Conic Projection: Minimizes distortion for mid-latitude regions, particularly those that extend mainly east to west. Common for regional and topographic maps.

- UTM (Universal Transverse Mercator): Divides the Earth into zones, minimizing distortion within each zone. Widely used for geospatial data.

Impact on Imagery: If you perform area calculations on imagery using the wrong projection, your results will be inaccurate. Similarly, distance measurements will be affected. Understanding the projection of your imagery is essential for ensuring the accuracy and validity of your findings.

Example: Greenland appears comparable in size to South America on a Mercator projection, although South America is roughly eight times larger in area. This is a cartographic distortion, not a reflection of reality. Using this projection to compare the relative sizes of these landmasses would lead to significant errors.
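To make the effect of projection choice concrete, here is a small sketch under simplifying assumptions: a spherical Earth for the Mercator calculation, and the standard 6-degree UTM zone layout without the special-case zones around Norway and Svalbard. The function names are illustrative only.

```python
import math


def mercator_area_inflation(lat_deg: float) -> float:
    """Area exaggeration of a spherical Mercator map at a given latitude.

    The linear scale factor is sec(lat), so areas are inflated by sec(lat)**2.
    """
    lat = math.radians(lat_deg)
    return 1.0 / math.cos(lat) ** 2


def utm_zone(lon_deg: float) -> int:
    """Standard UTM zone number (1-60) for a longitude in degrees east.

    Assumption: ignores the irregular zones near Norway and Svalbard.
    """
    return int((lon_deg + 180.0) // 6) % 60 + 1


if __name__ == "__main__":
    for lat in (0, 30, 60, 72):
        print(f"latitude {lat:>2} deg: areas inflated x{mercator_area_inflation(lat):.2f}")
    print("UTM zone for longitude -74:", utm_zone(-74.0))
```

The inflation factor is 1 at the equator and about 4 at 60 degrees latitude, which is why area measurements taken directly from Mercator-projected imagery become unreliable away from the equator. Reprojecting to an equal-area projection, or to the appropriate UTM zone for local work, is the usual remedy.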

Q 25. What are some ethical considerations in using and interpreting imagery?

Ethical considerations in imagery analysis are paramount. We must be mindful of privacy, bias, and potential misuse of the technology.

Key ethical considerations include:

- Privacy: High-resolution imagery can potentially reveal sensitive information about individuals and their activities. Anonymization techniques should be employed where appropriate.

- Bias: Algorithms used in imagery analysis can inherit biases present in the training data, leading to unfair or discriminatory outcomes. It’s crucial to identify and mitigate these biases.

- Transparency: The methods used for analysis should be clearly documented and explained. The results should be presented accurately and without misrepresentation.

- Misuse: Imagery analysis can be used for malicious purposes, such as surveillance or targeting of individuals. It’s important to use this technology responsibly and avoid contributing to harm.

- Data Security: The imagery data itself should be secured from unauthorized access and modification. Strong data management protocols are necessary.

Example: Using facial recognition technology on imagery without explicit consent raises significant privacy concerns. Similarly, using biased training data for land-use classification could lead to inaccurate and potentially unfair outcomes.

Q 26. Describe your understanding of cloud computing platforms for imagery analysis.

Cloud computing platforms are revolutionizing imagery analysis. Services like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure offer powerful tools and infrastructure for processing and analyzing large datasets.

These platforms provide:

- Scalable computing resources: Easily handle massive imagery datasets that would be difficult to manage on local machines.

- Pre-trained models and algorithms: Access to sophisticated image processing and analysis tools, reducing development time.

- Storage solutions: Secure and cost-effective storage for massive datasets.

- Collaboration tools: Facilitate team collaboration on projects.

Example: Using AWS’s cloud-based tools, we can process terabytes of satellite imagery to detect changes in agricultural land use over time. The scalability of the cloud allows us to quickly analyze vast areas, something that would be impractical with limited local resources.
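Much of that scalability comes from splitting a large raster into independent tiles that can be processed in parallel. The sketch below shows the pattern with the Python standard library only. It does not call any cloud provider's API, and `process_tile` is a hypothetical placeholder for real per-tile work such as change detection.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterator, Tuple

Window = Tuple[int, int, int, int]  # (row_offset, col_offset, height, width)


def tile_windows(rows: int, cols: int, tile: int) -> Iterator[Window]:
    """Yield windows that cover a rows x cols raster in tile x tile chunks."""
    for r in range(0, rows, tile):
        for c in range(0, cols, tile):
            # Edge tiles are clipped so they never extend past the raster.
            yield (r, c, min(tile, rows - r), min(tile, cols - c))


def process_tile(window: Window) -> int:
    """Hypothetical per-tile job; here it only returns the pixel count."""
    _, _, height, width = window
    return height * width


if __name__ == "__main__":
    windows = list(tile_windows(10_000, 12_000, 4_096))
    with ThreadPoolExecutor(max_workers=4) as pool:
        pixel_counts = list(pool.map(process_tile, windows))
    print(f"{len(windows)} tiles, {sum(pixel_counts):,} pixels processed")
```

On a cloud platform the same windows would typically be handed to many worker machines rather than local threads, but the tiling logic stays the same.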

Q 27. How do you stay current with advancements in imagery analysis technology?

Keeping current with the rapid advancements in imagery analysis is crucial. I actively engage in several strategies:

- Reading scientific literature: I regularly read peer-reviewed journals and conference proceedings focused on remote sensing, computer vision, and machine learning.

- Attending conferences and workshops: Directly engage with researchers and practitioners in the field and learn about the latest breakthroughs.

- Online courses and tutorials: Utilize online platforms like Coursera, edX, and others to learn about new techniques and algorithms.

- Professional networks: Engage with online communities and professional organizations dedicated to imagery analysis.

- Experimentation: I actively experiment with new software, algorithms, and datasets to gain practical experience.

Example: I recently completed a course on deep learning for object detection in satellite imagery, which has significantly enhanced my ability to perform detailed analyses.

Key Topics to Learn for Imagery Analysis and Interpretation Interview

- Image Acquisition and Preprocessing: Understanding various sensor types (e.g., aerial, satellite, hyperspectral), data formats, and preprocessing techniques like geometric correction, atmospheric correction, and noise reduction.

- Visual Interpretation Techniques: Mastering the skills of visual pattern recognition, feature identification, and contextual analysis within imagery. Practical application: identifying objects of interest (OOI) in satellite imagery for urban planning or disaster response.

- Photogrammetry and 3D Modeling: Understanding principles of creating 3D models from 2D imagery and their applications in various fields, such as surveying, archaeology, and architecture.

- Image Classification and Object Detection: Familiarizing yourself with supervised and unsupervised classification methods, object detection algorithms, and their use in automated feature extraction and analysis. Practical application: classifying land cover types from aerial imagery using machine learning techniques.

- Change Detection and Time Series Analysis: Understanding techniques for analyzing changes over time in imagery data, such as deforestation monitoring or urban sprawl analysis.

- Spatial Data Analysis and GIS Integration: Understanding how to integrate imagery data with other geospatial datasets using GIS software for comprehensive analysis.

- Interpretation Reporting and Communication: Effectively communicating findings and insights from imagery analysis through clear and concise reports, presentations, and visualizations.

- Ethical Considerations and Data Privacy: Understanding ethical implications of imagery analysis, including data privacy and responsible data handling.

Next Steps

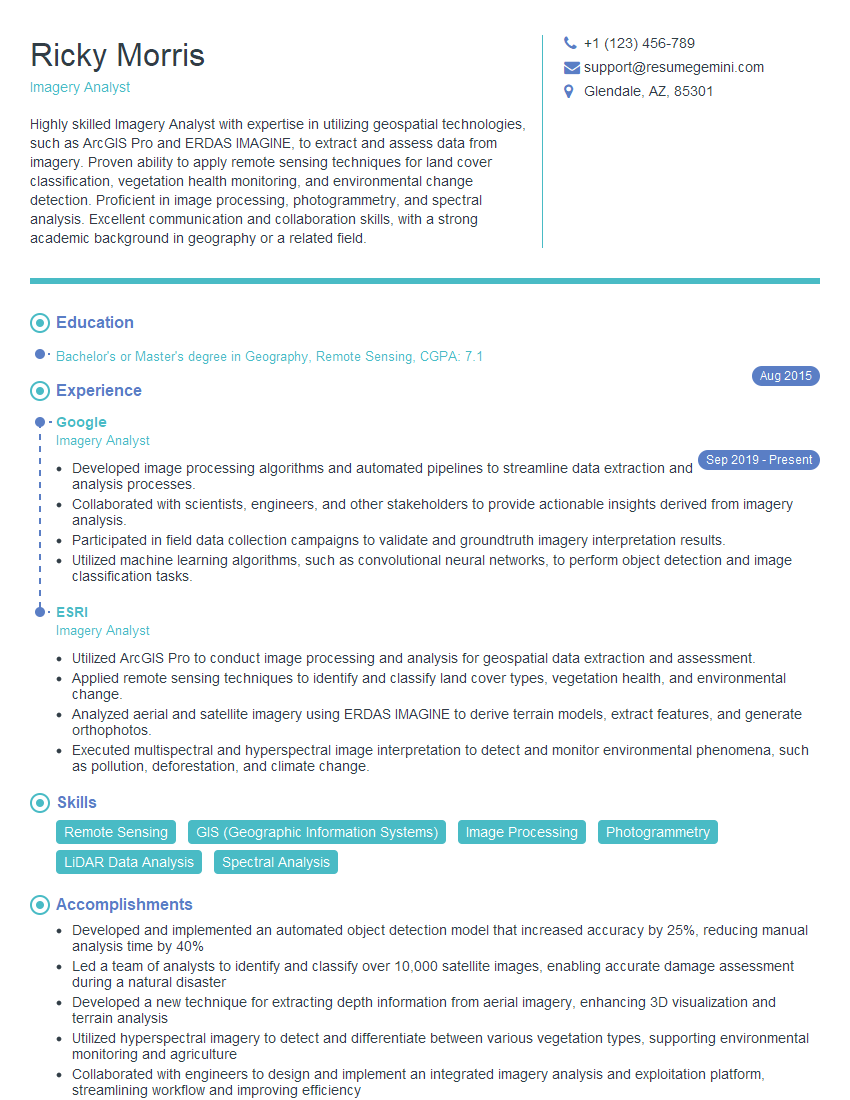

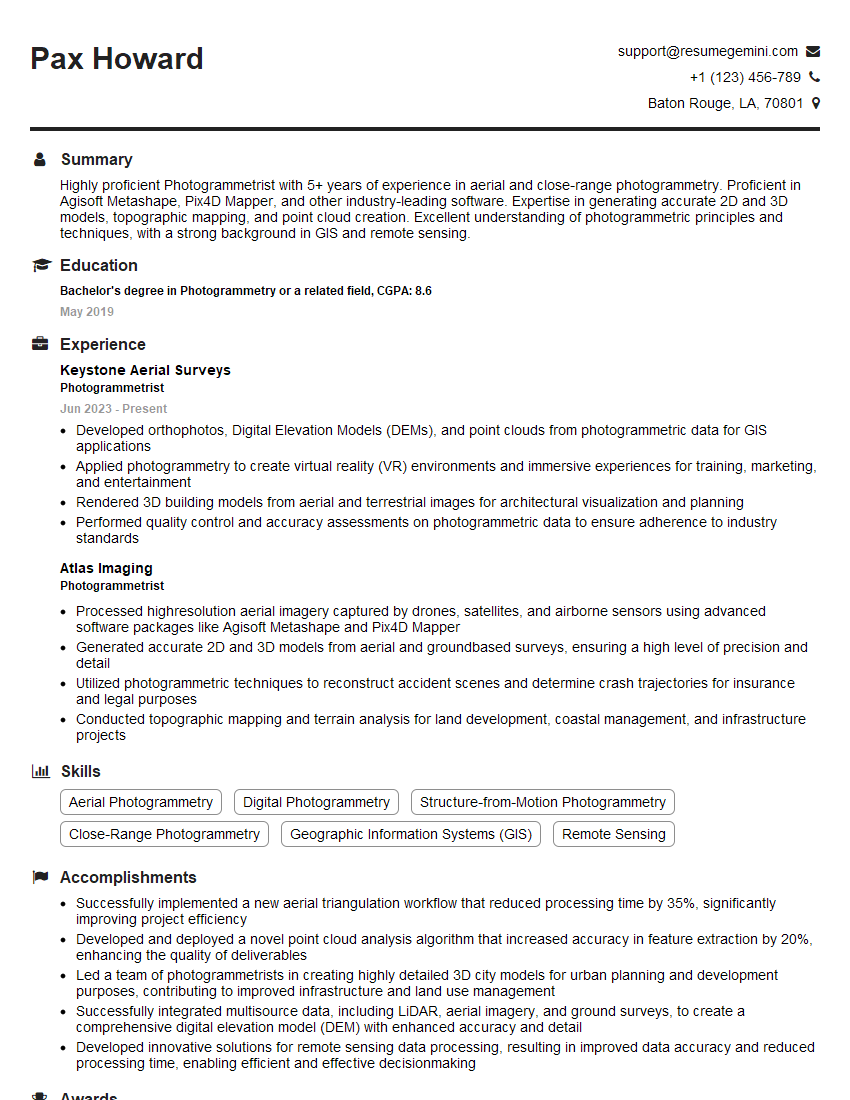

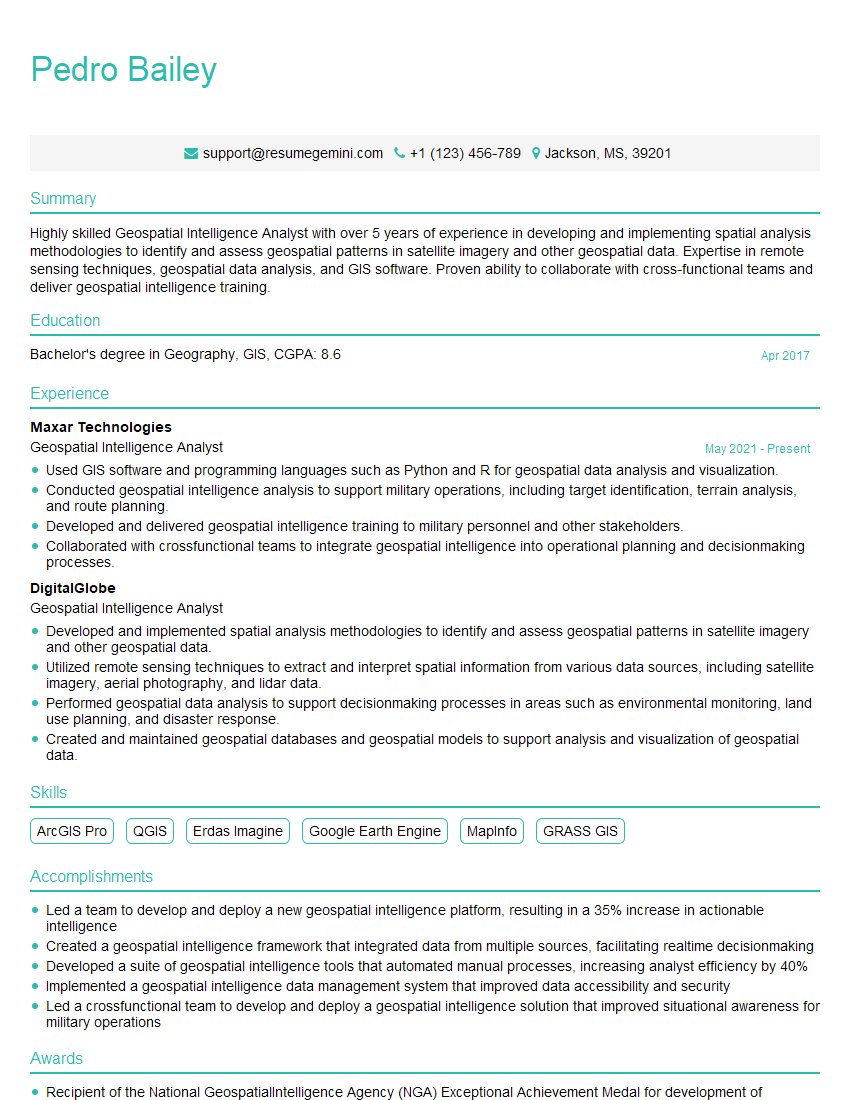

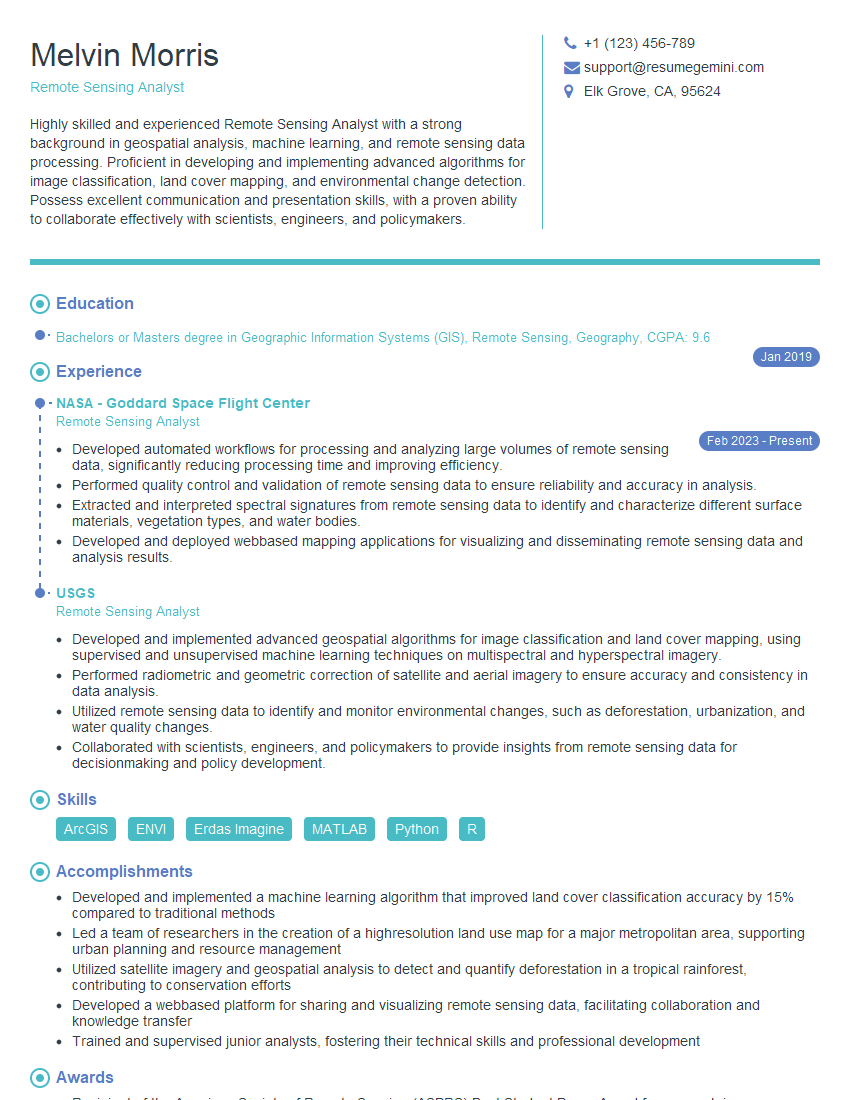

Mastering Imagery Analysis and Interpretation opens doors to exciting and impactful careers in various sectors, from environmental monitoring and urban planning to defense and intelligence. To maximize your job prospects, crafting a compelling and ATS-friendly resume is crucial. ResumeGemini is a trusted resource that can significantly enhance your resume-building experience. It provides tools and templates to create a professional document that highlights your skills and experience effectively. Examples of resumes tailored to Imagery Analysis and Interpretation are available within ResumeGemini to help guide you.
