Are you ready to stand out in your next interview? Understanding and preparing for In-Circuit Testing (ICT) interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in In-Circuit Testing (ICT) Interview

Q 1. Explain the fundamental principles of In-Circuit Testing (ICT).

In-Circuit Testing (ICT) is a crucial manufacturing test step that verifies the individual components and their interconnections on an assembled printed circuit board (PCB) before the board is built into a final product. Imagine it as a thorough health check for your circuit board. It works by applying electrical signals to individual components and measuring their responses against expected values. This allows for early detection of defects like shorts, opens, incorrect component values, and solder bridges, preventing faulty boards from progressing further down the manufacturing line.

Fundamentally, ICT relies on a specialized test fixture that physically connects to the PCB, delivering test signals and measuring the responses. This fixture is designed specifically for the PCB under test. The test results are then compared to a pre-programmed test plan which defines the expected behavior. Any deviation indicates a potential fault.

Q 2. Describe the different types of ICT test methods and their applications.

ICT employs several test methods, each with its strengths and applications:

- Analog Testing: Measures voltage, current, and resistance using analog signals. This is suitable for testing basic components like resistors, capacitors, and diodes.

- Digital Testing: Tests digital circuits and logic gates using digital signals (high/low voltage levels). This method is crucial for verifying the functionality of microcontrollers, logic chips, and digital interfaces.

- Boundary-Scan Testing (JTAG): Uses the standardized IEEE 1149.1 test access port to drive and observe IC pins through boundary-scan cells built into the devices. It’s particularly effective for detecting opens and shorts on interconnects that physical probes cannot reach, such as those under BGA packages.

- Mixed-Signal Testing: Combines analog and digital testing techniques, allowing comprehensive verification of PCBs with both analog and digital components. This is a common approach in modern electronics, which increasingly integrate both analog and digital functionality.

The choice of method depends on the complexity of the PCB, the types of components involved, and the required level of test coverage. For instance, a simple circuit might only need analog testing, while a sophisticated board with microprocessors might require mixed-signal testing with Boundary-Scan capabilities.

Q 3. What are the advantages and disadvantages of ICT compared to other test methods?

ICT offers several advantages over other testing methods, like functional testing or automated optical inspection (AOI):

- Early Fault Detection: ICT identifies component-level faults right after board assembly, before functional test and final product integration, minimizing rework and scrap costs.

- High Accuracy: ICT provides precise measurements, leading to accurate fault identification.

- High Throughput: ICT can be highly automated and allows for testing many boards efficiently.

However, ICT also has some limitations:

- Fixture Cost: Designing and manufacturing test fixtures can be expensive, particularly for complex PCBs.

- Test Program Development: Developing robust and efficient test programs requires specialized expertise and time.

- Limited Coverage: ICT primarily focuses on component-level faults and may not detect all functional issues.

The decision to implement ICT involves carefully weighing these advantages and disadvantages based on factors such as production volume, product complexity, and cost constraints. For high-volume production runs with complex PCBs, the upfront investment in ICT often pays for itself through reduced rework and improved product quality.
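The pay-back argument above can be made concrete with a quick break-even estimate. This is only a sketch: the function name and every cost figure are assumptions chosen for illustration, not data from a real production line.

```python
import math

def break_even_boards(upfront_cost, saving_per_board):
    """Number of boards at which cumulative savings cover the upfront cost."""
    if saving_per_board <= 0:
        raise ValueError("saving_per_board must be positive")
    return math.ceil(upfront_cost / saving_per_board)

# Hypothetical figures: fixture plus test-program development cost, and the
# average rework/scrap cost avoided per board thanks to earlier detection.
upfront = 25_000.0
saving = 0.50
print(break_even_boards(upfront, saving))
```

If the planned production volume is well above the break-even count, the investment is easier to justify; if it is below, a fixtureless method may be the better fit.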

Q 4. How does ICT contribute to improving product quality and reducing manufacturing costs?

ICT significantly improves product quality and reduces manufacturing costs by:

- Reducing Rework and Scrap: Early detection of faulty components and solder defects keeps defective boards from moving on to later assembly stages, saving the time and resources associated with rework or discarding defective units.

- Improving First-Pass Yield: By identifying and correcting defects early in the process, ICT boosts the percentage of boards that pass the initial test, minimizing production delays and wasted materials.

- Reducing Warranty Claims: By ensuring higher product quality, ICT minimizes the risk of field failures and associated warranty costs.

- Improving Overall Efficiency: Streamlined testing reduces bottlenecks in the production line, improving overall manufacturing efficiency.

For example, consider a manufacturer producing 100,000 circuit boards per year. If ICT reduces the failure rate by even one percentage point, that is 1,000 fewer defective boards per year, which translates into significant savings in materials, labor, and warranty claims.
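The arithmetic behind that example is simple enough to script. In the sketch below, the function names and the cost per failure are hypothetical; only the 100,000-board volume and the 1% figure come from the example above.

```python
def boards_saved(annual_volume, failure_rate_reduction):
    """Boards per year that no longer fail, for an absolute rate reduction."""
    return round(annual_volume * failure_rate_reduction)

def annual_savings(annual_volume, failure_rate_reduction, cost_per_failure):
    """Yearly savings if each avoided failure saves cost_per_failure."""
    return boards_saved(annual_volume, failure_rate_reduction) * cost_per_failure

# 100,000 boards per year and a reduction of one percentage point (0.01).
print(boards_saved(100_000, 0.01))
# Assumed average cost of one failure (materials, labor, warranty): 40.0
print(annual_savings(100_000, 0.01, 40.0))
```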

Q 5. Explain the role of a test fixture in ICT and its design considerations.

The test fixture in ICT is a critical component that serves as the physical interface between the ICT system and the PCB. Think of it as a precisely engineered bed for your circuit board, complete with numerous probes that make contact with specific test points on the board. The design of the fixture is crucial for the accuracy and reliability of the test results.

Key design considerations for an ICT fixture include:

- Accuracy of Probe Placement: Probes must precisely contact the designated test points to ensure accurate measurements.

- Durability and Reliability: The fixture should withstand repeated use and maintain consistent contact throughout its lifespan.

- Accessibility: The fixture design should allow easy access to all necessary test points on the PCB.

- Cost-Effectiveness: The design should balance functionality with cost-effectiveness, considering factors like material selection and manufacturing complexity.

Poor fixture design can lead to inaccurate test results, false failures, and damage to the PCBs under test. A well-designed fixture is essential for achieving reliable and efficient ICT.
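One probe-placement check that is easy to automate is test-point spacing. The sketch below flags pairs of test points that sit closer together than a minimum probe pitch; the coordinates, the names, and the 1.27 mm pitch are assumptions for illustration, and the real limit depends on the probes the fixture vendor uses.

```python
from itertools import combinations
from math import hypot

def too_close(test_points, min_pitch_mm):
    """Return (name_a, name_b, distance) for test points closer than min_pitch_mm.

    test_points maps a test-point name to its (x, y) position in millimetres.
    """
    violations = []
    for (name_a, (xa, ya)), (name_b, (xb, yb)) in combinations(sorted(test_points.items()), 2):
        distance = hypot(xa - xb, ya - yb)
        if distance < min_pitch_mm:
            violations.append((name_a, name_b, round(distance, 3)))
    return violations

# Hypothetical test-point locations in mm.
points = {"TP1": (0.0, 0.0), "TP2": (1.0, 0.0), "TP3": (5.0, 5.0)}
print(too_close(points, min_pitch_mm=1.27))
```

Running a check like this against the layout before the fixture is ordered is cheaper than discovering a spacing problem on the production floor.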

Q 6. Describe the process of developing an ICT test program.

Developing an ICT test program involves several steps:

- Netlist Analysis: The first step involves analyzing the circuit’s netlist—a description of the connections between components—to identify the test points and the connections between them.

- Test Point Selection: Strategically selecting test points is critical to ensure adequate fault coverage with a minimal number of tests. This often involves a trade-off between test coverage and test time.

- Test Algorithm Development: This involves creating a set of instructions that the ICT machine will follow to test each component and connection on the PCB. Algorithms need to be designed considering the specific characteristics of the tested components and the tolerances of measurements.

- Test Program Generation: The test algorithms are then translated into a language understood by the ICT machine. Specialized software is typically used for this purpose.

- Test Program Verification: Before deploying the program, thorough testing is essential to validate its accuracy and efficiency. This frequently involves testing on a prototype fixture and making adjustments as needed.

Test program development requires a deep understanding of electronics, circuit analysis, and the ICT system’s capabilities. Experienced engineers use specialized software and often rely on simulation to optimize the test procedures before actual implementation.
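To make the steps above more tangible, here is a minimal sketch of turning netlist data into limit tests. The data model (`Part`, `MeasurementStep`) and the function `generate_steps` are hypothetical; real ICT software uses its own formats and far more detailed component models.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Part:
    refdes: str            # reference designator, e.g. "R1"
    value: float           # nominal value (ohms or farads)
    tolerance_pct: float   # component tolerance in percent
    nets: tuple            # the two nets the part sits between

@dataclass(frozen=True)
class MeasurementStep:
    name: str
    nets: tuple
    low_limit: float
    high_limit: float

def generate_steps(parts, probed_nets, margin_pct=0.0):
    """Build one limit test per part whose nets both have a test point.

    Returns (steps, skipped_refdes). margin_pct widens the limits to allow
    for measurement uncertainty.
    """
    steps, skipped = [], []
    for part in parts:
        if all(net in probed_nets for net in part.nets):
            spread = part.value * (part.tolerance_pct + margin_pct) / 100
            steps.append(MeasurementStep(
                name=f"measure_{part.refdes}",
                nets=part.nets,
                low_limit=part.value - spread,
                high_limit=part.value + spread,
            ))
        else:
            skipped.append(part.refdes)  # no probe access: needs another method
    return steps, skipped

# Hypothetical two-part netlist; net N3 has no test point.
parts = [
    Part("R1", 1000.0, 5.0, ("N1", "GND")),
    Part("C1", 1e-7, 10.0, ("N2", "N3")),
]
steps, skipped = generate_steps(parts, probed_nets={"N1", "N2", "GND"})
print(steps)
print(skipped)
```

The skipped list is as useful as the generated steps: it shows where test-point access limits coverage and where another method has to fill the gap.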

Q 7. What are the common challenges faced during ICT implementation?

Implementing ICT can present several challenges:

- Fixture Design Complexity: Designing effective and cost-efficient fixtures for complex PCBs can be a significant challenge.

- Test Program Development Time: Developing comprehensive and reliable test programs requires significant expertise and time, particularly for complex boards.

- Test Coverage Limitations: ICT might not detect all possible faults, requiring additional test methods to achieve complete coverage. For instance, ICT excels at detecting shorts and opens but may not fully test complex signal integrity issues.

- Cost of Implementation: The initial investment in ICT equipment, fixtures, and software can be substantial.

- Maintenance and Calibration: Regular maintenance and calibration of the ICT equipment are crucial for maintaining accuracy and reliability.

Overcoming these challenges often requires careful planning, collaboration between design and test engineers, and a commitment to ongoing maintenance. Investing in proper training and utilizing advanced software tools can greatly ease the implementation process and enhance the effectiveness of ICT.

Q 8. How do you troubleshoot ICT test failures?

Troubleshooting ICT test failures requires a systematic approach. Think of it like detective work – you need to gather clues and eliminate possibilities. The first step is to analyze the test results. Which tests failed? What are the specific failure codes? This pinpoints the area of the board where the problem lies.

Next, I’d visually inspect the board in the area indicated by the failed tests, looking for obvious issues like shorts, open circuits, or misplaced components. A multimeter is invaluable at this stage for verifying connections and component values.

If the visual inspection yields nothing, I’d then move to more sophisticated techniques. This could involve using a logic analyzer or oscilloscope to probe signals and determine if they meet the expected specifications. Sometimes, using a known good board for comparison helps identify differences in signal behavior. Finally, I’d review the test program itself to confirm that the tests and limits are correct and that the fixture is properly configured. This step helps rule out programming errors or fixture problems as the root cause.

For example, if a test fails indicating a short on a particular net, I would first visually inspect that net for any bridging solder or component placement errors. Then, I’d use a multimeter to confirm the short exists and trace the source to the problematic component or connection.

Q 9. Explain different fault detection techniques used in ICT.

ICT employs several fault detection techniques, each with its strengths and weaknesses. Think of them as different tools in a toolbox, each best suited for a specific task.

- Resistance Measurement: This is a fundamental technique, checking the resistance between various nodes to identify shorts, opens, or incorrect component values. It’s like checking if a pipe is blocked or leaking.

- Capacitance Measurement: This measures the capacitance between nodes, helping detect faulty capacitors or shorts involving capacitive elements. It’s similar to testing the capacity of a water tank.

- Inductance Measurement: Used to check inductors and coils for opens or shorts. It’s analogous to checking how strongly the water in a long pipe resists changes in flow.

- Diode Testing: This verifies diodes by measuring the forward voltage drop and confirming that the diode blocks current in the reverse direction. It’s like checking if a one-way valve works correctly.

- Transistor Testing: Tests the amplification characteristics and functionality of transistors. This is like checking if a relay or switch operates as designed.

- Boundary Scan Testing (JTAG): This technique, which I’ll elaborate on later, provides access to internal circuit nodes for testing, even with the board populated.

The specific techniques used depend on the board’s design and the types of components present. A well-designed ICT program will employ a combination of these methods to ensure comprehensive testing.
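As an illustration of the first technique, the sketch below classifies a single resistance reading. The function name and the short/open thresholds are assumptions; in practice the thresholds are set per component and per tester, and very low-value or very high-value parts need different limits.

```python
def classify_resistance(measured_ohms, nominal_ohms, tolerance_pct,
                        short_below_ohms=1.0, open_above_ohms=1e7):
    """Classify a reading as 'short', 'open', 'pass' or 'out_of_tolerance'."""
    if measured_ohms < short_below_ohms:
        return "short"
    if measured_ohms > open_above_ohms:
        return "open"
    spread = nominal_ohms * tolerance_pct / 100
    if nominal_ohms - spread <= measured_ohms <= nominal_ohms + spread:
        return "pass"
    return "out_of_tolerance"

# A nominal 1 kOhm, 5 % resistor measured four different ways.
for reading in (0.2, 1010.0, 1200.0, 5e7):
    print(reading, classify_resistance(reading, 1000.0, 5.0))
```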

Q 10. What are the key performance indicators (KPIs) for ICT?

Key Performance Indicators (KPIs) for ICT are critical to ensuring efficient and effective testing. They help us monitor and improve our process.

- First Pass Yield (FPY): The percentage of boards that pass the ICT test on the first attempt. A higher FPY indicates fewer defects and a more efficient manufacturing process.

- Test Time: The time it takes to test a single board. Shorter test times reduce production bottlenecks and improve throughput.

- Test Coverage: The percentage of components and nets tested. Higher coverage means greater confidence in the quality of the manufactured boards.

- Defect Detection Rate (DDR): The percentage of actual defects identified by the ICT. This is crucial for assessing the effectiveness of the test program in finding real problems.

- False Failure Rate: The percentage of tests that indicate a failure when there isn’t one. A high rate indicates problems with the test program or fixture.

- Cost per Test: This includes hardware, software, labor, and maintenance costs, providing a financial perspective on testing efficiency.

Tracking these KPIs helps identify areas for improvement, optimizing the ICT process for better quality and cost-effectiveness.
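Several of these KPIs are plain ratios, so they are easy to compute from production counts. The sketch below assumes hypothetical counter names and takes the false failure rate relative to the number of boards tested; other definitions use a different denominator.

```python
def pct(part, whole):
    """Return part/whole as a percentage."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole

def ict_kpis(boards_tested, passed_first_time, false_failures,
             defects_found, defects_total):
    """Compute a few ICT KPIs from raw counts."""
    return {
        "first_pass_yield_pct": pct(passed_first_time, boards_tested),
        "false_failure_rate_pct": pct(false_failures, boards_tested),
        "defect_detection_rate_pct": pct(defects_found, defects_total),
    }

# Hypothetical counts for one production week.
print(ict_kpis(boards_tested=1000, passed_first_time=950,
               false_failures=10, defects_found=45, defects_total=50))
```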

Q 11. How do you ensure the accuracy and reliability of ICT test results?

Ensuring accurate and reliable ICT test results requires a multi-pronged approach, focusing on various aspects of the process.

- Fixture Design and Calibration: A well-designed fixture is critical. It must accurately contact the test points on the PCB, minimizing the risk of false failures due to poor contact. Regular calibration of the fixture ensures its accuracy over time.

- Test Program Development and Verification: The ICT test program must be meticulously developed, thoroughly tested, and verified against the board’s design. This includes simulating the tests and verifying against known good boards.

- Component Library Management: The ICT system needs an accurate and up-to-date component library to ensure the test parameters match the actual components used. Incorrect library data can lead to false failures.

- Regular Maintenance: Regular maintenance and calibration of the ICT equipment and software are crucial. This includes checking for worn-out probes, cleaning the fixture contacts, and ensuring the software is updated.

By addressing these factors, we can drastically reduce the chances of inaccurate or unreliable test results, giving us confidence in the quality of our manufactured products.

Q 12. Explain the concept of boundary scan testing and its role in ICT.

Boundary scan testing, standardized as IEEE 1149.1 and commonly called JTAG after the Joint Test Action Group that developed it, is a powerful technique that lets us test nets on a board without touching them with physical probes. Imagine it as having access to the board’s internal wiring diagram without having to physically touch each wire.

It uses dedicated boundary scan cells built into compliant integrated circuits (ICs), one at each device pin. These cells are linked into a serial chain so that test data can be shifted in and captured values shifted out, which lets us drive and observe the IC pins and the interconnects between devices. This allows testing of connections even when the board is fully populated and components are soldered in place.

In ICT, boundary scan is especially useful for testing complex, high-density boards where it’s difficult to access all the test points. It helps detect hidden shorts or opens that would be hard to pinpoint through other methods, extending the reach of ICT and giving us more comprehensive test coverage.
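The pattern logic of a boundary-scan interconnect test can be sketched in software. The simulation below drives a walking-one pattern onto a set of nets and compares what the receiving cells would capture. It does not talk to real hardware, and its fault model is an assumption: an open net reads 0 and shorted nets behave as a wired-OR. On a real board, these bits are shifted serially through the scan chain.

```python
def walking_one_patterns(count):
    """One pattern per net: that net driven to 1, all others to 0."""
    return [[1 if i == j else 0 for j in range(count)] for i in range(count)]

def capture(drive, nets, opens, shorts):
    """Values seen by the receiving cells under the assumed fault model."""
    level = dict(zip(nets, drive))
    for a, b in shorts:
        merged = level[a] | level[b]      # assumed wired-OR behaviour
        level[a] = level[b] = merged
    return [0 if net in opens else level[net] for net in nets]

def interconnect_test(nets, opens=frozenset(), shorts=()):
    """Return the sorted list of nets whose captured value ever differs."""
    failing = set()
    for drive in walking_one_patterns(len(nets)):
        seen = capture(drive, nets, opens, shorts)
        for net, expected, actual in zip(nets, drive, seen):
            if expected != actual:
                failing.add(net)
    return sorted(failing)

nets = ["A", "B", "C", "D"]
print(interconnect_test(nets))                       # fault-free board
print(interconnect_test(nets, opens={"C"}))          # open on net C
print(interconnect_test(nets, shorts=[("A", "B")]))  # A shorted to B
```

The sketch handles each short as an independent pair; chains of shorts across three or more nets would need the groups merged first.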

Q 13. What are the different types of ICT test equipment?

The types of ICT test equipment vary depending on the complexity and volume of testing. However, some key components are common across most systems:

- ICT Tester: The central unit that controls the entire testing process. It’s the brain of the operation.

- Test Fixture: A specialized device that makes contact with the PCB’s test points. It’s custom-designed for each board and is often the most critical component for accuracy.

- Probes/Needles: These create the physical connection between the fixture and the PCB. Their condition and maintenance are crucial.

- Power Supplies: Provide the necessary power to the board under test.

- Signal Generators/Analyzers: Used to generate and analyze signals for testing various board functionalities.

- Software: The software controls the entire test process, including test program execution, data analysis, and reporting.

Depending on the level of automation, there can also be automated handlers and conveyor systems for efficient throughput in high-volume manufacturing.

Q 14. Describe your experience with specific ICT software and hardware.

Throughout my career, I’ve worked extensively with several ICT software and hardware platforms. My experience includes Teradyne’s TestStation and Keysight’s i3070 systems, both widely used in-circuit test platforms. I’m proficient in their respective software packages for test program development, debugging, and data analysis.

I’m also familiar with the creation of test fixtures, leveraging my CAD experience to optimize their design for efficient testing and reliable probe contact. In one project involving a high-density board with many fine-pitch components, I used the TestStation system with a custom-designed fixture to achieve over 99% test coverage, resulting in a significant reduction in the number of post-manufacturing failures. The expertise I gained in that project led to improvements in both fixture design and test program efficiency.

Furthermore, I’ve worked with various types of test probes and adaptors, understanding their limitations and how to select the optimal ones for a given application. My practical experience in selecting, maintaining and troubleshooting hardware and software ensures reliable and efficient ICT operation.

Q 15. How do you manage and analyze large volumes of ICT test data?

Managing and analyzing large ICT test datasets requires a structured approach. Think of it like organizing a massive library – you can’t just throw everything on the shelves and expect to find what you need. We utilize a combination of automated data analysis tools and sophisticated database systems. For example, we often employ specialized software that can filter, sort, and aggregate data based on various parameters like test results, board IDs, and timestamps. This allows us to quickly identify trends, pinpoint problematic components or tests, and generate insightful reports.

Furthermore, we leverage statistical analysis techniques to identify outliers and potential systematic errors. Imagine a situation where a specific component consistently fails a particular test. Statistical analysis can help us confirm if this is a genuine issue or just random noise within the data. Finally, we use data visualization tools to represent the vast data sets graphically – charts and dashboards help us identify patterns and problems far quicker than manually reviewing spreadsheets.

In a recent project involving millions of test records, we used a combination of Python scripts and a relational database to process the data. The Python scripts automatically identified failed tests and generated summary reports, while the database provided efficient data storage and retrieval. This approach significantly reduced our analysis time and helped pinpoint the root cause of several manufacturing defects.
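A small version of that Python-plus-database workflow might look like the sketch below. It uses an in-memory SQLite database from the standard library; the table layout, column names, and sample rows are hypothetical.

```python
import sqlite3

def failure_counts(rows):
    """Load (board_id, test_name, passed) rows and count failures per test."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE results (board_id TEXT, test_name TEXT, passed INTEGER)"
    )
    conn.executemany("INSERT INTO results VALUES (?, ?, ?)", rows)
    query = """
        SELECT test_name, COUNT(*) AS failures
        FROM results
        WHERE passed = 0
        GROUP BY test_name
        ORDER BY failures DESC, test_name
    """
    counts = conn.execute(query).fetchall()
    conn.close()
    return counts

# Hypothetical test records: 1 means the step passed, 0 means it failed.
sample = [
    ("B001", "R12_value", 1),
    ("B002", "R12_value", 0),
    ("B003", "R12_value", 0),
    ("B003", "C7_value", 0),
    ("B004", "C7_value", 1),
]
print(failure_counts(sample))
```

Sorting by failure count gives a simple Pareto view, which is usually the first thing to look at when hunting for a recurring defect.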

Q 16. Explain your experience with ICT test program debugging and optimization.

Debugging and optimizing ICT test programs is a crucial part of my role. It’s like being a detective, solving a puzzle piece by piece. The first step involves meticulous examination of the test program’s logic and the test fixture’s physical connections to the unit under test. I frequently use simulation tools to model the circuit behavior and check the accuracy of my test program. This helps prevent costly mistakes.

Optimization is about efficiency. We aim to reduce test time while maintaining accuracy. This often involves identifying redundant test steps or optimizing the test sequence to improve throughput. A specific example involved a test program that ran for over 20 minutes per unit. By reorganizing the test steps and optimizing the data acquisition routines, we reduced the time to under 5 minutes, dramatically increasing efficiency.

For example, if a test program consistently fails to detect a short circuit on a specific component, I will systematically check the test sequence, the test fixture connections, and finally the actual test parameters to see if I’ve defined the short-circuit thresholds correctly. We often employ advanced debugging techniques such as logic analyzers and oscilloscopes to pinpoint the exact cause of test failures.

Example code snippet (illustrative):

    // Check if voltage is within tolerance
    if (measuredVoltage > expectedVoltage + tolerance ||
        measuredVoltage < expectedVoltage - tolerance) {
        // Log error and mark as fail
    }

Q 17. How do you handle discrepancies between ICT test results and expectations?

Discrepancies between ICT test results and expectations require a systematic investigation, almost like a scientific experiment. First, we verify the accuracy of the test program and the test fixture through repeated tests and simulations. This ensures that the test is properly set up and working as designed.

Next, we carefully examine the component specifications to confirm the expected results align with what the manufacturer provides. Sometimes a discrepancy can arise from outdated specifications or even errors in the documentation. Following this, we examine the board itself. This might involve visual inspection, using magnification to check for any damaged components or faulty soldering.

If the problem is not apparent, we then employ more advanced techniques like X-ray inspection or microscopic examination to identify potential hidden defects. I recall an instance where ICT consistently reported a failure on a specific resistor. Visual inspection showed nothing unusual, but X-ray scans revealed that the resistor had a manufacturing defect not visible to the naked eye. A thorough, step-by-step investigation like this usually uncovers the root cause.

Q 18. Describe your experience working with cross-functional teams in an ICT environment.

Cross-functional collaboration is essential in the ICT environment. My experience involves working closely with design engineers, manufacturing engineers, and quality control personnel. We frequently use shared databases and collaborative software (e.g., project management platforms) to coordinate efforts and ensure consistent communication. For example, I often collaborate with design engineers to validate the testability of new designs, preventing issues that could arise later during production testing.

In one project, we worked closely with the manufacturing team to optimize the ICT process to adapt to changes in the production line. This involved modifying the test fixtures to accommodate new assembly techniques and adjusting the test program parameters to account for variations in component tolerances. Effective communication and a shared understanding of goals are paramount for successful collaboration in these cross-functional projects.

Q 19. How do you stay updated with the latest advancements in ICT technology?

Staying current with ICT advancements is crucial in this rapidly evolving field. I regularly attend industry conferences and webinars, read specialized publications, and participate in online forums and communities dedicated to ICT. Additionally, I actively seek out training courses and workshops to expand my skillset in newer technologies.

I also stay abreast of new test equipment and software releases. This includes monitoring manufacturers' websites, studying new specifications and features, and regularly evaluating if upgraded software and equipment would increase efficiency and test coverage.

For instance, I recently completed a course on high-speed digital testing techniques, which has enhanced my ability to troubleshoot complex digital circuits and improved the quality and speed of our test procedures.

Q 20. What are the safety precautions you would take while working with ICT equipment?

Safety is paramount when working with ICT equipment. This involves adhering to strict protocols and taking necessary precautions. Before working on any equipment, we always ensure the power is turned off and disconnected from the mains supply. We use proper grounding techniques to prevent electrical shocks and utilize personal protective equipment (PPE) like safety glasses and anti-static wrist straps.

We follow established safety procedures for handling test probes and fixtures to avoid short circuits and accidental damage to components. Furthermore, we regularly inspect the equipment for any signs of damage or wear and tear, and we have regular maintenance to ensure the equipment is in good working condition. Finally, our workplace adheres to strict safety regulations and provides necessary training for all personnel involved with ICT operations.

Q 21. Explain your experience with different types of test adapters and their application in ICT.

My experience encompasses various types of test adapters, each suited to specific needs. Think of adapters as specialized tools allowing us to connect ICT equipment to a wide range of devices. We commonly use bed-of-nails fixtures for through-hole and surface-mount PCBs. These fixtures use numerous needles to contact test points on the board. The design of these fixtures is critical for ensuring good contact and avoiding damage to the PCB.

For prototypes and low-volume builds, where a dedicated fixture is hard to justify, we use flying-probe systems, which move a small number of robotic probes to individual test points. These systems are highly versatile and can accommodate a wide range of board designs without a custom fixture, at the cost of longer test times. I have also worked with specialized adapters for testing devices with non-standard connectors or unique test requirements.

In one project, we used a custom adapter to test a device with a very high-density connector. The design of this adapter required careful consideration to avoid signal interference and ensure reliable contact. Adapters are an essential component of ICT testing that greatly enhances the flexibility of the equipment.

Q 22. Describe your experience with ICT testing of different PCB types and complexities.

My experience in ICT spans a wide range of PCB types and complexities, from simple single-layer boards with a handful of components to highly complex multi-layer boards incorporating high-speed digital and analog circuitry, microcontrollers, and memory devices. I've worked with various technologies including surface mount technology (SMT), through-hole technology (THT), and mixed technology PCBs. The complexity affects the testing strategy significantly. Simple boards might only require basic continuity and short tests, while complex boards demand comprehensive testing including boundary-scan, functional tests, and even in-system programming verification. For instance, testing a simple power supply board is fundamentally different from testing a complex automotive control unit. The former might focus on component presence and correct connections, while the latter requires extensive functional verification based on the expected behavior of the entire system.

I'm proficient in using various ICT test fixtures and software, adapting my approach based on the specific requirements of each PCB. This includes understanding and troubleshooting test failures, identifying root causes, and working collaboratively with design and manufacturing teams to resolve issues efficiently. For example, on a recent project involving a high-frequency communication board, we had to carefully design the test fixture to minimize signal reflections and ensure accurate measurements, which wasn’t trivial.

Q 23. How do you determine the appropriate ICT test coverage for a given product?

Determining appropriate ICT test coverage is crucial for balancing test effectiveness and cost. It's a process that requires careful consideration of several factors. First, a thorough Failure Mode and Effects Analysis (FMEA) is performed to identify potential failures and their impact. This helps prioritize tests on critical components and circuits. Next, the design's complexity, the potential for manufacturing defects, and the required product reliability dictate the necessary test coverage.

For high-reliability applications, a higher test coverage is needed, often aiming for 100% testing of critical nets. This might involve testing every connection and component individually. However, for less critical applications, a more targeted approach might suffice, focusing on key functionalities and critical paths. I use statistical analysis to determine acceptable levels of test coverage based on historical data and risk assessment. Ultimately, the goal is to achieve a balance that ensures high quality while remaining cost-effective.

For example, if a particular component has a high failure rate in the past, we might increase the test coverage for it through additional tests such as component value verification, rather than relying solely on continuity tests.
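A coverage figure and a risk-ordered list of gaps can be derived from the same data. In the sketch below, the function names, the component names, and the failure rates are hypothetical; the idea is simply to compute coverage and then rank untested items by how often they failed in the past.

```python
def coverage_pct(all_items, tested_items):
    """Percentage of items (components or nets) that have at least one test."""
    if not all_items:
        raise ValueError("all_items must not be empty")
    return 100.0 * len(all_items & tested_items) / len(all_items)

def untested_by_risk(all_items, tested_items, historical_failure_rate):
    """Untested items, highest historical failure rate first."""
    untested = all_items - tested_items
    return sorted(
        untested,
        key=lambda item: (-historical_failure_rate.get(item, 0.0), item),
    )

# Hypothetical board with four components, two of them tested.
components = {"R1", "R2", "C1", "U1"}
tested = {"R1", "R2"}
rates = {"U1": 0.03, "C1": 0.01}  # assumed historical failure rates

print(coverage_pct(components, tested))
print(untested_by_risk(components, tested, rates))
```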

Q 24. Explain your understanding of ICT test specifications and documentation.

ICT test specifications and documentation are the foundation of a successful ICT process. They detail every aspect of the test program, from fixture design to test procedures and expected results. This documentation must be precise and unambiguous to ensure consistent and repeatable tests across different production runs. The specifications typically include:

- Test program code: This defines the sequence of tests, including measurement points, tolerance limits, and pass/fail criteria. Often written in a specific ICT programming language.

Example (illustrative syntax): TEST_NET(Net1, Continuity, 0.1ohm);

- Fixture design documentation: This outlines the physical arrangement of the test fixture, including probe positions, component identification, and grounding strategy.

- Test procedures: A step-by-step guide to executing the test program and interpreting the results.

- Acceptance criteria: Defines the acceptable limits for measurements and the conditions under which a unit is considered to have passed or failed the test.

- Test report format: Specifies the information that needs to be recorded for each tested unit, including test results, failure codes, and timestamps.

Good documentation ensures that the test process is repeatable, auditable, and easily maintained, allowing for quick troubleshooting and efficient problem solving.
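As one way to pin down a test report format, the sketch below defines a per-board record and serializes it to JSON. The field names are assumptions that mirror the items listed above (test results, failure codes, timestamps) and do not follow any particular tester's format.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone
from typing import List

def _utc_now():
    """Current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()

@dataclass
class BoardReport:
    board_id: str
    program_version: str
    passed: bool
    failure_codes: List[str] = field(default_factory=list)
    timestamp: str = field(default_factory=_utc_now)

def to_json(report):
    """Serialize a report with stable key order so files diff cleanly."""
    return json.dumps(asdict(report), sort_keys=True)

# Hypothetical failing board with one failure code.
report = BoardReport("B001", "1.0", False, ["R12_HIGH"])
print(to_json(report))
```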

Q 25. How do you contribute to continuous improvement of ICT processes?

Continuous improvement of ICT processes is vital to increase efficiency, reduce costs, and enhance product quality. My contributions include:

- Data analysis: Regularly analyzing test data to identify trends, common failures, and areas for optimization. This often involves using statistical process control (SPC) techniques.

- Fixture optimization: Working with the design and manufacturing teams to improve the efficiency and effectiveness of the test fixtures. This may involve redesigning fixtures to accommodate new products or improving probe placement for better contact reliability.

- Test program refinement: Continuously refining and improving the test programs by incorporating feedback from production, failure analysis, and design reviews. This might involve adding new tests or adjusting existing tests to increase their accuracy and sensitivity.

- Automation: Implementing automation to streamline the test process and reduce human intervention. For example, automating test report generation and failure analysis.

- Training: Providing training to technicians on best practices for fixture maintenance, test program execution, and troubleshooting.

For instance, by analyzing failure data, we identified a recurring issue with a specific component during a particular stage of the test process. This led to an investigation which revealed a fixture design flaw that was corrected, ultimately reducing the failure rate by 15%.
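The kind of failure-data analysis described above often starts with a simple Pareto ranking of failure codes. The sketch below assumes the failure log is available as a plain list of code strings; the codes themselves are made up, and `pareto` is a hypothetical helper name.

```python
from collections import Counter


def pareto(failures: list[str]) -> list[tuple[str, int, float]]:
    """Return (code, count, cumulative share) sorted by descending count."""
    counts = Counter(failures)
    total = sum(counts.values())
    rows = []
    running = 0
    for code, count in counts.most_common():
        running += count
        rows.append((code, count, running / total))
    return rows


# Made-up failure log: one entry per failed test step.
log = ["U7_open", "R12_value", "U7_open", "C3_short", "U7_open", "R12_value"]

for code, count, cumulative in pareto(log):
    print(f"{code:10s} {count:3d} {cumulative:6.1%}")
```

The codes at the top of the list are usually the first candidates for a fixture or process investigation.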

Q 26. Describe a situation where you had to solve a complex ICT problem.

One challenging ICT problem I encountered involved a high-speed digital board with embedded microprocessors. Initially, the ICT test program was failing to detect intermittent opens related to fine-pitch BGA components. The failures were sporadic and difficult to reproduce consistently.

My approach was systematic:

- Thorough review of test program and fixture: We carefully reviewed the test program for any potential flaws, such as insufficient test frequencies or incorrect test limits. Additionally, we inspected the test fixture for any loose connections or potential damage to the probes.

- Failure analysis: A detailed analysis of the failed boards was undertaken, including microscopic inspection of the BGA solder joints, X-ray inspection, and signal analysis.

- Improved test methodology: We moved away from simple continuity tests and implemented more advanced techniques, including boundary scan tests and impedance measurements. This helped us detect the intermittent opens more reliably.

- Fixture modification: After identifying areas for fixture improvement, we adjusted the probe pressure and re-designed specific probe points to ensure better contact with the problematic BGA pins.

Through this multi-pronged approach, we ultimately resolved the issue, resulting in a much more reliable and effective ICT testing process.

Q 27. What is your experience with statistical process control (SPC) in ICT?

My experience with Statistical Process Control (SPC) in ICT is extensive. SPC is essential for monitoring and controlling the ICT process, ensuring consistent test quality and identifying potential problems early. I regularly use control charts matched to the type of data: X-bar and R charts for measured values such as component readings and test times, p-charts for pass/fail rates, and c- or u-charts for fault counts. This allows for proactive identification of trends and deviations from established baselines.

Control charts are not just used for detecting out-of-control conditions; they also help me to assess the capability of the ICT process. Process Capability Indices, such as Cp and Cpk, are used to determine whether the process is capable of meeting the required specifications consistently. This information is crucial for making data-driven decisions related to process optimization, fixture maintenance, and test program adjustments.
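As a small worked example of the capability indices, the sketch below computes Cp = (USL - LSL) / 6σ and Cpk = min(USL - μ, μ - LSL) / 3σ for a set of measurements. It is a simplification: it uses the overall sample standard deviation, which strictly speaking gives the long-term variants (Pp and Ppk), and the readings and specification limits are invented.

```python
import statistics


def capability(samples, lsl, usl):
    """Return (Cp, Cpk) for measurements against lower/upper spec limits."""
    mean = statistics.mean(samples)
    sigma = statistics.stdev(samples)  # sample standard deviation
    cp = (usl - lsl) / (6 * sigma)
    cpk = min(usl - mean, mean - lsl) / (3 * sigma)
    return cp, cpk


# Invented resistance readings (ohms) for a 10 kOhm part with +/-5 % limits.
readings = [10_020, 9_980, 10_050, 9_960, 10_010, 10_030, 9_990, 10_000]
cp, cpk = capability(readings, lsl=9_500, usl=10_500)
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}")
```

Cp only compares the spread with the width of the specification window, while Cpk also penalizes a mean that sits off-center, so Cpk is never larger than Cp.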

For example, by monitoring the pass/fail rate of the ICT process using control charts, I can quickly identify an upward trend of failures, potentially indicating a deterioration in the test fixture or a change in component quality. This enables us to take corrective action before a major issue arises, saving time, money, and potentially preventing the shipment of defective products.
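For pass/fail data, an attribute chart such as a p-chart is one common choice. The sketch below computes the center line and 3-sigma limits per lot and flags lots whose failure fraction falls outside them. The lot counts are invented, the function names are hypothetical, and in practice the limits would normally come from a baseline period rather than from the same data being judged.

```python
import math


def p_chart_limits(fails, tested):
    """Center line and per-lot 3-sigma limits for a p-chart."""
    p_bar = sum(fails) / sum(tested)
    limits = []
    for n in tested:
        sigma = math.sqrt(p_bar * (1 - p_bar) / n)
        limits.append((max(0.0, p_bar - 3 * sigma), min(1.0, p_bar + 3 * sigma)))
    return p_bar, limits


def out_of_control(fails, tested):
    """Indices of lots whose failure fraction lies outside the limits."""
    _, limits = p_chart_limits(fails, tested)
    return [
        i
        for i, (f, n) in enumerate(zip(fails, tested))
        if not limits[i][0] <= f / n <= limits[i][1]
    ]


# Invented data: failed boards and boards tested for six consecutive lots.
fails = [4, 5, 3, 6, 4, 20]
tested = [200, 200, 200, 200, 200, 200]
print(out_of_control(fails, tested))
```

A flagged lot is a prompt to check the fixture, the probes, and the incoming components, not proof of a specific cause.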

Q 28. How do you balance the need for thorough testing with production speed and cost constraints?

Balancing thorough testing with production speed and cost constraints is a constant challenge in ICT. It requires finding the optimal balance between test coverage, test time, and cost. The approach is often iterative and involves several strategies:

- Prioritization: Focusing on critical components and functionalities ensures the highest impact testing, even if full coverage isn’t feasible.

- Test program optimization: Streamlining the test program, eliminating redundant tests, and employing efficient algorithms to reduce test times without compromising accuracy.

- Fixture design: Efficient fixture design minimizes test times and enhances ease of use. Multiple products can be tested on one fixture where appropriate.

- Automation: Automating repetitive tasks, such as test execution and data logging, minimizes manual intervention and increases throughput.

- Risk assessment: A thorough risk assessment helps to justify trade-offs between test coverage and cost. High-risk components receive the most rigorous testing.

For instance, we might choose to perform a complete functional test only on a sample of units, relying on statistical analysis to confirm the quality of the remaining units. This allows for cost-effective high-volume testing while still maintaining the assurance of high product quality. The key is to find the sweet spot where we're detecting the most important potential failures while respecting production schedules and budget constraints.
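One way to reason about the prioritization and risk-assessment trade-off is to rank tests by how much risk they cover per second of test time and fill a fixed time budget. The sketch below is a simple greedy heuristic, not an optimal solution, and the test names, risk scores, and durations are invented for illustration.

```python
def select_tests(tests, budget_s):
    """Greedily pick tests by risk covered per second within a time budget.

    tests: iterable of (name, risk_score, seconds) tuples.
    Returns the chosen test names and the total time used.
    """
    chosen, used = [], 0.0
    ranked = sorted(tests, key=lambda t: t[1] / t[2], reverse=True)
    for name, _risk, seconds in ranked:
        if used + seconds <= budget_s:
            chosen.append(name)
            used += seconds
    return chosen, used


# Invented candidates: (name, risk score, test time in seconds).
candidates = [
    ("bga_boundary_scan", 9.0, 3.0),
    ("passives_analog", 6.0, 1.5),
    ("connector_shorts", 4.0, 0.5),
    ("led_polarity", 1.0, 1.0),
]

chosen, used = select_tests(candidates, budget_s=5.0)
print(chosen, used)
```

In practice the risk scores would come from failure history or an FMEA, and any test dropped from the in-line program would need to be covered elsewhere, for example by sampling or a later functional test.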

Key Topics to Learn for In-Circuit Testing (ICT) Interview

Preparing for your In-Circuit Testing (ICT) interview can feel daunting, but with focused learning, you'll be well-equipped to showcase your expertise. We've broken down key areas to help you ace that interview!

- ICT Fundamentals: Understand the basic principles of In-Circuit Testing, including its purpose, methodologies, and advantages over other testing methods. Consider the different types of ICT systems and their applications.

- Test Fixture Design and Development: Explore the practical aspects of designing and building effective test fixtures. This includes understanding component placement, probe selection, and signal integrity considerations. Be ready to discuss troubleshooting techniques related to fixture issues.

- Test Program Development: Gain a solid understanding of programming languages and software used in ICT programming. Focus on writing efficient and reliable test programs, including understanding data acquisition and analysis.

- Data Analysis and Interpretation: Learn to interpret ICT test data effectively. This involves identifying failures, understanding statistical process control (SPC) concepts, and communicating results clearly.

- Troubleshooting and Problem Solving: Practice identifying and resolving common issues encountered during ICT. This includes dealing with false positives, understanding component behavior, and effective debugging strategies.

- ICT Equipment and Technologies: Familiarize yourself with common ICT equipment, software, and technologies used in the industry. Be prepared to discuss their capabilities and limitations.

- Quality Control and Compliance: Understand the role of ICT in maintaining quality control and adhering to industry standards and regulations.

Next Steps

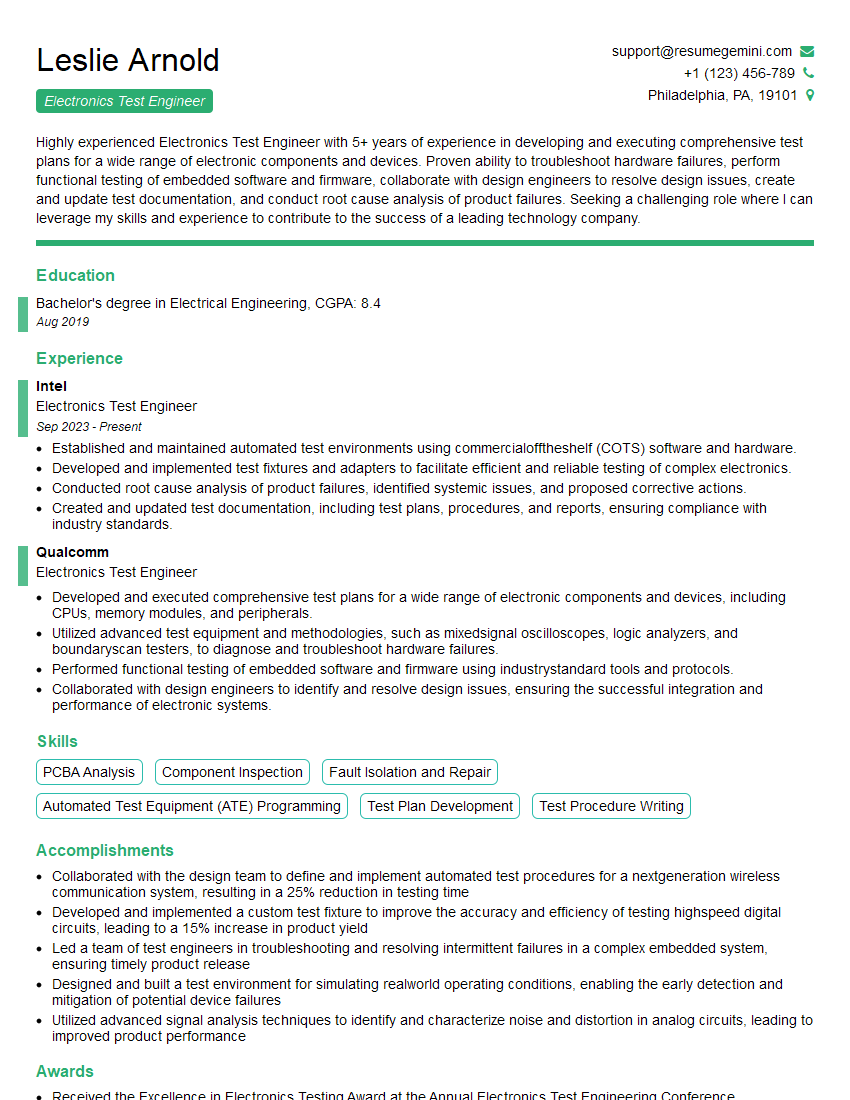

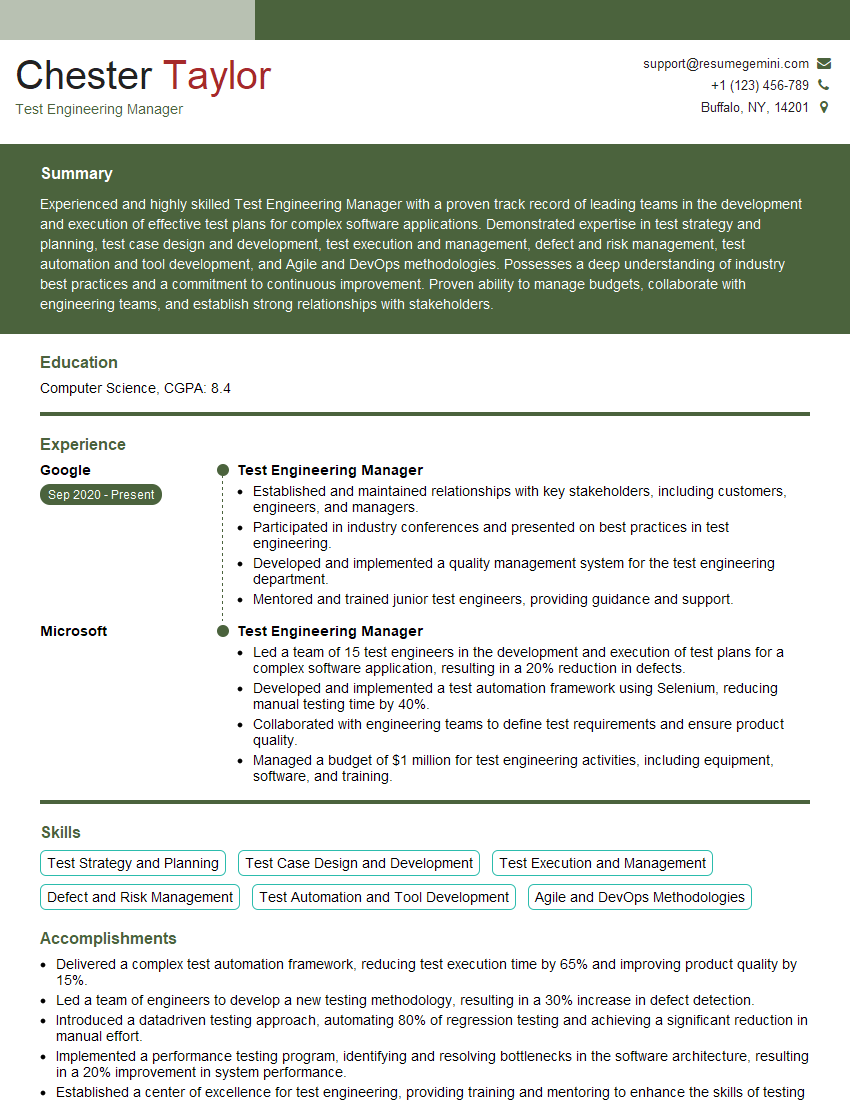

Mastering In-Circuit Testing (ICT) opens doors to exciting career opportunities in manufacturing, electronics, and quality control. To maximize your chances of landing your dream job, a strong resume is crucial. Crafting an ATS-friendly resume that highlights your ICT skills and experience is essential. We highly recommend using ResumeGemini to build a professional and impactful resume that gets noticed. ResumeGemini provides examples of resumes tailored to In-Circuit Testing (ICT) roles, giving you a head start in presenting your qualifications effectively. Invest the time to create a compelling resume – it's your first impression and a key to unlocking your career potential.
