Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the interview questions on quality control and testing standards that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Quality Control and Testing Standards Interviews

Q 1. Explain the difference between Quality Control and Quality Assurance.

Quality Control (QC) and Quality Assurance (QA) are often used interchangeably, but they represent distinct yet complementary processes within a quality management system. Think of QA as the preventative measure and QC as the corrective measure.

Quality Assurance (QA) is a proactive process focused on preventing defects. It involves establishing and maintaining a comprehensive system to ensure that the product or service meets predetermined quality standards. This includes defining processes, procedures, and guidelines; conducting regular audits and reviews; and ensuring that the development team has the necessary resources and training. It’s about building quality into the process.

Quality Control (QC) is a reactive process focused on identifying and rectifying defects. It involves inspecting and testing the product or service at various stages of the process to ensure that it conforms to the specified requirements. This might include testing individual components, conducting system tests, and performing user acceptance testing. It’s about verifying the quality of the output.

Analogy: Imagine building a house. QA would be like ensuring you have the right blueprints, skilled builders, and high-quality materials before construction begins. QC would be like inspecting the foundation, walls, and plumbing during and after construction to ensure everything meets specifications and correcting any problems found.

Q 2. Describe your experience with various testing methodologies (e.g., Agile, Waterfall).

I have extensive experience working with both Agile and Waterfall methodologies. In Waterfall, quality control is heavily focused on the testing phase, often involving significant testing at the end of the development cycle. This can lead to costly rework if significant defects are found late in the process. My experience with Waterfall projects involved rigorous test planning, meticulous test case design, and thorough execution of various testing types, including unit, integration, system, and user acceptance testing. We meticulously documented every stage, ensuring traceability from requirements to test results.

In Agile, the approach is drastically different. Quality is built into each iteration (sprint) through continuous testing and feedback. This involves frequent integration testing, automated testing, and close collaboration between developers and testers. My experience with Agile projects has involved employing techniques such as Test-Driven Development (TDD) and Behavior-Driven Development (BDD), allowing for early defect detection and rapid iteration. Continuous integration and continuous delivery pipelines are heavily utilized to automate testing and deployment processes, enhancing efficiency and reducing risks.

Q 3. What are the different types of software testing you are familiar with?

My expertise encompasses a broad range of software testing types:

- Unit Testing: Verifying individual components or modules of code.

- Integration Testing: Testing the interaction between different modules or components.

- System Testing: Testing the entire system as a whole to ensure it meets requirements.

- User Acceptance Testing (UAT): Validating the system’s functionality from an end-user perspective.

- Regression Testing: Ensuring that new code changes haven’t introduced new bugs or broken existing functionality.

- Performance Testing: Evaluating system performance under various load conditions.

- Security Testing: Identifying vulnerabilities and weaknesses in the system’s security.

- Usability Testing: Assessing the system’s ease of use and user-friendliness.

- Compatibility Testing: Verifying the system’s compatibility across different browsers, operating systems, and devices.

I am also experienced with exploratory testing, which is a less structured approach that emphasizes learning and discovery during testing.

Q 4. Explain the software development lifecycle (SDLC) and its relation to quality control.

The Software Development Lifecycle (SDLC) is a structured process for planning, creating, testing, and deploying software. Quality control is integral throughout the entire SDLC. Different SDLC models exist, such as Waterfall, Agile, and Spiral. Regardless of the model, effective quality control involves integrating testing activities into each phase.

Typical SDLC Phases and QC Integration:

- Requirements Gathering: QA reviews requirements for clarity, completeness, and testability.

- Design: QA participates in design reviews to identify potential issues early.

- Coding: Unit testing is performed by developers.

- Testing: Various testing types are conducted (unit, integration, system, UAT) to verify functionality and identify defects.

- Deployment: QA verifies the deployment process and checks for any post-deployment issues.

- Maintenance: Ongoing monitoring and bug fixes are handled, often involving regression testing.

By weaving quality control throughout the SDLC, organizations can significantly reduce the cost and effort required to fix defects later in the process.

Q 5. How do you prioritize testing activities in a time-constrained environment?

Prioritizing testing activities in a time-constrained environment requires a strategic approach. I typically employ a risk-based prioritization strategy. This involves:

- Identifying critical functionalities: Focusing on features that are core to the system’s functionality and those that pose the highest risk of failure.

- Assessing risk levels: Evaluating the potential impact of a failure on the business and users. Higher impact features require more rigorous testing.

- Using test coverage analysis: Determining which parts of the system have been adequately tested and focusing on areas with low coverage.

- Leveraging automation: Automating repetitive tests to increase efficiency and free up time for more complex testing.

- Employing the Pareto Principle (80/20 rule): Focusing first on the roughly 20% of tests that cover about 80% of the most critical functionality.

- Regular communication: Keeping stakeholders updated on progress and adjusting priorities as needed.

For example, in an e-commerce application, the checkout process would be given high priority due to its critical role in revenue generation. Less critical features might have less extensive testing or delayed testing until after the most critical components are validated.
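
As a rough illustration of the risk-based approach described above, the sketch below ranks features by a simple score of likelihood multiplied by impact. The 1 to 5 scales, the feature names, and the scores are assumptions made up for the example, not values from a real project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureArea:
    name: str
    failure_likelihood: int  # hypothetical scale: 1 (rare) to 5 (frequent)
    business_impact: int     # hypothetical scale: 1 (cosmetic) to 5 (revenue-blocking)

    @property
    def risk_score(self) -> int:
        # A common simple heuristic: risk = likelihood x impact.
        return self.failure_likelihood * self.business_impact


def prioritize(areas):
    """Return the areas ordered from highest to lowest risk score."""
    return sorted(areas, key=lambda area: area.risk_score, reverse=True)


# Illustrative e-commerce features with made-up scores.
areas = [
    FeatureArea("wishlist", 2, 1),
    FeatureArea("checkout", 3, 5),
    FeatureArea("product search", 4, 3),
]

ordered = prioritize(areas)
for area in ordered:
    print(f"{area.name}: {area.risk_score}")
```

With these assumed scores, checkout is tested first and the wishlist last, which matches the e-commerce example above.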

Q 6. Describe your experience with test case design and execution.

My experience in test case design involves creating detailed, comprehensive, and reproducible tests. I use various techniques such as equivalence partitioning, boundary value analysis, and decision table testing to ensure thorough test coverage. I typically structure my test cases with clear steps, expected results, and pass/fail criteria. This allows for easy execution and reporting. Examples of tools used include TestRail, Zephyr, and Xray.

During test execution, I meticulously follow the designed test cases, recording actual results and documenting any deviations from the expected outcome. I use defect tracking systems (e.g., Jira, Bugzilla) to report and manage discovered defects. I regularly review test results to identify trends and patterns which might indicate deeper issues in the system. In addition, I contribute to the improvement of test cases, ensuring ongoing maintenance and updating based on past experiences and feedback from stakeholders.
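
To make the design techniques mentioned above more concrete, here is a minimal sketch of boundary value analysis. The rule under test (an order quantity between 1 and 10) and the helper names are hypothetical and chosen only for illustration.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Values just outside, on, and just inside each edge of [minimum, maximum]."""
    if minimum >= maximum:
        raise ValueError("minimum must be less than maximum")
    candidates = [
        minimum - 1, minimum, minimum + 1,
        maximum - 1, maximum, maximum + 1,
    ]
    return sorted(set(candidates))


def is_valid_quantity(quantity: int) -> bool:
    """Hypothetical rule under test: quantity must be between 1 and 10 inclusive."""
    return 1 <= quantity <= 10


# Map each boundary input to the observed result, as a test case table would.
cases = {value: is_valid_quantity(value) for value in boundary_values(1, 10)}
for value, accepted in cases.items():
    print(f"quantity={value} accepted={accepted}")
```

The same range also gives three equivalence partitions (below 1, from 1 to 10, above 10), so one representative value per partition could be added alongside the boundary inputs.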

Q 7. How do you handle bugs and defects found during testing?

Handling bugs and defects is a crucial aspect of my role. My approach is systematic and focuses on clear communication and effective resolution:

- Reproducing the defect: I meticulously document steps to reproduce the defect to ensure consistency.

- Categorizing and prioritizing: Assigning severity and priority levels (e.g., critical, major, minor) based on the impact on the system and users.

- Reporting the defect: Using a defect tracking system (e.g., Jira) to report the defect with clear details, steps to reproduce, and expected vs. actual results. Adding screenshots and logs is vital for clear communication.

- Collaborating with developers: Working closely with developers to analyze the root cause of the defect and propose solutions.

- Verifying fixes: After the developers fix the defects, I conduct regression testing to ensure the fix hasn’t introduced any new issues.

- Closing the defect: Once verified, the defect is closed in the tracking system.

Throughout this process, maintaining clear and concise communication with the development team and stakeholders is paramount to ensure prompt and effective resolution. Regular follow-up is also critical to prevent defects from slipping through the cracks.

Q 8. What is your experience with test automation frameworks (e.g., Selenium, Appium)?

My experience with test automation frameworks is extensive, encompassing both Selenium and Appium. Selenium, primarily used for web application testing, allows for automating browser interactions such as clicking buttons, filling forms, and verifying content. I’ve leveraged Selenium’s WebDriver API in various projects to create robust and maintainable automated test suites, utilizing programming languages like Java and Python. For example, I developed a suite of Selenium tests for an e-commerce website, automating the checkout process to ensure a smooth user experience and identify any potential issues. Appium, on the other hand, extends this capability to mobile applications (both Android and iOS). I’ve used Appium to test native, hybrid, and mobile web apps, using similar scripting approaches to Selenium but with the added complexity of handling device-specific functionalities. For instance, I automated the login functionality of a mobile banking app using Appium, ensuring consistency across different iOS and Android versions.

Beyond the core frameworks, I’m also proficient in implementing Page Object Models (POM) to improve test code maintainability and readability, and TestNG or JUnit for test management and reporting. I understand the importance of choosing the right framework for the project based on factors such as technology stack, budget, and project timelines.
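
The Page Object Model mentioned above can be sketched as follows. To keep the example runnable without a browser, it uses a hypothetical `FakeDriver` stub that only mimics the `find_element(by, value)` shape of a WebDriver; in a real Selenium suite the page object would receive an actual WebDriver instance and would typically use `By.ID` locators. The page, element IDs, and credentials are all assumptions.

```python
class FakeElement:
    """Stand-in for a browser element; records what the test did to it."""

    def __init__(self):
        self.typed = ""
        self.clicked = False

    def send_keys(self, text):
        self.typed += text

    def click(self):
        self.clicked = True


class FakeDriver:
    """Hypothetical stub with a WebDriver-like find_element(by, value) method."""

    def __init__(self):
        self.elements = {}

    def find_element(self, by, value):
        # Create the element on first lookup and reuse it afterwards.
        return self.elements.setdefault((by, value), FakeElement())


class LoginPage:
    """Page object: keeps locators and user actions for one page in one place."""

    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    SUBMIT = ("id", "login-button")

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.SUBMIT).click()


driver = FakeDriver()
LoginPage(driver).log_in("demo-user", "demo-pass")
print(driver.elements[("id", "username")].typed)
```

Because tests call `log_in` instead of touching locators directly, a changed element ID only needs to be updated in the page object.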

Q 9. Explain your experience with different testing levels (unit, integration, system, acceptance).

My experience encompasses all levels of software testing: unit, integration, system, and acceptance testing. Think of it like building a house: Unit testing is like testing individual bricks to make sure they are strong; Integration testing checks how the bricks fit together to form a wall; System testing is assessing the whole house’s functionality; and Acceptance testing verifies it meets the client’s needs.

- Unit Testing: I’ve extensively used JUnit and other unit testing frameworks to verify the correctness of individual components or modules of software. This typically involves writing small, focused tests that exercise specific functions or methods.

- Integration Testing: I conduct integration tests to verify the interaction between different modules or components. This might involve testing the interaction between a database and an API, for example.

- System Testing: At this level, I perform end-to-end testing of the entire system to ensure that all components work together seamlessly and meet the specified requirements. This often involves scenarios simulating real-world usage.

- Acceptance Testing: This final phase involves verifying the system against the client’s or user’s requirements and expectations. This could be done through User Acceptance Testing (UAT) with real users or through formal acceptance criteria defined in a contract.

Understanding these distinct testing levels is crucial for a comprehensive QA strategy, ensuring early detection of defects and preventing costly issues later in the development lifecycle.
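
As a small illustration of the unit level, the sketch below uses Python's built-in `unittest` module rather than JUnit. The `apply_discount` function is a hypothetical unit under test, invented for this example.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_reduces_price_by_percentage(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_percentage_above_100(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 120)


# Load and run the two tests programmatically so the result can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("all passed:", result.wasSuccessful())
```

Each test exercises one function in isolation, which is what distinguishes this level from integration and system testing.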

Q 10. How do you measure the effectiveness of your testing efforts?

Measuring the effectiveness of testing efforts is crucial. It’s not just about finding bugs, but also about demonstrating the value of QA activities. I primarily use these metrics:

- Defect Density: This metric tracks the number of defects found per unit of size, typically per thousand lines of code (KLOC) or per feature, providing insights into the quality of the codebase.

- Defect Severity and Priority: Categorizing defects by severity (critical, major, minor) and priority (urgent, high, medium, low) helps prioritize fixing efforts and understand the impact of unresolved issues.

- Test Coverage: Measuring the percentage of code or requirements covered by tests helps assess the comprehensiveness of the testing effort. We aim for high coverage, but it’s also essential to focus on testing critical functionalities.

- Test Execution Time: Monitoring the time taken to execute test suites provides valuable information on efficiency and potential areas for improvement (e.g., test optimization).

- Escape Rate: Tracking how many defects made it into production after testing reveals gaps in the QA process that need improvement.

By regularly monitoring these metrics, we can identify trends, pinpoint areas needing improvement, and ultimately improve software quality and release confidence.
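
Two of these metrics reduce to simple arithmetic, sketched below. Exact definitions vary between teams, so the formulas here (defects per thousand lines of code, and production defects as a share of all known defects) are one common convention, and the input numbers are invented.

```python
def defect_density(defects_found: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects_found / (lines_of_code / 1000)


def escape_rate(found_in_production: int, found_in_testing: int) -> float:
    """Share of all known defects that were first found in production."""
    total = found_in_production + found_in_testing
    if total == 0:
        return 0.0
    return found_in_production / total


# Made-up figures for one release.
density = defect_density(defects_found=18, lines_of_code=12_000)
escaped = escape_rate(found_in_production=5, found_in_testing=45)

print(f"defect density: {density} per KLOC")
print(f"escape rate: {escaped:.0%}")
```

Tracking these values per release, rather than reading a single number in isolation, is what makes trends visible.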

Q 11. Describe your experience with performance and load testing.

My experience with performance and load testing is significant. I’ve used tools like JMeter and LoadRunner to simulate various user loads on applications to assess their responsiveness, stability, and scalability under stress. Performance testing ensures the application meets performance requirements (response times, throughput), while load testing checks how the system behaves under expected and peak user loads and shows where performance begins to degrade.

For example, I used JMeter to conduct load tests on a banking application to determine the maximum concurrent user transactions it could handle without significant performance issues. This involved designing test plans, creating virtual users, monitoring server resources, and analyzing the results to identify potential bottlenecks. The findings were crucial in making informed decisions about server infrastructure scaling and application optimization.

I also have experience with stress testing (pushing the system beyond its normal limits to identify failure points) and soak testing (long-duration tests to check for memory leaks and resource exhaustion).
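
The core idea behind a load test (several virtual users issuing requests concurrently while latencies are recorded) can be sketched in a few lines. This is not how JMeter or LoadRunner work internally; `fake_request` is a hypothetical stand-in for a real call to the system under test, and the user and request counts are arbitrary.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request() -> None:
    """Hypothetical stand-in for one request to the system under test."""
    time.sleep(0.01)


def timed_call(_request_index: int) -> float:
    """Run one request and return its latency in seconds."""
    start = time.perf_counter()
    fake_request()
    return time.perf_counter() - start


def run_load(virtual_users: int, requests_per_user: int) -> dict:
    """Issue requests from a pool of worker threads and summarize latencies."""
    total = virtual_users * requests_per_user
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        latencies = list(pool.map(timed_call, range(total)))
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=100)[94]
    return {
        "requests": total,
        "mean_s": statistics.mean(latencies),
        "p95_s": p95,
    }


summary = run_load(virtual_users=5, requests_per_user=4)
print(summary)
```

Reporting a high percentile alongside the mean matters because a few slow requests can hide behind a healthy-looking average.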

Q 12. How do you ensure test data security and integrity?

Ensuring test data security and integrity is paramount. We use several strategies:

- Data Masking and Anonymization: Sensitive data (like PII) is masked or anonymized to protect privacy and comply with regulations. Tools and techniques are used to replace sensitive information with realistic but non-identifiable substitutes.

- Data Subsets and Test Environments: Using smaller, representative subsets of production data in test environments minimizes risk. This reduces the potential impact of data breaches or accidental data modification.

- Data Encryption: Data at rest and in transit is encrypted to protect against unauthorized access. This includes securing databases, backups, and communication channels.

- Access Control: Strict access controls are in place, limiting access to test data based on roles and responsibilities. Only authorized personnel have the necessary permissions.

- Data Refresh and Cleanup: Regularly refreshing test data with realistic values is important, as is securely deleting or anonymizing the data after testing is complete.

These measures ensure that our testing activities do not compromise the security or integrity of sensitive information.
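
A minimal sketch of data masking is shown below, assuming two hypothetical helpers: one replaces an email address with a stable pseudonym, the other hides all but the last four digits of a card number. The salt value and the `example.test` domain are placeholders, and a salted hash is pseudonymization rather than full anonymization, so real projects may need stronger techniques.

```python
import hashlib


def pseudonymize_email(email: str, salt: str) -> str:
    """Replace an email with a stable, non-identifying placeholder address."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()
    return f"user_{digest[:12]}@example.test"


def mask_card_number(card_number: str) -> str:
    """Keep only the last four digits; replace the other digits with '*'."""
    digits = [char for char in card_number if char.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


# Illustrative inputs only; the salt would normally come from a secret store.
masked_email = pseudonymize_email("Jane.Doe@example.com", salt="test-env-salt")
masked_card = mask_card_number("4111 1111 1111 1111")

print(masked_email)
print(masked_card)
```

Because the same input always maps to the same pseudonym, relationships between records are preserved, which keeps the masked data useful for testing.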

Q 13. Explain your experience with reporting and tracking testing progress.

Effective reporting and tracking are vital for managing testing progress. I utilize various tools and techniques to achieve this:

- Test Management Tools: Tools like Jira or Azure DevOps are used to create and track test cases, manage defects, and monitor test execution. Dashboards provide a clear overview of the testing status and progress.

- Automated Reporting: Automation frameworks generate reports that automatically summarize test results, including pass/fail rates, execution times, and detailed logs. This saves time and ensures accuracy.

- Custom Reporting: For specific needs, I can generate custom reports that focus on particular aspects of the testing process, such as defect trends or performance metrics.

- Regular Status Meetings: Regular status meetings keep stakeholders aligned on testing progress and provide a forum for addressing roadblocks.

- Visualizations: Using charts and graphs to visually represent data makes the information easier to understand and digest. This is especially useful for highlighting key trends and areas of concern.

Transparency and regular communication are key to keeping stakeholders informed and building trust.

Q 14. How do you manage conflicts between developers and testers?

Managing conflicts between developers and testers requires a collaborative approach focused on mutual understanding and respect. The key is to remember that we share a common goal: delivering high-quality software. When conflicts arise, I employ these strategies:

- Open Communication: Encouraging open and honest communication between developers and testers is crucial. Creating a safe space for voicing concerns and disagreements is vital.

- Focus on the Problem, Not the Person: Discussions should center on the issue at hand, not on assigning blame or making personal attacks.

- Shared Understanding of Requirements: Ensuring that both developers and testers have a clear and shared understanding of the requirements prevents misunderstandings from escalating into conflicts.

- Collaborative Problem-Solving: Working together to find solutions is far more effective than imposing solutions unilaterally. Brainstorming sessions and joint debugging can help identify root causes and develop effective resolutions.

- Mediation: In some cases, a neutral third party might be needed to facilitate constructive dialogue and help find a resolution.

- Documentation: Clearly documenting discussions, agreements, and decisions helps avoid future misunderstandings and ensures accountability.

Ultimately, fostering a collaborative environment where developers and testers work together as a team is the most effective approach to resolving conflicts.

Q 15. How do you stay up-to-date with the latest testing tools and technologies?

Staying current in the rapidly evolving world of testing tools and technologies requires a multi-pronged approach. It’s not just about learning a new tool; it’s about understanding the underlying principles and adapting them to different contexts.

- Continuous Learning Platforms: I actively engage with online learning platforms like Coursera, Udemy, and LinkedIn Learning, focusing on courses covering the latest advancements in automation frameworks (like Selenium, Cypress, Playwright), performance testing tools (like JMeter, Gatling), and API testing tools (like Postman, REST-assured). I often look for courses with hands-on projects to solidify my understanding.

- Industry Blogs and Publications: I regularly follow influential blogs and publications such as those from software testing companies, industry leaders, and prominent testing professionals. This provides valuable insights into emerging trends and best practices.

- Conferences and Webinars: Attending industry conferences and webinars is invaluable for networking and learning directly from experts. These events often showcase the latest tools and techniques, and offer opportunities to discuss challenges and solutions with peers.

- Open-Source Contributions: Contributing to open-source projects allows me to learn from experienced developers and get hands-on experience with various tools and technologies. It also helps me build a strong portfolio and demonstrate my commitment to the field.

- Community Engagement: Engaging in online communities like Stack Overflow and Reddit (r/testing) allows me to learn from others’ experiences, share my own knowledge, and stay informed about new tools and techniques.

This combination of formal and informal learning keeps me abreast of the latest developments and ensures I can leverage the most effective tools and technologies for any given project.

Q 16. Describe your experience working with version control systems (e.g., Git).

Version control systems, particularly Git, are fundamental to my workflow. I’ve used Git extensively throughout my career, not just for tracking code changes but also for managing test cases, test data, and even test environment configurations.

- Branching Strategies: I am proficient in using various branching strategies, such as Gitflow, to manage different versions of tests and to isolate feature development and bug fixes. This ensures that changes are carefully tested and integrated before affecting the main codebase.

- Collaboration: Git allows for seamless collaboration with developers and other testers. I’m comfortable using pull requests, code reviews, and merging strategies to ensure that everyone’s contributions are properly integrated and reviewed.

- Conflict Resolution: I have experience resolving merge conflicts effectively, ensuring that the final version of the code is stable and functional. Knowing how to resolve conflicts efficiently is crucial for a smooth workflow.

- Testing Tools Integration: Many testing tools and CI/CD pipelines integrate directly with Git. For example, automated tests can be triggered on every commit, providing rapid feedback on code changes. I’m adept at setting up and using these integrations.

Example: In a recent project, we used Git to manage multiple branches for different features, allowing us to develop and test features in parallel without impacting the main development line. Pull requests were used for code reviews and merging, ensuring high code quality and effective collaboration.

Q 17. How do you handle unexpected issues during testing?

Unexpected issues are inevitable in testing. My approach focuses on methodical troubleshooting, clear communication, and preventing future occurrences.

- Reproduce the Issue: The first step is to meticulously reproduce the issue. This often involves documenting the exact steps taken to trigger the problem, including system configurations, inputs, and expected vs. actual outcomes.

- Isolate the Root Cause: Using debugging tools and techniques, I investigate the root cause. This might involve analyzing logs, inspecting network traffic, or even using debuggers to step through the code.

- Escalate When Necessary: If the issue is beyond my expertise, I promptly escalate it to the appropriate team members (developers, system administrators) providing them with detailed information gathered during troubleshooting.

- Document and Report: All unexpected issues, including their root causes and resolutions, are carefully documented and reported using the project’s bug tracking system. This helps prevent similar problems from recurring.

- Preventative Measures: After resolving an issue, I analyze what could have been done to prevent it, such as adding additional test cases, refining test data, or improving documentation.

For instance, if a performance bottleneck occurs, I’d use profiling tools to pinpoint the slow code sections. I’d then work with developers to optimize the code and add performance tests to prevent similar issues in the future.

Q 18. Explain your experience with risk management in testing.

Risk management in testing involves proactively identifying and mitigating potential problems that could jeopardize the quality and timely release of a software product. My approach is structured and proactive.

- Risk Identification: I work with the project team to identify potential risks early in the development lifecycle. This includes considering technical risks (e.g., integration problems, dependencies), schedule risks (e.g., insufficient time for testing), and resource risks (e.g., lack of skilled testers).

- Risk Assessment: Each identified risk is assessed based on its likelihood and impact. This involves assigning a probability and severity level to each risk. This helps prioritize which risks require immediate attention.

- Risk Mitigation Strategies: For each high-priority risk, I develop and implement mitigation strategies. This might involve adding additional test cases, allocating more time for testing, or implementing better communication strategies within the development team.

- Risk Monitoring and Control: Throughout the testing process, I continuously monitor the identified risks, tracking their progress and updating mitigation strategies as needed. Regular risk reviews are crucial.

- Contingency Planning: A critical aspect of risk management is creating contingency plans to deal with situations where the initial mitigation strategies prove insufficient.

A real-world example: In a past project, we identified a high risk related to third-party API integration. To mitigate this, we conducted extensive API testing early in the project and established a clear escalation path to the API provider for any issues.

Q 19. Describe your experience with different types of testing documentation.

Comprehensive testing documentation is vital for ensuring traceability, repeatability, and effective communication among team members. My experience encompasses various types of documentation:

- Test Plan: A high-level document outlining the overall testing strategy, scope, schedule, and resources required.

- Test Cases: Detailed, step-by-step instructions for executing individual tests, including expected results and pass/fail criteria.

- Test Scripts: Automated test scripts written in various programming languages (e.g., Python, Java, JavaScript) to automate repetitive test tasks.

- Test Data: The data used to execute tests, often including both positive and negative test cases.

- Test Results: A record of the execution of tests, including pass/fail statuses, error messages, and other relevant information.

- Bug Reports: Detailed reports documenting defects found during testing, including steps to reproduce, screenshots, and expected behavior.

- Test Summary Report: A high-level summary of the testing process, including overall test coverage, defect counts, and overall assessment of the software’s quality.

I’m proficient in using various tools to manage and generate these documents, ensuring consistency and clarity.

Q 20. How do you ensure the quality of your own work?

Ensuring the quality of my own work involves a combination of self-review, peer review, and adherence to established best practices.

- Self-Review: Before submitting any work, I conduct a thorough self-review, checking for accuracy, completeness, and clarity. I use checklists and guidelines to ensure consistency and adherence to standards.

- Peer Review: I actively seek feedback from colleagues, using pair programming or formal code reviews. This helps catch errors and improve the overall quality of my work.

- Automated Checks: Where applicable, I use automated tools (linters, static code analyzers) to identify potential problems early in the development process.

- Continuous Improvement: I regularly reflect on my work, identify areas for improvement, and actively seek opportunities to enhance my skills and knowledge.

- Test-Driven Development (TDD): When developing automation scripts, I follow TDD principles, writing tests before writing the code itself. This improves code quality and helps catch regressions early.

This multi-layered approach ensures that my work meets the highest standards of quality and reliability.

Q 21. What is your experience with test environment setup and maintenance?

Setting up and maintaining test environments is crucial for effective testing. My experience spans various approaches, from simple local setups to complex cloud-based environments.

- Environment Provisioning: I’m proficient in using various tools and techniques for setting up test environments, including virtualization (e.g., VirtualBox, VMware), containerization and orchestration (e.g., Docker, Kubernetes), and cloud platforms (e.g., AWS, Azure, GCP).

- Configuration Management: I use configuration management tools (e.g., Ansible, Puppet, Chef) to automate the setup and configuration of test environments, ensuring consistency and reproducibility.

- Environment Maintenance: Maintaining test environments involves regular updates, backups, and troubleshooting. I’m familiar with common issues and have strategies for resolving them quickly and efficiently.

- Environment Cloning: Creating clones of test environments allows for efficient parallel testing and reduces the time required for setup.

- Test Data Management: Effective test data management is critical. This involves creating, managing, and cleaning test data to ensure it accurately reflects real-world scenarios.

For example, in a recent project, we used Docker containers to create consistent and easily reproducible test environments across different machines and operating systems.

Q 22. Explain your experience with defect tracking systems (e.g., Jira, Bugzilla).

Defect tracking systems are crucial for managing and resolving bugs throughout the software development lifecycle. My experience spans several years using tools like Jira and Bugzilla. I’m proficient in creating, assigning, prioritizing, and tracking defects through their entire lifecycle, from initial report to final resolution and verification.

In Jira, for example, I utilize its workflow features to ensure that each bug undergoes a structured process: reporting, assignment, reproduction, investigation, resolution, and verification. I’m also adept at using Jira’s query language (JQL) to create custom reports and dashboards for tracking progress, identifying trends, and prioritizing defects. This allows me to quickly identify critical bugs and work with the development team to ensure timely fixes. With Bugzilla, I’ve used its similar features to track defects, albeit with a slightly different interface and workflow. In both systems, I’m careful to document all details including steps to reproduce the bug, expected versus actual behavior, severity, and priority. This ensures that developers have all the information they need to efficiently resolve the issue.

Beyond basic defect tracking, I leverage these systems for reporting and analysis. I use reporting features to track metrics such as defect density, resolution time, and open defect counts. This allows me to identify areas for improvement in the development process and contribute to preventative measures.

Q 23. How do you contribute to continuous improvement in testing processes?

Continuous improvement in testing is a vital part of my approach. I actively contribute through several methods:

- Regular Test Process Reviews: I participate in regular retrospectives where the team analyzes past testing cycles, identifying areas for improvement in efficiency, test coverage, or defect detection. For example, if we consistently miss certain types of defects, we might adjust our testing strategy to include more specific test cases.

- Automation: I actively promote and implement test automation to increase efficiency and reduce human error. Automating repetitive tests frees up time for more complex testing tasks, such as exploratory testing.

- Test Case Optimization: I regularly review and update existing test cases to ensure they remain relevant and effective. Out-of-date or redundant test cases are removed to streamline the testing process.

- Adoption of New Tools and Techniques: I stay abreast of the latest testing tools and methodologies and suggest their implementation when appropriate. This could involve exploring new automation frameworks or performance testing tools, or adopting test design techniques such as risk-based testing.

- Knowledge Sharing: I actively share my knowledge and expertise with other team members through training, mentoring, and knowledge bases. This ensures that the entire team benefits from best practices and new learnings.

For instance, in a recent project, we identified a bottleneck in our regression testing process. By automating a significant portion of these tests, we reduced the testing time by 40%, allowing us to release new versions more quickly while maintaining high quality.

Q 24. Explain your understanding of different quality standards (e.g., ISO 9001).

My understanding of quality standards encompasses a range of frameworks, but ISO 9001 is particularly relevant. ISO 9001 is an internationally recognized standard that outlines requirements for a quality management system (QMS). It focuses on consistently meeting customer requirements and enhancing customer satisfaction through continuous improvement.

In the context of software testing, ISO 9001 principles translate to establishing robust processes for planning, executing, and monitoring testing activities. This includes defining clear roles and responsibilities, implementing documented procedures for testing methodologies, managing non-conformances (defects), and regularly auditing the effectiveness of the QMS. Compliance with ISO 9001 demonstrates a commitment to delivering high-quality software and managing risks effectively.

Other relevant standards include CMMI (Capability Maturity Model Integration), which focuses on improving software development processes, and industry-specific standards such as those in healthcare or finance, which often dictate stringent regulatory requirements for data security and system reliability. Understanding these standards allows me to tailor testing strategies to the specific needs and regulatory demands of each project.

Q 25. How do you work with stakeholders to define testing requirements?

Defining testing requirements involves close collaboration with stakeholders including product owners, developers, business analysts, and end-users. My approach involves several key steps:

- Requirements Gathering: I start by thoroughly reviewing the project requirements documentation, including user stories, use cases, and functional specifications. I also participate in requirements elicitation meetings to clarify any ambiguities or gaps.

- Risk Assessment: I conduct a risk assessment to identify areas of the system that are critical or prone to errors. This informs the prioritization of testing efforts and helps to focus on the most important aspects of the application.

- Test Planning: Based on the requirements and risk assessment, I develop a comprehensive test plan that outlines the scope of testing, testing methodologies, test environments, resources required, and timelines. This test plan serves as a roadmap for the entire testing process.

- Stakeholder Communication: I maintain open communication with stakeholders throughout the process, providing regular updates on testing progress, any identified issues, and the overall quality status of the software.

- Test Case Design: Based on the approved test plan, I design and develop detailed test cases, ensuring that all critical requirements are covered. These test cases are reviewed and approved by the stakeholders.

For instance, in a recent project, I worked closely with the product owner to define acceptance criteria for user stories. This ensured that the testing efforts were focused on the features that were most critical to the user experience.
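
The risk assessment step above can be sketched in a few lines of Python. This is one common way to rank features, assuming a simple score of likelihood multiplied by impact on 1 to 5 scales; the `Feature` class, the scales, and the sample features are illustrative assumptions, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    """Hypothetical feature under test with assumed 1-5 rating scales."""
    name: str
    likelihood: int  # 1 = unlikely to fail, 5 = very likely to fail
    impact: int      # 1 = minor consequence, 5 = critical consequence

    @property
    def risk_score(self) -> int:
        return self.likelihood * self.impact


def prioritize(features: list[Feature]) -> list[Feature]:
    """Return features with the highest risk score first.

    sorted() is stable, so features with equal scores keep their input order.
    """
    return sorted(features, key=lambda f: f.risk_score, reverse=True)


# Illustrative ratings agreed with stakeholders (made-up values).
features = [
    Feature("profile page", likelihood=2, impact=2),  # score 4
    Feature("checkout", likelihood=3, impact=5),      # score 15
    Feature("login", likelihood=4, impact=4),         # score 16
]

for feature in prioritize(features):
    print(feature.name, feature.risk_score)
```

The ratings themselves come from the stakeholder discussions; the code only makes the resulting ordering explicit and repeatable.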

Q 26. Describe your experience with mobile application testing.

My experience in mobile application testing is extensive, covering both iOS and Android platforms. I’m proficient in various testing techniques, including:

- Functional Testing: Verifying that all features and functionalities of the app work as expected.

- Usability Testing: Evaluating the app’s ease of use and user experience.

- Performance Testing: Assessing the app’s responsiveness, stability, and resource consumption under different load conditions.

- Compatibility Testing: Ensuring the app works correctly across various devices, operating system versions, and screen resolutions.

- Security Testing: Identifying and mitigating potential security vulnerabilities.

- Localization Testing: Verifying the app’s functionality and user interface in different languages and regions.

I use a combination of manual and automated testing techniques. For automated testing, I leverage tools like Appium and Espresso to create automated test scripts for repetitive tasks. I’m also experienced with using cloud-based testing platforms to perform cross-device and cross-platform testing efficiently.

For example, in a recent project, we used Appium to automate the testing of the app’s login functionality across multiple Android devices. This significantly reduced the testing time and improved the overall quality of the application.
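
To make the compatibility testing idea concrete, the following Python sketch enumerates a test matrix from lists of devices, OS versions, and locales. The specific values and the `build_matrix` helper are placeholders chosen for illustration; the sketch does not call Appium or any cloud platform, it only shows how the combinations to be covered might be listed.

```python
from itertools import product

# Placeholder values; a real project would take these from its support policy.
devices = ["Pixel 7", "Galaxy S23"]
os_versions = ["Android 13", "Android 14"]
locales = ["en-US", "de-DE"]


def build_matrix(devices, os_versions, locales):
    """Return every device / OS version / locale combination as a dict."""
    return [
        {"device": device, "os": os_version, "locale": locale}
        for device, os_version, locale in product(devices, os_versions, locales)
    ]


matrix = build_matrix(devices, os_versions, locales)
print(len(matrix))  # 2 devices x 2 OS versions x 2 locales = 8 combinations
for combo in matrix:
    print(combo)
```

A full cross product grows quickly as dimensions are added, so in practice the matrix is usually trimmed, for example by usage data or by risk.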

Q 27. Explain your understanding of different types of testing environments.

Testing environments are critical for ensuring that software is tested under realistic conditions. Different types of environments serve different purposes:

- Development Environment: This is where developers write and test their code. It’s usually a local environment or a shared development server.

- Testing Environment: This is a dedicated environment where testers execute various tests. It should mirror the production environment as closely as possible.

- Staging Environment: This environment is a near-replica of the production environment, used for final testing and user acceptance testing (UAT) before deployment.

- Production Environment: This is the live environment where the software is actually used by end-users. Limited testing is performed here, mostly monitoring and issue resolution.

The key difference lies in the level of control and the similarity to the production environment. Development environments offer maximum flexibility for developers, while production environments are highly controlled and stable. Testing and staging environments bridge the gap, offering a balance between flexibility for testing and the realism of the production environment. Choosing the right environment is crucial for effective testing and risk mitigation.

Q 28. What is your approach to root cause analysis of testing failures?

My approach to root cause analysis of testing failures involves a structured and systematic investigation to pinpoint the underlying cause of the problem, not just its symptoms. I utilize a combination of techniques, including:

- Reproduce the Failure: The first step is to reliably reproduce the failure. Detailed steps to reproduce are essential for efficient analysis.

- Gather Information: Collect all relevant information, including error logs, system performance metrics, test case details, and any related documentation.

- Investigate the Code: If necessary, I work with developers to examine the code related to the failure, looking for bugs, logic errors, or unexpected behavior.

- 5 Whys Analysis: I use the “5 Whys” technique to drill down to the root cause by repeatedly asking “Why?” until the fundamental cause is identified. For example: “Why did the application crash? Because of a memory leak. Why was there a memory leak? Because of a faulty algorithm. Why was the algorithm faulty? Because it wasn’t properly reviewed.” The questioning continues until the answer points to something the team can actually change, such as the review process.

- Fishbone Diagram (Ishikawa Diagram): This visual tool helps organize potential causes of a problem, categorizing them by different factors like people, processes, materials, equipment, etc. This aids in identifying potential contributing factors.

- Defect Reporting and Tracking: A detailed report of the root cause is documented and linked to the defect tracking system, ensuring that lessons learned are captured and can be used to prevent similar issues in the future.

By systematically investigating failures and documenting the root cause, we can improve the overall quality of the software and prevent similar problems from occurring in the future. This proactive approach helps to build more robust and reliable software.
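
The 5 Whys chain from the example above can be recorded in a small structure so the reasoning is kept alongside the defect. This is a minimal Python sketch; the `FiveWhys` class and its methods are hypothetical, and treating the last recorded answer as the root cause is a simplifying assumption.

```python
from dataclasses import dataclass, field


@dataclass
class FiveWhys:
    """Hypothetical record of a 5 Whys investigation."""
    problem: str
    answers: list[str] = field(default_factory=list)

    def ask_why(self, answer: str) -> None:
        """Record the answer to the next 'Why?' question."""
        self.answers.append(answer)

    def root_cause(self) -> str:
        """Treat the last recorded answer as the root cause (an assumption)."""
        if not self.answers:
            raise ValueError("no answers recorded yet")
        return self.answers[-1]

    def summary(self) -> str:
        """Render the chain as text, e.g. for a defect report comment."""
        lines = [f"Problem: {self.problem}"]
        lines += [f"Why {i}? {a}" for i, a in enumerate(self.answers, start=1)]
        return "\n".join(lines)


analysis = FiveWhys("The application crashed.")
analysis.ask_why("There was a memory leak.")
analysis.ask_why("The algorithm was faulty.")
analysis.ask_why("The algorithm was not properly reviewed.")

print(analysis.summary())
print("Root cause:", analysis.root_cause())
```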

Key Topics to Learn for Knowledge of quality control and testing standards Interview

- Quality Management Systems (QMS): Understanding ISO 9001 principles, implementation, and auditing. Practical application: Describe your experience with implementing or working within a QMS framework.

- Statistical Process Control (SPC): Mastering control charts (e.g., Shewhart, CUSUM), process capability analysis (Cp, Cpk), and their practical applications in identifying and reducing variation. Practical application: Explain how you’ve used SPC to improve a process or solve a quality problem.

- Testing Methodologies: Familiarize yourself with various testing types (e.g., destructive, non-destructive, functional, regression), and their appropriate applications. Practical application: Discuss your experience designing and executing different testing methodologies.

- Root Cause Analysis (RCA): Learn techniques like the 5 Whys, Fishbone diagrams, and Pareto analysis to effectively identify the root cause of quality issues. Practical application: Describe a situation where you used RCA to solve a quality problem.

- Defect Prevention and Corrective Actions: Understanding the importance of proactive measures to prevent defects and implementing effective corrective actions to address identified issues. Practical application: Detail your experience in implementing corrective actions and preventing recurring defects.

- Documentation and Reporting: Mastering the creation of clear, concise, and accurate quality control documentation and reports. Practical application: Describe your experience documenting quality control processes and presenting findings to stakeholders.

- Industry-Specific Standards: Research relevant standards for your target industry (e.g., automotive, aerospace, medical devices). Practical application: Discuss your familiarity with relevant industry standards and their impact on quality control practices.
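
For the process capability indices named under Statistical Process Control above, a short worked example can help. The standard definitions are Cp = (USL - LSL) / (6σ) and Cpk = min(USL - μ, μ - LSL) / (3σ), where USL and LSL are the upper and lower specification limits. The Python sketch below estimates μ and σ from a single sample using the sample standard deviation, which is a simplifying assumption: in practice σ is often estimated from within-subgroup variation. The function name and the measurements are illustrative.

```python
from statistics import mean, stdev


def process_capability(samples, lsl, usl):
    """Return (Cp, Cpk) for measurements against spec limits lsl and usl.

    Uses the overall sample standard deviation as the sigma estimate,
    which is an assumption made to keep the sketch short.
    """
    if usl <= lsl:
        raise ValueError("usl must be greater than lsl")
    mu = mean(samples)
    sigma = stdev(samples)  # needs at least two samples
    if sigma == 0:
        raise ValueError("samples show no variation; indices are undefined")
    cp = (usl - lsl) / (6 * sigma)
    cpk = min(usl - mu, mu - lsl) / (3 * sigma)
    return cp, cpk


# Made-up measurements with mean 10.0 and sample standard deviation 1.0.
measurements = [9.0, 10.0, 11.0]

# Spec limits 4.0 to 13.0: the process sits closer to the upper limit.
cp, cpk = process_capability(measurements, lsl=4.0, usl=13.0)
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}")
```

Cp only compares the spread to the width of the specification, while Cpk also penalizes a process that is off-center, which is why Cpk is never larger than Cp.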

Next Steps

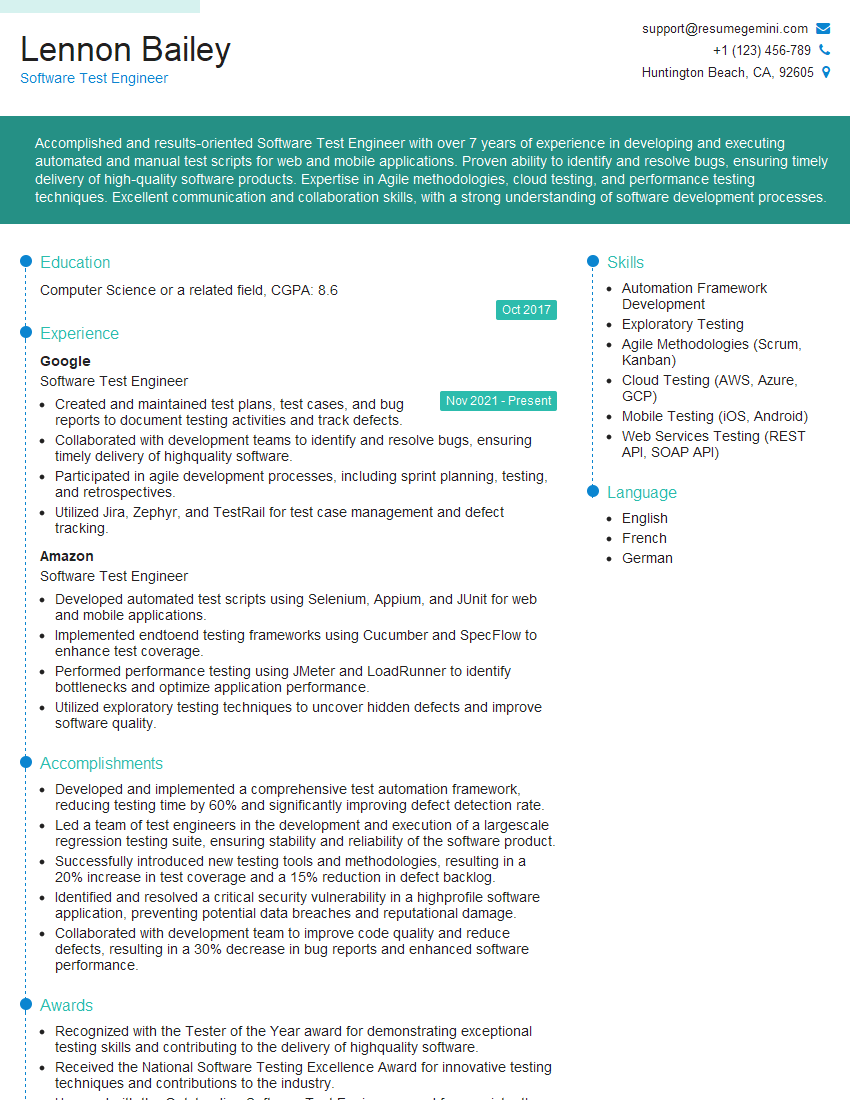

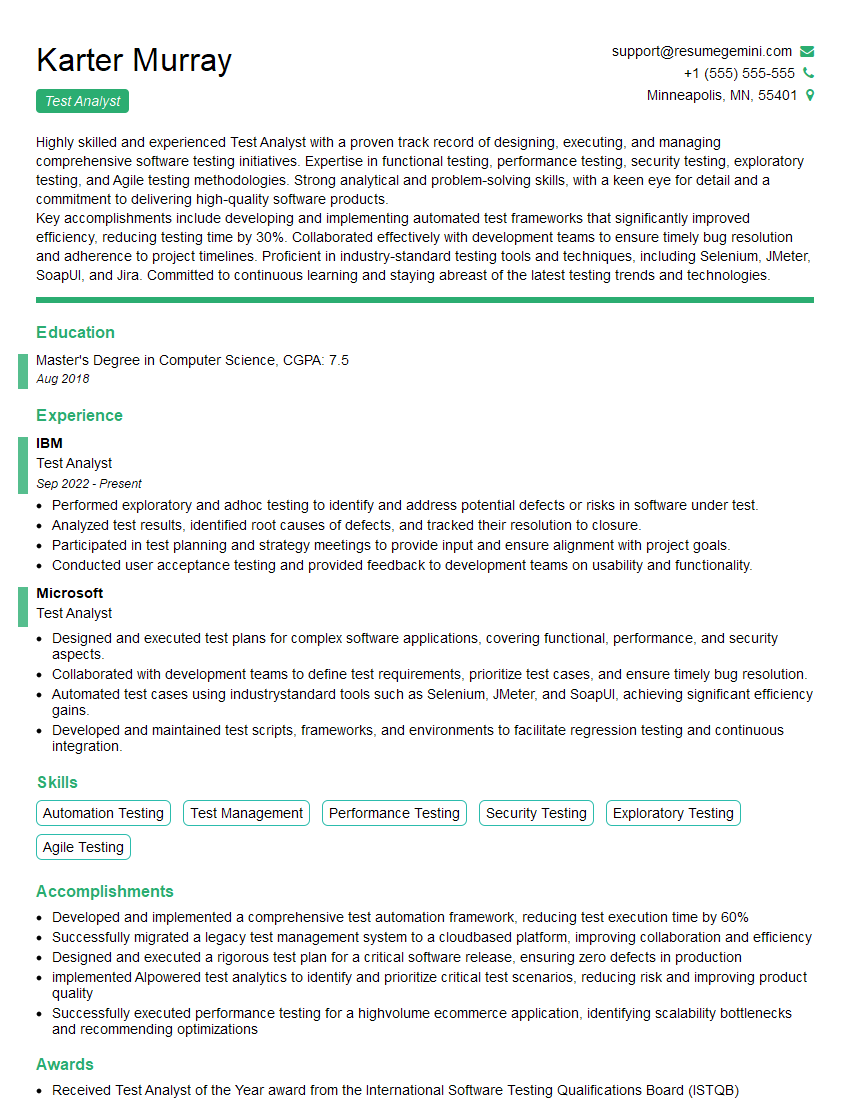

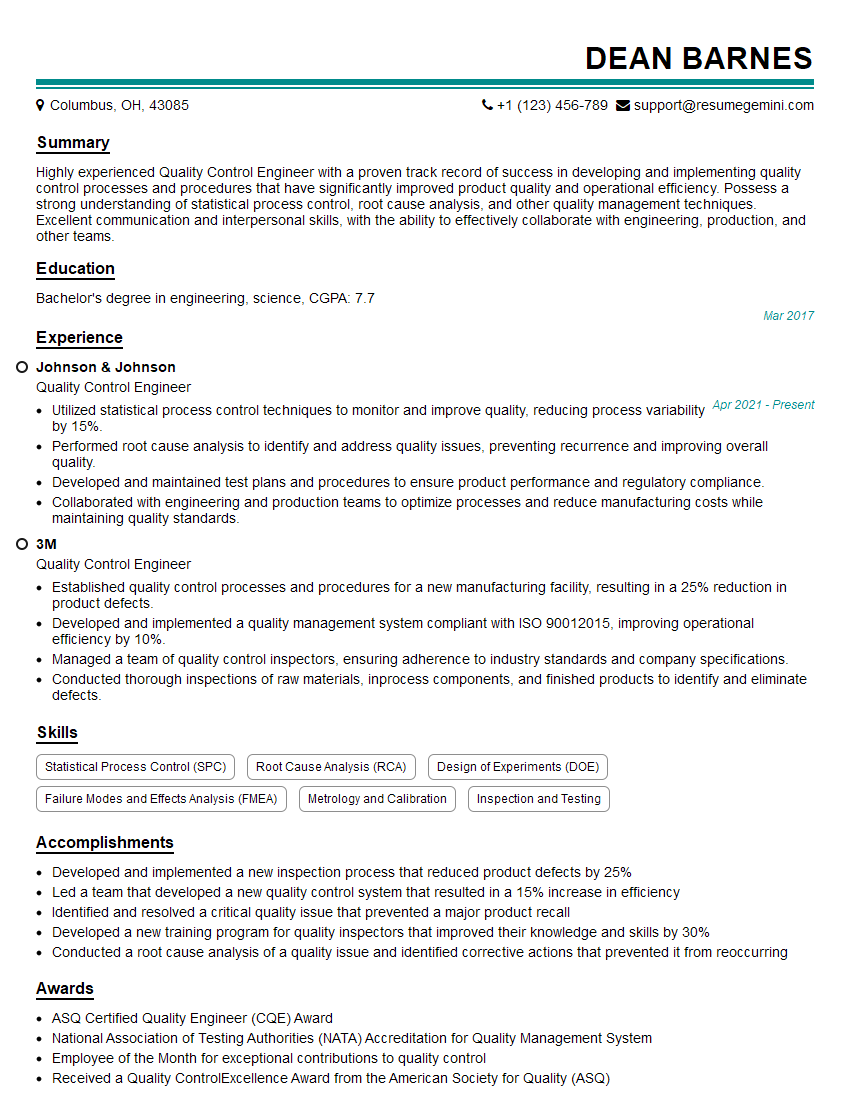

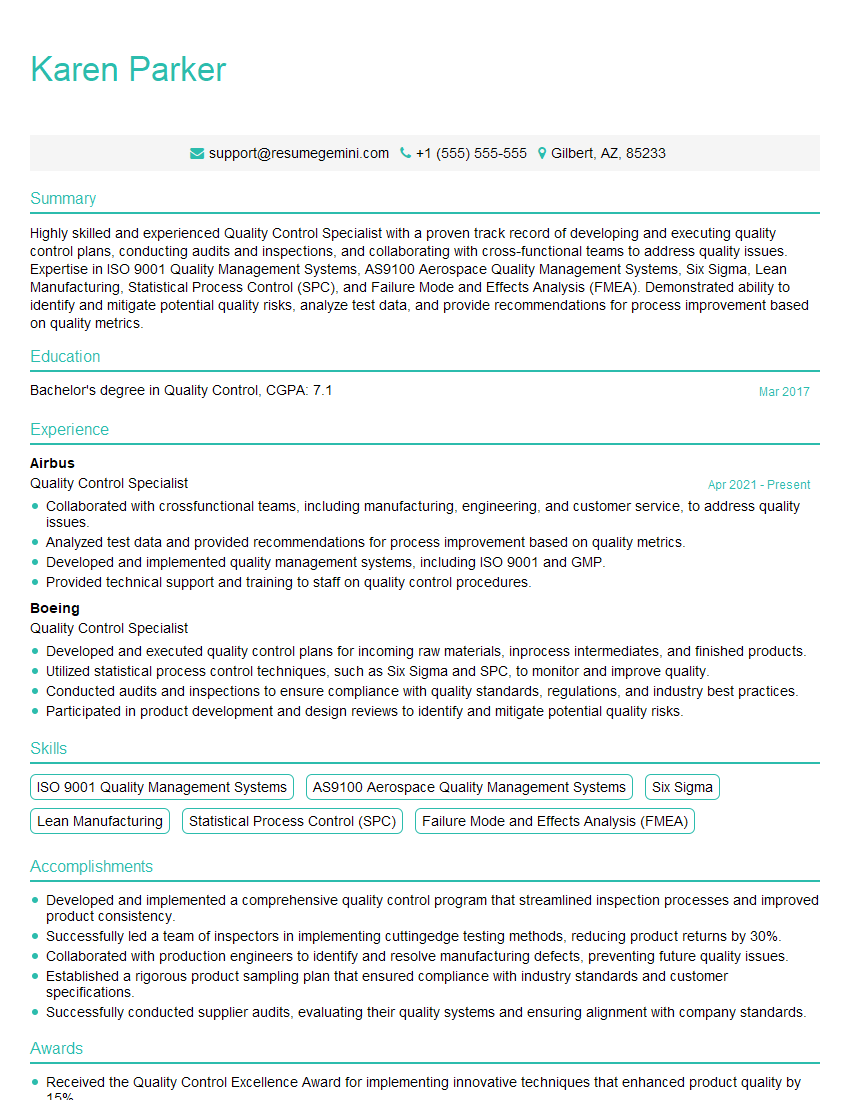

Mastering knowledge of quality control and testing standards is crucial for career advancement in various fields. Demonstrating this expertise through a well-crafted resume is essential for securing your dream role. An ATS-friendly resume increases your chances of getting noticed by recruiters. To build a professional and impactful resume, we highly recommend using ResumeGemini. ResumeGemini offers a streamlined process and provides examples of resumes tailored to roles requiring knowledge of quality control and testing standards, helping you present your skills effectively. Take the next step in your career journey today!
