Cracking a skill-specific interview, like one for Live Capture Techniques, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Live Capture Techniques Interview

Q 1. Explain the difference between SDI and HDMI in live capture.

SDI (Serial Digital Interface) and HDMI (High-Definition Multimedia Interface) are both digital video interfaces used in live capture, but they target different environments. SDI is a professional standard that carries video over coaxial cable with locking BNC connectors. It is designed for long cable runs with little signal degradation, which matters in broadcast environments. HDMI is a consumer-oriented standard used mainly for home theater and consumer electronics. It supports high resolutions, but passive HDMI cables are reliable only over much shorter distances, the connectors do not lock, and links can be complicated by HDCP copy protection.

Think of it like this: SDI is the robust, reliable workhorse for professional broadcasts, while HDMI is the convenient, consumer-friendly option for home setups. In a live broadcast truck, you’ll almost exclusively find SDI, while a smaller-scale production might use HDMI for convenience.

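- SDI Advantages: Higher-bandwidth variants (for example 3G-, 6G-, and 12G-SDI) for greater resolutions and frame rates, reliable signal quality over long coaxial runs, locking BNC connectors, embedded multichannel audio and timecode, and straightforward integration with genlocked broadcast systems.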

- HDMI Advantages: Simpler and cheaper cabling, readily available consumer equipment, widely supported by various devices.

Q 2. Describe your experience with various camera control protocols (e.g., VISCA, Pelco).

I have extensive experience with various camera control protocols, including VISCA and Pelco. VISCA (commonly expanded as Video System Control Architecture) is a protocol developed by Sony and widely used on PTZ cameras, originally over serial links and now also over IP. The Pelco protocols (Pelco-D and Pelco-P) come from the surveillance market and are supported by many third-party PTZ heads and controllers. Both families cover pan, tilt, zoom, iris, and focus. I’ve used both in numerous live productions, from small corporate events to large-scale broadcasts. My experience extends to configuring and troubleshooting these protocols, ensuring seamless camera control during live operations. For example, during a recent live music concert, I used VISCA to remotely control multiple Sony cameras, adjusting their positions and zoom levels in real-time to capture different perspectives of the performance. The Pelco protocol was invaluable in another project where we needed precise, coordinated movements of multiple PTZ (Pan-Tilt-Zoom) cameras, following specific choreographed actions during a theatrical production.
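To make the protocol discussion concrete, here is a minimal sketch of how a Pelco-D command frame is assembled. It assumes the commonly documented 7-byte layout (sync byte 0xFF, address, two command bytes, two data bytes, and a modulo-256 checksum over bytes 2 to 6). The function name `pelco_d_frame` is hypothetical, and sending the bytes over a serial port is left out.

```python
def pelco_d_frame(address: int, command2: int, pan_speed: int = 0,
                  tilt_speed: int = 0, command1: int = 0) -> bytes:
    """Build a 7-byte Pelco-D frame: sync, address, cmd1, cmd2, data1, data2, checksum."""
    body = [address, command1, command2, pan_speed, tilt_speed]
    if any(not 0 <= b <= 0xFF for b in body):
        raise ValueError("every field must fit in one byte")
    checksum = sum(body) % 256  # covers bytes 2-6, not the 0xFF sync byte
    return bytes([0xFF, *body, checksum])


PAN_RIGHT = 0x02  # command-2 bit for "pan right" in Pelco-D

frame = pelco_d_frame(address=1, command2=PAN_RIGHT, pan_speed=0x20)
print(frame.hex(" "))  # ff 01 00 02 20 00 23
```

In an interview, being able to explain the checksum and the address byte is usually more useful than memorizing individual command codes.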

Q 3. What are the common video formats used in live capture, and their advantages/disadvantages?

Video formats in live capture are described by a resolution and scan type plus a codec. 1080i (interlaced) is still common in broadcast television, while 1080p (progressive) is the usual choice for streaming and most new HD production. 4K and even 8K are becoming more common for ultra-high definition. The choice of codec (compression algorithm) significantly impacts quality and file size. Common codecs include H.264, H.265 (HEVC), and ProRes.

- 1080p: Widely adopted, good balance between quality and file size.

- 4K: Higher resolution, sharper image, larger file sizes requiring more storage and bandwidth.

- H.264: Widely supported, good compression, but can exhibit some artifacts at high compression.

- H.265 (HEVC): Better compression than H.264, improved quality at lower bitrates, but requires more processing power.

- ProRes: High-quality intermediate codec that is visually lossless at its higher-quality settings but still lossy; large file sizes, ideal for recording and post-production but less suitable for real-time streaming.

Choosing the right format depends on several factors, including budget, available bandwidth, storage capacity, and desired quality. High-end broadcasts may use uncompressed formats for superior quality during post-production, while live streaming often prioritizes smaller file sizes for smoother delivery.
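The trade-off between quality, bandwidth, and storage is easier to discuss with numbers. The sketch below estimates the active-picture data rate of an uncompressed signal and the storage a given bitrate consumes per hour. The function names are hypothetical, blanking and audio are ignored, and the 6 Mbps stream is only an illustrative figure.

```python
def uncompressed_bitrate_bps(width: int, height: int, fps: float,
                             bits_per_pixel: int) -> float:
    """Active-picture data rate; ignores blanking intervals and embedded audio."""
    return width * height * fps * bits_per_pixel


def storage_gb_per_hour(bitrate_bps: float) -> float:
    """Storage consumed by one hour of video, in decimal gigabytes."""
    return bitrate_bps * 3600 / 8 / 1e9


# 1080p60 with 10-bit 4:2:2 sampling averages 20 bits per pixel.
raw = uncompressed_bitrate_bps(1920, 1080, 60, 20)
print(f"uncompressed: {raw / 1e9:.2f} Gbps, {storage_gb_per_hour(raw):.0f} GB/hour")

# An illustrative 6 Mbps compressed stream for comparison.
print(f"6 Mbps stream: {storage_gb_per_hour(6e6):.1f} GB/hour")
```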

Q 4. How do you troubleshoot audio sync issues in a live production?

Troubleshooting audio sync issues in a live production requires a systematic approach. The most common cause is a timing mismatch between the audio and video paths. My first step is to check all connections, routing, and clock synchronization (genlock for video, word clock for digital audio). I’d then send a sync test signal, such as a flash-and-beep pattern or a clapperboard, through the chain and compare where the flash and the beep land at each stage. This helps pinpoint where the offset is introduced. A common scenario is a video processing device (frame synchronizer, scaler, or multiviewer) that delays the picture by one or more frames while the audio passes straight through, or an audio processor that adds delay of its own. To rectify this, I adjust audio delay using hardware or software tools so that both paths line up again. In the case of a camera feed with an audio delay, the camera’s audio settings might need adjustment. For complex issues, I would use specialized A/V sync test equipment to measure timing errors more precisely.

If the problem persists, it is possible that a faulty device is causing the issue. In that case, a systematic process of elimination would involve isolating each component to determine if the problem continues. This may involve replacing suspect components, one at a time, to find the culprit. Finally, detailed logging throughout the process is essential for maintaining a record of adjustments made and identifying persistent problems.
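When the offset is known in video frames, it helps to convert it into the units an audio delay line expects. The following is a small sketch with hypothetical helper names, assuming a 48 kHz audio sample rate by default.

```python
def frames_to_ms(frames: float, fps: float) -> float:
    """Convert a delay measured in video frames to milliseconds."""
    return frames * 1000.0 / fps


def ms_to_samples(delay_ms: float, sample_rate: int = 48000) -> int:
    """Convert a delay in milliseconds to whole audio samples."""
    return round(delay_ms * sample_rate / 1000.0)


# Video arrives 2 frames late at 25 fps, so the audio must be delayed to match.
delay_ms = frames_to_ms(2, 25)
print(delay_ms, ms_to_samples(delay_ms))  # 80.0 3840
```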

Q 5. Explain your understanding of frame rates, resolutions, and aspect ratios.

Frame rate, resolution, and aspect ratio are fundamental concepts in video. Frame rate refers to the number of still images (frames) displayed per second, measured in frames per second (fps). Higher frame rates result in smoother motion. Common frame rates include 24fps (film standard), 25fps (PAL regions), 30fps (29.97fps in NTSC-derived broadcast systems), 50fps, and 60fps. Resolution defines the number of pixels in an image, influencing the image’s sharpness and detail. Common resolutions include 720p (1280×720), 1080p (1920×1080), 4K (e.g., 3840×2160), and 8K (7680×4320). Aspect ratio is the ratio of the image’s width to its height. Common aspect ratios include 4:3 (standard definition) and 16:9 (widescreen). These parameters must match across the production chain to avoid issues such as judder, stretching, or unwanted letterboxing.

For instance, a film shot at 24fps will have a cinematic look. A gaming broadcast often utilizes 60fps for smoother gameplay, while a news broadcast typically uses 25/30fps.
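Aspect-ratio handling comes down to simple arithmetic. The sketch below reduces a resolution to its aspect ratio and works out how a source fits inside a destination frame without stretching; the helper names are hypothetical.

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> tuple[int, int]:
    """Reduce a resolution to its simplest width:height ratio."""
    g = gcd(width, height)
    return width // g, height // g


def fit_inside(src_w: int, src_h: int, dst_w: int, dst_h: int) -> tuple[int, int, int, int]:
    """Scale the source to fit the destination without distortion.

    Returns (scaled_width, scaled_height, side_bar, top_bar) in pixels.
    """
    scale = min(dst_w / src_w, dst_h / src_h)
    out_w, out_h = round(src_w * scale), round(src_h * scale)
    return out_w, out_h, (dst_w - out_w) // 2, (dst_h - out_h) // 2


print(aspect_ratio(3840, 2160))             # (16, 9)
print(fit_inside(1024, 768, 1920, 1080))    # (1440, 1080, 240, 0)
```

The second call shows a 4:3 source pillarboxed inside a 16:9 frame, with 240-pixel bars on each side.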

Q 6. What experience do you have with video switching and routing?

I have extensive experience with video switching and routing, using both hardware and software solutions. This includes operating various types of video switchers, from small, compact mixers used in smaller productions to large, multi-channel switchers used in major broadcast settings. I’m proficient in routing video signals from multiple sources to various outputs, including monitors, recording devices, and streaming platforms. My skills encompass using both traditional hardware switchers with physical buttons and controls, and software-based switchers which provide more flexibility and control through a computer interface.

For example, during a recent live sports event, I was responsible for seamlessly switching between multiple camera angles, replays, and graphics using a large-scale production switcher. I coordinated this with the director to maintain a smooth viewing experience for the audience.

Q 7. Describe your experience with different types of video encoders and decoders.

My experience with video encoders and decoders spans a range of technologies and applications. Encoders compress video signals for transmission or storage, while decoders decompress them for playback. I’ve worked with hardware encoders from various manufacturers, such as Teradek, and software encoders like OBS Studio and vMix. Hardware encoders are often preferred for professional broadcasts due to their reliability and performance, particularly when dealing with high-quality, low-latency streams. Software encoders are more flexible and cost-effective for smaller productions.

Similarly, I’ve worked with various decoders, ranging from dedicated hardware decoders used in professional settings to software-based decoders integrated into media players and streaming applications. Understanding the capabilities and limitations of different encoders and decoders is crucial for optimizing video quality and minimizing latency. For instance, choosing an encoder that supports H.265 can significantly reduce the bitrate required for a certain level of quality. Selecting the right encoder and decoder combination is important for optimizing bandwidth usage and ensuring minimal latency in live streaming applications.

Q 8. How familiar are you with IP-based video transmission protocols?

My familiarity with IP-based video transmission protocols is extensive. I have hands-on experience with protocols like RTP (Real-time Transport Protocol), RTCP (RTP Control Protocol), and RTSP (Real Time Streaming Protocol). RTP is the workhorse, carrying the actual video and audio packets. RTCP provides feedback on packet loss and jitter, crucial for quality monitoring and adjustments. RTSP acts as a control protocol, managing the start, stop, and pause of the stream. Understanding these protocols is fundamental to ensuring reliable and high-quality video transmission in any live capture environment. For instance, I’ve used RTP/RTCP over UDP for low-latency streaming and RTP/RTCP over TCP for more reliable, but potentially higher-latency streaming, depending on the specific needs of the production.

I also have experience with newer protocols like SRT (Secure Reliable Transport), which runs over UDP and adds packet retransmission and AES encryption, so it typically holds up better than plain RTP over UDP on lossy public-internet links. Choosing the right protocol is crucial; factors such as bandwidth, latency requirements, and network stability all inform this choice.
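As an illustration of what RTP actually carries, here is a minimal sketch that parses the 12-byte fixed RTP header defined in RFC 3550. The function name and the sample values (payload type 96, the SSRC) are made up for the example; CSRC lists, header extensions, and the payload are not handled.

```python
import struct


def parse_rtp_header(packet: bytes) -> dict:
    """Parse the 12-byte fixed RTP header (RFC 3550)."""
    if len(packet) < 12:
        raise ValueError("RTP packet shorter than the fixed header")
    b0, b1, seq, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    return {
        "version": b0 >> 6,
        "padding": bool(b0 & 0x20),
        "extension": bool(b0 & 0x10),
        "csrc_count": b0 & 0x0F,
        "marker": bool(b1 & 0x80),
        "payload_type": b1 & 0x7F,
        "sequence": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


# Hand-built sample header: version 2, dynamic payload type 96.
sample = struct.pack("!BBHII", 0x80, 96, 1234, 90000, 0xDEADBEEF)
print(parse_rtp_header(sample))
```

The sequence number and timestamp fields are what RTCP reports and jitter buffers rely on to detect loss and reordering.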

Q 9. What are your strategies for managing multiple video sources simultaneously?

Managing multiple video sources requires a robust system architecture and a methodical approach. I typically build a multi-camera setup around a production switcher (also called a vision mixer), which lets me cut between camera angles and other sources and apply effects and transitions. In larger productions, a routing matrix sits upstream of the switcher to provide more flexible and scalable signal routing.

My strategy involves meticulous pre-planning. This includes defining clear camera positions and roles, establishing communication protocols between the camera operators and director, and conducting thorough technical rehearsals before the live broadcast. Real-time monitoring of signal strength and quality for each source using dedicated monitoring tools is critical. For instance, I’ve successfully managed up to eight simultaneous sources including cameras, graphics, and pre-recorded video feeds, utilising sophisticated routing and switching technology to create a dynamic and engaging broadcast.

Q 10. How do you handle unexpected technical issues during a live broadcast?

Unexpected technical issues are unfortunately inevitable in live broadcasting. My approach is proactive and multi-layered. First, I emphasize redundancy wherever possible; having backup cameras, internet connections, and power sources reduces the impact of failures. Secondly, I have a deep understanding of troubleshooting techniques for common problems such as network glitches, equipment malfunctions, and audio dropouts. I always have a troubleshooting checklist ready, and my experience allows me to quickly diagnose the problem and implement the most appropriate solution.

For instance, if we experience a network outage, I can quickly switch to a backup connection or adjust the streaming settings to reduce bandwidth usage. If a camera malfunctions, I have alternative camera angles available. If the audio fails, we can switch to a backup audio source or quickly troubleshoot the existing one. The key is swift action and a calm, decisive approach. This requires a deep understanding of the entire system and the ability to think on your feet.

Q 11. What is your experience with character generators and graphics systems?

I have extensive experience with character generators (CGs) and graphics systems. I’m proficient in using ChyronHego and Vizrt systems to create and play out dynamic graphics, lower thirds, and other visual elements in live broadcasts, and Adobe After Effects to prepare animated elements in advance. This extends beyond simple text overlays; I can design and execute complex animations, incorporate real-time data, and seamlessly transition between different graphic elements.

My experience also encompasses integrating these graphics systems with live production switchers, ensuring smooth integration and flawless transitions between different video sources and graphics elements. For example, I have integrated live data feeds into CGs, creating dynamic infographics for sports broadcasts and financial news programs. This demonstrates my ability to handle complex systems and deliver high-quality visual content in high-pressure situations.

Q 12. Describe your experience with live streaming platforms (e.g., YouTube Live, Twitch).

I have worked extensively with various live streaming platforms including YouTube Live, Twitch, and Facebook Live. My experience encompasses setting up and managing live streams on these platforms, from configuring encoders and RTMP settings to managing chat interactions and audience engagement. I understand the unique requirements and best practices for each platform, optimizing stream settings for the best possible quality and reach.

For example, I’ve optimized YouTube Live streams for high-quality video with minimal latency, utilizing advanced encoding settings and appropriate bitrates. On Twitch, I’ve successfully integrated chat functionality, engaging with viewers and moderating the chat, creating a dynamic viewing experience. My ability to adapt to these specific platform needs and workflows is key.

Q 13. What is your understanding of latency in live streaming?

Latency in live streaming refers to the delay between the event occurring and the viewers seeing it on their screens. This delay is caused by encoding, processing, and transmission times. Lower latency is crucial for interactive experiences, such as live gaming or Q&A sessions. High latency can significantly impair the viewing experience, especially in time-sensitive scenarios.

Factors influencing latency include network conditions, encoder settings, and the streaming platform itself. Understanding these factors is crucial for optimizing the stream for the lowest possible latency while maintaining acceptable quality. For example, using low-latency protocols like SRT or adjusting the encoder settings to prioritize speed over quality can greatly reduce latency, but may also impact visual quality. This requires a delicate balance, optimized for the specific production needs.
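One way to reason about latency is to add up a per-stage budget. The sketch below does that with purely illustrative numbers (they are assumptions, not measurements), including a player buffer modeled as a number of buffered segments for a segment-based protocol.

```python
def player_buffer_ms(segment_seconds: float, segments_buffered: int) -> float:
    """Delay added by a player that buffers whole segments before playback."""
    return segment_seconds * segments_buffered * 1000.0


def glass_to_glass_ms(stages: dict[str, float]) -> float:
    """Total delay from camera lens to viewer screen."""
    return sum(stages.values())


# Illustrative budget only; real values depend on the equipment and network.
budget = {
    "capture_and_switching": 100.0,
    "encoding": 500.0,
    "contribution_network": 200.0,
    "player_buffer": player_buffer_ms(segment_seconds=2.0, segments_buffered=3),
}
print(glass_to_glass_ms(budget))  # 6800.0
```

In this example the player buffer dominates, which is why shorter segments or a low-latency transport usually give the largest improvement.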

Q 14. How do you ensure high-quality audio in a live capture environment?

Ensuring high-quality audio is paramount in live capture. My approach is multifaceted and begins with proper microphone selection and placement. The choice of microphone type depends on the environment and the type of audio being captured; lavalier mics for interviews, boom mics for capturing ambient sounds, or boundary mics for table-top setups are a few examples.

Careful monitoring and mixing using a professional audio mixer is crucial. Equalization, compression, and noise reduction can improve the clarity and quality of the audio signal. I also always use headphone monitoring to ensure all audio sources are balanced and free from distortion. In addition to this, employing redundancy, by using multiple microphones and audio interfaces, mitigates risks associated with equipment failure. Finally, conducting thorough sound checks before the live broadcast is essential in preventing unexpected audio issues. Paying attention to the smallest details makes the biggest difference in ensuring a high quality experience.

Q 15. What are the different types of microphones used in live capture, and when would you use each?

Microphone selection is crucial for high-quality live capture. The best choice depends heavily on the application and the environment. Here are some common types:

- Dynamic Microphones: These are robust and handle high sound pressure levels well, making them ideal for loud environments like concerts or sporting events. They’re less sensitive to handling noise and are relatively inexpensive. Examples include the Shure SM58 (vocal) and the Shure SM57 (instrument).

- Condenser Microphones: More sensitive than dynamic mics, capturing subtle nuances and quieter sounds. Excellent for studio recordings or situations requiring detailed audio capture, but they are more susceptible to handling noise and require phantom power (48V). Examples include Neumann U 87 Ai (vocal/instrument) and AKG C414 XLS (versatile).

- Ribbon Microphones: Known for their smooth, warm sound, these mics are often used on instruments such as guitar amplifiers or horns. The thin ribbon element is fragile and can be damaged by strong blasts of air, so they require careful handling.

- Shotgun Microphones: Highly directional, these are ideal for picking up distant sounds while minimizing background noise. Commonly used in film and video production for capturing dialogue.

- Lavalier Microphones (Lapel Mics): Small and unobtrusive, these clip onto clothing and are perfect for interviews or presentations where a natural sound is desired. Most are small condenser capsules, though dynamic models exist.

For instance, I would choose dynamic mics for a live music performance due to their durability and ability to handle loud stage volumes, while condenser mics might be preferable for a podcast recording in a quiet studio to capture the subtleties of the voices.

Q 16. What is your experience with audio mixing consoles?

I have extensive experience with audio mixing consoles, from small analog boards to large digital consoles like those from Yamaha, Avid, and Soundcraft. My experience spans various applications, including live concerts, corporate events, theatrical productions, and broadcast television. I’m proficient in setting up inputs, routing signals, equalization (EQ), compression, gating, and other signal processing techniques to achieve optimal audio quality. I understand the importance of gain staging to avoid clipping and maximizing dynamic range. I’m also comfortable working with different types of microphones, using preamps effectively, and creating effective monitor mixes for performers.

For example, during a recent live music event, I used a Yamaha digital console to manage over 20 inputs, meticulously setting up each channel’s EQ and compression to ensure clarity and balance between various instruments and vocals. Dealing with feedback issues is also a skill I’ve honed – identifying the problem frequency and using EQ or feedback suppression to fix it.
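Gain staging is usually discussed in decibels relative to full scale (dBFS). The following sketch converts a linear peak amplitude (where 1.0 is digital full scale) to dBFS and reports the remaining headroom before clipping; the function names are hypothetical.

```python
import math


def to_dbfs(amplitude: float) -> float:
    """Convert a linear amplitude (1.0 = full scale) to dBFS."""
    if amplitude <= 0:
        return float("-inf")
    return 20 * math.log10(amplitude)


def headroom_db(peak_amplitude: float) -> float:
    """Headroom left before clipping at 0 dBFS."""
    return -to_dbfs(peak_amplitude)


print(f"{to_dbfs(0.5):.2f} dBFS")             # -6.02 dBFS
print(f"{headroom_db(0.5):.2f} dB headroom")  # 6.02 dB headroom
```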

Q 17. Explain your knowledge of different video compression techniques.

Video compression is essential for reducing file sizes without significantly compromising quality. Different techniques achieve this through various methods:

- Intra-frame Compression (I-frames): Each frame is encoded independently, resulting in high quality but larger file sizes. Useful for editing and random access.

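- Inter-frame Compression (P-frames, B-frames): These frames are predicted from other frames (P-frames from earlier frames, B-frames from both earlier and later frames), which removes temporal redundancy and reduces file size. Commonly used in video streaming; it requires more processing power to encode and decode than intra-frame compression.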

- H.264 (MPEG-4 AVC): A widely used codec offering a good balance between compression efficiency and quality. Suitable for various applications, from Blu-ray discs to online streaming.

- H.265 (HEVC): The successor to H.264, offering better compression efficiency at the same quality level or higher quality at the same bitrate. This is becoming more prevalent in high-resolution video and streaming.

- VP9 (Google): A royalty-free codec that competes with H.265 in terms of efficiency and quality.

- AV1 (Alliance for Open Media): A newer, open-source codec aiming to provide even better compression than H.265 and VP9. Its adoption is still growing.

Choosing the right compression technique depends on factors like bandwidth availability, desired quality, storage capacity, and processing power. For live streaming, H.264 is still widely used due to its broad compatibility, while H.265 offers better compression for higher resolutions.
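The idea behind inter-frame compression can be shown with a toy example that stores only the pixels that changed since the previous frame. This is a deliberately simplified sketch: real codecs such as H.264 use motion-compensated prediction, transforms, and entropy coding rather than a plain list of changed pixels, and the function names here are hypothetical.

```python
def encode_delta(previous: list[int], current: list[int]) -> list[tuple[int, int]]:
    """Return (index, new_value) pairs for pixels that differ from the previous frame."""
    return [(i, cur) for i, (prev, cur) in enumerate(zip(previous, current)) if prev != cur]


def decode_delta(previous: list[int], delta: list[tuple[int, int]]) -> list[int]:
    """Rebuild the current frame from the previous frame plus the stored changes."""
    frame = list(previous)
    for index, value in delta:
        frame[index] = value
    return frame


key_frame = [10, 10, 10, 200, 200, 10, 10, 10]   # stored in full, like an I-frame
next_frame = [10, 10, 10, 10, 200, 200, 10, 10]  # a bright object moved one pixel

delta = encode_delta(key_frame, next_frame)
print(delta)                                          # [(3, 10), (5, 200)]
print(decode_delta(key_frame, delta) == next_frame)   # True
```

Only two of eight values need to be stored for the second frame, which is the redundancy that P-frames and B-frames exploit.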

Q 18. What is your experience with monitoring and recording video quality?

Monitoring and recording video quality is critical for ensuring a professional output. My experience includes using waveform monitors, vectorscopes, and other professional monitoring tools to assess luminance, chrominance, and overall image sharpness. I’m skilled in identifying and troubleshooting issues such as color banding, noise, compression artifacts, and other video impairments.

I regularly use tools like Blackmagic Design’s monitoring equipment and software solutions to ensure the video signal is clean, meets broadcast standards, and is suitable for the intended distribution platform. For example, checking for appropriate contrast and brightness levels is crucial for visibility and overall audience experience.

When recording, I ensure proper camera settings (shutter speed, aperture, ISO) and file format selection (ProRes, DNxHD etc.) are optimized for the highest possible quality while considering storage and workflow limitations.

Q 19. How do you handle color correction and balancing in live capture?

Color correction and balancing are essential for achieving a consistent and aesthetically pleasing look in live capture. I use color correction tools both in hardware (external processors) and software (DaVinci Resolve, OBS Studio) to adjust white balance, color temperature, saturation, and contrast. This often requires a combination of techniques:

- White Balancing: Setting the white point ensures accurate color representation throughout the video.

- Color Temperature Adjustment: This corrects for variations in lighting color, creating a unified look.

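- Gain Adjustment: Scales the signal level up or down, with the strongest visible effect in the highlights.

- Gamma Correction: Adjusts the tonal response curve, mainly changing midtone brightness while leaving the black and white points largely in place.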

In live settings, I often rely on software-based color correction tools, making real-time adjustments as needed to compensate for changes in lighting or scene content. For example, if the lighting shifts from one scene to another, adjusting the white balance and color temperature live helps maintain consistency.
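The adjustments listed above can be expressed as simple per-channel arithmetic. The sketch below derives white-balance gains from a gray reference patch and then applies gain and gamma to a normalized RGB pixel (values between 0.0 and 1.0). It is a simplified model with hypothetical function names; real color processors work in specific color spaces and with more controls.

```python
def white_balance_gains(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Per-channel gains that make a measured gray patch neutral (green as reference)."""
    return g / r, 1.0, g / b


def correct_pixel(pixel: tuple[float, float, float],
                  wb_gains: tuple[float, float, float],
                  gain: float = 1.0, gamma: float = 1.0) -> tuple[float, ...]:
    """Apply white balance, then overall gain, then gamma to one RGB pixel."""
    corrected = []
    for value, wb in zip(pixel, wb_gains):
        linear = min(max(value * wb * gain, 0.0), 1.0)  # clip to the legal range
        corrected.append(linear ** (1.0 / gamma))       # gamma > 1 lifts midtones
    return tuple(corrected)


gray_patch = (0.5, 0.4, 0.25)  # a warm cast: too much red, too little blue
gains = white_balance_gains(*gray_patch)
print(correct_pixel(gray_patch, gains))             # all three channels equal
print(correct_pixel(gray_patch, gains, gamma=2.2))  # same neutral gray, brighter midtone
```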

Q 20. What experience do you have with different types of lighting setups?

My experience encompasses various lighting setups, from basic three-point lighting to more complex arrangements for different scenarios. I understand the use of key lights, fill lights, and backlights to shape and illuminate subjects effectively. I’m familiar with various lighting instruments, including:

- Tungsten Lights: Produce warm light, but require significant power and generate considerable heat.

- Fluorescent Lights: Energy-efficient but can produce a cooler light temperature.

- LED Lights: Versatile, energy-efficient, and available in various color temperatures and intensities.

- HMI Lights: Powerful and produce daylight-balanced light, commonly used in outdoor shoots.

I can design lighting plans to suit different environments and aesthetic preferences, considering factors such as scene mood, subject matter, and camera position. For instance, a dramatic interview might require a low-key lighting scheme with a focused key light and dark background, whereas a bright, energetic event would necessitate a higher overall illumination.

Q 21. Explain your understanding of network infrastructure requirements for live streaming.

The network infrastructure for successful live streaming is critical. It involves several key components:

- Sufficient Bandwidth: At the production site, upload speed matters most and must comfortably exceed the total stream bitrate, which depends on the video resolution, frame rate, and encoder settings. Insufficient upload bandwidth leads to dropped frames, buffering, and stream interruptions.

- Reliable Internet Connection: A stable and high-speed connection (fiber optic or dedicated line is often preferred) is paramount. Wireless connections are less reliable and should be avoided for professional live streaming.

- Encoding/Streaming Server: This device or software encodes the video and audio into a format suitable for streaming and then transmits it to a Content Delivery Network (CDN).

- Content Delivery Network (CDN): A geographically distributed network of servers that caches the stream, ensuring faster delivery to viewers around the world. CDNs significantly reduce latency and improve viewer experience.

- Streaming Protocol: RTMP (Real Time Messaging Protocol) and HLS (HTTP Live Streaming) are common protocols for live streaming.

- Redundancy and Backup Systems: Multiple network connections, encoders, or streaming platforms are essential for redundancy, preventing outages in case of equipment failure.

Before a live stream, I always thoroughly assess network capabilities and implement redundancy where necessary to ensure a smooth and uninterrupted broadcast. Understanding and using network monitoring tools to troubleshoot issues in real-time is also critical.
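A pre-stream network assessment often includes a quick check that the measured upload speed leaves headroom above the stream bitrate. The sketch below uses a 1.5x headroom factor, which is an assumed rule of thumb rather than a standard, and hypothetical function names.

```python
def required_upload_mbps(video_kbps: float, audio_kbps: float,
                         headroom: float = 1.5) -> float:
    """Upload capacity to aim for, given stream bitrates and a safety factor."""
    return (video_kbps + audio_kbps) * headroom / 1000.0


def link_is_sufficient(measured_upload_mbps: float, video_kbps: float,
                       audio_kbps: float, headroom: float = 1.5) -> bool:
    """True if the measured upload speed covers the stream plus headroom."""
    return measured_upload_mbps >= required_upload_mbps(video_kbps, audio_kbps, headroom)


print(required_upload_mbps(6000, 160))      # about 9.24 Mbps
print(link_is_sufficient(20.0, 6000, 160))  # True
print(link_is_sufficient(8.0, 6000, 160))   # False
```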

Q 22. How do you manage bandwidth during a live stream?

Managing bandwidth during a live stream is crucial for a smooth, high-quality viewing experience. It’s like managing the flow of water through a pipe: push more data (bitrate) than the pipe (available bandwidth) can carry and the stream backs up and drops frames; push too little and picture quality suffers. We employ several strategies to optimize this.

- Encoding Optimization: We use high-quality encoders that allow for adjusting bitrate (the amount of data transmitted per second) and resolution dynamically. A lower bitrate means less bandwidth consumption, but also potentially lower quality. We carefully balance these factors based on the target audience and platform. For example, a live stream targeting mobile users might use a lower bitrate compared to one for desktop viewers.

- Content Delivery Network (CDN): CDNs are geographically distributed servers that cache the stream, ensuring viewers are served content from a server closest to their location. This significantly reduces latency and stress on the main server. Imagine having multiple water fountains in different neighborhoods instead of just one central fountain: each person gets their water quicker and more efficiently.

- Adaptive Bitrate Streaming (ABR): ABR technology automatically adjusts the bitrate based on the viewer’s connection speed. If the connection is slow, the stream quality adapts to maintain playback. This ensures a more consistent viewing experience for everyone, regardless of their internet speed.

- Bandwidth Monitoring Tools: We use sophisticated monitoring tools to track real-time bandwidth usage and identify potential bottlenecks. Think of these as flow meters on our ‘water pipes,’ giving us real-time information on the bandwidth usage.
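The adaptive bitrate idea can be sketched as a simple selection rule: pick the highest rendition whose bitrate fits within a safety margin of the measured throughput. The bitrate ladder and the 0.8 safety factor below are hypothetical values for illustration; real players use more elaborate heuristics that also consider buffer level.

```python
# Hypothetical bitrate ladder, highest quality first: (name, video kbps).
LADDER = [("1080p", 6000), ("720p", 3000), ("480p", 1500), ("360p", 800)]


def pick_rendition(throughput_kbps: float, ladder=LADDER, safety: float = 0.8) -> str:
    """Choose the best rendition that fits within a fraction of measured throughput."""
    budget = throughput_kbps * safety
    for name, kbps in ladder:
        if kbps <= budget:
            return name
    return ladder[-1][0]  # fall back to the lowest rendition


print(pick_rendition(10000))  # 1080p
print(pick_rendition(5000))   # 720p
print(pick_rendition(500))    # 360p
```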

Q 23. What is your experience with remote live production techniques?

My experience with remote live production techniques is extensive. I’ve led and participated in numerous projects utilizing various remote collaboration tools and workflows. We commonly leverage cloud-based platforms for video and audio routing, switching, and graphics integration. This allows our teams to work from anywhere globally.

For example, during a recent international music festival, our team was spread across three continents. We used a cloud-based platform to connect all the remote cameras, audio feeds, and control rooms, allowing for seamless collaboration in real-time. We used secure protocols like SRT (Secure Reliable Transport) to ensure high-quality transmission even under challenging network conditions.

This remote approach offers significant advantages, including reduced travel costs, increased efficiency, and the ability to access a wider talent pool. However, it requires careful planning and a deep understanding of network protocols and security best practices.

Q 24. What are your strategies for ensuring redundancy in live capture systems?

Redundancy in live capture systems is paramount to preventing outages and ensuring business continuity. It’s like having a backup generator during a power outage – crucial for uninterrupted operation. Our strategies include:

- Multiple Encoding Streams: We encode the stream multiple times using different encoders and bitrates. Even if one encoder fails, others can continue the transmission.

- Redundant Network Connections: Utilizing diverse network paths (e.g., primary and secondary internet connections) ensures that if one connection fails, the other seamlessly takes over.

- Server Redundancy: Our systems are designed with redundant servers. If the primary server fails, the secondary server automatically takes over, ensuring continuous operation.

- Failover Mechanisms: We implement automatic failover mechanisms to instantly switch to the backup systems in case of failures. This is like having an automatic switch that instantly redirects the water flow in case of a pipe leak.
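At its core, a failover mechanism is a priority list combined with health checks. The sketch below selects the first healthy source in priority order; the source names and the health dictionary are hypothetical, and a real system would obtain health from heartbeats or signal-presence detection.

```python
from typing import Optional


def select_active(priority: list[str], health: dict[str, bool]) -> Optional[str]:
    """Return the highest-priority source that is currently healthy, or None."""
    for name in priority:
        if health.get(name, False):  # unknown sources count as unhealthy
            return name
    return None


priority = ["encoder_primary", "encoder_backup", "encoder_cloud"]

print(select_active(priority, {"encoder_primary": True, "encoder_backup": True}))
# encoder_primary
print(select_active(priority, {"encoder_primary": False, "encoder_backup": True}))
# encoder_backup
print(select_active(priority, {}))
# None
```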

Q 25. Describe your experience with troubleshooting network connectivity issues in a live production.

Troubleshooting network connectivity issues is a common occurrence in live production. It requires a systematic approach. We often use tools like ping, traceroute, and network monitoring software to identify the problem’s source. We have specific troubleshooting flow charts which help us methodically approach issues from the simplest to most complex possibilities.

For instance, during a live sporting event, we experienced sudden audio dropouts. Our troubleshooting began with checking the audio cable connections, then moved to analyzing network traffic with packet capture tools. This revealed excessive packet loss on a specific segment of the network. We then worked with the venue’s IT team to identify and fix the network congestion. The problem turned out to be caused by an unexpected surge in network traffic from a nearby event.

Experience shows that a methodical, systematic approach, combined with the use of network analysis tools, is key to efficient problem-solving.
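Packet loss of the kind described above can be estimated from a capture by looking for gaps in RTP sequence numbers. The sketch below is a simplified version with a hypothetical function name; it assumes packets are listed in arrival order without reordering or duplicates, and it handles the 16-bit sequence number wrapping from 65535 back to 0.

```python
def count_lost_packets(sequence_numbers: list[int]) -> int:
    """Count missing packets from gaps between consecutive 16-bit sequence numbers."""
    lost = 0
    for previous, current in zip(sequence_numbers, sequence_numbers[1:]):
        gap = (current - previous) % 65536  # modulo handles wraparound
        if gap > 1:
            lost += gap - 1
    return lost


print(count_lost_packets([100, 101, 102, 105, 106]))  # 2 (103 and 104 missing)
print(count_lost_packets([65534, 65535, 0, 3]))       # 2 (1 and 2 missing)
```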

Q 26. How do you ensure data security and integrity in a live capture workflow?

Data security and integrity are critical in live capture workflows. We employ several strategies to protect sensitive information and ensure data quality:

Secure Transmission Protocols: We use secure protocols like SRT and RTMP over HTTPS to encrypt the stream and prevent unauthorized access.

Access Control: Strict access control measures are implemented to limit access to sensitive data and systems only to authorized personnel.

Data Backup and Recovery: Regular backups of all critical data are performed and stored in secure, geographically separate locations. This ensures business continuity in case of data loss or system failure.

Regular Security Audits: Our systems undergo regular security audits to identify and address potential vulnerabilities.
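On the integrity side, one common way to confirm that a backup copy matches the original recording is to compare cryptographic checksums. The sketch below uses SHA-256 from Python's standard library; the helper names and file names are hypothetical, and the temporary files stand in for real recordings.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file in chunks so large recordings are not loaded into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_copy(original: str, copy: str) -> bool:
    """True when both files have identical SHA-256 digests."""
    return sha256_of_file(original) == sha256_of_file(copy)


with tempfile.TemporaryDirectory() as tmp:
    original = os.path.join(tmp, "recording.bin")
    backup = os.path.join(tmp, "recording_backup.bin")
    for path in (original, backup):
        with open(path, "wb") as f:
            f.write(b"example media payload" * 1000)

    intact = verify_copy(original, backup)

    # Simulate corruption of the backup by appending a stray byte.
    with open(backup, "ab") as f:
        f.write(b"\x00")
    corruption_detected = not verify_copy(original, backup)
```

Storing the digest alongside each backup allows later checks without needing access to the original file.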

Q 27. What is your experience with cloud-based live streaming solutions?

My experience with cloud-based live streaming solutions is extensive. We frequently use platforms like AWS Elemental MediaLive, Azure Media Services, and Wowza Cloud for encoding, transcoding, and distribution of live streams. These cloud-based solutions offer scalability, reliability, and cost-effectiveness. They let us adjust resources to each event's requirements and handle large audiences without significant upfront investment in infrastructure.

For instance, a recent virtual conference used AWS Elemental MediaLive to handle the multi-camera feeds and encoding, resulting in a seamless experience for thousands of simultaneous viewers. Cloud-based solutions also offer advanced features such as analytics dashboards, which give real-time insight into viewer engagement and stream health.

Q 28. Describe a time you had to quickly resolve a technical issue during a live event.

During a live broadcast of a major political debate, we experienced a sudden failure of our primary video switcher just moments before the event began. This was a high-pressure situation as a global audience was waiting. Our team instantly switched to our redundant switcher, which was already online and pre-configured with the same settings. While this ensured a live feed wasn’t lost, we then had to quickly diagnose the issue with the primary switcher. It turned out to be a power supply failure. We swapped out the faulty power supply, ensuring our primary switcher would be ready for the post-debate analysis. Our rigorous testing and redundancy protocols, along with a well-trained team, ensured minimal disruption during the event.

Key Topics to Learn for Live Capture Techniques Interview

- Understanding Different Capture Methods: Explore various live capture techniques, including screen recording, webcam recording, and audio capture. Consider the strengths and weaknesses of each method in different contexts.

- Hardware and Software Considerations: Discuss the technical aspects of live capture. This includes choosing appropriate hardware (microphones, cameras, etc.), mastering relevant software (streaming platforms, editing tools), and troubleshooting common technical issues.

- Audio and Video Quality Optimization: Learn how to achieve professional-quality audio and video. This includes understanding concepts like bitrate, frame rate, microphone placement, and lighting techniques.

- Live Streaming Platforms and Workflow: Familiarize yourself with popular live streaming platforms and their unique features. Develop an efficient workflow for pre-production, live capture, and post-production.

- Encoding and Compression Strategies: Understand different video codecs and their impact on file size and quality. Learn how to choose appropriate settings for different platforms and bandwidth limitations.

- Problem-Solving and Troubleshooting: Be prepared to discuss common problems encountered during live capture, such as audio dropouts, video lag, and connectivity issues. Showcase your ability to identify and resolve these challenges efficiently.

- Live Capture Ethics and Best Practices: Understand the legal and ethical implications of live capture, including copyright, privacy, and responsible content creation.
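For the bitrate and encoding topics above, it helps to be able to do the arithmetic quickly in an interview. The sketch below estimates recording size from bitrate and duration; the function name and the example bitrates are assumptions chosen for illustration, and the result ignores container overhead.

```python
def recording_size_gb(video_kbps: float, audio_kbps: float, duration_minutes: float) -> float:
    """Approximate file size in gigabytes (10^9 bytes) for a constant-bitrate recording."""
    total_bits = (video_kbps + audio_kbps) * 1000 * duration_minutes * 60
    return total_bits / 8 / 1e9


# Hypothetical example: 6000 kbps video plus 128 kbps audio for one hour.
size = recording_size_gb(6000, 128, 60)
print(f"{size:.2f} GB")
```

The same formula, rearranged, gives the maximum bitrate that fits a given storage or upload budget.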

Next Steps

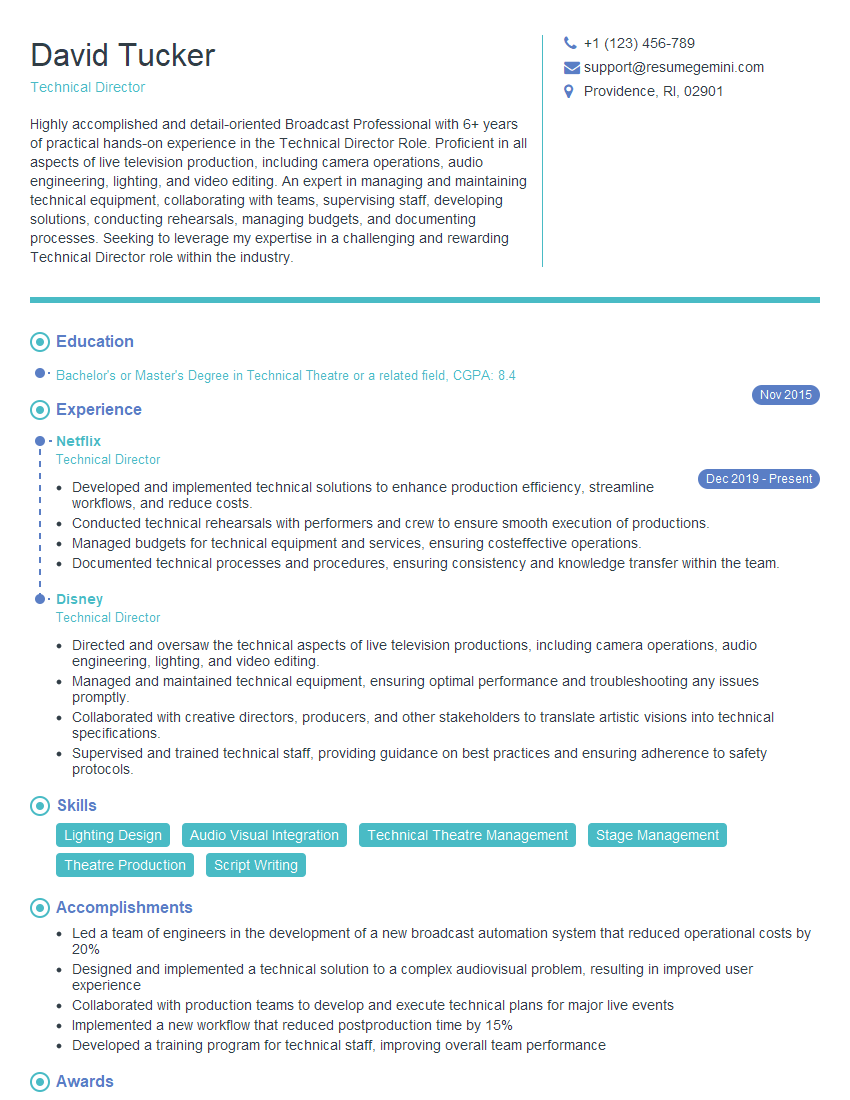

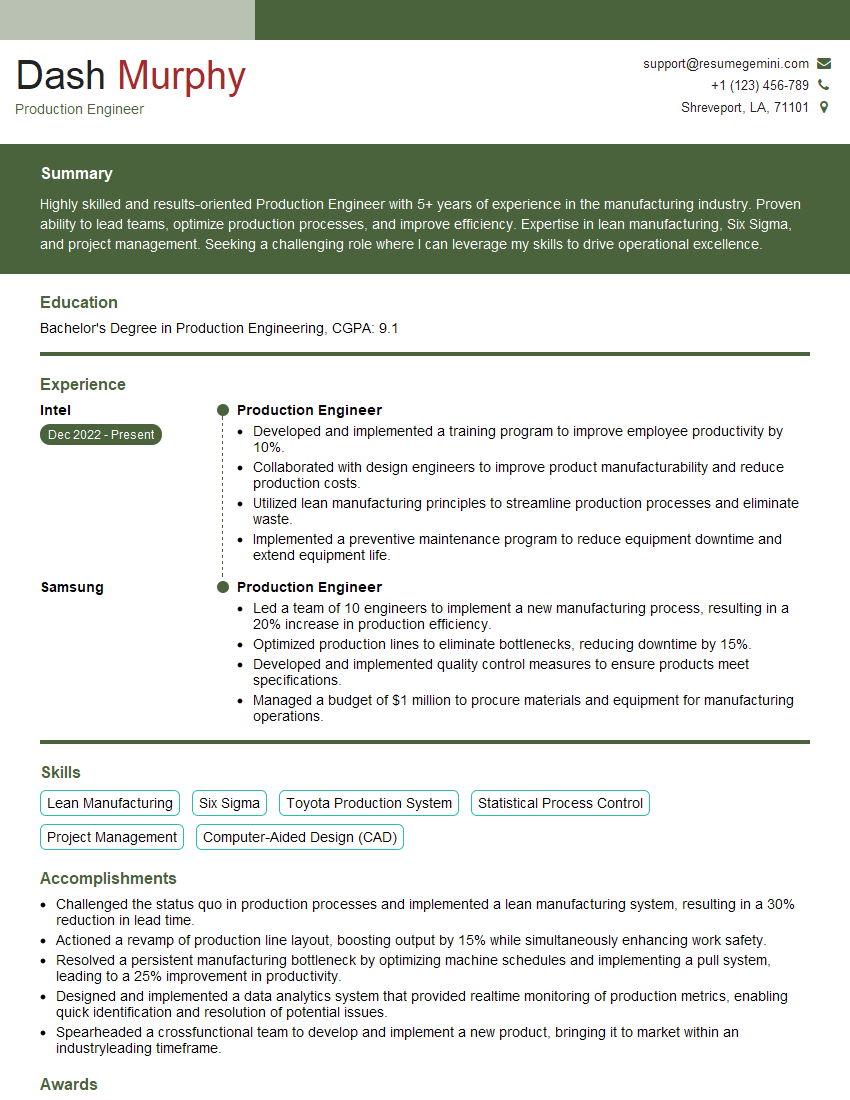

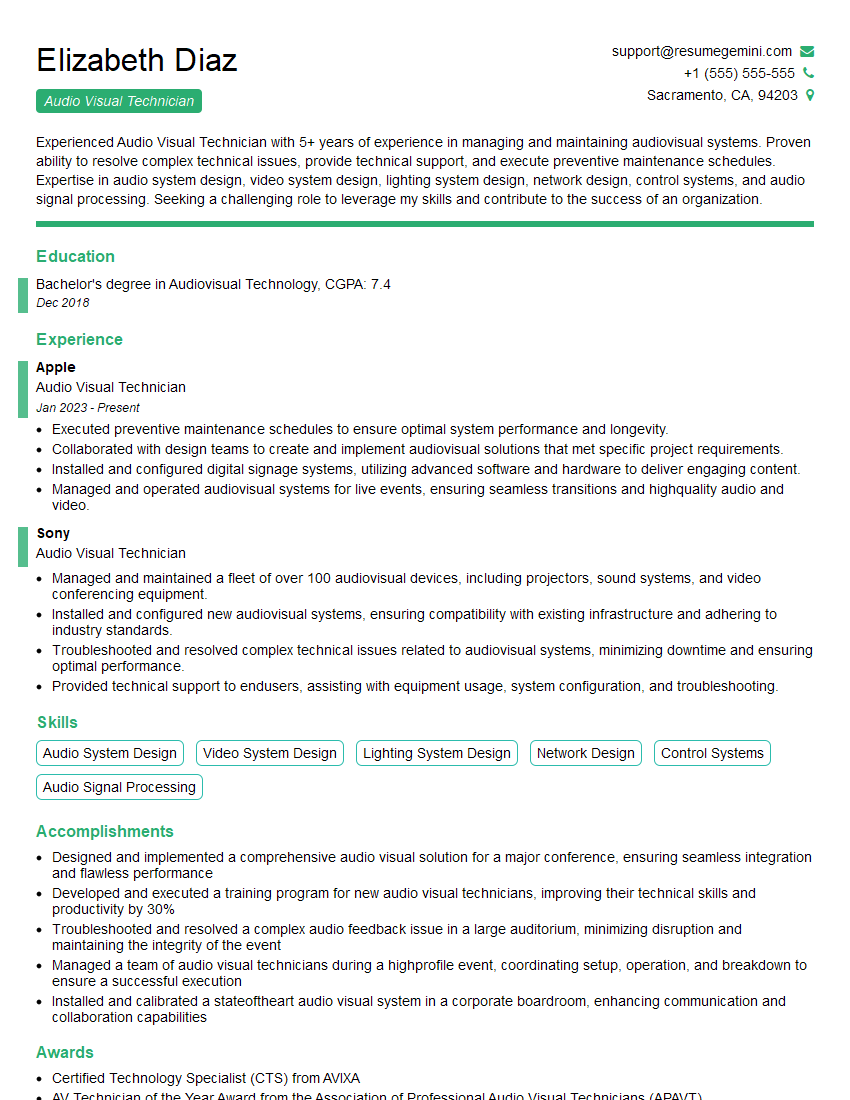

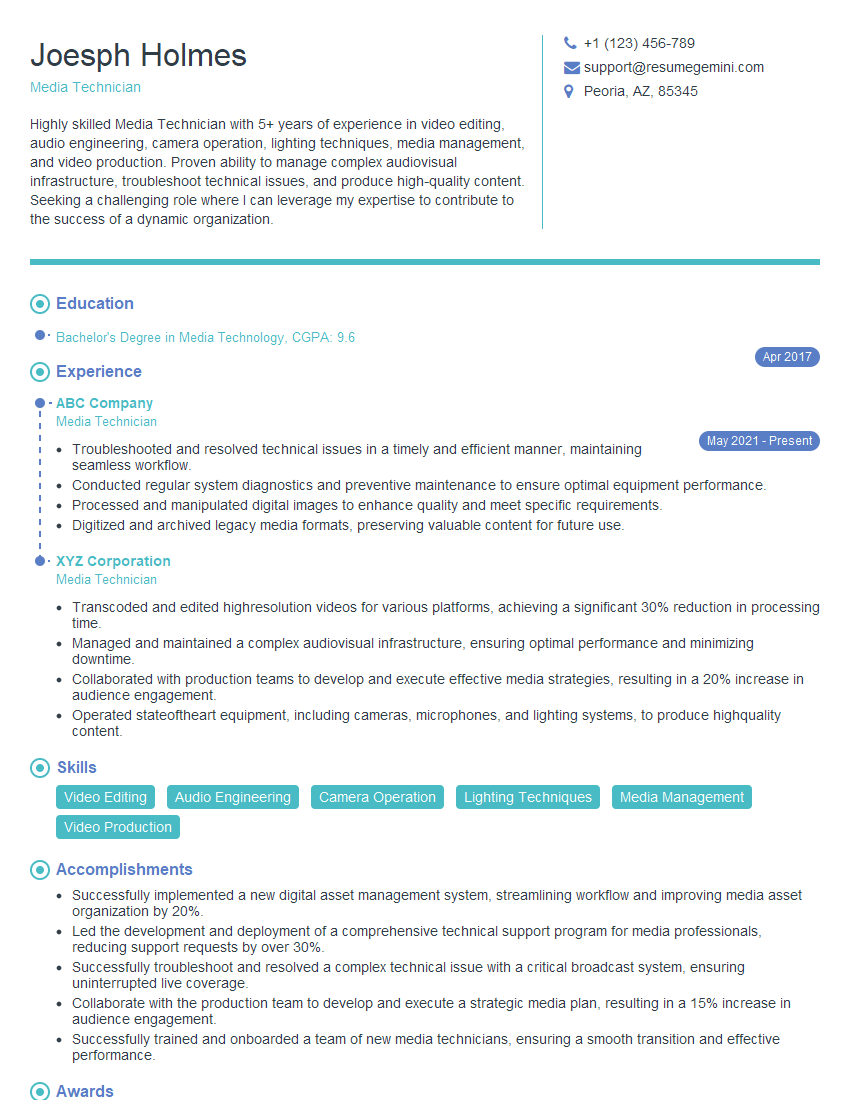

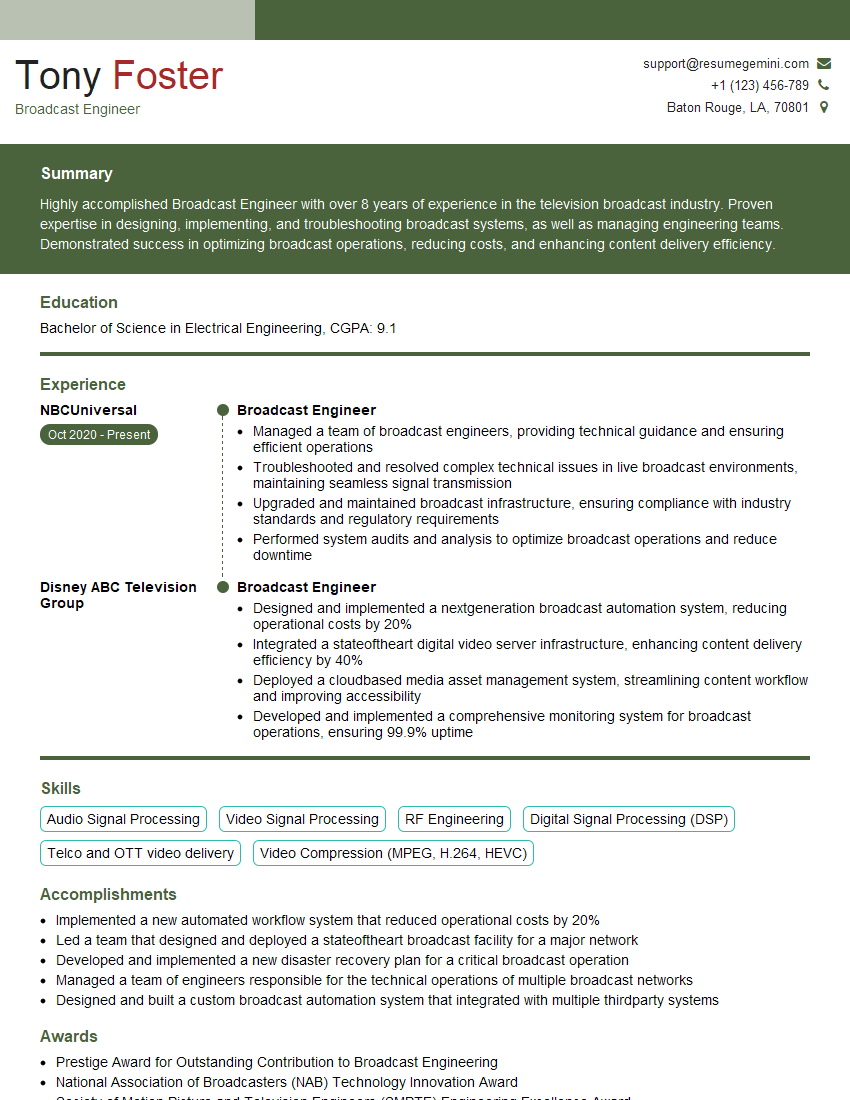

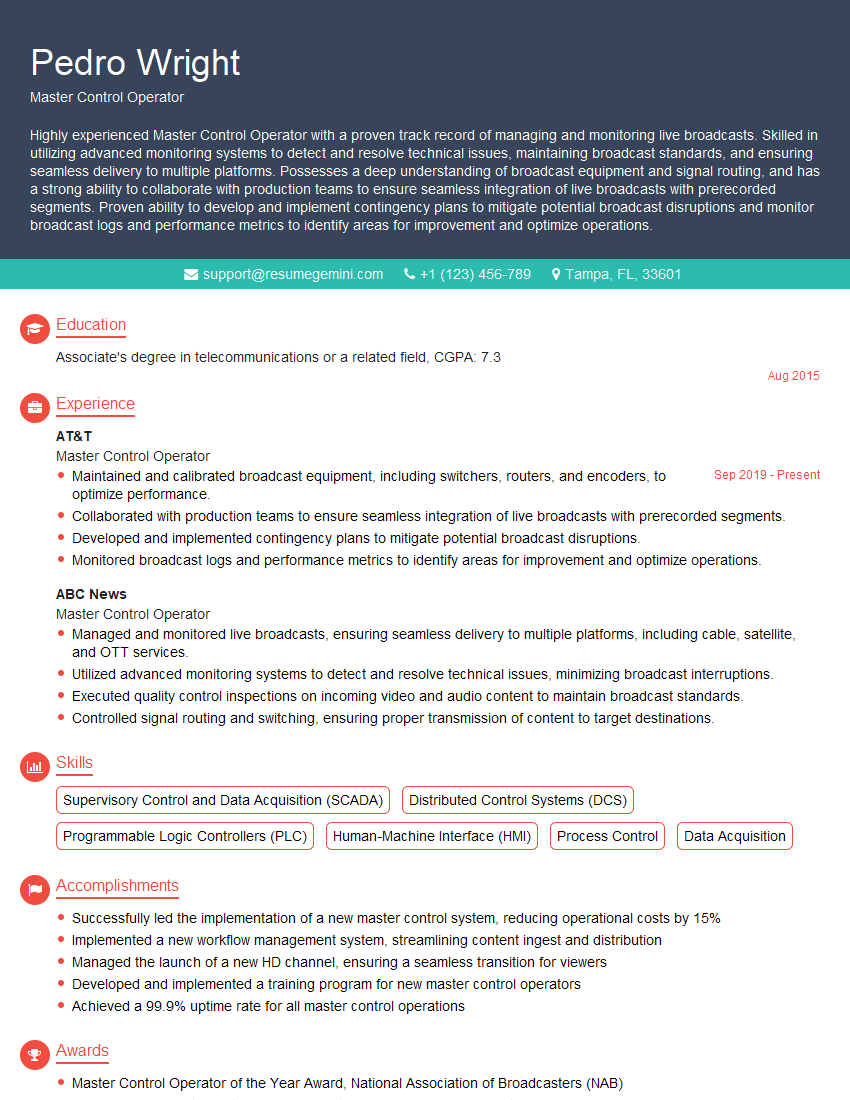

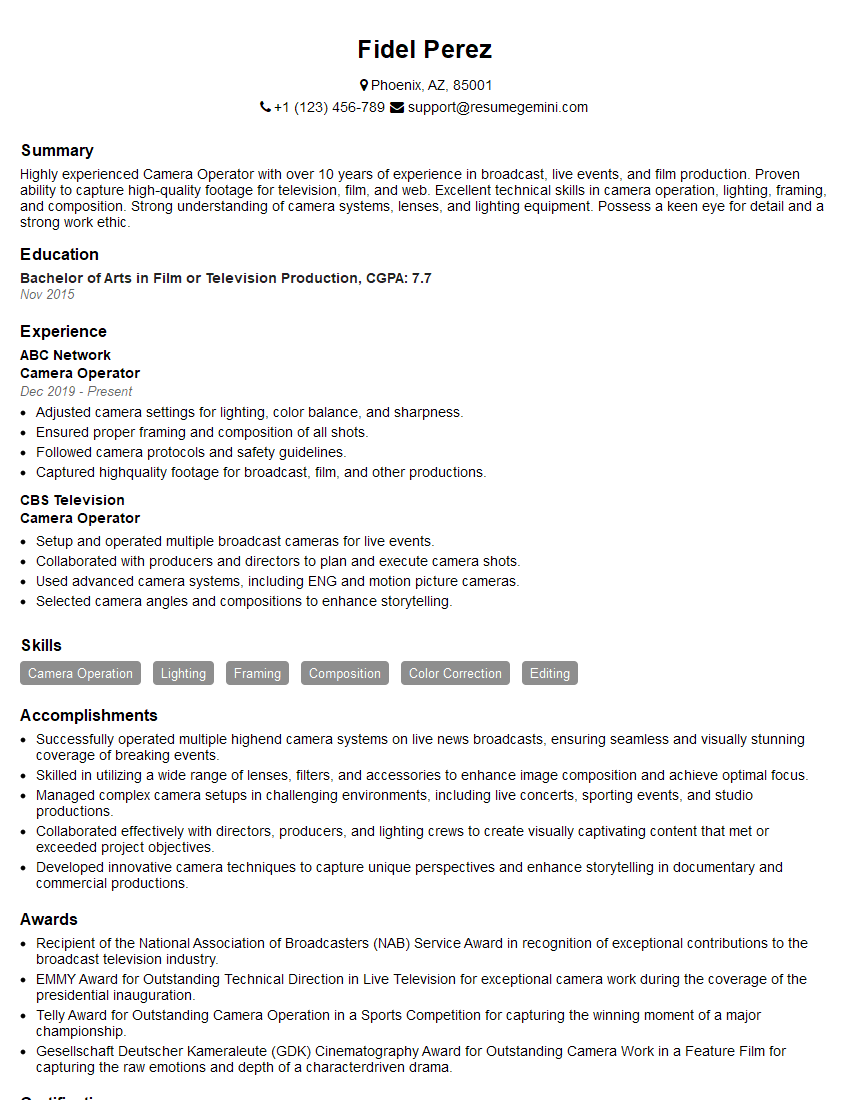

Mastering Live Capture Techniques opens doors to exciting career opportunities in broadcasting, online education, and content creation. A strong understanding of these techniques makes you a highly valuable asset in today’s digital landscape. To maximize your job prospects, it’s crucial to create an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource to help you build a professional and impactful resume. Examples of resumes tailored to Live Capture Techniques are available to guide you in crafting a compelling document that showcases your expertise.
