The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Measurement and Metering Principles interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Measurement and Metering Principles Interview

Q 1. Explain the different types of measurement uncertainties and how to minimize them.

Measurement uncertainty encompasses all the possible errors that can affect a measurement result. It’s not about a single mistake, but rather a range of values within which the true value likely lies. We categorize uncertainties into two main types:

- Random uncertainties: These are unpredictable variations in measurements caused by factors like noise in the sensor, slight variations in the environment, or even human error in reading the instrument. Think of it like slightly inconsistent results when weighing the same object multiple times. They usually follow a statistical distribution and can be reduced by averaging multiple measurements.

- Systematic uncertainties: These are consistent, repeatable errors that are inherent to the measurement system or process. A poorly calibrated instrument, for instance, would introduce a systematic error – each reading would be consistently off by the same amount. Identifying and correcting the source of systematic errors is crucial.

Minimizing uncertainties requires a multi-pronged approach:

- Careful Calibration: Regularly calibrate your instruments against traceable standards to identify and correct systematic errors.

- Multiple Measurements: Taking multiple readings and averaging reduces the impact of random uncertainties. Statistical analysis can then quantify the remaining uncertainty.

- Environmental Control: Maintain a stable environment to minimize variations affecting the measurement process. This might involve temperature control or vibration isolation, depending on the application.

- Instrument Selection: Choose instruments with appropriate specifications to match the accuracy requirements of your application.

- Proper Procedures: Follow established procedures carefully to avoid introducing human error.

- Uncertainty Analysis: Quantify uncertainties using statistical methods like standard deviation to accurately estimate the overall uncertainty of a measurement.

For example, imagine measuring the flow rate of a liquid. Random uncertainty could arise from small fluctuations in the flow, while a systematic error could arise from an improperly installed flow meter that consistently underestimates the flow.
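The effect of averaging on random uncertainty can be sketched in Python. This is a minimal illustration, not a full uncertainty budget: the readings are made-up values and `summarize_readings` is a hypothetical helper name.

```python
import math
import statistics

def summarize_readings(readings):
    """Return mean, sample standard deviation, and standard error of the mean."""
    n = len(readings)
    mean = statistics.mean(readings)
    std_dev = statistics.stdev(readings)   # sample standard deviation (n - 1)
    std_error = std_dev / math.sqrt(n)     # random uncertainty of the averaged value
    return mean, std_dev, std_error

# Hypothetical repeated flow readings in L/min (illustrative data only)
readings = [10.2, 9.8, 10.1, 9.9, 10.0]
mean, std_dev, std_error = summarize_readings(readings)
print(f"mean={mean:.3f}, s={std_dev:.3f}, standard error={std_error:.3f}")
```

The standard error shrinks with the square root of the number of readings, which is why averaging helps with random effects. It does nothing for a systematic offset, which shifts every reading and the mean alike.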

Q 2. Describe the principles of operation for a flow meter (e.g., orifice plate, Coriolis).

Flow meters measure the volume or mass flow rate of a fluid. Different principles govern various types:

- Orifice Plate: This is a simple and widely used device. A thin plate with a precisely sized hole (orifice) is inserted into the pipe. As the fluid flows through the smaller opening, its velocity increases, causing a pressure drop across the plate. This pressure difference is measured, and the flow rate is calculated using Bernoulli’s equation and empirically derived discharge coefficients. The accuracy depends on factors like the Reynolds number of the flow and the precise dimensions of the orifice.

- Coriolis Meter: These meters offer high accuracy and are often used for mass flow measurements. They operate by forcing the fluid to flow through a vibrating tube. The moving fluid resists the tube’s oscillation, and the resulting Coriolis force (an inertial force acting on mass that moves within an oscillating or rotating reference frame, unrelated here to the rotation of the earth) twists the tube. The twist appears as a phase shift between the inlet and outlet sides that is proportional to the mass flow rate. Because the method measures mass flow directly, it is largely insensitive to changes in temperature, pressure, or fluid density. It’s preferred for applications demanding high precision, such as custody transfer.

Other types of flow meters include turbine, ultrasonic, positive displacement, and vortex meters.

The choice depends on factors such as the fluid properties (viscosity, density), accuracy requirements, pressure and temperature ranges, and cost considerations.
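The orifice plate calculation described above can be sketched as follows. This is a simplified incompressible-flow form of the Bernoulli-based equation. The function name, parameter names, and the default discharge coefficient of 0.61 (a typical textbook value for a sharp-edged orifice) are assumptions for illustration; a real installation would take the coefficient from a standard or a calibration.

```python
import math

def orifice_flow_rate(delta_p_pa, pipe_d_m, orifice_d_m, density_kg_m3,
                      discharge_coeff=0.61):
    """Volumetric flow (m^3/s) through an orifice plate, incompressible fluid."""
    beta = orifice_d_m / pipe_d_m                        # diameter ratio
    orifice_area = math.pi * orifice_d_m ** 2 / 4
    approach_factor = 1 / math.sqrt(1 - beta ** 4)       # velocity-of-approach factor
    return (discharge_coeff * approach_factor * orifice_area
            * math.sqrt(2 * delta_p_pa / density_kg_m3))

# Hypothetical case: water, 100 mm pipe, 50 mm orifice, 10 kPa differential
q = orifice_flow_rate(10_000, 0.100, 0.050, 998.0)
print(f"{q * 1000:.2f} L/s")
```

Note the square-root relationship: quadrupling the differential pressure only doubles the computed flow, which limits the usable turndown of this meter type.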

Q 3. What are the common methods for calibrating pressure transducers?

Pressure transducers are calibrated to ensure their output accurately reflects the applied pressure. Common methods include:

- Deadweight Tester: This is a primary standard method that uses precisely known weights to apply a known pressure to the transducer. The transducer’s output is compared to the known pressure to establish its accuracy. It’s highly accurate but can be expensive and time-consuming.

- Calibration against a Secondary Standard: A secondary standard, such as a calibrated pressure gauge or another highly accurate pressure transducer, can be used to calibrate the transducer. While less accurate than a deadweight tester, this is often more convenient and cost-effective.

- In-situ Calibration: For some applications, in-situ calibration is possible. This involves comparing the transducer’s output to another measurement method while the transducer is installed in the system. This requires additional equipment and careful consideration of the system dynamics.

Regardless of the method, a detailed calibration certificate should be generated, documenting the calibration procedures, results, and the uncertainty associated with the calibration.
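Whatever the reference, the comparison step usually comes down to computing the error at each test point and checking it against a tolerance. A minimal sketch, with hypothetical function names and made-up readings:

```python
def calibration_errors(reference, measured, span):
    """Error at each test point, expressed as a percentage of span."""
    return [100.0 * (m - r) / span for r, m in zip(reference, measured)]

def within_tolerance(errors_pct, tolerance_pct):
    """True if every test point is inside the +/- tolerance band."""
    return all(abs(e) <= tolerance_pct for e in errors_pct)

# Hypothetical five-point check of a 0-100 kPa transducer
reference = [0.0, 25.0, 50.0, 75.0, 100.0]    # applied pressure from the standard
measured = [0.1, 25.2, 50.1, 74.9, 99.8]      # transducer output in kPa
errors = calibration_errors(reference, measured, span=100.0)
print([round(e, 2) for e in errors], within_tolerance(errors, 0.25))
```

The per-point errors are what would typically be recorded on the calibration certificate, together with the uncertainty of the reference itself.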

Q 4. How do you select the appropriate measurement instrument for a given application?

Selecting the right measurement instrument involves a careful assessment of several factors:

- Measurement Range and Accuracy: Determine the expected range of values and the required accuracy. A high-precision instrument might be necessary for critical measurements, while a less precise instrument may suffice for routine monitoring.

- Environmental Conditions: Consider the operating temperature, pressure, humidity, and potential for vibration or contamination. The instrument must be compatible with these conditions.

- Fluid Properties: For flow measurements, the fluid’s viscosity, density, and conductivity will influence the choice of meter.

- Cost and Maintenance: Weigh the initial cost of the instrument against the cost of calibration, maintenance, and potential downtime.

- Output Signal and Compatibility: Ensure the instrument’s output signal (analog, digital, etc.) is compatible with the data acquisition system.

- Safety: In hazardous environments, the instrument must meet safety standards and be intrinsically safe.

For instance, selecting a temperature sensor for a high-temperature furnace would require a sensor with a suitable high-temperature rating, robust construction and possibly built-in safety features. On the other hand, a less robust sensor might be adequate for measuring room temperature.

Q 5. Explain the concept of hysteresis in measurement systems.

Hysteresis in a measurement system refers to the difference in output for the same input value depending on whether the input is increasing or decreasing. Imagine a spring: if you stretch it to a certain length and then release it, it doesn’t return exactly to its original position. There’s a slight lag or ‘memory’ effect. The same happens in some measurement instruments.

This difference between the increasing and decreasing input curves is hysteresis. It’s caused by internal friction, mechanical compliance or other non-linear behaviors within the instrument. High hysteresis can lead to inaccurate readings, especially if the input value is frequently changing.

Hysteresis needs to be considered when specifying and using sensors. It’s generally characterized by a hysteresis band, which represents the maximum difference in output for the same input value during increasing and decreasing cycles. Low hysteresis is desirable in high precision applications.
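One way to estimate the hysteresis band from test data is to sweep the input up and then down through the same points and take the largest gap. The sketch below assumes both sweeps were taken at identical input points; the function name and readings are illustrative only.

```python
def hysteresis_percent(up_readings, down_readings, span):
    """Largest up/down output difference at matching inputs, as % of span."""
    max_gap = max(abs(u - d) for u, d in zip(up_readings, down_readings))
    return 100.0 * max_gap / span

# Hypothetical outputs at inputs of 0, 25, 50, 75, and 100 % of range
up_sweep = [0.0, 24.8, 49.7, 74.9, 100.0]     # input increasing
down_sweep = [0.2, 25.3, 50.3, 75.2, 100.0]   # input decreasing
print(f"hysteresis = {hysteresis_percent(up_sweep, down_sweep, 100.0):.2f} % of span")
```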

Q 6. What is the difference between accuracy and precision in measurement?

Accuracy and precision are often confused, but they represent different aspects of measurement quality.

- Accuracy refers to how close a measurement is to the true value. A highly accurate measurement is very close to the real value. Think of hitting the bullseye on a target.

- Precision refers to how repeatable or consistent a measurement is. A precise measurement yields very similar values when repeated, even if those values are not close to the true value. Think of all the shots clustering tightly together on the target, but not necessarily near the bullseye.

It’s possible to have high precision but low accuracy (consistent but wrong) and vice versa. Ideal measurements are both accurate and precise. Accurate and precise measurements are obtained through careful calibration, instrument selection, and proper measurement techniques.
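The distinction can be put into numbers: bias (mean minus true value) reflects accuracy, while the spread of repeated readings reflects precision. A small sketch with made-up data and a hypothetical helper name:

```python
import statistics

def bias_and_spread(readings, true_value):
    """Return (bias, sample standard deviation) for repeated readings."""
    bias = statistics.mean(readings) - true_value    # accuracy indicator
    spread = statistics.stdev(readings)              # precision indicator
    return bias, spread

true_value = 10.0
precise_but_biased = [10.48, 10.52, 10.50, 10.50]    # tight cluster, off target
accurate_but_scattered = [9.6, 10.4, 9.8, 10.2]      # centered, wide scatter

bias_a, spread_a = bias_and_spread(precise_but_biased, true_value)
bias_b, spread_b = bias_and_spread(accurate_but_scattered, true_value)
print(f"A: bias={bias_a:.3f}, spread={spread_a:.3f}")
print(f"B: bias={bias_b:.3f}, spread={spread_b:.3f}")
```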

Q 7. Describe different types of temperature sensors and their applications.

Numerous temperature sensors exist, each suitable for different applications:

- Thermocouples: These are based on the Seebeck effect – the generation of a voltage when the junctions of two dissimilar metals are held at different temperatures. They are robust, relatively inexpensive, and can measure a wide range of temperatures, but their accuracy is typically lower than other sensors.

- Resistance Temperature Detectors (RTDs): These sensors utilize the change in electrical resistance of a material (typically platinum) as a function of temperature. They offer high accuracy and stability, but are more expensive and generally have a lower temperature range than thermocouples.

- Thermistors: These are semiconductor devices whose resistance changes significantly with temperature. They are very sensitive to temperature changes and inexpensive, but their accuracy is typically lower and they have a limited temperature range.

- Infrared (IR) Thermometers: These non-contact sensors measure temperature by detecting the infrared radiation emitted by an object. They are useful for measuring the temperature of moving objects or objects that are difficult to access directly. However, emissivity needs to be accounted for.

The choice of sensor depends on factors such as the required accuracy, temperature range, response time, cost, and the application environment.
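As an illustration of how an RTD reading becomes a temperature, the sketch below converts Pt100 resistance using the quadratic form of the Callendar-Van Dusen equation. The coefficients are the commonly published IEC 60751 values for standard platinum; the quadratic form is only intended for 0 °C and above, and the function names are hypothetical.

```python
import math

R0 = 100.0       # Pt100 resistance at 0 degC, in ohms
A = 3.9083e-3    # IEC 60751 coefficient, 1/degC
B = -5.775e-7    # IEC 60751 coefficient, 1/degC^2

def pt100_resistance(temp_c):
    """Resistance in ohms for temp_c >= 0 degC: R = R0 * (1 + A*T + B*T^2)."""
    return R0 * (1 + A * temp_c + B * temp_c ** 2)

def pt100_temperature(resistance_ohm):
    """Invert the quadratic to recover temperature in degC (valid for T >= 0)."""
    return (-A + math.sqrt(A ** 2 - 4 * B * (1 - resistance_ohm / R0))) / (2 * B)

print(f"{pt100_resistance(100.0):.4f} ohm at 100 degC")
print(f"{pt100_temperature(119.40):.2f} degC at 119.40 ohm")
```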

Q 8. Explain the importance of data logging and its applications in measurement systems.

Data logging is the automated process of recording measurements over time. It’s crucial in measurement systems because it provides a historical record of data, allowing for trend analysis, anomaly detection, and informed decision-making. Imagine trying to diagnose a malfunctioning process without knowing its past behavior; data logging provides that vital context. Common applications include:

- Process Optimization: Monitoring parameters like temperature, pressure, and flow rate allows identification of inefficiencies and optimization opportunities. For instance, a manufacturing plant might log energy consumption to pinpoint energy-wasting processes.

- Predictive Maintenance: Analyzing logged data can help predict equipment failures before they occur, minimizing downtime and maintenance costs. A vibration sensor on a motor, for example, can predict bearing failure by logging vibration levels over time.

- Regulatory Compliance: Many industries require detailed records of process parameters for compliance purposes. Data logging ensures these records are readily available for audits.

- Research and Development: In research settings, data logging provides critical evidence for analyzing experiments and validating hypotheses. A scientist studying the effect of temperature on a chemical reaction would rely heavily on data logging.
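At its simplest, a data logger appends timestamped rows to a file. The sketch below writes CSV rows to an in-memory buffer so it runs anywhere; the tag name `TT-101` and the helper `log_sample` are hypothetical, and a real logger would write to disk or a historian instead.

```python
import csv
import io
from datetime import datetime, timezone

def log_sample(writer, tag, value, timestamp=None):
    """Append one timestamped measurement row (UTC, ISO 8601)."""
    timestamp = timestamp or datetime.now(timezone.utc)
    writer.writerow([timestamp.isoformat(), tag, f"{value:.3f}"])

buffer = io.StringIO()                 # stands in for a log file
writer = csv.writer(buffer)
writer.writerow(["timestamp", "tag", "value"])
log_sample(writer, "TT-101", 72.4, datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc))
log_sample(writer, "TT-101", 72.6)     # uses the current time
print(buffer.getvalue())
```

Recording timestamps in UTC avoids ambiguity when logs from several sites or across daylight-saving changes are later merged for trend analysis.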

Q 9. How do you handle sensor drift and compensate for it?

Sensor drift refers to the gradual change in a sensor’s output over time, even when the measured variable remains constant. This can be due to various factors like temperature changes, aging, or component wear. Handling sensor drift requires a multi-pronged approach.

- Calibration: Regular calibration against a known standard is essential. This involves adjusting the sensor’s output to match the actual value. Think of it like recalibrating a kitchen scale to ensure accurate measurements.

- Temperature Compensation: If temperature is a significant factor, incorporating temperature sensors and using software algorithms to correct the output based on temperature readings can mitigate drift. This is frequently done in pressure sensors.

- Zeroing/Span Adjustment: Some sensors allow for adjusting the zero point (output when the measured variable is zero) and the span (the range of measurement). This can correct for some forms of drift.

- Statistical Methods: Statistical techniques such as linear regression can model the drift over time, and corrections are then applied based on the model. This is more complex but can be highly effective for predictable drifts.

- Redundancy: Employing multiple sensors to measure the same parameter provides redundancy. If one sensor drifts significantly, the others can be used to identify and compensate for the error.
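The regression-based approach can be sketched as follows. It assumes the drift is roughly linear and that the sensor has been checked periodically against a constant reference; the data, the function names, and the linear model itself are illustrative assumptions.

```python
def fit_line(x, y):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def compensate(reading, days_since_cal, drift_per_day):
    """Remove the drift accumulated since the last calibration (day 0)."""
    return reading - drift_per_day * days_since_cal

# Hypothetical readings of a constant 50.0-unit reference over time
days = [0, 30, 60, 90]
reference_checks = [50.0, 50.3, 50.6, 50.9]
drift_per_day, _ = fit_line(days, reference_checks)
print(f"drift = {drift_per_day:.4f} units/day")
print(f"corrected = {compensate(51.2, 120, drift_per_day):.2f}")
```

Extrapolating a drift model beyond the last reference check is a judgment call; the model should be refreshed at each calibration.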

Q 10. What are the advantages and disadvantages of using digital versus analog measurement systems?

Both digital and analog systems have their own strengths and weaknesses. The best choice depends on the specific application.

Analog Systems:

- Advantages: Simple circuitry, often lower cost for basic applications, good for continuous signals.

- Disadvantages: Susceptible to noise, less accurate than digital systems, signal degradation over long distances.

Digital Systems:

- Advantages: High accuracy and precision, noise immunity, easy signal transmission over long distances, readily compatible with computers and data processing systems.

- Disadvantages: More complex circuitry, higher initial cost, potential for quantization error (discretization of continuous signals).

Example: A simple thermometer might use an analog system, while a sophisticated industrial process controller would likely use a digital system for its high accuracy and ability to integrate with control algorithms.
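The quantization error mentioned above follows directly from the converter resolution. This sketch models an ideal unipolar ADC; the function names and the 10 V, 12-bit example are assumptions for illustration.

```python
def adc_lsb(full_scale, bits):
    """Size of one least-significant bit for an ideal unipolar ADC."""
    return full_scale / (2 ** bits)

def quantize(value, full_scale, bits):
    """Return (code, reconstructed value) for an ideal rounding ADC."""
    lsb = adc_lsb(full_scale, bits)
    code = int(value / lsb + 0.5)                  # round to the nearest code
    code = min(max(code, 0), 2 ** bits - 1)        # clamp to the valid code range
    return code, code * lsb

lsb = adc_lsb(10.0, 12)
code, reconstructed = quantize(3.3, 10.0, 12)
print(f"LSB = {lsb * 1000:.3f} mV, code = {code}, error = {(reconstructed - 3.3) * 1000:.3f} mV")
```

Within the input range, the error of an ideal converter never exceeds half an LSB, so each extra bit of resolution halves the worst-case quantization error.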

Q 11. Explain different signal conditioning techniques used in measurement systems.

Signal conditioning is crucial for adapting a sensor’s raw signal into a form suitable for processing and display. Common techniques include:

- Amplification: Increasing the amplitude of weak signals to improve the signal-to-noise ratio. This is common when dealing with sensors producing millivolt-level signals.

- Filtering: Removing unwanted noise or frequencies from the signal using filters (e.g., low-pass, high-pass, band-pass). This is vital for eliminating interference in noisy environments.

- Linearization: Converting a non-linear sensor output into a linear relationship. Many sensors don’t produce a perfectly linear response, and linearization improves accuracy.

- Isolation: Protecting sensitive electronics from voltage spikes or ground loops using isolation amplifiers. This is critical in industrial applications where there might be significant electrical noise.

- Conversion: Changing the signal’s form (e.g., analog-to-digital conversion (ADC) for digital processing, or current-to-voltage conversion).

Example: A thermocouple (temperature sensor) produces a very small voltage. A signal conditioning circuit would amplify this signal, filter out noise, and perform a linearization to get an accurate temperature reading.
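Filtering is often done in software after conversion. The sketch below is a first-order low-pass filter (an exponential moving average), one of the simplest digital filters; the smoothing factor `alpha` and the sample data are illustrative assumptions.

```python
def low_pass(samples, alpha):
    """First-order low-pass filter: y[n] = alpha * x[n] + (1 - alpha) * y[n-1]."""
    filtered = []
    y = samples[0]                     # start from the first sample
    for x in samples:
        y = alpha * x + (1 - alpha) * y
        filtered.append(y)
    return filtered

# A single noise spike in an otherwise steady signal
noisy = [0.0, 0.0, 10.0, 0.0, 0.0]
print([round(v, 2) for v in low_pass(noisy, alpha=0.2)])
```

A smaller `alpha` suppresses noise more strongly but makes the output respond more slowly to genuine changes, so the value is a trade-off between smoothness and response time.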

Q 12. Describe the principles of operation of a level sensor (e.g., ultrasonic, radar).

Let’s examine ultrasonic and radar level sensors:

Ultrasonic Level Sensors: These sensors emit ultrasonic sound waves. The time it takes for the waves to reflect off the liquid surface and return to the sensor is measured. The distance, and hence the liquid level, is calculated using the speed of sound. Think of it like echolocation used by bats.

Radar Level Sensors: These use electromagnetic waves (radio waves) instead of sound. The time of flight of the radar signal is measured, similar to ultrasonic sensors. Radar is less affected by factors such as temperature and humidity compared to ultrasonic.

In summary: Both sensors work on the time-of-flight principle, but use different types of waves. Ultrasonic is simpler and generally cheaper but more sensitive to environmental conditions, while radar is more robust and suitable for challenging environments such as high temperatures or dusty conditions.
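The time-of-flight calculation is the same for both sensor types; only the wave speed differs. The sketch below uses a common linear approximation for the speed of sound in dry air, which also shows why ultrasonic sensors need temperature compensation. Function names and the tank dimensions are hypothetical.

```python
def speed_of_sound_air(temp_c):
    """Approximate speed of sound in dry air (m/s) near room temperature."""
    return 331.3 + 0.606 * temp_c

def level_from_echo(echo_time_s, tank_height_m, wave_speed_m_s):
    """Liquid level from the round-trip echo time of a top-mounted sensor."""
    distance_to_surface = wave_speed_m_s * echo_time_s / 2   # round trip to one way
    return tank_height_m - distance_to_surface

c_20 = speed_of_sound_air(20.0)
echo = 4.0 / c_20                       # echo time for a surface 2 m below the sensor
print(f"level = {level_from_echo(echo, 5.0, c_20):.3f} m")
# Same echo time, but the air is actually at 40 degC and uncompensated:
print(f"true level at 40 degC = {level_from_echo(echo, 5.0, speed_of_sound_air(40.0)):.3f} m")
```

For a radar sensor the same function applies with the speed of light as the wave speed, which is why radar readings barely depend on air temperature.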

Q 13. What is the significance of linearity in a measurement system?

Linearity in a measurement system refers to the proportional relationship between the input and the output. A perfectly linear system produces an output that is directly proportional to the input. Deviation from linearity leads to measurement errors. Imagine a perfectly straight line; that’s ideal linearity. Any deviation from that line is non-linearity.

Significance: Linearity is crucial because it ensures that the measurement is consistent and predictable across the entire measurement range. Without linearity, small changes in the input may result in disproportionately large changes in the output, making accurate measurements challenging. This is critical for accurate process control and data analysis.

Example: In a pressure gauge, linearity means that a doubling of the pressure should result in a doubling of the gauge reading. Non-linearity would lead to inaccurate pressure readings at various points within the gauge’s measurement range.
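Non-linearity is commonly quoted as the largest deviation from a reference straight line, as a percentage of output span. The sketch below uses the end-point method (a line through the first and last calibration points); other definitions, such as best-fit straight line, give slightly different numbers. The data and function name are illustrative.

```python
def endpoint_nonlinearity_pct(inputs, outputs):
    """Max deviation from the end-point straight line, as % of output span."""
    x0, x1 = inputs[0], inputs[-1]
    y0, y1 = outputs[0], outputs[-1]
    slope = (y1 - y0) / (x1 - x0)
    worst = max(abs(y - (y0 + slope * (x - x0))) for x, y in zip(inputs, outputs))
    return 100.0 * worst / abs(y1 - y0)

# Hypothetical 4-20 mA transmitter checked at five pressures (% of range)
pressure_pct = [0, 25, 50, 75, 100]
output_ma = [4.0, 8.1, 12.2, 16.1, 20.0]
print(f"non-linearity = {endpoint_nonlinearity_pct(pressure_pct, output_ma):.2f} % of span")
```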

Q 14. How do you perform a root cause analysis of a measurement system malfunction?

A root cause analysis (RCA) for measurement system malfunction involves systematically identifying the underlying causes of the problem, rather than just addressing the symptoms. A structured approach is crucial.

- Define the Problem: Clearly describe the malfunction. What specifically isn’t working? What are the observed symptoms?

- Data Collection: Gather all relevant data – sensor readings, calibration records, maintenance logs, environmental conditions, etc. This step is analogous to a detective gathering evidence.

- Identify Potential Causes: Brainstorm potential causes based on the gathered data. Consider factors like sensor failure, wiring issues, software glitches, environmental effects, or calibration errors.

- Verify Causes: Test each potential cause to determine its validity. This could involve testing individual components, running simulations, or observing the system under different conditions.

- Identify Root Cause: Once potential causes are verified, pinpoint the fundamental cause(s) that triggered the malfunction. This might involve multiple factors contributing to the problem.

- Develop Corrective Actions: Formulate solutions to address the root cause(s). This may involve replacing faulty components, recalibrating sensors, modifying software, or improving environmental controls.

- Verification: After implementing corrective actions, verify that the malfunction is resolved and the system is functioning correctly.

Example: If a flow meter consistently under-reports flow, the RCA might reveal that the flow meter needs recalibration, the sensor is fouled, or there is a pressure drop issue affecting the measurement.

Q 15. Describe different communication protocols used in instrumentation and control systems (e.g., Modbus, Profibus).

Industrial communication protocols are the backbone of modern instrumentation and control systems, enabling seamless data exchange between sensors, actuators, and controllers. Several protocols cater to different needs regarding speed, reliability, and complexity. Let’s look at a few prominent examples:

- Modbus: This is a widely used, robust, and relatively simple serial communication protocol. It’s popular for its open standard nature, making it compatible with a vast range of devices from different manufacturers. Think of it as a common language allowing different instruments to ‘talk’ to each other. Modbus operates over RS-232, RS-485, and even TCP/IP networks, offering flexibility in deployment.

- Profibus: Profibus (PROcess FIeld BUS) is a high-performance fieldbus system designed for demanding industrial automation applications. It offers faster data transfer rates than Modbus, making it suitable for real-time control applications demanding quick responses. Its capabilities include cyclic and acyclic communication, ensuring both regular data updates and immediate responses to events. Profibus is often integrated into complex systems managing various industrial processes.

- EtherNet/IP: This protocol runs the Common Industrial Protocol (CIP) over standard Ethernet, offering high bandwidth and easy integration with existing IT infrastructures. It’s a powerful choice for large-scale systems needing high-speed communication and advanced data management capabilities. EtherNet/IP enables communication between programmable logic controllers (PLCs), HMIs (Human Machine Interfaces), and other devices within a plant network.

- FOUNDATION Fieldbus (FF): This is a digital fieldbus for process control, developed by the Fieldbus Foundation (now part of FieldComm Group). Its H1 segment, like the separate PROFIBUS PA standard, carries power and data over the same two-wire cable and can be installed in intrinsically safe configurations for hazardous environments. It offers advantages like advanced diagnostics and enhanced data handling compared to traditional analog systems.

The choice of protocol depends on factors like the application’s requirements for speed, distance, complexity, and cost. For example, a simple monitoring application might use Modbus, while a complex process control system might utilize EtherNet/IP or Profibus.
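To make the Modbus description concrete, the sketch below builds a Modbus RTU "read holding registers" request (function code 0x03) by hand, including the CRC-16 that terminates every RTU frame. It only constructs the bytes and does not open a serial port; the function names are hypothetical, and in practice a maintained Modbus library would normally be used.

```python
import struct

def modbus_crc16(data: bytes) -> int:
    """CRC-16 as used by Modbus RTU (initial value 0xFFFF, reflected poly 0xA001)."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc

def read_holding_registers_request(unit_id, start_addr, count):
    """Build an RTU frame: address, function 0x03, start, quantity, CRC."""
    body = struct.pack(">BBHH", unit_id, 0x03, start_addr, count)   # big-endian fields
    return body + struct.pack("<H", modbus_crc16(body))             # CRC sent low byte first

frame = read_holding_registers_request(unit_id=1, start_addr=0, count=1)
print(frame.hex(" "))   # 01 03 00 00 00 01 84 0a
```

The frame's simplicity (a device address, a function code, a few data bytes, and a checksum) is a large part of why Modbus is so widely supported.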

Q 16. Explain the concept of a control loop and its components.

A control loop is the fundamental building block of automated control systems. Imagine it as a feedback mechanism that constantly monitors a process variable and adjusts it to maintain a desired setpoint. It’s like a thermostat in your home, constantly comparing the room temperature to the desired temperature and adjusting the heating/cooling system accordingly.

The main components are:

- Process Variable (PV): This is the actual value being measured (e.g., temperature, pressure, flow rate).

- Sensor/Transducer: This device measures the process variable and converts it into a signal understandable by the controller (e.g., a thermocouple measuring temperature).

- Controller: The ‘brain’ of the system, comparing the PV with the setpoint (SP) and calculating the necessary correction. It can be a simple on/off controller or a more sophisticated PID (Proportional-Integral-Derivative) controller.

- Actuator: This device implements the corrective action calculated by the controller (e.g., a control valve adjusting flow rate).

- Setpoint (SP): The desired value of the process variable.

For instance, in a temperature control loop for a chemical reactor, a thermocouple (sensor) measures the reactor temperature (PV). The controller compares this to the desired temperature (SP) and adjusts the flow rate of a coolant (actuator) to maintain the setpoint. This continuous monitoring and adjustment forms the closed-loop control system.
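The controller's calculation can be sketched as a minimal textbook PID in Python. This is an illustrative positional form with no anti-windup, output limits, or derivative filtering, all of which a production controller would need; the class and gain values are assumptions.

```python
class PID:
    """Minimal positional PID controller (illustrative, no anti-windup)."""

    def __init__(self, kp, ki, kd, setpoint):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint
        self.integral = 0.0
        self.prev_error = None

    def update(self, pv, dt):
        """Return the controller output for process variable pv after dt seconds."""
        error = self.setpoint - pv                      # SP minus PV
        self.integral += error * dt                     # accumulate for the I term
        if self.prev_error is None:
            derivative = 0.0                            # no history on the first call
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

controller = PID(kp=1.0, ki=0.5, kd=0.0, setpoint=10.0)
print(controller.update(pv=8.0, dt=1.0))   # 3.0
print(controller.update(pv=9.0, dt=1.0))   # 2.5
```

Whether a positive output should open or close the final element depends on the process: in the cooling example above, a temperature above setpoint must increase coolant flow, so the action is reversed relative to a heating loop.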

Q 17. How do you ensure the accuracy and reliability of measurement data?

Ensuring the accuracy and reliability of measurement data is paramount in any application. It involves a multi-faceted approach:

- Calibration and Verification: Regular calibration against traceable standards is essential to confirm the accuracy of measurement instruments. This involves comparing the instrument’s readings to a known standard and adjusting it accordingly. Verification checks the instrument’s performance without necessarily adjusting it.

- Sensor Selection: Choosing the right sensor for the specific application and environment is critical. Consider factors like the measurement range, accuracy, sensitivity, and environmental robustness.

- Data Validation: Implementing data validation checks helps identify and eliminate erroneous data points. This might include range checks, plausibility checks, and consistency checks across multiple sensors.

- Redundancy: Using multiple sensors to measure the same parameter and comparing their readings can significantly improve reliability and detect potential sensor failures.

- Proper Installation and Maintenance: Correct installation and regular maintenance are crucial to minimize errors caused by faulty wiring, environmental influences, or wear and tear.

- Environmental Compensation: Considering and compensating for environmental factors like temperature, pressure, and humidity that can affect sensor readings is essential for maintaining accuracy.

For example, in a flow measurement application, regular calibration of the flow meter ensures that its readings accurately reflect the actual flow rate. Data validation can identify spikes or drops in flow rate that may indicate a problem within the system.
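The validation and redundancy checks above can be sketched as a range check, a rate-of-change check, and a median vote across redundant sensors. The limits, labels, and function names are illustrative assumptions.

```python
import statistics

def validate(samples, low, high, max_step):
    """Label each sample 'ok', 'out_of_range', or 'spike'."""
    flags = []
    last_good = None
    for value in samples:
        if not (low <= value <= high):
            flags.append("out_of_range")           # physically implausible value
        elif last_good is not None and abs(value - last_good) > max_step:
            flags.append("spike")                  # jump too large versus last good value
        else:
            flags.append("ok")
            last_good = value
    return flags

def vote(redundant_readings):
    """Median of redundant sensors; one faulty sensor cannot move the result far."""
    return statistics.median(redundant_readings)

flow = [10.0, 10.2, 55.0, 10.1, -3.0, 10.3]
print(validate(flow, low=0.0, high=100.0, max_step=5.0))
print(vote([10.1, 10.2, 14.9]))
```

One limitation of this simple rate check is that a genuine step change in the process would keep being flagged as a spike, so real systems usually add a rule to accept a new level after it persists.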

Q 18. What are the common sources of error in measurement systems?

Measurement systems are susceptible to various errors that can compromise the accuracy and reliability of the obtained data. These errors can be broadly classified as:

- Systematic Errors: These errors are consistent and repeatable, often stemming from inherent limitations in the measuring instrument or the measurement process. Examples include zero offset errors (a constant bias), scale or gain errors (an incorrect slope), non-linearity, and environmental effects (temperature-dependent readings).

- Random Errors: These errors are unpredictable and vary randomly, often due to noise or fluctuations in the measured variable. They can be minimized by averaging multiple readings.

- Human Errors: Mistakes made by operators during instrument handling, calibration, or data recording can lead to significant errors. Proper training and standardized procedures can mitigate this.

- Environmental Errors: External factors like temperature, pressure, humidity, and electromagnetic interference can significantly affect sensor readings.

- Loading Errors: The act of measuring a variable can sometimes affect the variable itself, introducing an error. This is common when the instrument’s input impedance is not much higher than the impedance of the source being measured, so the instrument draws enough current to change the signal.

For example, an RTD might exhibit a systematic error due to self-heating from its excitation current, while random noise in a signal could introduce random errors. Human error could occur if the wrong calibration procedure is followed.

Q 19. Describe your experience with different types of data acquisition systems.

My experience encompasses a range of data acquisition (DAQ) systems, from simple standalone devices to complex, networked systems. I’ve worked with:

- Standalone DAQ devices: These are self-contained units that collect data from sensors and store it locally, often on an SD card or internal memory. They’re suitable for smaller applications where data transfer is not a major concern. I’ve used these in various field testing and monitoring scenarios.

- PC-based DAQ systems: These systems utilize a computer’s processing power and data storage capabilities for data acquisition. They often involve specialized data acquisition cards (DAQ cards) that plug into the computer’s expansion slots. These offer increased flexibility and more advanced data processing capabilities, and I’ve used them extensively in lab settings and larger-scale data logging projects.

- Networked DAQ systems: These systems allow for remote data acquisition and monitoring over a network. They are useful for distributed sensing applications across large areas. I’ve used industrial Ethernet and wireless communication protocols for these systems, often integrating with SCADA (Supervisory Control and Data Acquisition) systems for centralized monitoring and control.

In one project, I designed a networked DAQ system using an industrial Ethernet backbone to monitor various parameters across a large manufacturing plant. Another project utilized a PC-based system for high-speed data acquisition in a laboratory environment.

Q 20. How do you troubleshoot and repair malfunctioning measurement instruments?

Troubleshooting malfunctioning measurement instruments requires a systematic approach. My strategy generally involves:

- Initial Assessment: First, I’d carefully examine the instrument for any visible damage or signs of malfunction. I’d check connections and power supply.

- Reviewing Historical Data: Analyzing past data can help identify patterns or trends that might indicate the nature of the malfunction.

- Calibration Check: I’d check if the instrument is properly calibrated. Calibration discrepancies can be a major source of errors.

- Signal Tracing: Following the signal path from the sensor to the display can identify any breaks or signal degradation along the way. This often involves using specialized test equipment like multimeters and oscilloscopes.

- Component-Level Diagnosis: If the problem persists, I’d resort to checking individual components for faults, potentially replacing faulty parts.

- Documentation: Thorough documentation throughout the process is essential for tracking the issue and its resolution.

For example, if a temperature sensor is providing erratic readings, I’d first check its connection, then its calibration. If the issue persists, I would check the wiring and potentially the sensor itself.

Q 21. Explain your understanding of different types of flow measurement technologies.

Flow measurement technologies are crucial in various industries for process control and monitoring. The selection of a specific technique depends on the fluid’s properties (e.g., liquid, gas, viscosity), flow rate, and the accuracy required.

- Differential Pressure Flow Meters: These meters utilize the pressure difference created by a restriction in the flow path (e.g., orifice plate, Venturi tube). The pressure difference is proportional to the square of the flow rate. They are relatively simple, robust, and widely used for liquids and gases.

- Positive Displacement Flow Meters: These meters precisely measure the volume of fluid passing through a chamber. Examples include rotary vane and piston meters. They offer high accuracy but are generally more expensive and suited for lower flow rates.

- Turbine Flow Meters: A rotating turbine is placed in the flow path. The rotational speed of the turbine is proportional to the flow rate. These meters are suitable for clean liquids and gases and are known for their high accuracy over a wide flow range.

- Electromagnetic Flow Meters: These meters use Faraday’s law of induction to measure the flow rate of electrically conductive liquids. A magnetic field is applied across the pipe, and the voltage induced between two electrodes is proportional to the flow velocity. They place no obstruction in the flow path and offer good accuracy for various conductive liquids.

- Ultrasonic Flow Meters: These meters use ultrasonic waves to measure the transit time of sound waves traveling in the flow direction and opposite to the flow direction. The difference in transit time is proportional to the flow rate. They are suitable for liquids and gases, and are often preferred for applications where no flow restriction is desired.

- Coriolis Flow Meters: These meters measure mass flow by exploiting the Coriolis effect. The fluid flows through a vibrating tube, and the Coriolis force causes a phase shift in the vibration, proportional to the mass flow rate. They provide highly accurate mass flow measurements for various liquids and gases, often used in high-precision applications.

The choice of technology depends heavily on the application. For example, a large pipeline might use a differential pressure meter for cost-effectiveness, while a pharmaceutical application requiring precise mass flow measurement might employ a Coriolis meter.
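The transit-time principle behind ultrasonic meters can be worked through numerically. With a path of length L at angle θ to the flow, the downstream and upstream times are L/(c + v·cos θ) and L/(c − v·cos θ), so the flow velocity follows from the difference of their reciprocals and the speed of sound c cancels out. The sketch below uses synthetic values and a hypothetical function name.

```python
import math

def transit_time_velocity(t_up, t_down, path_length_m, angle_deg):
    """Flow velocity (m/s) from upstream and downstream transit times."""
    cos_theta = math.cos(math.radians(angle_deg))
    return path_length_m / (2 * cos_theta) * (1 / t_down - 1 / t_up)

# Synthetic check: water (c about 1480 m/s) flowing at 2 m/s, 0.2 m path at 45 degrees
c, v, path, angle = 1480.0, 2.0, 0.2, 45.0
cos_t = math.cos(math.radians(angle))
t_down = path / (c + v * cos_t)   # pulse travelling with the flow
t_up = path / (c - v * cos_t)     # pulse travelling against the flow
print(f"time difference = {(t_up - t_down) * 1e9:.1f} ns")
print(f"recovered velocity = {transit_time_velocity(t_up, t_down, path, angle):.3f} m/s")
```

The time difference is only a few hundred nanoseconds in this example, which is why these meters depend on precise timing electronics.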

Q 22. What is your experience with PLC programming in the context of measurement and control?

I have extensive experience with PLC programming in measurement and control, across platforms including Siemens TIA Portal, Rockwell Automation RSLogix 5000, and Schneider Electric Unity Pro. I’m proficient in developing ladder logic programs to interface with various sensors and actuators for automated control systems. For instance, in a recent project involving a water treatment plant, I used a PLC to monitor flow rate, pressure, and pH levels from various sensors. The PLC then used this data to control valves, pumps, and chemical dosing systems, ensuring optimal treatment parameters were maintained. This involved writing programs to handle analog and digital I/O and implementing PID control loops for precise regulation. I’m also experienced in using function blocks and structured text programming for complex control algorithms, which significantly improves code readability and maintainability.

For example, a segment of code I regularly use for analog input scaling might look like this:

```
// Example PLC code (pseudocode)
Input_Raw := ReadAnalogInput(AI1);  // Read raw analog value
Engineering_Units := (Input_Raw - Input_Offset) * Scaling_Factor + Engineering_Zero;  // Apply scaling
```

This simple snippet demonstrates the conversion of a raw sensor reading to meaningful engineering units, a crucial step in any measurement and control application.
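The same linear scaling can be sketched in Python with concrete numbers. In the pseudocode's terms, `Input_Offset` corresponds to `raw_min`, `Engineering_Zero` to `eu_min`, and `Scaling_Factor` to `(eu_max - eu_min) / (raw_max - raw_min)`. The count range below is an assumption for illustration only; real analog input cards use vendor-specific ranges.

```python
def scale_analog(raw: float, raw_min: float, raw_max: float,
                 eu_min: float, eu_max: float) -> float:
    """Linearly map a raw input value onto an engineering-unit range."""
    if raw_max == raw_min:
        raise ValueError("raw_min and raw_max must differ")
    span_fraction = (raw - raw_min) / (raw_max - raw_min)
    return eu_min + span_fraction * (eu_max - eu_min)

# Hypothetical card: 4 mA reads as 4000 counts, 20 mA as 20000 counts.
# The transmitter is ranged 0 to 10 bar, so 12 mA (mid-scale) is 5 bar.
print(scale_analog(12000, 4000, 20000, 0.0, 10.0))  # 5.0
```

A reading outside the `raw_min` to `raw_max` window extrapolates beyond the engineering range, which is often useful as an indication of a wiring fault or failed transmitter.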

Q 23. Describe your experience with SCADA systems and their role in measurement and control.

SCADA systems are the backbone of many large-scale measurement and control operations. My experience encompasses designing, implementing, and maintaining SCADA systems using various platforms including Wonderware InTouch, Ignition, and GE Proficy. I understand the crucial role SCADA plays in data acquisition, visualization, and control of distributed systems. For example, I was involved in a project implementing a SCADA system for a large oil pipeline network. This involved configuring remote terminal units (RTUs) to collect data from pressure sensors, flow meters, and other instrumentation along the pipeline. The SCADA system then aggregated this data, providing a real-time overview of the entire pipeline’s operational status on a central monitoring system. Alarm management and historical data logging were critical components, allowing for efficient troubleshooting and performance analysis. I’m familiar with various communication protocols used in SCADA systems, such as Modbus, DNP3, and OPC UA, ensuring seamless data exchange between different components of the system.
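One recurring detail when integrating Modbus devices is that a 32-bit floating-point value is usually carried in two consecutive 16-bit registers. The sketch below shows one way to reassemble such a value using only the Python standard library. The word order is device-dependent, so the `high_word_first` flag is an assumption that must be checked against the device documentation; the function name is hypothetical.

```python
import struct

def registers_to_float(first: int, second: int,
                       high_word_first: bool = True) -> float:
    """Combine two 16-bit register values into an IEEE 754 float32."""
    if not high_word_first:
        first, second = second, first  # device sends the low word first
    raw = struct.pack(">HH", first, second)  # 4 bytes, big-endian
    return struct.unpack(">f", raw)[0]

# 0x41480000 is the float32 encoding of 12.5.
print(registers_to_float(0x4148, 0x0000))  # 12.5
```

If a decoded value looks wildly implausible, swapped word order is one of the first things worth checking.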

The importance of robust security measures within SCADA systems cannot be overstated. I have a thorough understanding of implementing cybersecurity protocols to protect these systems from unauthorized access and cyber threats.

Q 24. Explain your understanding of smart metering technologies and their benefits.

Smart metering technologies represent a significant advancement in measurement and control, particularly in energy management. These systems go beyond simple measurement by incorporating advanced communication capabilities and data analytics. My understanding encompasses various smart metering technologies, including AMI (Advanced Metering Infrastructure) systems, which use communication networks (e.g., cellular, power line) to read meters remotely and gather near-real-time consumption data. This data can be used for various purposes, including improving energy efficiency, detecting faults, and optimizing grid management. Smart meters provide granular data that allows for time-of-use pricing, demand-side management programs, and improved customer engagement through personalized energy usage feedback.

For example, a smart water meter can provide detailed information about water consumption patterns, which can help identify leaks or inefficient usage. The benefits extend to utility companies as well, who can use this data to optimize their operations, plan infrastructure upgrades, and reduce non-revenue water.
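As a rough illustration of how interval data can point to a leak, the sketch below applies a simple heuristic: a household normally has at least some intervals with no consumption, so a full day in which every hourly reading stays above a small threshold suggests continuous flow. The threshold, the hourly interval, and the sample data are all assumptions chosen for illustration.

```python
from typing import Sequence

def has_continuous_flow(hourly_litres: Sequence[float],
                        min_flow_litres: float = 1.0) -> bool:
    """Return True when every interval shows at least min_flow_litres of use."""
    if not hourly_litres:
        return False
    return min(hourly_litres) >= min_flow_litres

# Made-up data: a normal day has idle hours; the leaky day adds 3 L every hour.
normal_day = [0, 0, 0, 0, 0, 12, 45, 30, 5, 0, 0, 8,
              10, 0, 0, 0, 6, 25, 40, 20, 10, 5, 0, 0]
leaky_day = [v + 3 for v in normal_day]

print(has_continuous_flow(normal_day))  # False
print(has_continuous_flow(leaky_day))   # True
```

A production system would typically look at several days of data before raising an alert, since some sites legitimately run around the clock.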

Furthermore, the integration of smart metering with other IoT (Internet of Things) devices enables the creation of smart grids, leading to enhanced energy efficiency and sustainability.

Q 25. What safety precautions are necessary when working with measurement instruments?

Safety is paramount when working with measurement instruments. Precautions vary depending on the specific instrument and its application, but some general safety guidelines include:

- Proper Training: Before handling any instrument, thorough training on its operation and safety procedures is essential.

- Lockout/Tagout Procedures: Before working on electrical circuits or pressurized systems, de-energize and isolate them and follow proper lockout/tagout procedures to prevent accidental re-energization.

- Personal Protective Equipment (PPE): Appropriate PPE, such as safety glasses, gloves, and protective clothing, should be worn to prevent injuries from electrical hazards, chemical spills, or other potential risks.

- Calibration and Verification: Regularly calibrated and verified instruments are critical to ensure accurate readings and prevent accidents caused by faulty measurements.

- Environmental Considerations: Instruments should be operated within their specified environmental limits. Extreme temperatures, humidity, or corrosive environments can damage the instruments and create safety hazards.

- Grounding and Bonding: Proper grounding and bonding procedures are crucial when working with electrical instruments to prevent electrical shocks.

Following these safety guidelines is critical for preventing accidents and ensuring a safe working environment.

Q 26. How do you ensure the integrity and security of measurement data?

Ensuring the integrity and security of measurement data is crucial for accurate decision-making and reliable system operation. This involves multiple layers of protection:

- Data Validation: Implementing data validation checks to detect and flag outliers or invalid data points is crucial. This often involves range checks, consistency checks, and plausibility checks.

- Data Encryption: Encrypting data both during transmission and storage protects against unauthorized access and data breaches. This is especially important for sensitive data such as customer energy consumption patterns.

- Secure Communication Protocols: Using secure channels such as TLS (including HTTPS) or VPN tunnels protects data during transmission.

- Access Control: Implementing robust access control measures, such as user authentication and authorization, restricts access to measurement data to authorized personnel only.

- Redundancy and Backup: Implementing redundant systems and regular data backups ensures data availability and prevents data loss due to hardware failures or cyberattacks.

- Data Logging and Auditing: Maintaining a comprehensive audit trail of data access, modifications, and other relevant events provides traceability and accountability.

A multi-layered approach combining these methods is necessary for comprehensive data protection.
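The data validation checks mentioned above can be sketched as a small function that returns a list of flags rather than silently discarding readings. The limits, flag names, and function signature are hypothetical; a real system would take them from the instrument range and the process dynamics.

```python
import math
from typing import List, Optional

def validate_reading(value: float, low: float, high: float,
                     previous: Optional[float] = None,
                     max_step: Optional[float] = None) -> List[str]:
    """Return validation flags for one reading; an empty list means it passed."""
    if math.isnan(value):
        return ["not_a_number"]
    flags = []
    # Range check: the value must lie within the instrument's physical limits.
    if not low <= value <= high:
        flags.append("out_of_range")
    # Plausibility check: the value must not jump more than max_step per sample.
    if previous is not None and max_step is not None:
        if abs(value - previous) > max_step:
            flags.append("rate_of_change")
    return flags

print(validate_reading(5.0, 0.0, 10.0))                              # []
print(validate_reading(12.0, 0.0, 10.0))                             # ['out_of_range']
print(validate_reading(9.0, 0.0, 10.0, previous=2.0, max_step=3.0))  # ['rate_of_change']
```

Flagging instead of deleting keeps the raw value available for the audit trail while still letting downstream logic exclude suspect points.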

Q 27. Describe your experience with calibration and validation procedures.

Calibration and validation are essential for maintaining the accuracy and reliability of measurement instruments. My experience encompasses a wide range of calibration techniques and procedures, adhering to relevant industry standards (e.g., ISO/IEC 17025). Calibration involves comparing the instrument’s readings against a known standard and, where the deviation exceeds specified tolerances, adjusting the instrument. Validation, on the other hand, is the process of verifying that the instrument is suitable for its intended purpose. For instance, I’ve been involved in calibrating flow meters using calibrated standard flow elements, and validating the performance of temperature sensors by comparing their readings with a traceable reference standard. Accurate calibration and validation procedures are crucial for ensuring the quality of data collected from these instruments, ultimately influencing the accuracy of process control and ensuring compliance with regulatory requirements.

I am adept at using both manual and automated calibration systems and understand the importance of maintaining detailed calibration records, ensuring traceability back to national or international standards.
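The comparison step of a calibration can be sketched as an as-found check: each test point pairs a reference value with the instrument reading, and the error is expressed as a percentage of span and compared with a tolerance. The test points, span, and tolerance below are made-up values, and expressing tolerance as percent of span is one common convention rather than the only one.

```python
from typing import List, Sequence, Tuple

def as_found_check(points: Sequence[Tuple[float, float]], span: float,
                   tolerance_pct_span: float) -> List[Tuple[float, float, bool]]:
    """For each (reference, reading) pair return (reference, error %, passed)."""
    if span <= 0:
        raise ValueError("span must be positive")
    results = []
    for reference, reading in points:
        error_pct = (reading - reference) / span * 100.0
        results.append((reference, error_pct, abs(error_pct) <= tolerance_pct_span))
    return results

# Hypothetical temperature transmitter, 0 to 100 degC span, tolerance 0.5 % of span.
points = [(0.0, 0.2), (50.0, 50.4), (100.0, 100.7)]
for reference, error_pct, passed in as_found_check(points, 100.0, 0.5):
    print(f"{reference:6.1f} degC  error {error_pct:+.2f} %  {'PASS' if passed else 'FAIL'}")
```

Recording the as-found results before any adjustment, and the as-left results afterwards, is what makes the calibration record useful for assessing drift between calibrations.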

Q 28. What are your preferred methods for documenting measurement procedures and results?

My preferred methods for documenting measurement procedures and results emphasize clarity, traceability, and compliance. I typically use a combination of electronic and paper-based documentation. Electronic documentation uses specialized software or spreadsheets to record and manage data, ensuring easy access and retrieval. For example, I’ve used LIMS (Laboratory Information Management Systems) for managing calibration and validation records. This software helps automate certain aspects of the documentation process, ensuring consistency and traceability. However, I also maintain physical copies of crucial documents as a failsafe against digital system failures. All documentation adheres to a standardized format, including clear identification of the instrument, date, procedures followed, calibration standards used, and the results obtained. This structured approach ensures data integrity and allows for easy verification and auditing. My documentation includes detailed descriptions of calibration procedures, including step-by-step instructions and calculations where applicable, allowing others to easily replicate the process.

Key Topics to Learn for Measurement and Metering Principles Interview

- Fundamentals of Measurement: Understand accuracy, precision, uncertainty, and error analysis. Explore different types of measurement scales (nominal, ordinal, interval, ratio).

- Static and Dynamic Measurement Systems: Differentiate between static and dynamic systems, and analyze their response characteristics (e.g., time constant, settling time).

- Sensor Technologies: Gain familiarity with various sensor types (e.g., pressure, temperature, flow, level) and their operating principles. Understand signal conditioning techniques.

- Data Acquisition and Signal Processing: Learn about analog-to-digital conversion (ADC), digital signal processing (DSP) techniques, and data logging methods.

- Flow Measurement Techniques: Master different flow measurement methods (e.g., orifice plates, venturi meters, turbine meters, ultrasonic flow meters) and their applications.

- Level Measurement Techniques: Understand various level measurement techniques (e.g., hydrostatic pressure, ultrasonic, radar, capacitance) and their suitability for different applications.

- Pressure Measurement Techniques: Familiarize yourself with different pressure measurement principles (e.g., Bourdon tube, diaphragm, piezoelectric) and their applications.

- Calibration and Verification: Understand the importance of calibration and verification procedures for ensuring measurement accuracy and traceability.

- Practical Applications: Consider real-world examples in industries like oil & gas, water management, manufacturing, and process control.

- Troubleshooting and Problem Solving: Practice diagnosing measurement system issues, identifying sources of error, and developing solutions.

Next Steps

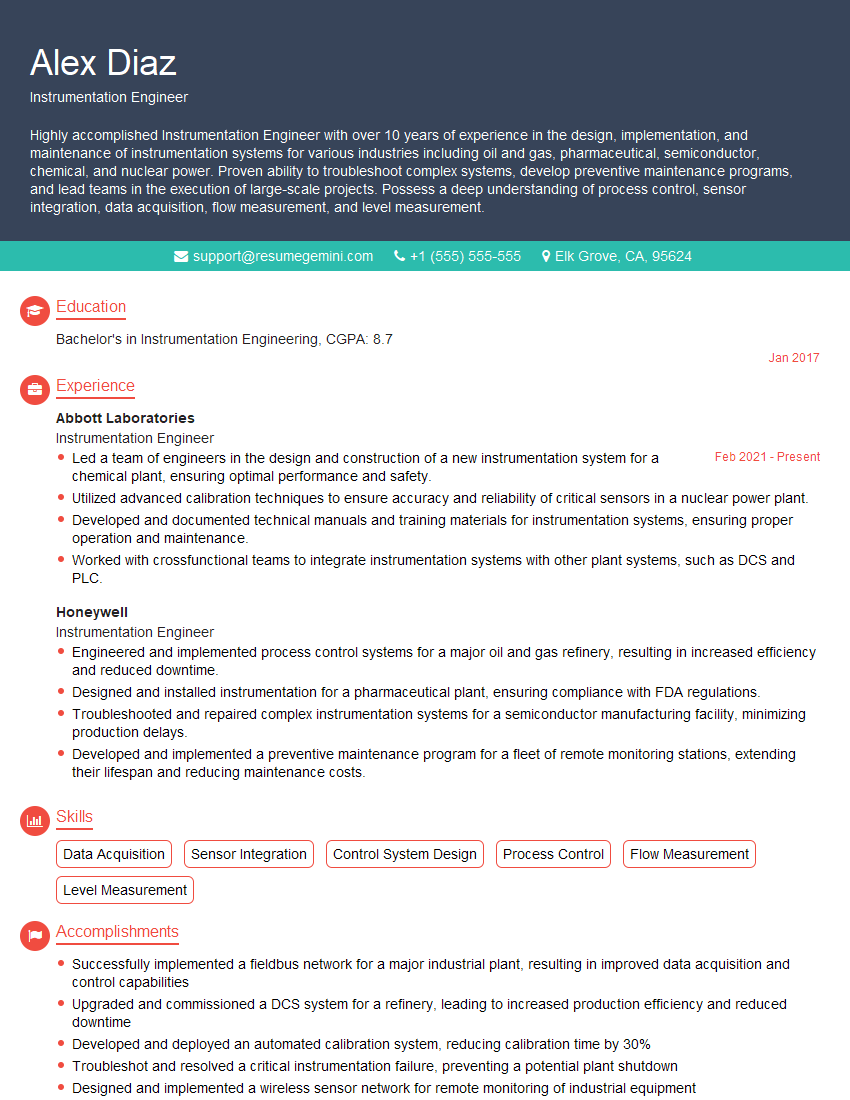

Mastering Measurement and Metering Principles is crucial for a successful career in various engineering and technical fields. A strong understanding of these principles demonstrates your analytical abilities and problem-solving skills, making you a highly desirable candidate. To maximize your job prospects, it’s essential to create a compelling and ATS-friendly resume that showcases your skills and experience effectively. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. They offer examples of resumes tailored to Measurement and Metering Principles, providing you with a template for success. Take the next step towards your dream career by investing in a polished and targeted resume—it’s an investment that will pay off.
