Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Monitor Machine Performance interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Monitor Machine Performance Interview

Q 1. Explain the difference between proactive and reactive performance monitoring.

Proactive and reactive performance monitoring represent two fundamentally different approaches to maintaining system health. Reactive monitoring is like waiting for a car to break down before addressing the issue – you only act after a problem occurs. Proactive monitoring, on the other hand, is like regularly servicing your car; you anticipate potential issues and address them before they impact performance.

- Reactive Monitoring: This involves waiting for performance degradation to become noticeable (e.g., slow response times, high error rates) before investigating the cause. It’s often driven by user complaints or system alerts indicating an existing problem. This approach is inherently less efficient, as problems can significantly impact users before resolution.

- Proactive Monitoring: This involves continuously monitoring key system metrics, setting thresholds for acceptable performance levels, and using automated alerts to warn of potential issues before they escalate into major incidents. This allows for timely intervention and prevents issues from affecting end-users.

Imagine a website experiencing slow load times. A reactive approach would involve waiting for user complaints to surface, then diagnosing the root cause. A proactive approach would involve continuously monitoring response times, setting an alert if the response time exceeds a defined threshold (say, 500ms), and identifying the problem before users notice any slowdowns. Proactive monitoring is far more effective in preventing outages and maintaining optimal performance.
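To make the 500 ms example concrete, here is a minimal Python sketch of a threshold alert evaluated over a rolling window. It assumes latencies arrive one at a time. It uses the 95th percentile rather than the average, so a handful of slow requests is enough to trip it. `LatencyAlert` is a hypothetical helper, not part of any monitoring product.

```python
import math
from collections import deque


class LatencyAlert:
    """Keep a rolling window of response times; flag when p95 exceeds a threshold."""

    def __init__(self, threshold_ms: float = 500.0, window: int = 100):
        self.threshold_ms = threshold_ms
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically

    def record(self, latency_ms: float) -> bool:
        """Record one response time and return True if the alert should fire."""
        self.samples.append(latency_ms)
        return self.p95() > self.threshold_ms

    def p95(self) -> float:
        # Nearest-rank percentile: smallest value with at least 95% of samples <= it.
        ordered = sorted(self.samples)
        rank = math.ceil(0.95 * len(ordered))
        return ordered[rank - 1]


if __name__ == "__main__":
    alert = LatencyAlert(threshold_ms=500, window=20)
    for ms in [120] * 18 + [900, 950]:
        if alert.record(ms):
            print(f"ALERT: p95 latency {alert.p95():.0f} ms exceeds 500 ms")
```

In a real deployment, this rule would live in the monitoring system (for example, as a Prometheus alerting rule or a Datadog monitor) rather than in application code.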

Q 2. Describe your experience with various performance monitoring tools (e.g., Prometheus, Grafana, Datadog).

I have extensive experience with various performance monitoring tools, each with its own strengths and weaknesses. My experience includes:

- Prometheus: A powerful open-source monitoring system that excels at collecting time-series metrics. I’ve used Prometheus to monitor CPU usage, memory consumption, network traffic, and other crucial metrics in various microservices architectures. Its flexibility and extensibility through custom metrics make it ideal for complex environments. For example, I implemented custom metrics to track the latency of specific API calls within a critical e-commerce platform.

- Grafana: I’ve leveraged Grafana extensively to visualize the data collected by Prometheus and other monitoring systems. Its intuitive interface allows for creating dashboards with informative charts, graphs, and alerts. I’ve built dashboards displaying critical metrics for different services, providing an at-a-glance overview of the system’s health. For instance, I created a dashboard showcasing real-time request processing times and error rates, facilitating rapid problem identification.

- Datadog: A comprehensive SaaS monitoring platform that provides a unified view of various system aspects. I’ve utilized Datadog to monitor application performance, infrastructure metrics, and logs from various sources. Its automated anomaly detection features have proven invaluable in proactively identifying performance issues. In one project, Datadog’s automated alerts helped us identify a memory leak in a database server before it resulted in an outage.

Each tool has its unique advantages. My choice often depends on the specific requirements of the project, such as whether the team prefers a self-hosted open-source stack or a fully managed service.

Q 3. How do you identify performance bottlenecks in a complex system?

Identifying performance bottlenecks in a complex system requires a systematic approach. I typically use a combination of techniques:

- Monitoring Key Metrics: Start by monitoring critical metrics like CPU utilization, memory usage, disk I/O, network latency, and request processing times. Identify metrics that are consistently exceeding thresholds or exhibiting unusual behavior.

- Profiling: Utilize profiling tools to pinpoint specific code sections or database queries that are consuming excessive resources. This provides granular insights into the performance characteristics of individual components.

- Tracing: Implement distributed tracing to follow requests as they traverse the entire system. This reveals latency hotspots and helps identify which components are contributing most to overall slowdowns.

- Logging: Analyze logs to identify error messages or warnings that might indicate performance issues. Comprehensive logging is essential for post-mortem analysis as well.

- Load Testing: Conduct load tests to simulate realistic usage patterns and identify performance bottlenecks under stress. This is crucial for understanding how the system behaves under heavy load.

For example, in a recent project, we found a database query was consuming excessive resources. Profiling revealed the query was poorly written and lacked necessary indexes. Optimizing the query and adding indexes drastically improved overall system performance.
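Profiling is the step that turns "the service is slow" into "this function is slow." Here is a minimal sketch using Python's built-in `cProfile` and `pstats` modules. `slow_lookup`, `fast_lookup`, and `handle_request` are hypothetical stand-ins for application code.

```python
import cProfile
import io
import pstats


def slow_lookup(items, targets):
    # Membership test on a list is a linear scan: O(len(items) * len(targets)).
    return [t for t in targets if t in items]


def fast_lookup(items, targets):
    # Build a set once, so each membership test is O(1) on average.
    item_set = set(items)
    return [t for t in targets if t in item_set]


def handle_request(n=2000):
    items = list(range(n))
    targets = list(range(0, 2 * n, 3))
    return slow_lookup(items, targets), fast_lookup(items, targets)


def profile(func, *args, top=5):
    """Run func under cProfile and return (result, report sorted by cumulative time)."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(top)
    return result, out.getvalue()


if __name__ == "__main__":
    _, report = profile(handle_request)
    print(report)  # slow_lookup dominates the cumulative time column
```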

Q 4. What metrics are most important to monitor for application performance?

The most important metrics for application performance monitoring vary depending on the application type, but generally include:

- Response Time/Latency: How long it takes for the application to respond to a request. High latency indicates performance issues.

- Throughput/Requests per Second (RPS): The number of requests the application can handle per unit of time. Throughput that plateaus while incoming demand keeps rising suggests a bottleneck.

- Error Rate: The percentage of requests resulting in errors. High error rates indicate problems with application logic or infrastructure.

- CPU Utilization: How much of the CPU is being used. High CPU usage can indicate overloaded processes.

- Memory Usage: How much memory is being consumed. High memory usage or memory leaks can lead to performance degradation.

- Disk I/O: How much data is being read from and written to disk. High disk I/O indicates potential bottlenecks related to storage.

- Network Latency: The time it takes for data to travel across the network. High network latency impacts communication between different parts of the application.

Monitoring these metrics provides a holistic view of application health and allows for early detection of performance problems.
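The following sketch shows how several of these metrics are derived from raw request data. It assumes a list of request records for a fixed time window (the `Request` fields are assumptions) and counts HTTP 5xx responses as errors.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    timestamp: float   # seconds since the start of the window
    latency_ms: float
    status: int        # HTTP status code


def percentile(values, pct):
    """Nearest-rank percentile, with pct in the range 0-100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(requests, window_s):
    latencies = [r.latency_ms for r in requests]
    errors = sum(1 for r in requests if r.status >= 500)  # server-side errors only
    return {
        "rps": len(requests) / window_s,
        "error_rate": errors / len(requests),
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "mean_ms": sum(latencies) / len(latencies),
    }


reqs = [Request(t * 0.2, 100, 200) for t in range(9)] + [Request(1.8, 1000, 503)]
print(summarize(reqs, window_s=2.0))
# rps=5.0, error_rate=0.1, p50_ms=100, p95_ms=1000, mean_ms=190.0
```

Comparing the median with the p95 shows why tail percentiles matter. A single slow request leaves the median at 100 ms, but it determines the p95.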

Q 5. Explain your approach to troubleshooting slow database queries.

Troubleshooting slow database queries involves a methodical approach:

- Identify the Slow Query: Use the database's built-in performance features (e.g., a slow query log or query statistics views) or general-purpose monitoring tools to find the queries causing the most significant performance issues. Look at execution times and resource consumption.

- Analyze the Query: Examine the query's structure for inefficiencies such as missing indexes, full table scans, or poorly written joins. The database's explain plan is invaluable here, because it shows the execution path the query optimizer has chosen.

- Check Indexes: Ensure appropriate indexes exist on frequently queried columns to speed up data retrieval. Analyze query execution plans to check if indexes are being used efficiently.

- Optimize the Query: Rewrite the query for better efficiency. This may involve using different join types, adding or modifying indexes, or breaking down complex queries into smaller, more manageable ones.

- Database Tuning: Investigate database server configuration to ensure optimal settings for memory, buffer pools, and other parameters. Consider database upgrades or improvements to overall hardware.

- Application Code Review: Sometimes, the problem lies not in the query itself, but in how the application interacts with the database. Review the application code to ensure efficient database usage.

For example, I once encountered a slow query that was performing a full table scan on a large table. By adding an index on the relevant column, I reduced the query execution time from several minutes to milliseconds.
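You can reproduce the index fix from that example in miniature with Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN`. The `orders` table and the index name are hypothetical. The exact wording of the plan varies between SQLite versions, but the key change is from a table scan to an index search.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the human-readable 'detail' column of EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

sql = "SELECT total FROM orders WHERE customer_id = ?"
before = query_plan(conn, sql, (42,))  # full scan of orders
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, sql, (42,))   # search using idx_orders_customer
print("before:", before)
print("after: ", after)
```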

Q 6. How do you handle performance alerts and escalations?

Handling performance alerts and escalations requires a well-defined process and clear communication channels:

- Alerting System: Implement a robust alerting system that triggers alerts based on predefined thresholds for critical metrics. These alerts should clearly specify the nature of the problem and the impacted systems.

- On-Call Rotation: Establish a well-defined on-call rotation to ensure timely response to alerts. Clear communication protocols and escalation paths should be established.

- Incident Management Process: Implement a structured incident management process to guide troubleshooting and resolution. This should include steps for identifying the root cause, implementing a fix, and post-incident review.

- Communication: Maintain open communication with relevant stakeholders during incidents. Regular updates should be provided on the status of the issue and anticipated resolution time.

- Post-Incident Review: Conduct post-incident reviews to identify areas for improvement in monitoring, alerting, or incident response processes.

A well-defined process minimizes downtime and ensures a consistent and effective response to performance issues.

Q 7. Describe your experience with capacity planning and forecasting.

Capacity planning and forecasting are crucial for ensuring system performance and availability. My approach involves:

- Historical Data Analysis: Analyze historical performance data (e.g., CPU utilization, memory usage, network traffic) to identify trends and patterns. This provides a baseline for future capacity planning.

- Load Forecasting: Predict future loads based on historical data, business growth projections, and seasonal variations. Various forecasting techniques can be used, from simple linear regression to more sophisticated methods.

- Resource Modeling: Use resource modeling to estimate the resources needed to support projected loads. This involves simulating different scenarios to understand the impact of varying loads on system performance.

- Performance Testing: Conduct performance tests using simulated loads to validate capacity plans and identify potential bottlenecks. This helps to refine resource estimations and ensure the system can handle projected loads.

- Scalability Planning: Plan for scalability by designing systems that can easily handle increased loads. This might involve deploying systems in cloud environments or using auto-scaling capabilities.

- Regular Monitoring & Adjustment: Continuously monitor system performance and adjust capacity plans as needed. Capacity planning is an ongoing process that requires regular review and adaptation.

For instance, I helped a company plan for a significant increase in users during a promotional campaign by analyzing past traffic data, forecasting future loads, and conducting performance tests to ensure their systems could handle the anticipated surge. This prevented performance degradation and ensured a successful campaign.
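The simplest forecasting technique mentioned above, linear regression, fits in a few lines. This sketch takes weekly peak request rates as input and assumes a known per-instance capacity (`per_instance_rps`, a hypothetical figure you would measure in performance tests). It ignores seasonality, so a promotional spike would still need its own estimate.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast_capacity(weekly_peaks, weeks_ahead, per_instance_rps, headroom=0.3):
    """Project the peak load and return (projected_peak_rps, instances_needed)."""
    xs = list(range(len(weekly_peaks)))
    slope, intercept = fit_line(xs, weekly_peaks)
    target_week = len(weekly_peaks) - 1 + weeks_ahead
    projected = slope * target_week + intercept
    # Provision for the projected peak plus a safety margin.
    needed = math.ceil(projected * (1 + headroom) / per_instance_rps)
    return projected, needed
```

For example, weekly peaks of 1000, 1100, 1200, and 1300 RPS project to 1700 RPS four weeks out. With 30% headroom and 500 RPS per instance, that works out to 5 instances.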

Q 8. How do you ensure the accuracy and reliability of performance data?

Ensuring accurate and reliable performance data is paramount. It involves a multi-pronged approach focusing on data collection, validation, and analysis. Think of it like building a sturdy house – you need a strong foundation (data collection), quality materials (validation), and skilled craftsmanship (analysis).

- Robust Monitoring Tools: We leverage industry-standard tools like Prometheus, Grafana, Datadog, or New Relic. These tools provide comprehensive metrics, ensuring we capture relevant data points such as CPU utilization, memory usage, I/O operations, network latency, and database query times. The choice depends on the specific technology stack and the complexity of the system.

- Data Validation: Raw data isn't always reliable. We employ techniques like anomaly detection to identify unusual spikes or dips in performance. Cross-referencing data from multiple sources (e.g., application logs, system metrics, user feedback) helps us verify the accuracy of our findings. For instance, a sudden increase in error rates coupled with high CPU usage on the same host suggests resource saturation there. We also regularly check the health of our monitoring infrastructure itself.

- Data Aggregation and Analysis: We use dashboards and reporting tools to visualize performance data. These dashboards aggregate data from various sources, providing a holistic view of system performance. We use statistical analysis to identify trends and patterns, making informed decisions about system optimization. For example, calculating percentiles (like the 95th percentile latency) gives a more robust picture of performance than just averages, helping us detect occasional slowdowns that affect users.

Ultimately, the goal is to minimize noise and maximize signal in our data, providing a clear understanding of the system’s health and performance.
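One simple form of the anomaly detection described above is a z-score check against a rolling baseline. This sketch relies on simple assumptions: evenly spaced samples and roughly normal noise. Production anomaly-detection features typically also account for seasonality.

```python
import statistics


def zscore_anomalies(values, baseline=10, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `baseline` points."""
    anomalies = []
    for i in range(baseline, len(values)):
        window = values[i - baseline:i]
        mean = statistics.mean(window)
        stdev = statistics.pstdev(window)
        if stdev == 0:
            continue  # flat baseline: a z-score is undefined
        if abs(values[i] - mean) / stdev > threshold:
            anomalies.append(i)
    return anomalies
```

With a CPU series such as `[40, 42, 41, 39, 40, 43, 41, 40, 42, 41, 95, 41, 40]`, only index 10 (the 95) is flagged. The spike then inflates the baseline for the following samples, which is one reason real systems use more robust statistics.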

Q 9. What are some common causes of application performance degradation?

Application performance degradation can stem from various sources, often interconnected. Think of it as a chain – if one link is weak, the whole chain is compromised.

- Resource Constraints: Insufficient CPU, memory, or disk I/O can lead to slowdowns. Imagine trying to cook a complex meal with only one small stove and limited cookware.

- Database Issues: Slow queries, inefficient database design, or lack of indexing can cripple application performance. A poorly organized database is like trying to find a specific ingredient in a cluttered pantry – it takes much longer.

- Network Bottlenecks: High latency or low bandwidth can hinder communication between different components of the application. This is similar to a congested highway where traffic slows everything down.

- Code Inefficiencies: Poorly written code, memory leaks, or inefficient algorithms can significantly impact performance. This is like trying to assemble furniture with poorly written instructions – it’s much slower and prone to errors.

- Third-Party Dependencies: Problems with external APIs or services can cascade into application performance issues. Think of it like a supply chain problem – if one supplier is delayed, the entire production process suffers.

- Increased Load: Sudden spikes in traffic or user requests can overwhelm the system’s capacity. This is like a restaurant suddenly experiencing a huge rush – it can lead to slow service and unhappy customers.

Identifying the root cause requires careful investigation, often involving profiling, logging analysis, and performance testing.

Q 10. How do you use performance monitoring data to improve system design?

Performance monitoring data is invaluable for iterative system design improvements. It provides concrete evidence to guide architectural changes and optimizations. Think of it as a feedback loop – you collect data, analyze it, and use the insights to improve your design.

- Identifying Bottlenecks: Performance data pinpoints areas where the system is struggling. For example, if database queries consistently consume 80% of the processing time, we know this is a critical area to optimize.

- Capacity Planning: Historical performance data helps predict future resource needs. This ensures the system can handle anticipated increases in load without performance degradation.

- Architectural Decisions: Monitoring data informs architectural choices, such as scaling strategies (vertical or horizontal scaling), caching mechanisms, or database technologies.

- Refactoring and Optimization: Performance data highlights code sections or algorithms needing improvement. For example, we might refactor a slow function or implement more efficient data structures based on observed bottlenecks.

A classic example is shifting from a monolithic architecture to microservices based on observed bottlenecks in a specific component of a monolithic application. This allows independent scaling and improvement of that specific component without affecting the rest of the system.

Q 11. Explain your experience with performance testing methodologies.

My experience with performance testing methodologies is extensive. I’ve used various approaches tailored to different project needs. Think of it as having a toolkit for different jobs – each tool is specialized for a particular task.

- Load Testing: This simulates realistic user loads to identify performance bottlenecks under stress. Tools like JMeter or Gatling are commonly employed.

- Stress Testing: This pushes the system beyond its expected limits to find its breaking point, revealing vulnerabilities.

- Endurance Testing (Soak Testing): This tests the system’s stability and performance over an extended period, identifying memory leaks or other long-term issues.

- Spike Testing: This simulates sudden bursts of traffic to determine the system’s responsiveness to rapid load changes.

- Unit Testing and Integration Testing: These are vital for ensuring individual components and their interactions perform efficiently. This is crucial before performance testing the entire system.

I use a combination of these techniques, depending on the specific requirements of the project and the stage of the software development life cycle. I also frequently automate performance tests as part of the continuous integration/continuous deployment (CI/CD) pipeline.
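Dedicated tools such as JMeter or Gatling do this at scale, but the core of a load test is small: fire many concurrent requests and record latency and errors. Here is a toy sketch in Python. `fake_endpoint` is a hypothetical stand-in for the system under test, so the example runs without a network.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def fake_endpoint():
    """Hypothetical stand-in for the system under test: ~20 ms of simulated work."""
    time.sleep(0.02)
    return 200  # HTTP-style status code


def run_load_test(target, total_requests=100, concurrency=10):
    """Call `target` total_requests times using `concurrency` worker threads."""

    def timed_call(_):
        start = time.perf_counter()
        status = target()
        return status, (time.perf_counter() - start) * 1000  # latency in ms

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))
    wall_s = time.perf_counter() - wall_start

    latencies = sorted(ms for _, ms in results)
    return {
        "throughput_rps": total_requests / wall_s,
        "p95_ms": latencies[math.ceil(0.95 * len(latencies)) - 1],  # nearest-rank p95
        "errors": sum(1 for status, _ in results if status >= 500),
    }


if __name__ == "__main__":
    print(run_load_test(fake_endpoint, total_requests=200, concurrency=20))
```

To turn this into a stress or spike test, raise `concurrency` step by step, or all at once, and watch where p95 and the error count start to climb.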

Q 12. Describe your experience with A/B testing for performance optimization.

A/B testing for performance optimization is a powerful technique. It allows us to compare the performance of different versions of a system (or feature) under comparable conditions. Think of it as a scientific experiment: you carefully compare two versions to see which performs better.

For example, we might A/B test two different caching strategies, one using Redis and the other using Memcached. By routing a portion of traffic to each version and monitoring key performance indicators (KPIs) like response time and throughput, we can determine which strategy yields better performance. To keep the comparison fair, users are assigned to variants randomly but consistently, and both variants run over the same time period, so that daily traffic patterns affect them equally.

Crucially, A/B testing provides empirical evidence for making informed decisions about performance optimization. It allows for data-driven decision making, reducing the risk of implementing changes that negatively impact performance.
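A key detail in A/B testing is that each user must consistently land in the same variant. One common approach, sketched here as an assumption rather than any particular platform's method, is to hash the user ID together with the experiment name. The experiment name `cache-strategy` is hypothetical.

```python
import hashlib
import statistics


def assign_variant(user_id: str, experiment: str = "cache-strategy", split: float = 0.5) -> str:
    """Deterministically map a user to 'A' or 'B' so they see the same variant every time."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return "A" if bucket < split else "B"


def compare(latencies_a, latencies_b):
    """Difference in median latency (B minus A) in ms; negative means B is faster."""
    return statistics.median(latencies_b) - statistics.median(latencies_a)
```

Before acting on a difference in medians, check that it is statistically significant (for example, with a Mann-Whitney U test), since latency distributions are rarely normal.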

Q 13. How do you prioritize performance issues based on their impact?

Prioritizing performance issues requires a clear understanding of their impact on the business and users. We use a multi-faceted approach, combining quantitative and qualitative data. Think of it like triage in a hospital – the most critical cases are addressed first.

- Impact Assessment: We quantify the impact of each issue using metrics like the number of affected users, the severity of the performance degradation (e.g., response time increase), and the business cost of the downtime or slow performance. Downtime costs money, and slow performance impacts conversion rates.

- Frequency and Duration: How often does the issue occur? How long does it last? Frequent and prolonged issues take precedence.

- User Impact: Subjective measures of user experience are also crucial. User feedback and surveys can provide valuable insights into the real-world impact of performance issues.

- Prioritization Frameworks: We often use frameworks like MoSCoW (Must have, Should have, Could have, Won't have) or a simple severity/priority matrix to rank issues consistently.

By combining data-driven analysis with business context, we ensure our efforts are focused on the most impactful performance bottlenecks.
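A severity/priority matrix can be as simple as multiplying a few impact factors. The weights and fields below are hypothetical and would be agreed with the business. The point is that the ranking becomes repeatable rather than ad hoc.

```python
from dataclasses import dataclass

SEVERITY_WEIGHT = {"minor": 1, "major": 3, "critical": 10}  # hypothetical weights


@dataclass
class Issue:
    name: str
    affected_users: int
    severity: str               # a key of SEVERITY_WEIGHT
    occurrences_per_day: float

    def score(self) -> float:
        return self.affected_users * SEVERITY_WEIGHT[self.severity] * self.occurrences_per_day


def prioritize(issues):
    """Return issues ordered from highest to lowest impact score."""
    return sorted(issues, key=lambda issue: issue.score(), reverse=True)


issues = [
    Issue("slow checkout", affected_users=5000, severity="major", occurrences_per_day=2),
    Issue("admin report timeout", affected_users=20, severity="critical", occurrences_per_day=1),
    Issue("search timeouts", affected_users=800, severity="critical", occurrences_per_day=5),
]
for issue in prioritize(issues):
    print(f"{issue.name}: {issue.score():,.0f}")
# search timeouts: 40,000 / slow checkout: 30,000 / admin report timeout: 200
```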

Q 14. What are some common performance anti-patterns to avoid?

Avoiding performance anti-patterns is crucial for building efficient and scalable applications. These are common mistakes that can significantly hinder performance. Think of them as traps to avoid on the path to optimal performance.

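- N+1 Query Problem: This occurs when code runs one query to fetch a list of N records and then one additional query per record to fetch related data. The result is N+1 round trips and excessive database load. Instead, fetch the related data in a single query (for example, with a JOIN or an IN clause) or use your ORM's eager-loading feature.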

- Lack of Caching: Failing to implement caching mechanisms for frequently accessed data leads to redundant computations and increased database load. Appropriate caching strategies can drastically improve performance.

- Inefficient Algorithms: Using inefficient algorithms can significantly impact performance, especially for large datasets. Choose algorithms with optimal time and space complexity.

- Blocking I/O Operations: Blocking operations can freeze threads and reduce concurrency, impacting responsiveness. Use asynchronous or non-blocking I/O where appropriate.

- Ignoring Logging and Monitoring: Failing to adequately log and monitor application performance makes it difficult to identify and address performance issues proactively.

- Overuse of Global Variables: Shared mutable global state makes code harder to reason about and debug. In concurrent code, it often requires locking, which can introduce contention.

By consciously avoiding these common pitfalls, we can significantly improve application performance and scalability.
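The N+1 problem is easiest to see by counting statements. This sketch uses Python's `sqlite3` module with `set_trace_callback` to record every SQL statement executed. The `authors`/`books` schema is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE books (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
""")
conn.executemany("INSERT INTO authors (id, name) VALUES (?, ?)",
                 [(i, f"author{i}") for i in range(1, 51)])
conn.executemany("INSERT INTO books (author_id, title) VALUES (?, ?)",
                 [(i, f"book{i}") for i in range(1, 51)])
conn.commit()

statements = []
conn.set_trace_callback(statements.append)  # record every SQL statement executed


def titles_n_plus_one():
    # One query for the books, then one query per book for its author: N + 1 statements.
    rows = conn.execute("SELECT author_id, title FROM books").fetchall()
    return [
        (conn.execute("SELECT name FROM authors WHERE id = ?", (author_id,)).fetchone()[0], title)
        for author_id, title in rows
    ]


def titles_joined():
    # A single JOIN fetches the same data in one statement.
    return conn.execute(
        "SELECT a.name, b.title FROM books b JOIN authors a ON a.id = b.author_id"
    ).fetchall()


if __name__ == "__main__":
    for fn in (titles_n_plus_one, titles_joined):
        statements.clear()
        fn()
        print(f"{fn.__name__}: {len(statements)} statements")
```

For 50 books, `titles_n_plus_one()` executes 51 statements, while `titles_joined()` executes one. Against a networked database, each extra statement is also an extra round trip.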

Q 15. Explain your experience with logging and log analysis for performance debugging.

Logging and log analysis are crucial for performance debugging. Think of logs as a detailed diary of your application’s activity: by analyzing them, we can pinpoint the root cause of performance bottlenecks. My experience involves using various tools, from centralized logging systems such as the ELK stack (Elasticsearch, Logstash, Kibana) to more specialized solutions tailored to specific application needs. For example, in one project, we used log analysis to identify a specific database query that was consistently consuming excessive resources. By analyzing the query’s performance characteristics and how often it ran, we were able to optimize it significantly, resulting in a noticeable performance improvement.

My process usually involves several steps: firstly, identifying the relevant logs based on timestamps and error messages; then using tools to filter and aggregate the log data, searching for patterns and anomalies; and finally, correlating the log data with other performance metrics (like CPU utilization or memory usage) to understand the full context of the issue. We often use regular expressions to search for specific patterns within the log files, allowing us to quickly isolate relevant information. For instance, searching for a specific error code or a keyword related to a slow process helps pinpoint the problem area.

Effective log analysis requires proper log management: logs must be generated consistently, use a consistent and easily parsed format, and be stored efficiently. Structured logging, where each entry is written in a machine-readable format such as JSON, significantly simplifies analysis compared to unstructured plain-text logs.
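Here is a minimal sketch of structured logging with Python's standard `logging` module. A custom formatter emits one JSON object per line, and a small parser filters out the slow operations. The `fields` convention for passing structured data and the `duration_ms` key are assumptions made for this example.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        payload = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "msg": record.getMessage(),
        }
        payload.update(getattr(record, "fields", {}))  # structured extras, if any
        return json.dumps(payload)


def make_logger(stream):
    logger = logging.getLogger("perf-demo")
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def slow_operations(lines, threshold_ms=500):
    """Parse JSON log lines and return the records slower than threshold_ms."""
    records = (json.loads(line) for line in lines if line.strip())
    return [r for r in records if r.get("duration_ms", 0) > threshold_ms]


buf = io.StringIO()
log = make_logger(buf)
log.info("query done", extra={"fields": {"op": "get_orders", "duration_ms": 1240}})
log.info("query done", extra={"fields": {"op": "get_user", "duration_ms": 35}})
slow = slow_operations(buf.getvalue().splitlines())
print([r["op"] for r in slow])
```

Because every line is valid JSON, the same filter could run in a log pipeline or as a query in Kibana without any regular expressions.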

Career Expert Tips:

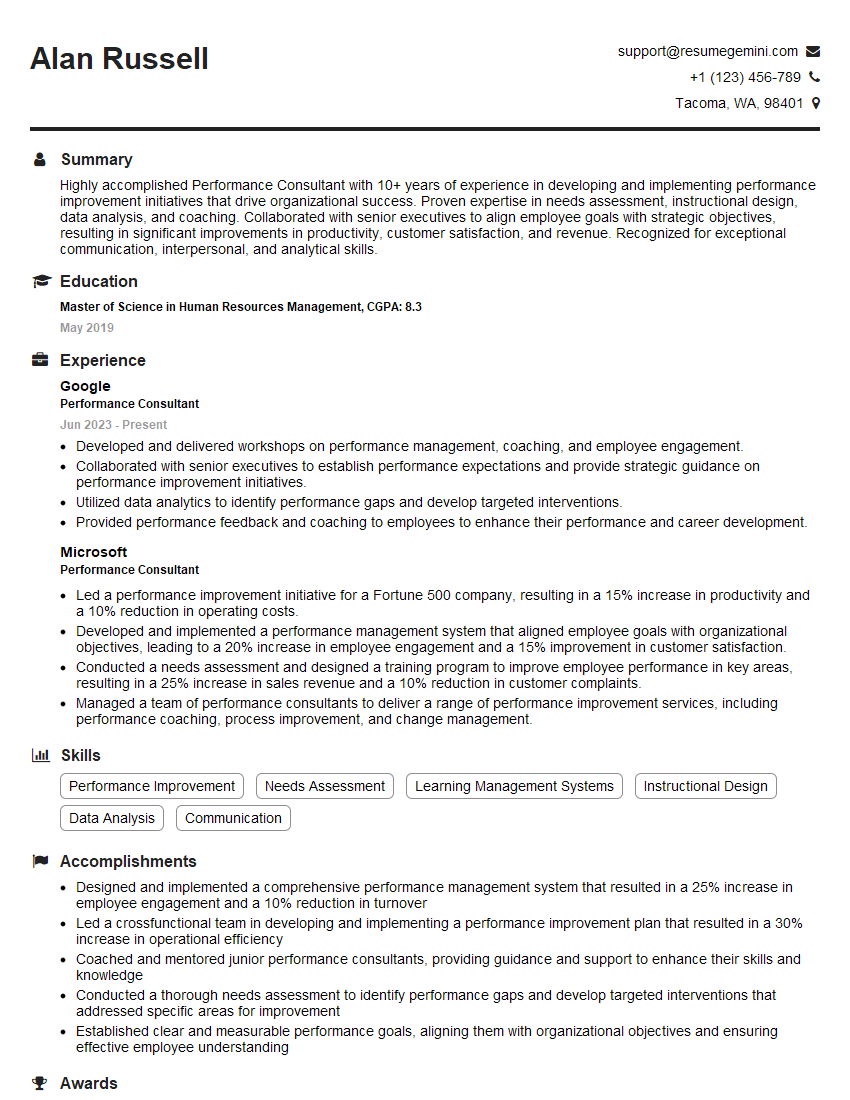

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. How do you ensure performance monitoring is scalable and efficient?

Scalability and efficiency in performance monitoring are paramount, particularly as systems grow in size and complexity. Imagine trying to monitor a thousand servers individually – it’s impossible without automation and efficient tools. The key is to leverage distributed monitoring systems that can collect and aggregate metrics from numerous sources without significant performance overhead on the monitored systems themselves.

My approach involves keeping collection lightweight and aggregating data before it reaches central storage. Instead of transmitting every raw event from every server, services and exporters expose pre-aggregated values such as counters and histograms, which can be rolled up further (for example, with Prometheus recording rules or federation). This drastically reduces network traffic and the processing burden on the central system. Prometheus is a good example of a system designed for this kind of scalability. It uses a ‘pull’ model: the Prometheus server periodically scrapes the metrics endpoints exposed by exporters or instrumented services. This lets the server control collection frequency and makes it immediately visible when a target stops responding. Grafana then sits on top as the visualization layer.

Another crucial aspect is using appropriate alerting mechanisms. Instead of alerting on every minor deviation, we establish thresholds and utilize intelligent alerting systems. This helps to avoid alert fatigue and focuses attention on significant performance issues. We also regularly review and optimize the monitoring configuration to ensure that it continues to be efficient and effective as the system evolves.
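The "sustained breach" idea behind intelligent alerting can be sketched in a few lines: the alert fires only after the metric has stayed above its threshold for several consecutive samples. This is similar in spirit to the `for:` clause on a Prometheus alerting rule, which expresses the same idea as a duration. The class below is hypothetical, and counting samples instead of time is a simplifying assumption.

```python
class SustainedThresholdAlert:
    """Fire once when a metric stays above threshold for `for_samples` consecutive samples."""

    def __init__(self, threshold, for_samples):
        self.threshold = threshold
        self.for_samples = for_samples
        self.breaches = 0
        self.firing = False

    def observe(self, value):
        """Return True only on the sample where the alert transitions to firing."""
        if value > self.threshold:
            self.breaches += 1
        else:
            self.breaches = 0
            self.firing = False  # condition cleared, so the alert resolves
        if self.breaches >= self.for_samples and not self.firing:
            self.firing = True
            return True
        return False
```

With a threshold of 90 and `for_samples=3`, the CPU series `[95, 50, 95, 96, 97, 98, 60]` triggers exactly one notification, at index 4. The isolated spike at index 0 is ignored.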

Q 17. Describe your experience with distributed tracing.

Distributed tracing is essential for understanding the performance of applications that span multiple services. Think of it as following a package as it moves through various stages of delivery – we need to understand the entire journey to identify bottlenecks. My experience includes using tools like Jaeger and Zipkin to trace requests as they travel through a distributed microservices architecture.

These tools allow us to visualize the flow of requests, identifying latency hotspots and performance issues within individual services. We can see exactly how long a request spends in each service, pinpoint slow operations or failures, and quickly identify the problematic components. For instance, in a recent project, we used distributed tracing to identify that a slow database response in one service was cascading into performance issues downstream. This allowed for a focused effort on optimizing the database query rather than making assumptions.

Implementing effective distributed tracing requires careful instrumentation of the application. This typically involves adding tracing headers to requests and using libraries or agents that automatically collect and report tracing data. Proper correlation between traces from different services is essential for a comprehensive understanding of the end-to-end performance.
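Header propagation is the part of tracing instrumentation that most often breaks. This sketch uses the W3C Trace Context `traceparent` header format (`version-traceid-parentid-flags`) to show what a service does on each hop: it keeps the trace ID and mints a new span ID. In practice, an OpenTelemetry SDK or a Jaeger/Zipkin client library handles this for you. The helper names here are hypothetical, and validation is simplified (for example, the spec's rule that all-zero IDs are invalid is not checked).

```python
import re
import secrets

TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled=True):
    """Start a new trace at the edge of the system (version 00)."""
    flags = "01" if sampled else "00"
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-{flags}"


def child_traceparent(incoming):
    """Continue an incoming trace: keep its trace-id, mint a span id for this service.

    The new span id becomes the parent-id that the next downstream hop sees."""
    match = TRACEPARENT_RE.match(incoming)
    if match is None:
        return new_traceparent()  # malformed header: start a fresh trace
    trace_id, _parent_span_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"
```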

Q 18. How do you correlate performance data from different sources?

Correlating performance data from diverse sources is like assembling a puzzle. We have different pieces of information (CPU usage from the server, database query times, network latency, user experience metrics) that need to be combined to get a complete picture. My approach involves using a centralized monitoring system that can ingest data from different sources and establish relationships between these data points.

This often involves using common identifiers, such as request IDs or transaction IDs, that can be tracked across various systems. For instance, a request ID can link a web server log entry to a database log entry and a network monitoring record, allowing us to reconstruct the complete lifecycle of a single request. We also employ custom scripting or data transformation to align the data from different sources into a unified format before analysis.

Tools like Datadog, Splunk, and New Relic are excellent at providing capabilities for correlating data from various sources. Their dashboards offer visualizations that help understand the interconnectedness of different performance metrics, making it easy to identify the underlying causes of performance issues.
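Here is a sketch of request-ID correlation in Python. It assumes each source has already been parsed into dicts that carry a `request_id` field. The source names (`web`, `db`) and the `latency_ms`/`query_ms` fields are hypothetical.

```python
from collections import defaultdict


def correlate(*sources):
    """Group records from several sources by their shared request_id.

    Each source is a (name, records) pair; each record is a dict with 'request_id'.
    A request may have several records per source (e.g. several DB queries)."""
    timeline = defaultdict(dict)
    for source_name, records in sources:
        for record in records:
            timeline[record["request_id"]].setdefault(source_name, []).append(record)
    return dict(timeline)


def db_share_of_latency(timeline):
    """For requests seen in both web and db logs, the fraction of time spent in the DB."""
    shares = {}
    for request_id, parts in timeline.items():
        if "web" in parts and "db" in parts:
            db_ms = sum(r["query_ms"] for r in parts["db"])
            web_ms = parts["web"][0]["latency_ms"]
            shares[request_id] = db_ms / web_ms
    return shares
```

A request that spends 640 ms of an 800 ms response in the database (share 0.8) points the investigation at its queries rather than at the web tier.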

Q 19. How do you use performance monitoring data to inform DevOps practices?

Performance monitoring data is the foundation of informed DevOps practices. It provides actionable insights into the health and performance of our systems, enabling proactive improvements and faster resolution of issues. In essence, it allows us to move from reactive problem-solving to proactive system optimization.

For instance, performance data helps us in capacity planning: By analyzing trends in resource utilization, we can predict future capacity needs and prevent performance degradation. It also improves our deployment processes: By monitoring the performance impact of deployments, we can identify issues quickly and roll back changes if necessary. Continuous integration/continuous delivery (CI/CD) pipelines are directly enhanced by automated performance tests triggered upon code changes, preventing performance regressions early in the development lifecycle.

Furthermore, performance data aids in service level objective (SLO) definition and monitoring. We use historical performance data to set realistic SLOs and continuously track our adherence, allowing us to understand how well we’re meeting our commitments. By using this data, we can improve our systems iteratively, creating a feedback loop that promotes continuous improvement.
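The arithmetic behind SLO tracking is simple enough to show directly. For an availability SLO, the error budget is the fraction of time (or requests) that is allowed to fail. For example, a 99.9% SLO over 30 days allows 0.1% of 43,200 minutes, or about 43.2 minutes of downtime. A minimal sketch:

```python
def error_budget_minutes(slo, window_days=30):
    """Allowed downtime, in minutes, for an availability SLO over the window."""
    return (1 - slo) * window_days * 24 * 60


def budget_remaining(slo, total_requests, failed_requests):
    """Fraction of the request-based error budget still unspent (negative = overspent)."""
    allowed_failures = (1 - slo) * total_requests
    return 1 - failed_requests / allowed_failures


print(f"{error_budget_minutes(0.999):.1f} minutes")       # about 43.2 minutes
print(f"{budget_remaining(0.999, 1_000_000, 250):.0%}")   # 75% of the budget left
```

A team might, for instance, pause risky deployments once the remaining budget drops below an agreed level. That policy is a choice each organization makes, not something the formula dictates.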

Q 20. Explain your experience with synthetic monitoring.

Synthetic monitoring is like having a dedicated team of automated users that constantly check the health and responsiveness of your application from various geographic locations. Instead of relying solely on real-user monitoring, which can be unpredictable and difficult to analyze, synthetic monitoring uses automated scripts to simulate user interactions and measure response times.

My experience involves using tools that simulate various user scenarios, like page loads, API calls, and database queries. This allows us to proactively identify performance issues before they impact real users. For example, we might set up a script that simulates a typical user checkout process, allowing us to quickly detect any degradation in performance before customers experience issues. Synthetic monitors provide early warnings, helping to prevent outages and maintain a positive user experience.

These tools also allow us to monitor the performance of our systems from different geographical locations, mimicking the experience of users in different parts of the world. This is especially important for globally distributed applications.
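A single synthetic probe is just a timed, scripted request with a pass/fail rule. This sketch uses Python's `urllib`. The `fetch` parameter is a hypothetical hook for swapping in a multi-step script (such as a checkout flow). A scheduler that runs the probe from several regions is assumed rather than shown.

```python
import time
import urllib.request


def synthetic_check(url, max_ms=1000, fetch=None):
    """Run one probe and report pass/fail with the measured latency.

    By default, `fetch` performs a plain HTTP GET; pass your own callable
    to script a more realistic user journey."""

    def http_get(u):
        with urllib.request.urlopen(u, timeout=max_ms / 1000) as resp:
            resp.read()
            return resp.status

    fetch = fetch or http_get
    start = time.perf_counter()
    try:
        status = fetch(url)
    except Exception as exc:  # timeouts, connection errors, HTTP 4xx/5xx raised by urllib
        return {"url": url, "ok": False, "error": str(exc)}
    elapsed_ms = (time.perf_counter() - start) * 1000
    return {
        "url": url,
        "ok": status == 200 and elapsed_ms <= max_ms,
        "status": status,
        "elapsed_ms": round(elapsed_ms, 1),
    }
```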

Q 21. How do you optimize application performance for cloud environments?

Optimizing application performance in cloud environments presents unique challenges and opportunities. The key is to leverage the scalability and flexibility of the cloud while avoiding common pitfalls.

My approach involves several strategies. The first is right-sizing instances: choosing the appropriate instance size for the workload is vital. Over-provisioning wastes resources, while under-provisioning leads to performance bottlenecks, so we use cloud monitoring tools to analyze resource utilization and adjust instance sizes accordingly. Another strategy is to use auto-scaling features, which dynamically adjust the number of instances based on demand and help maintain performance during traffic spikes. Database optimization is also critical: choosing the right database type (e.g., relational or NoSQL) and optimizing queries both matter, and cloud-native database services often offer advantages in scalability and ease of management.

Caching strategies play a crucial role in cloud environments. Leveraging content delivery networks (CDNs) for static assets and using in-memory caches for frequently accessed data reduces latency and improves response times. Finally, efficient code and architecture are fundamental: Well-designed code and a scalable architecture, utilizing microservices where appropriate, are vital for optimal performance in the cloud.
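Auto-scaling policies often reduce to a target-tracking rule. The sketch below follows the ratio documented for the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current × currentMetric / targetMetric)`. It includes a tolerance band (0.1 by default in Kubernetes) that suppresses tiny adjustments. The min/max bounds are assumed values.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Target-tracking scale decision using the HPA-style ratio rule."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target; avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target scale to 6. At 63% CPU, the ratio is within tolerance, and the count stays at 4.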

Q 22. Describe your experience with performance tuning operating systems.

Operating system performance tuning is a multifaceted process focused on optimizing resource utilization to enhance application responsiveness and overall system efficiency. My experience encompasses various aspects, from analyzing system logs and resource consumption to adjusting kernel parameters and implementing caching strategies.

For instance, I once worked on a server experiencing slowdowns due to excessive disk I/O. By analyzing I/O and memory statistics with tools like iostat and vmstat, we traced the bottleneck to inefficient database queries. We optimized those queries, implemented a more robust caching mechanism, and adjusted the kernel’s I/O scheduler, resulting in a significant performance improvement. In another project, tools like top and free revealed steadily growing memory consumption in a critical application; we traced this to memory leaks and fixed them, which noticeably reduced system instability and improved overall performance.

My approach typically follows a systematic methodology: first, I’d profile the system thoroughly to pinpoint performance bottlenecks. Next, I’d experiment with tuning parameters, closely monitoring their impact on key metrics. Finally, I’d document the changes made and their effectiveness, creating a baseline for future adjustments. The specific tools and techniques vary with the operating system (Linux, Windows, etc.) and the underlying architecture.
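Tools like vmstat derive their CPU columns (including `wa`, time spent waiting on I/O) from counters in Linux's /proc/stat. The sketch below reads those counters directly and computes busy and iowait percentages between two samples, which is a quick way to tell a CPU-bound host from an I/O-bound one. It assumes a Linux host and the standard column order of the aggregate `cpu` line; the function names are hypothetical.

```python
from typing import Dict

# Column order of the aggregate "cpu" line in Linux /proc/stat (values are clock ticks).
# guest/guest_nice may follow, but they are already included in user/nice, so they are skipped.
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(line: str) -> Dict[str, int]:
    parts = line.split()
    if not parts or parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line from /proc/stat")
    # Pad with zeros in case an older kernel reports fewer columns.
    values = [int(v) for v in parts[1:]] + [0] * len(FIELDS)
    return dict(zip(FIELDS, values))


def cpu_breakdown(prev: Dict[str, int], curr: Dict[str, int]) -> Dict[str, float]:
    """Share of CPU time that was busy vs. idle-waiting on I/O between two samples."""
    delta = {k: curr[k] - prev[k] for k in FIELDS}
    total = sum(delta.values())
    if total <= 0:
        return {"busy_pct": 0.0, "iowait_pct": 0.0}
    idle = delta["idle"] + delta["iowait"]
    return {
        "busy_pct": 100.0 * (total - idle) / total,
        "iowait_pct": 100.0 * delta["iowait"] / total,
    }


def read_cpu_sample(path: str = "/proc/stat") -> Dict[str, int]:
    with open(path) as f:
        return parse_cpu_line(f.readline())  # the first line is the aggregate "cpu" line
```

Taking two samples about a second apart with `read_cpu_sample()` and passing them to `cpu_breakdown` gives a rough profile. A high `iowait_pct` alongside a modest `busy_pct` suggests the disk rather than the CPU is the bottleneck, which iostat can then confirm per device.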

Q 23. How do you use performance monitoring data to identify security vulnerabilities?

Performance monitoring data can indirectly reveal security vulnerabilities. Unusual spikes in CPU usage, memory consumption, or network activity can sometimes signal malicious activity. For example, a sudden increase in network traffic to an unusual destination might indicate a data breach or an attempt by malware to exfiltrate data.
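Spotting an "unusual spike" in practice means comparing each new sample to a recent baseline. A minimal sketch, assuming a simple rolling z-score over a per-host metric such as outbound bytes per minute: the window size, the threshold of 4 standard deviations, and the `min_stdev` floor are illustrative assumptions, and real anomaly detection usually also accounts for daily and weekly seasonality.

```python
import statistics
from collections import deque
from typing import Deque, Optional


class SpikeDetector:
    """Flag samples far above a rolling baseline using a simple z-score test."""

    def __init__(self, window: int = 60, threshold: float = 4.0,
                 min_samples: int = 10, min_stdev: float = 1.0):
        self.history: Deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples
        # Floor (in the metric's units) so a perfectly flat baseline cannot divide by zero.
        self.min_stdev = min_stdev

    def observe(self, value: float) -> Optional[float]:
        """Return the z-score if the sample is anomalous, else None."""
        if len(self.history) >= self.min_samples:
            mean = statistics.fmean(self.history)
            stdev = max(statistics.pstdev(self.history), self.min_stdev)
            z = (value - mean) / stdev
            if z > self.threshold:
                # Anomalies are kept out of the baseline so they do not mask the next one.
                return z
        self.history.append(value)
        return None
```

A flagged sample is only a lead: it would be correlated with the security logs and IDS alerts described next before anyone treats it as an incident.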

Similarly, a persistent high CPU load from an unknown process could suggest malicious software, such as an unauthorized cryptocurrency miner. Analyzing per-process resource usage with tools like top (Linux) or Task Manager (Windows) is a useful starting point. We can then correlate these performance anomalies with security logs and intrusion detection system (IDS) alerts to corroborate suspicions.

However, performance metrics alone aren’t definitive proof of a security breach. They provide clues that require further investigation with security-specific tools and techniques. Anomaly-based signals like these can be particularly valuable for detecting zero-day exploits or subtle attacks that evade signature-based security systems.

Q 24. Explain your understanding of different performance monitoring architectures.

Performance monitoring architectures vary significantly depending on the scale and complexity of the system. We can broadly categorize them into:

- Centralized Monitoring: A single server collects and analyzes data from all monitored systems. This approach simplifies management but can create a single point of failure and may struggle with scalability for very large environments.

- Decentralized Monitoring: Data is collected and analyzed at multiple points in the network. This offers better scalability and resilience but adds complexity to data aggregation and correlation.

- Hybrid Monitoring: Combines elements of both centralized and decentralized approaches, aiming to leverage the strengths of both while mitigating their weaknesses. This often involves agents on individual servers that collect data locally, which is then aggregated in a central location.
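The hybrid pattern can be sketched as agents that pre-aggregate locally and ship compact summaries, with a central tier that merges them. This is an illustrative model, not the design of any specific product: `Agent`, `Summary`, and `CentralAggregator` are hypothetical names, and transport, batching intervals, and retries are left out.

```python
from typing import Dict


class Summary:
    """Pre-aggregated stats an agent ships instead of every raw sample."""

    def __init__(self) -> None:
        self.count = 0
        self.total = 0.0
        self.maximum = float("-inf")

    def add(self, value: float) -> None:
        self.count += 1
        self.total += value
        self.maximum = max(self.maximum, value)

    def merge(self, other: "Summary") -> None:
        self.count += other.count
        self.total += other.total
        self.maximum = max(self.maximum, other.maximum)


class Agent:
    """Runs on each server: records raw samples locally, flushes a compact summary."""

    def __init__(self, host: str):
        self.host = host
        self._local: Dict[str, Summary] = {}

    def record(self, metric: str, value: float) -> None:
        self._local.setdefault(metric, Summary()).add(value)

    def flush(self) -> Dict[str, Summary]:
        batch, self._local = self._local, {}
        return batch


class CentralAggregator:
    """Central tier: merges per-host summaries into fleet-wide and per-host views."""

    def __init__(self) -> None:
        self.fleet: Dict[str, Summary] = {}
        self.per_host: Dict[str, Dict[str, Summary]] = {}

    def ingest(self, host: str, batch: Dict[str, Summary]) -> None:
        for metric, s in batch.items():
            self.fleet.setdefault(metric, Summary()).merge(s)
            self.per_host.setdefault(host, {}).setdefault(metric, Summary()).merge(s)

    def mean(self, metric: str) -> float:
        s = self.fleet[metric]
        return s.total / s.count
```

The design choice to note is which statistics survive pre-aggregation: counts, sums, means, and maxima merge exactly, but percentiles do not, so systems that need fleet-wide p99 latency typically ship histograms or mergeable sketches instead.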

The choice of architecture depends on factors like the size of the environment, the types of applications being monitored, and the required level of detail in the collected data. Modern approaches often leverage cloud-based solutions which offer highly scalable and manageable infrastructure. For instance, cloud platforms often provide integrated monitoring tools that can handle massive data volumes and sophisticated analysis.

Q 25. How do you balance performance monitoring with security considerations?

Balancing performance monitoring with security is crucial. Extensive monitoring can introduce security risks if not carefully implemented. Overly intrusive monitoring agents can become targets for attacks, and improperly secured monitoring systems can expose sensitive data.

My approach prioritizes the principle of least privilege: monitoring agents are granted only the permissions they need, minimizing potential damage if one is compromised. Data transmitted between agents and the central system should be encrypted in transit (for example, with TLS). Regular security audits of the monitoring infrastructure are essential to identify and mitigate vulnerabilities.

For example, when deploying monitoring agents, I’d always prioritize using secured channels (HTTPS, for instance) and strong authentication methods. Furthermore, sensitive data like passwords or API keys should never be stored directly within monitoring configurations; instead, I would utilize secure secrets management tools.
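Both practices can be enforced at the point where an agent loads its configuration: refuse non-TLS endpoints, and read the credential from the environment, where a secrets manager or orchestrator injects it, rather than from the config file. A minimal sketch; the `MONITORING_API_TOKEN` variable name, the endpoint, and the Bearer-token header are assumptions for illustration, not any specific vendor's API.

```python
import os
from urllib.parse import urlparse


def load_agent_config(endpoint: str, token_env: str = "MONITORING_API_TOKEN") -> dict:
    """Build agent settings without storing the credential in the config file itself."""
    # Refuse plaintext transport so metrics and credentials are never sent unencrypted.
    if urlparse(endpoint).scheme != "https":
        raise ValueError(f"refusing non-TLS monitoring endpoint: {endpoint}")
    # The secret is injected at runtime (e.g. by a secrets manager), never committed to config.
    token = os.environ.get(token_env)
    if not token:
        raise RuntimeError(f"{token_env} is not set; inject it from your secrets manager")
    return {"endpoint": endpoint, "headers": {"Authorization": f"Bearer {token}"}}
```

Failing fast at startup is deliberate: an agent that silently falls back to HTTP or runs without credentials is harder to notice than one that refuses to start.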

Q 26. Describe your experience with different types of performance testing (load, stress, endurance).

I have extensive experience with various performance testing methodologies, including load, stress, and endurance testing. Each serves a distinct purpose:

- Load Testing: Determines system behavior under anticipated user loads. This helps identify bottlenecks and capacity limitations before deployment. Tools like JMeter and Gatling are often used.

- Stress Testing: Pushes the system beyond its expected limits to identify breaking points and vulnerabilities. This helps determine the system’s resilience and capacity for handling unexpected surges in load.

- Endurance Testing: Evaluates system stability and performance over extended periods under sustained load. This uncovers potential memory leaks, resource exhaustion, and other long-term stability issues.
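Tools like JMeter and Gatling handle ramp-up, pacing, and reporting, but the core of a load test is simple: many concurrent virtual users issuing requests while latency and errors are recorded. The toy sketch below illustrates that core with a thread pool and nearest-rank percentiles. `request` is a hypothetical callable standing in for one user action, and the sketch omits think time and ramp-up.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple


def percentile(sorted_ms: List[float], pct: float) -> float:
    """Nearest-rank percentile of an already-sorted list."""
    if not sorted_ms:
        raise ValueError("no samples")
    rank = max(1, math.ceil(pct * len(sorted_ms) / 100))
    return sorted_ms[rank - 1]


def load_test(request: Callable[[], None], users: int, requests_per_user: int) -> Dict[str, float]:
    """Simulate `users` concurrent virtual users, each sending requests back to back."""
    def virtual_user() -> Tuple[List[float], int]:
        latencies, errors = [], 0
        for _ in range(requests_per_user):
            start = time.perf_counter()
            try:
                request()
            except Exception:
                errors += 1  # failed requests count toward the error rate, not latency
                continue
            latencies.append((time.perf_counter() - start) * 1000)
        return latencies, errors

    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user) for _ in range(users)]
        results = [f.result() for f in futures]

    samples = sorted(ms for lats, _ in results for ms in lats)
    report: Dict[str, float] = {"count": len(samples), "errors": sum(e for _, e in results)}
    if samples:
        for p in (50, 95, 99):
            report[f"p{p}_ms"] = percentile(samples, p)
    return report
```

The same harness maps onto all three test types: run it at expected concurrency for a load test, keep raising `users` until errors or p99 latency climb sharply for a stress test, and run it for hours while watching memory for an endurance test.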

In a recent project, we used JMeter to perform load testing on a new e-commerce platform, simulating thousands of concurrent users. This testing identified database performance bottlenecks, which we addressed through database optimization and caching strategies. Subsequently, we employed stress testing to simulate significantly higher user loads than expected, revealing an unanticipated vulnerability in the application’s error handling mechanism. Finally, endurance testing confirmed the stability of the updated system under continuous operation for 72 hours.

Q 27. How would you approach monitoring the performance of a microservices architecture?

Monitoring the performance of a microservices architecture requires a distributed monitoring approach. Each microservice needs to be individually monitored to identify performance bottlenecks within its specific functions. However, merely monitoring individual services is insufficient. Understanding the inter-service communication and dependencies is critical.

I would employ a combination of techniques: First, each microservice should include instrumentation to track key metrics like request latency, error rates, and resource usage. Distributed tracing tools are essential to track requests as they flow through multiple services, helping pinpoint the source of performance problems across service boundaries. Tools like Jaeger or Zipkin are often employed for this purpose. Centralized logging and log aggregation are also crucial for correlating events and identifying patterns.
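Distributed tracing works because each service forwards a trace identifier with every outgoing call. The sketch below shows that propagation step using the W3C Trace Context `traceparent` header format (`version-traceid-parentid-flags`), which current OpenTelemetry SDKs propagate by default; Zipkin has historically used its own B3 headers instead. In a real system a tracing library does this for you, and this simplified version only accepts version `00`.

```python
import re
import secrets

TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace at the edge service: random 16-byte trace ID, 8-byte span ID."""
    trace_id = secrets.token_hex(16)
    span_id = secrets.token_hex(8)
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"


def child_traceparent(incoming: str) -> str:
    """Keep the trace ID and flags, but mint a new span ID for the next outgoing call."""
    m = TRACEPARENT_RE.match(incoming)
    # All-zero IDs are invalid; on any malformed header, start a fresh trace.
    if not m or m.group(1) == "0" * 32 or m.group(2) == "0" * 16:
        return new_traceparent()
    trace_id, _parent_span, flags = m.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"
```

Because every hop keeps the same trace ID, a tracing backend such as Jaeger or Zipkin can stitch the spans from each service into one end-to-end timeline and show which hop added the latency.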

The approach must account for the inherent complexities of distributed systems. For example, network latency between services can significantly affect end-to-end performance, so detailed monitoring of network performance, including latency and bandwidth usage, is crucial for optimizing the system’s efficiency.

Q 28. Explain your approach to creating and maintaining performance dashboards.

Creating and maintaining effective performance dashboards involves a structured process. First, I’d identify the key performance indicators (KPIs) that accurately reflect the health and performance of the system. These KPIs are tailored to the specific application and the overall business objectives. Then, I’d select an appropriate dashboarding tool – Grafana, Datadog, or custom solutions are all possibilities – based on the existing monitoring infrastructure and the specific requirements.

The dashboard should be intuitive and easy to understand, presenting critical information concisely and effectively. Visualizations, such as graphs, charts, and heatmaps, are essential for quickly grasping the system’s status. Alerts are set up to notify administrators of critical issues, such as exceeding thresholds for key metrics. Regular review and updates are crucial. The dashboard must evolve alongside the system it monitors, adding or removing KPIs as the application’s requirements change.
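A dashboard alert that fires on a single sample above threshold tends to page people for harmless blips. A common refinement is to require the breach to persist, similar in spirit to the `for` clause in Prometheus alerting rules. The sketch below assumes one sample per evaluation interval; `ThresholdAlert` and its parameters are hypothetical.

```python
class ThresholdAlert:
    """Fire only after `for_samples` consecutive breaches, so a single blip does not page anyone."""

    def __init__(self, threshold: float, for_samples: int = 3):
        self.threshold = threshold
        self.for_samples = for_samples
        self.breaches = 0
        self.firing = False

    def evaluate(self, value: float) -> bool:
        """Feed one sample; return True only when the alert transitions into firing."""
        if value > self.threshold:
            self.breaches += 1
        else:
            self.breaches = 0
            self.firing = False  # recovered: reset so the next sustained breach alerts again
        if not self.firing and self.breaches >= self.for_samples:
            self.firing = True
            return True
        return False
```

With a 500 ms response-time threshold and `for_samples=3`, the sequence 600, 600, 400, 600, 600, 600, 700, 300 notifies exactly once, on the sixth sample, and does not re-notify while the breach continues.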

For example, a well-designed dashboard might show key metrics like CPU and memory usage, response times, error rates, and network traffic, grouped logically and clearly presented for quick interpretation. This ensures timely identification and resolution of performance bottlenecks, allowing for proactive system management and optimization.

Key Topics to Learn for Monitor Machine Performance Interview

- System Monitoring Tools and Technologies: Understand the functionalities and applications of various monitoring tools (e.g., Nagios, Zabbix, Prometheus, Grafana). Explore their strengths and weaknesses in different contexts.

- Metrics and KPIs: Learn to identify and interpret key performance indicators (KPIs) relevant to machine performance, such as CPU utilization, memory usage, disk I/O, network latency, and application response times. Practice analyzing these metrics to diagnose performance bottlenecks.

- Performance Analysis and Troubleshooting: Develop your skills in identifying performance issues through log analysis, system resource monitoring, and performance profiling. Practice different troubleshooting methodologies and learn to prioritize solutions based on impact and feasibility.

- Operating System Fundamentals: Possessing a strong understanding of operating system principles (e.g., process management, memory management, file systems) is crucial for effective machine performance monitoring and optimization.

- Networking Concepts: A solid grasp of networking fundamentals, including TCP/IP, network protocols, and common network performance issues, is essential for comprehensive system monitoring.

- Database Performance: If relevant to the target role, familiarize yourself with database monitoring techniques, query optimization, and common database performance bottlenecks.

- Automation and Scripting: Demonstrate your ability to automate monitoring tasks and analyze data using scripting languages like Python or Bash. This is highly valued in many roles.

- Cloud Monitoring: If the role involves cloud environments (AWS, Azure, GCP), learn the specific monitoring tools and techniques used in those platforms.

- Capacity Planning: Understanding how to predict future resource needs based on current performance trends is a valuable skill.

- Security Considerations: Be prepared to discuss the importance of security in monitoring systems and how to protect sensitive data collected through monitoring processes.
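For the capacity-planning topic, the simplest technique worth being able to explain is fitting a linear trend to historical usage and extrapolating when it crosses capacity. A minimal sketch using ordinary least squares; the function names are hypothetical, and real usage is often seasonal or step-shaped, so a straight-line forecast is a first approximation rather than a plan.

```python
from typing import Optional, Sequence, Tuple


def linear_fit(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def days_until_full(days: Sequence[float], used_pct: Sequence[float],
                    capacity_pct: float = 100.0) -> Optional[float]:
    """Extrapolate the usage trend; None if usage is flat or shrinking."""
    slope, intercept = linear_fit(days, used_pct)
    if slope <= 0:
        return None
    crossing_day = (capacity_pct - intercept) / slope
    return max(0.0, crossing_day - days[-1])
```

With illustrative disk readings of 50, 52, 54, 56, and 58% on days 0 through 4, the fit gives a slope of 2 points per day and `days_until_full` returns 21.0. Passing `capacity_pct=80.0` instead gives the lead time before a typical warning threshold.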

Next Steps

Mastering machine performance monitoring is crucial for a successful and rewarding career in IT operations, DevOps, or system administration. It demonstrates a critical skillset highly sought after by employers. To significantly increase your job prospects, create a compelling and ATS-friendly resume that showcases your expertise. ResumeGemini is a trusted resource to help you build a professional and impactful resume. We provide examples of resumes tailored to Monitor Machine Performance roles to help you get started.
