Are you ready to stand out in your next interview? Understanding and preparing for Multi-Threaded and Asynchronous Processing interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Multi-Threaded and Asynchronous Processing Interview

Q 1. Explain the difference between threads and processes.

Threads and processes are both ways to achieve concurrency, but they differ significantly in their nature and how they operate within an operating system. Think of a process as a fully independent program, with its own memory space, resources, and execution context. A thread, on the other hand, is a lightweight unit of execution that runs *within* a process. Multiple threads can exist within the same process, sharing the same memory space, but having their own program counter and stack.

Analogy: Think of each process as a separate factory with its own equipment and workers. Threads are the individual workers inside one factory: they share the same tools and workspace but perform different tasks concurrently.

- Process: Heavyweight, independent, requires more overhead to create and manage.

- Thread: Lightweight, shares resources within a process, less overhead to create and manage.
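The distinction can be observed directly. The sketch below is only an illustration (the helper names `pids_seen_by_threads` and `pid_of_child_process` are made up for this example): threads started by a program report the same process ID and can append to the same list, while a separately launched interpreter is a different process with its own ID and memory.

```python
import os
import subprocess
import sys
import threading


def pids_seen_by_threads(n=3):
    """Start n threads; each appends its process ID to a list shared with the caller."""
    seen = []                      # one list, visible to every thread in this process
    lock = threading.Lock()

    def record():
        with lock:
            seen.append(os.getpid())

    threads = [threading.Thread(target=record) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return seen


def pid_of_child_process():
    """Launch a separate Python process and return the process ID it reports."""
    completed = subprocess.run(
        [sys.executable, "-c", "import os; print(os.getpid())"],
        capture_output=True, text=True, check=True,
    )
    return int(completed.stdout.strip())
```

Calling `pids_seen_by_threads()` returns the current process ID repeated once per thread, whereas `pid_of_child_process()` returns a different ID.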

Q 2. What are the benefits and drawbacks of multithreading?

Multithreading offers several advantages, but also comes with challenges.

- Benefits:

- Increased Responsiveness: Allows a program to remain responsive even during long-running operations. For example, a GUI application can continue to respond to user input while performing a complex calculation in a separate thread.

- Resource Sharing: Threads within the same process share memory, making communication and data exchange efficient.

- Improved Performance: On multi-core processors, threads can run in parallel, significantly speeding up execution, especially for tasks that can be broken down into smaller, independent units.

- Drawbacks:

- Increased Complexity: Managing multiple threads introduces complexities like synchronization issues, deadlocks, and race conditions. Debugging multithreaded applications can be significantly more challenging.

- Synchronization Overhead: Mechanisms for thread synchronization (e.g., mutexes, semaphores) introduce overhead that can negate performance gains if not carefully managed.

- Resource Contention: Multiple threads accessing shared resources can lead to contention, reducing overall performance.

Example: A web server handling multiple client requests concurrently using multithreading. Each client request is handled by a separate thread, enabling the server to efficiently serve many clients simultaneously.

Q 3. Describe different concurrency models (e.g., thread pools, actors).

Several concurrency models exist, each with strengths and weaknesses:

- Thread Pools: A thread pool manages a set of reusable worker threads, usually fixed or bounded in size. Tasks are submitted to the pool, and idle threads pick them up and execute them. This avoids the overhead of constantly creating and destroying threads and is efficient for handling numerous short-lived tasks.

- Actors: In the actor model, the focus is on independent actors that communicate asynchronously via message passing. Each actor has its own mailbox and processes its messages one at a time, so its private state is never touched by two threads at once. This model is well-suited for distributed systems and highly concurrent scenarios. Because actors share no mutable state, failures tend to be easier to isolate than in designs built on shared memory and locks.

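- Futures and Promises: These represent the result of an asynchronous operation. Where the two are distinguished, the promise is the writable side that the producer completes with a value or an error, while the future is the read side used to access the result when it becomes available. Some languages, such as JavaScript, fold both roles into one object. Useful for handling I/O-bound operations that would otherwise block the main thread.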

Example: A web crawler might use a thread pool to fetch multiple web pages concurrently. Each fetch operation is a task submitted to the pool. A complex simulation could benefit from the actor model, enabling independent agents to interact without shared memory concerns.
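To make the actor idea concrete, here is a minimal sketch that uses a thread and a queue as the mailbox. It is a toy, not a real actor framework; the class `CounterActor` and its message names are assumptions made for this example.

```python
import queue
import threading


class CounterActor:
    """Toy actor: owns its state and changes it only in response to messages."""

    def __init__(self):
        self._mailbox = queue.Queue()
        self._total = 0                       # touched only by the actor's own thread
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        while True:
            kind, payload = self._mailbox.get()   # messages are handled one at a time
            if kind == "add":
                self._total += payload
            elif kind == "get":
                payload.put(self._total)          # reply through the caller's queue
            elif kind == "stop":
                break

    def add(self, amount):
        self._mailbox.put(("add", amount))        # asynchronous: returns immediately

    def get(self):
        reply = queue.Queue(maxsize=1)
        self._mailbox.put(("get", reply))
        return reply.get()                        # waits for the actor's answer

    def stop(self):
        self._mailbox.put(("stop", None))
        self._thread.join()
```

Many threads can call `add` concurrently without any lock in user code, because only the actor's thread ever modifies `_total`.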

Q 4. Explain the concept of race conditions and how to prevent them.

A race condition occurs when multiple threads access and manipulate shared data concurrently, and the final result depends on the unpredictable order in which the threads execute. This leads to inconsistent or erroneous results.

Example: Two threads incrementing a shared counter. If both read the same value, increment it independently, and write it back, one update is lost and the final counter value is one less than expected.

Prevention Strategies:

- Mutual Exclusion (Mutexes): A mutex acts like a lock, ensuring that only one thread can access a shared resource at a time.

    // Illustrative example (syntax varies by language)
    Mutex mutex;
    mutex.lock();
    // Access and modify shared data
    mutex.unlock();

- Semaphores: Generalization of mutexes, allowing multiple threads to access a resource concurrently, up to a specified limit.

- Atomic Operations: Operations that are guaranteed to be executed indivisibly, preventing race conditions on simple data types.

- Thread-local storage: Data stored locally to each thread avoids race conditions altogether.
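The lost update from the counter example is normally intermittent, which makes it hard to demonstrate. The sketch below forces the bad interleaving with a barrier so the outcome is repeatable, then shows the mutex fix. The function names are hypothetical and the barrier exists only to make the race visible.

```python
import threading


def lost_update_demo():
    """Two threads both read the counter before either writes, so one update is lost."""
    state = {"counter": 0}
    both_have_read = threading.Barrier(2)

    def unsafe_increment():
        value = state["counter"]       # read
        both_have_read.wait()          # force the problematic interleaving
        state["counter"] = value + 1   # write back a stale value

    threads = [threading.Thread(target=unsafe_increment) for _ in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["counter"]


def locked_increment_demo(n_threads=2, per_thread=1000):
    """The same read-modify-write, made safe by holding a lock around it."""
    state = {"counter": 0}
    lock = threading.Lock()

    def safe_increment():
        for _ in range(per_thread):
            with lock:                 # only one thread at a time in this block
                state["counter"] += 1

    threads = [threading.Thread(target=safe_increment) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["counter"]
```

`lost_update_demo()` ends with a counter of 1 even though two increments ran, while `locked_increment_demo()` always reaches `n_threads * per_thread`.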

Q 5. What are deadlocks and how can they be avoided?

A deadlock occurs when two or more threads are blocked indefinitely, waiting for each other to release the resources that they need. It’s like a traffic jam where no car can move.

Example: Thread A holds lock X and is waiting for lock Y. Thread B holds lock Y and is waiting for lock X. Neither thread can proceed.

Deadlock Avoidance Strategies:

- Lock Ordering: Always acquire locks in a consistent order to prevent circular dependencies.

- Timeouts: Introduce timeouts when acquiring locks; if a lock isn’t available after a certain time, release any held locks and retry.

- Deadlock Detection and Recovery: Implement mechanisms to detect deadlocks and take corrective actions, such as terminating one of the involved threads.

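- Resource Hierarchy: Assign every resource a rank and only let a thread request resources ranked higher than those it already holds. This is the general form of lock ordering and rules out circular waits.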
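Lock ordering is the easiest of these strategies to show in code. In this sketch, which assumes a hypothetical `Account` class with a unique `account_id`, every transfer locks the account with the lower ID first, so two opposite transfers can never each hold the lock the other needs.

```python
import threading


class Account:
    def __init__(self, account_id, balance):
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(source, target, amount):
    """Move money between two different accounts, locking the lower account_id first."""
    # Sorting gives every thread the same acquisition order regardless of direction.
    first, second = sorted((source, target), key=lambda acct: acct.account_id)
    with first.lock:
        with second.lock:
            source.balance -= amount
            target.balance += amount
```

Without the sort, `transfer(a, b, ...)` and `transfer(b, a, ...)` running at the same time would reproduce the Thread A / Thread B scenario described above. The sketch assumes `source` and `target` are distinct accounts, since `threading.Lock` is not reentrant.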

Q 6. How do you handle thread synchronization?

Thread synchronization ensures that multiple threads access shared resources in a controlled and predictable manner. Without synchronization, race conditions and other concurrency issues can arise.

Mechanisms:

- Mutexes: Provide mutual exclusion, ensuring only one thread can access a shared resource at a time (as described in the Race Conditions answer).

- Semaphores: Allow a limited number of threads to access a shared resource concurrently.

- Condition Variables: Allow threads to wait for a specific condition to become true before proceeding. Useful for coordinating actions between threads.

- Monitors: Higher-level synchronization constructs that encapsulate shared data and synchronization primitives. They provide a more structured approach to thread synchronization.

Choosing the Right Mechanism: The appropriate synchronization mechanism depends on the specific needs of the application. Mutexes are suitable for simple mutual exclusion, while semaphores and condition variables offer more advanced synchronization capabilities.
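As one illustration of a condition variable, the sketch below builds a one-shot gate. The class name `Latch` is an assumption for this example (Python's own `threading.Event` offers similar behavior); the point is the pattern of waiting on a predicate while holding the condition's lock.

```python
import threading


class Latch:
    """One-shot gate: wait() blocks until another thread calls open()."""

    def __init__(self):
        self._cond = threading.Condition()
        self._is_open = False

    def open(self):
        with self._cond:
            self._is_open = True
            self._cond.notify_all()    # wake every thread waiting on the condition

    def wait(self, timeout=None):
        with self._cond:
            # wait_for re-checks the predicate after each wakeup, which guards
            # against spurious wakeups; it returns False if the timeout expires.
            return self._cond.wait_for(lambda: self._is_open, timeout=timeout)
```

A worker thread can call `latch.wait()` and will proceed only after some other thread calls `latch.open()`.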

Q 7. Explain the concept of atomicity.

Atomicity refers to the property of an operation being indivisible; it either completes entirely or not at all. There’s no intermediate state visible to other threads. This is crucial for maintaining data consistency in concurrent programs.

Example: Incrementing a counter is really three steps: read the value, add one, and write it back. If the increment is not atomic, another thread can modify the value between the read and the write, and one of the updates is lost.

Ensuring Atomicity:

- Atomic Operations: Many programming languages offer built-in atomic operations (e.g., Interlocked.Increment in C#). These operations are guaranteed to be executed atomically by the hardware or runtime environment.

- Mutexes: Enclosing code that modifies shared data within a mutex makes the entire block atomic with respect to every other thread that uses the same mutex.

Atomicity is fundamental to building robust and reliable multithreaded applications. It prevents partial updates and ensures that shared data remains consistent despite concurrent access.

Q 8. What are mutexes and semaphores?

Mutexes and semaphores are synchronization primitives used in concurrent programming to control access to shared resources and prevent race conditions. Think of them as traffic signals for threads.

A mutex (mutual exclusion) is a locking mechanism that allows only one thread to access a shared resource at a time. It’s like a single-key lock on a door; only the thread holding the key can enter. Once a thread acquires the mutex, other threads attempting to acquire it will block until the mutex is released.

A semaphore is a more generalized synchronization tool. It maintains a counter that represents the number of available resources. Threads can increment (signal) or decrement (wait) the counter. If a thread tries to decrement the counter and it’s zero, the thread blocks until the counter becomes positive. Semaphores are more flexible than mutexes because they can control access to multiple instances of a resource. For example, a semaphore with a count of 5 allows 5 threads to access a resource concurrently.

Example: Imagine a printer shared by multiple threads. A mutex would ensure that only one thread can print at a time. A semaphore could allow a certain number of threads to print concurrently, depending on the number of available printers.
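The printer example can be sketched with a counting semaphore. The function below is illustrative only (its name and the `time.sleep` stand-in for printing are assumptions); it records the highest number of threads that were ever inside the guarded section at once.

```python
import threading
import time


def run_with_limit(n_tasks=8, limit=3):
    """Run n_tasks threads, letting at most `limit` use the resource at once."""
    slots = threading.Semaphore(limit)
    guard = threading.Lock()           # protects the bookkeeping in `stats`
    stats = {"inside": 0, "peak": 0}

    def task():
        with slots:                    # blocks while `limit` threads hold a slot
            with guard:
                stats["inside"] += 1
                stats["peak"] = max(stats["peak"], stats["inside"])
            time.sleep(0.01)           # stand-in for using the shared resource
            with guard:
                stats["inside"] -= 1

    threads = [threading.Thread(target=task) for _ in range(n_tasks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stats["peak"]
```

With `limit=1` the semaphore behaves like a mutex and the peak is always 1; with a larger limit the peak never exceeds that limit.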

Q 9. Explain the producer-consumer problem and its solutions.

The producer-consumer problem describes a scenario where multiple producer threads generate data and multiple consumer threads consume that data from a shared buffer. The challenge is to ensure that producers don’t overwrite data before consumers have processed it, and consumers don’t try to access data that hasn’t been produced.

Solutions typically involve synchronization primitives like semaphores or condition variables. One common approach uses two semaphores: one to count the number of empty slots in the buffer (empty_slots) and one to count the number of filled slots (filled_slots).

Producers wait on empty_slots before producing. After producing, they signal filled_slots. Consumers wait on filled_slots before consuming. After consuming, they signal empty_slots. This ensures that producers and consumers operate within the buffer’s capacity and avoid data corruption.

    // Illustrative pseudocode (not production ready)
    semaphore empty_slots = buffer_size;
    semaphore filled_slots = 0;
    mutex buffer_lock;  // needed once there is more than one producer or consumer

    producer() {
        while (true) {
            wait(empty_slots);     // Wait for an empty slot
            lock(buffer_lock);
            produce();             // Put data into the buffer
            unlock(buffer_lock);
            signal(filled_slots);  // Signal that a slot is filled
        }
    }

    consumer() {
        while (true) {
            wait(filled_slots);    // Wait for a filled slot
            lock(buffer_lock);
            consume();             // Take data from the buffer
            unlock(buffer_lock);
            signal(empty_slots);   // Signal that a slot is empty
        }
    }

The semaphores only count slots. With several producers or consumers, the buffer itself still needs a mutex so that two threads never update it at the same moment. Other solutions use condition variables or lock-free data structures, but the core principle remains the same: managing access to shared resources to avoid conflicts.
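For readers who want something runnable, here is one possible Python rendering of the two-semaphore scheme. The class name `BoundedBuffer` is an assumption for this sketch, and a lock is added around the buffer so that several producers and consumers can use it safely. In everyday Python code, `queue.Queue(maxsize=...)` already provides this behavior.

```python
import threading
from collections import deque


class BoundedBuffer:
    """Fixed-capacity buffer coordinated by two counting semaphores and a mutex."""

    def __init__(self, capacity):
        self._items = deque()
        self._mutex = threading.Lock()                     # protects the deque itself
        self._empty_slots = threading.Semaphore(capacity)  # free slots remaining
        self._filled_slots = threading.Semaphore(0)        # items ready to consume

    def put(self, item):
        self._empty_slots.acquire()        # wait for an empty slot
        with self._mutex:
            self._items.append(item)
        self._filled_slots.release()       # signal that a slot is filled

    def get(self):
        self._filled_slots.acquire()       # wait for a filled slot
        with self._mutex:
            item = self._items.popleft()
        self._empty_slots.release()        # signal that a slot is empty
        return item
```

Producers call `put` and block when the buffer is full; consumers call `get` and block when it is empty.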

Q 10. Describe different synchronization primitives.

Synchronization primitives are tools that allow concurrent processes or threads to coordinate their actions and avoid data races and other concurrency issues. Besides mutexes and semaphores, several other primitives exist:

- Condition Variables: Allow threads to wait for a specific condition to become true before continuing. They are often used in conjunction with mutexes to protect shared data while threads wait.

- Barriers: Synchronization points where all participating threads must arrive before any can proceed. Useful for situations where all threads need to complete a phase before moving to the next.

- Monitors: Language constructs or libraries that encapsulate shared data and synchronization mechanisms. They provide a higher-level abstraction than individual primitives, simplifying concurrent programming.

- Atomic Operations: Operations that are guaranteed to be executed as a single, indivisible unit. This avoids race conditions on individual variables without the overhead of more complex synchronization mechanisms. Examples include atomic increments or swaps.

- Read-Write Locks: Allow multiple threads to read shared data concurrently but only one thread to write at a time. This improves concurrency when reads are much more frequent than writes.

The choice of primitive depends on the specific concurrency problem being addressed. For simple mutual exclusion, a mutex is sufficient. For more complex scenarios involving multiple resources or conditions, semaphores, condition variables, or monitors may be more suitable.
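Barriers are less familiar than mutexes, so a small sketch may help. The function `two_phase_run` is hypothetical; it records the order in which workers pass through two phases separated by a barrier.

```python
import threading


def two_phase_run(n_workers=4):
    """Each worker finishes phase 1, waits at the barrier, then starts phase 2."""
    barrier = threading.Barrier(n_workers)
    log = []
    log_lock = threading.Lock()

    def worker(worker_id):
        with log_lock:
            log.append(("phase1", worker_id))
        barrier.wait()                 # nobody continues until all have arrived
        with log_lock:
            log.append(("phase2", worker_id))

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log
```

The order of workers within a phase varies from run to run, but every `phase1` entry always appears in the log before any `phase2` entry.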

Q 11. What are the challenges of multithreaded programming?

Multithreaded programming offers performance benefits but presents significant challenges:

- Race Conditions: Multiple threads accessing and modifying shared data simultaneously can lead to unpredictable and incorrect results. This is a major source of bugs.

- Deadlocks: A situation where two or more threads are blocked indefinitely, waiting for each other to release resources.

- Starvation: One or more threads are perpetually denied access to resources, even when they are available. This can happen due to scheduling issues or unfair resource allocation.

- Livelocks: Threads are continuously changing their state in response to each other but never making progress. Like a deadlock, but without complete blocking.

- Debugging Complexity: Identifying and fixing concurrency bugs can be significantly harder than debugging single-threaded code due to the nondeterministic nature of thread execution.

- Increased Development Complexity: Designing, implementing, and testing concurrent code requires specialized knowledge and careful attention to detail.

Addressing these challenges requires careful use of synchronization primitives, robust error handling, and rigorous testing strategies.

Q 12. Explain how asynchronous programming works.

Asynchronous programming allows a program to continue execution without waiting for a long-running operation to complete. Instead of blocking, it registers a callback function to be executed when the operation finishes. This allows the main thread to remain responsive and handle other tasks while waiting for I/O-bound operations, like network requests or file reads, to finish.

Imagine ordering food at a restaurant. In synchronous programming, you would sit at the table and wait for your food. In asynchronous programming, you’d give your order, receive a notification (callback), and do other things in the meantime. The restaurant prepares your food in the background.

This is particularly beneficial for I/O-bound operations, as it prevents the main thread from being blocked while waiting for external resources. This improves responsiveness and efficiency, allowing the program to handle multiple requests concurrently.
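A short sketch with Python's `asyncio` shows the effect. It uses coroutines and `await` rather than explicit callbacks, and `asyncio.sleep` stands in for a network call; the function names are assumptions made for this example.

```python
import asyncio
import time


async def fake_request(name, delay):
    """Stand-in for an I/O-bound call; awaiting yields control to the event loop."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def fetch_all():
    # All three waits overlap on a single thread.
    return await asyncio.gather(
        fake_request("a", 0.2),
        fake_request("b", 0.2),
        fake_request("c", 0.2),
    )


def timed_fetch_all():
    """Run the three requests and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = asyncio.run(fetch_all())
    return results, time.perf_counter() - start
```

Run one after another, the three requests would take at least 0.6 seconds; run this way, the total is close to the 0.2 seconds of a single request.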

Q 13. What are promises and futures?

Promises and Futures are powerful abstractions used in asynchronous programming to represent the eventual result of an asynchronous operation.

A Future represents the result of an asynchronous computation that may not yet be available. It provides methods to check if the result is ready and to retrieve it when available. Think of it as an IOU—a promise of a result in the future.

A Promise is an object that represents the state of an asynchronous operation and provides a mechanism for handling its eventual success or failure. It allows attaching callbacks for the ‘then’ (success) and ‘catch’ (failure) cases. The promise ‘resolves’ (succeeds) or ‘rejects’ (fails) when the asynchronous operation completes.

Many asynchronous programming frameworks use Promises and Futures to manage and handle asynchronous operations. They help to structure asynchronous code, making it more readable and maintainable.
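In Python, the `Future` objects returned by `concurrent.futures` executors show both ways of consuming a result: attaching a callback and asking for the value directly. The sketch below is one possible illustration; `slow_square` and `future_demo` are made-up names.

```python
from concurrent.futures import ThreadPoolExecutor


def slow_square(x):
    return x * x


def future_demo():
    """Submit one task, then observe its result via a callback and via result()."""
    notifications = []
    with ThreadPoolExecutor(max_workers=2) as pool:
        future = pool.submit(slow_square, 7)      # returns immediately with a Future
        # The callback runs once the future is complete.
        future.add_done_callback(lambda f: notifications.append(f.result()))
        value = future.result()                   # blocks until the result is ready
    # Leaving the with-block waits for the workers, so the callback has run by now.
    return value, notifications
```

`future_demo()` returns the computed value together with the list filled in by the callback.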

Q 14. What are callbacks and how are they used in asynchronous programming?

Callbacks are functions passed as arguments to other functions, to be executed upon the completion of an asynchronous operation. They are a fundamental part of asynchronous programming.

For example, when making a network request, you would provide a callback function that will be invoked when the request completes (successfully or with an error). This callback function typically handles processing the response or reporting the error.

    // Illustrative JavaScript example
    function fetchData(url, callback) {
      // Asynchronous network request...
      setTimeout(() => {
        const data = { message: 'Data fetched!' };
        callback(null, data); // callback(error, data) convention
      }, 1000);
    }

    fetchData('someUrl', (err, data) => {
      if (err) {
        console.error('Error:', err);
      } else {
        console.log('Data:', data);
      }
    });

Callbacks are simple yet powerful. They allow asynchronous operations to signal completion without blocking the main thread, and enable handling success and error scenarios separately. Modern asynchronous patterns like Promises often abstract away the explicit use of callbacks, offering a more structured approach to handling asynchronous outcomes.

Q 15. Explain the concept of asynchronous I/O.

Asynchronous I/O allows a program to continue executing other tasks while waiting for an I/O operation (like reading from a file or network request) to complete. Instead of blocking and waiting, the program registers a callback function or uses a mechanism like promises or async/await. Once the I/O operation finishes, the callback is executed or the promise is resolved, handling the result.

Imagine ordering food at a restaurant. Synchronous I/O is like standing there, staring at the chef until your food is ready. Asynchronous I/O is like giving your order and going to sit at a table, perhaps reading a book, and the waiter will let you know when your food is ready. This makes much better use of your time.

Example (Conceptual):

    // Synchronous
    let data = readFromFileSync('myfile.txt'); // Program blocks until file is read
    process(data);

    // Asynchronous (using promises)
    readFileAsync('myfile.txt')
      .then(data => process(data))
      .catch(error => handleError(error));
    // Program continues execution, and process(data) is called only when data is available.

Q 16. Compare and contrast threads and asynchronous operations.

Threads and asynchronous operations both deal with concurrency, but they differ significantly in their approach. Threads are independent units of execution that run within the same process, sharing memory and resources. Asynchronous operations, on the other hand, are non-blocking operations that allow a program to perform other tasks while waiting for the completion of a long-running operation. Think of it like this: threads are like multiple people working on different parts of the same project simultaneously in the same office, while asynchronous operations are like delegating tasks to different people and only checking on their progress when necessary.

- Threads: Multithreading involves creating and managing multiple threads, each with its own stack but sharing the same heap. They are suitable for CPU-bound tasks where you want to perform multiple calculations concurrently. However, excessive thread creation can lead to performance issues due to context switching overhead.

- Asynchronous Operations: Asynchronous operations are particularly beneficial for I/O-bound tasks where your program spends a lot of time waiting for external resources like network requests or disk I/O. They don’t necessarily use multiple threads; instead, they utilize events or callbacks to manage the flow of execution.

In short: Threads provide true parallelism (potentially running on multiple CPU cores simultaneously), while asynchronous operations optimize resource usage by overlapping I/O waits with other computations.

Q 17. How do you handle exceptions in multithreaded applications?

Handling exceptions in multithreaded applications requires careful consideration because exceptions can originate from any thread. Ignoring them can lead to crashes or data corruption. The best approach depends on the exception’s nature and the application’s design. You should generally aim to handle exceptions within the thread they occur in, but sometimes centralized exception handling is necessary.

- Try-Catch Blocks: Wrap potentially problematic code sections in each thread with try-catch blocks. This prevents the thread from terminating on an unhandled exception and allows for logging and controlled recovery.

- Exception Handling Mechanisms: To handle exceptions across threads, hand the exception to another thread explicitly, for example through a future or task object that rethrows it when the result is requested, or through a thread-safe queue. Thread-local storage can carry per-thread context (such as a request ID) for the error report, and a thread-safe logging mechanism records where the failure occurred.

- Global Exception Handlers (Careful!): While usually discouraged due to potential complications in identifying the source, some frameworks allow for global exception handlers that catch exceptions in all threads. Use these cautiously, ensuring sufficient logging to pinpoint the error’s origin.
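One way to centralize handling without losing track of which task failed is to let a future carry the exception back to the submitting thread. The sketch below uses Python's `concurrent.futures`; `parse_int` and `parse_all` are hypothetical names chosen for the example.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def parse_int(text):
    return int(text)       # raises ValueError for non-numeric input


def parse_all(texts):
    """Return (results, errors); a failure in one task does not stop the others."""
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=4) as pool:
        futures = {pool.submit(parse_int, t): t for t in texts}
        for future in as_completed(futures):
            text = futures[future]
            try:
                # result() re-raises, in this thread, whatever the worker raised.
                results[text] = future.result()
            except ValueError as exc:
                errors[text] = exc
    return results, errors
```

For example, `parse_all(["1", "2", "x"])` yields results for `"1"` and `"2"` and a `ValueError` recorded against `"x"`.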

Example (Conceptual – Java):

    try {
        // Code that might throw an exception
    } catch (Exception e) {
        // Log the exception and take appropriate action.
        // e.printStackTrace(); // Consider this ONLY for debugging purposes. Do not use in production without proper error handling.
        // Handle the exception gracefully instead of throwing a new exception here
    }

Q 18. How do you debug multithreaded applications?

Debugging multithreaded applications can be significantly more challenging than single-threaded applications due to non-deterministic behavior (race conditions) and timing dependencies. Here’s a multi-pronged strategy.

- Logging: Thorough logging in each thread is crucial. Include thread IDs, timestamps, and relevant context in log messages. This aids in reconstructing the execution flow and identifying the point of failure.

- Debuggers with Thread Support: Utilize debuggers that offer support for multithreaded debugging. These debuggers allow you to step through each thread independently, inspect variables, set breakpoints, and observe the thread state (running, blocked, waiting).

- Reproducible Scenarios: Try to reproduce the error consistently. The non-deterministic nature of concurrency makes this difficult, but if you can isolate a series of steps that reliably trigger the bug, debugging becomes much easier.

- Tools like Thread Analyzers and Profilers: Specialized tools can analyze thread activity, identify deadlocks, and measure performance bottlenecks. These offer valuable insights not easily obtainable through debugging alone.

- Race Condition Detection Tools: Some tools focus specifically on detecting race conditions, which are a common source of multithreading bugs. These usually involve instrumentation and careful analysis of thread interleavings.

Example (Conceptual): Suppose you’re seeing intermittent crashes. Adding detailed logging might reveal that the crash consistently occurs after Thread A accesses a shared resource before Thread B has finished updating it, pinpointing a race condition.

Q 19. What are thread pools and why are they useful?

A thread pool is a collection of worker threads that are pre-created and managed by a pool manager. Instead of creating a new thread for each task, you submit tasks to the thread pool, and the pool manager assigns the tasks to available worker threads. This improves efficiency by minimizing the overhead of creating and destroying threads. Think of it like a team of workers readily available to handle various tasks instead of hiring and firing people for each new job.

Benefits:

- Resource Management: Prevents excessive thread creation, conserving system resources and reducing context switching overhead.

- Performance Improvement: Faster response time, as threads are readily available. Tasks are processed more efficiently.

- Simplified Management: Centralized management of threads; no need to manually manage thread life cycles for each task.

Example (Conceptual – Python):

    import concurrent.futures

    # task and args are placeholders for your own function and its inputs
    with concurrent.futures.ThreadPoolExecutor(max_workers=5) as executor:
        futures = [executor.submit(task, arg) for arg in args]
        results = [future.result() for future in futures]

In this example, a thread pool with a maximum of 5 worker threads processes a list of tasks (represented by task and args).

Q 20. How do you manage resources in a multithreaded environment?

Resource management in multithreaded environments is critical to prevent deadlocks, race conditions, and other concurrency-related issues. Key strategies include:

- Synchronization Mechanisms: Using mutexes, semaphores, condition variables, or other synchronization primitives to control access to shared resources. This limits how many threads can touch a resource at once (exactly one, in the case of a mutex), preventing data corruption and race conditions.

- Thread-Safe Data Structures: Employing data structures specifically designed for multithreaded access (e.g., concurrent hash maps, concurrent queues). These structures handle synchronization internally, simplifying development and reducing the risk of errors.

- Resource Acquisition Is Initialization (RAII): In languages like C++, using RAII to ensure that resources are automatically released when they are no longer needed, even if exceptions occur. This prevents resource leaks.

- Deadlock Prevention: Designing your application carefully to avoid deadlocks by avoiding circular dependencies on resources. Proper ordering of resource acquisition can prevent deadlocks.

- Resource Pooling: Managing resources in a pool, as discussed with thread pools, enables better resource utilization and control.

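Example (Conceptual): A shared database connection should be protected by a mutex so that only one thread uses it at a time, preventing interleaved queries and inconsistent data. When many threads need database access, a pool of connections is usually the more scalable choice.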
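Resource pooling can be sketched with a thread-safe queue and a context manager. The `ResourcePool` class below is an assumption for illustration, not a real library API; the strings stand in for connections or other expensive objects.

```python
import queue
from contextlib import contextmanager


class ResourcePool:
    """Hands out pre-created resources to one borrower at a time each."""

    def __init__(self, resources):
        self._available = queue.Queue()
        for resource in resources:
            self._available.put(resource)

    @contextmanager
    def borrow(self, timeout=None):
        # Blocks while the pool is empty; raises queue.Empty if the timeout expires.
        resource = self._available.get(timeout=timeout)
        try:
            yield resource
        finally:
            # Runs even when the borrower raises, so nothing leaks from the pool.
            self._available.put(resource)
```

A thread would write `with pool.borrow() as conn:` and use `conn` inside the block; the resource goes back to the pool automatically, in the same spirit as RAII.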

Q 21. Describe different approaches to thread scheduling.

Thread scheduling refers to the process of deciding which thread to execute at any given time on a CPU core. Different operating systems and runtime environments use various scheduling algorithms.

- Preemptive Scheduling: The operating system can interrupt a running thread and switch to another thread at any time, typically based on time slices or priority levels. This is common in most modern operating systems. A thread can be preempted even if it’s not finished executing.

- Cooperative Scheduling: Threads voluntarily relinquish control of the CPU; they must explicitly yield control, often through yield calls. This is simpler to implement but less robust, because a long-running thread that never yields can hog the CPU and prevent other threads from running.

- Priority-Based Scheduling: Threads are assigned priorities, and the scheduler gives preference to higher-priority threads. This is useful for tasks with different importance levels or real-time constraints.

- Round-Robin Scheduling: Threads are executed in a circular fashion, each getting a time slice. This approach aims for fairness, ensuring that all threads get a chance to run.

- Real-Time Scheduling: Used in real-time systems where responsiveness is critical. Threads have deadlines that must be met, and the scheduler prioritizes threads based on their deadlines.

The specific scheduling algorithm used depends on the operating system and the runtime environment. Developers typically have limited direct control over the finer details of thread scheduling, although priority settings can sometimes influence it.
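Real schedulers live in the operating system, but the cooperative and round-robin ideas can be modeled in a few lines. This toy sketch uses Python generators as tasks; every `yield` is a voluntary hand-back of control, and the names `task` and `run_round_robin` are made up for the example.

```python
from collections import deque


def task(name, steps, log):
    """A cooperative task: does one unit of work, then yields to the scheduler."""
    for i in range(steps):
        log.append(f"{name}{i}")
        yield


def run_round_robin(tasks):
    """Give each ready task one turn in circular order until all have finished."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)              # run the task until its next yield
            ready.append(current)      # still has work left: back of the queue
        except StopIteration:
            pass                       # task finished: drop it
```

With `log = []`, calling `run_round_robin([task("A", 2, log), task("B", 3, log)])` leaves `log` as `["A0", "B0", "A1", "B1", "B2"]`. A task that never yields would block every other task, which is exactly the weakness of cooperative scheduling.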

Q 22. Explain the concept of context switching.

Context switching is the process of the operating system saving the state of a currently running process or thread and loading the state of another process or thread. Think of it like a chef juggling multiple dishes on a stove. The chef can only focus on one dish at a time, but they quickly switch between them to prevent one from burning. The chef’s focus is analogous to the CPU’s processing power, and the dishes represent different threads. Each switch incurs overhead—the time it takes to save and restore the state—which can impact performance if it occurs too frequently. The operating system’s scheduler manages this context switching, deciding which thread to run next based on factors like priority and resource availability.

For example, in a web server application, one thread might be handling a user request while another thread is waiting for data from a database. The OS scheduler might switch between these threads multiple times per second, giving each a small slice of CPU time. This allows seemingly simultaneous execution of multiple tasks.

Q 23. Discuss the impact of multithreading on performance.

Multithreading’s impact on performance is complex and depends on several factors. Ideally, it leads to improved performance by utilizing multiple CPU cores or exploiting parallelism in the application’s tasks. Imagine having multiple assembly lines in a factory; each line (thread) processes parts independently, increasing overall production. However, poorly designed multithreaded applications can experience reduced performance due to increased overhead from context switching, contention for shared resources (like locks), or excessive thread creation and management.

For instance, a CPU-bound application (e.g., complex calculations) might not see significant performance improvement with multithreading on a system with only one core. The threads would simply contend for the single core, and context switching overhead could slow things down. In contrast, an I/O-bound application (e.g., web server) benefits greatly, as threads can wait for network or disk operations without blocking other threads.

Proper synchronization mechanisms are crucial for avoiding performance bottlenecks. Using efficient locking mechanisms and minimizing shared mutable state are key to achieving optimal performance.

Q 24. How do you measure the performance of a multithreaded application?

Measuring the performance of a multithreaded application requires a multifaceted approach. We can’t rely on a single metric; instead, a combination of tools and techniques is necessary. Key performance indicators include:

- Throughput: The number of tasks completed per unit of time (e.g., requests processed per second).

- Latency: The time it takes to complete a single task (e.g., response time of a web request).

- Resource Utilization: CPU usage, memory usage, disk I/O, and network I/O. Tools like top (Linux) or Task Manager (Windows) can help track this.

- Scalability: How performance scales with increased workload or number of threads.

- Concurrency Metrics: Number of active threads, thread wait times, lock contention. Profiling tools like JProfiler (Java) or Visual Studio Profiler (.NET) can provide detailed insights.

Profiling tools are indispensable; they help pinpoint bottlenecks and identify areas for optimization. Benchmarking different multithreading strategies under controlled, repeatable workloads allows for quantitative comparison.
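Throughput and latency can be collected with nothing more than a timer. The helper below is a rough sketch (the name `measure` and its return shape are assumptions); it times each task inside the worker, so the latencies exclude time spent waiting in the pool's queue.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def measure(task, inputs, max_workers):
    """Return (throughput in tasks per second, per-task latencies in seconds)."""

    def timed(arg):
        start = time.perf_counter()
        task(arg)
        return time.perf_counter() - start     # execution time of this one task

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        latencies = list(pool.map(timed, inputs))
    wall_time = time.perf_counter() - wall_start
    return len(latencies) / wall_time, latencies
```

Running `measure` with the same workload and different `max_workers` values gives a first impression of how throughput scales with the number of threads.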

Q 25. What are some common concurrency-related bugs?

Concurrency bugs are notoriously difficult to debug because they are often non-deterministic and depend on timing. Some common concurrency-related bugs include:

- Race conditions: Multiple threads accessing and modifying shared resources simultaneously, leading to unpredictable results. Example: Two threads incrementing a shared counter without proper synchronization.

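- Deadlocks: Two or more threads are blocked indefinitely, waiting for each other to release resources. Example: Thread A holds lock 1 and waits for lock 2, while thread B holds lock 2 and waits for lock 1. Imagine two people meeting in a narrow doorway, each waiting for the other to step back.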

- Starvation: A thread is unable to acquire necessary resources to proceed because other threads are constantly getting them first.

- Livelocks: Threads are continually changing state in response to each other, but none make progress. Think of two people constantly trying to yield to each other, never progressing.

- Data corruption: Inconsistent or incorrect data due to race conditions or improper synchronization.

Robust testing, including stress testing and concurrency testing frameworks, and careful synchronization are essential to prevent these bugs.
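One common way to rule out the deadlock described above is to acquire locks in a consistent global order. The following is a minimal sketch under that assumption; the `Account` class and `transfer` function are hypothetical, and ordering by `account_id` is just one possible convention.

```python
import threading


class Account:
    """Hypothetical account guarded by its own lock."""

    def __init__(self, account_id: int, balance: int) -> None:
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src: Account, dst: Account, amount: int) -> None:
    """Move money between two accounts without risking a deadlock."""
    if src is dst:
        return  # nothing to do, and locking the same Lock twice would hang
    # Always lock the account with the smaller id first, regardless of
    # transfer direction, so two opposite transfers cannot wait on each other.
    first, second = sorted((src, dst), key=lambda acc: acc.account_id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount


def worker(src: Account, dst: Account) -> None:
    for _ in range(1000):
        transfer(src, dst, 1)


a = Account(1, 1000)
b = Account(2, 1000)
threads = [
    threading.Thread(target=worker, args=(a, b)),
    threading.Thread(target=worker, args=(b, a)),  # opposite direction
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(a.balance, b.balance)  # prints "1000 1000"
```

If each thread instead locked its own source account first, the two opposite transfers could each hold one lock and wait forever for the other.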

Q 26. Explain the concept of thread starvation.

Thread starvation occurs when a thread is perpetually prevented from accessing necessary resources or being scheduled for execution. This often happens when higher-priority threads monopolize resources or the scheduler gives preferential treatment to other threads. It’s like a person always being cut in line at a restaurant—they never get served. The starved thread may never complete its tasks, leading to application performance issues or correctness problems.

For example, a low-priority thread might repeatedly attempt to acquire a lock that is continuously held by a high-priority thread. The low-priority thread might never get a chance to execute its code, resulting in starvation. Proper thread scheduling algorithms, fair resource allocation mechanisms, and careful prioritization help mitigate this.
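Fair resource allocation can be sketched with a ticket lock, which serves threads strictly in arrival order so that no waiter is skipped indefinitely. This is an illustrative implementation built on `threading.Condition`, not a standard library class; the name `FairLock` is an assumption made for this example.

```python
import threading


class FairLock:
    """Ticket lock: threads acquire the lock in the order they asked for it."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._next_ticket = 0   # ticket handed to the next arriving thread
        self._now_serving = 0   # ticket currently allowed to hold the lock

    def acquire(self) -> None:
        with self._cond:
            my_ticket = self._next_ticket
            self._next_ticket += 1
            # Wait until every earlier ticket has been served.
            while self._now_serving != my_ticket:
                self._cond.wait()

    def release(self) -> None:
        with self._cond:
            self._now_serving += 1
            self._cond.notify_all()  # wake waiters; only the next ticket proceeds

    def __enter__(self) -> "FairLock":
        self.acquire()
        return self

    def __exit__(self, *exc_info) -> None:
        self.release()


fair_lock = FairLock()
served = []  # records which thread entered the critical section each time


def worker(name: str) -> None:
    for _ in range(200):
        with fair_lock:
            served.append(name)


threads = [threading.Thread(target=worker, args=(f"t{i}",)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(len(served))  # prints 800
```

The trade-off is throughput: waking every waiter on each release is simple but costs more than an unfair lock, so a design like this is mainly worthwhile where starvation is a real risk.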

Q 27. How would you implement a thread-safe counter?

Implementing a thread-safe counter requires using synchronization primitives to ensure that only one thread can modify the counter at a time. A simple approach uses a Mutex (mutual exclusion) lock:

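    import threading


    class ThreadSafeCounter:
        """Counter whose reads and writes are serialized by a mutex."""

        def __init__(self):
            self.count = 0
            self.lock = threading.Lock()  # Use a mutex lock

        def increment(self):
            # count += 1 is a read-modify-write, so it must run under the lock
            with self.lock:
                self.count += 1

        def get_count(self):
            with self.lock:
                return self.count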

The with self.lock: statement ensures that the bodies of increment and get_count execute under mutual exclusion, so the read-modify-write in self.count += 1 cannot interleave with another thread's update. Only one thread can hold the lock at any time; other threads attempting to acquire it block until it is released. Atomic types provided by some languages (like java.util.concurrent.atomic.AtomicInteger in Java) can also be used for simpler, often more efficient implementations.
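A quick way to check such a counter is to increment it from several threads and compare the final value with the expected total. The sketch below repeats the class so that it runs on its own; the `hammer` helper and the thread and iteration counts are arbitrary choices for illustration.

```python
import threading


class ThreadSafeCounter:
    """Same counter as above, repeated so this example is self-contained."""

    def __init__(self) -> None:
        self.count = 0
        self.lock = threading.Lock()

    def increment(self) -> None:
        with self.lock:
            self.count += 1

    def get_count(self) -> int:
        with self.lock:
            return self.count


def hammer(counter: ThreadSafeCounter, n_increments: int) -> None:
    """Increment the shared counter n_increments times."""
    for _ in range(n_increments):
        counter.increment()


counter = ThreadSafeCounter()
threads = [
    threading.Thread(target=hammer, args=(counter, 10_000)) for _ in range(8)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter.get_count())  # prints 80000
```

A passing run does not prove the absence of races in general, but a mismatch between the final count and the expected total is a reliable sign that synchronization is missing.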

Q 28. Describe your experience with different concurrency libraries or frameworks (e.g., Java Concurrency Utilities, asyncio, etc.)

I have extensive experience with various concurrency libraries and frameworks. In Java, I’ve worked extensively with the Java Concurrency Utilities (the java.util.concurrent package), leveraging classes like ExecutorService for managing thread pools, CountDownLatch for coordinating threads, and ConcurrentHashMap for thread-safe data structures. I understand the nuances of ReentrantLock versus synchronized blocks and when to prefer one over the other. I’ve used these tools to build high-throughput, scalable applications handling millions of concurrent requests.

My Python experience includes using asyncio for asynchronous programming, building highly responsive applications capable of handling a large number of concurrent connections efficiently. I’m comfortable using coroutines and async/await syntax, understanding the importance of avoiding blocking operations in an asynchronous context. I understand the differences and advantages of asynchronous vs. multithreaded approaches and can select the appropriate paradigm depending on the application’s nature.
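To make the asyncio points concrete, here is a small sketch of running coroutines concurrently while keeping a blocking call off the event loop. The `fetch` and `blocking_call` functions are hypothetical, and `asyncio.sleep` stands in for real network I/O. `asyncio.to_thread` requires Python 3.9 or later.

```python
import asyncio
import time


async def fetch(name: str, delay: float) -> str:
    """Hypothetical non-blocking call; asyncio.sleep stands in for network I/O."""
    await asyncio.sleep(delay)
    return f"{name} done"


def blocking_call(delay: float) -> str:
    """Hypothetical legacy function that blocks the calling thread."""
    time.sleep(delay)
    return "blocking done"


async def main() -> list:
    # gather runs the awaitables concurrently and returns results in the
    # order they were passed in. to_thread moves the blocking call to a
    # worker thread so the event loop stays responsive.
    return await asyncio.gather(
        fetch("a", 0.1),
        fetch("b", 0.1),
        asyncio.to_thread(blocking_call, 0.1),
    )


results = asyncio.run(main())
print(results)  # prints ['a done', 'b done', 'blocking done']
```

Calling `blocking_call` directly inside a coroutine would stall every other task on the loop for the duration of the call, which is the main pitfall to be able to explain in an interview.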

In C++, I’ve worked with standard thread libraries, using mutexes, condition variables, and semaphores for synchronization, understanding the importance of proper lock management and avoiding deadlocks.

Key Topics to Learn for Multi-Threaded and Asynchronous Processing Interviews

- Fundamentals of Concurrency: Understand the core differences between multi-threading and asynchronous programming, including their respective advantages and disadvantages in different scenarios.

- Thread Management: Explore thread creation, lifecycle management (start, join, sleep), and synchronization mechanisms like mutexes, semaphores, and condition variables. Practice implementing these in your chosen language.

- Deadlocks and Race Conditions: Learn to identify, understand, and prevent these common concurrency issues. Practice debugging code with these problems.

- Asynchronous Programming Models: Become familiar with different asynchronous programming patterns, such as callbacks, promises, and async/await. Understand how they improve responsiveness and efficiency.

- Practical Applications: Explore real-world examples of multi-threaded and asynchronous processing, such as web servers, game development, and data processing pipelines. Be ready to discuss how these techniques are applied in specific contexts.

- Performance Considerations: Learn how to profile and optimize multi-threaded and asynchronous code for maximum performance. Understand the impact of factors like context switching and I/O-bound vs. CPU-bound operations.

- Testing Concurrent Code: Master techniques for effectively testing concurrent applications, including the challenges involved and strategies for ensuring correctness and reliability.

- Choosing the Right Approach: Be prepared to discuss when to choose multi-threading versus asynchronous programming based on the specific requirements of a problem.

Next Steps

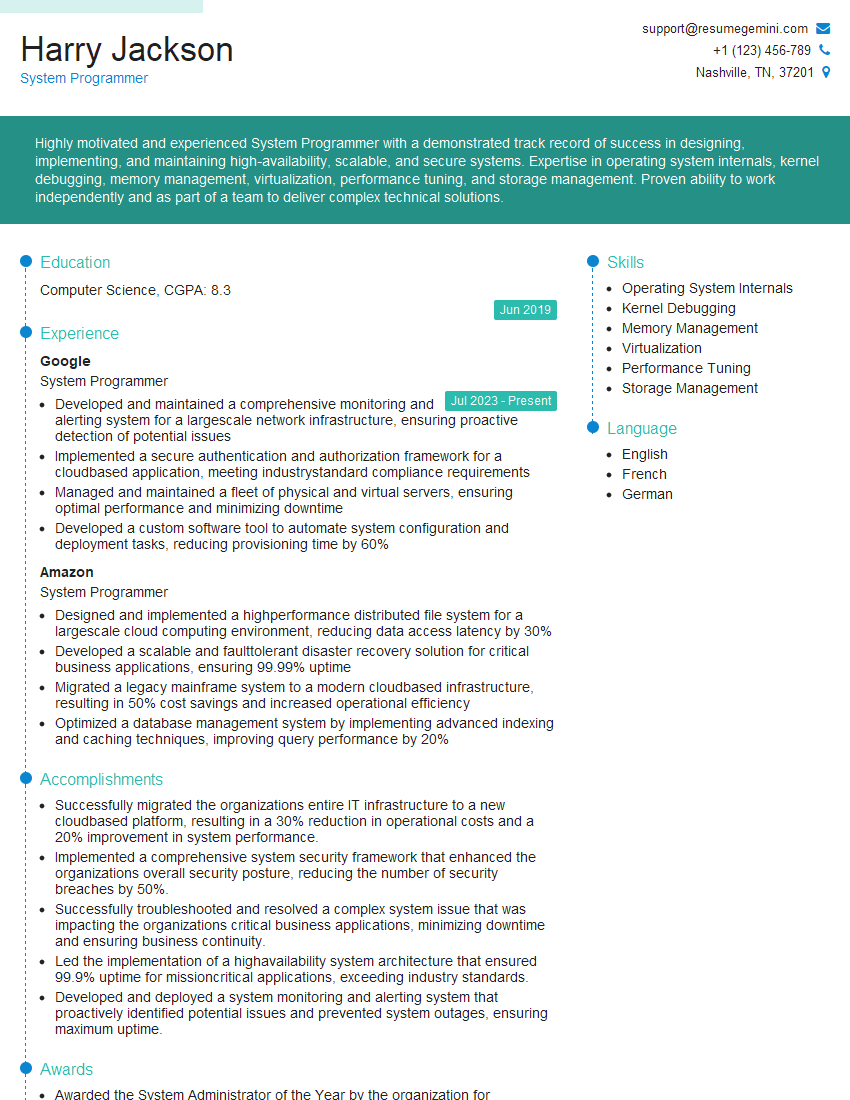

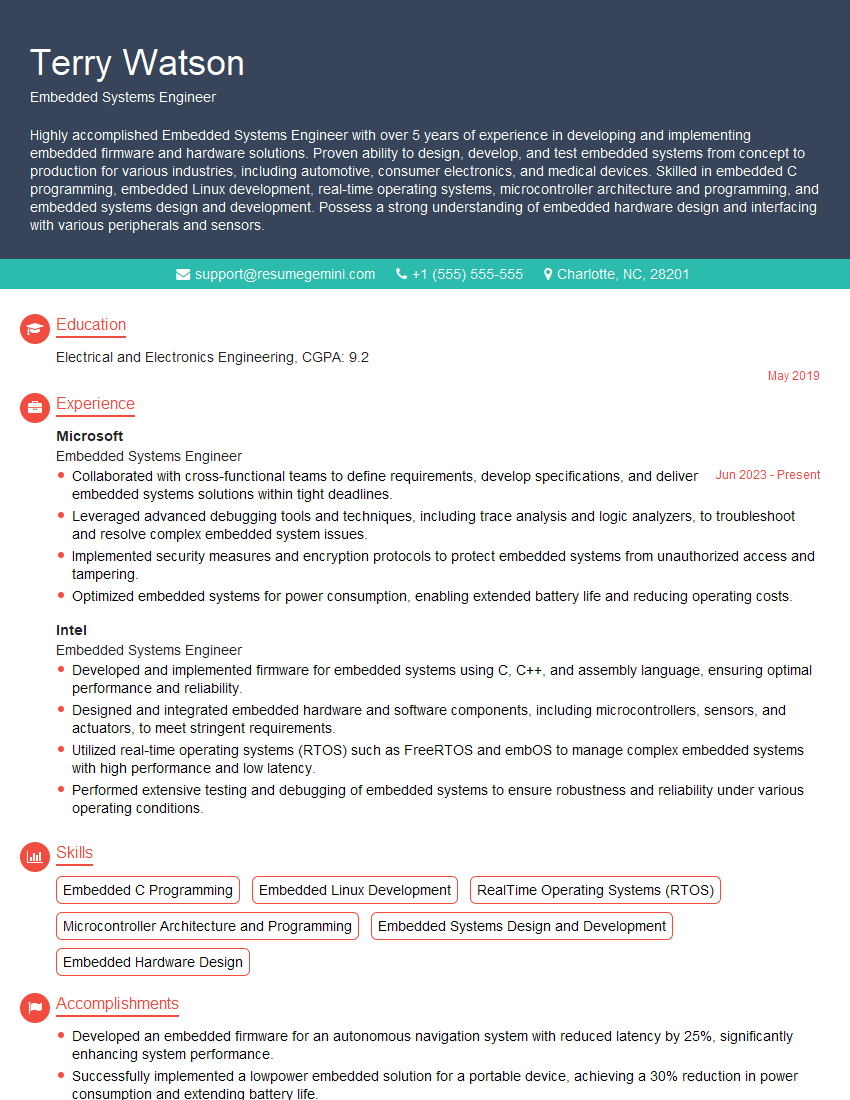

Mastering multi-threaded and asynchronous processing is crucial for advancing your career in software development. These skills are highly sought after and demonstrate a deep understanding of efficient and scalable system design. To maximize your job prospects, crafting an ATS-friendly resume is essential. ResumeGemini can significantly enhance your resume-building experience, helping you create a compelling document that highlights your expertise. ResumeGemini provides examples of resumes tailored to Multi-Threaded and Asynchronous Processing, showcasing how to effectively present these skills to potential employers. Invest in building a strong resume – it’s your first impression!
