The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Network Optimization and Performance Analysis interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Network Optimization and Performance Analysis Interview

Q 1. Explain TCP/IP model and its layers.

The TCP/IP model is a conceptual framework for understanding how data is transmitted across networks. Like the OSI model it is layered, but it uses four broader layers instead of seven and grew out of the protocols actually in use rather than out of a formal reference design. It’s crucial for network engineers because it provides a blueprint for how data moves from your computer to any other device on the internet.

- Application Layer: This is where applications like web browsers (HTTP), email clients (SMTP, POP3, IMAP), and file transfer programs (FTP) reside. It’s the layer that interacts directly with the user. Think of it as the ‘what’ of the communication – what application is being used.

- Transport Layer: This layer handles reliable and unreliable data transmission. TCP (Transmission Control Protocol) provides reliable, ordered delivery (like ordering a pizza – you expect it to arrive whole and in one piece). UDP (User Datagram Protocol) offers speed but not reliability (like sending a postcard – you hope it arrives, but there’s no guarantee).

- Internet Layer: This is where IP (Internet Protocol) addresses come into play. It’s responsible for addressing and routing packets across networks, ensuring data reaches the correct destination. Imagine this as the ‘where’ of communication – the delivery address.

- Network Access Layer: This layer deals with the physical transmission of data over the network medium (Ethernet, Wi-Fi, etc.). It’s the physical hardware and software that gets the data onto the wire. Think of this as the ‘how’ – the method of delivery (truck, plane, etc.).

Example: When you browse a website, your browser (Application Layer) uses HTTP to request a webpage. TCP (Transport Layer) ensures reliable delivery, IP (Internet Layer) routes the request across the internet, and finally, the Network Access Layer sends the data over your Wi-Fi or Ethernet cable.
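The layering in this example can be sketched as encapsulation: each layer wraps the data handed down from the layer above. The snippet below is a toy illustration only. The bracketed text tags stand in for real binary headers, and the function names are hypothetical.

```python
# Toy model of encapsulation. Real headers are binary structures, not text tags.
LOWER_LAYERS = ["TCP", "IP", "Ethernet"]  # transport -> internet -> network access

def encapsulate(app_data: str) -> str:
    """Wrap application data in one pseudo-header per lower layer."""
    frame = app_data
    for layer in LOWER_LAYERS:
        frame = f"[{layer}]{frame}"
    return frame

def decapsulate(frame: str) -> str:
    """Strip the pseudo-headers in reverse order, as the receiver would."""
    for layer in reversed(LOWER_LAYERS):
        prefix = f"[{layer}]"
        if not frame.startswith(prefix):
            raise ValueError(f"expected {layer} header")
        frame = frame[len(prefix):]
    return frame

request = "GET /index.html HTTP/1.1"   # application-layer data (HTTP)
frame = encapsulate(request)
print(frame)               # [Ethernet][IP][TCP]GET /index.html HTTP/1.1
print(decapsulate(frame))  # GET /index.html HTTP/1.1
```

The outermost header belongs to the lowest layer, which is why the receiver removes the Ethernet tag first and the TCP tag last.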

Q 2. Describe different network topologies and their advantages/disadvantages.

Network topologies describe the physical or logical layout of a network. Choosing the right topology impacts performance, scalability, and cost. Here are a few common ones:

- Bus Topology: All devices connect to a single cable. Simple and inexpensive, but a single cable failure brings down the entire network. Think of it like a single hallway in a building.

- Star Topology: All devices connect to a central hub or switch. Failure of one device doesn’t affect the others, and it’s easy to manage and expand. Most home and office networks use this; it’s like a building with a central reception area.

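- Ring Topology: Devices are connected in a closed loop. In a basic ring, data travels in one direction from device to device. Performance is predictable under load, but a single break can disrupt the whole loop unless a second ring is added, and adding or removing devices is disruptive. Imagine a circular track.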

- Mesh Topology: Devices have multiple connections to other devices. Highly redundant and fault-tolerant but complex and expensive. Think of a city’s road network.

- Tree Topology: A hierarchical structure combining elements of bus and star topologies. Often used in larger networks.

Advantages and Disadvantages: Each topology has its strengths and weaknesses. Star topology is popular for its ease of management and fault tolerance, while mesh topology offers superior redundancy but higher complexity. The best choice depends on the specific needs and budget of the network.
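The cost difference between topologies shows up quickly when you count links. The sketch below is a rough comparison under simplifying assumptions: one shared cable for a bus, one link per device to the central switch for a star, and a full mesh where every pair of devices is directly connected. The function name is hypothetical.

```python
def links_required(topology: str, devices: int) -> int:
    """Physical links needed to connect `devices` end devices."""
    if topology == "bus":
        return 1                                # one shared cable
    if topology == "star":
        return devices                          # one link per device to the central switch
    if topology == "ring":
        return devices                          # each device links to the next, closing the loop
    if topology == "full_mesh":
        return devices * (devices - 1) // 2     # every pair directly connected
    raise ValueError(f"unknown topology: {topology}")

for name in ("bus", "star", "ring", "full_mesh"):
    print(name, links_required(name, 10))
# bus 1, star 10, ring 10, full_mesh 45
```

Mesh link count grows quadratically with the number of devices, which is why full meshes are usually reserved for a small core of critical nodes.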

Q 3. What are the common network performance metrics you monitor?

Monitoring network performance is critical for identifying and resolving issues. Key metrics include:

- Latency: The delay in data transmission. High latency can lead to slowdowns and poor user experience. Measured in milliseconds.

- Throughput: The amount of data transmitted per unit of time. Measured in bits per second (bps), kilobits per second (kbps), megabits per second (Mbps), etc.

- Packet Loss: The percentage of data packets that are lost during transmission. High packet loss can indicate network congestion or faulty hardware.

- Jitter: Variation in latency. High jitter can cause issues with real-time applications like video conferencing.

- CPU and Memory Utilization: On network devices (routers, switches), high utilization indicates potential bottlenecks.

- Error Rate: The number of errors detected during transmission. High error rates suggest problems with cabling or network hardware.

Example: In a VoIP system, high latency and jitter will result in choppy calls and poor audio quality. High packet loss would lead to dropped calls.
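Several of these metrics can be derived from a simple series of probe results. The following is a minimal sketch, assuming round-trip times collected by something like repeated pings, with `None` marking a probe that got no reply. Jitter is computed here as the mean absolute difference between consecutive replies, which is one simple definition among several. The sample values are made up.

```python
from statistics import mean

def summarize(rtts_ms):
    """rtts_ms: one entry per probe; None marks a probe with no reply."""
    replies = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(replies)) / len(rtts_ms)
    if not replies:
        return {"avg_latency_ms": None, "jitter_ms": None, "loss_pct": loss_pct}
    # Jitter here = mean absolute difference between consecutive replies.
    diffs = [abs(b - a) for a, b in zip(replies, replies[1:])]
    return {
        "avg_latency_ms": mean(replies),
        "jitter_ms": mean(diffs) if diffs else 0.0,
        "loss_pct": loss_pct,
    }

samples = [20.0, 24.0, None, 20.0, 24.0]   # illustrative values only
print(summarize(samples))
# {'avg_latency_ms': 22.0, 'jitter_ms': 4.0, 'loss_pct': 20.0}
```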

Q 4. How do you troubleshoot network latency issues?

Troubleshooting latency involves a systematic approach:

- Identify the affected users/applications: Pinpoint which users or applications are experiencing high latency.

- Check the local network: Look for issues like faulty cables, overloaded switches, or software conflicts on the affected devices.

- Use network monitoring tools: Tools like ping, traceroute (tracert on Windows), and Wireshark can help identify bottlenecks and pinpoint the source of latency.

Example: `ping google.com` measures round-trip latency to Google’s servers.

- Examine network devices: Check CPU and memory utilization on routers, switches, and firewalls. High utilization suggests a bottleneck.

- Analyze routing tables: Ensure that data is being routed efficiently.

- Check for congestion: Look for evidence of high traffic volumes that might be causing congestion.

- Consider external factors: ISP outages, internet backbone congestion, or DNS problems can also impact latency.

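Example: If `traceroute` shows latency jumping at a specific hop and staying high for every hop after it, the problem most likely lies on the link between that hop and the previous one. This points you to the segment of the network requiring investigation. A spike at a single hop that does not carry over to later hops usually just means that router gives low priority to answering probes.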
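The hop-by-hop reasoning can be sketched in a few lines. This example assumes you have already extracted one round-trip time per hop from traceroute output. The parsing step is omitted, and the values and function name are illustrative.

```python
def worst_hop(hop_rtts_ms):
    """Return (hop_number, increase_ms) for the largest RTT jump between hops.

    hop_rtts_ms: RTT per hop in path order, starting at hop 1.
    """
    worst = (1, hop_rtts_ms[0])
    for i in range(1, len(hop_rtts_ms)):
        increase = hop_rtts_ms[i] - hop_rtts_ms[i - 1]
        if increase > worst[1]:
            worst = (i + 1, increase)
    return worst

rtts = [1.2, 2.0, 2.5, 48.0, 49.1, 50.3]   # illustrative values only
hop, increase = worst_hop(rtts)
print(f"largest jump at hop {hop}: +{increase:.1f} ms")   # hop 4: +45.5 ms
```

Here the latency stays high after hop 4, so the segment between hops 3 and 4 is the one to investigate first.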

Q 5. Explain the concept of Quality of Service (QoS) and its implementation.

Quality of Service (QoS) is a set of technologies that prioritizes certain types of network traffic over others. This is crucial for applications requiring low latency and high reliability, such as VoIP, video conferencing, and online gaming. It’s like having express lanes on a highway.

Implementation: QoS is implemented using various techniques:

- Traffic Classification: Identifying different types of traffic based on protocols, ports, or applications.

- Traffic Policing and Shaping: Limiting the bandwidth or rate of traffic to prevent congestion.

- Traffic Prioritization: Assigning different priority levels to different types of traffic, ensuring high-priority traffic gets preferential treatment.

- Queue Management: Managing queues of packets to ensure fair access and prevent starvation of low-priority traffic.

Example: In a network with VoIP and web traffic, QoS can prioritize VoIP traffic to ensure clear calls even when the network is heavily loaded. This prevents web browsing from negatively impacting voice communication.
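Classification and prioritization can be illustrated with a strict-priority scheduler. This is a simplified sketch, not how any particular device implements QoS. The traffic classes and their priority values are assumptions chosen for the example.

```python
import heapq
from itertools import count

# Hypothetical traffic classes; a lower number is served first.
PRIORITY = {"voip": 0, "video": 1, "web": 2}

class PriorityScheduler:
    def __init__(self):
        self._heap = []
        self._seq = count()   # tie-breaker keeps FIFO order within a class

    def enqueue(self, traffic_class, packet):
        heapq.heappush(self._heap, (PRIORITY[traffic_class], next(self._seq), packet))

    def dequeue(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)

sched = PriorityScheduler()
for cls, pkt in [("web", "w1"), ("voip", "v1"), ("web", "w2"), ("voip", "v2")]:
    sched.enqueue(cls, pkt)

order = [sched.dequeue() for _ in range(len(sched))]
print(order)   # ['v1', 'v2', 'w1', 'w2']
```

Strict priority can starve low-priority traffic under sustained load, which is why the queue management techniques listed above often use weighted scheduling instead.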

Q 6. Describe different network congestion control mechanisms.

Network congestion control mechanisms aim to prevent networks from becoming overloaded. They work by limiting the rate at which data is sent, preventing data loss and ensuring fair sharing of bandwidth. Key mechanisms include:

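- Sliding Window: TCP uses a sliding window to limit how much unacknowledged data can be in flight. The receiver’s advertised window prevents overwhelming the receiver (flow control), and the sender’s congestion window applies the same idea to protect the network.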

- Slow Start: TCP’s slow start algorithm gradually increases the transmission rate, avoiding sudden bursts of data that might overwhelm the network.

- Congestion Avoidance: Algorithms like Additive Increase/Multiplicative Decrease (AIMD) dynamically adjust the transmission rate based on network conditions.

- Random Early Detection (RED): Routers track the average queue length and begin dropping (or, with ECN, marking) packets at random before the queue is full. This prompts TCP senders to slow down before congestion becomes severe.

Example: Imagine a highway with many cars. Congestion control is like traffic lights and speed limits – they prevent the highway from becoming completely jammed. If congestion is detected (many cars stopped), the speed limit is reduced to prevent further congestion.
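Slow start and AIMD are easier to see as a sequence of window sizes. The simulation below is a deliberately simplified model, counting in whole segments per round trip and ignoring timeouts and fast recovery. The initial threshold and the round in which loss occurs are arbitrary assumptions.

```python
def simulate_cwnd(rounds, ssthresh, loss_rounds):
    """Return the congestion window (in segments) at the start of each round.

    Simplified model: double below ssthresh (slow start), add 1 per round
    at or above it (additive increase), halve on loss (multiplicative decrease).
    """
    cwnd = 1
    history = []
    for rnd in range(rounds):
        history.append(cwnd)
        if rnd in loss_rounds:
            ssthresh = max(cwnd // 2, 1)
            cwnd = ssthresh
        elif cwnd < ssthresh:
            cwnd = min(cwnd * 2, ssthresh)
        else:
            cwnd += 1
    return history

print(simulate_cwnd(rounds=10, ssthresh=8, loss_rounds={6}))
# [1, 2, 4, 8, 9, 10, 11, 5, 6, 7]
```

The window doubles until it reaches the threshold, grows by one segment per round after that, and is cut roughly in half when loss is detected in round 6.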

Q 7. How do you identify network bottlenecks?

Identifying network bottlenecks requires a combination of monitoring and analysis. Here’s a systematic approach:

- Monitor key performance indicators (KPIs): Track metrics like latency, throughput, packet loss, and CPU/memory utilization on network devices.

- Use network monitoring tools: Tools like ping, traceroute, and Wireshark help pinpoint slowdowns.

- Analyze network traffic patterns: Identify periods of high traffic and the applications or users responsible.

- Examine device utilization: Check CPU and memory utilization on routers, switches, and servers. High utilization suggests a potential bottleneck.

- Look for asymmetric performance: If upload speeds fall well below what the link is provisioned for while download speeds look normal, it might indicate a bottleneck on the upload path. Keep in mind that many access links are asymmetric by design.

- Investigate capacity limits: Check bandwidth limits on links and interfaces. If a link is operating at close to its capacity, it’s a bottleneck.

Example: If a server’s CPU utilization is consistently high during peak hours, it’s likely the bottleneck. Upgrading the server’s hardware can address this.
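Checking whether a link is close to capacity usually comes down to comparing two readings of an interface byte counter. The sketch below assumes counters similar to those exposed over SNMP, read a known number of seconds apart. The function name and sample figures are illustrative.

```python
def link_utilization(octets_start, octets_end, interval_s, link_bps, counter_bits=64):
    """Percent utilization of a link from two byte-counter readings."""
    # The modulo tolerates a single counter wrap between the two readings.
    delta = (octets_end - octets_start) % (2 ** counter_bits)
    return 100.0 * (delta * 8) / (interval_s * link_bps)

# 3.375 GB transferred in 300 s on a 100 Mbps link
util = link_utilization(0, 3_375_000_000, 300, 100_000_000)
print(f"{util:.1f}%")   # 90.0%
```

A link that sits at 90% during the polling interval is almost certainly saturated during shorter bursts inside that interval, so sustained readings this high are a strong bottleneck signal.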

Q 8. Explain different network monitoring tools and their functionalities.

Network monitoring tools are essential for maintaining optimal network performance and identifying potential issues. They provide real-time visibility into various aspects of the network, allowing for proactive management and swift troubleshooting. Different tools offer diverse functionalities depending on the specific needs. Here are a few examples:

- SNMP (Simple Network Management Protocol): This is a fundamental protocol used by many network management systems (NMS). SNMP agents residing on network devices (routers, switches, servers) collect data and report it to an SNMP manager, which then presents this information in a user-friendly interface. Data collected can include CPU utilization, memory usage, interface statistics, and more. Think of it as a network’s central nervous system, constantly checking the health of every component.

- Nagios/Zabbix/Prometheus: These are widely used, largely open-source monitoring systems that collect data from network devices and applications through SNMP, agents, or exporters. They offer features like alerting, reporting, and visualization of collected data, allowing administrators to see the network’s health at a glance and receive notifications when issues arise. They’re like a comprehensive dashboard providing real-time updates on network performance.

- Wireshark/tcpdump: These are packet analyzers that capture and inspect network traffic in detail. They’re invaluable for troubleshooting connectivity issues, identifying security threats, and analyzing network performance at a granular level. Think of them as sophisticated magnifying glasses allowing for deep dives into the network’s communication.

- SolarWinds/PRTG: These are commercial Network Monitoring Systems offering a range of features from basic monitoring to advanced capabilities such as network performance analysis, application monitoring, and fault management. They often provide more user-friendly interfaces and automation capabilities compared to open-source options, making them suitable for enterprise environments.

The choice of monitoring tool depends on factors such as budget, network size and complexity, and specific monitoring requirements. A small network might only need basic SNMP monitoring, while a large enterprise network might require a comprehensive NMS with advanced analytics.

Q 9. What are your experiences with network capacity planning?

Network capacity planning is crucial for ensuring a network can handle current and future demands. My experience involves forecasting network bandwidth, storage, and processing needs based on historical data, projected growth, and anticipated application requirements. This is done through a combination of modeling, analysis, and collaboration with various stakeholders.

For example, in a previous role, I was tasked with planning the network infrastructure for a rapidly growing SaaS company. I analyzed historical bandwidth usage, projected user growth, and the bandwidth requirements of new applications that were being deployed. Using forecasting tools and statistical models, I predicted future bandwidth needs and recommended a phased expansion of the network infrastructure to accommodate the expected growth. This involved selecting appropriate hardware, designing network topologies, and implementing appropriate network technologies. This proactive approach avoided costly network bottlenecks and service disruptions as the company expanded.

I also have experience using various capacity planning tools such as network simulation software and traffic analysis tools to assess the impact of different network configurations and to optimize resource allocation. A key aspect is identifying potential bottlenecks and implementing strategies to mitigate risks.
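A simple way to turn this kind of forecast into a number is a compound-growth estimate. The sketch below is one possible model, not a description of any specific tool. It assumes steady month-over-month growth and an 80% planning threshold, both of which are hypothetical values you would replace with your own data.

```python
import math

def months_until_capacity(current_mbps, capacity_mbps, monthly_growth, threshold=0.8):
    """Months until peak demand reaches threshold * capacity under compound growth."""
    target = capacity_mbps * threshold
    if current_mbps >= target:
        return 0
    return math.ceil(math.log(target / current_mbps) / math.log(1 + monthly_growth))

# 400 Mbps peak today on a 1 Gbps link, growing 5% per month
print(months_until_capacity(400, 1000, 0.05))   # 15
```

In this example the upgrade needs to be in place within about 15 months, which gives a concrete deadline for the phased expansion.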

Q 10. How do you optimize network bandwidth utilization?

Optimizing network bandwidth utilization involves identifying and addressing factors that consume bandwidth inefficiently. It’s a multi-faceted process requiring a thorough understanding of network traffic patterns and application behavior. Techniques for optimization include:

- Quality of Service (QoS): QoS prioritizes critical traffic (like VoIP or video conferencing) over less critical traffic (like file transfers). This ensures that crucial applications receive the bandwidth they need, even during periods of high network congestion. Think of it as having a fast lane on a highway for important traffic.

- Traffic Shaping/Policing: These techniques control the rate of traffic entering the network. Traffic shaping smooths out bursts of traffic, preventing congestion, while traffic policing drops or limits traffic that exceeds defined thresholds. This is like having speed limits on a highway to prevent traffic jams.

- Network Segmentation: Dividing the network into smaller, isolated segments can reduce congestion and improve security. Think of it as organizing a large city into smaller, more manageable neighborhoods.

- Bandwidth Monitoring and Analysis: Regular monitoring of network bandwidth usage is crucial for understanding traffic patterns and spotting potential bottlenecks. This allows for proactive optimization and capacity planning. This is like constantly observing traffic flow to identify areas for improvement.

- Application Optimization: Optimizing applications themselves can significantly reduce bandwidth consumption. This includes using compression techniques, optimizing database queries, and efficient coding practices.

For instance, in one project, I improved bandwidth utilization by implementing QoS to prioritize VoIP calls, which noticeably improved call quality and reduced dropped calls.
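Traffic policing is commonly explained with a token bucket. The following is a minimal sketch of that idea, with time passed in explicitly so the behavior is easy to follow. The rate and burst values are arbitrary assumptions.

```python
class TokenBucket:
    """Policer sketch: a packet conforms only if enough tokens are available."""

    def __init__(self, rate_bytes_per_s, burst_bytes):
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes
        self.last = 0.0

    def allow(self, size_bytes, now):
        # Refill for the elapsed time, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False   # a policer drops here; a shaper would queue the packet instead

bucket = TokenBucket(rate_bytes_per_s=1000, burst_bytes=1500)
results = [bucket.allow(1500, 0.0), bucket.allow(1500, 0.5), bucket.allow(1500, 2.0)]
print(results)   # [True, False, True]
```

The second packet arrives before enough tokens have accumulated and is rejected. By the third packet the bucket has refilled to its burst size.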

Q 11. Explain the concept of network virtualization.

Network virtualization is the creation of virtual representations of network devices and functions, such as virtual switches, routers, and firewalls. It decouples the network hardware from the network software, allowing for greater flexibility, scalability, and efficiency. Think of it as having a software-defined version of your physical network equipment.

Key benefits include:

- Increased Agility and Flexibility: Virtual networks can be easily created, modified, and deleted, providing rapid responses to changing business needs.

- Improved Resource Utilization: Virtualization allows for efficient use of hardware resources, reducing costs and improving performance.

- Enhanced Scalability: Virtual networks can be easily scaled up or down to meet changing demands.

- Simplified Management: Centralized management of virtual networks simplifies administration and reduces operational complexity.

Examples of network virtualization technologies include VMware NSX, Cisco ACI, and OpenStack Neutron. These technologies enable the creation and management of virtual networks in various environments, including data centers, cloud platforms, and hybrid cloud deployments.

Q 12. Describe your experience with network security protocols.

My experience with network security protocols encompasses a wide range of technologies used to protect network assets from unauthorized access and malicious activities. This includes both implementing and troubleshooting these protocols. Some key protocols and my experience with them:

- IPsec (Internet Protocol Security): I’ve used IPsec to establish secure communication tunnels between networks or devices, encrypting data in transit to protect it from eavesdropping. This is crucial for securing remote access and inter-office communication.

- TLS/SSL (Transport Layer Security/Secure Sockets Layer): TLS, the successor to the now-deprecated SSL, provides secure communication over the internet and is commonly used for encrypting web traffic (HTTPS). I’ve worked on configuring and troubleshooting TLS certificates and ensuring secure communication between web servers and clients.

- Firewalls: I have extensive experience in configuring and managing firewalls, both hardware and software-based, to control network access and prevent unauthorized traffic from entering or leaving the network. This involves defining rules, monitoring logs, and responding to security alerts.

- VPN (Virtual Private Network): I’ve implemented and managed VPNs to provide secure remote access to corporate networks. This involves configuring VPN gateways, setting up authentication mechanisms, and ensuring the security of the VPN tunnel.

Understanding security protocols is paramount for designing and maintaining secure networks. It’s an ongoing process requiring vigilance and adaptation to the ever-evolving landscape of cyber threats. In one project, I detected a security breach attempt by analyzing firewall logs and identified a misconfigured rule that allowed unauthorized access. This was immediately corrected, preventing a potential data breach.

Q 13. How do you handle network outages and failures?

Handling network outages and failures requires a systematic approach that combines proactive planning, robust monitoring, and efficient troubleshooting. My approach involves:

- Rapid Identification: Utilizing monitoring tools to quickly identify the location and nature of the outage. This could involve alerts from network monitoring systems, user reports, or performance degradation in key applications.

- Root Cause Analysis: Investigating the underlying cause of the outage, which might involve analyzing logs, checking network device status, and testing connectivity. Tools like packet analyzers are vital in this step.

- Isolation and Containment: Containing the impact of the outage by isolating affected areas of the network if possible. This prevents the problem from spreading and minimizes disruption.

- Resolution and Restoration: Implementing the appropriate fix, whether it involves restarting a device, replacing a faulty component, or applying a software patch. Prioritizing the restoration of critical services is essential.

- Post-Incident Review: Analyzing the event to identify areas for improvement in network design, monitoring, and incident response procedures. This proactive approach prevents similar events from happening in the future. Documentation of the event is vital for future reference.

For instance, in a previous incident involving a major network outage, we were able to identify the issue as a faulty router within minutes, thanks to our comprehensive monitoring system. By isolating the affected segment, we minimized the impact on other parts of the network and restored service within an hour. A post-incident review led to improvements in our backup infrastructure, further minimizing risks.

Q 14. Explain your experience with load balancing techniques.

Load balancing distributes network traffic across multiple servers or devices, preventing overload on any single component. This ensures high availability and optimal performance, especially under heavy load. Several techniques exist:

- Round-Robin DNS: This technique directs client requests to different servers in a sequential manner. It’s simple to implement but doesn’t consider server load.

- Weighted Round-Robin: Similar to round-robin, but assigns weights to each server based on capacity. Servers with higher capacity receive more requests.

- Least Connections: This method directs new requests to the server with the fewest active connections, ensuring a balanced load across servers.

- IP Hashing: This assigns requests to servers based on the client’s IP address, ensuring consistent server assignment for each client.

- Hardware Load Balancers: Dedicated hardware devices perform load balancing, offering advanced features like health checks and session persistence.

- Software Load Balancers: Software applications running on servers handle load balancing, often more flexible and cost-effective than hardware solutions.

The choice of load balancing technique depends on the specific application and network requirements. In a previous project involving a high-traffic e-commerce website, we implemented a hardware load balancer with least-connections scheduling to ensure optimal performance during peak shopping seasons. This prevented server overload and maintained website responsiveness.
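Two of the scheduling techniques above are small enough to sketch directly. The example below is illustrative only. The server names, weights, and connection counts are made up, and the weighted round-robin uses a naive expansion rather than the smoother interleaving that production load balancers typically apply.

```python
from itertools import cycle

def weighted_round_robin(weights):
    """Cycle through server names in proportion to their weights (naive expansion)."""
    expanded = [name for name, w in weights.items() for _ in range(w)]
    return cycle(expanded)

def least_connections(active):
    """Pick the server with the fewest active connections."""
    return min(active, key=active.get)

wrr = weighted_round_robin({"app1": 2, "app2": 1})
print([next(wrr) for _ in range(6)])
# ['app1', 'app1', 'app2', 'app1', 'app1', 'app2']

active = {"app1": 12, "app2": 7, "app3": 9}
target = least_connections(active)
active[target] += 1   # the chosen server now holds one more connection
print(target)         # app2
```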

Q 15. Describe different types of network attacks and their mitigation strategies.

Network attacks come in many forms, each requiring a tailored mitigation strategy. Think of it like a castle under siege – you need different defenses against different types of attackers.

- Denial of Service (DoS) and Distributed Denial of Service (DDoS) Attacks: These attacks flood a network with traffic, overwhelming its resources and making it unavailable to legitimate users. Mitigation involves techniques like rate limiting (filtering out excessive requests from a single IP address), using Content Delivery Networks (CDNs) to distribute traffic, and employing DDoS mitigation services from cloud providers.

- Man-in-the-Middle (MitM) Attacks: An attacker secretly intercepts communication between two parties, potentially stealing data or altering messages. Using HTTPS (with strong encryption), employing Virtual Private Networks (VPNs) for secure remote access, and regularly updating security certificates are vital mitigation strategies.

- Phishing Attacks: These involve deceptive emails or websites tricking users into revealing sensitive information like passwords or credit card details. Employee security awareness training, strong password policies, multi-factor authentication (MFA), and email filtering are crucial for preventing phishing attacks.

- SQL Injection Attacks: These target vulnerabilities in database applications, allowing attackers to execute malicious SQL code. Input validation, parameterized queries, and regularly updating database software are essential countermeasures.

- Malware Infections: Malicious software can infect devices and networks, causing data loss, system damage, and even enabling further attacks. Antivirus software, intrusion detection systems (IDS), firewalls, and employee education about safe browsing habits are necessary defenses.

Effective network security is a layered approach. It’s not enough to rely on a single solution; a multi-layered strategy is crucial to thwarting attacks. Think of it like Swiss cheese – each slice represents a security measure, and even if one has a hole, others can prevent an attacker from getting through.
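Of the countermeasures above, parameterized queries are the easiest to demonstrate. The sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table, rows, and function names are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")])

def find_user_unsafe(name):
    # Vulnerable: user input is concatenated into the SQL text.
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()

def find_user_safe(name):
    # The ? placeholder passes the value separately from the SQL text.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()

payload = "x' OR '1'='1"
print(len(find_user_unsafe(payload)))   # 2: the injected condition matches every row
print(find_user_safe(payload))          # []: the payload is treated as a literal name
```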

Q 16. What is your experience with network automation tools?

I have extensive experience with several network automation tools. My expertise spans a range of tools, each tailored for specific tasks. For example, I’ve used Ansible for automating configuration management, ensuring consistency across a large network of devices. This is incredibly efficient compared to manual configuration, minimizing human error and ensuring rapid deployment of changes. I’ve also leveraged tools like Netmiko for interacting with network devices through their CLI, allowing for automated troubleshooting and remote management. This reduces downtime substantially. Furthermore, I’m proficient with Terraform for provisioning and managing network infrastructure as code, ensuring repeatability and scalability.

In one project, we used Ansible to automate the deployment of a new VPN gateway across multiple sites. This process, previously manual and prone to errors, was streamlined, reducing deployment time from days to hours and enhancing reliability.
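Configuration management tools work from a desired state: they compare what a device should have with what it currently has and apply only the difference. The snippet below is a plain-Python illustration of that idea, not Ansible or Netmiko code. The configuration lines are hypothetical and not tied to any vendor's syntax.

```python
def config_diff(running, desired):
    """Return (to_add, to_remove) so that applying them turns running into desired."""
    running_set, desired_set = set(running), set(desired)
    to_add = [line for line in desired if line not in running_set]
    to_remove = [line for line in running if line not in desired_set]
    return to_add, to_remove

# Hypothetical configuration lines for illustration only.
running = ["hostname site-a-gw", "ntp server 10.0.0.1", "snmp community public"]
desired = ["hostname site-a-gw", "ntp server 10.0.0.1", "ntp server 10.0.0.2"]

to_add, to_remove = config_diff(running, desired)
print(to_add)      # ['ntp server 10.0.0.2']
print(to_remove)   # ['snmp community public']
```

Running the comparison a second time after the changes are applied yields two empty lists, which is the idempotent behavior that makes automated rollouts safe to repeat. This sketch ignores line ordering and nested configuration blocks, which real tools have to handle.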

Q 17. How do you ensure network security and compliance?

Ensuring network security and compliance is paramount. It’s a continuous process that involves several key elements, not just one-time fixes. Think of it as maintaining a clean and secure home – regular maintenance is key.

- Regular Security Audits and Penetration Testing: These assessments identify vulnerabilities and weaknesses before attackers can exploit them. It’s like having a home inspection to catch potential problems before they escalate.

- Implementing Robust Access Control: Restricting access to network resources based on roles and responsibilities. This involves using strong passwords, multi-factor authentication, and least privilege access controls, just as you would lock doors and only give keys to authorized individuals.

- Intrusion Detection and Prevention Systems (IDS/IPS): These systems monitor network traffic for malicious activity and take action to block or alert on suspicious events. They act as the security guards watching for intruders.

- Regular Security Patching and Updates: Keeping software and firmware up-to-date is crucial to prevent known vulnerabilities from being exploited. It’s like keeping your antivirus software updated to protect your home computer.

- Compliance with Regulations: Adhering to relevant industry standards and regulations, such as GDPR, HIPAA, or PCI DSS, is essential, and often legally mandated. This ensures your network operates within legal boundaries.

Compliance isn’t just a checklist; it’s a framework that guides our security practices and ensures that we’re protecting sensitive data and meeting legal obligations.

Q 18. Explain your experience with cloud networking technologies (AWS, Azure, GCP).

I possess significant experience with cloud networking technologies across AWS, Azure, and GCP. Each platform offers unique features, and my experience allows me to choose the best fit for the task at hand.

- AWS: I’ve worked extensively with VPCs (Virtual Private Clouds), EC2 instances, Route53 for DNS management, and various load balancing services. I’m familiar with using AWS Direct Connect for hybrid cloud environments.

- Azure: My experience includes working with Azure Virtual Networks, Azure Load Balancers, and Azure ExpressRoute for connecting on-premises networks to Azure. I’ve also utilized Azure’s security features, such as Azure Firewall.

- GCP: I’ve leveraged Google Cloud’s Virtual Private Cloud (VPC), Cloud Load Balancing, and Cloud DNS, alongside their robust security features. I’ve designed and implemented highly available and scalable architectures on GCP.

I can design, implement, and manage cloud-based network solutions, tailoring them to specific needs and optimizing for cost and performance. For example, I recently migrated a client’s on-premises infrastructure to AWS, resulting in significant cost savings and improved scalability.

Q 19. Describe your experience with Software Defined Networking (SDN).

Software-Defined Networking (SDN) allows you to manage and control network infrastructure through software rather than traditional hardware. Imagine controlling your home lighting system with a smartphone app instead of physical switches. SDN offers centralized control, programmability, and flexibility. I have experience deploying and managing SDN solutions using Open vSwitch (OVS) and controller software such as OpenDaylight.

In one project, we used SDN to implement a dynamic network segmentation strategy. This allowed us to isolate different parts of the network based on policy, improving security and simplifying network management. Previously, this level of granularity would have been exceptionally complex and costly with traditional hardware-based approaches.

Q 20. How do you perform network performance testing and analysis?

Network performance testing and analysis are crucial for identifying bottlenecks and ensuring optimal network performance. It’s like a car’s diagnostic check-up to spot potential issues before they lead to major problems.

My approach involves a multi-step process:

- Defining Objectives: Clearly identifying the specific performance metrics to measure (latency, throughput, jitter, packet loss).

- Choosing the Right Tools: Selecting tools like iPerf, ping, traceroute, and Wireshark, depending on the specific needs of the test.

- Designing Test Scenarios: Creating realistic scenarios that simulate typical network usage patterns.

- Executing Tests: Running tests under various load conditions to identify performance limitations.

- Analyzing Results: Interpreting test results to pinpoint performance bottlenecks and identify areas for optimization. This could include analyzing network diagrams, traffic flows, and device statistics.

- Reporting and Recommendations: Documenting findings and providing actionable recommendations for improvement.

For example, I recently used iPerf to measure the throughput of a network link, revealing a bottleneck that was resolved by upgrading the network hardware.
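When analyzing results, averages alone can hide the outliers that users actually notice, so percentiles are worth reporting alongside them. The sketch below uses the nearest-rank method on a small set of made-up latency samples. The function name is hypothetical.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

latencies_ms = [12, 15, 11, 14, 13, 90, 12, 13, 14, 12]   # illustrative values only
print(sum(latencies_ms) / len(latencies_ms))   # 20.6
print(percentile(latencies_ms, 50))            # 13
print(percentile(latencies_ms, 95))            # 90
```

The mean of 20.6 ms describes neither the typical request (13 ms) nor the slow one (90 ms), which is why test reports often state the median and a high percentile together.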

Q 21. What are your experiences with network diagnostics tools?

My experience with network diagnostic tools is extensive. They are indispensable for troubleshooting and analyzing network issues effectively, much like a mechanic uses various tools to diagnose a car problem.

- Wireshark: A powerful packet analyzer for deep-dive analysis of network traffic. It allows you to inspect individual packets, identify patterns, and diagnose various network issues.

- tcpdump: A command-line network packet analyzer, valuable for quick troubleshooting and capturing specific network events.

- ping and traceroute: Fundamental tools for checking network connectivity, identifying path issues, and measuring latency.

- SNMP (Simple Network Management Protocol): Used to collect performance data from network devices, enabling monitoring and proactive issue detection.

- Network Monitoring Systems: Tools like Nagios, Zabbix, or PRTG provide centralized monitoring and alerting, proactively identifying potential problems.

Recently, using Wireshark, I identified a specific application causing high latency on a network, leading to targeted optimization efforts rather than general network upgrades.

Q 22. Explain your understanding of network protocols (e.g., BGP, OSPF).

Network protocols are the set of rules and standards that govern how data is transmitted across a network. They define how devices communicate, format data, and handle errors. Two prominent examples are BGP and OSPF, both crucial for routing data packets.

BGP (Border Gateway Protocol) is an exterior gateway protocol used to exchange routing information between different autonomous systems (ASes) on the internet. Think of ASes as large networks like those belonging to internet service providers (ISPs). BGP selects the best path to reach a destination network across these interconnected networks, based on route attributes and operator policy. It is a path-vector protocol: each route advertisement carries the full sequence of ASes it has traversed (the AS path), not just the next hop, which also lets routers detect and reject loops.

OSPF (Open Shortest Path First) is an interior gateway protocol used within a single AS. Unlike BGP, OSPF uses link-state routing. Each router builds a complete map of the topology of its area and calculates the shortest path to every destination using Dijkstra’s algorithm. Because all routers in an area work from the same topology database, OSPF converges quickly after a link change, which makes it a stable choice within a single administrative domain.

In essence, BGP handles routing between autonomous systems, while OSPF handles routing inside one. Imagine BGP as the interstate highway system connecting major cities, and OSPF as the city streets connecting individual addresses within those cities.
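
To make the link-state idea concrete, here is a minimal sketch of the shortest-path calculation a router could run over its topology map, using Dijkstra's algorithm. The four-router topology and its link costs are invented for illustration, and a real OSPF implementation does considerably more (areas, LSA flooding, equal-cost paths).

```python
import heapq


def shortest_paths(graph, source):
    """Dijkstra's algorithm: lowest total cost from source to each reachable node."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        cost, node = heapq.heappop(heap)
        if cost > dist.get(node, float("inf")):
            continue  # stale heap entry; a cheaper path was already found
        for neighbor, link_cost in graph.get(node, {}).items():
            new_cost = cost + link_cost
            if new_cost < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_cost
                heapq.heappush(heap, (new_cost, neighbor))
    return dist


# Hypothetical topology: router -> {neighbor: link cost}.
TOPOLOGY = {
    "R1": {"R2": 10, "R3": 1},
    "R2": {"R1": 10, "R4": 1},
    "R3": {"R1": 1, "R4": 5},
    "R4": {"R2": 1, "R3": 5},
}

costs = shortest_paths(TOPOLOGY, "R1")
print(costs)
```

In this example R1 reaches R2 more cheaply through R3 and R4 (total cost 7) than over the direct link (cost 10), which is the kind of decision link costs are tuned to produce.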

Q 23. How do you analyze network traffic patterns?

Analyzing network traffic patterns involves understanding the flow of data across the network. This is done using various techniques and tools to identify bottlenecks, security threats, and application performance issues. My approach is typically multifaceted:

- Packet Capture and Analysis: Using tools like Wireshark or tcpdump, I capture network packets to examine their content, source/destination, protocols used, and timing. This provides granular insight into network behavior.

- Flow Monitoring: Flow export technologies such as NetFlow and sFlow summarize traffic rather than recording every packet (NetFlow by aggregating packets into flows, sFlow by sampling packets). This is less granular than packet capture but gives a broader overview of traffic volume and communication patterns between IP addresses or subnets.

- Network Monitoring Systems: Systems like Nagios, Zabbix, or SolarWinds provide real-time monitoring of network devices and overall network health. They alert administrators to potential problems and help in tracking key performance indicators (KPIs) like latency, bandwidth utilization, and packet loss.

- Statistical Analysis: I use statistical methods to analyze trends and patterns in the collected data. This helps identify anomalies and predict future network behavior. For instance, it can reveal unusual spikes in traffic at specific times of day.

Ultimately, the goal is to identify areas of congestion, security vulnerabilities, or application-specific performance issues. For example, a sudden increase in HTTP requests to a specific server might indicate a DDoS attack or a surge in user activity requiring capacity planning.
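
The statistical step can be sketched in a few lines. This is one simple approach among many, assuming request counts have already been aggregated per minute: each value is compared with the mean and standard deviation of the preceding window. The window size, the threshold, and the one-request floor on the standard deviation are arbitrary choices for illustration, and the sample counts are made up.

```python
import statistics


def find_spikes(counts, window=5, threshold=3.0):
    """Return indexes whose value exceeds the trailing-window mean by more
    than `threshold` standard deviations."""
    spikes = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mean = statistics.mean(baseline)
        # Floor the deviation at 1 so a perfectly flat baseline
        # does not cause a division by zero.
        stdev = max(statistics.pstdev(baseline), 1.0)
        z_score = (counts[i] - mean) / stdev
        if z_score > threshold:
            spikes.append(i)
    return spikes


# Hypothetical HTTP requests per minute to one server.
requests_per_minute = [100, 104, 98, 101, 97, 102, 99, 480, 103, 100]
spikes = find_spikes(requests_per_minute)
print(spikes)
```

A flagged minute only says that traffic was unusual. Deciding whether it was an attack or a legitimate surge still requires looking at the sources and the requests themselves.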

Q 24. Describe your experience with network design and implementation.

I have extensive experience in designing and implementing various network architectures, from small office networks to large enterprise networks. My approach always starts with a thorough understanding of the client’s needs and requirements. This includes assessing current infrastructure, identifying future growth needs, and defining key performance objectives.

Example: In a recent project for a financial institution, I designed a highly available and secure network infrastructure utilizing redundant firewalls, load balancers, and geographically diverse data centers. This involved specifying hardware, configuring network devices, implementing security policies, and integrating various network management tools. The design prioritized data security, high availability, and scalability to handle future growth. We used a layered approach with VLAN segmentation for enhanced security and performance. I also worked closely with the security team to ensure compliance with industry regulations and best practices.

My experience covers a wide range of technologies, including routing protocols (BGP, OSPF, EIGRP), switching technologies (VLANs, STP, RSTP), network security (firewalls, intrusion detection/prevention systems), and cloud networking solutions (AWS, Azure).

Q 25. What are your experiences with various network monitoring tools (e.g., Nagios, Zabbix, SolarWinds)?

I’ve worked extensively with various network monitoring tools, including Nagios, Zabbix, and SolarWinds. Each tool has its strengths and weaknesses, and the best choice depends on the specific needs of the network and the budget.

Nagios is a widely used open-source monitoring system known for its flexibility and customizability. I’ve used it to monitor server uptime, network connectivity, and resource utilization. Its robust plugin ecosystem allows for monitoring of a wide variety of systems and applications.

Zabbix is another popular open-source option, offering a comprehensive suite of monitoring features. I appreciate its scalability and ease of use for managing large and complex networks. I’ve used it to create dashboards that provide a clear overview of network performance and alert on critical events.

SolarWinds is a commercial product known for its user-friendly interface and comprehensive features. While more expensive than open-source alternatives, its advanced features and dedicated support team make it a valuable choice for managing complex networks. I’ve utilized its capabilities for detailed performance analysis and capacity planning.

My experience extends beyond just using these tools; I have configured them, customized them to meet specific monitoring needs, and integrated them with other systems.
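
Customizing a tool like Nagios often means writing a small check plugin. The sketch below follows the widely used plugin convention of exit codes 0 (OK), 1 (WARNING), 2 (CRITICAL), and 3 (UNKNOWN), with a status line followed by performance data after a `|`. The `check_latency` function and its thresholds are hypothetical, and how the latency is measured is left out.

```python
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
STATUS_NAMES = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL"}


def check_latency(latency_ms, warn_ms=100.0, crit_ms=250.0):
    """Map a latency measurement to a (plugin exit code, status line) pair."""
    if latency_ms is None or latency_ms < 0:
        return UNKNOWN, "LATENCY UNKNOWN - no valid measurement"
    if latency_ms >= crit_ms:
        code = CRITICAL
    elif latency_ms >= warn_ms:
        code = WARNING
    else:
        code = OK
    # Text after the "|" is performance data: label=value;warn;crit
    message = (
        f"LATENCY {STATUS_NAMES[code]} - {latency_ms:.1f} ms"
        f" | rtt={latency_ms:.1f}ms;{warn_ms:.0f};{crit_ms:.0f}"
    )
    return code, message


code, message = check_latency(120.0)
print(code, message)
```

A real plugin would print the message and then call `sys.exit(code)` so the monitoring server can read the result from the process exit status.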

Q 26. Explain the concept of network segmentation and its benefits.

Network segmentation is the practice of dividing a network into smaller, isolated segments. Each segment has its own security policies and access controls, limiting the impact of security breaches and improving overall network performance. Think of it as dividing a large city into smaller neighborhoods, each with its own security and access rules.

Benefits:

- Enhanced Security: By isolating different parts of the network, a breach in one segment is less likely to affect other parts. This reduces the attack surface and limits lateral movement by attackers.

- Improved Performance: Segmentation reduces congestion by confining broadcast and local traffic to the segment where it originates. This improves application response times and overall network efficiency.

- Better Management: Smaller, isolated segments are easier to manage and monitor. This simplifies troubleshooting and allows for more granular control over network resources.

- Compliance: Network segmentation often aligns with regulatory compliance requirements, like those in the healthcare or financial industries, that mandate data separation and security.

Example: A typical implementation involves using VLANs (Virtual LANs) to create logical segments within a physical network. Different departments or applications can be assigned to different VLANs, ensuring that their traffic remains isolated.
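
A segmentation plan can be prototyped before any switch is touched. The sketch below uses Python's standard `ipaddress` module to split one address block into per-department subnets and to check which segment a host belongs to. The address range, department names, and VLAN IDs are all made up for illustration.

```python
import ipaddress

# Hypothetical campus block, split into four /24 subnets.
CAMPUS = ipaddress.ip_network("10.20.0.0/22")
subnets = list(CAMPUS.subnets(new_prefix=24))

# Hypothetical mapping: segment name -> (VLAN ID, subnet).
SEGMENTS = {
    "engineering": (10, subnets[0]),
    "finance": (20, subnets[1]),
    "voice": (30, subnets[2]),
    "guest": (40, subnets[3]),
}


def segment_for(address):
    """Return the segment name containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, (_vlan_id, network) in SEGMENTS.items():
        if ip in network:
            return name
    return None


def same_segment(a, b):
    """True if both addresses fall inside the same known segment."""
    seg_a = segment_for(a)
    return seg_a is not None and seg_a == segment_for(b)


print(segment_for("10.20.1.15"))
print(same_segment("10.20.1.15", "10.20.3.7"))
```

Hosts in different segments can only talk through a router or firewall, which is where the per-segment access rules are enforced.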

Q 27. How do you use network analytics to improve performance?

Network analytics involves collecting, analyzing, and interpreting network data to identify performance bottlenecks, security threats, and other issues. I use network analytics to improve performance by:

- Identifying Bottlenecks: By analyzing traffic patterns, I can pinpoint network segments or devices that are causing congestion. This might involve analyzing bandwidth utilization, latency, and packet loss.

- Optimizing Routing: Analyzing routing tables and traffic flows helps identify inefficient routes or routing loops that can degrade performance. This could involve adjusting OSPF or BGP configurations.

- Improving Application Performance: By analyzing application traffic, I can identify performance issues related to specific applications or services. This could involve optimizing application configurations or adjusting network QoS policies.

- Predictive Analysis: Using historical data and machine learning techniques, I can predict future network performance and proactively address potential issues before they impact users.

Example: In a recent project, network analytics revealed a significant increase in latency during peak hours, impacting user experience. By analyzing traffic patterns, we identified a specific network segment experiencing congestion. Upgrading the bandwidth of that segment resolved the latency issue and improved overall network performance.
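
Predictive analysis does not have to start with machine learning. As a deliberately simple stand-in, the sketch below fits a straight line (ordinary least squares) to monthly peak utilization and estimates when a threshold would be crossed. The utilization figures and the 80% threshold are invented, and real traffic rarely grows this linearly.

```python
def fit_trend(values):
    """Least-squares line through (0, v0), (1, v1), ...; returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def periods_until(values, threshold):
    """Period index at which the fitted trend reaches the threshold,
    or None if the trend is flat or falling."""
    slope, intercept = fit_trend(values)
    if slope <= 0:
        return None
    return (threshold - intercept) / slope


# Hypothetical peak utilization (%) of one segment over six months.
peak_utilization = [40, 44, 48, 52, 56, 60]
crossing = periods_until(peak_utilization, threshold=80)
print(crossing)
```

Here the trend reaches the threshold around month index 10, four months after the last sample, which is the kind of lead time that lets a bandwidth upgrade be planned rather than rushed.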

Q 28. Describe a situation where you had to optimize a slow network. What was your approach?

I once encountered a situation where a company’s network experienced significant slowdowns impacting employee productivity. My approach was systematic and involved these steps:

- Gather Data: I started by collecting performance data using network monitoring tools like SolarWinds and Wireshark. This included analyzing bandwidth utilization, latency, packet loss, and CPU/memory usage on key network devices.

- Identify Bottlenecks: The data revealed high CPU utilization on the core switch and significant congestion on a specific VLAN carrying voice traffic. This pointed to a combination of insufficient switch capacity and a lack of Quality of Service (QoS) policies.

- Develop Solutions: Based on the findings, I proposed two solutions: upgrading the core switch to increase its processing power and implementing QoS policies to prioritize voice traffic over other types of traffic.

- Implement Solutions: The core switch was upgraded, and QoS policies were configured on the switch to prioritize voice traffic using Differentiated Services Code Point (DSCP) markings.

- Test and Monitor: After implementing the changes, I closely monitored network performance to ensure the improvements were sustainable. The network was significantly faster.

This case highlighted the importance of a data-driven approach to network optimization. Relying solely on intuition would likely have led to inefficient fixes, whereas a systematic approach ensured we addressed the root causes.
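
For readers unfamiliar with DSCP, the marking itself is just a 6-bit value in the IP header. The scenario above configured QoS on the switch, so the following is only a host-side illustration of the same idea: it converts a DSCP value to the byte an application sets through the `IP_TOS` socket option. DSCP 46 (Expedited Forwarding) is the value commonly used for voice, and the function names are hypothetical. Whether the marking is honored depends on the operating system and on every device along the path.

```python
import socket

DSCP_EF = 46  # Expedited Forwarding, commonly used for voice traffic


def dscp_to_tos(dscp):
    """Shift a 6-bit DSCP value into the upper bits of the 8-bit TOS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be between 0 and 63")
    return dscp << 2


def open_marked_udp_socket(dscp=DSCP_EF):
    """Create a UDP socket whose outgoing packets request the given DSCP.

    Uses the IP_TOS socket option; some platforms ignore or restrict it.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock


print(dscp_to_tos(DSCP_EF))
```

Marking only labels the traffic. The prioritization happens in the queues of switches and routers configured to trust and act on that label.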

Key Topics to Learn for Network Optimization and Performance Analysis Interview

- Network Protocols and Architectures: Understanding TCP/IP, OSI model, routing protocols (BGP, OSPF), and network topologies is fundamental. Consider practical applications like troubleshooting network connectivity issues or designing efficient network layouts.

- Performance Monitoring and Measurement: Mastering tools like Wireshark, tcpdump, and performance monitoring systems is crucial. Learn how to analyze network traffic, identify bottlenecks, and interpret key performance indicators (KPIs) like latency, jitter, and packet loss.

- Network Security and Optimization: Explore concepts like firewalls, intrusion detection systems, and security protocols. Understand how security measures impact network performance and how to optimize for both security and efficiency.

- Quality of Service (QoS): Learn how QoS mechanisms prioritize critical traffic, ensuring optimal performance for voice, video, and data applications. Practice analyzing QoS configurations and troubleshooting QoS-related issues.

- Network Capacity Planning and Forecasting: Develop your skills in predicting future network needs based on current usage patterns and projected growth. Learn to use various forecasting techniques and understand the impact of different network technologies on capacity planning.

- Troubleshooting and Problem-Solving: Develop a systematic approach to diagnosing and resolving network issues. Practice applying theoretical knowledge to real-world scenarios and explaining your troubleshooting methodology clearly.

- Cloud Networking and Optimization: Understand the intricacies of cloud networking architectures (e.g., AWS, Azure, GCP) and how to optimize performance within cloud environments. This includes aspects like Virtual Private Clouds (VPCs) and load balancing.

Next Steps

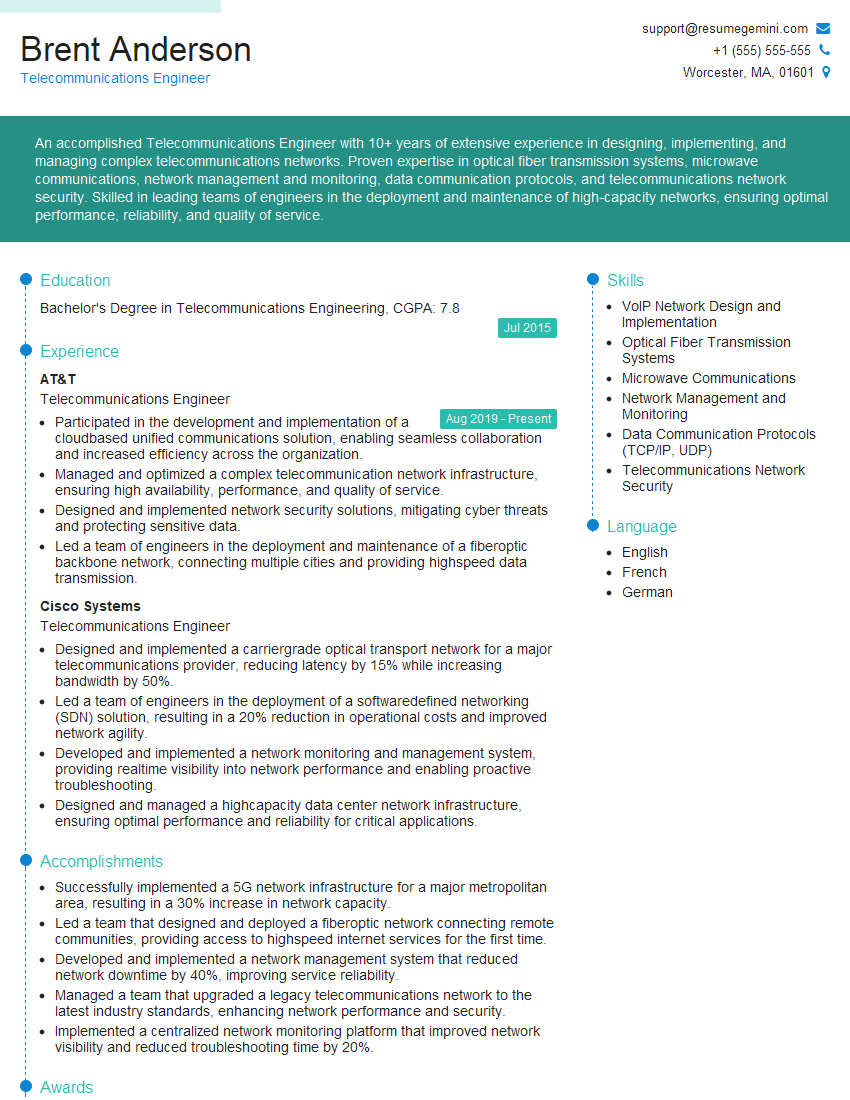

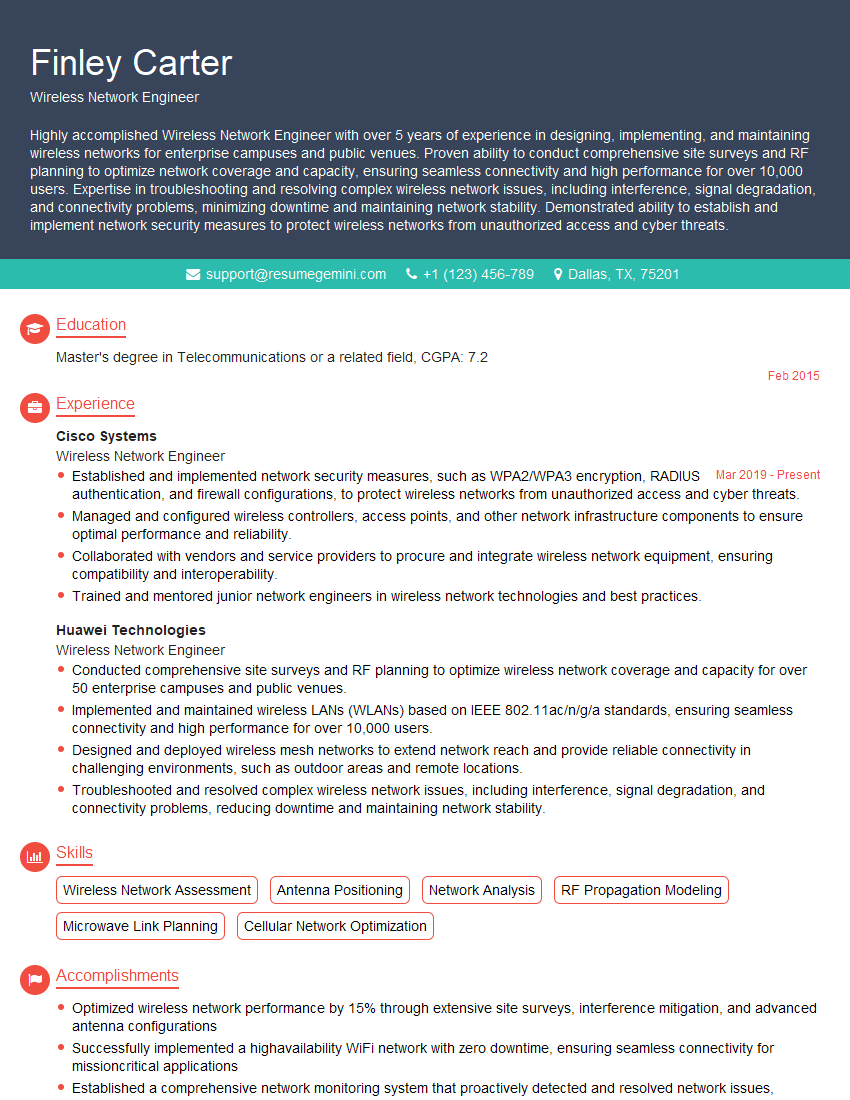

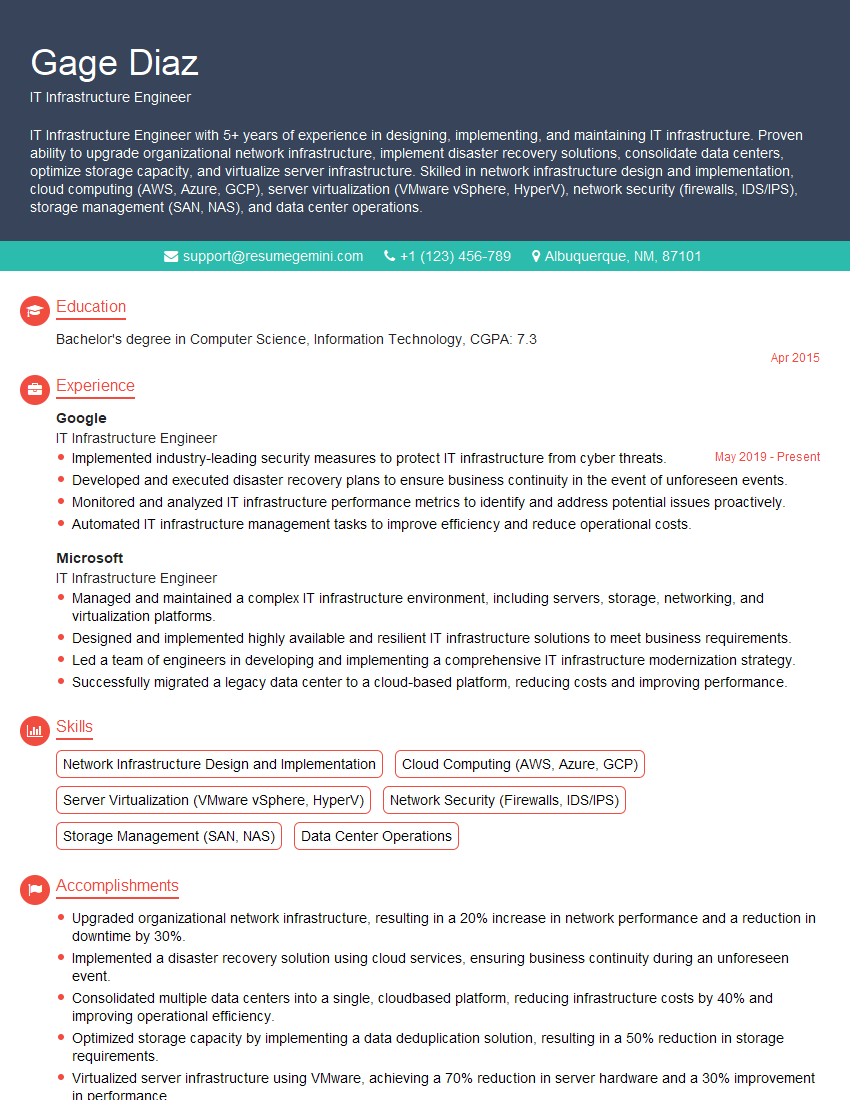

Mastering Network Optimization and Performance Analysis opens doors to exciting and rewarding career opportunities in a rapidly evolving field. Demonstrating a strong understanding of these concepts is vital for securing your ideal role. To maximize your job prospects, creating a compelling and ATS-friendly resume is crucial. ResumeGemini is a trusted resource that can help you build a professional resume that showcases your skills and experience effectively. We provide examples of resumes tailored to Network Optimization and Performance Analysis to help you get started. Take the next step towards your dream career – build a standout resume with ResumeGemini today.
