Cracking a skill-specific interview, like one for Precision Measurement Instruments, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Precision Measurement Instruments Interview

Q 1. Explain the concept of measurement uncertainty and its impact on precision.

Measurement uncertainty quantifies the doubt associated with a measured value. It reflects the range of possible values within which the true value likely lies. It’s crucial because it directly impacts the precision of the measurement; a smaller uncertainty indicates higher precision. Imagine shooting an arrow at a target: high precision means the arrows are clustered tightly together, regardless of whether they hit the bullseye. High accuracy means the arrows hit close to the bullseye, even if they are spread out. Measurement uncertainty encompasses both random and systematic errors, contributing to the overall spread of results.

For example, if we measure the length of a component as 10.00 ± 0.02 cm, the uncertainty is ±0.02 cm. This means the true length is likely between 9.98 cm and 10.02 cm. In manufacturing, a high uncertainty in the dimensions of parts could lead to assembly failures or poor functionality. Understanding and minimizing uncertainty is vital for quality control and reliable product performance.
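
As a rough illustration of where a figure like ±0.02 cm can come from, the sketch below combines two independent uncertainty components by root-sum-square and expands the result with a coverage factor. The component values, the names, and the choice of k = 2 are assumptions made for this example, not values from a real uncertainty budget.

```python
import math

def combined_standard_uncertainty(components):
    """Root-sum-square of independent standard uncertainties (same unit)."""
    return math.sqrt(sum(u ** 2 for u in components))

# Hypothetical uncertainty budget for the 10.00 cm length, all values in cm.
components_cm = [0.006, 0.008]  # e.g. repeatability and instrument calibration

u_c = combined_standard_uncertainty(components_cm)  # combined standard uncertainty
k = 2                                               # assumed coverage factor (roughly 95 % for normal data)
U = k * u_c                                         # expanded uncertainty

length_cm = 10.00
low, high = length_cm - U, length_cm + U
print(f"{length_cm:.2f} ± {U:.2f} cm -> [{low:.2f}, {high:.2f}] cm")
```

Because the components add in quadrature, the largest one dominates the result, which is why reducing the biggest contributor usually pays off most.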

Q 2. Describe different types of measurement errors and how to mitigate them.

Measurement errors can be categorized into systematic and random errors. Systematic errors are consistent and repeatable, resulting from flaws in the instrument or measurement process. For instance, a miscalibrated scale consistently adds 0.5 grams to every reading. Random errors are unpredictable variations due to environmental factors or operator influence; think of slight variations in the way a technician aligns a part in a measuring device.

Mitigation strategies include:

- Calibration: Regular calibration with traceable standards ensures instrument accuracy.

- Environmental control: Controlling factors such as temperature and humidity helps minimize random errors.

- Proper instrument handling: Adhering to manufacturer instructions minimizes errors caused by incorrect usage.

- Multiple measurements: Taking multiple readings and analyzing them statistically reduces the impact of random errors.

- Error analysis: Applying statistical methods helps identify and quantify systematic and random errors. For instance, calculating the standard deviation of multiple measurements offers insights into random error.
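
To make the point about multiple measurements concrete, here is a minimal sketch using Python's standard `statistics` module. The readings are invented for illustration. It shows how the standard error of the mean shrinks as more readings are averaged, assuming the readings are independent and share the same spread.

```python
import statistics

# Hypothetical repeated readings of the same mass, in grams.
readings_g = [100.52, 100.47, 100.50, 100.49, 100.52]

s = statistics.stdev(readings_g)  # sample standard deviation: spread caused by random error

def standard_error(std_dev, n):
    """Standard error of the mean of n independent readings."""
    return std_dev / n ** 0.5

for n in (1, 5, 25):
    print(f"n = {n:2d}: standard error = {standard_error(s, n):.4f} g")
```

Averaging only reduces the random part. A constant offset, such as the miscalibrated scale above, is left untouched and has to be removed by calibration.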

Q 3. What are the key characteristics of a high-precision measurement system?

A high-precision measurement system exhibits several key characteristics:

- High Resolution: The ability to distinguish between small differences in the measured quantity. A digital caliper with 0.01 mm resolution can resolve finer differences than one with 1 mm resolution, although fine resolution alone does not guarantee precision or accuracy.

- Low Uncertainty: A narrow range of doubt around each reported value once all known error sources, random and systematic, have been accounted for.

- Linearity: Consistent response across the entire measurement range. The output should be proportional to the input.

- Stability: Maintaining consistent performance over time without drift or significant changes in calibration.

- Repeatability: The ability to obtain consistent results when the same operator performs multiple measurements using the same instrument under the same conditions.

- Reproducibility: Consistency in results obtained by different operators using different instruments under different conditions, but still adhering to standardized procedures.

- Robustness: Resistance to external factors (like temperature fluctuations) that might influence the measurement result.

These characteristics ensure that measurements are reliable, repeatable, and accurately reflect the true value of the measured quantity.

Q 4. How do you calibrate a precision instrument? Detail the process.

Calibrating a precision instrument involves comparing its readings against a known standard of higher accuracy. This process establishes the instrument’s accuracy and corrects any deviations. Here’s a typical process:

- Prepare the instrument and standard: Ensure the instrument is properly powered and prepared according to manufacturer instructions. The standard should be traceable to a national or international standard.

- Establish baseline: Take several readings on the standard using the instrument. This establishes a baseline for comparison.

- Compare readings: Compare the instrument’s readings with the known values from the standard. Note any discrepancies.

- Adjust the instrument (if necessary): Some instruments have internal adjustments to correct deviations. This step may involve adjusting calibration knobs or using specialized software.

- Document the calibration: Record the date, calibration standards used, and any corrections made. This documentation is crucial for maintaining traceability and quality control.

- Generate a Calibration Certificate: A formal calibration certificate verifies the instrument’s accuracy and validity for a specified period.

The frequency of calibration depends on the instrument type, usage, and required accuracy. More frequently used instruments in demanding applications may require calibration more often.
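
The comparison step of the process above can be sketched in a few lines. The calibration points, the tolerance, and the function name below are hypothetical and only meant to show the bookkeeping; a real procedure would also account for the uncertainty of the standard itself.

```python
# Hypothetical calibration points: (reference value, instrument reading), both in mm.
CAL_POINTS = [
    (0.000, 0.001),
    (25.000, 25.002),
    (50.000, 50.004),
    (75.000, 75.003),
    (100.000, 100.006),
]
TOLERANCE_MM = 0.005  # assumed acceptance limit for this instrument

def calibration_report(points, tolerance):
    """Return (reference, reading, error, in_tolerance) for each calibration point."""
    report = []
    for reference, reading in points:
        error = reading - reference
        report.append((reference, reading, error, abs(error) <= tolerance))
    return report

report = calibration_report(CAL_POINTS, TOLERANCE_MM)
for reference, reading, error, ok in report:
    status = "pass" if ok else "FAIL"
    print(f"{reference:8.3f}  {reading:8.3f}  {error:+.3f}  {status}")

# The instrument passes only if every point is within tolerance.
instrument_passes = all(ok for *_, ok in report)
```

In this made-up data set the last point exceeds the limit, so the instrument would be adjusted or taken out of service, and the finding would be recorded on the certificate.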

Q 5. Explain the difference between accuracy and precision.

Accuracy refers to how close a measurement is to the true value. Precision refers to how close repeated measurements are to each other. Think of a dartboard: high accuracy means the darts are clustered around the bullseye, whereas high precision means the darts are clustered together, regardless of where they hit on the board. An instrument can be precise but not accurate (systematic error), accurate but not precise (random error), or both accurate and precise (ideal).

Example: A scale that consistently reads 1 kg off has a systematic error; it is precise (the offset is always the same) but not accurate. A scale whose readings scatter widely around the true weight has a large random error; it is not precise, although the average of many readings may still be accurate. A scale that is both precise and accurate consistently shows the true weight. Understanding this difference is essential for selecting appropriate instruments and interpreting results.
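
The two scales can be compared numerically: bias (mean reading minus true value) is a measure of accuracy, and the standard deviation of the readings is a measure of precision. The readings below are invented to mirror the example, and `summarize` is a hypothetical helper.

```python
import statistics

true_mass_kg = 5.00

# Hypothetical readings from two scales weighing the same 5.00 kg reference mass.
scale_a = [6.01, 6.00, 5.99, 6.00, 6.00]  # tightly grouped, but about 1 kg off
scale_b = [4.60, 5.45, 5.10, 4.70, 5.15]  # centred on the true value, but scattered

def summarize(readings, true_value):
    """Return (bias, spread): bias reflects accuracy, spread reflects precision."""
    bias = statistics.mean(readings) - true_value
    spread = statistics.stdev(readings)
    return bias, spread

bias_a, spread_a = summarize(scale_a, true_mass_kg)
bias_b, spread_b = summarize(scale_b, true_mass_kg)
print(f"Scale A: bias {bias_a:+.3f} kg, spread {spread_a:.3f} kg")
print(f"Scale B: bias {bias_b:+.3f} kg, spread {spread_b:.3f} kg")
```

Scale A shows a large bias with a small spread (precise, not accurate), while scale B shows almost no bias with a large spread (accurate on average, not precise).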

Q 6. What are the common types of precision measurement instruments and their applications?

Numerous precision measurement instruments exist, each serving specific applications:

- Micrometers: Used for precise linear measurements with high resolution (e.g., measuring the diameter of a shaft).

- Digital calipers: Versatile tools for outside, inside, depth, and step measurements. They are quicker to use than micrometers but typically offer coarser resolution and accuracy.

- Optical comparators: Project magnified images of parts to allow for precise measurements and inspection of details.

- Coordinate Measuring Machines (CMMs): Used for three-dimensional measurements of complex shapes.

- Spectrometers: Measure the wavelengths of light to identify and quantify substances.

- LCR meters: Measure inductance (L), capacitance (C), and resistance (R) in electrical components.

- Multimeters: Measure voltage, current, and resistance in electrical circuits.

The application of each instrument depends on the required accuracy, precision, and the nature of the measured quantity. Choosing the right instrument is critical for reliable and accurate results.

Q 7. Describe your experience with statistical process control (SPC) in precision measurement.

Statistical Process Control (SPC) is integral to maintaining precision in measurement. In my experience, SPC tools like control charts (e.g., X-bar and R charts) have been invaluable in monitoring measurement processes. I have used these charts to track the stability and capability of measurement systems over time. By plotting measurement data, we identify trends, shifts, and variations which could indicate issues such as instrument drift, environmental effects, or operator inconsistencies. These charts enable early detection of problems, preventing propagation of inaccurate measurements and allowing for timely corrective actions.

For example, in a manufacturing environment producing high-precision bearings, I implemented X-bar and R charts to monitor the diameter measurements. The charts revealed a systematic bias in measurements during the afternoon shift. Further investigation discovered a slight temperature drift in the measurement room affecting the micrometers’ performance. Corrective actions—including improved environmental control and more frequent calibration—were put in place, substantially improving the accuracy and stability of the measurement process. SPC ensures that the precision of our measurements is maintained and consistently meets the required specifications.
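
For readers who want to see how such chart limits are computed, the following is a minimal sketch of X-bar and R chart limits for subgroups of five readings. The constants A2, D3, and D4 are the standard tabulated values for subgroup size 5; the diameter data and the function name are made up for illustration.

```python
import statistics

# Standard control chart constants for subgroups of size 5.
A2, D3, D4 = 0.577, 0.0, 2.114

def xbar_r_limits(subgroups):
    """Return (LCL, centre line, UCL) for the X-bar chart and the R chart."""
    means = [statistics.mean(g) for g in subgroups]
    ranges = [max(g) - min(g) for g in subgroups]
    grand_mean = statistics.mean(means)
    mean_range = statistics.mean(ranges)
    return {
        "xbar": (grand_mean - A2 * mean_range, grand_mean, grand_mean + A2 * mean_range),
        "range": (D3 * mean_range, mean_range, D4 * mean_range),
    }

# Hypothetical bearing diameters in mm, five readings per subgroup.
subgroups = [
    [25.001, 25.003, 24.999, 25.002, 25.000],
    [25.002, 25.000, 25.001, 24.998, 25.001],
    [24.999, 25.001, 25.002, 25.000, 25.003],
    [25.000, 25.002, 24.998, 25.001, 25.001],
]

limits = xbar_r_limits(subgroups)
lcl, centre, ucl = limits["xbar"]

# Indices of subgroups whose mean falls outside the X-bar control limits.
out_of_control = [
    i for i, g in enumerate(subgroups)
    if not lcl <= statistics.mean(g) <= ucl
]
print(limits, out_of_control)
```

In practice the limits are usually established from a larger baseline (often 20 or more subgroups) and then applied to new data, so a shift like the afternoon bias shows up as points drifting toward or beyond a limit.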

Q 8. How do you handle outliers in measurement data?

Handling outliers in measurement data requires a careful approach, balancing the need for accurate representation with the potential for erroneous data points to skew results. Outliers are data points that significantly deviate from the overall pattern. Before discarding data, it’s crucial to understand the cause.

Identifying Outliers: I typically use statistical methods like the box plot or Z-score to identify outliers. The box plot visually represents the data’s distribution, highlighting points falling outside the whiskers (typically 1.5 times the interquartile range from the quartiles). The Z-score measures how many standard deviations a data point is from the mean; values exceeding a certain threshold (e.g., ±3) are considered outliers.

Handling Outliers: The best course of action depends on the outlier’s source. If a systematic error is suspected (e.g., a malfunctioning sensor), addressing the source is vital. If the cause remains unclear, several approaches are available:

- Removal: Removing outliers is a simple option, but only justified if there’s strong evidence of error (e.g., a clear recording error). Always document the reason for removal.

- Transformation: Logarithmic or other transformations can sometimes reduce the influence of outliers.

- Winsorizing/Trimming: Replacing outliers with less extreme values (Winsorizing) or removing them entirely (Trimming) is another option but should be done cautiously and documented.

- Robust Statistical Methods: Methods like median instead of mean are less sensitive to outliers.

Example: In a series of length measurements, one value is significantly larger than the others. If investigation reveals a recording error (e.g., an extra zero added), it’s appropriate to correct or remove the outlier. However, if no error is found, it’s safer to use robust methods like median or to carefully consider whether it represents a real, albeit rare, event.
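
The length example can be sketched with the interquartile-range rule and a robust summary. The data are invented, and the sketch deliberately uses only the IQR rule: with a handful of readings, one extreme value inflates the standard deviation so much that a ±3 Z-score threshold may never be reached.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Flag values lying more than k * IQR beyond the first or third quartile."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical length readings in mm; 120.2 looks like a misplaced decimal point.
lengths_mm = [12.01, 12.03, 11.99, 12.02, 12.00, 120.2, 12.01, 11.98]

flagged = iqr_outliers(lengths_mm)
print("Flagged:", flagged)
print("Mean:   ", round(statistics.mean(lengths_mm), 3))    # pulled far off by the outlier
print("Median: ", round(statistics.median(lengths_mm), 3))  # barely affected
```

The flagged value is a prompt for investigation, not an automatic deletion; the median shows what a robust estimate reports in the meantime.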

Q 9. Explain your understanding of traceability in calibration.

Traceability in calibration is the demonstrable link between the results of a measurement and national or international standards. It ensures the accuracy and reliability of measurement equipment by providing a chain of comparisons. Without traceability, measurements lack a verifiable reference, making them essentially meaningless for many applications.

The chain typically begins with national standards maintained by metrology institutes (e.g., NIST in the US). These institutes calibrate primary standards against fundamental physical constants or other highly accurate methods. Secondary standards are then calibrated against these primary standards, followed by working standards, and finally, the instruments used in daily measurements. Each step in this chain must be documented to maintain traceability.

Importance of Traceability:

- Confidence in Results: It provides confidence that measurements are accurate and reliable.

- Compliance: Many industries have regulations requiring traceability for quality control and assurance.

- Comparability: Traceable measurements allow for comparison of data from different sources and times.

Example: A laboratory calibrates a digital multimeter against a traceable voltage standard. The certificate will show the traceability chain back to national standards, validating the accuracy of the calibration. Without this chain, the multimeter readings would be questionable.

Q 10. How do you select the appropriate precision instrument for a specific measurement task?

Selecting the appropriate precision instrument involves careful consideration of several factors to ensure accurate and reliable measurements:

- Measurement Requirement: What needs to be measured (length, mass, voltage, temperature, etc.)? What is the required accuracy and precision?

- Range: What is the expected range of values?

- Resolution: What is the smallest increment the instrument can measure?

- Environmental Conditions: Will the instrument be used in extreme temperatures, humidity, or other challenging environments?

- Budget: Cost is a significant factor; more accurate and sophisticated instruments are generally more expensive.

- Ease of Use: The instrument should be user-friendly and provide clear, understandable results.

Example: If measuring the diameter of a tiny wire, a high-resolution optical micrometer would be needed. For measuring the mass of a large object, a precision balance with a suitable capacity would be appropriate. If measuring temperature within a high-temperature furnace, a rugged thermocouple with a suitable range and accuracy would be necessary. A simple digital multimeter suffices for routine electrical measurements but would be insufficient for highly precise electrical testing.

Q 11. Describe your experience with different types of sensors (e.g., optical, capacitive, inductive).

My experience encompasses various sensor technologies, each with its strengths and limitations:

- Optical Sensors: These sensors utilize light to perform measurements. Examples include photodiodes (measuring light intensity), interferometers (measuring displacement with extremely high precision), and optical fiber sensors (measuring temperature, pressure, or strain in remote or hazardous locations). I’ve worked extensively with optical interferometers in precision length measurements. The high precision makes them crucial for applications needing nanometer accuracy.

- Capacitive Sensors: These sensors measure changes in capacitance caused by variations in proximity, position, or dielectric properties. They are used in proximity sensors, touchscreens, and level measurement systems. Their advantages are high sensitivity and non-contact measurement.

- Inductive Sensors: These sensors detect changes in inductance, commonly used to sense the presence or position of metallic objects. They’re robust and often used in industrial automation and metal detection applications. I’ve used inductive sensors in proximity sensing for automated machinery, noting their sensitivity to metallic objects and their relatively simple design.

The choice of sensor depends on the application requirements. Optical sensors are preferred for high-precision applications, while capacitive and inductive sensors are suitable for robust, non-contact sensing.

Q 12. How do you ensure the proper maintenance and care of precision instruments?

Proper maintenance and care are essential for ensuring the accuracy and longevity of precision instruments. My approach involves:

- Regular Cleaning: Cleaning according to the manufacturer’s instructions is crucial. Dust, debris, or fingerprints can affect accuracy. Appropriate cleaning agents must be used to avoid damaging delicate surfaces.

- Calibration: Regular calibration against traceable standards is necessary to maintain accuracy. The calibration frequency depends on the instrument and its use.

- Proper Storage: Instruments should be stored in a controlled environment (temperature and humidity) to prevent damage and maintain stability.

- Handling with Care: Avoid dropping or rough handling. Follow manufacturer guidelines for handling and transportation.

- Preventative Maintenance: This might include lubricating moving parts, replacing worn components, or performing other tasks as recommended by the manufacturer.

- Documentation: Maintaining detailed records of calibration, maintenance, and repairs is essential.

Example: A high-precision balance needs regular cleaning to prevent dust accumulation that can skew weight readings. Calibrations should be scheduled according to the manufacturer’s recommendations or based on frequency of use, which might be annually or even more frequently depending on usage.

Q 13. What are some common sources of systematic errors in measurement?

Systematic errors are consistent, repeatable errors that affect all measurements in a similar way. Unlike random errors, they are not easily identified statistically, because repeating the measurement reproduces the same offset and averaging does not reduce it. Common sources include:

- Instrument Bias: A systematic error in the instrument itself, such as a miscalibration or inherent design flaw. For example, a thermometer consistently reads 1°C too high.

- Environmental Factors: Temperature, humidity, or pressure changes can systematically affect measurement results. For example, changes in ambient temperature affect the length of a metal measuring rod.

- Observer Bias: Subjective errors introduced by the observer, such as parallax error when reading a scale. For instance, consistently reading a scale from an angle can introduce bias.

- Loading Effect: The act of measurement can alter the quantity being measured. For example, a voltmeter whose input impedance is too low relative to the circuit’s source impedance draws current and pulls down the voltage it is trying to measure.

- Zero Offset: A consistent discrepancy between the measured value and the true value, due to a non-zero starting point.

Mitigation: Careful calibration, environmental control, and proper measurement techniques are crucial to minimize systematic errors. Using multiple instruments or measurement methods helps in identifying and correcting systematic errors.
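
As one worked example of correcting a known systematic effect, the sketch below scales a length measured on a warm part back to the 20 °C reference temperature used in dimensional metrology. The expansion coefficient is an assumed typical value for steel, the function name is hypothetical, and the sketch assumes the measuring instrument itself is at 20 °C or already compensated.

```python
ALPHA_STEEL_PER_K = 11.5e-6  # assumed typical value for steel; use the figure for the actual material
REFERENCE_TEMP_C = 20.0      # standard reference temperature for dimensional measurements

def length_at_reference(measured_mm, part_temp_c, alpha=ALPHA_STEEL_PER_K):
    """Scale a length measured at part_temp_c back to the 20 °C reference temperature."""
    return measured_mm / (1 + alpha * (part_temp_c - REFERENCE_TEMP_C))

measured = 100.000  # mm, read on a part at 25 °C
corrected = length_at_reference(measured, part_temp_c=25.0)
print(f"Corrected length: {corrected:.4f} mm (correction {corrected - measured:+.4f} mm)")
```

A 5 °C offset on a 100 mm steel part amounts to several micrometers, which is significant when tolerances are of a similar size.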

Q 14. Explain your experience with data acquisition systems.

Data acquisition systems (DAS) are crucial for collecting and processing data from multiple sensors and instruments. My experience includes designing, implementing, and utilizing DAS for various applications. These systems typically involve:

- Sensors: A variety of sensors to measure physical parameters (temperature, pressure, strain, etc.).

- Signal Conditioning: Amplification, filtering, and other processing steps to prepare the sensor signals for acquisition.

- Analog-to-Digital Converters (ADCs): Convert analog sensor signals into digital data that can be processed by a computer.

- Data Acquisition Hardware: This might include data loggers, multimeter interfaces, or specialized hardware for high-speed data acquisition.

- Software: Software for controlling the data acquisition process, storing data, and performing analysis.

Applications: I have worked with DAS in various contexts, including environmental monitoring, industrial process control, and precision metrology. For instance, in a precision manufacturing process, a DAS might acquire data from numerous sensors to monitor and control parameters like temperature, pressure, and vibration in real-time, ensuring product quality. In environmental monitoring, a DAS might collect data from various remote sensors to continuously monitor air or water quality.

Challenges: Designing a robust DAS involves considerations like data sampling rates, noise reduction, data storage and management, and data synchronization between multiple sensors. I have experience in addressing these challenges through proper hardware selection, signal processing, and careful software development.
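
One hardware-selection question that comes up early is whether the ADC resolution is fine enough for the signal. A minimal sketch of an ideal unipolar ADC follows; the full-scale range and bit depths are assumptions for illustration, and real converters add noise, offset, and nonlinearity on top of this.

```python
def adc_lsb(full_scale_volts, bits):
    """Smallest voltage step (1 LSB) of an ideal ADC."""
    return full_scale_volts / (2 ** bits)

def quantize(voltage, full_scale_volts, bits):
    """Ideal unipolar ADC: return the output code, clamped to the valid code range."""
    code = int(voltage / adc_lsb(full_scale_volts, bits))
    return max(0, min(code, 2 ** bits - 1))

for bits in (12, 16, 24):
    print(f"{bits}-bit ADC over 10 V: 1 LSB = {adc_lsb(10.0, bits) * 1e6:.2f} µV")

print(quantize(2.5, 10.0, 12))
```

If the sensor signal after conditioning changes by less than one LSB, the change is invisible to the system, so the resolution has to be matched to the smallest change that matters.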

Q 15. How do you interpret measurement data and draw meaningful conclusions?

Interpreting measurement data involves more than just looking at numbers; it’s about understanding the context, identifying trends, and drawing conclusions that are both statistically sound and practically meaningful. This process typically involves several steps:

- Data Cleaning: First, I’d check for outliers, errors, and inconsistencies in the data. This might involve identifying and removing bad data points caused by equipment malfunction or environmental factors. For example, if I’m measuring the length of a part and one reading is drastically different from the rest, I’d investigate – was there a vibration, a calibration issue, or a simple recording error?

- Statistical Analysis: Next, I’d perform relevant statistical analyses, depending on the data and research question. This could involve calculating means, standard deviations, or applying more advanced techniques like regression analysis or ANOVA to identify relationships between variables. For instance, I might use a t-test to compare the means of two different measurement sets to see if there’s a significant difference.

- Uncertainty Analysis: Crucially, I’d assess the uncertainty associated with each measurement. Precision measurement is not about getting a single, perfect number; it’s about understanding the range of values within which the true value likely lies. This involves considering the uncertainties contributed by the instrument itself, the measurement method, and the environment.

- Contextualization: Finally, I’d interpret the results in the context of the specific application. A small difference might be insignificant in one context but critical in another. For example, a 0.1 mm difference might be acceptable in a large structural component but catastrophic in a microelectronic device.

Ultimately, the goal is to communicate findings clearly and concisely, highlighting both the certainty and uncertainty in the conclusions.
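
The t-test mentioned in the statistical analysis step can be sketched with the standard library alone. The version below is Welch's t-test, which does not assume equal variances; it returns the test statistic and the Welch-Satterthwaite degrees of freedom but not a p-value, which would need a statistics package or a t-table. The two data sets and the function name are hypothetical.

```python
import statistics

def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and the Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error of each sample mean.
    se2_a = statistics.variance(sample_a) / n_a
    se2_b = statistics.variance(sample_b) / n_b
    t = (statistics.mean(sample_a) - statistics.mean(sample_b)) / (se2_a + se2_b) ** 0.5
    dof = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, dof

# Hypothetical length readings (mm) of the same part taken on two shifts.
morning = [10.01, 10.02, 10.00, 10.01, 10.01]
afternoon = [10.04, 10.05, 10.03, 10.04, 10.04]

t, dof = welch_t(morning, afternoon)
print(f"t = {t:.2f}, degrees of freedom = {dof:.1f}")
```

The magnitude of t is then compared with the critical value for the computed degrees of freedom; a statistically significant difference still has to be judged against the tolerance to decide whether it matters in practice.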

Q 16. Describe your proficiency in using different software for data analysis (e.g., LabVIEW, MATLAB).

I’m proficient in several software packages for data analysis, including LabVIEW and MATLAB. My experience with LabVIEW centers around its excellent capabilities for data acquisition and instrument control. I’ve used it extensively to automate measurement processes, develop custom data logging systems, and create user-friendly interfaces for complex instruments. For example, I developed a LabVIEW program to control a laser interferometer, automatically collect data, and perform real-time analysis of surface roughness.

MATLAB, on the other hand, is my go-to tool for more advanced statistical analysis and signal processing. I’ve used it to perform sophisticated data fitting, develop algorithms for noise reduction, and create visualizations to help communicate complex results. For example, I used MATLAB to analyze the frequency response of a high-precision sensor and identify potential sources of noise.

My experience extends beyond these two – I’m also comfortable working with Python and its scientific computing libraries (NumPy, SciPy, Matplotlib), which offer flexibility and powerful data manipulation capabilities.

Q 17. How do you troubleshoot issues with precision instruments?

Troubleshooting precision instruments requires a systematic approach, combining technical knowledge with a methodical process of elimination. My approach generally follows these steps:

- Identify the Problem: First, I clearly define the issue. Is the instrument not responding? Are the readings inaccurate or inconsistent? Is there an error message?

- Check the Obvious: I start with the simplest checks, such as power supply, connections, and calibration status. A loose cable or a power fluctuation can often be the culprit.

- Consult Documentation: I refer to the instrument’s manual, checking for troubleshooting guides or known issues. Manufacturers often provide detailed information about error codes and possible solutions.

- Systematic Testing: If the issue persists, I perform controlled tests to isolate the problem. This might involve comparing the instrument’s output to a known standard or using a different measurement method to verify the results.

- Calibration and Maintenance: I ensure the instrument is properly calibrated and has undergone any necessary maintenance procedures. Regular calibration is crucial for maintaining accuracy in precision measurements.

- Seek External Expertise: If the problem remains unsolved after these steps, I might seek assistance from the manufacturer or a specialist in precision instrumentation.

For instance, I once encountered a drift in a high-precision balance. After systematic checks ruled out environmental factors and calibration issues, I traced the problem to a faulty internal component that required replacement by a qualified technician.

Q 18. What are your experiences with different calibration standards and certifications?

My experience encompasses various calibration standards and certifications. I’m familiar with ISO/IEC 17025, which specifies the requirements for the competence of testing and calibration laboratories. Accredited laboratories must demonstrate that their results are traceable to national or international standards and must evaluate and report measurement uncertainty, which supports the reliability of their results.

I’ve worked extensively with calibration certificates issued by accredited laboratories, verifying the traceability of measurements to national standards like those provided by NIST (National Institute of Standards and Technology) in the US. I understand the importance of calibration intervals and the need to maintain detailed records of calibration history.

Furthermore, I’m adept at interpreting calibration data and assessing the uncertainty associated with calibrated instruments. Understanding these uncertainties is critical when evaluating the overall uncertainty of a measurement system.

Q 19. Explain your understanding of ISO 9000 and its relevance to precision measurement.

ISO 9000 is a family of international standards for quality management systems; ISO 9001 is the member of the family that sets out the requirements organizations are certified against. It is relevant to precision measurement because it provides a framework for keeping measurement processes accurate, reliable, and consistent within an organization, including requirements to calibrate or verify measuring equipment against traceable standards where traceability is required. Following these principles helps ensure that measurements are traceable, documented, and meet specified requirements.

For example, in a precision measurement laboratory, ISO 9000 principles would guide the development and implementation of procedures for instrument calibration, data handling, and quality control. This includes defining roles and responsibilities, implementing internal audits, and continuously improving processes to minimize errors and ensure the reliability of measurement results. The ultimate goal is customer satisfaction by delivering consistent and high-quality measurement services.

Q 20. Describe your experience with different types of measurement standards (e.g., NIST, ISO).

My work involves frequent interaction with various measurement standards, including those established by NIST (National Institute of Standards and Technology) and ISO (International Organization for Standardization). NIST provides highly accurate and traceable measurements that serve as the foundation for many national measurement systems. I utilize NIST-traceable standards to calibrate instruments and verify the accuracy of measurement results.

ISO standards, on the other hand, define procedures and guidelines for various measurement techniques and quality management systems. These standards ensure consistency and comparability of measurements across different organizations and countries. My experience includes working with ISO standards related to specific measurement methods and calibration procedures, ensuring adherence to best practices.

Understanding the interplay between these national and international standards is crucial for maintaining the traceability and reliability of precision measurements in a globalized environment.

Q 21. How do you validate the accuracy of a measurement system?

Validating the accuracy of a measurement system is a critical aspect of ensuring reliable results. This involves a combination of techniques to assess both the systematic and random errors present in the measurement process. A common approach involves:

- Comparison to a Known Standard: This is the most direct method. I’d compare the system’s output to a known standard, preferably one with significantly lower uncertainty than the system being validated. The difference reveals the system’s bias or systematic error.

- Repeatability and Reproducibility Studies: Repeated measurements under identical conditions assess repeatability, while measurements across different operators, instruments, or locations assess reproducibility. The variation observed quantifies the random error.

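- Uncertainty Analysis: A formal uncertainty analysis, following guidelines like the Guide to the Expression of Uncertainty in Measurement (GUM), is crucial to quantify the overall uncertainty of the measurement system. It combines the individual uncertainty components, whether evaluated statistically from repeated readings (Type A) or from other information such as calibration certificates and specifications (Type B), into a combined standard uncertainty, which is then expanded by a coverage factor to give an interval around the measured value at a stated level of confidence.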

- Control Charts: Monitoring measurements over time using control charts helps identify trends or shifts in the system’s performance, indicating potential problems that need attention. This proactive monitoring is vital for maintaining long-term accuracy.

For instance, when validating a new temperature measurement system, I might compare its readings against a calibrated reference thermometer, perform repeatability studies, analyze the system’s uncertainties, and implement a control chart to monitor its performance over time. The goal is to demonstrate that the system consistently delivers measurements within acceptable limits of uncertainty, fulfilling the requirements for the intended application.
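
A simplified view of the repeatability and reproducibility step can be sketched as follows. This is not a full Gage R&R study (which would normally use ANOVA or the average-and-range method across several parts); it only separates within-operator scatter from differences between operator means. The readings, operator names, and function names are hypothetical.

```python
import statistics

# Hypothetical readings (mm) of one reference part: three operators, five repeats each.
readings = {
    "operator_a": [20.001, 20.002, 20.000, 20.001, 20.001],
    "operator_b": [20.004, 20.005, 20.003, 20.004, 20.004],
    "operator_c": [20.002, 20.003, 20.001, 20.002, 20.002],
}

def repeatability(data):
    """Pooled within-operator standard deviation (equal group sizes assumed)."""
    variances = [statistics.variance(values) for values in data.values()]
    return statistics.mean(variances) ** 0.5

def operator_spread(data):
    """Standard deviation of the operator means: a rough reproducibility indicator."""
    means = [statistics.mean(values) for values in data.values()]
    return statistics.stdev(means)

rep = repeatability(readings)
spread = operator_spread(readings)
print(f"Repeatability: {rep * 1000:.2f} µm")
print(f"Operator-to-operator spread: {spread * 1000:.2f} µm")
```

Here the operator-to-operator spread is roughly twice the repeatability, which would point toward differences in technique rather than the instrument itself.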

Q 22. What are some advanced techniques for improving measurement precision?

Improving measurement precision involves a multifaceted approach, combining advanced instrumentation with meticulous techniques. Here are some key strategies:

- Environmental Control: Maintaining a stable environment is crucial. Temperature fluctuations, vibrations, and air currents can significantly impact precision. This involves using climate-controlled labs and vibration isolation tables.

- Calibration and Traceability: Regular calibration against national or international standards is paramount. Traceability ensures the accuracy of your measurements can be linked back to a known, reliable source. This is often documented using a calibration certificate.

- Statistical Process Control (SPC): SPC techniques help identify and minimize sources of variation in the measurement process. Control charts and other statistical tools allow for proactive monitoring and adjustments.

- Advanced Sensors and Instrumentation: Utilizing high-resolution sensors, such as those found in atomic force microscopes (AFMs) or laser interferometers, significantly enhances accuracy. These instruments are capable of measuring at the nanometer or even angstrom level.

- Data Acquisition and Analysis: Sophisticated data acquisition systems coupled with powerful analytical software allow for the processing of large datasets and the identification of subtle patterns or outliers that might indicate measurement errors.

- Error Compensation: Many instruments incorporate algorithms to compensate for known systematic errors. For example, a CMM might correct for thermal expansion of its own components.

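Imagine trying to measure the thickness of a human hair with a ruler: the hair is far thinner than the ruler’s smallest graduation, so the reading is little more than a guess. An optical interferometer, by contrast, can resolve displacements at the nanometer level or below, a world of difference.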
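The error-compensation idea can be sketched for the simplest case, linear thermal expansion of the part being measured. This is a minimal illustration, not any instrument's actual algorithm: the function name is hypothetical, the expansion coefficient is a typical textbook value for steel, and the measuring scale itself is assumed to be unaffected by temperature.

```python
REFERENCE_TEMP_C = 20.0  # standard reference temperature for dimensional measurement

def length_at_reference(measured_length_mm, part_temp_c, alpha_per_c):
    """Correct a measured length back to 20 °C using linear thermal expansion.

    Model: L_T = L_20 * (1 + alpha * (T - 20)), solved for L_20.
    """
    return measured_length_mm / (1.0 + alpha_per_c * (part_temp_c - REFERENCE_TEMP_C))

ALPHA_STEEL = 11.5e-6  # 1/°C, illustrative value only

# A nominally 100 mm steel part measured while 4 °C warmer than the reference
corrected = length_at_reference(100.0046, part_temp_c=24.0, alpha_per_c=ALPHA_STEEL)
print(f"Length corrected to 20 °C: {corrected:.4f} mm")
```

Under these assumptions a 100 mm steel part grows by about 4.6 µm for a 4 °C rise, which is already larger than the tolerance on many precision components.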

Q 23. Explain your experience with dimensional metrology techniques.

My experience in dimensional metrology spans various techniques, including:

- Coordinate Measuring Machine (CMM) operation: I’m proficient in operating various types of CMMs (bridge, gantry, and articulated arm), performing dimensional inspections, and generating detailed reports. I’ve used CMMs extensively for quality control in manufacturing, ensuring parts meet specified tolerances. For instance, I helped a client identify a subtle flaw in their injection-molded plastic parts that was only detectable using a high-accuracy CMM.

- Optical metrology: This includes techniques like laser scanning and structured light scanning, used for rapid and non-contact 3D surface measurement. These are useful for complex shapes that are difficult to measure using traditional CMMs.

- Interferometry: I’ve utilized interferometry, including laser interferometry, for highly precise length measurements, often in calibration labs. The technique uses the laser’s wavelength as the length reference and counts interference fringes as the measured distance changes, which yields extremely fine resolution.

I’m particularly adept at selecting the appropriate metrology technique based on the specific application and the desired level of accuracy. For example, while a CMM might be suitable for measuring the dimensions of a machined part, laser interferometry would be preferred for calibrating a high-precision linear scale.
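To make the interferometry principle concrete, here is a minimal sketch of converting a fringe count into a displacement for a Michelson-type arrangement. The function name is hypothetical, and the defaults (an approximate He-Ne vacuum wavelength and a typical refractive index for air) are illustrative assumptions; real systems measure air temperature, pressure, and humidity to correct the wavelength.

```python
def displacement_from_fringes(fringe_count,
                              vacuum_wavelength_m=632.991e-9,
                              refractive_index=1.00027):
    """Mirror displacement in meters for a Michelson-type interferometer.

    One full fringe corresponds to half a wavelength of mirror travel,
    because the beam crosses the changed path twice (out and back).
    """
    wavelength_in_air = vacuum_wavelength_m / refractive_index
    return fringe_count * wavelength_in_air / 2.0

# Example: 10,000 counted fringes
d = displacement_from_fringes(10_000)
print(f"Displacement: {d * 1e3:.4f} mm")
```

Commercial instruments interpolate within a fringe electronically, which is how they reach resolutions far below half a wavelength.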

Q 24. How do you manage and document calibration records effectively?

Effective calibration record management is crucial for maintaining the integrity of measurement data. My approach involves:

- Centralized Database: I use a computerized system to track calibration records, ensuring easy access and searchability. This database typically includes instrument details, calibration dates, results, and any corrective actions taken.

- Unique Identification: Each instrument is assigned a unique identification number to avoid confusion and ensure traceability.

- Calibration Certificates: Calibration certificates are meticulously filed and archived, either digitally or physically, adhering to appropriate storage conditions.

- Automated Reminders: The system generates automated reminders for upcoming calibrations, preventing overdue checks and potential measurement inaccuracies.

- Auditable Trail: The system maintains a complete audit trail of all actions taken, ensuring traceability and accountability.

- Compliance with Standards: All procedures follow relevant standards, such as ISO/IEC 17025 for testing and calibration laboratories.

Think of it like maintaining a meticulous medical record – each entry is crucial, and proper organization ensures the patient (in this case, the measurement system) remains healthy and accurate.
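The record-keeping approach above can be sketched as a small data model with a reminder query. Everything here is hypothetical (field names, instrument IDs, certificate references, the 30-day warning window); a production system would sit on a database with access control and an audit log.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class CalibrationRecord:
    instrument_id: str        # unique identifier for traceability
    description: str
    last_calibrated: date
    interval_days: int        # calibration interval
    certificate_ref: str = "" # pointer to the archived certificate

    @property
    def due_date(self) -> date:
        return self.last_calibrated + timedelta(days=self.interval_days)

def calibrations_due(records, today, warn_days=30):
    """Return records that are overdue or due within warn_days, soonest first."""
    cutoff = today + timedelta(days=warn_days)
    return sorted((r for r in records if r.due_date <= cutoff),
                  key=lambda r: r.due_date)

records = [
    CalibrationRecord("MIC-0012", "Outside micrometer 0-25 mm", date(2024, 1, 15), 365, "CERT-1001"),
    CalibrationRecord("CMM-0001", "Bridge CMM", date(2024, 6, 1), 365, "CERT-1002"),
]
today = date(2025, 1, 2)
for r in calibrations_due(records, today):
    status = "OVERDUE" if r.due_date < today else "due soon"
    print(r.instrument_id, r.due_date.isoformat(), status)
```

Deriving the due date from the last calibration and the interval, instead of storing it separately, keeps the two from drifting out of sync when a record is updated.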

Q 25. Describe your understanding of the principles of uncertainty propagation.

Uncertainty propagation describes how uncertainties in individual measurements contribute to the uncertainty in the final result. It’s based on the principle that the uncertainty of a calculated quantity is related to the uncertainties of the input measurements.

For instance, if you’re calculating the area of a rectangle (Area = Length x Width), the uncertainty in the area will depend on the uncertainties in both the length and width measurements. The formula for the combined uncertainty depends on the mathematical relationship between the variables. When the inputs are uncorrelated, sums and differences combine the absolute uncertainties as the square root of the sum of the squares (RSS), while products and quotients, such as the area here, combine the relative uncertainties in the same way.
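For reference, the first-order propagation rule for uncorrelated inputs, and its application to the rectangle, can be written out as follows. The symbols (u for standard uncertainty, L and W for length and width, A for area) are notation introduced here for illustration.

```latex
% General first-order rule for y = f(x_1, ..., x_n) with uncorrelated inputs
u_c^2(y) = \sum_{i=1}^{n} \left( \frac{\partial f}{\partial x_i} \right)^2 u^2(x_i)

% Rectangle area A = L W: \partial A / \partial L = W, \partial A / \partial W = L
u^2(A) = W^2 \, u^2(L) + L^2 \, u^2(W)

% Dividing by A^2 = L^2 W^2 gives the relative form
\left( \frac{u(A)}{A} \right)^2 = \left( \frac{u(L)}{L} \right)^2 + \left( \frac{u(W)}{W} \right)^2
```

If the inputs are correlated, additional covariance terms appear and the simple quadrature sum no longer holds.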

Understanding uncertainty propagation is critical for reporting measurement results responsibly. Instead of presenting a single value, we provide a range of values within which the true value is likely to lie, along with a confidence level. This acknowledges the inherent uncertainties in any measurement process.

For example, if we measure length as 10 ± 0.1 mm and width as 5 ± 0.05 mm, we don’t simply report the area as 50 mm². Each input has a relative uncertainty of 1%, so, assuming the two measurements are uncorrelated, the relative uncertainty of the area is √(1%² + 1%²) ≈ 1.4%. The result is a statement like “Area = 50.0 ± 0.7 mm²”, quoted at the same confidence level as the input uncertainties.
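The arithmetic for this rectangle can be checked with a few lines of Python. This is a minimal sketch assuming uncorrelated inputs and first-order propagation; the function name is hypothetical.

```python
import math

def product_uncertainty(a, u_a, b, u_b):
    """Value and first-order uncertainty of a*b for uncorrelated inputs.

    Relative uncertainties are combined in quadrature (root sum of squares).
    """
    value = a * b
    relative = math.hypot(u_a / a, u_b / b)
    return value, abs(value) * relative

area, u_area = product_uncertainty(10.0, 0.1, 5.0, 0.05)
print(f"Area = {area:.1f} ± {u_area:.1f} mm²")  # prints: Area = 50.0 ± 0.7 mm²
```

Note that simply adding the two worst-case contributions (0.5 + 0.5 = 1.0 mm²) would overstate the uncertainty for independent measurements, which is why the quadrature sum is used.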

Q 26. What is your experience with laser interferometry or other advanced measurement techniques?

I have extensive experience with laser interferometry, a powerful technique for highly precise distance measurements. I’ve used it for various applications, including:

- Calibration of linear scales and encoders: Laser interferometers provide the reference standard for calibrating these crucial components of many precision measurement systems.

- Precision displacement measurements: Measuring displacement in applications that require sub-micrometer resolution, such as semiconductor manufacturing and nanotechnology research.

- Straightness and flatness testing: By measuring the distance between a reference surface and a test surface at multiple points, we can determine the straightness or flatness with extreme accuracy.

Beyond laser interferometry, I’m also familiar with other advanced techniques like white-light interferometry (for surface profiling) and confocal microscopy (for high-resolution imaging and surface topography measurements). Each technique has its own strengths and weaknesses, and I choose the optimal approach depending on the application.
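The straightness test mentioned above can be sketched as a data-reduction step: fit a reference line to the measured heights and report the spread of the residuals. This sketch assumes a least-squares reference line, which is only one common convention (a minimum-zone evaluation can give a slightly smaller value); the function name and sample data are hypothetical.

```python
from statistics import mean

def straightness_deviation(positions, heights):
    """Peak-to-valley deviation from the least-squares line through the points."""
    if len(positions) != len(heights) or len(positions) < 3:
        raise ValueError("need at least three matching position/height pairs")
    x_bar, y_bar = mean(positions), mean(heights)
    sxx = sum((x - x_bar) ** 2 for x in positions)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(positions, heights))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residuals remove the overall tilt of the setup, leaving only form error
    residuals = [y - (slope * x + intercept) for x, y in zip(positions, heights)]
    return max(residuals) - min(residuals)

# Hypothetical readings: position along the axis in mm, height in µm
positions = [0, 100, 200, 300, 400]
heights = [0.0, 1.2, 3.1, 3.4, 4.6]
print(f"Straightness: {straightness_deviation(positions, heights):.2f} µm")
```

Removing the best-fit line first matters because the alignment between the laser beam and the axis under test is never perfect; without it, setup tilt would be reported as a straightness error.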

Q 27. How familiar are you with different types of coordinate measuring machines (CMMs)?

My familiarity with coordinate measuring machines (CMMs) includes various types:

- Bridge CMMs: These are commonly used in industrial settings for measuring the dimensions and geometry of parts. They offer good accuracy and versatility.

- Gantry CMMs: Typically larger than bridge CMMs, they provide a larger measurement volume and are suitable for inspecting large parts or assemblies.

- Articulated Arm CMMs: These are portable CMMs offering flexibility in accessing hard-to-reach areas. They are less precise than bridge or gantry CMMs but are convenient for on-site measurements.

Beyond the mechanical design, my understanding includes various probing systems (touch-trigger, scanning, optical), software packages used for programming measurement routines and data analysis, and the importance of proper setup and calibration for achieving accurate results. I’m familiar with the limitations of each type and select the optimal CMM based on the specific application requirements.

Q 28. Describe your experience with quality control procedures related to precision measurement.

My experience with quality control (QC) procedures related to precision measurement is extensive and encompasses:

- Measurement System Analysis (MSA): This is crucial for assessing the capability of the measurement system itself. Techniques like gage repeatability and reproducibility (Gage R&R) studies are used to quantify how much of the observed variation comes from the measurement process as opposed to the parts being measured.

- Statistical Process Control (SPC): Implementing control charts to monitor the stability of the measurement process and identify potential sources of variation.

- Calibration Management: Ensuring all measuring instruments are regularly calibrated and their calibration status is tracked effectively.

- Standard Operating Procedures (SOPs): Adhering to and developing SOPs for all measurement procedures to maintain consistency and accuracy.

- Data Analysis and Reporting: Proper analysis of measurement data, including the calculation and reporting of measurement uncertainty, is essential for making informed decisions regarding product quality.

- Root Cause Analysis: Investigating and rectifying sources of measurement error or inconsistencies.

A real-world example: During a QC audit at a manufacturing plant, we identified significant variation in the measurements taken by different operators using the same CMM. Through a Gage R&R study, we pinpointed inconsistencies in the operators’ measurement procedures, leading to revised training and a significant improvement in data consistency.
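A Gage R&R study like the one in this example can be reduced with a two-way ANOVA. The sketch below is a simplified illustration, assuming a balanced crossed design (every operator measures every part the same number of times, at least twice) and clipping negative variance estimates to zero; the function name and data layout are hypothetical, and it is not a substitute for a validated MSA tool.

```python
from statistics import mean

def gage_rr_variances(data):
    """Variance components from a balanced crossed Gage R&R study.

    data[p][o] is the list of repeat readings of part p by operator o.
    """
    n_parts, n_ops, n_trials = len(data), len(data[0]), len(data[0][0])
    cell = [[mean(trials) for trials in part] for part in data]
    part_mean = [mean(row) for row in cell]
    op_mean = [mean(cell[p][o] for p in range(n_parts)) for o in range(n_ops)]
    grand = mean(part_mean)

    # Sums of squares for parts, operators, interaction, and repeat error
    ss_part = n_ops * n_trials * sum((m - grand) ** 2 for m in part_mean)
    ss_op = n_parts * n_trials * sum((m - grand) ** 2 for m in op_mean)
    ss_inter = n_trials * sum(
        (cell[p][o] - part_mean[p] - op_mean[o] + grand) ** 2
        for p in range(n_parts) for o in range(n_ops))
    ss_error = sum(
        (x - cell[p][o]) ** 2
        for p in range(n_parts) for o in range(n_ops) for x in data[p][o])

    ms_part = ss_part / (n_parts - 1)
    ms_op = ss_op / (n_ops - 1)
    ms_inter = ss_inter / ((n_parts - 1) * (n_ops - 1))
    ms_error = ss_error / (n_parts * n_ops * (n_trials - 1))

    repeatability = ms_error
    interaction = max(0.0, (ms_inter - ms_error) / n_trials)
    operator = max(0.0, (ms_op - ms_inter) / (n_parts * n_trials))
    part = max(0.0, (ms_part - ms_inter) / (n_ops * n_trials))
    reproducibility = operator + interaction
    return {
        "repeatability": repeatability,
        "reproducibility": reproducibility,
        "gage_rr": repeatability + reproducibility,
        "part": part,
    }

# Hypothetical study: 3 parts x 2 operators x 2 trials, readings in mm
study = [
    [[10.01, 10.02], [10.04, 10.05]],
    [[10.51, 10.50], [10.53, 10.55]],
    [[11.00, 11.02], [11.04, 11.03]],
]
result = gage_rr_variances(study)
total = result["gage_rr"] + result["part"]
print(f"Gage R&R share of total variance: {100 * result['gage_rr'] / total:.1f}%")
```

A large reproducibility component relative to repeatability points to differences between operators, which is the pattern that led to the revised training in the example above.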

Key Topics to Learn for Precision Measurement Instruments Interview

- Calibration and Uncertainty Analysis: Understanding systematic and random errors, calibration procedures, and methods for minimizing uncertainty in measurement results. Practical application includes analyzing data from various instruments to determine accuracy and reliability.

- Sensor Technologies: Familiarity with different types of sensors (e.g., optical, capacitive, inductive, piezoelectric) and their applications in precision measurement. Practical application includes selecting appropriate sensors for specific measurement tasks and understanding their limitations.

- Data Acquisition and Signal Processing: Knowledge of data acquisition systems, analog-to-digital conversion, signal conditioning techniques, and digital signal processing methods for enhancing measurement accuracy. Practical application includes designing and implementing a data acquisition system for a specific measurement challenge.

- Metrology Principles: A strong grasp of fundamental metrological concepts, including traceability, standards, and quality control procedures. Practical application includes developing and implementing quality control procedures for a precision measurement lab.

- Specific Instrument Knowledge: Depending on the role, in-depth understanding of specific types of precision measurement instruments (e.g., interferometers, CMMs, spectrometers) and their operating principles. Practical application includes troubleshooting and maintaining these instruments.

- Problem-Solving and Troubleshooting: Ability to diagnose and resolve issues related to measurement inaccuracies, instrument malfunctions, and data interpretation. Practical application includes developing effective strategies for troubleshooting measurement problems in a real-world setting.

Next Steps

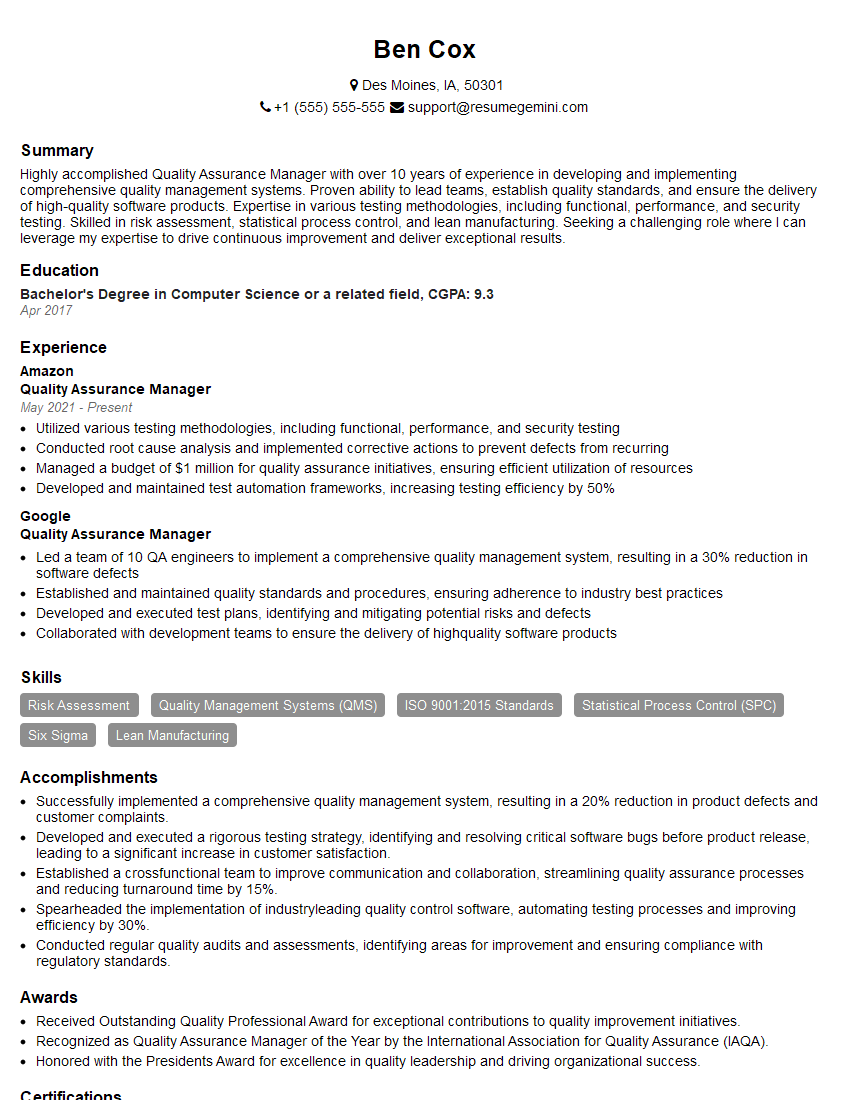

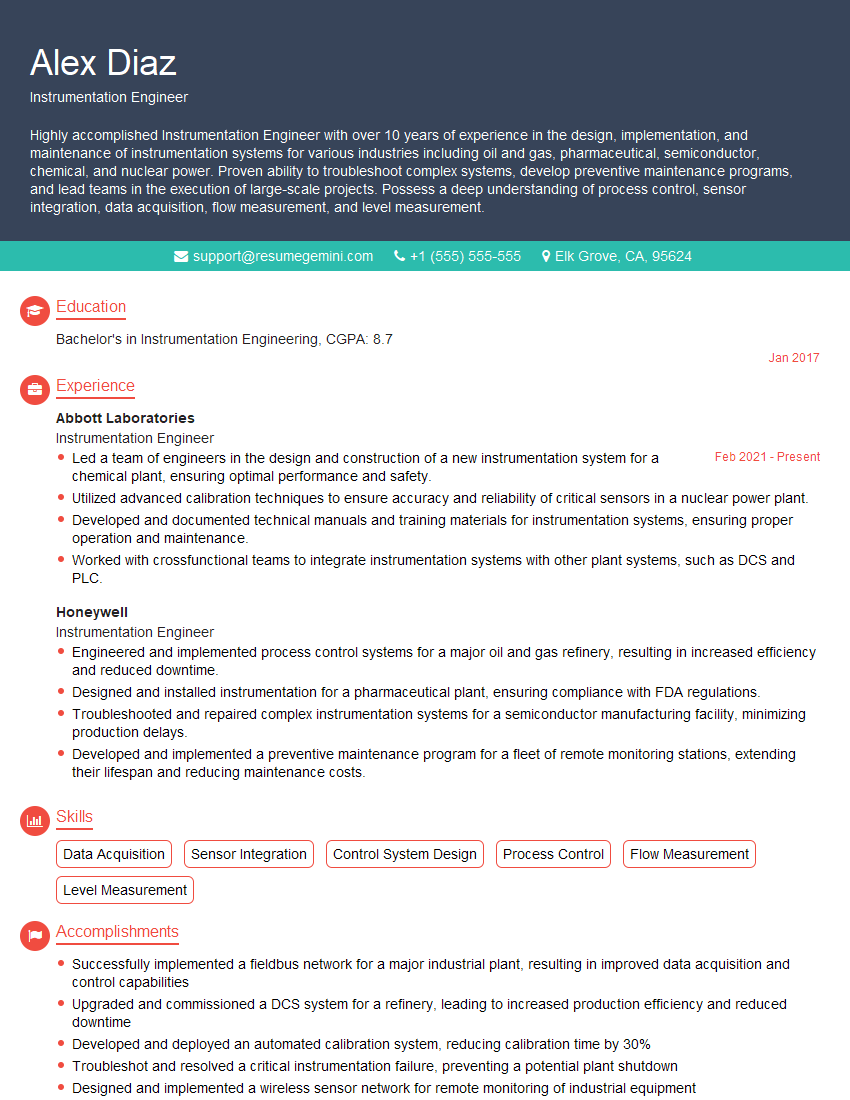

Mastering precision measurement instruments opens doors to career opportunities in diverse fields, with strong growth and earning potential. A strong resume is crucial for showcasing your expertise to potential employers, and an ATS-friendly resume increases your chances of getting noticed by recruiters. ResumeGemini is a trusted resource that can help you build a professional and impactful resume tailored to your experience in precision measurement instruments. Examples of resumes tailored to this field are available to guide you through the process.
