Preparation is the key to success in any interview. In this post, we’ll explore key interview questions on digital archiving and storage systems and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Digital Archiving and Storage Systems Interviews

Q 1. Explain the difference between cold storage and hot storage.

The difference between hot and cold storage lies primarily in accessibility and cost. Think of it like this: hot storage is your readily available, frequently used information – like your kitchen pantry. Cold storage is your long-term storage, like your attic or basement. You access it less frequently, and retrieving items takes longer.

- Hot Storage: Characterized by fast access times and high cost per gigabyte. Ideal for frequently accessed data such as active databases, operational files, and short-term backups. Examples include SSDs (Solid State Drives) and fast, readily accessible network storage.

- Cold Storage: Designed for infrequent access, featuring low cost per gigabyte but slower retrieval times. Perfect for long-term archiving, inactive data sets, and disaster recovery. Examples include tape libraries, cloud-based archival storage, and infrequently accessed hard drives.

In practice, organizations use a tiered storage strategy combining hot and cold storage to optimize costs and access speeds. Frequently used data resides in hot storage, while less frequently accessed data is migrated to colder tiers over time.
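As a rough illustration of a tiering rule, the sketch below assigns a tier from the time since a file was last accessed. The 30-day and 180-day thresholds and the function name `choose_tier` are assumptions made for this example; real cut-offs depend on access patterns and storage pricing.

```python
from datetime import datetime, timedelta

# Hypothetical thresholds, chosen only for illustration.
HOT_WINDOW = timedelta(days=30)
WARM_WINDOW = timedelta(days=180)


def choose_tier(last_accessed: datetime, now: datetime) -> str:
    """Return 'hot', 'warm', or 'cold' based on time since last access."""
    age = now - last_accessed
    if age <= HOT_WINDOW:
        return "hot"
    if age <= WARM_WINDOW:
        return "warm"
    return "cold"


now = datetime(2024, 6, 1)
print(choose_tier(datetime(2024, 5, 20), now))  # accessed 12 days ago
print(choose_tier(datetime(2023, 1, 15), now))  # accessed over a year ago
```

A scheduled job could run a rule like this over an inventory and queue the files whose tier has changed for migration.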

Q 2. Describe your experience with different archiving formats (e.g., TIFF, PDF/A, etc.).

My experience spans a range of archiving formats, each with its own strengths and weaknesses. The choice of format depends heavily on the content being archived and its intended longevity.

- TIFF (Tagged Image File Format): Excellent for preserving high-resolution images, supporting lossless compression to maintain image quality. I’ve utilized TIFF extensively in projects archiving historical photographs and engineering drawings, where preserving detail is paramount.

- PDF/A (Portable Document Format/Archive): A standard specifically designed for long-term archiving of documents. It ensures that the document remains viewable and searchable across different software and operating systems over time. I’ve worked with PDF/A extensively in legal and governmental archiving projects, where compliance with archival standards is critical.

- Other Formats: I’ve also worked with formats such as Open XML (for office documents), preservation-focused audio/video formats, and even specialized formats for scientific data sets. The key is always to select the format most appropriate for the data type and long-term preservation requirements.

Format selection is a crucial decision. A poorly chosen format can lead to data loss or corruption over time, undermining the whole purpose of archiving.

Q 3. What are the key considerations for metadata in digital archiving?

Metadata is absolutely vital in digital archiving; it’s the descriptive information that makes your archived data searchable and understandable. Without it, your archive becomes a digital black hole.

- Descriptive Metadata: This includes basic information like title, author, date created, and subject. It’s the stuff you’d find on a library card.

- Administrative Metadata: This captures information about the file itself, like file size, format, creation date, and location within the archive. It helps manage the archive.

- Structural Metadata: This describes how different parts of a digital object relate to each other. For example, the chapters in a book, or frames in a video.

- Preservation Metadata: Crucially, this covers information necessary for long-term preservation, such as checksums (to ensure data integrity), format details, and migration strategies.

Think of it like organizing a large library. Descriptive metadata is like the book title and author, administrative metadata is like the library catalog number, and preservation metadata is like the instructions on how to keep the book safe from damage.
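To show how the four categories can sit together in one record, here is a minimal sketch. The class name `ArchiveRecord`, its field names, and the sample values are all hypothetical; a production archive would normally follow an established schema rather than an ad hoc structure like this.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ArchiveRecord:
    """Hypothetical record grouping the four metadata categories."""

    # Descriptive metadata
    title: str
    creator: str
    date_created: str
    # Administrative metadata
    file_format: str
    size_bytes: int
    storage_path: str
    # Structural metadata: ordered parts of the digital object
    parts: List[str] = field(default_factory=list)
    # Preservation metadata
    sha256: str = ""
    preservation_events: List[str] = field(default_factory=list)


record = ArchiveRecord(
    title="Annual report 1998",
    creator="Records office",
    date_created="1998-12-31",
    file_format="PDF/A-1b",
    size_bytes=482133,
    storage_path="reports/1998/annual.pdf",
    parts=["cover", "chapter-1", "chapter-2"],
    sha256="(computed at ingest)",
    preservation_events=["ingested"],
)

# Serialize the record so it can be stored alongside the file.
print(json.dumps(asdict(record), indent=2))
```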

Q 4. How do you ensure data integrity in a digital archive?

Ensuring data integrity is paramount in digital archiving. It involves a multi-pronged approach.

- Checksums: These are fixed-length values, usually computed with a cryptographic hash function such as SHA-256, that act like a digital fingerprint for your files. Any change to the file, even a single bit, will result in a different checksum. Regularly calculating and verifying checksums is essential.

- Redundancy: Storing multiple copies of your data in different locations protects against hardware failure and other unforeseen events. This could be on different drives, in different buildings, or even using geographically distributed cloud storage.

- Data Validation: Regularly checking the data itself, perhaps using automated processes, to ensure its consistency and completeness.

- Format Migration: Recognizing that storage formats evolve, planning for the migration of data to newer formats as needed. This avoids future obsolescence and access issues.

- Access Controls: Implementing strong access controls to prevent unauthorized modification or deletion of data.

A robust data integrity strategy minimizes the risk of data loss or corruption and ensures the long-term value of your archive.
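The checksum step can be sketched in a few lines of Python using the standard `hashlib` module. The choice of SHA-256, the helper names `sha256_of` and `verify`, and the temporary sample file are assumptions for illustration only.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: Path, expected: str) -> bool:
    """Return True if the file still matches the checksum recorded earlier."""
    return sha256_of(path) == expected


with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "record.txt"
    sample.write_bytes(b"archived content")
    stored = sha256_of(sample)            # recorded at ingest
    intact = verify(sample, stored)       # later fixity check on unchanged file
    sample.write_bytes(b"archived c0ntent")  # simulate silent corruption
    still_intact = verify(sample, stored)

print(intact, still_intact)
```

In practice the recorded checksum would live in the preservation metadata, and the verification would run on a schedule across every stored copy.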

Q 5. Explain your experience with different backup and recovery strategies.

My experience with backup and recovery strategies involves a combination of approaches tailored to the specific needs of different projects.

- 3-2-1 Backup Rule: I frequently utilize the 3-2-1 rule: three copies of data, on two different media types, with one copy stored offsite. This provides a strong level of protection against data loss.

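- Incremental and Differential Backups: For efficiency, I often implement incremental or differential backups. An incremental backup copies only the changes since the most recent backup of any type, while a differential backup copies all changes since the last full backup. Both reduce storage requirements and backup time compared with repeated full backups.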

- Cloud-Based Backup: Leveraging cloud storage for offsite backups provides redundancy and protection against local disasters. I frequently use it to guard against physical damage to, or theft of, on-premises equipment.

- Disaster Recovery Planning: I also ensure the development of comprehensive disaster recovery plans, which outline detailed procedures for recovering data and systems in the event of a major incident. This includes regularly testing the recovery procedures.

The choice of backup and recovery strategy should be carefully considered based on factors such as data sensitivity, budget, and recovery time objectives (RTO).
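The difference between the two partial backup types comes down to the reference point used to select files. In the sketch below, a differential backup selects everything modified since the last full backup, and an incremental backup selects everything modified since the most recent backup of any kind. The file names, dates, and the helper `files_to_back_up` are made up for the example, and modification time is used as a simplifying stand-in for real change detection.

```python
from datetime import datetime


def files_to_back_up(mtimes: dict, since: datetime) -> list:
    """Return the names of files modified after the reference time, sorted."""
    return sorted(name for name, mtime in mtimes.items() if mtime > since)


# Hypothetical inventory: file name -> last modification time.
mtimes = {
    "a.tif": datetime(2024, 1, 2),
    "b.pdf": datetime(2024, 1, 5),
    "c.wav": datetime(2023, 12, 30),
}
last_full = datetime(2024, 1, 1)    # last full backup
last_backup = datetime(2024, 1, 4)  # most recent backup of any kind

differential = files_to_back_up(mtimes, last_full)
incremental = files_to_back_up(mtimes, last_backup)
print(differential, incremental)
```

The trade-off is on restore: a differential restore needs the full backup plus one differential, while an incremental restore needs the full backup plus every incremental taken since.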

Q 6. What are your experiences with version control in digital archives?

Version control in digital archives is crucial for tracking changes and ensuring the availability of previous versions. It’s like having a detailed history of how a document evolved.

- Software-Based Version Control: I utilize software-based version control systems like Git for managing text-based files and metadata. This allows for tracking changes, reverting to earlier versions, and collaborating with others.

- Metadata-Based Versioning: For non-textual data, such as images and videos, I rely on metadata to record version information. This might involve adding version numbers or timestamps to filenames or embedding version information within the file metadata itself.

- Retention Policies: Implementing clear retention policies determines which versions are retained and for how long. This helps to manage storage space and ensure compliance with relevant regulations.

Proper version control maintains the integrity of your archive by ensuring you always have access to previous versions of your files, protecting against accidental data loss or corruption.

Q 7. Describe your experience with different storage technologies (e.g., cloud, tape, disk).

My experience encompasses a wide range of storage technologies, each offering distinct advantages and disadvantages.

- Cloud Storage: Offers scalability, accessibility, and cost-effectiveness for many projects. I frequently use cloud services like AWS S3, Azure Blob Storage, and Google Cloud Storage for archiving and backup. However, cost can become a significant factor for very large archives, and data security and privacy must be carefully considered.

- Tape Storage: Provides a cost-effective solution for long-term archiving of large datasets. Tape libraries are ideal for cold storage, offering high storage density and durability. However, access speeds are significantly slower compared to disk-based systems.

- Disk Storage: Offers faster access speeds compared to tape, making it suitable for both hot and warm storage. Disk arrays or storage area networks (SANs) are commonly used for this purpose. However, the cost per gigabyte is typically higher than tape, and the lifespan of disk drives is a factor to consider.

The optimal storage technology depends on factors like budget, access requirements, data volume, and long-term preservation goals. Often a hybrid approach, combining different technologies, is the most effective solution.

Q 8. How do you handle data migration from legacy systems to modern archives?

Migrating data from legacy systems to modern archives is a crucial process requiring careful planning and execution. It involves a multi-stage approach focusing on data assessment, extraction, transformation, and loading (ETL), validation, and finally, the archival process itself.

First, we conduct a thorough assessment of the legacy system, identifying the data to be migrated, its format, structure, and potential challenges. This involves understanding the system’s architecture, data types, and any existing metadata. For example, migrating from a COBOL-based mainframe system to a cloud-based archive requires careful mapping of data fields and structures. This step often involves working closely with database administrators and system architects.

Next, the ETL process begins. Data is extracted from the legacy system, transformed to be compatible with the modern archive (e.g., converting file formats, cleaning up inconsistencies), and loaded into the new system. Automated tools are heavily utilized, and rigorous testing is critical at every step to ensure data integrity. Imagine migrating images – we’d need to standardize resolution and file formats to ensure consistency across the archive.

Validation is crucial. We use checksums and other verification methods to confirm data integrity during and after the migration. Finally, the migrated data is indexed and organized within the modern archive, ready for retrieval and future use. This may involve creating new metadata schemas or enriching existing metadata to improve searchability and discoverability.
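One way to sketch the validation step is to build a checksum manifest on each side and compare them. This only applies to files that are copied unchanged; files whose format is converted during transformation need a different check. The in-memory byte strings stand in for real files, and the function names are hypothetical.

```python
import hashlib


def manifest(files: dict) -> dict:
    """Map each file name to the SHA-256 digest of its content."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in files.items()}


def compare(source: dict, target: dict) -> dict:
    """Report files that are missing, unexpected, or altered after migration."""
    shared = set(source) & set(target)
    return {
        "missing": sorted(set(source) - set(target)),
        "unexpected": sorted(set(target) - set(source)),
        "altered": sorted(name for name in shared if source[name] != target[name]),
    }


# Stand-ins for the legacy system and the new archive.
legacy = {"ledger.csv": b"1,2,3", "memo.txt": b"hello", "scan.tif": b"\x00\x01"}
migrated = {"ledger.csv": b"1,2,3", "memo.txt": b"hellO"}

report = compare(manifest(legacy), manifest(migrated))
print(report)
```

An empty report for all three categories is the condition to meet before the legacy copy is retired.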

Q 9. Explain your understanding of data retention policies and compliance regulations.

Data retention policies define how long an organization must keep different types of data, while compliance regulations dictate the legal and regulatory requirements for data storage, security, and access. Understanding and adhering to both is essential for mitigating legal risk and maintaining data integrity.

Retention policies vary depending on data sensitivity, legal obligations, and business requirements. For instance, financial records might need to be kept for seven years, while marketing data may have shorter retention periods. These policies must be documented and regularly reviewed, taking into account changes in regulations and business needs.

Compliance regulations, such as GDPR (General Data Protection Regulation), HIPAA (Health Insurance Portability and Accountability Act), and SOX (Sarbanes-Oxley Act), impose strict rules on data handling. They dictate how data is collected, processed, stored, secured, and ultimately disposed of. Non-compliance can lead to hefty fines and reputational damage. For instance, ensuring compliance with GDPR requires detailed documentation of consent processes and enabling user rights concerning access, correction, and deletion.

My experience includes developing and implementing data retention policies aligned with industry best practices and specific regulatory requirements, ensuring that the organization maintains a compliant and efficient archival system.
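A retention schedule can be expressed as a small lookup plus a date calculation, as in this sketch. The seven-year figure for financial records comes from the example above; the two-year figure for marketing data and all the names are assumptions, and real periods must come from the applicable regulations.

```python
from datetime import date

# Hypothetical schedule in years; actual periods depend on jurisdiction.
RETENTION_YEARS = {"financial": 7, "marketing": 2}


def disposal_date(record_type: str, created: date) -> date:
    """Return the first date on which the record may be disposed of."""
    target_year = created.year + RETENTION_YEARS[record_type]
    try:
        return created.replace(year=target_year)
    except ValueError:
        # 29 February has no counterpart in a non-leap target year.
        return created.replace(year=target_year, day=28)


def is_due(record_type: str, created: date, today: date) -> bool:
    """Return True once the retention period has elapsed."""
    return today >= disposal_date(record_type, created)


print(disposal_date("financial", date(2017, 3, 15)))
print(is_due("marketing", date(2023, 1, 10), date(2024, 6, 1)))
```

A check like this should only flag records for review. Anything under legal hold has to be excluded before disposal goes ahead.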

Q 10. How do you ensure the long-term accessibility of digital archives?

Ensuring long-term accessibility of digital archives requires a multi-faceted approach centered around format preservation, metadata management, and technological foresight. Think of it like preserving historical documents – we need to ensure they remain readable even centuries later.

Format preservation focuses on utilizing open, stable file formats that are less likely to become obsolete. We avoid proprietary formats and regularly assess the risk of format obsolescence, potentially migrating data to newer, supported formats as needed. Imagine converting all our Word 97 documents to a modern, widely supported format to prevent future incompatibility issues.

Metadata management is key for discoverability and retrieval. Rich, accurate metadata is crucial. This includes descriptive metadata (title, author, date), structural metadata (file organization), and administrative metadata (access rights). Regular metadata audits and updates are necessary to ensure accuracy and completeness.

Technological foresight involves anticipating future technological changes. This includes selecting storage systems and technologies that are expected to remain relevant for many years. It also includes choosing vendors with strong track records and reliable support services, allowing for smooth upgrades and potential technology transitions down the line. Regular audits and technology refreshes are essential to stay ahead of the curve.

Q 11. What is your experience with disaster recovery planning for digital archives?

Disaster recovery planning for digital archives is critical. It requires a robust strategy that protects against data loss from various threats, including natural disasters, cyberattacks, and equipment failures. Think of this as having a backup plan for your backup plan.

A comprehensive plan typically involves:

- Offsite backups: Regularly backing up the archive to a geographically separate location, protecting against local disasters.

- Redundancy and replication: Implementing redundant storage systems and data replication to ensure data availability even if one system fails.

- Data security measures: Implementing robust cybersecurity measures, such as encryption and access control, to protect against unauthorized access and data breaches.

- Recovery procedures: Developing detailed recovery procedures outlining steps to restore the archive in case of a disaster. These procedures must be regularly tested through drills and simulations.

- Business continuity planning: Ensuring that essential archival processes can continue even during disruptions.

My experience includes designing and implementing disaster recovery plans for large-scale digital archives, including regular testing and refinement to ensure plan effectiveness and preparedness.

Q 12. Describe your familiarity with different data deduplication techniques.

Data deduplication techniques are used to reduce storage space and improve efficiency by eliminating redundant copies of data. Several techniques exist:

- Exact deduplication: Identifies and removes identical copies of files byte-by-byte. This is the most common approach and is often used in backup and archival systems.

- Near deduplication: Identifies and removes nearly identical files, allowing for minor variations. This can be useful for handling files that have undergone minor edits or revisions.

- Content-aware deduplication: Analyzes the content of files to identify similarities and eliminate redundancy even if the file names or metadata differ. This approach requires sophisticated algorithms and is more computationally intensive.

Choosing the appropriate technique depends on factors such as the type of data, storage requirements, and performance considerations. Exact deduplication is generally effective for many types of data. Near deduplication is useful for managing versions of documents, and content-aware techniques offer better efficiency for large, complex datasets. I have extensive experience in implementing and managing various deduplication strategies in different archival contexts.
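Exact deduplication is often implemented by comparing content hashes rather than comparing every pair of files byte-by-byte. The sketch below takes that hash-based approach, which is an assumption of this example; the file names and contents are invented.

```python
import hashlib
from collections import defaultdict


def find_duplicates(files: dict) -> list:
    """Group file names whose content hashes to the same SHA-256 digest."""
    groups = defaultdict(list)
    for name, data in files.items():
        groups[hashlib.sha256(data).hexdigest()].append(name)
    # Keep only groups with more than one member, in a stable order.
    return sorted(sorted(names) for names in groups.values() if len(names) > 1)


files = {
    "report_final.pdf": b"quarterly figures",
    "report_copy.pdf": b"quarterly figures",
    "minutes.txt": b"meeting notes",
}
print(find_duplicates(files))
```

Each group can then be stored once, with the remaining names kept as references to the single stored copy.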

Q 13. How do you manage the security and access control of digital archives?

Security and access control are paramount for digital archives. A robust approach is required to safeguard sensitive data and prevent unauthorized access.

Measures include:

- Access control lists (ACLs): Defining granular access permissions based on roles and responsibilities. This ensures only authorized users can access specific data.

- Encryption: Protecting data both in transit and at rest using strong encryption algorithms. This prevents unauthorized access even if data is intercepted.

- Authentication and authorization: Implementing multi-factor authentication and robust authorization mechanisms to verify user identities and control access privileges.

- Regular security audits: Conducting regular security audits and vulnerability assessments to identify and address potential security weaknesses.

- Data loss prevention (DLP) tools: Utilizing DLP tools to monitor and prevent sensitive data from leaving the organization’s control.

Implementing these security measures requires a holistic approach integrating technological safeguards and clear security policies. My experience includes implementing and managing comprehensive security frameworks for digital archives, ensuring compliance with relevant security standards and regulations.
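The idea behind role-based access permissions can be sketched with a simple lookup. The roles, actions, and the function `is_allowed` are hypothetical, and a real archive would rely on the access control features of its storage platform or identity provider rather than a hand-rolled table.

```python
# Hypothetical mapping from role to the actions it may perform.
ROLE_PERMISSIONS = {
    "archivist": {"read", "write", "delete"},
    "researcher": {"read"},
}


def is_allowed(role: str, action: str) -> bool:
    """Deny by default: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("researcher", "read"))
print(is_allowed("researcher", "delete"))
print(is_allowed("visitor", "read"))
```

The deny-by-default behaviour is the important design choice here: access is granted only when a rule explicitly allows it.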

Q 14. What are your experiences with implementing data governance frameworks?

Data governance frameworks provide a structured approach to managing data throughout its lifecycle, from creation to disposal. They ensure data quality, consistency, and compliance. Think of a framework as a comprehensive set of rules and guidelines for how we handle data, ensuring we don’t lose control.

Implementing a data governance framework typically involves:

- Defining data ownership and responsibilities: Assigning clear roles and responsibilities for data management across the organization.

- Establishing data quality standards: Defining standards for data accuracy, completeness, consistency, and timeliness.

- Developing data policies and procedures: Creating policies and procedures that govern data access, use, storage, and disposal.

- Implementing data management tools and technologies: Utilizing tools and technologies to support data governance activities, such as metadata management systems and data quality monitoring tools.

- Monitoring and enforcement: Regularly monitoring adherence to data governance policies and procedures, and taking corrective action when necessary.

My experience includes designing and implementing data governance frameworks in diverse organizational settings, aligning them with business objectives and regulatory requirements. This often involves working closely with stakeholders across different departments to ensure buy-in and effective implementation.

Q 15. Explain your understanding of different archival storage formats and their suitability.

Archival storage formats are crucial for long-term data preservation. The choice depends heavily on factors like data type, storage capacity needs, budget, and expected access frequency. Here are some key formats:

- TIFF (Tagged Image File Format): Excellent for preserving image integrity, offering lossless compression. It’s a standard in libraries and archives for handling scanned documents and photographs.

- PDF/A (Portable Document Format/Archival): Designed specifically for archiving documents, ensuring long-term readability and accessibility. Different parts of the standard (PDF/A-1, PDF/A-2, etc.) offer varying features and compression options. It’s widely adopted due to its cross-platform compatibility.

- OpenEXR: A high-dynamic-range (HDR) image file format commonly used in film and visual effects, offering superior color depth and quality for long-term preservation of visual assets.

- WAV (Waveform Audio File Format): An audio format that typically stores uncompressed PCM audio, offering high fidelity suitable for archiving music and sound recordings. Unlike MP3, it discards no audio data, leading to larger files but retaining full quality.

- Object Storage (Cloud-based): This utilizes cloud platforms like AWS S3 or Azure Blob Storage. It offers scalability and cost-effectiveness for vast datasets but relies heavily on the vendor’s reliability and data security measures. Data can be stored in various formats, but metadata management becomes particularly crucial.

The suitability of each format is determined by a careful analysis of the project requirements. For instance, while TIFF is ideal for preserving image quality, its large file size might make it impractical for archiving massive image collections. In such cases, a lossless compression format, or a combination of formats with different compression levels, might be more efficient.

Q 16. Describe your experience with using archival software.

My experience with archival software encompasses a range of applications, from open-source tools to commercial solutions. I’ve worked extensively with systems such as Archivematica (open-source, robust workflow management), Rosetta (a commercial digital preservation system), and various proprietary digital asset management (DAM) systems.

In a recent project, I used Archivematica to ingest, process, and store thousands of born-digital files and scanned documents from a university library. The software’s features, including checksum verification, metadata enrichment, and audit trails, proved invaluable in ensuring the integrity and authenticity of the archival materials. The ability to create and manage preservation copies with tools like Archivematica (for example, copies made with different compression algorithms or held on different storage systems) is crucial for long-term data survival. The software facilitated the establishment of a secure and scalable archive, adhering to best practices.

Q 17. How do you assess the risks associated with data loss or corruption in digital archives?

Assessing risks to digital archives requires a multi-faceted approach. I typically use a risk assessment framework that considers:

- Hardware failure: Risk of hard drive crashes, network outages, or storage device degradation. Mitigation strategies include RAID configurations, redundant backups, and employing geographically diverse storage locations.

- Software obsolescence: Risk of data becoming inaccessible due to outdated software or file formats. Addressing this involves using open formats, file format migration strategies, and regularly testing access with modern systems.

- Data corruption: This can occur due to software bugs, power outages, or physical damage. Employing checksums and periodic data validation helps to detect and address corruption. Employing version control systems can also help to detect changes which may indicate corruption.

- Cybersecurity threats: Data breaches, ransomware attacks, and unauthorized access are serious risks. Mitigation involves robust security protocols, encryption, access controls, and regular security audits.

- Human error: Accidental deletion, improper handling, or lack of proper documentation can lead to data loss. Well-defined procedures, training, and careful monitoring are critical.

I typically document these risks in a formal risk assessment report and implement a detailed mitigation plan tailored to the specific context of the archive. I also regularly perform routine checks to detect potential problems before they become critical.

Q 18. What is your approach to managing large-scale digital archiving projects?

Managing large-scale digital archiving projects necessitates a structured and methodical approach. I typically use a project management framework like Agile, breaking down the project into manageable phases, tasks, and deliverables. This involves:

- Detailed planning: Defining scope, objectives, timelines, and resources. This includes choosing appropriate formats, selecting storage solutions, and setting up metadata schemas.

- Ingestion and processing: Developing and implementing robust workflows for importing, validating, and processing data. Automation is crucial here to reduce manual effort and potential human error.

- Metadata creation and management: Establishing a comprehensive metadata schema that aligns with relevant standards (e.g., Dublin Core, PREMIS) and ensures data discoverability and searchability.

- Storage and preservation: Selecting appropriate storage solutions, implementing backup and disaster recovery plans, and regularly monitoring storage integrity. Methods like checksums and periodic data validation are critical here.

- Access and retrieval: Designing user interfaces and access protocols for retrieving and using archival materials. This frequently involves providing a search interface and user permissions to ensure access control and confidentiality.

- Ongoing maintenance: Establishing regular monitoring and maintenance procedures to ensure data integrity, address obsolescence, and adapt to evolving technologies and standards.

Throughout the process, I prioritize clear communication with stakeholders, ensuring alignment on goals and progress. Regular reporting and reviews help to monitor progress and address any challenges.

Q 19. How do you prioritize different archiving tasks based on business needs and regulatory requirements?

Prioritizing archiving tasks requires balancing business needs with regulatory compliance. I typically use a risk-based approach, considering factors such as:

- Legal and regulatory requirements: Archiving data that’s subject to legal hold or retention policies is a high priority. Understanding local and international laws regarding data retention is crucial.

- Business criticality: Data essential for operations, decision-making, or revenue generation should be prioritized. These often include records required for audits, financial reporting, or legal defense.

- Data sensitivity: Confidential or sensitive data requires higher security and priority archiving to ensure compliance with privacy regulations (e.g., GDPR, CCPA).

- Data volume and format: Large datasets or those in uncommon formats may require more time and resources to archive effectively. A phased approach may be necessary.

- Cost and resources: Resource allocation is critical. Prioritizing tasks based on available budget and personnel is essential. A cost-benefit analysis is crucial in this phase to determine how to best prioritize limited resources.

I often create a prioritized task list using a framework such as the MoSCoW method (Must have, Should have, Could have, Won’t have) to help determine the priority levels based on these criteria. Regular review and adjustments are crucial to adapt to changing circumstances.
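A MoSCoW-ordered task list is easy to sketch as a sort on category rank. The task descriptions and the category assigned to each are invented for this example.

```python
# Rank of each MoSCoW category; lower numbers are handled first.
MOSCOW_ORDER = {"must": 0, "should": 1, "could": 2, "wont": 3}

# Hypothetical archiving tasks with an assigned category.
tasks = [
    ("Migrate legacy marketing assets", "could"),
    ("Archive records under legal hold", "must"),
    ("Archive prior-year audit records", "should"),
    ("Re-scan low-use brochures", "wont"),
]

# sorted() is stable, so tasks within one category keep their original order.
ordered = sorted(tasks, key=lambda task: MOSCOW_ORDER[task[1]])

for name, category in ordered:
    print(f"{category:>6}: {name}")
```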

Q 20. Explain your experience with metadata schemas and their application in archiving.

Metadata schemas are fundamental to effective archiving. They provide structured descriptions of archival materials, making them discoverable, searchable, and understandable. I have experience working with various schemas, including Dublin Core, PREMIS, and MODS.

Dublin Core provides a simple and widely used set of metadata elements, useful for basic descriptive metadata such as title, creator, subject, and date. PREMIS, on the other hand, is more comprehensive and designed specifically for archival and preservation purposes. It includes detailed information about file integrity, preservation actions, and events related to the item. MODS (Metadata Object Description Schema) is another popular choice focusing on bibliographic descriptions of materials.

In a recent project, we implemented a PREMIS-based metadata schema to manage a large collection of digital photographs. This allowed us to track not only descriptive metadata (e.g., photographer, date taken, subject matter) but also preservation metadata (e.g., file format, checksums, preservation events). This comprehensive metadata ensures the long-term preservation of the collection and its accurate description.
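For the simpler Dublin Core case, a record can be serialized to XML with Python’s standard library, as sketched below. The namespace URI is the standard Dublin Core elements namespace; the wrapper element `record`, the function name, and the sample values are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace of the Dublin Core Metadata Element Set.
DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("dc", DC_NS)


def dublin_core_record(fields: dict) -> str:
    """Serialize simple Dublin Core fields inside a hypothetical wrapper element."""
    root = ET.Element("record")
    for name, value in fields.items():
        element = ET.SubElement(root, f"{{{DC_NS}}}{name}")
        element.text = value
    return ET.tostring(root, encoding="unicode")


xml_text = dublin_core_record(
    {
        "title": "Harbour at dawn",
        "creator": "Unknown photographer",
        "date": "1932",
    }
)
print(xml_text)
```

PREMIS records carry considerably more structure (objects, events, agents), so they are usually produced by preservation tooling rather than assembled by hand like this.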

Q 21. Describe your familiarity with different data compression techniques.

Data compression techniques are essential for managing storage space and reducing transfer times in digital archives. The choice depends on the balance between compression ratio and preservation of data integrity. Here are some common techniques:

- Lossless compression: Algorithms such as ZIP, FLAC (for audio), and PNG (for images) compress data without losing any information. This is critical for archival purposes where data integrity is paramount. Examples include gzip and bzip2.

- Lossy compression: Techniques like JPEG (for images) and MP3 (for audio) achieve higher compression ratios by discarding some data. These are suitable for situations where some data loss is acceptable, but they’re generally avoided in archival contexts unless a proper evaluation of quality loss is performed.

- Delta compression: This method only stores the differences between successive versions of a file, reducing storage requirements for versioned documents. For example, instead of storing every version of a document, it might store only the changes between versions. A command like rsync employs delta transfer, which is very useful for backing up archives and for efficient file transmission across networks.

Choosing the right compression technique depends on the nature of the data and the acceptable level of data loss. For archival purposes, lossless compression is generally preferred to ensure long-term data integrity.
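The defining property of lossless compression is that decompressing returns exactly the original bytes. The sketch below checks that round trip with Python’s standard `gzip` and `bz2` modules; the sample data is deliberately repetitive, so the sizes it prints are not representative of real archival content.

```python
import bz2
import gzip

# Repetitive sample data; real content will compress less dramatically.
original = b"archival record " * 1000

gz = gzip.compress(original)
bz = bz2.compress(original)

# Lossless means the round trip reproduces the input byte for byte.
gzip_ok = gzip.decompress(gz) == original
bz2_ok = bz2.decompress(bz) == original

print(len(original), len(gz), len(bz))
print(gzip_ok, bz2_ok)
```

A round-trip check like this, combined with a stored checksum of the uncompressed data, gives a simple way to confirm that compression has not altered an archived file.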

Q 22. How do you measure the success of a digital archiving strategy?

Measuring the success of a digital archiving strategy isn’t solely about terabytes stored; it’s about achieving defined objectives. We need to establish Key Performance Indicators (KPIs) upfront, aligning with business needs and regulatory requirements. These KPIs might include:

- Data accessibility: How quickly and easily can authorized users retrieve archived data? We’d track average retrieval time and success rate.

- Data integrity: Is the data accurate, complete, and unaltered? This involves regular checksum verification and data validation checks. A low error rate signifies success.

- Storage cost efficiency: Are we using storage optimally, minimizing costs while maintaining accessibility? We monitor storage usage, cost per GB, and explore options like tiered storage.

- Compliance adherence: Are we meeting all relevant legal and regulatory requirements regarding data retention and access? Regular audits and compliance reports are crucial here.

- System uptime and reliability: How often is the archive system available and operational? We track system downtime and mean time to recovery (MTTR).

For example, in a healthcare setting, a successful strategy might be measured by the speed of retrieving patient records during an audit, while in a financial institution, it might be measured by the successful retrieval of transaction data for regulatory compliance.
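Several of these KPIs reduce to simple arithmetic over logged events. The sketch below computes a retrieval success rate, the average retrieval time for successful requests, and mean time to recovery. The event format, the sample figures, and the function names are all assumptions made for the example.

```python
from statistics import mean


def retrieval_kpis(events: list) -> dict:
    """Summarize (seconds, succeeded) retrieval events."""
    successful = [seconds for seconds, succeeded in events if succeeded]
    return {
        "success_rate": len(successful) / len(events),
        "avg_seconds": mean(successful),
    }


def mean_time_to_recovery(outage_minutes: list) -> float:
    """MTTR: average duration of the recorded outages."""
    return mean(outage_minutes)


# Hypothetical log: (retrieval time in seconds, whether it succeeded).
events = [(2.0, True), (4.0, True), (0.0, False), (6.0, True)]
kpis = retrieval_kpis(events)
mttr = mean_time_to_recovery([30, 90])

print(kpis, mttr)
```

Tracking these values over time matters more than any single reading, since a trend shows whether the archive is getting easier or harder to use.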

Q 23. What are your experiences with auditing digital archives for compliance?

Auditing digital archives for compliance is a critical process. My experience involves a multi-step approach, starting with understanding the relevant regulations (like HIPAA, GDPR, etc.). Then, I develop an audit plan that focuses on key areas such as:

- Data retention policies: Are we adhering to defined retention periods for different data types? This includes verifying file metadata and comparing it against retention schedules.

- Access controls: Are access permissions correctly configured to ensure only authorized personnel can access sensitive data? We’d review access logs and configurations.

- Data integrity: Are data backups and checksums valid, ensuring data hasn’t been corrupted or tampered with? We employ checksum verification tools and compare with known good copies.

- Security measures: Are appropriate security measures in place to protect the archive from unauthorized access, cyberattacks, and data breaches? This includes reviewing encryption, firewall rules, and intrusion detection systems.

I’ve used automated audit software to streamline the process and generate comprehensive reports. Addressing any discrepancies found during the audit is crucial, and often involves policy updates, system enhancements, or data remediation.
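The data integrity step of such an audit can be sketched as a checksum comparison against a stored manifest. This is a minimal example assuming SHA-256 checksums and a manifest held as a simple dictionary of relative path to expected digest; the names `sha256_of` and `audit_manifest` are hypothetical, and real audit tooling would also record who ran the check and when.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large archive files do not fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def audit_manifest(root: Path, manifest: dict[str, str]) -> dict[str, str]:
    """Return a finding for every file that is missing or has changed."""
    findings = {}
    for relative_path, expected in manifest.items():
        target = root / relative_path
        if not target.is_file():
            findings[relative_path] = "missing"
        elif sha256_of(target) != expected:
            findings[relative_path] = "checksum mismatch"
    return findings
```

An empty result means every file listed in the manifest is present and unaltered; anything else feeds the remediation step described above.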

Q 24. Describe your understanding of data lifecycle management within the context of digital archiving.

Data lifecycle management (DLM) is crucial for effective digital archiving. It’s a structured approach covering the entire lifespan of data, from creation to disposal. Within archiving, DLM involves:

- Creation and ingestion: How data enters the archive, including metadata capture and format standardization.

- Storage and access: The methods used to store and access data, potentially utilizing different storage tiers based on frequency of access.

- Retention and disposition: Defining how long data is retained and the procedures for secure deletion or transfer to long-term storage (like cold storage).

- Preservation and migration: Strategies for ensuring long-term data integrity, including data migration to newer storage technologies as needed.

Think of it like managing a library: you acquire books (creation), shelve them (storage), keep them for a certain time (retention), and eventually remove obsolete ones (disposition). DLM ensures the entire process is well-defined and efficient.
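The retention and tiering decisions in this lifecycle can be expressed as a simple rule. The sketch below is only an illustration: the function name `lifecycle_action`, the seven-year retention period, and the 180-day cold-storage threshold are assumptions chosen for the example, not values taken from any regulation.

```python
from datetime import date


def lifecycle_action(
    created: date,
    last_accessed: date,
    today: date,
    retention_days: int = 7 * 365,   # assumed retention period
    cold_after_days: int = 180,      # assumed inactivity threshold
) -> str:
    """Decide what should happen to an archived item today."""
    if (today - created).days >= retention_days:
        # Retention period has expired: schedule secure disposal.
        return "dispose"
    if (today - last_accessed).days >= cold_after_days:
        # Still within retention but rarely used: move to a cheaper tier.
        return "move_to_cold"
    return "keep_hot"


today = date(2024, 1, 1)
expired = lifecycle_action(date(2010, 1, 1), date(2010, 6, 1), today)
inactive = lifecycle_action(date(2023, 1, 1), date(2023, 2, 1), today)
active = lifecycle_action(date(2023, 6, 1), date(2023, 12, 15), today)
```

In practice the thresholds would differ per data type and would come from the organization's retention schedule rather than from defaults in code.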

Q 25. Explain your experience with working with different types of databases (SQL, NoSQL) for archiving purposes.

I’ve worked extensively with both SQL and NoSQL databases for archiving. The choice depends on the nature of the data and the access patterns.

- SQL databases (like PostgreSQL or MySQL) are ideal for structured data with well-defined schemas, offering robust transactional capabilities and efficient querying. This is suitable for archiving metadata about the archived files, enabling efficient searching and retrieval based on specific attributes.

- NoSQL databases (like MongoDB or Cassandra) are better suited for unstructured or semi-structured data. They offer flexibility and scalability, making them suitable for handling large volumes of diverse data types. For example, storing indexes or pointers to unstructured data within a NoSQL database can be efficient.

Often, a hybrid approach is used, leveraging the strengths of both. For example, I might use an SQL database to manage metadata and a NoSQL database to store pointers to large binary files stored in object storage.
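The metadata side of this approach can be sketched with a small SQL catalog whose rows point at objects held elsewhere. This example uses Python's built-in `sqlite3` as a stand-in for PostgreSQL or MySQL; the table name, column names, helper functions, and object keys are all hypothetical, and the object storage itself is not modeled.

```python
import sqlite3


def open_catalog() -> sqlite3.Connection:
    """Create an in-memory metadata catalog for archived items."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE archive_items (
            id          INTEGER PRIMARY KEY,
            title       TEXT NOT NULL,
            doc_type    TEXT NOT NULL,
            archived_on TEXT NOT NULL,        -- ISO 8601 date
            object_key  TEXT NOT NULL UNIQUE  -- pointer into object storage
        )
        """
    )
    return conn


def add_item(conn, title, doc_type, archived_on, object_key) -> None:
    conn.execute(
        "INSERT INTO archive_items (title, doc_type, archived_on, object_key) "
        "VALUES (?, ?, ?, ?)",
        (title, doc_type, archived_on, object_key),
    )
    conn.commit()


def find_keys(conn, doc_type: str, since: str) -> list[str]:
    """Look up object keys by metadata instead of scanning the objects."""
    rows = conn.execute(
        "SELECT object_key FROM archive_items "
        "WHERE doc_type = ? AND archived_on >= ? ORDER BY archived_on",
        (doc_type, since),
    )
    return [row[0] for row in rows]


catalog = open_catalog()
add_item(catalog, "Q1 ledger", "ledger", "2023-03-31", "ledgers/2023-q1.bin")
add_item(catalog, "Q2 ledger", "ledger", "2023-06-30", "ledgers/2023-q2.bin")
add_item(catalog, "Site plan", "drawing", "2023-05-10", "drawings/site-plan.tif")
```

The point of the design is that searches run against compact, indexed metadata, and the large binary files are fetched only once the right key is known.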

Q 26. How do you handle the challenges of managing unstructured data in a digital archive?

Managing unstructured data in a digital archive presents unique challenges. The lack of predefined schema makes organization, search, and retrieval complex. My strategies include:

- Metadata enrichment: Adding descriptive metadata (tags, keywords, classifications) to unstructured files. This allows for improved searchability and organization.

- Content analysis: Using techniques like natural language processing (NLP) to extract information from unstructured data, further enhancing searchability.

- Data classification: Categorizing unstructured data based on content, type, and sensitivity to improve management and security.

- Version control: Implementing version control systems to track changes in unstructured data, maintaining data integrity and facilitating recovery.

- Storage optimization: Employing object storage solutions optimized for handling large volumes of unstructured data.

For example, imagine archiving project files from various design teams. Metadata like project name, author, date, and file type are crucial for retrieval. Content analysis might even identify key terms within design documents.
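A very simplified version of metadata enrichment with content analysis might look like the sketch below. It uses plain word frequency rather than real NLP, and the stopword list, the `extract_keywords` and `enrich` functions, and the sample record are hypothetical; a production system would likely use a dedicated text-analysis library.

```python
import re
from collections import Counter

# Tiny illustrative stopword list; real lists are much longer.
STOPWORDS = {"this", "that", "with", "from", "have", "will", "attached"}


def extract_keywords(text: str, top_n: int = 3) -> list[str]:
    """Return the most frequent meaningful words in a document."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w for w in words if len(w) > 3 and w not in STOPWORDS)
    return [word for word, _ in counts.most_common(top_n)]


def enrich(record: dict, text: str) -> dict:
    """Attach extracted keywords to an existing metadata record."""
    return {**record, "keywords": extract_keywords(text)}


document = (
    "Bridge design review. The bridge load tables and bridge drawings. "
    "Load tables attached."
)
record = enrich({"project": "Riverside", "author": "Design Team A"}, document)
```

Even keywords this crude give searchers something to match on when the file itself has no structure to query.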

Q 27. Describe your understanding of cloud-based archiving solutions and their security implications.

Cloud-based archiving solutions offer scalability, cost-effectiveness, and accessibility. However, security is paramount. My understanding encompasses:

- Data encryption: Ensuring data is encrypted both in transit and at rest, using strong encryption algorithms. This protects against unauthorized access, even if a breach occurs.

- Access control: Implementing robust access control mechanisms, using granular permissions to limit who can access specific data within the archive.

- Compliance certifications: Choosing cloud providers with relevant certifications (like ISO 27001, SOC 2) to demonstrate their commitment to security and compliance.

- Data governance policies: Establishing clear policies for data retention, access, and disposal, aligning with regulatory requirements.

- Regular security audits: Conducting regular security assessments and penetration testing to identify vulnerabilities and strengthen security posture.

It’s vital to carefully choose a provider and continuously monitor their security practices. A shared responsibility model exists where the provider ensures the underlying infrastructure’s security, and the organization is responsible for its data and configurations.

Q 28. What strategies would you employ to minimize storage costs while maintaining data accessibility?

Minimizing storage costs while maintaining data accessibility requires a multi-pronged approach:

- Tiered storage: Utilizing different storage tiers (hot, warm, cold) based on data access frequency. Frequently accessed data resides in faster, more expensive storage, while infrequently accessed data is stored in cheaper, slower storage.

- Data deduplication: Eliminating redundant copies of data to reduce overall storage needs. This is especially effective for large archives with many similar files.

- Data compression: Compressing data to reduce its size before storage. This can significantly lower storage costs with little impact on accessibility, since data is decompressed on retrieval.

- Storage optimization: Regularly reviewing storage usage and identifying opportunities to optimize storage allocation. This might involve deleting obsolete data, archiving less critical data to less expensive storage tiers, or migrating to more cost-effective storage providers.

- Cloud storage options: Exploring different cloud storage options and pricing models to find the best balance between cost and performance.

Imagine a library: frequently borrowed books are kept on easily accessible shelves, while rarely used books are stored in less accessible locations. This analogy reflects the principle of tiered storage for cost optimization.
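The savings from tiering and deduplication can be estimated with some simple arithmetic. In the sketch below, the per-gigabyte prices are made-up placeholders rather than any provider's actual rates, and the function names `monthly_cost` and `deduplicated_size` are hypothetical.

```python
import hashlib

# Placeholder prices in dollars per GB per month; not real provider rates.
PRICE_PER_GB_MONTH = {"hot": 0.023, "warm": 0.0125, "cold": 0.004}


def monthly_cost(gb_by_tier: dict[str, float]) -> float:
    """Total monthly storage cost for data spread across tiers."""
    return sum(gb * PRICE_PER_GB_MONTH[tier] for tier, gb in gb_by_tier.items())


def deduplicated_size(blobs: list[bytes]) -> int:
    """Bytes needed when identical content is stored only once."""
    unique_sizes = {}
    for blob in blobs:
        # Identical content yields the same digest, so it is counted once.
        unique_sizes.setdefault(hashlib.sha256(blob).hexdigest(), len(blob))
    return sum(unique_sizes.values())


all_hot = monthly_cost({"hot": 1000})
tiered = monthly_cost({"hot": 100, "warm": 200, "cold": 700})
```

With these placeholder prices, moving most of a 1,000 GB archive out of the hot tier cuts the monthly bill to roughly a third, which is the effect tiered storage is meant to achieve.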

Key Topics to Learn for Proficient in Digital Archiving and Storage Systems Interview

- Data Lifecycle Management: Understanding the stages of data from creation to disposal, including retention policies and compliance requirements.

- Storage Technologies: Familiarity with various storage solutions (cloud, on-premise, hybrid), their advantages, disadvantages, and appropriate use cases. This includes understanding concepts like object storage, block storage, and file storage.

- Archiving Methods and Formats: Knowledge of different archiving techniques (e.g., compression, deduplication) and file formats (e.g., TIFF, PDF/A) suitable for long-term preservation.

- Metadata Management: Understanding the importance of accurate and comprehensive metadata for efficient retrieval and management of archived data. Explore different metadata schemas and standards.

- Data Security and Access Control: Implementing robust security measures to protect archived data from unauthorized access, loss, or corruption. This includes encryption, access control lists, and disaster recovery planning.

- Data Migration and Integration: Strategies for migrating data between different storage systems and integrating archiving processes with existing workflows.

- Disaster Recovery and Business Continuity: Developing and implementing plans to ensure data availability and recovery in the event of a disaster.

- Compliance and Regulations: Familiarity with relevant regulations and industry best practices for data archiving and retention (e.g., GDPR, HIPAA).

- Practical Application: Be prepared to discuss specific examples of how you have applied these concepts in past roles. Focus on quantifiable results and problem-solving approaches.

- Troubleshooting and Problem Solving: Be ready to discuss how you would troubleshoot common issues related to data storage and archiving, such as data loss, performance bottlenecks, or compliance violations.

Next Steps

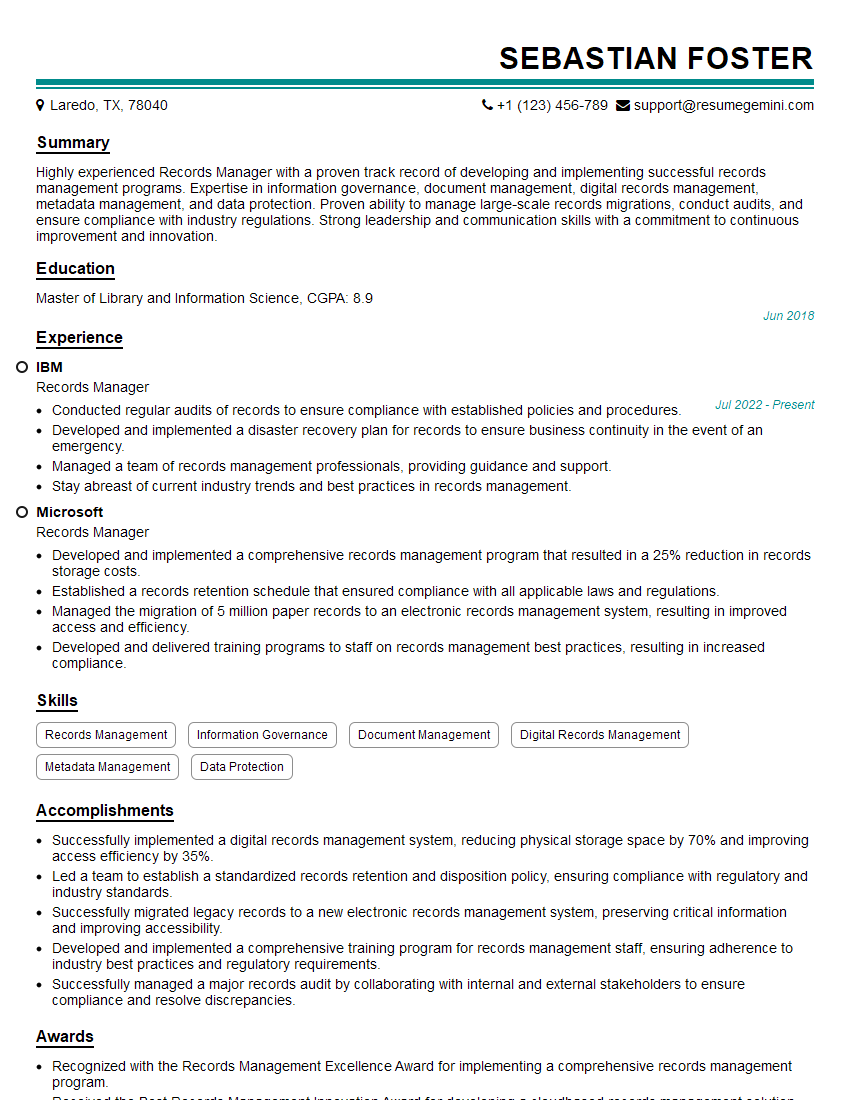

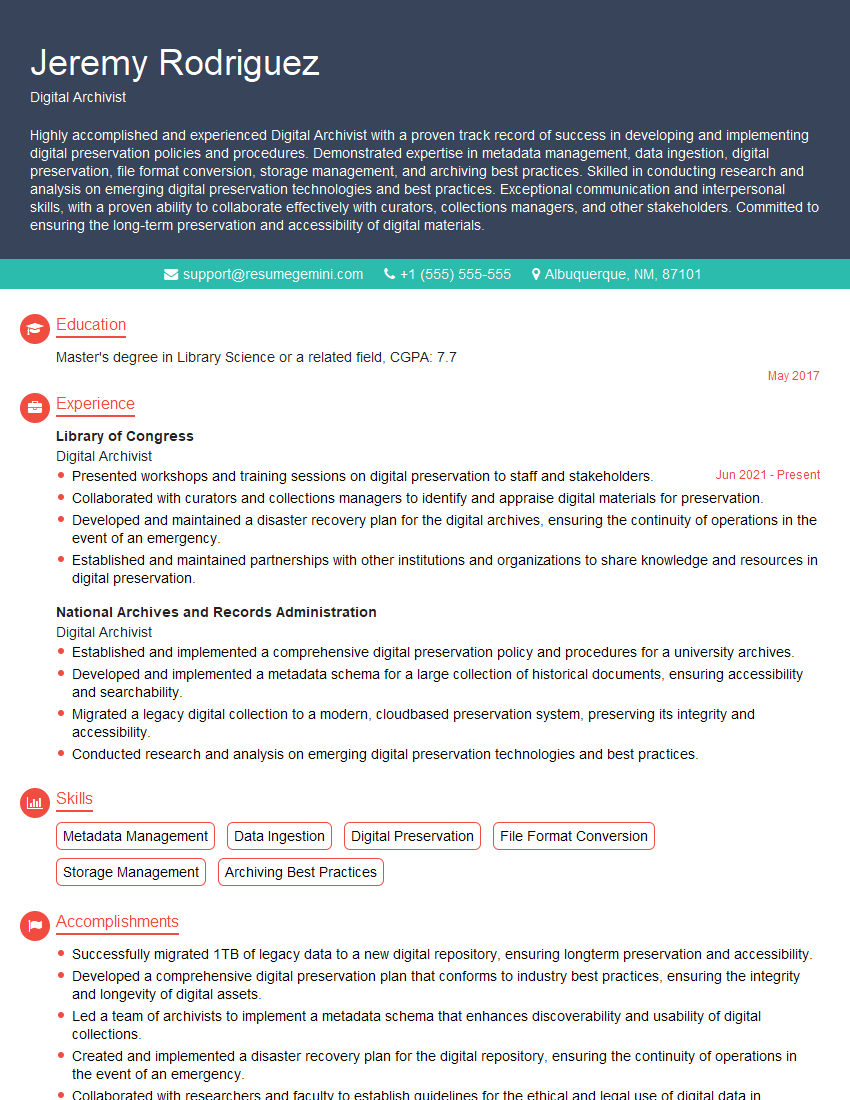

Mastering digital archiving and storage systems is crucial for career advancement in today’s data-driven world. These skills are highly sought after, opening doors to diverse and rewarding opportunities. To maximize your job prospects, focus on building an ATS-friendly resume that highlights your expertise effectively. ResumeGemini is a trusted resource to help you create a professional and impactful resume. We provide examples of resumes tailored to “Proficient in digital archiving and storage systems” roles to help guide you. Invest time in crafting a strong resume – it’s your first impression to potential employers.
