Unlock your full potential by mastering the most common interview questions on Python and R proficiency. This blog offers a deep dive into the critical topics, so you’re prepared not only to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Python or R Programming Interviews

Q 1. Explain the difference between lists and tuples in Python.

Lists and tuples are both fundamental data structures in Python used to store sequences of items, but they differ significantly in their mutability—the ability to be changed after creation.

- Lists: Lists are mutable, meaning you can modify their contents (add, remove, or change elements) after they’re created. They are defined using square brackets [].

- Tuples: Tuples are immutable; once created, their contents cannot be altered. They are defined using parentheses ().

Think of a list like a whiteboard—you can erase and rewrite on it. A tuple is more like a printed document—it’s fixed and cannot be easily changed.

Examples:

my_list = [1, 2, 'apple', 3.14]

my_tuple = (1, 2, 'apple', 3.14)

You can append to my_list but not to my_tuple. This immutability makes tuples suitable for situations where data integrity is paramount, like representing coordinates or database records.
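
The difference is easiest to see by trying to modify each structure. The following is a minimal sketch; the variable names and the coordinate values are made up for illustration.

```python
# Lists are mutable: elements can be added or changed in place.
point_list = [1, 2, 'apple', 3.14]
point_list.append('new item')
point_list[0] = 100

# Tuples are immutable: item assignment raises TypeError.
point_tuple = (1, 2, 'apple', 3.14)
try:
    point_tuple[0] = 100
    tuple_was_modified = True
except TypeError:
    tuple_was_modified = False

# Because tuples are immutable (and hashable when their items are),
# they can serve as dictionary keys; lists cannot.
city_by_coordinates = {(40.7128, -74.0060): 'New York'}

print(point_list)          # [100, 2, 'apple', 3.14, 'new item']
print(tuple_was_modified)  # False
print(city_by_coordinates[(40.7128, -74.0060)])  # New York
```

In an interview, the dictionary-key point is worth mentioning because it is a practical consequence of immutability rather than just a definition.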

Q 2. What are the different types of data structures in R?

R boasts a rich collection of data structures, each suited for different tasks. Key types include:

- Vectors: The fundamental data structure in R, holding a sequence of elements of the same type (numeric, character, logical, etc.). Think of it as a single column in a spreadsheet.

- Matrices: Two-dimensional arrays with rows and columns, containing elements of the same type. Similar to a table with only one data type.

- Arrays: Similar to matrices but can have more than two dimensions. Useful for multi-dimensional data representations.

- Lists: Ordered collections whose elements can be of different types, including other lists. They’re comparable to Python lists, and when their elements are named they can also be used much like a Python dictionary.

- Data Frames: Arguably the most important structure for data analysis. It’s a tabular data structure similar to a spreadsheet or SQL table, where each column can have a different data type.

- Factors: Represent categorical data. They’re particularly helpful for statistical modeling, as they handle categories more efficiently than character vectors.

For example, a data frame would be perfect for storing information about customers (name, age, city), with each piece of information being a different column and each customer a row. Vectors would hold the individual customer attributes (like city names) themselves.

Q 3. Describe the concept of object-oriented programming in Python.

Object-Oriented Programming (OOP) in Python is a powerful paradigm that organizes code around ‘objects’ that encapsulate data (attributes) and methods (functions) that operate on that data. This promotes modularity, reusability, and maintainability.

- Classes: Blueprints for creating objects. They define the attributes and methods that objects of that class will have.

- Objects: Instances of a class. They are concrete realizations of the class blueprint.

- Encapsulation: Bundling data and methods that operate on that data within a class, hiding internal details from the outside world. This protects data integrity and simplifies interaction.

- Inheritance: Creating new classes (child classes) based on existing classes (parent classes). Child classes inherit attributes and methods from their parents and can add their own unique features.

- Polymorphism: The ability of objects of different classes to respond to the same method call in their own specific way. This allows for flexible and extensible code.

Example:

class Dog:
    def __init__(self, name, breed):
        self.name = name
        self.breed = breed

    def bark(self):
        print('Woof!')

my_dog = Dog('Buddy', 'Golden Retriever')
my_dog.bark()

Here, Dog is a class, and my_dog is an object of that class. The bark method is specific to the Dog class.
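
Inheritance and polymorphism, listed above, can be illustrated with a small class hierarchy. This is only a sketch: the Animal and Cat classes and the speak method are hypothetical names introduced here, not part of the example above.

```python
class Animal:
    """Parent class: holds shared state and a default behaviour."""

    def __init__(self, name):
        self.name = name

    def speak(self):
        return f'{self.name} makes a sound.'


class Dog(Animal):
    """Child class: inherits __init__ and overrides speak()."""

    def speak(self):
        return f'{self.name} says Woof!'


class Cat(Animal):
    """Another child class with its own version of speak()."""

    def speak(self):
        return f'{self.name} says Meow!'


# Polymorphism: the same method call works on any Animal subclass,
# and each object responds in its own way.
animals = [Dog('Buddy'), Cat('Whiskers'), Animal('Generic')]
sounds = [animal.speak() for animal in animals]
for sound in sounds:
    print(sound)
```

Neither child class defines __init__, yet both accept a name: that is inheritance at work.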

Q 4. How do you handle missing data in R?

Missing data is a common challenge in R. There are several strategies for handling it, depending on the nature and extent of the missingness.

- Detection: R functions like is.na() readily identify missing values (represented as NA).

- Listwise Deletion: Removing entire rows (observations) that contain any missing values. Simple but can lead to significant data loss if missingness is widespread.

- Pairwise Deletion: Excluding observations only from the specific calculations that need the missing variable. For instance, a correlation matrix can compute each correlation from all rows that are complete for that particular pair of variables.

- Imputation: Replacing missing values with estimated values. Common methods include:

- Mean/Median/Mode Imputation: Replacing with the average, middle value, or most frequent value for that variable. Simple but can distort the distribution.

- Regression Imputation: Using regression models to predict missing values based on other variables. More sophisticated but assumes relationships between variables.

- Multiple Imputation: Creating multiple plausible imputed datasets to account for uncertainty in the imputation process. More computationally intensive but provides more robust results.

The choice of method depends on the dataset and the analytical goals. Multiple imputation is generally preferred for its statistical rigor when dealing with substantial missing data.

Q 5. Explain different methods for data cleaning in Python.

Data cleaning is crucial for ensuring data quality and accuracy in Python. It often involves several steps:

- Handling Missing Values: Similar to R, you can use techniques like dropping rows with missing data (dropna() in pandas), imputation (using fillna() with mean, median, or other values), or more advanced methods like k-Nearest Neighbors imputation.

- Removing Duplicates: Pandas provides the drop_duplicates() function to easily remove rows that are identical or duplicate based on specified columns.

- Data Transformation: This may include converting data types (using astype()), standardizing or normalizing values (using StandardScaler() or MinMaxScaler() from scikit-learn), or handling outliers (using techniques like Winsorization or removing outliers based on standard deviation).

- Data Consistency: Checking for inconsistencies in data entry (e.g., inconsistent spellings or formats). This may involve using regular expressions to standardize formats or creating custom functions to identify and correct inconsistencies.

- Outlier Detection and Treatment: Identifying outliers using methods like boxplots or scatter plots, and then deciding to remove, transform, or cap them depending on the context. Context matters greatly here, as outliers may be genuine data points that need preservation.
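
Several of the steps above can be chained in a few lines of pandas. The sketch below is one possible ordering, not a fixed recipe; the DataFrame contents and column names are invented for illustration.

```python
import pandas as pd

# Hypothetical raw data with a duplicate row, a missing value,
# inconsistent spellings, and numbers stored as strings.
raw = pd.DataFrame({
    'name': ['Alice', 'Bob', 'Bob', 'Cara'],
    'city': [' new york', 'London', 'London', 'NEW YORK '],
    'age': ['25', '30', '30', None],
})

# Removing duplicates: the second 'Bob' row is identical to the first.
cleaned = raw.drop_duplicates().copy()

# Data consistency: trim whitespace and normalise capitalisation.
cleaned['city'] = cleaned['city'].str.strip().str.title()

# Data transformation: convert the age strings to numbers.
cleaned['age'] = pd.to_numeric(cleaned['age'])

# Missing values: impute with the median of the observed ages.
cleaned['age'] = cleaned['age'].fillna(cleaned['age'].median())

print(cleaned)
```

Median imputation is used here only because it is simple; whether it is appropriate depends on why the values are missing.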

Example (using pandas):

import pandas as pd

# Example: Dropping rows with missing values

df.dropna(inplace=True)

Q 6. What are the advantages and disadvantages of using Python vs. R for data analysis?

Python and R are both powerful tools for data analysis, but they have different strengths and weaknesses:

- Python:

- Advantages: More general-purpose language; strong in scripting, web development, and automation; extensive libraries for various tasks beyond data analysis (e.g., machine learning, web scraping); larger community support.

- Disadvantages: Data analysis-specific features might require more coding compared to R; can be less intuitive for purely statistical tasks.

- R:

- Advantages: Specifically designed for statistical computing and data analysis; rich ecosystem of packages for statistical modeling and visualization; often more concise syntax for statistical tasks.

- Disadvantages: Less versatile than Python; steeper learning curve for non-statistical programming; community size and resources are smaller than Python’s.

The best choice depends on your project needs. Python might be preferred for broader projects involving machine learning, data engineering, and integration with other systems. R excels in purely statistical analysis and tasks involving specialized statistical modeling.

Q 7. Explain different types of joins in SQL.

SQL joins combine rows from two or more tables based on a related column between them. Different types of joins exist, each with distinct behaviors:

- INNER JOIN: Returns only the rows where the join condition is met in both tables. Think of it as finding the intersection of data.

- LEFT (OUTER) JOIN: Returns all rows from the left table (the one specified before LEFT JOIN) and the matching rows from the right table. If there’s no match in the right table, it fills in NULL values.

- RIGHT (OUTER) JOIN: Similar to LEFT JOIN but returns all rows from the right table and matching rows from the left. NULLs fill in unmatched rows from the left.

- FULL (OUTER) JOIN: Returns all rows from both tables. If a row in one table doesn’t have a match in the other, it fills in NULL values for the missing columns.

Example (INNER JOIN):

SELECT * FROM Customers INNER JOIN Orders ON Customers.CustomerID = Orders.CustomerID;

This would only show customer records with corresponding order records. Using LEFT JOIN instead would show all customer records, even those without any orders, showing NULL values for order-related columns in those cases.
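
To compare the two joins on real rows, the queries can be run against an in-memory SQLite database from Python. This is a sketch under assumptions: the table contents, the Name and Item columns, and the OrderID values are invented for the demonstration.

```python
import sqlite3

# In-memory database with hypothetical Customers and Orders tables.
conn = sqlite3.connect(':memory:')
conn.executescript("""
    CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, Name TEXT);
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER, Item TEXT);
    INSERT INTO Customers VALUES (1, 'Alice'), (2, 'Bob'), (3, 'Cara');
    INSERT INTO Orders VALUES (10, 1, 'Book'), (11, 1, 'Pen'), (12, 2, 'Lamp');
""")

# INNER JOIN: only customers that have at least one order.
inner_rows = conn.execute("""
    SELECT Customers.Name, Orders.Item
    FROM Customers
    INNER JOIN Orders ON Customers.CustomerID = Orders.CustomerID
    ORDER BY Orders.OrderID
""").fetchall()

# LEFT JOIN: every customer; order columns are NULL when nothing matches.
left_rows = conn.execute("""
    SELECT Customers.Name, Orders.Item
    FROM Customers
    LEFT JOIN Orders ON Customers.CustomerID = Orders.CustomerID
    ORDER BY Customers.CustomerID, Orders.OrderID
""").fetchall()
conn.close()

print(inner_rows)  # Cara is absent: she has no orders
print(left_rows)   # Cara appears with Item = None (SQL NULL)
```

Python's sqlite3 module returns SQL NULL as None, which makes the unmatched row easy to spot.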

Q 8. What are lambda functions in Python and how are they used?

Lambda functions in Python, also known as anonymous functions, are small, single-expression functions defined without a name using the lambda keyword. They’re incredibly useful for short, simple operations where defining a full function using def would be overkill. Think of them as quick, disposable functions.

Syntax: lambda arguments: expression

Example: Let’s say you need a function to square a number. A regular function would look like this:

def square(x):
    return x*x

print(square(5)) # Output: 25

Using a lambda function, this becomes:

square = lambda x: x*x

print(square(5)) # Output: 25

Lambda functions are particularly handy within higher-order functions like map, filter, and reduce (the last of which is imported from functools in Python 3), where you need to apply a simple operation to each item in an iterable without the need for a separate named function. For example:

numbers = [1, 2, 3, 4, 5]

squared_numbers = list(map(lambda x: x*x, numbers))

print(squared_numbers) # Output: [1, 4, 9, 16, 25]

In a professional setting, lambda functions can significantly improve code readability and conciseness when used appropriately, especially in data manipulation tasks or functional programming paradigms.
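
Beyond map, lambdas commonly appear as sort keys and as arguments to filter and reduce. The following sketch uses an invented list of (name, age) records to show each use.

```python
from functools import reduce  # reduce is not a builtin in Python 3

# Hypothetical records: (name, age)
people = [('Alice', 25), ('Bob', 30), ('Charlie', 28)]

# sorted(): a lambda as the sort key (order by age, oldest first).
by_age_desc = sorted(people, key=lambda person: person[1], reverse=True)

# filter(): keep only the people older than 25.
older_than_25 = list(filter(lambda person: person[1] > 25, people))

# reduce(): fold the ages into a single total, starting from 0.
total_age = reduce(lambda running_total, person: running_total + person[1], people, 0)

print(by_age_desc)    # [('Bob', 30), ('Charlie', 28), ('Alice', 25)]
print(older_than_25)  # [('Bob', 30), ('Charlie', 28)]
print(total_age)      # 83
```

When the logic grows beyond a single short expression, a named function defined with def is usually the more readable choice.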

Q 9. How do you perform data manipulation with dplyr in R?

dplyr is a powerful R package for data manipulation. It provides a set of functions that operate on data frames in a consistent and intuitive way, making data wrangling much easier and more readable. It uses a grammar of data manipulation, making complex operations easily chained together.

Key dplyr verbs include:

- filter(): Selects rows based on conditions.

- select(): Selects columns.

- mutate(): Adds new columns or modifies existing ones.

- arrange(): Sorts rows.

- summarize(): Summarizes data (e.g., calculating means, sums).

- group_by(): Groups data for operations within groups.

Example: Let’s say we have a data frame called df with columns ‘name’, ‘age’, and ‘city’.

library(dplyr)

df <- data.frame(name = c("Alice", "Bob", "Charlie", "Alice"),

age = c(25, 30, 28, 25),

city = c("New York", "London", "Paris", "New York"))

# Filter for people older than 25

filtered_df <- df %>% filter(age > 25)

# Select name and city

selected_df <- df %>% select(name, city)

# Group by city and calculate the average age

grouped_df <- df %>% group_by(city) %>% summarize(mean_age = mean(age))

This demonstrates how efficiently dplyr allows us to perform complex data manipulations with a clear and readable syntax. This approach is crucial in professional data analysis workflows for its clarity and maintainability.

Q 10. Describe the process of building a linear regression model in Python.

Building a linear regression model in Python typically involves these steps:

- Import necessary libraries: import numpy as np and import statsmodels.api as sm (or from sklearn.linear_model import LinearRegression).

- Load and prepare your data: Ensure your data is in a suitable format (e.g., NumPy arrays or Pandas DataFrames). Handle missing values and outliers.

- Define dependent and independent variables: The dependent variable (y) is what you’re trying to predict, and the independent variables (X) are the predictors.

- Add a constant to the independent variables (for statsmodels): X = sm.add_constant(X). This adds an intercept term to the model.

- Create and fit the model:

- Using statsmodels: model = sm.OLS(y, X).fit()

- Using scikit-learn: model = LinearRegression().fit(X, y)

- Examine the model summary (statsmodels): print(model.summary()) provides valuable statistics like R-squared, p-values, coefficients, etc.

- Make predictions: predictions = model.predict(X) (or predictions = model.predict(X_test) for new data).

- Evaluate the model: Use metrics like Mean Squared Error (MSE), R-squared, etc., to assess the model’s performance.

Example using statsmodels:

import numpy as np

import statsmodels.api as sm

X = np.array([1, 2, 3, 4, 5])

y = np.array([2, 4, 5, 4, 5])

X = sm.add_constant(X)

model = sm.OLS(y, X).fit()

print(model.summary())

This provides a comprehensive framework for building and evaluating a linear regression model in Python. Remember to choose the appropriate library (statsmodels for detailed statistical analysis or scikit-learn for a simpler, more streamlined approach) based on your needs.
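
To make clear what the fit computes, the single-predictor case can be worked out by hand with the closed-form least-squares formulas. This sketch reuses the toy data from the statsmodels example above and needs no third-party libraries; the variable names are chosen here for illustration.

```python
# Same toy data as the statsmodels example above.
x_values = [1, 2, 3, 4, 5]
y_values = [2, 4, 5, 4, 5]

n = len(x_values)
x_mean = sum(x_values) / n
y_mean = sum(y_values) / n

# Closed-form ordinary least squares for one predictor:
#   slope = S_xy / S_xx,  intercept = y_mean - slope * x_mean
s_xy = sum((x - x_mean) * (y - y_mean) for x, y in zip(x_values, y_values))
s_xx = sum((x - x_mean) ** 2 for x in x_values)
slope = s_xy / s_xx
intercept = y_mean - slope * x_mean

# R-squared = 1 - (residual sum of squares / total sum of squares)
predictions = [intercept + slope * x for x in x_values]
ss_res = sum((y - p) ** 2 for y, p in zip(y_values, predictions))
ss_tot = sum((y - y_mean) ** 2 for y in y_values)
r_squared = 1 - ss_res / ss_tot

print(f'intercept={intercept:.2f}, slope={slope:.2f}, R^2={r_squared:.2f}')
# intercept=2.20, slope=0.60, R^2=0.60
```

These are the same coefficient and R-squared values that an OLS fit with an intercept would report for this data.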

Q 11. Explain different regularization techniques in machine learning.

Regularization techniques in machine learning help prevent overfitting by adding a penalty to the model’s complexity. Overfitting occurs when a model learns the training data too well, including noise, and performs poorly on unseen data. Regularization techniques constrain the magnitude of the model’s coefficients.

Common regularization techniques include:

- L1 Regularization (LASSO): Adds a penalty proportional to the absolute value of the coefficients. This tends to shrink some coefficients to exactly zero, performing feature selection.

- L2 Regularization (Ridge): Adds a penalty proportional to the square of the coefficients. This shrinks coefficients towards zero but rarely sets them to exactly zero.

- Elastic Net: A combination of L1 and L2 regularization, offering the benefits of both.

How they work: The penalty term is added to the model’s loss function. The model is then trained to minimize this augmented loss function, balancing model fit and coefficient size. The strength of the penalty (often denoted by λ or α) is a hyperparameter that needs to be tuned.

Example (L2 regularization with scikit-learn):

from sklearn.linear_model import Ridge

model = Ridge(alpha=1.0) # alpha controls the regularization strength

model.fit(X_train, y_train)

predictions = model.predict(X_test)

Choosing the right regularization technique and tuning the hyperparameter is crucial for optimal model performance and generalization to new, unseen data. Cross-validation is often used to find the best value for the regularization parameter (λ or α).
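
The different behaviour of L1 and L2 penalties shows up even with a single feature, where both have closed-form solutions. The sketch below assumes centred data, no intercept, and the objectives sum((y - b*x)^2) + lam * b^2 for Ridge and sum((y - b*x)^2) + lam * |b| for LASSO; libraries may scale the penalty differently, so lam here is not interchangeable with a library's alpha. The data values are made up.

```python
def ols_slope(s_xy, s_xx):
    """Unpenalised least-squares slope."""
    return s_xy / s_xx


def ridge_slope(s_xy, s_xx, lam):
    """Minimises sum((y - b*x)^2) + lam * b^2."""
    return s_xy / (s_xx + lam)


def lasso_slope(s_xy, s_xx, lam):
    """Minimises sum((y - b*x)^2) + lam * |b| (soft-thresholding)."""
    shrunk = max(abs(s_xy) - lam / 2, 0.0)
    sign = 1.0 if s_xy >= 0 else -1.0
    return sign * shrunk / s_xx


# Hypothetical centred data: x and y each have mean zero.
x_values = [-2.0, -1.0, 0.0, 1.0, 2.0]
y_values = [-1.0, -0.5, 0.2, 0.3, 1.0]
s_xy = sum(x * y for x, y in zip(x_values, y_values))
s_xx = sum(x * x for x in x_values)

for lam in (0.0, 5.0, 20.0):
    print(f'lam={lam:5.1f}  '
          f'ridge={ridge_slope(s_xy, s_xx, lam):.3f}  '
          f'lasso={lasso_slope(s_xy, s_xx, lam):.3f}')
```

As lam grows, the Ridge coefficient keeps shrinking but stays non-zero, while the LASSO coefficient reaches exactly zero once the penalty is large enough, which is the feature-selection effect described above.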

Q 12. How do you handle imbalanced datasets in machine learning?

Imbalanced datasets, where one class significantly outnumbers others, are a common problem in machine learning. This can lead to models that perform poorly on the minority class, which is often the class of interest. Several techniques can address this:

- Resampling:

- Oversampling: Increases the number of instances in the minority class (e.g., by duplication or generating synthetic samples using techniques like SMOTE).

- Undersampling: Reduces the number of instances in the majority class (e.g., by random removal or more sophisticated techniques like Tomek links).

- Cost-sensitive learning: Assigns different misclassification costs to different classes. This penalizes misclassifying the minority class more heavily, encouraging the model to pay more attention to it.

- Ensemble methods: Techniques like bagging and boosting can be effective, especially when combined with resampling.

- Anomaly detection techniques: If the minority class represents anomalies or outliers, algorithms designed for anomaly detection might be more suitable.

Example (Oversampling with SMOTE in Python):

from imblearn.over_sampling import SMOTE

smote = SMOTE(random_state=42)

X_resampled, y_resampled = smote.fit_resample(X_train, y_train)

The choice of technique depends on the specific dataset and the problem. It’s often beneficial to experiment with different approaches and evaluate their performance using appropriate metrics (e.g., precision, recall, F1-score, AUC).
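
For comparison with SMOTE, the simplest form of oversampling, duplicating minority rows at random, can be written with the standard library alone. This is a sketch, not a replacement for a maintained library; the function name random_oversample and the toy data are hypothetical.

```python
import random
from collections import Counter


def random_oversample(features, labels, seed=42):
    """Duplicate randomly chosen minority-class rows until every class
    has as many rows as the largest class."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())

    new_features, new_labels = list(features), list(labels)
    for label, count in counts.items():
        rows = [f for f, l in zip(features, labels) if l == label]
        for _ in range(target - count):
            new_features.append(rng.choice(rows))
            new_labels.append(label)
    return new_features, new_labels


# Hypothetical imbalanced training set: 8 negatives, 2 positives.
X_train = [[i] for i in range(10)]
y_train = [0] * 8 + [1] * 2

X_resampled, y_resampled = random_oversample(X_train, y_train)
print(Counter(y_train))      # Counter({0: 8, 1: 2})
print(Counter(y_resampled))  # Counter({0: 8, 1: 8})
```

Resampling should be applied to the training split only; resampling before splitting would leak duplicated rows into the evaluation data.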

Q 13. What are different model evaluation metrics for classification problems?

Model evaluation metrics for classification problems assess how well a model predicts class labels. The best choice depends on the specific problem and the relative importance of different types of errors.

- Accuracy: The percentage of correctly classified instances. Simple but can be misleading with imbalanced datasets.

- Precision: Out of all instances predicted as positive, what proportion was actually positive? Focuses on minimizing false positives.

- Recall (Sensitivity): Out of all actual positive instances, what proportion was correctly predicted as positive? Focuses on minimizing false negatives.

- F1-score: The harmonic mean of precision and recall. Balances precision and recall.

- AUC (Area Under the ROC Curve): Measures the model’s ability to distinguish between classes across different thresholds. A higher AUC indicates better performance.

- Confusion Matrix: A table showing the counts of true positives, true negatives, false positives, and false negatives. Provides a detailed breakdown of the model’s performance.

For imbalanced datasets, accuracy can be deceptive. Precision, recall, F1-score, and AUC are more informative.
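
A short calculation shows how accuracy can rank a useless model above a useful one. The sketch below computes the metrics from the confusion-matrix counts in plain Python; the labels and both sets of predictions are invented.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, tn, fp, fn) for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred, positive=1):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / len(y_true)
    # Undefined ratios (no predicted or no actual positives) are reported as 0.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {'accuracy': accuracy, 'precision': precision,
            'recall': recall, 'f1': f1}


# Hypothetical imbalanced labels: 95 negatives followed by 5 positives.
y_true = [0] * 95 + [1] * 5

# Model 1 always predicts the majority class.
always_negative = [0] * 100
# Model 2 finds 4 of the 5 positives at the cost of 5 false alarms.
better_model = [0] * 90 + [1] * 5 + [1] * 4 + [0] * 1

baseline = classification_metrics(y_true, always_negative)
improved = classification_metrics(y_true, better_model)
print(baseline)
print(improved)
```

The always-negative model scores 0.95 accuracy but zero recall, while the second model scores slightly lower accuracy (0.94) yet actually detects most positives.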

Q 14. Describe different approaches to feature scaling and selection.

Feature scaling and selection are crucial preprocessing steps in machine learning to improve model performance and efficiency.

Feature Scaling: Transforms features to a similar scale to prevent features with larger values from dominating the model. Common techniques include:

- Standardization (Z-score normalization): Centers the data around zero with a standard deviation of one: (x - mean) / std

- Min-Max scaling: Scales features to a specific range (usually 0-1): (x - min) / (max - min)

Feature Selection: Reduces the number of features by selecting the most relevant ones. This can improve model performance by reducing noise, overfitting, and computational cost. Methods include:

- Filter methods: Rank features based on statistical measures (e.g., correlation, chi-squared test) and select the top-ranked ones.

- Wrapper methods: Evaluate subsets of features using a model’s performance as a criterion (e.g., recursive feature elimination).

- Embedded methods: Integrate feature selection into the model training process (e.g., L1 regularization).

The choice of scaling and selection methods depends on the dataset and the chosen model. Experimentation and evaluation are key to finding the optimal approach.
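
The two scaling formulas above translate directly into code. The sketch below uses only the standard library and the population standard deviation; the income values are invented, and a constant feature (zero spread) would need special handling that is omitted here.

```python
import statistics


def standardize(values):
    """Z-score scaling: (x - mean) / std, with the population std."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(x - mean) / std for x in values]


def min_max_scale(values):
    """Min-max scaling to the range 0-1: (x - min) / (max - min)."""
    low, high = min(values), max(values)
    return [(x - low) / (high - low) for x in values]


# Hypothetical feature on a large scale.
incomes = [20_000, 40_000, 60_000, 80_000, 100_000]

z_scores = standardize(incomes)
scaled = min_max_scale(incomes)

print([round(z, 3) for z in z_scores])  # [-1.414, -0.707, 0.0, 0.707, 1.414]
print(scaled)                           # [0.0, 0.25, 0.5, 0.75, 1.0]
```

In a real pipeline the mean, standard deviation, minimum, and maximum should be computed on the training data and then reused for the test data.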

Q 15. Explain the difference between supervised and unsupervised machine learning.

Supervised and unsupervised learning are two fundamental approaches in machine learning, distinguished by how they use data to train models. Think of it like teaching a child:

- Supervised learning is like showing a child many labeled pictures of cats and dogs, telling them which is which. The model learns to classify new images based on these labeled examples. It’s ‘supervised’ because we provide the correct answers during training. We have both input features (image data) and the corresponding output (cat or dog). Common supervised tasks include classification (predicting categories) and regression (predicting continuous values).

- Unsupervised learning is like giving a child a box of mixed shapes and colors and asking them to group similar items. The model learns patterns and structures in the data without explicit labels. We only have input features, no predefined outputs. Common unsupervised tasks include clustering (grouping data points) and dimensionality reduction (reducing the number of variables while preserving important information).

Example:

- Supervised: Predicting house prices (regression) based on features like size, location, and number of bedrooms. The dataset includes the price (output) for each house (input).

- Unsupervised: Customer segmentation. Grouping customers based on their purchasing behavior without pre-defined segments. We only have data on customer purchases (input).

Q 16. How do you perform A/B testing?

A/B testing is a controlled experiment used to compare two versions of something (A and B) to determine which performs better. Imagine you’re testing two different website designs – one is version A and the other is version B. You randomly split your users into two groups: one group sees version A, and the other sees version B. You then measure key metrics (e.g., click-through rate, conversion rate) for both groups. Statistical tests are used to determine if the differences observed between groups are statistically significant or simply due to random chance.

Steps in A/B testing:

- Define your hypothesis: What are you trying to test? For example, ‘Version B will have a higher conversion rate than Version A.’

- Choose your metrics: What will you measure to determine success? (e.g., click-through rate, conversion rate, time on site)

- Design your experiment: Randomly assign users to groups A and B.

- Run the experiment: Collect data for a sufficient duration.

- Analyze the results: Use statistical tests (e.g., t-test, chi-squared test) to determine if the differences between groups are statistically significant.

- Interpret the results: Draw conclusions based on the analysis and make decisions based on your findings.

Example: A company wants to test two different email subject lines to see which one results in higher open rates. They randomly assign half their email list to receive email with subject line A and the other half to receive email with subject line B. They then compare the open rates of the two groups using a statistical test.
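
For the email example, one common choice of statistical test is a two-proportion z-test. The sketch below implements it with the standard library; the open counts are hypothetical, and the function name is introduced here for illustration.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Two-sided z-test for the difference between two proportions."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the null hypothesis that both groups share one rate.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value from the standard normal CDF.
    normal_cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return z, p_value


# Hypothetical results: subject line A opened by 200 of 1000 recipients,
# subject line B opened by 250 of 1000.
z_stat, p_value = two_proportion_z_test(200, 1000, 250, 1000)
print(f'z = {z_stat:.2f}, p = {p_value:.4f}')

if p_value < 0.05:
    print('The difference in open rates is statistically significant.')
else:
    print('The difference could plausibly be due to chance.')
```

With these made-up counts the p-value falls below the conventional 0.05 threshold, so the difference would be called significant; the significance level itself should be fixed before the experiment runs.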

Q 17. What are different methods for model deployment?

Model deployment is the process of integrating a trained machine learning model into a production environment so it can be used to make predictions on new, unseen data. There are several methods, each with its own strengths and weaknesses:

- Batch processing: The model processes a large batch of data at once. This is efficient for tasks where real-time predictions aren’t required, like generating monthly reports.

- Real-time processing: The model makes predictions immediately as new data arrives. This is crucial for applications like fraud detection or recommendation systems.

- REST APIs: The model is exposed as a web service through an API, allowing other applications to interact with it. This is a common approach for integrating machine learning models into existing systems.

- Serverless functions: The model runs in a serverless environment, scaling automatically based on demand. This is cost-effective and simplifies deployment.

- Embedded systems: The model is integrated directly into a device, such as a smartphone or IoT sensor. This is suitable for offline applications.

The choice of method depends on factors such as the model’s complexity, the volume of data, the required latency, and the overall system architecture. For instance, a real-time fraud detection system would require real-time processing and a low latency API, while a monthly sales forecasting model could use batch processing.

Q 18. How do you handle outliers in your dataset?

Outliers are data points that significantly deviate from the rest of the data. They can skew the results of your analysis and impact the performance of your models. There are several ways to handle them:

- Detection: Identifying outliers is the first step. Common techniques include box plots, scatter plots, Z-scores, and Interquartile Range (IQR).

- Removal: Simply removing outliers can be effective if they are truly errors or anomalies. However, be cautious, as removing too many data points can reduce the representativeness of your dataset.

- Transformation: Applying transformations such as logarithmic or Box-Cox transformations can sometimes reduce the impact of outliers by compressing the data’s range.

- Winsorizing/Trimming: Winsorizing replaces extreme values with less extreme ones (e.g., replacing every value above the 95th percentile with the 95th percentile value). Trimming involves removing a percentage of the highest and lowest values.

- Robust methods: Using statistical methods that are less sensitive to outliers, such as median instead of mean, or robust regression techniques.

Example: If you’re analyzing house prices, a house with a price significantly higher than the others could be an outlier. Before deciding to remove it, one should investigate the reason for the high price (e.g., is it unusually large, in a premium location, or is there an error in the data?). Depending on the investigation, you can remove the outlier, winsorize the price, or leave it as is if its high price is justified.
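
Detection with the IQR rule, followed by capping, can be sketched with the standard library. Note the assumptions: the prices are invented, the multiplier 1.5 is the conventional default rather than a requirement, and capping at the IQR fences is a winsorizing-style treatment rather than percentile-based winsorizing proper.

```python
import statistics


def iqr_bounds(values, k=1.5):
    """Return (lower, upper) fences: Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def cap_to_bounds(values, lower, upper):
    """Clamp every value into the range [lower, upper]."""
    return [min(max(v, lower), upper) for v in values]


# Hypothetical house prices in thousands; the last one is suspicious.
prices = [210, 225, 230, 240, 250, 255, 260, 275, 290, 1500]

lower, upper = iqr_bounds(prices)
outliers = [p for p in prices if p < lower or p > upper]
capped = cap_to_bounds(prices, lower, upper)

print(outliers)  # [1500]
print(capped)    # the last value is replaced by the upper fence
```

The code only flags and caps; deciding whether 1500 is an error or a genuine premium property still requires the investigation described above.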

Q 19. Describe different data visualization techniques in Python or R.

Data visualization is crucial for exploring, understanding, and communicating insights from data. Python’s matplotlib and seaborn, and R’s ggplot2 are popular libraries. Here are some techniques:

- Histograms: Show the distribution of a single numerical variable.

- Scatter plots: Show the relationship between two numerical variables.

- Box plots: Show the distribution of a numerical variable, including quartiles, median, and outliers.

- Bar charts: Compare the values of different categories.

- Line charts: Show trends over time.

- Heatmaps: Visualize correlation matrices or other two-dimensional data.

- Pair plots: Show scatter plots for all pairs of numerical variables in a dataset.

Python Example (using matplotlib):

import matplotlib.pyplot as plt

plt.hist([1, 2, 2, 3, 3, 3, 4, 4, 5], bins=5)

plt.show()

This code creates a simple histogram.

Q 20. Explain the concept of cross-validation.

Cross-validation is a resampling technique used to estimate how well a machine learning model generalizes and to detect overfitting. Overfitting happens when a model learns the training data too well and performs poorly on unseen data. Imagine teaching a child the answers to a specific test: they might ace that test but fail a similar one. Cross-validation helps us catch this before the model is relied on.

k-fold cross-validation: The most common type. The dataset is split into k folds (subsets). The model is trained k times, each time using k-1 folds for training and the remaining fold for testing. The average performance across all k folds is reported.

Example: 5-fold cross-validation means splitting your data into 5 folds. You train your model 5 times, each time testing on a different fold while training on the remaining 4. The final evaluation is the average of all 5 test results.

Cross-validation provides a more robust estimate of how well your model will generalize to new, unseen data compared to simply splitting the data into a training set and a test set once.
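
The splitting logic behind k-fold cross-validation is short enough to write out. In this sketch the helper k_fold_indices and the target values are hypothetical, the "model" is a stand-in that just predicts the training mean, and the data is not shuffled (real workflows usually shuffle first).

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k folds.

    Fold sizes differ by at most one when n_samples is not divisible by k.
    """
    indices = list(range(n_samples))
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


# Hypothetical regression targets.
targets = [3.0, 5.0, 4.0, 6.0, 5.0, 7.0, 6.0, 8.0, 7.0, 9.0]

fold_errors = []
for train_idx, test_idx in k_fold_indices(len(targets), k=5):
    # "Training": the stand-in model predicts the mean of the training targets.
    prediction = sum(targets[i] for i in train_idx) / len(train_idx)
    # "Testing": mean squared error on the held-out fold.
    mse = sum((targets[i] - prediction) ** 2 for i in test_idx) / len(test_idx)
    fold_errors.append(mse)

cv_score = sum(fold_errors) / len(fold_errors)
print(f'Per-fold MSE: {[round(e, 2) for e in fold_errors]}')
print(f'Cross-validated MSE: {cv_score:.2f}')
```

Every sample is used for testing exactly once and for training k-1 times, which is what makes the averaged score more stable than a single split.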

Q 21. What is the difference between type I and type II errors?

Type I and Type II errors are related to hypothesis testing in statistics. Think of it like a courtroom trial:

- Type I error (false positive): Rejecting a true null hypothesis. In a courtroom, this is convicting an innocent person.

- Type II error (false negative): Failing to reject a false null hypothesis. In a courtroom, this is acquitting a guilty person.

Example: Let’s say the null hypothesis is ‘the new drug is ineffective’.

- Type I error: We conclude the drug is effective (reject the null hypothesis), but it’s actually not.

- Type II error: We conclude the drug is ineffective (fail to reject the null hypothesis), but it actually is effective.

The probability of making a Type I error is denoted by α (alpha), and the probability of making a Type II error is denoted by β (beta). The power of a test (1-β) is the probability of correctly rejecting a false null hypothesis.

Q 22. How do you optimize model performance?

Optimizing model performance is crucial for building effective data science solutions. It involves a multi-faceted approach, focusing on improving accuracy, speed, and resource consumption. This typically involves several strategies, applied iteratively and evaluated rigorously.

- Feature Engineering: This is often the most impactful step. Creating new features from existing ones (e.g., combining age and income to create a ‘spending power’ feature) or transforming features (e.g., log-transforming skewed data) can significantly improve model performance. For example, instead of using raw date information, creating features like ‘day of the week’ or ‘month of the year’ can be more insightful for time series models.

- Hyperparameter Tuning: Machine learning models have adjustable parameters (hyperparameters) that control their learning process. Techniques like grid search, random search, or Bayesian optimization are used to find the optimal combination of hyperparameters that minimize error and maximize performance. Imagine tuning the knobs on a radio to find the clearest station – that’s similar to hyperparameter tuning.

- Model Selection: Choosing the right model for the data and task is vital. A linear model might suffice for simple relationships, while a complex neural network might be needed for intricate patterns. Experimenting with different algorithms (e.g., linear regression, decision trees, support vector machines, neural networks) and evaluating their performance on validation data helps determine the best fit.

- Regularization: This technique prevents overfitting by adding a penalty to the model’s complexity. L1 (LASSO) and L2 (Ridge) regularization are common methods that constrain the magnitude of model coefficients, discouraging the model from learning noise in the data. Think of it as adding a constraint to prevent the model from being too specific to the training data and not generalizing well to new data.

- Cross-Validation: To ensure reliable performance estimates, cross-validation techniques like k-fold cross-validation are used. The data is split into k subsets, and the model is trained and evaluated k times, each time using a different subset as the validation set. This gives a more robust estimate of the model’s generalization performance compared to using a single train-test split.

- Data Cleaning and Preprocessing: Addressing missing values, outliers, and inconsistencies in the data is paramount. Techniques such as imputation (filling missing values), outlier removal, and data transformation (e.g., standardization, normalization) are crucial for ensuring data quality and model robustness.

The optimization process is iterative. You’ll likely cycle through these steps, evaluating performance metrics (e.g., accuracy, precision, recall, F1-score, AUC) at each stage to determine the best approach.

Q 23. Explain the concept of bias-variance tradeoff.

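The bias-variance tradeoff is a fundamental concept in machine learning that describes the tension between two sources of prediction error: bias, the error that comes from overly simple assumptions and leads to underfitting, and variance, the error that comes from excessive sensitivity to the particular training set and leads to overfitting. Reducing one typically increases the other, so the two need to be balanced carefully.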

Bias refers to the error introduced by approximating a real-world problem, which is often complex, by a simplified model. High bias means the model is too simple and makes strong assumptions, leading to underfitting: it performs poorly on both training and testing data. Think of aiming at a target with a misaligned sight: your shots land close together but consistently away from the bullseye.

Variance refers to the model’s sensitivity to fluctuations in the training data. High variance means the model is too complex and learns the training data too well, including its noise. This leads to overfitting – it performs well on training data but poorly on unseen data. Think of aiming at the target precisely but with shaky hands – your shots will be scattered around the bullseye.

The goal is to find the sweet spot where the combined error from bias and variance is lowest, since that is the model that generalizes best to unseen data. This is achieved through careful model selection, feature engineering, regularization, and hyperparameter tuning. It’s often a compromise: reducing bias tends to increase variance, and vice versa. The optimal balance depends on the specific problem and data.
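A small simulation can make the two error sources concrete. The sketch below is only an illustration under assumed conditions: the true function, the noise level, the test point, and the two deliberately extreme models (a constant predictor and a nearest-point predictor) are all invented for this example. It refits both models on many noisy training sets and measures how far the average prediction is from the truth (squared bias) and how much predictions vary between training sets (variance).

```python
import random
from statistics import mean, pvariance


def true_f(x):
    """Assumed ground-truth relationship for the simulation."""
    return x * x


def simulate(n_trials=2000, noise_sd=1.0, x0=3.0, seed=0):
    """Estimate squared bias and variance of two models' predictions at x0."""
    rng = random.Random(seed)
    xs = [i * 0.5 for i in range(-6, 7)]  # fixed inputs from -3.0 to 3.0
    nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x0))
    preds = {"constant": [], "nearest": []}
    for _ in range(n_trials):
        # Draw a fresh noisy training set on the same inputs.
        ys = [true_f(x) + rng.gauss(0.0, noise_sd) for x in xs]
        # Very simple model: always predict the mean of the training targets.
        preds["constant"].append(mean(ys))
        # Very flexible model: copy the target of the closest training point.
        preds["nearest"].append(ys[nearest])
    return {
        name: {
            "bias_sq": (mean(p) - true_f(x0)) ** 2,
            "variance": pvariance(p),
        }
        for name, p in preds.items()
    }


results = simulate()
for name, stats in results.items():
    print(name, stats)
```

The constant model barely changes between training sets but is systematically wrong at the test point, while the nearest-point model is right on average but inherits all the noise of a single observation.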

Q 24. How do you debug code in Python or R?

Debugging code in Python and R involves a combination of techniques, and the specific approach depends on the nature of the error.

- Print Statements (print() in Python, print() in R): Strategic placement of print statements throughout the code can help trace the values of variables and identify where errors occur. This is a basic but powerful approach for understanding the code’s flow.

- Debuggers (pdb in Python, debug() in R): Integrated debuggers allow stepping through the code line by line, inspecting variables, setting breakpoints, and more. This provides a much more powerful way to analyze code behavior than simple print statements.

- Error Messages: Carefully reading and understanding error messages is crucial. They provide valuable information about the type of error, its location, and often suggestions for fixing it. Pay close attention to the line number and error type indicated in the message.

- Unit Tests: Writing unit tests ensures individual functions or modules of code work as intended. This can help identify problems early in the development process, before they escalate into larger issues.

- Linters (pylint for Python, lintr for R): These tools analyze code for style inconsistencies, potential bugs, and other problems. They can catch minor issues that might otherwise go unnoticed.

- Logging: For large projects, logging provides a structured way to record events and errors. This is valuable for tracking down issues that may not immediately appear during execution.

- Version Control (Git): Using Git helps track changes made to the code, allowing you to easily revert to previous versions if a new bug is introduced.

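Example in Python using pdb:

    import pdb

    def my_function(x, y):
        pdb.set_trace()  # Pause here and open the interactive debugger
        result = x + y
        return result

    my_function(5, 3)

The pdb.set_trace() line will pause execution, allowing you to inspect the variables and step through the code. In Python 3.7 and later, the built-in breakpoint() function does the same thing by default.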
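Unit tests and logging from the list above can be combined in a similarly small sketch. The safe_divide function and its test class are hypothetical names invented for this example; the point is only to show a test that checks both a return value and a logged warning using the standard library's unittest and logging modules.

```python
import logging
import unittest

logger = logging.getLogger("debug_demo")  # logger name is an arbitrary choice


def safe_divide(numerator, denominator):
    """Hypothetical helper: divide, or log a warning and return None on zero."""
    if denominator == 0:
        logger.warning("Division by zero requested: %s / %s", numerator, denominator)
        return None
    return numerator / denominator


class SafeDivideTests(unittest.TestCase):
    def test_regular_division(self):
        self.assertEqual(safe_divide(10, 4), 2.5)

    def test_zero_denominator_is_logged(self):
        # assertLogs fails the test if no WARNING is emitted inside the block.
        with self.assertLogs(logger, level="WARNING") as captured:
            self.assertIsNone(safe_divide(1, 0))
        self.assertIn("Division by zero", captured.output[0])


# Run the tests programmatically so the script keeps running afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(SafeDivideTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

In a real project the tests would usually live in their own file and be run with a test runner from the command line; running the suite inline here just keeps the example self-contained.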

Q 25. What are some common packages used in data science?

Data science relies on a rich ecosystem of packages in both Python and R. Here are some commonly used ones:

- Python:

  - NumPy: Fundamental package for numerical computation, providing support for arrays and matrices.

  - Pandas: Essential for data manipulation and analysis, offering DataFrame structures.

  - Scikit-learn: A comprehensive library for machine learning algorithms, including classification, regression, clustering, and dimensionality reduction.

  - Matplotlib & Seaborn: Powerful visualization libraries for creating static, interactive, and animated plots.

  - TensorFlow & PyTorch: Leading deep learning frameworks for building and training neural networks.

- R:

  - dplyr & tidyr: Form the core of data manipulation in the tidyverse framework, providing efficient ways to transform and reshape data.

  - ggplot2: A grammar of graphics-based visualization library, creating elegant and informative plots.

  - caret: Provides a unified interface for various machine learning algorithms, simplifying model training and evaluation.

  - randomForest: Implements random forest algorithms for classification and regression.

These packages provide the building blocks for most data science projects, offering efficient tools for data cleaning, transformation, analysis, modeling, and visualization.

Q 26. Describe your experience with version control systems like Git.

Version control systems like Git are indispensable for collaborative data science projects. I have extensive experience using Git for managing code, data, and project documentation.

- Branching and Merging: I regularly use branching to isolate new features or bug fixes, allowing parallel development without interfering with the main codebase. Merging changes back into the main branch is a familiar process, and I am comfortable resolving merge conflicts.

- Committing and Pushing: I commit my code changes frequently with descriptive messages, ensuring a clear record of the project’s evolution. Pushing changes to remote repositories (like GitHub, GitLab, or Bitbucket) is standard practice.

- Pull Requests: For collaborative projects, I use pull requests to propose changes and facilitate code reviews, ensuring quality and consistency.

- Conflict Resolution: I am proficient in resolving merge conflicts using various strategies, carefully reviewing and integrating conflicting changes.

- Collaboration: I frequently use Git for team collaborations, including managing shared repositories, coordinating work, and reviewing colleagues’ code.

My Git workflow enhances reproducibility, facilitates collaboration, and provides a safety net for managing changes, which is crucial for large and complex data science projects.

Q 27. Explain your approach to solving a complex data problem.

My approach to solving complex data problems involves a structured, iterative process.

- Understanding the Problem: This initial phase is critical. I begin by carefully defining the problem, identifying the key questions to be answered, and clarifying the desired outcomes. This often involves discussions with stakeholders to ensure a shared understanding of the goals.

- Data Acquisition and Exploration: I gather the necessary data, considering various sources and formats. Exploratory data analysis (EDA) is crucial here. I use visualization and summary statistics to gain insights into the data’s structure, identify patterns, and detect anomalies or missing values.

- Data Preprocessing and Cleaning: This stage involves handling missing values, outliers, and inconsistencies. I choose appropriate methods based on the nature of the data and the problem. Feature engineering often occurs here, creating new features to improve model performance.

- Model Selection and Training: I select appropriate machine learning models based on the problem type (classification, regression, clustering, etc.) and the characteristics of the data. I then train and evaluate the models using appropriate metrics, often employing cross-validation techniques to ensure reliable performance estimates.

- Model Evaluation and Selection: I compare the performance of different models, carefully considering various metrics to choose the best performing one. I assess not only the accuracy but also other factors such as interpretability, computational cost, and scalability.

- Deployment and Monitoring: Once a suitable model is chosen, I work on deploying it in a production environment, and then monitor its performance over time to ensure continued accuracy and reliability.

This iterative process allows me to refine my approach as I gain more understanding of the data and the problem. Flexibility and adaptation are key in addressing the challenges inherent in complex data problems.

Q 28. Walk me through a recent data science project you worked on.

In a recent project for a telecommunications company, I worked on developing a predictive model to identify customers at high risk of churn. The data included demographic information, billing history, usage patterns, customer service interactions, and promotional offers.

My approach involved:

- Data Exploration: I performed exploratory data analysis to understand the relationships between different variables and the characteristics of customers who churned. Visualizations helped uncover patterns and potential predictors of churn.

- Feature Engineering: I engineered several new features, including average call duration, frequency of customer service calls, and the ratio of data usage to plan limits. These features provided additional insights into customer behavior.

- Model Selection and Training: I experimented with various classification algorithms, including logistic regression, support vector machines, and random forests. I used cross-validation to evaluate their performance and selected a random forest model as it provided the best balance of accuracy and interpretability.

- Model Optimization: I fine-tuned the hyperparameters of the random forest model using techniques like grid search to optimize performance. Feature importance analysis helped identify the most influential predictors of churn.

- Deployment and Monitoring: The final model was integrated into the company’s customer relationship management system, providing real-time predictions of churn risk. I implemented monitoring to track the model’s performance over time and ensure continued accuracy.

The project resulted in a significant improvement in the company’s ability to identify and retain at-risk customers, leading to a demonstrable reduction in churn rates. This involved close collaboration with business stakeholders to ensure the model’s output was actionable and aligned with business goals.
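The feature engineering step described above can be sketched in a few lines of Python. Everything concrete here is an assumption made for illustration: the record layout, the field names, the example values, and the choice to express support-call frequency as calls per active month are not taken from the actual project.

```python
from statistics import mean


def engineer_features(customer):
    """Derive three churn-related features from one raw customer record."""
    calls = customer["call_durations_min"]
    return {
        # Average call duration, with 0.0 for customers who made no calls.
        "avg_call_duration_min": mean(calls) if calls else 0.0,
        # Frequency of customer service calls, normalized by tenure.
        "support_calls_per_month": customer["support_calls"] / customer["months_active"],
        # Share of the plan's data allowance that was actually used.
        "data_usage_ratio": customer["data_used_gb"] / customer["plan_limit_gb"],
    }


# Hypothetical record, invented for this example.
example_customer = {
    "call_durations_min": [2.0, 4.0, 6.0],
    "support_calls": 6,
    "months_active": 12,
    "data_used_gb": 9.0,
    "plan_limit_gb": 12.0,
}

features = engineer_features(example_customer)
print(features)
```

Ratios like these are often more informative to a classifier than the raw counts, because they put customers with different plans and tenures on a comparable scale.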

Key Topics to Learn for Proficient in Python or R Programming Languages Interview

- Data Structures and Algorithms: Understanding fundamental data structures (lists, dictionaries, arrays, data frames) and algorithms (searching, sorting) is crucial for efficient code and problem-solving. Practice implementing these in both Python and R.

- Data Manipulation and Cleaning: Mastering data cleaning techniques (handling missing values, outliers, inconsistencies) and data manipulation (filtering, sorting, aggregation) using libraries like pandas (Python) and dplyr (R) is essential for real-world projects.

- Data Visualization: Learn to create compelling visualizations using libraries like Matplotlib and Seaborn (Python) or ggplot2 (R). Practice creating various chart types to effectively communicate insights from data.

- Statistical Modeling and Analysis: Develop a strong understanding of statistical concepts and their application in R and Python. Familiarize yourself with regression analysis, hypothesis testing, and other relevant statistical methods.

- Programming Fundamentals: Reinforce your understanding of core programming concepts like control flow, functions, object-oriented programming, and modularity. Demonstrate clean, efficient, and well-documented code.

- Version Control (Git): Showcase your proficiency in using Git for collaborative coding and project management. Understanding branching, merging, and pull requests is highly valuable.

- Libraries and Packages (Specific to Python/R): Deepen your knowledge of relevant libraries specific to your chosen language. For Python, explore NumPy, Scikit-learn, TensorFlow/PyTorch (for machine learning). For R, explore caret, randomForest, and other relevant packages.

- Problem-Solving and Communication: Practice approaching problems systematically, breaking them down into smaller, manageable parts. Be prepared to articulate your thought process clearly and concisely.

Next Steps

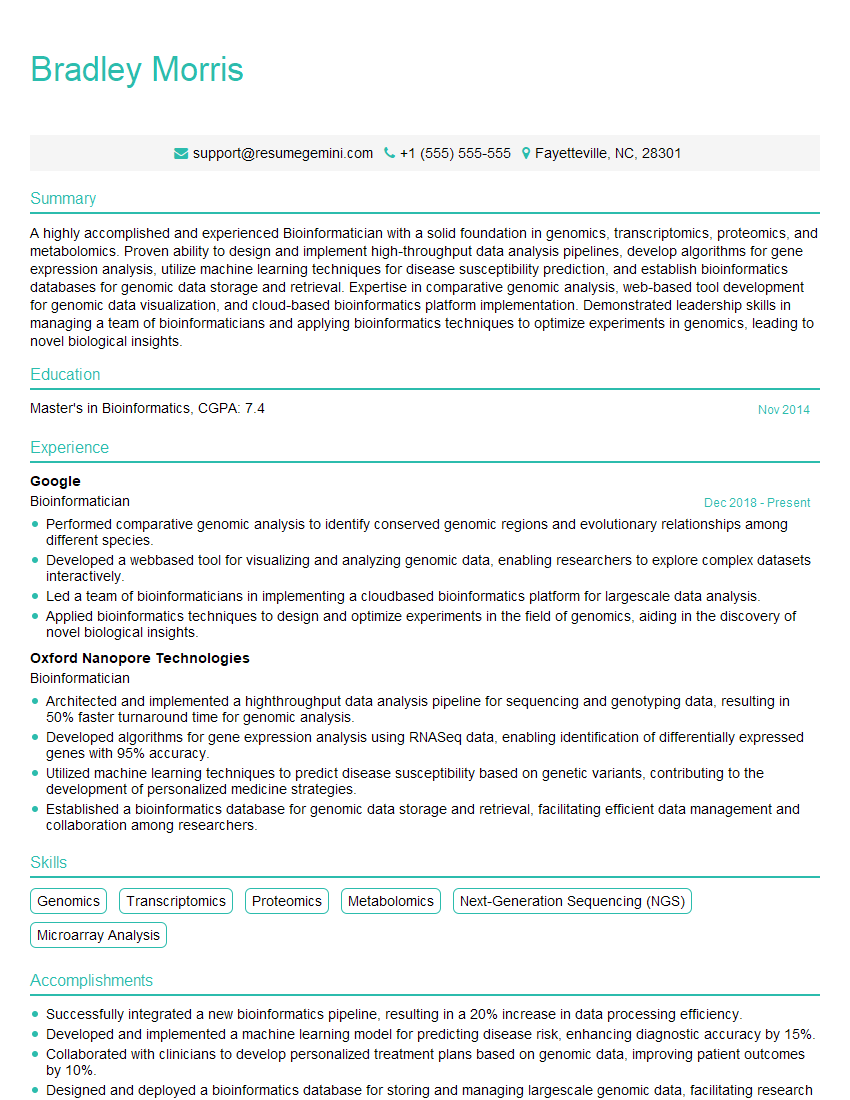

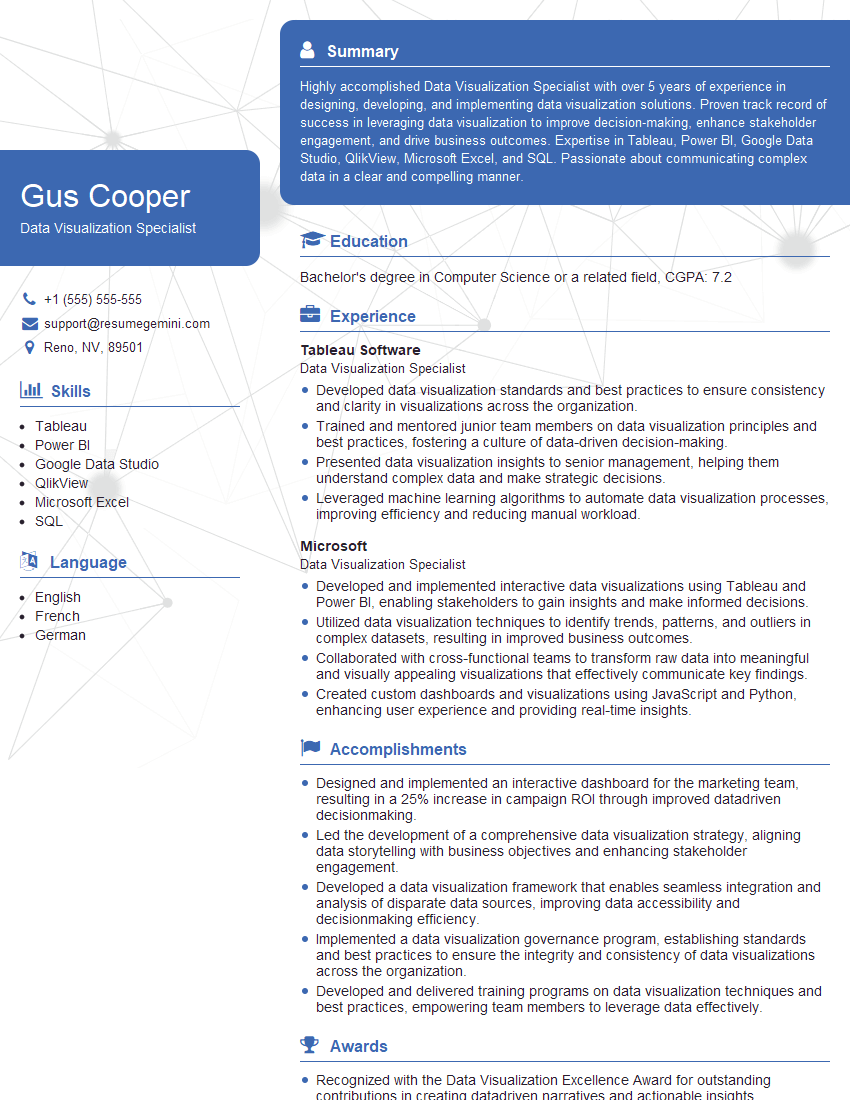

Mastering Python or R programming opens doors to exciting careers in data science, machine learning, and analytics. To significantly boost your job prospects, invest time in creating a professional, ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource that can help you build a compelling resume tailored to your specific qualifications. Examples of resumes tailored to candidates proficient in Python or R programming languages are available to guide you. Take this opportunity to showcase your abilities and land your dream job!
