Interviews are more than just a Q&A session; they're a chance to prove your worth. This blog covers essential interview questions on proficiency with relevant software and tools, along with expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in a “Proficient in Using Relevant Software and Tools” Interview

Q 1. Describe your experience with [Specific Software/Tool 1].

My experience with Adobe Photoshop spans over eight years, encompassing both personal projects and professional assignments. I’m proficient in all its core functionalities, including image manipulation, retouching, compositing, and creating digital paintings. I’ve used it extensively for tasks such as image enhancement for e-commerce product photography, creating marketing materials, and designing website banners. My workflow typically involves utilizing layers effectively, employing adjustment layers for non-destructive editing, and utilizing masking techniques for precise selection and manipulation.

For example, in a recent project for a clothing retailer, I used Photoshop to remove backgrounds from hundreds of product images, ensuring consistent and clean product presentations across their online store. I automated parts of this process using actions to increase efficiency.

Q 2. What are the limitations of [Specific Software/Tool 2]?

AutoCAD’s limitations primarily stem from its focus on 2D and 3D drafting. While powerful in its domain, it lacks the robust capabilities of dedicated 3D modeling software like Blender or Maya for organic modeling or complex animations. Its interface, while functional, can feel somewhat dated and less intuitive for users accustomed to more modern software design paradigms. Another limitation is the steep learning curve, especially for users unfamiliar with CAD principles. Finally, managing large, complex projects within AutoCAD can sometimes be cumbersome, demanding significant system resources and potentially leading to performance issues.

Q 3. How have you used [Specific Software/Tool 3] to solve a complex problem?

I used Python with its data analysis libraries (Pandas, NumPy, Scikit-learn) to solve a complex problem involving customer churn prediction for a telecommunications company. The challenge was to identify factors contributing to customers cancelling their services and to develop a predictive model to proactively mitigate churn.

My approach involved several steps: First, I cleaned and pre-processed a large dataset containing customer demographics, service usage patterns, and churn history. Then, using Pandas, I performed exploratory data analysis to identify correlations between different variables and churn rates. I leveraged NumPy for efficient numerical computations. Finally, I employed Scikit-learn to train several machine learning models (logistic regression, random forest, etc.), evaluating their performance using appropriate metrics. The resulting model allowed the company to proactively identify at-risk customers and offer tailored retention strategies.

Example Python code snippet (simplified):

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression

# ... (data loading, preprocessing, model training and evaluation)
```

Q 4. Compare and contrast [Software/Tool A] and [Software/Tool B].

Adobe Premiere Pro and DaVinci Resolve are both professional-grade video editing software, but they cater to slightly different needs and workflows. Premiere Pro, while offering excellent features, is often considered more user-friendly, especially for beginners. It seamlessly integrates with other Adobe Creative Cloud applications, facilitating a smooth workflow for those already using the suite. However, it can be more expensive than Resolve.

DaVinci Resolve, on the other hand, is a powerful video editor offered as a capable free version alongside a paid Studio edition, and it is known for its exceptional color grading capabilities and advanced features. Its professional-level color correction tools are widely regarded as among the best available, making it a favourite among colorists. While it has a steeper learning curve than Premiere Pro, its vast feature set and flexibility make it a compelling option for experienced editors. In essence, Premiere Pro prioritizes ease of use and integration within a creative ecosystem, while DaVinci Resolve champions power, flexibility, and color grading excellence.

Q 5. Explain your workflow when using [Specific Software/Tool 4].

My workflow when using Jira typically follows an Agile methodology. I begin by creating and assigning tasks to team members within a specific sprint. I then track progress using the Kanban board, moving tasks through different stages (To Do, In Progress, Review, Done). Regular sprint reviews and retrospectives are conducted to discuss progress, identify roadblocks, and refine processes. I leverage Jira’s reporting features to monitor team velocity, identify bottlenecks, and track project progress against deadlines. Communication is paramount, so I utilize Jira’s commenting and notification features to ensure transparency and efficient collaboration within the team.

Q 6. Describe a situation where you had to troubleshoot a problem with [Specific Software/Tool 5].

During a project using Microsoft SQL Server, I encountered a performance bottleneck affecting query execution times. After analyzing query execution plans and examining server logs, I identified a missing index on a frequently accessed table. This index was crucial for optimizing query performance. Adding the index resolved the performance issue significantly, reducing query execution times by over 80%. The solution involved understanding SQL Server’s query optimization mechanisms, utilizing system monitoring tools, and applying database administration best practices. This highlighted the importance of regular database maintenance and performance tuning.
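The effect of a missing index can be illustrated with a small sketch. It uses SQLite from Python's standard library as a stand-in for SQL Server, so the plan output differs from a real SQL Server execution plan, and the `orders` table and index name are hypothetical.

```python
import sqlite3

# SQLite stands in for SQL Server here; table and index names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)

def query_plan(connection):
    """Return the planner's description of how the lookup will be executed."""
    rows = connection.execute(
        "EXPLAIN QUERY PLAN SELECT total FROM orders WHERE customer_id = ?", (42,)
    ).fetchall()
    # The last column of each row holds the human-readable plan detail.
    return " | ".join(row[-1] for row in rows)

plan_before = query_plan(conn)   # a full table scan is expected without an index
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn)    # the planner should now search via the index

print("before:", plan_before)
print("after: ", plan_after)
```

Comparing the plan before and after the change is a quick way to confirm that the new index is actually being used rather than assuming it is.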

Q 7. What are some best practices for using [Specific Software/Tool 6]?

Best practices for using GitHub include: Employing a consistent branching strategy (e.g., Gitflow) for managing code changes and releases; writing clear and concise commit messages; regularly pushing changes to the remote repository; utilizing pull requests for code review and collaboration; adopting a clear naming convention for branches and repositories; leveraging GitHub’s issue tracking system for managing bugs and feature requests; and integrating continuous integration/continuous deployment (CI/CD) pipelines for automated testing and deployment. These practices promote efficient collaboration, maintain code quality, and enhance the overall software development process.

Q 8. How do you stay up-to-date with the latest developments in [Specific Software/Tool 7]?

Staying current with the latest developments in any software, say, a specific data visualization tool like Tableau, requires a multi-pronged approach. I regularly check Tableau’s official website for announcements, new feature releases, and updated documentation. I also actively participate in online communities like forums and dedicated subreddits, where users share tips, tricks, and troubleshoot issues. This helps me learn about workarounds, best practices, and even upcoming changes directly from the user base. Furthermore, I subscribe to relevant newsletters and industry blogs focused on data visualization and business intelligence to stay informed on broader trends and advancements that might impact Tableau’s development or my usage.

Attending webinars and online courses offered by Tableau or third-party trainers is another effective method. These often provide in-depth knowledge of new features and techniques, usually exceeding what’s found in the basic documentation. Finally, I dedicate time each week to experimenting with new features and exploring advanced functionalities to maintain a practical, hands-on understanding of the tool.

Q 9. What are the security considerations when using [Specific Software/Tool 8]?

Security considerations when using, for example, a cloud-based project management tool like Asana, are crucial. Firstly, I always ensure strong, unique passwords, utilizing password managers to help with this. Secondly, I meticulously review Asana’s security settings, enabling two-factor authentication (2FA) for enhanced protection. This adds an extra layer of security, requiring a second form of verification besides the password.

I’m also cautious about the information I share within Asana. Sensitive data, like financial records or personally identifiable information (PII), is generally avoided unless absolutely necessary and protected with additional access controls within the platform. Regularly reviewing access permissions for team members is vital; I ensure that users only have access to the information they need to perform their roles. Finally, I stay informed about Asana’s security updates and patches, ensuring our organization’s instance is kept up-to-date to mitigate potential vulnerabilities.

Q 10. How would you integrate [Software/Tool A] with [Software/Tool B]?

Integrating, say, a CRM like Salesforce with a marketing automation tool like HubSpot, can be achieved through several methods. The most common approach is using their respective APIs (Application Programming Interfaces). This allows for direct data exchange between the two systems. For example, we might use the Salesforce API to send customer data to HubSpot, automatically updating contact information or triggering automated email campaigns based on CRM activity. Conversely, HubSpot’s API can send lead data back to Salesforce, updating opportunity statuses or assigning leads to specific sales representatives.

Another method is using integration platforms like Zapier or Make (formerly Integromat), which offer a more user-friendly, no-code approach. These platforms use pre-built connectors to facilitate data transfer between different applications, often requiring less technical expertise. Choosing the right method depends on the complexity of the integration and the technical capabilities of the team. If a very customized and sophisticated integration is required, direct API usage would provide the flexibility needed, but it would demand more coding experience.
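Whichever method is chosen, most of the work in such an integration is mapping fields from one system to the other. The sketch below shows only that mapping step and makes no real API calls; the field names on both sides are hypothetical and would need to match the actual Salesforce and HubSpot schemas.

```python
# Hypothetical field names on both sides; real CRM and marketing schemas will differ.
FIELD_MAP = {
    "FirstName": "firstname",
    "LastName": "lastname",
    "Email": "email",
    "LeadStatus": "lead_status",
}

def to_marketing_contact(crm_record: dict) -> dict:
    """Translate a CRM-style record into a marketing-style contact payload."""
    contact = {
        target: crm_record[source]
        for source, target in FIELD_MAP.items()
        if crm_record.get(source) not in (None, "")
    }
    if "email" not in contact:
        # Email is treated as the matching key between systems in this sketch.
        raise ValueError("record has no email, cannot sync")
    contact["email"] = contact["email"].strip().lower()
    return contact

record = {"FirstName": "Ada", "LastName": "Lovelace", "Email": " Ada@Example.com ", "LeadStatus": ""}
print(to_marketing_contact(record))
```

Keeping the mapping in one table makes it easy to review with stakeholders and to extend when either system adds a field.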

Q 11. Explain the concept of [Specific Software/Tool concept].

Let’s say we’re discussing ‘version control’ in the context of Git. Version control is a system that records changes to a file or set of files over time so that you can recall specific versions later. Think of it like a ‘track changes’ feature on steroids, but for entire projects. It allows multiple developers to work on the same codebase simultaneously without overwriting each other’s work.

Git uses a system of branches and commits. A ‘commit’ is a snapshot of your project at a particular point in time. ‘Branches’ allow you to work on new features or bug fixes without affecting the main codebase (often called the ‘master’ or ‘main’ branch). Once changes are tested and approved, the branch can be merged back into the main branch. This ensures a clean and organized history of changes, making it easy to revert to previous versions if necessary. In a professional setting, Git is crucial for collaborative software development, providing a reliable and efficient way to manage code evolution.
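To make the idea of commits and branches concrete, here is a toy model in Python. It is a simplified illustration under stated assumptions, not how Git stores data on disk: the `ToyRepo` class and its methods are hypothetical, and merging is left out.

```python
import hashlib
import json

class ToyRepo:
    """A toy model of commits and branches; not how Git stores data on disk."""

    def __init__(self):
        self.commits = {}                 # commit id -> {"files", "parent", "message"}
        self.branches = {"main": None}    # branch name -> id of its latest commit
        self.current = "main"

    def commit(self, files: dict, message: str) -> str:
        parent = self.branches[self.current]
        payload = json.dumps({"files": files, "parent": parent, "message": message}, sort_keys=True)
        commit_id = hashlib.sha1(payload.encode()).hexdigest()[:8]
        self.commits[commit_id] = {"files": dict(files), "parent": parent, "message": message}
        self.branches[self.current] = commit_id   # the branch pointer moves forward
        return commit_id

    def branch(self, name: str) -> None:
        # A new branch is just another name for the current commit.
        self.branches[name] = self.branches[self.current]
        self.current = name

    def log(self, name: str) -> list:
        """Walk parent links from a branch tip back to the first commit."""
        messages, commit_id = [], self.branches[name]
        while commit_id is not None:
            messages.append(self.commits[commit_id]["message"])
            commit_id = self.commits[commit_id]["parent"]
        return messages

repo = ToyRepo()
repo.commit({"app.py": "print('v1')"}, "initial commit")
repo.branch("feature")
repo.commit({"app.py": "print('v2')"}, "add feature")
print(repo.log("main"))      # main is unaffected by work on the feature branch
print(repo.log("feature"))
```

The point of the sketch is that a branch is only a movable pointer to a snapshot, which is why creating one is cheap and why work on it leaves the main branch untouched.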

Q 12. What are the advantages and disadvantages of using [Specific Software/Tool 9]?

Let’s consider the advantages and disadvantages of using a specific type of database, say, a NoSQL database like MongoDB. A primary advantage is its scalability and flexibility. NoSQL databases are excellent for handling large volumes of unstructured or semi-structured data, unlike relational databases which struggle with such data. They also typically offer high availability and fault tolerance, making them suitable for applications that require continuous uptime. Furthermore, NoSQL databases often scale more easily and cost-effectively than relational databases, particularly for large datasets.

However, a key disadvantage is that many NoSQL databases relax the ACID guarantees (Atomicity, Consistency, Isolation, Durability) that relational databases provide by default, often favoring eventual consistency instead. MongoDB has supported multi-document ACID transactions since version 4.0, but they add overhead and are not the typical way of working with it. This means that data consistency can be more challenging to manage in NoSQL, especially in high-concurrency scenarios, and requires careful design and implementation. Moreover, querying NoSQL databases can sometimes be less efficient than relational databases for complex data relationships. The choice between NoSQL and relational databases often depends on the specific needs of the application.

Q 13. How would you handle a situation where [Specific Software/Tool 10] crashes?

If, for instance, our project management software, Jira, crashes, my first step is to check the Jira status page for any reported outages or maintenance. If an outage is confirmed, I wait for the service to recover. If no outage is reported, I then check our network connection to ensure Jira can be reached. A simple restart of the application or even the computer might resolve minor issues.

If the problem persists, I would investigate logs for any error messages. These usually provide clues about the cause of the crash. I might look for temporary files that may be causing issues and remove them, if appropriate. If the crash is persistent and self-troubleshooting fails, I would reach out to Jira’s support team or our IT department, providing detailed information about the crash and steps already taken to troubleshoot. It’s also crucial to have a backup plan or a secondary project management system readily available in case of unexpected outages, to minimize disruption to workflow.

Q 14. What are some common errors you encounter when using [Specific Software/Tool 11] and how do you fix them?

When using a scripting language like Python, common errors include syntax errors, runtime errors, and logic errors. Syntax errors are usually easy to spot; the Python interpreter will often highlight them precisely, indicating issues with grammar or punctuation in the code. For example, forgetting the colon at the end of an if statement will cause a syntax error: `if x > 5: print('x is greater than 5')` is correct, whereas `if x > 5 print('x is greater than 5')` raises a SyntaxError.

Runtime errors occur when the code has correct syntax, but encounters an unexpected situation during execution. For example, trying to divide by zero will raise a ZeroDivisionError. Logic errors are more subtle; the code runs without crashing, but produces incorrect results. This often involves a flaw in the algorithm or unexpected input handling. Debugging these usually requires careful analysis of the code logic and using debugging tools to step through the code execution, examining variable values at different points. Careful testing and using tools like linters and static analyzers can significantly reduce the number of these errors.
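The three categories can be shown side by side in a short sketch. The function names are hypothetical and chosen only for illustration.

```python
def classify_source(source: str) -> str:
    """Compile a snippet without running it and report whether it parses."""
    try:
        compile(source, "<snippet>", "exec")
    except SyntaxError:
        return "syntax error"
    return "ok"

def safe_divide(a: float, b: float):
    """Handle the runtime error instead of letting it crash the program."""
    try:
        return a / b
    except ZeroDivisionError:
        return None

def average_buggy(values):
    # Logic error: divides by a hard-coded 2 rather than the number of values.
    return sum(values) / 2

def average_fixed(values):
    return sum(values) / len(values)

print(classify_source("if x > 5: print('big')"))
print(classify_source("if x > 5 print('big')"))
print(safe_divide(1, 0))
print(average_buggy([2, 4, 6]), average_fixed([2, 4, 6]))
```

Note that `average_buggy` runs without any error message, which is exactly why logic errors are best caught by tests that compare results against known answers.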

Q 15. Describe your experience with version control systems like Git.

Git is my go-to version control system. I’m proficient in all its core functionalities, from basic branching and merging to more advanced techniques like rebasing and cherry-picking. My experience spans both individual and collaborative projects. Think of Git like a time machine for your code, allowing you to track changes, revert to previous versions, and collaborate seamlessly with others.

For instance, in a recent project developing a web application, we used Git’s branching strategy extensively. Each new feature was developed on a separate branch, allowing for parallel development without disrupting the main codebase. Once a feature was complete and thoroughly tested, we merged it into the main branch using pull requests, which ensured code review and surfaced merge conflicts before they reached the main branch. This workflow significantly improved our team’s efficiency and code quality. I’m also comfortable using Git platforms like GitHub and GitLab, leveraging their features for issue tracking and collaborative code review.

I routinely utilize commands like git clone, git add, git commit, git push, git pull, git merge, and git branch, and understand the importance of writing clear and concise commit messages to maintain a well-documented project history.

Q 16. How do you ensure data integrity when working with [Specific Software/Tool 12]?

Assuming ‘[Specific Software/Tool 12]’ refers to a database system like SQL Server or MySQL, data integrity is paramount. I employ a multi-layered approach to ensure its preservation. This includes using appropriate data types, constraints (like primary and foreign keys, unique constraints, and check constraints) to enforce data validity at the database level. I also use stored procedures and triggers to automate data validation and consistency checks whenever data is inserted, updated, or deleted.

Regular backups are crucial. I schedule automated backups to minimize data loss risk, employing a strategy that includes both full and differential backups. Furthermore, I leverage transaction management to ensure that operations are atomic – either all changes are committed successfully, or none are, preventing partial data updates that could compromise integrity. Finally, regular audits and data quality checks using specific tools or scripts are essential to identify and resolve any potential integrity issues proactively.
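Constraints and atomic transactions can be demonstrated in a few lines. This is a minimal sketch that uses SQLite from Python's standard library as a stand-in for SQL Server or MySQL; the `accounts` schema and the `transfer` helper are hypothetical.

```python
import sqlite3

# SQLite stands in for SQL Server or MySQL; the accounts schema is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " id INTEGER PRIMARY KEY,"
    " owner TEXT NOT NULL UNIQUE,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts (owner, balance) VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()

def transfer(connection, sender: str, receiver: str, amount: int) -> bool:
    """Move funds atomically: both updates commit together or neither does."""
    try:
        with connection:  # commits on success, rolls back if an exception escapes
            connection.execute("UPDATE accounts SET balance = balance + ? WHERE owner = ?", (amount, receiver))
            connection.execute("UPDATE accounts SET balance = balance - ? WHERE owner = ?", (amount, sender))
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected a negative balance; the credit was rolled back too.
        return False

def balances(connection) -> dict:
    return dict(connection.execute("SELECT owner, balance FROM accounts"))

print(transfer(conn, "alice", "bob", 30), balances(conn))
print(transfer(conn, "alice", "bob", 500), balances(conn))
```

The second transfer fails the CHECK constraint on the debit, and because both statements run in one transaction the earlier credit is undone as well, so no partial update is left behind.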

Q 17. What is your preferred method for debugging code in [Specific Software/Tool 13]?

My preferred debugging method in ‘[Specific Software/Tool 13]’ (assuming it’s an IDE like Visual Studio or Eclipse) relies heavily on the integrated debugger. I begin by setting breakpoints at strategic points in my code, allowing me to step through the execution line by line. I use the debugger’s watch window to monitor the values of variables and expressions at different stages.

If a specific error message is presented, I investigate the stack trace – a record of function calls leading to the error. This helps me pinpoint the exact location and cause of the problem. I also use logging statements within the code to track variable values and program flow during runtime, creating a trail to follow if the debugger doesn’t immediately provide enough information. For more complex issues, I may employ profiling tools to identify performance bottlenecks and optimize resource usage.
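Logging and stack traces complement the interactive debugger. The following sketch, with hypothetical function and logger names, logs intermediate values and then extracts the chain of function calls that led to a failure.

```python
import logging
import traceback

logging.basicConfig(level=logging.DEBUG, format="%(levelname)s %(funcName)s: %(message)s")
log = logging.getLogger("orders")   # hypothetical module name

def parse_quantity(raw: str) -> int:
    log.debug("parsing quantity from %r", raw)
    return int(raw)

def order_total(raw_quantity: str, unit_price: float) -> float:
    quantity = parse_quantity(raw_quantity)
    log.debug("quantity=%d unit_price=%.2f", quantity, unit_price)
    return quantity * unit_price

def failing_frames(raw_quantity: str) -> list:
    """Return the function names on the call stack at the point of failure."""
    try:
        order_total(raw_quantity, 9.99)
    except ValueError as exc:
        log.error("could not compute total: %s", exc)
        return [frame.name for frame in traceback.extract_tb(exc.__traceback__)]
    return []

print(failing_frames("three"))
```

Reading the frames from the outermost call to the innermost one shows where bad input entered the program and where it finally caused the error.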

Q 18. Explain your experience with Agile development methodologies and relevant tools.

I have extensive experience with Agile methodologies, primarily Scrum and Kanban. In Scrum, I’m comfortable participating in sprint planning, daily stand-ups, sprint reviews, and retrospectives. I understand the importance of iterative development, frequent feedback loops, and adapting to changing requirements. With Kanban, I’m familiar with visualizing workflow, limiting work in progress (WIP), and continuously improving efficiency.

I’ve used tools like Jira and Trello extensively for task management, sprint tracking, and collaboration. In one project, we used Jira to manage our Scrum sprints, creating user stories, assigning tasks, and tracking progress. Trello’s visual Kanban board helped us manage and prioritize tasks in a more flexible, less rigid approach. My experience with these tools extends to using them for both smaller teams and larger, more complex projects, allowing me to tailor the Agile approach to the specific project needs.

Q 19. How do you prioritize tasks when working on multiple projects involving different software tools?

Prioritizing tasks across multiple projects and diverse tools requires a structured approach. I typically use a combination of methods. First, I clearly define the goals and deadlines for each project. Then, I assess the urgency and importance of each task using a prioritization framework, often the MoSCoW method (Must have, Should have, Could have, Won’t have). This allows for a clear understanding of which tasks are critical and which can be deferred.

Next, I allocate time blocks for focused work on specific projects, ensuring that I have dedicated time slots for each. I also utilize task management tools like Asana or Todoist to track my progress, set reminders, and maintain a clear overview of all my tasks. Regularly reviewing and adjusting my priorities as needed is crucial; project dependencies and shifting priorities might necessitate re-evaluation and a flexible approach.
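As a rough illustration of this approach, the sketch below orders tasks by MoSCoW category and then by deadline. The `Task` fields, the ranking table, and the sample tasks are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date

# Lower rank means higher priority; "wont" items are kept only for the record.
MOSCOW_RANK = {"must": 0, "should": 1, "could": 2, "wont": 3}

@dataclass
class Task:
    name: str
    project: str
    category: str   # one of the MOSCOW_RANK keys
    due: date

def prioritize(tasks):
    """Order tasks by MoSCoW category first, then by the nearest deadline."""
    active = [t for t in tasks if t.category != "wont"]
    return sorted(active, key=lambda t: (MOSCOW_RANK[t.category], t.due))

tasks = [  # hypothetical tasks across two projects
    Task("Polish dashboard colors", "analytics", "could", date(2024, 5, 1)),
    Task("Fix login outage", "webshop", "must", date(2024, 5, 3)),
    Task("Migrate reports", "analytics", "must", date(2024, 5, 2)),
    Task("Rewrite legacy importer", "webshop", "wont", date(2024, 6, 1)),
    Task("Add export button", "webshop", "should", date(2024, 5, 2)),
]
for task in prioritize(tasks):
    print(task.category, task.due, task.name)
```

Sorting on category before deadline means a "could have" item never displaces a "must have" one, even when its due date is earlier.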

Q 20. Describe a time you had to learn a new software tool quickly. How did you approach it?

In a previous role, I was tasked with integrating a new CRM system (Customer Relationship Management) into our existing workflow within a very tight deadline. I had little prior experience with this specific CRM. My approach was systematic and focused on learning the essentials first. I started by identifying the core functionalities I needed to master for the integration. The official documentation was my first port of call, supplemented by online tutorials and videos.

I worked through the provided examples and tutorials, practicing the key features until I felt comfortable. I also broke down the integration task into smaller, manageable sub-tasks, testing each component individually before integrating them. I wasn’t afraid to experiment and make mistakes along the way, because they turned out to be valuable learning experiences. Crucially, I sought help from colleagues experienced with the CRM whenever I encountered roadblocks. This combination of self-learning and collaborative support allowed me to integrate the CRM effectively within the short timeframe.

Q 21. What are your preferred methods for documenting your work with software tools?

My preferred method for documenting work involves a combination of approaches tailored to the specific software and project. For code, I rely heavily on inline comments within the code itself to explain complex logic or non-obvious functionalities. I also maintain a detailed README file for each project, outlining the project’s purpose, setup instructions, usage guidelines, and any relevant dependencies. This ensures that others can easily understand and work with the code even if they haven’t been directly involved in the development.

For more complex projects or those involving multiple software tools, I create more comprehensive documentation using tools like Confluence or Microsoft Word, including diagrams, flowcharts, and detailed descriptions of the system architecture and workflows. The key is to make documentation clear, concise, and accessible to the intended audience. Keeping documentation updated consistently is essential; otherwise it becomes stale and loses its value.

Q 22. How do you handle conflicting requirements when using multiple software tools in a project?

Conflicting requirements are a common occurrence when integrating multiple software tools. My approach involves a structured process to prioritize and resolve them. First, I meticulously document all requirements from each tool, noting any potential conflicts. Then, I schedule a meeting with stakeholders (developers, product owners, clients) to discuss the conflicts. We use a prioritization matrix, weighing factors like business value, technical feasibility, and deadlines. We then collaboratively decide on a solution: This could involve compromising, re-prioritizing, or even rejecting certain requirements. For example, in a project integrating CRM and marketing automation software, we might have conflicting requirements around data field naming conventions. Through discussion, we might decide to standardize on the CRM’s naming convention for consistency across the platform.

Once a decision is reached, it’s documented and tracked using a project management tool like Jira or Asana to ensure everyone’s on the same page and that the solution is implemented consistently.

Q 23. What are some common challenges you face when working with large datasets and relevant tools?

Working with large datasets presents several challenges, most notably computational limitations, data quality issues, and storage costs. Computational challenges often arise with tools that aren’t optimized for big data processing. This is where tools like Apache Spark or cloud-based solutions like AWS EMR come in. They’re designed to handle the massive scale efficiently. For example, processing terabytes of customer data for a recommendation engine would require a distributed processing framework.

Data quality is another hurdle – large datasets are prone to inconsistencies, missing values, and outliers. We address this by employing data cleaning and validation techniques during the ETL (Extract, Transform, Load) process. Tools like SQL, Python with Pandas, and data quality assessment tools are crucial. Finally, storage costs can escalate rapidly. We mitigate this by using cost-effective cloud storage solutions and employing strategies like data compression and archival for less frequently accessed data.
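At a smaller scale, the same ideas of streaming the data and validating it during processing can be sketched with Python's standard library alone. This is not a replacement for Spark or Pandas; the column names `customer_id` and `usage_gb` are hypothetical.

```python
import csv
import io
from collections import defaultdict

def summarize_usage(lines):
    """Stream rows one at a time, skipping invalid ones, and total usage per customer."""
    totals = defaultdict(float)
    skipped = 0
    for row in csv.DictReader(lines):
        try:
            usage = float(row["usage_gb"])
        except (KeyError, TypeError, ValueError):
            skipped += 1          # missing or non-numeric value
            continue
        if usage < 0 or not row.get("customer_id"):
            skipped += 1          # out-of-range value or missing key
            continue
        totals[row["customer_id"]] += usage
    return dict(totals), skipped

# A small in-memory sample; a real file object can be passed in the same way.
sample = io.StringIO(
    "customer_id,usage_gb\n"
    "c1,1.5\n"
    "c2,abc\n"
    "c1,2.5\n"
    ",3.0\n"
    "c3,-4\n"
    "c2,7\n"
)
print(summarize_usage(sample))
```

Because rows are read one at a time, memory use stays flat regardless of file size, and the count of skipped rows gives a simple data quality signal to monitor.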

Q 24. Explain your experience with API integration and relevant tools.

API integration is a core skill in my toolkit. I have extensive experience integrating various APIs using tools like Postman for testing and debugging, and programming languages such as Python and Java. I follow a structured approach that begins with carefully reviewing the API documentation to understand endpoints, authentication methods, and data formats. After that, I write well-documented code to send requests, process responses, and handle errors.

For example, I once integrated a payment gateway API into an e-commerce application. I used Python’s `requests` library to make secure API calls, handling potential errors like network issues or invalid payment information. Postman was invaluable during the development phase for testing different API scenarios and quickly identifying and resolving issues before integrating into the main application. Throughout the process, I prioritize security best practices, such as using secure HTTPS connections and avoiding hardcoding sensitive information.
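Handling network issues usually means retrying transient failures while surfacing permanent ones immediately. The sketch below shows one way to do that; to stay self-contained it uses the standard library's `urllib` rather than `requests`, and the `fetch` and `call_with_retries` helpers and the endpoint URL are hypothetical.

```python
import time
import urllib.error
import urllib.request

def fetch(url: str, timeout: float = 5.0) -> bytes:
    """Make one HTTPS GET request; raises URLError or HTTPError on failure."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()

def call_with_retries(send, attempts: int = 3, base_delay: float = 0.5):
    """Call send() and retry transient network failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return send()
        except urllib.error.HTTPError as exc:
            # Client errors (4xx) will not succeed on retry, so surface them at once.
            if exc.code < 500 or attempt == attempts:
                raise
        except (urllib.error.URLError, TimeoutError):
            if attempt == attempts:
                raise
        time.sleep(base_delay * 2 ** (attempt - 1))

# Usage with a hypothetical endpoint:
# body = call_with_retries(lambda: fetch("https://api.example.com/payments/123"))
```

Passing the request in as a callable keeps the retry logic independent of any particular HTTP library, so the same wrapper would work around a `requests` call. For payment operations in particular, retries are only safe when the API call is idempotent.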

Q 25. Describe your experience with cloud computing platforms and tools (e.g., AWS, Azure, GCP).

I have substantial experience with AWS, Azure, and GCP, deploying and managing applications across these platforms. My experience spans various services, including compute (EC2, Azure VMs, Compute Engine), storage (S3, Azure Blob Storage, Cloud Storage), databases (RDS, Azure SQL Database, Cloud SQL), and serverless computing (Lambda, Azure Functions, Cloud Functions).

For instance, I recently migrated a legacy application to AWS. This involved creating EC2 instances, configuring network security groups, setting up an RDS instance for the database, and implementing CI/CD pipelines using AWS CodePipeline and CodeDeploy. The key to success in cloud environments is understanding cost optimization strategies, security best practices, and leveraging the strengths of each platform’s specific services. Choosing the right service for the job is critical to ensuring efficiency and cost-effectiveness. For example, using serverless functions for event-driven tasks can significantly reduce infrastructure costs compared to running always-on virtual machines.

Q 26. How do you ensure the scalability and maintainability of software solutions you develop?

Scalability and maintainability are paramount considerations in software development. I achieve this through a combination of architectural choices, coding practices, and operational strategies. Architecturally, I favor microservices over monolithic applications whenever feasible. Microservices allow for independent scaling of individual components based on their specific needs, improving resource utilization and resilience.

From a coding perspective, I employ design patterns like MVC (Model-View-Controller) to enhance organization and maintainability. Using version control (Git) meticulously, writing modular, well-documented code, and following coding style guides are essential. Operationally, continuous integration and continuous deployment (CI/CD) pipelines automate testing and deployment processes, reducing errors and speeding up release cycles. Regular code reviews and automated testing (unit, integration, and system tests) are crucial for identifying and addressing issues early. Thorough documentation, including API specifications and deployment guides, greatly aids in future maintenance and collaboration.

Q 27. What are some best practices for testing software applications and tools?

Robust testing is fundamental to delivering high-quality software. My approach involves a multi-layered testing strategy comprising unit, integration, system, and user acceptance testing (UAT). Unit testing focuses on individual components; integration testing verifies the interactions between components; system testing assesses the overall functionality; and UAT ensures the software meets user requirements.

I utilize various tools to support these testing phases, including unit testing frameworks (JUnit, pytest), automated testing tools (Selenium, Appium), and test management tools (TestRail, Zephyr). Adopting Test-Driven Development (TDD) allows for early error detection and ensures code meets requirements. Additionally, I advocate for employing static code analysis tools to identify potential bugs and vulnerabilities before testing even begins. The goal is to create a comprehensive testing suite that minimizes bugs and maximizes the quality of the delivered software. Regular code reviews also form an important part of the quality assurance process.
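A unit test at the lowest layer of this strategy might look like the following sketch. It uses Python's built-in `unittest` rather than pytest so that it runs without extra packages; the `apply_discount` function and its business rule are hypothetical.

```python
import unittest

def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount (hypothetical business rule)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)

class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(19.99, 0), 19.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)

# Run the suite programmatically so the result can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("all passed:", result.wasSuccessful())
```

Each test covers one behavior (a typical case, a boundary case, and an invalid input), which keeps failures easy to interpret. Under TDD, these tests would be written before the function body.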

Q 28. Explain your approach to troubleshooting network connectivity issues related to software applications.

Troubleshooting network connectivity issues starts with a systematic approach. I begin by identifying the scope of the problem – is it affecting only a single application, a group of applications, or the entire network? I then use tools like `ping`, `traceroute`/`tracert` (to trace the path of packets), and `netstat` (to view network connections) to pinpoint the location of the fault.

For example, if an application can’t connect to a remote server, I’d first use `ping` to check if the server is reachable. If not, the problem might be with the network infrastructure itself. If `ping` is successful but the application still fails, `traceroute` can help identify any network hops with high latency or packet loss. I also examine firewall rules, DNS settings, and network configuration files, looking for any misconfigurations that might be blocking connectivity. For cloud-based applications, I’d investigate the cloud provider’s network monitoring tools and logs for clues. The process typically involves carefully analyzing logs, network statistics, and configurations, systematically checking each element until the cause is isolated and resolved.
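The same layered checks can be scripted. The sketch below is a simplified, hypothetical `diagnose` helper that checks name resolution first and then a TCP connection; it complements `ping` and `traceroute` but does not replace them, since it tests only one host and port.

```python
import socket

def diagnose(host: str, port: int, timeout: float = 2.0) -> str:
    """Check name resolution first, then a TCP connection, and report the first failure."""
    try:
        address = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)[0][4][0]
    except socket.gaierror:
        return "dns_failed"          # name resolution problem
    try:
        with socket.create_connection((address, port), timeout=timeout):
            return "reachable"
    except ConnectionRefusedError:
        return "port_closed"         # host answered, nothing is listening on the port
    except OSError:
        return "unreachable"         # timeout, routing or firewall problem

print(diagnose("127.0.0.1", 9))      # result depends on the local machine
```

Distinguishing a refused connection from a timeout is useful in practice: a refusal suggests the service is down or bound to another port, while a timeout more often points to a firewall or routing problem.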

Key Topics to Learn for “Proficient in Using Relevant Software and Tools” Interview

- Understanding Software Proficiency: Go beyond simply listing software; demonstrate a deep understanding of your skills. This includes knowing the nuances of each tool, its limitations, and when it’s the most effective solution.

- Practical Application & Use Cases: Prepare examples from your past experiences showcasing how you’ve utilized these tools to solve problems, improve efficiency, or achieve specific goals. Quantify your accomplishments whenever possible.

- Problem-Solving with Software: Be ready to discuss troubleshooting scenarios. How have you overcome software glitches or limitations? Can you explain your approach to debugging and identifying solutions?

- Software Integration & Workflow: Many roles require integrating various software applications. Showcase your ability to streamline workflows and collaborate effectively using different tools.

- Staying Current: Demonstrate your commitment to continuous learning by discussing any recent updates, new features, or advancements in the software you use. This shows initiative and adaptability.

- Adaptability to New Tools: Interviewers often assess your ability to learn new software quickly. Be prepared to discuss your learning style and experience with adopting unfamiliar technologies.

Next Steps

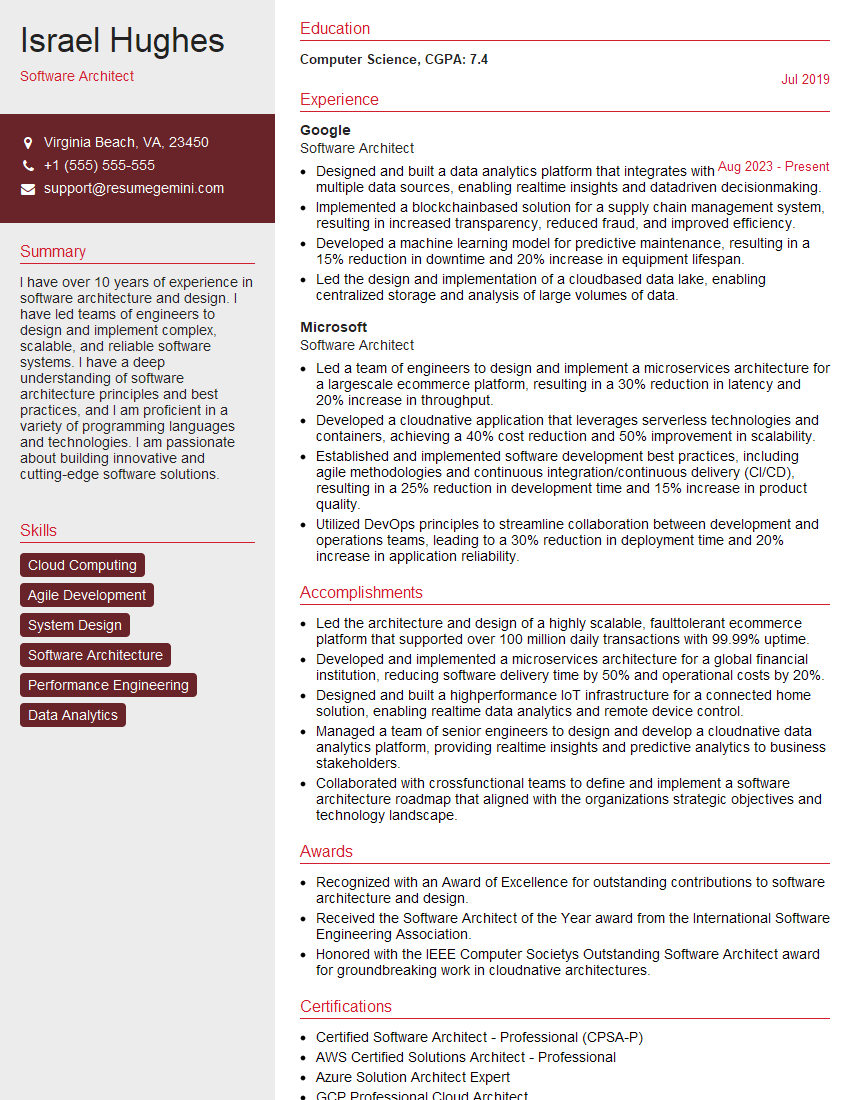

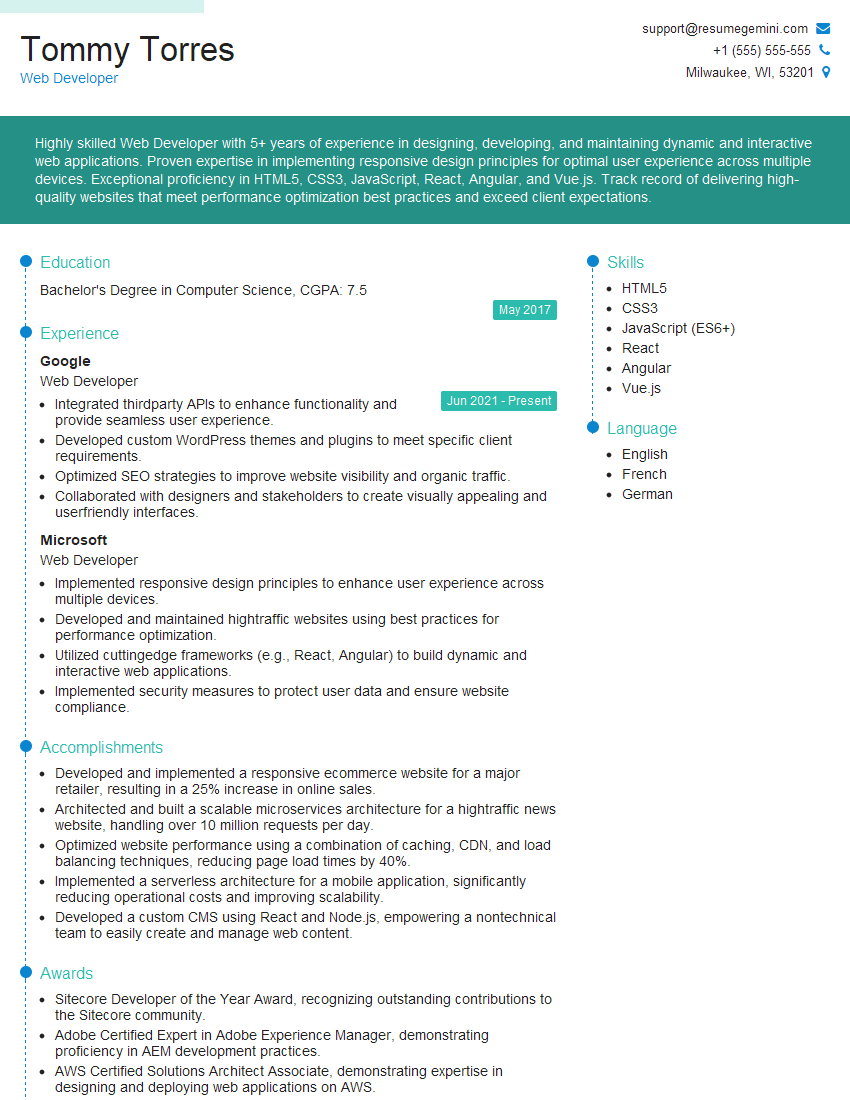

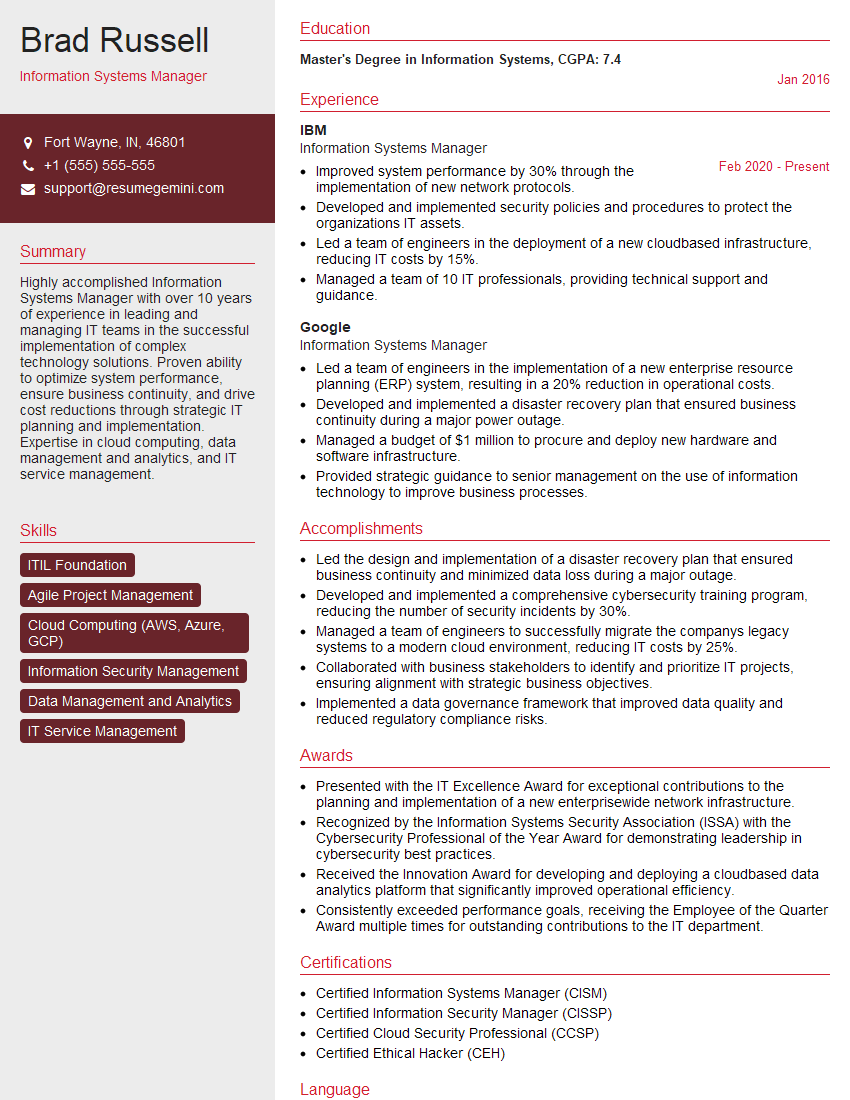

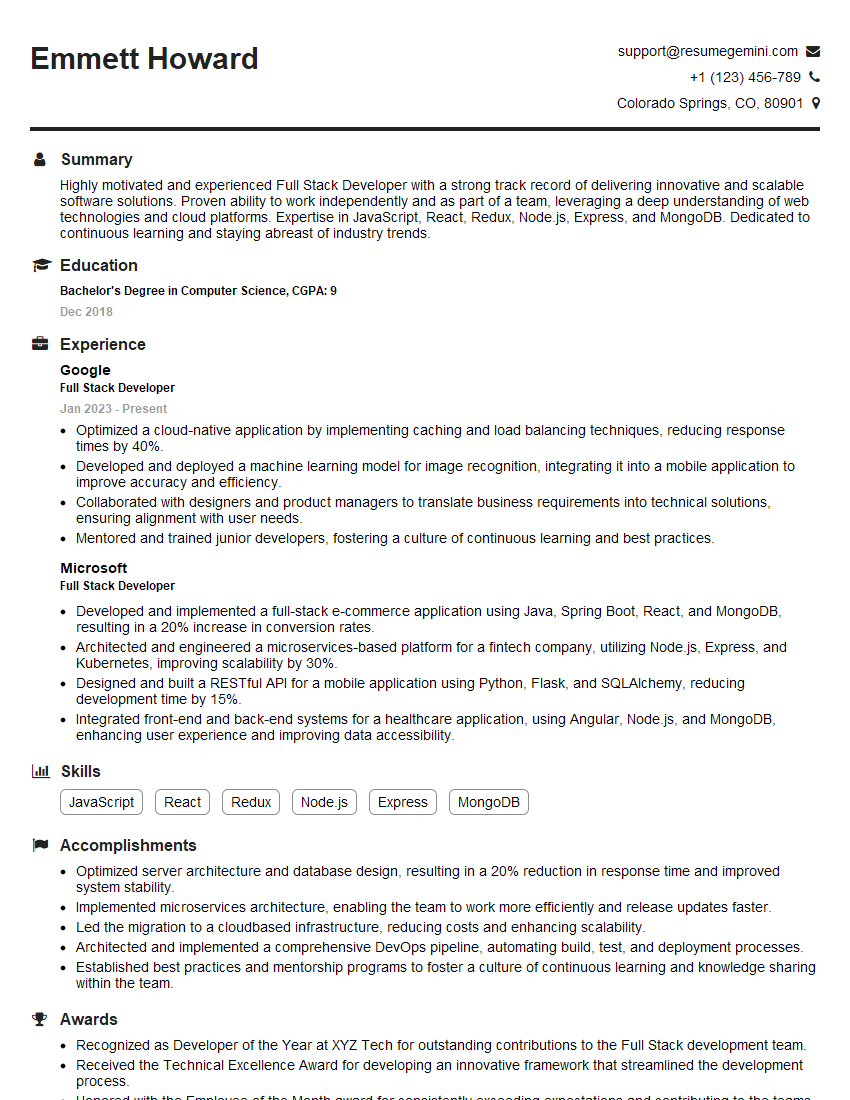

Mastering relevant software and tools is crucial for career advancement. Proficiency in these areas demonstrates valuable skills, increases your marketability, and opens doors to exciting opportunities. To maximize your job prospects, focus on creating an ATS-friendly resume that highlights your key skills and accomplishments. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. We provide examples of resumes tailored to showcasing proficiency in using relevant software and tools to help you get started.
