Preparation is the key to success in any interview. In this post, we’ll explore crucial Prototyping and Testing Techniques interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Prototyping and Testing Techniques Interview

Q 1. Explain the difference between low-fidelity and high-fidelity prototyping.

Low-fidelity and high-fidelity prototypes represent different stages in the design process, varying primarily in their level of detail and visual appeal. Think of it like sketching a house versus creating a detailed architectural model.

Low-fidelity prototypes are quick, rough representations of an idea. They prioritize functionality over visual polish, often using simple tools like paper sketches, whiteboards, or basic wireframing software. The goal is to quickly test core concepts and gather feedback early on. For example, a low-fidelity prototype for a mobile app might be a series of hand-drawn screens showing the basic layout and navigation flow.

High-fidelity prototypes, on the other hand, are highly detailed and visually polished representations of the final product. They closely resemble the final design in terms of look and feel, often incorporating interactive elements and realistic animations. They’re used for testing usability and user experience in a more realistic setting. A high-fidelity prototype might be an interactive mockup of a website built using tools like Figma or Adobe XD, complete with realistic images and functional buttons.

Choosing between low and high fidelity depends on the project phase and goals. Early in the process, low-fidelity is ideal for rapid iteration and testing core concepts. As the design matures, high-fidelity prototypes help refine the user experience and gather detailed feedback before committing to development.

Q 2. Describe your experience with A/B testing.

A/B testing, also known as split testing, is a crucial method for comparing two versions of a design or feature to determine which performs better. I’ve extensively used A/B testing in various projects to optimize website conversion rates, improve user engagement, and enhance the overall user experience.

In a typical project, I would identify a specific element to test, such as a button’s color, the placement of a call-to-action, or the wording of a headline. Then, I’d create two versions (A and B) and randomly assign users to one version or the other. By tracking key metrics like click-through rates, conversion rates, and time spent on page, I could analyze which version performed better and make data-driven decisions. For example, I once A/B tested two different layouts for an e-commerce product page. Version B, which featured larger product images and a clearer call-to-action, resulted in a 15% increase in conversion rates.

My experience involves defining clear hypotheses, selecting relevant metrics, ensuring statistically significant sample sizes, and accurately analyzing the results to avoid biased conclusions. Proper A/B testing requires meticulous planning and execution to guarantee reliable results.
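To make the point about statistical significance concrete, here is a minimal sketch of a two-proportion z-test, one common way to check whether a difference in conversion rates is likely to be real. The visitor and conversion counts are hypothetical and were chosen only to mirror a 15% relative lift; the function name is illustrative, not taken from any particular library.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical data: 10,000 visitors per variant, 4.0% vs 4.6% conversion.
z, p = two_proportion_z_test(conv_a=400, n_a=10_000, conv_b=460, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these assumed numbers the p-value falls below the conventional 0.05 threshold. With a tenth of the traffic, the same relative lift would not, which is why sample size is worth planning before the test starts.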

Q 3. What are some common usability testing methods?

Several usability testing methods exist, each with its own strengths and weaknesses. The choice depends on project constraints, budget, and the specific information needed.

- Moderated usability testing: A researcher guides participants through tasks, observing their behavior and asking questions. This provides rich qualitative data but is more expensive and time-consuming.

- Unmoderated usability testing: Participants complete tasks independently, usually through online platforms. This is scalable and cost-effective, but offers less opportunity for in-depth qualitative feedback.

- Heuristic evaluation: Usability experts evaluate the design based on established usability principles (heuristics). This is quick and cost-effective but relies on the expertise of the evaluators.

- Cognitive walkthrough: Researchers simulate users’ thought processes while completing tasks, identifying potential usability issues. This method is effective in revealing early design flaws.

- Eye-tracking: This technology records participants’ eye movements to understand their visual attention and identify areas of focus or confusion. This provides detailed insight into visual attention, but can be expensive.

I’ve successfully employed all these methods in various projects, choosing the most appropriate approach based on the specific context and available resources.

Q 4. How do you identify and prioritize bugs during testing?

Identifying and prioritizing bugs during testing requires a systematic approach. I typically use a bug tracking system (like Jira or Bugzilla) to log, track and manage all defects.

My process involves:

- Reproducibility: Clearly documenting the steps to reproduce the bug. A vague report is useless.

- Severity: Classifying the bug’s impact (critical, major, minor, trivial). Critical bugs block functionality, while trivial ones are minor annoyances.

- Priority: Determining the urgency of fixing the bug (high, medium, low). High priority bugs need immediate attention.

- Categorization: Grouping bugs by type (functional, performance, usability, security). This allows for efficient analysis and targeted fixes.

- Prioritization Matrix: Using a matrix that plots severity against priority to identify which bugs need to be addressed first. Critical and high priority bugs get immediate attention.

For example, a critical bug might be a system crash, requiring immediate attention, while a minor bug like a typo might have low priority unless it impacts usability significantly. This systematic approach ensures that the most impactful bugs are addressed first.
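The ordering described above can be sketched in a few lines. This is an illustrative example only: the `Bug` record, the rank tables, and the sample bugs are hypothetical and do not reflect how Jira or Bugzilla store or sort issues.

```python
from dataclasses import dataclass

# Lower rank means "handle sooner". The labels match the scales named above.
SEVERITY_RANK = {"critical": 0, "major": 1, "minor": 2, "trivial": 3}
PRIORITY_RANK = {"high": 0, "medium": 1, "low": 2}

@dataclass
class Bug:
    key: str
    summary: str
    severity: str
    priority: str

def triage_order(bugs):
    """Sort by priority first, then by severity within the same priority."""
    return sorted(
        bugs,
        key=lambda b: (PRIORITY_RANK[b.priority], SEVERITY_RANK[b.severity]),
    )

bugs = [
    Bug("BUG-3", "Typo on settings page", "trivial", "low"),
    Bug("BUG-1", "Crash on checkout", "critical", "high"),
    Bug("BUG-2", "Slow search results", "major", "medium"),
    Bug("BUG-4", "Typo in company name on home page", "trivial", "high"),
]

for bug in triage_order(bugs):
    print(bug.key, bug.priority, bug.severity, bug.summary)
```

BUG-4 shows why severity and priority are tracked separately: a trivial defect can still be urgent when it is highly visible.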

Q 5. What is the difference between black-box and white-box testing?

Black-box and white-box testing are two fundamental approaches to software testing, differing primarily in the tester’s knowledge of the internal workings of the software.

Black-box testing treats the software as a ‘black box,’ meaning the tester doesn’t know the internal code structure or logic. Tests are based solely on the software’s specifications and functionality. It’s like using a microwave: you know how to use it but don’t need to understand the internal components. Common test design techniques include equivalence partitioning, boundary value analysis, and decision tables. Functional, usability, and system tests are typically run this way.

White-box testing, on the other hand, involves testing with full knowledge of the internal structure and workings of the software. The tester examines the code directly to identify potential vulnerabilities or areas of weakness. It’s like understanding the mechanics of a car to perform maintenance. Techniques include statement and branch coverage analysis and path testing, and unit tests are usually written this way. White-box testing can be more thorough but requires programming expertise.

Often, a combination of both approaches is employed for comprehensive testing.

Q 6. Explain your experience with different types of software testing (unit, integration, system, acceptance).

My experience spans all levels of software testing, from unit to acceptance testing. Each level plays a vital role in ensuring software quality.

- Unit testing: This involves testing individual components (units) of the software in isolation. I’ve used various frameworks (like JUnit or pytest) to write unit tests, ensuring that each function or method works correctly.

- Integration testing: This tests the interaction between different units or modules. I focus on verifying that the units work together seamlessly, identifying integration-related issues early.

- System testing: This verifies the complete integrated system meets requirements. This involves testing the whole system as a single entity to ensure all components work together correctly and meet the defined specifications.

- Acceptance testing: This involves verifying the software meets the client’s or user’s acceptance criteria. This could involve user acceptance testing (UAT) where end-users test the system.

In a recent project, I developed a comprehensive test suite encompassing all these levels, improving software quality and reducing the risk of defects reaching production.

Q 7. Describe your experience with automated testing frameworks (e.g., Selenium, Cypress, Appium).

I’m proficient in several automated testing frameworks, including Selenium, Cypress, and Appium. The choice of framework depends on the application under test.

- Selenium: A versatile framework for automating web browser interactions. I’ve used it extensively to create automated tests for web applications, covering various functionalities like form submission, navigation, and data validation.

Example Selenium code (Python, assuming driver is a WebDriver instance and By is imported from selenium.webdriver.common.by): driver.find_element(By.ID, "username").send_keys("myusername")

- Cypress: A JavaScript-based framework known for its ease of use and speed. I find it particularly useful for testing modern web applications built with JavaScript frameworks. It offers excellent debugging capabilities and integrates well with CI/CD pipelines.

- Appium: A powerful framework for automating mobile application testing across different platforms (iOS and Android). I’ve used it to test native, hybrid, and mobile web applications, ensuring compatibility and performance across various devices.

Using these frameworks significantly improves testing efficiency, reduces manual effort, and allows for more frequent and comprehensive testing.

Q 8. How do you handle conflicting priorities between speed and quality in testing?

Balancing speed and quality in testing is a constant challenge. It’s like baking a cake – you want it delicious (high quality) and ready for the party on time (speed). The key is risk-based testing. We prioritize testing features with the highest risk of failure or those impacting the core functionality first. This might involve using risk matrices to assess potential impact and likelihood of failure. For example, a critical payment processing feature would get far more rigorous testing than a minor UI tweak. We might employ techniques like exploratory testing for speed, focusing on quickly finding critical issues, while still incorporating formal test cases for thoroughness in crucial areas. We might also use a combination of automated and manual testing. Automation helps achieve speed for repetitive tasks, while manual testing allows for more nuanced exploration and intuitive problem-finding.

A common approach is to define clear acceptance criteria at the start of a sprint or phase. If time runs short, we ensure the core functionalities that meet these criteria are tested thoroughly before releasing, while less critical aspects might be deferred to the next iteration.
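A risk matrix of the kind mentioned above can be reduced to a simple score. The sketch below assumes a 1 to 5 scale for both likelihood and impact and multiplies them. The feature names and ratings are hypothetical, and real teams often weight the factors differently.

```python
def risk_score(likelihood, impact):
    """Both inputs are on an assumed 1-5 scale; a higher score means test earlier and deeper."""
    return likelihood * impact

# Hypothetical features with (likelihood of failure, impact of failure).
features = {
    "payment processing": (4, 5),
    "search filters": (3, 3),
    "footer link color": (1, 1),
}

# Highest risk first: this is the order in which testing effort is spent.
ranked = sorted(features, key=lambda name: risk_score(*features[name]), reverse=True)

for name in ranked:
    print(f"{name}: {risk_score(*features[name])}")
```

If time runs short, the cut-off falls at the bottom of this list rather than at whatever happened to be scheduled last.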

Q 9. What metrics do you use to measure the effectiveness of your testing?

Measuring testing effectiveness goes beyond simply counting passed or failed tests. We use a variety of metrics, tailored to the project and its goals. Key metrics include:

- Defect Detection Rate (DDR): The number of defects found during testing divided by the total number of defects found overall (during testing plus in production). A higher DDR indicates more effective testing.

- Defect Density: The number of defects found per unit of size, typically per thousand lines of code (KLOC) or per feature. Helps identify areas with higher defect concentrations.

- Test Coverage: The percentage of code or requirements covered by test cases. Aim for high coverage, but remember that 100% is not always realistic or necessary.

- Test Execution Time: Tracks efficiency and identifies bottlenecks in the testing process. Helps us optimize test suites and automation.

- Escape Rate: The number of defects that escape testing and reach production. A low escape rate is a primary goal, demonstrating testing efficacy.

We also track metrics related to test case design and execution time. For example, we might track the average time it takes to execute a test case and use this to identify areas for improvement. These metrics help us continuously improve our testing strategies and process.
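As a worked illustration, the ratio metrics above can be computed as follows. This sketch assumes that DDR is the share of all known defects caught before release, that escape rate is the complementary share found in production, and that defect density is expressed per thousand lines of code. All counts are hypothetical.

```python
def defect_detection_rate(found_in_testing, found_in_production):
    """Share of all known defects that testing caught before release."""
    total = found_in_testing + found_in_production
    return found_in_testing / total if total else 0.0

def escape_rate(found_in_testing, found_in_production):
    """Share of all known defects that reached production."""
    total = found_in_testing + found_in_production
    return found_in_production / total if total else 0.0

def defect_density(defects, lines_of_code):
    """Defects per thousand lines of code (KLOC)."""
    return defects / (lines_of_code / 1000)

# Hypothetical release: 90 defects caught in testing, 10 reported from production.
print(defect_detection_rate(90, 10))  # 0.9
print(escape_rate(90, 10))            # 0.1
print(defect_density(100, 50_000))    # 2.0 defects per KLOC
```

Under these definitions DDR and escape rate always sum to 1, so tracking either one over several releases shows the same trend.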

Q 10. How do you write effective test cases?

Writing effective test cases is crucial for thorough testing. Each test case should follow a structured format, typically including:

- Test Case ID: A unique identifier for the test case.

- Test Case Name: A concise and descriptive name.

- Objective: What the test case aims to verify.

- Preconditions: Steps to perform before executing the test case.

- Test Steps: A detailed sequence of steps to execute.

- Expected Result: The outcome anticipated after executing the steps.

- Actual Result: The actual outcome after executing the steps.

- Pass/Fail: Indicates whether the test case passed or failed.

Good test cases are:

- Clear and Concise: Easy for anyone to understand and execute.

- Repeatable: Can be executed multiple times with consistent results.

- Independent: One test case’s outcome shouldn’t affect another.

- Atomic: Focus on a single aspect of the application.

Example: Let’s say we’re testing a login feature. A test case might be: Test Case ID: LOGIN_001; Test Case Name: Valid Login; Objective: Verify successful login with valid credentials; Preconditions: Browser open; Steps: Enter valid username, enter valid password, click login button; Expected Result: User is successfully logged in and redirected to the home page.
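The LOGIN_001 case above could be automated roughly as shown below, written in the plain-assert style that pytest collects. The `login` function and the `VALID_USERS` table are hypothetical stand-ins for the real system under test, and LOGIN_002 is an extra negative case added for illustration.

```python
# Hypothetical system under test: a stand-in for the real login feature.
VALID_USERS = {"alice": "s3cret"}

def login(username, password):
    """Return the page the user lands on after submitting the login form."""
    if VALID_USERS.get(username) == password:
        return "/home"
    return "/login?error=1"

def test_login_001_valid_login():
    """LOGIN_001: valid credentials redirect to the home page."""
    assert login("alice", "s3cret") == "/home"

def test_login_002_invalid_password():
    """LOGIN_002 (illustrative): a wrong password keeps the user on the login page."""
    assert login("alice", "wrong") == "/login?error=1"
```

Each function checks one behavior and shares no state with the other, which keeps the cases atomic and independent.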

Q 11. Describe your experience with Agile testing methodologies.

My experience with Agile testing methodologies is extensive. I’ve worked in Scrum and Kanban environments, embracing the iterative and collaborative nature of Agile. In Agile, testing is not a separate phase but an integrated part of the development process. I participate actively in sprint planning, daily stand-ups, sprint reviews, and retrospectives. I collaborate closely with developers to ensure testability is built into the code from the beginning. I use techniques like Test-Driven Development (TDD) and Behavior-Driven Development (BDD) to ensure test cases are written early and frequently.

I am proficient in using various Agile testing techniques, such as:

- Sprint Testing: Testing is conducted within each sprint, ensuring frequent feedback and early defect detection.

- Continuous Testing: Testing is automated and integrated into the CI/CD pipeline, providing immediate feedback on code changes.

- Acceptance Test-Driven Development (ATDD): Collaborating with stakeholders to define acceptance criteria that drive test case development.

Agile’s emphasis on collaboration and quick feedback loops has significantly improved the quality and speed of software delivery in my experience.

Q 12. What is your experience with test-driven development (TDD)?

Test-Driven Development (TDD) is a practice where test cases are written *before* the code they are intended to test. It’s a powerful approach that helps ensure code quality and maintainability. The basic cycle involves:

- Write a failing test: Begin by writing a test case that defines the desired behavior of a piece of code. This test will initially fail because the code hasn’t been written yet.

- Write the minimal code to pass the test: Write the simplest code necessary to pass the newly written test case. Avoid over-engineering at this stage.

- Refactor: Once the test passes, refactor the code to improve its design and readability, ensuring the test continues to pass.

I have significant experience using TDD with various testing frameworks like JUnit (Java) and pytest (Python). TDD helps catch bugs early, improves code design, and provides comprehensive test coverage. It’s particularly effective for complex logic or when working with legacy code. One project where I used TDD successfully was a complex payment gateway integration. Writing the tests first helped clarify requirements and ensure the integration worked seamlessly.
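Here is a small sketch of one red-green-refactor cycle in pytest style. The `apply_discount` function and its rules are hypothetical, chosen only to keep the example short. In a real cycle the two tests would be written and run first (and fail) before the function exists.

```python
# Step 1 (red): these tests are written first and fail until the code exists.
def test_ten_percent_off():
    assert apply_discount(200.0, 10) == 180.0

def test_rejects_invalid_percent():
    try:
        apply_discount(200.0, 150)
    except ValueError:
        return
    raise AssertionError("expected ValueError for percent > 100")

# Step 2 (green): the simplest implementation that makes both tests pass.
# Step 3 (refactor): clean up names and structure while the tests stay green.
def apply_discount(price, percent):
    """Return price reduced by percent, rounded to cents (hypothetical rule)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)
```

The second test is what forced the validation branch into existence. Without it, the minimal implementation would have been a single line.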

Q 13. Explain the concept of continuous integration and continuous delivery (CI/CD) in relation to testing.

Continuous Integration and Continuous Delivery (CI/CD) are practices that automate the process of building, testing, and deploying software. In relation to testing, CI/CD enables continuous testing, integrating automated tests into the pipeline. This ensures that every code change is thoroughly tested before being deployed.

Here’s how it works:

- Continuous Integration (CI): Developers integrate code changes frequently into a shared repository. Automated builds and tests run automatically after each integration, providing quick feedback on code quality. This catches integration issues early, preventing them from accumulating.

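- Continuous Delivery (CD): Automates the release process so that every change that passes CI is packaged, deployed to test or staging environments, and kept ready to release to production at any time. The production release itself is often triggered by a manual approval. When that step is automated as well, the practice is usually called continuous deployment. This ensures a faster release cycle and reduces the risk of deployment errors. Automated tests play a vital role in CD by validating each stage of the deployment process.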

CI/CD pipelines often use tools like Jenkins, GitLab CI, or CircleCI to orchestrate the process. Automated testing is crucial for the success of CI/CD: without it, the pipeline either ships untested code or stalls on slow manual checks. My experience includes setting up and maintaining CI/CD pipelines, integrating various testing frameworks, and monitoring test results to ensure continuous quality.

Q 14. How do you handle a situation where a critical bug is discovered late in the development cycle?

Discovering a critical bug late in the development cycle is a serious situation requiring immediate action. It’s like finding a major leak in your ship just before it sets sail! The first step is to assess the severity and impact of the bug. We’ll prioritize fixing it immediately, even if it means adjusting the release schedule. The following steps are crucial:

- Immediate Reproduction and Investigation: Reproduce the bug meticulously to understand the root cause and its impact. This involves gathering detailed information about the environment, steps to reproduce, and affected functionalities.

- Risk Assessment: Assess the risk of releasing the software with the bug. Will it severely impact the user experience or system security? This will help determine the urgency of the fix.

- Quick Fix and Thorough Regression Testing: Develop a targeted fix for the bug, ensuring it does not introduce new problems. This requires thorough regression testing to validate that the fix does not break other parts of the software.

- Communication and Transparency: Keep all stakeholders informed of the situation, including the severity, the fix, and the potential impact on the release schedule. Open communication manages expectations and builds trust.

- Post-Mortem Analysis: After the bug is fixed and deployed, conduct a thorough post-mortem analysis to identify the root cause of the issue and implement preventative measures to avoid similar bugs in the future. This is a critical learning opportunity for the whole team.

In some cases, a workaround might be implemented as a temporary solution while a permanent fix is being developed. However, the goal is always to resolve the root cause and prevent recurrence.

Q 15. What is your experience with performance testing tools (e.g., JMeter, LoadRunner)?

I have extensive experience with performance testing tools like JMeter and LoadRunner, using them to simulate real-world user loads and identify bottlenecks in applications. JMeter, with its open-source nature and ease of use, is my go-to for quickly building and running load tests against web applications. I’ve used it to create complex test plans involving multiple thread groups, various HTTP requests, and assertions to validate response times and data accuracy. For enterprise-level applications requiring more sophisticated features and reporting, LoadRunner’s advanced capabilities, such as its robust scripting language and detailed performance analysis, are invaluable. For instance, in a recent project involving a high-traffic e-commerce platform, I used LoadRunner to simulate thousands of concurrent users, identifying a critical database query that needed optimization. This led to a 40% improvement in response time.

My approach involves crafting realistic test scenarios that mirror actual user behavior. This includes designing tests to cover various user actions, from simple browsing to complex transactions. Analyzing the results meticulously is key—I look for trends in response times, resource utilization (CPU, memory), and error rates to pinpoint areas for improvement. I’m comfortable working with both graphical user interfaces and command-line interfaces for these tools.
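The result analysis described above often starts with percentiles and error rates rather than averages, because a few slow requests can hide behind a healthy mean. The sketch below is tool-independent and uses hypothetical samples. It does not read JMeter or LoadRunner output formats, and it uses the nearest-rank percentile definition, which is one of several in common use.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

# Hypothetical samples: (response time in ms, HTTP status code).
samples = [
    (120, 200), (135, 200), (150, 200), (145, 200), (900, 500),
    (160, 200), (155, 200), (140, 200), (130, 200), (2000, 503),
]

times = [ms for ms, _ in samples]
error_rate = sum(1 for _, status in samples if status >= 400) / len(samples)

print("p50:", percentile(times, 50), "ms")
print("p90:", percentile(times, 90), "ms")
print("error rate:", error_rate)
```

Here the median looks fine while the 90th percentile and the error rate point at the two slow, failing requests, which is where the investigation would begin.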

Q 16. Explain your approach to security testing.

My approach to security testing is multifaceted and follows a risk-based methodology. I start by identifying potential vulnerabilities based on the application’s architecture and functionality. This includes common vulnerabilities like SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF). Then, I employ a combination of automated and manual techniques to test for these vulnerabilities. Automated tools like OWASP ZAP are useful for identifying common vulnerabilities, while manual testing allows for a more in-depth exploration of the application’s security posture. I also consider authentication mechanisms, authorization controls, and data encryption strategies. For example, in a recent project, I used manual penetration testing to discover a vulnerability in the authentication process that could have allowed unauthorized access. Beyond automated tools, I emphasize understanding the underlying security principles and actively looking for vulnerabilities that aren’t easily detected by automated tools.

Furthermore, I always document all findings thoroughly, including steps to reproduce vulnerabilities and recommended mitigations. Regular security assessments are vital, and I advocate for integrating security testing into the entire software development lifecycle (SDLC), rather than treating it as an afterthought. A robust security testing strategy, incorporating both preventative measures and reactive testing, is fundamental.
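To illustrate one of the vulnerabilities named above, here is a minimal SQL injection check using Python's built-in sqlite3 module and an in-memory database. The table, the credentials, and both login functions are hypothetical. The point is the contrast between building SQL by string concatenation and using placeholders.

```python
import sqlite3

def make_db():
    """Create a throwaway in-memory database with one user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn

def login_vulnerable(conn, name, password):
    # Unsafe: user input is concatenated into the SQL text.
    query = (
        "SELECT COUNT(*) FROM users "
        f"WHERE name = '{name}' AND password = '{password}'"
    )
    return conn.execute(query).fetchone()[0] > 0

def login_safe(conn, name, password):
    # Safe: placeholders keep user input as data, never as SQL.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0

conn = make_db()
payload = "' OR '1'='1"  # classic injection string used as the password

print("vulnerable login accepts payload:", login_vulnerable(conn, "alice", payload))
print("safe login accepts payload:", login_safe(conn, "alice", payload))
```

A security test for this class of bug asserts that the payload is rejected. The same check can run in a regression suite so the fix cannot silently regress.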

Q 17. How do you create effective bug reports?

Effective bug reports are crucial for efficient bug resolution. My approach prioritizes clarity, reproducibility, and detailed information. I use a consistent template that includes the following:

- Summary: A concise description of the bug.

- Steps to Reproduce: Clear, step-by-step instructions on how to reproduce the bug.

- Expected Result: What should happen.

- Actual Result: What actually happened.

- Environment: Operating system, browser, application version, etc.

- Attachments: Screenshots, log files, or any other relevant supporting evidence.

- Severity: Assessing the impact of the bug (e.g., critical, major, minor).

- Priority: The urgency of fixing the bug (e.g., high, medium, low).

For example, instead of simply stating “The application crashes,” a better report would say: “The application crashes when attempting to upload a file larger than 10MB. Steps to reproduce: 1. Open the application; 2. Navigate to the upload page; 3. Select a file larger than 10MB; 4. Click upload. Expected Result: The file uploads successfully. Actual Result: The application crashes. Environment: Windows 10, Chrome 114, Application version 2.0.”

Q 18. What is your preferred method for managing test cases and results?

For managing test cases and results, I prefer a combination of TestRail and a version control system like Git. TestRail provides a centralized platform for organizing test cases, tracking execution progress, and managing defects. Its reporting features are excellent for summarizing test results and identifying areas needing attention. Keeping automated test scripts and test data in Git maintains their version history, which is important for traceability and collaboration. I also create clear naming conventions for test cases, typically following a structure that reflects the module, functionality, and specific test scenario. This ensures easy searchability and organization. I use spreadsheets only for quick, temporary test case creation or documentation.

The results are meticulously documented within TestRail, linked to the corresponding test cases, and categorized by test runs or builds. Detailed logs from automated tests are also integrated into the reporting process, ensuring a complete record of the testing activities. This systematic approach allows for easy analysis and improves efficiency for future testing cycles.

Q 19. Explain your experience with different types of testing documentation.

My experience encompasses various testing documentation types including Test Plans, Test Cases, Test Scripts, Bug Reports (as discussed previously), Test Summary Reports, and Traceability Matrices. Test Plans outline the overall testing strategy, scope, resources, and schedule. Test Cases detail specific steps to validate individual functionalities. Test Scripts automate the execution of test cases. Test Summary Reports consolidate the overall results of the testing activities, while Traceability Matrices link requirements to test cases to ensure complete coverage. I tailor the documentation to the project’s size and complexity, focusing on clarity and ease of use. For simple projects, a concise summary might suffice, while large, complex projects require more detailed documentation across all types.
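A traceability matrix is, at its core, a mapping from requirements to the test cases that cover them. The sketch below builds one from hypothetical requirement and test case IDs and lists any requirement with no coverage. Dedicated tools produce the same view from their own data.

```python
# Hypothetical inputs: the requirement IDs and which requirements each test case covers.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
test_cases = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-1", "REQ-2"],
}

def build_traceability(requirements, test_cases):
    """Map every requirement to the list of test cases that cover it."""
    matrix = {req: [] for req in requirements}
    for tc_id, covered in test_cases.items():
        for req in covered:
            matrix.setdefault(req, []).append(tc_id)
    return matrix

matrix = build_traceability(requirements, test_cases)
uncovered = [req for req, tcs in matrix.items() if not tcs]

for req, tcs in matrix.items():
    print(req, "->", ", ".join(tcs) if tcs else "NOT COVERED")
```

The `uncovered` list is the practical payoff: it shows the gaps in coverage before a release rather than after.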

The choice of documentation tools depends on project needs; sometimes a simple word processor will suffice, other times specialized tools like TestRail or Jira are necessary. Consistency and accuracy are key regardless of the chosen tool, and maintaining versions is crucial for traceability and auditability.

Q 20. Describe a time you had to deal with a difficult stakeholder during the testing process.

In a previous project, a stakeholder insisted on releasing the software without completing all planned testing due to an aggressive deadline. They minimized the importance of thorough testing, prioritizing speed over quality. To address this, I first presented data demonstrating the potential risks of releasing the software with untested features. I emphasized the financial and reputational consequences of releasing a buggy product. I then proposed a compromise – prioritizing high-risk functionalities for immediate testing while deferring lower-risk aspects to a later sprint. I also clearly articulated the remaining risks with the reduced testing scope. This compromise satisfied their need for a quick release while mitigating the risks involved. This situation highlighted the importance of clear and confident communication, backed by data-driven arguments. Building trust with the stakeholder through demonstrable expertise was also key to finding a resolution that balanced their priorities with the needs of ensuring software quality.

Q 21. What are some common challenges you face during the testing process and how do you overcome them?

Common challenges include tight deadlines, limited resources, changing requirements, and unclear specifications. Tight deadlines require careful prioritization and efficient test planning. I address this through effective risk assessment, focusing on high-impact functionalities. Limited resources necessitate creative solutions; I often leverage automation to improve efficiency and coverage. Changing requirements are managed through agile methodologies, adapting test plans iteratively to incorporate the new elements. Unclear specifications are tackled through early collaboration with developers and stakeholders, ensuring clarity before commencing testing.

Another challenge is dealing with flaky tests—tests that fail intermittently without any code change. These are addressed by investigating the underlying causes – network issues, timing dependencies, or environmental factors. I use debugging tools and careful analysis to identify and resolve these issues, improving the reliability of the test suite. Proactive risk management and clear communication are crucial in overcoming these challenges, building a strong team spirit, and ultimately delivering high-quality software.
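One simple way to confirm that a test is flaky is to rerun it many times against unchanged code and look for mixed outcomes. The sketch below does that with two hypothetical tests. The random failure is only a stand-in for a real timing or network dependency, and the generator is seeded so the demonstration is reproducible.

```python
import random

def detect_flakiness(test_fn, runs=20):
    """Run test_fn repeatedly with no code change; mixed outcomes indicate flakiness."""
    passes = 0
    for _ in range(runs):
        try:
            test_fn()
            passes += 1
        except AssertionError:
            pass
    return {"passes": passes, "failures": runs - passes, "flaky": 0 < passes < runs}

def stable_test():
    assert 1 + 1 == 2

rng = random.Random(42)  # seeded so this demonstration is reproducible

def timing_dependent_test():
    # Hypothetical stand-in for a race condition: fails roughly 30% of the time.
    assert rng.random() > 0.3

stable_report = detect_flakiness(stable_test)
flaky_report = detect_flakiness(timing_dependent_test)
print("stable:", stable_report)
print("timing-dependent:", flaky_report)
```

Rerunning only detects the symptom. The fix still comes from removing the underlying dependency, for example by replacing fixed sleeps with explicit waits or isolating the test from shared state.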

Q 22. How do you stay up-to-date with the latest testing tools and techniques?

Staying current in the ever-evolving field of testing tools and techniques requires a multi-pronged approach. I actively participate in online communities like those on Reddit and Stack Overflow, focusing on subreddits and forums dedicated to software testing and UX design. These platforms offer discussions on the latest tools and methodologies, as well as insights from experienced practitioners.

I also subscribe to several industry newsletters and blogs, such as those from leading software testing companies and influential UX experts. These newsletters often feature articles and reviews of new tools and updates to existing ones. Attending webinars and online courses, many of which are offered by platforms like Coursera and Udemy, allows me to delve deeper into specific techniques and gain practical experience. Finally, I actively participate in industry conferences and workshops whenever possible – they’re invaluable for networking and learning about cutting-edge advancements firsthand.

For example, recently I learned about the improvements in automated visual testing through a webinar and immediately incorporated that knowledge into a current project, leading to a significant reduction in testing time.

Q 23. How do you use prototyping to validate design decisions?

Prototyping is crucial for validating design decisions because it allows us to test assumptions early and inexpensively. Instead of investing significant resources in developing a fully functional product only to discover flaws later, prototyping lets us create quick, low-fidelity representations to gather feedback.

For instance, if I’m designing a new e-commerce checkout process, I might initially create a paper prototype to quickly test the flow and sequence of steps. This allows me to identify any major usability issues or confusing elements before moving on to a higher-fidelity digital prototype. After receiving feedback on the paper prototype, I’ll create a digital prototype using a tool like Figma. This allows for a more interactive experience and provides a more realistic representation of the final product. This iterative process—from low to high fidelity—ensures that design decisions are validated at each stage, leading to a more user-centered and effective final product.

Q 24. Describe your experience with user research methods related to prototyping.

My experience with user research methods related to prototyping is extensive and includes a variety of techniques. I frequently employ user testing, where I observe participants interacting with the prototype and gather feedback on their experience. This often involves think-aloud protocols, where participants verbalize their thoughts as they use the prototype, giving valuable insights into their decision-making process.

I also utilize usability testing, which focuses on identifying specific usability issues within the prototype. This often involves using metrics like task completion rate, error rate, and time on task. A/B testing is another valuable technique I use, allowing me to compare different design iterations and determine which performs better with users. Finally, I incorporate heuristic evaluations, where I systematically assess the prototype against established usability principles, to identify potential areas for improvement. For example, in a recent project redesigning a mobile banking app, A/B testing showed a significant increase in user satisfaction with a redesigned login screen, proving the value of iterative testing and user feedback integration.

Q 25. What are some common prototyping tools you have used (e.g., Figma, Adobe XD, InVision)?

My experience spans a range of prototyping tools, each with its own strengths. Figma is a favorite for its collaborative features and ease of use in creating high-fidelity prototypes. Its vector-based design allows for scalable and crisp visuals. Adobe XD excels at creating interactive prototypes with realistic animations and transitions, and it integrates with other Adobe Creative Cloud applications. InVision is strong at linking screens into complex interactive flows and integrates with user testing platforms.

I’ve also used simpler tools like Balsamiq for quick, low-fidelity wireframing when brainstorming and exploring early concepts. The choice of tool depends on the project’s complexity, timeline, and specific needs. For instance, Balsamiq might suffice for rapid iteration on early layouts, whereas Figma or Adobe XD would be preferred for more complex interaction design.

Q 26. How do you measure the success of a prototype?

Measuring the success of a prototype isn’t simply about aesthetics; it’s about understanding whether it achieves its intended purpose. Key metrics include task completion rate (how many users successfully completed their tasks), error rate (how many mistakes users made), and time on task (how long it took users to complete tasks). Beyond quantitative data, qualitative data from user interviews and observations is equally important. This allows for in-depth understanding of user behavior, frustrations, and suggestions.

For instance, a successful prototype of a new mobile app might demonstrate a high task completion rate (e.g., over 90% of users successfully completed their intended tasks) and a low error rate (e.g., fewer than 5% of users encountered significant errors), combined with positive feedback in post-testing interviews highlighting ease of use and enjoyable design. Ultimately, a prototype succeeds when it reveals insights that inform design decisions and improve the final product.
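The quantitative metrics above are straightforward to compute once each test session is recorded consistently. The following is a minimal sketch, assuming a hypothetical `Session` record per participant and defining error rate as the share of participants who made at least one error. Teams define these metrics differently, so treat the definitions here as assumptions.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Session:
    """One participant's attempt at a task (hypothetical record layout)."""
    participant: str
    completed: bool
    errors: int
    seconds: float


def summarize(sessions):
    """Compute task completion rate, error rate, and median time on task."""
    if not sessions:
        raise ValueError("at least one session is required")
    n = len(sessions)
    completed_times = [s.seconds for s in sessions if s.completed]
    return {
        "task_completion_rate": len(completed_times) / n,
        # Assumption: share of participants with one or more errors.
        "error_rate": sum(1 for s in sessions if s.errors > 0) / n,
        # Time on task is reported only for successful attempts.
        "median_time_on_task": median(completed_times) if completed_times else None,
    }


# Made-up sample data for illustration only.
sessions = [
    Session("p1", True, 0, 42.0),
    Session("p2", True, 1, 65.5),
    Session("p3", False, 3, 120.0),
    Session("p4", True, 0, 38.0),
    Session("p5", True, 0, 51.0),
]
print(summarize(sessions))
```

The median is used for time on task because a single slow participant can distort the mean in small usability samples.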

Q 27. What are the key differences between user testing and usability testing?

While both user testing and usability testing aim to improve the user experience, they differ in their scope and focus. User testing is a broader term that encompasses various methods for gathering feedback from users. It aims to understand user behavior and preferences, addressing whether users can complete tasks and feel satisfied with the experience. Usability testing, on the other hand, is a specific type of user testing that focuses primarily on identifying and evaluating usability issues. It examines the efficiency and ease of use, measuring metrics like task completion rate, error rate, and time on task.

Think of it this way: user testing asks “Do users like it and can they use it?” while usability testing zeroes in specifically on “How easy is it to use?” A usability test might reveal that users frequently make errors in a specific form, while a user test might uncover that users don’t find the overall design aesthetically pleasing. Both are vital for developing a truly user-friendly product.

Q 28. Describe your experience with accessibility testing.

Accessibility testing is critical for ensuring that a product is usable by people with disabilities. My experience encompasses evaluating prototypes for compliance with accessibility guidelines such as WCAG (Web Content Accessibility Guidelines). This involves checking for things like sufficient color contrast, alternative text for images, keyboard navigation, and screen reader compatibility.
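Color contrast is one of the few checks in that list that can be computed directly. The sketch below follows the relative luminance and contrast ratio definitions in WCAG 2.x and applies the Level AA thresholds for text (4.5:1 for normal text, 3:1 for large text). The function names are illustrative, and the code assumes colors are given as `#RRGGBB` strings.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x definition)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    if len(h) != 6:
        raise ValueError("expected a color like #RRGGBB")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1:1 up to 21:1."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, large_text=False):
    """Check the WCAG Level AA text contrast threshold."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#000000", "#FFFFFF"), 2))
print(passes_aa("#767676", "#FFFFFF"), passes_aa("#777777", "#FFFFFF"))
```

A check like this only covers one criterion. Keyboard navigation and screen reader behavior still need manual testing.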

I use various tools and techniques, including automated testing tools that identify common accessibility violations and manual testing to assess the experience for people with different disabilities. For example, I test prototypes with screen readers to ensure that information is presented in a logical and understandable order. I also involve users with disabilities in the testing process to gain firsthand insights and ensure that the prototype meets their needs and expectations. A recent project involved redesigning a website to be fully compliant with WCAG 2.1, resulting in a far more inclusive and accessible experience.

Key Topics to Learn for Prototyping and Testing Techniques Interview

- Prototyping Methods: Understanding various prototyping techniques (low-fidelity, high-fidelity, wireframing, etc.) and their appropriate applications. Consider the trade-offs between speed and fidelity.

- Testing Methodologies: Familiarity with different testing approaches (unit, integration, system, user acceptance testing), their purpose, and when to apply each one. This includes understanding Agile testing principles.

- Test Case Design: Mastering the creation of effective and efficient test cases, including boundary value analysis, equivalence partitioning, and state transition testing. Be prepared to discuss your approach to test case prioritization.

- Defect Tracking and Reporting: Understanding the process of identifying, documenting, and tracking defects using bug tracking systems. Discuss strategies for clear and concise bug reporting.

- Usability Testing: Knowledge of conducting usability tests, analyzing results, and iterating on designs based on user feedback. This includes understanding different usability testing methods.

- Automation Testing: Familiarity with automated testing frameworks and tools, and the benefits and challenges associated with test automation. Be prepared to discuss specific tools you’ve used.

- Performance Testing: Understanding load testing, stress testing, and performance bottleneck identification. Discuss approaches to improving application performance.

- Test Environments: Setting up and managing test environments, including considerations for data management and version control within a testing context.

- Software Development Life Cycle (SDLC): Understanding how prototyping and testing fit within different SDLC methodologies (e.g., Waterfall, Agile).

- Problem-Solving and Analytical Skills: Demonstrate your ability to approach testing challenges systematically, analyze test results, and identify root causes of defects.
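To make the Test Case Design topic above concrete, here is a minimal sketch of boundary value analysis and equivalence partitioning for a single numeric input. The validator `is_valid_quantity` and its 1 to 99 range are hypothetical, standing in for something like a cart quantity field.

```python
MIN_QTY, MAX_QTY = 1, 99  # hypothetical business rule for a quantity field


def is_valid_quantity(qty):
    """System under test: accept whole quantities within the allowed range."""
    return MIN_QTY <= qty <= MAX_QTY


def boundary_cases(low, high):
    """Boundary value analysis: values at and next to each edge of [low, high]."""
    if high - low < 2:
        raise ValueError("range too narrow for distinct boundary cases")
    return [
        (low - 1, False), (low, True), (low + 1, True),
        (high - 1, True), (high, True), (high + 1, False),
    ]


def partition_cases(low, high):
    """Equivalence partitioning: one representative per class (below, inside, above)."""
    return [(low - 10, False), ((low + high) // 2, True), (high + 10, False)]


# Run every generated case against the validator.
all_cases = boundary_cases(MIN_QTY, MAX_QTY) + partition_cases(MIN_QTY, MAX_QTY)
for value, expected in all_cases:
    assert is_valid_quantity(value) == expected, f"unexpected result for {value}"
print(f"{len(all_cases)} cases passed")
```

Nine cases cover the input here. Partitioning keeps the count low, while the boundary cases target the off-by-one mistakes that tend to cluster at range edges.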

Next Steps

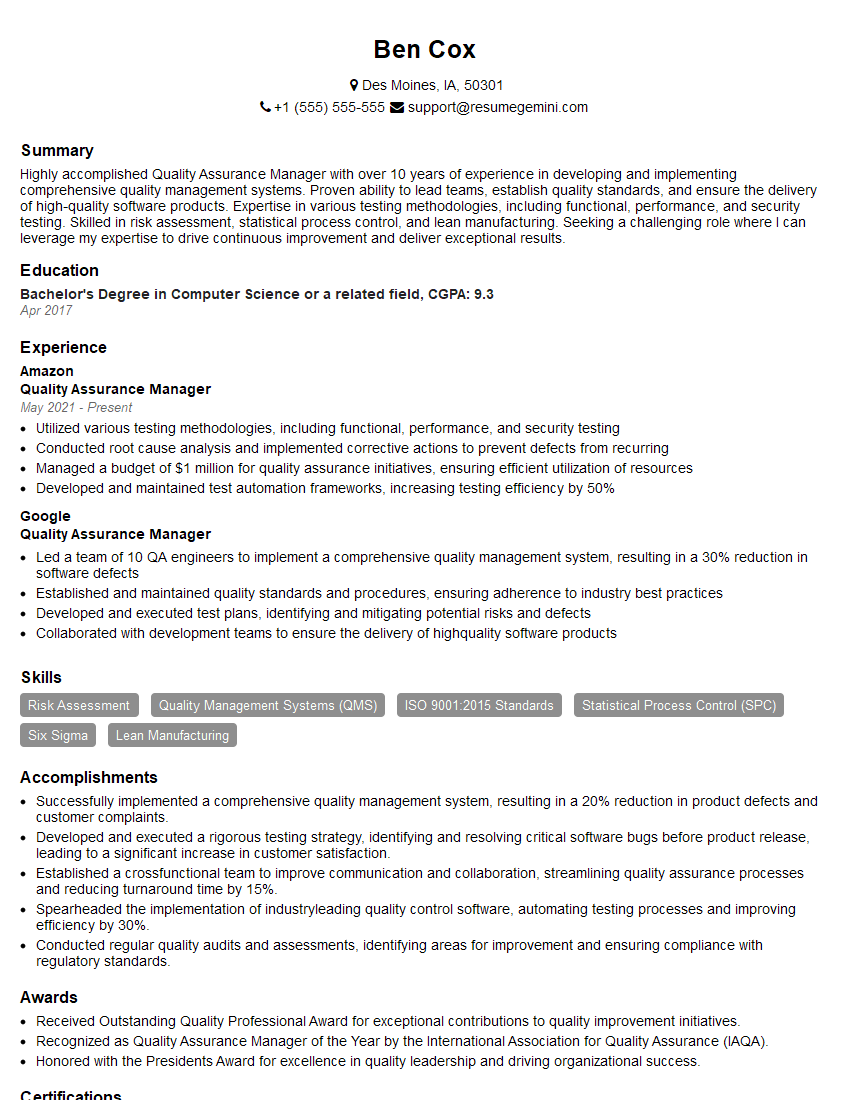

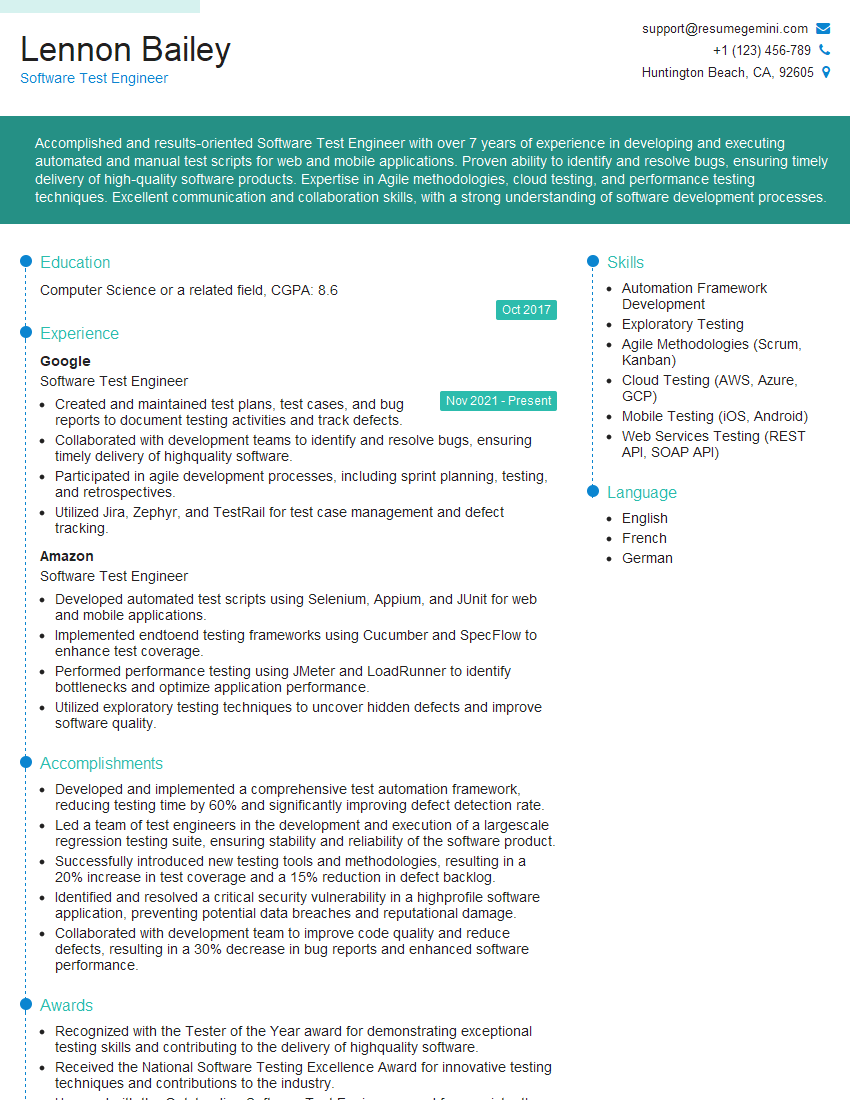

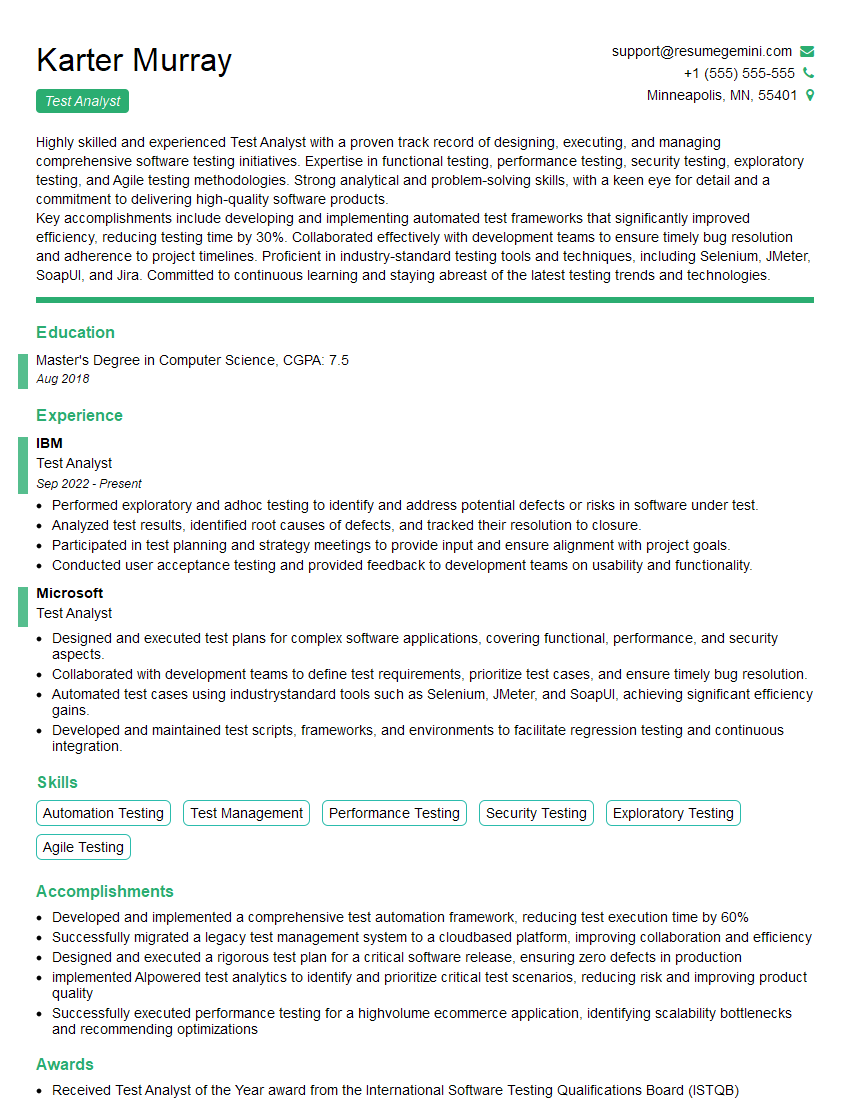

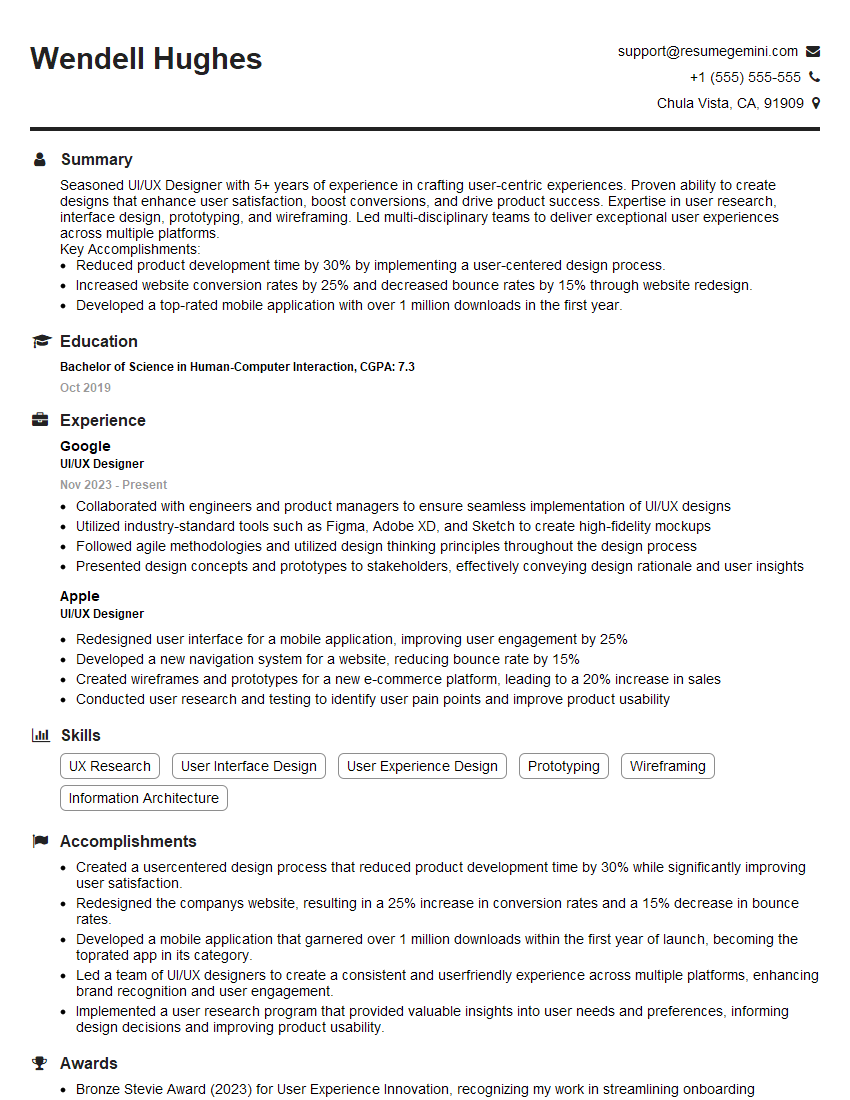

Mastering prototyping and testing techniques is crucial for a successful career in software development and related fields. It demonstrates your ability to build robust and user-friendly applications. To maximize your job prospects, creating an ATS-friendly resume is essential. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your skills and experience. Examples of resumes tailored to Prototyping and Testing Techniques are available to help you get started. Invest the time to craft a compelling resume; it’s your first impression on potential employers.
