Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential QoS and Traffic Engineering interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in QoS and Traffic Engineering Interviews

Q 1. Explain the concept of Quality of Service (QoS).

Quality of Service (QoS) is a set of mechanisms that give selected applications or users better treatment than the network’s default best-effort handling. Imagine a highway: without QoS, every vehicle shares the same lanes and is treated identically, so an ambulance sits in the same jam as everyone else. QoS is like reserving a lane for ambulances (high priority) while regular cars (low priority) use the remaining lanes, ensuring ambulances reach their destination faster even during peak traffic. In networking, QoS ensures that critical applications, such as video conferencing or online gaming, get the bandwidth they need and stay within acceptable latency, jitter, and loss limits, even when network resources are limited or heavily utilized.

Q 2. Describe different QoS mechanisms (e.g., DiffServ, IntServ).

Several QoS mechanisms exist. IntServ (Integrated Services) takes a reservation-based approach: each flow signals its requirements in advance (typically with RSVP), and every router along the path reserves bandwidth and other resources for it. Think of this as booking a hotel room in advance: you’re guaranteed a space. However, routers must keep state for every flow, so it’s complex to implement and scales poorly in large networks. DiffServ (Differentiated Services), in contrast, marks packets into a small number of classes, and each router applies a per-hop behavior (PHB) to each class. It keeps no per-flow state, so it’s simpler to implement and scales better. It’s like having different lanes on a highway: each lane has a different speed limit and priority, but you don’t need a reservation.

Other mechanisms include:

- MPLS (Multiprotocol Label Switching): Offers a more efficient way to forward traffic by using labels instead of IP addresses. This is helpful in QoS because you can associate labels with different QoS classes.

- Traffic Shaping and Policing: These techniques control the rate at which traffic enters a network.

Q 3. How does DiffServ prioritize traffic?

DiffServ prioritizes traffic by assigning different Differentiated Services Code Points (DSCPs) to packets. These DSCPs are 6-bit values in the IP header that indicate the packet’s priority. Routers use these DSCPs to apply different queuing and forwarding policies. Packets with higher priority DSCPs (e.g., those destined for real-time applications) are given preferential treatment, such as shorter queueing delays or higher bandwidth allocation. This is often implemented using different queues within a router, with high-priority queues being serviced first. Consider a router with multiple queues – one for high-priority voice traffic, another for low-priority email traffic. Voice packets would be placed in the high-priority queue to avoid delay, whilst email traffic could experience higher latency.
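
To make the 6-bit encoding concrete, here is a minimal Python sketch of how a DSCP value maps onto the 8-bit DS (formerly ToS) byte. The helper names `dscp_to_tos` and `tos_to_dscp` are made up for illustration; the code points shown (CS0 = 0, AF41 = 34, EF = 46) are the standard values.

```python
# Commonly used DSCP code points (standard values).
DSCP = {"CS0": 0, "AF41": 34, "EF": 46}


def dscp_to_tos(dscp: int) -> int:
    """Return the 8-bit DS/ToS byte for a 6-bit DSCP (the 2 ECN bits stay 0)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must fit in 6 bits (0-63)")
    return dscp << 2


def tos_to_dscp(tos: int) -> int:
    """Extract the DSCP from a DS/ToS byte by discarding the 2 ECN bits."""
    return (tos & 0xFF) >> 2


if __name__ == "__main__":
    for name, value in DSCP.items():
        print(f"{name}: DSCP {value} -> DS byte 0x{dscp_to_tos(value):02x}")
```

On platforms that expose `socket.IP_TOS`, an application can request a marking with `sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(46))`. Whether routers honor or rewrite that marking depends on the network’s trust policy.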

Q 4. Explain the role of Class of Service (CoS) in QoS.

Class of Service (CoS) is a mechanism used to classify network traffic into different priority levels. Think of it as labeling packages in a delivery system: some packages (like a birthday cake) are marked urgent (high CoS) while others (e.g., a book) are less urgent (low CoS). Each CoS level is mapped to a treatment, such as a queue or a bandwidth share, chosen to meet that class’s delay, jitter, and loss requirements. This classification allows for the implementation of QoS policies, ensuring that high-priority traffic receives preferential treatment over lower-priority traffic. CoS is frequently used in conjunction with other QoS mechanisms like DiffServ or IntServ. A common example is the 3-bit priority field in the IEEE 802.1Q VLAN tag (often called 802.1p), which lets switches separate traffic into up to eight classes at Layer 2 based on sensitivity to delay.

Q 5. What are the different QoS parameters (e.g., bandwidth, delay, jitter, packet loss)?

Several key QoS parameters define the quality of network service. These include:

- Bandwidth: The amount of data that can be transmitted over a network connection per unit of time. Insufficient bandwidth can lead to congestion and slowdowns.

- Delay (Latency): The time it takes for a packet to travel from source to destination. High latency is detrimental to real-time applications like voice and video.

- Jitter: Variation in delay across multiple packets. Inconsistent delay can affect the quality of streaming media and voice calls.

- Packet Loss: The percentage of packets that are lost during transmission. Packet loss can disrupt streaming and cause interruptions in communication.

Q 6. How do you measure QoS performance?

Measuring QoS performance requires tools and techniques to monitor the QoS parameters mentioned earlier. Methods include:

- Network Monitoring Tools: Using tools like SNMP (Simple Network Management Protocol), NetFlow, or dedicated network performance monitoring solutions to collect data on bandwidth utilization, latency, jitter, and packet loss.

- End-to-End Measurements: Running tests like ping, traceroute, or dedicated QoS testing tools to measure the performance experienced by applications or users. These give a user’s perspective on QoS.

- Statistical Analysis: Analyzing collected data to identify trends, bottlenecks, and areas for improvement. This involves calculating averages, standard deviations, and other statistical measures to understand the variability in QoS.
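
As a rough illustration of the statistical step, the sketch below summarizes a series of probe results into loss, average latency, standard deviation, and jitter. The function name `summarize_probes` and the sample values are hypothetical. Jitter is simplified here to the mean absolute difference between consecutive replies; RTP (RFC 3550) uses a smoothed estimator instead.

```python
from statistics import mean, pstdev
from typing import Optional, Sequence


def summarize_probes(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Summarize probe results; None marks a probe that got no reply."""
    if not rtts_ms:
        raise ValueError("need at least one probe result")
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    # Simplified jitter: mean absolute difference between consecutive replies.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    return {
        "loss_pct": loss_pct,
        "avg_ms": mean(received) if received else None,
        "stdev_ms": pstdev(received) if received else None,
        "jitter_ms": mean(diffs) if diffs else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical sample: five probes, the third one lost.
    print(summarize_probes([20.0, 22.0, None, 21.0, 25.0]))
```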

Q 7. Explain the concept of traffic shaping and policing.

Traffic shaping and traffic policing are complementary QoS mechanisms used to control the rate of traffic entering a network. Think of a water faucet—shaping is like gradually adjusting the water flow to a steady rate, preventing bursts; policing is like having a valve that shuts off the flow if it exceeds a certain limit.

Traffic shaping conforms traffic to a desired rate, smoothing out bursts and preventing congestion. This often uses queuing mechanisms to buffer and release packets at a controlled pace. Traffic policing monitors traffic against predefined thresholds (e.g., bandwidth limits). If traffic exceeds the limits, packets might be dropped, marked for lower priority, or otherwise penalized. Both are essential for managing network resources and ensuring fair access to bandwidth for all users. They’re often used together: shaping to manage traffic proactively, and policing to react to and mitigate potential violations.
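
Both mechanisms are commonly built on a token bucket. The following is a minimal single-rate policer sketch under simplified assumptions (one bucket, caller-supplied timestamps, drop on violation); the class name `TokenBucketPolicer` and its parameters are illustrative, not any vendor’s implementation.

```python
class TokenBucketPolicer:
    """Single-rate policer: conforming packets pass, the rest are dropped."""

    def __init__(self, rate_bps: float, burst_bytes: int):
        self.rate_bytes_per_s = rate_bps / 8.0
        self.burst_bytes = burst_bytes
        self.tokens = float(burst_bytes)  # the bucket starts full
        self.last_time = 0.0

    def allow(self, packet_bytes: int, now: float) -> bool:
        # Refill tokens for the elapsed time, capped at the burst size.
        elapsed = now - self.last_time
        self.tokens = min(self.burst_bytes,
                          self.tokens + elapsed * self.rate_bytes_per_s)
        self.last_time = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False  # a shaper would queue the packet here instead


if __name__ == "__main__":
    policer = TokenBucketPolicer(rate_bps=8000, burst_bytes=1500)
    for t in (0.0, 0.1, 0.6):
        print(t, policer.allow(1000, now=t))
```

The only difference a shaper needs is in the non-conforming branch: rather than returning `False`, it would hold the packet and release it once enough tokens have accumulated.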

Q 8. What are the common QoS challenges in VoIP deployments?

Voice over IP (VoIP) is highly sensitive to network jitter, latency, and packet loss. These QoS challenges directly impact call quality, leading to frustrating user experiences. Let’s break down the common issues:

- Jitter: Variations in packet arrival times cause choppy audio and make conversations difficult. Think of it like a stuttering radio signal: very annoying!

- Latency: Delay in transmission makes echo more noticeable and causes people to talk over each other. High latency is like someone talking and their voice reaching you only after a significant pause.

- Packet Loss: Lost packets result in gaps or dropouts in the conversation. Imagine words disappearing mid-sentence: it renders the communication ineffective.

- Bandwidth limitations: Insufficient bandwidth leads to poor call quality, dropped calls, and general frustration for users. This is like trying to send a large file over a dial-up connection: it takes forever and might fail.

Addressing these issues requires careful QoS configuration, prioritization of VoIP traffic, and adequate bandwidth provisioning. We often utilize techniques like traffic shaping, prioritization (e.g., using DiffServ or MPLS), and jitter buffers to mitigate these challenges.
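
For the bandwidth provisioning part, a quick back-of-the-envelope calculation helps. The sketch below estimates per-call bandwidth at the IP layer, assuming 40 bytes of headers per packet (20 IPv4 + 8 UDP + 12 RTP) and ignoring Layer 2 overhead; the function name `voip_bandwidth_kbps` is hypothetical.

```python
def voip_bandwidth_kbps(codec_kbps: float, packet_ms: float,
                        header_bytes: int = 40) -> float:
    """Per-call, one-direction bandwidth at the IP layer, in kbps."""
    # Bytes of codec payload carried in each packet.
    payload_bytes = codec_kbps * 1000 / 8 * (packet_ms / 1000)
    packets_per_second = 1000 / packet_ms
    return (payload_bytes + header_bytes) * 8 * packets_per_second / 1000


if __name__ == "__main__":
    # G.711 (64 kbps) and G.729 (8 kbps), both with 20 ms packetization.
    print("G.711:", voip_bandwidth_kbps(64, 20), "kbps")
    print("G.729:", voip_bandwidth_kbps(8, 20), "kbps")
```

With these assumptions a G.711 call needs roughly 80 kbps and a G.729 call roughly 24 kbps per direction, which shows how much of a low-bitrate call is header overhead.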

Q 9. Describe your experience with MPLS and its role in QoS.

MPLS (Multiprotocol Label Switching) is a powerful technology I’ve extensively used to engineer QoS in large-scale networks. It provides a flexible framework for traffic engineering and prioritization. Instead of relying solely on IP addresses for routing decisions, MPLS forwards on short fixed-length labels, which keeps the forwarding lookup simple and, more importantly, decouples the path a packet takes from its destination address, enabling sophisticated traffic management.

In QoS, MPLS shines because it allows for the creation of Label Switched Paths (LSPs). These LSPs can be configured with specific QoS parameters like bandwidth allocation and priority levels. This provides granular control over how different types of traffic are handled. For instance, we can dedicate a high-priority LSP for VoIP traffic, ensuring it receives preferential treatment and avoids congestion.

I’ve used MPLS in numerous projects, including setting up dedicated LSPs for critical applications in financial institutions and ensuring high-quality video conferencing across geographically distributed offices. The scalability and flexibility of MPLS have proven invaluable in these environments.

Q 10. How do you troubleshoot QoS issues?

Troubleshooting QoS issues involves a systematic approach. I typically follow these steps:

- Identify the affected traffic: Determine which application or service is experiencing QoS problems. Is it VoIP, video conferencing, or something else?

- Gather metrics: Collect performance data using network monitoring tools (e.g., SNMP, NetFlow) focusing on metrics such as latency, jitter, packet loss, and bandwidth utilization for the affected traffic.

- Analyze the data: Identify patterns and anomalies in the collected data. Are there specific times or locations where the problems occur?

- Inspect QoS configuration: Verify that the QoS policies are correctly configured and applied. Are the appropriate traffic classes and QoS mechanisms (e.g., DiffServ, MPLS) used?

- Check network infrastructure: Inspect network devices (routers, switches) for any hardware or software issues that might be contributing to the QoS problems. This can involve examining CPU utilization, buffer sizes, and interface statistics.

- Capture and analyze traffic: Use packet capture tools (e.g., tcpdump, Wireshark) to capture the affected traffic, check its markings and timing, and pinpoint the root cause.

- Implement solutions: Based on the analysis, implement appropriate solutions like adjusting QoS policies, upgrading hardware, or optimizing network design.

- Monitor and verify: After implementing solutions, closely monitor the network to ensure the QoS problems are resolved and the network performance is improved.

A real-world example involved a video conferencing system experiencing high jitter. By analyzing NetFlow data, we discovered a congested link and implemented traffic shaping to alleviate the issue.

Q 11. Explain the concept of traffic engineering in IP networks.

Traffic engineering in IP networks focuses on optimizing network resource utilization to improve overall network performance and efficiency. It involves strategically planning and managing network traffic flows to prevent congestion, minimize latency, and ensure efficient bandwidth allocation. Think of it as air traffic control for your network data.

It’s not just about moving data, but moving it intelligently. Instead of passively letting traffic find its own path, traffic engineering uses algorithms and protocols to direct traffic along the most efficient routes, avoiding bottlenecks and improving the quality of service for various applications.

Effective traffic engineering considers factors like bandwidth, latency, link utilization, and application requirements. The goal is to create a balanced and resilient network that can handle fluctuating traffic demands efficiently.

Q 12. Describe different traffic engineering techniques (e.g., OSPF, IS-IS, MPLS-TE).

Several techniques facilitate traffic engineering:

- OSPF (Open Shortest Path First): A link-state routing protocol that selects paths by lowest cumulative link cost, where cost is typically derived from interface bandwidth and can be tuned per link to influence routing decisions. However, because it always follows the lowest-cost path toward a destination, it offers only coarse control and is less suited to complex traffic engineering scenarios.

- IS-IS (Intermediate System to Intermediate System): Another link-state protocol similar to OSPF but generally considered more efficient for larger networks. It also supports traffic engineering through tunable link metrics.

- MPLS-TE (MPLS Traffic Engineering): This leverages MPLS’s ability to create LSPs and combines it with sophisticated algorithms for path computation and optimization. It provides granular control over traffic flows and allows for the creation of dedicated paths for specific applications, maximizing bandwidth utilization and minimizing latency. This is often the preferred choice for complex networks.

Each technique has its strengths and weaknesses; the optimal choice depends on the network’s size, complexity, and specific requirements.
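
As a small worked example of metric-based steering, the sketch below derives an OSPF-style link cost from interface bandwidth. It assumes the common convention of dividing a reference bandwidth by the interface bandwidth with a minimum cost of 1, and a 100 Mbps default reference; both the function name `ospf_cost` and the default are assumptions to check against your platform.

```python
def ospf_cost(interface_bps: int, reference_bps: int = 100_000_000) -> int:
    """OSPF-style cost: reference bandwidth / interface bandwidth, minimum 1."""
    return max(1, reference_bps // interface_bps)


if __name__ == "__main__":
    speeds = {"10M": 10_000_000, "100M": 100_000_000,
              "1G": 1_000_000_000, "10G": 10_000_000_000}
    for name, bps in speeds.items():
        default = ospf_cost(bps)
        raised = ospf_cost(bps, reference_bps=100_000_000_000)
        print(f"{name}: cost {default} (100M reference), {raised} (100G reference)")
```

With a 100 Mbps reference, every link at 100 Mbps or faster gets cost 1, so the protocol cannot tell a 1 Gbps link from a 10 Gbps one. That is why the reference bandwidth is usually raised on modern networks.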

Q 13. How do you optimize network performance using traffic engineering?

Optimizing network performance using traffic engineering involves several steps:

- Network modeling and analysis: Use network simulation tools to understand traffic patterns, identify potential bottlenecks, and assess current network performance.

- Traffic characterization: Identify different traffic classes (e.g., VoIP, web traffic, video streaming) and their specific QoS requirements.

- Path computation: Employ traffic engineering algorithms to calculate the optimal paths for different traffic flows, minimizing congestion and latency. This often involves using tools or protocols mentioned before like MPLS-TE, IS-IS or OSPF with custom link costs.

- LSP provisioning: Create LSPs (if using MPLS-TE) or adjust routing parameters (OSPF/IS-IS) to steer traffic along the computed paths. We might provision dedicated LSPs for high-priority traffic, for example.

- Monitoring and adjustment: Continuously monitor network performance and adjust traffic engineering parameters as needed to respond to changing traffic patterns and network conditions. This is iterative and essential for long-term optimization.
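
The path computation step can be sketched as a constrained shortest-path search: ignore links without enough available bandwidth, then run Dijkstra on what remains. This is a simplified illustration of the idea behind CSPF, not a router implementation; the function name `cspf`, the graph layout, and the example topology are all hypothetical.

```python
import heapq
from typing import Dict, List, Optional, Tuple

# node -> neighbor -> (link cost, available bandwidth in Mbps)
Graph = Dict[str, Dict[str, Tuple[int, float]]]


def cspf(graph: Graph, src: str, dst: str,
         demand_mbps: float) -> Optional[List[str]]:
    """Lowest-cost path using only links with enough available bandwidth."""
    heap = [(0, src, [src])]
    visited = set()
    while heap:
        cost, node, path = heapq.heappop(heap)
        if node == dst:
            return path
        if node in visited:
            continue
        visited.add(node)
        for nbr, (link_cost, avail) in graph.get(node, {}).items():
            # The bandwidth constraint prunes links before cost is considered.
            if avail >= demand_mbps and nbr not in visited:
                heapq.heappush(heap, (cost + link_cost, nbr, path + [nbr]))
    return None  # no path satisfies the constraint


if __name__ == "__main__":
    topology: Graph = {
        "A": {"B": (1, 100.0), "C": (2, 1000.0)},
        "B": {"D": (1, 100.0)},
        "C": {"D": (2, 1000.0)},
    }
    print(cspf(topology, "A", "D", demand_mbps=50))
    print(cspf(topology, "A", "D", demand_mbps=500))
```

In this toy topology a 50 Mbps demand takes the cheaper A-B-D path, while a 500 Mbps demand is steered onto A-C-D because the cheaper links lack capacity.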

Imagine a highway system. Traffic engineering is like intelligently directing traffic flow to avoid jams, using dedicated lanes for emergency vehicles (high-priority traffic), and dynamically adjusting traffic patterns based on current conditions.

Q 14. Explain the importance of bandwidth allocation in traffic engineering.

Bandwidth allocation is crucial in traffic engineering because it directly impacts network performance and the quality of service experienced by different applications. Fair and efficient bandwidth allocation prevents congestion and ensures that critical applications receive the resources they need.

Without proper bandwidth allocation, certain applications could starve while others consume excessive resources. Consider a scenario with VoIP and video streaming on the same network – if bandwidth is not allocated appropriately, the VoIP calls could suffer from significant latency and packet loss due to the video streaming hogging bandwidth. This emphasizes the importance of intelligently allocating bandwidth based on traffic class and application-specific QoS requirements, and using tools and techniques like traffic shaping to ensure fairness.
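
One common way to formalize “fair” allocation is max-min fairness: small demands are satisfied in full, and whatever is left is split equally among the flows that want more. The sketch below is a minimal unweighted version; the function name `max_min_fair` and the example demands are made up for illustration.

```python
def max_min_fair(capacity: float, demands: dict) -> dict:
    """Max-min fair share of `capacity` among flows with the given demands."""
    allocation = {}
    pending = dict(demands)
    remaining = float(capacity)
    while pending:
        share = remaining / len(pending)
        fits = [name for name, demand in pending.items() if demand <= share]
        if not fits:
            # Nobody's demand fits: split what is left equally and stop.
            for name in pending:
                allocation[name] = share
            break
        for name in fits:
            # Fully satisfy small demands and return the surplus to the pool.
            allocation[name] = float(pending[name])
            remaining -= pending.pop(name)
    return allocation


if __name__ == "__main__":
    # Hypothetical demands in Mbps on a 10 Mbps link.
    print(max_min_fair(10, {"voip": 1, "web": 4, "video": 8}))
```

Here VoIP and web traffic get everything they ask for, and video streaming receives the remaining 5 Mbps rather than crowding the others out.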

Q 15. How does traffic engineering impact network security?

Traffic engineering is primarily about optimizing network performance, but it also affects network security. Effective traffic engineering can indirectly enhance security by reducing the exposure created by congestion and inefficient routing. For instance, properly engineered paths can prevent network bottlenecks that would otherwise slow down security protocols and appliances. Conversely, poor traffic engineering can lead to congestion, forcing traffic onto less secure paths or overwhelming security appliances, increasing the risk of breaches. Think of it like this: a well-managed highway system (well-engineered network) keeps traffic flowing smoothly, so a single accident or roadblock (a malicious actor) causes less disruption. A congested, poorly planned system (poorly engineered network) gives the same incident far more impact.

For example, a Distributed Denial of Service (DDoS) attack can overwhelm a network’s resources. Proper traffic engineering, using techniques like traffic shaping and policing, can mitigate the impact by prioritizing legitimate traffic and limiting the bandwidth available to malicious sources. Another example involves strategic routing to avoid known points of vulnerability or to isolate potentially compromised segments of the network, thus containing the spread of an attack.

Career Expert Tips:

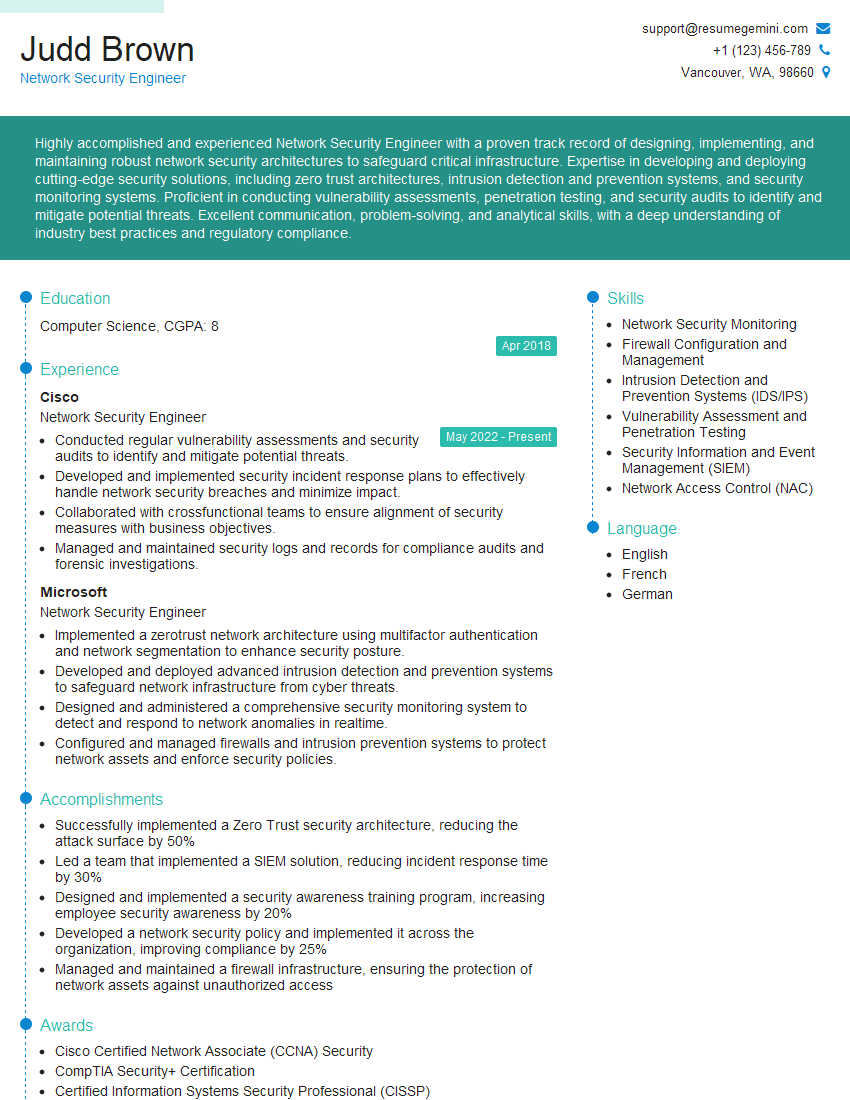

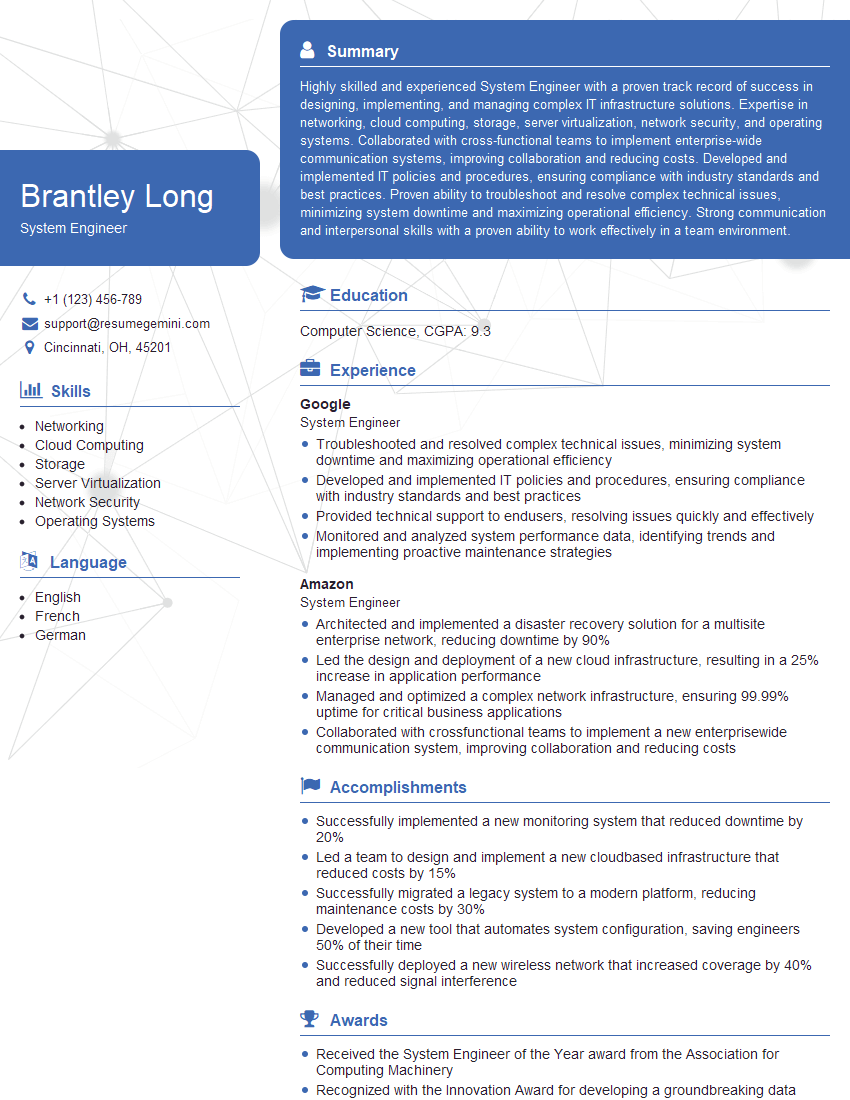

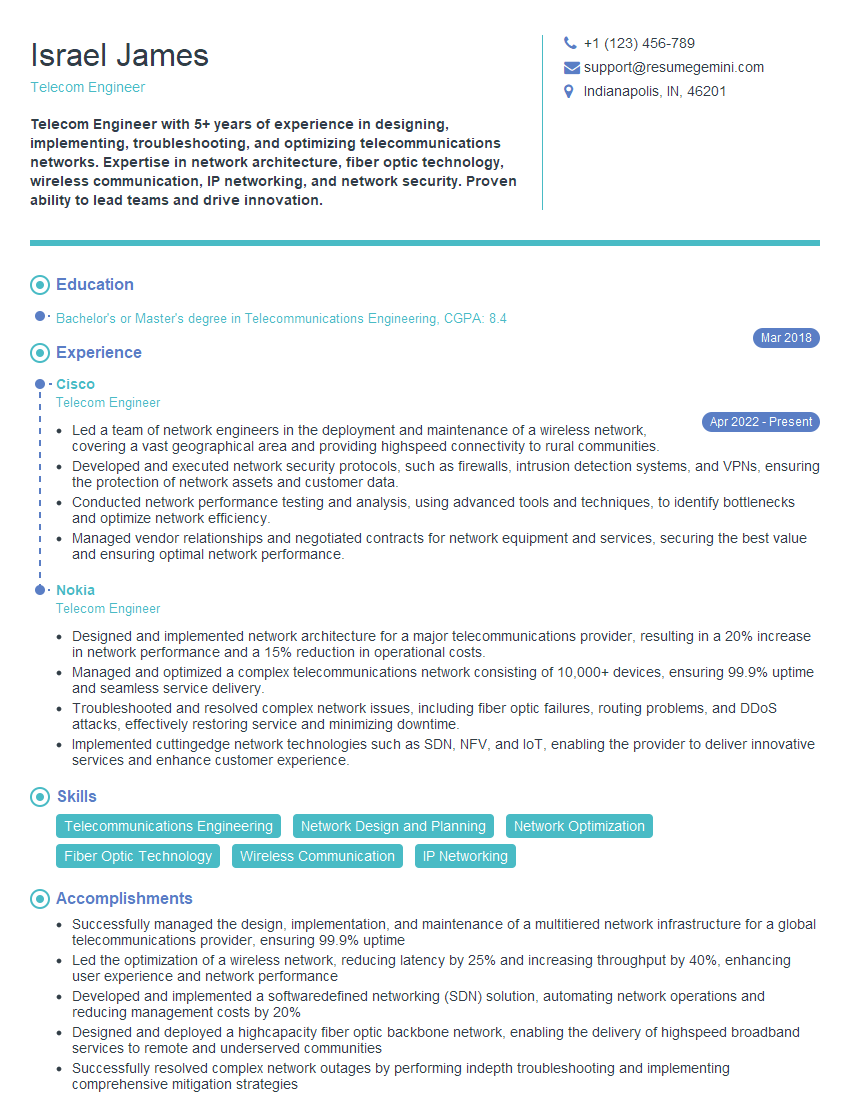

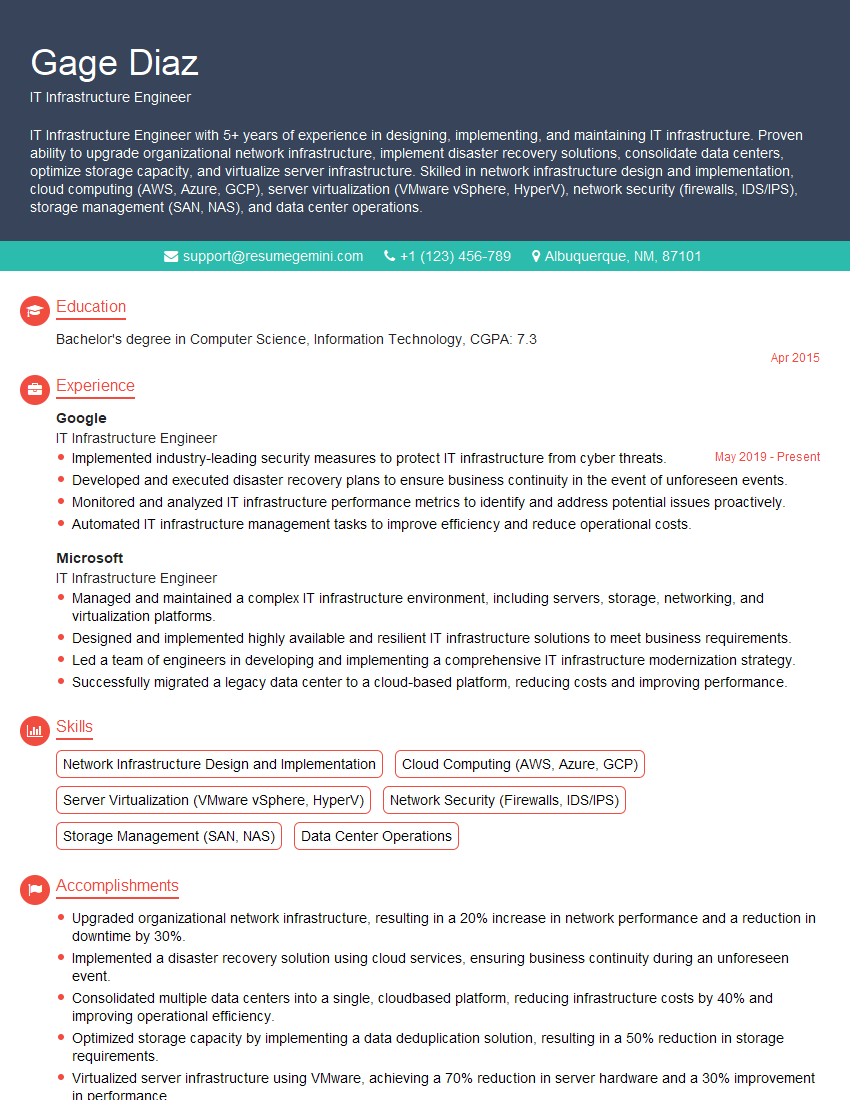

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. Explain your experience with network monitoring tools for QoS and traffic management.

I have extensive experience with network monitoring tools for QoS and traffic management, including SolarWinds Network Performance Monitor, PRTG Network Monitor, and Nagios. These tools provide crucial real-time visibility into network performance, allowing me to identify and troubleshoot issues related to bandwidth utilization, latency, packet loss, and jitter. I’m proficient in using these tools to monitor key QoS metrics like delay variation, packet loss rate, and throughput for various applications, enabling proactive identification of potential bottlenecks. For example, using SolarWinds, I once detected unusually high latency on a specific VLAN carrying VoIP traffic. By analyzing the data, I pinpointed a faulty switch port causing significant packet loss, and swiftly resolved the issue, preventing widespread service disruption.

Beyond these commercial tools, I’m comfortable with open-source solutions like Cacti and Zabbix. These require more configuration, but offer flexibility and allow deep customization based on the specific needs of a network. I’ve leveraged their capabilities for creating custom dashboards and alerting systems focused on specific QoS metrics critical to the applications running on the network.

Q 17. Describe your experience with SNMP and its role in QoS monitoring.

SNMP, or Simple Network Management Protocol, plays a vital role in QoS monitoring by providing a standardized way to collect network performance data from various devices. It allows me to remotely query network devices (routers, switches, etc.) for information such as interface utilization, queue lengths, and error rates – all crucial metrics for assessing QoS. I regularly use SNMP to collect data for creating reports and dashboards, helping to identify patterns and trends that impact network performance and QoS. The data collected through SNMP feeds directly into my chosen network monitoring tools mentioned earlier, creating a comprehensive overview of the network’s health and performance.

For instance, using SNMP MIBs (Management Information Bases), I can monitor specific queue lengths on a router to identify potential congestion points before they impact application performance. I can also monitor error counters to detect issues like packet loss or CRC errors, which can negatively affect the quality of voice or video calls.

Furthermore, I can use SNMP traps to receive immediate alerts when certain thresholds are exceeded, ensuring I’m proactively notified of potential QoS problems. This allows for swift intervention and prevents minor issues from escalating into major service disruptions.
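
As an illustration of what a monitoring tool does with polled counters, the sketch below turns two samples of an interface octet counter (for example IF-MIB `ifInOctets`) into a utilization percentage, handling a single counter wrap. It is pure arithmetic with no SNMP library involved; the function name `interface_utilization_pct` and the sample values are hypothetical.

```python
def interface_utilization_pct(octets_prev: int, octets_now: int,
                              interval_s: float, if_speed_bps: int,
                              counter_bits: int = 32) -> float:
    """Average utilization between two polls of an octet counter."""
    # Counters wrap at 2**counter_bits; modular subtraction handles one wrap.
    delta_octets = (octets_now - octets_prev) % (2 ** counter_bits)
    return 100.0 * delta_octets * 8 / (interval_s * if_speed_bps)


if __name__ == "__main__":
    # Two polls 300 s apart on a 100 Mbps interface.
    print(interface_utilization_pct(1_000_000, 38_500_000, 300, 100_000_000))
    # The same calculation across a 32-bit counter wrap.
    print(interface_utilization_pct(2**32 - 1000, 500, 300, 100_000_000))
```

On fast links a 32-bit counter can wrap more than once between polls, which is why the 64-bit `ifHCInOctets` counters (`counter_bits=64` here) are generally preferred.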

Q 18. How do you handle network congestion using traffic engineering techniques?

Handling network congestion requires a multi-faceted approach leveraging various traffic engineering techniques. The first step is accurate identification of the congestion point through the monitoring tools discussed earlier. Once identified, the solution depends on the root cause. Common strategies include:

- Traffic Shaping and Policing: These techniques control the rate at which traffic enters the network. By shaping traffic, we buffer and delay packets to smooth out bursts, preventing sudden spikes that lead to congestion. Policing ensures that traffic conforms to predefined limits, dropping or re-marking packets that exceed those limits.

- Quality of Service (QoS): Implementing QoS mechanisms such as prioritization and traffic classification allows us to prioritize critical applications (e.g., VoIP, video conferencing) over less critical applications during congestion. This ensures essential services maintain acceptable performance even under pressure.

- Load Balancing: Distributing traffic across multiple paths or devices prevents overload on any single component. Load balancers intelligently route traffic to optimize resource utilization.

- Bandwidth Upgrades: In some cases, congestion is simply a matter of insufficient bandwidth. Upgrading the network infrastructure to accommodate higher traffic volumes is a necessary solution.

- Route Optimization: Re-evaluating routing to ensure traffic avoids congested links or overloaded devices is crucial. Routing protocols like OSPF or BGP can be tuned, for example by adjusting link costs or path attributes, to adapt to changing traffic patterns.

For example, I once encountered severe congestion on a link connecting two data centers. After analyzing the network traffic with monitoring tools, I implemented QoS policies to prioritize critical applications, and also used load balancing to distribute traffic across multiple links. This significantly alleviated the congestion and improved overall network performance.

Q 19. Explain your experience with link aggregation and its impact on QoS.

Link aggregation, also known as port bonding or trunking, combines multiple physical links into a single logical link, increasing bandwidth and redundancy. This significantly impacts QoS by providing greater capacity to handle traffic flows. It improves bandwidth availability, reducing latency and packet loss, especially beneficial for bandwidth-intensive applications. Furthermore, link aggregation enhances fault tolerance; if one link fails, the others continue to operate, maintaining network connectivity and ensuring application uptime.

However, it’s crucial to understand that link aggregation alone doesn’t automatically improve QoS. Proper configuration is vital. For example, using the same QoS policies across all aggregated links is essential to prevent inconsistencies. Traffic is usually distributed per flow using a hash of header fields, so a single flow is limited to the speed of one member link, and a poorly chosen hash or mismatched configuration can lead to unequal traffic distribution across the links, potentially negating the benefits of aggregation. In a real-world scenario, I’ve used link aggregation to improve the performance of a video conferencing system. By combining several Gigabit Ethernet links into a single logical link with multi-gigabit aggregate capacity, we drastically improved the video quality and eliminated the frequent dropped frames experienced earlier.
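
To show why traffic distribution across an aggregated link can be uneven, here is a rough sketch of per-flow hashing. It assumes a CRC32 hash over a 5-tuple, which is a stand-in for whatever hash a given switch actually uses; the function name `pick_member_link` and the example addresses are hypothetical.

```python
import zlib
from collections import Counter
from typing import Tuple

# (source IP, destination IP, protocol, source port, destination port)
Flow = Tuple[str, str, str, int, int]


def pick_member_link(flow: Flow, num_links: int) -> int:
    """Map a flow to one member link; the same flow always maps the same way."""
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % num_links


if __name__ == "__main__":
    flows = [("10.0.0.1", "10.0.1.1", "udp", 16384 + i, 5004) for i in range(8)]
    usage = Counter(pick_member_link(f, num_links=4) for f in flows)
    print(dict(usage))  # how many of the 8 flows landed on each member link
```

Because every packet of a flow hashes to the same member link, packet order is preserved, but one large flow can never use more than a single link’s capacity, and a handful of flows may pile onto the same link.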

Q 20. Describe your experience with VPNs and their QoS considerations.

VPNs, or Virtual Private Networks, create secure connections over public networks. QoS considerations for VPNs are crucial because the encryption and tunneling processes inherent in VPNs can introduce latency and overhead, potentially impacting application performance. Proper QoS configuration is needed to ensure that VPN traffic receives adequate bandwidth and priority. This often involves classifying VPN traffic and assigning appropriate QoS policies to guarantee adequate performance for latency-sensitive applications running over the VPN.

For example, in a scenario with remote workers accessing corporate resources via a VPN, prioritizing VPN traffic carrying real-time applications like video conferencing or VoIP is essential. Without proper QoS configuration, the encryption overhead combined with network congestion could render these applications unusable. I’ve encountered situations where a poorly configured VPN significantly impacted VoIP quality, leading to complaints from remote workers. By implementing QoS policies to prioritize VPN traffic carrying VoIP, we successfully resolved the issue and ensured acceptable call quality.

Q 21. What are the key performance indicators (KPIs) for QoS and traffic engineering?

Key Performance Indicators (KPIs) for QoS and traffic engineering are essential for monitoring and improving network performance. These metrics provide insights into various aspects of network health and application performance. Some of the crucial KPIs include:

- Bandwidth Utilization: The percentage of available bandwidth being used. High utilization can indicate potential congestion.

- Latency: The delay experienced by packets traversing the network. High latency negatively impacts real-time applications.

- Packet Loss: The percentage of packets lost during transmission. Packet loss degrades application performance: it forces retransmissions for TCP traffic and causes gaps in real-time audio and video.

- Jitter: Variations in latency, which can significantly affect real-time applications like VoIP and video conferencing.

- Throughput: The actual amount of data successfully transmitted over a period. Low throughput indicates network bottlenecks or QoS issues.

- Queue Lengths: The number of packets waiting to be processed in network queues. Long queues indicate potential congestion points.

- Application Response Time: The time it takes for an application to respond to a request. Slow response times directly impact user experience.

The specific KPIs that are most important will vary depending on the type of network and the applications running on it. Regular monitoring of these KPIs is vital for proactively identifying and resolving performance issues, ensuring a high-quality user experience.

Q 22. How do you handle QoS issues in a multi-vendor network environment?

Managing QoS in a multi-vendor network is like orchestrating a symphony where each instrument (vendor’s equipment) has its own unique way of playing. The key is standardization and abstraction. We can’t expect every vendor to use the same configuration language, so we need a common framework. This often involves defining consistent QoS policies using standards-based architectures like DiffServ (Differentiated Services) or MPLS (Multiprotocol Label Switching). These allow us to classify traffic and apply different service levels, regardless of the underlying vendor technology.

For example, we might define a policy that prioritizes voice traffic over best-effort traffic across all vendor equipment using DiffServ’s PHB (Per-Hop Behavior) model. This policy then translates into specific configurations on each vendor’s device—Cisco’s QoS features might look different from Juniper’s, but the overall outcome—prioritizing voice—remains the same.

Furthermore, robust monitoring and centralized management systems are crucial. These systems provide a unified view across all vendors, allowing us to troubleshoot issues, identify bottlenecks, and adjust policies as needed. This helps maintain consistency and efficiency, akin to a conductor monitoring and adjusting the orchestra to maintain harmony.

Q 23. Explain the impact of network virtualization on QoS and traffic engineering.

Network virtualization significantly impacts QoS and traffic engineering by introducing abstraction layers. Instead of directly managing physical hardware, we now manage virtualized network functions (VNFs) and software-defined networking (SDN) controllers. This allows for greater flexibility and scalability but also introduces new challenges.

On the positive side, virtualization simplifies QoS policy deployment. We can dynamically create and allocate virtual resources with specific QoS profiles. For instance, we can easily assign higher bandwidth or lower latency to a virtual machine (VM) running a critical application using policies programmed within the SDN controller. This level of granularity is difficult to achieve with traditional hardware-centric approaches.

However, challenges arise. We need to ensure that the underlying virtualization infrastructure itself doesn’t introduce latency or packet loss. Proper resource allocation within the virtualized environment is crucial. Also, effective monitoring and troubleshooting require new tools and techniques that can traverse the abstraction layers. Think of it as managing a complex apartment building: you need to manage individual apartments (VMs) while ensuring the building’s infrastructure (virtualization platform) functions correctly.

Q 24. What are the challenges of implementing QoS in cloud environments?

Implementing QoS in cloud environments presents several unique challenges. The shared resource nature of cloud infrastructure necessitates careful consideration of resource contention. Multiple tenants share the same physical resources, hence QoS mechanisms need to prevent one tenant from hogging resources at the expense of others.

Another challenge is the dynamic and unpredictable nature of cloud workloads. Applications scale up and down frequently, and new VMs are constantly being created and destroyed. This makes it difficult to create static QoS policies that remain effective throughout the lifetime of a cloud application.

Furthermore, maintaining visibility and control across a multi-tenant environment can be tricky. Troubleshooting performance issues can be complex, and requires detailed visibility into both the physical and virtualized layers. Finally, ensuring security and isolation between tenants is paramount; it is imperative that a compromised tenant cannot negatively affect the QoS of others.

Q 25. How do you ensure QoS compliance with industry standards and regulations?

Ensuring QoS compliance involves a multi-pronged approach combining policy implementation with rigorous auditing and testing. Industry standards like IEEE 802.1Q (VLAN tagging for traffic classification) and RFC 2475 (DiffServ architecture) form the basis of many QoS deployments. Regulations, such as those covering network security or specific sectors (e.g., financial services), impose additional requirements that must be incorporated.

We typically begin by defining clear QoS policies based on these standards and regulations, followed by rigorous testing and validation. This might involve using network simulation tools to predict network behavior under various load conditions. Regular performance monitoring and capacity planning are key for ensuring ongoing compliance. Finally, detailed documentation of the QoS architecture, policies, and testing procedures is critical for compliance audits and troubleshooting.

Imagine building a house: standards and regulations are like the building codes. Our design, construction (policy implementation), and inspection (testing and monitoring) all must adhere to them to ensure a safe and functional structure.

Q 26. Explain your experience with network simulation and modeling tools.

I have extensive experience with network simulation and modeling tools. I’ve used NS-3, OPNET Modeler, and Cisco Packet Tracer for network design, performance analysis, and QoS optimization. These tools allow us to create virtual representations of networks and test different scenarios before implementing them in production.

For example, using NS-3, I’ve modeled complex network topologies including various QoS mechanisms (e.g., Weighted Fair Queuing, Class-Based Queuing) to simulate different traffic patterns and evaluate their impact on key performance indicators (KPIs) like latency, jitter, and packet loss. This allows for ‘what-if’ analysis, identifying potential bottlenecks, and optimizing network configurations for optimal performance before deploying changes in a live environment. This is far safer and cheaper than experimenting directly on a production network.

Q 27. Describe a situation where you had to optimize network performance using QoS and traffic engineering techniques.

In a previous role, we experienced significant VoIP call quality degradation during peak hours in a large enterprise network. Users reported frequent dropped calls and excessive latency. After investigating, we found that high-bandwidth video streaming traffic was congesting the links it shared with VoIP.

To optimize performance, we implemented a tiered QoS architecture using DiffServ. We classified VoIP traffic as high priority (EF – Expedited Forwarding) and video streaming traffic as lower priority (BE – Best Effort). We also adjusted queuing mechanisms on key network devices to ensure that VoIP packets experienced minimal queuing delay. This involved configuring queuing on core routers and switches so that EF traffic was serviced first, typically with a strict-priority queue for EF and Weighted Fair Queuing (WFQ) sharing the remaining bandwidth among the other classes.
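The classification step can be sketched in a few lines. This is an illustrative example rather than the configuration used in the scenario: EF is DSCP 46 and Best Effort is DSCP 0 per the DiffServ standards, but the `classify` helper and its UDP port range for voice are assumptions made for the example. The DSCP value occupies the upper six bits of the IP TOS byte, which is why it is shifted left by two before being applied to a socket.

```python
import socket

DSCP_EF = 46  # Expedited Forwarding
DSCP_BE = 0   # Best Effort (default)


def dscp_to_tos(dscp):
    """Convert a 6-bit DSCP value to the 8-bit TOS/Traffic Class byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0..63")
    return dscp << 2


def classify(udp_dst_port, voice_ports=range(16384, 32768)):
    """Hypothetical classifier: treat a UDP port range as voice (EF).

    The port range is an assumption for illustration; real deployments
    classify on whatever criteria their policy defines.
    """
    return DSCP_EF if udp_dst_port in voice_ports else DSCP_BE


def mark_socket(sock, dscp):
    """Ask the OS to mark outgoing IPv4 packets on this socket.

    Whether the marking is honored depends on the operating system
    and on the network devices along the path.
    """
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
```

Marking at the host is only half of the picture: routers and switches still need a policy that trusts or re-marks the DSCP value and maps it to the right queue.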

The result was a dramatic improvement in VoIP call quality. Dropped call rates decreased by over 90%, and latency was reduced to acceptable levels. This was a clear demonstration of how strategic application of QoS techniques can significantly impact user experience and network efficiency.
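Improvements like these are usually confirmed by measuring the same KPIs before and after the change. The sketch below shows one way to compute mean latency, jitter, and loss from per-packet timestamps. The record layout and sample values are hypothetical, and the jitter estimate follows the smoothed interarrival jitter formula from RFC 3550 (each new delay variation moves the estimate by one sixteenth of the difference).

```python
def qos_kpis(records, sent_count):
    """Compute basic QoS KPIs from received-packet records.

    records: list of (seq, send_ms, recv_ms) in arrival order
             (hypothetical layout for this example).
    sent_count: number of packets the sender transmitted.
    """
    if not records:
        raise ValueError("at least one received packet is required")

    # One-way transit time per packet.
    latencies = [recv - send for _, send, recv in records]

    # RFC 3550 style smoothed jitter: J += (|D| - J) / 16,
    # where D is the change in transit time between consecutive packets.
    jitter = 0.0
    for prev, cur in zip(latencies, latencies[1:]):
        jitter += (abs(cur - prev) - jitter) / 16

    return {
        "mean_latency_ms": sum(latencies) / len(latencies),
        "jitter_ms": jitter,
        "loss_ratio": 1 - len(records) / sent_count,
    }


# Hypothetical sample: 4 packets sent, 3 received.
SAMPLE_RECORDS = [(0, 0, 50), (1, 20, 70), (2, 40, 100)]
```

Running the same calculation on captures taken before and after a QoS change gives a like-for-like comparison instead of relying on user reports alone.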

Q 28. How do you stay current with the latest advancements in QoS and traffic engineering?

Staying current in this rapidly evolving field requires a multifaceted approach. I regularly attend industry conferences like NANOG (North American Network Operators’ Group), along with webinars and online courses offered by leading networking vendors and organizations. I actively participate in online communities and forums dedicated to QoS and traffic engineering, exchanging ideas and learning from other experts.

I also keep abreast of new research papers and publications in relevant journals and periodicals. Furthermore, practical hands-on experience is invaluable. I actively seek opportunities to work on challenging real-world projects that push the boundaries of my expertise. This keeps my skills honed and my knowledge fresh. Staying current is akin to a marathon, not a sprint – a continuous process of learning and adaptation.

Key Topics to Learn for QoS and Traffic Engineering Interview

- QoS Mechanisms: Understand various QoS mechanisms like DiffServ, IntServ, and MPLS. Explore their functionalities, limitations, and practical implementations in network environments.

- Traffic Characterization: Learn how to analyze and characterize network traffic patterns (e.g., using statistical models). Understand the impact of different traffic types on network performance.

- Congestion Control: Master congestion control algorithms and their implications for QoS. Be prepared to discuss TCP congestion control mechanisms and their effectiveness in different network scenarios.

- Traffic Engineering Principles: Explore various traffic engineering techniques like IGP metric tuning in OSPF/IS-IS, MPLS Traffic Engineering (MPLS-TE), and constraint-based routing. Understand how these technologies optimize network resource utilization and improve QoS.

- Performance Monitoring and Analysis: Learn about tools and techniques used to monitor network performance, identify bottlenecks, and troubleshoot QoS issues. Be familiar with key performance indicators (KPIs) related to QoS.

- Network Security and QoS: Understand how security measures can impact QoS and vice-versa. Discuss topics like firewalls, intrusion detection systems, and their influence on network traffic.

- Practical Applications: Be ready to discuss real-world examples of QoS and traffic engineering implementations in various network settings (e.g., VoIP, video streaming, data centers).

- Problem-Solving Approach: Develop a systematic approach to troubleshooting QoS and traffic engineering problems. Practice identifying root causes and proposing effective solutions.
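As a study aid for the traffic engineering topic above, constraint-based routing can be sketched as a shortest-path search over only those links that satisfy a constraint. The example below is a simplified illustration, not any vendor's implementation: it prunes links whose available bandwidth is below the requested amount and then runs Dijkstra's algorithm on what remains. The topology, costs, and bandwidth figures are hypothetical.

```python
import heapq


def cspf(links, src, dst, min_bw):
    """Return the lowest-cost path from src to dst using only links
    with at least min_bw available, or None if no such path exists.

    links: list of (u, v, cost, available_bw) directed edges.
    """
    # Step 1: prune links that cannot satisfy the bandwidth constraint.
    graph = {}
    for u, v, cost, bw in links:
        if bw >= min_bw:
            graph.setdefault(u, []).append((v, cost))

    # Step 2: Dijkstra's shortest path on the pruned graph.
    dist = {src: 0}
    prev = {}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nxt, cost in graph.get(node, []):
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))

    if dst not in dist:
        return None

    # Walk back from the destination to rebuild the path.
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


# Hypothetical topology: a cheap low-bandwidth path and a costlier fat path.
EXAMPLE_LINKS = [
    ("A", "B", 1, 100), ("B", "D", 1, 100),
    ("A", "C", 2, 1000), ("C", "D", 2, 1000),
]
```

A small request follows the cheaper A-B-D path, while a request larger than 100 units is steered onto A-C-D because the cheaper links were pruned. That is the basic trade-off that bandwidth-aware path selection makes.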

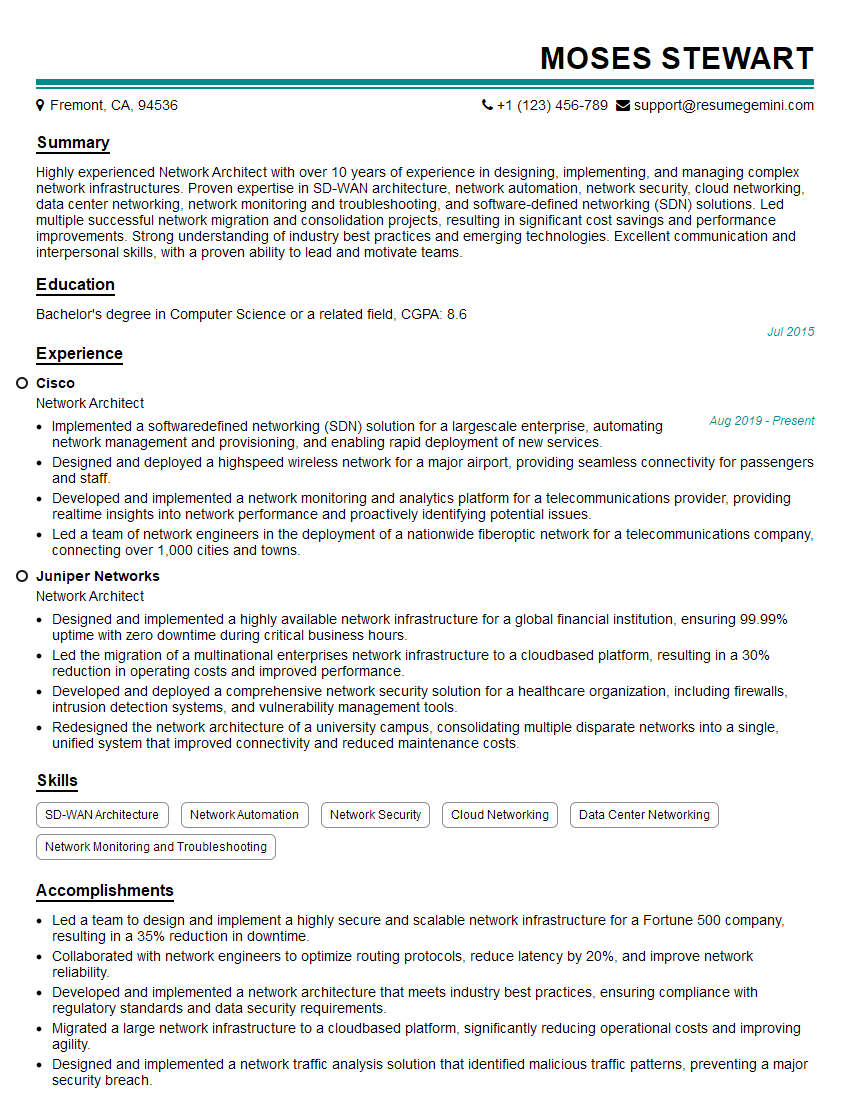

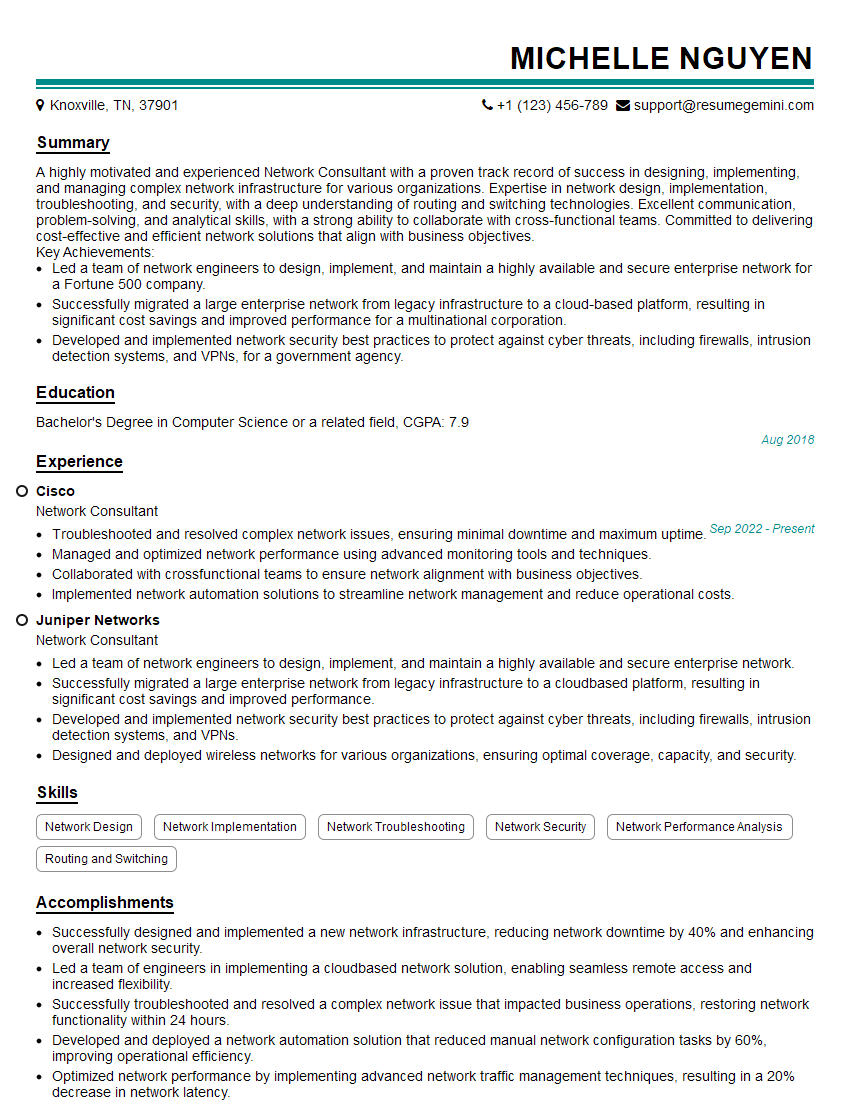

Next Steps

Mastering QoS and Traffic Engineering opens doors to exciting career opportunities in network design, implementation, and management. A strong understanding of these crucial areas significantly enhances your value to potential employers. To maximize your job prospects, focus on creating a compelling and ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource for building professional resumes, and we offer examples tailored specifically to QoS and Traffic Engineering roles to help you showcase your expertise. Invest time in crafting a powerful resume – it’s your first impression and a key factor in securing your dream job.
