Cracking a skill-specific interview, like one for Quality Assurance Process Improvement, requires understanding the nuances of the role. In this blog post, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in a Quality Assurance Process Improvement Interview

Q 1. Explain the DMAIC methodology in the context of QA process improvement.

DMAIC, which stands for Define, Measure, Analyze, Improve, and Control, is a structured five-phase problem-solving methodology used for process improvement. In the context of QA, it’s a powerful tool for systematically identifying and eliminating defects, improving efficiency, and enhancing the overall quality of products or services.

- Define: Clearly define the problem, the project goals, and the scope of improvement. This involves identifying the specific process to be improved and establishing clear metrics for success. For example, if we’re dealing with a high defect rate in software testing, we would define the specific defect types, the target reduction percentage, and the timeframe for improvement.

- Measure: Collect data on the current process performance. This involves identifying key performance indicators (KPIs) and measuring their baseline values. For instance, we would measure the current defect rate, the time taken for testing, and the number of test cases executed.

- Analyze: Analyze the collected data to identify the root causes of the problem. This might involve using tools like Pareto charts, fishbone diagrams, and statistical analysis to pinpoint the key factors contributing to the defects. We might discover that a specific coding practice or insufficient training is the main culprit behind the high defect rate.

- Improve: Develop and implement solutions to address the root causes identified in the analysis phase. This might include implementing new testing procedures, improving training programs, or revising coding standards. We would then test these solutions and make adjustments based on their effectiveness.

- Control: Implement controls to maintain the improved process performance and prevent regression. This involves monitoring KPIs, implementing regular reviews, and establishing a system for continuous improvement. Regular monitoring and analysis would ensure the improvement is sustained and not lost over time.

DMAIC offers a systematic approach, ensuring that improvements are data-driven and sustainable, not just one-off fixes.
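To make the Measure and Control phases more concrete, here is a minimal Python sketch. It assumes defects are counted per week over a roughly constant amount of testing, which is what a c-chart (one common Statistical Process Control chart) requires. It compares the baseline mean with the post-improvement mean, then derives control limits (the mean plus or minus three times its square root) from the improved process and flags new weeks that fall outside them. All counts are made-up sample values.

```python
import math
from typing import List, Tuple

def c_chart_limits(counts: List[int]) -> Tuple[float, float, float]:
    """Return (LCL, CL, UCL) for a c-chart built from defect counts per period."""
    center = sum(counts) / len(counts)
    spread = 3 * math.sqrt(center)
    # Defect counts cannot be negative, so the lower limit is floored at zero.
    return max(0.0, center - spread), center, center + spread

def out_of_control(counts: List[int], limits: Tuple[float, float, float]) -> List[int]:
    """Return the indices of periods whose count falls outside the limits."""
    lcl, _, ucl = limits
    return [i for i, c in enumerate(counts) if c < lcl or c > ucl]

# Hypothetical weekly defect counts; these are illustrative, not real data.
baseline = [12, 9, 11, 10, 13, 8, 11, 10]  # Measure: before the change
improved = [6, 5, 7, 6, 5, 6, 7, 6]        # after the Improve phase
monitoring = [5, 7, 15, 6]                 # Control: new weeks to watch

base_mean = sum(baseline) / len(baseline)
new_mean = sum(improved) / len(improved)
reduction_pct = 100 * (base_mean - new_mean) / base_mean

# Control limits are computed from the improved, stable process.
limits = c_chart_limits(improved)
print(f"Mean defects/week: {base_mean:.1f} -> {new_mean:.1f} ({reduction_pct:.0f}% lower)")
print("Control limits (LCL, CL, UCL):", tuple(round(x, 2) for x in limits))
print("Weeks to investigate:", out_of_control(monitoring, limits))
```

A week outside the limits (index 2 in the monitoring data) suggests a special cause worth investigating rather than ordinary week-to-week variation.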

Q 2. Describe your experience with implementing a new QA process or improving an existing one.

In my previous role at a financial technology company, we were experiencing significant delays in our software release cycles due to a cumbersome testing process. The existing QA process relied heavily on manual testing, leading to bottlenecks and increased risk of errors. I spearheaded a project to improve this. We transitioned to a more agile approach, incorporating automated testing and continuous integration/continuous deployment (CI/CD). This involved:

- Needs Assessment: We first analyzed the existing process, mapping out the steps involved and identifying pain points. We conducted interviews with QA engineers and developers to gather insights.

- Solution Design: We designed a new process that incorporated automated testing frameworks (such as Selenium or Cypress), continuous integration tools (like Jenkins or GitLab CI), and improved test case management systems. We also implemented a risk-based testing approach, prioritizing testing of critical functionalities.

- Implementation: We phased in the new process, starting with pilot projects and gradually expanding. This minimized disruption and allowed for continuous feedback and adjustment.

- Training: We provided comprehensive training to our QA engineers on the new tools and processes. This was crucial for ensuring adoption and effective utilization.

- Monitoring and Optimization: We closely monitored key metrics like defect density, testing time, and release frequency. Based on our findings, we made continuous adjustments and improvements to optimize the process.

The result was a significant reduction in release cycle time, increased efficiency, and fewer defects reaching production. This also improved team morale and fostered a culture of continuous improvement.

Q 3. What metrics do you use to measure the effectiveness of a QA process?

Measuring the effectiveness of a QA process requires a multifaceted approach. We need to track both quantitative and qualitative metrics. Here are some examples:

- Defect Density: The number of defects found per unit of code or functionality (e.g., defects per thousand lines of code). Lower defect density indicates a more effective QA process.

- Defect Severity: Categorizing defects based on their impact (critical, major, minor). Reducing the number of critical and major defects should be the top priority.

- Test Coverage: Percentage of code or functionalities that have been tested. High test coverage provides better assurance of quality.

- Testing Time: The time required to complete testing activities. Shorter testing cycles indicate efficiency gains.

- Mean Time To Resolution (MTTR): The average time taken to resolve a reported defect. A low MTTR suggests a streamlined defect resolution process.

- Customer Satisfaction (CSAT): Gathering feedback from customers on product quality. High CSAT suggests that QA efforts are effective in delivering a positive customer experience.

- Escape Rate: The number or percentage of defects that reach production despite QA efforts (for example, production defects divided by all defects found for a release). A low escape rate demonstrates effective QA practices.

The specific metrics used will depend on the context, but a balanced approach covering both efficiency and quality aspects is vital.
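The quantitative metrics above are simple ratios once defect data is exported from a tracker. The sketch below assumes a hypothetical `Defect` record with a severity, a flag for whether the defect was found in production, and open/resolve timestamps; real tracking tools expose these fields under their own names. Escape rate is computed here as the share of all known defects first found in production, which is one common convention.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional

@dataclass
class Defect:
    severity: str                 # e.g. "critical", "major", "minor"
    found_in_production: bool     # True if the defect escaped QA
    opened: datetime
    resolved: Optional[datetime]  # None while the defect is still open

def defect_density(defects: List[Defect], kloc: float) -> float:
    """Defects per thousand lines of code."""
    return len(defects) / kloc

def escape_rate(defects: List[Defect]) -> float:
    """Share of all known defects that were first found in production."""
    return sum(d.found_in_production for d in defects) / len(defects)

def mttr_hours(defects: List[Defect]) -> float:
    """Mean time to resolution, in hours, over resolved defects only."""
    durations = [(d.resolved - d.opened).total_seconds() / 3600
                 for d in defects if d.resolved is not None]
    return sum(durations) / len(durations)

# Hypothetical sample data for one release.
t = datetime(2024, 1, 1, 9, 0)
defects = [
    Defect("critical", False, t, datetime(2024, 1, 1, 13, 0)),  # 4 h
    Defect("major", False, t, datetime(2024, 1, 2, 9, 0)),      # 24 h
    Defect("minor", True, t, datetime(2024, 1, 1, 11, 0)),      # 2 h
    Defect("minor", False, t, None),                            # still open
]

print(f"Defect density: {defect_density(defects, kloc=20):.2f} per KLOC")
print(f"Escape rate: {escape_rate(defects):.0%}")
print(f"MTTR: {mttr_hours(defects):.1f} h")
```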

Q 4. How do you identify and prioritize areas for improvement in a QA process?

Identifying and prioritizing areas for improvement requires a systematic approach. I typically employ a combination of techniques:

- Data Analysis: Analyzing historical data on defects, testing time, and other relevant metrics to identify trends and patterns. For example, using Pareto charts to find the roughly 20% of causes that account for about 80% of defects.

- Defect Tracking Systems: Utilizing defect tracking software to analyze the types, severity, and frequency of defects. Analyzing these reports can highlight specific areas needing attention.

- Stakeholder Feedback: Gathering feedback from developers, testers, and customers to identify pain points and areas for improvement. Regular meetings and surveys can be invaluable.

- Process Audits: Conducting regular audits of the QA process to identify inefficiencies and potential areas for improvement. This can include reviewing documentation, observing testing activities, and interviewing team members.

- Root Cause Analysis: Using techniques like the 5 Whys or Fishbone diagrams to delve deeper into the root causes of recurring defects. Addressing root causes is crucial for lasting improvement.

Once potential areas for improvement are identified, prioritization is done based on impact and feasibility. We might use a prioritization matrix to weigh the potential benefits of each improvement against the cost and effort involved.
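A prioritization matrix can be as simple as scoring each candidate on impact and effort. This sketch uses hypothetical 1-to-5 scores, sorts candidates by impact-to-effort ratio, and places each one in a 2x2 quadrant; the threshold of 3 and the quadrant names are assumptions, and teams often add further criteria such as urgency or risk.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Candidate:
    name: str
    impact: int   # expected benefit, scored 1 (low) to 5 (high)
    effort: int   # cost and effort, scored 1 (low) to 5 (high)

def quadrant(c: Candidate, threshold: int = 3) -> str:
    """Place a candidate in a 2x2 impact/effort matrix."""
    high_impact = c.impact >= threshold
    low_effort = c.effort < threshold
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "major project"
    if low_effort:
        return "fill-in"
    return "deprioritize"

def prioritize(cands: List[Candidate]) -> List[Candidate]:
    """Sort by impact-to-effort ratio, highest first."""
    return sorted(cands, key=lambda c: c.impact / c.effort, reverse=True)

# Hypothetical improvement candidates with made-up scores.
backlog = [
    Candidate("Automate smoke tests", impact=5, effort=2),
    Candidate("Rewrite test data setup", impact=4, effort=4),
    Candidate("Add defect template fields", impact=2, effort=1),
    Candidate("Migrate test management tool", impact=2, effort=5),
]

for c in prioritize(backlog):
    print(f"{c.name:30} ratio={c.impact / c.effort:.2f} -> {quadrant(c)}")
```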

Q 5. What tools and techniques do you use for root cause analysis in QA?

Root cause analysis is fundamental to effective QA process improvement. I use a range of tools and techniques, including:

- 5 Whys: A simple yet powerful technique involving repeatedly asking “Why?” to uncover the underlying causes of a problem. This is especially effective for identifying the chain of events that lead to a defect.

- Fishbone Diagram (Ishikawa Diagram): A visual tool used to brainstorm potential causes of a problem, categorized by different factors (e.g., people, processes, materials, environment). This helps to organize thinking and identify multiple potential root causes.

- Pareto Chart: A bar chart that ranks the causes of a problem in descending order of frequency. This helps to focus on the most significant contributors to defects.

- Fault Tree Analysis (FTA): A top-down, deductive method that visually represents the combination of events that could lead to a specific failure. This is particularly helpful for complex systems or situations.

- Statistical Process Control (SPC): Employing statistical methods to monitor and analyze process variations, identifying sources of variability and indicating areas requiring attention.

The choice of technique depends on the complexity of the issue. Sometimes, a combination of these techniques provides the most comprehensive understanding of the root cause.
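A Pareto analysis boils down to counting causes, sorting them, and tracking the cumulative share. The sketch below assumes each defect ticket carries one root-cause tag (the tags and counts are invented) and returns the "vital few" causes needed to reach 80% of defects.

```python
from collections import Counter
from typing import List, Tuple

def pareto(causes: List[str], cutoff: float = 0.8) -> Tuple[List[Tuple[str, int, float]], List[str]]:
    """Rank causes by frequency and return (table, vital_few).

    Table rows are (cause, count, cumulative share); vital_few holds the
    top-ranked causes needed to reach the cutoff share of all defects."""
    ranked = Counter(causes).most_common()  # highest count first
    total = sum(n for _, n in ranked)
    table, vital_few, running = [], [], 0
    for cause, n in ranked:
        if running / total < cutoff:  # cutoff not yet reached before this cause
            vital_few.append(cause)
        running += n
        table.append((cause, n, running / total))
    return table, vital_few

# Hypothetical root-cause tags pulled from 40 defect tickets.
tags = (["unclear requirement"] * 20 + ["missing unit test"] * 12 +
        ["environment config"] * 4 + ["test data setup"] * 2 +
        ["typo"] * 1 + ["flaky dependency"] * 1)

table, vital_few = pareto(tags)
for cause, n, cum in table:
    print(f"{cause:22} {n:3d} {cum:7.1%}")
print("Vital few:", vital_few)
```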

Q 6. How do you handle conflicting priorities in QA process improvement projects?

Conflicting priorities are inevitable in QA process improvement projects. Managing them effectively requires careful planning and communication. My approach involves:

- Prioritization Matrix: Using a matrix to rank improvement projects based on factors like impact, urgency, and feasibility. This helps to make objective decisions about which projects to tackle first.

- Stakeholder Alignment: Clearly communicating the priorities to all stakeholders and obtaining buy-in. This helps to ensure everyone is working towards the same goals.

- Phased Approach: Breaking down large projects into smaller, manageable phases. This allows for flexibility and enables adjustments based on changing priorities.

- Negotiation and Compromise: Openly communicating conflicting priorities and finding mutually agreeable solutions. This may involve adjusting timelines, scope, or resources.

- Documentation and Tracking: Maintaining detailed documentation of project priorities, progress, and decisions. This provides transparency and ensures accountability.

Successful conflict resolution requires clear communication, collaboration, and a willingness to compromise. Prioritization based on data and stakeholder input is crucial.

Q 7. Describe your experience with risk assessment and mitigation in QA.

Risk assessment and mitigation are critical for effective QA process improvement. My experience involves:

- Risk Identification: Identifying potential risks associated with the QA process, including technical risks (e.g., tool failures, integration issues), operational risks (e.g., resource constraints, skill gaps), and business risks (e.g., project delays, missed deadlines). Techniques such as brainstorming, SWOT analysis, and checklists can help.

- Risk Analysis: Assessing the likelihood and impact of each identified risk. This often involves assigning a probability and severity score to each risk, allowing for prioritization.

- Risk Response Planning: Developing mitigation strategies to reduce the likelihood or impact of high-priority risks. This might involve implementing contingency plans, acquiring additional resources, or improving training.

- Risk Monitoring and Control: Continuously monitoring identified risks and adjusting mitigation strategies as needed. Regular reviews and updates to the risk register are essential.

- Documentation: Maintaining a risk register that documents all identified risks, their assessment, and mitigation strategies. This provides a clear overview of the risk landscape and facilitates communication among stakeholders.

Proactive risk management is crucial for preventing problems and ensuring the success of QA process improvement initiatives. A well-defined risk management process allows for proactive and well-informed decision-making.
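A risk register with likelihood and severity scores can be sketched in a few lines. The 1-to-5 scales, multiplying the two scores, the band thresholds, and the sample risks are all assumptions for illustration; organizations usually define their own scales.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Risk:
    description: str
    category: str    # "technical", "operational", or "business"
    likelihood: int  # 1 (rare) to 5 (almost certain)
    severity: int    # 1 (negligible) to 5 (critical)
    mitigation: str

    @property
    def score(self) -> int:
        return self.likelihood * self.severity

def rating(score: int) -> str:
    """Map a 1-25 score onto three bands (thresholds are an assumption)."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"

def ranked(register: List[Risk]) -> List[Risk]:
    """Highest-scoring risks first, so mitigation effort goes where it matters."""
    return sorted(register, key=lambda r: r.score, reverse=True)

# Hypothetical entries for a test-automation rollout.
register = [
    Risk("CI test runners are unstable", "technical", 4, 3, "Dedicated runners, quarantine flaky tests"),
    Risk("Only one engineer knows the framework", "operational", 3, 5, "Pairing and written guides"),
    Risk("Pilot slips past the release date", "business", 2, 4, "Pilot on a non-critical service"),
    Risk("Test tool licence lapses", "business", 1, 2, "Calendar reminder for renewal"),
]

for r in ranked(register):
    print(f"[{rating(r.score):6}] {r.score:2d}  {r.description}  ->  {r.mitigation}")
```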

Q 8. How do you communicate QA process improvement initiatives to stakeholders?

Communicating QA process improvement initiatives effectively requires a multi-faceted approach tailored to different stakeholder groups. I begin by understanding each stakeholder’s perspective and their level of technical understanding. For example, executives need high-level summaries focusing on ROI and risk mitigation, while development teams require detailed explanations of process changes and their impact on their workflow.

- Executive Communication: I use concise presentations with clear visuals illustrating the problem, proposed solution, and expected benefits (e.g., reduced defect rate, faster time to market). I emphasize the financial impact, using metrics like cost savings and increased revenue.

- Development Team Communication: I conduct workshops and training sessions to explain the new processes, answer questions, and address concerns. I provide hands-on training and documentation to ensure seamless adoption. I also establish clear communication channels (e.g., regular meetings, email updates) to keep the team informed and address any arising issues.

- Tools and Platforms: I leverage project management tools (e.g., Jira, Asana) to share progress updates, track KPIs, and facilitate collaboration. Internal wikis or knowledge bases can host detailed process documentation and training materials.

Consistent and transparent communication is key. Regular feedback loops ensure that everyone is on the same page and any roadblocks are addressed proactively. For instance, I might utilize A/B testing on different communication approaches to see which resonates best with different groups.

Q 9. How do you ensure buy-in and adoption of new QA processes?

Ensuring buy-in and adoption of new QA processes requires more than just communication; it requires engagement and demonstrating value. It’s like introducing a new tool – people are more likely to use it if they see how it makes their lives easier.

- Demonstrate Value: I start by highlighting the problems the current process has and the tangible benefits the new process will deliver. This could be anything from reducing defects to improving team morale.

- Pilot Programs: A phased rollout allows for testing and refinement of the new processes in a controlled environment. Starting with a small team or project minimizes risk and allows for feedback incorporation before a full-scale implementation.

- Incentivize Adoption: Gamification or reward systems can motivate teams to embrace new processes. Recognizing early adopters and celebrating successes builds momentum.

- Address Concerns: Openly addressing concerns and actively involving teams in the design and implementation of new processes fosters ownership and increases the likelihood of successful adoption. This can be done through surveys, focus groups, or regular feedback sessions.

- Training and Support: Providing comprehensive training and ongoing support is crucial. This might include detailed documentation, hands-on training, and readily available support channels to answer questions and resolve issues.

For instance, if we’re implementing automated testing, I’d first show the team how it reduces manual testing time, which then translates to more time for higher-value tasks. This practical demonstration of value boosts acceptance.

Q 10. What is your experience with Agile and QA process integration?

My experience with Agile and QA process integration is extensive. In Agile environments, QA isn’t a separate phase but an integral part of the development lifecycle. I’ve successfully integrated QA into various Agile methodologies (Scrum, Kanban) through practices like:

- Shift-Left Testing: Incorporating testing activities early in the development cycle. This involves collaborating closely with developers from the sprint planning stage to define test cases and automate tests concurrently with development.

- Test-Driven Development (TDD): Writing automated tests *before* writing code. This helps ensure that code meets requirements and reduces the likelihood of defects.

- Continuous Integration/Continuous Delivery (CI/CD): Automating the testing process as part of the CI/CD pipeline to ensure continuous quality checks and fast feedback loops.

- Sprint Reviews and Retrospectives: Actively participating in sprint reviews to demonstrate testing progress and using retrospectives to identify areas for process improvement and address challenges.

I’ve used tools like Jenkins and GitLab CI to automate test execution and reporting within CI/CD pipelines. By embedding QA throughout the development cycle, we identify and resolve defects early, reducing costs and improving product quality. In one recent project, implementing TDD and shift-left testing reduced the number of critical bugs found in production by 60%.
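As a minimal illustration of the TDD cycle, the sketch below uses Python’s built-in `unittest`. The function `parse_amount` and its rules are hypothetical: the tests are written first and fail ("red"), then the function is implemented just enough to pass them ("green"). In a CI/CD pipeline, a job would run a suite like this on every commit.

```python
import unittest
from decimal import Decimal, InvalidOperation

# Step 2 ("green"): just enough code to make the tests below pass.
def parse_amount(text: str) -> Decimal:
    """Parse a currency string such as '$1,234.50' into a Decimal."""
    cleaned = text.strip().lstrip("$").replace(",", "")
    try:
        value = Decimal(cleaned)
    except InvalidOperation:
        raise ValueError(f"not a valid amount: {text!r}") from None
    if not value.is_finite() or value < 0:
        raise ValueError(f"amount must be a non-negative number: {text!r}")
    return value

# Step 1 ("red"): these tests were written first and failed until
# parse_amount existed and behaved as specified.
class ParseAmountTest(unittest.TestCase):
    def test_plain_number(self):
        self.assertEqual(parse_amount("42"), Decimal("42"))

    def test_symbol_and_thousands_separator(self):
        self.assertEqual(parse_amount("$1,234.50"), Decimal("1234.50"))

    def test_rejects_text(self):
        with self.assertRaises(ValueError):
            parse_amount("twelve")

    def test_rejects_negative(self):
        with self.assertRaises(ValueError):
            parse_amount("-5")

if __name__ == "__main__":
    # exit=False keeps the interpreter running after the tests; a CI job
    # would normally let unittest set the process exit code instead.
    unittest.main(argv=["tdd-example"], exit=False)
```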

Q 11. Explain your approach to defect prevention.

My approach to defect prevention is proactive and multi-pronged. It focuses on identifying and addressing potential issues *before* they become actual defects. This is fundamentally different from a reactive approach that only addresses problems after they arise.

- Requirements Analysis: Thoroughly reviewing and clarifying requirements to identify ambiguities and inconsistencies early on. This often involves working with stakeholders to ensure a shared understanding of requirements.

- Design Reviews: Participating in design reviews to assess the architecture and design of the software, identifying potential weaknesses or vulnerabilities before implementation.

- Code Reviews: Conducting code reviews to identify coding errors and style violations. This also helps improve code quality and maintainability.

- Static Analysis: Using static analysis tools to automatically detect potential defects in the code without executing the code.

- Automated Testing: Implementing a comprehensive automated testing strategy to catch defects early in the development cycle.

- Training and Education: Educating developers on best practices in software development and testing.

The goal isn’t just to find defects; it’s to prevent them altogether. By fostering a culture of quality and empowering developers with the right tools and knowledge, we minimize the risk of defects reaching production.
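Static analysis inspects code without running it. As a toy example, the sketch below uses Python’s standard `ast` module to flag bare `except:` clauses, which silently swallow every error; in practice teams rely on established linters and analyzers rather than hand-written checks like this one.

```python
import ast
from typing import List

class BareExceptFinder(ast.NodeVisitor):
    """Collect line numbers of `except:` handlers that catch every exception."""

    def __init__(self) -> None:
        self.lines: List[int] = []

    def visit_ExceptHandler(self, node: ast.ExceptHandler) -> None:
        if node.type is None:      # `except:` with no exception type
            self.lines.append(node.lineno)
        self.generic_visit(node)   # keep walking nested code

def find_bare_excepts(source: str) -> List[int]:
    """Parse source text (without executing it) and return offending line numbers."""
    finder = BareExceptFinder()
    finder.visit(ast.parse(source))
    return finder.lines

sample = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None

def save(path, data):
    try:
        open(path, "w").write(data)
    except OSError:
        pass
'''

print("Bare except on lines:", find_bare_excepts(sample))
```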

Q 12. How do you measure the return on investment (ROI) of QA process improvements?

Measuring the ROI of QA process improvements requires a clear understanding of the costs and benefits associated with those improvements. It’s not just about the money saved; it’s also about increased efficiency and improved product quality.

- Cost Savings: Calculate the reduction in costs associated with defect fixing, rework, and customer support issues. For example, if the defect rate decreases by 20%, we can quantify the savings based on the cost of fixing each defect.

- Improved Efficiency: Measure improvements in testing time, development cycle time, and release frequency. Faster releases translate to quicker time to market and potential revenue gains.

- Increased Revenue: Assess the impact of improved product quality on customer satisfaction, retention, and ultimately revenue. Higher customer satisfaction translates to positive word-of-mouth and repeat business.

- Risk Mitigation: Quantify the reduction in risks associated with releasing defective software. This might involve calculating the potential financial loss due to reputational damage or legal issues.

I use metrics such as defect density, defect escape rate, and customer satisfaction scores to track the impact of QA process improvements. By tracking these metrics before and after implementing the improvements, and converting the changes into monetary terms where possible, we can estimate the ROI as (total benefits - total costs) / total costs and demonstrate the value of our initiatives.
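The cost-savings part of an ROI estimate can be sketched as follows, using the common formula ROI = (benefit - investment) / investment. Every figure is an assumption: a 20% drop in yearly defects, a flat average cost per defect, and a one-off investment in tooling and training. Benefits such as higher customer satisfaction would be added only where they can be credibly expressed in money.

```python
def annual_defect_cost(defects_per_year: int, cost_per_defect: float) -> float:
    """Yearly cost of finding, fixing, and retesting defects."""
    return defects_per_year * cost_per_defect

def roi(benefit: float, investment: float) -> float:
    """Simple ROI: (benefit - investment) / investment."""
    return (benefit - investment) / investment

# Hypothetical figures; every number here is an assumption for illustration.
COST_PER_DEFECT = 750.0  # average cost to fix and retest one defect
defects_before = 500     # defects per year before the improvement
defects_after = 400      # 20% fewer defects afterwards
investment = 50_000.0    # tooling, training, and engineering time

savings = (annual_defect_cost(defects_before, COST_PER_DEFECT)
           - annual_defect_cost(defects_after, COST_PER_DEFECT))
print(f"Annual savings: ${savings:,.0f}")
print(f"First-year ROI: {roi(savings, investment):.0%}")
```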

Q 13. How familiar are you with different testing methodologies (e.g., Waterfall, Agile)?

I am highly familiar with how testing fits into various development methodologies, including Waterfall and Agile. Each has its strengths and weaknesses, making it suitable for different contexts.

- Waterfall: This is a sequential model where each phase (requirements, design, implementation, testing, deployment) is completed before the next begins. Testing is typically performed at the end of the development lifecycle. This approach is suitable for projects with stable requirements and limited changes.

- Agile: This is an iterative and incremental approach where software is developed in short cycles (sprints). Testing is integrated throughout the development process. This approach is more flexible and adaptable to changing requirements. Within Agile, I have experience with various frameworks like Scrum and Kanban.

- Other Methodologies: I also have experience with other lifecycle models, such as the V-model, the spiral model, and prototyping.

My approach is to choose the methodology that best fits the specific project needs and context. For projects with evolving requirements and a need for rapid iteration, Agile is generally preferred. For projects with well-defined and stable requirements, a Waterfall approach might be more appropriate. Often, a hybrid approach combining elements from multiple methodologies is the most effective solution.

Q 14. What are the key performance indicators (KPIs) you would track in a QA process?

The key performance indicators (KPIs) I track in a QA process depend on the specific goals and context but generally include:

- Defect Density: The number of defects found per thousand lines of code (KLOC) or per unit of functionality. This helps measure the overall quality of the software.

- Defect Escape Rate: The number or percentage of defects that make it into production. A low defect escape rate indicates effective testing processes.

- Test Coverage: The percentage of the software that is tested. High test coverage ensures that most parts of the software are thoroughly checked.

- Test Execution Time: The time it takes to execute all tests. This helps assess the efficiency of the testing process.

- Mean Time To Failure (MTTF): The average time until a software system fails. This provides insights into system reliability.

- Customer Satisfaction: This measures customer happiness with the software’s quality. It often involves collecting feedback through surveys or reviews.

- Cycle Time: The time taken to complete a testing cycle. This indicates process efficiency.

Regular monitoring of these KPIs allows me to identify trends, areas for improvement, and the overall effectiveness of the QA process. I use dashboards and reports to visualize these KPIs and make them easily accessible to stakeholders. By continually analyzing these metrics, we can identify and address bottlenecks, optimize our processes, and ensure high-quality software delivery.
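Trend monitoring can start with a simple release-over-release comparison. The sketch below assumes KPI snapshots are stored as dictionaries, records for each KPI whether higher or lower is better, and flags any KPI that worsened by more than a 5% relative change; the KPI names, tolerance, and values are all hypothetical.

```python
from typing import Dict, List

# Direction in which each KPI improves; an assumption for this sketch.
HIGHER_IS_BETTER = {
    "test_coverage_pct": True,
    "escape_rate_pct": False,
    "cycle_time_days": False,
    "mttf_hours": True,
}

def regressions(history: List[Dict[str, float]], tolerance: float = 0.05) -> List[str]:
    """List KPIs that got worse by more than `tolerance` (relative change)
    between the previous release and the latest one."""
    prev, latest = history[-2], history[-1]
    worse = []
    for kpi, higher_is_better in HIGHER_IS_BETTER.items():
        change = (latest[kpi] - prev[kpi]) / prev[kpi]
        got_worse = change < -tolerance if higher_is_better else change > tolerance
        if got_worse:
            worse.append(kpi)
    return worse

# Hypothetical KPI snapshots for two consecutive releases.
history = [
    {"test_coverage_pct": 72, "escape_rate_pct": 8.0, "cycle_time_days": 5.0, "mttf_hours": 300},
    {"test_coverage_pct": 75, "escape_rate_pct": 6.0, "cycle_time_days": 6.0, "mttf_hours": 310},
]
print("KPIs that regressed:", regressions(history))
```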

Q 15. Describe your experience with test automation frameworks.

My experience with test automation frameworks is extensive, encompassing both designing and implementing them. I’ve worked with various frameworks, including Selenium for web applications, Appium for mobile apps, and Cypress for its speed and ease of debugging. The choice of framework depends heavily on the project’s needs and the technologies used. For example, Selenium WebDriver supports cross-browser testing, which is crucial for ensuring wide compatibility.

In one project, we used Selenium with Java and TestNG to create a robust and maintainable automated regression suite, significantly reducing testing time and improving accuracy. We implemented the Page Object Model (POM) to improve code reusability and maintainability: each page of the application got its own class encapsulating that page’s element locators and the interactions available on it, so a UI change usually meant updating only one class.

In another project, focused on speed and simplicity for a rapidly changing application, we opted for Cypress. Its built-in debugging features and fast test execution made it a good fit for our rapid development cycle.

I’m also proficient in using API testing frameworks like RestAssured and Postman, crucial for testing the backend services. I understand the importance of selecting appropriate tools and strategies to integrate seamlessly with continuous integration/continuous delivery (CI/CD) pipelines.
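The Page Object Model idea can be shown without a browser. Real Selenium code would wrap a WebDriver instance and its locators; to keep this sketch self-contained and runnable, it instead uses a hypothetical minimal `Driver` interface (`type_into`, `click`, and `text_of` are invented names, not Selenium’s API) and an in-memory fake. The point is the structure: tests call page-level methods such as `log_in`, and only the page class knows the locators.

```python
from typing import Dict, Protocol

class Driver(Protocol):
    """Hypothetical minimal browser interface (not Selenium's real API)."""
    def type_into(self, locator: str, text: str) -> None: ...
    def click(self, locator: str) -> None: ...
    def text_of(self, locator: str) -> str: ...

class LoginPage:
    """Page object: one class per page, owning its locators and actions."""
    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "button[type=submit]"
    ERROR = ".error-message"

    def __init__(self, driver: Driver) -> None:
        self.driver = driver

    def log_in(self, user: str, password: str) -> None:
        self.driver.type_into(self.USERNAME, user)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)

    def error_text(self) -> str:
        return self.driver.text_of(self.ERROR)

class FakeDriver:
    """In-memory stand-in so the example runs without a browser."""
    def __init__(self) -> None:
        self.fields: Dict[str, str] = {}
        self.texts: Dict[str, str] = {}

    def type_into(self, locator: str, text: str) -> None:
        self.fields[locator] = text

    def click(self, locator: str) -> None:
        # Simulate the application rejecting a wrong password on submit.
        if self.fields.get(LoginPage.PASSWORD) != "s3cret":
            self.texts[LoginPage.ERROR] = "Invalid credentials"

    def text_of(self, locator: str) -> str:
        return self.texts.get(locator, "")

# A test talks to the page object, never to raw locators.
page = LoginPage(FakeDriver())
page.log_in("alice", "wrong")
print(page.error_text())
```

If the login form’s markup changes, only the locator constants in `LoginPage` need updating; every test that calls `log_in` stays the same.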

Q 16. How do you handle situations where QA processes are not followed?

When QA processes aren’t followed, my approach is proactive and collaborative. It begins with understanding the root cause – is it a lack of awareness, insufficient training, time constraints, or perhaps a breakdown in communication? I would first document the deviations, and then engage in a constructive discussion with the team. I believe in fostering a culture of shared responsibility. Therefore, instead of pointing fingers, I focus on solutions. This could involve offering training on proper QA methodologies, suggesting process improvements, and advocating for more realistic timelines.

For instance, if I observe a lack of unit testing within a development team, I wouldn’t just criticize; I’d show the benefits of unit testing through examples, demonstrating how it reduces bugs and improves overall code quality. I’d also propose workshops to explain best practices and how to integrate it smoothly within their existing workflow. In other cases, a clear visual representation of the impact of skipping a step, such as a simple chart showing the increase in bugs related to a missed testing stage, can be really effective in communicating the necessity of adhering to the process.

Ultimately, the goal is to build a stronger QA culture where process adherence is viewed as crucial, not burdensome, towards delivering high-quality software.

Q 17. How do you stay up-to-date with the latest QA methodologies and technologies?

Keeping up with the ever-evolving QA landscape is paramount. I actively engage in several strategies to stay current. I regularly read industry publications like Testing Trapeze, follow influential QA professionals on platforms like LinkedIn and Twitter, and participate in online communities like Stack Overflow and Reddit’s r/testing. Attending webinars and conferences, such as those hosted by SeleniumConf or similar organizations, provides valuable insights into the latest tools and techniques.

Beyond traditional methods, I invest time in hands-on learning. I experiment with new technologies, such as AI-powered testing tools and emerging frameworks. For instance, I recently explored the use of machine learning in test case generation, which has the potential to drastically streamline the testing process. Experimenting ensures I not only understand the theory but can also practically implement and evaluate these advancements in real-world scenarios. Continual learning is fundamental to remaining a relevant and valuable QA professional.

Q 18. Describe your experience with different types of testing (e.g., functional, performance, security).

My testing experience spans across diverse areas, including functional, performance, security, and usability testing. Functional testing, encompassing unit, integration, system, and regression testing, forms the foundation of my skillset. I’m proficient in creating effective test cases based on requirements and user stories. For instance, I’ve used various techniques like boundary value analysis and equivalence partitioning to ensure comprehensive test coverage during functional tests.
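Boundary value analysis and equivalence partitioning are easy to show for a numeric input. The sketch assumes a hypothetical requirement that an age field accepts integers from 18 to 65 inclusive: boundary values sit just below, on, and just above each limit, and one representative value is picked from each partition.

```python
from typing import Dict, List

def boundary_values(low: int, high: int) -> List[int]:
    """Boundary value analysis for an inclusive integer range:
    just below, on, and just above each boundary."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]

def equivalence_partitions(low: int, high: int) -> Dict[str, int]:
    """One representative per partition (the offsets of 10 are arbitrary)."""
    return {"invalid_below": low - 10, "valid": (low + high) // 2, "invalid_above": high + 10}

def is_valid_age(age: int) -> bool:
    """Hypothetical function under test: accepts ages 18 to 65 inclusive."""
    return 18 <= age <= 65

for value in boundary_values(18, 65):
    print(value, is_valid_age(value))
print(equivalence_partitions(18, 65))
```

Running the loop shows 17 and 66 rejected and 18, 19, 64, and 65 accepted, which is exactly where off-by-one errors (for example, writing `<` instead of `<=`) would surface.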

Performance testing is equally crucial. I have experience using tools like JMeter and LoadRunner to simulate user load and identify performance bottlenecks. In one project, JMeter load tests revealed a database query that was slowing down the application under heavy load; optimizing this query significantly improved the application’s responsiveness. For security testing, I employ techniques like penetration testing and vulnerability assessments, identifying and reporting potential security flaws.

Furthermore, I’m also experienced with usability testing, where I use methods like heuristic evaluation and user feedback to improve the user experience. A well-rounded understanding of these testing types allows for a comprehensive approach to quality assurance, leading to robust and reliable software releases.

Q 19. How do you balance the need for thorough testing with the need to meet deadlines?

Balancing thorough testing with tight deadlines necessitates prioritizing and smart resource allocation. It’s not about compromising quality but about strategically focusing efforts on the most critical areas. This involves risk assessment; we identify features with the highest potential for impact and prioritize testing for those first. We might use risk matrices to rank these and allocate testing resources accordingly. Techniques such as exploratory testing, where testers freely investigate the software, can be combined with automated tests focused on regression areas to quickly provide feedback.

Furthermore, effective communication is key. Openly communicating potential risks and proposing realistic timelines based on the testing scope is vital. This might involve negotiating with stakeholders to adjust expectations or re-prioritizing features if necessary. Agile methodologies, with their iterative development cycles and feedback loops, are incredibly helpful in managing this balance. Regular communication with the development team allows for early detection of issues, preventing last-minute surprises and allowing for iterative improvements. It’s a collaborative effort to deliver high-quality software within the given constraints.

Q 20. Explain your experience with creating and maintaining QA documentation.

I’m experienced in creating and maintaining a comprehensive suite of QA documentation. This includes test plans, which detail the overall testing strategy; test cases, which outline specific test steps; and test reports, which summarize the results of testing efforts. I use tools like TestRail and Jira to manage test cases and track progress. I ensure that all documentation is clear, concise, and easy to understand by both technical and non-technical stakeholders.

Maintaining the documentation is just as important. As the software evolves, I update the test cases to reflect the changes, documenting new functionality and revising existing tests so they remain relevant and effective. Keeping the documentation current makes it a single source of truth for the testing process and software functionality, which improves collaboration and lets everyone stay informed on the project’s status.

Q 21. How do you collaborate effectively with development teams?

Effective collaboration with development teams is essential for successful software development. I foster a collaborative relationship based on open communication, mutual respect, and shared goals. I believe in proactively engaging with developers from the early stages of the development process, reviewing requirements, participating in design discussions, and providing early feedback. This early involvement helps to identify potential issues before they become major problems.

When bugs are found, I communicate them clearly and concisely, providing detailed steps to reproduce and any relevant screenshots or logs. I avoid blaming and focus on providing constructive feedback to help the developers understand and resolve the issue. I utilize tools like Jira or similar bug tracking systems to manage and track bugs effectively. Regular meetings, such as daily stand-ups or sprint reviews, keep everyone informed of progress and address concerns quickly. This collaborative approach fosters a positive working relationship and improves the overall quality of the software.

Q 22. What are some common challenges in QA process improvement, and how have you addressed them?

Common challenges in QA process improvement often revolve around resource constraints, evolving project requirements, communication breakdowns, and a lack of defined metrics. For example, limited testing time can force compromises on thoroughness, while unclear requirements lead to testing the wrong things. I’ve addressed these with several strategies. First, I champion risk-based testing, prioritizing tests by the likelihood and impact of potential failures so that resources go to the most critical areas. Second, I foster proactive communication between development and QA teams; regular status meetings, collaborative documentation, and shared dashboards keep everyone on the same page. Third, I emphasize defining and consistently tracking key metrics, such as defect density, test coverage, and cycle time. This data-driven approach lets us identify bottlenecks, measure improvement, and justify resource allocation. Finally, I advocate for automation wherever it pays off, reducing manual effort and freeing testers for higher-value work like exploratory testing.
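As a rough illustration of risk-based prioritization, the sketch below scores each area on hypothetical 1 to 5 scales for likelihood and impact and uses their product as the risk score. That product is one common convention, not necessarily the scoring used in the answer above, and the class and area names are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class RiskArea:
    """A feature or module under test, scored on hypothetical 1-5 scales."""
    name: str
    likelihood: int  # how likely a failure is (1 = rare, 5 = frequent)
    impact: int      # how severe a failure would be (1 = cosmetic, 5 = critical)

    @property
    def risk_score(self) -> int:
        # One common convention: risk exposure = likelihood x impact
        return self.likelihood * self.impact


def prioritize(areas: list[RiskArea]) -> list[RiskArea]:
    """Return areas ordered from highest to lowest risk score."""
    return sorted(areas, key=lambda a: a.risk_score, reverse=True)


if __name__ == "__main__":
    areas = [
        RiskArea("payment checkout", likelihood=3, impact=5),
        RiskArea("profile avatar upload", likelihood=4, impact=1),
        RiskArea("login", likelihood=2, impact=5),
        RiskArea("report export", likelihood=3, impact=3),
    ]
    # Highest-risk areas print first and would get the most testing effort
    for area in prioritize(areas):
        print(f"{area.risk_score:>2}  {area.name}")
```

With these sample scores, checkout (15) and login (10) come before report export (9) and avatar upload (4), which is where the testing effort would be concentrated.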

Q 23. Describe your experience with implementing a quality management system (QMS).

I have extensive experience implementing Quality Management Systems (QMS), most notably systems based on ISO 9001. In one project, I led the implementation of a QMS for a medical device software company. This involved defining processes for requirements management, design verification and validation, risk management, and change control. We mapped these processes to ISO 9001 clauses, documented them thoroughly, and trained all relevant personnel. A critical part was establishing a robust internal audit program to ensure continuous compliance and identify areas for improvement. We used a document control system to manage revisions and approvals, ensuring version control and traceability. The result was a significant reduction in non-conformances, improved product quality, and increased customer satisfaction. We also achieved ISO 9001 certification, demonstrating our commitment to quality.

Q 24. What is your experience with using data analytics to improve QA processes?

Data analytics plays a crucial role in enhancing QA processes. I regularly leverage data analysis to identify trends in defect types, severity, and location within the software. For instance, by analyzing historical defect data, we might discover that a specific module consistently produces more defects than others. This allows us to allocate more resources to testing that module or investigate potential underlying causes in the development process. I use tools such as SQL, Excel, and specialized test management platforms to analyze test results, code coverage reports, and other relevant data. Visualizations like charts and dashboards make it easier to identify patterns and communicate findings to stakeholders. This data-driven approach helps us optimize testing strategies, reduce testing time, and ultimately improve software quality.
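To make the "one module produces more defects than others" analysis concrete, here is a minimal Pareto-style sketch. It assumes defects have already been exported (for example from SQL or a test management tool) into a list of dicts with a hypothetical `module` field, and it uses the conventional 80% cutoff to pick out the "vital few" modules.

```python
from collections import Counter


def pareto_modules(defects: list[dict], threshold: float = 0.8) -> list[tuple[str, int, float]]:
    """Rank modules by defect count and return the smallest leading group
    whose cumulative share of all defects reaches `threshold`."""
    counts = Counter(d["module"] for d in defects)
    total = sum(counts.values())
    result = []
    cumulative = 0
    for module, count in counts.most_common():
        cumulative += count
        share = cumulative / total
        result.append((module, count, round(share, 3)))
        if share >= threshold:
            break  # the leading modules now account for the target share
    return result


if __name__ == "__main__":
    # Hypothetical export: one dict per defect
    defects = (
        [{"module": "billing"}] * 5
        + [{"module": "auth"}] * 3
        + [{"module": "search"}, {"module": "ui"}]
    )
    for module, count, cumulative_share in pareto_modules(defects):
        print(module, count, cumulative_share)
```

In this sample, billing and auth together account for 80% of defects, so they would be the first candidates for extra testing or a closer look at the development practices behind them.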

Q 25. How would you address a situation where a critical defect is discovered late in the software development lifecycle?

Discovering a critical defect late in the software development lifecycle is a serious concern. The response must be swift and decisive. First, we’d conduct a thorough impact assessment to determine the severity and scope of the problem. Then, we’d engage stakeholders – development, product management, and potentially customers – to assess the risk and potential mitigation strategies. Options include a hotfix, a prioritized fix in the next release, or even a workaround for affected users. A critical step is a root cause analysis to understand why the defect was missed earlier. This often reveals gaps in the testing process, requirements documentation, or developer practices. Finally, we’d implement corrective actions to prevent similar issues in the future, which might include improved test coverage, enhanced code reviews, or changes to the development process itself. Transparency with stakeholders is key throughout this process.

Q 26. Describe your experience with different types of testing tools.

My experience encompasses a wide range of testing tools. I’ve worked with test management tools like Jira and TestRail for organizing test cases, tracking defects, and generating reports. For automation, I’ve used Selenium for web application testing and Appium for mobile testing. I’m also familiar with performance testing tools like JMeter and LoadRunner for simulating realistic user loads and identifying performance bottlenecks. In addition, I’ve utilized static analysis tools to detect code vulnerabilities early in the development process. The choice of tool depends heavily on the specific project requirements and the technologies being used. The key is to select tools that integrate well with the existing development environment and streamline the testing process.

Q 27. How do you manage the trade-off between testing speed and testing thoroughness?

Balancing testing speed and thoroughness is a constant challenge, and the key is to prioritize. Risk-based testing helps here: we focus on the critical functionality and the areas most prone to defects. Automation speeds up repetitive checks, such as regression suites, without sacrificing coverage. Test case prioritization, where the most important tests run first, also helps. Exploratory testing, while less structured, lets testers find defects that scripted tests might miss. It’s important to have clear metrics that show the trade-off; for example, you could track the number of defects found against the time spent testing. This data supports informed decisions about resource allocation and testing strategy.
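One way to picture test case prioritization under a fixed time box is the greedy sketch below. The priority scale, the duration estimates, and the `PlannedTest` name are all hypothetical, and the defects-per-hour figure is just one simple way to track defects found against time spent.

```python
from dataclasses import dataclass


@dataclass
class PlannedTest:
    name: str
    priority: int   # hypothetical scale: higher = more important
    minutes: float  # estimated execution time


def select_for_budget(tests: list[PlannedTest], budget_minutes: float) -> list[PlannedTest]:
    """Greedy selection: consider the highest-priority tests first and skip
    any test that would overrun the remaining time budget."""
    selected, used = [], 0.0
    for test in sorted(tests, key=lambda t: t.priority, reverse=True):
        if used + test.minutes <= budget_minutes:
            selected.append(test)
            used += test.minutes
    return selected


def defects_per_hour(defects_found: int, minutes_spent: float) -> float:
    """Simple yield metric for comparing testing sessions."""
    return defects_found / (minutes_spent / 60)


if __name__ == "__main__":
    tests = [
        PlannedTest("checkout smoke", 5, 10),
        PlannedTest("full regression", 4, 120),
        PlannedTest("login edge cases", 3, 20),
        PlannedTest("ui polish", 1, 15),
    ]
    plan = select_for_budget(tests, budget_minutes=45)
    print([t.name for t in plan])
```

The greedy pass is easy to explain to stakeholders, but it does not guarantee the highest total priority within the budget (that is a knapsack problem); here the long regression run is skipped because it cannot fit in 45 minutes.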

Q 28. What strategies do you use to reduce QA cycle time?

Reducing QA cycle time requires a multifaceted approach. Automating repetitive tasks, such as regression testing, is paramount. Parallel testing, running multiple tests simultaneously, can significantly speed things up. Continuous integration/continuous delivery (CI/CD) pipelines build testing into the development process, shortening feedback loops. Improved test design and prioritization, as mentioned before, help focus efforts. Effective communication and collaboration between development and QA teams eliminate delays caused by misunderstandings. Finally, shift-left testing, where testing begins earlier in the development cycle, catches defects sooner and reduces the time needed for testing later on.
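The effect of parallel testing on wall-clock time can be shown with a small sketch using Python's standard `concurrent.futures`. The `run_check` function is a hypothetical stand-in that simply sleeps to mimic an I/O-bound UI or API test; the approach assumes the tests are independent and share no state. Real suites usually rely on their test runner's or grid's own parallel mode rather than hand-rolled threads.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_check(name: str, seconds: float) -> tuple[str, bool]:
    """Hypothetical stand-in for an I/O-bound test that mostly waits."""
    time.sleep(seconds)
    return name, True


def run_parallel(checks: list[tuple[str, float]], workers: int = 4) -> dict[str, bool]:
    """Run independent checks concurrently and collect pass/fail by name."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(run_check, name, secs) for name, secs in checks]
        return dict(f.result() for f in futures)


if __name__ == "__main__":
    checks = [("login", 0.5), ("search", 0.5), ("checkout", 0.5), ("profile", 0.5)]
    start = time.perf_counter()
    results = run_parallel(checks)
    elapsed = time.perf_counter() - start
    # With 4 workers this takes roughly 0.5 s instead of about 2 s sequentially
    print(results, f"{elapsed:.2f}s")
```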

Key Topics to Learn for Quality Assurance Process Improvement Interview

- Understanding Quality Management Systems (QMS): Learn the principles of ISO 9001 or other relevant standards and how they impact QA processes. Explore practical applications like implementing and auditing QMS within different organizational structures.

- Process Mapping and Analysis: Master techniques like flowcharts, swim lane diagrams, and value stream mapping to identify bottlenecks and areas for improvement in QA workflows. Practice applying these techniques to real-world scenarios, focusing on data-driven decision making.

- Root Cause Analysis (RCA) Techniques: Become proficient in methods such as the 5 Whys, Fishbone diagrams, and Pareto analysis to pinpoint the underlying causes of QA defects and implement effective corrective actions. Develop your ability to present RCA findings clearly and persuasively.

- Metrics and KPIs in QA: Understand key performance indicators (KPIs) relevant to QA, such as defect density, defect escape rate, and test coverage. Learn how to select appropriate metrics, track progress, and use data to drive continuous improvement initiatives.

- Software Testing Methodologies: Familiarize yourself with how testing fits into development methodologies like Agile, Waterfall, and DevOps, and understand how QA processes adapt within each framework. Be ready to discuss the advantages and disadvantages of each in relation to process improvement.

- Automation in QA: Explore the role of automation in enhancing efficiency and effectiveness in QA processes. Discuss the benefits and challenges of implementing test automation, including selecting appropriate tools and managing automation frameworks.

- Risk Management in QA: Understand how to identify, assess, and mitigate risks related to software quality and process effectiveness. Practice developing risk mitigation strategies and incorporating them into QA plans.

- Continuous Improvement Models: Learn about frameworks like Lean, Six Sigma, and Kaizen, and how these can be applied to optimize QA processes. Be able to discuss practical examples of implementing these methodologies for tangible improvements.
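The KPIs listed under "Metrics and KPIs in QA" have simple formulas that are worth being able to state in an interview. The sketch below uses common definitions: defect density per thousand lines of code (KLOC), escape rate as the share of known defects found in production, and coverage measured as requirement coverage. Teams define these slightly differently, and code coverage is an equally common reading of "test coverage", so treat these as one reasonable set of definitions.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / (lines_of_code / 1000)


def defect_escape_rate(found_in_production: int, found_before_release: int) -> float:
    """Share of all known defects that escaped to production."""
    total = found_in_production + found_before_release
    return found_in_production / total if total else 0.0


def requirement_coverage(covered: set[str], all_requirements: set[str]) -> float:
    """Fraction of requirements traced to at least one test."""
    if not all_requirements:
        return 0.0
    return len(covered & all_requirements) / len(all_requirements)


if __name__ == "__main__":
    print(defect_density(30, 15_000))          # 2.0 defects per KLOC
    print(defect_escape_rate(5, 45))           # 0.1, i.e. 10% escaped
    print(requirement_coverage({"R1", "R2", "R3"}, {"R1", "R2", "R3", "R4"}))  # 0.75
```

Tracking these over successive releases, rather than as one-off snapshots, is what turns them into inputs for continuous improvement.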

Next Steps

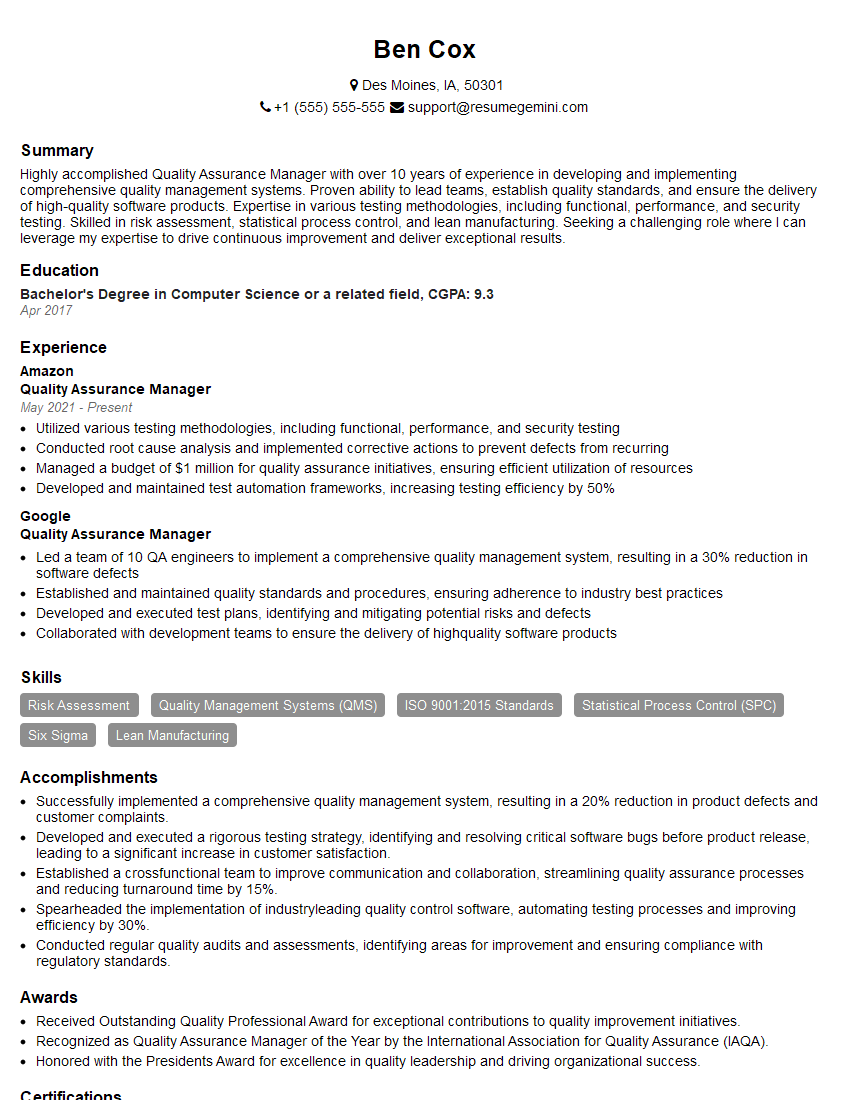

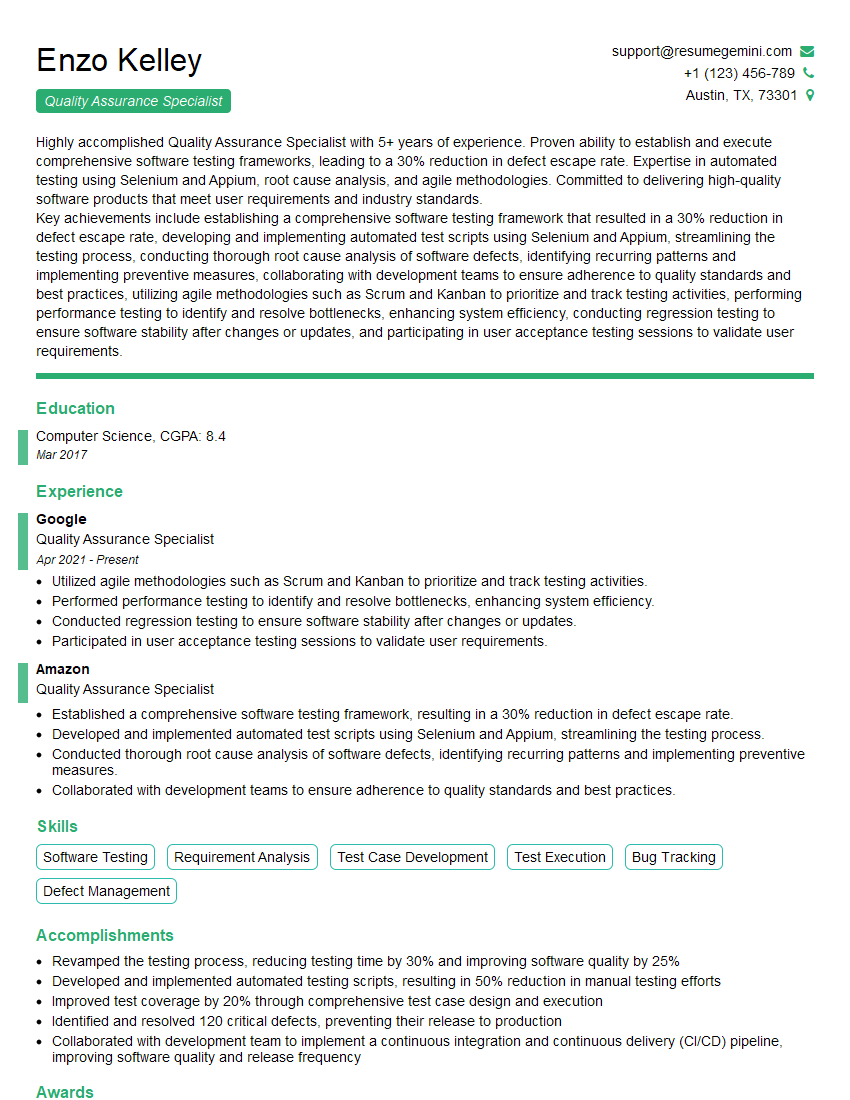

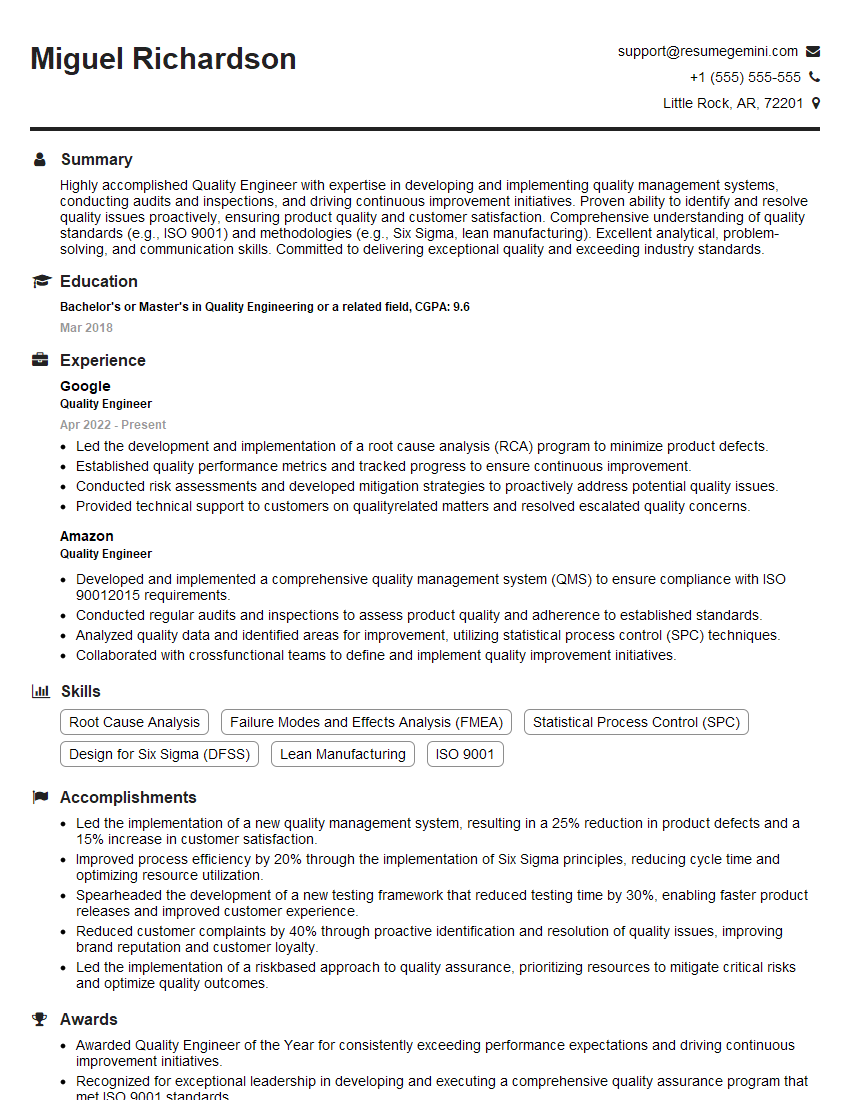

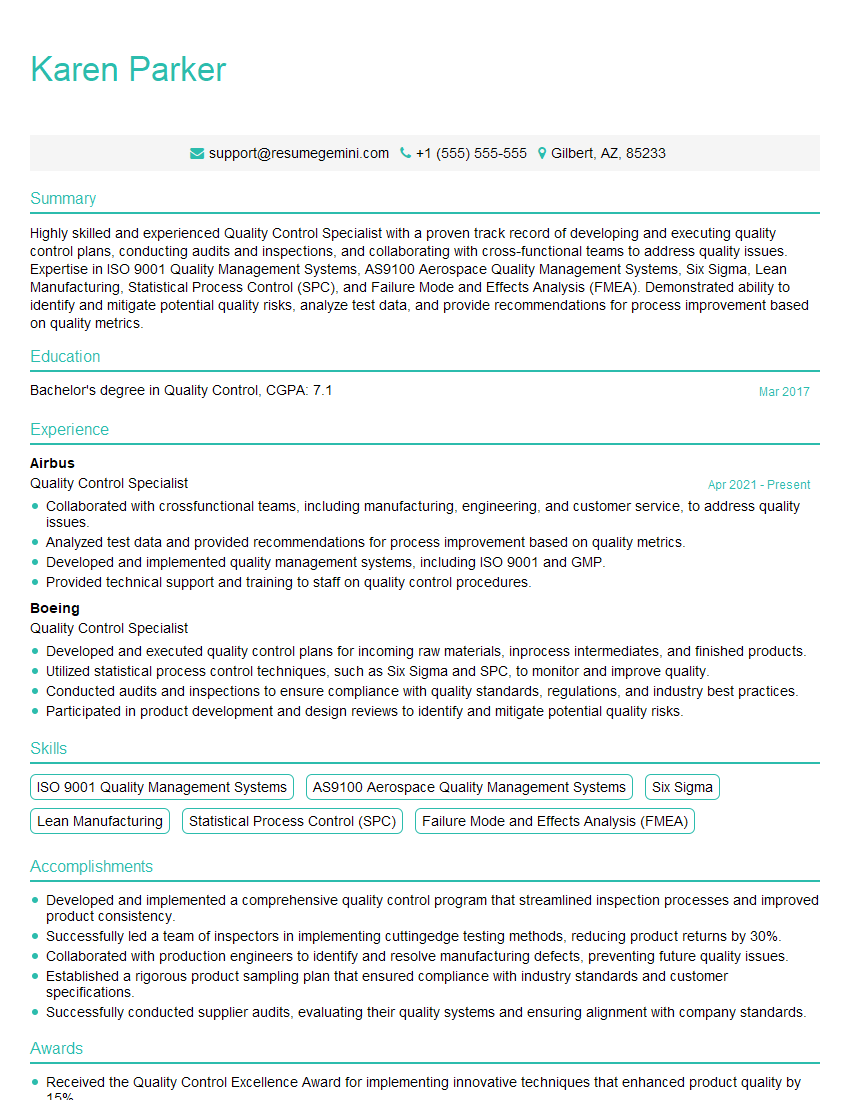

Mastering Quality Assurance Process Improvement is crucial for career advancement, opening doors to leadership roles and higher earning potential. A well-crafted, ATS-friendly resume is essential for showcasing your skills and experience to potential employers. To significantly enhance your job prospects, leverage ResumeGemini, a trusted resource for building professional resumes. ResumeGemini provides examples of resumes tailored to Quality Assurance Process Improvement, helping you present your qualifications effectively and stand out from the competition.

