Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Quality Assurance (QA) and Regulatory Compliance interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Quality Assurance (QA) and Regulatory Compliance Interviews

Q 1. Explain the difference between QA and QC.

While often used interchangeably, QA (Quality Assurance) and QC (Quality Control) represent distinct but complementary approaches to quality management. QA is a proactive process focusing on preventing defects, while QC is a reactive process focused on detecting defects.

Think of building a house: QA is like meticulously reviewing the blueprints, ensuring the foundation is strong, and using only high-quality materials before construction begins. QC, on the other hand, is like inspecting the walls for cracks, checking the plumbing for leaks, and ensuring the paint job is even after construction. Both are crucial for building a high-quality house, but they operate at different stages and with different goals.

In software development, QA involves establishing processes, procedures, and standards to ensure quality throughout the software development lifecycle (SDLC). This includes defining test strategies, selecting appropriate testing methodologies, and ensuring the team follows best practices. QC, conversely, focuses on the actual testing and inspection of the software to identify and report defects. This includes executing test cases, documenting defects, and verifying fixes.

Q 2. Describe your experience with software testing methodologies (e.g., Agile, Waterfall).

I have extensive experience working with both Agile and Waterfall methodologies. In Agile environments, my focus is on continuous testing and integration, collaborating closely with developers in short sprints. I’ve used techniques like Test-Driven Development (TDD) and Behavior-Driven Development (BDD), writing tests before the code is written, ensuring alignment with user stories and acceptance criteria. I use daily stand-ups and sprint reviews to communicate progress and identify potential roadblocks.

In Waterfall projects, my approach involves more formal testing phases. Requirements are thoroughly documented upfront, and a comprehensive test plan is developed, detailing the scope, objectives, and timelines for various testing stages. I’ve effectively managed test execution, defect tracking, and reporting within this more structured framework, ensuring rigorous testing coverage before each milestone.

For example, in a recent Agile project, I implemented a BDD framework using Cucumber and Selenium, resulting in a 30% reduction in defects discovered in the production environment compared to previous projects using a less integrated approach. In a larger Waterfall project, I managed a team of testers to ensure all testing phases were completed thoroughly and on schedule, resulting in a successful product launch.

Q 3. What are your preferred testing tools and why?

My preferred testing tools depend heavily on the project’s context, but some of my favorites include:

- Selenium: For automating web application testing across different browsers. Its versatility and open-source nature make it a powerful and cost-effective solution.

- JUnit/TestNG: For unit testing in Java, offering robust frameworks for creating and running unit tests efficiently.

- Postman: For API testing, allowing for easy creation and management of API requests, validation of responses, and integration with CI/CD pipelines.

- Jira and TestRail: For defect tracking and test case management, offering streamlined workflows for reporting and managing defects throughout the SDLC.

The choice of tools depends on the nature of the application (web, mobile, desktop), the technology stack, and the overall project requirements. However, my preference always leans toward tools that facilitate collaboration, automation, and comprehensive reporting.

Q 4. How do you handle conflicting priorities during a project?

Conflicting priorities are inevitable in project management. My approach involves a structured process to resolve them:

- Prioritization Matrix: I collaborate with the project manager and stakeholders to assess the urgency and importance of each task, utilizing a prioritization matrix (e.g., MoSCoW method – Must have, Should have, Could have, Won’t have).

- Risk Assessment: For each task, I assess the potential risk of not completing it on time or at all. This helps to objectively weigh the importance of various priorities.

- Communication: Transparent communication with all stakeholders is vital. I clearly explain the trade-offs of prioritizing one task over another, ensuring everyone understands the rationale behind the decisions.

- Negotiation and Compromise: Sometimes, compromises are necessary. I aim to find mutually acceptable solutions that balance different priorities and stakeholder expectations.

- Documentation: All decisions, rationale, and trade-offs are documented to maintain transparency and accountability.

For instance, in a project where a critical security vulnerability was identified, I advocated for prioritizing its resolution even though it meant delaying less critical functionalities. This transparent communication ensured buy-in from all stakeholders, despite the temporary shift in focus.

Q 5. Explain your experience with risk assessment and mitigation in QA.

Risk assessment and mitigation are integral to my QA process. I use a systematic approach to identify, analyze, and mitigate potential risks throughout the SDLC:

- Risk Identification: Through brainstorming sessions, requirements reviews, and past experience, I identify potential risks, such as technical issues, scheduling conflicts, or resource limitations.

- Risk Analysis: I assess the likelihood and impact of each risk, prioritizing those with high likelihood and significant impact.

- Risk Mitigation: I develop strategies to reduce or eliminate identified risks. This could involve adding buffer time to the schedule, providing additional resources, or implementing contingency plans.

- Monitoring and Review: I regularly monitor the effectiveness of mitigation strategies and adjust them as necessary. This iterative process ensures that risks remain under control throughout the project.

For example, in a recent project, I identified a high risk of performance issues due to high user load during peak times. We mitigated this risk by implementing performance testing early in the development cycle, identifying and addressing performance bottlenecks proactively, thus ensuring system stability under expected load.
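
To make the risk analysis step concrete, here is a minimal sketch that scores each risk as likelihood times impact and sorts the results. The 1 to 5 scales, the `Risk` class, and the sample risks are assumptions made up for illustration; real teams often use their own scales and weighting.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple exposure score: likelihood multiplied by impact.
        return self.likelihood * self.impact


def prioritize(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Hypothetical risks, for illustration only.
risks = [
    Risk("Key tester unavailable", likelihood=2, impact=3),
    Risk("Performance degradation under peak load", likelihood=4, impact=5),
    Risk("Third-party API changes", likelihood=3, impact=4),
]

for risk in prioritize(risks):
    print(f"{risk.score:>2}  {risk.name}")
```

The highest-scoring items are the ones to mitigate first, for example by scheduling performance tests early.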

Q 6. Describe your experience with different testing types (unit, integration, system, UAT).

My experience encompasses all levels of testing:

- Unit Testing: I have extensive experience in writing and executing unit tests to validate individual components of the software. This ensures that each module functions correctly in isolation.

- Integration Testing: I verify the interactions between different modules or components, ensuring they work seamlessly together. This involves testing interfaces and data flows between units.

- System Testing: I test the entire system as a whole, verifying that it meets all requirements and functions correctly in its intended environment. This often includes functional, performance, and security testing.

- User Acceptance Testing (UAT): I facilitate UAT by working closely with end-users to validate that the system meets their needs and expectations. This crucial step ensures that the software is usable and acceptable to its intended audience.

In one project, a systematic approach using all these testing types revealed a critical integration issue early in the development cycle, preventing it from becoming a significant problem later. This saved considerable time and resources.
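
The difference between the unit and integration levels can be sketched with Python's built-in `unittest` module. The `apply_discount` function and `Cart` class below are hypothetical stand-ins for real application code, not taken from any actual project.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: apply a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class Cart:
    """Hypothetical component that depends on apply_discount."""

    def __init__(self):
        self.items = []

    def add(self, price: float, discount_percent: float = 0.0) -> None:
        self.items.append(apply_discount(price, discount_percent))

    def total(self) -> float:
        return round(sum(self.items), 2)


class DiscountUnitTests(unittest.TestCase):
    # Unit level: one function, tested in isolation.
    def test_discount_is_applied(self):
        self.assertEqual(apply_discount(100.0, 25), 75.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


class CartIntegrationTests(unittest.TestCase):
    # Integration level: Cart and apply_discount working together.
    def test_total_reflects_discounted_items(self):
        cart = Cart()
        cart.add(100.0, discount_percent=25)
        cart.add(20.0)
        self.assertEqual(cart.total(), 95.0)
```

Saved as a module, these tests can be run with `python -m unittest`. System testing and UAT sit above this level and usually exercise a deployed build rather than imported code.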

Q 7. How do you ensure traceability in a testing process?

Traceability in testing is paramount for ensuring quality and regulatory compliance. It allows for easy tracking of requirements, test cases, and defects. I achieve this through several mechanisms:

- Requirements Traceability Matrix (RTM): I use an RTM to link requirements to test cases, ensuring that all requirements are adequately covered by tests.

- Test Case Management Tools: Tools like TestRail allow for linking test cases to requirements and defects, facilitating efficient tracking and reporting.

- Clear Naming Conventions: I employ clear and consistent naming conventions for test cases and defects, making it easier to establish clear linkages.

- Version Control: Using version control systems like Git ensures that all changes to test cases and code are tracked, providing complete traceability.

- Defect Tracking System: A robust defect tracking system (like Jira) is vital to track the lifecycle of each defect, from reporting to resolution and closure. Linking defects to specific requirements and test cases provides complete traceability.

This detailed approach allows for easy identification of the root cause of defects and ensures that all requirements are verified through testing, significantly reducing the risk of missed defects and improving overall product quality.
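
As a rough illustration of what an RTM does, the sketch below links requirements to test cases and reports any requirement with no coverage. The requirement and test case IDs are invented for the example; in practice this data would typically come from a tool such as TestRail or Jira.

```python
# Hypothetical requirement and test case IDs, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
test_cases = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-1", "REQ-2"],
}


def build_rtm(requirements, test_cases):
    """Map each requirement to the test cases that cover it."""
    rtm = {req: [] for req in requirements}
    for tc_id, covered in test_cases.items():
        for req in covered:
            # setdefault also keeps links to requirements missing from the list.
            rtm.setdefault(req, []).append(tc_id)
    return rtm


def uncovered(rtm):
    """Requirements that no test case references."""
    return [req for req, tcs in rtm.items() if not tcs]


rtm = build_rtm(requirements, test_cases)
print(rtm)
print("Uncovered:", uncovered(rtm))
```

An empty "uncovered" list is the signal that every requirement has at least one linked test.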

Q 8. How do you handle defects and bug reporting?

Defect and bug reporting is a cornerstone of effective QA. My approach is multifaceted and focuses on clear communication, thorough documentation, and efficient tracking. It begins with meticulous reproduction of the issue. I document the steps needed to consistently reproduce the bug, including the environment (OS, browser, device), input data, and expected versus actual results. This detailed description is crucial for developers to quickly understand and resolve the issue.

I utilize a bug tracking system (e.g., Jira, Bugzilla) to log each defect. My reports include a concise title summarizing the issue, a detailed description, severity level (critical, major, minor, trivial), priority level (urgent, high, medium, low), and screenshots or screen recordings as evidence. I also assign the bug to the appropriate development team and monitor its progress through the resolution cycle. After a fix is implemented, I perform regression testing to ensure that the fix didn’t introduce new problems. Finally, I close the bug report after verification.

For example, if I find a user interface issue where a button is unresponsive, my bug report would clearly outline the steps to reproduce (click button X), the expected behavior (navigation to page Y), the actual behavior (nothing happens), the severity (major, impacting user workflow), priority (high, needs immediate attention), and include a screenshot highlighting the unresponsive button.
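
The fields of such a report can be sketched as a small data structure. The `BugReport` class, its field names, and the `missing_fields` helper are assumptions for illustration and do not mirror the schema of Jira, Bugzilla, or any other tracker.

```python
from dataclasses import dataclass, field
from enum import Enum


class Severity(Enum):
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3
    TRIVIAL = 4


class Priority(Enum):
    URGENT = 1
    HIGH = 2
    MEDIUM = 3
    LOW = 4


@dataclass
class BugReport:
    title: str
    steps_to_reproduce: list
    expected: str
    actual: str
    severity: Severity
    priority: Priority
    environment: str = "unspecified"
    attachments: list = field(default_factory=list)

    def missing_fields(self):
        """Names of required fields that were left empty."""
        required = {
            "title": self.title,
            "steps_to_reproduce": self.steps_to_reproduce,
            "expected": self.expected,
            "actual": self.actual,
        }
        return [name for name, value in required.items() if not value]


report = BugReport(
    title="Button X is unresponsive",
    steps_to_reproduce=["Open the page", "Click button X"],
    expected="Navigation to page Y",
    actual="Nothing happens",
    severity=Severity.MAJOR,
    priority=Priority.HIGH,
    attachments=["unresponsive-button.png"],
)
print(report.missing_fields())
```

A check like `missing_fields` is one way to catch incomplete reports before they reach the development team.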

Q 9. Describe your experience with test case design and documentation.

Test case design and documentation are critical for ensuring comprehensive and repeatable testing. My experience involves creating detailed test cases that cover various aspects of software functionality, including positive and negative testing, boundary conditions, and edge cases. I employ several test design techniques, such as equivalence partitioning, boundary value analysis, and decision table testing, to optimize test coverage and minimize redundancy.

Each test case is meticulously documented, including a unique ID, test objective, pre-conditions, test steps, expected results, actual results, and pass/fail status. I utilize a structured format, often employing spreadsheets or test management tools for easy tracking and reporting. For example, when testing a login feature, I would have separate test cases for valid credentials, invalid credentials, forgotten password functionality, and password restrictions. The documentation would clearly detail input values, expected system responses, and error messages. The use of a consistent template ensures clear communication and maintainability. This documentation also serves as a valuable repository for future testing cycles and regression testing.
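
Boundary value analysis lends itself to a short example. The sketch below assumes a hypothetical password rule of 8 to 64 characters; the rule, the function names, and the expected results table are illustrative only.

```python
def boundary_values(minimum: int, maximum: int):
    """Boundary value analysis: each edge plus its immediate neighbours."""
    return sorted({minimum - 1, minimum, minimum + 1,
                   maximum - 1, maximum, maximum + 1})


def is_valid_password_length(password: str, minimum: int = 8, maximum: int = 64) -> bool:
    """Hypothetical rule under test: length must be within [minimum, maximum]."""
    return minimum <= len(password) <= maximum


# Expected outcome per boundary length, written out by hand as the test oracle.
expected = {7: False, 8: True, 9: True, 63: True, 64: True, 65: False}

for length in boundary_values(8, 64):
    actual = is_valid_password_length("x" * length)
    assert actual == expected[length], f"length {length}: got {actual}"
    print(f"length {length:>2}: valid={actual}")
```

Six inputs cover both edges of the valid range, which is usually where off-by-one defects hide.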

Q 10. What is your experience with test automation frameworks (e.g., Selenium, Appium)?

I have extensive experience with various test automation frameworks, including Selenium and Appium. Selenium is my go-to tool for automating web application testing. I’m proficient in using its WebDriver API to interact with web elements, handle different browsers, and implement various testing strategies like data-driven testing and keyword-driven testing. I have experience developing robust and maintainable automation scripts using programming languages such as Java, Python, and C#.

Appium is my tool of choice for mobile application testing. I leverage its cross-platform capabilities to automate tests on both Android and iOS devices. I’m familiar with its various locators and commands to automate actions such as tapping, swiping, and entering text. I have used Appium in conjunction with cloud-based testing platforms (like BrowserStack or Sauce Labs) to improve test efficiency and parallel execution. For instance, I have automated UI tests for e-commerce websites using Selenium, verifying user login, product browsing, adding to cart, and checkout functions. Similarly, I have automated tests for mobile banking applications using Appium, focusing on security, transaction processing, and user navigation.

Q 11. How do you measure the effectiveness of your testing process?

Measuring the effectiveness of the testing process is essential to ensure quality and efficiency. My approach involves a combination of quantitative and qualitative metrics. Quantitative metrics include defect density (number of defects per thousand lines of code, or KLOC), test coverage (percentage of requirements covered by test cases), and test execution efficiency (number of tests executed per unit of time). These metrics provide a clear picture of the testing progress and help identify areas for improvement.

Qualitative metrics include the overall quality of the software, feedback from stakeholders, and the effectiveness of defect prevention strategies. Regularly reviewing and analyzing these metrics helps me identify and address issues like insufficient testing, poor test case design, or inadequate defect tracking. For example, a high defect density might indicate a need for more thorough testing or improved coding practices. Low test coverage suggests gaps in our testing strategy that need to be addressed. I also track the time taken to resolve defects, which helps highlight areas where developer training or improved testing methodologies might increase efficiency.
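
Two of the quantitative metrics can be computed with a few lines of code. This sketch assumes defect density is normalised per thousand lines of code (KLOC), a common convention, and that coverage is measured against requirements; the figures are made up.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def requirement_coverage(covered: int, total: int) -> float:
    """Percentage of requirements with at least one test case."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * covered / total


# Made-up project figures, for illustration only.
print(defect_density(defects=30, lines_of_code=12_000))   # 2.5 defects per KLOC
print(requirement_coverage(covered=45, total=50))         # 90.0 percent
```

The numbers only become useful when compared over time or against a baseline agreed with the team.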

Q 12. Explain your experience with performance testing.

Performance testing is crucial for ensuring the stability and responsiveness of software applications under various load conditions. My experience encompasses various performance testing techniques, including load testing, stress testing, and endurance testing. I use performance testing tools like JMeter or LoadRunner to simulate real-world user scenarios and measure key performance indicators (KPIs) such as response time, throughput, and resource utilization.

I design performance tests based on the expected user load, identifying potential bottlenecks and assessing the application’s ability to handle peak traffic. For instance, in testing an e-commerce website, I would simulate a large number of concurrent users performing various actions, such as browsing products, adding items to the cart, and completing checkout. Analyzing the results allows me to pinpoint areas that need optimization, such as database queries or server configuration. I then work collaboratively with developers to resolve performance issues and improve the application’s overall performance and scalability.
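
Once a load test has run, the raw response times need to be summarised. The sketch below computes nearest-rank percentiles and throughput from a list of samples; the sample values are invented, and tools such as JMeter produce comparable summaries on their own, possibly with a different percentile method.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Rank of the smallest value with at least pct% of samples at or below it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def throughput(request_count: int, duration_seconds: float) -> float:
    """Requests handled per second."""
    return request_count / duration_seconds


# Invented response times in milliseconds from a 2-second test window.
response_times_ms = [120, 135, 110, 150, 900, 140, 125, 130, 145, 115]

print("p50:", percentile(response_times_ms, 50))   # 130
print("p95:", percentile(response_times_ms, 95))   # 900
print("req/s:", throughput(len(response_times_ms), 2.0))
```

The gap between the median and the 95th percentile is what exposes the slow outlier here, which an average alone would blur.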

Q 13. How do you ensure compliance with relevant regulations (e.g., FDA, HIPAA, GDPR)?

Ensuring compliance with relevant regulations is paramount in software development. My experience includes working on projects subject to FDA regulations (for medical devices), HIPAA (for healthcare data), and GDPR (for personal data). My approach involves a thorough understanding of the specific requirements of each regulation and incorporating compliance considerations into every stage of the software development lifecycle (SDLC).

This includes designing tests to verify data security, privacy, and auditability. For example, when working on a HIPAA-compliant application, I would ensure that all protected health information (PHI) is properly encrypted, access is controlled through role-based permissions, and audit trails are maintained. I collaborate closely with compliance officers and legal teams to ensure that testing activities meet all regulatory requirements. I also participate in regular compliance reviews and audits, ensuring adherence to best practices and mitigating risks.
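
A test for role-based access and audit trails might look roughly like the toy model below. The roles, permission names, and `access_phi` function are hypothetical, and passing a check like this does not by itself establish HIPAA compliance; it only shows the kind of behaviour such tests verify.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping, for illustration only.
PERMISSIONS = {
    "physician": {"read_phi", "write_phi"},
    "billing": {"read_billing"},
}

audit_log = []


def access_phi(user: str, role: str, action: str) -> str:
    """Allow or deny an action, recording every attempt in the audit log."""
    allowed = action in PERMISSIONS.get(role, set())
    audit_log.append({
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    if not allowed:
        raise PermissionError(f"{role} may not perform {action}")
    return f"{action} granted to {user}"


def denied_attempts():
    """Audit entries for attempts that were refused."""
    return [entry for entry in audit_log if not entry["allowed"]]
```

A compliance-oriented test would then assert two things: that an unauthorised role is refused, and that the refused attempt still appears in the audit trail.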

Q 14. What is your experience with audit preparation and response?

Audit preparation and response are crucial for demonstrating compliance and building trust. My experience includes preparing for and participating in both internal and external audits. I work collaboratively with the audit team to gather necessary documentation, including test plans, test cases, defect reports, and compliance documentation. I ensure that all testing activities are properly documented and traceable, facilitating a smooth and efficient audit process.

During the audit, I clearly and concisely explain the testing methodologies used, the results obtained, and any corrective actions taken to address identified issues. I also respond to any audit findings promptly and effectively, providing detailed explanations and proposing corrective actions where necessary. Maintaining a well-organized and readily accessible repository of testing artifacts is vital for a successful audit response. For example, being able to quickly locate specific test cases that cover security features during a HIPAA audit is crucial for demonstrating compliance.

Q 15. Describe a time you had to deal with a difficult stakeholder.

Dealing with difficult stakeholders requires a blend of empathy, strong communication, and a focus on shared goals. In one project, a senior developer consistently dismissed my QA team’s findings, arguing that our testing was slowing down development. Instead of confrontation, I scheduled a one-on-one meeting. I started by acknowledging his frustration with deadlines, and then presented the potential risks associated with releasing the software without addressing the identified bugs. I used data – the severity of the bugs, potential cost of fixing them post-release, and the impact on the user experience – to support my point. I also suggested a collaborative approach, proposing that he join our team for a walkthrough of some critical test cases. This allowed him to see firsthand the importance of thorough testing. The result was a significant shift in his attitude; he actively participated in testing and even offered valuable insights into potential issues we had not identified.

The key takeaway? Understand the stakeholder’s perspective, focus on objective data, and propose collaborative solutions rather than direct confrontation. Treat disagreements as opportunities for improvement and mutual understanding.

Q 16. Explain your experience with root cause analysis.

Root cause analysis (RCA) is a systematic approach to identifying the underlying cause of a problem, rather than just treating the symptoms. My experience involves using various techniques, such as the ‘5 Whys’ method and Fishbone diagrams. For example, during a recent software deployment, we experienced a significant performance degradation. We started with the ‘5 Whys’:

- Why was the performance slow? Because the database queries were taking too long.

- Why were the database queries slow? Because the database was overloaded.

- Why was the database overloaded? Because of an unexpected surge in user traffic.

- Why was there an unexpected surge in user traffic? Because of a viral social media campaign promoting the app.

- Why weren’t we prepared for the social media campaign? Because we lacked adequate capacity planning and proactive monitoring.

This led us to implement improved capacity planning, enhanced monitoring tools, and a more robust database architecture. The Fishbone diagram would then visually map these contributing factors, helping to identify areas for process improvement and preventative measures.

In essence, RCA helps move beyond superficial fixes to address the root of issues and prevent recurrence. A well-executed RCA leads to proactive problem-solving and improved product reliability.

Q 17. Describe your experience with creating and maintaining QA documentation.

Creating and maintaining QA documentation is crucial for traceability, consistency, and regulatory compliance. My experience spans various types of documentation, including test plans, test cases, defect reports, and test summary reports. I’ve used tools like TestRail and Jira to manage these documents, ensuring version control and easy collaboration. For instance, a detailed test plan would outline the scope, objectives, resources, and timelines for the entire testing process. A well-structured test case would include steps to execute the test, expected results, actual results, and the status (pass/fail). Defect reports would clearly describe the issue, its severity, and steps to reproduce it. This documented information proves invaluable for future releases, audits, and knowledge transfer.

Maintaining these documents isn’t just about storage; it’s about continuous improvement. Regular updates, based on lessons learned and new requirements, ensure the documentation remains relevant and accurate. I always strive for clear, concise, and easily understandable documentation, using consistent templates and terminology to promote efficient use and collaboration.

Q 18. What are some common QA metrics you track?

Common QA metrics I track include defect density (number of defects per thousand lines of code), defect severity (critical, major, minor), test coverage (percentage of requirements covered by tests), test execution time, and bug leakage (number of bugs found after release). These metrics, along with others like automation test coverage and time to resolution, help us monitor quality trends, identify areas for improvement, and demonstrate the effectiveness of our testing efforts. For example, a high defect density might indicate a need for more rigorous testing or improved developer coding practices. Tracking bug leakage allows us to identify gaps in our testing processes. The selection and analysis of metrics depend on the project context and organizational priorities.
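
Bug leakage and time to resolution are easy to compute once the raw counts and timestamps are available. The sketch below assumes leakage is expressed as the share of all known defects that were found after release; some teams define it differently, and the dates are invented.

```python
from datetime import datetime


def defect_leakage(found_after_release: int, found_before_release: int) -> float:
    """Percentage of all known defects that escaped to production (assumed definition)."""
    total = found_after_release + found_before_release
    if total == 0:
        return 0.0
    return 100 * found_after_release / total


def mean_resolution_hours(defects) -> float:
    """Average hours between opening and resolving, given (opened, resolved) pairs."""
    durations = [(resolved - opened).total_seconds() / 3600
                 for opened, resolved in defects]
    return sum(durations) / len(durations)


# Invented timestamps, for illustration only.
resolved_defects = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 17, 0)),   # 8 hours
    (datetime(2024, 1, 2, 9, 0), datetime(2024, 1, 3, 9, 0)),    # 24 hours
]

print(defect_leakage(found_after_release=5, found_before_release=95))  # 5.0
print(mean_resolution_hours(resolved_defects))                         # 16.0
```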

Q 19. How do you stay up-to-date with industry best practices and regulatory changes?

Staying current in QA and regulatory compliance requires a multi-faceted approach. I actively participate in online communities and forums, attend industry conferences and webinars (like those from ISTQB), and follow leading QA publications and blogs. I also leverage online learning platforms for certifications and specialized training courses, such as those focused on specific compliance frameworks like HIPAA or GDPR. Staying abreast of regulatory changes usually involves subscribing to regulatory updates and newsletters from relevant governing bodies. Additionally, engaging in knowledge sharing with colleagues and peers through internal training sessions and brown bag discussions helps to keep my skills sharp and my understanding of best practices up-to-date.

Q 20. Describe your experience with different types of testing environments.

My experience encompasses various testing environments, including development, testing, staging, and production. Development environments are used for unit testing, where developers test individual components of the software. Testing environments provide a stable, controlled setup for more comprehensive integration and system testing. Staging environments mirror the production environment as closely as possible and offer a final pre-release verification point. Production environments represent the live, real-world system used by end-users. Each environment has its own configuration, data, and setup requirements, and understanding these nuances is crucial for successful testing. For example, simulating production load in a testing environment requires specialized tools and techniques to ensure accurate testing results. The type of environment used influences the type of testing performed and the level of detail required.

Q 21. How do you prioritize testing activities in a time-constrained environment?

Prioritizing testing activities in a time-constrained environment requires a risk-based approach. I use a combination of techniques, such as risk assessment matrices and the MoSCoW method (Must have, Should have, Could have, Won’t have). First, I identify critical functionalities and high-risk areas using risk assessment matrices that consider the likelihood and impact of potential failures. Then, I apply the MoSCoW method to categorize requirements based on their priority. ‘Must-have’ features get tested first, followed by ‘Should have’, and so on. This helps focus testing efforts on the most critical features, ensuring that the highest-risk areas are adequately covered even if time is limited. Automated testing also plays a significant role in speeding up the process, allowing manual testers to focus on more complex or exploratory testing activities. Effective communication with stakeholders is key throughout this process to ensure that priorities are aligned and expectations are managed realistically.
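
The combination of MoSCoW categories and risk scores can be sketched as a simple ordering plus a time budget. The test names, risk scores, and durations below are invented, and the greedy selection is just one possible policy.

```python
MOSCOW_ORDER = {"must": 0, "should": 1, "could": 2, "wont": 3}

# Invented test areas with a MoSCoW category, risk score, and estimated minutes.
tests = [
    {"name": "Search filters", "moscow": "should", "risk": 9, "minutes": 45},
    {"name": "Login", "moscow": "must", "risk": 15, "minutes": 30},
    {"name": "Legacy export", "moscow": "wont", "risk": 2, "minutes": 20},
    {"name": "Checkout payment", "moscow": "must", "risk": 20, "minutes": 60},
    {"name": "Profile avatar upload", "moscow": "could", "risk": 4, "minutes": 30},
]


def plan(tests, budget_minutes):
    """Pick tests by MoSCoW category, then by descending risk, within the budget."""
    ordered = sorted(tests, key=lambda t: (MOSCOW_ORDER[t["moscow"]], -t["risk"]))
    selected, used = [], 0
    for test in ordered:
        if test["moscow"] == "wont":
            continue  # explicitly out of scope for this cycle
        if used + test["minutes"] <= budget_minutes:
            selected.append(test["name"])
            used += test["minutes"]
    return selected


print(plan(tests, budget_minutes=140))
```

With a 140-minute budget this selects the two must-have areas and the should-have area, and leaves the rest for a later cycle.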

Q 22. What is your experience with security testing?

Security testing is a critical aspect of software development, aiming to identify vulnerabilities that could be exploited by malicious actors. My experience encompasses a wide range of security testing methodologies, including penetration testing, vulnerability scanning, and security code reviews. I’ve worked on projects ranging from web applications to mobile apps and embedded systems. For instance, on a recent project involving a financial application, I performed penetration testing using tools like Burp Suite and OWASP ZAP to identify and report SQL injection vulnerabilities and cross-site scripting (XSS) flaws. This involved simulating real-world attacks to assess the application’s resilience against various threats. Following the identification of vulnerabilities, I documented my findings with detailed reports, including severity levels, remediation steps, and potential impact. This allowed the development team to prioritize fixes and enhance the overall security posture of the application.

Furthermore, I have experience with static and dynamic application security testing (SAST and DAST). SAST involves analyzing the source code to identify vulnerabilities before deployment, while DAST tests the running application for vulnerabilities. My experience includes using commercial and open-source SAST and DAST tools to identify potential security weaknesses. I am also familiar with secure coding practices and regularly incorporate these principles into my testing strategy.
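
A SQL injection check can be demonstrated with Python's built-in `sqlite3` module. This is a toy example: the table, the two login functions, and the payload are made up, and the plaintext password is for brevity only (real systems store hashes).

```python
import sqlite3


def make_db():
    """In-memory database with a single toy user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_vulnerable(conn, name, password):
    # Deliberately unsafe: user input is concatenated into the SQL text.
    query = (f"SELECT COUNT(*) FROM users "
             f"WHERE name = '{name}' AND password = '{password}'")
    return conn.execute(query).fetchone()[0] > 0


def login_safe(conn, name, password):
    # Parameterized query: input is bound as data, never parsed as SQL.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0


conn = make_db()
payload = "' OR '1'='1"  # classic injection string used as the password

print("vulnerable login bypassed:", login_vulnerable(conn, "alice", payload))
print("safe login bypassed:", login_safe(conn, "alice", payload))
```

The vulnerable version accepts the payload because the injected `OR '1'='1'` makes the condition true for every row, while the parameterized version rejects it.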

Q 23. Describe your experience with working in a collaborative team environment.

I thrive in collaborative team environments. My experience working on diverse teams, including developers, designers, project managers, and other QA professionals, has taught me the importance of clear communication, active listening, and mutual respect. I’ve consistently contributed to a positive and productive team dynamic by proactively sharing my expertise, offering constructive feedback, and fostering open dialogue. For example, on a recent project with a geographically dispersed team, I utilized project management tools like Jira to facilitate efficient communication and collaboration, ensuring everyone was aligned on project goals, deadlines, and tasks. I also actively participate in daily stand-up meetings and regular sprint reviews to provide updates, address challenges, and coordinate efforts with team members.

I believe that successful teamwork relies on trust and mutual support. I actively seek out opportunities to mentor junior QA members and share my knowledge to build team capabilities. I firmly believe that a strong team dynamic is crucial for delivering high-quality products, and I actively work to cultivate this within any team I’m part of. I am proficient in using collaborative tools such as Slack, Microsoft Teams, and Confluence to facilitate seamless information exchange and task management.

Q 24. Explain your experience with defining and implementing QA processes.

Defining and implementing QA processes is central to my role. My approach begins with a thorough understanding of project requirements and scope. I then tailor a QA strategy that includes test planning, test case design, test execution, defect tracking, and reporting. This often involves selecting appropriate testing methodologies, such as Agile, Waterfall, or a hybrid approach, based on project needs. For example, in a recent Agile project, I implemented a test-driven development (TDD) approach, where test cases were written before the code. This allowed for early detection of defects and ensured high code quality from the outset. I also incorporated continuous integration and continuous delivery (CI/CD) pipelines to automate testing and deployment processes, significantly reducing testing time and improving efficiency.

My QA process implementations also include defining clear metrics to track quality and progress. These metrics typically include defect density, test coverage, and test execution time. I use these metrics to identify areas for improvement and continuously refine our QA processes. I’m adept at using various QA tools and frameworks, such as Selenium for UI automation and JUnit for unit testing. This allows for efficient and repeatable testing, leading to a more reliable and robust product.

Q 25. How do you handle pressure and meet tight deadlines?

Handling pressure and meeting tight deadlines is a key skill in QA. My approach involves effective prioritization, meticulous planning, and proactive communication. I break down large tasks into smaller, manageable components, focusing on the most critical areas first. I utilize time management techniques like timeboxing and the Pomodoro Technique to maintain focus and avoid burnout. Furthermore, I proactively communicate challenges or potential delays to stakeholders, offering alternative solutions or adjustments to the project timeline when necessary. Transparency and open communication are key to managing expectations and navigating tight deadlines effectively.

For example, on a project facing a critical deadline, I collaborated with the development team to identify the highest-risk areas of the application and focused testing efforts there. This allowed us to address the most important defects and deliver a functional product within the allocated timeframe. I believe in a proactive approach to problem-solving; anticipating potential roadblocks and addressing them before they impact the project timeline is crucial for success under pressure.

Q 26. Explain your experience with defect tracking systems (e.g., Jira, Bugzilla).

I have extensive experience using defect tracking systems like Jira and Bugzilla. I’m proficient in creating and managing bug reports, assigning tasks, tracking progress, and generating reports. My workflow involves using these systems to document each identified defect, including detailed steps to reproduce the issue, expected behavior, actual behavior, severity, and priority levels. I utilize Jira’s workflow features to manage the bug lifecycle, from reporting to resolution and verification. This ensures complete traceability and accountability. I also use the reporting functionalities of these systems to monitor defect trends, identify patterns, and assess the overall quality of the software. For example, I’ve used Jira’s dashboards and reports to track the number of critical bugs resolved over time, providing valuable insights into the effectiveness of our testing and development processes.

I am familiar with customizing workflows and fields within these systems to meet specific project needs. Furthermore, I have experience integrating defect tracking systems with other tools, such as CI/CD pipelines, to automate the process of reporting and tracking defects.
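The report fields and lifecycle described above can be sketched as a small data model. This is an illustrative structure only, not the Jira or Bugzilla API; the status names, allowed transitions, and sample bug are assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    REPORTED = "reported"
    IN_PROGRESS = "in progress"
    RESOLVED = "resolved"
    VERIFIED = "verified"


# Allowed transitions in this illustrative lifecycle (not a tool default).
TRANSITIONS = {
    Status.REPORTED: {Status.IN_PROGRESS},
    Status.IN_PROGRESS: {Status.RESOLVED},
    Status.RESOLVED: {Status.VERIFIED, Status.IN_PROGRESS},  # reopen if the fix fails
    Status.VERIFIED: set(),
}


@dataclass
class DefectReport:
    title: str
    steps_to_reproduce: list[str]
    expected: str
    actual: str
    severity: str
    priority: str
    status: Status = Status.REPORTED

    def move_to(self, new_status: Status) -> None:
        """Advance the defect, rejecting transitions the workflow does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(
                f"cannot move from {self.status.value} to {new_status.value}"
            )
        self.status = new_status


# Hypothetical defect, for illustration only.
bug = DefectReport(
    title="Login button unresponsive on second click",
    steps_to_reproduce=["Open the login page", "Click 'Log in' twice quickly"],
    expected="A single login request is sent",
    actual="The page freezes",
    severity="major",
    priority="high",
)
bug.move_to(Status.IN_PROGRESS)
bug.move_to(Status.RESOLVED)
bug.move_to(Status.VERIFIED)
print(bug.status.value)
```

Restricting transitions this way is what gives a workflow its traceability: a defect cannot be marked verified without first passing through resolution.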

Q 27. Describe your experience with non-functional testing.

Non-functional testing focuses on aspects of the software beyond its core functionality, such as performance, security, usability, and scalability. My experience encompasses various non-functional testing types. For example, I’ve conducted performance testing using tools like JMeter to assess response times, identify bottlenecks, and determine the application’s capacity under different load conditions. I’ve also performed usability testing by observing users interacting with the software and gathering feedback on ease of use and overall experience. Furthermore, I have experience with security testing (discussed in an earlier answer) and with scalability testing to ensure the application can handle increasing amounts of data and users.

A real-world example involved a web application where performance testing uncovered significant latency issues during peak usage hours. By analyzing the results from JMeter, we identified a database query that was consuming excessive resources. This issue was then addressed by optimizing the database query, resulting in a significant improvement in performance and user experience.
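When analyzing load-test results, averages can hide the slow requests that users notice most, so percentiles are often reported alongside them. The sketch below uses the nearest-rank method on a list of response times; the sample values are hypothetical and stand in for elapsed times exported from a load-test run.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]


# Hypothetical response times in milliseconds.
response_times_ms = [120, 135, 110, 980, 125, 140, 130, 115, 1450, 128]
p50 = percentile(response_times_ms, 50)
p95 = percentile(response_times_ms, 95)
print(f"median: {p50} ms, p95: {p95} ms")
```

A large gap between the median and the 95th percentile, as in this sample, is a typical sign that a subset of requests is hitting a bottleneck such as a slow query.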

Q 28. How do you ensure the quality of third-party software or services?

Ensuring the quality of third-party software or services requires a comprehensive approach: due diligence on the vendor, followed by thorough testing of the integrated system. Due diligence means examining the vendor’s track record and client testimonials, reviewing their security and compliance certifications, and verifying their adherence to industry best practices. I would also request detailed documentation from the vendor, including technical specifications, security audits, and performance benchmarks. Together, these establish a baseline understanding of the quality and reliability of the third-party component.

Once integrated, thorough testing is critical. This involves creating test cases that specifically target the interface between our software and the third-party service. We would test for various scenarios, including error handling, data integrity, and security vulnerabilities at the integration points. This might involve interface testing, contract testing, or API testing, depending on the nature of the integration. The goal is to ensure seamless functionality and avoid any potential conflicts or disruptions resulting from integrating the third-party software or service.
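A lightweight form of contract testing checks that a third-party response still contains the fields and types our code depends on. The sketch below is a simplified illustration under assumed names: the payment-style contract and payloads are hypothetical, not a real vendor's schema, and dedicated contract-testing tools offer far more than this.

```python
# Hypothetical contract: the fields and types our code relies on
# in a third-party payment response. Not a real vendor's schema.
EXPECTED_CONTRACT = {
    "transaction_id": str,
    "status": str,
    "amount_cents": int,
}


def contract_violations(payload: dict, contract: dict) -> list[str]:
    """Return human-readable violations; an empty list means the payload conforms."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(
                f"wrong type for {field}: expected {expected_type.__name__}, "
                f"got {type(payload[field]).__name__}"
            )
    return problems


# Hypothetical payloads: one conforming, one with a missing field and a wrong type.
good = {"transaction_id": "tx-1", "status": "approved", "amount_cents": 1999}
bad = {"transaction_id": "tx-2", "amount_cents": "19.99"}

print(contract_violations(good, EXPECTED_CONTRACT))
print(contract_violations(bad, EXPECTED_CONTRACT))
```

Running a check like this against the vendor's sandbox on a schedule can flag breaking changes at the integration point before they reach production.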

Key Topics to Learn for Quality Assurance (QA) and Regulatory Compliance Interview

- Quality Assurance Methodologies: Understand different QA methodologies like Agile, Waterfall, and DevOps, and their practical application in various project lifecycles. Consider how these methodologies impact testing strategies and documentation.

- Software Testing Techniques: Master various testing techniques including functional testing (unit, integration, system, acceptance), non-functional testing (performance, security, usability), and test automation principles. Be prepared to discuss practical examples of how you’ve applied these techniques.

- Risk Management and Mitigation in QA: Explore how to identify, assess, and mitigate risks throughout the software development lifecycle. Understand the importance of proactive risk management in ensuring product quality and regulatory compliance.

- Test Case Design and Execution: Discuss your experience in designing effective test cases, executing test plans, and managing test data. Be ready to explain your approach to efficient and thorough testing.

- Defect Tracking and Reporting: Understand the importance of accurate and detailed defect reporting. Be familiar with bug tracking systems and best practices for communicating issues to developers and stakeholders.

- Regulatory Compliance Frameworks: Familiarize yourself with relevant regulatory frameworks (e.g., HIPAA, GDPR, FDA regulations – depending on the industry) and how they impact QA processes and documentation. Be prepared to discuss how QA contributes to meeting compliance requirements.

- Quality Metrics and Reporting: Understand key quality metrics (e.g., defect density, test coverage) and how to use them to track progress and communicate QA performance to stakeholders. Discuss your experience creating reports and dashboards.

- Automation Testing Tools and Frameworks: Review your experience with automation testing tools and frameworks, and name the specific tools you have used. Discuss the benefits and challenges of test automation and how to choose the right tools for a given project.

- Software Development Life Cycle (SDLC): Demonstrate a strong understanding of the SDLC and how QA fits into each phase. Be ready to discuss your experience working within different SDLC models.

- Problem-Solving and Analytical Skills: Highlight your ability to analyze complex problems, identify root causes of defects, and propose effective solutions. Use examples from your experience to illustrate these skills.

Next Steps

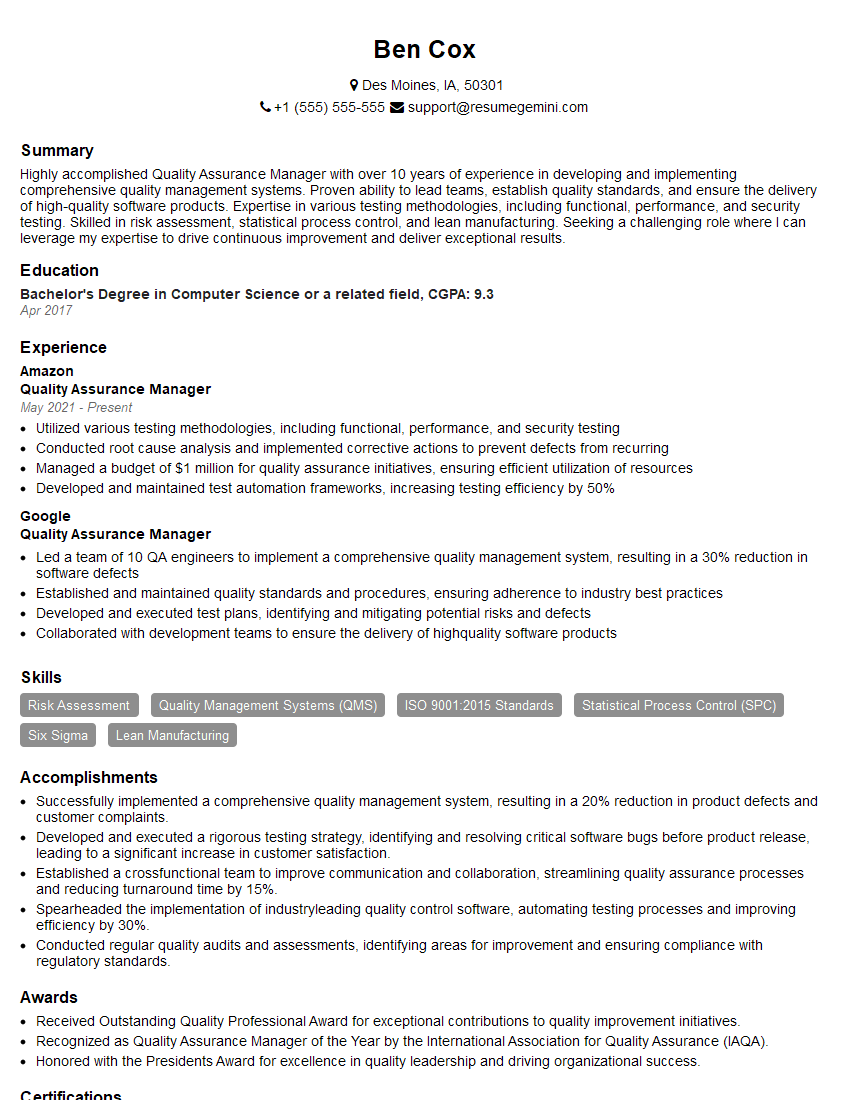

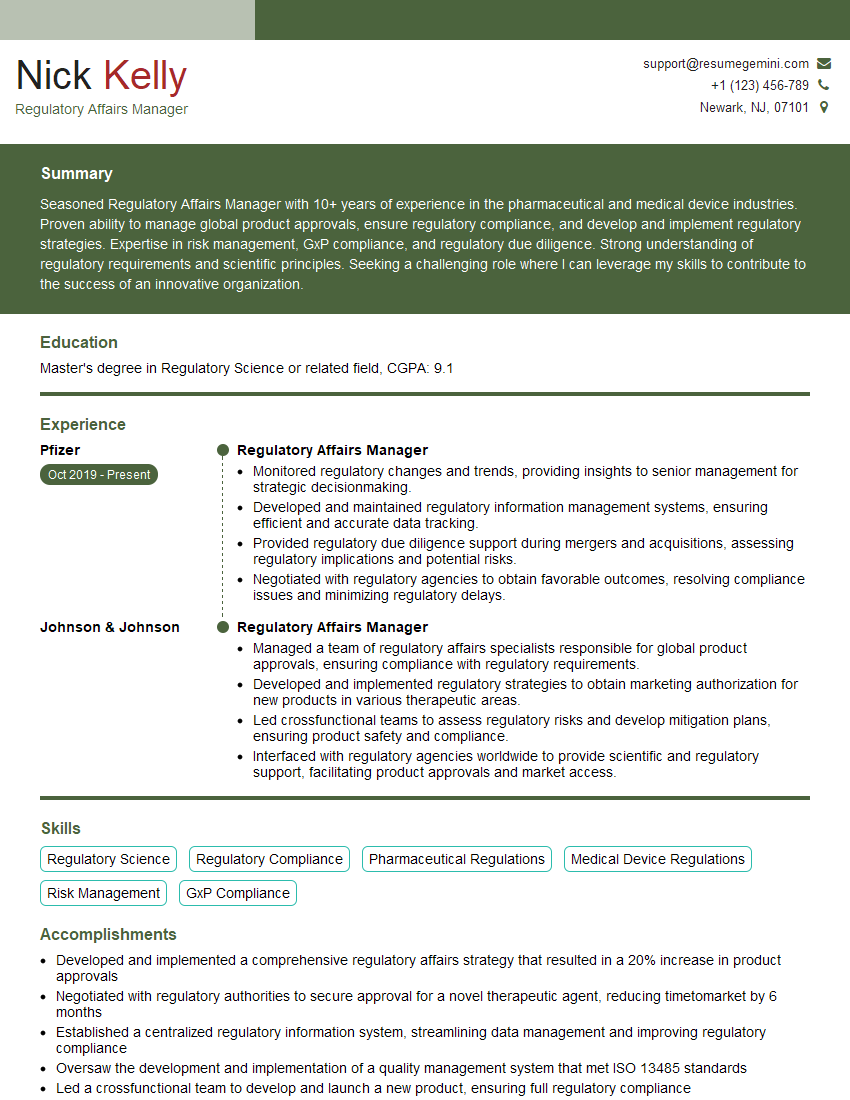

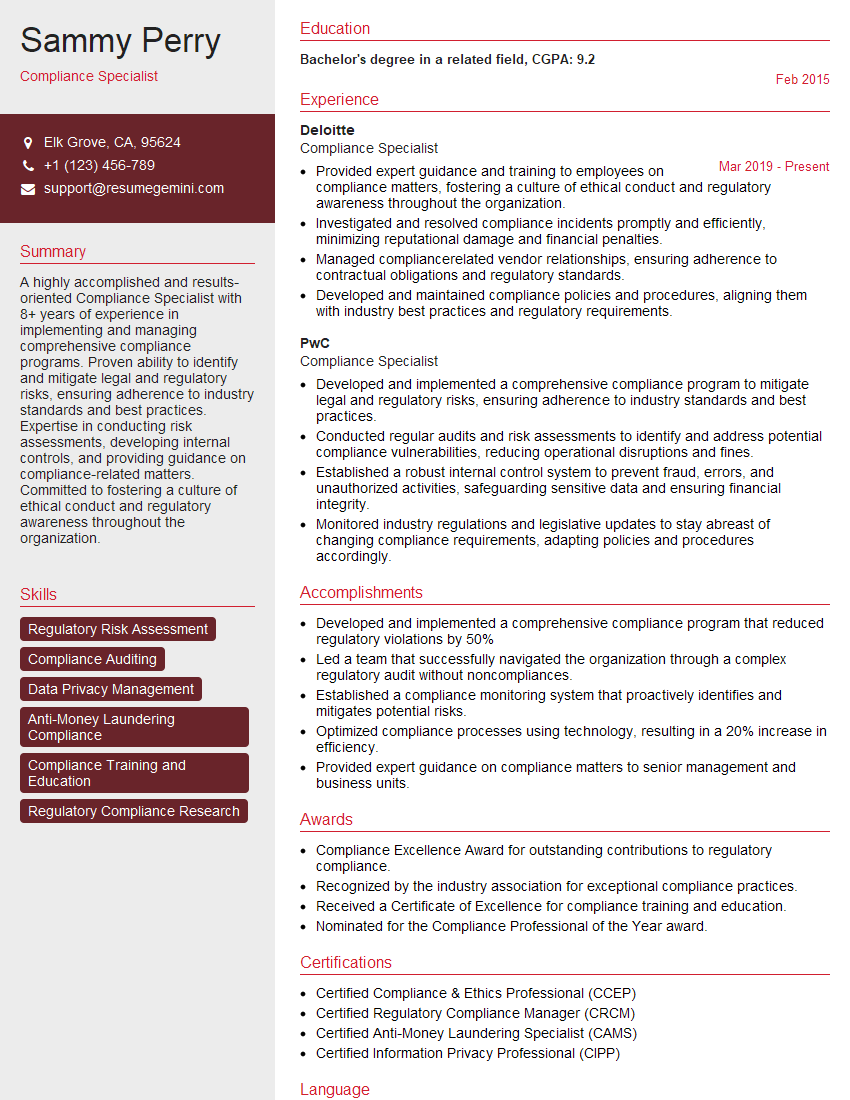

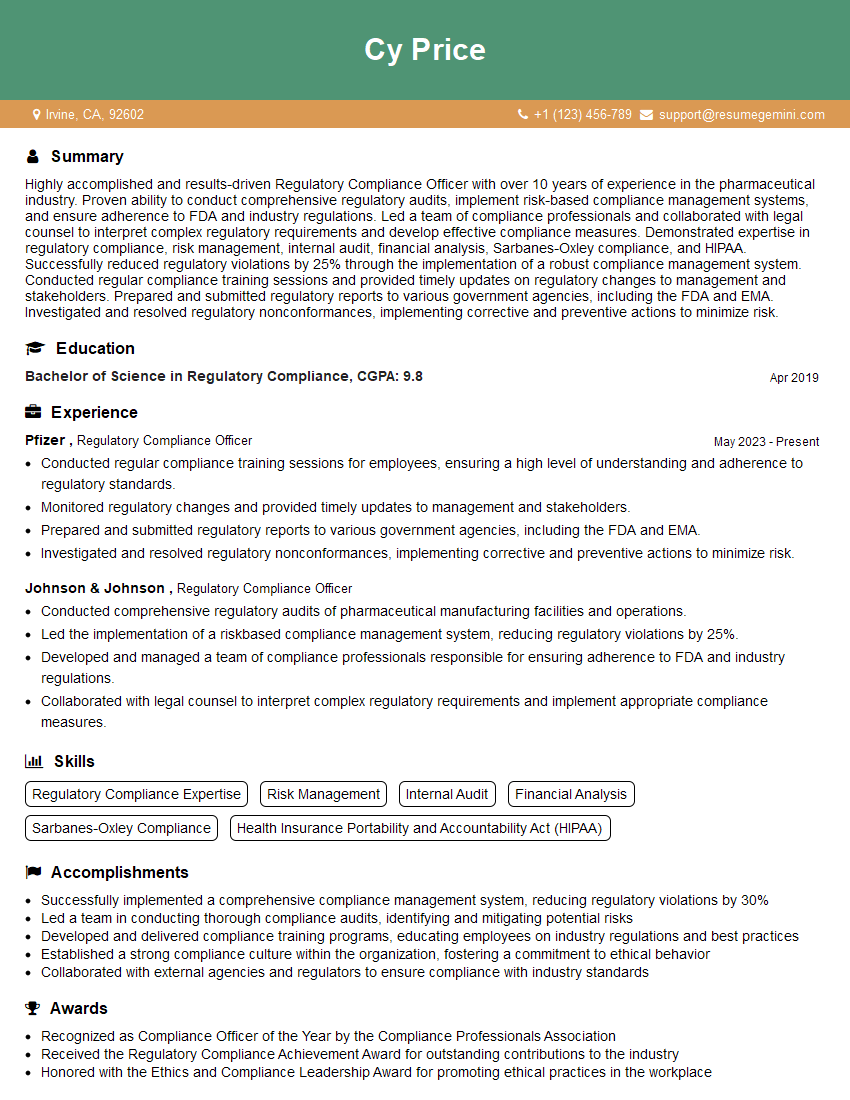

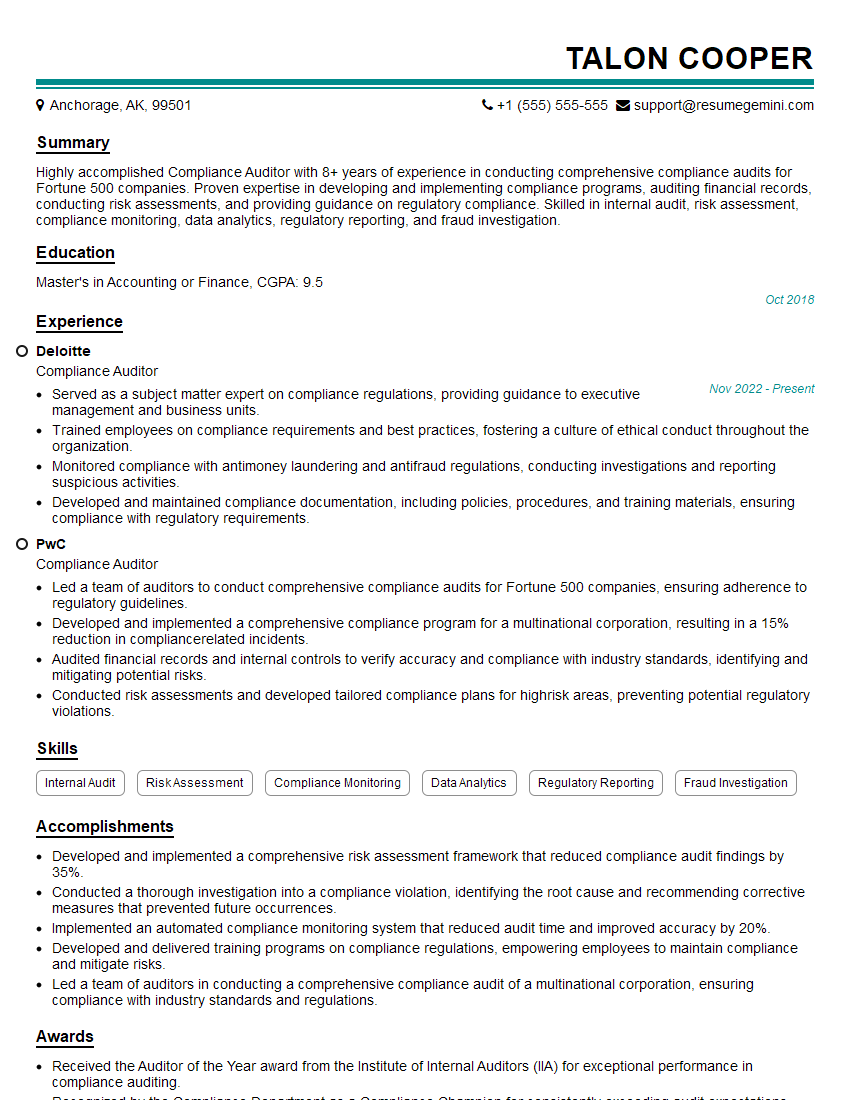

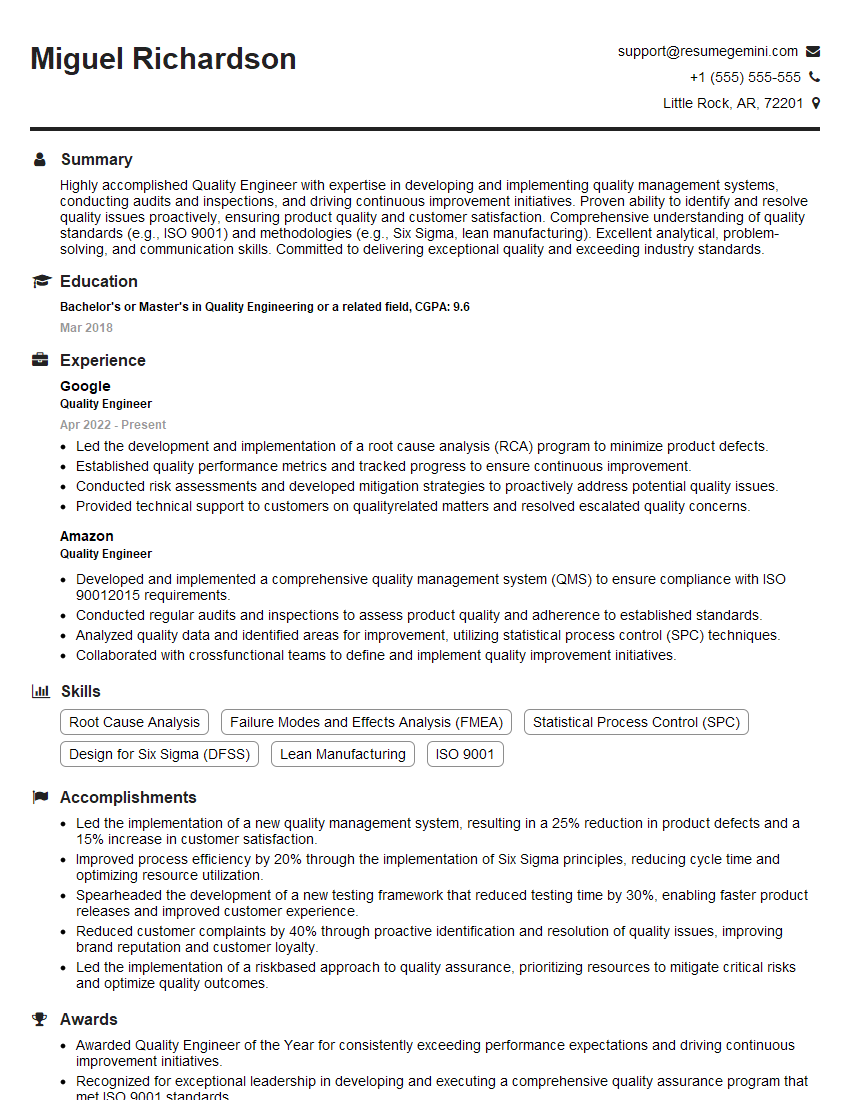

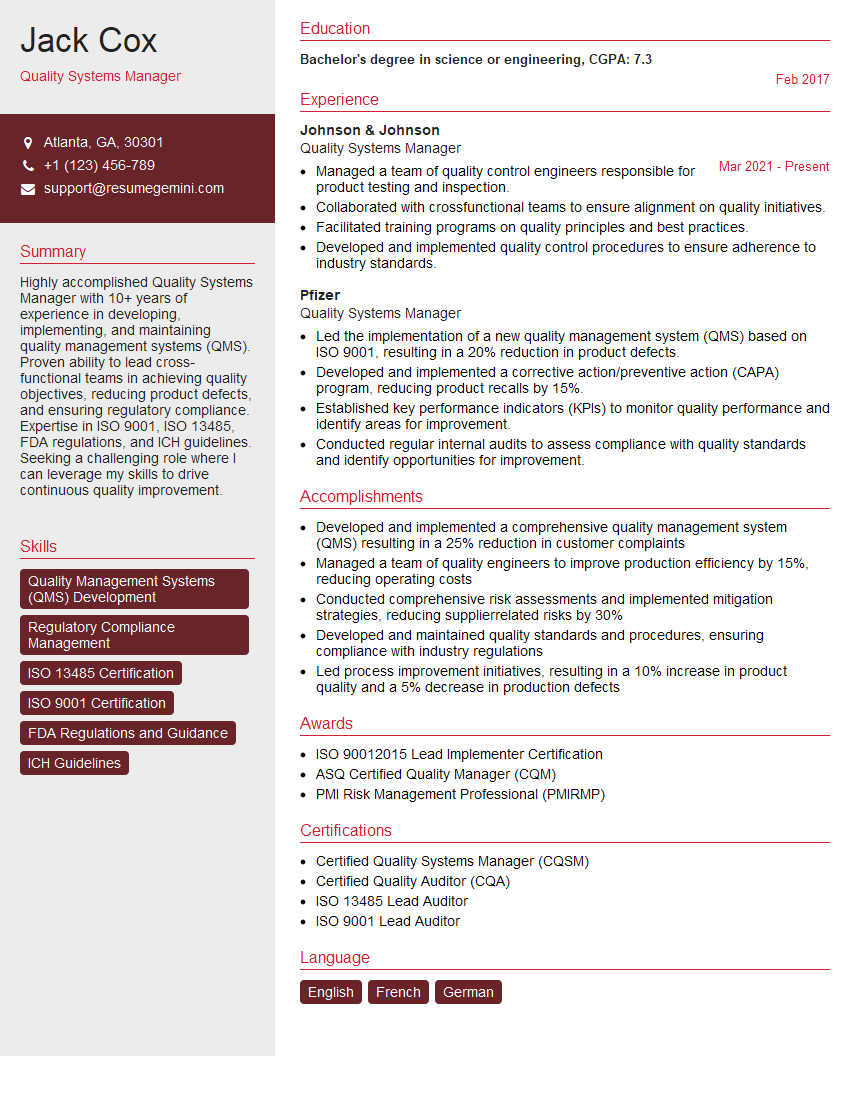

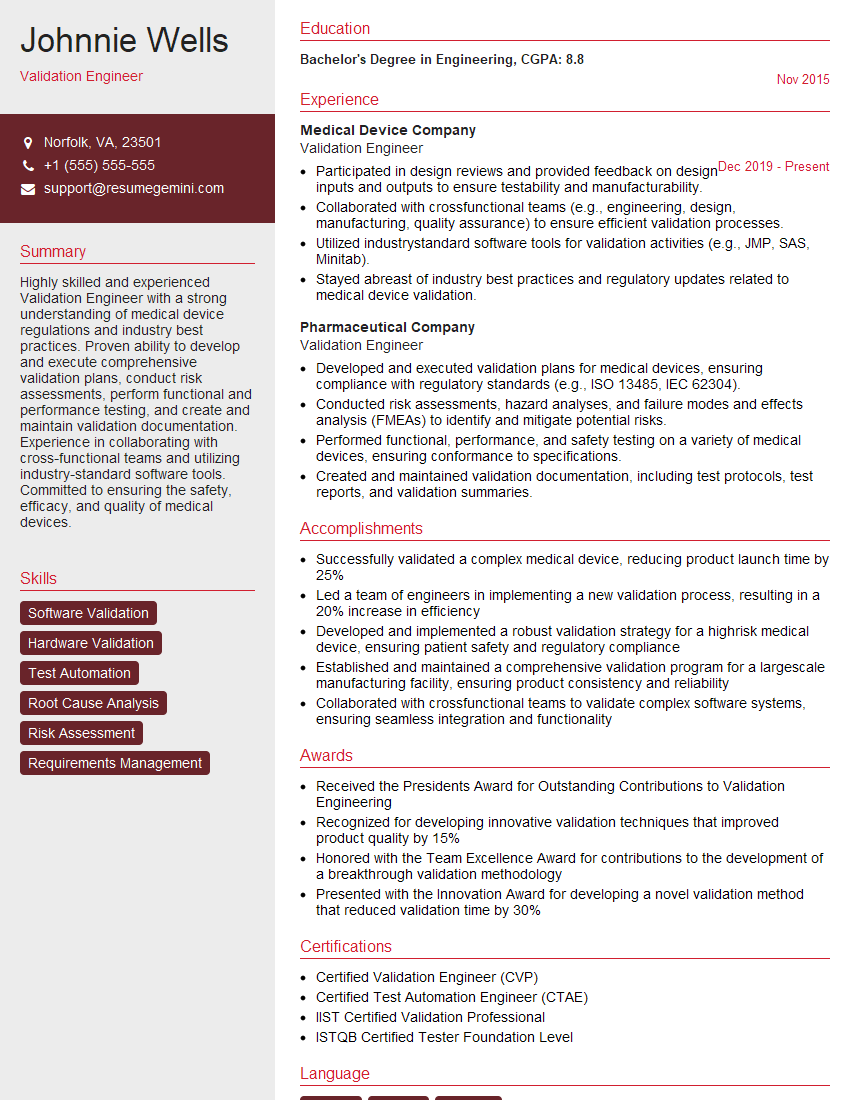

Mastering Quality Assurance and Regulatory Compliance significantly enhances your career prospects, opening doors to higher-paying roles and leadership opportunities. A well-crafted, ATS-friendly resume is crucial for getting your foot in the door. ResumeGemini is a trusted resource to help you build a professional and impactful resume that highlights your skills and experience. Examples of resumes tailored to Quality Assurance (QA) and Regulatory Compliance are available to guide you through the process. Invest time in crafting a compelling resume – it’s your first impression!
