Are you ready to stand out in your next interview? Understanding and preparing for Radar and Sensor Operation interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Radar and Sensor Operation Interview

Q 1. Explain the difference between pulsed and continuous-wave radar.

The core difference between pulsed and continuous-wave (CW) radar lies in how they transmit their signals. Pulsed radar transmits short bursts of electromagnetic energy, pausing between each burst. Continuous-wave radar, on the other hand, transmits a continuous signal. This seemingly small difference leads to significant variations in their applications and capabilities.

Pulsed Radar: Imagine a strobe light flashing – that’s similar to pulsed radar. The pauses between transmissions allow the radar to measure the time it takes for the signal to reflect back, directly calculating the range to a target. Pulsed radar is excellent for measuring range and is widely used in weather radar, air traffic control, and search and rescue operations.

Continuous-Wave Radar: Think of a constant beam of light – that’s continuous-wave radar. Because an unmodulated CW signal carries no timing marks, it cannot measure range from time of flight. Instead, CW radar typically relies on the Doppler effect to measure the relative velocity of a target. It’s particularly useful for detecting moving objects amidst stationary clutter, as in police radar guns used for speed detection.

In short, pulsed radar excels at determining range, while CW radar excels at measuring velocity. Pulse-Doppler and frequency-modulated CW (FMCW) radars combine elements of both to obtain range and velocity information together.
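As a minimal sketch of the time-of-flight idea behind pulsed radar, the round-trip delay of an echo can be converted to range with R = c·t/2 (the factor of 2 accounts for the out-and-back path). The function name here is illustrative, not taken from any particular radar library.

```python
C = 299_792_458.0  # speed of light in m/s


def range_from_delay_m(round_trip_s: float) -> float:
    """Target range in metres from the measured round-trip echo delay."""
    return C * round_trip_s / 2.0


# An echo arriving 1 ms after transmission corresponds to roughly 149.9 km.
print(range_from_delay_m(1e-3))
```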

Q 2. Describe the different types of radar modulation techniques.

Radar modulation techniques involve varying a signal’s characteristics (amplitude, frequency, or phase) to encode information and improve performance. Several key methods are commonly employed:

- Amplitude Modulation (AM): The amplitude of the transmitted signal is varied. Simple to implement, but susceptible to noise.

- Frequency Modulation (FM): The frequency of the transmitted signal is varied. Offers better noise immunity than AM. In a continuous-wave radar, sweeping the frequency time-stamps the transmitted signal, so range can be measured in addition to Doppler velocity. FM-CW radar is a prime example.

- Phase Modulation (PM): The phase of the transmitted signal is varied. Used in advanced radar systems for improved range resolution and clutter rejection. Phase-coded waveforms are often employed.

- Pulse Compression: A technique where a long pulse is encoded with a specific waveform (e.g., linear FM) before transmission, then compressed upon reception. This allows for both high average power (for long-range detection) and high range resolution (characteristic of short pulses).

- Chirp Modulation: A specific type of frequency modulation where the frequency changes linearly over the pulse duration. Widely used in pulse compression radar due to its ease of implementation and effective range resolution.

The choice of modulation technique depends heavily on the specific application requirements, such as range resolution, velocity accuracy, noise immunity, and power constraints.

Q 3. What are the advantages and disadvantages of different antenna types used in radar systems?

Radar antennas play a crucial role in focusing transmitted power and collecting reflected signals. Various antenna types offer different advantages and disadvantages:

- Parabolic Reflectors (Dish Antennas): Provide high gain and directivity, concentrating power in a narrow beam. Excellent for long-range detection but are bulky and less agile. Used in many weather and tracking radars.

- Horn Antennas: Relatively simple and compact, offering a good compromise between gain and beamwidth. Often used as feed antennas for larger reflectors or independently in applications requiring moderate gain and directivity.

- Array Antennas (Phased Arrays): Consist of many smaller antennas arranged in a grid. By electronically controlling the phase of the signal in each element, the beam can be steered electronically, without physically moving the antenna. This offers exceptional agility and allows for rapid scanning of large areas. Used extensively in modern radar systems like air defense radars.

- Microstrip Antennas: Planar antennas etched onto a substrate, offering low profile and ease of integration. Often used in applications where size and weight are critical, such as on aircraft or spacecraft.

The selection of an antenna type is a trade-off between gain, beamwidth, size, weight, cost, and agility, always dictated by the specific application.

Q 4. Explain the concept of range resolution in radar.

Range resolution refers to a radar’s ability to distinguish between two targets located at different ranges. It determines the minimum separation between two targets that can be individually detected as distinct entities. A radar with poor range resolution might see a single, larger target where two smaller targets are actually present.

For a simple unmodulated pulse, range resolution is determined by the transmitted pulse width (τ): the shorter the pulse, the better the range resolution. The relationship can be approximated as: Range Resolution ≈ cτ/2, where c is the speed of light. More generally, resolution is set by the signal bandwidth B, as c/(2B).

Pulse compression techniques greatly enhance range resolution without sacrificing transmission power or detection range. By using longer, encoded pulses and compressing them upon reception, we effectively achieve the range resolution of a much shorter pulse while maintaining the energy of a longer one.

For instance, in air traffic control, high range resolution is crucial to accurately identify and track multiple aircraft in close proximity. In medical imaging using radar, fine range resolution is paramount for accurate organ and tissue visualization.
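The relationship above can be checked with a few lines of Python. This is a rough sketch: the first function applies cτ/2 to an unmodulated pulse, and the second uses the standard bandwidth form c/(2B), which is what pulse compression exploits. Both function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution_from_pulse_width(tau_s: float) -> float:
    """Range resolution (m) of an unmodulated pulse of width tau_s seconds."""
    return C * tau_s / 2.0


def range_resolution_from_bandwidth(bandwidth_hz: float) -> float:
    """Range resolution (m) of a waveform with the given bandwidth in Hz."""
    return C / (2.0 * bandwidth_hz)


# A 1 microsecond pulse resolves targets about 150 m apart.
print(range_resolution_from_pulse_width(1e-6))
# 150 MHz of bandwidth gives about 1 m, whatever the transmitted pulse length.
print(range_resolution_from_bandwidth(150e6))
```

The second result is why a long coded pulse can match the resolution of a much shorter one: resolution follows bandwidth, not duration.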

Q 5. How does clutter affect radar performance, and what techniques are used to mitigate it?

Clutter refers to unwanted radar echoes from objects other than the target of interest. These objects can include ground reflections, rain, birds, buildings, and even atmospheric phenomena. Clutter significantly degrades radar performance by masking target signals and reducing the detection sensitivity.

Effects of Clutter: Clutter can overwhelm the target signal, leading to missed detections or false alarms. It lowers the signal-to-clutter ratio, and with it the effective signal-to-interference-plus-noise ratio (SINR), making it difficult to distinguish between actual targets and clutter echoes.

Clutter Mitigation Techniques: Several techniques are employed to reduce the impact of clutter:

- Moving Target Indication (MTI): This technique exploits the Doppler effect to filter out stationary clutter, preserving echoes from moving targets.

- Space-Time Adaptive Processing (STAP): A sophisticated technique that uses both spatial and temporal information to adapt to and suppress clutter variations across the radar’s field of view.

- Clutter Map: A database of expected clutter returns is created and subtracted from received signals. This works well for static clutter like terrain.

- Polarization Filtering: Using different polarizations of the transmitted and received signals can help to discriminate between target and clutter echoes based on their scattering properties.

The choice of clutter mitigation technique depends on the type of clutter present, the desired performance level, and the complexity allowed in the radar system.
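To make the MTI idea concrete, here is a minimal sketch of a two-pulse canceller, one of the simplest MTI filters. It subtracts successive echoes from the same range bin, so a return whose phase does not change from pulse to pulse cancels. The simulated data, amplitudes, and function names are assumptions chosen for illustration.

```python
import cmath
import math


def two_pulse_canceller(samples):
    """First difference of pulse-to-pulse complex samples from one range bin."""
    return [later - earlier for earlier, later in zip(samples, samples[1:])]


def simulate_bin(n_pulses, clutter_amp, target_amp, doppler_hz, prf_hz):
    """Stationary clutter plus one target whose phase advances every pulse."""
    samples = []
    for n in range(n_pulses):
        phase = 2.0 * math.pi * doppler_hz * n / prf_hz
        samples.append(clutter_amp + target_amp * cmath.exp(1j * phase))
    return samples


clutter_only = simulate_bin(8, 10.0, 0.0, 0.0, 1000.0)
with_target = simulate_bin(8, 10.0, 1.0, 250.0, 1000.0)

# Stationary clutter cancels completely; the moving target survives.
print(max(abs(v) for v in two_pulse_canceller(clutter_only)))
print(max(abs(v) for v in two_pulse_canceller(with_target)))
```

Real clutter is never perfectly stationary, so practical MTI filters leave some residue; this sketch shows only the ideal case.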

Q 6. Describe the process of radar signal processing, including filtering and detection.

Radar signal processing is the crucial step that transforms raw radar echoes into meaningful information about targets. This involves multiple stages:

- Signal Reception and Amplification: The antenna receives the weak reflected signals, which are then amplified to a usable level.

- Analog-to-Digital Conversion (ADC): The analog signals are converted into digital format for further processing using digital signal processors.

- Pulse Compression (if applicable): If pulse compression was used in transmission, the received signal is compressed to improve range resolution.

- Filtering: Various filters are applied to remove noise and clutter. These can include MTI filters, matched filters, and adaptive filters.

- Detection: Algorithms are used to detect the presence of targets by identifying peaks or significant changes in the filtered signal. Common methods include constant false alarm rate (CFAR) detectors.

- Parameter Estimation: Once targets are detected, parameters like range, velocity, and angle are estimated using sophisticated algorithms.

- Track Initiation and Tracking: Detected targets are grouped into tracks, which follow the targets’ movement over time. Kalman filtering is frequently employed for track prediction and smoothing.

Modern radar signal processing relies heavily on advanced digital signal processing algorithms to extract the maximum amount of information from the received echoes, and may also employ machine learning methods for target classification and identification.
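The detection stage can be sketched with a cell-averaging CFAR detector, one common member of the CFAR family mentioned above. For each cell under test it estimates the local noise level from neighbouring training cells, skipping guard cells next to the test cell, and declares a detection when the cell exceeds a scaled version of that estimate. The window sizes and scale factor are illustrative assumptions, not tuned values.

```python
def ca_cfar(power, num_train=4, num_guard=1, scale=5.0):
    """Indices of cells whose power exceeds scale times the local noise mean.

    num_train and num_guard are counted per side of the cell under test.
    """
    detections = []
    half = num_train + num_guard
    for i in range(half, len(power) - half):
        leading = power[i - half : i - num_guard]
        trailing = power[i + num_guard + 1 : i + half + 1]
        noise = (sum(leading) + sum(trailing)) / (len(leading) + len(trailing))
        if power[i] > scale * noise:
            detections.append(i)
    return detections


# Flat noise floor with a single strong return in range cell 15.
profile = [1.0] * 30
profile[15] = 20.0
print(ca_cfar(profile))  # prints [15]
```

Because the threshold follows the local noise estimate rather than a fixed level, the false alarm rate stays roughly constant as the background changes.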

Q 7. Explain the concept of Doppler effect in radar and its applications.

The Doppler effect is the change in frequency of a wave (in this case, an electromagnetic wave) for an observer moving relative to the source of the wave. In radar, this means the frequency of the reflected signal is different from the transmitted frequency if the target is moving.

Doppler Shift: The change in frequency is called the Doppler shift (f_d), and it’s directly proportional to the target’s radial velocity (v_r) relative to the radar: f_d = 2·v_r·f_t / c, where f_t is the transmitted frequency, and c is the speed of light.

Applications: The Doppler effect is crucial in several radar applications:

- Velocity Measurement: Police radar guns rely on the Doppler effect to measure the speed of vehicles.

- Weather Radar: Estimates wind speed toward or away from the radar by observing the Doppler shift of signals reflected from raindrops; wind direction is inferred by combining measurements across many beam directions.

- Moving Target Indication (MTI): As mentioned earlier, MTI uses the Doppler effect to distinguish moving targets from stationary clutter.

- Air Traffic Control: Doppler radar helps track aircraft speed and predict potential collisions.

By analyzing the Doppler shift, radar systems can extract valuable information about the target’s motion, greatly enhancing situational awareness and making it a cornerstone of modern radar technology.
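The Doppler relationship given earlier, f_d = 2·v_r·f_t / c, is easy to evaluate numerically. The sketch below computes the shift for a given radial velocity and inverts it to recover velocity from a measured shift; the 24.125 GHz carrier is just an example value, and the function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def doppler_shift_hz(radial_velocity_mps: float, tx_freq_hz: float) -> float:
    """Two-way Doppler shift for a target with the given radial velocity."""
    return 2.0 * radial_velocity_mps * tx_freq_hz / C


def radial_velocity_mps(doppler_hz: float, tx_freq_hz: float) -> float:
    """Radial velocity recovered from a measured Doppler shift."""
    return doppler_hz * C / (2.0 * tx_freq_hz)


# A vehicle closing at 30 m/s on a 24.125 GHz radar shifts the echo by roughly 4.8 kHz.
shift = doppler_shift_hz(30.0, 24.125e9)
print(shift)
print(radial_velocity_mps(shift, 24.125e9))
```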

Q 8. What are the different types of radar targets and how are they detected?

Radar targets can be broadly classified into several types, each requiring different detection techniques. The simplest are point targets, like a small aircraft or a bird, which appear as a single, strong reflection. Detecting these is relatively straightforward; the radar simply looks for a significant increase in received power above the noise floor. Then we have extended targets, such as large ships or buildings. These produce a complex return signal lasting longer because the radar illuminates multiple scattering points across the target’s surface. Detecting these usually involves processing techniques like clutter rejection and signal integration. Fluctuating targets, such as rain or flocks of birds, exhibit variations in their radar cross-section (RCS) over time, making their detection more challenging. We use statistical methods and signal processing to discern them from noise. Finally, complex targets can be anything from a swarm of drones to a dense forest, requiring advanced algorithms such as space-time adaptive processing (STAP) for effective detection amidst clutter and interference. The detection process generally involves comparing the received signal against a threshold, with more sophisticated techniques applied for more complex targets to account for signal variability and environmental factors.

Q 9. What is sensor fusion, and how is it used to improve system performance?

Sensor fusion is the process of integrating data from multiple sensors to improve overall system performance beyond what any single sensor could achieve alone. Imagine trying to find your keys in a dark room; using only your eyes might fail, but adding the sense of touch (another sensor modality) significantly improves your chances of success. Similarly, in a radar system, combining radar data with, for instance, infrared (IR) sensor data can dramatically enhance object detection and tracking accuracy. For example, radar provides excellent range and velocity information, but might struggle to distinguish between different types of materials. IR, on the other hand, excels at identifying temperature differences, which helps classify targets. By fusing these data streams, we get a more comprehensive and reliable understanding of the environment. This often involves sophisticated algorithms like Kalman filters (explained in the next question) to handle the uncertainty and noise inherent in sensor measurements. The advantages include improved accuracy, robustness against sensor failures, and the ability to infer information that individual sensors cannot provide alone.
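One of the simplest fusion rules is inverse-variance weighting, sketched below under the assumption that two sensors give independent, unbiased estimates of the same quantity with known variances. It is a deliberately minimal stand-in for the more sophisticated algorithms mentioned above, and the numbers are made up for illustration.

```python
def fuse(est_a, var_a, est_b, var_b):
    """Combine two independent estimates, weighting each by 1/variance."""
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused = (w_a * est_a + w_b * est_b) / (w_a + w_b)
    fused_var = 1.0 / (w_a + w_b)
    return fused, fused_var


# Sensor A: 100 m with variance 4 m^2. Sensor B: 104 m with variance 1 m^2.
# The result is approximately (103.2, 0.8).
print(fuse(100.0, 4.0, 104.0, 1.0))
```

The fused estimate sits closer to the more trustworthy sensor, and the fused variance is smaller than either input variance, which is the basic payoff of fusion.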

Q 10. Explain the Kalman filter and its application in radar tracking.

The Kalman filter is a powerful algorithm used for estimating the state of a dynamic system from a series of noisy measurements. Think of it as a smart prediction tool that continually refines its understanding of a target’s position and velocity. In radar tracking, we use the Kalman filter to predict the target’s future position based on its past trajectory and then update this prediction using the latest radar measurements. This iterative process effectively smooths out the noise in the sensor data, leading to a more accurate and stable track. The filter works by maintaining a state vector representing the target’s position, velocity, and potentially acceleration, along with its associated covariance matrix representing the uncertainty in these estimates. Each new measurement is incorporated by weighting it against the predicted state, with the weighting factor determined by the relative uncertainties of the prediction and the measurement. The algorithm then propagates the updated state forward in time to predict the next position. This recursive process continually improves the accuracy of the state estimate over time. Real-world applications include air traffic control, missile guidance, and autonomous vehicle navigation, where accurate and robust tracking is critical.
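The predict-and-update cycle described above can be sketched for a one-dimensional constant-velocity target with position-only measurements. This is a simplified illustration, not a production tracker: the process noise q is simply added to the diagonal of the covariance, and all parameter values are assumptions.

```python
def kalman_cv_step(x, P, z, dt, q, r):
    """One predict/update cycle. x = [pos, vel], P = 2x2 covariance (nested lists),
    z = measured position, q = simplified process noise, r = measurement variance."""
    # Predict with F = [[1, dt], [0, 1]]: x = F x, P = F P F^T + q * I
    pos = x[0] + dt * x[1]
    vel = x[1]
    p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + q
    p01 = P[0][1] + dt * P[1][1]
    p10 = P[1][0] + dt * P[1][1]
    p11 = P[1][1] + q
    # Update with H = [1, 0]: the gain weighs prediction against measurement
    s = p00 + r            # innovation variance
    k0 = p00 / s           # gain applied to position
    k1 = p10 / s           # gain applied to velocity
    innovation = z - pos
    x_new = [pos + k0 * innovation, vel + k1 * innovation]
    P_new = [[(1.0 - k0) * p00, (1.0 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
    return x_new, P_new


# Start with no knowledge of the target, then feed noise-free positions
# of a target moving at 10 m/s, one measurement per second.
x, P = [0.0, 0.0], [[100.0, 0.0], [0.0, 100.0]]
for k in range(1, 51):
    x, P = kalman_cv_step(x, P, 10.0 * k, dt=1.0, q=0.01, r=1.0)
print(x)
```

Velocity is never measured directly here; the filter infers it from how the position measurements evolve, which is the same mechanism a radar tracker uses.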

Q 11. What are the challenges associated with integrating different sensor modalities?

Integrating different sensor modalities presents a number of challenges. First, there’s the issue of data heterogeneity; different sensors produce data in different formats, with different sampling rates, resolutions, and levels of noise. Harmonizing this data requires careful pre-processing and data fusion techniques. Timing synchronization is another major hurdle. Ensuring that data from different sensors corresponds to the same point in time is crucial for accurate fusion. Small timing discrepancies can lead to significant errors. Data registration, aligning the coordinate systems of different sensors, is also essential, especially when dealing with sensors that have different fields of view. Finally, the increased computational complexity of fusing multiple data streams should not be underestimated; efficient algorithms and hardware are necessary to handle the increased processing load in real-time applications. For instance, choosing an appropriate fusion method (e.g., weighted average, Kalman filter) will depend on the sensor characteristics and desired performance. Overcoming these challenges necessitates careful system design, selecting appropriate sensors and fusion algorithms, and rigorous testing and validation.

Q 12. Describe different types of sensors (e.g., lidar, infrared, sonar) and their applications.

Different sensors offer unique advantages depending on the application. Lidar (Light Detection and Ranging) uses lasers to measure distances, providing highly accurate 3D point cloud data. It’s excellent for autonomous driving, mapping, and surveying. Infrared (IR) sensors detect heat signatures, enabling applications such as thermal imaging for security, medical diagnostics, and target identification in military settings. Sonar (Sound Navigation and Ranging) utilizes sound waves for underwater detection and ranging, crucial for navigation, submarine detection, and oceanographic research. Choosing the right sensor depends on factors such as range, resolution, cost, environmental conditions, and the specific application requirements. For example, while radar is effective for long-range detection, lidar offers superior resolution for close-range object recognition. The combination of these sensors, as in sensor fusion, allows for a more complete and detailed picture of the surroundings.

Q 13. How do you calibrate a radar system?

Radar calibration is a crucial step to ensure accurate measurements. It involves determining and correcting systematic errors in the radar system. This typically involves a multi-step process. First, we calibrate the transmitter, ensuring the emitted signal has the correct power and pulse characteristics. Next, the receiver is calibrated to establish its sensitivity and linearity. This often involves using known signal sources and comparing the measured output to the expected values. Antenna calibration is essential to determine the antenna gain pattern and pointing accuracy. This might involve using a precise antenna positioning system and measuring the received power from known targets at various angles. Finally, we perform system-level calibration to integrate the individual calibrations and determine overall system accuracy. Techniques such as using a known target at a precisely measured distance are common. Regular calibration is necessary to maintain the accuracy of the radar system over time due to factors like temperature variations and component aging. Accurate calibration ensures reliable range, velocity, and angular measurements.

Q 14. How do you handle noisy sensor data?

Noisy sensor data is a ubiquitous problem in radar systems. Several techniques are used to mitigate this. Filtering is a common approach, using techniques like moving average filters or Kalman filters (discussed earlier) to smooth out short-term fluctuations. A moving average filter averages the data over a fixed window, while a Kalman filter weights each new measurement against a model-based prediction; both reduce the impact of noise spikes. Thresholding involves setting a threshold level and discarding any data points below this level. This is effective for removing low-amplitude noise but can lead to loss of information. Outlier rejection identifies and removes data points that are significantly different from the surrounding data. This requires careful selection of criteria to avoid removing valid but unusual data. Signal processing techniques, such as matched filtering and adaptive beamforming, are more advanced methods designed to improve signal-to-noise ratio (SNR). These techniques exploit the characteristics of the desired signal and the noise to enhance the signal while suppressing the noise. The choice of technique depends on the type of noise, the required accuracy, and the available computational resources. The goal is always to enhance the reliability and accuracy of the sensor data while minimizing information loss.
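Two of the techniques above, smoothing and outlier rejection, can be sketched with the Python standard library. The outlier rule used here (distance from the median, measured in scaled median absolute deviations) is one reasonable choice among many, and the threshold k = 3 is an assumption.

```python
from statistics import median


def moving_average(data, window):
    """Mean of each run of `window` consecutive samples."""
    return [sum(data[i:i + window]) / window
            for i in range(len(data) - window + 1)]


def reject_outliers(data, k=3.0):
    """Drop samples farther than k scaled MADs from the median."""
    med = median(data)
    mad = median(abs(v - med) for v in data)
    if mad == 0:
        return list(data)  # no spread to judge against, keep everything
    limit = k * 1.4826 * mad  # 1.4826 scales MAD to a Gaussian standard deviation
    return [v for v in data if abs(v - med) <= limit]


ranges_m = [10.1, 9.9, 10.0, 10.2, 50.0, 9.8, 10.0]
cleaned = reject_outliers(ranges_m)
print(cleaned)                     # the 50.0 spike is dropped
print(moving_average(cleaned, 3))
```

Rejecting outliers before averaging matters: a moving average alone would smear the 50.0 spike across several output samples instead of removing it.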

Q 15. What are the common error sources in radar measurements?

Radar measurements are susceptible to various errors, broadly categorized as systematic and random errors. Systematic errors are consistent and repeatable, stemming from known sources, while random errors are unpredictable and fluctuate.

- Clutter: Ground reflections, weather (rain, snow), birds, and other unwanted echoes can mask the target’s echo, leading to false detections or inaccurate measurements. Imagine trying to hear a whisper in a crowded room – the other voices are the clutter. Mitigation involves signal processing techniques like Moving Target Indication (MTI) and clutter cancellation.

- Multipath Propagation: Signals reflecting off multiple surfaces (e.g., ground and buildings) before reaching the radar can cause interference and distort the target’s range and angle information. Think of echoes bouncing off walls in a large hall. Techniques like space-time adaptive processing (STAP) are used to combat this.

- Atmospheric Effects: Refraction and attenuation of radar signals due to atmospheric conditions (temperature, humidity, pressure) can cause errors in range and angle estimates. This is analogous to light bending as it passes through water.

- Noise: Internal noise in the radar receiver, thermal noise from the environment, and jamming signals can all degrade signal quality and increase measurement uncertainties. This is akin to static on a radio.

- Calibration Errors: Inaccuracies in the radar’s internal calibration can lead to systematic biases in measurements. Regular calibration is crucial for accuracy.

- Quantization Errors: The conversion of analog signals to digital data introduces errors due to the finite resolution of the analog-to-digital converter (ADC). The finer the resolution, the smaller the error.

Understanding and mitigating these error sources is crucial for reliable radar operation. Techniques like advanced signal processing algorithms, careful system design, and regular calibration play a vital role in improving measurement accuracy.

Q 16. Explain the concept of radar cross-section (RCS).

Radar Cross Section (RCS) is a measure of how much of a radar signal a target reflects back towards the radar. It’s essentially the target’s ‘visibility’ to the radar. A larger RCS means more signal is reflected, making the target easier to detect. It’s measured in square meters (m²) and is dependent on several factors:

- Target Shape and Size: A large, flat surface reflects more energy than a small, rounded object. Think of a car versus a ball; the car has a much larger RCS.

- Target Material: The material’s reflectivity impacts how much of the signal is absorbed or reflected. Highly conductive materials generally have larger RCS values.

- Target Orientation: The RCS varies with the target’s aspect angle relative to the radar. A plane will have a different RCS viewed from the front versus from the side.

- Frequency: The RCS can vary with the radar’s operating frequency due to phenomena like resonance.

Calculating the RCS can be complex, often requiring computational electromagnetic (CEM) simulations or measurements. Knowing the RCS is vital for radar system design, predicting detection range, and classifying targets.
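For a few idealized shapes, closed-form RCS approximations exist and illustrate the dependence on shape and frequency described above. The sketch below uses two textbook results: πr² for a conducting sphere much larger than the wavelength, and 4πA²/λ² for a flat conducting plate viewed at normal incidence. Real targets need simulation or measurement, and the function names are hypothetical.

```python
import math


def sphere_rcs_m2(radius_m: float) -> float:
    """RCS of a conducting sphere, valid when radius >> wavelength."""
    return math.pi * radius_m ** 2


def flat_plate_rcs_m2(area_m2: float, wavelength_m: float) -> float:
    """Peak RCS of a flat conducting plate viewed at normal incidence."""
    return 4.0 * math.pi * area_m2 ** 2 / wavelength_m ** 2


def to_dbsm(rcs_m2: float) -> float:
    """Convert RCS in square metres to decibels relative to 1 m^2."""
    return 10.0 * math.log10(rcs_m2)


# A 1 m^2 plate at 3 cm wavelength has an RCS of roughly 14,000 m^2 (about 41 dBsm),
# far more than a sphere with the same 1 m^2 projected area (0 dBsm).
print(flat_plate_rcs_m2(1.0, 0.03), to_dbsm(flat_plate_rcs_m2(1.0, 0.03)))
print(sphere_rcs_m2(math.sqrt(1.0 / math.pi)))
```

The plate's large RCS holds only near normal incidence and falls off sharply with aspect angle, which is the orientation dependence noted in the list above.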

Q 17. What is the difference between active and passive radar systems?

The key difference lies in how they acquire information.

- Active Radar: Transmits its own electromagnetic signals and receives the reflections from targets. It’s like shouting and listening for an echo. Examples include weather radar and air traffic control radar. Active radars offer precise range and velocity information but consume more power and reveal their location.

- Passive Radar: Receives signals transmitted by other sources (e.g., FM radio broadcasts, TV transmissions) and analyzes how these signals are reflected or scattered by targets. It’s like listening to someone else’s conversation and inferring information from the way it changes. Passive radars are stealthy as they don’t emit their own signals, but they usually offer less precise information than active radars and are limited by the availability of suitable illuminators.

Choosing between active and passive systems depends on the specific application’s requirements for stealth, range, accuracy, and power consumption. For example, military applications might favor passive radar for stealth operations, while air traffic control prefers active radar for its precise measurements.

Q 18. Describe the different types of radar waveforms.

Radar waveforms are the modulated signals transmitted by the radar to probe the environment. The choice of waveform greatly influences the radar’s performance. Common types include:

- Continuous Wave (CW): A continuous, unmodulated signal. Used for simple applications like measuring velocity using the Doppler effect, but doesn’t provide range information directly.

- Pulsed Waveforms: Short bursts of energy (pulses) separated by periods of silence. These allow for range measurement by measuring the time delay between transmission and reception. Examples include simple unmodulated pulses and coded pulse trains that vary pulse width or carrier frequency from pulse to pulse.

- Pulse Compression Waveforms: Wideband signals that are compressed at the receiver to achieve high range resolution and improved signal-to-noise ratio. They are similar to pulsed waveforms but utilize sophisticated modulation techniques to achieve better resolution and range accuracy.

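- Chirp Waveforms (FMCW): Frequency-modulated signals where the frequency changes linearly over each sweep. Unlike pulsed waveforms, FMCW transmits continuously and derives range from the frequency difference between the transmitted and received signals. FMCW signals are very efficient for high-resolution radar. They offer both range and velocity information.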

The selection of a waveform depends on the specific requirements of the application, such as range resolution, velocity accuracy, and clutter rejection capability. For example, high-resolution imaging applications would require waveforms with large bandwidths, while long-range detection might benefit from high-energy pulsed waveforms.
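As a small illustration of how an FMCW waveform yields range, the sketch below converts the beat frequency (the difference between transmitted and received frequency) into distance for a linear sawtooth sweep, using R = c·f_b·T / (2B). It assumes a stationary target, since target motion adds a Doppler term to the beat frequency; parameter values are examples only.

```python
C = 299_792_458.0  # speed of light in m/s


def fmcw_range_m(beat_freq_hz: float, sweep_bandwidth_hz: float,
                 sweep_time_s: float) -> float:
    """Range of a stationary target from the beat frequency of a linear sweep."""
    return C * beat_freq_hz * sweep_time_s / (2.0 * sweep_bandwidth_hz)


# A 150 MHz sweep over 1 ms with a 100 kHz beat frequency puts the target near 100 m.
print(fmcw_range_m(100e3, 150e6, 1e-3))
```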

Q 19. Explain the concept of beamforming in phased array radar.

Beamforming in phased array radar allows for electronic steering of the radar beam without mechanically moving the antenna. This is achieved by precisely controlling the phase of the signals transmitted by each element of the antenna array.

By adjusting the phase difference between elements, the radar can electronically steer the beam to different directions. If all elements transmit in phase, the beam points straight ahead. By introducing a phase shift, the beam can be steered to the desired angle. This is analogous to focusing a flashlight with adjustable lenses.

The process involves:

- Phase Shifting: Each antenna element’s signal is phase-shifted according to a calculated pattern to create constructive interference in the desired direction and destructive interference in other directions.

- Signal Summation: The signals from all elements are combined coherently to form the transmitted and received beams.

- Digital Beamforming (DBF): Modern phased arrays often employ DBF where digital signal processors perform the phase shifting and summation, allowing for more flexible and adaptive beam patterns.

Beamforming provides significant advantages over mechanically steered antennas, enabling fast beam switching, electronic scanning, and adaptive beam shaping for enhanced performance in challenging environments. For instance, in air defense systems, beamforming allows for rapid tracking of multiple targets simultaneously.
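The phase-shifting and summation steps above can be sketched for a uniform linear array. This is a minimal model assuming identical isotropic elements with equal spacing; the element count, spacing, and function names are illustrative. Each element n receives a phase of −2π·n·d·sin(θ)/λ so that contributions add in phase toward the steering angle θ.

```python
import cmath
import math


def steering_phases(num_elements, spacing_m, wavelength_m, steer_deg):
    """Per-element phase shifts (radians) that point the beam at steer_deg."""
    k = 2.0 * math.pi / wavelength_m
    s = math.sin(math.radians(steer_deg))
    return [-k * n * spacing_m * s for n in range(num_elements)]


def array_factor(phases, spacing_m, wavelength_m, look_deg):
    """Normalized array response magnitude toward look_deg (1.0 = full gain)."""
    k = 2.0 * math.pi / wavelength_m
    s = math.sin(math.radians(look_deg))
    total = sum(cmath.exp(1j * (k * n * spacing_m * s + p))
                for n, p in enumerate(phases))
    return abs(total) / len(phases)


wavelength = 0.03          # 3 cm, roughly X band
spacing = wavelength / 2   # half-wavelength element spacing
phases = steering_phases(8, spacing, wavelength, steer_deg=30.0)

print(array_factor(phases, spacing, wavelength, 30.0))  # full gain at the steered angle
print(array_factor(phases, spacing, wavelength, 0.0))   # a null at broadside in this case
```

Changing `steer_deg` and recomputing the phases is all it takes to repoint the beam, which is why electronic steering is so much faster than moving a dish.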

Q 20. How do you design a radar system for a specific application?

Designing a radar system for a specific application is a systematic process involving several key steps:

- Define Requirements: Clearly specify the application’s needs, including range, resolution, accuracy, field of view, target types, environment, and power constraints. For example, a weather radar will have different requirements than an automotive radar.

- Select Radar Type: Choose between active or passive radar, considering factors like stealth, cost, and performance requirements.

- Waveform Selection: Select an appropriate waveform based on range and Doppler resolution needs, considering signal-to-noise ratio, clutter characteristics, and the desired detection performance.

- Antenna Design: Design the antenna based on the required beamwidth, gain, sidelobe levels, and scanning requirements. Factors like antenna size, type (e.g., phased array, parabolic reflector), and materials are important considerations.

- Receiver Design: Design the receiver to minimize noise, maximize sensitivity, and achieve the desired signal processing capabilities.

- Signal Processing: Develop algorithms for signal detection, target tracking, clutter rejection, and data interpretation. This is often the most computationally intensive part of the system.

- System Integration: Integrate all components into a functional system, ensuring proper synchronization and calibration. This includes software and hardware integration for optimal performance.

- Testing and Evaluation: Thoroughly test and evaluate the system’s performance under various conditions, comparing its actual capabilities with the defined requirements. This involves both simulations and field tests.

Iterative design is crucial. Simulations and prototypes may require refinement before the final system is realized. The process involves close collaboration between engineers and the end-user to ensure the radar effectively meets the application’s unique challenges.

Q 21. What are the key performance indicators (KPIs) for a radar system?

Key Performance Indicators (KPIs) for a radar system vary depending on the application, but some common ones include:

- Range Resolution: Ability to distinguish between closely spaced targets in range.

- Azimuth and Elevation Resolution: Ability to distinguish between closely spaced targets in angle.

- Detection Range: Maximum distance at which a target can be reliably detected.

- False Alarm Rate: Probability of detecting a non-existent target (noise or clutter).

- Probability of Detection: Probability of correctly detecting a real target.

- Accuracy: Precision of range, angle, and velocity measurements.

- Sensitivity: Ability to detect weak signals from distant or small targets.

- Clutter Rejection Capability: Ability to suppress unwanted reflections from ground, weather, etc.

- Data Rate: Speed at which the radar processes and delivers information.

- Power Consumption: Amount of power consumed by the system.

- Reliability: System’s ability to function without failure for a specified period.

- Size and Weight: Physically relevant for many applications, especially mobile systems.

These KPIs are used to benchmark and compare radar systems, ensuring they meet the desired performance levels. Choosing which KPIs are most relevant will directly depend on the demands of the specific application. For example, high range resolution might be crucial for air traffic control, while high sensitivity would be more important for long-range surveillance.

Q 22. Explain the concept of radar ambiguity.

Radar ambiguity arises when the radar system cannot uniquely determine the range and/or velocity of a target. This happens because the radar signal’s return can be interpreted in multiple ways. Imagine dropping a pebble in a pond; the ripples spread outwards, and if you only see a ripple, you can’t definitively say how far away the pebble was dropped. Similarly, a radar signal’s return may match multiple possible target locations.

Range Ambiguity: This occurs when the pulse repetition frequency (PRF) is too high for the ranges of interest. The radar’s maximum unambiguous range is c/(2·PRF), because an echo must return before the next pulse is transmitted. If a target is beyond this range, its echo arrives after a later pulse has gone out and appears as if it came from a closer range, causing ambiguity. For example, if the PRF allows for an unambiguous range of 100km, and a target is 150km away, the radar may show the target at 50km.

Velocity Ambiguity: This occurs when the PRF is too low for the target’s Doppler shift. The radar samples the Doppler shift once per pulse, at intervals of the pulse repetition interval (PRI), so a Doppler frequency that exceeds half the PRF aliases to an incorrect velocity. This is like measuring the speed of a car going around a track: if you only see it once every few laps, you might misjudge its speed.

Dealing with ambiguity is a trade-off, because raising the PRF extends the unambiguous velocity but shortens the unambiguous range. Common remedies include transmitting at multiple PRFs and comparing the results, using multiple frequency channels, or incorporating a priori knowledge about target speeds and locations.
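The two kinds of ambiguity can be worked through numerically. The sketch below uses the standard relations for unambiguous range, c / (2 × PRF), and unambiguous velocity, wavelength × PRF / 4. The 3 cm wavelength and the 1500 Hz PRF in the velocity example are assumed values chosen only for illustration.

```python
C = 299_792_458.0  # speed of light in m/s

def unambiguous_range(prf_hz):
    """Maximum range whose echo returns before the next pulse is transmitted."""
    return C / (2.0 * prf_hz)

def apparent_range(true_range_m, prf_hz):
    """Range the radar would report once the echo folds into the unambiguous interval."""
    return true_range_m % unambiguous_range(prf_hz)

def unambiguous_velocity(prf_hz, wavelength_m):
    """Largest radial speed whose Doppler shift stays below half the PRF."""
    return wavelength_m * prf_hz / 4.0

def apparent_velocity(true_velocity_mps, prf_hz, wavelength_m):
    """Radial velocity after Doppler aliasing into [-v_max, +v_max)."""
    v_max = unambiguous_velocity(prf_hz, wavelength_m)
    return (true_velocity_mps + v_max) % (2.0 * v_max) - v_max

# Range example from the text: 100 km unambiguous range, target at 150 km.
prf_100km = C / (2.0 * 100e3)
print(f"PRF for 100 km unambiguous range: {prf_100km:.1f} Hz")
print(f"Apparent range of a 150 km target: {apparent_range(150e3, prf_100km) / 1e3:.1f} km")

# Velocity example (assumed values): 3 cm wavelength, 1500 Hz PRF, 15 m/s target.
print(f"Unambiguous velocity: {unambiguous_velocity(1500.0, 0.03):.2f} m/s")
print(f"Apparent velocity of a 15 m/s target: {apparent_velocity(15.0, 1500.0, 0.03):.2f} m/s")
```

In the velocity example, the target's speed exceeds the unambiguous limit, so the reported value wraps around and even changes sign.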

Q 23. How do you deal with multipath propagation in radar systems?

Multipath propagation occurs when the radar signal reaches the receiver by more than one path because it reflects off multiple surfaces along the way. This creates multiple copies of the same signal, arriving at slightly different times and with different amplitudes and phases. The received signal is a superposition of these paths, which distorts the target’s range, velocity, and amplitude estimates.

Several techniques can mitigate multipath effects:

- Space-Time Adaptive Processing (STAP): This advanced technique combines multiple antenna channels and multiple pulses to adaptively suppress clutter and interference, which can include multipath returns. It’s computationally intensive but effective.

- Moving Target Indication (MTI): This method filters out stationary clutter, making the detection of moving targets more resilient to multipath effects. It’s a relatively simple method, but effectiveness depends on the characteristics of the multipath environment.

- Advanced signal processing techniques: Wideband signals and careful waveform design improve range resolution, which makes it easier to separate the direct return from delayed multipath copies.

- Careful site selection: Placing the radar in an environment with minimal multipath reflections can significantly reduce the problem.

The choice of method depends on the specific radar application and the severity of the multipath problem. For instance, STAP may be warranted in high-clutter environments such as maritime radar, while MTI might suffice in less challenging scenarios.
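As a rough illustration of MTI, the sketch below applies a two-pulse canceller to the slow-time samples of a single range cell. It is a simplified model with assumed values: the stationary return (clutter or a reflection from a fixed surface) is a constant complex number, the moving target is a unit-amplitude phasor rotating at its Doppler frequency, and noise is omitted.

```python
import cmath
import math

def two_pulse_canceller(samples):
    """Subtract each pulse's sample from the next one, cancelling returns that do not change."""
    return [samples[n] - samples[n - 1] for n in range(1, len(samples))]

def target_returns(doppler_hz, prf_hz, num_pulses):
    """Unit-amplitude return from a target with the given Doppler shift (idealized model)."""
    return [cmath.exp(2j * math.pi * doppler_hz * n / prf_hz) for n in range(num_pulses)]

prf_hz = 1000.0       # assumed pulse repetition frequency
doppler_hz = 200.0    # assumed target Doppler shift
stationary = 10 + 5j  # assumed return from fixed reflectors, identical on every pulse

clutter_only = [stationary] * 16
pulses = [stationary + t for t in target_returns(doppler_hz, prf_hz, 16)]

print("Clutter only, max output magnitude:",
      max(abs(y) for y in two_pulse_canceller(clutter_only)))
print("Clutter plus target, output magnitude:",
      round(abs(two_pulse_canceller(pulses)[0]), 4))
```

The stationary component cancels while the moving target survives with a gain of 2·|sin(π·f_d/PRF)|. That gain falls to zero when the Doppler shift is a multiple of the PRF (the blind speeds), and multipath from moving reflectors is not removed, which is one reason MTI alone is not always sufficient.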

Q 24. Describe your experience with radar simulation tools.

I have extensive experience using MATLAB and its various toolboxes (e.g., Phased Array System Toolbox, Signal Processing Toolbox) for radar simulation. I’ve utilized these tools to model various radar systems, from simple monostatic pulsed radars to complex multistatic systems with advanced signal processing techniques. This includes:

- System-level simulations: Modeling radar hardware components (antennas, transmitters, receivers), signal propagation, and target characteristics.

- Signal processing simulations: Simulating matched filtering, pulse compression, Doppler processing, and clutter rejection techniques.

- Performance analysis: Evaluating the performance of different radar systems and signal processing algorithms under various operating conditions (e.g., different clutter levels, noise levels, target types).

A recent project involved simulating a weather radar system to evaluate the impact of different PRF choices on range ambiguity resolution. The simulations helped us optimize the system design for accurate precipitation estimation. Specifically, I used the Phased Array System Toolbox to model antenna patterns and the Signal Processing Toolbox to simulate various signal processing algorithms.
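A small study of this kind can be sketched in a few lines. The example below is a hypothetical illustration, not the project code: it folds a true range into the unambiguous interval of two assumed PRFs, then searches for the candidate ranges that agree with both folded measurements. The PRF values, the tolerance, and the function names are assumptions made for the sketch.

```python
C = 299_792_458.0  # speed of light in m/s

def unambiguous_range(prf_hz):
    return C / (2.0 * prf_hz)

def resolve_range(apparent_ranges_m, prfs_hz, max_range_m, tol_m=150.0):
    """Return candidate true ranges consistent with the folded range measured at every PRF."""
    r_ua = [unambiguous_range(prf) for prf in prfs_hz]
    candidates = []
    k = 0
    while apparent_ranges_m[0] + k * r_ua[0] <= max_range_m:
        candidate = apparent_ranges_m[0] + k * r_ua[0]
        consistent = True
        for measured, interval in zip(apparent_ranges_m[1:], r_ua[1:]):
            diff = abs(candidate % interval - measured)
            diff = min(diff, interval - diff)  # distance on the folded (circular) range axis
            if diff > tol_m:
                consistent = False
                break
        if consistent:
            candidates.append(candidate)
        k += 1
    return candidates

prfs_hz = [1500.0, 1300.0]  # assumed PRF pair
true_range_m = 250e3        # assumed target range, beyond both unambiguous ranges
measured = [true_range_m % unambiguous_range(prf) for prf in prfs_hz]
resolved = resolve_range(measured, prfs_hz, max_range_m=400e3)

print("Folded ranges (km):", [round(r / 1e3, 2) for r in measured])
print("Resolved candidates (km):", [round(r / 1e3, 2) for r in resolved])
```

With a single PRF, every multiple of the unambiguous range is an equally valid candidate. The second PRF folds the same target to a different apparent range, so only candidates that match both measurements remain.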

Q 25. What programming languages and tools are you proficient in for radar signal processing?

My primary programming languages for radar signal processing are MATLAB and Python. I’m proficient in using MATLAB’s extensive signal processing and array processing toolboxes for tasks such as FFTs, filter design, beamforming, and target tracking algorithms. Python provides versatility through libraries such as NumPy, SciPy, and Matplotlib, which I utilize for data manipulation, numerical computation, and visualization.

I’ve also used C++ for computationally intensive tasks where performance optimization is crucial, particularly in real-time processing applications. Furthermore, I am familiar with GNU Radio, an open-source software-defined radio framework that can be used to prototype radar signal processing chains.

Beyond the languages, I’m adept at using various tools for data management and analysis, including SQL databases, version control systems (e.g., Git), and collaborative platforms.

Q 26. Explain your experience with radar data analysis and interpretation.

My experience with radar data analysis encompasses a wide range of tasks, from raw data processing to feature extraction and target identification. I routinely work with radar data in various formats, including raw I/Q data, range-Doppler maps, and target tracks. My analysis usually involves:

- Data cleaning and pre-processing: Removing noise, correcting for range and Doppler distortions, and handling missing data.

- Feature extraction: Deriving meaningful features from the radar data, such as target range, velocity, azimuth, elevation, and radar cross-section.

- Target detection and tracking: Using algorithms such as constant false alarm rate (CFAR) detectors and Kalman filters to detect and track targets in the presence of clutter and noise.

- Classification and identification: Using machine learning techniques, such as support vector machines or neural networks, to classify targets based on their radar signatures.

For example, in one project, I analyzed radar data collected from a maritime surveillance system to identify different types of ships based on their size, speed, and maneuvering patterns. This involved extensive data processing and the development of a custom classification algorithm.
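To illustrate the detection step, here is a minimal cell-averaging CFAR sketch over a one-dimensional power profile. It assumes a square-law detector with exponentially distributed noise power, which is what the threshold scaling factor is derived from. The window sizes, the false alarm probability, and the synthetic data are assumptions for the example.

```python
import math

def ca_cfar(power, num_train=8, num_guard=2, pfa=1e-3):
    """Cell-averaging CFAR. num_train and num_guard are counted per side of the cell under test."""
    n_total = 2 * num_train
    # Scaling factor for the desired false alarm probability (exponential noise assumed).
    alpha = n_total * (pfa ** (-1.0 / n_total) - 1.0)
    half = num_train + num_guard
    detections = []
    for i in range(half, len(power) - half):
        leading = power[i - half:i - num_guard]
        trailing = power[i + num_guard + 1:i + half + 1]
        noise_estimate = (sum(leading) + sum(trailing)) / n_total
        if power[i] > alpha * noise_estimate:
            detections.append(i)
    return detections

# Synthetic profile: a gently varying noise floor with one strong return at cell 30.
power = [1.0 + 0.2 * math.sin(i) for i in range(64)]
power[30] = 50.0

print("Detections at cells:", ca_cfar(power))
```

Because the threshold follows the local noise estimate, the same settings adapt to regions with different clutter levels. The guard cells keep the target's own energy out of that estimate.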

Q 27. Describe your experience with testing and validation of radar systems.

My experience in testing and validation of radar systems is extensive and covers various aspects of the system lifecycle. This includes:

- Requirements verification: Ensuring that the radar system meets its specified performance requirements through rigorous testing.

- Environmental testing: Testing the radar’s performance under various environmental conditions (e.g., temperature, humidity, vibration).

- Functional testing: Verifying that all the radar functions operate correctly and as expected.

- Performance testing: Measuring the radar’s performance metrics (e.g., range resolution, velocity accuracy, detection probability).

- Integration testing: Testing the integration of the different radar subsystems.

I’ve utilized both hardware-in-the-loop and software-in-the-loop simulation techniques to test radar systems efficiently and cost-effectively. A significant project involved testing the performance of a phased array radar under different jamming conditions, necessitating the development of customized test procedures and data analysis techniques.

Q 28. How do you stay up-to-date with the latest advancements in radar technology?

Staying current in the rapidly evolving field of radar technology requires a multi-pronged approach:

- Conferences and workshops: Attending conferences such as the IEEE Radar Conference and specialized workshops to learn about the latest research and development.

- Journal publications: Regularly reading leading journals in radar engineering and signal processing, such as the IEEE Transactions on Aerospace and Electronic Systems and the IEEE Transactions on Signal Processing.

- Professional networks: Participating in professional organizations like the IEEE Aerospace and Electronic Systems Society and networking with other radar professionals.

- Online resources: Utilizing online resources such as research databases (e.g., IEEE Xplore), pre-print servers (e.g., arXiv), and online courses to broaden knowledge.

- Industry collaborations: Working on projects with industry partners allows for exposure to real-world applications and emerging technologies.

I believe continuous learning is crucial for success in this dynamic field, and actively seeking out new information is an integral part of my professional development.

Key Topics to Learn for Radar and Sensor Operation Interview

- Fundamentals of Radar Systems: Understanding radar principles, including signal transmission, reflection, and reception; different types of radar (e.g., pulsed, continuous wave); and range, azimuth, and elevation measurements.

- Signal Processing Techniques: Mastering techniques like filtering, matched filtering, pulse compression, and clutter rejection to enhance signal-to-noise ratio and target detection.

- Sensor Fusion and Data Integration: Explore how to combine data from multiple sensors (radar, lidar, camera) to improve accuracy, robustness, and situational awareness. This includes understanding data fusion algorithms and their limitations.

- Target Tracking and Estimation: Learn about Kalman filtering, alpha-beta filters, and other tracking algorithms used to estimate target position, velocity, and trajectory.

- Radar Cross Section (RCS): Understanding how target shape and material properties affect the radar signal return and its implications for detection and identification.

- Practical Applications: Familiarize yourself with real-world applications of radar and sensor systems, such as air traffic control, weather forecasting, autonomous driving, and defense systems. Be prepared to discuss specific use cases and challenges.

- System Design and Implementation: Gain a high-level understanding of the hardware and software components of radar and sensor systems. This includes antenna design, signal generators, receivers, and data processing units.

- Troubleshooting and Problem-Solving: Be ready to discuss your approach to diagnosing and resolving issues in radar and sensor systems, drawing on your experience and analytical skills.

- Emerging Technologies: Stay updated on advancements in radar technology, such as advanced signal processing techniques, AI-based target recognition, and miniaturized sensor designs.
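For the target tracking topic above, an alpha-beta filter is a compact starting point before moving on to Kalman filtering. The sketch below tracks position and velocity along a single axis. The gains, the time step, and the synthetic measurements are assumed values, and a real tracker would also handle noise, missed detections, and maneuvers.

```python
def alpha_beta_track(measurements, dt, alpha=0.5, beta=0.1):
    """Run an alpha-beta filter over position measurements; returns (position, velocity) estimates."""
    x = measurements[0]  # initialize position from the first measurement
    v = 0.0              # no prior velocity knowledge
    estimates = []
    for z in measurements[1:]:
        x_pred = x + v * dt                # predict position one step ahead
        residual = z - x_pred              # innovation: measurement minus prediction
        x = x_pred + alpha * residual      # correct position
        v = v + (beta / dt) * residual     # correct velocity
        estimates.append((x, v))
    return estimates

# Noise-free target starting at 1000 m and moving at a constant 50 m/s, measured once per second.
dt = 1.0
measurements = [1000.0 + 50.0 * k * dt for k in range(101)]
estimates = alpha_beta_track(measurements, dt)

final_position, final_velocity = estimates[-1]
print(f"Final estimate: position {final_position:.1f} m, velocity {final_velocity:.2f} m/s")
```

The fixed gains make the filter simple to tune and cheap to run. A Kalman filter generalizes the idea by computing the gains from models of the process and measurement noise.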

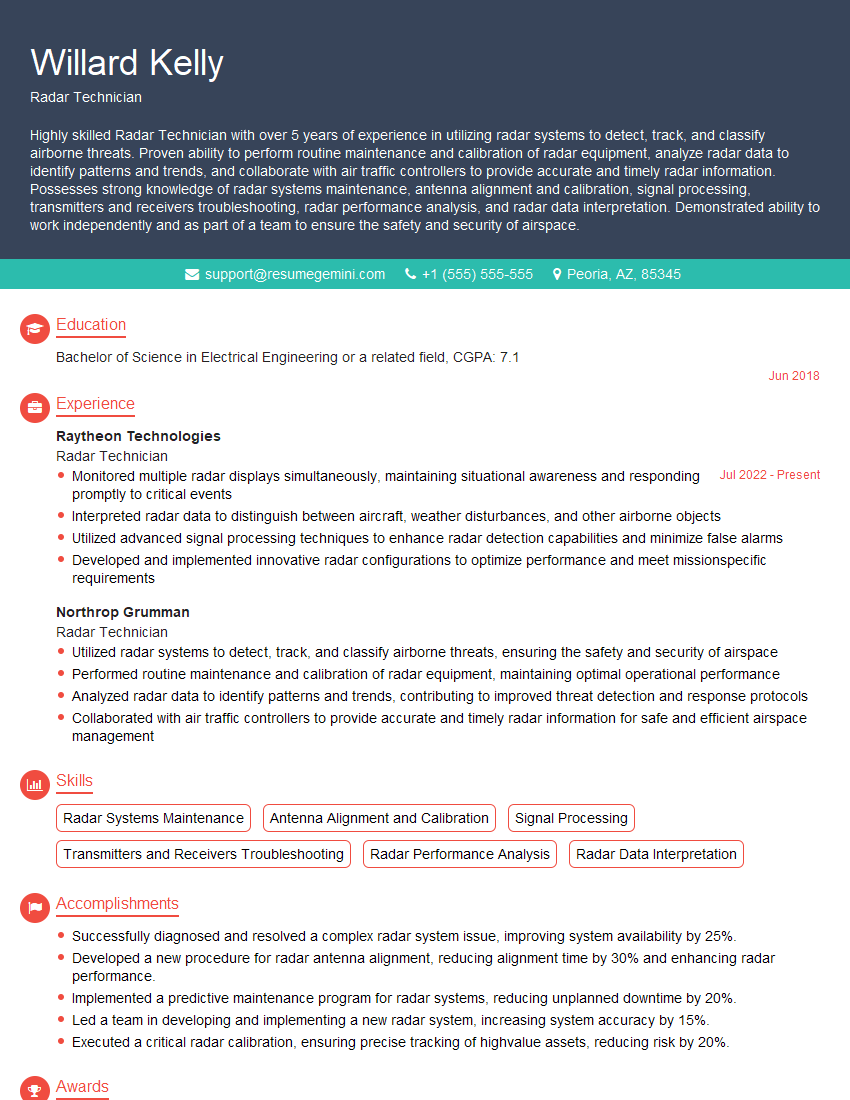

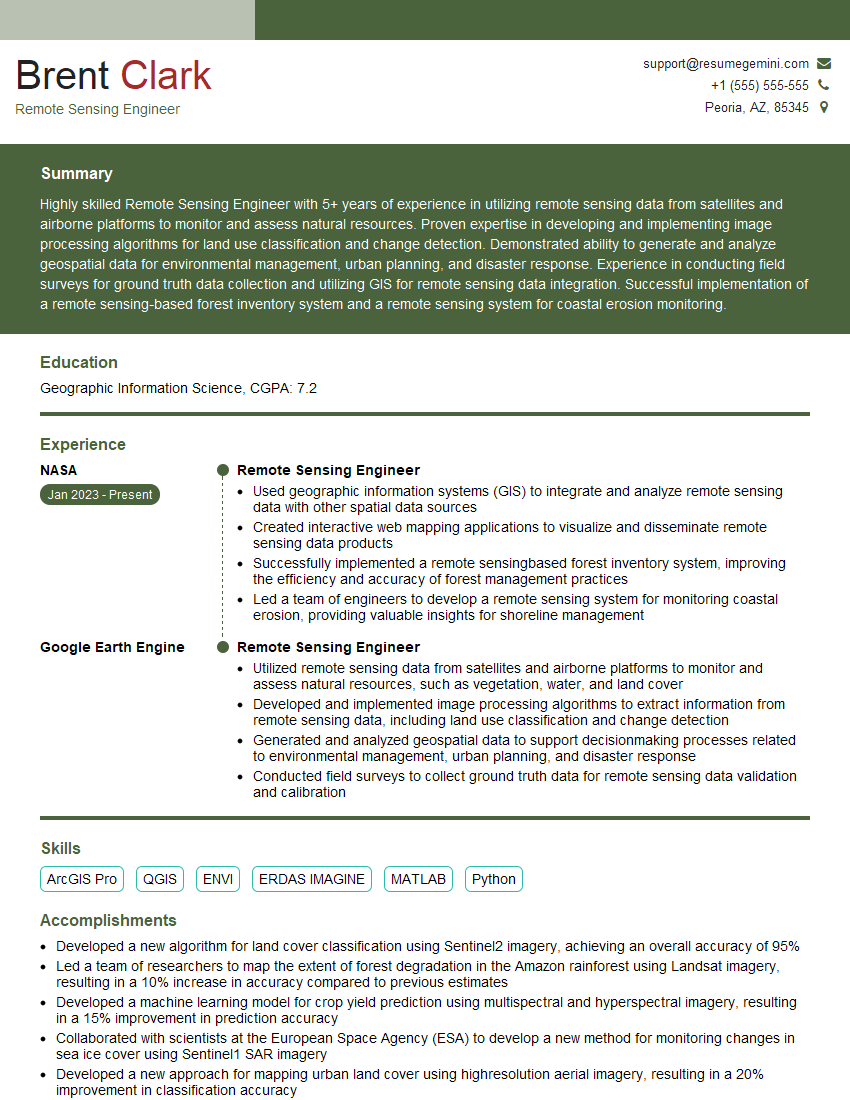

Next Steps

Mastering Radar and Sensor Operation opens doors to exciting and impactful careers in various industries. A strong understanding of these concepts is highly valued by employers. To significantly boost your job prospects, creating an ATS-friendly resume is crucial. This ensures your qualifications are effectively communicated to hiring managers. We recommend using ResumeGemini to build a professional and impactful resume. ResumeGemini provides tools and resources to create a highly effective resume, and offers examples of resumes tailored to Radar and Sensor Operation positions to help you get started.
