Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Reliability Engineering Software (e.g., Weibull++, ReliaSoft) interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Reliability Engineering Software (e.g., Weibull++, ReliaSoft) Interview

Q 1. Explain the difference between Weibull and Exponential distributions in reliability analysis.

Both Weibull and Exponential distributions are used in reliability analysis to model the time-to-failure of a system or component. However, they differ significantly in their flexibility and applicability.

The Exponential distribution is a special case of the Weibull distribution, where the shape parameter (β) is equal to 1. This means it assumes a constant failure rate: the probability that a unit still in operation fails in the next hour is the same regardless of how long it has already been operating. Think of a lightbulb that, as long as it is still working, is just as likely to fail during day 100 as during day 1. This is often a good approximation for components that fail randomly, without any wear-out or infant mortality.

The Weibull distribution is far more versatile. Its shape parameter (β) allows it to model various failure patterns:

- β < 1: Represents infant mortality or early failures. Many failures happen early in the life of the product, likely due to manufacturing defects.

- β = 1: This is the exponential distribution, representing a constant failure rate.

- β > 1: Indicates wear-out failures. Failures become more frequent as the product ages. This is common in mechanical components.

In essence, the Weibull distribution is a more comprehensive model because it can capture a wider range of failure patterns than the simpler exponential distribution. Choosing between the two depends on the nature of the failure mechanism and the data observed.
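To make the three regimes concrete, here is a minimal Python sketch of the two-parameter Weibull hazard (instantaneous failure rate), h(t) = (β/η)(t/η)^(β - 1), where η is the scale parameter (characteristic life). The function name and the example values of β and η are illustrative assumptions.

```python
def weibull_hazard(t: float, beta: float, eta: float) -> float:
    """Instantaneous failure rate of a two-parameter Weibull distribution."""
    return (beta / eta) * (t / eta) ** (beta - 1)


# Compare the hazard at two ages for each shape regime (eta = 1000 hours).
for beta in (0.5, 1.0, 2.5):
    early = weibull_hazard(100.0, beta, 1000.0)
    late = weibull_hazard(900.0, beta, 1000.0)
    if late < early:
        trend = "decreasing (infant mortality)"
    elif late > early:
        trend = "increasing (wear-out)"
    else:
        trend = "constant (exponential)"
    print(f"beta={beta}: h(100)={early:.6f}, h(900)={late:.6f} -> {trend}")
```

With β = 1 the expression reduces to the constant 1/η, which is exactly the exponential failure rate λ.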

Q 2. How do you perform a Weibull analysis using Weibull++?

Performing a Weibull analysis in Weibull++ is a straightforward process, largely driven by the type of data you have. Let’s assume we’re dealing with complete data (all units failed). Here’s a simplified outline:

- Data Entry: Input your failure data into Weibull++. This typically involves specifying the time-to-failure for each unit. You might have columns for unit number, failure time, and any censoring information.

- Distribution Selection: Choose the Weibull distribution from the available options. Weibull++ can also help you compare candidate distributions by goodness of fit, but the final choice is yours and should reflect what you know about the failure mechanism.

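- Analysis: Select the parameter estimation method. Common methods include Maximum Likelihood Estimation (MLE) and Rank Regression. MLE is generally preferred for larger or heavily censored datasets, while rank regression is often used for small, complete samples; check the analysis settings to see which method your version of Weibull++ uses by default.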

- Parameter Estimation: Weibull++ will then estimate the parameters of the Weibull distribution: the scale parameter (η), which represents the characteristic life, and the shape parameter (β), as explained before. It will also calculate the confidence intervals for these parameters, giving you an idea of the uncertainty in the estimates.

- Results Interpretation: Examine the results, paying particular attention to the shape parameter (β) and the estimated characteristic life (η). These tell you a lot about the nature of your failures.

- Visualization: Weibull++ provides various plots to visualize the data and the fitted Weibull distribution, allowing for an intuitive understanding of your data.

For censored data (some units haven’t failed yet), the process is similar, but you need to correctly specify the censoring type (right, left, or interval). Weibull++ handles censoring automatically once this information is properly entered.
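Weibull++ performs the fitting internally, but the underlying maximum likelihood calculation is compact enough to sketch. The Python example below (standard library only) solves the textbook MLE equations for a two-parameter Weibull with right-censored (suspended) units. It is not Weibull++'s implementation: the function name `weibull_mle` and the simulated test are illustrative assumptions, and the sketch omits the confidence bounds the software reports.

```python
import math
import random


def weibull_mle(times, failed):
    """Fit a two-parameter Weibull distribution by maximum likelihood.

    times  -- failure or suspension time of every unit (all > 0)
    failed -- True if the unit failed, False if it was right-censored
    Returns (beta, eta).
    """
    logs = [math.log(t) for t in times]
    fail_logs = [lg for lg, f in zip(logs, failed) if f]
    r = len(fail_logs)
    if r == 0:
        raise ValueError("at least one failure is required")
    mean_fail_log = sum(fail_logs) / r
    max_log = max(logs)  # used to rescale t**beta and avoid overflow

    def score(beta):
        # Profile likelihood equation for beta (increasing in beta; its root is the MLE):
        # sum(t^b * ln t) / sum(t^b) - 1/b - mean(ln t over failures) = 0
        w = [math.exp(beta * (lg - max_log)) for lg in logs]
        weighted_mean_log = sum(wi * lg for wi, lg in zip(w, logs)) / sum(w)
        return weighted_mean_log - 1.0 / beta - mean_fail_log

    # Bracket the root, then bisect.
    lo, hi = 1e-3, 1.0
    while score(hi) < 0:
        hi *= 2.0
        if hi > 1e4:
            raise ValueError("shape parameter did not converge; check the data")
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if score(mid) < 0:
            lo = mid
        else:
            hi = mid
    beta = 0.5 * (lo + hi)

    # Closed-form scale given beta: eta^beta = sum(t^beta) / r (computed in rescaled form).
    weight_sum = sum(math.exp(beta * (lg - max_log)) for lg in logs)
    eta = math.exp(max_log + math.log(weight_sum / r) / beta)
    return beta, eta


# Usage: simulate 500 units from Weibull(beta=2, eta=1000 h) and suspend every
# unit still running at 1200 h (a time-terminated test with right censoring).
random.seed(42)
raw = [random.weibullvariate(1000.0, 2.0) for _ in range(500)]
times = [min(t, 1200.0) for t in raw]
failed = [t < 1200.0 for t in raw]
beta_hat, eta_hat = weibull_mle(times, failed)
print(f"beta ~ {beta_hat:.2f}, eta ~ {eta_hat:.0f} h, failures = {sum(failed)}")
```

Note that suspended units contribute to the sums over all units but not to the count of failures r, which is how the censoring information enters the estimate.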

Q 3. Describe the different types of censoring in reliability data and how they affect analysis.

Censoring in reliability data refers to situations where you don’t observe the exact failure time for all units in your study. This is extremely common. There are several types:

- Right Censoring: This is the most common type. You only know that a unit survived beyond a certain time, but you don’t know its exact failure time. For example, a test is stopped before all units fail, or a unit is still operating at the time of analysis.

- Left Censoring: Here, you only know that a unit failed before a specific time but not the exact failure time. This is less frequent than right censoring in reliability studies.

- Interval Censoring: You only know that the unit failed within a specific time interval. For example, inspections occur at regular intervals and a failure is discovered to have happened somewhere within that interval.

Impact on Analysis: Ignoring censoring biases the results, so appropriate statistical methods are crucial. Software like Weibull++ incorporates censoring information into the analysis, providing more reliable estimates of the distribution parameters. For example, discarding units that had not yet failed, or treating their suspension times as failure times, typically underestimates the mean time to failure and gives a skewed picture of the product’s reliability.
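A small numeric sketch shows the direction of the bias. It assumes a constant failure rate (exponential model), for which the maximum likelihood MTTF estimate with right-censored data is the total time on test (failures plus suspensions) divided by the number of failures. The ten test times are made up for illustration.

```python
def exponential_mttf(times, failed):
    """MLE of MTTF under a constant failure rate with right censoring:
    total time on test divided by the number of failures."""
    r = sum(failed)
    if r == 0:
        raise ValueError("no failures observed")
    return sum(times) / r


# Hypothetical test: 10 units, stopped at 1000 h with 4 units still running.
times = [150.0, 340.0, 420.0, 610.0, 790.0, 880.0, 1000.0, 1000.0, 1000.0, 1000.0]
failed = [True] * 6 + [False] * 4

correct = exponential_mttf(times, failed)
dropped = exponential_mttf(times[:6], failed[:6])   # survivors discarded
as_failures = exponential_mttf(times, [True] * 10)  # suspensions treated as failures
print(f"with censoring: {correct:.0f} h, dropping survivors: {dropped:.0f} h, "
      f"suspensions as failures: {as_failures:.0f} h")
```

Both shortcuts give a noticeably lower MTTF than the estimate that accounts for the four suspended units.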

Q 4. What are the key assumptions of a Weibull distribution?

The key assumptions of a Weibull distribution in reliability analysis include:

- Constant failure rate and memoryless property (only if β = 1): These are not general Weibull assumptions but properties of the exponential special case. With β = 1 the failure rate is constant, and the probability of failure in the future is independent of the unit’s past operating time. For β ≠ 1 the failure rate changes with age, so the unit’s history matters.

- Data independence: The failures of individual units are independent of each other. This is violated if, say, the failure of one unit triggers the failure of others.

- Constant operating conditions: The operating conditions (stress, temperature, etc.) remain constant throughout the study period. Changes to these conditions might affect the failure rate.

- Data represent a homogeneous population: This means the units being tested are statistically similar and all have the same potential reliability.

Violating these assumptions can lead to inaccurate results. Therefore, careful consideration should be given to data selection and analysis techniques to ensure they align with these assumptions and provide valid reliability estimations.

Q 5. How do you determine the appropriate distribution for your reliability data?

Choosing the appropriate distribution is crucial for accurate reliability predictions. There’s no single ‘best’ method, but a combination of techniques is generally recommended:

- Visual Inspection of Data: Plot your time-to-failure data (possibly a probability plot). The shape of the plot can offer initial clues about the distribution. Does it seem to show a constant, increasing, or decreasing failure rate?

- Goodness-of-Fit Tests: Software like Weibull++ performs statistical goodness-of-fit tests (e.g., Chi-squared, Kolmogorov-Smirnov). These tests assess how well different distributions fit your data and provide a statistical measure of the fit.

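- Hazard Plot: This plot displays the failure rate over time. A constant hazard rate points to the exponential distribution, while a steadily increasing or decreasing trend is consistent with a Weibull distribution (although other distributions, such as the lognormal or gamma, can produce similar trends).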

- Engineering Judgement: Consider the underlying physics of failure. Is it likely that the component fails randomly (exponential), gradually wears out (Weibull with β>1), or has significant early failures (Weibull with β<1)?

- Consider alternative distributions: Other distributions like lognormal and gamma may also be appropriate depending on your data characteristics. Weibull is very popular and often a good starting point, but exploration is warranted.

Remember: the best-fitting distribution is not necessarily the most realistic one. Sometimes, the simpler model provides sufficient accuracy while avoiding unnecessary complexity.
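The probability-plot check can be sketched in a few lines. Rank regression, one of the fitting methods mentioned in Q2, plots the linearized Weibull CDF, ln(-ln(1 - F)), against ln(t) using median ranks; points that fall on a straight line (correlation coefficient near 1) support the Weibull model, and the slope is β. This Python sketch assumes complete (uncensored) data and uses Benard's median-rank approximation; the function name and data values are illustrative.

```python
import math


def weibull_rank_regression(failure_times):
    """Estimate Weibull (beta, eta) from complete data by rank regression on Y.

    Uses Benard's median ranks F_i = (i - 0.3) / (n + 0.4) and the linearization
    ln(-ln(1 - F)) = beta * ln(t) - beta * ln(eta).
    Returns (beta, eta, rho), where rho is the correlation coefficient of the plot.
    """
    t = sorted(failure_times)
    n = len(t)
    x = [math.log(ti) for ti in t]
    y = [math.log(-math.log(1 - (i - 0.3) / (n + 0.4))) for i in range(1, n + 1)]
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    beta = sxy / sxx                  # slope of the probability plot
    intercept = my - beta * mx        # equals -beta * ln(eta)
    eta = math.exp(-intercept / beta)
    rho = sxy / math.sqrt(sxx * syy)  # close to 1 => points lie on a straight line
    return beta, eta, rho


data = [52.0, 88.0, 115.0, 140.0, 171.0, 210.0, 264.0]  # hypothetical failure hours
beta, eta, rho = weibull_rank_regression(data)
print(f"beta={beta:.2f}, eta={eta:.0f} h, rho={rho:.3f}")
```

A low correlation coefficient, or a visibly curved plot, is a cue to try other distributions before relying on the fitted parameters.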

Q 6. Explain the concept of Mean Time Between Failures (MTBF).

Mean Time Between Failures (MTBF) is a key metric in reliability engineering representing the average time between successive failures of a repairable system. It’s an indicator of the system’s reliability – a higher MTBF means the system is more reliable and is likely to operate for longer periods without failure. For example, an aircraft engine might have an MTBF measured in thousands of flight hours.

It’s crucial to note that MTBF applies only to repairable systems. For non-repairable systems (those that are discarded after failure), the appropriate metric is the Mean Time To Failure (MTTF).

MTBF is often used for:

- Predicting maintenance schedules: A high MTBF allows for longer intervals between scheduled maintenance.

- Comparing the reliability of different systems: Comparing MTBF values allows for objective comparisons of reliability between different designs or manufacturers.

- Evaluating the effectiveness of improvement initiatives: Tracking changes in MTBF can reveal if design or maintenance improvements have had a positive impact on reliability.

Q 7. How do you calculate MTBF using ReliaSoft?

Calculating MTBF in ReliaSoft is generally done as part of a reliability analysis, and the specific steps depend on the chosen analysis method and the type of data. Here’s a simplified overview:

- Data Entry: Input your failure and repair data into ReliaSoft. This might include information on the time of each failure and the time taken for repair.

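- Analysis Type Selection: Choose an analysis method that matches the nature of your data, such as a homogeneous Poisson process (constant failure intensity) or a Non-Homogeneous Poisson Process (NHPP), for example the power-law (Crow-AMSAA) model, when the failure intensity changes over time.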

- MTBF Calculation: ReliaSoft calculates the MTBF from the chosen model and the entered data, typically along with its confidence bounds. Under an NHPP the MTBF changes over time, so results are usually reported as a cumulative and an instantaneous MTBF at a specified time.

- Results Review: Review the MTBF estimate and any associated statistics, such as confidence bounds. The software may present additional metrics and plots that can provide a broader context of the system’s reliability.

ReliaSoft provides a variety of options and the exact steps may differ slightly based on the specific software version and the type of reliability study you are performing. The software’s documentation should offer detailed guidance.
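As an illustration of what an NHPP-based MTBF calculation involves, here is a Python sketch of the power-law (Crow-AMSAA) model, a common NHPP for repairable systems. It assumes a single system observed from time 0 to a fixed end time (a time-terminated observation), with the cumulative operating time recorded at each failure. The function name and failure times are hypothetical, and confidence bounds are omitted.

```python
import math


def crow_amsaa(failure_times, end_time):
    """Power-law NHPP (Crow-AMSAA) MLE for one system observed from 0 to end_time.

    Assumes time-terminated observation and cumulative failure times in (0, end_time].
    Returns (beta, lam, cumulative_mtbf, instantaneous_mtbf) evaluated at end_time.
    """
    n = len(failure_times)
    beta = n / sum(math.log(end_time / t) for t in failure_times)
    lam = n / end_time ** beta
    cumulative_mtbf = end_time / n
    # Failure intensity u(T) = lam * beta * T**(beta - 1); the instantaneous MTBF is 1 / u(T).
    instantaneous_mtbf = 1.0 / (lam * beta * end_time ** (beta - 1))
    return beta, lam, cumulative_mtbf, instantaneous_mtbf


times = [120.0, 410.0, 730.0, 1500.0, 2100.0, 3400.0]  # hypothetical cumulative hours
beta, lam, cum_mtbf, inst_mtbf = crow_amsaa(times, 4000.0)
print(f"beta={beta:.2f}, cumulative MTBF={cum_mtbf:.0f} h, "
      f"instantaneous MTBF={inst_mtbf:.0f} h")
```

A fitted β below 1 means the time between failures is growing (reliability is improving), β above 1 indicates deterioration, and β = 1 reduces to a constant-rate (homogeneous Poisson) process in which the two MTBF values coincide.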

Q 8. What is a reliability block diagram and how is it used?

A Reliability Block Diagram (RBD) is a graphical representation of a system’s reliability. It shows how individual components or subsystems are connected to achieve the overall system function. Each component is depicted as a block, and the connections between them illustrate the system’s architecture. Think of it like a flowchart for reliability, where the paths represent the ways the system can succeed: the system works as long as at least one unbroken path of working blocks connects the input to the output. In a purely series arrangement, the failure of any block breaks that path and the system fails.

How it’s used: RBDs are crucial for identifying critical components (those whose failure directly leads to system failure) and for calculating system reliability from component reliabilities (using series, parallel, or more complex combinations of configurations). They complement fault tree analysis (FTA), which works top-down from a system failure to systematically identify its potential causes. For example, imagine a simple light switch circuit. The RBD would show the power source, the switch, the wiring, and the light bulb, all connected in series. If any one of these fails, the whole system fails and the light does not turn on.

Practical Application: In aerospace, RBDs are essential for analyzing the reliability of complex systems like aircraft or rockets. Identifying critical components allows engineers to focus on improving their reliability and redundancy to enhance overall mission success.
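The series and parallel rules behind these calculations are short enough to sketch. This Python example assumes that block failures are independent, and the reliability values for the light-switch circuit are hypothetical; dedicated RBD software also handles configurations (such as k-out-of-n blocks and shared components) that these two functions do not.

```python
from math import prod


def series(*reliabilities: float) -> float:
    """All blocks must work: R = R1 * R2 * ... * Rn."""
    return prod(reliabilities)


def parallel(*reliabilities: float) -> float:
    """At least one block must work: R = 1 - (1 - R1)(1 - R2)...(1 - Rn)."""
    return 1.0 - prod(1.0 - r for r in reliabilities)


# Light-switch circuit from the text, with hypothetical mission reliabilities.
power, switch, wiring, bulb = 0.99, 0.98, 0.999, 0.95
print(f"series circuit: {series(power, switch, wiring, bulb):.4f}")
# Adding a second, redundant bulb in parallel with the first.
print(f"with redundant bulb: {series(power, switch, wiring, parallel(bulb, bulb)):.4f}")
```

The series result is always lower than the weakest block, while redundancy raises reliability only for the block it duplicates; here the switch becomes the limiting component once the bulb is doubled.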

Q 9. Explain the concept of Failure Rate and its significance.

Failure rate (λ), often expressed as failures per unit time (e.g., failures per million hours), quantifies how frequently a component or system fails during operation; for units still in service, λ multiplied by a short time interval approximates the probability of failing within that interval. It’s a crucial metric in reliability engineering because it directly reflects the component’s dependability: a higher failure rate means a higher likelihood of failure.

Significance: Understanding the failure rate allows us to predict the system’s lifespan, determine optimal maintenance intervals, and assess the overall risk associated with the system’s operation. It forms the foundation for many reliability analyses, such as predicting the time to failure using exponential, Weibull, or other distributions. For instance, a high failure rate in a critical component of a medical device could lead to redesign and improved quality control.
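When the failure rate is constant (the exponential case from Q1), λ converts directly into reliability and MTTF through R(t) = exp(-λt) and MTTF = 1/λ. A minimal Python sketch, assuming a constant rate and a hypothetical component rated at 10 failures per million hours:

```python
import math


def reliability_constant_rate(fpmh: float, hours: float) -> float:
    """R(t) = exp(-lambda * t) for a constant failure rate given in failures per million hours."""
    lam = fpmh / 1e6  # convert to failures per hour
    return math.exp(-lam * hours)


fpmh = 10.0                      # hypothetical component: 10 failures per million hours
mttf_hours = 1e6 / fpmh          # MTTF = 1 / lambda under a constant rate
one_year = reliability_constant_rate(fpmh, 8760.0)
print(f"MTTF = {mttf_hours:,.0f} h, R(1 year of continuous use) = {one_year:.4f}")
```

A unit operated for exactly one MTTF has only about a 37% (exp(-1)) chance of surviving, which is why MTTF should not be read as a guaranteed life.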

Q 10. Describe different reliability testing methods (e.g., accelerated life testing).

Several reliability testing methods exist, each suitable for different circumstances:

- Life Testing: Units are operated under normal conditions until failure. This provides real-world data but can be time-consuming and expensive.

- Accelerated Life Testing (ALT): Units are subjected to higher-than-normal stress levels (temperature, voltage, vibration) to induce failures faster. Statistical methods extrapolate results to predict reliability under normal conditions. This drastically reduces testing time but requires careful design to ensure the accelerated stress accurately reflects the failure mechanisms under normal use. For example, hard drives might be run at high temperatures to accelerate wear-out failures.

- Step-Stress Testing: A variant of ALT where the stress level is increased in steps during the test.

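- Censored and Progressively Censored Testing: The test is stopped before all units fail, either at a fixed time (Type I) or after a fixed number of failures (Type II), saving time and resources; in progressive censoring, some surviving units are also withdrawn at intermediate points. Statistical analysis adjusts for the incomplete data.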

Choosing the right method: The selection depends on factors such as the time available, the cost of testing, and the nature of the failure mechanisms involved. For instance, if units are expected to last for years under normal use, testing them to failure at normal conditions is impractical, making ALT more appropriate.

Q 11. How do you analyze reliability data with multiple failure modes?

Analyzing reliability data with multiple failure modes requires a structured approach. We can’t simply treat them as a single failure mode, because the underlying mechanisms and distributions might differ.

Methods:

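- Competing Risks Analysis: This method models the failure modes as independent competing risks. Each mode is given its own life distribution, estimated by treating failures from the other modes as suspensions. Because a unit survives only if it survives every mode, the overall reliability is the product of the mode reliabilities, R(t) = R1(t) × R2(t) × ... × Rk(t), and the overall failure rate is the sum of the mode failure rates.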

- Cause-and-Effect Diagrams (Fishbone Diagrams): These are used to identify potential failure causes for each mode, organizing them by category (materials, design, manufacturing, etc.).

- Failure Mode and Effects Analysis (FMEA): FMEA goes further than cause-and-effect diagrams by quantifying the risk for each identified failure mode. It uses a Risk Priority Number (RPN) to prioritize the most critical issues for corrective action.

Software like Weibull++ and ReliaSoft offer tools to model competing risks and conduct FMEA, making analysis more efficient.
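Under the usual assumption that the modes are independent, the competing-risks arithmetic is simple: reliabilities multiply and hazards add. The Python sketch below combines two hypothetical Weibull modes (the mode names and parameter values are made up for illustration) and reports which mode dominates the hazard at a few ages.

```python
import math


def weibull_reliability(t: float, beta: float, eta: float) -> float:
    return math.exp(-((t / eta) ** beta))


def weibull_hazard(t: float, beta: float, eta: float) -> float:
    return (beta / eta) * (t / eta) ** (beta - 1)


# Hypothetical modes as (beta, eta): early solder-joint defects and later bearing wear-out.
modes = {"solder joint": (0.8, 20000.0), "bearing wear": (3.0, 5000.0)}


def system_reliability(t: float) -> float:
    """Independent competing modes: the unit survives only if it survives every mode."""
    return math.prod(weibull_reliability(t, b, e) for b, e in modes.values())


def system_hazard(t: float) -> float:
    """Under independence, the hazards of competing modes add."""
    return sum(weibull_hazard(t, b, e) for b, e in modes.values())


for t in (500.0, 2000.0, 4000.0):
    dominant = max(modes, key=lambda m: weibull_hazard(t, *modes[m]))
    print(f"t={t:>6.0f} h: R={system_reliability(t):.4f}, "
          f"h={system_hazard(t):.2e}/h, dominant mode: {dominant}")
```

Knowing which mode dominates at the ages that matter tells you where corrective action will have the most effect.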

Q 12. How do you perform a reliability prediction using Weibull++ or ReliaSoft?

Reliability prediction using Weibull++ or ReliaSoft involves these steps:

- Data Entry: Input failure data (times to failure, censored data if any) for the component or system of interest.

- Distribution Fitting: Fit a statistical distribution (often Weibull) to the data using maximum likelihood estimation or other methods. Software tools provide automatic fitting procedures and goodness-of-fit tests (e.g., Chi-square test, Anderson-Darling test).

- Parameter Estimation: Determine the parameters of the fitted distribution (e.g., shape parameter (β), scale parameter (η)). These parameters describe the failure behavior.

- Reliability Prediction: Use the fitted distribution to predict reliability metrics at various times. This can include reliability, failure rate, and MTTF (Mean Time To Failure).

- Confidence Bounds: Calculate confidence bounds to account for uncertainty in the data and parameter estimation. This provides a range of likely values for the reliability metrics.

Example (Conceptual): In Weibull++, you’d import your data, select the Weibull distribution, perform fitting, and then use the software’s built-in functions to calculate the reliability at a specific time, say, 10,000 hours. The software then provides the reliability value and its associated confidence interval.
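For readers who want to see the arithmetic behind the prediction step, this Python sketch computes point estimates of reliability, hazard rate, MTTF, and a B-life (the time by which a given percentage of units is expected to fail) from fitted Weibull parameters. The parameter values are hypothetical, and the sketch deliberately omits the confidence bounds the software would add.

```python
import math


def weibull_metrics(beta: float, eta: float, mission_time: float, b_percent: float = 10.0):
    """Point estimates from a fitted two-parameter Weibull distribution."""
    reliability = math.exp(-((mission_time / eta) ** beta))
    hazard = (beta / eta) * (mission_time / eta) ** (beta - 1)
    mttf = eta * math.gamma(1.0 + 1.0 / beta)  # mean of the Weibull distribution
    # B-life: time by which b_percent of the population is expected to fail.
    b_life = eta * (-math.log(1.0 - b_percent / 100.0)) ** (1.0 / beta)
    return {"R": reliability, "h": hazard, "MTTF": mttf, f"B{b_percent:g}": b_life}


# Hypothetical fitted parameters (beta = 1.8, eta = 25,000 h), evaluated at 10,000 hours.
print(weibull_metrics(1.8, 25000.0, 10000.0))
```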

Q 13. What are the limitations of Weibull analysis?

While Weibull analysis is a powerful tool, it has limitations:

- Monotonic failure rate: A single Weibull distribution has a failure rate that is decreasing (β < 1), constant (β = 1), or increasing (β > 1) over the entire life, so it cannot capture a full bathtub curve or failure behavior that changes across life phases, such as infant mortality followed by wear-out. Mixed Weibull or competing-failure-mode models are needed for those cases.

- Data Requirements: Accurate parameter estimation requires sufficient data. With small datasets, parameter estimation might be inaccurate and lead to misleading conclusions.

- Model Selection: Choosing the correct distribution (Weibull is not always appropriate) is crucial for accurate analysis. Incorrect distribution selection can lead to biased results.

- Censoring Issues: Correct handling of censored data (when the exact time of failure is unknown) is crucial. Incorrect treatment of censored data can bias results.

Despite these limitations, Weibull analysis remains widely used due to its flexibility and ability to handle various failure patterns. However, one should be mindful of its assumptions and limitations when interpreting results.

Q 14. What is a hazard rate and how is it interpreted?

The hazard rate (h(t)), also called the instantaneous failure rate, is the rate of failure at time t, given that the unit has survived up to time t; for a short interval Δt, h(t)Δt approximates the probability of failing between t and t + Δt. It represents the risk of immediate failure at a particular point in time. Unlike failure rate, which is often averaged over an interval, hazard rate is instantaneous.

Interpretation: A high hazard rate indicates a high risk of failure in the next short interval for units that have survived to that time. A bathtub curve illustrates this concept, showing the hazard rate decreasing initially (infant mortality), then staying relatively constant (useful life), and finally increasing (wear-out). For example, a high hazard rate at the beginning of the product lifespan suggests potential issues in manufacturing or design. Software tools can plot the hazard rate to help you better understand the different stages of the failure process.
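The conditional-probability reading of the hazard rate can be checked numerically: the probability of failing in [t, t + Δt) given survival to t, divided by Δt, approaches h(t) as Δt shrinks. This Python sketch assumes a Weibull model with hypothetical parameters.

```python
import math


def weibull_reliability(t, beta, eta):
    return math.exp(-((t / eta) ** beta))


def weibull_hazard(t, beta, eta):
    return (beta / eta) * (t / eta) ** (beta - 1)


def conditional_failure_prob(t, dt, beta, eta):
    """P(fail in [t, t + dt) | survived to t) = 1 - R(t + dt) / R(t)."""
    return 1.0 - weibull_reliability(t + dt, beta, eta) / weibull_reliability(t, beta, eta)


beta, eta, dt = 2.5, 1000.0, 1.0  # hypothetical wear-out mode, 1-hour window
for t in (200.0, 800.0, 1500.0):
    approx = conditional_failure_prob(t, dt, beta, eta) / dt
    print(f"t={t:>6.0f}: h(t)={weibull_hazard(t, beta, eta):.3e}, "
          f"P(fail in next hour | survived)/dt={approx:.3e}")
```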

Q 15. Explain the concept of confidence bounds in reliability analysis.

Confidence bounds in reliability analysis represent the uncertainty associated with our estimates of reliability parameters. Instead of providing a single point estimate (e.g., the mean time to failure), we calculate an interval within which the true value is likely to fall, with a specified level of confidence. Imagine you’re aiming for a bullseye: a point estimate is a single shot, while confidence bounds are a circle drawn around the shot by a method that, over many repeated attempts, encloses the true center a specified percentage of the time.

For example, a 95% confidence interval for the mean time to failure (MTTF) of a component means that if we were to repeat the experiment many times, 95% of the calculated confidence intervals would contain the true population MTTF. The width of the interval reflects the precision of our estimate: a wider interval indicates greater uncertainty. Software like Weibull++ and ReliaSoft readily calculate these confidence bounds using different methods (e.g., Fisher matrix bounds based on a normal approximation, or likelihood ratio bounds).

In practice, confidence bounds are crucial for making informed decisions. For example, if the lower bound of a 90% confidence interval for the MTTF of a critical component is below the required operational lifespan, we know there’s a significant risk, even if the point estimate suggests otherwise. This drives us to investigate further, perhaps through more testing or design improvements.
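The decision rule above can be sketched for the simplest case. This Python example assumes a constant failure rate and uses a large-sample normal approximation on the log scale (the standard error of ln(MTTF estimate) is 1/sqrt(r) for r failures), in the spirit of Fisher matrix bounds; with few failures, exact chi-squared bounds are more appropriate. The test totals and the requirement are hypothetical.

```python
import math
from statistics import NormalDist


def exponential_mttf_bounds(total_time: float, failures: int, confidence: float = 0.90):
    """Two-sided (lower, point, upper) MTTF bounds for a constant failure rate,
    using the normal approximation of ln(MTTF_hat) with standard error 1/sqrt(r)."""
    mttf_hat = total_time / failures
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    spread = math.exp(z / math.sqrt(failures))
    return mttf_hat / spread, mttf_hat, mttf_hat * spread


required_life = 3500.0  # hypothetical requirement in hours
lower, point, upper = exponential_mttf_bounds(50_000.0, 10, confidence=0.90)
print(f"MTTF = {point:.0f} h, 90% bounds = ({lower:.0f}, {upper:.0f}) h")
if lower < required_life <= point:
    print("Point estimate meets the requirement, but the lower bound does not.")
```

With these numbers the point estimate (5,000 h) exceeds the 3,500 h requirement, but the lower bound (about 2,970 h) does not, which is exactly the situation that calls for more testing or design work.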

Career Expert Tips:

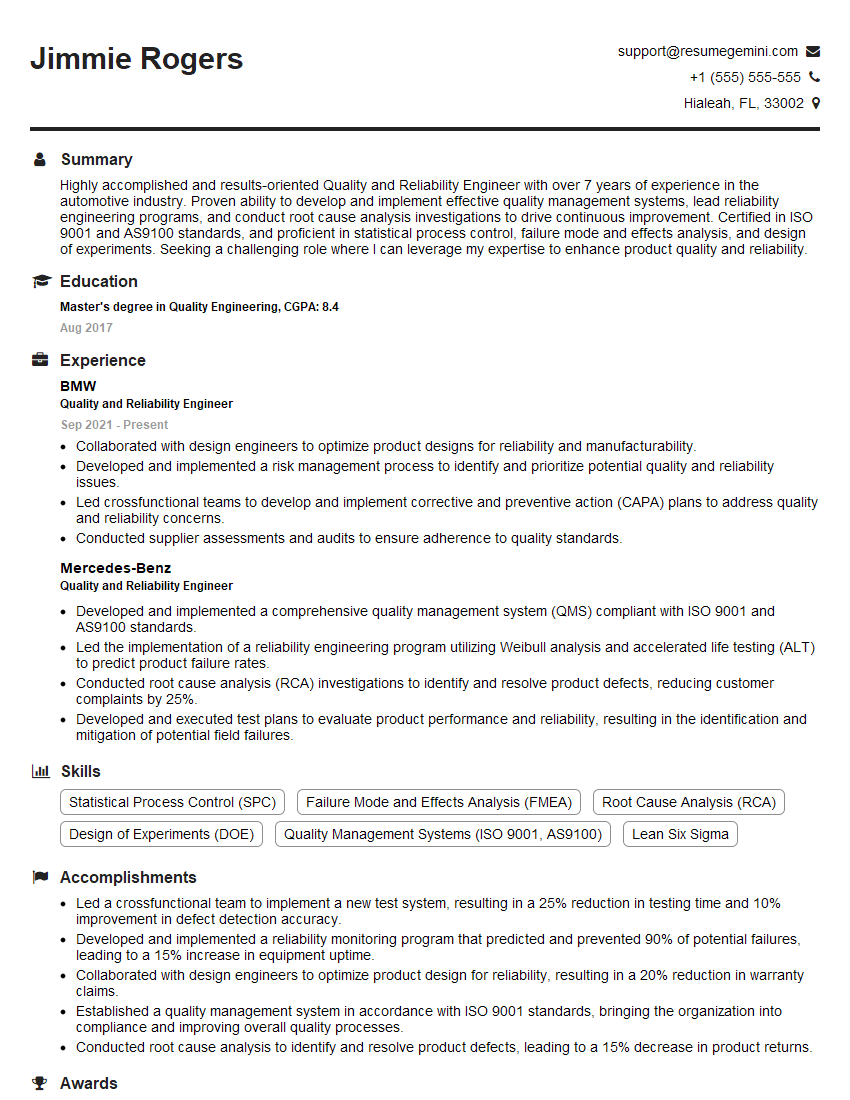

- Ace those interviews! Prepare effectively by reviewing the Top 50 Most Common Interview Questions on ResumeGemini.

- Navigate your job search with confidence! Explore a wide range of Career Tips on ResumeGemini. Learn about common challenges and recommendations to overcome them.

- Craft the perfect resume! Master the Art of Resume Writing with ResumeGemini’s guide. Showcase your unique qualifications and achievements effectively.

- Don’t miss out on holiday savings! Build your dream resume with ResumeGemini’s ATS optimized templates.

Q 16. How do you handle outliers in reliability data?

Handling outliers in reliability data is critical as they can significantly skew analysis results. Outliers are data points that fall far outside the expected range of values. Identifying and dealing with them requires careful consideration.

First, we need to identify potential outliers. Visual methods like box plots or probability plots are useful. Statistical methods, such as the Grubbs’ test or Dixon’s test, provide a more formal approach, testing the statistical significance of an outlier. However, blind reliance on these tests is unwise; always inspect the data for plausible explanations.

Once identified, the approach depends on the reason for the outlier. If a valid reason exists (e.g., recording error, different operating conditions, a distinct failure mode), we might consider removing the data point or analyzing it separately. If the reason is unclear, it is safer to use methods that are robust to outliers. For instance, non-parametric methods like Kaplan-Meier estimation do not assume a particular life distribution and are therefore less sensitive to individual extreme values than a parametric fit.

In ReliaSoft and Weibull++, you can often visually inspect the data using various plotting options and then selectively remove data points if justified. But remember, proper documentation of the handling of outliers is crucial for the integrity and transparency of the analysis.
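Because it makes no distributional assumption, the Kaplan-Meier (product-limit) estimator is a useful cross-check when an outlier might be distorting a parametric fit. A minimal Python sketch for right-censored data follows; the function name and example data are hypothetical.

```python
def kaplan_meier(times, failed):
    """Kaplan-Meier reliability estimate for right-censored data.

    times  -- failure or suspension time of each unit
    failed -- True for a failure, False for a suspension (unit removed unfailed)
    Returns a list of (time, reliability) steps at each distinct failure time.
    """
    data = sorted(zip(times, failed))
    at_risk = len(data)
    reliability = 1.0
    steps = []
    i = 0
    while i < len(data):
        t = data[i][0]
        failures = removed = 0
        # Group all units with the same time; units suspended at t still count as at risk at t.
        while i < len(data) and data[i][0] == t:
            failures += data[i][1]
            removed += 1
            i += 1
        if failures:
            reliability *= 1.0 - failures / at_risk
            steps.append((t, reliability))
        at_risk -= removed
    return steps


# Hypothetical data: 8 units; False marks a unit suspended (still running) at that time.
times = [35.0, 410.0, 520.0, 600.0, 600.0, 750.0, 900.0, 900.0]
failed = [True, True, False, True, True, True, False, False]
for t, r in kaplan_meier(times, failed):
    print(f"R({t:g}) = {r:.3f}")
```

Comparing this step curve with the fitted parametric reliability curve shows whether a suspicious early failure (such as the one at 35 hours here) is pulling the parametric fit away from the bulk of the data.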

Q 17. What is the difference between point estimates and confidence intervals?

The difference between point estimates and confidence intervals lies in their representation of uncertainty. A point estimate is a single value that best represents a population parameter, like the MTTF calculated from sample data. It’s a ‘best guess’ based on the available information. However, it doesn’t provide any measure of the uncertainty associated with that guess.

A confidence interval, on the other hand, provides a range of values within which the true population parameter is likely to fall with a specified level of confidence (e.g., 95%, 99%). It quantifies the uncertainty associated with the point estimate. The wider the confidence interval, the greater the uncertainty. Think of a dartboard – the point estimate is where your dart landed, while the confidence interval is a circle around it indicating the area where you’re confident the bullseye actually is.

For instance, a point estimate for MTTF might be 1000 hours, but a 95% confidence interval might be (800, 1200) hours. This implies that we are 95% confident that the true MTTF falls between 800 and 1200 hours.

Q 18. Describe different types of failure modes and mechanisms.

Failure modes and mechanisms are crucial concepts in reliability engineering. A failure mode describes how a component or system fails (e.g., short circuit, fracture, wear-out). A failure mechanism explains why a failure occurs at the physical or chemical level (e.g., corrosion, fatigue, creep). Understanding both is crucial for effective reliability improvement.

Failure modes can be broadly categorized as:

- Wear-out failures: Occur due to gradual degradation over time (e.g., bearing wear, corrosion).

- Sudden failures: Occur unexpectedly, often due to inherent weaknesses or external events (e.g., brittle fracture, short circuit).

- Infant mortality failures: Occur early in a product’s life, often due to manufacturing defects or design flaws.

Corresponding failure mechanisms could include:

- Fatigue: Repeated stress causing cracks and eventual fracture.

- Creep: Gradual deformation under constant stress at elevated temperatures.

- Corrosion: Degradation due to chemical reactions with the environment.

- Fracture: Separation of material due to stress exceeding its strength.

Identifying failure modes and mechanisms involves failure analysis techniques, such as visual inspection, material testing, and root cause analysis. Tools like Failure Mode and Effects Analysis (FMEA) are used to systematically identify potential failure modes and their consequences. This information is then used to design more reliable systems and improve maintenance strategies.

Q 19. How do you use ReliaSoft to perform a Fault Tree Analysis (FTA)?

ReliaSoft’s BlockSim supports Fault Tree Analysis (FTA) alongside reliability block diagrams. An FTA graphically depicts the combinations of events that can lead to a top-level system failure. BlockSim lets you build the tree using a graphical interface, defining basic events (failures of individual components), gates (such as AND and OR gates, which define the logical relationships between events), and ultimately, the top event (system failure).

Here’s a simplified workflow:

- Define the Top Event: Start by clearly defining the undesired system-level event you are analyzing (e.g., ‘System Shutdown’).

- Identify Basic Events: Identify the individual component or subsystem failures that could contribute to the top event.

- Construct the Fault Tree: Use ReliaSoft’s BlockSim to graphically represent the logical relationships between the basic events and the top event using appropriate gates (AND, OR, etc.).

- Assign Failure Data: Input failure rate data for each basic event, often drawn from historical data, component specifications, or expert judgment. ReliaSoft facilitates importing and managing this data.

- Perform the Analysis: ReliaSoft automatically calculates the probability of the top event occurring based on the probabilities of the basic events and the gate logic. Sensitivity analysis can identify critical components influencing the top event’s probability.

- Interpret Results: The software provides various outputs (e.g., top event probability, cut sets, minimal cut sets) which inform risk mitigation strategies.

ReliaSoft’s capabilities extend beyond basic FTA, allowing you to incorporate different data types, perform quantitative analysis, and create comprehensive reports detailing your analysis and findings. This helps prioritize risk reduction efforts efficiently.
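To show what "top event probability" and "minimal cut sets" mean computationally, here is a brute-force Python sketch for a small fault tree with AND/OR gates and independent basic events. It enumerates every combination of basic-event states, which is only practical for a handful of events; commercial tools use far more efficient algorithms. The tree structure, event names, and probabilities are hypothetical, and event A is deliberately repeated under two gates, a case where naively combining gate probabilities gives the wrong answer.

```python
from itertools import combinations


# A node is either a basic-event name (str) or a (gate, [children]) tuple.
def occurs(node, failed):
    """True if the event represented by `node` occurs when the events in `failed` have occurred."""
    if isinstance(node, str):
        return node in failed
    gate, children = node
    results = [occurs(child, failed) for child in children]
    if gate == "AND":
        return all(results)
    if gate == "OR":
        return any(results)
    raise ValueError(f"unsupported gate: {gate}")


def basic_events(node):
    if isinstance(node, str):
        return {node}
    _, children = node
    return set().union(*(basic_events(child) for child in children))


def top_event_probability(tree, p):
    """Exact probability by enumerating all 2**n basic-event states."""
    events = sorted(basic_events(tree))
    total = 0.0
    for mask in range(2 ** len(events)):
        failed = {e for i, e in enumerate(events) if (mask >> i) & 1}
        if occurs(tree, failed):
            prob = 1.0
            for e in events:
                prob *= p[e] if e in failed else 1.0 - p[e]
            total += prob
    return total


def minimal_cut_sets(tree):
    """Smallest sets of basic events that cause the top event (AND/OR trees only)."""
    events = sorted(basic_events(tree))
    cuts = []
    for size in range(1, len(events) + 1):
        for combo in combinations(events, size):
            s = set(combo)
            if occurs(tree, s) and not any(c <= s for c in cuts):
                cuts.append(s)
    return cuts


# Hypothetical tree: the top event needs both channels to fail.
# Channel 1 fails if A or B fails; channel 2 fails if A or C fails (A is shared).
tree = ("AND", [("OR", ["A", "B"]), ("OR", ["A", "C"])])
probs = {"A": 0.01, "B": 0.1, "C": 0.1}
print("P(top) =", round(top_event_probability(tree, probs), 6))
print("minimal cut sets:", [sorted(c) for c in minimal_cut_sets(tree)])
```

Here the exact result is 0.0199, whereas multiplying the two OR-gate probabilities as if they were independent would give about 0.0119, because both branches share event A.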

Q 20. How do you use Weibull++ for accelerated life testing data analysis?

Weibull++ is exceptionally well-suited for accelerated life testing (ALT) data analysis. ALT involves testing products under more severe conditions (higher stress levels) than normal operational conditions to accelerate failures and reduce testing time. Weibull++ handles this by incorporating the stress levels into the analysis.

The process usually involves:

- Data Collection: Collect failure time data under different stress levels (e.g., temperature, voltage, humidity). It’s essential to ensure the stress levels are properly controlled and monitored.

- Model Selection: Choose an appropriate ALT model based on the stress factors and failure mechanisms (e.g., Arrhenius model for temperature, Eyring model for chemical reactions). Weibull++ offers several pre-programmed models and the option to define custom models.

- Data Entry: Input the collected data (failure times and corresponding stress levels) into Weibull++.

- Model Fitting and Parameter Estimation: Use Weibull++ to fit the selected ALT model to the data and estimate its parameters, which allows you to extrapolate the results to predict the reliability under normal operating conditions. The software provides tools for goodness-of-fit assessment and usually provides confidence bounds for these estimates.

- Life Prediction: Use the estimated parameters to predict the life distribution of the product under normal use conditions.

Weibull++ simplifies the complex calculations involved in ALT analysis, allowing you to quickly determine the reliability and lifetime characteristics of the product under normal operating conditions based on the accelerated testing data.
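Behind the Arrhenius model is a simple acceleration factor, AF = exp[(Ea/k)(1/T_use - 1/T_stress)], with temperatures in kelvin, Ea the activation energy, and k the Boltzmann constant. The Python sketch below, using a hypothetical activation energy, hypothetical test temperatures, and a hypothetical fitted scale parameter, shows how a life estimated at stress is scaled to use conditions. It assumes, as is common in ALT, that the Weibull shape parameter β is the same at every stress level so that only η changes; in a full ALT analysis the activation energy is usually estimated from multi-stress data rather than assumed.

```python
import math

BOLTZMANN_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_af(ea_ev: float, use_c: float, stress_c: float) -> float:
    """Arrhenius acceleration factor between a stress temperature and a use temperature (deg C)."""
    t_use = use_c + 273.15
    t_stress = stress_c + 273.15
    return math.exp((ea_ev / BOLTZMANN_EV) * (1.0 / t_use - 1.0 / t_stress))


# Hypothetical values: Ea = 0.7 eV, use at 40 C, test at 85 C.
af = arrhenius_af(0.7, 40.0, 85.0)
eta_stress = 1200.0          # hypothetical Weibull scale fitted at 85 C (hours)
eta_use = af * eta_stress    # assumes beta is the same at both temperatures
print(f"AF = {af:.1f}, extrapolated eta at 40 C = {eta_use:,.0f} h")
```

For these example values the acceleration factor is about 26, so each hour at 85 °C stands in for roughly a day of operation at 40 °C, provided the failure mechanism is the same at both temperatures.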

Q 21. Explain the difference between repairable and non-repairable systems.

The distinction between repairable and non-repairable systems is fundamental in reliability engineering. A non-repairable system is one that, upon failure, is either discarded or requires replacement. Think of a lightbulb – once it burns out, it’s typically replaced.

A repairable system, on the other hand, can be repaired or restored to an operational state after a failure. An example is a computer system – if the hard drive fails, it can be replaced and the system is back online. The key difference lies in the ability to restore functionality after failure.

This distinction significantly impacts the choice of reliability models. Non-repairable systems are often analyzed using distributions like the Weibull, exponential, or normal distribution to estimate parameters like MTTF. Repairable systems require different models, such as the Poisson process or renewal processes, to account for the time between failures (or repairs) and to estimate metrics like mean time between failures (MTBF). ReliaSoft and Weibull++ offer tools and features to analyze both types of systems using appropriate models and methods.

Q 22. What are some common reliability metrics and how are they calculated?

Reliability metrics quantify a system’s or component’s ability to perform its intended function over a specified period. Key metrics include:

- Mean Time To Failure (MTTF): The average time a system operates before failure. Calculated as the total operating time divided by the number of failures. For example, if five units run for a total of 10,000 hours before failing, the MTTF is 2,000 hours (10,000/5).

- Mean Time Between Failures (MTBF): Similar to MTTF, but applicable to repairable systems, representing the average operating time between successive failures. It’s calculated similarly, but repair (down) time is excluded from the operating time. Imagine a server that runs for one year (8,760 calendar hours) and experiences five failures, each causing 1 hour of downtime. Its operating time is 8,755 hours, so the MTBF is 8,755 / 5 = 1,751 hours (about 1,752 hours if the small downtime is ignored).

- Failure Rate (λ): The number of failures per unit of operating time. Often expressed as failures per million hours (FPMH). It’s calculated as the number of failures divided by the total operating time. For instance, if 10 failures occur in 1 million operating hours, the failure rate is 10 FPMH.

- Availability (A): The percentage of time a system is operational. The steady-state (inherent) availability is calculated as MTBF/(MTBF + MTTR), where MTTR is Mean Time To Repair; some texts write MTTF here, meaning the mean up time. A system with a mean up time of 1000 hours and an MTTR of 10 hours has an availability of approximately 99% (1000/(1000+10) ≈ 0.990).

Choosing the right metric depends on the system’s characteristics (repairable or non-repairable) and the specific reliability aspect being assessed. For instance, MTTF is more relevant for non-repairable components like light bulbs, while MTBF is better suited for repairable systems like servers.
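The worked examples above can be reproduced with a few one-line functions. This Python sketch is only a calculator for the simple point estimates; the function names are illustrative, and the MTBF function assumes the total downtime is known and subtracted from calendar time.

```python
def mttf(total_operating_hours: float, failures: int) -> float:
    return total_operating_hours / failures


def mtbf(calendar_hours: float, downtime_hours: float, failures: int) -> float:
    """Operating (up) time divided by the number of failures."""
    return (calendar_hours - downtime_hours) / failures


def failure_rate_fpmh(failures: int, operating_hours: float) -> float:
    return failures / operating_hours * 1e6


def inherent_availability(mtbf_hours: float, mttr_hours: float) -> float:
    return mtbf_hours / (mtbf_hours + mttr_hours)


print(f"MTTF: {mttf(10_000, 5):,.0f} h")
print(f"MTBF: {mtbf(8_760, 5 * 1.0, 5):,.0f} h")
print(f"Failure rate: {failure_rate_fpmh(10, 1_000_000):.0f} FPMH")
print(f"Availability: {inherent_availability(1_000, 10):.2%}")
```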

Q 23. How do you use reliability data to support maintenance decisions?

Reliability data is crucial for data-driven maintenance decisions, moving from reactive (fixing problems after they occur) to proactive and predictive strategies. By analyzing failure patterns and rates, we can:

- Optimize maintenance schedules: Identifying components whose failure rate rises with age (wear-out) allows preventative maintenance or replacement to be scheduled before failures become likely, reducing unexpected downtime. For instance, if analysis shows a specific motor consistently fails after about 1000 operating hours, preventative maintenance can be scheduled before this point.

- Prioritize maintenance tasks: Reliability data helps determine which components require the most attention. This prioritization ensures resources are allocated effectively. A critical component with a high failure rate will naturally take precedence over less critical components with low failure rates.

- Assess the effectiveness of maintenance programs: By tracking failure rates before and after implementing maintenance changes, we can assess the program’s success. A decrease in failure rate post-implementation validates the effectiveness of the changes.

- Predict potential failures: Techniques such as Weibull analysis can estimate the remaining useful life of components, enabling predictive maintenance interventions. Early detection reduces the severity of potential failures and avoids costly downtime.
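Remaining-life and scheduling questions like the motor example can be framed with the Weibull reliability function R(t) = exp(-(t/η)^β). The sketch below assumes a two-parameter Weibull with hypothetical parameters (β = 2.5, η = 1,500 hours, not taken from the text). It computes the B10 life (the age by which 10% of units are expected to fail), a common input when setting preventive maintenance intervals, and the conditional reliability of a unit that has already survived to a given age.

```python
import math


def weibull_reliability(t: float, beta: float, eta: float) -> float:
    """Two-parameter Weibull reliability R(t) = exp(-(t/eta)^beta)."""
    return math.exp(-((t / eta) ** beta))


def b_life(p: float, beta: float, eta: float) -> float:
    """Age by which a fraction p of the population is expected to fail (p=0.10 -> B10)."""
    return eta * (-math.log(1.0 - p)) ** (1.0 / beta)


def conditional_reliability(extra: float, age: float, beta: float, eta: float) -> float:
    """Probability that a unit which survived `age` hours survives `extra` more hours."""
    return weibull_reliability(age + extra, beta, eta) / weibull_reliability(age, beta, eta)


if __name__ == "__main__":
    BETA, ETA = 2.5, 1500.0  # hypothetical wear-out parameters from an earlier fit
    print(f"B10 life: {b_life(0.10, BETA, ETA):.0f} h")
    # Chance of surviving the next 200 h for a new versus an aged motor.
    for age in (0.0, 500.0, 1000.0):
        print(age, round(conditional_reliability(200.0, age, BETA, ETA), 3))
```

With β > 1 the conditional reliability falls as the motor ages, which is what justifies age-based preventive maintenance; with β = 1 it does not depend on age at all.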

For example, I once worked on a project where analysis of pump failures revealed a seasonal pattern linked to temperature fluctuations. This insight led to a change in the maintenance schedule, including additional inspections and cleaning during peak seasons, substantially reducing failures.

Q 24. What are some common challenges in reliability data collection?

Collecting accurate reliability data presents several challenges:

- Incomplete data: Missing data points or inaccurate recording can skew results and lead to flawed analyses. For instance, a failure might go unreported, leading to an underestimation of the failure rate.

- Data accuracy: Inaccurate measurements or misclassifications of failures can compromise data integrity. A failure attributed to the wrong root cause obscures real insights.

- Data consistency: Inconsistent data collection methods across different systems or time periods make comparisons difficult. If the reporting method changes, historical and current data can no longer be compared directly.

- Cost and time: Collecting and processing large amounts of data can be expensive and time-consuming. Comprehensive data collection may need significant investment in infrastructure.

- Human error: Mistakes in data entry, reporting, and analysis are common and can impact results. Employing double-checking mechanisms is crucial to minimize these issues.

Addressing these challenges requires careful planning, rigorous data validation procedures, and the use of robust data management systems. Implementing clear guidelines for data collection and employing automated data logging systems can significantly improve data quality and consistency.
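Part of that validation can be automated before any analysis runs. The sketch below assumes a hypothetical record layout (unit ID, hours to failure, failure-mode code) and a controlled vocabulary of agreed failure modes; it flags missing or non-positive times, exact duplicate rows, and mode codes outside the agreed list, which target the incomplete-data, accuracy, and consistency problems described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FailureRecord:
    """Hypothetical failure-log row; field names are illustrative only."""
    unit_id: str
    hours_to_failure: Optional[float]
    failure_mode: Optional[str]


def validate(records: list[FailureRecord], known_modes: set[str]) -> list[str]:
    """Return a list of human-readable problems found in the records."""
    problems: list[str] = []
    seen: set[FailureRecord] = set()
    for i, rec in enumerate(records):
        # Exact duplicates often come from double entry of the same event.
        if rec in seen:
            problems.append(f"row {i}: exact duplicate of an earlier row")
        seen.add(rec)
        if rec.hours_to_failure is None:
            problems.append(f"row {i}: missing time to failure")
        elif rec.hours_to_failure <= 0:
            problems.append(f"row {i}: non-positive time to failure")
        # A controlled vocabulary keeps failure classification consistent.
        if rec.failure_mode not in known_modes:
            problems.append(f"row {i}: unknown or missing failure mode {rec.failure_mode!r}")
    return problems


if __name__ == "__main__":
    rows = [
        FailureRecord("P-01", 812.0, "bearing"),
        FailureRecord("P-02", None, "seal"),
        FailureRecord("P-03", -5.0, "bearing"),
        FailureRecord("P-01", 812.0, "bearing"),
        FailureRecord("P-04", 950.0, "Bearing"),
    ]
    for problem in validate(rows, {"bearing", "seal", "impeller"}):
        print(problem)
```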

Q 25. Describe your experience with reliability software such as Weibull++ or ReliaSoft.

I have extensive experience using Weibull++ and the wider ReliaSoft suite it belongs to. Weibull++ excels in its advanced statistical analysis capabilities, particularly in fitting Weibull and other distributions to failure data. I've leveraged it for various tasks, such as performing life data analysis, creating reliability predictions, and supporting failure mode and effects analysis (FMEA) with life data. One example is using the software to identify the underlying failure mechanism of a specific component by fitting multiple distributions and comparing their goodness of fit. This allowed us to determine the most appropriate maintenance strategy.
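The idea of fitting several distributions and comparing them can be illustrated outside the software. The sketch below is a minimal pure-Python stand-in, not Weibull++'s implementation: it assumes complete (uncensored) data, fits Weibull, exponential, and lognormal models by maximum likelihood, and ranks them by the Akaike information criterion (AIC), which is only one of several possible goodness-of-fit criteria. The sample data are synthetic.

```python
import math
import random


def fit_weibull(times: list[float]) -> tuple[float, float]:
    """Two-parameter Weibull MLE for complete data. Returns (beta, eta)."""
    n = len(times)
    t_max = max(times)
    x = [t / t_max for t in times]  # rescale so x**beta cannot overflow
    logs = [math.log(v) for v in x]
    mean_log = sum(logs) / n

    def score(beta: float) -> float:
        # Profile-likelihood equation for beta; strictly increasing in beta.
        w = [v ** beta for v in x]
        return sum(wi * li for wi, li in zip(w, logs)) / sum(w) - 1.0 / beta - mean_log

    lo, hi = 1e-3, 1e3
    for _ in range(100):  # bisection on the score equation
        mid = 0.5 * (lo + hi)
        if score(mid) < 0:
            lo = mid
        else:
            hi = mid
    beta = 0.5 * (lo + hi)
    eta = t_max * (sum(v ** beta for v in x) / n) ** (1.0 / beta)
    return beta, eta


def weibull_loglik(times: list[float], beta: float, eta: float) -> float:
    return sum(math.log(beta / eta) + (beta - 1) * math.log(t / eta) - (t / eta) ** beta
               for t in times)


def compare_fits(times: list[float]) -> dict[str, float]:
    """AIC = 2k - 2 ln L for each candidate; lower is better."""
    n = len(times)
    total = sum(times)
    beta, eta = fit_weibull(times)
    aic = {"weibull": 4 - 2 * weibull_loglik(times, beta, eta)}
    lam = n / total  # exponential MLE
    aic["exponential"] = 2 - 2 * (n * math.log(lam) - lam * total)
    logs = [math.log(t) for t in times]  # lognormal MLE
    mu = sum(logs) / n
    sigma = math.sqrt(sum((l - mu) ** 2 for l in logs) / n)
    ll = sum(-l - math.log(sigma) - 0.5 * math.log(2 * math.pi) - (l - mu) ** 2 / (2 * sigma ** 2)
             for l in logs)
    aic["lognormal"] = 4 - 2 * ll
    return aic


if __name__ == "__main__":
    rng = random.Random(42)
    sample = [rng.weibullvariate(1000.0, 3.0) for _ in range(50)]  # synthetic wear-out data
    print(fit_weibull(sample))
    for name, aic_value in sorted(compare_fits(sample).items(), key=lambda kv: kv[1]):
        print(f"{name:12s} AIC = {aic_value:.1f}")
```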

The wider ReliaSoft suite, on the other hand, covers system-level work such as reliability block diagrams (RBDs), fault tree analysis (FTA), and system simulation, mainly through BlockSim. I've utilized these tools to model complex systems and assess their overall reliability, identify system vulnerabilities, and conduct what-if analyses for different maintenance strategies. A recent project involved using ReliaSoft's simulation capabilities to compare the effectiveness of two different maintenance policies, helping to save substantial costs and reduce system downtime.
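The core idea of comparing two maintenance policies by simulation can be sketched with a short Monte Carlo model. This is a simplified stand-in, not how ReliaSoft's tools work internally: it assumes a single component with hypothetical Weibull parameters, hypothetical relative costs, instantaneous replacement, and a renewal-reward estimate of long-run cost per operating hour. It compares run-to-failure against age-based preventive replacement.

```python
import math
import random
from typing import Optional


def simulate_cost_rate(beta: float, eta: float, cost_failure: float, cost_preventive: float,
                       replace_age: Optional[float] = None, cycles: int = 20_000,
                       seed: int = 0) -> float:
    """Monte Carlo estimate of long-run maintenance cost per operating hour.

    replace_age=None models run-to-failure. Otherwise a unit is replaced
    preventively at replace_age hours, or correctively if it fails first.
    """
    rng = random.Random(seed)
    total_cost = 0.0
    total_time = 0.0
    for _ in range(cycles):
        life = rng.weibullvariate(eta, beta)  # Python's argument order: (scale, shape)
        if replace_age is not None and life >= replace_age:
            total_cost += cost_preventive
            total_time += replace_age
        else:
            total_cost += cost_failure
            total_time += life
    return total_cost / total_time


if __name__ == "__main__":
    BETA, ETA = 3.0, 1000.0   # hypothetical wear-out component
    C_FAIL, C_PM = 10.0, 1.0  # hypothetical relative costs
    print("run to failure  :", simulate_cost_rate(BETA, ETA, C_FAIL, C_PM))
    print("analytic check  :", C_FAIL / (ETA * math.gamma(1 + 1 / BETA)))
    print("replace at 500 h:", simulate_cost_rate(BETA, ETA, C_FAIL, C_PM, replace_age=500.0))
```

With β > 1 and a corrective cost well above the preventive cost, age replacement usually wins; with β ≤ 1, preventive replacement cannot lower the failure rate and only adds cost.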

I’m proficient in using both software packages to import, clean, and analyze reliability data; to perform various statistical analyses; and to generate insightful reports for stakeholders.

Q 26. How would you use reliability software to identify potential design improvements?

Reliability software is invaluable for identifying potential design improvements. By analyzing failure data, we can pinpoint areas for enhancement:

- Identifying failure modes: Software helps analyze failure data to identify common failure modes, indicating areas requiring design modifications. For example, if a particular component consistently fails due to fatigue, the design might need to be reinforced or materials upgraded.

- Assessing design changes: Before implementing a design change, we can simulate its impact on reliability using software. This allows for informed decision-making and avoids costly mistakes. Modeling different design options allows for optimal selection.

- Optimizing component selection: Analyzing component reliability data helps select components with higher reliability and longevity. Software allows for comparison of various vendor options based on reliability data.

- Improving testing strategies: Software can help optimize testing plans by identifying critical stress points and developing targeted testing procedures. This ensures more effective testing and earlier detection of potential problems.
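A design change can be screened with a simple reliability block diagram before committing to it. The sketch below assumes a hypothetical system of a controller in series with a pump, independent failures, Weibull lifetimes with made-up parameters, and active (hot) redundancy. It compares the baseline against a variant with a redundant pump at a 2,000-hour mission time.

```python
import math


def weibull_r(t: float, beta: float, eta: float) -> float:
    """Weibull reliability at mission time t."""
    return math.exp(-((t / eta) ** beta))


def series(*rs: float) -> float:
    """All blocks must work: system reliability is the product of block reliabilities."""
    return math.prod(rs)


def parallel(*rs: float) -> float:
    """Active redundancy: the group fails only if every block fails."""
    return 1.0 - math.prod(1.0 - r for r in rs)


if __name__ == "__main__":
    MISSION = 2000.0                          # hours, hypothetical
    r_ctrl = weibull_r(MISSION, 1.0, 20000.0)  # hypothetical controller parameters
    r_pump = weibull_r(MISSION, 2.0, 5000.0)   # hypothetical pump parameters
    baseline = series(r_ctrl, r_pump)
    redundant = series(r_ctrl, parallel(r_pump, r_pump))
    print(f"baseline  R({MISSION:.0f} h) = {baseline:.3f}")
    print(f"redundant R({MISSION:.0f} h) = {redundant:.3f}")
```

Comparing vendors works the same way: substitute each vendor's fitted β and η for the pump and recompute.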

For instance, I used Weibull++ to analyze failure data for an electronic component. The analysis revealed that one solder joint was a major contributor to failures, which led to a redesign of that joint and significantly increased the component's reliability.

Q 27. How do you present reliability data and results to stakeholders?

Presenting reliability data effectively is crucial for gaining stakeholder buy-in and ensuring that insights are properly used. My approach involves tailoring the presentation to the audience:

- Visualizations: I use clear and concise visualizations like charts and graphs (e.g., Weibull plots, bathtub curves, reliability diagrams) to communicate complex data effectively. Visual representations make the information readily understandable for non-technical audiences.

- Summary reports: I prepare concise reports highlighting key findings, recommendations, and their business implications. Focusing on the key takeaways for the audience allows a faster comprehension of critical insights.

- Interactive dashboards: For more complex analyses or ongoing monitoring, interactive dashboards allow stakeholders to explore data at their own pace. These dashboards enable easy access to vital information, fostering informed decision-making.

- Clear language: I avoid technical jargon when possible and use plain language to explain complex concepts. Using layman’s terms avoids misunderstandings and promotes effective communication.

- Storytelling: I weave the data analysis into a compelling narrative, highlighting the significance of the findings and the potential impact of recommendations. Using storytelling helps connect with the audience and increase the impact of presented data.

For example, when presenting to senior management, I focus on high-level summaries, cost savings, and risk mitigation strategies. For engineers, I provide more detailed analysis, including statistical methods and technical explanations.

Q 28. How do you stay current with the latest advancements in reliability engineering?

Staying current in reliability engineering requires a multi-pronged approach:

- Professional organizations: I’m an active member of professional organizations like the Society of Reliability Engineers (SRE), attending conferences, workshops, and webinars to learn about the latest advancements and best practices.

- Publications: I regularly read relevant journals, industry publications, and research papers to keep abreast of new techniques and methodologies.

- Online courses and certifications: I participate in online courses and pursue certifications to enhance my skills and knowledge in emerging areas like machine learning for reliability analysis.

- Networking: I actively network with colleagues and experts in the field to exchange ideas and learn from their experiences.

- Software updates: I ensure that my reliability software is up to date, taking advantage of the latest features and improvements. Software updates often provide new capabilities, enhancing analytical potential.

Continuous learning ensures I remain proficient and can apply the most current methodologies to solve real-world problems effectively.

Key Topics to Learn for Reliability Engineering Software (e.g., Weibull++, ReliaSoft) Interview

- Data Analysis & Interpretation: Understanding how to import, clean, and analyze reliability data within the software. Mastering the creation and interpretation of various plots (e.g., Weibull probability plots, reliability plots).

- Distribution Fitting: Proficiently fitting different statistical distributions (Weibull, Exponential, Normal, Lognormal) to failure data and understanding the implications of each distribution’s choice on reliability predictions.

- Reliability Prediction & Estimation: Accurately predicting future reliability performance based on historical data. This includes calculating parameters like Mean Time To Failure (MTTF), Mean Time Between Failures (MTBF), and failure rates.

- Life Data Analysis Techniques: Familiarity with various analysis techniques, such as parametric and non-parametric methods, and understanding their applications in different scenarios.

- Reliability Modeling & Simulation: Building and interpreting reliability block diagrams (RBDs) and fault trees, and using simulation techniques to assess system reliability.

- Software-Specific Features: Deep familiarity with the features and functionalities of the software you are being interviewed for (Weibull++, ReliaSoft, etc.). This includes understanding report generation, data export options, and advanced analysis capabilities.

- Practical Application & Case Studies: Practice applying your knowledge to real-world scenarios. Consider working through example datasets and interpreting the results. This will demonstrate your ability to solve problems using the software.

- Troubleshooting & Error Handling: Understanding how to identify and resolve common issues encountered while using the software. This showcases problem-solving skills crucial in a reliability engineering role.
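For the probability-plot and distribution-fitting topics above, it helps to know what the software computes under the hood. The sketch below shows one common approach for complete (uncensored) data: median ranks via Benard's approximation F = (i - 0.3)/(n + 0.4), the Weibull plot transform x = ln t, y = ln(-ln(1 - F)), and a least-squares line with y regressed on x, so that β is the slope and η follows from the intercept. Reliability packages typically offer this alongside maximum likelihood; the sketch does not reproduce any package's exact options or output, and the sample times are hypothetical.

```python
import math


def weibull_plot_points(times: list[float]) -> list[tuple[float, float]]:
    """(ln t, ln(-ln(1 - F))) pairs using Benard's median-rank approximation."""
    ordered = sorted(times)
    n = len(ordered)
    points = []
    for i, t in enumerate(ordered, start=1):
        f = (i - 0.3) / (n + 0.4)
        points.append((math.log(t), math.log(-math.log(1.0 - f))))
    return points


def rank_regression(times: list[float]) -> tuple[float, float]:
    """Least-squares line through the Weibull plot (y on x). Returns (beta, eta)."""
    pts = weibull_plot_points(times)
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxy = sum((x - mx) * (y - my) for x, y in pts)
    sxx = sum((x - mx) ** 2 for x, _ in pts)
    beta = sxy / sxx                       # slope of the plot
    intercept = my - beta * mx             # equals -beta * ln(eta)
    eta = math.exp(-intercept / beta)
    return beta, eta


def weibull_mttf(beta: float, eta: float) -> float:
    """MTTF of a two-parameter Weibull: eta * Gamma(1 + 1/beta)."""
    return eta * math.gamma(1.0 + 1.0 / beta)


if __name__ == "__main__":
    hours = [105.0, 240.0, 310.0, 420.0, 515.0, 640.0, 780.0, 950.0]  # hypothetical failures
    b, e = rank_regression(hours)
    print(f"beta = {b:.2f}, eta = {e:.0f} h, MTTF = {weibull_mttf(b, e):.0f} h")
```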

Next Steps

Mastering reliability engineering software like Weibull++ or ReliaSoft is crucial for career advancement in this field. These tools are essential for performing in-depth analyses and making data-driven decisions. To maximize your job prospects, creating an ATS-friendly resume is key. This ensures your application is effectively screened by Applicant Tracking Systems. We highly recommend using ResumeGemini to build a professional and impactful resume. ResumeGemini provides valuable resources and examples of resumes tailored to Reliability Engineering Software roles, helping you showcase your skills and experience effectively. Take the next step towards your dream career by crafting a compelling resume that highlights your expertise in reliability engineering software.
