The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Remote Sensing and LiDAR interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Remote Sensing and LiDAR Interviews

Q 1. Explain the differences between active and passive remote sensing.

The core difference between active and passive remote sensing lies in how they acquire data. Passive sensors, like cameras and thermal scanners, detect energy naturally emitted or reflected from the Earth’s surface. Think of it like taking a photograph – you’re relying on existing light. Active sensors, on the other hand, emit their own energy and measure the returned signal. LiDAR is a prime example; it sends out laser pulses and records the time it takes for the pulses to bounce back, allowing for precise distance measurements.

An analogy: Imagine you’re trying to map a room. Passive sensing is like turning on the lights and sketching what you see; you’re dependent on the available illumination. Active sensing is like using a laser rangefinder to precisely measure distances to every object, creating a 3D model regardless of ambient light.

Passive systems are generally less expensive and easier to deploy, but they are limited by the available energy sources (sunlight, thermal radiation); reflective optical sensors, for example, need daylight. Active systems control their own illumination, so they can operate day and night and measure range directly, but they tend to be more complex and costly.

Q 2. Describe the principles of LiDAR technology.

LiDAR (Light Detection and Ranging) uses laser pulses to measure distances to the Earth’s surface. A LiDAR system emits a laser pulse, and a receiver measures the time it takes for the reflected pulse to return. Because the pulse travels to the target and back, the range is half the round-trip distance: range = (speed of light × round-trip time) / 2. By rapidly emitting and recording numerous pulses, LiDAR builds a dense point cloud representing the 3D surface. Combined with the sensor’s position and orientation, each range measurement becomes a point with three-dimensional coordinates (X, Y, Z). Each point also carries an intensity value recording the strength of the return, which can help infer surface properties such as material type and roughness.

Imagine shining a flashlight in a dark room and timing how long it takes to see the light bounce back from different objects. LiDAR operates on a similar principle, but with much higher precision and speed, allowing it to map vast areas quickly and accurately.
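The time-of-flight calculation can be sketched in a few lines. This sketch assumes a single return and ignores atmospheric effects. The range is the speed of light times the round-trip time, halved because the pulse travels out and back. The function name is illustrative, not taken from any particular library.

```python
# Speed of light in a vacuum (m/s); an exact SI-defined constant.
SPEED_OF_LIGHT = 299_792_458.0


def range_from_time_of_flight(round_trip_seconds: float) -> float:
    """Return the one-way sensor-to-target distance in metres.

    The pulse covers the distance twice (out and back), so the
    one-way range is half of the total path length.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


# A return arriving about 6.67 microseconds after emission
# corresponds to a target roughly 1 km away.
print(range_from_time_of_flight(6.671e-6))
```

The halving is the detail most often missed in interviews. Forgetting it doubles every range.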

Q 3. What are the different types of LiDAR systems and their applications?

LiDAR systems are categorized based on their deployment platform and scanning methods.

- Airborne LiDAR: Mounted on airplanes or helicopters, these systems provide large-scale data acquisition for applications such as terrain mapping, forestry, and urban planning. They offer a broad view and can cover vast areas efficiently.

- Terrestrial LiDAR (TLS): Ground-based systems used for detailed surveys of smaller areas, such as buildings, archaeological sites, or accident scenes. Their high-density scans allow for precise measurements and the capture of intricate details.

- Mobile LiDAR: Mounted on vehicles, these systems offer a blend of speed and accuracy for applications like road mapping, infrastructure assessment, and 3D city modeling. They can capture data while moving, streamlining data acquisition along routes.

- Bathymetric LiDAR: A specialized type that uses a green laser (typically 532 nm), which can penetrate water, allowing for underwater mapping of riverbeds, lakes, and coastal areas. This is crucial for navigation and environmental monitoring.

The applications are diverse. Airborne LiDAR is frequently employed in creating Digital Elevation Models (DEMs) for hydrological modeling. Terrestrial LiDAR provides detailed as-built models for construction projects. Mobile LiDAR is used extensively by autonomous vehicle companies for map-building and navigation.

Q 4. How does atmospheric correction affect LiDAR data?

Atmospheric correction in LiDAR data processing accounts for the effects of the atmosphere on the laser pulses. Temperature, pressure, and humidity determine the refractive index of air, which slows the pulse slightly and introduces a small range bias. Aerosols and water vapor scatter and absorb energy, attenuating the returned intensity. If left uncorrected, these effects add noise and error to final products such as a digital elevation model (DEM).

Atmospheric correction algorithms are used to adjust the raw LiDAR data, mitigating these effects and improving the accuracy of the final product. These algorithms typically use atmospheric models and meteorological data to estimate the atmospheric attenuation and delay. Ignoring atmospheric correction can lead to significant errors in elevation and intensity, especially in data collected over longer ranges or in regions with varying atmospheric conditions.
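The range part of this correction can be sketched under a simplifying assumption: the whole beam path is treated as air with a single group refractive index. The value 1.00027 is an assumed, illustrative figure for near-infrared light near sea level. Operational processing derives the index from measured temperature, pressure, and humidity along the path. The sketch compares a range computed with the vacuum speed of light against one computed with the slower speed in air.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, in a vacuum

# Assumed, illustrative group refractive index of air (not a calibrated value).
ASSUMED_AIR_GROUP_INDEX = 1.00027


def vacuum_range(round_trip_seconds: float) -> float:
    """One-way range if the pulse travelled at the vacuum speed of light."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def corrected_range(round_trip_seconds: float,
                    group_index: float = ASSUMED_AIR_GROUP_INDEX) -> float:
    """One-way range assuming the pulse travels at c / n_g in air."""
    return SPEED_OF_LIGHT / group_index * round_trip_seconds / 2.0


t = 2 * 1000.0 / SPEED_OF_LIGHT  # hypothetical round-trip time for ~1 km
bias = vacuum_range(t) - corrected_range(t)
print(f"range bias over ~1 km: {bias:.3f} m")
```

Under this assumed index, the uncorrected range is too long by roughly 0.27 m per kilometre. This shows why long-range acquisitions need the correction.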

Q 5. Explain the process of point cloud classification in LiDAR data processing.

Point cloud classification is a crucial step in LiDAR data processing that assigns semantic labels to individual points in the point cloud. This involves grouping points into meaningful categories, such as ground, buildings, vegetation, or vehicles. This is typically done using a combination of automated algorithms and manual editing. Automated classification methods often rely on features such as point height, intensity, and neighborhood density. Supervised machine learning techniques are often employed to build a classification model based on training data.

The process generally involves several steps: First, algorithms identify and classify points based on their characteristics. Then, manual review and correction may be necessary to improve accuracy, particularly for ambiguous points or areas of complex topography. Finally, this classified data can be used to create various products such as building footprints, tree inventories, and digital surface models (DSMs).

A common example is separating ground points from non-ground points. Ground points are typically the lowest returns in their neighborhood and form a locally smooth, continuous surface; intensity can help, but it varies with surface material. Buildings, on the other hand, rise abruptly above the surrounding ground, with planar roof surfaces and vertical walls.

Q 6. What are common LiDAR data formats (e.g., LAS, LAZ)?

The most common LiDAR data formats are LAS and LAZ.

- LAS (the ASPRS LASer file format) is an open, non-proprietary binary format widely used for storing and sharing LiDAR point cloud data. It’s designed to store large datasets efficiently and records attributes for each point (intensity, return number, classification, etc.).

- LAZ (LASzip) is a compressed version of the LAS format. It uses lossless compression to reduce file sizes significantly without sacrificing data quality, making data storage and transfer more efficient.

While other formats exist, LAS and LAZ have become industry standards because of their wide compatibility with LiDAR processing software and their ability to handle the complexity and size of modern LiDAR datasets.
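Part of LAS’s compactness comes from how it stores coordinates. Each point record stores X, Y, and Z as 32-bit signed integers. The file header holds a scale factor and an offset for each axis, so the real coordinate is integer × scale + offset. The sketch below shows that round trip for one axis. The header values are hypothetical, and in practice a LAS reading library performs this conversion for you.

```python
from dataclasses import dataclass


@dataclass
class AxisScaling:
    """Scale factor and offset for one axis, as stored in a LAS header."""
    scale: float
    offset: float


def decode(record_value: int, axis: AxisScaling) -> float:
    """Convert a stored integer coordinate to a real-world value."""
    return record_value * axis.scale + axis.offset


def encode(coordinate: float, axis: AxisScaling) -> int:
    """Convert a real-world coordinate to the integer a LAS writer would store."""
    value = round((coordinate - axis.offset) / axis.scale)
    if not -2**31 <= value < 2**31:
        raise OverflowError("coordinate does not fit in a signed 32-bit integer")
    return value


# Hypothetical header values: millimetre resolution and a local easting offset.
x_axis = AxisScaling(scale=0.001, offset=500_000.0)
stored = encode(512_345.678, x_axis)
print(stored, decode(stored, x_axis))
```

The scale factor sets the coordinate precision (here 1 mm). The offset keeps the integers small enough to fit in 32 bits.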

Q 7. How do you handle noise and outliers in LiDAR point clouds?

Noise and outliers in LiDAR point clouds can stem from various sources, including instrument error, atmospheric effects, and reflections from unexpected objects. Handling these is vital for accurate analysis.

Several techniques are used to address this:

- Filtering: Algorithms like median filtering or statistical outlier removal identify and remove points that deviate significantly from their neighbors. This involves examining the spatial distribution and characteristics of points and removing those that are anomalous.

- Segmentation: Dividing the point cloud into meaningful segments (e.g., ground, vegetation) helps identify outliers within specific regions, improving filtering accuracy.

- Classification-based removal: Removing points classified as noise during the classification process. This is often tied to the initial filtering steps.

The choice of technique depends on the nature and extent of the noise. For instance, a simple median filter might suffice for minor noise, while more sophisticated methods are necessary for dealing with significant outliers or complex data.
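Statistical outlier removal can be sketched in a few lines. This version computes each point’s mean distance to its k nearest neighbors. It then drops points whose value exceeds the cloud-wide mean of that statistic plus a multiple of its standard deviation. It assumes a brute-force neighbor search and illustrative defaults (k = 8, a 2-standard-deviation cutoff). Real pipelines use a spatial index and tune both parameters to point density.

```python
import math
from statistics import mean, pstdev

Point = tuple[float, float, float]


def statistical_outlier_removal(points: list[Point], k: int = 8,
                                std_ratio: float = 2.0) -> list[Point]:
    """Drop points whose mean distance to their k nearest neighbours exceeds
    mean + std_ratio * std of that statistic over the whole cloud.

    Brute-force O(n^2) neighbour search for clarity; large clouds need a
    spatial index such as a k-d tree.
    """
    if len(points) <= k:
        return list(points)  # too few points to estimate neighbourhoods
    mean_knn_dist = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn_dist.append(mean(dists[:k]))
    threshold = mean(mean_knn_dist) + std_ratio * pstdev(mean_knn_dist)
    return [p for p, d in zip(points, mean_knn_dist) if d <= threshold]


# Hypothetical example: a flat 5 x 5 grid plus one spurious point high above it.
grid = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
cleaned = statistical_outlier_removal(grid + [(2.0, 2.0, 50.0)])
print(len(cleaned))
```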

Q 8. Describe various methods for registering LiDAR data.

LiDAR data registration, crucial for accurate geospatial analysis, involves aligning point clouds with each other (for example, overlapping scans or flight lines) and with a known coordinate system. Several methods exist, each with its strengths and weaknesses.

- Co-registration with existing data: This involves aligning the LiDAR data with a pre-existing dataset, such as a high-resolution orthophoto or a previously surveyed control network. Control points (points with known coordinates in both datasets) are identified and used to estimate a transformation (e.g., rigid-body, similarity, or affine) that aligns the datasets. This is common and relatively straightforward.

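- Self-registration (using internal data): For datasets without external references, algorithms such as Iterative Closest Point (ICP) can be used. ICP alternates between two steps. It matches each point to its nearest neighbor in the other scan, then computes the rigid transformation that minimizes the distances between the matched pairs. It repeats until the alignment stops improving. ICP needs a reasonable initial alignment and sufficient overlap, and it is useful for aligning datasets acquired in multiple flight lines or scan positions.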

- GPS/IMU data integration: Many LiDAR systems are equipped with GPS and IMU (Inertial Measurement Unit) sensors. This provides initial positioning information that is then refined using post-processing techniques. This approach combines sensor data for enhanced accuracy and efficiency. However, it’s crucial to address potential biases and errors within the sensor data itself.

- Ground Control Points (GCPs): GCPs are points on the ground with precisely surveyed coordinates. These are measured independently and then used as reference points to register the LiDAR point cloud. GCPs are essential for achieving high-accuracy registration, especially when using co-registration with other data.

The choice of method depends on factors such as the desired accuracy, the availability of reference data, and the complexity of the terrain.
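Iterative Closest Point can be illustrated with a 2D, point-to-point version. It matches each source point to its nearest target point and solves for the rotation and translation that best align the matched pairs, which has a closed-form solution in 2D. It applies that transformation and repeats. This is a teaching sketch that assumes a small initial misalignment and noise-free data. Production LiDAR registration works in 3D, uses spatial indexes for matching, and often uses point-to-plane variants.

```python
import math

Point2D = tuple[float, float]


def best_rigid_transform(src: list[Point2D], dst: list[Point2D]) -> tuple[float, float, float]:
    """Least-squares rotation angle and translation mapping src[i] onto dst[i]."""
    n = len(src)
    csx, csy = sum(x for x, _ in src) / n, sum(y for _, y in src) / n
    cdx, cdy = sum(x for x, _ in dst) / n, sum(y for _, y in dst) / n
    cross = dot = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        ax, ay, bx, by = sx - csx, sy - csy, dx - cdx, dy - cdy
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    return theta, cdx - (c * csx - s * csy), cdy - (s * csx + c * csy)


def transform_points(points, theta, tx, ty):
    """Rotate points by theta (radians) about the origin, then translate."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(source, target, max_iterations=50, tolerance=1e-9):
    """Point-to-point ICP. Returns the aligned source points and the mean squared
    distance to the correspondences used in the last iteration."""
    current = list(source)
    previous_error = error = math.inf
    for _ in range(max_iterations):
        # 1. Correspondences: nearest target point for each source point (brute force).
        matches = [min(target, key=lambda q: math.dist(p, q)) for p in current]
        # 2. Rigid transform that best aligns the matched pairs, applied to the source.
        current = transform_points(current, *best_rigid_transform(current, matches))
        # 3. Stop when the residual no longer improves meaningfully.
        error = sum(math.dist(p, q) ** 2 for p, q in zip(current, matches)) / len(current)
        if abs(previous_error - error) < tolerance:
            break
        previous_error = error
    return current, error


# Hypothetical profile and a copy rotated by 0.05 rad and shifted by (0.2, -0.1).
target = [(float(x), 0.1 * x * x) for x in range(-5, 6)]
source = transform_points(target, 0.05, 0.2, -0.1)
aligned, mse = icp_2d(source, target)
print(f"mean squared error after ICP: {mse:.2e}")
```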

Q 9. What are the advantages and disadvantages of LiDAR compared to other remote sensing techniques?

LiDAR, or Light Detection and Ranging, offers significant advantages over other remote sensing techniques, but it also has limitations.

Advantages:

- High accuracy and precision: LiDAR provides highly accurate 3D point cloud data, allowing for precise measurements of elevation, distances, and object dimensions. This is significantly superior to techniques like photogrammetry in complex or vegetated areas.

- Penetration capabilities: Because a single laser pulse can record multiple returns, part of its energy can pass through gaps in the canopy and reach the ground. This makes LiDAR ideal for creating accurate digital elevation models (DEMs) in forested or heavily vegetated areas.

- Dense point clouds: LiDAR captures a very dense point cloud, resulting in detailed representations of the terrain and objects.

- Data acquisition speed: LiDAR can cover large areas relatively quickly compared to some ground-based surveying techniques.

Disadvantages:

- Cost: LiDAR data acquisition and processing can be expensive compared to other techniques such as satellite imagery.

- Weather dependence: Data acquisition is often hampered by adverse weather conditions like heavy rain or fog, due to signal attenuation.

- Data processing complexity: Processing LiDAR data often requires specialized software and expertise.

- Safety concerns: Airborne LiDAR operations involve aircraft and require careful planning and safety precautions.

Ultimately, the best remote sensing technique depends on the specific application, budget, and required accuracy.

Q 10. Explain the concept of digital elevation models (DEMs) derived from LiDAR.

Digital Elevation Models (DEMs) are digital representations of the Earth’s terrain surface. LiDAR is exceptionally well-suited for creating highly accurate DEMs. The process involves extracting ground points from the raw LiDAR point cloud, interpolating between these points, and creating a raster surface.

Several types of DEMs can be derived from LiDAR:

- Bare-earth DEM: This represents the ground surface without vegetation or buildings. It’s created by filtering out non-ground points.

- Canopy Height Model (CHM): This represents the height of vegetation above the ground. It’s created by subtracting the bare-earth DEM from the Digital Surface Model (the top-of-canopy surface).

- Digital Surface Model (DSM): This represents the top surface, including all features like buildings, trees, and other objects. It is typically created from the highest return in each cell (usually first returns).

LiDAR’s ability to record ground returns through gaps in vegetation gives it a clear advantage over image-based methods for generating accurate, detailed DEMs in complex, vegetated terrain.
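The relationship between these surfaces can be sketched on a simple grid. The DSM takes the highest return in each cell, the bare-earth surface takes the lowest ground-classified return, and the CHM is their difference. This sketch assumes ASPRS classification codes (2 = ground, 5 = high vegetation). It also assumes that per-cell minimum and maximum are acceptable stand-ins for proper interpolation. Cells with no ground return are simply skipped.

```python
import math
from collections import defaultdict

GROUND = 2  # ASPRS LAS classification code for ground


def rasterize(points, cell_size, reducer):
    """Bin (x, y, z) points into square cells and reduce each cell's z values."""
    cells = defaultdict(list)
    for x, y, z in points:
        cells[(math.floor(x / cell_size), math.floor(y / cell_size))].append(z)
    return {cell: reducer(zs) for cell, zs in cells.items()}


def canopy_height_model(points, classes, cell_size=1.0):
    """CHM = DSM (highest return per cell) minus DTM (lowest ground return per cell)."""
    dsm = rasterize(points, cell_size, max)
    dtm = rasterize([p for p, c in zip(points, classes) if c == GROUND], cell_size, min)
    # Cells without ground returns are skipped; a real workflow would first
    # interpolate the bare-earth surface into them.
    return {cell: dsm[cell] - dtm[cell] for cell in dsm.keys() & dtm.keys()}


# Hypothetical returns: a tree over ground in one cell, open ground in the next.
points = [(0.5, 0.5, 100.0), (0.4, 0.6, 118.5), (1.5, 0.5, 101.0)]
classes = [2, 5, 2]  # ground, high vegetation, ground
print(canopy_height_model(points, classes))
```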

Q 11. How do you assess the accuracy and precision of LiDAR data?

Assessing LiDAR data accuracy and precision involves comparing the LiDAR-derived data to a known reference. Several methods are employed:

- Comparison with Ground Control Points (GCPs): The most common method is to compare the LiDAR-derived elevations or coordinates of GCPs with their precisely surveyed coordinates. Root Mean Square Error (RMSE) is commonly used to quantify the differences.

- Comparison with other high-accuracy data: LiDAR data can be compared to other high-accuracy datasets, such as high-resolution aerial photography or existing DEMs, particularly to assess horizontal accuracy.

- Internal consistency checks: Analyzing the point cloud itself for inconsistencies, such as large gaps or unrealistic elevation changes, can provide insights into data quality.

- Statistical analysis: Statistical measures such as standard deviation and variance are used to assess the precision (repeatability) of the LiDAR measurements.

The acceptable level of accuracy and precision depends on the application. For example, engineering projects may require much higher accuracy than broad-scale environmental studies. It’s essential to define accuracy requirements upfront.
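The checkpoint comparison can be sketched as below. It separates systematic bias (mean error), precision (standard deviation of the errors), and overall accuracy (RMSE against the reference). The 1.96 multiplier follows the NSSDA convention for reporting vertical accuracy at 95% confidence, which assumes normally distributed errors. The elevations are hypothetical.

```python
import math
from statistics import mean, pstdev


def vertical_accuracy_report(lidar_z, checkpoint_z):
    """Compare LiDAR elevations with surveyed checkpoint elevations (same units)."""
    if len(lidar_z) != len(checkpoint_z) or not lidar_z:
        raise ValueError("need two equal-length, non-empty lists")
    errors = [z - ref for z, ref in zip(lidar_z, checkpoint_z)]
    rmse_z = math.sqrt(sum(e * e for e in errors) / len(errors))
    return {
        "mean_error": mean(errors),    # systematic bias
        "std_dev": pstdev(errors),     # spread of the errors (precision)
        "rmse_z": rmse_z,              # overall accuracy against the reference
        "accuracy_95": 1.96 * rmse_z,  # NSSDA 95% confidence, normal errors assumed
    }


# Hypothetical LiDAR elevations and surveyed checkpoint elevations (metres).
report = vertical_accuracy_report([10.05, 12.00, 9.90, 15.10],
                                  [10.00, 12.05, 10.00, 15.00])
print(report)
```

A low RMSE with a large mean error points to a correctable vertical offset. A large standard deviation points to noise that no single shift will fix.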

Q 12. Describe your experience with LiDAR data processing software (e.g., ArcGIS, QGIS, TerraScan).

I have extensive experience processing LiDAR data using various software packages, including ArcGIS, QGIS, and TerraScan.

ArcGIS: I use ArcGIS Pro extensively for tasks such as point cloud classification, DEM generation, surface analysis, and integration with other geospatial data. I’m proficient in using tools like the 3D Analyst extension for point cloud processing. For example, I’ve used ArcGIS to classify points in a LiDAR dataset into ground and vegetation classes for building a bare-earth DEM of a steep, forested area, ensuring accurate representation of the terrain despite vegetation cover.

QGIS: QGIS provides a cost-effective open-source alternative for LiDAR processing. I leverage QGIS’s plugins, such as LAStools, for efficient point cloud manipulation and filtering. For instance, I used QGIS and LAStools to perform ground filtering on a large LiDAR dataset efficiently by utilizing parallel processing capabilities of the plugin.

TerraScan: I have experience using TerraScan for tasks such as point cloud registration and visualization. TerraScan’s specialized tools provide robust solutions for processing dense LiDAR data, especially for complex environments.

My expertise in these software packages allows me to efficiently and accurately process large and complex LiDAR datasets to meet project-specific needs.

Q 13. Explain the concept of ground filtering in LiDAR data processing.

Ground filtering is a crucial step in LiDAR data processing that involves identifying and separating ground points from non-ground points (vegetation, buildings, etc.). This is essential for creating accurate bare-earth DEMs and performing other geospatial analyses.

Several ground filtering algorithms exist:

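- Progressive Morphological Filter (PMF): This algorithm applies morphological opening (erosion followed by dilation) with progressively larger windows. It flags points that rise above the opened surface by more than an elevation threshold, which grows with the window size. It’s robust but computationally expensive.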

- Cloth Simulation Filter (CSF): This algorithm turns the point cloud upside down and simulates a cloth falling onto the inverted surface. Points lying within a small distance of the settled cloth are classified as ground. This method is efficient and produces smooth results.

- Progressive TIN densification: This method starts from seed ground points (such as the lowest point in each grid cell) and builds a triangulated irregular network (TIN). It then iteratively adds points whose distance and angle to the TIN fall within set thresholds. It’s effective for smooth terrain but can struggle with complex topography.

- Simple thresholding based on elevation/intensity: This method, while simpler, relies on differences in elevation and intensity to differentiate ground and non-ground points. It is less effective in complex terrain.

The choice of algorithm depends on the characteristics of the LiDAR data and the desired level of accuracy. Often, a combination of methods is used for optimal results. Post-processing is frequently necessary to refine the classification manually and account for any errors.
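The progressive morphological idea can be sketched on a 1D elevation profile. The profile holds one minimum elevation per cell. Each pass applies an opening with a wider window, marks cells that drop by more than the current elevation threshold as non-ground, and carries the opened surface forward. This is a simplified, one-dimensional illustration. The window sizes, slope, and thresholds are hypothetical parameters, not recommended values. Real implementations work on 2D grids.

```python
def erode(values, half_window):
    """Grey-scale erosion: minimum over a moving window."""
    return [min(values[max(0, i - half_window): i + half_window + 1])
            for i in range(len(values))]


def dilate(values, half_window):
    """Grey-scale dilation: maximum over a moving window."""
    return [max(values[max(0, i - half_window): i + half_window + 1])
            for i in range(len(values))]


def progressive_morphological_filter(z, cell_size=1.0, half_windows=(1, 2, 4),
                                     slope=0.3, base_threshold=0.5,
                                     max_threshold=3.0):
    """Return True for cells kept as ground in a 1D elevation profile."""
    surface = list(z)
    is_ground = [True] * len(z)
    previous_window = None
    for hw in half_windows:
        window = 2 * hw + 1  # window width in cells
        # Threshold grows with the change in window size, capped at a maximum.
        if previous_window is None:
            threshold = base_threshold
        else:
            threshold = min(base_threshold + slope * (window - previous_window) * cell_size,
                            max_threshold)
        opened = dilate(erode(surface, hw), hw)  # opening removes narrow bumps
        for i, (before, after) in enumerate(zip(surface, opened)):
            if before - after > threshold:
                is_ground[i] = False
        surface = opened
        previous_window = window
    return is_ground


# Hypothetical profile: flat ground at 10 m, a 4-cell building at 18 m, a 1-cell tree at 14 m.
profile = [10.0] * 20
profile[8:12] = [18.0] * 4
profile[15] = 14.0
flags = progressive_morphological_filter(profile)
print([i for i, g in enumerate(flags) if not g])
```

The small window removes the narrow tree in the first pass. The 4-cell building survives that pass and is removed only once the window exceeds its width. This is why PMF grows the window progressively.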

Q 14. How do you handle data gaps or voids in LiDAR point clouds?

Data gaps or voids in LiDAR point clouds are common and can be caused by various factors, such as obstructions, sensor limitations, or data acquisition errors. Addressing these gaps is crucial for creating complete and accurate models.

Several techniques exist to handle these:

- Interpolation: This involves estimating the missing data based on the surrounding data points. Methods such as linear interpolation, spline interpolation, or kriging can be used. The method’s appropriateness depends on the size and nature of the gap.

- Inpainting: This technique uses more sophisticated algorithms to “fill in” the gaps using information from the surrounding data. It is more robust and appropriate for larger gaps.

- Data acquisition: If feasible, the best solution is to acquire additional LiDAR data to fill the gaps. This may involve planning another flight line, which is the most reliable approach but also the most costly.

- Combination of datasets: If other data is available (e.g., imagery), this may be used to improve the interpolation and potentially fill the gap.

The choice of method depends on the extent and nature of the gap, and the availability of resources. It’s crucial to document the methods used to handle data gaps for transparency and to provide context for the interpretation of results. A quality assessment is critical after gap filling to ensure that the interpolated data is plausible and within the expected quality.
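The interpolation approach can be sketched with inverse distance weighting (IDW). IDW is a simple interpolator not among the methods listed above, but it is common for filling small DEM voids. Each void cell receives a weighted average of nearby known cells, with weights of 1 / distance^power. The power of 2 and the 8-neighbor limit are illustrative assumptions.

```python
import math


def idw_estimate(known, x, y, power=2.0, max_neighbours=8):
    """Inverse distance weighted estimate at (x, y) from known (x, y, z) samples."""
    if not known:
        raise ValueError("no known samples to interpolate from")
    nearest = sorted((math.dist((kx, ky), (x, y)), kz) for kx, ky, kz in known)[:max_neighbours]
    weights = []
    for d, z in nearest:
        if d == 0.0:
            return z  # exact hit: reuse the sample value
        weights.append(1.0 / d ** power)
    return sum(w * z for w, (_, z) in zip(weights, nearest)) / sum(weights)


def fill_voids(grid, cell_size=1.0, **kwargs):
    """Return a copy of a row-major elevation grid with None cells filled by IDW."""
    known = [(c * cell_size, r * cell_size, v)
             for r, row in enumerate(grid) for c, v in enumerate(row) if v is not None]
    return [[v if v is not None else idw_estimate(known, c * cell_size, r * cell_size, **kwargs)
             for c, v in enumerate(row)]
            for r, row in enumerate(grid)]


# Hypothetical 3 x 3 DEM tile (elevation increases to the east) with a void in the middle.
tile = [[0.0, 1.0, 2.0],
        [0.0, None, 2.0],
        [0.0, 1.0, 2.0]]
print(fill_voids(tile)[1][1])
```

IDW never predicts values outside the range of its neighbors. This keeps small fills plausible but flattens peaks and pits inside larger gaps.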

Q 15. What are the applications of LiDAR in forestry or agriculture?

LiDAR, or Light Detection and Ranging, is a powerful remote sensing technique offering high-resolution 3D data. In forestry and agriculture, its applications are transformative.

Forestry: LiDAR excels at measuring tree height and canopy cover and at estimating biomass. Imagine needing to assess the timber volume in a large forest: traditional field methods are time-consuming and labor-intensive. LiDAR can quickly and accurately map the entire area, providing crucial data for sustainable forest management. Structural metrics such as height and density profiles can also help distinguish between tree species, especially when combined with spectral imagery.

Agriculture: Precision agriculture uses LiDAR to create Digital Elevation Models (DEMs) of fields. These DEMs help optimize irrigation and drainage by revealing slopes and low-lying areas with different water needs. LiDAR can also detect variations in crop height and density, allowing farmers to identify areas needing targeted fertilization or pest control, which can increase yields and reduce resource waste. For example, I once worked on a project using LiDAR to map vineyard canopies, which allowed precise spraying and reduced chemical usage and environmental impact.

Q 16. Describe your experience with different coordinate reference systems (CRS).

Coordinate Reference Systems (CRS) are fundamental to geospatial data. My experience spans geographic (latitude/longitude) and projected coordinate systems. I’m proficient in using systems like WGS84 (the most common geographic CRS), UTM (Universal Transverse Mercator), and State Plane Coordinate Systems. Understanding the implications of choosing a particular CRS is crucial. A projected CRS such as a UTM zone keeps distortion low within a limited region and provides linear units (meters) for distance and area measurements. A geographic CRS is better suited to global datasets. I frequently encounter situations requiring datum transformations (converting coordinates between geodetic datums, such as NAD83 and WGS84). I’m well-versed in using software tools to ensure accurate data alignment and analysis. In one large-scale environmental monitoring project, selecting the appropriate CRS was critical for seamlessly integrating data from multiple sources.

Q 17. Explain the concept of spatial resolution in remote sensing.

Spatial resolution in remote sensing refers to the size of the smallest discernible detail in an image, usually expressed as the ground area covered by one pixel. Think of it like the pixels in a digital photograph: a higher spatial resolution means smaller pixels, resulting in a sharper, more detailed image. For example, each pixel in a 1-meter image covers 1 m × 1 m on the ground, so objects around a meter across can be distinguished. A 30-meter image blends any feature much smaller than 30 meters into its surrounding pixel. The choice of spatial resolution depends on the application. High-resolution imagery is necessary for detailed mapping, such as urban planning, whereas lower-resolution data may suffice for broad-scale environmental monitoring. I often work with datasets ranging from very high-resolution (sub-meter) LiDAR point clouds to coarser-resolution satellite imagery. Understanding the strengths and limitations of each resolution is vital for successful data interpretation.

Q 18. How do you interpret spectral signatures in remote sensing imagery?

Spectral signatures represent the characteristic reflectance or emission of different materials across wavelengths of the electromagnetic spectrum. Each material interacts with light differently, producing a distinctive spectral ‘fingerprint’. For example, healthy vegetation absorbs strongly in the visible red and reflects strongly in the near-infrared (NIR), while bare soil shows more similar reflectance in the red and NIR. Interpreting these signatures involves analyzing the values in each spectral band. Ideally, raw digital numbers (DNs) are first converted to surface reflectance so that images from different dates and sensors are comparable. This allows us to classify land cover types such as forests, water bodies, or urban areas. Spectral indices (e.g., NDVI, the Normalized Difference Vegetation Index) are frequently used to enhance the contrast between features and aid interpretation. I regularly use spectral unmixing to separate mixed pixels (pixels containing multiple materials) and estimate the proportion of each component within them.
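NDVI is a small calculation: NDVI = (NIR − Red) / (NIR + Red). It ranges from −1 to +1, with dense healthy vegetation toward the high end and water typically negative. The sketch below assumes surface-reflectance inputs scaled 0 to 1. It returns NaN when both bands are zero. The sample reflectances are hypothetical.

```python
import math


def ndvi(nir: float, red: float) -> float:
    """NDVI = (NIR - Red) / (NIR + Red); NaN when both bands are zero."""
    total = nir + red
    if total == 0:
        return math.nan
    return (nir - red) / total


# Hypothetical surface-reflectance values (NIR, Red) for three pixels.
samples = {"healthy vegetation": (0.50, 0.05),
           "bare soil": (0.30, 0.25),
           "water": (0.02, 0.05)}
for name, (nir, red) in samples.items():
    print(f"{name}: NDVI = {ndvi(nir, red):.2f}")
```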

Q 19. What are the different types of remote sensing sensors?

Remote sensing employs a wide array of sensors, categorized broadly into passive and active systems.

Passive sensors, like multispectral and hyperspectral cameras on satellites, measure reflected or emitted radiation from the Earth’s surface. Examples include Landsat and Sentinel satellites.

Active sensors, like LiDAR and radar, emit their own radiation and measure the reflected signal. LiDAR uses laser pulses to measure distance, while radar uses radio waves. Each sensor type has its advantages and disadvantages in terms of cost, resolution, penetration capability, and data acquisition speed. For example, I used SAR (Synthetic Aperture Radar) data for mapping flooded areas because it can penetrate clouds and even some vegetation.

The choice of sensor depends on the specific application and the required information.

Q 20. Explain the concept of image classification in remote sensing.

Image classification in remote sensing is the process of assigning different land cover types or thematic categories to pixels in a remote sensing image. This involves grouping pixels with similar spectral signatures into meaningful classes. Several classification techniques exist, including supervised and unsupervised methods.

Supervised classification requires training data – areas where the land cover type is already known. The algorithm learns to associate spectral signatures with specific classes. Maximum likelihood classification and support vector machines are common supervised techniques.

Unsupervised classification, such as k-means clustering, groups pixels based on their spectral similarity without prior knowledge of classes.

Accuracy assessment is vital after classification, comparing the results to ground truth data. Image classification provides crucial information for various applications like urban planning, environmental monitoring, and resource management. In a recent project, I used a supervised classification approach to map deforestation patterns using satellite imagery.
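Unsupervised classification with k-means can be sketched on a handful of pixel spectra. The assignment step puts each pixel in the cluster with the nearest centroid in spectral space. The update step moves each centroid to the mean of its members, and the loop repeats until no pixel changes cluster. This sketch uses a deterministic farthest-point initialization as a simple stand-in for k-means++. The (red, NIR) values are hypothetical. Cluster numbers are arbitrary labels that an analyst must still map to land cover classes.

```python
import math

Pixel = tuple[float, ...]


def _centroid(members: list[Pixel]) -> Pixel:
    """Band-wise mean of a list of pixel spectra."""
    return tuple(sum(band) / len(members) for band in zip(*members))


def kmeans(pixels: list[Pixel], k: int, max_iterations: int = 100):
    """Cluster pixel spectra into k classes; returns (labels, centroids)."""
    # Farthest-point initialisation: start from the first pixel, then repeatedly
    # add the pixel farthest from all centroids chosen so far.
    centroids = [pixels[0]]
    while len(centroids) < k:
        centroids.append(max(pixels, key=lambda p: min(math.dist(p, c) for c in centroids)))
    labels = None
    for _ in range(max_iterations):
        # Assignment step: each pixel joins its nearest centroid.
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in pixels]
        if new_labels == labels:
            break  # converged: no pixel changed cluster
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == j]
            if members:  # keep the previous centroid if a cluster empties
                centroids[j] = _centroid(members)
    return labels, centroids


# Hypothetical (red, NIR) reflectance pairs for six pixels.
pixels = [(0.05, 0.02), (0.06, 0.03),   # water-like
          (0.05, 0.50), (0.04, 0.45),   # vegetation-like
          (0.30, 0.35), (0.28, 0.33)]   # soil/built-up-like
labels, _ = kmeans(pixels, k=3)
print(labels)
```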

Q 21. What are the ethical considerations related to using remote sensing data?

Ethical considerations in remote sensing are crucial. The high spatial and spectral resolution of modern sensors raises concerns about privacy and security.

Privacy: High-resolution imagery can potentially reveal sensitive personal information. Strict regulations and responsible data handling are essential to prevent unauthorized access and misuse.

Security: Remote sensing data can be vulnerable to malicious use, for example, in military applications or for planning terrorist attacks. Data security measures and access controls are crucial.

Bias and fairness: Algorithms used in image processing and classification can inherit and amplify existing societal biases, leading to unfair or discriminatory outcomes. Careful consideration of algorithm design and data selection is crucial to mitigate these risks.

Data ownership and access: Clear guidelines regarding data ownership and access rights are crucial. Ensuring equitable access to remote sensing data, especially for developing countries, is important for global sustainability.

Responsible use of remote sensing technologies requires a strong ethical framework to ensure that the benefits outweigh the potential risks.

Q 22. Describe your experience working with large datasets.

Working with massive datasets is commonplace in remote sensing and LiDAR. My experience involves handling terabytes of data from various sources, including airborne LiDAR point clouds, multispectral imagery, and DEMs (Digital Elevation Models). I’ve successfully managed this through a combination of strategies. These include leveraging cloud computing platforms like AWS or Google Cloud for storage and processing, using parallel processing techniques to distribute the computational load, and employing efficient data formats like LAS for LiDAR and GeoTIFF for raster data. For instance, in one project involving a nationwide LiDAR survey, we processed over 50 terabytes of data by partitioning it into manageable chunks and processing them concurrently on a cloud-based cluster. This allowed us to complete the project within a reasonable timeframe and budget, which would have been impractical on a single workstation.

Moreover, efficient data organization through structured file systems and metadata management is crucial. This ensures traceability and facilitates easy access to specific datasets when needed.

Q 23. How do you manage and organize geospatial data?

Geospatial data management involves a multi-faceted approach that prioritizes organization, accessibility, and data integrity. I typically use a combination of Geographic Information Systems (GIS) software like ArcGIS or QGIS, along with dedicated geospatial databases such as PostGIS. This allows for efficient storage, retrieval, and analysis.

- Data Organization: I organize data using a hierarchical structure, categorizing datasets by project, data type (e.g., LiDAR, imagery), and acquisition date. Clear naming conventions are vital for quick identification.

- Metadata Management: Comprehensive metadata, including acquisition parameters, processing steps, and coordinate systems, are meticulously documented. This ensures data provenance and reproducibility.

- Data Quality Control: Regular data quality checks are incorporated to identify and address errors or inconsistencies. This might involve visual inspection, statistical analysis, and comparison with reference data.

- Version Control: I use version control systems such as Git to track changes to processing scripts and to maintain versions of processed data.

For example, in a project mapping coastal erosion, I organized LiDAR data from multiple years into a geodatabase, enabling temporal analysis and change detection.

Q 24. Explain the concept of orthorectification.

Orthorectification is a geometric correction process that removes geometric distortions from remotely sensed imagery, such as aerial photographs, satellite images, and LiDAR-derived imagery. These distortions are caused primarily by variations in terrain elevation and by the sensor’s position and orientation (tilt). The outcome is an image that accurately represents the Earth’s surface as if viewed from directly overhead (a planimetric view).

The process involves several steps:

- Determining Ground Control Points (GCPs): GCPs are points with known coordinates in both the image and a reference coordinate system (e.g., UTM).

- Creating a Digital Elevation Model (DEM): A DEM provides elevation information for each pixel in the image.

- Geometric Transformation: This step uses the GCPs and DEM to mathematically correct the image’s geometry, removing relief displacement and other distortions.

- Resampling: The corrected image is resampled to the desired resolution and projection.

Think of it like straightening a slightly warped photograph – the orthorectified image provides a more accurate and consistent representation of the real world, essential for accurate measurements and analysis.
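Relief displacement, the distortion that orthorectification removes, can be quantified for a vertical aerial photograph with the classic relation d = r × h / H. Here d is the displacement on the image, r is the radial distance on the image from the nadir point to the displaced top of the object, h is the object height, and H is the flying height above the same datum. The sketch below assumes a truly vertical photo over flat terrain. The building and flight values are hypothetical.

```python
def relief_displacement(radial_distance: float, object_height: float,
                        flying_height: float) -> float:
    """Relief displacement d = r * h / H on a vertical aerial photograph.

    radial_distance: image distance from the nadir point to the displaced top of
        the object; the result is returned in the same units.
    object_height, flying_height: heights above the same datum, in the same units.
    """
    if flying_height <= 0:
        raise ValueError("flying height must be positive")
    return radial_distance * object_height / flying_height


# Hypothetical case: a 30 m building whose top appears 80 mm from the photo
# centre, imaged from 1,200 m above ground.
print(relief_displacement(80.0, 30.0, 1200.0))  # prints 2.0 (mm on the image)
```

Displacement grows with object height and distance from the photo center. This is why tall features near image edges lean most and why an accurate DEM is essential for the correction.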

Q 25. What are the challenges in processing and analyzing LiDAR data in urban environments?

Processing and analyzing LiDAR data in urban environments presents unique challenges due to the high density of objects and complex surface features.

- High Point Density and Data Volume: Urban projects are often flown at high point densities, and complex structures generate many returns. The resulting point clouds are very large and require efficient processing techniques and substantial computing resources.

- Classification Complexity: Distinguishing between different features (buildings, vegetation, ground) is challenging because of dense and overlapping objects. Sophisticated algorithms and manual editing are often required for accurate classification.

- Building Reflections and Noise: Glass facades, windows, and wet or metallic surfaces can cause specular or multipath reflections. These produce spurious points (for example, apparent points below the ground or inside buildings) that complicate the extraction of accurate building models.

- Data Occlusion: Tall buildings can obscure ground points and other features, leading to incomplete or missing data in certain areas.

- Moving Objects: Vehicles and pedestrians moving during data acquisition leave smeared or duplicated points, which degrade the accuracy of the data unless they are removed.

Addressing these challenges often involves employing advanced filtering techniques, sophisticated classification algorithms (e.g., machine learning), and careful quality control procedures.

Q 26. How do you handle data projection and transformation?

Data projection and transformation is crucial for integrating geospatial data from diverse sources. Different datasets may use different coordinate systems and projections, so they cannot be reliably compared or analyzed together until they are converted into a common coordinate reference system (CRS) and made spatially compatible.

I use GIS software and libraries like GDAL/OGR to handle these transformations. These tools perform precise conversions between CRSs using well-defined mathematical equations. Commonly encountered systems include UTM (Universal Transverse Mercator), unprojected geographic coordinates (latitude/longitude), and State Plane Coordinate Systems.

For example, if I need to overlay a LiDAR DEM (projected in UTM Zone 10) with a satellite image referenced to geographic coordinates (latitude/longitude), I would use GIS software or GDAL command-line tools such as gdalwarp to reproject one of the datasets to match the other’s CRS. The process involves defining the source and target CRS and applying the appropriate transformation equations. Accurate results also depend on selecting the correct datum and ellipsoid parameters, including a datum transformation when the two datasets use different datums.
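To show what the "well-defined mathematical equations" look like, here is a minimal sketch of the forward projection from WGS 84 latitude/longitude to UTM, using the standard Transverse Mercator series (as given in Snyder's USGS *Map Projections: A Working Manual*). It assumes both input and output are on the WGS 84 datum (no datum shift) and ignores the Norway/Svalbard zone exceptions; the function names are hypothetical. In practice I would rely on GDAL/PROJ rather than hand-written formulas.

```python
import math
from typing import Optional, Tuple

# WGS 84 ellipsoid and the UTM scale factor on the central meridian.
A = 6378137.0                 # semi-major axis, metres
F = 1 / 298.257223563         # flattening
E2 = F * (2 - F)              # first eccentricity squared
EP2 = E2 / (1 - E2)           # second eccentricity squared
K0 = 0.9996


def utm_zone(lon_deg: float) -> int:
    """6-degree UTM zone number (ignores the Norway/Svalbard exceptions)."""
    return int((lon_deg + 180.0) // 6) % 60 + 1


def meridian_arc(phi: float) -> float:
    """Distance along the meridian from the equator to latitude phi (radians)."""
    e4, e6 = E2 ** 2, E2 ** 3
    return A * ((1 - E2 / 4 - 3 * e4 / 64 - 5 * e6 / 256) * phi
                - (3 * E2 / 8 + 3 * e4 / 32 + 45 * e6 / 1024) * math.sin(2 * phi)
                + (15 * e4 / 256 + 45 * e6 / 1024) * math.sin(4 * phi)
                - (35 * e6 / 3072) * math.sin(6 * phi))


def latlon_to_utm(lat_deg: float, lon_deg: float,
                  zone: Optional[int] = None) -> Tuple[float, float, int]:
    """Project WGS 84 latitude/longitude to UTM (easting, northing, zone)."""
    if zone is None:
        zone = utm_zone(lon_deg)
    lon0 = math.radians(zone * 6 - 183)   # central meridian of the zone
    phi = math.radians(lat_deg)
    sin_phi, cos_phi, tan_phi = math.sin(phi), math.cos(phi), math.tan(phi)

    n = A / math.sqrt(1 - E2 * sin_phi ** 2)  # prime-vertical radius of curvature
    t = tan_phi ** 2
    c = EP2 * cos_phi ** 2
    a = cos_phi * (math.radians(lon_deg) - lon0)
    m = meridian_arc(phi)

    x = K0 * n * (a + (1 - t + c) * a ** 3 / 6
                  + (5 - 18 * t + t ** 2 + 72 * c - 58 * EP2) * a ** 5 / 120)
    y = K0 * (m + n * tan_phi * (a ** 2 / 2
                                 + (5 - t + 9 * c + 4 * c ** 2) * a ** 4 / 24
                                 + (61 - 58 * t + t ** 2 + 600 * c - 330 * EP2) * a ** 6 / 720))

    easting = x + 500000.0                                # false easting
    northing = y + (10000000.0 if lat_deg < 0 else 0.0)   # false northing, southern hemisphere
    return easting, northing, zone
```

For a longitude such as -122.4, `utm_zone` returns 10, matching the UTM Zone 10 example above; a point on that zone's central meridian (-123°) always projects to an easting of exactly 500,000 m.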

Q 27. Describe your experience with different data visualization techniques.

Data visualization is critical for communicating findings and interpreting results from remote sensing and LiDAR data. My experience encompasses a range of techniques.

- 2D Mapping: GIS software is my primary tool for creating thematic maps displaying various features derived from LiDAR and imagery data (e.g., elevation, vegetation density, land use). I use different symbology and color schemes to effectively communicate information.

- 3D Visualization: I utilize software like ArcGIS Pro, QGIS, and specialized point cloud visualization tools to create 3D models of terrain and urban environments. This is particularly useful for showcasing the results of LiDAR data processing, such as building models or digital surface models (DSMs).

- Interactive Dashboards: For conveying complex datasets to non-technical audiences, I create interactive dashboards using tools such as Tableau or Power BI. These dashboards allow users to explore the data interactively through filters and visualizations.

- Animations and Fly-throughs: To create engaging visualizations, I develop animations and virtual fly-throughs of 3D models. This is especially effective in presenting project results to clients or stakeholders.

In one project, creating a 3D model of a historical site using LiDAR data helped stakeholders visualize the site’s topography and understand its historical significance more effectively than simply using 2D maps.
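The 2D elevation maps mentioned above usually rely on a color ramp that maps each elevation value to a color. A minimal sketch of a linearly interpolated ramp follows; the stop elevations and RGB values are hypothetical choices for illustration, not a standard palette, and the function names are likewise illustrative.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]

# Hypothetical hypsometric ramp: (elevation in metres, color) stops, ascending.
TERRAIN_RAMP: List[Tuple[float, RGB]] = [
    (0.0, (0, 97, 71)),         # lowlands: dark green
    (500.0, (242, 212, 133)),   # foothills: tan
    (1500.0, (145, 96, 60)),    # mountains: brown
    (3000.0, (255, 255, 255)),  # peaks: white
]


def ramp_color(z: float, stops: Sequence[Tuple[float, RGB]] = TERRAIN_RAMP) -> RGB:
    """Linearly interpolate a color for elevation z, clamping outside the ramp."""
    elevations = [e for e, _ in stops]
    if z <= elevations[0]:
        return stops[0][1]
    if z >= elevations[-1]:
        return stops[-1][1]
    i = bisect_right(elevations, z)  # stops[i-1] <= z < stops[i]
    (z0, c0), (z1, c1) = stops[i - 1], stops[i]
    t = (z - z0) / (z1 - z0)
    return tuple(round(a + t * (b - a)) for a, b in zip(c0, c1))


def colorize(dem: List[List[float]],
             stops: Sequence[Tuple[float, RGB]] = TERRAIN_RAMP) -> List[List[RGB]]:
    """Map a DEM grid (rows of elevations) to a grid of RGB tuples."""
    return [[ramp_color(z, stops) for z in row] for row in dem]
```

Keeping the stops as data rather than code makes it easy to swap in a colorblind-friendly or diverging scheme for a different audience.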

Q 28. Explain your understanding of the impact of different LiDAR pulse waveforms.

Different LiDAR pulse waveforms, or more precisely different ways of recording the backscattered signal from each emitted pulse, significantly affect data quality and the applications for which the data is suitable. The right choice depends on the specific project requirements and the nature of the target surface.

- Single-return waveforms: The system records only one return per emitted pulse, usually the first, which corresponds to the first surface the pulse intercepts (for an airborne sensor, typically the highest point). These data are relatively simple to process but may miss underlying features. They’re often used for basic terrain mapping of open areas.

- Multiple-return waveforms: The system records several discrete returns per pulse, one for each surface the pulse partially intersects. This allows vegetation canopy, understory, and ground surface to be discriminated, which is crucial for forest inventory, urban modeling, and detailed terrain characterization.

- Waveform digitization (full-waveform LiDAR): The system records the entire returned waveform, capturing the shape and amplitude of the signal over time. This can improve surface classification and allows detailed properties of the target (e.g., vegetation density and vertical structure) to be extracted in post-processing.

For instance, in a forestry application, multiple-return waveforms are essential to accurately measure tree height and canopy density. Conversely, single-return waveforms might suffice for mapping relatively flat terrain.
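The link between a digitized waveform and discrete returns can be sketched as follows: find local maxima above a noise threshold and convert each echo's round-trip time to range with R = c·t/2. This is a deliberately simple peak detector with hypothetical names and parameters; it assumes sample 0 coincides with pulse emission, whereas operational full-waveform processing typically uses more robust methods such as Gaussian decomposition.

```python
from typing import List, Tuple

C = 299_792_458.0  # speed of light in vacuum, m/s


def extract_returns(waveform: List[float], sample_interval_ns: float,
                    threshold: float) -> List[Tuple[float, float]]:
    """Return (range_m, amplitude) for each local maximum at or above threshold.

    Sample i is assumed to be recorded i * sample_interval_ns after emission,
    so range = c * t / 2 (the pulse travels to the target and back).
    On a flat-topped peak, the first sample of the plateau is reported.
    """
    returns = []
    for i in range(1, len(waveform) - 1):
        amp = waveform[i]
        if amp >= threshold and waveform[i - 1] < amp >= waveform[i + 1]:
            t = i * sample_interval_ns * 1e-9
            returns.append((C * t / 2.0, amp))
    return returns
```

In a forestry context, the range difference between the first (canopy) and last (ground) echo gives a rough per-pulse estimate of tree height; for echoes 30 ns apart that is about 4.5 m.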

Key Topics to Learn for Remote Sensing and LiDAR Interviews

- Electromagnetic Spectrum & Sensor Principles: Understand the interaction of electromagnetic radiation with the Earth’s surface and the fundamental principles behind various remote sensing sensors (e.g., passive vs. active, spectral resolution, spatial resolution).

- LiDAR Fundamentals: Grasp the working mechanisms of LiDAR systems, including data acquisition, point cloud processing, and various LiDAR platforms (e.g., airborne, terrestrial, mobile).

- Image Processing and Analysis Techniques: Familiarize yourself with common image processing techniques like geometric correction, atmospheric correction, and various image classification methods relevant to remote sensing data.

- Point Cloud Processing and Analysis: Master techniques for filtering, classifying, and analyzing LiDAR point clouds, including their use in generating digital terrain models (DTMs) and digital surface models (DSMs).

- Geospatial Data Management and Analysis: Gain proficiency in handling geospatial data formats (e.g., GeoTIFF, Shapefiles), using GIS software (e.g., ArcGIS, QGIS), and performing spatial analysis operations.

- Applications in Environmental Monitoring: Explore practical applications of remote sensing and LiDAR in areas such as deforestation monitoring, precision agriculture, and urban planning. Be prepared to discuss specific case studies.

- Data Accuracy and Error Assessment: Understand sources of error in remote sensing and LiDAR data and methods for assessing data accuracy and quality.

- Current Trends and Future Directions: Stay updated on emerging trends in remote sensing and LiDAR technologies, such as hyperspectral imaging, UAV-based LiDAR, and AI-driven data analysis.

Next Steps

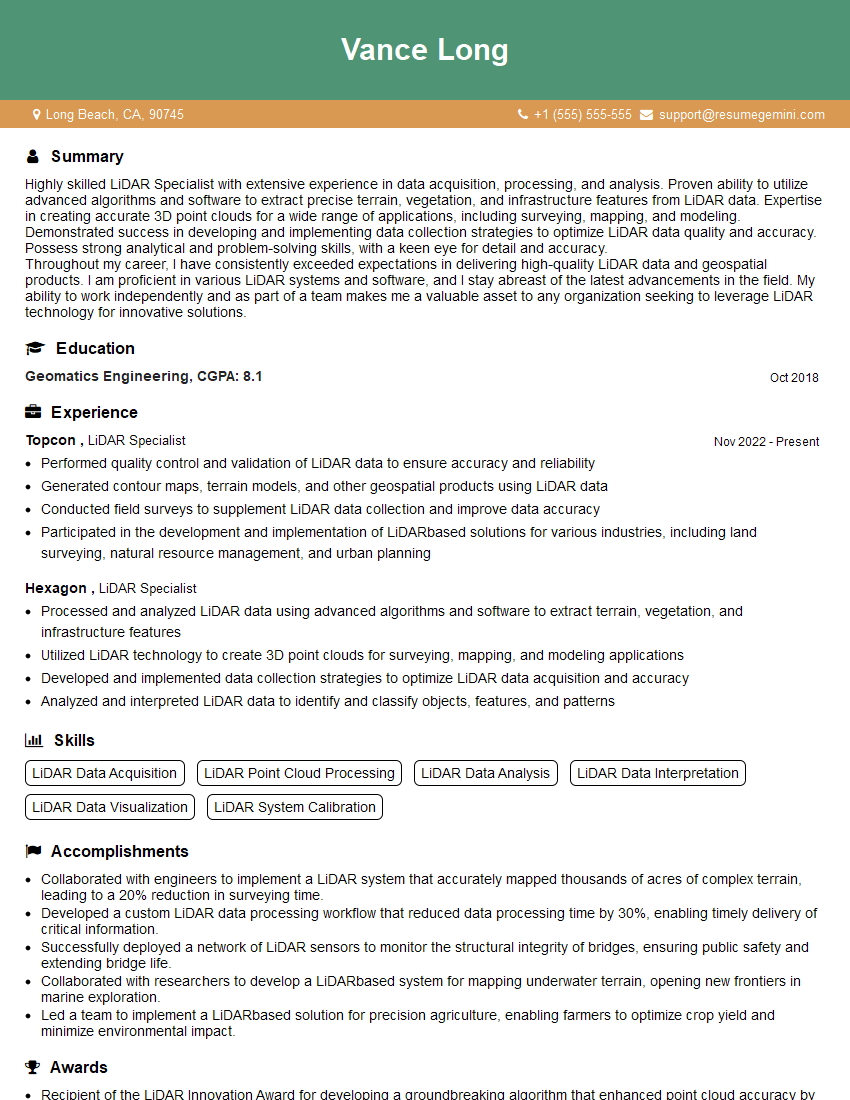

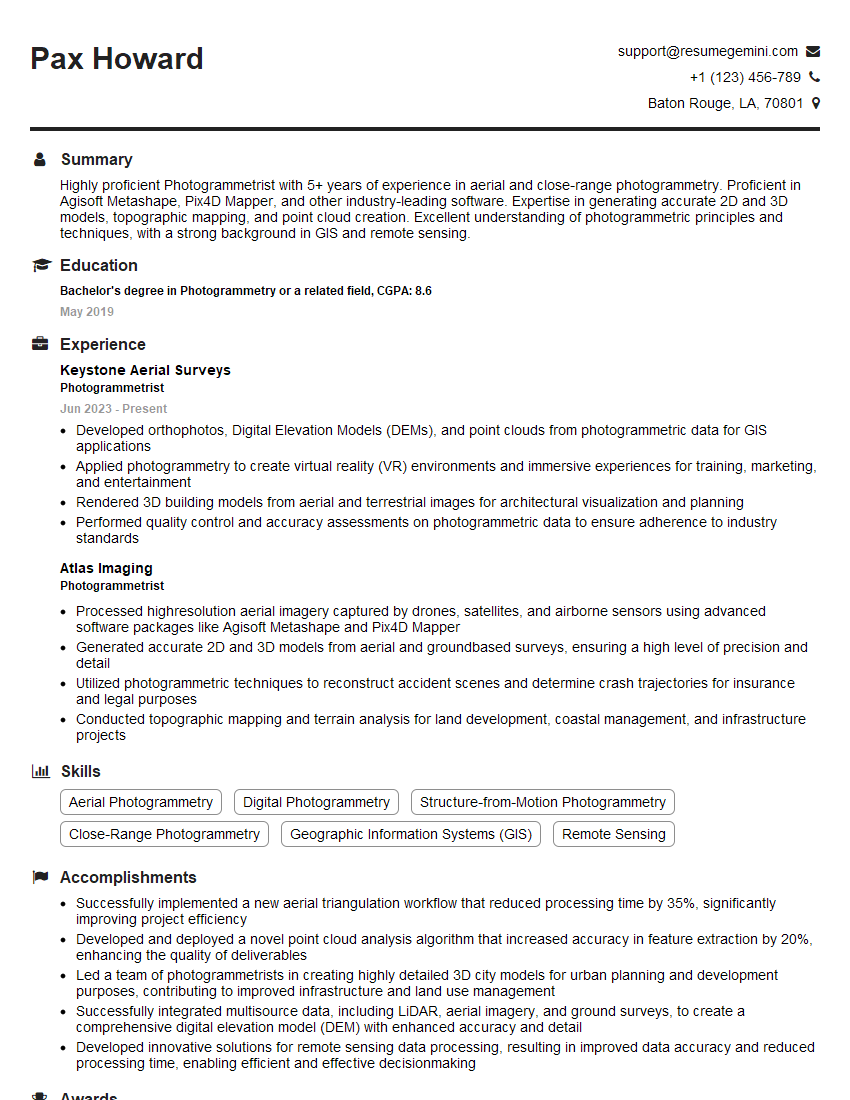

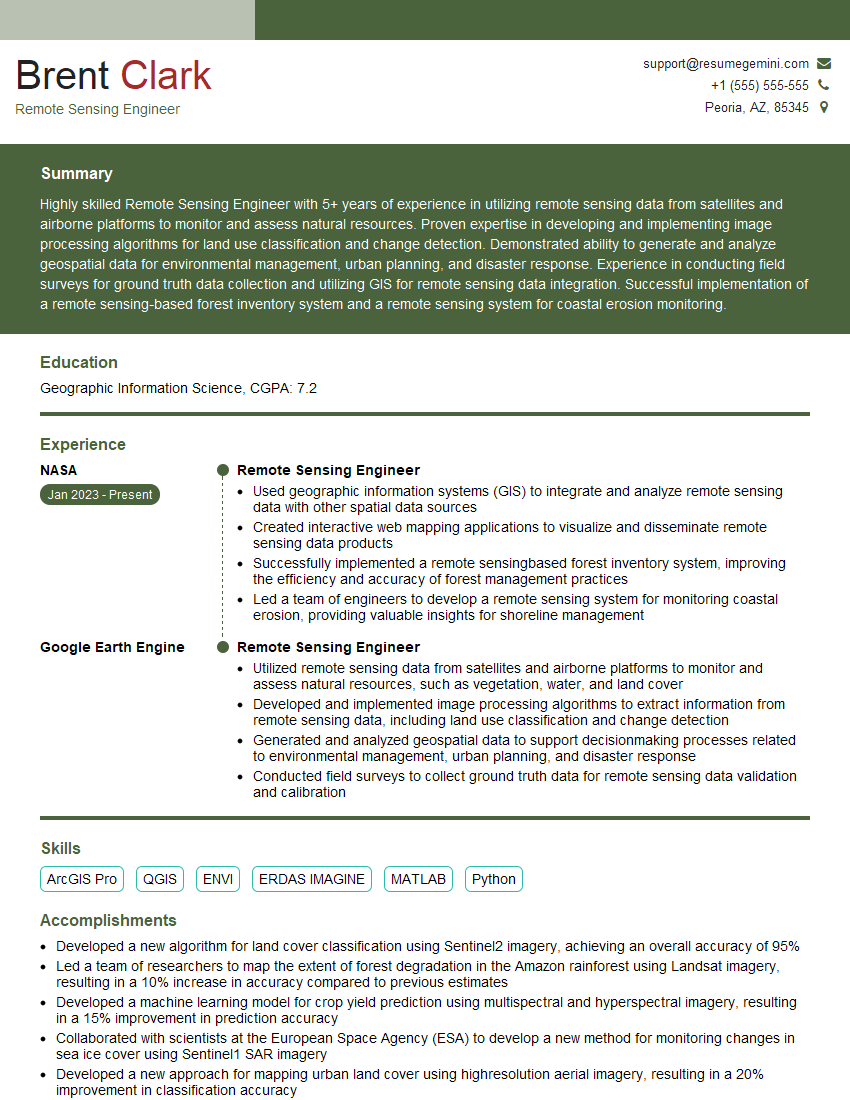

Mastering Remote Sensing and LiDAR opens doors to exciting and impactful careers in various fields. To maximize your job prospects, creating a strong, ATS-friendly resume is crucial. A well-crafted resume effectively showcases your skills and experience, significantly increasing your chances of landing your dream role. ResumeGemini is a trusted resource to help you build a professional and impactful resume. We provide examples of resumes tailored to Remote Sensing and LiDAR to guide you in creating a document that highlights your unique qualifications. Invest time in building a compelling resume – it’s an essential step in your journey to a successful career in this dynamic field.
