Preparation is the key to success in any interview. In this post, we’ll explore crucial Rendering and visualization interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Rendering and visualization Interview

Q 1. Explain the difference between ray tracing and rasterization.

Ray tracing and rasterization are two fundamental rendering techniques used to create images from 3D models. They differ significantly in their approach to generating pixels on the screen.

Rasterization is a process that essentially ‘paints’ the polygons of a 3D model onto a 2D screen. It projects each polygon (usually a triangle) into screen space, works out which pixels it covers, and shades those pixels from the polygon’s material, textures, and lighting. A depth buffer keeps only the surface nearest the camera at each pixel. Think of it like filling in a coloring book: you’re filling in pre-defined shapes. It’s computationally efficient and maps well to GPU hardware, which makes it suitable for real-time applications like video games.
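As a rough illustration of the coverage step, the sketch below tests pixel centers against a triangle’s three edge functions. It assumes the vertices are already projected to pixel coordinates and ordered counter-clockwise in a y-up frame, so all three edge values are non-negative inside. A real GPU also interpolates depth and vertex attributes with the same weights and applies tie-breaking rules for pixels that sit exactly on an edge shared by two triangles; the function names here are illustrative.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed-area test: positive when P lies to the left of the edge A->B."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centers lie inside the triangle."""
    (x0, y0), (x1, y1), (x2, y2) = v0, v1, v2
    # Only visit pixels in the triangle's bounding box, clamped to the screen.
    min_x = max(int(min(x0, x1, x2)), 0)
    max_x = min(int(max(x0, x1, x2)) + 1, width)
    min_y = max(int(min(y0, y1, y2)), 0)
    max_y = min(int(max(y0, y1, y2)) + 1, height)
    covered = []
    for y in range(min_y, max_y):
        for x in range(min_x, max_x):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            w0 = edge(x1, y1, x2, y2, px, py)
            w1 = edge(x2, y2, x0, y0, px, py)
            w2 = edge(x0, y0, x1, y1, px, py)
            # Inside when the center is on the inner side of all three edges.
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                covered.append((x, y))
    return covered
```

For example, `rasterize_triangle((0, 0), (4, 0), (0, 4), 8, 8)` returns the 10 pixels whose centers fall inside that right triangle.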

Ray tracing, on the other hand, simulates how light interacts with the scene. It starts with the camera and casts ‘rays’ of light backward into the scene. Each ray checks for intersections with objects, determines the material properties of the intersected object and calculates lighting and shading effects like reflections and refractions. This process is repeated recursively for each bounce of light, creating highly realistic images. It’s like using a flashlight in a dark room and tracing where the light lands, accounting for reflections and shadows. While very realistic, it’s computationally expensive, making it more suited to offline rendering for things like architectural visualizations and film.
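The basic operation behind ray tracing is a ray-object intersection test. Here is a minimal sketch for a sphere. It assumes vectors are plain 3-tuples and the ray direction is normalized, and the function name is illustrative. In a full tracer, each camera ray would be tested against every object (usually through an acceleration structure such as a BVH), and the nearest hit would then be shaded and used to spawn reflection, refraction, or shadow rays.

```python
import math


def ray_sphere(origin, direction, center, radius):
    """Distance t to the nearest hit in front of the ray origin, or None.

    Solves |o + t*d - c|^2 = r^2, a quadratic in t. Assumes `direction` is
    normalized, so the quadratic's leading coefficient is 1.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))  # half the linear coefficient
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None  # the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    for t in (-b - sqrt_disc, -b + sqrt_disc):  # nearer root first
        if t > 1e-6:  # small epsilon avoids re-hitting the surface we start on
            return t
    return None
```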

In short: Rasterization is fast but less realistic, while ray tracing is slow but highly realistic. Modern rendering often combines both techniques, leveraging rasterization’s speed for initial rendering and ray tracing for enhancing details and realism where needed (hybrid rendering).

Q 2. Describe your experience with different rendering engines (e.g., Unreal Engine, Unity, V-Ray, Arnold).

I have extensive experience with several rendering engines, each suited for different tasks and workflows. My experience includes:

- Unreal Engine: Primarily used for real-time rendering in interactive applications, particularly games and architectural walkthroughs. I’ve leveraged its Blueprint visual scripting system for rapid prototyping and its robust material editor for creating complex surface properties. A recent project involved creating a photorealistic virtual tour of a museum, using Unreal Engine’s lighting and post-processing capabilities to achieve a high level of fidelity.

- Unity: Similar to Unreal Engine in its real-time capabilities, but I’ve found it particularly useful for simpler projects or those with a focus on mobile platforms. Its asset store offers a vast library of pre-built assets, accelerating development. I used Unity to create an interactive educational experience about the solar system, benefiting from its efficient rendering capabilities and ease of integration with various platforms.

- V-Ray: A powerful offline renderer known for its accuracy and ability to produce high-quality images, ideal for architectural visualization and product design. My expertise includes mastering its material system, GI algorithms, and lighting techniques to achieve photorealism. A recent project involved creating marketing renders for a new line of luxury furniture, where V-Ray’s advanced features were crucial in capturing the intricate details and textures of the pieces.

- Arnold: Another high-end offline renderer, known for its reliability on very complex production scenes and its versatility. I’ve used Arnold for both architectural and film-related projects, appreciating its robust procedural shading capabilities and its integration with Autodesk Maya. For a recent short film, I used Arnold to render highly detailed characters and environments, benefiting from its ability to handle complex geometry and intricate lighting scenarios.

Q 3. How do you optimize a scene for faster rendering times?

Optimizing a scene for faster rendering involves a multi-pronged approach focusing on reducing the computational load. Here are some key strategies:

- Geometry Optimization: Reducing polygon count is paramount. Use level of detail (LOD) systems, where lower-polygon versions are used at greater distances from the camera. Combine meshes where possible to reduce the number of objects the renderer has to process. Consider using simpler shapes where appropriate.

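- Texture Optimization: Use textures with appropriate resolutions, and avoid overly large textures unless absolutely necessary. Use GPU texture compression formats where supported. Generate mipmaps (prefiltered, downsampled copies of each texture) so that distant surfaces sample smaller levels, which reduces aliasing and memory bandwidth. Employ texture atlasing to combine multiple smaller textures into one larger sheet.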

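- Lighting Optimization: Avoid overly complex lighting setups. Use portals to help the renderer sample environment light entering interiors, and use light linking to limit which objects each light affects. Both can speed up global illumination or reduce its noise. Carefully place and design lights to minimize the number required.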

- Material Optimization: Utilize efficient shaders. Avoid complex shader networks that create unnecessary computational overhead. Pre-calculate values where possible.

- Render Settings Optimization: Optimize render settings based on the required image quality. Consider reducing sample counts for faster rendering, though this may reduce the quality of the final image. Utilize denoising algorithms to decrease render times while preserving image quality.

- Scene Organization: Keep the scene organized with layers and groups so that hidden or unneeded objects can easily be excluded from a render. Use instances for repeated objects to cut memory use and render times.

The key is to find the balance between render time and image quality. Profiling tools within the rendering engine can help identify performance bottlenecks.
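To see why texture resolution matters, the sketch below computes the uncompressed memory of a texture and its full mip chain. In a mip chain, each level is half the size of the previous one, down to 1x1. It assumes 8-bit RGBA (4 bytes per pixel); block-compressed GPU formats need considerably less. The function names are illustrative.

```python
def mip_chain(width, height):
    """Return the (width, height) of every mip level, from the base level down to 1x1."""
    levels = [(width, height)]
    while width > 1 or height > 1:
        # Each level halves both dimensions, never going below 1 pixel.
        width, height = max(1, width // 2), max(1, height // 2)
        levels.append((width, height))
    return levels


def texture_bytes(width, height, bytes_per_pixel=4, with_mips=True):
    """Uncompressed texture memory in bytes, optionally including the full mip chain."""
    levels = mip_chain(width, height) if with_mips else [(width, height)]
    return sum(w * h * bytes_per_pixel for w, h in levels)


if __name__ == "__main__":
    for size in (4096, 2048, 1024):
        mib = texture_bytes(size, size) / (1024 * 1024)
        print(f"{size}x{size} RGBA8 with mips: {mib:.1f} MiB")
```

Halving a texture’s resolution cuts its memory to a quarter, while a full mip chain adds roughly one third on top of the base level. A 1024x1024 RGBA8 texture, for example, takes 4,194,304 bytes alone and 5,592,404 bytes with mips.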

Q 4. What are your preferred methods for creating realistic lighting and shadows?

Creating realistic lighting and shadows is crucial for achieving believable renders. My preferred methods incorporate a combination of techniques:

- Physically Based Rendering (PBR): I adhere to PBR principles to ensure realistic lighting interactions. This involves using physically accurate materials and light sources to simulate real-world phenomena accurately.

- Image-Based Lighting (IBL): HDRI (High Dynamic Range Image) maps capture real-world lighting environments and provide highly realistic, efficient illumination for the scene. They are a great way to quickly establish an overall mood and lighting style.

- Area Lights: Instead of using point lights, I frequently employ area lights to simulate realistic light sources with soft shadows. Area lights offer a more natural look and feel compared to harsh point lights.

- Global Illumination (GI): GI methods accurately simulate light bouncing around the scene, creating realistic indirect lighting. Implementing algorithms like path tracing or photon mapping creates rich, natural-looking lighting that is essential to realism.

- Light Linking and Portals: Light linking controls which lights affect which objects. Portals mark openings such as windows so the renderer concentrates its environment-light samples where light actually enters. Both can reduce render time or noise without sacrificing quality.

A combination of these approaches allows me to create richly lit scenes that accurately capture the subtleties of light and shadow.
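Area lights produce soft shadows because a shading point can see part of the light while the rest is blocked. The sketch below estimates that visible fraction under simplifying assumptions. The light is a hypothetical square in a horizontal plane, the occluder is a single sphere, and the light is sampled on a regular n x n grid of cell centers. Production renderers use randomized or stratified sampling and also weight each sample by distance and angle; this sketch measures visibility only.

```python
import math


def segment_blocked(p, q, center, radius):
    """True if the segment from p to q passes through a sphere."""
    d = [qk - pk for pk, qk in zip(p, q)]      # segment direction (unnormalized)
    f = [pk - ck for pk, ck in zip(p, center)]  # sphere center to segment start
    a = sum(x * x for x in d)
    b = 2.0 * sum(x * y for x, y in zip(f, d))
    c = sum(x * x for x in f) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return False  # the infinite line misses the sphere
    s = math.sqrt(disc)
    t_enter, t_exit = (-b - s) / (2.0 * a), (-b + s) / (2.0 * a)
    # Blocked if the sphere's span along the line overlaps the segment (0..1).
    return t_enter < 1.0 and t_exit > 0.0


def light_visibility(point, light_center, light_size, occ_center, occ_radius, n=8):
    """Fraction of a square area light (lying in the plane y = light_center[1])
    that is visible from `point`, estimated with an n x n grid of samples."""
    visible = 0
    for i in range(n):
        for j in range(n):
            # One sample at the center of each grid cell on the light.
            sx = light_center[0] + ((i + 0.5) / n - 0.5) * light_size
            sz = light_center[2] + ((j + 0.5) / n - 0.5) * light_size
            sample = (sx, light_center[1], sz)
            if not segment_blocked(point, sample, occ_center, occ_radius):
                visible += 1
    return visible / (n * n)
```

A point deep in the shadow returns 0.0 and a fully lit point returns 1.0. Points in between fall in the penumbra, which is exactly the soft edge that a point light (a single sample) cannot produce.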

Q 5. Explain your understanding of global illumination techniques.

Global Illumination (GI) refers to simulating all of the light in a scene, including indirect illumination: light that has bounced off other surfaces before reaching the one being shaded. It is crucial for achieving photorealism because indirect light often contributes a large share of the illumination, especially in interiors. Without GI, scenes often appear flat and unrealistic.

There are several techniques for calculating GI:

- Path Tracing: This method estimates lighting by tracing random paths from the camera through multiple bounces and averaging the light they collect. It is unbiased and produces extremely realistic results, but it is computationally expensive and noisy at low sample counts.

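- Photon Mapping: This two-pass technique first emits ‘photons’ from the light sources and stores where they land, then estimates indirect lighting from the density of stored photons around each shaded point. It handles effects such as caustics efficiently where path tracing struggles. However, it is biased and can produce blotchy, low-frequency artifacts.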

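- Radiosity: A more traditional, finite-element approach that solves for diffuse light exchange between surface patches. It is view-independent and efficient for diffuse interiors. Classical radiosity does not handle specular reflections at all, however, so those need a separate technique.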

- Lightmaps (Baked GI): Pre-calculated lighting solutions, suitable for static scenes but not dynamic elements. These are often used in real-time rendering for efficient indirect lighting.

The choice of technique depends on factors such as desired realism, rendering time, and the complexity of the scene. Modern renderers often combine multiple GI techniques to leverage their respective strengths.
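At the heart of path tracing is a Monte Carlo estimate of the lighting integral. The sketch below estimates irradiance at a surface point under the simplifying assumption that every sampled direction escapes to a uniform sky of constant radiance L. In that case the exact answer is πL, which makes the estimator easy to check. It uses uniform hemisphere sampling, and the function name is illustrative. A real path tracer would trace each sampled direction into the scene and recurse at the next hit.

```python
import math
import random


def estimate_irradiance(sky_radiance, num_samples, rng):
    """Monte Carlo estimate of E = integral of L * cos(theta) over the hemisphere."""
    pdf = 1.0 / (2.0 * math.pi)  # uniform hemisphere pdf over solid angle
    total = 0.0
    for _ in range(num_samples):
        # For uniform hemisphere sampling, cos(theta) is uniform in [0, 1).
        cos_theta = rng.random()
        phi = 2.0 * math.pi * rng.random()
        sin_theta = math.sqrt(1.0 - cos_theta * cos_theta)
        direction = (sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta)
        # Assumption: the ray escapes to a uniform sky instead of hitting geometry.
        incoming = sky_radiance
        total += incoming * direction[2] / pdf
    return total / num_samples


if __name__ == "__main__":
    rng = random.Random(7)
    for n in (100, 10_000, 1_000_000):
        print(n, estimate_irradiance(1.0, n, rng), "exact:", math.pi)
```

The error of such estimates shrinks roughly as 1/sqrt(N). Halving the visible noise therefore takes about four times as many samples, which is why denoisers and smarter sampling matter so much in practice.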

Q 6. How do you handle complex geometry in your rendering pipeline?

Handling complex geometry efficiently is vital for preventing rendering slowdowns and crashes. Here’s how I approach this:

- Level of Detail (LOD): Implementing LOD systems is crucial. This involves creating multiple versions of complex models, with reduced polygon counts for increasing distances from the camera. The renderer will automatically switch between the models based on distance, optimizing performance without significantly impacting visual fidelity from a user perspective.

- Mesh Optimization: Tools can help reduce polygon count by simplifying geometry while maintaining visual appearance. Removing unnecessary polygons and optimizing topology improves performance considerably.

- Instancing: Instead of creating many individual copies of the same object, instance them. This reduces memory usage and rendering time by referencing a single copy while duplicating its visual representation in various locations.

- Proxy Geometry: For extremely complex geometry, using simplified proxy models during early stages of rendering or for preview purposes can help speed up feedback times. The proxy model can be replaced with the high-detail model later in the rendering pipeline.

- Out-of-core rendering: For extremely large models that cannot fit into the computer’s RAM, out-of-core rendering strategies stream parts of the data from disk on demand, which makes it possible to render scenes with vast amounts of geometry.

The key is to use a combination of techniques, carefully selecting the optimal strategy based on the specific requirements of the project.
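Distance-based LOD switching, as described above, comes down to picking a mesh from a sorted list of switch distances. A minimal sketch follows; the thresholds and asset names are hypothetical. Many engines, including Unreal and Unity, actually switch on the object’s projected screen size rather than raw distance, but the lookup works the same way.

```python
from bisect import bisect_right


def select_lod(distance, switch_distances):
    """Return the LOD index for an object at `distance` from the camera.

    `switch_distances` must be ascending: LOD 0 (full detail) is used below
    switch_distances[0], LOD 1 below switch_distances[1], and so on.
    """
    return bisect_right(switch_distances, distance)


if __name__ == "__main__":
    lod_meshes = ["rock_lod0", "rock_lod1", "rock_lod2", "rock_lod3"]  # hypothetical
    switch = [10.0, 30.0, 80.0]
    for d in (5.0, 25.0, 50.0, 200.0):
        print(d, lod_meshes[select_lod(d, switch)])
```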

Q 7. What are your preferred texturing techniques and workflows?

My preferred texturing techniques and workflows focus on achieving realism and efficiency:

- Substance Painter/Designer: I heavily use Substance Designer to build textures procedurally from node graphs and Substance Painter to paint layered materials directly onto 3D models. Both support non-destructive workflows and enable quick iterations.

- Layered Textures: I build textures in layers to control detail and keep every element easy to modify and refine.

- Normal Maps, Specular Maps, etc.: I commonly incorporate various map types (normal, specular, roughness, etc.) to add detail without increasing polygon count and to improve rendering performance. These maps provide detail information that’s ‘baked’ into the texture, creating surface imperfections or texture variation without increased mesh complexity.

- Texture Atlasing: I combine multiple textures into a single larger ‘atlas’ to reduce draw calls and improve rendering efficiency. This is especially useful in real-time applications.

- Photogrammetry and Scanning: When realism is paramount, photogrammetry (creating 3D models from photographs) or 3D scanning offers extremely accurate and high-detail texture acquisition, which can then be processed and further refined in tools such as Substance Painter.

My workflow typically involves creating base textures in Substance Designer, followed by detailed texturing and painting in Substance Painter, and final adjustments within the rendering engine.
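Texture atlasing requires remapping each mesh’s UVs into the region its texture occupies in the atlas. A minimal sketch follows. It assumes the atlas is a uniform grid of equal-sized tiles, with tile (0, 0) in the corner where UV (0, 0) lies, and the function name is illustrative. Real atlases are often packed non-uniformly and need padding between tiles, because neighboring tiles otherwise bleed into each other at lower mip levels.

```python
def atlas_uv(u, v, tile_x, tile_y, tiles_x, tiles_y):
    """Map a UV in [0, 1] on one source texture into a uniform grid atlas.

    (tile_x, tile_y) is the tile holding that texture; the atlas has
    tiles_x columns and tiles_y rows of equal size.
    """
    tile_w, tile_h = 1.0 / tiles_x, 1.0 / tiles_y
    return ((tile_x + u) * tile_w, (tile_y + v) * tile_h)
```

For instance, the center of a texture stored in tile (1, 0) of a 2x2 atlas maps to `atlas_uv(0.5, 0.5, 1, 0, 2, 2) == (0.75, 0.25)`.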

Q 8. Describe your experience with different shader languages (e.g., HLSL, GLSL).

I have extensive experience with shader languages, including HLSL (High-Level Shading Language) and GLSL (OpenGL Shading Language), across projects ranging from real-time game development to offline rendering for architectural visualization. HLSL is primarily used with DirectX, which makes it the natural choice for Windows-based platforms. I find its syntax relatively straightforward and its features well-suited for complex shading effects. I’ve used it to create realistic materials, such as energy-conserving physically based rendering (PBR) shaders for metals and plastics, and subsurface scattering for translucent materials such as skin.

GLSL, on the other hand, is the shading language for OpenGL, which is widely used in cross-platform development. I’ve leveraged GLSL for shaders targeting various hardware architectures. For instance, I optimized a GLSL shader for mobile devices, reducing its computational cost without a visible loss in fidelity. The two languages differ in syntax and conventions, for example HLSL’s float4 and mul() versus GLSL’s vec4 and the * operator for matrix products, or HLSL semantics versus GLSL layout qualifiers. They also differ in the APIs and feature sets they target, so working in both requires understanding each one’s capabilities and limitations.

In my work, I’ve frequently transitioned between these languages depending on the project’s requirements. For example, a project using Unreal Engine would necessitate HLSL expertise, while a project involving WebGL would require proficiency in GLSL. I’m comfortable working with both and can quickly adapt to the specific syntax and features of each.

Q 9. How do you troubleshoot rendering issues and bugs?

Troubleshooting rendering issues is a crucial aspect of my workflow. My approach is systematic, combining debugging tools with a deep understanding of the rendering pipeline. I start by isolating the problem – is it a shader error, a geometry issue, or a problem with the rendering engine itself? For example, if I encounter flickering artifacts, I might investigate texture filtering parameters, or check for potential race conditions in my multi-threaded code. If there are visual glitches, such as incorrect normals, I look for problems in the mesh data or the normal calculation within the shader.

I often use the debugging views that most renderers provide. Rendering pipelines can usually display depth buffers, normals, and other intermediate data, which helps pinpoint problems quickly. For instance, visualizing the depth buffer can reveal z-fighting, the flickering that appears where two nearly coplanar surfaces have depths too close for the depth buffer’s precision to separate. Visualizing normals as colors can expose flipped or incorrect normal vectors. Because shaders generally cannot print values the way CPU code can, I output intermediate values as colors and use frame debuggers such as RenderDoc or PIX to inspect shader inputs, outputs, and variable values.
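Two of the most common debug visualizations are simple remappings, sketched below. Normals in [-1, 1] are shown as colors in [0, 1], and nonlinear depth-buffer values are converted back to view-space distance. The depth formula assumes a standard OpenGL-style perspective projection (NDC z in [-1, 1], depth range [0, 1], no reversed-Z). Other APIs and conventions need a different formula, and the function names are illustrative.

```python
def normal_to_color(n):
    """Map a unit normal with components in [-1, 1] to an RGB color in [0, 1]."""
    return tuple(0.5 * c + 0.5 for c in n)


def linearize_depth(d, near, far):
    """Convert a [0, 1] depth-buffer value from an OpenGL-style perspective
    projection back to view-space distance, for visualization."""
    z_ndc = 2.0 * d - 1.0
    return 2.0 * near * far / (far + near - z_ndc * (far - near))
```

With near = 0.1 and far = 100, a stored depth of 0.5 corresponds to a distance of only about 0.2 units. Most of the depth buffer’s precision is spent close to the camera, which is why distant, nearly coplanar surfaces are prone to z-fighting and why pushing the near plane out helps.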

My process involves systematically ruling out possible causes, starting with the simplest explanations and progressively moving towards more complex issues. This methodical approach, combined with strong problem-solving skills, ensures that I can efficiently resolve rendering bugs and deliver high-quality results.

Q 10. Explain your experience with compositing and post-processing techniques.

Compositing and post-processing are vital stages in achieving a final polished render. Compositing involves combining multiple render passes (like diffuse, specular, shadows, ambient occlusion) into a single image. This allows for flexible control over the final image. I often use compositing software like Nuke or After Effects to achieve effects like depth of field, motion blur, or color correction that are difficult or inefficient to implement directly within the rendering engine.
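When separately rendered layers are stacked, such as a foreground element over a background plate, the standard operation is the Porter-Duff "over" operator. A minimal per-pixel sketch follows. It assumes premultiplied-alpha RGBA tuples (color already multiplied by alpha, as renderers commonly output), and the function name is illustrative. Light passes such as diffuse and specular are instead typically recombined by adding them, though the exact pass breakdown varies by renderer.

```python
def over(fg, bg):
    """Porter-Duff "over": composite premultiplied RGBA `fg` on top of `bg`.

    Every channel, alpha included, becomes fg + bg * (1 - fg_alpha).
    """
    fg_alpha = fg[3]
    return tuple(f + b * (1.0 - fg_alpha) for f, b in zip(fg, bg))
```

For example, a half-transparent red foreground `(0.5, 0.0, 0.0, 0.5)` over an opaque blue background `(0.0, 0.0, 1.0, 1.0)` gives `(0.5, 0.0, 0.5, 1.0)`.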

Post-processing techniques are applied after rendering is complete and typically manipulate the rendered image to enhance its visual appeal or add stylistic effects. Examples include bloom (simulating the glow around bright light sources), tone mapping (compressing high-dynamic-range values into the range a display can show while preserving perceived contrast), ambient occlusion (darkening crevices to enhance depth), and color grading. I have experience with various techniques, such as adjusting bloom intensity or fine-tuning color curves to achieve the desired visual style.
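A simple example of tone mapping is the Reinhard operator, which maps any non-negative value x to x / (1 + x). Bright values therefore approach 1 instead of clipping. The sketch below applies it per channel after an exposure multiplier; the function name and per-channel application are simplifying assumptions. Per-channel application can shift hues in very bright areas, which is why variants apply the curve to luminance or use filmic curves instead. The result would still need output encoding (such as sRGB) for display.

```python
def reinhard(hdr_rgb, exposure=1.0):
    """Basic Reinhard tone mapping applied per channel after an exposure scale."""
    return tuple((c * exposure) / (1.0 + c * exposure) for c in hdr_rgb)
```

For instance, `reinhard((0.0, 1.0, 3.0))` returns `(0.0, 0.5, 0.75)`, and no input, however bright, reaches 1.0.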

For example, in a project depicting a sunset scene, I might composite multiple layers, including a sky render, a ground render, and separate lighting effects, to achieve realistic atmospheric scattering and depth. Then, I’d use post-processing to add a subtle bloom effect to the sun and apply a color grade to enhance the overall warmth and mood. My experience extends to implementing some post-processing effects directly in shaders for real-time applications as well.

Q 11. What is your experience with different file formats used in rendering (e.g., FBX, OBJ, Alembic)?

I have extensive experience working with a variety of file formats commonly used in rendering pipelines. FBX (Filmbox) is a versatile format supporting meshes, materials, skeletons, and animation. I often use it for transferring models between different 3D software packages, and its broad support makes it a good fit for collaborative projects. OBJ (Wavefront OBJ) is a simpler, widely supported text format focused on mesh geometry (positions, UVs, normals, and faces), with materials stored in a separate MTL file. I use it when geometry exchange is the primary concern, especially where simplicity and compatibility are paramount.

Alembic (.abc) is particularly useful for handling complex geometry and animation caches. I find it invaluable when dealing with very high-polygon models or simulations, as it efficiently stores animation data, enabling smoother playback and efficient rendering. I use Alembic in large-scale projects to manage large datasets, especially when working with simulations like cloth or fluid dynamics. Choosing the appropriate format depends on the project’s requirements, prioritizing the specific features needed for a given task. For example, if I’m working with a character model with intricate rigging and animation, FBX is preferred. If I only need to transfer static mesh geometry, OBJ is sufficient.
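OBJ’s simplicity is easy to see in code, because it is a line-based text format. Below is a minimal sketch that reads only vertex positions and faces. Face entries may be written as `i`, `i/t`, `i//n`, or `i/t/n`; indices are 1-based, and negative indices count back from the most recent vertex. Normals, UVs, materials, and groups are ignored here, and the function name is illustrative.

```python
def parse_obj(text):
    """Parse vertex positions and polygon faces from Wavefront OBJ text.

    Returns (vertices, faces) with faces as lists of 0-based vertex indices.
    """
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        if parts[0] == "v":
            vertices.append(tuple(float(x) for x in parts[1:4]))
        elif parts[0] == "f":
            face = []
            for entry in parts[1:]:
                idx = int(entry.split("/")[0])  # position index only
                # Positive indices are 1-based; negative ones are relative to the end.
                face.append(idx - 1 if idx > 0 else len(vertices) + idx)
            faces.append(face)
        # Comments ("#") and all other statements are ignored in this sketch.
    return vertices, faces
```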

Q 12. How do you manage large datasets in a rendering project?

Managing large datasets is a significant challenge in rendering. My approach involves a combination of techniques focused on optimization and efficient data handling. This begins with the modeling phase – optimizing polygon counts, using level of detail (LOD) techniques, and using proxies for distant objects to reduce the overall scene complexity. During the rendering process, I leverage techniques like instancing to reduce the amount of data that needs to be processed. Instancing allows multiple copies of the same object to be rendered using a single draw call, drastically increasing performance.

Furthermore, I use out-of-core rendering techniques, which make it possible to render scenes too large to fit in system RAM. This usually involves splitting the data into smaller chunks, such as texture tiles or geometry blocks, that are loaded from disk as needed and evicted when memory runs low. I’m also familiar with cloud rendering services, which provide the computational resources needed to handle extremely large datasets. Careful asset management is crucial: organizing and naming assets with a consistent convention maintains order and streamlines the process. Baking high-resolution details into textures (normal maps, ambient occlusion maps) minimizes the computational cost of rendering intricate details.
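The memory savings from instancing are easy to estimate. The sketch below compares storing a full copy of a mesh per object with storing the mesh once plus one transform per instance. It assumes each transform is a 4x4 float32 matrix (64 bytes) and ignores acceleration structures and other per-object overhead. The function name and the example numbers are hypothetical.

```python
def scene_memory_bytes(mesh_bytes, copies, instanced, transform_bytes=64):
    """Rough geometry memory for `copies` of one mesh, with or without instancing."""
    if instanced:
        # One shared mesh plus a transform per instance.
        return mesh_bytes + copies * transform_bytes
    # Every copy stores its own mesh data and its own transform.
    return copies * (mesh_bytes + transform_bytes)


if __name__ == "__main__":
    tree = 50_000_000  # hypothetical 50 MB tree mesh
    print("copies:   ", scene_memory_bytes(tree, 10_000, instanced=False))
    print("instances:", scene_memory_bytes(tree, 10_000, instanced=True))
```

With these numbers, a forest of 10,000 unique copies would need about 500 GB, while 10,000 instances need about 50.6 MB.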

Q 13. Describe your experience with version control systems (e.g., Git) in a rendering workflow.

Version control is indispensable in any collaborative rendering project. I’m proficient in Git, using it to track changes to both code and assets. We typically create separate branches for different features or tasks, ensuring that experimental changes don’t affect the main project branch. This allows for parallel development, where multiple team members can work simultaneously without interfering with each other’s work. Pull requests and code reviews are crucial steps to maintain code quality and collaboration.

I also use Git to track the history of assets. For example, a character model might go through many revisions, each stored in a separate commit, which lets us revert to older versions if necessary and avoid data loss. Large binary files (like textures and scene files) can bloat the repository and cannot be meaningfully merged, so we use Git LFS (Large File Storage) to store them efficiently. Together, these practices keep the full project history well managed and make collaboration smooth and productive.

Q 14. How do you collaborate with other team members in a rendering project?

Collaboration is key in complex rendering projects. I foster effective teamwork through clear communication and defined roles. Before commencing a project, I ensure that all team members have a shared understanding of the project goals, timelines, and responsibilities. We use project management tools to track progress, assign tasks, and manage deadlines. Regular meetings help to discuss challenges and share updates. Clear communication channels (e.g., Slack, email) are essential for quick problem-solving and feedback exchange.

To facilitate asset sharing, we typically use a centralized asset management system, ensuring that everyone works with the latest versions of files and that changes are efficiently disseminated. This often involves establishing a standardized naming convention and folder structure. When working on tasks involving multiple team members, I strongly advocate a modular approach, where tasks are divided into smaller, independent units that can be worked on simultaneously. This minimizes conflicts and streamlines the overall workflow. Furthermore, open and honest communication, combined with a willingness to help fellow team members, are essential for maintaining a productive and positive collaborative environment.

Q 15. What are your preferred methods for creating realistic materials?

Creating realistic materials is paramount in achieving high-quality renders. My approach involves a multi-step process focusing on both the technical aspects and the artistic interpretation of the material’s properties. I primarily use physically based rendering (PBR) workflows, which allow for predictable and realistic results based on real-world physics.

- Reference Gathering: I start by gathering high-quality reference images and videos of the material. This helps in accurately capturing its color, texture, roughness, and other properties. For example, if I’m rendering polished granite, I’ll study various images of granite under different lighting conditions to understand how light interacts with its surface.

- Parameter Adjustment: Using software like Substance Painter, Blender, or Marmoset Toolbag, I meticulously adjust the PBR parameters. These include albedo (base color), roughness (how irregular the microsurface is, which controls how sharp or blurry reflections appear), metallic (whether the surface behaves as a metal, usually 0 or 1), normal map (surface detail), and subsurface scattering (how light penetrates and scatters beneath the surface, crucial for skin or translucent materials). For instance, a rough wooden surface will have a high roughness value, producing broad, soft highlights, whereas a smooth metal surface will have a low roughness value, creating sharp specular highlights.

- Texture Creation/Acquisition: I often create or acquire high-resolution textures. This might involve using photogrammetry to capture real-world textures or creating procedural textures within the software. For example, I might create a realistic wood texture using a procedural noise generator, then tweak the parameters to match my reference images.

- Iteration and Refinement: Finally, I iterate and refine the material until it looks convincingly realistic under various lighting scenarios. This involves producing test renders and making subtle adjustments to the parameters until I am satisfied.
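In the metallic/roughness workflow, the artist-facing parameters are converted into shading quantities before lighting is computed. The sketch below follows the common convention used, for example, by glTF 2.0’s metallic-roughness model. Dielectrics reflect about 4% of light at normal incidence and keep their base color as diffuse albedo. Metals have no diffuse term and tint their reflection with the base color. The function name is illustrative, and individual engines may expose the 4% value as an adjustable parameter.

```python
def metal_rough_to_shading(base_color, metallic, dielectric_f0=0.04):
    """Derive diffuse albedo and specular F0 (reflectance at normal incidence)
    from metallic/roughness inputs, blending linearly by `metallic`."""
    diffuse = tuple(c * (1.0 - metallic) for c in base_color)
    f0 = tuple(dielectric_f0 * (1.0 - metallic) + c * metallic for c in base_color)
    return diffuse, f0
```

This is also why metallic values are usually 0 or 1: intermediate values describe a blend of the two behaviors, which is useful mainly for transitions such as rust or dirt over metal.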

Q 16. Describe your experience with physically based rendering (PBR).

Physically Based Rendering (PBR) is the cornerstone of my rendering workflow. My understanding of PBR goes beyond simply using pre-built shaders; I grasp the underlying physics that dictate how light interacts with materials. I’ve worked extensively with PBR workflows in various software packages including Unreal Engine, Blender Cycles, and Arnold.

My experience includes implementing different PBR models such as the Cook-Torrance model, which accurately simulates specular reflections based on surface roughness and microfacet theory. I’m comfortable working with both metallic/roughness and specular/gloss workflows. For example, I’ve used this knowledge to create realistic materials ranging from weathered stone to polished chrome, ensuring consistent and physically plausible results across different lighting and camera setups.
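The sketch below evaluates a Cook-Torrance specular term for a single light and view direction. It uses a common combination of components: the GGX (Trowbridge-Reitz) microfacet distribution, separable Smith masking-shadowing for GGX, and Schlick’s Fresnel approximation. It also assumes the widely used remapping alpha = roughness², with a small clamp to avoid a division by zero for perfectly smooth surfaces. This is one standard variant rather than the only form of the model, and the function names are illustrative.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(a):
    length = math.sqrt(_dot(a, a))
    return tuple(x / length for x in a)


def cook_torrance_ggx(n, v, l, roughness, f0):
    """Scalar Cook-Torrance specular BRDF: D * G * F / (4 (n.l)(n.v)).

    n, v, l: unit normal, view, and light vectors (3-tuples).
    roughness: artist roughness in [0, 1], remapped to alpha = roughness^2.
    f0: reflectance at normal incidence (about 0.04 for common dielectrics).
    """
    n_dot_l, n_dot_v = _dot(n, l), _dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return 0.0  # light or viewer is below the surface
    h = _normalize(tuple(a + b for a, b in zip(v, l)))  # half vector
    n_dot_h = max(_dot(n, h), 0.0)
    v_dot_h = max(_dot(v, h), 0.0)
    alpha = max(roughness * roughness, 1e-3)  # clamp avoids a singular D at 0
    a2 = alpha * alpha

    # GGX / Trowbridge-Reitz normal distribution function.
    d = a2 / (math.pi * (n_dot_h * n_dot_h * (a2 - 1.0) + 1.0) ** 2)

    # Separable Smith masking-shadowing term for GGX.
    def g1(cos_theta):
        return 2.0 * cos_theta / (cos_theta + math.sqrt(a2 + (1.0 - a2) * cos_theta ** 2))

    g = g1(n_dot_l) * g1(n_dot_v)

    # Schlick's approximation of the Fresnel term.
    f = f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5

    return d * g * f / (4.0 * n_dot_l * n_dot_v)
```

When light and viewer are both straight above the surface, the result simplifies to f0 / (4π·alpha²). The peak therefore drops as roughness rises, spreading the same energy into a broader highlight.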

Beyond the technical aspects, I also understand the limitations of PBR. I know when to use artistic license to achieve a specific look, even if it deviates slightly from perfect physical accuracy. The goal is always a believable and aesthetically pleasing image, not slavish adherence to the model.

Q 17. What are your strengths and weaknesses as a rendering artist?

My strengths lie in my meticulous attention to detail and my ability to create realistic lighting and materials. I’m highly proficient in PBR techniques and possess a strong understanding of color theory and color management. I thrive in collaborative environments, actively seeking feedback and incorporating it into my work. I am also adept at problem-solving, efficiently identifying and resolving technical challenges that arise during the rendering process.

One area I’m continually striving to improve is my speed of workflow. While I prioritize quality and realism, I acknowledge that optimizing my workflow to achieve the same level of quality in less time is essential for professional projects. I’m actively exploring new techniques and software to enhance my efficiency without compromising quality.

Q 18. How do you stay up-to-date with the latest trends and technologies in rendering?

Staying current in the rapidly evolving field of rendering requires a multifaceted approach. I actively engage in several strategies:

- Following Industry Blogs and Publications: I regularly read blogs from leading companies and individuals in the rendering industry. This keeps me informed about the latest advancements in software, hardware, and rendering techniques.

- Attending Conferences and Workshops: Participating in industry conferences such as SIGGRAPH allows me to network with other professionals and learn about cutting-edge research and development firsthand.

- Experimenting with New Software and Tools: I actively experiment with new software releases and rendering engines, evaluating their strengths and weaknesses for my specific needs. I’m not afraid to try new things and to learn from my mistakes.

- Online Courses and Tutorials: I supplement my knowledge with online courses and tutorials from platforms such as Udemy and Skillshare, particularly for specialized areas like advanced lighting techniques or new rendering engines.

- Following Industry Leaders on Social Media: I follow key influencers and companies on platforms like Twitter, Instagram, and ArtStation, exposing myself to the latest projects and industry discussions.

Q 19. Explain your understanding of color spaces and color management.

Color spaces define how colors are numerically represented and stored. Color management ensures consistent color reproduction across different devices and workflows. Understanding both is vital for accurate and predictable results.

I’m familiar with various color spaces including sRGB (commonly used for web and screens), Adobe RGB (a wider gamut for print), and Rec.709 (used for HD video). I understand the importance of working in a linear, wide-gamut color space (such as ACEScg) during the creation process and converting to a specific output space (like sRGB for web) at the final stage. This avoids clipping colors early and ensures accurate color representation on the intended display or print medium.
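Converting to an output space generally involves two steps. The first is a change of primaries (gamut), typically a 3x3 matrix applied in linear light. The second is the output transfer function. Below is a sketch of the second step for sRGB, using the piecewise curve from the sRGB standard (IEC 61966-2-1). Renderers compute lighting on linear values, and this encoding is applied only at output. The function names are illustrative, and inputs are assumed to be in [0, 1].

```python
def srgb_encode(linear):
    """Linear-light value in [0, 1] -> sRGB-encoded value in [0, 1]."""
    if linear <= 0.0031308:
        return 12.92 * linear  # linear segment near black
    return 1.055 * linear ** (1.0 / 2.4) - 0.055


def srgb_decode(encoded):
    """sRGB-encoded value in [0, 1] -> linear-light value in [0, 1]."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4
```

A mid-gray of 0.18 in linear light encodes to roughly 0.46. This is why an image shown without the correct transform looks much too dark or too washed out.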

My color management workflow includes profiling my monitor and printer to ensure accurate color reproduction. I also utilize ICC profiles in my rendering software to accurately convert colors between different color spaces, maintaining consistency throughout the entire production pipeline. This is particularly crucial when working with clients to ensure the final rendered images match their expectations.

Q 20. How do you approach creating realistic reflections and refractions?

Realistic reflections and refractions are crucial for creating believable surfaces. My approach utilizes PBR principles and leverages the capabilities of my rendering software.

For reflections, I focus on accurate representation of surface roughness and the environment’s influence. A highly polished surface will have sharp, clear reflections, while a rough surface will have blurry, diffused reflections. I often use environment maps (HDRI images) to accurately simulate the surrounding environment’s impact on the reflections. For instance, rendering a chrome sphere will use an environment map to reflect the scene accurately in its surface.

Refractions are handled similarly, but with an added focus on the refractive index of the material, which determines how much light bends as it passes from one medium into another. For example, glass (about 1.5) has a higher refractive index than air (about 1.0), so light bends toward the surface normal as it enters glass. I pay close attention to the material’s transparency and its ability to absorb light at different wavelengths, which can affect the color of the refracted light.

To achieve the highest level of realism, I also utilize techniques like ray tracing or path tracing, which accurately simulate the physics of light bouncing and refracting within a scene. This results in much more accurate and realistic reflections and refractions, especially for complex geometries.
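At each hit, a ray tracer computes the reflected and refracted directions and how much light goes each way. The sketch below shows the standard mirror-reflection formula, Snell’s-law refraction (including total internal reflection), and Schlick’s approximation of Fresnel reflectance. It assumes unit-length 3-tuples, a normal facing against the incoming ray, and approximate indices of 1.0 for air and 1.5 for glass in the examples. Wavelength-dependent absorption is not modeled, and the function names are illustrative.

```python
import math


def reflect(i, n):
    """Mirror direction of incident unit vector i about unit normal n: i - 2(n.i)n."""
    d = sum(a * b for a, b in zip(i, n))
    return tuple(a - 2.0 * d * b for a, b in zip(i, n))


def refract(i, n, eta):
    """Refract unit vector i through a surface with unit normal n (facing against i).

    eta = n1 / n2 (e.g. 1.0 / 1.5 from air into glass).
    Returns None on total internal reflection.
    """
    cos_i = -sum(a * b for a, b in zip(i, n))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)  # Snell's law, squared
    if sin2_t > 1.0:
        return None  # total internal reflection: no transmitted ray
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * a + (eta * cos_i - cos_t) * b for a, b in zip(i, n))


def schlick(cos_theta, n1, n2):
    """Schlick's approximation of the fraction of light reflected at an interface."""
    r0 = ((n1 - n2) / (n1 + n2)) ** 2
    return r0 + (1.0 - r0) * (1.0 - cos_theta) ** 5
```

At normal incidence, an air-glass interface reflects about 4% of the light, and reflectance rises toward 100% at grazing angles. This Fresnel behavior is what makes glass and water look reflective at their edges.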

Q 21. Describe your experience with different types of cameras and their settings.

My experience with different camera types and settings is extensive. I’m comfortable using virtual cameras in rendering software to emulate the behavior of real-world cameras. This involves understanding the effects of various parameters on the final image.

- Focal Length: I understand how focal length affects the field of view and depth of field. A wider angle lens captures a larger area, while a longer telephoto lens compresses perspective and creates a shallower depth of field.

- Aperture: I know how aperture size affects depth of field and the amount of light entering the camera. A smaller aperture (larger f-stop number) creates a greater depth of field, while a larger aperture (smaller f-stop number) creates a shallower depth of field.

- Shutter Speed: While less crucial in rendering, understanding shutter speed helps mimic real-world effects. For example, a longer shutter speed can simulate motion blur.

- ISO: Like shutter speed, ISO is usually a secondary setting in rendering. In most physical camera models it acts only as an exposure multiplier rather than adding sensor noise. Film-grain-style noise, when wanted for aesthetic effect, is typically added in post-processing.

- Camera Types: I have worked with various virtual camera types, including perspective cameras (the most common type), orthographic cameras (which produce parallel projections), and fisheye cameras (which capture a wide, distorted field of view).
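The relationships above follow from simple lens formulas. The sketch below computes the horizontal field of view of a rectilinear lens from its focal length. It also computes the thin-lens circle of confusion, the diameter of the blur spot on the sensor for a subject away from the focus distance, which is what determines depth of field. It assumes a 36 mm full-frame sensor width by default, all distances in millimeters, and a focus distance greater than the focal length. The function names are illustrative.

```python
import math


def horizontal_fov_degrees(focal_length_mm, sensor_width_mm=36.0):
    """Horizontal field of view of a rectilinear lens: 2 * atan(sensor / (2 * f))."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def circle_of_confusion_mm(focal_length_mm, f_number, focus_dist_mm, subject_dist_mm):
    """Thin-lens blur-circle diameter on the sensor for a subject at subject_dist_mm
    when the lens is focused at focus_dist_mm."""
    aperture = focal_length_mm / f_number  # aperture diameter from the f-number
    return (aperture * abs(subject_dist_mm - focus_dist_mm) / subject_dist_mm
            * focal_length_mm / (focus_dist_mm - focal_length_mm))
```

An 18 mm lens on a 36 mm-wide sensor gives a 90° horizontal view. Opening up from f/8 to f/2 makes out-of-focus blur four times larger, and a longer focal length at the same distances also increases blur, giving a shallower depth of field.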

I use these settings strategically to create the desired mood and composition for my renders. For instance, a shallow depth of field can isolate a subject and draw the viewer’s attention, while a wider field of view can capture the grand scale of a scene.
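How shallow the depth of field will be can be estimated with the standard thin-lens formulas, which are built on the hyperfocal distance. The sketch assumes millimetre units and a circle of confusion of 0.03 mm, a common full-frame convention. Renderers compute defocus from their own camera models, so treat this as a planning aid, not as what any engine does internally.

```python
import math
from typing import Tuple


def depth_of_field_mm(focal_mm: float, f_number: float, focus_mm: float,
                      coc_mm: float = 0.03) -> Tuple[float, float]:
    """Return (near, far) limits of acceptable sharpness for a thin lens."""
    hyperfocal = focal_mm * focal_mm / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2.0 * focal_mm)
    if focus_mm >= hyperfocal:
        far = math.inf  # everything out to infinity is acceptably sharp
    else:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far


def dof_depth_mm(focal_mm: float, f_number: float, focus_mm: float) -> float:
    near, far = depth_of_field_mm(focal_mm, f_number, focus_mm)
    return far - near


# Subject at 3 m: opening up to f/2 isolates it far more than stopping down to f/8.
shallow = dof_depth_mm(50.0, 2.0, 3000.0)  # about 0.43 m
deep = dof_depth_mm(50.0, 8.0, 3000.0)     # about 1.85 m
```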

Q 22. How do you handle different types of lighting scenarios (e.g., indoor, outdoor, night)?

Handling diverse lighting scenarios requires a nuanced understanding of light sources, their properties, and their interaction with the environment. For indoor scenes, I typically rely on Global Illumination (GI) algorithms, such as path tracing or photon mapping, to simulate light bouncing around the space, producing realistic shadows and indirect lighting. I might use area lights to represent windows or lamps for a more natural look.

For outdoor scenes, I use image-based lighting (IBL) with HDRI (High Dynamic Range Imaging) maps that capture realistic sky and environmental lighting. Because the HDRI records the sun’s position and intensity along with the sky, this approach produces believable shadows and ambient light.

Night scenes involve a careful balance between artificial light sources (streetlights, building lights) and a dim ambient level, with ambient occlusion deepening contact shadows so that illuminated areas stand out against the darkness. I might also use volumetric fog or atmospheric scattering to enhance the sense of depth and realism in nighttime renders.
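With image-based lighting, the renderer looks up the HDRI for every direction it samples. For an equirectangular (latitude-longitude) map, that lookup is a spherical-coordinate conversion. Axis conventions differ between packages, so the y-up orientation and the placement of the u seam in this sketch are assumptions for illustration.

```python
import math
from typing import Tuple


def direction_to_equirect_uv(x: float, y: float, z: float) -> Tuple[float, float]:
    """Map a unit direction to (u, v) in [0, 1] on an equirectangular HDRI.

    Assumed convention: +y is up, v = 0 is the top row (zenith), and u wraps
    around the horizon with its seam (u = 0 and u = 1) along -x.
    """
    u = 0.5 + math.atan2(z, x) / (2.0 * math.pi)
    v = math.acos(max(-1.0, min(1.0, y))) / math.pi  # clamp guards against rounding
    return u, v


def uv_to_pixel(u: float, v: float, width: int, height: int) -> Tuple[int, int]:
    # Clamp so u == 1.0 or v == 1.0 still lands on the last column/row.
    return min(int(u * width), width - 1), min(int(v * height), height - 1)
```

A production renderer would also filter between neighboring texels and importance-sample bright regions such as the sun. Those steps are omitted here.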

For example, in a recent project rendering a bustling city square at night, I used IBL for the ambient light, strategically placed area lights to simulate street lamps, and a subtle volumetric fog to create a sense of atmosphere and depth. This approach ensured the lighting conveyed the mood and time of day accurately.

Q 23. What is your experience with creating realistic water or smoke effects?

Creating realistic water and smoke effects involves simulating fluid dynamics and particle systems. For water, I often use displacement mapping, which deforms the water surface with a height map to create ripples and waves. Physically based fluid simulations can produce even more realistic results by capturing how water interacts with objects and other forces. For smoke, I rely heavily on particle systems, adjusting parameters like particle density, velocity, and lifetime to achieve the desired look. Smoke is rendered as a volume (a participating medium), so volumetric scattering and absorption determine how light passes through it and how dense it appears. Subsurface scattering plays that role for solid materials such as skin. To further enhance realism, I use simulation to capture how smoke reacts to wind and heat sources.
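Light traveling through smoke is attenuated by the density it passes through. The sketch below covers only that absorption part. It applies the Beer-Lambert law and estimates optical depth by ray marching with midpoint samples. The `density` callback and the extinction coefficient `sigma_t` are hypothetical stand-ins for whatever a simulation cache, such as a voxel grid, would provide. In-scattering and self-shadowing are left out.

```python
import math
from typing import Callable


def transmittance(density: Callable[[float], float], sigma_t: float,
                  length: float, steps: int = 64) -> float:
    """Fraction of light that survives a straight path through a medium.

    density(t) returns the medium density at distance t along the ray;
    sigma_t is the extinction coefficient per unit density per unit length.
    """
    dt = length / steps
    optical_depth = 0.0
    for i in range(steps):
        t = (i + 0.5) * dt  # sample at the middle of each segment
        optical_depth += sigma_t * density(t) * dt
    return math.exp(-optical_depth)  # Beer-Lambert: T = exp(-tau)


# A hypothetical puff that is densest in the middle of a 2-unit path.
def puff(t: float) -> float:
    return max(0.0, 1.0 - abs(t - 1.0))
```

Raising density or `sigma_t` lowers transmittance, so those regions read as more opaque. A volume renderer combines this term with in-scattered light at each step.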

In one project, I used a combination of a fluid simulation for the water and a particle system for the foam to create a highly realistic ocean scene. The level of detail allowed us to convincingly depict waves crashing against a rocky shore, producing foam and spray.

Q 24. How do you optimize textures for rendering performance?

Texture optimization is crucial for rendering performance. The primary goals are reducing memory footprint and bandwidth and cutting draw calls, using several techniques. First, I use block-compressed texture formats, such as BC7 on desktop GPUs (DirectX, Vulkan) or ASTC on mobile GPUs (OpenGL ES, Vulkan, Metal). These substantially shrink texture memory with little visible loss. Second, I choose texture resolutions carefully: high-resolution textures help in close-up views but are overkill for distant objects. Mipmaps address this with a chain of progressively half-resolution copies of each texture. The GPU samples the level that matches the on-screen size, which reduces aliasing and memory bandwidth at the cost of about one-third more storage. Finally, I use texture atlasing to combine multiple smaller textures into a single larger one. Objects can then share a material and be batched, which reduces texture binds and draw calls.
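The memory trade-offs above can be estimated directly. This sketch assumes uncompressed RGBA8 at 4 bytes per pixel and BC7 at 16 bytes per 4x4 block (8 bits per pixel). Under those assumptions, BC7 uses a quarter of the memory, and a full mip chain adds roughly one third. Real engines may add padding or alignment, so treat the results as estimates.

```python
def mip_level_count(width: int, height: int) -> int:
    """Number of levels in a full mip chain down to 1x1."""
    return max(width, height).bit_length()  # floor(log2(max)) + 1


def texture_bytes(width: int, height: int, fmt: str, with_mips: bool = True) -> int:
    """Estimated GPU memory for a 2D texture in 'RGBA8' or 'BC7' format."""
    levels = mip_level_count(width, height) if with_mips else 1
    total = 0
    w, h = width, height
    for _ in range(levels):
        if fmt == "RGBA8":
            total += w * h * 4                             # 4 bytes per pixel
        elif fmt == "BC7":
            total += ((w + 3) // 4) * ((h + 3) // 4) * 16  # 16 bytes per 4x4 block
        else:
            raise ValueError(f"unsupported format: {fmt}")
        w, h = max(1, w // 2), max(1, h // 2)  # next mip is half size, min 1 pixel
    return total


for fmt in ("RGBA8", "BC7"):
    mib = texture_bytes(4096, 4096, fmt) / (1024 * 1024)
    print(f"4096x4096 {fmt} with mips: {mib:.1f} MiB")
```

For a 4096x4096 texture with mips, the RGBA8 version comes out about four times larger than the BC7 version.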

For example, in a large-scale environment rendering, I optimized a high-resolution terrain texture by creating mipmaps and compressing it using BC7. This reduced the texture size by 70%, significantly improving rendering times without noticeable visual degradation.

Q 25. Explain your experience with creating realistic human characters.

Creating realistic human characters is a multifaceted process demanding expertise in modeling, texturing, rigging, and animation. I usually begin with high-fidelity sculpting in software like ZBrush or Blender, meticulously shaping the character’s anatomy and details. This is followed by retopology to create a clean, efficient mesh suitable for animation. Texturing relies on techniques such as procedural texturing or high-resolution scans to create realistic skin, hair, and clothing. Rigging involves building a skeleton and assigning weights to the model’s vertices so the mesh deforms naturally and convincingly during animation. Subsurface scattering makes skin look more lifelike by simulating light that enters the surface, scatters beneath it, and exits nearby. Hair and clothing simulations add further realism.
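Vertex weights are applied at deformation time. A common scheme is linear blend skinning, which moves each vertex by a weighted average of its bones’ transforms. It is shown here as a general technique, not as the specific rig described in this answer. For illustration, the matrices are plain 3x4 affine lists, whereas real engines use 4x4 matrices on the GPU. Each skinning matrix is assumed to already combine the bone’s current pose with its inverse bind pose. Linear blending can lose volume at twisting joints, which is why dual quaternion skinning is sometimes used instead.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Affine = List[List[float]]  # 3 rows x 4 columns: [rotation/scale | translation]


def transform_point(m: Affine, p: Vec3) -> Vec3:
    x, y, z = p
    return tuple(m[r][0] * x + m[r][1] * y + m[r][2] * z + m[r][3] for r in range(3))


def skin_vertex(rest_position: Vec3, influences: Sequence[Tuple[Affine, float]]) -> Vec3:
    """Linear blend skinning for one vertex.

    influences: (skinning_matrix, weight) pairs; weights are assumed to sum to 1.
    """
    out = [0.0, 0.0, 0.0]
    for matrix, weight in influences:
        moved = transform_point(matrix, rest_position)
        for k in range(3):
            out[k] += weight * moved[k]
    return (out[0], out[1], out[2])


IDENTITY: Affine = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
SHIFT_X: Affine = [[1, 0, 0, 2], [0, 1, 0, 0], [0, 0, 1, 0]]  # translate +2 in x

# A vertex weighted 50/50 between a static bone and a moved bone goes halfway.
blended = skin_vertex((1.0, 0.0, 0.0), [(IDENTITY, 0.5), (SHIFT_X, 0.5)])
```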

In a recent project, we created a photorealistic character for a short film. We utilized high-resolution scans for reference, resulting in extremely detailed textures. The combination of advanced rigging and animation gave the character lifelike expressions and movements.

Q 26. How do you balance artistic vision with technical constraints in rendering?

Balancing artistic vision with technical constraints is a constant negotiation in rendering. The artistic vision often calls for high-resolution textures, complex models, and advanced lighting techniques that push technical limits. These demands can lead to excessively long render times or exceed the available hardware. I find the balance with two strategies. First, I discuss the desired level of realism and detail with the artist and explore alternative approaches that still achieve a visually pleasing result, such as simpler models, lower-resolution textures, or different rendering techniques. Second, I apply optimization techniques, such as those discussed earlier, to maximize rendering efficiency. Together, these let me deliver high-quality visuals within reasonable timeframes without compromising the artistic intent.

For instance, in one project, the artist requested highly detailed foliage. To reconcile this with render time constraints, I used a combination of procedural generation and optimized level of detail techniques. This allowed us to maintain a visually appealing result within the required render time.

Q 27. What is your experience with real-time rendering for virtual reality or augmented reality applications?

My experience with real-time rendering for VR/AR applications includes working with game engines such as Unreal Engine and Unity. These engines offer extensive real-time rendering capabilities for interactive experiences in virtual or augmented environments. A key consideration is performance optimization to sustain the high, stable frame rates that smooth, immersive VR/AR requires. That means at least 60 fps, and commonly 90 fps or more on VR headsets, where dropped frames can cause discomfort. Meeting these targets calls for a strategic approach to geometry, texture resolution, and lighting. I’ve worked on projects using both deferred and forward rendering, tailoring the approach to the hardware capabilities and visual demands of each project. Understanding the limitations of mobile devices is also crucial for AR applications, which require more aggressive optimization.
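A frame-rate target translates into a per-frame time budget, and profiling results are measured against that budget. The sketch converts a target rate into milliseconds and checks a set of measured GPU pass times against it. The 10% headroom and the pass timings are hypothetical values for illustration, not figures from any particular headset or engine.

```python
from typing import Iterable


def frame_budget_ms(target_fps: float) -> float:
    """Time available per frame at a given refresh rate."""
    return 1000.0 / target_fps


def fits_budget(pass_times_ms: Iterable[float], target_fps: float,
                headroom: float = 0.1) -> bool:
    """True if the summed pass times fit the budget while keeping a safety margin."""
    usable = frame_budget_ms(target_fps) * (1.0 - headroom)
    return sum(pass_times_ms) <= usable


# Hypothetical GPU timings (ms) for shadows, opaque geometry, post-processing.
passes = [2.5, 5.0, 1.5]
print(f"90 fps budget: {frame_budget_ms(90):.2f} ms, fits: {fits_budget(passes, 90)}")
```

In VR, both eye views must be rendered within this single per-frame budget.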

A recent project involved developing an AR application that overlaid virtual furniture onto a user’s real-world environment using Unity. We optimized the models and textures for mobile devices, and used techniques like occlusion culling to significantly improve performance.

Q 28. Describe a challenging rendering project you worked on and how you overcame the challenges.

One particularly challenging project involved rendering a large-scale, photorealistic environment with complex architectural details and dense vegetation for a promotional video. The initial approach led to extremely long render times, which was impractical for the production timeline. To overcome this, we used a multi-pronged strategy. First, we implemented a tile-based rendering system that broke the work into smaller chunks rendered independently, which sped up processing significantly. Second, we used level of detail (LOD) techniques, substituting lower-polygon meshes for distant objects to reduce the overall rendering load. Third, we optimized the shaders and lighting for efficiency. Together, these approaches reduced render times by over 80%, and we delivered the high-quality visuals within the stipulated time constraints.
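Distance-based LOD selection, swapping in lower-polygon meshes for distant objects, comes down to finding which switch distances the object has passed. The thresholds below are hypothetical. Production systems often use projected screen size instead of raw distance, and they add hysteresis so objects near a boundary do not visibly pop back and forth.

```python
import bisect
import math
from typing import Sequence


def select_lod(distance: float, switch_distances: Sequence[float]) -> int:
    """Return the LOD index for an object (0 = full detail).

    switch_distances must be ascending; reaching switch_distances[k]
    selects LOD k + 1.
    """
    return bisect.bisect_right(switch_distances, distance)


# Hypothetical thresholds in metres for a four-level tree asset.
THRESHOLDS = [15.0, 40.0, 100.0]

camera = (0.0, 0.0, 0.0)
tree = (30.0, 40.0, 0.0)
lod = select_lod(math.dist(camera, tree), THRESHOLDS)  # 50 m away
```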

Key Topics to Learn for Rendering and Visualization Interview

- Ray Tracing and Path Tracing: Understand the fundamental principles, differences, and applications of these rendering techniques. Explore their strengths and limitations in various scenarios.

- Shader Programming (GLSL, HLSL): Develop a strong grasp of shader languages and their use in creating realistic materials, lighting effects, and post-processing effects. Practice writing shaders for different rendering pipelines.

- Real-time Rendering Techniques: Familiarize yourself with techniques optimized for interactive applications, such as level of detail (LOD), frustum culling, and occlusion culling. Understand their impact on performance.

- Image-Based Lighting (IBL): Learn how to utilize environment maps for realistic lighting and reflections. Explore different techniques like cubemaps and spherical harmonics.

- Physically Based Rendering (PBR): Master the principles of PBR, including energy conservation and accurate material representation. Understand the importance of metallic, roughness, and normal maps.

- Data Structures and Algorithms: Build a solid understanding of relevant data structures (e.g., octrees, kd-trees) and algorithms for efficient scene traversal and rendering.

- Rendering Pipelines: Gain a solid understanding of the different stages involved in a modern rendering pipeline, from vertex processing to fragment processing and post-processing.

- Performance Optimization: Develop strategies for optimizing rendering performance, including profiling, identifying bottlenecks, and implementing appropriate optimizations.

- Common Visualization Techniques: Explore techniques like volume rendering, particle systems, and advanced shading models for specific applications like medical imaging or scientific visualization.

- Software and Tools: Demonstrate familiarity with industry-standard software and tools used in rendering and visualization, such as Blender, Unreal Engine, and Unity.

Next Steps

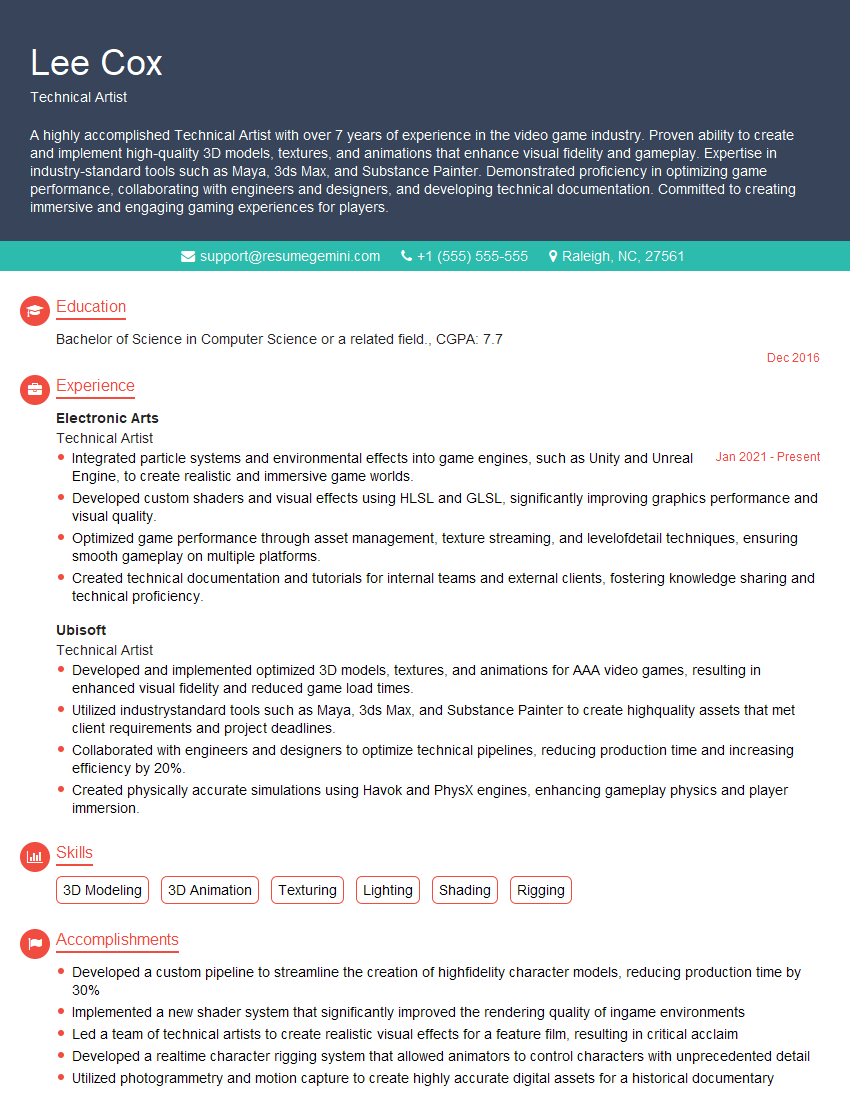

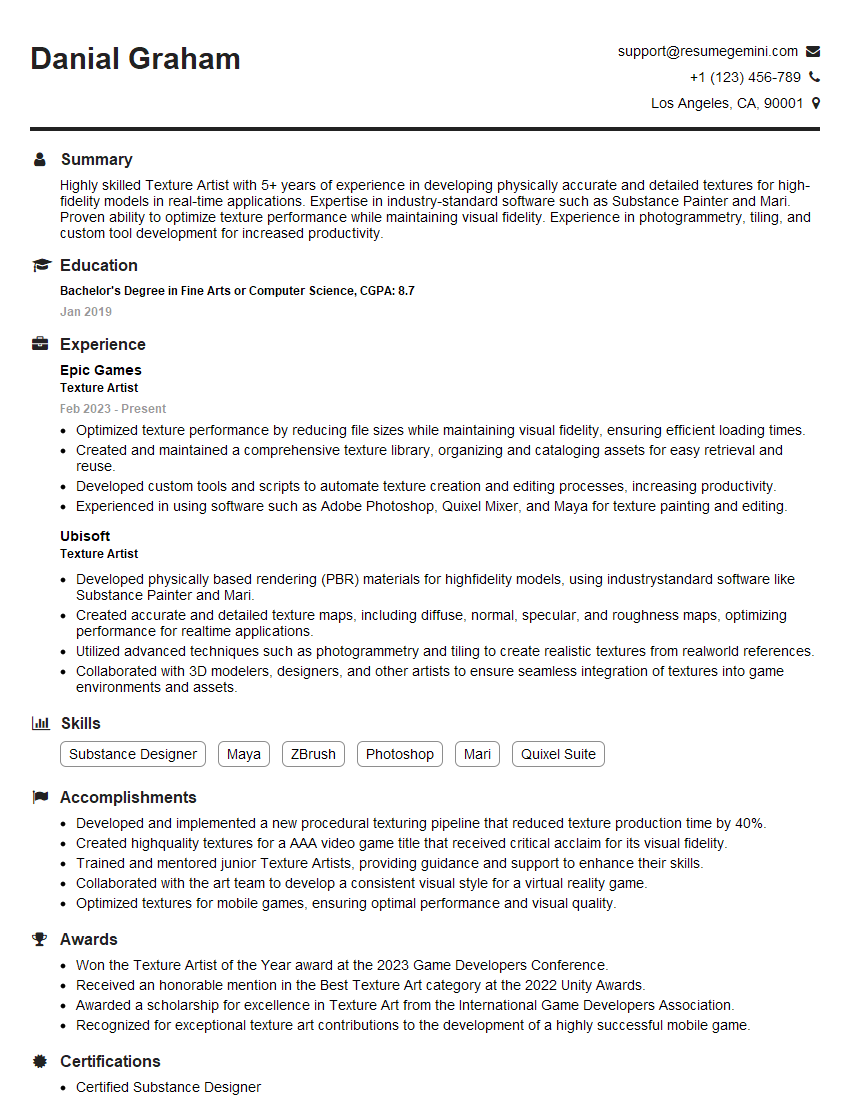

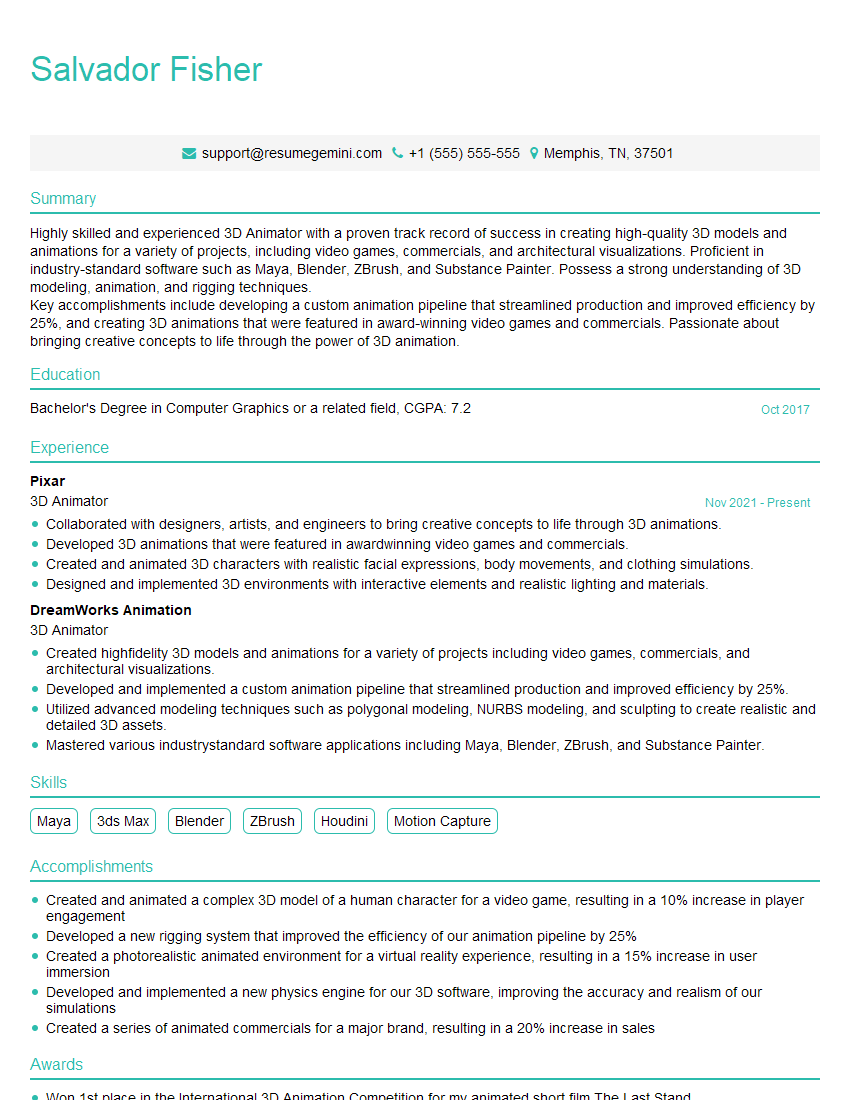

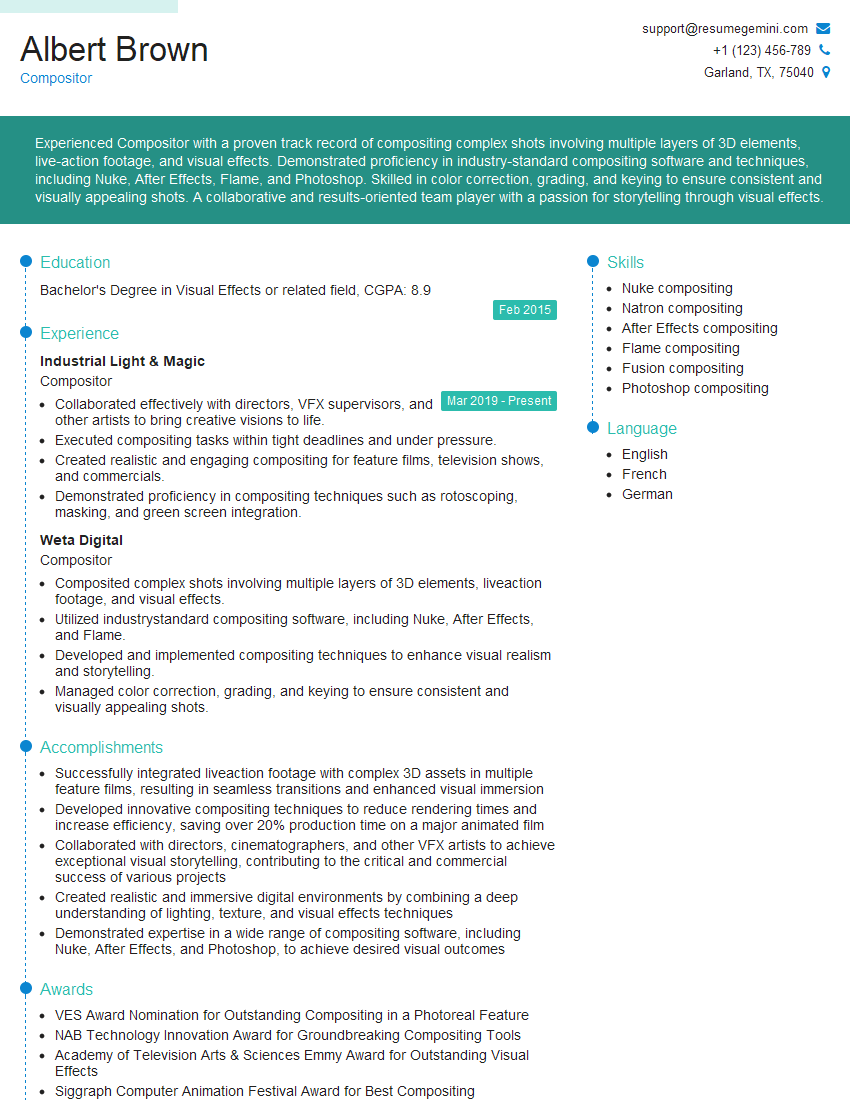

Mastering rendering and visualization opens doors to exciting and rewarding careers in game development, film, architecture, and scientific research. A strong understanding of these techniques is highly sought after, making you a valuable asset to any team. To maximize your job prospects, create an ATS-friendly resume that effectively highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. Examples of resumes tailored to rendering and visualization are available to help guide your process.
