Cracking a skill-specific interview, like one for Sensing and Perception Systems, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Sensing and Perception Systems Interview

Q 1. Explain the difference between active and passive sensors.

The core difference between active and passive sensors lies in how they acquire data. Passive sensors simply detect energy or radiation already present in the environment, like a camera capturing light reflected from objects. Think of your eyes – they passively receive light to see. They don’t emit anything themselves. Active sensors, conversely, emit energy and then measure the response from the environment. A LiDAR system, for instance, emits laser pulses and measures the time it takes for the pulses to reflect back, allowing it to create a 3D point cloud. It’s like using a flashlight in a dark room – you’re actively illuminating your surroundings to see.

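In essence, active sensors give you more control over what is measured and can provide more specific information (for example, direct range measurements), but they often consume more power and their emissions can be detected. Passive sensors depend on ambient energy, so they can struggle when it is scarce (a camera at night, for instance), but they are generally more energy-efficient and discreet.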
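To make the active-sensing principle concrete, here is a minimal sketch of time-of-flight ranging as used by pulsed LiDAR. It assumes an idealized single return and uses the vacuum speed of light, which is close enough in air for illustration. The function name `tof_range_m` is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_range_m(round_trip_time_s: float) -> float:
    """Range to a target from the round-trip time of an emitted pulse.

    The pulse travels to the target and back, so the one-way distance is
    half of (speed of light x round-trip time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A return arriving 200 ns after emission corresponds to roughly 30 m.
print(f"{tof_range_m(200e-9):.2f} m")  # prints 29.98 m
```

The division by two is the key step: the measured time covers the trip to the target and back.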

Q 2. Describe different sensor modalities used in perception systems (e.g., LiDAR, camera, radar).

Perception systems leverage a variety of sensor modalities, each with unique strengths and weaknesses. Let’s look at some common ones:

- Cameras (Vision): These capture images by detecting reflected light. They’re widely used due to their low cost, high resolution, and rich information content, but they struggle in low-light conditions and are sensitive to weather effects like fog or rain.

- LiDAR (Light Detection and Ranging): LiDAR uses laser pulses to measure distances and create detailed 3D point clouds. It excels in providing accurate depth information, even at long ranges, but can be expensive and is susceptible to atmospheric scattering.

- Radar (Radio Detection and Ranging): Radar employs radio waves to detect objects and measure their range, velocity, and angle. It’s less affected by weather conditions than cameras or LiDAR and can penetrate certain materials, but offers lower resolution and less detailed information than LiDAR or cameras.

- Sonar (Sound Navigation and Ranging): Sonar uses sound waves to navigate and create images underwater. It’s crucial for autonomous underwater vehicles (AUVs) and seabed mapping but has limitations in range and resolution compared to other sensors.

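- IMU (Inertial Measurement Unit): IMUs measure acceleration and angular velocity. While not directly sensing the environment, they are crucial for motion tracking and sensor fusion. They provide high-rate motion estimates that bridge the gaps between slower sensor updates and help compensate for the platform's own motion, although their estimates drift over time unless corrected by other sensors.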

The choice of sensor modality depends on the specific application and the trade-offs between cost, accuracy, range, and robustness to environmental conditions.

Q 3. What are the key challenges in sensor fusion and how can they be addressed?

Sensor fusion, the process of combining data from multiple sensors, presents several challenges:

- Data Heterogeneity: Sensors provide data in different formats, coordinate systems, and levels of accuracy, making direct comparison difficult. For example, combining camera images with LiDAR point clouds requires careful alignment and transformation.

- Sensor Noise and Uncertainty: Each sensor is subject to noise and inaccuracies, introducing uncertainty into the fused data. Effective fusion algorithms must handle this uncertainty appropriately.

- Computational Complexity: Processing and fusing data from multiple sensors can be computationally expensive, especially in real-time applications requiring low latency.

- Data Association: Correctly associating measurements from different sensors that correspond to the same object or feature is crucial but challenging, particularly in cluttered environments.

These challenges can be addressed through:

- Calibration: Precisely calibrating each sensor, and the sensors relative to one another, to establish a consistent coordinate system and correct systematic errors.

- Filtering Techniques (e.g., Kalman filtering): Using algorithms that estimate the state of the system while accounting for sensor noise and uncertainty.

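- Data Association Algorithms: Employing techniques ranging from simple nearest-neighbor matching to methods that explicitly account for uncertainty and potential measurement errors, such as gated assignment with the Hungarian algorithm, Joint Probabilistic Data Association (JPDA), or Multiple Hypothesis Tracking (MHT).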

- Robust Statistical Methods: Using methods that are less sensitive to outliers and noise in the data.

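- Efficient Fusion Architectures: Keeping computational cost manageable when fusing many sensors, for example by choosing an appropriate fusion level (raw data, features, or object lists), downsampling dense data, and using hardware acceleration.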

Careful consideration of these aspects ensures reliable and robust sensor fusion in real-world applications like autonomous driving.
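A simple way to see how fusion handles uncertainty is inverse-variance weighting of two measurements of the same quantity. The sketch below makes several assumptions. The sensors are already calibrated into a common frame. Both measurements are associated with the same object. Their noise is independent, zero-mean Gaussian with known variance. `fuse_measurements` and the readings are hypothetical. This is the static special case of a Kalman filter update.

```python
def fuse_measurements(z1, var1, z2, var2):
    """Fuse two independent, unbiased Gaussian measurements of one quantity.

    Each measurement is weighted by the inverse of its variance, so the more
    certain sensor dominates. The fused variance is smaller than either input.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)
    return fused, fused_var


# Hypothetical readings: LiDAR says 10.0 m (variance 0.01), radar says 10.5 m (variance 0.25).
estimate, variance = fuse_measurements(10.0, 0.01, 10.5, 0.25)
print(round(estimate, 3), round(variance, 4))  # prints 10.019 0.0096
```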

Q 4. Explain the concept of Kalman filtering and its application in sensor data processing.

Kalman filtering is a powerful recursive algorithm used to estimate the state of a dynamic system from a series of noisy measurements. Imagine you’re tracking a moving object with a radar. The radar readings will be noisy, but the Kalman filter uses a model of the object’s motion (e.g., constant velocity) to predict its future position and then updates this prediction based on new noisy measurements. For linear systems with Gaussian noise, it does this optimally in a statistical sense, minimizing the mean squared error between the estimated state and the true state. For nonlinear systems, approximations such as the Extended Kalman Filter (EKF) or Unscented Kalman Filter (UKF) are commonly used.

The Kalman filter operates in two steps:

- Prediction: The filter predicts the state of the system at the next time step using a model of the system’s dynamics.

- Update: The filter updates its prediction based on the new measurement, weighing the prediction and the measurement based on their respective uncertainties.
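The prediction and update steps can be sketched for a one-dimensional constant-velocity model. This is an illustrative NumPy sketch, not a production tracker. The state is [position, velocity], and only position is measured. The noise parameters (`meas_var`, `accel_var`) and the function name `kalman_cv_1d` are assumptions chosen for the example.

```python
import numpy as np


def kalman_cv_1d(measurements, dt=0.1, meas_var=4.0, accel_var=1.0):
    """Track position and velocity from noisy 1D position measurements."""
    F = np.array([[1.0, dt], [0.0, 1.0]])   # constant-velocity motion model
    H = np.array([[1.0, 0.0]])              # only position is measured
    # Process noise for a white-noise acceleration model
    Q = accel_var * np.array([[dt**4 / 4, dt**3 / 2],
                              [dt**3 / 2, dt**2]])
    R = np.array([[meas_var]])
    x = np.array([measurements[0], 0.0])   # initial state: first reading, zero velocity
    P = np.diag([meas_var, 100.0])         # large initial velocity uncertainty
    estimates = []
    for z in measurements:
        # Prediction step: propagate state and covariance through the motion model
        x = F @ x
        P = F @ P @ F.T + Q
        # Update step: weigh prediction against measurement by their uncertainties
        y = z - H @ x                       # innovation (measurement residual)
        S = H @ P @ H.T + R                 # innovation covariance
        K = P @ H.T @ np.linalg.inv(S)      # Kalman gain
        x = x + K @ y
        P = (np.eye(2) - K @ H) @ P
        estimates.append(x.copy())
    return np.array(estimates)


rng = np.random.default_rng(0)
true_pos = 2.0 * np.arange(50) * 0.1       # object moving at 2 m/s
noisy = true_pos + rng.normal(0.0, 2.0, size=50)
print(kalman_cv_1d(noisy)[-1])             # final [position, velocity] estimate
```

Raising `meas_var` makes the filter trust its motion model more and smooth harder. Raising `accel_var` lets it react faster to maneuvers at the cost of more noise.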

Applications include:

- Navigation systems: Combining GPS, IMU, and other sensor data to estimate precise location and velocity.

- Object tracking: Estimating the position and velocity of objects in video or other sensor data.

- Robotics: Controlling the movement of robots and estimating their state.

The beauty of Kalman filtering lies in its recursive nature – it continually updates its estimate with new data, making it ideal for real-time applications where precise and up-to-date estimations are critical.

Q 5. Discuss different methods for feature extraction in computer vision.

Feature extraction in computer vision aims to identify and represent the salient characteristics of an image or video that are useful for higher-level tasks such as object recognition or scene understanding. Think of it as summarizing the image’s essential content. Several methods exist:

- Hand-crafted features: These are features designed based on domain knowledge. Examples include:

- SIFT (Scale-Invariant Feature Transform): Detects local features that are invariant to scale and rotation.

- SURF (Speeded-Up Robust Features): A faster alternative to SIFT.

- HOG (Histogram of Oriented Gradients): Represents image patches by their gradient orientations.

- Haar-like features: Simple features used for object detection, often in cascades.

- Learning-based features: These features are learned automatically from data using machine learning techniques, often deep learning. Convolutional Neural Networks (CNNs) are dominant in this area, automatically extracting hierarchical features from images.

The choice of feature extraction method depends on the application and the available data. Hand-crafted features are often computationally less expensive but may not generalize well, while learning-based features are more computationally expensive but can capture more complex patterns from data. For example, hand-crafted features might be sufficient for simple object detection tasks, while deep learning-based features are often necessary for more complex tasks like image segmentation or scene understanding.
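Hand-crafted features can be very cheap to compute. Haar-like features, for example, are usually computed from an integral image (summed-area table), so any rectangle sum costs four lookups regardless of its size. The following pure-Python sketch computes one two-rectangle feature. The function names are hypothetical. A real Viola-Jones-style detector evaluates many such features at multiple scales inside a trained cascade.

```python
def integral_image(img):
    """Summed-area table with one row/column of zero padding:
    ii[r][c] = sum of img[0..r-1][0..c-1]."""
    rows, cols = len(img), len(img[0])
    ii = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        row_sum = 0
        for c in range(cols):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of pixels inside a rectangle using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def haar_two_rect_vertical_edge(ii, top, left, height, width):
    """Two-rectangle Haar-like feature: left half minus right half.

    Responds strongly to vertical edges (bright on one side, dark on the other).
    """
    half = width // 2
    left_sum = rect_sum(ii, top, left, height, half)
    right_sum = rect_sum(ii, top, left + half, height, half)
    return left_sum - right_sum


img = [
    [9, 9, 1, 1],
    [9, 9, 1, 1],
    [9, 9, 1, 1],
]
ii = integral_image(img)
print(haar_two_rect_vertical_edge(ii, 0, 0, 3, 4))  # prints 48
```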

Q 6. How do you handle noisy sensor data in a perception system?

Noisy sensor data is a ubiquitous problem in perception systems. Handling it effectively is crucial for reliable performance. Strategies include:

- Filtering: Applying smoothing filters (e.g., moving average) to reduce high-frequency noise or Kalman filters to estimate the true signal amidst noise.

- Outlier rejection: Identifying and removing data points that are significantly different from the expected values. This could involve statistical methods like thresholding or robust statistics.

- Sensor fusion: Combining data from multiple sensors to reduce the impact of individual sensor noise. The idea is that errors in one sensor may be compensated by the accurate readings of another sensor.

- Data cleaning and preprocessing: Implementing techniques like median filtering or noise reduction algorithms to remove or reduce noise before further processing.

- Error modeling: Developing a model that represents the characteristics of the sensor noise, incorporating this model into the estimation and decision-making process.

The choice of strategy depends on the type of noise, the application, and the available computational resources. A combination of these techniques often provides the most effective solution.
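Two of these strategies, median filtering and statistical outlier rejection, can be sketched with the Python standard library. The window size and the 3.5 cutoff on the MAD-based robust z-score are common illustrative choices rather than universal settings. The function names are hypothetical.

```python
import statistics


def median_filter(signal, window=3):
    """Sliding-window median; window must be odd. Edges use a truncated window."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(statistics.median(signal[lo:hi]))
    return out


def reject_outliers_mad(values, k=3.5):
    """Drop points whose robust z-score (based on the median absolute deviation) exceeds k."""
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the points equal the median; treat any deviation as an outlier
        return [v for v in values if v == med]
    # 0.6745 scales the MAD so the score is comparable to a z-score for Gaussian data
    return [v for v in values if abs(0.6745 * (v - med) / mad) <= k]


readings = [10, 11, 9, 250, 10, 12]    # one spike from a glitching range sensor
print(median_filter(readings))         # prints [10.5, 10, 11, 10, 12, 11.0]
print(reject_outliers_mad(readings))   # prints [10, 11, 9, 10, 12]
```

Median filtering keeps the sequence length and suppresses isolated spikes. MAD-based rejection removes them outright, which suits batch processing better than a streaming filter.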

Q 7. Explain the concept of sensor calibration and its importance.

Sensor calibration is the process of determining the relationship between the raw sensor readings and the actual physical quantities they measure. Imagine a scale that consistently reads 10 grams higher than the actual weight: it’s not accurate until calibrated. Calibration is essential for correcting such systematic errors and biases in sensor measurements.

For example, a camera needs calibration to determine its intrinsic parameters (focal length, principal point, distortion coefficients) and extrinsic parameters (position and orientation in the world). Without calibration, computer vision algorithms will struggle to accurately interpret the images.

The importance of sensor calibration is multifaceted:

- Accuracy: Calibration corrects systematic errors, improving the accuracy of measurements.

- Reliability: Reliable data is crucial for robust system performance, particularly in safety-critical applications.

- Consistency: Calibration ensures consistency in measurements across different sensors and over time.

- Data Integration: Proper calibration is critical for seamless sensor fusion, as it aligns data from different sensors into a common coordinate system.

Calibration methods vary depending on the sensor type and can involve using known targets or reference sensors. For instance, camera calibration often uses checkerboard patterns, while IMU calibration involves specific procedures to estimate bias and scale factors.
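The scale example above can be turned into a minimal calibration sketch. It fits a linear model `raw = gain * true + offset` from readings of known reference weights, then inverts it. The linear model and the reference data are assumptions for illustration. Camera calibration needs a richer model (intrinsics plus distortion), which OpenCV's `cv2.calibrateCamera` estimates from checkerboard images.

```python
def fit_gain_offset(true_values, raw_readings):
    """Least-squares fit of raw = gain * true + offset from reference measurements."""
    n = len(true_values)
    mean_t = sum(true_values) / n
    mean_r = sum(raw_readings) / n
    cov = sum((t - mean_t) * (r - mean_r) for t, r in zip(true_values, raw_readings))
    var = sum((t - mean_t) ** 2 for t in true_values)
    gain = cov / var
    offset = mean_r - gain * mean_t
    return gain, offset


def correct(raw, gain, offset):
    """Invert the calibration model to recover the physical quantity."""
    return (raw - offset) / gain


# Hypothetical reference weights (grams) and readings from a scale that reads 10 g high
reference = [0, 100, 200, 500]
readings = [10, 110, 210, 510]
gain, offset = fit_gain_offset(reference, readings)
print(gain, offset)                # prints 1.0 10.0
print(correct(260, gain, offset))  # prints 250.0
```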

Q 8. Describe different techniques for object detection and tracking.

Object detection and tracking are fundamental tasks in sensing and perception. Object detection identifies the presence and location of objects within a scene, while object tracking follows the objects’ movement over time. Several techniques exist, often used in conjunction.

- Traditional Computer Vision: This approach uses handcrafted features, such as Haar-like features in a cascade (classically for face detection) or Histogram of Oriented Gradients (HOG) features, combined with classifiers like Support Vector Machines (SVMs) or AdaBoost. Think of it as teaching a computer to recognize objects from predefined visual characteristics. These methods are relatively simple to implement, but they adapt poorly to variations in lighting, viewpoint, and object appearance.

- Deep Learning-based methods: These methods, using Convolutional Neural Networks (CNNs), have revolutionized the field. Networks like YOLO (You Only Look Once), Faster R-CNN (Regions with CNN features), and SSD (Single Shot MultiBox Detector) directly learn features from data, exhibiting superior performance and generalization capabilities. For example, YOLO processes the entire image at once, predicting bounding boxes and class probabilities simultaneously, making it very fast. Faster R-CNN employs a region proposal network to identify potential object locations before classification.

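- Tracking Algorithms: Once objects are detected, tracking algorithms follow their trajectories. Common algorithms include Kalman filtering, particle filtering, and DeepSORT. Kalman filtering predicts object location from a motion model and previous movement. Particle filtering represents the state with weighted samples, so it can handle nonlinear motion and non-Gaussian or multimodal uncertainty that a standard Kalman filter cannot. DeepSORT combines Kalman-filter motion prediction with learned appearance features for data association, which helps maintain object identities through brief occlusions.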

In practice, a system might use YOLO for object detection and DeepSORT for tracking, providing a robust and efficient solution. Imagine a self-driving car leveraging this: YOLO detects pedestrians and vehicles in each frame, while DeepSORT links those detections over time into consistent tracks whose motion can be predicted, supporting safe navigation.
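A core step in tracking-by-detection is associating each frame's detections with existing tracks. SORT and DeepSORT solve this assignment with the Hungarian algorithm, and DeepSORT also uses appearance features. The sketch below substitutes a simpler greedy matcher on bounding-box IoU to show the idea. Track boxes are assumed to be the motion-model predictions for the current frame. `box_iou`, `associate`, and the 0.3 threshold are hypothetical choices.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily match predicted track boxes to new detections by highest IoU.

    tracks: dict of track_id -> predicted box; detections: list of boxes.
    Returns (matches, unmatched_track_ids, unmatched_detection_indices).
    """
    pairs = sorted(
        ((box_iou(tbox, dbox), tid, di)
         for tid, tbox in tracks.items()
         for di, dbox in enumerate(detections)),
        reverse=True,
    )
    matches, used_tracks, used_dets = [], set(), set()
    for iou, tid, di in pairs:
        if iou < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if tid in used_tracks or di in used_dets:
            continue
        matches.append((tid, di))
        used_tracks.add(tid)
        used_dets.add(di)
    unmatched_tracks = [tid for tid in tracks if tid not in used_tracks]
    unmatched_dets = [di for di in range(len(detections)) if di not in used_dets]
    return matches, unmatched_tracks, unmatched_dets


tracks = {1: (0, 0, 10, 10), 2: (50, 50, 60, 60)}
detections = [(52, 51, 62, 61), (1, 1, 11, 11), (100, 100, 110, 110)]
print(associate(tracks, detections))  # prints ([(1, 1), (2, 0)], [], [2])
```

Unmatched detections would typically start new tentative tracks. Unmatched tracks would be kept alive for a few frames so that short occlusions do not break their identity.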

Q 9. What are some common algorithms used for image segmentation?

Image segmentation partitions an image into meaningful regions or segments based on similarities in color, texture, or other features. A plethora of algorithms exist, broadly categorized into:

- Thresholding: The simplest method, it assigns each pixel to a region depending on whether its intensity exceeds a predefined threshold. It is useful for images with clear intensity differences between objects and background, but less effective for complex scenes.

- Region-based segmentation: These methods group pixels based on their similarity to neighboring pixels, using techniques like region growing or watershed segmentation. Region growing starts from a seed pixel and expands the region iteratively. Watershed segmentation treats the image as a topographic map, finding watershed lines to separate regions.

- Edge-based segmentation: This approach first detects edges in the image using operators like Sobel or Canny and then connects these edges to form regions. Sensitive to noise and may produce fragmented regions.

- Clustering-based segmentation: Algorithms like k-means cluster pixels into groups based on their feature vectors (color, texture, etc.), assigning each cluster to a region. K-means requires specifying the number of clusters beforehand.

- Deep Learning-based segmentation: Convolutional Neural Networks (CNNs), particularly U-Net and Mask R-CNN architectures, have shown impressive results. They learn complex features directly from the image data, providing accurate and detailed segmentation even in challenging scenarios. U-Net is known for its encoder-decoder structure, capturing context and details effectively. Mask R-CNN extends Faster R-CNN by adding a branch for generating pixel-wise masks for each detected object, providing instance segmentation.

Choosing the right algorithm depends on the specific application and image characteristics. For medical image analysis, deep learning approaches often provide superior accuracy. For simpler applications like separating foreground from background in a picture, thresholding may suffice.
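Region growing, described above, fits in a few lines. This sketch assumes a grayscale image stored as a list of rows and uses 4-connectivity. Its criterion compares each candidate pixel with the seed intensity; some variants compare against the running region mean instead. `region_grow` and `tol` are hypothetical names.

```python
from collections import deque


def region_grow(img, seed, tol):
    """Grow a region from seed (row, col), adding 4-connected neighbors whose
    intensity is within tol of the seed intensity. Returns a set of pixels."""
    rows, cols = len(img), len(img[0])
    seed_val = img[seed[0]][seed[1]]
    region = {seed}
    frontier = deque([seed])
    while frontier:
        r, c = frontier.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region
                    and abs(img[nr][nc] - seed_val) <= tol):
                region.add((nr, nc))
                frontier.append((nr, nc))
    return region


img = [
    [10, 12, 80, 82],
    [11, 13, 81, 85],
    [90, 12, 11, 84],
]
print(sorted(region_grow(img, (0, 0), tol=5)))
# prints [(0, 0), (0, 1), (1, 0), (1, 1), (2, 1), (2, 2)]
```

The bright pixel at (2, 0) is excluded even though it touches the region. The pixel at (2, 2) is included because it is reachable through (2, 1).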

Q 10. How do you evaluate the performance of a perception system?

Evaluating a perception system’s performance requires a multifaceted approach, considering aspects like accuracy, precision, recall, and speed. The metrics used heavily depend on the specific task.

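- Accuracy: The overall correctness of the system. For object detection, this is typically summarized with mean Average Precision (mAP), which accounts for both classification and localization. A predicted box usually counts as correct only if its IoU with the ground truth exceeds a threshold. For segmentation, accuracy is often measured by the Intersection over Union (IoU) score: the overlap between the predicted and ground truth segmentation masks divided by their union.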

- Precision: The ratio of correctly identified positive instances to the total number of predicted positive instances. A high precision indicates few false positives.

- Recall: The ratio of correctly identified positive instances to the total number of actual positive instances. A high recall indicates few false negatives.

- F1-score: The harmonic mean of precision and recall, providing a balanced measure of performance.

- Processing Time: Crucial for real-time applications. It measures the time taken to process an input and generate the output. For autonomous driving, this needs to be very low (milliseconds).

- Robustness: The ability of the system to handle variations in lighting, viewpoint, occlusion, and noise. Testing under diverse conditions is vital.

We often use confusion matrices to visualize and calculate these metrics. Datasets with ground truth labels are essential for evaluation. For example, the COCO dataset is widely used for object detection and segmentation evaluation. A thorough evaluation strategy incorporates multiple metrics and considers the specific application requirements.
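The precision, recall, F1, and IoU definitions above translate directly into code. This sketch assumes detections have already been matched to ground truth to produce the counts. Masks are represented as sets of pixel coordinates. The function names and numbers are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Detection metrics from counts of true positives, false positives, false negatives."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def mask_iou(pred, truth):
    """IoU of two binary masks, each given as a set of (row, col) pixels."""
    union = pred | truth
    # Both masks empty: treat as perfect agreement (a convention choice)
    return len(pred & truth) / len(union) if union else 1.0


# Hypothetical counts: 80 correct detections, 20 false alarms, 40 missed objects
p, r, f1 = precision_recall_f1(80, 20, 40)
print(round(p, 3), round(r, 3), round(f1, 3))  # prints 0.8 0.667 0.727
```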

Q 11. Explain the trade-offs between different sensor technologies.

Sensor technology selection involves careful consideration of several trade-offs. Different sensors have strengths and weaknesses in terms of cost, range, accuracy, resolution, power consumption, and robustness.

- Cameras (RGB, Depth): RGB cameras provide color information, while depth cameras (such as structured light or time-of-flight) provide 3D information. RGB cameras are cheaper and widely available. Depth cameras can be more expensive and less robust in challenging lighting conditions; strong sunlight, for instance, can wash out structured-light patterns. However, the added depth channel makes tasks like obstacle detection and 3D localization considerably easier.

- LiDAR (Light Detection and Ranging): Provides highly accurate 3D point cloud data with excellent range. However, it can be expensive and bulky, consumes more power, and is sensitive to adverse weather conditions.

- Radar (Radio Detection and Ranging): Works well in adverse weather conditions. It measures the relative (radial) velocity of objects directly through the Doppler effect, which makes it useful for detecting moving objects. However, it provides lower resolution and less detailed information than LiDAR.

- Ultrasonic Sensors: Cheap and easy to use, but with limited range and accuracy. Often used for short-range proximity sensing.

The choice depends on the application. A self-driving car may use a combination of cameras, LiDAR, and radar to get a comprehensive understanding of the environment. This sensor fusion approach mitigates the weaknesses of individual sensors and improves system robustness. A robot arm, by contrast, might rely on simpler ultrasonic sensors for collision avoidance.

Q 12. Describe your experience with different programming languages used in sensing and perception (e.g., C++, Python).

My experience encompasses various programming languages commonly used in sensing and perception.

- C++: I’ve extensively used C++ for projects requiring real-time performance and low-level access to hardware. Its efficiency and deterministic behavior are crucial for developing high-performance algorithms and interacting with sensor drivers. For example, in a robotics project, C++ was essential for achieving the required frame rates for real-time control and image processing.

- Python: Python’s rich ecosystem of libraries (NumPy, OpenCV, scikit-learn, TensorFlow, PyTorch) makes it highly productive for rapid prototyping and experimentation. I use Python extensively for data analysis, machine learning model training, and development of high-level perception algorithms. A recent project involved using TensorFlow to train a deep learning model for object detection, which was significantly faster to develop in Python compared to C++.

- MATLAB: I have used MATLAB for algorithm development and simulation, particularly in the early stages of a project, due to its powerful visualization and prototyping tools.

Choosing the right language depends on the project’s specific requirements. C++ is preferred when real-time constraints are stringent, whereas Python is ideal for rapid prototyping and machine learning tasks. Often, a combination of languages is used, leveraging the strengths of each.

Q 13. How do you handle real-time constraints in perception systems?

Handling real-time constraints in perception systems demands careful consideration of algorithmic efficiency and hardware optimization.

- Algorithmic Optimization: Choosing computationally efficient algorithms is paramount. This might involve using faster algorithms (e.g., YOLO instead of Faster R-CNN), simplifying models, or employing parallel processing techniques.

- Hardware Acceleration: Utilizing hardware like GPUs (Graphics Processing Units) or specialized processors (e.g., FPGAs, Field-Programmable Gate Arrays) can dramatically accelerate computationally intensive operations. GPUs are excellent for massively parallel tasks like deep learning inference. FPGAs let you implement custom, low-latency processing pipelines directly in hardware.

- Data Structures and Algorithms: Efficient data structures and algorithms are essential. For example, spatial indexes such as k-d trees or voxel grids speed up nearest-neighbor queries on point clouds, and hash tables provide fast lookups.

- Multithreading and Concurrency: Dividing the processing workload into multiple threads allows for parallel processing, reducing overall processing time. Care must be taken to manage thread synchronization and avoid deadlocks.

- Code Optimization: Fine-tuning the code to minimize computational overhead and memory usage can improve performance. Techniques like loop unrolling or vectorization can be employed.

In a real-time system, like an autonomous vehicle, meeting stringent timing constraints is critical for safety. Careful profiling and benchmarking are crucial throughout the development process to identify bottlenecks and optimize accordingly.
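To illustrate vectorization, the sketch below computes the range of every point in a synthetic LiDAR-style point cloud twice. The first pass uses a pure-Python loop; the second uses a single NumPy call. The point cloud is random data, and the timing printout will vary by machine, so no specific speedup is claimed. In C++, the analogous gains come from SIMD instructions and compiler auto-vectorization.

```python
import time

import numpy as np


def ranges_loop(points):
    """Euclidean range of each 3D point, one point at a time in pure Python."""
    return [float((x * x + y * y + z * z) ** 0.5) for x, y, z in points]


def ranges_vectorized(points):
    """Same computation expressed as a single NumPy array operation."""
    return np.linalg.norm(points, axis=1)


rng = np.random.default_rng(0)
cloud = rng.uniform(-50.0, 50.0, size=(20_000, 3))  # synthetic point cloud

t0 = time.perf_counter()
slow = ranges_loop(cloud)
t1 = time.perf_counter()
fast = ranges_vectorized(cloud)
t2 = time.perf_counter()

assert np.allclose(slow, fast)  # both paths must agree before comparing speed
print(f"loop: {t1 - t0:.4f} s, vectorized: {t2 - t1:.4f} s")
```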

Q 14. Explain the concept of SLAM (Simultaneous Localization and Mapping).

Simultaneous Localization and Mapping (SLAM) is a fundamental problem in robotics and computer vision. It involves building a map of an unknown environment while simultaneously estimating the robot’s location within that map. This is akin to a person exploring a new building; they create a mental map of the layout while also tracking their position within it.

SLAM algorithms typically involve:

- Sensor Data Acquisition: Gathering sensor data (e.g., from cameras, LiDAR, or IMUs – Inertial Measurement Units) to perceive the environment.

- State Estimation: Estimating the robot’s pose (position and orientation) and the map’s features.

- Data Association: Matching sensor measurements to features in the map.

- Map Building: Constructing a consistent and accurate representation of the environment.

Various SLAM approaches exist, including:

- Filtering-based SLAM: Uses probabilistic methods like Kalman or particle filters to estimate the robot’s pose and map features, effectively handling uncertainty. Examples include Extended Kalman Filter (EKF) SLAM and Unscented Kalman Filter (UKF) SLAM.

- Graph-based SLAM: Represents the robot’s trajectory and map features as a graph, with nodes representing poses and edges representing constraints between them. Optimization techniques are used to refine the map and robot trajectory. Popular optimization frameworks include g2o and GTSAM.

- Visual SLAM (VSLAM): Uses primarily visual information from cameras to perform SLAM. ORB-SLAM is a prominent example, and SVO (Semi-direct Visual Odometry) is a closely related visual odometry approach.

Choosing the right SLAM algorithm depends on factors such as sensor type, environmental conditions, and computational resources. SLAM has applications in autonomous navigation, augmented reality, and robotics exploration.
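Graph-based SLAM can be illustrated with a toy one-dimensional pose graph. Each pose is a single number, and each edge is a measured offset between two poses. The sketch assumes linear constraints, so weighted linear least squares (NumPy's `lstsq`) solves it in one step. Real systems optimize poses in 2D or 3D with nonlinear solvers such as those in g2o or GTSAM. The function name, weights, and measurements are hypothetical.

```python
import numpy as np


def optimize_pose_graph_1d(num_poses, edges):
    """Least-squares pose-graph optimization for poses on a line.

    edges: list of (i, j, measured_offset, weight) meaning x_j - x_i ~= measured_offset.
    Pose 0 is anchored at 0 to remove the gauge freedom.
    """
    rows, rhs = [], []
    for i, j, z, w in edges:
        row = np.zeros(num_poses)
        row[j], row[i] = 1.0, -1.0
        s = np.sqrt(w)                 # scale each residual by sqrt(weight)
        rows.append(s * row)
        rhs.append(s * z)
    # Strong prior that fixes pose 0 at the origin
    anchor = np.zeros(num_poses)
    anchor[0] = 1e3
    rows.append(anchor)
    rhs.append(0.0)
    A, b = np.vstack(rows), np.array(rhs)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


# Odometry claims each step is 1.1 m, but a loop-closure-style constraint
# (e.g., re-observing a landmark) says pose 3 is only 3.0 m from pose 0.
edges = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, 1.1, 1.0), (0, 3, 3.0, 10.0)]
print(np.round(optimize_pose_graph_1d(4, edges), 3))
```

Because the loop-closure edge is weighted more heavily, the optimizer shrinks every odometry step slightly (to roughly 1.003 m) instead of putting the whole 0.3 m discrepancy on a single edge.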

Q 15. Discuss your experience with different deep learning architectures for perception.

My experience with deep learning architectures for perception is extensive, encompassing various networks tailored for different tasks. Convolutional Neural Networks (CNNs) form the bedrock of many of my projects, particularly for image-based perception. I’ve used them extensively for tasks like object detection (with architectures like YOLO and Faster R-CNN), image segmentation (using U-Net and DeepLab), and image classification. Beyond CNNs, I’ve worked with Recurrent Neural Networks (RNNs), especially LSTMs and GRUs, for tasks involving sequential data like understanding video streams and incorporating temporal context into perception. More recently, I’ve explored Transformer networks, which are proving highly effective for tasks requiring long-range dependencies and global context, such as scene understanding and pose estimation. For example, in one project involving autonomous navigation, I integrated a Mask R-CNN for object detection to identify obstacles and a Transformer network to predict future trajectories of moving objects, enabling safer and more efficient navigation. The choice of architecture always depends on the specific needs of the application, considering factors like computational resources, data availability, and the desired level of accuracy and robustness.

Q 16. Describe your experience with ROS (Robot Operating System).

My ROS experience is substantial. I’ve used it extensively for integrating diverse sensor data, developing robotic control systems, and deploying perception algorithms in real-world robotic applications. I’m proficient in writing ROS nodes, utilizing various ROS packages, and managing complex ROS systems. I’m familiar with different ROS communication mechanisms, including topics, services, and actions. For instance, in a project involving a mobile manipulator robot, I used ROS to seamlessly integrate data from a stereo camera (for depth perception), a lidar (for 3D point cloud generation), and an IMU (for inertial measurements). I developed ROS nodes for processing this sensor data, performing object detection and localization, and generating control commands for the robot’s motion and manipulation. My expertise extends to utilizing ROS tools for debugging, visualization (RViz), and system monitoring, enabling efficient development and deployment of complex robotic systems. Furthermore, I have experience deploying ROS on embedded systems, which is crucial for resource-constrained robotic applications.

Q 17. How do you deal with occlusions in object detection?

Occlusions are a significant challenge in object detection. My approach involves a multi-faceted strategy. First, I leverage robust object detection algorithms that are designed to handle partial occlusions. For instance, YOLOv5 and Faster R-CNN exhibit reasonable performance even when objects are partially hidden. Secondly, I employ techniques like sensor fusion, combining data from multiple sensors (e.g., cameras, lidar, radar) to obtain a more complete and robust view of the environment. If a camera view is occluded, lidar data can often provide supplementary information. Thirdly, I utilize temporal information by tracking objects over time. By tracking the history of an object’s position and appearance, I can better predict its location and identity even if it’s temporarily occluded. Finally, I incorporate context understanding into the detection process. By understanding the typical arrangement of objects in a scene, I can infer the presence of an occluded object based on the positions of visible objects. For example, if a car is partially hidden behind another car, knowing the typical size and shape of cars can help refine the detection of the occluded vehicle.

Q 18. Explain the difference between extrinsic and intrinsic camera parameters.

Intrinsic and extrinsic camera parameters define the camera’s internal geometry and its position and orientation in the world, respectively. Intrinsic parameters describe the internal characteristics of the camera, such as focal length (f_x, f_y), principal point (c_x, c_y), and radial distortion coefficients (k_1, k_2, etc.). These parameters are needed to map 3D points in the camera’s coordinate system to 2D pixel coordinates on the image sensor. Extrinsic parameters, on the other hand, describe the camera’s pose (position and orientation) in a world coordinate system. They are typically represented by a rotation matrix (R) and a translation vector (t) that together define the rigid transformation between the world and camera coordinate systems. In the common convention, a world point X_w is expressed in camera coordinates as X_c = R X_w + t. Understanding both is critical for accurate 3D reconstruction, object pose estimation, and camera calibration, and incorrect calibration can lead to significant errors in 3D perception. Imagine trying to pinpoint an object’s location using a distorted image with misaligned pixels; accurate intrinsic calibration is vital here. Similarly, the camera’s location and orientation (extrinsic parameters) determine which part of the world is visible to the camera. An accurate pose is what allows the captured images to be interpreted meaningfully in world coordinates.
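The roles of the two parameter sets can be seen in a minimal pinhole projection. The sketch assumes the X_c = R X_w + t convention and zero skew. It includes only the two radial distortion terms k_1 and k_2 mentioned above and ignores tangential distortion. `project_point` and the numeric values are hypothetical.

```python
import numpy as np


def project_point(X_world, K, R, t, dist=(0.0, 0.0)):
    """Project a 3D world point to pixel coordinates with a pinhole model.

    Extrinsics map the point into the camera frame (X_c = R @ X_world + t).
    The point is then normalized by depth and distorted with two radial
    terms, and the intrinsic matrix K maps it to pixels.
    """
    X_c = R @ np.asarray(X_world, dtype=float) + t
    if X_c[2] <= 0:
        raise ValueError("point is behind the camera")
    x, y = X_c[0] / X_c[2], X_c[1] / X_c[2]   # normalized image coordinates
    r2 = x * x + y * y
    k1, k2 = dist
    radial = 1.0 + k1 * r2 + k2 * r2 * r2      # radial distortion factor
    x_d, y_d = x * radial, y * radial
    u = K[0, 0] * x_d + K[0, 2]                # f_x * x + c_x
    v = K[1, 1] * y_d + K[1, 2]                # f_y * y + c_y
    return float(u), float(v)


K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])   # f_x = f_y = 800 px, principal point (320, 240)
R = np.eye(3)                      # camera axes aligned with the world axes
t = np.zeros(3)                    # camera at the world origin
print(project_point([0.5, -0.25, 5.0], K, R, t))  # prints (400.0, 200.0)
```

Changing `R` or `t` moves where the world appears in the image without altering the camera itself. Changing `K` or the distortion terms alters how the same camera-frame point maps to pixels.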

Q 19. What are the limitations of using only cameras for perception?

While cameras provide rich visual information, relying solely on them for perception has several limitations. Cameras struggle in low-light conditions, often producing noisy or unusable images. They also have difficulty perceiving depth accurately without additional techniques like stereo vision or structured light. Furthermore, cameras are susceptible to environmental factors like fog, rain, and snow, which can significantly degrade image quality and hinder accurate perception. Occlusion, as previously discussed, is another significant limitation. Finally, a single camera captures only a 2D projection of the scene, so extracting robust 3D information requires additional sensors or advanced processing techniques. For example, a single camera cannot easily distinguish a large object far away from a smaller object close by, because both can occupy the same number of pixels. A lidar, on the other hand, measures depth directly, overcoming this limitation. Therefore, a robust perception system often integrates multiple sensor modalities to overcome these limitations and ensure reliable performance in various environments.
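Stereo vision is one way to recover depth from cameras. For a rectified image pair, it relies on a simple relation: depth equals focal length (in pixels) times baseline, divided by disparity. The function name and the rig parameters below are hypothetical.

```python
def stereo_depth_m(focal_px, baseline_m, disparity_px):
    """Depth of a point from a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm baseline
for d in (42.0, 21.0, 4.2):
    print(d, round(stereo_depth_m(700.0, 0.12, d), 2))
```

Depth is inversely proportional to disparity. As a result, the same one-pixel disparity error causes a much larger depth error for distant points, which is one reason camera-only depth degrades at range.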

Q 20. How would you design a perception system for a specific application (e.g., autonomous driving, robotics)?

Designing a perception system for a specific application like autonomous driving requires a systematic approach. First, I would define the specific perception tasks required, such as object detection, lane detection, and free space estimation. Next, I would select appropriate sensors based on the specific needs and constraints of the application. For autonomous driving, this might involve cameras, lidar, radar, and potentially ultrasonic sensors. Then, I would design data processing pipelines to acquire, process, and fuse data from different sensors. This would involve implementing algorithms for calibration, filtering, segmentation, object tracking, and scene understanding. For example, I might use a combination of deep learning algorithms for object detection and traditional computer vision techniques for lane detection. Finally, I would carefully evaluate the performance of the system, utilizing appropriate metrics and benchmarking against established standards. This would involve rigorous testing in various environments and scenarios to ensure robustness and safety. For a robotic application, the process would be similar, tailoring the sensor selection and algorithm choices to meet the specific needs of the robot, its environment, and task(s).

Q 21. Discuss the ethical considerations related to perception systems.

Ethical considerations are paramount in the development and deployment of perception systems. Bias in training data can lead to biased and unfair outcomes. For example, a pedestrian detector trained on data that predominantly features people with certain skin tones may detect people with other skin tones less reliably. In a self-driving car, this translates into unequal safety risk. Privacy concerns are also significant, especially with systems that process visual data of individuals. Ensuring data anonymization and responsible data handling is crucial. Safety is another critical ethical consideration. Perception system failures can have serious consequences, particularly in safety-critical applications like autonomous driving. Thorough testing, validation, and verification are essential to minimize the risk of accidents. Transparency and explainability are also becoming increasingly important. Understanding how a perception system makes decisions is crucial for accountability and trust. Therefore, a comprehensive approach to ethical considerations must be integrated throughout the entire lifecycle of perception system development and deployment, from data acquisition to system testing and deployment.

Q 22. Explain your experience with different data formats used in sensing and perception.

My experience with sensor data formats spans a wide range, from raw sensor readings to processed feature vectors. I’ve worked extensively with image data (JPEG, PNG, TIFF, RAW), point cloud data (PLY, PCD, LAS), LiDAR data (various proprietary formats), and video streams (H.264, H.265, MJPEG). Each format presents unique challenges. For instance, raw sensor data requires significant preprocessing, while compressed formats demand efficient decoding. Working with point clouds often involves dealing with large datasets and varying densities. In one project, we were working with a combination of high-resolution camera images and dense LiDAR point clouds to build a 3D map of an urban environment. The LiDAR data, initially in a proprietary format, had to be converted to PCD for efficient processing with PCL (Point Cloud Library). Understanding these nuances is critical to effectively designing and implementing a perception system.
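Because the proprietary reader is vendor-specific, the sketch below starts from points already decoded into an N x 3 NumPy array and shows only the PCD side of such a conversion: an ASCII PCD v0.7 header followed by one `x y z` line per point, a layout PCL can load. The function names are hypothetical.

```python
import numpy as np

def to_pcd_ascii(points):
    """Serialize an N x 3 array of XYZ points as ASCII PCD v0.7 text."""
    points = np.asarray(points, dtype=np.float32)
    if points.ndim != 2 or points.shape[1] != 3:
        raise ValueError("expected an N x 3 array of XYZ points")
    n = points.shape[0]
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z",
        "SIZE 4 4 4",               # bytes per field (float32)
        "TYPE F F F",               # F = floating point
        "COUNT 1 1 1",              # one value per field
        f"WIDTH {n}",               # unorganized cloud: WIDTH = number of points
        "HEIGHT 1",
        "VIEWPOINT 0 0 0 1 0 0 0",  # translation (tx ty tz) + quaternion (qw qx qy qz)
        f"POINTS {n}",
        "DATA ascii",
    ]
    body = [f"{x:.6f} {y:.6f} {z:.6f}" for x, y, z in points]
    return "\n".join(header + body) + "\n"

def write_pcd_ascii(path, points):
    """Write the PCD text to disk (hypothetical helper)."""
    with open(path, "w") as f:
        f.write(to_pcd_ascii(points))
```

ASCII keeps the example readable; for large clouds, binary PCD (`DATA binary`) is more compact and faster to load.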

Furthermore, I am familiar with metadata associated with these formats, such as timestamps, sensor parameters (focal length, GPS coordinates, etc.), and calibration matrices. Accurate metadata is crucial for data fusion and robust system performance. For example, in a robotics application, precise timestamp synchronization between camera and LiDAR data is essential for accurate object localization.
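Timestamp synchronization can be sketched as nearest-neighbour matching: pair each camera frame with the LiDAR sweep whose timestamp is closest, and reject pairs whose offset exceeds a tolerance. This assumes both streams are already stamped against a common clock (for example, through hardware triggering or PTP) and that LiDAR timestamps are sorted; the function name and tolerance are illustrative.

```python
import numpy as np

def match_nearest(camera_ts, lidar_ts, max_dt):
    """Pair each camera frame with the LiDAR sweep closest in time.

    camera_ts, lidar_ts: 1-D timestamps in seconds (lidar_ts sorted).
    max_dt: largest acceptable offset; unmatched frames get index -1.
    Returns an integer array of LiDAR indices, one per camera frame.
    """
    camera_ts = np.asarray(camera_ts, dtype=float)
    lidar_ts = np.asarray(lidar_ts, dtype=float)
    if lidar_ts.size == 0:
        return np.full(camera_ts.shape, -1, dtype=int)
    # Index of the first LiDAR timestamp >= each camera timestamp
    right = np.clip(np.searchsorted(lidar_ts, camera_ts), 0, len(lidar_ts) - 1)
    left = np.clip(right - 1, 0, len(lidar_ts) - 1)
    # Choose whichever neighbour is closer in time
    pick_left = np.abs(camera_ts - lidar_ts[left]) <= np.abs(lidar_ts[right] - camera_ts)
    best = np.where(pick_left, left, right)
    # Reject pairs whose offset is too large to trust
    best[np.abs(lidar_ts[best] - camera_ts) > max_dt] = -1
    return best

print(match_nearest([0.0, 0.033, 0.066, 0.5], [0.0, 0.1, 0.2], max_dt=0.05))  # [ 0  0  1 -1]
```

In ROS, the `message_filters` package provides an approximate-time synchronization policy that serves the same purpose for live topics.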

Q 23. How do you handle different lighting conditions in computer vision?

Handling varying lighting conditions is a fundamental challenge in computer vision. My approach involves a multi-faceted strategy. First, I employ robust image preprocessing techniques, such as histogram equalization and gamma correction, to normalize image intensities and improve contrast. This helps mitigate the impact of uneven illumination. Second, I utilize advanced algorithms that are less sensitive to lighting variations, like those based on SIFT (Scale-Invariant Feature Transform) or SURF (Speeded-Up Robust Features) for feature extraction. These algorithms are designed to identify features that are relatively invariant to changes in lighting and viewpoint.
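Gamma correction remaps intensities through a power law. A minimal NumPy sketch, assuming 8-bit input images, builds a 256-entry lookup table once and indexes it with the image; the function name is illustrative, and gamma should be tuned on the actual camera data.

```python
import numpy as np

def gamma_correct(img, gamma):
    """Apply gamma correction to an 8-bit image.

    Output = 255 * (input / 255) ** (1 / gamma). With this convention,
    gamma > 1 brightens dark regions and gamma < 1 darkens the image.
    """
    img = np.asarray(img, dtype=np.uint8)
    # Precompute a lookup table so each pixel costs a single index operation
    table = np.array([255.0 * (i / 255.0) ** (1.0 / gamma) for i in range(256)])
    table = np.clip(np.round(table), 0, 255).astype(np.uint8)
    return table[img]

print(gamma_correct(np.array([[0, 64, 255]], dtype=np.uint8), 2.0))  # [[  0 128 255]]
```

With OpenCV, the same table can be applied to an image with `cv2.LUT(img, table)`.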

Furthermore, I incorporate techniques like image normalization, which adjusts the pixel values to have a consistent mean and standard deviation across images. For more sophisticated solutions, I might employ deep learning models pre-trained on diverse datasets that include a wide range of lighting conditions. These models can learn to effectively identify objects and features even with significant variations in lighting. In one project involving autonomous driving, we incorporated a generative adversarial network (GAN) to improve the quality of images captured under low-light conditions, significantly boosting the performance of our object detection system.
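Image normalization as described can be sketched as per-image standardization in NumPy. The check at the end models a lighting change as a simple gain and offset on pixel values, a simplifying assumption (real illumination changes are not purely affine), under which two exposures of the same synthetic scene standardize to nearly the same array.

```python
import numpy as np

def standardize(img, eps=1e-6):
    """Rescale an image to zero mean and unit standard deviation.

    Statistics are computed over the spatial axes, so each channel of an
    H x W x C array is normalized independently.
    """
    img = np.asarray(img, dtype=np.float32)
    mean = img.mean(axis=(0, 1), keepdims=True)
    std = img.std(axis=(0, 1), keepdims=True)
    return (img - mean) / (std + eps)  # eps avoids division by zero on flat images

# Illustrative check: the same synthetic scene under two exposures
rng = np.random.default_rng(0)
scene = rng.uniform(0.0, 1.0, size=(8, 8, 3))
dark, bright = 40 * scene + 5, 200 * scene + 30
print(np.allclose(standardize(dark), standardize(bright), atol=1e-3))  # True
```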

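```python
# Example of histogram equalization in Python using OpenCV
import cv2

# equalizeHist works on single-channel 8-bit images, so load as grayscale
img = cv2.imread('image.jpg', cv2.IMREAD_GRAYSCALE)
if img is None:
    raise FileNotFoundError("could not read 'image.jpg'")

# Spread the intensity histogram over the full 0-255 range to boost contrast
equ = cv2.equalizeHist(img)

cv2.imshow('Equalized Image', equ)
cv2.waitKey(0)  # wait for a key press before closing the window
cv2.destroyAllWindows()
```

Q 24. Explain the concept of uncertainty modeling in perception systems.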

Uncertainty modeling is crucial in perception systems because sensor data is inherently noisy and ambiguous. We cannot always be certain about our measurements. Therefore, incorporating uncertainty allows us to quantify the confidence in our perceptions. This is often done using probabilistic frameworks. For example, instead of representing an object’s location as a single point, we represent it as a probability distribution, reflecting our uncertainty about its precise location. This could be a Gaussian distribution, where the mean represents the most likely location and the variance indicates the uncertainty.
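A worked example of why the variance matters: if two sensors each give an independent Gaussian estimate of the same distance, the optimal combination weights each by the inverse of its variance, and the fused variance is smaller than either input. The readings and variances below are made up for illustration.

```python
def fuse_gaussians(mean_a, var_a, mean_b, var_b):
    """Combine two independent Gaussian estimates of the same quantity.

    The fused mean is an inverse-variance weighted average, so the more
    certain estimate (smaller variance) gets more weight.
    """
    fused_var = (var_a * var_b) / (var_a + var_b)
    fused_mean = (mean_a * var_b + mean_b * var_a) / (var_a + var_b)
    return fused_mean, fused_var

# Hypothetical readings for one object: LiDAR says 10.0 m (variance 0.01 m^2),
# radar says 10.4 m (variance 0.04 m^2)
mean, var = fuse_gaussians(10.0, 0.01, 10.4, 0.04)
print(mean, var)  # approximately 10.08 and 0.008
```

The fused estimate sits closer to the more precise LiDAR reading, and its variance (0.008 m^2) is lower than either sensor's alone.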

Common methods for uncertainty modeling include Kalman filtering, particle filters, and Bayesian networks. Kalman filters are effective for tracking objects with linear dynamics and Gaussian noise. Particle filters are better suited for nonlinear systems and non-Gaussian noise. Bayesian networks allow for the modeling of complex relationships between multiple uncertain variables. These techniques allow the system to maintain a belief state that accounts for the uncertainty inherent in the sensor data and propagate this uncertainty through the system. For instance, in robot navigation, uncertainty modeling allows the robot to navigate safely, accounting for errors in its position estimation.
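To make the Kalman filter concrete, here is a minimal scalar version in Python, assuming the tracked quantity (say, the distance to a stationary object) follows a random walk and measurements carry independent Gaussian noise. The function name and parameters are illustrative; a real tracker would use a multidimensional state (for example, position and velocity) with matrix versions of the same equations.

```python
import numpy as np

def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a quantity modeled as a random walk.

    State model: x_k = x_{k-1} + w,  w ~ N(0, process_var)
    Measurement: z_k = x_k + v,      v ~ N(0, meas_var)
    Returns the filtered means and variances after each measurement.
    """
    x, p = x0, p0
    means, variances = [], []
    for z in measurements:
        # Predict: the state may have drifted, so uncertainty grows
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        means.append(x)
        variances.append(p)
    return np.array(means), np.array(variances)
```

The predict step inflates the variance by the process noise, and the update step is an inverse-variance weighted blend of prediction and measurement, which is how the filter propagates uncertainty from one time step to the next.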

Q 25. What are some common performance metrics used in evaluating perception systems?

The performance of a perception system is assessed using various metrics, depending on the specific application. Common metrics include:

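- Accuracy: The percentage of correctly classified instances (positives and negatives) out of all instances. It is most meaningful for classification tasks with balanced classes; in object detection, where true negatives (background regions) are not well defined, precision, recall, and mAP are used instead.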

- Precision: The ratio of correctly predicted positive instances to the total number of predicted positive instances. It measures how many of the predicted positive instances are actually positive.

- Recall (Sensitivity): The ratio of correctly predicted positive instances to the total number of actual positive instances. It measures the ability to find all positive instances.

- F1-score: The harmonic mean of precision and recall, providing a balanced measure of both.

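- Mean Average Precision (mAP): Often used in object detection. Average precision (AP) summarizes the precision-recall curve of one class by averaging precision over recall levels; mAP is the mean of AP across object classes (and, in benchmarks such as COCO, across several IoU thresholds).

- Intersection over Union (IoU): Measures the overlap between predicted and ground truth bounding boxes in object detection, computed as the area of their intersection divided by the area of their union. A detection is typically counted as correct when its IoU with a ground truth box exceeds a threshold such as 0.5.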

- Root Mean Squared Error (RMSE): Used to assess the accuracy of continuous variables, such as depth estimation or pose estimation.
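The metrics above can be sketched in a few lines of Python with NumPy. This assumes axis-aligned boxes given as (x1, y1, x2, y2) and, for AP, that detections have already been matched to ground truth (each marked as a true or false positive, for example by an IoU threshold test). The AP function uses all-point interpolation in the style of PASCAL VOC; COCO samples 101 recall points and averages over IoU thresholds from 0.5 to 0.95, so values are not directly comparable across benchmarks. The function names are illustrative.

```python
import numpy as np

def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # 0 if the boxes don't overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: 1 if that detection matched
    a ground-truth object, else 0; num_gt: number of ground-truth objects.
    """
    order = np.argsort(-np.asarray(scores, dtype=float))  # highest confidence first
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)
    # Pad the curve, then make precision non-increasing as recall grows
    mrec = np.concatenate([[0.0], recall, [1.0]])
    mpre = np.concatenate([[0.0], precision, [0.0]])
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall increases
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))

def rmse(pred, target):
    """Root mean squared error for continuous outputs such as depth."""
    pred, target = np.asarray(pred, dtype=float), np.asarray(target, dtype=float)
    return float(np.sqrt(np.mean((pred - target) ** 2)))
```

mAP is then the mean of `average_precision` over all object classes.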

The choice of metrics depends heavily on the specific task. For example, in autonomous driving, high recall is crucial to avoid missing obstacles, even if it means accepting some false positives. In medical image analysis the priority depends on the use case: screening generally favors high recall so that few cases of the disease are missed, while high precision matters when a positive result triggers costly or invasive follow-up.

Q 26. Describe your experience with different sensor data processing libraries.

I have extensive experience with several sensor data processing libraries. These include:

- OpenCV: A comprehensive library for computer vision tasks, providing tools for image processing, feature extraction, object detection, and more. I’ve used OpenCV extensively for tasks ranging from image filtering and feature detection to building custom object tracking systems.

- PCL (Point Cloud Library): A powerful library for processing 3D point cloud data, offering algorithms for filtering, segmentation, registration, and surface reconstruction. I have employed PCL for tasks like 3D mapping and object recognition from LiDAR data.

- ROS (Robot Operating System): While not strictly a sensor processing library, ROS provides a robust framework for integrating various sensor data sources, managing communication between different nodes, and implementing complex robotic systems. My experience with ROS has been instrumental in creating integrated perception systems for robots.

- TensorFlow and PyTorch: These deep learning frameworks are essential for developing and deploying advanced perception models, such as convolutional neural networks (CNNs) for image classification and recurrent neural networks (RNNs) for sequence processing. I’ve utilized these frameworks to build highly accurate object detection and semantic segmentation models.

My proficiency in these libraries enables me to efficiently process and analyze sensor data from various sources, developing effective perception solutions for diverse applications.
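As an example of the point cloud filtering mentioned for PCL, here is a NumPy sketch of voxel-grid downsampling, which replaces all points falling inside the same cubic voxel with their centroid. PCL offers this as its `pcl::VoxelGrid` filter; the Python function below is a standalone illustration with a hypothetical name, not PCL's API.

```python
import numpy as np

def voxel_downsample(points, voxel_size):
    """Replace all points inside each cubic voxel by their centroid.

    points: N x 3 array of XYZ coordinates; voxel_size: voxel edge length
    in the same units. Returns an M x 3 array with M <= N.
    """
    points = np.asarray(points, dtype=float)
    # Integer voxel coordinates for every point
    keys = np.floor(points / voxel_size).astype(np.int64)
    # Group points that share a voxel
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # guard against shape differences across NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]
```

Downsampling like this is often applied before segmentation or registration, since it bounds point density and reduces processing time.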

Q 27. How do you ensure the robustness and reliability of a perception system?

Ensuring robustness and reliability in a perception system is paramount. This requires a multi-pronged approach:

- Data Augmentation: Expanding the training dataset with variations in lighting, viewpoint, and noise to improve the model’s generalization capabilities.

- Error Detection and Correction: Implementing mechanisms to detect and correct errors introduced by sensors or processing steps. This can involve outlier rejection techniques, consistency checks between different sensors, and data fusion strategies.

- Redundancy: Using multiple sensors or algorithms to provide redundant information and improve overall reliability. If one sensor fails or one algorithm makes a mistake, the other sources can compensate.

- Fault Tolerance: Designing the system to gracefully handle sensor failures or unexpected inputs without complete system collapse. This often involves designing modular systems with fail-safe mechanisms.

- Rigorous Testing and Validation: Thoroughly testing the system under diverse and challenging conditions, including edge cases and adversarial scenarios, to identify weaknesses and improve robustness.
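A minimal NumPy sketch of the data augmentation listed first: random brightness scaling (lighting), horizontal flipping (viewpoint), and additive Gaussian noise (sensor noise). The parameter ranges are assumptions chosen for illustration, not tuned values, and the function name is hypothetical. For detection or segmentation data, any geometric change such as the flip must also be applied to the labels (boxes or masks); this sketch only handles the image.

```python
import numpy as np

def augment(img, rng):
    """Return a randomly perturbed copy of an H x W x C uint8 image."""
    out = img.astype(np.float32)
    out *= rng.uniform(0.6, 1.4)                 # global brightness change
    if rng.random() < 0.5:
        out = out[:, ::-1]                        # horizontal flip (reverse width axis)
    out += rng.normal(0.0, 8.0, size=out.shape)  # additive sensor noise
    return np.clip(out, 0, 255).astype(np.uint8)

rng = np.random.default_rng(42)
sample = augment(np.full((4, 6, 3), 128, dtype=np.uint8), rng)
```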

For instance, in an autonomous vehicle application, we used multiple cameras and LiDAR sensors to ensure robustness to sensor failures and to obtain a comprehensive understanding of the environment. Regular calibration and validation are essential to guarantee the system’s continued accuracy and reliability.
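The redundancy idea can be illustrated with median voting, a simple technique swapped in here for illustration rather than the specific method used in that project. The sketch assumes three or more sensors report the distance to the same, already-associated obstacle in a common frame; the function name and tolerance are hypothetical.

```python
import numpy as np

def vote_range(readings, tolerance):
    """Fuse redundant range readings and flag sensors that disagree.

    readings: distances to the same obstacle from redundant sensors (NaN for
    a sensor that reported nothing). The median is robust to one faulty
    sensor when at least three readings are available.
    Returns (fused_range, indices_of_suspect_sensors).
    """
    r = np.asarray(readings, dtype=float)
    valid = ~np.isnan(r)
    if not valid.any():
        raise ValueError("no valid readings")
    fused = float(np.median(r[valid]))
    # Missing readings and outliers are both treated as suspect
    deviation = np.abs(np.where(valid, r, fused) - fused)
    suspect = np.where(~valid | (deviation > tolerance))[0]
    return fused, suspect.tolist()

print(vote_range([12.1, 12.3, 30.0], tolerance=0.5))  # (12.3, [2])
```

Flagged sensors can then be down-weighted or scheduled for recalibration instead of silently corrupting the fused estimate.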

Q 28. Discuss your experience with different cloud platforms for processing sensor data.

My experience with cloud platforms for processing sensor data includes working with AWS (Amazon Web Services), Google Cloud Platform (GCP), and Azure. These platforms offer scalable computing resources and specialized services for processing large sensor datasets. I’ve used them for tasks such as:

- Training deep learning models: Cloud-based GPUs allow for efficient training of complex perception models on massive datasets.

- Real-time data processing: Cloud streaming services can ingest and process sensor data in near real time, enabling applications like remote monitoring and fleet-wide analytics. Latency-critical perception for autonomous systems, however, typically runs on board rather than in the cloud.

- Data storage and management: Cloud storage solutions offer a scalable and secure way to store and manage large sensor datasets.

- Data analytics and visualization: Cloud platforms provide tools for analyzing sensor data and visualizing insights.

For example, in a project involving environmental monitoring, we used AWS to process sensor data from a network of remote sensors, storing the data in S3 and using EC2 instances for processing and analysis. The scalable nature of these cloud platforms allows for easy adaptation to changing data volumes and computational needs. The choice of platform often depends on specific requirements regarding cost, scalability, existing infrastructure, and specific services offered.

Key Topics to Learn for Sensing and Perception Systems Interview

- Sensor Fundamentals: Understanding different sensor types (e.g., optical, acoustic, inertial), their operating principles, limitations, and signal characteristics. Explore signal-to-noise ratios and calibration techniques.

- Signal Processing: Mastering techniques like filtering, feature extraction, and data fusion for efficient and accurate data analysis from multiple sensors. Consider practical applications such as noise reduction in audio processing or image enhancement.

- Perception Algorithms: Familiarity with algorithms used for object detection, recognition, tracking, and scene understanding. Explore both classical computer vision methods and deep learning approaches.

- Data Structures and Algorithms: Efficient data representation and manipulation are crucial. Review relevant algorithms for tasks like searching, sorting, and graph traversal within the context of sensor data processing.

- System Integration and Design: Understanding the challenges and approaches involved in integrating various sensors and algorithms into a cohesive system. This includes considerations for hardware, software, and power constraints.

- Robotics and Automation Applications: Explore how sensing and perception systems are utilized in robotics for tasks like navigation, manipulation, and human-robot interaction. Consider examples like autonomous vehicles or industrial robots.

- Real-time Systems: Develop a strong understanding of real-time constraints and techniques for processing sensor data with low latency, crucial for applications like autonomous driving or medical imaging.

- Ethical Considerations: Discuss the ethical implications of sensing and perception systems, including privacy, bias, and safety considerations.

Next Steps

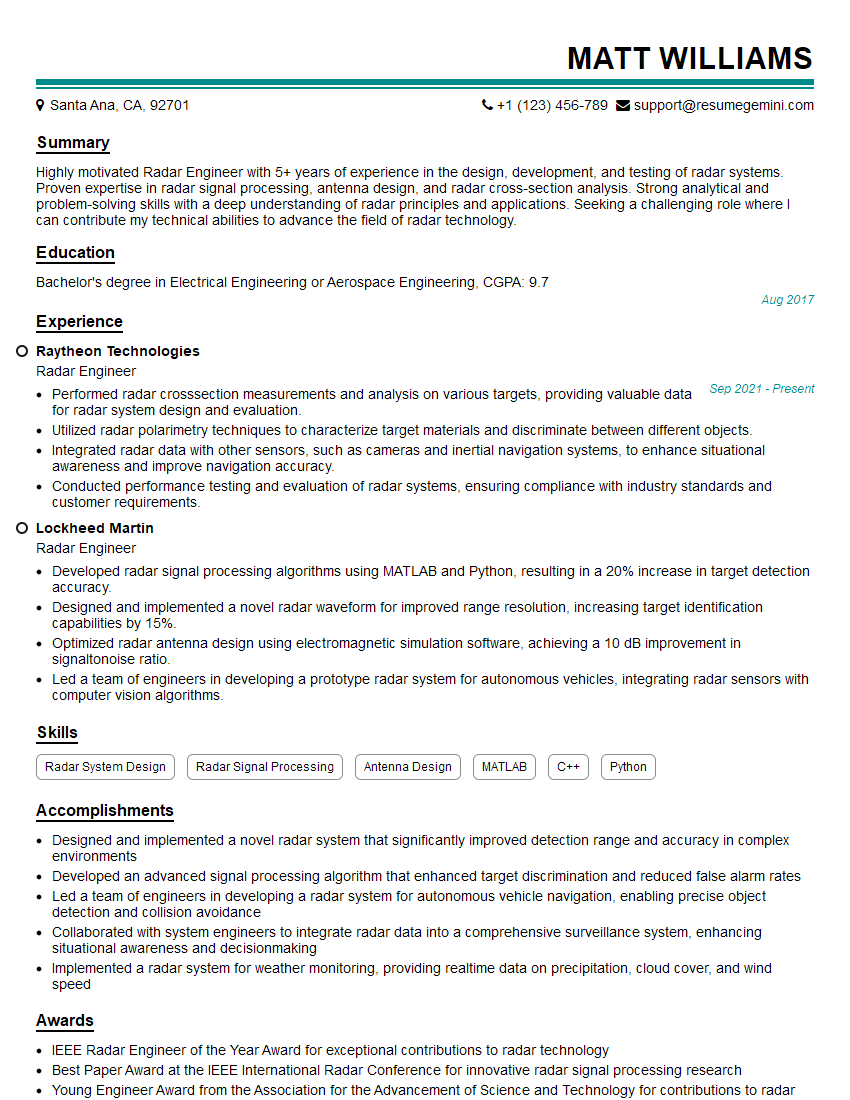

Mastering Sensing and Perception Systems opens doors to exciting careers in robotics, autonomous systems, computer vision, and many more innovative fields. A strong understanding of these concepts is highly valued by employers. To significantly enhance your job prospects, focus on creating a resume that is both ATS-friendly and showcases your skills effectively. ResumeGemini is a trusted resource to help you build a professional and impactful resume tailored to your unique experience. Examples of resumes specifically tailored for Sensing and Perception Systems roles are available to guide you.
