Every successful interview starts with knowing what to expect. In this blog, we’ll take you through the top Sensor Exploitation interview questions, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in Sensor Exploitation Interview

Q 1. Explain the difference between active and passive sensors.

The core difference between active and passive sensors lies in how they acquire information. Active sensors emit energy and then analyze the reflected or returned signal. Think of it like shouting in a cave and listening for the echoes to understand the cave’s shape. Examples include radar, lidar, and sonar. These sensors actively probe the environment and are less affected by ambient light or other sources of interference. They provide a more controlled and often more precise measurement.

Passive sensors, on the other hand, simply detect the energy that is already present in the environment. They’re like listening to the sounds of the forest to understand the animals inhabiting it without making a sound yourself. Examples are cameras (Electro-Optical, EO) and thermal infrared (IR) sensors. They’re less intrusive but depend on the energy available in the scene: an EO camera struggles in low light, while a thermal IR sensor needs a temperature contrast between the target and its background.

In essence, active sensors are the question-askers, while passive sensors are the listeners.

Q 2. Describe various sensor modalities (e.g., radar, lidar, sonar, EO/IR).

Sensor modalities represent different ways to sense and measure aspects of the environment. Let’s explore some key ones:

- Radar (Radio Detection and Ranging): Uses radio waves to detect objects. It’s great for long ranges, works in most weather conditions and in darkness (though heavy rain can attenuate the signal and reduce accuracy), and can provide information about velocity and range.

- Lidar (Light Detection and Ranging): Employs lasers to measure distances. Provides highly accurate 3D point cloud data, ideal for mapping and autonomous navigation. However, it can be more expensive and susceptible to atmospheric conditions like fog and dust.

- Sonar (Sound Navigation and Ranging): Uses sound waves to detect objects underwater. Essential for navigation and mapping in aquatic environments. Range and resolution depend heavily on water conditions.

- EO/IR (Electro-Optical/Infrared): These sensors cover the visible and infrared spectrum. EO sensors are essentially cameras providing visual images. IR sensors detect heat, allowing for detection even in low-light or complete darkness. This combination gives valuable information about object temperature and identification.

Each modality has its strengths and weaknesses, making the selection dependent on the application and the specific requirements.

Q 3. How does sensor noise impact data quality and what techniques are used to mitigate it?

Sensor noise is any unwanted signal that interferes with the accurate measurement of the intended signal. It’s like static on a radio, obscuring the actual music. Noise can significantly degrade data quality, leading to inaccurate estimations and erroneous conclusions. Think of trying to identify a faint signal (the actual measurement) in a sea of noise.

Several techniques are employed to mitigate noise:

- Filtering: Applying digital filters (e.g., Kalman filter, median filter) to smooth the data and remove high-frequency noise.

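- Averaging: Repeated measurements are averaged to reduce the impact of random noise. This assumes the noise is zero-mean and independent between measurements; under that assumption, averaging N measurements reduces the noise standard deviation by a factor of sqrt(N).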

- Calibration: Properly calibrating the sensor minimizes systematic errors that contribute to noise.

- Signal Processing Techniques: Advanced signal processing techniques such as wavelet transforms or Fourier transforms can isolate and remove specific types of noise.

Choosing the right technique depends heavily on the nature of the noise (is it random, systematic, or periodic?) and the characteristics of the sensor data.
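As a rough illustration of two of the filters mentioned above, here is a minimal sketch that compares a median filter with a moving average on a short series containing one spike. The readings and the window size are made-up values for illustration only.

```python
from statistics import mean, median

def sliding_filter(samples, window, reducer):
    """Apply `reducer` over a centered window; edges use a truncated window."""
    half = window // 2
    out = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        out.append(reducer(samples[lo:hi]))
    return out

# Hypothetical readings with a single impulsive spike (55.0)
readings = [10.0, 10.2, 9.9, 55.0, 10.1, 10.0, 9.8]

median_filtered = sliding_filter(readings, 3, median)  # spike is rejected
mean_filtered = sliding_filter(readings, 3, mean)      # spike is smeared into neighbors

print(median_filtered)
print(mean_filtered)
```

The median filter discards the spike entirely, while the moving average spreads it across neighboring samples. That is why the nature of the noise (impulsive versus random) matters when picking a filter.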

Q 4. Explain the concept of sensor fusion and its benefits.

Sensor fusion is the process of combining data from multiple sensors to obtain a more complete and accurate representation of the environment than could be achieved by using any single sensor alone. It’s like having several witnesses describing an event—each witness might have a slightly different perspective, but by combining their testimonies, you get a clearer picture of what happened.

Benefits of sensor fusion include:

- Improved Accuracy and Reliability: Combining data from different sensors increases confidence in the results and reduces errors.

- Increased Coverage: Sensors with complementary capabilities can provide a more comprehensive view. For instance, combining radar and EO/IR data improves detection in varied weather conditions.

- Enhanced Situational Awareness: Fusion provides a more holistic understanding of the environment, including aspects that a single sensor might miss.

- Robustness to Failures: If one sensor fails, the system can still operate using data from the remaining sensors.

Sensor fusion is widely used in applications like autonomous driving, robotics, and surveillance systems.
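One simple way to make the "combining witnesses" idea concrete is inverse-variance weighting, sketched below. It is only one of many fusion approaches, and it assumes each sensor's error is unbiased, independent of the others, and has a known variance. The radar and lidar numbers are hypothetical.

```python
def fuse(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. Assumes independent,
    unbiased errors with known variances.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total

# Hypothetical range measurements in meters: (value, variance)
radar = (100.0, 4.0)   # noisier sensor
lidar = (102.0, 1.0)   # more precise sensor

fused_value, fused_variance = fuse([radar, lidar])
print(fused_value, fused_variance)
```

The fused estimate sits closer to the more precise sensor, and its variance is lower than either input. This is the "improved accuracy" benefit in numerical form.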

Q 5. What are common challenges in sensor data registration and alignment?

Sensor data registration and alignment are crucial for effective sensor fusion. It’s the process of ensuring that data from different sensors is correctly mapped to a common coordinate system. Imagine trying to merge two maps that are not aligned – you’d have a mess!

Common challenges include:

- Different Coordinate Systems: Sensors may use different coordinate systems (e.g., Cartesian, polar). Transforming data between these systems accurately can be complex.

- Timing Differences: Sensors may not collect data simultaneously, leading to timing discrepancies that need careful handling.

- Geometric Distortions: Lenses, sensor positioning, and other factors can introduce geometric distortions that need correction.

- Environmental Factors: Temperature changes or vibrations can affect sensor positions, causing misalignment over time.

These challenges are addressed through techniques such as calibration, transformation matrices, and data interpolation. Advanced algorithms like Iterative Closest Point (ICP) are often used for accurate alignment.
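To illustrate the coordinate-system and timing challenges, the sketch below converts a polar detection to Cartesian coordinates, moves it into a common frame with a 2D rotation and translation, and linearly interpolates a reading to a shared timestamp. The mounting offset, yaw angle, and sample values are assumptions chosen for the example.

```python
import math

def polar_to_cartesian(r, theta_rad):
    """Convert a range/bearing pair to x, y in the sensor's own frame."""
    return r * math.cos(theta_rad), r * math.sin(theta_rad)

def to_common_frame(x, y, yaw_rad, tx, ty):
    """Rotate by the sensor's yaw, then translate by its mounting offset."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return c * x - s * y + tx, s * x + c * y + ty

def interpolate_at(t, t0, v0, t1, v1):
    """Linearly interpolate a value to time t between two samples."""
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

# Hypothetical sensor: mounted 2 m ahead of the origin, rotated 90 degrees
local_x, local_y = polar_to_cartesian(10.0, 0.0)
common_x, common_y = to_common_frame(local_x, local_y, math.pi / 2, 2.0, 0.0)

# Align a reading sampled at t=0.0 s and t=1.0 s to a shared timestamp of 0.5 s
aligned = interpolate_at(0.5, 0.0, 1.0, 1.0, 3.0)
print(common_x, common_y, aligned)
```

Real systems usually work in 3D with homogeneous transformation matrices, but the structure (convert, rotate, translate, resample in time) is the same.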

Q 6. Describe different methods for sensor calibration and their importance.

Sensor calibration is the process of determining the relationship between the sensor’s output and the actual physical quantity being measured. It’s like calibrating a scale to ensure it accurately measures weight. Accurate calibration is critical for obtaining reliable and meaningful data.

Methods for sensor calibration vary depending on the sensor type, but common approaches include:

- Two-Point Calibration: Determining the relationship using two known points. Simple but can be inaccurate for non-linear relationships.

- Multi-Point Calibration: Using multiple known points to better define the sensor’s response curve. Improves accuracy for non-linear sensors.

- Factory Calibration: Pre-calibrated sensors are provided by the manufacturer. This is typically done using sophisticated equipment.

- In-Situ Calibration: Calibration is performed using reference standards or known targets in the actual operational environment.

The importance of calibration cannot be overstated. Inaccurate calibration introduces systematic errors that can propagate through the entire system, rendering the data unreliable and potentially dangerous in safety-critical applications.
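The two-point and multi-point methods can be sketched as follows, assuming a linear sensor response. The raw counts and reference values are invented for illustration; a genuinely non-linear sensor would need a higher-order fit or a lookup table instead of a straight line.

```python
def two_point_calibration(raw_lo, ref_lo, raw_hi, ref_hi):
    """Return (gain, offset) so that reference = gain * raw + offset."""
    gain = (ref_hi - ref_lo) / (raw_hi - raw_lo)
    offset = ref_lo - gain * raw_lo
    return gain, offset

def multi_point_calibration(raw, ref):
    """Least-squares straight-line fit through several reference points."""
    n = len(raw)
    mean_raw = sum(raw) / n
    mean_ref = sum(ref) / n
    sxx = sum((x - mean_raw) ** 2 for x in raw)
    sxy = sum((x - mean_raw) * (y - mean_ref) for x, y in zip(raw, ref))
    gain = sxy / sxx
    return gain, mean_ref - gain * mean_raw

# Hypothetical ADC counts recorded at 0 and 100 reference units
gain2, offset2 = two_point_calibration(102, 0.0, 498, 100.0)

# Hypothetical multi-point data that happens to be exactly linear
gain_n, offset_n = multi_point_calibration([0, 1, 2, 3], [1.0, 3.0, 5.0, 7.0])

print(gain2, offset2, gain_n, offset_n)
```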

Q 7. How do you handle missing or corrupted sensor data?

Handling missing or corrupted sensor data is a crucial aspect of sensor exploitation. Imagine a crucial piece of the puzzle being missing—you cannot get the full picture.

Strategies for addressing this problem include:

- Data Interpolation: Estimating the missing values using neighboring data points. Linear interpolation is a simple approach, while more sophisticated methods like spline interpolation provide better accuracy.

- Data Imputation: Replacing missing values with statistically derived values, such as the mean or median of the available data. More complex techniques involve using machine learning models to predict missing values based on patterns in the available data.

- Sensor Redundancy: Using multiple sensors to measure the same quantity. If one sensor fails, data from the other sensor can be used.

- Error Detection and Correction: Implementing error detection algorithms to identify corrupted data and potentially correct it using error-correction codes.

The best approach depends on the nature of the data, the extent of missing or corrupted data, and the acceptable level of error. In critical applications, it may be necessary to implement several strategies in combination.
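As a small example of the interpolation strategy, the sketch below fills interior gaps (marked as None) by linear interpolation between the nearest known neighbors. It deliberately leaves leading and trailing gaps untouched, since there is nothing to interpolate between; that choice, and the sample series, are assumptions of this sketch.

```python
def fill_gaps_linear(values):
    """Linearly interpolate interior None gaps; edge gaps stay None."""
    filled = list(values)
    known = [i for i, v in enumerate(filled) if v is not None]
    for left, right in zip(known, known[1:]):
        span = right - left
        for i in range(left + 1, right):
            frac = (i - left) / span
            filled[i] = filled[left] + frac * (filled[right] - filled[left])
    return filled

# Hypothetical series with two dropped samples and a trailing dropout
series = [1.0, None, None, 4.0, None]
repaired = fill_gaps_linear(series)
print(repaired)
```

In a pandas-based workflow the same idea is usually expressed with the library's built-in interpolation on a Series or DataFrame rather than a hand-written loop.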

Q 8. Explain the concept of signal-to-noise ratio (SNR) and its significance.

Signal-to-noise ratio (SNR) is a measure of the strength of a desired signal relative to the background noise. It’s essentially a ratio indicating how much of the signal is ‘true’ information versus how much is interference. A high SNR means the signal is strong and clear, while a low SNR implies the signal is weak and difficult to discern from the noise. Think of it like trying to hear a conversation in a crowded room; a high SNR would be like a quiet room, making the conversation easy to understand, while a low SNR is like a noisy party, making it hard to hear what’s being said.

In sensor exploitation, a high SNR is crucial. If our sensors are picking up more noise than signal, the data is useless or requires substantial processing to extract meaningful information. For example, in satellite imagery, cloud cover, atmospheric interference, and sensor limitations all contribute to noise. A high SNR ensures that we can accurately interpret the image and extract valuable information like identifying specific objects or changes in land cover.

SNR is typically expressed in decibels (dB) and calculated as SNR_dB = 10 * log10(P_signal / P_noise), where P_signal and P_noise are the power of the signal and the noise, respectively. Achieving a high SNR depends on sensor design, signal processing, and careful data acquisition.
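The decibel formula above translates directly into a few lines of code. In this sketch the helper names and the sample values are illustrative; power is estimated as the mean of the squared samples.

```python
import math

def mean_power(samples):
    """Average power of a sampled signal (mean of squared values)."""
    return sum(x * x for x in samples) / len(samples)

def snr_db(signal_power, noise_power):
    """Signal-to-noise ratio in decibels from two power values."""
    return 10 * math.log10(signal_power / noise_power)

# A signal with 100 times the noise power
ratio_db = snr_db(100.0, 1.0)

# Hypothetical sampled signal and noise records
signal_samples = [2.0, -2.0, 2.0, -2.0]
noise_samples = [0.2, -0.2, 0.2, -0.2]
sampled_db = snr_db(mean_power(signal_samples), mean_power(noise_samples))

print(ratio_db, sampled_db)
```

A factor of 10 in power corresponds to 10 dB, so a 100:1 power ratio is 20 dB. The same holds for a 10:1 amplitude ratio, because power scales with the square of amplitude.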

Q 9. What are different types of image processing techniques used in sensor exploitation?

Image processing techniques in sensor exploitation are vital for enhancing image quality, extracting features, and ultimately deriving meaningful information. Common techniques include:

- Noise reduction: Techniques like median filtering, Gaussian filtering, and wavelet denoising help remove random noise from images, improving SNR and clarity. This is crucial for improving the visual quality and extracting relevant features.

- Image enhancement: Techniques like histogram equalization, contrast stretching, and sharpening improve the visual appearance of images by enhancing contrast and detail. This helps in visualizing subtle features that may be otherwise difficult to discern.

- Geometric correction: This involves correcting for distortions in images caused by sensor orientation, terrain variations, or atmospheric effects. Accurate geometric correction is crucial for integrating sensor data from different sources and using it with geographic information systems (GIS).

- Image segmentation: Techniques like thresholding, region growing, and edge detection help divide an image into meaningful regions or objects based on their characteristics. This is a critical step in object detection and feature extraction.

- Image classification: Supervised methods such as Support Vector Machines (SVM) or Random Forests, and unsupervised methods such as k-means clustering, are used to assign labels or categories to pixels or regions within an image. This allows for identifying different land cover types, materials, or other objects.

The choice of techniques depends heavily on the type of sensor data, the specific application, and the quality of the raw data. Often, a combination of techniques is used in a processing pipeline to achieve optimal results.
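To show how two of these steps chain together in a pipeline, here is a minimal sketch of contrast stretching followed by thresholding on a tiny grayscale image stored as nested lists. The pixel values and the threshold level are made up; production code would typically use an array or image library instead.

```python
def contrast_stretch(image, out_min=0, out_max=255):
    """Linearly rescale pixel values to span [out_min, out_max]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # Flat image: nothing to stretch
        return [[out_min for _ in row] for row in image]
    scale = (out_max - out_min) / (hi - lo)
    return [[round(out_min + (p - lo) * scale) for p in row] for row in image]

def threshold(image, level):
    """Binary segmentation: 1 where the pixel is at or above `level`."""
    return [[1 if p >= level else 0 for p in row] for row in image]

# Hypothetical low-contrast 2x2 image
image = [[50, 60], [70, 100]]
stretched = contrast_stretch(image)
mask = threshold(stretched, 128)
print(stretched, mask)
```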

Q 10. Describe your experience with specific sensor data formats (e.g., GeoTIFF, NetCDF).

I have extensive experience working with various sensor data formats, including GeoTIFF and NetCDF. GeoTIFF is a TIFF-based format that embeds georeferencing metadata (coordinate reference system, map projection, and the pixel-to-ground transform) alongside raster data, so the image is tied to a geographic coordinate system. This allows for easy integration with GIS software and spatial analysis. I’ve utilized GeoTIFF extensively in projects involving aerial photography, satellite imagery, and digital elevation models (DEMs). For example, I used GeoTIFFs of high-resolution satellite imagery to map deforestation in the Amazon rainforest, leveraging the format’s georeferencing capabilities to accurately overlay it on a base map.

NetCDF (Network Common Data Form) is a self-describing, binary format particularly suited for storing large, multi-dimensional arrays of scientific data. I have frequently employed NetCDF when working with data from weather satellites, oceanographic sensors, and climate models. The ability of NetCDF to efficiently store multiple variables (e.g., temperature, humidity, salinity) associated with spatiotemporal coordinates makes it extremely useful for processing large volumes of environmental data. In one project, I used NetCDF data from oceanographic buoys to analyze changes in sea surface temperature over time and understand their relationship to large-scale climate patterns. Understanding the nuances of these formats, including metadata handling and efficient data access methods, is key to effective sensor exploitation.

Q 11. How do you evaluate the accuracy and precision of sensor data?

Evaluating the accuracy and precision of sensor data is paramount in ensuring reliable results and informed decision-making. Accuracy refers to how close the measured value is to the true value, while precision refers to the reproducibility of measurements. We use various techniques for this evaluation:

- Ground truthing: This involves collecting data on the ground at locations corresponding to sensor measurements, acting as a benchmark to compare against sensor readings. For example, for a soil moisture sensor, we might measure moisture levels at multiple locations using a calibrated instrument, and then compare these to the sensor data at the same locations.

- Comparison with reference data: This involves comparing sensor data to data from other trusted sources, such as high-accuracy maps or established models. This helps to ascertain the consistency and quality of the sensor data.

- Statistical analysis: Methods like calculating root mean square error (RMSE), mean absolute error (MAE), and bias help quantify the differences between sensor data and reference data or ground truth measurements. Low values indicate higher accuracy.

- Uncertainty analysis: Propagating uncertainties from individual sensor measurements to final results allows for understanding the overall confidence in the derived information.

The approach to data evaluation depends on the sensor type, the application, and the availability of reference data. A rigorous evaluation methodology is critical for establishing the trustworthiness of sensor data and ensuring reliable conclusions from the analysis.
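The statistical metrics listed above are straightforward to compute once sensor readings are paired with reference values. The sketch below uses hypothetical soil moisture numbers to show bias, MAE, and RMSE side by side.

```python
import math

def error_metrics(measured, reference):
    """Return bias, mean absolute error, and RMSE for paired readings."""
    errors = [m - r for m, r in zip(measured, reference)]
    n = len(errors)
    return {
        "bias": sum(errors) / n,
        "mae": sum(abs(e) for e in errors) / n,
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
    }

# Hypothetical sensor readings and ground-truth values
sensor = [10.5, 20.5, 29.5, 40.5]
truth = [10.0, 20.0, 30.0, 40.0]

metrics = error_metrics(sensor, truth)
print(metrics)
```

Bias captures the average signed offset (an accuracy problem that calibration can address), while MAE and RMSE summarize the overall error size. RMSE penalizes large errors more heavily than MAE.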

Q 12. What are some common algorithms used for feature extraction from sensor data?

Feature extraction algorithms aim to identify and quantify meaningful patterns and characteristics from raw sensor data. Several common algorithms are employed, and the choice depends on the type of data and the goal of the analysis:

- Principal Component Analysis (PCA): A dimensionality reduction technique that identifies the principal components, which capture most of the variance in the data. This is useful for reducing the computational burden of subsequent analysis steps without significant information loss.

- Wavelet transforms: Used for decomposing signals into different frequency components, enabling the identification of specific features at different scales. Useful for analyzing signals with multiple frequency components, such as those from seismic sensors.

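- Fourier transforms: Decompose a signal into its frequency components, like wavelet transforms, but without time localization, so they are best suited to stationary or periodic signals and to identifying dominant frequencies. Often used in spectral analysis of time-series sensor data such as vibration or acoustic signals.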

- Edge detection (e.g., Canny, Sobel): Algorithms identifying boundaries between regions of different characteristics in images. Useful for object detection, segmentation, and feature identification in imagery.

- Machine learning techniques: Algorithms like convolutional neural networks (CNNs) are frequently used for feature extraction in imagery. CNNs automatically learn relevant features from the data, requiring less manual feature engineering compared to classical methods.

The effectiveness of these algorithms often depends on proper preprocessing of data and careful selection of parameters. Understanding the strengths and limitations of each algorithm is critical for successful feature extraction.
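As one concrete feature-extraction example, the sketch below finds the dominant frequency of a sampled signal with a naive discrete Fourier transform. It is written for clarity rather than speed (it is O(n²)); real pipelines would normally use an FFT routine from a numerical library. The 5 Hz test tone and 64 Hz sample rate are assumptions for the demo.

```python
import cmath
import math

def dominant_frequency(samples, sample_rate_hz):
    """Return the frequency (Hz) of the strongest non-DC DFT component."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset
    best_k, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(centered)
        )
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * sample_rate_hz / n

# Hypothetical vibration signal: a 5 Hz sine sampled at 64 Hz for one second
rate = 64
tone = [math.sin(2 * math.pi * 5 * i / rate) for i in range(rate)]
peak_hz = dominant_frequency(tone, rate)
print(peak_hz)
```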

Q 13. Describe your experience with different sensor platforms (e.g., airborne, satellite, ground-based).

My experience spans diverse sensor platforms, encompassing airborne, satellite, and ground-based systems. Each platform presents unique challenges and opportunities. Airborne sensors, such as those on manned aircraft or drones, offer high spatial resolution and flexibility in data acquisition. I’ve worked with airborne LiDAR (Light Detection and Ranging) for creating high-accuracy 3D models of terrain, and with hyperspectral cameras for detailed spectral analysis of ground features.

Satellite sensors provide broad area coverage and are essential for large-scale monitoring applications. I’ve used satellite imagery from Landsat and Sentinel for land cover mapping and change detection. The limitations here are lower resolution compared to airborne sensors and the dependency on weather conditions for data acquisition.

Ground-based sensors, such as weather stations, soil moisture sensors, and seismic sensors, offer high temporal resolution and detailed in-situ measurements. I’ve worked with ground-based sensor networks for monitoring environmental conditions over time and integrating this data with remote sensing data for a more comprehensive understanding. Experience with these different platforms helps me develop tailored strategies for data acquisition, processing, and analysis, taking into account the strengths and weaknesses of each platform.

Q 14. Explain the concept of spatial resolution and its impact on sensor data interpretation.

Spatial resolution refers to the fineness of detail in sensor data, essentially the size of the smallest distinguishable feature. High spatial resolution means smaller features can be identified, leading to more detailed information. Imagine looking at a photograph: a high-resolution image allows you to see small details like individual leaves on a tree, while a low-resolution image only shows the general shape of the tree.

In sensor exploitation, spatial resolution significantly impacts interpretation. High-resolution data enables detailed analysis and identification of small objects or features, leading to more accurate and refined results. For example, high-resolution satellite imagery is necessary for accurate building footprint mapping in urban areas. On the other hand, low-resolution data may be sufficient for large-scale land cover classification where precise location of individual features is less critical. The selection of an appropriate spatial resolution depends on the specific application and the trade-off between data detail, cost, and data volume. Lower resolutions generally result in smaller file sizes and lower processing requirements, but higher resolutions lead to greater accuracy in many applications.

Q 15. How do environmental factors affect sensor performance?

Environmental factors significantly impact sensor performance. Think of it like this: a camera works best in ideal lighting; sensors are similar. Temperature, humidity, pressure, and even electromagnetic interference (EMI) can all affect sensor readings, leading to inaccuracies or complete sensor failure.

- Temperature: Extreme temperatures can damage sensitive components or alter their calibration, leading to drift in measurements. For example, a temperature sensor might report inaccurate readings if exposed to direct sunlight.

- Humidity: High humidity can cause corrosion or condensation on sensor surfaces, affecting conductivity and signal transmission. This is a common issue with humidity sensors themselves, and can affect other sensors indirectly.

- Pressure: Changes in atmospheric pressure can impact the performance of sensors sensitive to pressure differentials, such as altimeters or certain types of pressure sensors used in industrial settings.

- Electromagnetic Interference (EMI): EMI from nearby electrical equipment or radio frequency sources can corrupt sensor signals, resulting in noise and inaccurate data. This is particularly relevant for sensors that use wireless communication.

Understanding these environmental effects is crucial for designing robust sensor systems. This often involves using environmental compensation techniques, selecting sensors with appropriate operating ranges, and incorporating shielding to minimize interference.

Q 16. What are some common challenges in real-time sensor data processing?

Real-time sensor data processing presents unique challenges. The key is dealing with massive volumes of data arriving continuously with strict latency requirements.

- High Data Volume and Velocity: Sensors generate data constantly. Processing this flood of data in real-time requires efficient algorithms and high-performance computing infrastructure. Imagine a network of hundreds of environmental sensors – managing that stream is a significant undertaking.

- Data Latency: Delays in processing can lead to missed opportunities or inaccurate actions. In a self-driving car scenario, even a fraction of a second delay in processing sensor data can be catastrophic.

- Data Variability and Noise: Sensor readings are rarely perfect. Noise, outliers, and errors are common. Robust algorithms are needed to filter out noise and identify reliable data points. Think of a weather sensor affected by a sudden gust of wind; a sophisticated algorithm must be able to filter out the temporary peak.

- Resource Constraints: Real-time systems often operate with limited computing power and memory, requiring optimized algorithms and efficient data structures.

Addressing these challenges requires careful system design, including the selection of appropriate hardware and software, optimization of algorithms, and implementation of robust error-handling mechanisms.
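A common building block for meeting latency and memory constraints is a fixed-size sliding window that updates in constant time per sample. The sketch below keeps a running sum so that each new reading costs O(1) work regardless of window size. The class name and window length are illustrative choices.

```python
from collections import deque

class RollingMean:
    """Constant-time rolling mean over the most recent `size` samples."""

    def __init__(self, size):
        self.window = deque(maxlen=size)
        self.total = 0.0

    def update(self, value):
        # If the window is full, the oldest sample is about to be evicted
        if len(self.window) == self.window.maxlen:
            self.total -= self.window[0]
        self.window.append(value)
        self.total += value
        return self.total / len(self.window)

# Hypothetical stream of readings processed one at a time
smoother = RollingMean(size=3)
outputs = [smoother.update(v) for v in [1.0, 2.0, 3.0, 4.0]]
print(outputs)
```

Memory use is bounded by the window size, which matters on resource-constrained devices. For very long-running streams, recomputing the sum periodically guards against accumulated floating-point error.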

Q 17. Describe your experience with different programming languages used in sensor data analysis (e.g., Python, MATLAB).

My experience spans several programming languages commonly used in sensor data analysis. Python and MATLAB are my favorites for different reasons.

- Python: I leverage Python extensively for its versatility, vast ecosystem of libraries (NumPy, Pandas, Scikit-learn), and ease of use. For example, I’ve used Pandas for data manipulation and cleaning, NumPy for numerical computations, and Scikit-learn for machine learning tasks such as anomaly detection and predictive modeling on sensor data.

import pandas as pd; data = pd.read_csv('sensor_data.csv') is a common starting point for many of my projects.

- MATLAB: MATLAB excels in signal processing and visualization, making it ideal for analyzing sensor data with complex waveforms or time-series patterns. Its built-in functions for signal filtering, spectral analysis, and plotting are invaluable for tasks like analyzing sensor noise and identifying trends. I’ve used it extensively in projects involving acoustic or seismic sensors.

I’m also proficient in C++ for performance-critical applications requiring real-time processing and low-level hardware interaction. Choosing the right language depends greatly on the specific application requirements.

Q 18. How do you ensure the security and integrity of sensor data?

Securing and maintaining the integrity of sensor data is paramount. A multi-layered approach is vital.

- Data Encryption: Encrypting data both in transit (using TLS; its predecessor SSL is deprecated) and at rest (using an algorithm such as AES) protects it from unauthorized access. This prevents data breaches and ensures confidentiality.

- Access Control: Implementing robust access control mechanisms limits access to sensor data based on roles and permissions. Only authorized personnel should have access to sensitive data.

- Data Integrity Checks: Employing checksums, hashing algorithms, and digital signatures verifies data authenticity and detects tampering. If the data integrity check fails, it alerts us to potential compromise.

- Secure Data Storage: Storing data in secure databases with appropriate backup and disaster recovery plans protects against data loss and unauthorized access. We use regularly updated databases and intrusion detection systems.

- Regular Security Audits: Conducting periodic security audits and penetration testing identifies vulnerabilities and weaknesses in the sensor system and data infrastructure.

Think of it like a bank vault: multiple layers of security ensure the safety of the assets within.
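The integrity-check layer can be illustrated with a keyed hash (HMAC), which detects tampering by anyone who does not hold the shared key. This is a minimal sketch using Python's standard library; the key and payload are placeholders, and real deployments need proper key management, which is not shown here.

```python
import hashlib
import hmac

def sign(payload: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag for a sensor payload."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def verify(payload: bytes, key: bytes, tag: str) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign(payload, key), tag)

# Placeholder key and payload, for illustration only
key = b"example-shared-secret"
payload = b'{"sensor_id": 7, "temp_c": 21.4}'
tag = sign(payload, key)

ok = verify(payload, key, tag)
tampered_ok = verify(b'{"sensor_id": 7, "temp_c": 99.9}', key, tag)
print(ok, tampered_ok)
```

A plain checksum or unkeyed hash only catches accidental corruption. The key is what lets the receiver detect deliberate modification.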

Q 19. Explain your experience with different database systems for storing and managing sensor data.

My experience with database systems for sensor data includes both relational and NoSQL databases. The choice depends on the nature and scale of the data.

- Relational Databases (e.g., PostgreSQL, MySQL): Relational databases are well-suited for structured sensor data with defined schemas. They excel at handling complex queries and transactions, and offer robust data integrity features. I’ve used PostgreSQL extensively for projects involving large, structured datasets where data relationships were important.

- NoSQL Databases (e.g., MongoDB, Cassandra): NoSQL databases are excellent for handling high-volume, unstructured, or semi-structured sensor data. Their scalability and flexibility make them suitable for real-time applications with rapidly growing datasets. In projects involving large-scale IoT deployments, I’ve used MongoDB due to its ability to handle high volume, variable data.

Often, a hybrid approach combining relational and NoSQL databases is used to leverage the strengths of each. This architecture allows flexibility to accommodate structured and unstructured sensor data and maintain data integrity.

Q 20. Describe a project where you used sensor data to solve a specific problem.

In a recent project, I used sensor data from a network of soil moisture sensors to optimize irrigation in a large agricultural field. The goal was to reduce water consumption while maximizing crop yield.

The project involved:

- Data Acquisition: Collecting soil moisture data from multiple sensors deployed throughout the field.

- Data Preprocessing: Cleaning and filtering the data to remove noise and outliers.

- Data Analysis: Applying statistical methods and machine learning algorithms to identify patterns and correlations between soil moisture levels, weather conditions, and crop growth.

- Irrigation Control: Developing an irrigation system that automatically adjusts watering schedules based on real-time soil moisture data and weather forecasts.

The results were impressive. We achieved a 20% reduction in water consumption without compromising crop yield, demonstrating the potential of sensor data for creating more efficient and sustainable agricultural practices. This project showcased my skills in data acquisition, data analysis, and the development of practical applications for sensor data.

Q 21. How do you identify and resolve anomalies in sensor data?

Identifying and resolving anomalies in sensor data is crucial for maintaining data quality and system reliability. A combination of techniques is typically employed.

- Statistical Methods: Techniques like Z-score, IQR, or standard deviation can detect outliers that deviate significantly from the norm. For example, a sudden spike in temperature readings from a sensor might indicate a malfunction.

- Machine Learning: Algorithms like One-Class SVM or Isolation Forest can learn the normal behavior of a sensor and identify deviations as anomalies. These algorithms are particularly useful for detecting subtle anomalies not easily identified by simple statistical methods.

- Contextual Analysis: Considering external factors such as weather conditions or equipment status can help distinguish between true anomalies and expected variations. For instance, a drop in temperature might be an anomaly in summer but expected in winter.

- Data Visualization: Visualizing data using graphs and charts can help identify patterns and anomalies that may not be readily apparent in numerical data. This provides a quick overview of the data and highlights suspicious points.

Once an anomaly is identified, its cause must be investigated. This might involve checking sensor calibration, inspecting sensor hardware, or analyzing related data sources to determine the root cause. The resolution strategy depends on the cause. It might involve recalibrating a sensor, replacing a faulty component, or adjusting the anomaly detection thresholds.
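As an example of the statistical approach, the sketch below flags outliers with the IQR rule: anything more than 1.5 times the interquartile range outside the quartiles is marked. The multiplier of 1.5 is a common convention rather than a fixed rule, and the temperature readings are invented.

```python
from statistics import quantiles

def iqr_outliers(values, k=1.5):
    """Return indices of values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < lower or v > upper]

# Hypothetical temperature readings with one sudden spike
temps = [20.1, 20.3, 19.9, 20.0, 20.2, 35.0, 20.1, 19.8]
flagged = iqr_outliers(temps)
print(flagged)
```

The IQR rule is often more robust than a Z-score on short records, because a single large outlier inflates the standard deviation and can mask itself.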

Q 22. What are different types of sensor errors and how can they be detected?

Sensor errors are inevitable in any sensor system. Understanding and mitigating these errors is crucial for accurate data interpretation. There are several types, broadly categorized as:

- Systematic Errors: These are consistent, repeatable errors that affect all measurements in a predictable way. For example, a temperature sensor consistently reading 2°C higher than the actual temperature is a systematic error. Detection involves calibration – comparing the sensor’s readings to a known standard.

- Random Errors: These are unpredictable variations in measurements, often due to noise or minor fluctuations in the environment. Think of the slight variations you might see if you repeatedly measure the same object’s length with a ruler. Detection involves statistical analysis – looking at the distribution of readings over time and calculating metrics like standard deviation. If the standard deviation is high, it suggests a high level of random error.

- Drift: This refers to a gradual change in sensor readings over time, often due to aging components or environmental factors. For instance, a pressure sensor might gradually show lower readings as its internal components wear out. Detection involves regular calibration and monitoring of readings over time. Plotting the readings against time often reveals this drift visually.

- Bias Errors: A bias error is a specific kind of systematic error: a consistent offset from the true value, often caused by the sensor’s design or placement. For instance, a wind sensor placed near a building may consistently underestimate wind speed due to wind shadowing.

Detection of sensor errors combines several techniques. Calibration against known standards is vital for systematic errors. Statistical methods, such as calculating mean, median, and standard deviation, help identify and quantify random errors. Data visualization, using time-series plots and histograms, can reveal drift and outliers. Finally, rigorous sensor placement and environmental monitoring can help prevent bias errors.
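The sketch below shows one way to separate these error types when a sensor is observing a known reference value: the mean error estimates the systematic offset, the slope of a least-squares line through the errors estimates drift, and the spread of what is left over estimates random error. The timestamps, readings, and reference value are hypothetical.

```python
from statistics import mean, stdev

def characterize_errors(timestamps, readings, reference_value):
    """Return (offset, drift_per_unit_time, random_spread)."""
    errors = [r - reference_value for r in readings]
    offset = mean(errors)  # systematic offset (bias)
    t_mean = mean(timestamps)
    # Least-squares slope of error versus time: the drift rate
    slope = sum(
        (t - t_mean) * (e - offset) for t, e in zip(timestamps, errors)
    ) / sum((t - t_mean) ** 2 for t in timestamps)
    # Residuals after removing offset and drift: the random component
    residuals = [
        e - (offset + slope * (t - t_mean))
        for t, e in zip(timestamps, errors)
    ]
    return offset, slope, stdev(residuals)

# Hypothetical sensor watching a 100.0 reference for five hours
hours = [0, 1, 2, 3, 4]
values = [102.0, 102.1, 102.2, 102.3, 102.4]
offset, drift, spread = characterize_errors(hours, values, 100.0)
print(offset, drift, spread)
```

In this made-up record the sensor reads about 2.2 units high on average and drifts upward by about 0.1 units per hour, with almost no random scatter.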

Q 23. What experience do you have with sensor network design and deployment?

I have extensive experience in sensor network design and deployment, particularly in environmental monitoring applications. In one project, I led the design and deployment of a network of soil moisture sensors across a large agricultural field. This involved:

- Sensor Selection: Choosing appropriate sensors based on factors like required accuracy, range, power consumption, and environmental conditions (e.g., temperature, humidity).

- Network Topology: Designing a network topology (e.g., star, mesh, tree) based on factors like communication range, power constraints, and redundancy requirements. We opted for a star topology in the agricultural project, with each sensor sending data to a central gateway.

- Communication Protocol: Selecting a suitable communication protocol (e.g., Zigbee, LoRaWAN, Wi-Fi) based on factors like data rate, range, power consumption, and security. We used LoRaWAN due to its long-range capabilities and low power consumption, crucial for remote field deployments.

- Data Acquisition and Processing: Designing a system for data acquisition and pre-processing, which included filtering, smoothing, and error correction. We used a cloud-based platform to collect and manage the data.

- Deployment and Maintenance: Physically deploying the sensors and establishing a routine for calibration, maintenance, and data quality checks.

This project successfully demonstrated the ability to monitor soil moisture across a large area, improving irrigation efficiency and crop yields. I also have experience designing networks for other applications, including smart buildings and industrial automation.
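The filtering and smoothing step mentioned above could look something like the following. The answer does not say which filter the project used, so the median filter here is an assumed choice, and `median_filter` and the sample values are hypothetical. A median filter is a common way to suppress isolated spikes in slowly changing signals such as soil moisture.

```python
import statistics

def median_filter(values, window=3):
    """Replace each sample with the median of a centred window.

    The window shrinks at the edges of the series instead of padding.
    """
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(statistics.median(values[lo:hi]))
    return smoothed

# Hypothetical soil moisture readings (percent) with one spurious spike.
raw = [10, 11, 50, 12, 13]
print(median_filter(raw))
```

A larger window removes longer glitches but also blurs genuine rapid changes, so the window size is a trade-off to tune per deployment.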

Q 24. Explain your understanding of different sensor data visualization techniques.

Sensor data visualization is essential for understanding patterns, trends, and anomalies. Effective visualization techniques depend heavily on the type of data and the insights we want to gain. Common techniques include:

- Time-series plots: Ideal for displaying data collected over time, revealing trends and patterns. These are crucial for monitoring sensor drift or identifying cyclical phenomena.

- Scatter plots: Useful for visualizing the relationship between two variables, helping identify correlations or dependencies between sensor readings.

- Heatmaps: Excellent for displaying data spatially, particularly useful in applications like environmental monitoring or mapping sensor readings across a geographical area.

- Histograms: Help to visualize the distribution of data, revealing the frequency of different values and identifying outliers or unusual readings.

- Geographic Information Systems (GIS) mapping: Incorporating sensor data onto maps is crucial for spatial applications. This provides a visual context, such as displaying temperature variations across a city using color-coded regions.

- 3D visualizations: For complex datasets with multiple dimensions, 3D visualizations can help unravel patterns and correlations not easily visible in 2D representations. For example, temperature, humidity, and wind speed can be plotted together for weather modeling.

The choice of visualization technique is crucial; poor visualization can obscure important insights or mislead the observer. A well-chosen visualization method effectively communicates the sensor data’s key features and facilitates quick data understanding.
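As a small illustration of how a histogram exposes outliers, here is a dependency-free sketch that bins readings and prints a text histogram. The function names, bin settings, and sample temperatures are hypothetical; in practice a plotting library would draw the same counts as bars.

```python
def histogram_counts(values, bin_width, start):
    """Count values per bin; bin k covers [start + k*w, start + (k+1)*w)."""
    counts = {}
    for v in values:
        k = int((v - start) // bin_width)
        counts[k] = counts.get(k, 0) + 1
    return dict(sorted(counts.items()))

def text_histogram(values, bin_width, start):
    """Render one line per non-empty bin, with one '#' per reading."""
    lines = []
    for k, count in histogram_counts(values, bin_width, start).items():
        left_edge = start + k * bin_width
        lines.append(f"{left_edge:6.1f} | {'#' * count}")
    return "\n".join(lines)

# Hypothetical temperature readings with one suspicious value.
temps = [20.1, 20.4, 20.6, 21.2, 20.9, 35.0]
print(text_histogram(temps, bin_width=1.0, start=20.0))
```

The isolated bin far from the main cluster is the kind of feature that prompts a closer look at the raw reading.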

Q 25. Describe your familiarity with machine learning techniques applied to sensor data analysis (e.g., classification, regression).

I have extensive experience applying machine learning techniques to analyze sensor data. This ranges from simple classification tasks to more complex regression and anomaly detection problems. For instance:

- Classification: I’ve used Support Vector Machines (SVMs) and Random Forests to classify sensor readings into different categories, such as identifying different types of machinery faults based on vibration sensor data. For example, `model.fit(training_data, training_labels)` would train an SVM model for fault detection.

- Regression: I’ve used linear regression and neural networks to predict continuous values, such as predicting future energy consumption based on smart meter data. For example, `prediction = model.predict(input_data)` would generate a prediction using a trained regression model.

- Anomaly Detection: I’ve used techniques like One-Class SVM and isolation forests to identify unusual or anomalous readings that might indicate equipment malfunction or security breaches. This is crucial for maintaining the integrity of sensor data and preventing errors from impacting decision-making.

- Clustering: I’ve used K-means clustering to group similar sensor readings, revealing underlying patterns or identifying different operational states.

The choice of machine learning algorithm depends on the specific problem, data characteristics, and desired outcome. My experience spans model selection, training, evaluation, and deployment – ensuring the chosen models are accurate, robust, and efficient.
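To make the fit/predict workflow concrete, here is a minimal, dependency-free sketch. It is not an SVM: a simple nearest-centroid classifier stands in so the example runs without any ML library, while keeping the same `fit` and `predict` shape. The class name, feature choice (RMS vibration and peak frequency), and all sample values are hypothetical.

```python
import math
from collections import defaultdict

class NearestCentroid:
    """Toy classifier: label each sample by its closest class centroid."""

    def fit(self, X, y):
        # Group training rows by label, then average each feature column.
        groups = defaultdict(list)
        for features, label in zip(X, y):
            groups[label].append(features)
        self.centroids_ = {
            label: [sum(column) / len(rows) for column in zip(*rows)]
            for label, rows in groups.items()
        }
        return self

    def predict(self, X):
        # Pick the label whose centroid has the smallest Euclidean distance.
        return [
            min(self.centroids_,
                key=lambda label: math.dist(features, self.centroids_[label]))
            for features in X
        ]

# Hypothetical features: [RMS vibration (g), peak frequency (Hz)].
training_data = [[0.2, 50.0], [0.3, 52.0], [1.5, 120.0], [1.7, 118.0]]
training_labels = ["normal", "normal", "bearing_fault", "bearing_fault"]

model = NearestCentroid().fit(training_data, training_labels)
print(model.predict([[0.25, 49.0], [1.4, 121.0]]))
```

With real data the features would normally be scaled first, since distance-based methods are dominated by whichever feature has the largest numeric range.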

Q 26. How do you ensure the ethical use of sensor data?

Ethical considerations are paramount when working with sensor data. My approach is guided by principles of privacy, transparency, and accountability. Specific actions include:

- Data Minimization: Collecting only the data needed for the specified purpose, which reduces the risk of privacy violations.

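- Data Anonymization and Pseudonymization: Implementing techniques to remove or replace personally identifiable information (PII) from the data while retaining its utility for analysis. Techniques such as keyed hashing of identifiers or, for aggregate statistics, differential privacy can be applied. Plain unsalted hashing is weak here, because predictable identifiers can be recovered by hashing candidate values.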

- Informed Consent: Obtaining informed consent from individuals whose data is being collected, ensuring they are aware of how their data will be used. This is crucial for transparency and respecting individual rights.

- Data Security: Implementing robust security measures to protect the data from unauthorized access, use, or disclosure. This includes encryption, access control, and regular security audits.

- Transparency and Explainability: Ensuring the data collection and analysis processes are transparent and the results are understandable and interpretable. This reduces potential biases and promotes trust.

- Compliance with Regulations: Adhering to all relevant data privacy regulations, such as GDPR and CCPA.

Ethical considerations are integrated throughout the sensor data lifecycle, from design and collection to analysis and disposal. It’s not just about complying with regulations; it’s about building trust and ensuring responsible use of technology.
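Two of the practices above, data minimization and pseudonymization, can be sketched with Python’s standard library. This is an illustrative sketch under stated assumptions: the field names, the `ALLOWED_FIELDS` set, and the key handling are hypothetical, and a real system would load the secret key from a secure store.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields the analysis actually needs.
ALLOWED_FIELDS = {"timestamp", "soil_moisture"}

def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, hex encoded)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize_and_pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the sensor ID for a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    cleaned["sensor_pseudonym"] = pseudonymize(record["sensor_id"], secret_key)
    return cleaned

# Hypothetical record; the key is hard-coded here only for illustration.
record = {
    "sensor_id": "sensor-042",
    "owner_name": "A. Farmer",
    "timestamp": 1700000000,
    "soil_moisture": 0.31,
}
print(minimize_and_pseudonymize(record, secret_key=b"example-key"))
```

The same sensor always maps to the same pseudonym under one key, so readings can still be grouped per device. Anyone holding the key can recompute the mapping, which is why this counts as pseudonymization and not anonymization.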

Q 27. Describe your experience with different sensor modeling and simulation techniques.

Sensor modeling and simulation are crucial for designing, testing, and optimizing sensor systems before deployment. My experience encompasses various techniques:

- Physical Modeling: Creating mathematical models based on the physical principles governing the sensor’s operation. For example, modeling the response of a temperature sensor based on heat transfer equations.

- Empirical Modeling: Developing models based on experimental data, using techniques like curve fitting or regression analysis to represent the sensor’s input-output relationship.

- Agent-Based Modeling: Simulating the behavior of a network of sensors and their interactions with the environment, useful for understanding system-level behavior and optimizing network design. This is often used for complex, distributed systems.

- Discrete Event Simulation: Modeling the timing and sequencing of events in a sensor system, important for understanding delays, throughput, and resource utilization. This is common for analyzing sensor network performance.

Tools such as MATLAB and Python libraries such as SimPy are frequently used for these purposes. Simulation allows us to test different scenarios, identify potential problems, and optimize system design without the cost and time of deploying a full-scale system. For example, several network topologies can be simulated and compared to find the best configuration for a specific deployment.
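A stripped-down discrete-event loop gives a feel for this kind of analysis. The sketch below does not use SimPy; a plain `heapq` event queue stands in so it runs with the standard library only. The scenario (sensors in a star topology sending packets to one gateway that handles a single packet at a time), the function name, and all timings are hypothetical.

```python
import heapq

def simulate_gateway(arrivals, service_time):
    """Process packet-arrival events in time order through a one-at-a-time gateway.

    arrivals: list of (arrival_time, sensor_id) tuples.
    Returns a list of (sensor_id, delay), where delay = completion - arrival.
    """
    events = list(arrivals)
    heapq.heapify(events)          # earliest event is always popped first
    gateway_free_at = 0.0
    delays = []
    while events:
        arrival_time, sensor_id = heapq.heappop(events)
        start = max(arrival_time, gateway_free_at)   # wait if the gateway is busy
        gateway_free_at = start + service_time
        delays.append((sensor_id, gateway_free_at - arrival_time))
    return delays

# Three sensors transmit at once, then one sends a later packet.
arrivals = [(0.0, "s1"), (0.0, "s2"), (0.0, "s3"), (5.0, "s1")]
for sensor_id, delay in simulate_gateway(arrivals, service_time=1.0):
    print(sensor_id, delay)
```

Even this small model shows queueing delay growing when transmissions are synchronized, which is the sort of effect a fuller simulation would quantify before choosing reporting intervals or adding gateways.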

Q 28. What are your career aspirations related to Sensor Exploitation?

My career aspirations in sensor exploitation center around leveraging advancements in AI and machine learning to develop more intelligent, autonomous, and ethically responsible sensor systems. I aim to:

- Develop novel algorithms for sensor data analysis: Pushing the boundaries of what’s possible with AI to extract more meaningful information from sensor data, leading to improved decision-making and process automation.

- Design robust and reliable sensor networks: Creating systems that are resilient to failures, adaptable to changing conditions, and capable of self-healing.

- Promote the ethical and responsible use of sensor technology: Leading the development of best practices and guidelines to ensure that sensor technology benefits society while minimizing potential risks and harms. This includes focusing on privacy, security, and transparency.

- Contribute to the advancement of the field: Sharing my knowledge and expertise through publications, presentations, and collaborations to advance the state-of-the-art in sensor exploitation.

Ultimately, my goal is to use sensor technology to address real-world challenges and improve people’s lives in a safe, ethical, and responsible way. This involves continuous learning, exploration of new technologies, and a strong commitment to responsible innovation.

Key Topics to Learn for Sensor Exploitation Interview

- Sensor Fundamentals: Understanding different sensor types (e.g., optical, acoustic, radar), their operating principles, limitations, and signal characteristics.

- Signal Processing Techniques: Mastering techniques like filtering, noise reduction, feature extraction, and signal classification relevant to sensor data.

- Data Fusion and Integration: Exploring methods to combine data from multiple sensors for improved accuracy and situational awareness. Kalman filtering and other data fusion algorithms are worth studying.

- Algorithm Development and Implementation: Practical experience in developing and implementing algorithms for sensor data analysis, potentially including experience with programming languages like Python or MATLAB.

- Sensor Calibration and Validation: Understanding the importance of accurate sensor calibration and techniques for validating sensor data quality and reliability.

- Cybersecurity in Sensor Systems: Knowledge of vulnerabilities and security considerations related to sensor networks and data integrity.

- Practical Applications: Discuss real-world applications of sensor exploitation in your field of interest (e.g., autonomous vehicles, environmental monitoring, defense systems). Be prepared to discuss specific projects and contributions.

- Problem-Solving and Analytical Skills: Highlight your ability to analyze complex sensor data, identify patterns, and draw meaningful conclusions. Prepare examples demonstrating your problem-solving abilities.

Next Steps

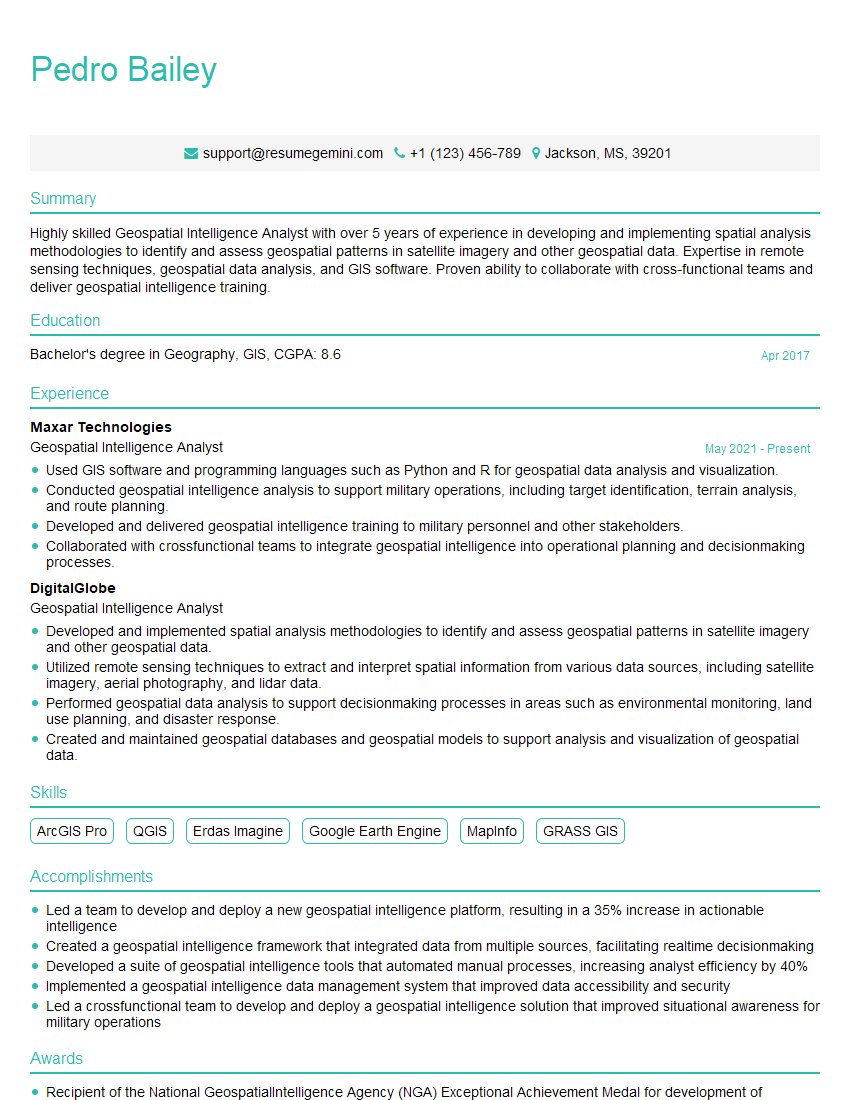

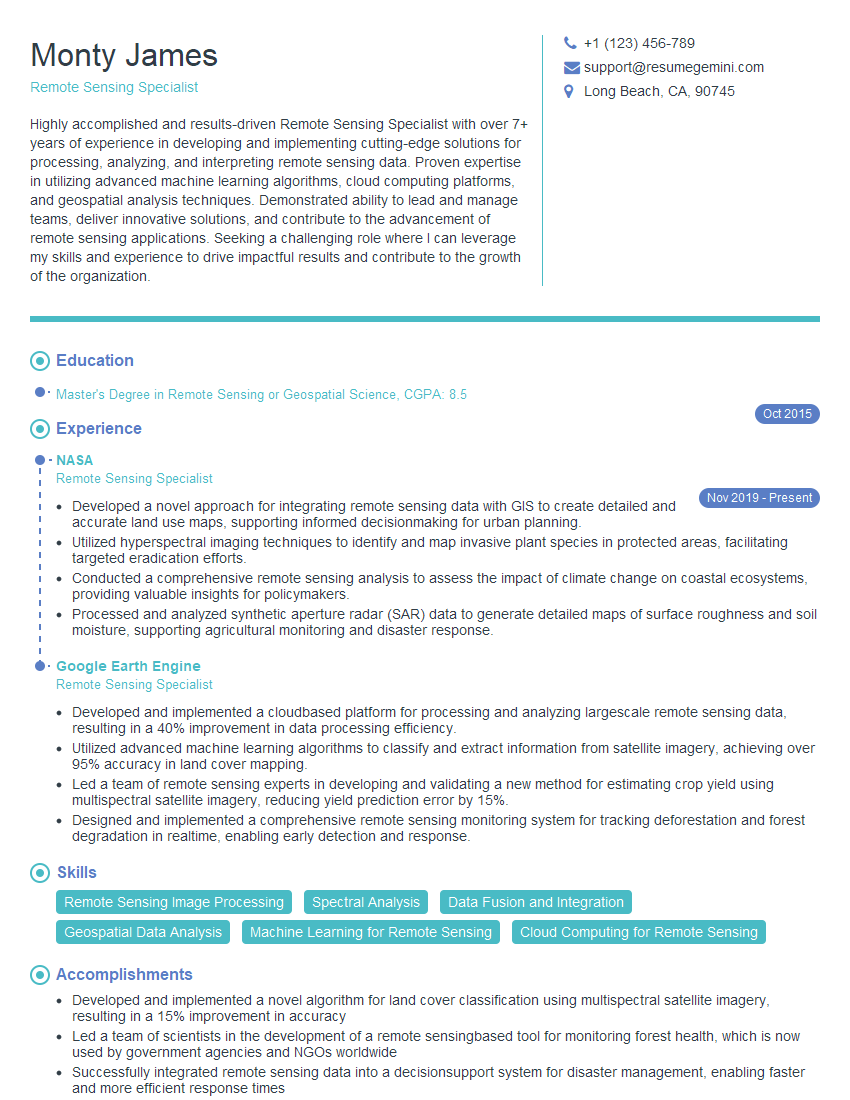

Mastering Sensor Exploitation opens doors to exciting and impactful careers in various high-growth sectors. To maximize your job prospects, crafting a strong, ATS-friendly resume is crucial. ResumeGemini can significantly enhance your resume-building experience, providing tools and resources to create a professional document that highlights your skills and achievements effectively. We provide examples of resumes tailored to Sensor Exploitation to guide you. Invest time in crafting a compelling resume – it’s your first impression with potential employers.
