Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Sensor Systems Exploitation interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Sensor Systems Exploitation Interview

Q 1. Explain the concept of sensor fusion and its benefits.

Sensor fusion is the process of integrating data from multiple sensors to obtain a more accurate, reliable, and comprehensive understanding of a system or environment than could be achieved using any single sensor alone. Think of it like having multiple witnesses to an event – each witness might have a slightly different perspective, but by combining their accounts, you get a much clearer picture of what happened.

Benefits of Sensor Fusion:

- Improved Accuracy: Combining data from different sensors mitigates individual sensor errors and uncertainties, leading to more precise estimations.

- Increased Reliability: If one sensor fails, others can compensate, ensuring continued operation and preventing system failures. This redundancy is crucial in safety-critical applications.

- Enhanced Coverage: Sensors with different capabilities can be used together to monitor a wider range of parameters or cover a larger area.

- Reduced Ambiguity: Combining data from sensors with different measurement principles helps resolve ambiguities and inconsistencies.

Example: In autonomous driving, sensor fusion combines data from cameras (visual perception and classification), lidar (precise 3D distance measurement), and radar (range and relative velocity, robust in rain and fog) to build a more complete and robust model of the vehicle’s surroundings than any one of these sensors could provide, supporting safe navigation.
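
A minimal sketch of why combining sensors improves accuracy: if two sensors give independent, unbiased estimates of the same quantity with known error variances, weighting each by the inverse of its variance yields a fused estimate whose variance is lower than either input. The function name and the radar/lidar numbers below are hypothetical, and real fusion systems (including the autonomous-driving case above) must also handle correlated errors, bias, and time alignment.

```python
def fuse_inverse_variance(estimates, variances):
    """Fuse independent, unbiased estimates of the same quantity.

    Each estimate is weighted by 1 / variance, so more precise sensors count more.
    Returns (fused_estimate, fused_variance).
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need matching, non-empty lists of estimates and variances")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * x for w, x in zip(weights, estimates)) / total
    # Fused variance is 1 / sum(1 / var_i): never larger than the smallest input variance.
    return fused, 1.0 / total


# Hypothetical range readings of one object: radar 50.4 m (variance 0.25 m^2),
# lidar 50.0 m (variance 0.01 m^2).
value, variance = fuse_inverse_variance([50.4, 50.0], [0.25, 0.01])
print(round(value, 3), round(variance, 4))  # 50.015 0.0096
```

The fused value sits close to the lidar reading because the lidar variance is assumed to be 25 times smaller, yet the radar still tightens the result slightly.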

Q 2. Describe different types of sensors and their applications.

Sensors come in a vast array of types, each designed for specific applications. Here are a few key categories:

- Acoustic Sensors: These detect sound waves, used in applications like microphones (speech recognition, audio monitoring), sonar (underwater navigation, object detection), and ultrasound (medical imaging, industrial sensing).

- Optical Sensors: These use light to measure various properties. Examples include cameras (image acquisition, object recognition), photodiodes (light intensity measurement), and spectrometers (chemical analysis).

- Inertial Sensors: These measure acceleration and angular rate, critical in applications like inertial navigation systems (GPS-independent positioning), motion tracking, and stabilization systems. Examples include accelerometers and gyroscopes.

- Magnetic Sensors: These detect magnetic fields, used in applications such as compasses (direction finding), magnetometers (geomagnetic surveys, mineral exploration), and magnetic encoders (position sensing).

- Thermal Sensors: These measure temperature, crucial in applications such as climate control, industrial process monitoring, and medical thermography. Examples include thermocouples and infrared cameras.

- Chemical Sensors: These detect the presence or concentration of specific chemicals, used in applications such as environmental monitoring, industrial process control, and medical diagnostics. Examples include gas sensors and pH sensors.

The application of a sensor depends greatly on the specific task. For instance, a self-driving car relies on a diverse suite of sensors, including cameras, lidar, and radar, to create a comprehensive ‘picture’ of its environment.

Q 3. How do you handle noisy sensor data?

Noisy sensor data is a common problem. Noise can be introduced due to various factors including electronic interference, environmental factors, or sensor limitations. Handling this noise is crucial for accurate analysis.

Techniques to handle noisy sensor data:

- Filtering: This involves using algorithms to smooth out the noise. Common filters include moving average filters, Kalman filters (optimal for linear dynamic systems with Gaussian noise), and median filters (robust to outliers).

- Data Smoothing: Techniques such as smoothing splines or Savitzky-Golay filtering can smooth the data while preserving important features such as peaks.

- Outlier Detection and Removal: Statistical methods such as Z-scores or the interquartile-range rule behind boxplots can identify and remove outliers caused by extreme noise or sensor malfunctions.

- Calibration and Compensation: Careful sensor calibration can reduce systematic errors and improve accuracy. Compensation techniques can correct known sources of noise.
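
The difference between the moving average and median filters listed above is easiest to see on a signal with a single spike. This sketch uses NumPy (`np.convolve` and `sliding_window_view`, available in NumPy 1.20 and later); the function names, window length, and signal values are illustrative assumptions. Both functions return only full-window outputs, so each result is `window - 1` samples shorter than the input.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def moving_average(x, window):
    """Mean over each full window (output length len(x) - window + 1)."""
    return np.convolve(x, np.ones(window) / window, mode="valid")


def median_filter(x, window):
    """Median over each full window; an isolated spike is rejected entirely."""
    return np.median(sliding_window_view(x, window), axis=-1)


# Hypothetical constant reading of 20.0 with one spurious spike (e.g., an electrical glitch).
signal = np.full(9, 20.0)
signal[4] = 95.0
print(moving_average(signal, 3))  # the spike is smeared: three outputs rise to about 45
print(median_filter(signal, 3))   # every output stays at 20.0
```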

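Example: A Kalman filter (in practice usually an extended or error-state variant, because the navigation equations are nonlinear) is often used in inertial navigation systems to combine noisy accelerometer and gyroscope data with GPS data to estimate position and velocity. The filter models how the system evolves over time and weights each measurement by its expected uncertainty, which limits both the impact of sensor noise and the drift that inertial sensors accumulate.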

Q 4. What are the common challenges in sensor data analysis?

Sensor data analysis presents several challenges:

- Noise and Uncertainty: As discussed previously, noisy sensor readings introduce errors and uncertainties into the analysis.

- Data Variability: Sensor readings can vary due to environmental conditions, sensor drift, or other factors, making consistent interpretation difficult.

- Data Volume and Velocity: Sensor networks can generate massive amounts of data at high speeds, requiring efficient data handling and processing techniques.

- Data Integration and Fusion: Integrating data from different sensors with varying formats, sampling rates, and accuracies can be challenging.

- Missing Data: Sensor failures or communication issues can lead to missing data, requiring imputation or other techniques to handle incomplete datasets.

- Real-time Processing Requirements: Many applications require real-time processing of sensor data, imposing constraints on algorithms and computational resources.

Addressing these challenges often involves a combination of advanced signal processing, machine learning, and robust data management techniques.
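
As one small example of the integration challenge, streams sampled at different rates must be placed on a common time base before they can be fused. The sketch below assumes NumPy, monotonically increasing timestamps in seconds, and a signal that varies slowly enough for linear interpolation to be reasonable; the function name and data are hypothetical.

```python
import numpy as np


def align_to_timestamps(t_src, values_src, t_target):
    """Linearly interpolate a source stream onto target timestamps.

    Target times outside the source time span are returned as NaN rather than
    extrapolated, so gaps stay visible to downstream code.
    """
    t_src = np.asarray(t_src, dtype=float)
    values_src = np.asarray(values_src, dtype=float)
    t_target = np.asarray(t_target, dtype=float)
    out = np.interp(t_target, t_src, values_src)
    out[(t_target < t_src[0]) | (t_target > t_src[-1])] = np.nan
    return out


# Hypothetical: a 10 Hz temperature stream resampled onto timestamps from a faster IMU.
t_temp = np.arange(11) / 10.0          # 0.0 ... 1.0 s
temp = 20.0 + 2.0 * t_temp             # slowly rising temperature
t_imu = np.array([0.0, 0.25, 0.5, 0.75, 1.25])
print(align_to_timestamps(t_temp, temp, t_imu))  # last value is NaN (outside the source span)
```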

Q 5. Explain different signal processing techniques used in sensor systems.

Signal processing techniques are fundamental to extracting meaningful information from sensor data. These techniques aim to enhance signals, remove noise, and extract relevant features.

- Filtering: As discussed in Q3, various types of filters are used to remove noise and unwanted frequency bands.

- Fourier Transform: This mathematical tool decomposes signals into their frequency components, allowing for the identification of dominant frequencies and the removal of noise based on frequency characteristics.

- Wavelet Transform: Like the Fourier Transform, it decomposes a signal into components, but because its basis functions are localized in time, it is better suited for analyzing non-stationary signals (signals whose characteristics change over time).

- Time-Frequency Analysis: Techniques like short-time Fourier transform (STFT) and Wigner-Ville distribution are used to analyze signals that change their frequency content over time.

- Feature Extraction: Techniques such as Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA, which uses class labels) reduce the dimensionality of the data while preserving the most informative features.

Example: In speech recognition, the short-time Fourier transform is applied to successive short frames of the audio signal to produce a spectrogram (and derived features such as mel-frequency cepstral coefficients), from which phonemes (the basic units of sound) can be identified.
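
A minimal NumPy sketch of the Fourier-transform idea: find the dominant frequency in a sampled signal. It analyzes a single window assumed to be stationary (speech systems repeat this over short frames to build a spectrogram); the sampling rate, tone frequencies, and noise level are made-up values.

```python
import numpy as np


def dominant_frequency(x, fs):
    """Return the frequency (Hz) of the largest peak in the one-sided magnitude spectrum."""
    x = np.asarray(x, dtype=float)
    spectrum = np.abs(np.fft.rfft(x - x.mean()))  # subtract the mean so DC does not win
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    return freqs[np.argmax(spectrum)]


# Hypothetical vibration signal: strong 50 Hz tone, weaker 120 Hz tone, plus noise,
# sampled at 1 kHz for 1 s (so the frequency bins are 1 Hz apart).
fs = 1000.0
t = np.arange(1000) / fs
rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 50 * t) + 0.3 * np.sin(2 * np.pi * 120 * t) + 0.2 * rng.standard_normal(t.size)
print(dominant_frequency(x, fs))
```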

Q 6. How do you perform sensor calibration and validation?

Sensor calibration and validation are critical steps to ensure accuracy and reliability. Calibration involves adjusting the sensor’s output to match known input values, while validation involves verifying the accuracy and precision of the calibrated sensor.

Sensor Calibration:

- Two-point calibration: The sensor is calibrated at two known input values (e.g., near the minimum and maximum of its range), which corrects offset and gain errors for a sensor with a linear response.

- Multi-point calibration: This involves calibrating the sensor at multiple known input values, providing a more accurate calibration curve.

- Factory calibration: Many sensors undergo initial calibration during manufacturing.

- In-situ calibration: This involves calibrating the sensor in its operational environment.

Sensor Validation:

- Reference comparison: Comparing sensor readings with simultaneous readings from a co-located reference sensor of known, higher accuracy.

- Accuracy testing: Measuring the deviation between the sensor’s readings and known values.

- Precision testing: Assessing the repeatability of the sensor’s readings under identical conditions.

- Environmental testing: Evaluating the sensor’s performance under different environmental conditions.

Example: A temperature sensor might be calibrated using a known standard temperature source, like a calibrated water bath. Validation would involve comparing its readings to a traceable standard over a range of temperatures.
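
The water-bath example can be sketched in a few lines: fit gain and offset from two reference points, then validate on reference points that were not used for the fit. The function names and bath readings are hypothetical, and the sketch assumes the sensor response is linear between the calibration points; a nonlinear sensor would need the multi-point approach.

```python
import math


def two_point_calibration(raw_low, ref_low, raw_high, ref_high):
    """Return (gain, offset) mapping raw readings onto the reference scale.

    Assumes the sensor responds linearly between the two calibration points.
    """
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return gain, offset


def validate(raw_readings, reference, gain, offset):
    """Apply the calibration and report (mean bias, RMSE) against reference values."""
    errors = [gain * r + offset - ref for r, ref in zip(raw_readings, reference)]
    bias = sum(errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return bias, rmse


# Hypothetical bath points: the sensor reads 0.8 at 0.0 deg C and 101.5 at 100.0 deg C.
gain, offset = two_point_calibration(0.8, 0.0, 101.5, 100.0)
# Validate at intermediate bath temperatures that were not used for calibration.
bias, rmse = validate([25.9, 50.9, 76.2], [25.0, 50.0, 75.0], gain, offset)
print(f"gain={gain:.4f} offset={offset:.4f} bias={bias:.3f} rmse={rmse:.3f}")
```

Mean bias reveals any remaining systematic error, while RMSE summarizes overall agreement; a large RMSE with near-zero bias points to noise or nonlinearity rather than an offset.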

Q 7. Discuss various sensor network architectures.

Sensor network architectures describe how sensors are interconnected and communicate with each other and a central processing unit. The choice of architecture depends on factors like the application’s requirements, the geographical distribution of sensors, and the power and communication constraints.

- Star Network: All sensors connect directly to a central node. Simple to manage but a single point of failure.

- Bus Network: Sensors are connected in a linear fashion along a shared communication bus. Cost-effective, but bandwidth is shared among all nodes, and a fault in the bus itself can take down every sensor on it.

- Mesh Network: Sensors connect to multiple other sensors, providing redundancy and robustness. More complex to manage but highly resilient.

- Tree Network: Sensors are organized hierarchically, with leaf nodes reporting to intermediate nodes (such as cluster heads) that forward data toward a root node. Combines aspects of star and bus networks.

- Wireless Sensor Networks (WSNs): Use wireless links to connect sensors (in star, tree, or mesh topologies), offering flexibility and scalability at the cost of limited bandwidth, power constraints on battery-operated nodes, and susceptibility to interference.

Example: A smart home security system might utilize a star network where all sensors (door sensors, motion detectors) connect to a central hub. A large-scale environmental monitoring system might employ a wireless mesh network for widespread coverage and robustness against sensor failures.

Q 8. Describe your experience with specific sensor systems (e.g., radar, LiDAR).

My experience encompasses a wide range of sensor systems, with a particular focus on radar and LiDAR technologies. In my previous role, I worked extensively with X-band radar for object detection and tracking in challenging environments, such as dense urban areas and adverse weather conditions. This involved calibrating the radar system, processing the raw signal data to filter noise and isolate relevant targets, and developing algorithms for target classification and trajectory prediction. I’ve also worked extensively with LiDAR, primarily using data from Velodyne and Ouster sensors. My work here focused on point cloud processing techniques, including noise filtering, segmentation, and 3D reconstruction for applications like autonomous driving and high-precision mapping. This included experience with various data formats like LAS and PCD and familiarity with popular processing libraries such as PCL (Point Cloud Library). One specific project involved developing a real-time obstacle detection system for a self-driving car prototype using a combination of radar and LiDAR data fusion techniques.

Q 9. How do you ensure the security and integrity of sensor data?

Ensuring the security and integrity of sensor data is paramount, and my approach is multi-layered. First, physical security is crucial: protecting the sensors themselves from tampering, damage, or unauthorized access through secure mounting and environmental protection. Second, the communication channels between the sensors and the data acquisition system should be encrypted and authenticated, and data integrity must be protected during transmission and storage. Checksums (such as CRCs) detect accidental corruption, while message authentication codes and digital signatures verify authenticity and detect deliberate alteration. Encrypting data both in transit and at rest protects sensitive information from unauthorized access. Third, robust data validation and anomaly detection methods, such as statistical process control or machine learning-based anomaly detection, identify and flag potentially compromised or erroneous data. Regular security audits and penetration testing are also critical for finding vulnerabilities and addressing threats proactively. Think of it like protecting a bank vault: multiple layers of security working together to minimize risk.
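
As one concrete integrity mechanism, the sketch below tags each reading with an HMAC-SHA256 code using Python’s standard `hmac` and `hashlib` modules, so a receiver holding the same secret key can detect alterations. Note that an HMAC is a shared-key message authentication code, not a digital signature (which uses a private/public key pair) and not a plain checksum (which detects accidental corruption only). The key, field names, and JSON encoding here are illustrative assumptions.

```python
import hashlib
import hmac
import json


def sign_reading(key: bytes, reading: dict) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the reading."""
    # sort_keys and fixed separators make the byte encoding independent of dict order.
    payload = json.dumps(reading, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_reading(key: bytes, reading: dict, tag: str) -> bool:
    """Recompute the tag and compare in constant time to avoid timing leaks."""
    return hmac.compare_digest(sign_reading(key, reading), tag)


# Hypothetical shared key and reading; real keys belong in secure storage, not source code.
key = b"example-shared-key"
reading = {"sensor_id": "T-17", "timestamp": 1700000000, "value": 21.4}
tag = sign_reading(key, reading)
print(verify_reading(key, reading, tag))                     # True
print(verify_reading(key, {**reading, "value": 35.0}, tag))  # False: the value was altered
```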

Q 10. Explain the concept of sensor placement optimization.

Sensor placement optimization is crucial for maximizing data quality and minimizing unnecessary redundancy. The goal is to strategically position sensors to achieve optimal coverage, minimize blind spots, and reduce interference. This is often a complex optimization problem that requires considering factors such as sensor range, field of view, the environment’s geometry (e.g., obstacles), and the specific application requirements. For example, in a surveillance scenario, you might use Voronoi diagrams to identify coverage gaps and centroidal Voronoi tessellations to distribute sensors evenly across the area, while in a robotic navigation application, placement might be informed by simulations and path planning algorithms. Often, this involves using mathematical modeling and simulation tools to explore different placement scenarios and select the one that best meets the performance criteria. Metaheuristics such as genetic algorithms or simulated annealing can also be effective for finding near-optimal solutions in complex scenarios.
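
The optimization methods named above (Voronoi-based layouts, genetic algorithms, simulated annealing) are beyond a short example, so this sketch swaps in a simpler, well-known heuristic: greedy maximum coverage, which repeatedly picks the candidate site that covers the most still-uncovered target points. It assumes a disc-shaped sensing footprint with no occlusion; the function name, grid, range, and sensor count are hypothetical.

```python
import math


def greedy_placement(candidates, targets, radius, k):
    """Greedily choose up to k candidate sites that cover the most uncovered targets.

    A target counts as covered if it lies within `radius` of a chosen site
    (a simple disc sensing model that ignores occlusion and terrain).
    Returns (chosen_sites, number_of_targets_covered).
    """
    def covers(site, point):
        return math.dist(site, point) <= radius

    uncovered = set(range(len(targets)))
    chosen = []
    for _ in range(k):
        best, best_gain = None, 0
        for site in candidates:
            if site in chosen:
                continue
            gain = sum(1 for i in uncovered if covers(site, targets[i]))
            if gain > best_gain:
                best, best_gain = site, gain
        if best is None:  # no remaining site adds any coverage
            break
        chosen.append(best)
        uncovered -= {i for i in uncovered if covers(best, targets[i])}
    return chosen, len(targets) - len(uncovered)


# Hypothetical 10 m x 10 m area sampled on a 1 m grid; candidate mounts every 3 m; 2.5 m range.
targets = [(x, y) for x in range(10) for y in range(10)]
candidates = [(x, y) for x in range(0, 10, 3) for y in range(0, 10, 3)]
sites, covered = greedy_placement(candidates, targets, radius=2.5, k=4)
print(sites, f"{covered}/{len(targets)} targets covered")
```

Greedy selection is fast and easy to explain, but it is not guaranteed to find the best layout, which is where the more elaborate methods above earn their keep.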

Q 11. How do you evaluate the performance of a sensor system?

Evaluating sensor system performance involves several key metrics, depending on the specific application: accuracy, precision, recall, and F1-score for classification tasks; root mean squared error (RMSE) or mean absolute error (MAE) for regression tasks; detection range, false positive rate, and false negative rate for detection systems; and spatial and temporal resolution for imaging systems. We also consider robustness to noise, interference, and environmental factors. A thorough evaluation includes testing under various conditions, comparing performance against benchmarks, and analyzing the trade-offs between metrics. For instance, lowering a detector’s threshold to extend its detection range typically also raises the false positive rate. Plotting the receiver operating characteristic (ROC) curve, which shows the true positive rate against the false positive rate across thresholds, helps visualize this trade-off and choose an operating point. A/B testing is often used to compare the performance of different sensor systems or algorithms.
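
The ROC trade-off can be computed directly from detector scores and ground-truth labels. This plain-Python sketch assumes that a detection is declared when the score is at or above the threshold and that both classes are present; the area under the curve is computed with the trapezoidal rule. The scores and labels in the usage example are made up.

```python
def roc_points(scores, labels):
    """Return (false positive rate, true positive rate) points, one per distinct threshold.

    labels are 1 (true target) or 0 (clutter); a detection is declared when score >= threshold.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative example")
    points = [(0.0, 0.0)]  # threshold above every score: nothing detected
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical detector scores for eight events with known ground truth.
scores = [0.95, 0.9, 0.8, 0.7, 0.55, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 1, 0, 0]
pts = roc_points(scores, labels)
print(pts)
print("AUC:", auc(pts))
```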

Q 12. What are the ethical considerations related to sensor systems exploitation?

Ethical considerations in sensor systems exploitation are significant and require careful attention. Privacy is a primary concern. The use of sensors to collect personal data raises concerns about potential surveillance and misuse of information. It’s crucial to establish clear guidelines on data collection, storage, and usage, ensuring compliance with privacy regulations and ethical standards. Bias and fairness are also important considerations. Sensor systems can reflect and amplify existing biases in data, leading to unfair or discriminatory outcomes. It is vital to actively mitigate bias during data collection, processing, and analysis. Transparency and accountability are essential. Users should be informed about how sensor data is being collected, used, and protected. This includes clear communication about the purpose of the sensors, the types of data being collected, and the measures taken to safeguard privacy and security. We must always consider the potential societal impact of our work and act responsibly.

Q 13. Describe your experience with data visualization and presentation techniques.

I have extensive experience in data visualization and presentation techniques. I am proficient in using tools such as MATLAB, Python (with libraries like Matplotlib, Seaborn, and Plotly), and Tableau to create informative and engaging visualizations. My approach is guided by the principle of clarity and effective communication. I tailor my visualizations to the specific audience and the message I want to convey, selecting appropriate chart types, color palettes, and annotations. For example, for presenting sensor data trends over time, I might use line charts or area charts. For showing spatial relationships, I might use maps or 3D visualizations. For comparing different sensor systems or algorithms, bar charts or box plots are frequently used. Interactive dashboards are often employed to allow users to explore the data dynamically. The goal is to translate complex technical data into easily understandable and actionable insights.

Q 14. How do you handle missing or incomplete sensor data?

Handling missing or incomplete sensor data is a common challenge, and the approach depends on the nature and extent of the missing data. For small gaps, simple imputation techniques such as mean imputation or linear interpolation might suffice. Larger amounts of missing data require more sophisticated methods, such as k-Nearest Neighbors (k-NN) imputation, expectation-maximization (EM) algorithms, or model-based imputation. The choice of method depends on factors like the type of data, the pattern of missingness (missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR)), and how sensitive the analysis is to missing data. In some cases, it is better to exclude data points with missing values or to use algorithms that are robust to missing data. For example, in machine learning applications, multiple imputation can carry the uncertainty of the filled-in values through the analysis, and some algorithms, such as certain gradient-boosted tree implementations, can learn directly from data containing missing values. Care must be taken to ensure that imputation does not introduce bias or artifacts.
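
A minimal sketch of the "small gaps only" case, assuming NumPy, uniformly sampled data, and missing samples encoded as NaN: short interior gaps are filled by linear interpolation, while long gaps and gaps at either end are left as NaN for a more careful method (or exclusion). The `max_gap` threshold is a hypothetical tuning parameter.

```python
import numpy as np


def interpolate_short_gaps(values, max_gap):
    """Linearly interpolate NaN runs of length <= max_gap; leave longer runs as NaN.

    Leading and trailing NaNs are never filled, since interpolation needs a
    valid sample on both sides of the gap.
    """
    x = np.asarray(values, dtype=float).copy()
    isnan = np.isnan(x)
    idx = np.arange(x.size)
    if (~isnan).sum() < 2:
        return x
    filled = np.interp(idx, idx[~isnan], x[~isnan])
    # Walk through each run of NaNs and decide whether it is short enough to fill.
    i = 0
    while i < x.size:
        if isnan[i]:
            j = i
            while j < x.size and isnan[j]:
                j += 1
            interior = i > 0 and j < x.size
            if interior and (j - i) <= max_gap:
                x[i:j] = filled[i:j]
            i = j
        else:
            i += 1
    return x


# Hypothetical series with a 1-sample gap, a 3-sample gap, and a trailing gap.
series = [1.0, np.nan, 3.0, np.nan, np.nan, np.nan, 7.0, np.nan]
print(interpolate_short_gaps(series, max_gap=2))  # only the 1-sample gap is filled
```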

Q 15. Explain your experience with specific sensor data formats (e.g., NetCDF, HDF5).

My experience encompasses a wide range of sensor data formats, with a strong focus on NetCDF and HDF5. NetCDF (Network Common Data Form) is excellent for handling gridded, multidimensional data common in environmental monitoring, like atmospheric or oceanographic data. I’ve used it extensively in projects involving satellite imagery analysis, where its ability to efficiently store and manage large datasets with associated metadata is crucial. For instance, I worked on a project analyzing sea surface temperature data from multiple satellites, leveraging NetCDF’s capabilities for data aggregation and analysis. HDF5 (Hierarchical Data Format version 5), on the other hand, offers a more flexible hierarchical structure, making it ideal for complex datasets with heterogeneous data types. I’ve utilized HDF5 in projects involving sensor fusion, where data from various sensors (e.g., lidar, radar, cameras) need to be integrated and analyzed together. The hierarchical structure allowed efficient organization and retrieval of data from different sources.

Both formats provide robust support for metadata, which is crucial for data provenance and understanding sensor characteristics, calibration parameters, and measurement uncertainties – all essential for rigorous data analysis.

Q 16. What programming languages and tools are you proficient in for sensor data analysis?

My proficiency in programming languages for sensor data analysis centers around Python and MATLAB. Python, with its rich ecosystem of libraries like NumPy, SciPy, Pandas, and scikit-learn, is my primary tool. I frequently use NumPy for efficient numerical computations, Pandas for data manipulation and analysis, and SciPy for scientific algorithms and signal processing. Scikit-learn provides a comprehensive suite of machine learning tools. For example, I used Python to develop a pipeline for processing hyperspectral imagery, including atmospheric correction, feature extraction, and classification using support vector machines.

MATLAB is particularly useful for its signal processing toolbox and visualization capabilities. I’ve used it for analyzing time-series data from accelerometers and gyroscopes, performing Fourier transforms, and developing custom signal processing algorithms. I often combine Python and MATLAB for different stages of a project, leveraging the strengths of each language.

In terms of tools, I’m adept at using GIS software like ArcGIS for spatial data analysis and visualization, and I also have experience with data visualization tools like Matplotlib and Seaborn in Python.

Q 17. Describe your experience with machine learning algorithms for sensor data analysis.

I have extensive experience applying machine learning algorithms to various sensor data analysis tasks. My experience includes supervised learning techniques like Support Vector Machines (SVMs), Random Forests, and neural networks, as well as unsupervised methods like clustering (k-means, DBSCAN) and dimensionality reduction (PCA).

For example, I used Random Forests to classify different types of land cover from multispectral imagery. The ability of Random Forests to handle high-dimensional data and provide feature importance estimations proved invaluable in this context. In another project, I employed a Convolutional Neural Network (CNN) to detect anomalies in sensor data streams, significantly improving the efficiency of real-time monitoring compared to traditional methods. I also have experience with deep learning architectures like Recurrent Neural Networks (RNNs) for sequential data analysis, particularly useful for time series predictions from sensor data.

Model selection and evaluation are critical; I routinely employ techniques like cross-validation and performance metrics (precision, recall, F1-score, AUC) to rigorously evaluate the performance of different algorithms and ensure the robustness of my models.
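
Cross-validation itself is simple to sketch. The example below uses NumPy and an ordinary least-squares model as a stand-in for the classifiers and networks mentioned above; in practice, scikit-learn’s `KFold` and `cross_val_score` provide this procedure. The function name, fold count, and synthetic data are illustrative assumptions.

```python
import numpy as np


def kfold_rmse(X, y, k=5, seed=0):
    """Return mean and standard deviation of per-fold test RMSE for a least-squares fit."""
    X = np.asarray(X, dtype=float).reshape(len(y), -1)  # ensure 2-D: (samples, features)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    scores = []
    for test_idx in folds:
        # Train on every sample that is not in the held-out fold.
        train_idx = np.setdiff1d(np.arange(len(y)), test_idx)
        A_train = np.column_stack([X[train_idx], np.ones(len(train_idx))])
        coef, *_ = np.linalg.lstsq(A_train, y[train_idx], rcond=None)
        # Score only on the held-out fold, which the model never saw.
        A_test = np.column_stack([X[test_idx], np.ones(len(test_idx))])
        residual = A_test @ coef - y[test_idx]
        scores.append(np.sqrt(np.mean(residual ** 2)))
    return float(np.mean(scores)), float(np.std(scores))


# Hypothetical data: response = 2 * input + 1 plus Gaussian noise with std 0.5.
rng = np.random.default_rng(42)
inputs = rng.uniform(0.0, 10.0, size=(200, 1))
response = 2.0 * inputs[:, 0] + 1.0 + rng.normal(0.0, 0.5, size=200)
mean_rmse, std_rmse = kfold_rmse(inputs, response, k=5)
print(f"CV RMSE: {mean_rmse:.3f} +/- {std_rmse:.3f}")
```

The spread across folds is as informative as the mean: a large standard deviation suggests the model’s performance depends heavily on which data it happens to see.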

Q 18. How do you identify and mitigate sensor biases?

Identifying and mitigating sensor biases is crucial for ensuring the accuracy and reliability of sensor data. My approach involves a multi-step process. First, I thoroughly examine sensor specifications and calibration procedures to understand potential sources of bias. This often includes reviewing the sensor’s datasheet and any available calibration reports.

Second, I employ statistical methods to identify systematic errors. Residual analysis against a reference, together with histograms and scatter plots, can reveal potential biases. For example, if a temperature sensor consistently reads a few degrees lower than a co-located reference, I’d investigate whether there’s a systematic offset that can be corrected.

Mitigation strategies vary depending on the nature of the bias. For systematic biases, I often apply calibration techniques, such as correcting for known offsets or applying linear or non-linear transformations based on empirical data or models. For random errors, I might use filtering to reduce noise or robust statistical methods that are less sensitive to outliers. In some cases, fusing data from multiple sensors with different biases can improve overall accuracy. Throughout, data quality assessment is essential, including documenting how each bias was identified, the mitigation steps taken, and the resulting uncertainties.
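
For the constant-offset case in the temperature example, a minimal sketch estimates the mean difference between the sensor and a co-located reference, with an approximate 95% confidence interval to judge whether the offset is real rather than noise. It assumes paired readings with independent errors and uses a normal approximation; gain errors would require a regression fit instead. The function names are hypothetical.

```python
import math
import statistics


def estimate_offset(sensor, reference, z=1.96):
    """Estimate a constant offset (sensor - reference) with an approximate 95% CI.

    Assumes paired, co-located readings with independent errors; the normal
    approximation is reasonable only for a moderately large number of pairs.
    """
    diffs = [s - r for s, r in zip(sensor, reference)]
    mean = statistics.fmean(diffs)
    sem = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return mean, (mean - z * sem, mean + z * sem)


def correct_offset(readings, offset):
    """Subtract an estimated constant offset from raw readings."""
    return [x - offset for x in readings]


# Hypothetical paired data: the sensor reads about 2 degrees low, with small random error.
reference = [20.0 + 0.1 * i for i in range(40)]
sensor = [r - 2.0 + (0.1 if i % 2 else -0.1) for i, r in enumerate(reference)]
offset, (low, high) = estimate_offset(sensor, reference)
print(f"offset {offset:.3f}, 95% CI [{low:.3f}, {high:.3f}]")
if not low <= 0.0 <= high:  # the interval excludes zero, so treat the offset as real
    corrected = correct_offset(sensor, offset)
```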

Q 19. Explain the difference between active and passive sensors.

The key difference between active and passive sensors lies in how they acquire data. Passive sensors measure energy emanating from the target or environment, such as light, heat, or sound. They simply ‘listen’ or ‘observe.’ Examples include cameras (measuring reflected light), thermal imagers (measuring infrared radiation), and microphones (measuring sound waves).

Active sensors, on the other hand, emit energy and then measure the energy reflected or scattered back to them. They actively ‘interrogate’ the environment. Examples include radar (emitting radio waves), lidar (emitting laser light), and sonar (emitting sound waves). Because they provide their own illumination, active sensors work independently of ambient light and can measure range directly from the echo’s travel time; some, notably radar, can also penetrate obscurants such as fog and smoke, although lidar is degraded by them. However, active sensors are usually more complex and expensive, require more power than passive sensors, and their emissions can reveal their presence.

The choice between active and passive sensors depends on the specific application and requirements. For instance, if the goal is covert surveillance, a passive sensor is preferred; however, if accurate range measurements are crucial, an active sensor is usually the better choice.
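
The ranging advantage of active sensors follows from time of flight: an emitted pulse travels to the target and back, so range equals propagation speed times round-trip time divided by two. The sketch below applies this to a radio or laser pulse and to an ultrasonic pulse in air; the speed-of-sound formula is a common linear approximation for dry air, and the echo times are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s (exact by SI definition)


def tof_range(round_trip_time_s, propagation_speed):
    """Range in metres from a round-trip echo time; the pulse travels out and back, hence / 2."""
    return propagation_speed * round_trip_time_s / 2.0


def speed_of_sound_air(temp_c):
    """Approximate speed of sound in dry air (m/s), linear in temperature (deg C)."""
    return 331.3 + 0.606 * temp_c


# Radar or lidar: a 1 microsecond round trip corresponds to roughly 150 m.
print(tof_range(1e-6, SPEED_OF_LIGHT))
# Ultrasonic: the same 10 ms echo maps to different ranges at 0 deg C and 30 deg C,
# which is why ultrasonic ranging may need temperature compensation.
print(tof_range(0.010, speed_of_sound_air(0.0)), tof_range(0.010, speed_of_sound_air(30.0)))
```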

Q 20. Discuss your experience with sensor data modeling and simulation.

Sensor data modeling and simulation are integral to my workflow. I use models to predict sensor performance under different conditions, to design optimal sensor configurations, and to test data processing algorithms before deploying them in real-world scenarios.

I’ve developed models using both analytical approaches and empirical data fitting. Analytical models, based on physical principles, allow for a deeper understanding of sensor behavior, but often require simplifying assumptions. Empirical models, derived from experimental data, can be more accurate for specific conditions but lack generalizability.

Simulation tools like MATLAB’s Simulink have been instrumental in this process, allowing me to simulate sensor responses and test different data processing algorithms in a virtual environment. For instance, I simulated the behavior of a network of sensors in a large-scale environmental monitoring project to optimize sensor placement and communication protocols. Accurate sensor models are essential for generating synthetic data to supplement limited real-world datasets, especially for training machine learning models.
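
A minimal sketch of generating synthetic data from a sensor error model, using NumPy. The model (constant bias, linear drift, white Gaussian noise, optional quantization) is a deliberately simple assumption for illustration; a real sensor model would be derived from datasheets or characterization tests, and all parameter values here are hypothetical.

```python
import numpy as np


def simulate_sensor(true_signal, fs, bias=0.0, drift_per_s=0.0, noise_std=0.0,
                    quant_step=None, seed=0):
    """Generate synthetic readings from a true signal with a simple error model.

    reading = true + bias + drift_per_s * t + white Gaussian noise, optionally
    rounded to the nearest multiple of quant_step (an ideal quantizer).
    """
    true_signal = np.asarray(true_signal, dtype=float)
    t = np.arange(true_signal.size) / fs  # sample times in seconds
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, noise_std, true_signal.size)
    readings = true_signal + bias + drift_per_s * t + noise
    if quant_step is not None:
        readings = np.round(readings / quant_step) * quant_step
    return readings


# Hypothetical gyroscope at rest (true rate 0 deg/s) sampled at 100 Hz for 60 s.
truth = np.zeros(6000)
gyro = simulate_sensor(truth, fs=100.0, bias=0.05, drift_per_s=0.001,
                       noise_std=0.1, quant_step=0.01)
print(gyro[:5])
```

Because the true signal is known exactly, synthetic data like this can be used to check whether a processing algorithm (for example, a bias estimator or a filter) recovers what was injected.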

Q 21. How do you handle real-time sensor data streams?

Handling real-time sensor data streams requires efficient data ingestion, processing, and analysis techniques. The key is to design a system that can process data at the rate it is being generated without introducing significant latency. This often involves using high-performance computing resources and parallel processing techniques.

I typically use a streaming platform such as Apache Kafka to ingest and buffer incoming sensor data, and a stream processing framework such as Apache Flink for real-time filtering and aggregation. Then, I leverage efficient data structures and algorithms, often using libraries like NumPy in Python, to perform real-time analysis, anomaly detection, and visualization. For instance, I developed a real-time system to monitor structural health using sensor data from embedded devices on a bridge. This system employed a combination of data streaming, signal processing, and machine learning algorithms to detect potential structural issues promptly.

Scalability and fault tolerance are crucial considerations. The system needs to be able to handle increasing data volumes and maintain operations even if some sensors fail. Robust error handling and logging mechanisms are integral to the design of any real-time sensor data processing system.
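
As an illustration of the per-sample analysis that might run inside such a pipeline, this plain-Python sketch flags readings that deviate strongly from a sliding window of recent values, using constant memory per sensor. It does not use the Kafka or Flink APIs; the class name, window length, and threshold are hypothetical, and a rolling z-score is only one simple choice of anomaly detector.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Flag samples that deviate strongly from a sliding window of recent samples.

    Running sums give O(1) work per sample. Flagged samples are not added to the
    window, so a burst of outliers does not immediately inflate the baseline.
    """

    def __init__(self, window=100, threshold=4.0, min_samples=10):
        self.buf = deque()
        self.window = window
        self.threshold = threshold
        self.min_samples = min_samples
        self.total = 0.0
        self.total_sq = 0.0

    def update(self, x):
        """Process one sample; return True if it is flagged as anomalous."""
        n = len(self.buf)
        if n >= self.min_samples:
            mean = self.total / n
            # Clamp tiny negative values caused by floating-point cancellation.
            std = math.sqrt(max(self.total_sq / n - mean * mean, 0.0))
            if std > 0 and abs(x - mean) / std > self.threshold:
                return True
        self.buf.append(x)
        self.total += x
        self.total_sq += x * x
        if len(self.buf) > self.window:  # evict the oldest sample
            old = self.buf.popleft()
            self.total -= old
            self.total_sq -= old * old
        return False


# Hypothetical strain-gauge stream: small oscillation around 10.1, then one spike.
detector = RollingZScoreDetector(window=50, threshold=4.0)
stream = [10.0, 10.2] * 30 + [15.0, 10.1]
flags = [detector.update(x) for x in stream]
print([i for i, f in enumerate(flags) if f])  # [60]
```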

Q 22. Describe your experience with sensor system integration and testing.

Sensor system integration and testing is a crucial phase encompassing the seamless merging of diverse sensor types, data acquisition units, and processing algorithms into a functional system. My experience spans various projects, from designing environmental monitoring networks involving numerous soil moisture and temperature sensors, to integrating complex navigation systems incorporating GPS, IMU, and LiDAR data. Testing involves rigorous validation at each stage: unit testing (individual sensor performance), integration testing (interoperability between components), and system testing (overall system functionality under various conditions). We use a combination of simulated and real-world data to stress-test the system’s robustness, identify vulnerabilities, and fine-tune parameters for optimal performance. For example, in one project involving an autonomous underwater vehicle (AUV), we simulated various ocean current conditions to validate the AUV’s navigation system’s accuracy and reliability. This iterative process ensures a robust and reliable final product.

Q 23. What are the limitations of various sensor technologies?

Every sensor technology has inherent limitations. For instance, optical sensors (e.g., cameras) are sensitive to variations in lighting conditions, can be obscured by fog or dust, and have limited range. Infrared sensors, while effective for detecting heat signatures, are degraded by atmospheric conditions, and their temperature readings depend on surface emissivity, so shiny, low-emissivity materials such as polished metal are measured poorly. Ultrasonic sensors, commonly used for obstacle detection, struggle with soft, sound-absorbing materials and with smooth surfaces at oblique angles that deflect the echo away from the sensor, and they are prone to acoustic interference. Acoustic sensors used underwater are affected by variations in sound speed (which depends on temperature, salinity, and pressure), which can degrade their accuracy. Finally, even highly precise systems like GPS experience errors from atmospheric effects (ionospheric and tropospheric delays) and multipath propagation (signal reflections).

Understanding these limitations is paramount; it informs sensor selection, data fusion strategies, and overall system design. For example, in an autonomous driving system, we might combine multiple sensor types (cameras, LiDAR, radar) to mitigate individual sensor limitations and enhance the overall perception system’s robustness. This redundancy helps handle sensor failures or limitations in specific conditions.

Q 24. How do you ensure the reliability and maintainability of sensor systems?

Ensuring reliability and maintainability involves a multifaceted approach. First, we rigorously select high-quality, proven components with a strong track record. Redundancy is implemented wherever possible – using multiple sensors to measure the same parameter – allowing the system to function even with sensor failures. Regular calibration and maintenance schedules are crucial. Moreover, we incorporate self-diagnostic capabilities into the system’s design; this allows it to identify potential problems before they escalate into major issues. Data logging and analysis provide valuable insights into system performance and help identify potential points of failure. Finally, modular design facilitates easy repairs and replacements; if one module fails, it can be replaced without impacting the entire system. This is particularly important in harsh or remote environments where access to repair facilities might be limited.

For example, a remote weather station might utilize redundant power supplies and data transmission paths to ensure continuous operation. Regularly scheduled maintenance will check for sensor drift and replace worn components.
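
Redundancy pays off only if the system can decide which reading to trust. A common pattern with three or more sensors measuring the same parameter is median voting, which tolerates a single faulty sensor among three. This sketch, with a hypothetical function name and tolerance, also reports which sensors disagree with the median so they can be scheduled for maintenance.

```python
import statistics


def vote(readings, max_spread):
    """Fuse redundant readings of one quantity by median voting.

    Readings that are None (sensor offline) are ignored. Returns (value, suspects),
    where suspects lists the indices of readings farther than max_spread from the median.
    """
    valid = [(i, r) for i, r in enumerate(readings) if r is not None]
    if not valid:
        raise ValueError("no sensor reported a value")
    median = statistics.median([r for _, r in valid])
    suspects = [i for i, r in valid if abs(r - median) > max_spread]
    return median, suspects


# Hypothetical triple-redundant temperature sensors; sensor 2 has failed high.
print(vote([21.0, 21.2, 35.0], max_spread=1.0))  # (21.2, [2])
# One sensor offline: the remaining two are averaged by the median.
print(vote([21.0, None, 21.4], max_spread=1.0))
```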

Q 25. Discuss your experience with different types of sensor networks (e.g., wireless, wired).

My experience encompasses both wired and wireless sensor networks. Wired networks, although offering higher bandwidth and lower latency, are inherently less flexible and more expensive to install, particularly in large-scale deployments. They’re more susceptible to physical damage as well. Wireless networks (using technologies like Zigbee, Z-Wave, Wi-Fi, or LoRaWAN) offer greater flexibility and scalability, but they introduce challenges related to signal interference, power consumption, and security. The choice between wired and wireless depends on the specific application requirements. Factors such as the environment, data rate requirements, power constraints, and the required range influence the decision.

For instance, a high-bandwidth, real-time industrial process monitoring system might benefit from a wired network, while a large-scale environmental monitoring network spread across a wide geographical area would be better suited to a wireless network using low-power, long-range technologies.

Q 26. Explain your understanding of sensor data privacy and security concerns.

Sensor data often contains sensitive information, raising significant privacy and security concerns. Unauthorized access to sensor data could lead to breaches of personal privacy, intellectual property theft, or even physical harm. Security measures include data encryption both in transit and at rest, secure authentication protocols to control access to the network and data, and intrusion detection systems to monitor for anomalous activity. Privacy concerns are addressed by data anonymization techniques and adherence to relevant data protection regulations like GDPR. Careful consideration needs to be given to data storage, access control, and data lifecycle management.

In applications such as smart home systems or wearable health trackers, securing user data is paramount, requiring robust encryption and access control mechanisms. In critical infrastructure monitoring, network security is vital to prevent disruptions or malicious attacks.
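As an illustration of two of the measures mentioned above, the following Python sketch uses only the standard library. It attaches an HMAC-SHA256 tag to each reading so the receiver can detect tampering, and it replaces raw device identifiers with keyed-hash pseudonyms. It assumes a shared secret key is already provisioned on both ends, and key management is not shown. The function names are hypothetical.

Note that HMAC provides integrity and authenticity, not confidentiality. Encrypting data in transit would additionally require TLS or an authenticated cipher such as AES-GCM. Likewise, keyed hashing is pseudonymization rather than full anonymization, because anyone holding the key can recompute the mapping.

```python
import hashlib
import hmac
import json


def _canonical(reading: dict) -> bytes:
    # Serialize with sorted keys and fixed separators so sender and receiver
    # compute the tag over byte-identical input.
    return json.dumps(reading, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_reading(key: bytes, reading: dict) -> dict:
    """Attach an HMAC-SHA256 tag to a sensor reading (integrity, not secrecy)."""
    tag = hmac.new(key, _canonical(reading), hashlib.sha256).hexdigest()
    return {"payload": reading, "tag": tag}


def verify_reading(key: bytes, message: dict) -> bool:
    """Recompute the tag over the payload and compare it in constant time."""
    expected = hmac.new(key, _canonical(message["payload"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


def pseudonymize(key: bytes, device_id: str) -> str:
    """Replace a device id with a stable keyed-hash pseudonym.

    The same id always maps to the same pseudonym, so records can still be
    joined without keeping the raw id. Anyone holding the key can recompute
    the mapping, so this is pseudonymization, not anonymization.
    """
    return hmac.new(key, device_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]
```

A receiver would call `verify_reading` before trusting a message and discard anything that fails. Any change to the payload, or a tag produced with a different key, makes verification fail.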

Q 27. How do you select appropriate sensors for a specific application?

Selecting appropriate sensors starts with a thorough understanding of the application’s specific requirements. We first define the parameters to be measured, the required accuracy and precision, the operating environment (temperature, humidity, pressure, etc.), the required range, power consumption constraints, and the budget. We then evaluate candidate sensor technologies against those specifications, also weighing availability, maintenance requirements, and integration complexity. Trade-offs are often necessary, balancing performance, cost, and practicality.

For example, in a smart agriculture application needing soil moisture monitoring, we might compare different soil moisture sensor types (capacitive, time-domain reflectometry (TDR)) based on their accuracy, sensitivity, cost, and ease of integration with the existing system.
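One common way to make these trade-offs explicit is a weighted decision matrix. The Python sketch below assumes each candidate has been scored on every criterion using a common 0 to 10 scale where higher is always better, so a cheaper sensor gets a higher cost score. It also assumes the weights reflect the application’s priorities. The candidate names, criteria, weights, and scores in the usage example are hypothetical placeholders, not measured data for any real sensor.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    # criterion -> score on a shared 0-10 scale (higher is always better)
    scores: dict[str, float]


def rank(candidates: list[Candidate], weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return (name, weighted score) pairs sorted from best to worst."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    ranked = []
    for c in candidates:
        # Refuse to rank a candidate that was not scored on every criterion.
        missing = set(weights) - set(c.scores)
        if missing:
            raise ValueError(f"{c.name} is missing scores for {sorted(missing)}")
        # Weighted average, normalized so the result stays on the 0-10 scale.
        score = sum(weights[k] * c.scores[k] for k in weights) / total_weight
        ranked.append((c.name, score))
    return sorted(ranked, key=lambda item: item[1], reverse=True)
```

With hypothetical weights `{"accuracy": 4, "cost": 3, "integration": 3}`, consider `sensor_a` scored `{"accuracy": 8, "cost": 5, "integration": 7}` and `sensor_b` scored `{"accuracy": 6, "cost": 9, "integration": 8}`. In that case `rank` puts `sensor_b` first (7.5) ahead of `sensor_a` (6.8). The matrix does not remove judgment, but it records why one option won and makes it easy to see how the ranking shifts when priorities change.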

Q 28. Describe your experience with fault detection and diagnosis in sensor systems.

Fault detection and diagnosis in sensor systems is crucial for ensuring system reliability. This involves implementing techniques to identify anomalies, isolate faulty components, and initiate corrective actions. Methods include statistical process control (SPC), which flags deviations from normal operating conditions; model-based fault detection, which compares readings against analytical models of sensor behavior; and signal processing techniques that filter out noise and expose unusual patterns. We also employ redundant sensors and sensor fusion algorithms to cross-validate sensor readings and detect discrepancies. Diagnostic messages and logs then provide the detail needed to pinpoint the root cause of a failure.

For instance, if a temperature sensor in a critical industrial process shows a sudden step change or a significant drift, a fault detection system would trigger an alarm, and diagnostic logs would help identify whether the issue stems from the sensor itself, faulty wiring, or a problem in the data acquisition system. This allows for timely intervention and helps prevent potentially catastrophic failures.
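The SPC approach mentioned above can be sketched as a basic Shewhart-style check in Python. Control limits are estimated from a baseline period when the sensor is known to be healthy, and later samples outside center ± k·sigma are flagged. This is a simplification under stated assumptions. Sigma is taken as the baseline sample standard deviation, whereas textbook individuals charts often estimate it from the average moving range instead. The conventional default of k = 3 is used, and the function names are hypothetical.

```python
from statistics import mean, stdev


def control_limits(baseline: list[float], k: float = 3.0) -> tuple[float, float]:
    """Lower and upper limits (center +/- k*sigma) from in-control baseline data."""
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two samples")
    center = mean(baseline)
    # Simplification: sample standard deviation of the baseline as sigma.
    sigma = stdev(baseline)
    return center - k * sigma, center + k * sigma


def out_of_control(samples: list[float], limits: tuple[float, float]) -> list[int]:
    """Indices of samples that fall outside the control limits."""
    low, high = limits
    return [i for i, x in enumerate(samples) if x < low or x > high]
```

Consider a baseline of `[20.0, 20.2, 19.8, 20.1, 19.9]`. The limits come out at roughly 19.53 to 20.47, so in the series `[20.0, 20.3, 23.5, 19.9]` only index 2 is flagged. A Shewhart check like this is best at catching sudden shifts. Gradual drift is usually caught sooner by cumulative methods such as CUSUM or EWMA charts.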

Key Topics to Learn for Sensor Systems Exploitation Interview

- Sensor Fundamentals: Understanding various sensor types (optical, acoustic, radar, etc.), their operating principles, limitations, and signal characteristics. Consider signal-to-noise ratio, resolution, and accuracy.

- Signal Processing Techniques: Mastering digital signal processing (DSP) algorithms for noise reduction, filtering, feature extraction, and signal classification. Explore techniques like Fourier transforms and wavelet analysis.

- Data Fusion and Integration: Learning how to combine data from multiple sensors to improve accuracy, reliability, and situational awareness. Understand the challenges and strategies involved in data fusion.

- Algorithm Development and Implementation: Practical experience in developing and implementing algorithms for sensor data analysis, pattern recognition, and target identification. Familiarity with relevant programming languages (e.g., Python, MATLAB) is crucial.

- System Architecture and Design: Understanding the overall architecture of sensor systems, including hardware components, software interfaces, and data flow. Consider factors like power consumption, size, weight, and cost.

- Cybersecurity in Sensor Systems: Understanding vulnerabilities and security threats related to sensor systems and implementing appropriate security measures to protect data integrity and confidentiality.

- Practical Applications: Explore case studies and real-world applications of sensor systems exploitation in fields such as defense, environmental monitoring, autonomous vehicles, and medical imaging.

- Problem-Solving and Analytical Skills: Develop your ability to analyze complex sensor data, identify anomalies, and draw meaningful conclusions. Practice troubleshooting common sensor system issues.
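To make the Fourier-transform topic concrete, here is a deliberately simple Python sketch. It computes a discrete Fourier transform (DFT) directly from its definition and reports the dominant frequency of a sampled signal. It runs in O(N²) time and is meant for study only; in practice, a fast Fourier transform such as `numpy.fft.rfft` would be used. The function names and the choice to ignore the DC bin are assumptions for this example.

```python
import cmath
import math


def dft_magnitudes(samples: list[float]) -> list[float]:
    """Magnitude of each DFT bin from 0 up to the Nyquist bin (real input).

    Direct O(N^2) evaluation of X[k] = sum_t x[t] * exp(-2*pi*i*k*t/N),
    written for clarity rather than speed.
    """
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(samples))
        mags.append(abs(acc))
    return mags


def dominant_frequency(samples: list[float], sample_rate_hz: float) -> float:
    """Frequency (Hz) of the strongest non-DC component."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mags = dft_magnitudes(samples)
    # Skip bin 0, which only reflects the signal's mean (DC offset).
    k = max(range(1, len(mags)), key=mags.__getitem__)
    # Bin k corresponds to k * fs / N hertz.
    return k * sample_rate_hz / n
```

Consider a 100-sample signal taken at 100 Hz that contains a DC offset, a strong 5 Hz component, and a weaker 20 Hz component. For that signal, `dominant_frequency` returns 5.0. The frequency resolution is `sample_rate_hz / N`, so a longer capture window gives finer bins.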

Next Steps

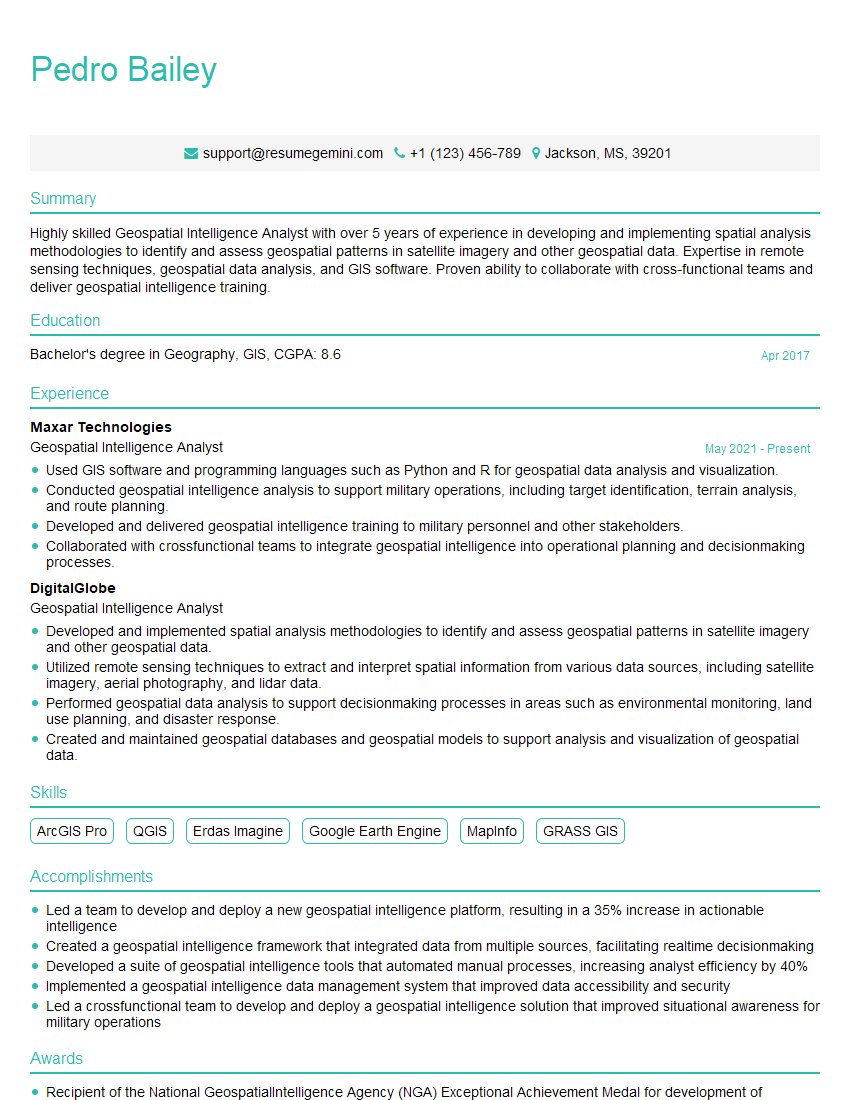

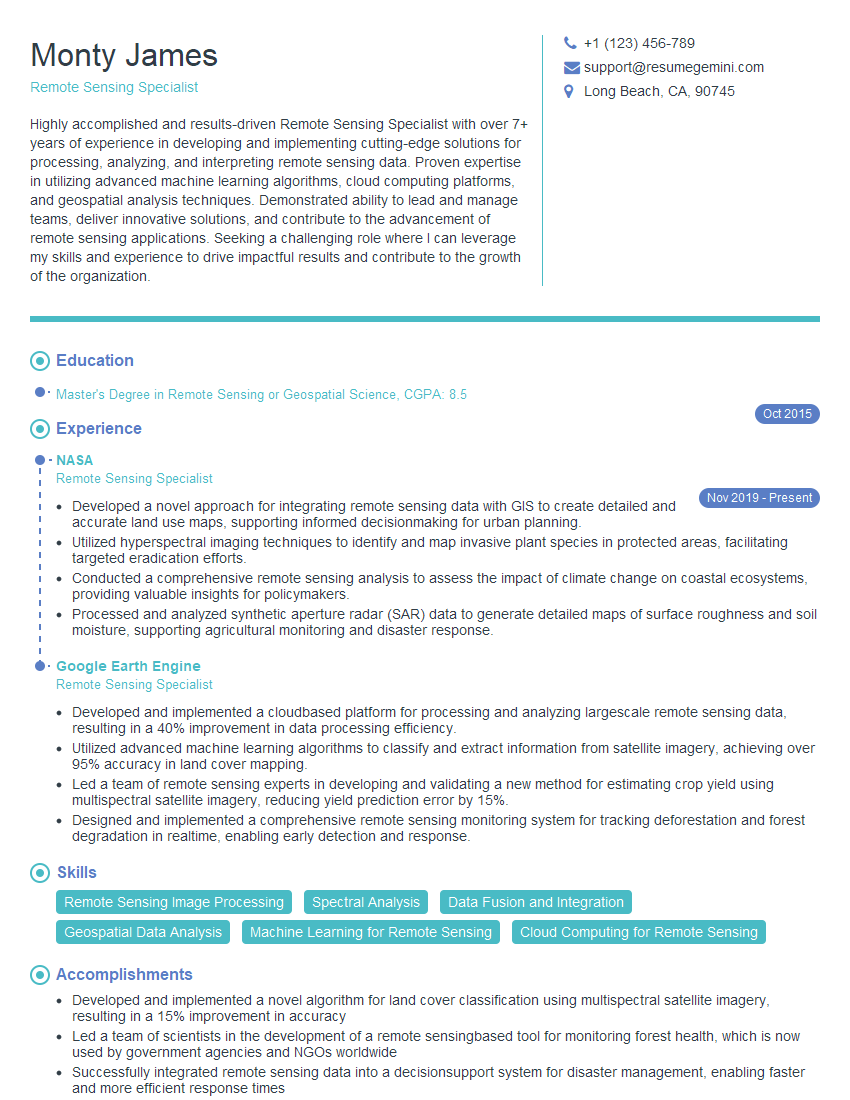

Mastering Sensor Systems Exploitation opens doors to exciting and impactful careers in various high-tech industries. To maximize your job prospects, it’s vital to present your skills effectively. An ATS-friendly resume is key to getting your application noticed by recruiters. We strongly recommend using ResumeGemini to craft a professional and compelling resume that highlights your expertise in Sensor Systems Exploitation. ResumeGemini provides examples of resumes tailored to this specific field, guiding you to create a document that truly showcases your capabilities. Invest time in building a strong resume – it’s your first impression and a crucial step in securing your dream job.
