Interviews are more than just a Q&A session—they’re a chance to prove your worth. This blog dives into essential Shifting Under Load interview questions and expert tips to help you align your answers with what hiring managers are looking for. Start preparing to shine!

Questions Asked in Shifting Under Load Interview

Q 1. Explain the concept of ‘Shifting Under Load’ in database systems.

Shifting Under Load (SUL) in database systems refers to the ability to perform maintenance or upgrades on a database server or cluster while it remains online and continues to serve client requests. Imagine a busy airport – SUL is like performing runway repairs while planes are still landing and taking off. It’s all about minimizing downtime and disruption to the end-users.

Instead of taking the entire system offline for maintenance, SUL allows for gradual migration of data or resources to new systems or configurations. This minimizes the impact on application performance and prevents service interruptions. The key is to perform these changes incrementally and transparently to the applications relying on the database.

Q 2. Describe different techniques for achieving Shifting Under Load.

Several techniques facilitate SUL:

- Rolling Upgrades: Gradually replacing components, one at a time, in a database cluster. This might involve updating individual servers or upgrading software versions sequentially. For example, updating a 3-node database cluster by taking one node offline at a time, updating it, and bringing it back online.

- Blue-Green Deployments: Maintaining two identical environments (blue and green). Traffic is directed to the ‘blue’ environment while updates are applied to the ‘green’ environment. Once the ‘green’ environment is validated, traffic is switched over, making the ‘green’ environment the active one.

- Canary Deployments: A subset of users is directed to the updated system to test its performance and stability before fully migrating all traffic. It’s a low-risk approach to validate changes before widespread deployment.

- Database Sharding with Incremental Migration: Data is split across multiple database servers (shards). Upgrades can be performed on individual shards without impacting the entire system.

The choice of technique depends on factors like database architecture, application requirements, and the complexity of the upgrade or maintenance task.
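Canary routing is often implemented by hashing a stable user identifier into buckets. That way, the same user consistently lands on the same version while the canary percentage is raised gradually. The sketch below is a minimal illustration that assumes string user IDs and a hypothetical `route_request` function. Real deployments usually do this in a load balancer, API gateway, or service mesh rather than in application code.

```python
import hashlib


def bucket_for(user_id: str, buckets: int = 100) -> int:
    """Map a user ID to a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Use the first 8 bytes as an unsigned integer so the mapping is identical
    # across processes (unlike Python's built-in hash(), which is randomized per run).
    return int.from_bytes(digest[:8], "big") % buckets


def route_request(user_id: str, canary_percent: int) -> str:
    """Return 'canary' for roughly canary_percent% of users, 'stable' otherwise."""
    return "canary" if bucket_for(user_id) < canary_percent else "stable"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    for pct in (1, 10, 50):
        share = sum(route_request(u, pct) == "canary" for u in users) / len(users)
        print(f"{pct:>3}% target -> {share:.1%} of users on canary")
```

Because a user's bucket never changes, raising the percentage only adds users to the canary group. Nobody flips back and forth between versions during the rollout.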

Q 3. What are the challenges associated with Shifting Under Load?

SUL presents several challenges:

- Data Consistency: Maintaining data integrity during the migration process is crucial. Inconsistent data can lead to application errors or data loss.

- Downtime Minimization: Even when the goal is zero downtime, some disruption (for example, a brief write pause at cutover or elevated latency while data is copied) is often hard to avoid. Balancing the speed of the migration against the acceptable level of disruption is a delicate act.

- Complexity: Implementing SUL requires careful planning and execution, often involving specialized tools and techniques. This adds considerable operational overhead compared with a simple offline maintenance window.

- Testing: Thorough testing is vital to ensure that the migration process doesn’t introduce bugs or performance issues. Testing must cover all aspects of the process, including failure scenarios.

- Monitoring: Constant monitoring is required to detect and respond to any anomalies or errors that might occur during the migration.

Q 4. How do you ensure data consistency during a Shifting Under Load operation?

Ensuring data consistency during SUL is paramount. Techniques include:

- Transactions: Using ACID (Atomicity, Consistency, Isolation, Durability) transactions to guarantee that data changes are applied atomically and consistently.

- Two-Phase Commit (2PC): A protocol that ensures all participating systems agree on the outcome of a transaction before committing changes. This can be particularly relevant in distributed database environments.

- Versioning: Employing version control systems for database schema changes to allow for rollback if necessary.

- Checksums and Data Validation: Verifying data integrity before, during, and after the migration process using checksums or other validation techniques. Detecting inconsistencies early avoids bigger problems down the line.

- Data Replication and Failover Mechanisms: Using replicated databases to provide redundancy and quick failover in case of problems during the migration.
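Here is a minimal sketch of the transaction technique. The snippet copies rows in batches and wraps each batch in its own transaction, so a failure part-way through a batch leaves no partial rows from that batch on the target. It uses Python's built-in `sqlite3` module with two in-memory databases purely for illustration. The `orders` table and the batch size are assumptions. The sketch also does not capture writes that arrive during the copy, which in a real SUL operation would be handled by replication.

```python
import sqlite3


def copy_in_batches(source, target, batch_size=500):
    """Copy orders from source to target, one atomic transaction per batch."""
    last_id = 0
    copied = 0
    while True:
        # Keyset pagination: fetch the next batch after the last copied id.
        rows = source.execute(
            "SELECT id, amount FROM orders WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return copied
        # 'with target' commits the batch on success and rolls it back on any exception.
        with target:
            target.executemany("INSERT INTO orders (id, amount) VALUES (?, ?)", rows)
        last_id = rows[-1][0]
        copied += len(rows)


if __name__ == "__main__":
    src = sqlite3.connect(":memory:")
    dst = sqlite3.connect(":memory:")
    for db in (src, dst):
        db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    with src:
        src.executemany("INSERT INTO orders VALUES (?, ?)", [(i, i * 1.5) for i in range(1, 1201)])
    print(copy_in_batches(src, dst))  # 1200
```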

Q 5. What are the key performance indicators (KPIs) you monitor during Shifting Under Load?

Key Performance Indicators (KPIs) for SUL include:

- Downtime: The total time the system is unavailable, tracked alongside the time it spends running with degraded performance.

- Data Consistency Errors: The number of data inconsistencies detected during and after the migration.

- Application Performance Metrics: Response times, throughput, and error rates for applications that rely on the database.

- Resource Utilization: CPU usage, memory consumption, and disk I/O for database servers.

- Migration Rate: The speed at which data is migrated to the new system.

- Error Rate: The number of errors encountered during the migration process.

Monitoring these KPIs provides critical insight into the success and efficiency of the SUL operation.
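To make the application-level KPIs concrete, the sketch below computes throughput, error rate, and 95th-percentile response time from one window of request samples. The `Sample` record and the input shape are assumptions for illustration. In practice these figures usually come from a metrics or APM system rather than hand-written code.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class Sample:
    latency_ms: float
    ok: bool


def summarize(samples, window_seconds):
    """Summarize one monitoring window of request samples (needs at least 2 samples)."""
    latencies = [s.latency_ms for s in samples]
    errors = sum(not s.ok for s in samples)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = quantiles(latencies, n=100)[94]
    return {
        "throughput_rps": len(samples) / window_seconds,
        "error_rate": errors / len(samples),
        "p95_latency_ms": p95,
    }
```

Comparing these numbers before, during, and after the shift shows whether the migration is degrading the user-facing service.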

Q 6. Explain the role of load balancing in Shifting Under Load.

Load balancing plays a crucial role in SUL by distributing client requests evenly across the available database servers. This prevents any single server from becoming overloaded, even during the migration process when some servers might be operating at reduced capacity. During a rolling upgrade, for instance, load balancers ensure clients are routed to the healthy servers while minimizing impact on the user experience.

Load balancers provide a critical layer of abstraction and resilience, ensuring that even if a server fails or is taken offline for an update, client requests are handled gracefully by other active servers. They also help to manage traffic during a Blue-Green deployment by smoothly shifting traffic between the active and standby environments.
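The sketch below illustrates the rolling-upgrade case. A round-robin balancer skips nodes marked as draining, so one node can be taken out, upgraded, and returned while the others keep serving. The `Balancer` class and node names are hypothetical. Production balancers expose equivalent states through their own configuration; HAProxy, for example, has drain and maintenance server states. A real drain also lets in-flight connections finish, which this sketch does not model.

```python
import itertools


class Balancer:
    """Round-robin over nodes, skipping any node currently taken out for maintenance."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.draining = set()
        self._cycle = itertools.cycle(self.nodes)

    def drain(self, node):
        # Stop sending new requests to this node.
        self.draining.add(node)

    def restore(self, node):
        self.draining.discard(node)

    def pick(self):
        # Try each node at most once per call; fail loudly if none are available.
        for _ in range(len(self.nodes)):
            node = next(self._cycle)
            if node not in self.draining:
                return node
        raise RuntimeError("no healthy nodes available")


if __name__ == "__main__":
    lb = Balancer(["db1", "db2", "db3"])
    for node in lb.nodes:  # rolling upgrade: one node at a time
        lb.drain(node)
        served = {lb.pick() for _ in range(6)}
        print(f"upgrading {node}; traffic served by {sorted(served)}")
        lb.restore(node)
```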

Q 7. How do you handle failures during a Shifting Under Load process?

Handling failures during SUL requires a robust rollback strategy and a well-defined incident response plan. Key steps include:

- Rollback Mechanism: A process to quickly revert to the previous stable state if a failure occurs during the migration. This could involve reverting to a previous database backup or switching back to the older system in a blue-green deployment.

- Monitoring and Alerting: A system to monitor the health of the database and alert the operations team of any anomalies or errors.

- Automated Failover: Mechanisms to automatically failover to a redundant system or standby server in case of a failure. Load balancers play a key role here.

- Incident Response Plan: A documented plan that outlines the steps to be taken in the event of a failure, including roles and responsibilities, communication protocols, and escalation procedures.

- Post-Incident Review: A review process to analyze the cause of the failure and implement corrective actions to prevent similar incidents in the future.

A thorough disaster recovery plan is essential for ensuring business continuity.
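Part of the rollback mechanism can be automated by watching an error-rate reading and reverting traffic when it stays above a threshold. The sketch below assumes a hypothetical `switch_traffic` callback and a sequence of per-interval error rates. The 5% threshold and three-interval window are illustrative values only.

```python
def watch_and_rollback(error_rates, switch_traffic, threshold=0.05, consecutive=3):
    """Roll back to the old system if the error rate stays above threshold.

    error_rates: iterable of error-rate readings (0.0-1.0), one per check interval.
    switch_traffic: callback that routes traffic to the named environment.
    Returns True if a rollback was triggered.
    """
    breaches = 0
    for rate in error_rates:
        # Require several consecutive breaches so a single spike does not cause a rollback.
        breaches = breaches + 1 if rate > threshold else 0
        if breaches >= consecutive:
            switch_traffic("old")
            return True
    return False
```

In a real setup the readings would come from the monitoring and alerting system, and the callback would drive the load balancer or the blue/green switch.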

Q 8. What are the benefits of using automated tools for Shifting Under Load?

Automated tools are crucial for Shifting Under Load (which in practice usually means a live database migration or cutover) because they significantly reduce the risk of human error and improve efficiency. Manual processes are time-consuming, prone to mistakes, and often lead to extended downtime. Automated tools provide several key benefits:

- Reduced Downtime: Automated systems can perform complex tasks like data replication and schema migration much faster and more reliably than manual methods, minimizing service interruption.

- Increased Accuracy: Automation minimizes the chance of human error during the complex steps involved in shifting a database under load. This ensures data integrity and consistency.

- Improved Efficiency: Automated tools streamline the entire process, freeing up database administrators (DBAs) to focus on other critical tasks.

- Enhanced Monitoring and Control: Many automated tools provide real-time monitoring and reporting capabilities. This gives DBAs detailed visibility into the migration process and allows immediate intervention if needed.

- Rollback Capabilities: In case of failure, automated tools often have built-in rollback mechanisms that quickly revert to the previous state, minimizing data loss and downtime.

For example, imagine migrating a large e-commerce database. A manual, step-by-step process might stretch over days and require long maintenance windows, leading to significant revenue loss. A well-tested automated process can often shrink the cutover to a much shorter window with minimal disruption.

Q 9. Compare and contrast different database replication methods in the context of Shifting Under Load.

Several database replication methods can be employed for Shifting Under Load, each with its own strengths and weaknesses:

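- Physical Replication: Copies the database at the storage level, typically by taking a base backup of the data files and then streaming the write-ahead (redo) log to the secondary. The result is an exact block-for-block replica that is relatively simple to operate. However, the initial copy of a large database consumes significant time, bandwidth, and I/O, and the replica generally has to run the same major version on the same platform. Think of it as cloning an entire hard drive and then mirroring every subsequent disk write.

- Logical Replication: Replicates changes as row-level operations (for example, "insert this row into orders") decoded from the transaction log, rather than as raw disk blocks. Because it does not depend on the on-disk format, it can replicate a subset of tables and work across different major versions. This makes it a common choice for upgrades and platform migrations. The trade-off is more setup and management; PostgreSQL's built-in logical replication, for instance, does not replicate schema (DDL) changes. This is analogous to version control: you track changes, not the entire file each time.

- Transactional Replication: Replicates committed transactions, in commit order, shortly after they occur; SQL Server's transactional replication is a well-known example. Replicas stay close to real time and transaction boundaries are preserved, which helps minimize data loss and supports high availability, at the cost of more complex configuration. Imagine this as a live feed: each change is reflected on the replica within moments.

The best method depends on factors such as database size, data volume, required downtime, and complexity tolerance. For instance, physical replication is often the simplest way to build a same-version standby for a switchover. Logical or transactional replication is better suited to migrations that change the database version, platform, or schema.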
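To illustrate what "row-level changes" means for logical replication, the sketch below applies an ordered stream of change events to a target table. Each event is an insert, update, or delete keyed by primary key, and the target is modelled as a dictionary. The `Change` event format is hypothetical. Real systems decode such events from the source's transaction log, for example PostgreSQL's logical decoding or MySQL's row-based binary log.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Change:
    lsn: int                   # position in the source log; events are applied in this order
    op: str                    # "insert", "update" or "delete"
    key: int                   # primary key of the affected row
    row: Optional[dict] = None


def apply_changes(target, changes, applied_lsn=0):
    """Apply changes newer than applied_lsn to target; return the new applied position."""
    for change in sorted(changes, key=lambda c: c.lsn):
        if change.lsn <= applied_lsn:
            continue  # already applied (e.g. re-delivered after a restart), so skip it
        if change.op in ("insert", "update"):
            target[change.key] = change.row
        elif change.op == "delete":
            target.pop(change.key, None)
        else:
            raise ValueError(f"unknown operation {change.op!r}")
        applied_lsn = change.lsn
    return applied_lsn
```

Tracking the last applied position is what lets a replica resume after an interruption without applying the same change twice.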

Q 10. How do you test the effectiveness of your Shifting Under Load strategy?

Testing the effectiveness of a Shifting Under Load strategy is critical to ensure a successful migration. A comprehensive testing strategy should include:

- Unit Testing: Testing individual components of the migration process, such as data replication and schema changes.

- Integration Testing: Testing the interaction between different components.

- System Testing: Testing the entire migration process in a simulated environment, mirroring production conditions as closely as possible.

- User Acceptance Testing (UAT): Allowing end users to test the migrated system to ensure it meets their needs and expectations.

- Performance Testing: Measuring the performance of the migrated system under various load conditions, including realistic load tests that simulate peak usage. Look for bottlenecks and areas for improvement.

For each test, you need well-defined metrics to measure success. For instance, for performance testing, you might measure response times, throughput, and error rates. Failure to adequately test can result in unexpected downtime or data loss during the actual migration.

Q 11. Describe your experience with high availability and disaster recovery in relation to Shifting Under Load.

High availability and disaster recovery are paramount when performing Shifting Under Load. A robust strategy ensures minimal disruption in case of failure. My experience involves designing and implementing solutions using techniques like:

- Active-Passive Clustering: One database server is active, while another is passively awaiting a switchover in case of failure. Shifting Under Load is essentially a controlled switchover from active to passive.

- Active-Active Clustering: Both database servers are active, sharing the load. Shifting Under Load involves a more gradual transfer of load between the servers.

- Geographic Replication: Replicating the database to a geographically separate location to ensure business continuity in case of a regional outage.

- Automated Failover Mechanisms: Automated systems that detect failures and automatically switch over to the backup server, minimizing downtime.

In one project, we implemented a geographically redundant setup with automated failover. When the primary data center experienced a power outage, the system automatically switched to the secondary data center, with minimal user-perceived downtime, illustrating the importance of robust planning and testing.

Q 12. How do you minimize downtime during a Shifting Under Load operation?

Minimizing downtime during Shifting Under Load requires careful planning and execution. Key strategies include:

- Zero-Downtime Migration Techniques: Employing techniques like blue/green deployments or rolling upgrades, where new database servers are brought online gradually without interrupting service. These are typically the most effective ways to minimize downtime.

- Minimal Data Replication Lag: Using high-performance replication methods to keep the secondary database up-to-date, minimizing the window of inconsistency during the cutover.

- Thorough Testing: Rigorous testing is essential to identify and resolve potential issues before they affect production. This includes load testing under peak conditions.

- Rollback Strategy: Defining a clear rollback plan to revert to the previous system if the migration fails, minimizing data loss and disruption.

- Communication and Coordination: Effective communication among team members ensures smooth collaboration and timely resolution of any issues encountered during the migration.

For instance, using blue/green deployments, we can have the new system running in parallel with the old system. Once testing is complete, we simply switch over traffic, minimizing interruption.

Q 13. What are some common causes of Shifting Under Load failures?

Several factors can lead to Shifting Under Load failures. These can be broadly categorized as:

- Data Replication Issues: Inconsistent data between primary and secondary databases, data loss during replication, or replication lag.

- Network Problems: Network connectivity issues between the primary and secondary servers can disrupt data transfer and cause failures.

- Schema Inconsistencies: Discrepancies between the database schemas on the primary and secondary servers can lead to data integrity violations.

- Application Errors: Bugs in the application code that interacts with the database might cause the migration to fail.

- Resource Constraints: Insufficient resources (CPU, memory, disk space) on the secondary server can impede the migration process.

- Human Error: Misconfigurations, incorrect commands, or inadequate testing can all lead to failures.

Preventing these failures requires rigorous testing, robust monitoring, and a well-defined rollback plan. For example, a thorough schema comparison tool can prevent inconsistencies from causing problems.
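A basic schema comparison can be scripted by reading each table's column definitions from both databases and diffing them. The sketch below uses SQLite's `PRAGMA table_info` through Python's `sqlite3` module to stay self-contained. On other platforms the same idea applies to `information_schema.columns`, and dedicated schema-diff tools also compare indexes, constraints, and triggers, which this sketch does not.

```python
import sqlite3


def columns(conn, table):
    """Return {column_name: declared_type} for a table."""
    # PRAGMA arguments cannot be bound as parameters, so pass only trusted table names.
    # Each row is (cid, name, type, notnull, dflt_value, pk).
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    return {name: col_type for _, name, col_type, *_ in rows}


def diff_schema(source, target, table):
    """Report columns missing from, extra in, or typed differently on the target."""
    src, tgt = columns(source, table), columns(target, table)
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "extra_in_target": sorted(tgt.keys() - src.keys()),
        "type_mismatch": sorted(c for c in src.keys() & tgt.keys() if src[c] != tgt[c]),
    }
```

Running a check like this before cutover turns a silent schema drift into an explicit, reviewable report.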

Q 14. How do you troubleshoot performance issues related to Shifting Under Load?

Troubleshooting performance issues related to Shifting Under Load requires a systematic approach:

- Monitoring Tools: Utilize monitoring tools to identify bottlenecks. This includes database performance monitors, network monitors, and system resource monitors.

- Logging: Examine logs to pinpoint errors or unexpected behavior during the migration.

- Profiling: Profile the database queries to identify slow queries and optimize them.

- Replication Lag Analysis: Analyze the replication lag to ensure timely data synchronization.

- Network Analysis: Analyze network traffic to identify any network-related bottlenecks.

- Resource Utilization: Check server resource utilization (CPU, memory, I/O) to detect resource constraints.

In one instance, we found that a poorly performing index was causing slow queries during the migration. By optimizing the index, we drastically reduced response times. A systematic approach using various diagnostic tools is essential for quick problem resolution.
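Query profiling often starts with the engine's execution plan. The snippet below is a self-contained illustration using SQLite, with a hypothetical table and index. It shows `EXPLAIN QUERY PLAN` changing from a full table scan to an index search once a suitable index exists. Other engines offer the same insight through `EXPLAIN` or `EXPLAIN ANALYZE`.

```python
import sqlite3


def plan(conn, sql, params=()):
    """Return the detail strings from SQLite's EXPLAIN QUERY PLAN."""
    # The last column of each plan row is the human-readable description of the step.
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
query = "SELECT total FROM orders WHERE customer_id = ?"

before = plan(conn, query, (42,))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(conn, query, (42,))

print("before:", before)  # a full table scan, e.g. "SCAN orders"
print("after: ", after)   # an index search, e.g. "SEARCH orders USING INDEX ..."
```

The exact wording of the plan text varies between SQLite versions. The change from a scan to a search is the signal to look for.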

Q 15. Explain the importance of monitoring and logging during Shifting Under Load.

Monitoring and logging are absolutely crucial for successful Shifting Under Load (in practice, usually a database migration or cutover operation). Think of it like performing open-heart surgery: you need constant vital-signs monitoring to ensure everything is proceeding smoothly and to react quickly to any complications. Without it, you're essentially flying blind.

Comprehensive logging allows you to track every step of the process: from initial connection and data replication to final database switch-over and validation. This provides a detailed audit trail, essential for troubleshooting issues, post-mortem analysis, and demonstrating compliance. Monitoring, on the other hand, gives real-time visibility into key metrics like database performance (CPU usage, memory consumption, I/O), application response times, and error rates. Tools that display dashboards showing these metrics, alongside the logging data, are invaluable.

For example, during a database migration, we might monitor the replication lag between the source and target databases. If the lag increases significantly, it indicates a problem that needs immediate attention. Simultaneously, logging detailed progress updates — like the number of records migrated, the time taken, and any encountered errors — aids in problem identification and assists with capacity planning for future migrations.

- Key Metrics to Monitor: Replication lag, database performance metrics (CPU, memory, I/O), application response times, error rates, and network throughput.

- Logging Essentials: Timestamps, event type, source/target database information, error messages, and successful completion indicators.
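The sketch below ties the two lists together. It emits one structured (JSON) log line per check, containing a timestamp, event type, source and target, and the measured lag. It escalates to an error-level event when replication lag crosses a threshold. How lag is measured is platform-specific, so `check_lag` simply takes the measurement as a parameter. The database names and the 30-second threshold are hypothetical.

```python
import json
import logging
from datetime import datetime, timezone

logger = logging.getLogger("migration")


def log_event(event, level=logging.INFO, **fields):
    """Emit one JSON log line with a UTC timestamp and event type; return the line."""
    record = {"ts": datetime.now(timezone.utc).isoformat(), "event": event, **fields}
    line = json.dumps(record, sort_keys=True)
    logger.log(level, line)
    return line


def check_lag(lag_seconds, source, target, threshold_seconds=30.0):
    """Log the current replication lag; return True if it needs attention."""
    alert = lag_seconds > threshold_seconds
    log_event(
        "replication_lag_alert" if alert else "replication_lag",
        level=logging.ERROR if alert else logging.INFO,
        source=source,
        target=target,
        lag_seconds=lag_seconds,
    )
    return alert


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    for lag in (2.5, 12.0, 45.0):
        check_lag(lag, source="db-old", target="db-new")
    log_event("migration_complete", source="db-old", target="db-new")
```

Because every line is valid JSON with consistent field names, the same log stream can feed both dashboards and post-mortem analysis.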


Q 16. What is your experience with different database platforms and their capabilities for Shifting Under Load?

I’ve worked extensively with various database platforms, including Oracle, MySQL, PostgreSQL, and SQL Server, all in the context of Shifting Under Load. Each platform offers unique features and challenges in this area.

Oracle, for instance, excels in its robust replication and high availability features (such as Data Guard), making it relatively straightforward to perform near-zero-downtime migrations using tools like Oracle GoldenGate. However, its complexity can increase the time and resources required for planning and execution.

MySQL offers built-in replication, and proxies such as ProxySQL (a separate open-source project) can redirect traffic between servers. Together they are powerful tools for migrating data with minimal downtime. Its open-source nature and large community offer great support and readily available resources.

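PostgreSQL provides built-in streaming (physical) replication and, since version 10, native logical replication through publications and subscriptions, which is often used for low-downtime major-version upgrades. Because logical replication does not carry schema changes, careful planning is needed to keep source and target schemas consistent during the migration. Its robust extension ecosystem (pglogical, for example) provides additional tools to facilitate this process.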

SQL Server provides log shipping, transactional replication, and Always On availability groups (the successor to the now-deprecated database mirroring), all of which support low-downtime migrations. Its integration with Microsoft Azure services also simplifies the management of large database migrations in a cloud environment.

The choice of platform significantly influences the strategy for Shifting Under Load. Understanding the specific capabilities and limitations of each platform is crucial for a successful migration.

Q 17. How do you choose the appropriate Shifting Under Load strategy for a given application?

Selecting the right Shifting Under Load strategy depends on several factors, most importantly the application’s architecture, its tolerance for downtime, and the data volume. It’s a tailored approach, not a one-size-fits-all solution.

Factors to Consider:

- Downtime Tolerance: Can the application tolerate any downtime, or is a zero-downtime migration crucial? This heavily influences the strategy (e.g., blue/green deployment vs. phased rollouts).

- Data Volume: For extremely large databases, a phased approach might be necessary to minimize the migration time and resource consumption.

- Application Architecture: Microservices architecture lends itself to more granular and phased rollouts, allowing for independent migrations of individual services.

- Data Sensitivity: Data consistency and integrity are paramount; the strategy must ensure minimal risk of data loss or corruption. Transaction consistency is key.

Strategies:

- Blue/Green Deployments: Minimal downtime, best for applications that can tolerate brief cutovers.

- Phased Rollouts: Gradual migration of data and/or application functionality, suitable for large databases or complex applications.

- Rolling Upgrades: Sequential upgrades of database instances or application servers, minimizing downtime.

I always begin by carefully assessing these factors before recommending a specific strategy, creating a detailed plan that accounts for potential risks and mitigation strategies.

Q 18. Describe your experience with capacity planning in relation to Shifting Under Load.

Capacity planning is integral to successful Shifting Under Load. It’s about ensuring that the target environment can handle the increased load during the migration and afterward. Underestimating capacity leads to performance bottlenecks, potentially jeopardizing the entire migration.

My approach involves a thorough analysis of current resource utilization (CPU, memory, I/O, network) and projecting future needs based on the anticipated growth and the characteristics of the new environment. This includes considering factors like database schema changes, increased data volume, and expected user traffic.

I utilize various tools and techniques, including:

- Performance Testing: Simulating peak loads on the target environment to identify potential bottlenecks.

- Historical Data Analysis: Studying past usage patterns to predict future needs.

- Resource Monitoring: Constant monitoring of resource utilization during the migration process to detect and address any issues proactively.

For example, in a recent project, we used performance testing to identify that the target database server required additional RAM and CPU cores to handle the increased load after the migration. By addressing this in advance, we avoided any performance degradation post-migration.
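Historical analysis often ends in a simple projection. The back-of-the-envelope version below assumes compound monthly growth and a target peak utilization that leaves headroom for migration overhead such as replication and backfills. The formula is a common rule of thumb rather than a standard, and every number in the example is hypothetical.

```python
def required_capacity(current_peak, monthly_growth, months, migration_overhead, target_utilization):
    """Project the capacity needed so the projected peak stays under target utilization.

    current_peak: today's peak demand (e.g. CPU cores in use, or IOPS).
    monthly_growth: expected growth rate per month (0.05 means 5%).
    migration_overhead: extra load during the migration (0.2 means +20%).
    target_utilization: fraction of capacity you are willing to use at peak (e.g. 0.7).
    """
    projected_peak = current_peak * (1 + monthly_growth) ** months
    return projected_peak * (1 + migration_overhead) / target_utilization


# Hypothetical example: 12 cores at peak today, 5% monthly growth over 6 months,
# 20% extra load while replicating, and a 70% utilization ceiling.
print(round(required_capacity(12, 0.05, 6, 0.20, 0.70), 1))  # 27.6
```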

Q 19. What is your experience with blue/green deployments and their relation to Shifting Under Load?

Blue/green deployments are a powerful technique often used in conjunction with Shifting Under Load, especially for applications requiring minimal downtime. Imagine having two identical environments: the ‘blue’ environment (live) and the ‘green’ environment (staging). The application is running on the ‘blue’ environment.

During the migration, we deploy the updated application and database to the ‘green’ environment. Once testing confirms its stability and functionality, we switch traffic from the ‘blue’ to the ‘green’ environment. This switch is often instantaneous, resulting in minimal downtime. If issues arise in the ‘green’ environment, we can quickly switch back to the ‘blue’ environment, minimizing disruption.

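The ‘blue’ environment can then be kept as a rollback target until the new release has proven stable, after which it can be decommissioned or reused for further testing. Keeping it available is what simplifies the rollback procedure, reducing risk and ensuring business continuity.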

Blue/green deployments streamline Shifting Under Load by providing a safe, controlled environment to test and validate the updated application and database before switching over production traffic. This significantly minimizes the risk of unexpected issues affecting users.
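The cutover itself can be reduced to flipping a single pointer that every new request reads. This is what makes both the switch and the rollback fast. The sketch below models that pointer in-process with a lock. The `TrafficRouter` class, environment names, and `health_check` callback are hypothetical. In practice the pointer is usually a load-balancer target, DNS record, or service-discovery entry, and the green database must already be in sync with blue before the flip.

```python
import threading


class TrafficRouter:
    """Holds the single 'active environment' pointer used by new requests."""

    def __init__(self, active="blue"):
        self._active = active
        self._lock = threading.Lock()

    @property
    def active(self):
        with self._lock:
            return self._active

    def switch_to(self, env, health_check):
        """Point traffic at env only if it passes the health check; return the previous env."""
        if not health_check(env):
            raise RuntimeError(f"{env} failed its health check; staying on {self.active}")
        with self._lock:
            previous, self._active = self._active, env
        return previous


if __name__ == "__main__":
    router = TrafficRouter("blue")
    previous = router.switch_to("green", health_check=lambda env: True)
    print(f"cutover: {previous} -> {router.active}")
    router.switch_to(previous, health_check=lambda env: True)  # rollback is the same operation
    print(f"rolled back to {router.active}")
```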

Q 20. How do you ensure data integrity during a Shifting Under Load operation?

Data integrity is paramount during Shifting Under Load. Losing data or experiencing data corruption can have catastrophic consequences. To ensure data integrity, I employ a multi-layered approach:

- Data Validation: Before, during, and after the migration, rigorous data validation checks are performed to ensure data consistency between the source and target databases.

- Transaction Consistency: Utilizing techniques like two-phase commit or other database transaction mechanisms to ensure all data changes are atomic and consistent. Transactions must either complete successfully or roll back completely, preventing partial updates.

- Data Replication and Verification: Employing robust data replication methods to minimize data loss risk. We typically verify the integrity of replicated data through checksums or other comparison mechanisms before switching over.

- Backup and Recovery: Maintaining complete backups of the source database before initiating the migration. This acts as a safety net in case of unexpected failures during the process.

- Post-Migration Verification: After switching over to the new database, we perform a thorough validation process to ensure that no data was lost or corrupted during the migration. This often involves comparing key metrics and performing random data checks.

The specific techniques used depend on the database platform, the migration strategy, and the application’s criticality.
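One common way to implement the checksum step is to hash each row, keyed by its primary key, on both sides and compare the digests. Any keys whose hashes differ identify the rows to investigate. The sketch below uses `sqlite3` and `hashlib` so it runs anywhere. The `customers` table is hypothetical. On large production tables this is usually done per key range, and only on a consistent snapshot (after writes are paused or replication has caught up).

```python
import hashlib
import sqlite3


def row_hashes(conn, table, key="id"):
    """Return {primary_key: sha256 of the row} for every row in the table."""
    # Table and key names are interpolated, so pass only trusted identifiers.
    cur = conn.execute(f"SELECT * FROM {table}")
    key_index = [d[0] for d in cur.description].index(key)
    # repr() of the row tuple assumes both sides return identical Python types.
    return {row[key_index]: hashlib.sha256(repr(row).encode("utf-8")).hexdigest() for row in cur}


def compare_tables(source, target, table, key="id"):
    """Return the sorted keys whose rows differ or exist on only one side."""
    src, tgt = row_hashes(source, table, key), row_hashes(target, table, key)
    return sorted(k for k in src.keys() | tgt.keys() if src.get(k) != tgt.get(k))
```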

Q 21. Describe your experience with using scripting languages (e.g., Python, Bash) to automate Shifting Under Load tasks.

Scripting languages like Python and Bash are invaluable for automating Shifting Under Load tasks. Manual execution of these tasks is tedious, error-prone, and time-consuming. Automation improves efficiency, reduces human error, and allows for repeatability.

Python is particularly well-suited for tasks involving complex data manipulation, database interactions, and API integrations. I often use Python to create scripts for:

- Data Validation: Comparing data checksums or performing other data integrity checks.

- Database Replication Monitoring: Tracking replication lag and alerting on anomalies.

- Automated Cutover: Orchestrating the switch-over process between databases using API calls and database commands.

Bash is ideal for automating system-level tasks, such as stopping and starting database services, managing network configurations, and triggering other scripts.

Example Python Snippet (Illustrative):

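```python
from psycopg2 import sql  # Example using PostgreSQL


def row_count(cur, table):
    # sql.Identifier quotes the table name safely instead of using string formatting.
    cur.execute(sql.SQL("SELECT COUNT(*) FROM {}").format(sql.Identifier(table)))
    return cur.fetchone()[0]


def verify_row_counts(source_cur, target_cur, table="mytable"):
    # Compare only once writes are paused or replication has caught up;
    # under live traffic the two counts can legitimately differ.
    count_source = row_count(source_cur, table)
    count_target = row_count(target_cur, table)
    if count_source != count_target:
        raise RuntimeError(f"Data mismatch detected in {table}: "
                           f"source={count_source}, target={count_target}")
    print("Data migration successful!")


# ...Database connection details...
# ...perform database migration...
# verify_row_counts(source_conn.cursor(), target_conn.cursor())  # hypothetical connections
```

By automating these tasks, I can ensure consistency, reduce errors, and significantly improve the overall efficiency and reliability of Shifting Under Load operations.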

Q 22. What are some best practices for designing database systems that are easily shifted under load?

Designing databases for seamless Shifting Under Load (SUL) requires a proactive approach focusing on scalability, availability, and minimal disruption. Think of it like smoothly increasing the number of lanes on a highway during rush hour – you don’t want traffic to come to a standstill.

- Horizontal Scaling: Design your database for horizontal scalability. This means adding more database servers to handle increased load, rather than relying on a single, powerful server. This can be achieved using technologies like sharding or replication.

- Read Replicas: Implement read replicas to distribute read operations across multiple servers, freeing up the primary server to handle write operations. This is akin to having separate lanes for entering and exiting a highway.

- Connection Pooling: Use connection pooling to efficiently manage database connections. This avoids the overhead of constantly establishing and closing connections, improving performance under load.

- Caching: Leverage caching mechanisms to store frequently accessed data in memory. This reduces the load on the database server and speeds up response times. Think of this as a dedicated fast lane for frequently accessed information.

- Asynchronous Processing: Handle non-critical operations asynchronously using message queues. This prevents slow operations from blocking the main database threads.

- Database Choice: Select a database system designed for scalability. NoSQL databases often excel in this area, while some relational databases offer robust scaling capabilities.

For example, imagine an e-commerce site experiencing a sudden surge in traffic during a sale. A well-designed database will seamlessly handle this influx by distributing the load across multiple servers, ensuring a smooth shopping experience for all users.
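Connection pooling is normally provided by a driver, a framework, or a proxy such as PgBouncer. The core idea fits in a few lines: open a fixed number of connections up front and hand them out and back through a thread-safe queue. The `ConnectionPool` class below is a hypothetical sketch using `sqlite3` and `queue.Queue`, not a production-ready pool. It does not, for example, validate or replace broken connections.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """A fixed-size pool: connections are created once and reused."""

    def __init__(self, factory, size=5):
        self._pool = queue.Queue(maxsize=size)
        for _ in range(size):
            self._pool.put(factory())

    @contextmanager
    def connection(self, timeout=5.0):
        # Blocks until a connection is free; raises queue.Empty after `timeout` seconds.
        conn = self._pool.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._pool.put(conn)  # always return the connection, even on errors


if __name__ == "__main__":
    # check_same_thread=False lets a pooled SQLite connection be used from other threads.
    pool = ConnectionPool(lambda: sqlite3.connect(":memory:", check_same_thread=False), size=2)
    with pool.connection() as conn:
        print(conn.execute("SELECT 1").fetchone())  # (1,)
```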

Q 23. How do you handle schema changes during a Shifting Under Load operation?

Handling schema changes during SUL is a critical aspect. The key is minimizing downtime and data inconsistency. It’s like renovating a highway while keeping traffic flowing smoothly.

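- Blue/Green Deployments: Deploy schema changes to a separate (green) environment. Once validated, switch traffic to the new environment. This keeps downtime close to zero, provided the green database is kept in sync with writes that continue to reach blue until the switch.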

- Canary Deployments: Gradually roll out schema changes to a small subset of users. Monitor performance and revert if issues arise. It’s like testing a new highway lane before opening it to all traffic.

- Zero-Downtime Migrations: Use database features (if available) that allow for schema changes without locking tables or interrupting operations. Some databases offer online schema alterations.

- Data Transformation Scripts: Write scripts to transform existing data to conform to the new schema. This should be done carefully to avoid data loss or corruption.

- Versioning: Maintain different database schema versions to enable rollbacks if needed. This is essential for disaster recovery.

Consider using tools like Liquibase or Flyway to automate the schema migration process and track changes effectively. A robust rollback plan is crucial, just like having a detour plan for highway construction.
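Data transformation scripts are usually run as small, resumable batches rather than one large UPDATE, so that each transaction holds locks only briefly while live traffic continues. The sketch below shows that pattern with `sqlite3`. A new, nullable `email_lower` column (hypothetical) is added, then filled in batches of rows that still need it. A real online schema change also needs the application to populate the new column for newly written rows, which this sketch does not show.

```python
import sqlite3


def backfill_email_lower(conn, batch_size=1000):
    """Populate users.email_lower in small transactions; safe to stop and re-run."""
    total = 0
    while True:
        with conn:  # one short transaction per batch
            cur = conn.execute(
                """
                UPDATE users SET email_lower = lower(email)
                WHERE id IN (
                    SELECT id FROM users
                    WHERE email_lower IS NULL AND email IS NOT NULL
                    LIMIT ?
                )
                """,
                (batch_size,),
            )
        if cur.rowcount == 0:
            return total
        total += cur.rowcount


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    with conn:
        conn.executemany("INSERT INTO users (email) VALUES (?)",
                         [(f"User{i}@Example.com",) for i in range(2500)])
    # Add the new column as nullable so existing reads and writes keep working.
    conn.execute("ALTER TABLE users ADD COLUMN email_lower TEXT")
    print(backfill_email_lower(conn))  # 2500
```

Selecting only rows where the new column is still NULL is what makes the script resumable. The `email IS NOT NULL` filter prevents an endless loop on rows whose source value is itself NULL.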

Q 24. Explain your experience with different load testing tools.

My experience encompasses a variety of load testing tools, each with its strengths and weaknesses. Choosing the right tool depends on the specific needs of the project and the complexity of the database system.

- JMeter: A powerful open-source tool for simulating heavy load on web applications and databases. I’ve used it to test the performance of complex applications under extreme stress, identifying bottlenecks and performance issues.

- Gatling: Another excellent open-source tool, Gatling excels in creating and executing highly scalable load tests. Its Scala-based scripting provides flexibility and efficiency.

- k6: A modern open-source load testing tool focusing on developer experience and cloud integration. It’s particularly useful for continuous integration and continuous delivery workflows.

- LoadRunner (commercial): A comprehensive commercial tool offering advanced features such as broad protocol support and detailed performance analysis. I've employed LoadRunner for large-scale projects requiring in-depth performance analysis.

In each case, the success of load testing hinges on accurate representation of real-world user behavior and thorough analysis of results to pinpoint areas for optimization.

Q 25. How do you manage user sessions during a Shifting Under Load operation?

Managing user sessions during SUL requires a strategy that balances user experience with system stability. Imagine smoothly redirecting traffic during a highway closure.

- Sticky Sessions: Employ sticky sessions (session affinity) to ensure that requests from a given user are routed to the same server. This preserves session state and avoids user disruption. However, it can limit scalability, and sessions pinned to a server are lost when that server is taken offline unless it is drained first.

- Session Replication: Replicate user sessions across multiple servers to provide high availability. This requires a robust session management system.

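- Stateless Applications: Design your application to be stateless where possible, keeping no session state on the application servers themselves (for example, by storing it client-side or in a shared store). This lets any server handle any request and simplifies SUL operations.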

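- Session Databases: Store session data in a shared, highly available store such as Redis with replication. Memcached is also widely used for sessions, but it does not replicate data, so sessions held on a failed node are lost. A shared store allows easy access to session data from any server and lets it scale independently of the application tier.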

- Session Timeouts: Use appropriate session timeouts to manage resources and prevent abandoned sessions from consuming server resources.

The choice of approach depends on the application architecture and scalability requirements. The key is to provide a seamless transition for users during the SUL process.
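To illustrate the session-database and timeout points together, the Python sketch below moves session state out of the application servers into one shared store with an inactivity timeout. `SessionStore` and `AppServer` are hypothetical classes, and an in-memory dict stands in for a real networked store such as Redis, so this shows the pattern rather than a production session layer.

```python
import time
import uuid
from typing import Callable, Dict, Optional, Tuple


class SessionStore:
    """Hypothetical shared session store standing in for something like Redis.

    Sessions expire after `ttl_seconds` without activity (sliding expiration).
    """

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._data: Dict[str, Tuple[float, dict]] = {}

    def create(self, payload: dict) -> str:
        session_id = uuid.uuid4().hex
        self._data[session_id] = (self.clock() + self.ttl, dict(payload))
        return session_id

    def get(self, session_id: str) -> Optional[dict]:
        entry = self._data.get(session_id)
        if entry is None:
            return None
        expires_at, payload = entry
        now = self.clock()
        if now >= expires_at:
            del self._data[session_id]  # evict lazily on access
            return None
        self._data[session_id] = (now + self.ttl, payload)  # extend on activity
        return payload


class AppServer:
    """Hypothetical app server that keeps no session state of its own."""

    def __init__(self, name: str, store: SessionStore):
        self.name = name
        self.store = store

    def handle(self, session_id: str) -> str:
        session = self.store.get(session_id)
        if session is None:
            return f"{self.name}: please log in"
        return f"{self.name}: hello {session['user']}"


if __name__ == "__main__":
    store = SessionStore(ttl_seconds=1800)
    sid = store.create({"user": "alice"})
    print(AppServer("node-a", store).handle(sid))  # node-a: hello alice
    # node-a is drained for an upgrade; node-b serves the same session.
    print(AppServer("node-b", store).handle(sid))  # node-b: hello alice
```

Since neither server holds the session, either one can be taken out of rotation mid-session without logging the user out.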

Q 26. What are some common security considerations related to Shifting Under Load?

Security is paramount during SUL operations. Failing to secure the process during load shifts could compromise sensitive data and expose vulnerabilities.

- Secure Configuration: Ensure all database servers and applications are properly configured with strong security measures, including encryption, access control, and regular security audits.

- Authentication and Authorization: Implement robust authentication and authorization mechanisms to protect access to sensitive data. This is especially important when dealing with multiple servers.

- Network Security: Secure all network connections between servers using encryption and firewalls to prevent unauthorized access.

- Data Encryption: Encrypt sensitive data both at rest and in transit to protect against unauthorized access.

- Monitoring and Alerting: Implement comprehensive monitoring and alerting systems to detect and respond to security incidents promptly.

Imagine a highway under construction – security measures are needed to protect both the workers and the traveling public. Similarly, SUL operations require robust security protocols to protect against data breaches and other security risks.
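On the network-security point, the client side of an encrypted connection should verify the server’s certificate and hostname and refuse outdated protocol versions. A minimal sketch with Python’s standard ssl module follows; the function name and CA bundle path are placeholders, and how a database driver accepts an `SSLContext` differs between drivers, so check your driver’s documentation.

```python
import ssl
from typing import Optional


def make_db_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a client-side TLS context for server-to-server database traffic.

    `ca_file` is a hypothetical path to the CA bundle that signed your
    database servers' certificates; None uses the system trust store.
    """
    context = ssl.create_default_context(cafile=ca_file)
    # Refuse legacy protocol versions (SSLv3, TLS 1.0, TLS 1.1).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # Already the defaults for create_default_context(); set explicitly
    # to make the intent clear: require a valid cert matching the host name.
    context.verify_mode = ssl.CERT_REQUIRED
    context.check_hostname = True
    return context
```

A raw socket could then be wrapped with `context.wrap_socket(sock, server_hostname=...)`, passing the database host’s name so the hostname check can run.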

Q 27. Describe your experience with cloud-based database solutions and their ability to handle Shifting Under Load.

Cloud-based database solutions are designed for scalability and high availability, which makes them well suited to SUL operations. They often provide managed services that simplify the process. Think of it like having a team of experts handle highway maintenance and expansion.

- AWS RDS/Aurora: Amazon RDS and Aurora offer several database engine options with built-in scalability and high-availability features, such as Multi-AZ deployments with automatic failover and, for Aurora, automatic scaling of read replicas.

- Azure SQL Database: Microsoft’s Azure SQL Database offers similar features, including elastic pools for scaling and auto-failover groups for high availability.

- Google Cloud SQL: Google’s Cloud SQL provides managed MySQL, PostgreSQL, and SQL Server instances, with high-availability configurations and read replicas to meet changing demands.

- Serverless Databases: Serverless database solutions like Amazon DynamoDB (in on-demand capacity mode) or Google Cloud Firestore scale automatically to handle fluctuating loads, requiring minimal management.

Cloud providers often provide tools and services that simplify SUL, such as automated scaling, read replicas, and monitoring dashboards. They handle the underlying infrastructure, allowing developers to focus on application logic.
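Managed failover usually means existing connections are dropped and clients must reconnect, so application code generally needs to retry transient errors. The Python sketch below retries with capped exponential backoff and full jitter; `TransientDBError` is a hypothetical exception type, because which driver errors are safe to retry depends on your driver and on whether the operation is idempotent.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientDBError(Exception):
    """Hypothetical error for failures that are safe to retry, such as a
    connection dropped while the database fails over."""


def with_retries(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying TransientDBError with capped exponential
    backoff and full jitter. Other exceptions propagate immediately."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return operation()
        except TransientDBError:
            attempt += 1
            if attempt >= max_attempts:
                raise  # give up and surface the last error
            # Ceiling doubles each attempt: 0.1 s, 0.2 s, 0.4 s, ... up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter: a random wait below the ceiling keeps many clients
            # that lost their connections at once from retrying in lockstep.
            sleep(random.uniform(0, ceiling))
```

The `sleep` parameter exists only so the backoff can be tested without real waiting; in production the default `time.sleep` is used.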

Q 28. How do you measure the success of a Shifting Under Load operation?

Measuring the success of a SUL operation requires a multi-faceted approach, looking beyond simple uptime. It’s like evaluating a highway expansion – just being open isn’t enough; it needs to be efficient and safe.

- Downtime: Track any downtime during the SUL operation. Ideally, there should be zero downtime.

- Performance Metrics: Monitor key performance indicators (KPIs) such as response time, throughput, and error rates before, during, and after the SUL operation. Confirm that they stay within agreed thresholds and do not degrade.

- Resource Utilization: Analyze resource utilization (CPU, memory, network) on all database servers to identify potential bottlenecks.

- User Experience: Gather user feedback to assess the impact of the SUL operation on user experience. Was there any noticeable disruption?

- Data Consistency: Verify data consistency before and after the SUL operation, for example by comparing row counts and checksums between the source and target. Data loss or corruption is unacceptable.

By carefully tracking these metrics and analyzing the results, we can identify areas for improvement and refine the SUL process for future deployments.
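For the data-consistency check, one simple approach is to compare a row count and a checksum of each table on the source and the target. The Python sketch below uses the built-in sqlite3 and hashlib modules and assumes both sides expose the same columns in the same order with the same value types; comparing across different database engines would require normalizing values first. The function names are hypothetical.

```python
import hashlib
import sqlite3
from typing import Tuple


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> Tuple[int, str]:
    """Return (row_count, sha256_hex) for `table`, hashing rows in `key` order.

    Ordering by a key makes the digest independent of physical row order.
    `table` and `key` are formatted into the SQL, so they must be trusted
    identifiers, never user input.
    """
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8") + b"\n")
        count += 1
    return count, digest.hexdigest()


def tables_match(source: sqlite3.Connection, target: sqlite3.Connection,
                 table: str, key: str) -> bool:
    """True if both databases hold the same rows for `table`."""
    return table_fingerprint(source, table, key) == table_fingerprint(target, table, key)
```

On large tables, the same idea is usually applied per key range so that a mismatch can be narrowed down without rehashing everything.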

Key Topics to Learn for Shifting Under Load Interview

- Understanding Load Balancing Algorithms: Explore different load balancing techniques (round-robin, least connections, weighted round-robin) and their implications on system performance and resilience under varying loads.

- Practical Application: Microservices Architecture: Understand how load balancing is crucial in microservices environments, ensuring high availability and efficient resource utilization across multiple services.

- Capacity Planning and Scaling Strategies: Learn how to estimate resource needs under different load conditions and apply scaling strategies (vertical, horizontal) to handle peak demands effectively.

- Monitoring and Performance Tuning: Discuss techniques for monitoring system performance under load, identifying bottlenecks, and optimizing resource allocation for improved efficiency.

- High Availability and Disaster Recovery: Explore strategies to ensure system availability during periods of high load or unexpected failures, including redundancy and failover mechanisms.

- Database Performance Under Load: Understand how database systems handle high transaction volumes and explore techniques for optimizing database performance to avoid bottlenecks.

- Security Considerations: Discuss security best practices when implementing load balancing and scaling solutions to protect against potential vulnerabilities.
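The three load balancing algorithms named above can each be sketched in a few lines. The Python below is illustrative only: backends are plain strings, there are no health checks, and the weighted version follows the "smooth" weighted round-robin approach (similar to the one nginx uses for upstream weights), which interleaves picks instead of sending a server its whole share in a burst.

```python
import itertools
from typing import Dict, List


class RoundRobin:
    """Hand out backends in a fixed rotation."""

    def __init__(self, backends: List[str]):
        self._cycle = itertools.cycle(backends)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each request to the backend with the fewest in-flight requests."""

    def __init__(self, backends: List[str]):
        self.active: Dict[str, int] = {b: 0 for b in backends}

    def pick(self) -> str:
        backend = min(self.active, key=self.active.get)  # ties go to the first listed
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        """Call when a request to `backend` finishes."""
        self.active[backend] -= 1


class SmoothWeightedRoundRobin:
    """Weighted round-robin that spreads each backend's picks out evenly.

    Each pick adds every backend's weight to its running score, chooses the
    highest score, then subtracts the total weight from the chosen backend.
    """

    def __init__(self, weights: Dict[str, int]):
        self.weights = dict(weights)
        self.total = sum(weights.values())
        self.current = {b: 0 for b in weights}

    def pick(self) -> str:
        for backend, weight in self.weights.items():
            self.current[backend] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen
```

With weights `{"a": 5, "b": 1, "c": 1}`, seven picks from `SmoothWeightedRoundRobin` give a, a, b, a, c, a, a: backend "a" still gets five of every seven requests, but never more than two in a row.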

Next Steps

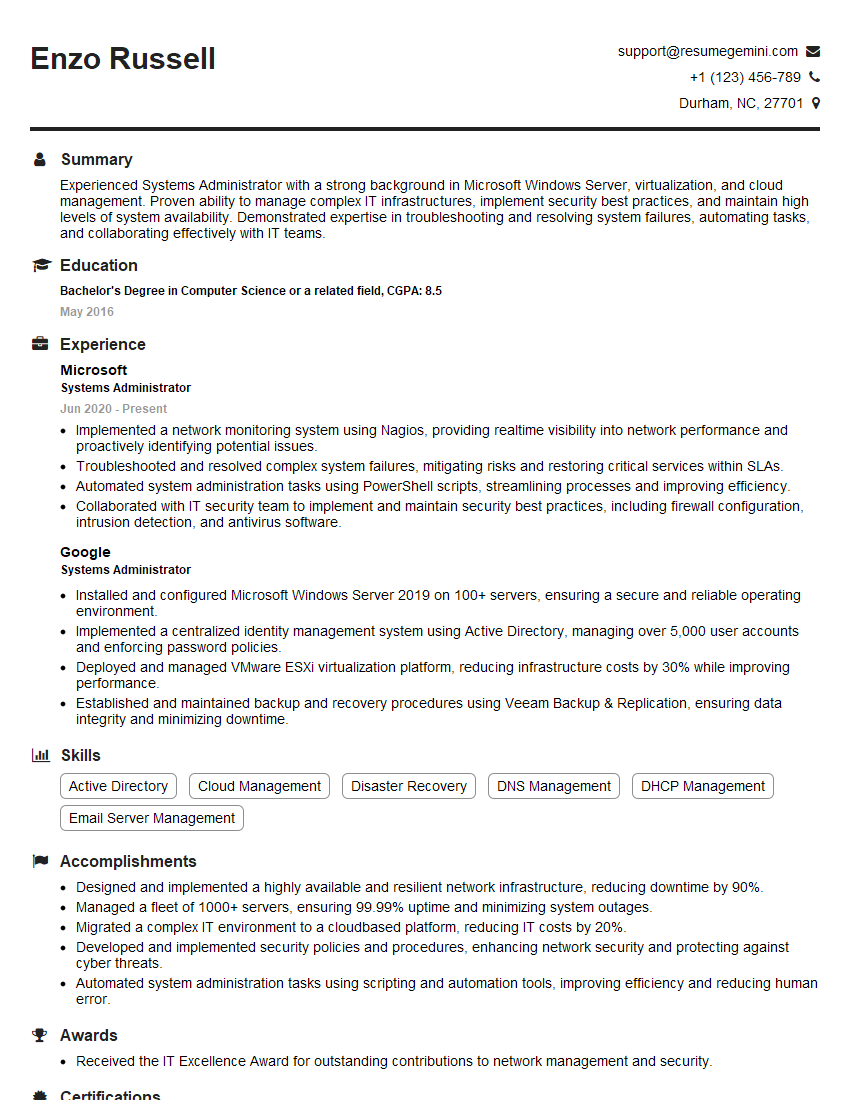

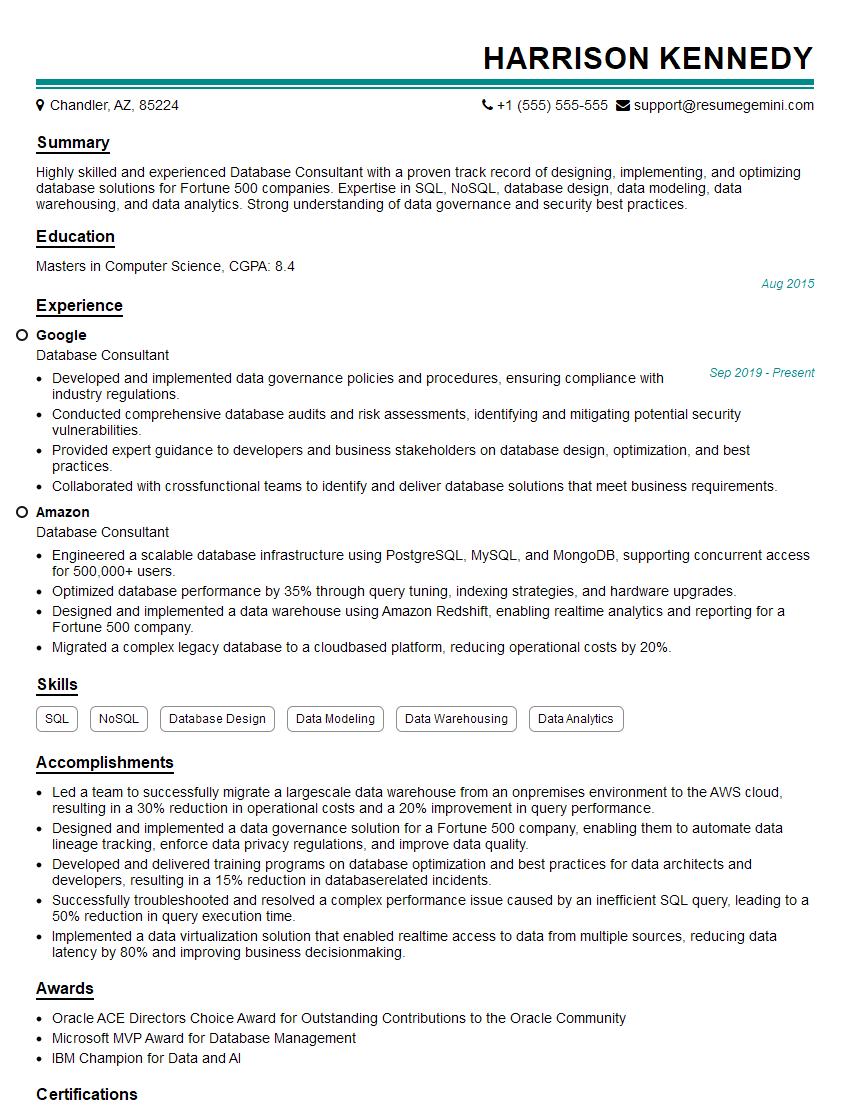

Mastering concepts related to Shifting Under Load is crucial for career advancement in today’s demanding IT landscape. Demonstrating proficiency in these areas significantly increases your chances of securing high-impact roles in system architecture, cloud engineering, and DevOps. To boost your job prospects, create an ATS-friendly resume that highlights your relevant skills and experience effectively. ResumeGemini is a trusted resource that can help you build a professional resume tailored to the specific requirements of your target roles. We provide examples of resumes tailored to highlight Shifting Under Load expertise to help you get started.
