Unlock your full potential by mastering the most common Signal Processing and Modulation interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Signal Processing and Modulation Interviews

Q 1. Explain the Nyquist-Shannon sampling theorem and its implications.

The Nyquist-Shannon sampling theorem is a fundamental principle in signal processing that specifies the minimum sampling rate required to reconstruct a continuous-time signal exactly from its discrete-time samples. It states that a signal band-limited to a maximum frequency fmax can be perfectly recovered if it is sampled at a rate greater than twice that frequency, i.e., fs > 2fmax. The threshold 2fmax is known as the Nyquist rate. Sampling at exactly 2fmax is not sufficient in general: a sinusoid at fmax sampled exactly at its zero crossings produces all-zero samples.

Implications: Failing to meet the Nyquist rate leads to a phenomenon called aliasing, where frequency components above fs/2 ‘fold’ into lower frequencies, corrupting the sampled representation. Imagine filming a fast-spinning wheel with a camera whose frame rate is too low; you don’t see the actual speed but an apparently slower, or even reversed, rotation. This is aliasing. Therefore, an anti-aliasing (low-pass) filter is needed before sampling to remove frequencies above fs/2, so that the remaining signal can be reconstructed accurately. This theorem has far-reaching consequences in digital audio, image processing, and telecommunications, where accurate signal representation is paramount.

Example: If you’re sampling an audio signal with a maximum frequency of 20 kHz (roughly the upper limit of human hearing), you need a sampling rate above 40 kHz. CD-quality audio uses 44.1 kHz, which exceeds the Nyquist rate and leaves the band from 20 kHz to 22.05 kHz (fs/2) for the anti-aliasing filter’s transition from passband to stopband.
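To make the folding concrete, here is a minimal Python sketch. `alias_frequency` is a hypothetical helper written for this example (not a library function); it assumes an ideal sampler with no anti-aliasing filter and cross-checks its answer against NumPy’s FFT for a 30 kHz tone sampled at the CD rate.

```python
import numpy as np

def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Apparent frequency (0..fs/2) of a real tone at f_hz after sampling at fs_hz."""
    # Subtract the nearest multiple of fs to fold f into [-fs/2, fs/2],
    # then take the magnitude (a real tone at -f looks the same as one at +f).
    return abs(f_hz - fs_hz * round(f_hz / fs_hz))

fs = 44_100                                   # CD sampling rate, Hz
n = np.arange(fs)                             # one second of samples -> 1 Hz FFT bins
tone = np.cos(2 * np.pi * 30_000 * n / fs)    # 30 kHz tone, above fs/2 = 22.05 kHz

spectrum = np.abs(np.fft.rfft(tone))
freqs = np.fft.rfftfreq(len(tone), d=1 / fs)
measured = freqs[np.argmax(spectrum)]         # strongest frequency actually seen

print(alias_frequency(30_000, fs), measured)
```

Both the formula and the FFT place the 30 kHz tone at 14.1 kHz, a frequency that was never in the original signal and that cannot be told apart from a genuine 14.1 kHz tone once the samples are taken.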

Q 2. Describe different types of modulation techniques (AM, FM, PM, ASK, FSK, PSK, QAM) and their applications.

Modulation is the process of varying one or more properties of a periodic waveform, called the carrier signal, with a modulating signal that contains the information to be transmitted. Several techniques exist, each with unique characteristics and applications:

- Amplitude Modulation (AM): Varies the amplitude of the carrier signal proportionally to the instantaneous amplitude of the modulating signal. Used extensively in AM radio broadcasting due to its simplicity and long range, though susceptible to noise and interference.

- Frequency Modulation (FM): Varies the frequency of the carrier signal proportionally to the instantaneous amplitude of the modulating signal. Offers better noise immunity than AM and is widely used in FM radio broadcasting and some data transmission systems.

- Phase Modulation (PM): Varies the phase of the carrier signal proportionally to the instantaneous amplitude of the modulating signal. Similar noise immunity to FM but with different spectral characteristics.

- Amplitude Shift Keying (ASK): A digital modulation technique where the amplitude of the carrier signal represents the digital data; a high amplitude represents a ‘1’ and a low amplitude a ‘0’. Simple but susceptible to noise.

- Frequency Shift Keying (FSK): A digital modulation technique where the frequency of the carrier signal represents the digital data; different frequencies represent ‘0’ and ‘1’. More robust to noise than ASK.

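- Phase Shift Keying (PSK): A digital modulation technique where the phase of the carrier signal represents the digital data; each distinct phase encodes a group of bits. Variants such as BPSK (2 phases, 1 bit per symbol) and QPSK (4 phases, 2 bits per symbol) carry more bits per symbol as the number of phases grows, at the cost of a smaller noise margin and greater receiver complexity.

- Quadrature Amplitude Modulation (QAM): A digital modulation technique that varies both the amplitude and the phase of the carrier, so each symbol can carry more bits (e.g., 16-QAM carries 4 bits per symbol). For the same number of constellation points, QAM keeps the points farther apart than PSK, which is why it is preferred for high data rates. Widely used in cable TV and DSL technologies.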

The choice of modulation technique depends on factors like bandwidth availability, power limitations, noise levels, and the required data rate. For example, QAM is favored for high-speed data transmission in DSL, while FM is chosen for its noise immunity in broadcasting.
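The three analog schemes can be sketched in a few lines of NumPy. This is an illustration under assumed values (10 kHz carrier, a single 500 Hz message tone, and arbitrarily chosen modulation constants `m`, `kp`, `kf`), not a production modulator. It shows that AM changes the envelope, while PM and FM keep a constant envelope and move the phase; for FM the phase is the running integral of the message, approximated here by a cumulative sum.

```python
import numpy as np

fs = 100_000                  # simulation sample rate, Hz
n = np.arange(1_000)          # 10 ms of samples
t = n / fs
fc = 10_000                   # carrier frequency, Hz (assumed)
f_msg = 500                   # message tone, Hz (assumed)
message = np.cos(2 * np.pi * f_msg * t)

# AM: carrier amplitude follows the message (m < 1 avoids overmodulation)
m = 0.5
am = (1 + m * message) * np.cos(2 * np.pi * fc * t)

# PM: carrier phase follows the message
kp = np.pi / 2                # peak phase deviation, rad per unit amplitude
pm = np.cos(2 * np.pi * fc * t + kp * message)

# FM: carrier frequency follows the message, so the phase is the integral
# of the message (approximated by a cumulative sum)
kf = 2_000                    # peak frequency deviation, Hz per unit amplitude
fm_phase = 2 * np.pi * kf * np.cumsum(message) / fs
fm = np.cos(2 * np.pi * fc * t + fm_phase)

# Instantaneous frequency deviation recovered from the FM phase
inst_dev_hz = np.diff(fm_phase) * fs / (2 * np.pi)
print(np.max(np.abs(am)), np.max(inst_dev_hz))
```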

Q 3. What are the advantages and disadvantages of digital vs. analog signal processing?

Both analog and digital signal processing have their strengths and weaknesses:

| Feature | Analog Signal Processing | Digital Signal Processing |
|---|---|---|
| Implementation | Uses electronic circuits such as op-amps and RC filters. | Uses algorithms running on digital hardware such as DSP chips. |
| Precision | Limited by component tolerances, drift, and noise. | Set by the word length (number of bits); results are repeatable, and quantization adds only a small, predictable error. |
| Flexibility | Less flexible; requires hardware redesign for changes. | Highly flexible; changes can be implemented via software updates. |
| Cost | Can be cost-effective for simple applications. | Initial cost can be high, but becomes cost-effective for complex tasks. |
| Noise | Susceptible to noise and distortion. | Less susceptible to noise and distortion if implemented correctly. |
| Storage | Requires analog storage media (tapes, etc.). | Easily stored in digital format. |

In summary, digital signal processing offers superior flexibility, precision, and noise immunity but comes with a higher initial cost. Analog signal processing is often simpler and cheaper for basic applications but lacks the versatility and precision of its digital counterpart.

Q 4. Explain the concept of Fourier Transform and its applications in signal processing.

The Fourier Transform is a mathematical tool that decomposes a signal into its constituent frequencies. It transforms a signal from the time domain (where the signal is represented as a function of time) to the frequency domain (where the signal is represented as a function of frequency). This allows us to see which frequencies are present in the signal and their relative amplitudes.

Applications:

- Spectral Analysis: Identifying the frequency components of a signal, crucial in audio analysis, image processing, and vibration analysis.

- Signal Filtering: Designing filters to remove unwanted frequencies from a signal. By transforming the signal to the frequency domain, we can easily apply filtering operations and then transform back to the time domain.

- Signal Compression: Perceptual audio codecs such as MP3 use Fourier-related transforms (MP3 combines a filter bank with the modified discrete cosine transform) to identify and remove perceptually irrelevant frequency components, reducing file size.

- Image Processing: Used extensively in image enhancement, filtering, and compression.

- Communications Systems: Analyzing signals in communication systems to identify channels, interference, and modulation schemes.

The Discrete Fourier Transform (DFT) is a crucial variant used in digital signal processing, enabling computational analysis of discrete-time signals. The Fast Fourier Transform (FFT) is a highly efficient algorithm for computing the DFT.
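A minimal spectral-analysis sketch with NumPy’s FFT, assuming a synthetic two-tone signal (50 Hz and 120 Hz, chosen for illustration). One second of data at 1 kHz gives 1 Hz bin spacing, so both tones fall exactly on FFT bins; real signals usually need a window to limit spectral leakage.

```python
import numpy as np

fs = 1_000                                   # sample rate, Hz
n = np.arange(fs)                            # 1 s of data -> 1 Hz frequency resolution
x = 1.0 * np.sin(2 * np.pi * 50 * n / fs) + 0.5 * np.sin(2 * np.pi * 120 * n / fs)

X = np.fft.rfft(x)                           # FFT of a real signal (non-negative freqs only)
freqs = np.fft.rfftfreq(len(x), d=1 / fs)    # frequency (Hz) of each bin
amplitude = 2 * np.abs(X) / len(x)           # single-sided amplitude spectrum

peaks = np.sort(freqs[np.argsort(amplitude)[-2:]])   # two strongest bins
print(peaks, amplitude[50], amplitude[120])
```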

Q 5. How do you handle noise in a signal processing system?

Handling noise is a critical part of signal processing system design, and the right strategy depends on the nature and characteristics of the noise.

- Filtering: Using low-pass, high-pass, band-pass, or band-stop filters to attenuate noise within specific frequency ranges. The filter design depends on the noise’s frequency characteristics.

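- Averaging: Averaging neighboring samples (a moving average) or oversampling and averaging groups of samples. Random noise that is uncorrelated from sample to sample tends to cancel, while the slowly varying signal is preserved.

- Signal Averaging (Ensemble Averaging): Useful when dealing with repetitive signals buried in noise. Averaging N time-aligned repetitions of the signal leaves the signal unchanged while reducing the power of uncorrelated noise by a factor of N, improving the signal-to-noise ratio by the same factor (the noise amplitude drops by √N).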

- Adaptive Filtering: Filters that adjust their parameters dynamically to optimize noise reduction based on changing noise conditions. Useful in scenarios with non-stationary noise.

- Wavelet Transform: A time-frequency analysis tool capable of effectively denoising signals by decomposing them into different frequency components and removing noise selectively in the wavelet domain.

- Median Filtering: A non-linear filter that replaces each sample with the median value of its neighbors. Effective at reducing impulse noise (spikes).

The choice of noise-reduction technique depends on the type of noise present and the application’s specific requirements. Often, a combination of methods is employed to achieve the best results.
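Two of the techniques above, median filtering and ensemble averaging, are easy to compare on synthetic data. This sketch assumes a clean sinusoid with isolated spikes every 50 samples and, separately, 100 repetitions corrupted by unit-variance white Gaussian noise; all values are illustrative. It uses SciPy’s `medfilt` and contrasts it with a moving average, which smears spikes instead of removing them.

```python
import numpy as np
from scipy.signal import medfilt

rng = np.random.default_rng(0)
n = np.arange(500)
clean = np.sin(2 * np.pi * n / 100)

# Impulse noise: isolated +5 spikes every 50 samples
spiky = clean.copy()
spiky[10::50] += 5.0

median_out = medfilt(spiky, kernel_size=5)                 # non-linear: rejects spikes
moving_avg = np.convolve(spiky, np.ones(5) / 5, mode="same")  # linear: spreads spikes

median_err = np.max(np.abs(median_out - clean))
moving_avg_err = np.max(np.abs(moving_avg - clean))

# Ensemble averaging: M noisy, time-aligned repetitions of the same waveform
M = 100
trials = clean + rng.normal(0.0, 1.0, size=(M, len(n)))
ensemble = trials.mean(axis=0)
residual_std = np.std(ensemble - clean)   # expected near 1 / sqrt(M) = 0.1

print(median_err, moving_avg_err, residual_std)
```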

Q 6. Describe different types of filters (low-pass, high-pass, band-pass, band-stop) and their applications.

Filters are essential in signal processing for isolating or removing specific frequency components from a signal. They are categorized based on their frequency response:

- Low-pass Filter: Allows low-frequency components to pass through while attenuating high-frequency components. Used in audio to remove high-frequency hiss or in image processing to smooth images.

- High-pass Filter: Allows high-frequency components to pass through while attenuating low-frequency components. Used in audio to remove low-frequency rumble or in image processing to sharpen edges.

- Band-pass Filter: Allows a specific range of frequencies to pass through while attenuating frequencies outside that range. Used in communication systems to select a particular channel or in medical imaging to isolate specific frequency bands.

- Band-stop Filter (Notch Filter): Attenuates a specific range of frequencies while allowing frequencies outside that range to pass through. Used to remove interference at a specific frequency (e.g., power line hum in audio).

Filters can be implemented using analog circuits (e.g., RC circuits) or digital signal processing techniques (e.g., Finite Impulse Response (FIR) and Infinite Impulse Response (IIR) filters). The design of a filter involves choosing appropriate parameters to achieve the desired frequency response, considering factors like attenuation, transition band, and phase response.
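As a sketch of the four filter types, the snippet below designs digital Butterworth filters with SciPy and checks their gain at a few frequencies. The sample rate, filter order, and cutoff frequencies are assumptions chosen for illustration; Butterworth is just one common design family (Chebyshev, elliptic, or FIR designs would work too).

```python
import numpy as np
from scipy.signal import butter, sosfreqz

fs = 8_000.0   # sample rate, Hz (assumed)

# 4th-order Butterworth designs as second-order sections (band designs become 8th order)
designs = {
    "low-pass":  butter(4, 1_000, btype="lowpass", fs=fs, output="sos"),
    "high-pass": butter(4, 1_000, btype="highpass", fs=fs, output="sos"),
    "band-pass": butter(4, [800, 1_200], btype="bandpass", fs=fs, output="sos"),
    "band-stop": butter(4, [900, 1_100], btype="bandstop", fs=fs, output="sos"),
}

def gain_db(sos, freq_hz):
    """Magnitude response in dB at a single frequency (Hz)."""
    _, h = sosfreqz(sos, worN=[freq_hz], fs=fs)
    return 20 * np.log10(np.abs(h[0]))

for name, sos in designs.items():
    gains = [gain_db(sos, f) for f in (100, 1_000, 3_000)]
    print(name, ["%.1f dB" % g for g in gains])
```

Second-order sections are used instead of a single high-order polynomial because they are numerically more robust, which matters for the 8th-order band designs.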

Q 7. Explain the concept of convolution and its significance in signal processing.

Convolution is a mathematical operation that combines two signals to produce a third signal. In signal processing, it describes the effect of a linear time-invariant (LTI) system on an input signal. Intuitively, you flip one signal (the impulse response of the system) in time, slide it across the other (the input signal), and at each shift multiply the overlapping samples and sum the products to get one output sample.

Significance:

- System Analysis: Convolution determines the output of an LTI system given its impulse response and input signal. The impulse response fully characterizes the system’s behavior.

- Signal Filtering: Filtering can be viewed as a convolution operation. The filter’s impulse response determines how the input signal is modified.

- Image Processing: Convolution is fundamental in image processing operations like blurring, sharpening, and edge detection. The filter kernel (a small matrix) acts as the impulse response.

- Pattern Recognition: Convolution is used in pattern recognition systems to detect specific features in signals or images.

The discrete convolution of two sequences x[n] and h[n] is given by:

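$$y[n] = \sum_{k=-\infty}^{\infty} x[k]\,h[n-k]$$

where $y[n]$ is the resulting signal. In practice, the summation limits depend on the signal lengths: convolving sequences of lengths N and M gives N + M - 1 output samples. The Fast Fourier Transform (FFT) can significantly speed up the computation of convolution using the convolution theorem, which states that convolution in the time domain is equivalent to multiplication in the frequency domain. Because the DFT computes circular convolution, both sequences must first be zero-padded to at least N + M - 1 samples for the result to match linear convolution.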
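A minimal sketch of both routes for real-valued sequences: `convolve_direct` evaluates the sum literally, and `convolve_fft` uses the convolution theorem with zero-padding. Both helper names are our own; in practice you would call `np.convolve` or `scipy.signal.fftconvolve`.

```python
import numpy as np

def convolve_direct(x, h):
    """Linear convolution straight from the sum y[n] = sum_k x[k] * h[n - k]."""
    y = np.zeros(len(x) + len(h) - 1)
    for n in range(len(y)):
        for k in range(len(x)):
            if 0 <= n - k < len(h):          # h is zero outside its support
                y[n] += x[k] * h[n - k]
    return y

def convolve_fft(x, h):
    """Same result via the convolution theorem, zero-padded to avoid circular wrap."""
    n_out = len(x) + len(h) - 1
    spectrum = np.fft.rfft(x, n_out) * np.fft.rfft(h, n_out)
    return np.fft.irfft(spectrum, n_out)

x = np.array([1.0, 2.0, 3.0])
h = np.array([0.0, 1.0, 0.5])
print(convolve_direct(x, h))   # values 0, 1, 2.5, 4, 1.5
print(convolve_fft(x, h))      # same values, up to floating-point rounding
```

The direct form costs O(NM) operations, while the FFT route costs O(L log L) with L = N + M - 1, which is why long filters are usually applied in the frequency domain.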

Q 8. What are the differences between FIR and IIR filters?

Finite Impulse Response (FIR) and Infinite Impulse Response (IIR) filters are two fundamental types of digital filters, differing primarily in their impulse response and structure. An FIR filter’s impulse response is finite in length; it becomes exactly zero after a fixed number of samples. Its output depends only on the current and past input samples, which makes it inherently stable. An IIR filter, by contrast, has an impulse response that in theory never ends (for a stable filter it decays toward zero). Its output depends on both past inputs and past outputs, giving a recursive structure that can be more computationally efficient but may become unstable if not designed carefully.

- Stability: FIR filters are always stable; IIR filters can be unstable.

- Implementation: FIR filters are typically implemented using direct convolution, while IIR filters use recursive structures.

- Phase Response: FIR filters can be designed to have linear phase response (meaning no phase distortion), a crucial property for many applications. Achieving linear phase in IIR filters is more challenging.

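- Computational Complexity: To meet a given magnitude-response specification, an IIR filter usually needs a much lower order, and therefore far fewer multiplications per sample, than an FIR filter, which generally means lower latency and power consumption. The price is possible instability, greater sensitivity to coefficient quantization, and non-linear phase.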

Imagine a dripping faucet. An FIR filter is like catching each drop separately in a bucket – the process stops once the dripping stops. An IIR filter is like a leaky bucket – some water remains even after the dripping ends, impacting the next drops caught. The leaky bucket can overflow (instability) if the leak is too large, while the first bucket will always stay within its limits.
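The difference is easy to see by feeding a unit impulse through one filter of each kind. This sketch assumes a 4-tap moving average as the FIR example and a one-pole smoother y[n] = a·y[n-1] + (1 - a)·x[n] as the IIR example; `is_stable` is a hypothetical helper that checks whether all poles lie inside the unit circle.

```python
import numpy as np
from scipy.signal import lfilter

impulse = np.zeros(20)
impulse[0] = 1.0

# FIR: 4-tap moving average, y[n] = (x[n] + x[n-1] + x[n-2] + x[n-3]) / 4
b_fir = np.ones(4) / 4
h_fir = lfilter(b_fir, [1.0], impulse)

# IIR: one-pole smoother, y[n] = a*y[n-1] + (1 - a)*x[n]
a = 0.8
b_iir, a_iir = [1 - a], [1.0, -a]
h_iir = lfilter(b_iir, a_iir, impulse)

def is_stable(denominator):
    """A causal recursive filter is stable iff every pole lies inside the unit circle."""
    poles = np.roots(denominator)
    return bool(np.all(np.abs(poles) < 1))

print(h_fir[:6])           # four non-zero taps, then exactly zero
print(h_iir[:6])           # (1 - a) * a**n: decays but never reaches zero
print(is_stable(a_iir))    # single pole at z = 0.8

# A feedback coefficient above 1 puts the pole outside the unit circle: the output grows
a_bad = [1.0, -1.2]
h_bad = lfilter([1.0], a_bad, impulse)
```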

Q 9. How do you design a digital filter using the windowing method?

Designing a digital filter using the windowing method is a straightforward approach, especially for FIR filters. It involves several steps:

- Specify Filter Requirements: Define the desired filter characteristics, including the type (low-pass, high-pass, band-pass, band-stop), cutoff frequency(ies), passband ripple, and stopband attenuation.

- Ideal Impulse Response: Determine the ideal impulse response $h_{\text{ideal}}[n]$ corresponding to the desired frequency response. This is the inverse discrete-time Fourier transform (DTFT) of the desired response; for an ideal low-pass filter with cutoff $\omega_c$ it is the infinitely long sinc sequence $h_{\text{ideal}}[n] = \sin(\omega_c n)/(\pi n)$ (with value $\omega_c/\pi$ at $n = 0$), which is shifted to be centred at $n = (N-1)/2$ and truncated to the desired length $N$.

- Window Selection: Choose an appropriate window function (e.g., rectangular, Hamming, Hann, Blackman). The choice of window sets the trade-off between transition width (sharpness of the cutoff) and stopband attenuation. A rectangular window gives the narrowest transition but poor stopband attenuation (about 21 dB); windows such as Hamming (about 53 dB) or Blackman (about 74 dB) offer better attenuation at the cost of wider transitions.

- Windowing: Multiply the ideal impulse response by the selected window function: $h[n] = h_{\text{ideal}}[n]\,w[n]$, where $w[n]$ is the window function.

- Implementation: Implement the resulting finite-length impulse response $h[n]$ using direct convolution or, for long filters, FFT-based fast convolution.

Example: Suppose we want a low-pass FIR filter. We’d compute the ideal impulse response as the inverse DTFT of a rectangular frequency response (a sinc sequence), truncate it to the desired length, and multiply it by a Hamming window to reduce the sidelobes of the frequency response, i.e., the stopband ripple that plain truncation would leave.

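Illustrative code (Python):

```python
import numpy as np
from scipy.signal import firwin
# 51-tap Hamming-window low-pass; without fs, cutoff is relative to Nyquist (1.0 = fs/2)
h = firwin(numtaps=51, cutoff=0.2, window='hamming')
print(np.sum(h))  # DC gain: firwin normalizes it to 1 by default (scale=True)
```

The numtaps parameter sets the filter length (the number of coefficients, i.e., filter order + 1), cutoff specifies the cutoff frequency normalized to the Nyquist frequency (0.2 here means 0.2 × fs/2), and window selects the window function. The resulting h array holds the coefficients of the FIR filter.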
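The design steps can also be carried out by hand and compared with `firwin`. This sketch assumes the same 51-tap low-pass with a cutoff of 0.1 cycles/sample (0.2 relative to Nyquist); it builds the truncated sinc, applies a symmetric Hamming window, and normalizes for unit gain at DC. Matching `firwin`’s output reflects our reading of its documented defaults, not a guarantee about its internals.

```python
import numpy as np
from scipy.signal import firwin

numtaps = 51
fc = 0.1                                        # cutoff in cycles/sample (0.2 x Nyquist)
m = np.arange(numtaps) - (numtaps - 1) / 2      # centre the response at (N-1)/2

# Ideal low-pass impulse response sin(wc*m)/(pi*m) with wc = 2*pi*fc, truncated
h_ideal = 2 * fc * np.sinc(2 * fc * m)          # np.sinc(x) = sin(pi x)/(pi x)

# Windowing: multiply by a symmetric Hamming window
h = h_ideal * np.hamming(numtaps)

# Normalize so the gain at DC is exactly 1
h /= h.sum()

h_ref = firwin(numtaps, cutoff=2 * fc, window="hamming")
print(np.allclose(h, h_ref))
```

The coefficients come out symmetric about the centre tap, which is what gives this FIR filter its linear phase.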

Q 10. Explain the z-transform and its applications in digital signal processing.

The z-transform is a powerful mathematical tool that maps a discrete-time signal (a sequence of numbers) into a complex frequency domain. It’s analogous to the Laplace transform for continuous-time signals. The z-transform of a discrete-time signal x[n] is defined as:

$$X(z) = \sum_{n=-\infty}^{\infty} x[n]\,z^{-n}$$

where $z$ is a complex variable. The z-transform simplifies the analysis and design of discrete-time systems, particularly digital filters.

- System Analysis: The z-transform allows us to represent a linear time-invariant (LTI) system by its transfer function $H(z) = Y(z)/X(z)$, where $Y(z)$ and $X(z)$ are the z-transforms of the output and input signals, respectively. Evaluating $H(z)$ on the unit circle, $z = e^{j\omega}$, gives the system’s frequency response, and the locations of its poles reveal its stability.

- Filter Design: Designing digital filters often involves manipulating the transfer function $H(z)$ in the z-domain to achieve the desired frequency characteristics. For instance, we might specify a desired magnitude response and then use techniques like pole-zero placement to find the corresponding $H(z)$.

- Stability Determination: The stability of a digital filter is readily determined from the locations of the poles of its transfer function in the z-plane: a causal LTI system is stable if and only if all its poles lie strictly inside the unit circle ($|z| < 1$).

Think of the z-transform as a powerful lens that allows us to see the underlying structure and behavior of a discrete-time system from a different perspective, making it easier to analyze and design complex systems.
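To connect these ideas, the sketch below takes an arbitrarily chosen example, $H(z) = (1 + z^{-1})/(1 - 0.9z^{-1})$, finds its poles and zeros with SciPy’s `tf2zpk`, checks stability, and confirms that `freqz` is simply $H(z)$ evaluated on the unit circle.

```python
import numpy as np
from scipy.signal import freqz, tf2zpk

# Example transfer function, coefficients in powers of z^-1:
#   H(z) = (1 + z^-1) / (1 - 0.9 z^-1)
b = [1.0, 1.0]
a = [1.0, -0.9]

zeros, poles, gain = tf2zpk(b, a)
stable = bool(np.all(np.abs(poles) < 1))     # causal: all poles inside the unit circle

# Frequency response two ways: SciPy, and direct evaluation at z = e^{jw}
w, h = freqz(b, a, worN=512)
z = np.exp(1j * w)
h_direct = (b[0] + b[1] * z**-1) / (a[0] + a[1] * z**-1)

print(zeros, poles, stable)   # zero at z = -1, pole at z = 0.9
print(abs(h[0]))              # DC gain H(1) = 2 / 0.1
```

The pole close to z = 1 produces a large gain at low frequencies, and the zero at z = -1 forces the response to zero at the Nyquist frequency, so this example behaves as a low-pass filter.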

Q 11. What is aliasing and how can it be avoided?

Aliasing is a phenomenon that occurs when a continuous-time signal is sampled at a rate lower than twice its highest frequency component (see the Nyquist-Shannon sampling theorem). Frequencies above fs/2 ‘fold back’ and appear as lower frequencies in the sampled signal, causing distortion that cannot be removed after sampling. Imagine filming a spinning wheel with spokes: if the camera’s frame rate is too low relative to the rotation speed, the wheel can appear to turn slowly or even backward (the wagon-wheel effect).

Avoiding aliasing requires careful consideration of the sampling rate:

- Use a sufficiently high sampling rate: The sampling frequency (fs) must be greater than twice the maximum frequency (fmax) present in the signal: fs > 2fmax. This is the Nyquist criterion.

- Anti-aliasing filter: Employ an analog low-pass filter before sampling. This filter attenuates frequencies above fs/2 (ideally everything above fmax), preventing them from folding into the sampled signal.

In practice, a slightly higher sampling rate than the Nyquist rate is often used to provide a safety margin. Ignoring this can lead to significant errors in signal processing applications ranging from audio recordings to medical imaging.
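The same principle applies when reducing the sampling rate digitally. In this sketch (illustrative tones and rates), a signal sampled at 8 kHz containing 500 Hz and 2.7 kHz tones is reduced to 2 kHz. Keeping every fourth sample, with no filter, folds the 2.7 kHz tone to 700 Hz; SciPy’s `decimate` low-pass filters first (by default an order-8 Chebyshev type I filter applied forward and backward), so the alias is suppressed. `tone_amplitude` is a hypothetical helper for reading one FFT bin.

```python
import numpy as np
from scipy.signal import decimate

fs = 8_000
n = np.arange(fs)                          # 1 s of samples
x = np.cos(2 * np.pi * 500 * n / fs) + np.cos(2 * np.pi * 2_700 * n / fs)

q = 4                                      # new rate 2 kHz, new Nyquist 1 kHz
naive = x[::q]                             # no anti-aliasing filter
filtered = decimate(x, q)                  # low-pass filter, then keep every 4th sample
fs_new = fs // q

def tone_amplitude(sig, f_hz, rate):
    """Amplitude of the FFT bin at f_hz (assumes f_hz falls exactly on a bin)."""
    spectrum = np.fft.rfft(sig)
    k = int(round(f_hz * len(sig) / rate))
    return 2 * np.abs(spectrum[k]) / len(sig)

# 2.7 kHz folds to |2700 - 2000| = 700 Hz at the new rate
print(tone_amplitude(naive, 700, fs_new), tone_amplitude(filtered, 700, fs_new))
```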

Q 12. Explain the concept of quantization and its effects on signal quality.

Quantization is the process of converting a continuous-valued signal into a discrete-valued signal. This involves representing the signal’s amplitude using a finite number of levels. Think of it like rounding off numbers – instead of representing a voltage as 3.14159 volts, we might approximate it as 3.14 volts or 3.1 volts depending on the quantization resolution. This loss of precision leads to quantization error, which is the difference between the original signal and its quantized version.

The effects of quantization on signal quality are:

- Quantization Noise: Quantization error manifests as noise added to the signal. The more quantization levels (i.e., the finer the resolution), the lower the quantization noise: each additional bit halves the step size and lowers the noise power by about 6 dB.

- Signal Distortion: The signal’s shape can be altered due to quantization, especially if the resolution is coarse. This distortion can be significant for signals with high dynamic range.

- Loss of Information: Irreversible information loss occurs during the quantization process. The finer the quantization, the lower the information loss.

The impact of quantization is highly dependent on the application and the signal-to-noise ratio (SNR). In high-fidelity audio applications, high-resolution quantization is crucial to maintain good signal quality, whereas lower resolution might suffice in applications where fidelity is less critical.
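A common rule of thumb is that a full-scale sinusoid quantized with B bits has a signal-to-quantization-noise ratio of about 6.02·B + 1.76 dB. The sketch below checks this with a simple uniform mid-rise quantizer; the quantizer design, test frequency, and bit depths are assumptions chosen for illustration.

```python
import numpy as np

def quantize(x, bits, full_scale=1.0):
    """Uniform mid-rise quantizer with 2**bits levels spanning [-full_scale, full_scale]."""
    levels = 2 ** bits
    step = 2 * full_scale / levels
    index = np.clip(np.floor(x / step), -levels // 2, levels // 2 - 1)
    return (index + 0.5) * step               # reconstruct at the centre of each step

def sqnr_db(x, x_quantized):
    """Signal power over quantization-noise power, in dB."""
    noise = x_quantized - x
    return 10 * np.log10(np.mean(x ** 2) / np.mean(noise ** 2))

n = np.arange(100_000)
x = np.sin(2 * np.pi * 0.01234567 * n)        # full-scale test sinusoid

results = {}
for bits in (8, 12):
    results[bits] = sqnr_db(x, quantize(x, bits))
    print(f"{bits} bits: measured {results[bits]:.1f} dB, "
          f"rule of thumb {6.02 * bits + 1.76:.1f} dB")
```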

Q 13. What is the difference between linear and non-linear signal processing?

The fundamental difference between linear and non-linear signal processing lies in how the system responds to superposition.

- Linear Signal Processing: A linear system obeys the principle of superposition. This means that if the input is a weighted sum of signals, the output is the same weighted sum of the corresponding individual outputs. Mathematically, if $x_1[n]$ produces output $y_1[n]$ and $x_2[n]$ produces $y_2[n]$, then $a\,x_1[n] + b\,x_2[n]$ produces $a\,y_1[n] + b\,y_2[n]$, where $a$ and $b$ are constants. Examples include convolution and filtering.

- Non-linear Signal Processing: Non-linear systems do not obey superposition: the response to a weighted sum of inputs is not the same weighted sum of the individual responses. Examples include systems that involve clipping, amplitude limiting, or operations like squaring or exponentiation of the signal.

Many real-world systems exhibit non-linear behavior. For instance, an amplifier might saturate at high input levels, exhibiting non-linear characteristics. While linear systems are easier to analyze using mathematical tools like the z-transform, non-linear systems often require more complex analysis techniques. However, non-linear processing can be advantageous in some cases for tasks like signal compression or noise reduction.
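Superposition can be tested numerically. This sketch uses a hypothetical helper, `satisfies_superposition`, and three example systems (a 2-tap averaging filter, a clipper, and a squarer) driven by random test inputs. Note the asymmetry: a failed check proves a system is non-linear, but a passed check on particular inputs only supports linearity rather than proving it.

```python
import numpy as np
from scipy.signal import lfilter

def satisfies_superposition(system, x1, x2, a=2.0, b=-3.0):
    """Check T{a*x1 + b*x2} == a*T{x1} + b*T{x2} for one pair of test inputs."""
    lhs = system(a * x1 + b * x2)
    rhs = a * system(x1) + b * system(x2)
    return bool(np.allclose(lhs, rhs))

def fir_filter(x):      # linear: 2-tap averaging filter (a convolution)
    return lfilter([0.5, 0.5], [1.0], x)

def clipper(x):         # non-linear: amplitude limiting
    return np.clip(x, -1.0, 1.0)

def squarer(x):         # non-linear: squaring
    return x ** 2

rng = np.random.default_rng(0)
x1 = rng.standard_normal(100)
x2 = rng.standard_normal(100)

for name, system in [("FIR filter", fir_filter), ("clipper", clipper), ("squarer", squarer)]:
    print(name, satisfies_superposition(system, x1, x2))
```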

Q 14. Explain the concept of spectral analysis and its applications.

Spectral analysis is the process of decomposing a signal into its constituent frequency components. It aims to reveal the signal’s frequency content and its distribution over different frequencies. Think of it like separating a musical chord into its individual notes.

Techniques for spectral analysis include:

- Fourier Transform: The most common technique, which transforms a signal from the time domain to the frequency domain. The Discrete Fourier Transform (DFT) is used for discrete-time signals, while the Fast Fourier Transform (FFT) is a computationally efficient algorithm for calculating the DFT.

- Short-Time Fourier Transform (STFT): Used for non-stationary signals (signals whose frequency content changes over time), where the signal is analyzed in small, overlapping time windows. This provides a time-frequency representation.

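- Wavelet Transform: Provides a multi-resolution time-frequency analysis (good time resolution at high frequencies and good frequency resolution at low frequencies), which makes it particularly useful for analyzing signals with transient components or frequency characteristics that change over time.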

Applications of spectral analysis are vast and span many fields:

- Audio Processing: Identifying the frequencies present in an audio signal, used for equalization, noise reduction, and audio compression.

- Image Processing: Analyzing the frequency content of images, used for image enhancement, compression, and feature extraction.

- Biomedical Engineering: Analyzing biological signals such as EEG and ECG, useful for diagnosis and monitoring.

- Communications: Analyzing signals in communication systems, used for modulation and demodulation, channel equalization, and interference detection.
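To illustrate why the STFT matters for non-stationary signals, this sketch assumes a synthetic signal that switches from 100 Hz to 300 Hz halfway through. A single FFT of the whole record would show both tones with no timing information; SciPy’s `stft` (256-sample Hann windows with 50% overlap by default) shows which tone is present in each frame.

```python
import numpy as np
from scipy.signal import stft

fs = 1_000                                  # sample rate, Hz
t = np.arange(2 * fs) / fs                  # 2 s of samples
# Non-stationary test signal: 100 Hz for the first second, 300 Hz for the second
x = np.where(t < 1.0, np.sin(2 * np.pi * 100 * t), np.sin(2 * np.pi * 300 * t))

# 256-sample windows -> frequency bins about 3.9 Hz apart
f, frame_times, Z = stft(x, fs=fs, nperseg=256)

# Strongest frequency in each time frame
dominant = f[np.argmax(np.abs(Z), axis=0)]

early = dominant[(frame_times > 0.2) & (frame_times < 0.8)]
late = dominant[(frame_times > 1.2) & (frame_times < 1.8)]
print(early, late)   # near 100 Hz, then near 300 Hz
```

The window length sets the trade-off: longer windows give finer frequency bins but blur the moment of the switch, which is the limitation wavelets address with their multi-resolution analysis.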

Q 15. Describe different methods for signal detection and estimation.

Signal detection and estimation are crucial in signal processing, aiming to extract meaningful information from noisy observations. Methods vary depending on the signal characteristics and noise properties.

- Thresholding: A simple approach where the signal is compared to a threshold. If it exceeds the threshold, it’s considered detected. Think of a burglar alarm; it triggers (detects) when the sound level surpasses a predefined threshold.

- Matched Filtering: This technique maximizes the signal-to-noise ratio (SNR) by correlating the received signal with a template of the expected signal. We’ll delve deeper into this in a later answer.

- Hypothesis Testing: This statistical approach formulates hypotheses about the presence or absence of a signal and uses statistical tests (like the Neyman-Pearson test) to decide between them. Imagine medical diagnosis; a test might compare the patient’s symptoms to characteristics of a disease to decide if the patient has the illness or not.

- Maximum Likelihood Estimation (MLE): This method estimates the signal parameters (e.g., amplitude, frequency) that maximize the likelihood of observing the received signal given a specific signal model and noise distribution. This is frequently employed in radar systems to estimate the distance and velocity of targets.

- Bayesian Estimation: This approach incorporates prior knowledge about the signal into the estimation process, which can be particularly beneficial when dealing with limited data or uncertain signal parameters.

The choice of method depends on factors like the signal’s characteristics, the noise level, computational complexity, and the desired accuracy.
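Here is a minimal sketch of hypothesis testing under the Neyman-Pearson criterion, for a textbook problem chosen for illustration: deciding whether a constant level A is present in white Gaussian noise of known variance. The test statistic is the sample mean; the threshold is set for a target false-alarm probability using SciPy’s normal distribution, and Monte Carlo trials check the resulting false-alarm and detection rates.

```python
import numpy as np
from scipy.stats import norm

# Hypothetical detection problem:
#   H0: x[n] = w[n]        H1: x[n] = A + w[n],   w[n] ~ N(0, sigma^2)
sigma, num_samples, A, target_pfa = 1.0, 50, 0.5, 0.01

# The sample mean is N(0, sigma^2 / N) under H0; pick the threshold for the target Pfa
std_of_mean = sigma / np.sqrt(num_samples)
threshold = std_of_mean * norm.isf(target_pfa)

rng = np.random.default_rng(1)
trials = 20_000
stat_h0 = rng.normal(0.0, sigma, size=(trials, num_samples)).mean(axis=1)
stat_h1 = (A + rng.normal(0.0, sigma, size=(trials, num_samples))).mean(axis=1)

pfa = np.mean(stat_h0 > threshold)              # empirical false-alarm rate
pd = np.mean(stat_h1 > threshold)               # empirical detection rate
pd_theory = norm.sf((threshold - A) / std_of_mean)
print(f"threshold={threshold:.3f}  Pfa={pfa:.4f}  Pd={pd:.3f} (theory {pd_theory:.3f})")
```

For this particular model the sample mean is also the maximum likelihood estimate of A, so the same statistic serves for both detection and estimation.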


Q 16. What are the challenges in processing wideband signals?

Processing wideband signals presents several challenges due to their broad frequency range:

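- High sampling rates: According to the Nyquist-Shannon sampling theorem, wideband signals demand very high sampling rates, increasing computational complexity and hardware costs. A real baseband signal extending up to 1 GHz requires a sampling rate above 2 GHz. For a signal centred on a carrier, bandpass or complex (I/Q) sampling lets the rate scale with the signal’s bandwidth rather than its highest frequency.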

- Increased data volume: High sampling rates result in a massive amount of data that needs to be processed, requiring powerful processors and significant memory.

- Hardware limitations: Designing and building analog-to-digital converters (ADCs) and digital signal processors (DSPs) capable of handling the high bandwidths can be technologically challenging and expensive.

- Interference: Wideband signals are more susceptible to interference from other signals sharing the same frequency range, demanding sophisticated interference mitigation techniques.

- Synchronization issues: Maintaining precise timing and phase synchronization across the full bandwidth is difficult, especially in multi-channel systems where several ADCs must sample in lockstep.

Addressing these challenges often involves techniques like downsampling (reducing the sampling rate), filtering (selective removal of unwanted frequency components), and parallel processing (distributing computations across multiple cores or processors).
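A quick back-of-the-envelope calculation shows why data volume becomes a problem. The helpers below are hypothetical, and the 12-bit ADC resolution is an assumption for illustration; the arithmetic covers only raw samples, before any framing or compression.

```python
def nyquist_rate_hz(max_freq_hz: float) -> float:
    """Nyquist rate: the lower bound on the real sampling rate for a baseband signal."""
    return 2 * max_freq_hz

def raw_data_rate_bps(sample_rate_hz: float, bits_per_sample: int, channels: int = 1) -> float:
    """Raw ADC output in bits per second, before any compression or framing."""
    return sample_rate_hz * bits_per_sample * channels

fs = nyquist_rate_hz(1e9)                        # 1 GHz baseband signal -> 2 GS/s
bps = raw_data_rate_bps(fs, bits_per_sample=12)  # assumed 12-bit ADC
print(f"{fs / 1e9:.0f} GS/s x 12 bits = {bps / 1e9:.0f} Gbit/s = {bps / 8e9:.0f} GB/s")
# prints: 2 GS/s x 12 bits = 24 Gbit/s = 3 GB/s
```

At roughly 3 GB/s per channel before any safety margin, even one second of capture fills gigabytes of memory, which is why wideband receivers typically downsample or channelize the data as early as possible.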

Q 17. Explain the concept of matched filtering and its applications.

Matched filtering is an optimal linear filtering technique used to detect a known signal in the presence of additive noise. It correlates the received signal with a replica of the expected signal (the ‘matched filter’). When the noise is white, this maximizes the signal-to-noise ratio (SNR) at the filter output at the instant the signal is fully aligned, improving detection reliability.

Imagine you’re trying to find a specific song within a noisy audio recording. A matched filter acts like a template that searches for an exact match to the song’s waveform. The higher the correlation, the more likely the song is present.

Applications:

- Radar: Detecting weak radar echoes from distant targets.

- Sonar: Detecting underwater objects.

- Communication Systems: Detecting weak signals in noisy channels.

- Medical Imaging: Enhancing image quality by removing noise.

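Mathematically, for a known signal s[n] of length N, the matched filter’s impulse response is the time-reversed complex conjugate of that signal, h[n] = s*[N-1-n]. Filtering the received signal with h[n] is therefore equivalent to cross-correlating it with s[n], and the output peaks where the received signal lines up with the template.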
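A minimal sketch of matched-filter detection, assuming a 13-chip Barker code as the known template (a common choice in radar pulse compression), buried at an arbitrary offset in white Gaussian noise. The filter is the time-reversed complex conjugate of the template, and the location of the output peak estimates where the pulse starts.

```python
import numpy as np

rng = np.random.default_rng(2)

# Known template: 13-chip Barker code
template = np.array([1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1], dtype=float)

true_offset = 400
received = rng.normal(0.0, 0.3, size=1_000)             # white Gaussian noise
received[true_offset:true_offset + len(template)] += template

# Matched filter: impulse response = time-reversed complex conjugate of the template
h = np.conj(template[::-1])
output = np.convolve(received, h, mode="full")

# A template starting at index d produces the output peak at d + len(template) - 1
peak = int(np.argmax(np.abs(output)))
estimated_offset = peak - (len(template) - 1)
print(estimated_offset)
```

The peak value is the template’s energy (13 here), while the noise contribution grows only with the square root of the template length, which is the SNR gain matched filtering provides.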

Q 18. How do you perform signal compression and decompression?

Signal compression reduces the number of bits needed to represent a signal while minimizing information loss. Decompression reconstructs the signal, exactly or approximately, from the compressed representation. The key is to exploit redundancy (predictable structure) or irrelevancy (detail the end user cannot perceive) in the signal.

Common methods include:

- Lossless Compression: Algorithms like run-length encoding (RLE) and Huffman coding remove redundancy without losing any information. The reconstructed signal is identical to the original. Think of ZIP files.

- Lossy Compression: Algorithms like MP3 (for audio) and JPEG (for images) remove perceptually irrelevant information to achieve higher compression ratios. The reconstructed signal is an approximation of the original, but the difference is usually imperceptible to humans. Think of streaming music – you lose some data for efficient transmission.

Compression Process (General Outline):

- Analysis: Transform the signal into a more suitable representation (e.g., frequency domain using Discrete Cosine Transform (DCT) for images).

- Quantization: Reduce the precision of the transformed coefficients, discarding less significant parts.

- Encoding: Efficiently encode the quantized coefficients using methods like Huffman or arithmetic coding.

Decompression reverses these steps. The choice between lossy and lossless depends on the application. Lossy compression is suitable for applications where a small amount of information loss is acceptable for significant size reduction, whereas lossless compression is crucial when preserving the integrity of the signal is paramount.
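The analysis and quantization steps above can be sketched with SciPy’s DCT. This is a toy transform coder under stated assumptions: a smooth synthetic signal, an orthonormal DCT-II, and a uniform quantizer with an arbitrary step size. The entropy-coding stage is omitted, so the sketch only shows how the transform concentrates energy into a few coefficients and how quantization bounds the reconstruction error.

```python
import numpy as np
from scipy.fft import dct, idct

N = 256
n = np.arange(N)
x = np.cos(2 * np.pi * 3 * n / N) + 0.5 * np.cos(2 * np.pi * 7 * n / N)

step = 0.1  # quantizer step size (larger step -> fewer bits, more error)

# Analysis: the orthonormal DCT-II concentrates the energy in a few coefficients
coeffs = dct(x, type=2, norm="ortho")

# Quantization: integer indices; small coefficients become exactly zero
q = np.round(coeffs / step).astype(int)
# (Encoding would entropy-code q here, e.g. run-length plus Huffman; omitted.)

# Decompression: dequantize and invert the transform
x_hat = idct(q * step, type=2, norm="ortho")

nonzero = np.count_nonzero(q)
rms_error = np.sqrt(np.mean((x - x_hat) ** 2))
print(f"{nonzero} of {N} coefficients kept, RMS error {rms_error:.4f}")
```

Because the transform is orthonormal, the reconstruction error equals the coefficient quantization error, so its RMS value can never exceed half the step size.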

Q 19. Explain the concept of channel equalization and its importance in communication systems.

Channel equalization is a crucial technique in communication systems to compensate for the distorting effects of the communication channel. Channels introduce impairments (like attenuation, delay, and multipath fading), which smear the transmitted signal, causing intersymbol interference (ISI) – where successive symbols overlap and become indistinguishable.

Imagine trying to communicate across a noisy, echoing room. The words become distorted, and it’s hard to understand the message. Channel equalization acts like a ‘de-reverberation’ system, cleaning up the signal to make it clear.

Equalization techniques aim to invert the channel’s response, restoring the original signal as closely as possible. Common methods include:

- Linear Equalization: Uses a linear filter to compensate for channel distortion. Simple to implement, but may not be effective for severe distortion.

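- Decision Feedback Equalization (DFE): A non-linear equalizer that combines a feedforward filter with a feedback filter driven by past symbol decisions, subtracting the ISI caused by symbols that have already been detected. It typically outperforms a linear equalizer on channels with severe ISI, but it is more complex and can suffer from error propagation when a decision is wrong.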

- Adaptive Equalization: Adjusts the equalizer’s parameters based on the channel’s changing characteristics. This is vital in wireless communications where the channel conditions can fluctuate dynamically.

- Blind Equalization: Does not require a training signal to adapt the equalizer; the equalizer attempts to learn the channel’s properties from the received signal itself.

The effectiveness of equalization is critical for reliable high-speed data transmission. Without equalization, the bit error rate would be unacceptably high.
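As a sketch of adaptive equalization, the snippet below trains a linear FIR equalizer with the least-mean-squares (LMS) algorithm. Everything here is an illustrative assumption: BPSK symbols, a hypothetical minimum-phase channel [1.0, 0.9, 0.5] that causes heavy ISI, 21 taps, a step size of 0.01, and a known training sequence used for the whole run. A practical receiver would train on a short preamble and then switch to decision-directed adaptation.

```python
import numpy as np

def lms_equalizer(received, training, num_taps=21, mu=0.01):
    """Adapt an FIR equalizer with LMS so that its output tracks `training`."""
    w = np.zeros(num_taps)
    padded = np.concatenate([np.zeros(num_taps - 1), received])
    output = np.zeros(len(received))
    for n in range(len(received)):
        x_vec = padded[n:n + num_taps][::-1]      # [r[n], r[n-1], ..., r[n-L+1]]
        output[n] = w @ x_vec
        error = training[n] - output[n]
        w += mu * error * x_vec                   # LMS weight update
    return w, output

rng = np.random.default_rng(3)
num_symbols = 10_000
symbols = rng.choice([-1.0, 1.0], size=num_symbols)   # BPSK training sequence

# Hypothetical ISI channel (minimum phase) plus a little white noise
channel = np.array([1.0, 0.9, 0.5])
received = np.convolve(symbols, channel)[:num_symbols]
received += rng.normal(0.0, 0.05, size=num_symbols)

w, equalized = lms_equalizer(received, symbols)

tail = slice(-2_000, None)   # judge performance after the equalizer has converged
raw_ser = np.mean(np.sign(received[tail]) != symbols[tail])
eq_ser = np.mean(np.sign(equalized[tail]) != symbols[tail])
print(f"symbol error rate without equalizer: {raw_ser:.3f}, with LMS: {eq_ser:.4f}")
```

A zero-delay target works here only because the assumed channel is minimum phase (its inverse is causal and decays quickly); for other channels the training target is usually delayed by several symbols.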

Q 20. What are the different types of channel impairments in communication systems?

Communication channels introduce various impairments that degrade signal quality and reliability. These impairments can be broadly categorized as:

- Attenuation: A reduction in signal strength as it travels through the channel. Think of sound getting quieter as you move away from the source.

- Noise: Unwanted signals that interfere with the transmitted signal. This could be thermal noise, atmospheric noise, or interference from other sources.

- Multipath Propagation: The signal takes multiple paths to reach the receiver, causing delayed and attenuated versions of the signal to overlap, creating interference (ISI).

- Doppler Shift: A change in the signal frequency due to relative motion between the transmitter and receiver (commonly seen in wireless communication systems).

- Interference: Signals from other sources that contaminate the desired signal. This could be intentional jamming or unintentional interference.

- Distortion: Changes in the signal’s shape or characteristics. Non-linear components in the channel can introduce harmonic distortion.

- Fading: Fluctuations in the signal strength due to changes in the channel conditions (often encountered in wireless communication).

Understanding and mitigating these impairments is crucial for designing reliable and efficient communication systems.
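Two of these impairments are easy to quantify. The hypothetical helpers below compute the Doppler shift f_d = v·f_c·cos(θ)/c and the frequency response of a simple two-path (direct plus one echo) multipath channel; the speed, carrier, echo strength, and delay are illustrative values, and c is rounded to 3 × 10^8 m/s.

```python
import numpy as np

C = 3e8  # speed of light in m/s (rounded)

def doppler_shift_hz(speed_mps, carrier_hz, angle_rad=0.0):
    """Doppler shift f_d = v * f_c * cos(theta) / c for relative speed v."""
    return speed_mps * carrier_hz * np.cos(angle_rad) / C

def two_ray_response(freq_hz, reflection_gain, delay_s):
    """Frequency response of a direct path plus one echo: 1 + a*exp(-j*2*pi*f*tau)."""
    return 1 + reflection_gain * np.exp(-2j * np.pi * freq_hz * delay_s)

# A receiver moving at 30 m/s (108 km/h) directly toward a 2 GHz transmitter
print(doppler_shift_hz(30, 2e9))

# Echo at 90% strength arriving 1 microsecond late: fades at 0.5 MHz, 1.5 MHz, ...
for f in (0.0, 0.5e6, 1.0e6, 1.5e6):
    print(f"{f / 1e6:.1f} MHz: |H| = {abs(two_ray_response(f, 0.9, 1e-6)):.2f}")
```

The gain swinging between 1.9 and 0.1 across the band is frequency-selective fading: some frequencies are reinforced by the echo while others are nearly cancelled, which is exactly the ISI that equalizers and OFDM are designed to handle.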

Q 21. Describe different methods for error correction coding.

Error correction coding adds redundancy to the transmitted data to protect it from errors introduced during transmission. It allows the receiver to detect and correct errors, improving the reliability of the communication.

Imagine sending a message by carrier pigeon. You could write the message multiple times or use a code to represent the message, making it less susceptible to being lost or damaged. Error correction coding serves a similar purpose.

Methods include:

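- Block Codes: Operate on fixed-size blocks of data. Examples include Hamming codes and Reed-Solomon codes; Reed-Solomon codes work on multi-bit symbols, which makes them particularly effective against burst errors.

- Convolutional Codes: Encode the data using a sliding window over the input, producing a continuous stream of encoded bits. They handle scattered (random) errors well and are commonly decoded with the Viterbi algorithm; burst errors are usually handled by adding an interleaver.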

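- Turbo Codes: Built from two convolutional encoders operating in parallel, separated by an interleaver, and decoded iteratively. They achieve performance close to the Shannon limit, meaning they approach the best error correction theoretically possible at a given code rate.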

- Low-Density Parity-Check (LDPC) Codes: Another powerful class of codes known for excellent performance, particularly over long codeword lengths.

The choice of error correction code depends on factors like the desired error correction capability, coding complexity, and computational resources. Higher error correction capabilities usually come at the cost of increased redundancy and thus lower data transmission rate.
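As a small, concrete block code, the sketch below implements the Hamming(7,4) code, which adds three parity bits to every four data bits and can correct any single-bit error in a 7-bit codeword. The generator matrix `G` and parity-check matrix `H` are one standard systematic choice; other equivalent forms exist.

```python
import numpy as np

# Parity part of a systematic Hamming(7,4) code (one common choice)
P = np.array([[1, 1, 0],
              [1, 0, 1],
              [0, 1, 1],
              [1, 1, 1]])
G = np.hstack([np.eye(4, dtype=int), P])     # generator matrix, 4 x 7: [I | P]
H = np.hstack([P.T, np.eye(3, dtype=int)])   # parity-check matrix, 3 x 7: [P^T | I]

def encode(data4):
    """Map 4 data bits to a 7-bit codeword (data bits first, then parity)."""
    return (np.asarray(data4) @ G) % 2

def decode(received7):
    """Correct up to one flipped bit and return the 4 data bits."""
    r = np.array(received7, dtype=int) % 2
    syndrome = (H @ r) % 2
    if syndrome.any():
        # A single-bit error yields a syndrome equal to that bit's column of H
        position = np.where((H.T == syndrome).all(axis=1))[0][0]
        r[position] ^= 1
    return r[:4]

data = np.array([1, 0, 1, 1])
codeword = encode(data)
corrupted = codeword.copy()
corrupted[2] ^= 1                            # flip one bit "in transit"
recovered = decode(corrupted)
print(codeword, corrupted, recovered)
```

The code rate is 4/7, a concrete instance of the redundancy-versus-throughput trade-off described above.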

Q 22. Explain the principles of OFDM modulation and its applications.

Orthogonal Frequency-Division Multiplexing (OFDM) is a digital modulation scheme that divides a high-rate data stream into many lower-rate streams, each modulated onto a separate orthogonal subcarrier. Think of it like sending many smaller packages instead of one large one: because each subcarrier’s symbols are long compared with the channel’s delay spread, the system is much more robust to multipath delay.

The key is orthogonality. Each subcarrier is designed so it doesn’t interfere with the others, even though their spectra overlap. This is achieved by spacing the subcarriers at exactly 1/T, where T is the OFDM symbol duration, so that each subcarrier’s spectrum has nulls at the centers of all the others. In practice, the transmitter generates all subcarriers at once with an inverse FFT, and the receiver separates them with an FFT and decodes each subcarrier independently.

Applications: OFDM is ubiquitous in modern wireless communication. It’s found in:

- Wi-Fi (802.11a/g/n/ac/ax)

- Digital Video Broadcasting (DVB-T/T2)

- 4G LTE and 5G cellular networks

- Digital Audio Broadcasting (DAB)

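Its resilience to multipath fading (signals bouncing off objects and arriving at the receiver at slightly different times) makes it ideal for wireless environments. A cyclic prefix, a copy of the end of each OFDM symbol placed at its start, absorbs the delay spread, so each subcarrier sees only a single complex gain that a simple one-tap equalizer can correct.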
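The IFFT/FFT structure behind OFDM fits in a few lines. This sketch maps one QPSK symbol onto each of 64 subcarriers with an inverse FFT, prepends a cyclic prefix (a copy of the last samples of the symbol), passes the result through a short multipath channel, and recovers the symbols with an FFT followed by a one-tap equalizer per subcarrier. The FFT size, prefix length, and channel taps are hypothetical, and the channel is assumed perfectly known at the receiver, with no noise and no channel estimation.

```python
import numpy as np

N_FFT = 64                                   # number of subcarriers (assumed)
N_CP = 16                                    # cyclic-prefix length; must cover the channel memory
channel = np.array([1.0, 0.0, 0.5 + 0.3j, 0.0, 0.2])   # hypothetical multipath impulse response

rng = np.random.default_rng(2)
bits = rng.integers(0, 2, size=(N_FFT, 2))
X = ((1 - 2 * bits[:, 0]) + 1j * (1 - 2 * bits[:, 1])) / np.sqrt(2)   # one QPSK symbol per subcarrier

# Transmitter: the IFFT places each symbol on its own orthogonal subcarrier
x = np.fft.ifft(X)
tx = np.concatenate([x[-N_CP:], x])          # prepend the cyclic prefix

# Channel: ordinary linear convolution with the multipath response
rx = np.convolve(tx, channel)[: tx.size]

# Receiver: discard the prefix and return to the subcarrier domain
Y = np.fft.fft(rx[N_CP : N_CP + N_FFT])

# Thanks to the prefix, the channel is one complex gain per subcarrier
H = np.fft.fft(channel, N_FFT)
X_hat = Y / H                                # one-tap (zero-forcing) equalizer
print("max symbol error:", np.max(np.abs(X_hat - X)))
```

Because the prefix is longer than the channel memory, the linear convolution looks circular over the FFT window, which is why the division by `H` recovers the symbols up to floating-point round-off.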

Q 23. What are the advantages and disadvantages of using different modulation schemes in wireless communication?

The choice of modulation scheme depends heavily on the desired trade-off between data rate, power efficiency, and robustness to noise and interference. Let’s compare a few:

- Binary Phase-Shift Keying (BPSK): Simple, robust, low data rate, power efficient.

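- Quadrature Phase-Shift Keying (QPSK): Carries two bits per symbol, doubling the data rate of BPSK in the same bandwidth. With Gray coding, its bit-error rate versus Eb/N0 matches BPSK’s, but it needs more accurate carrier phase recovery.

- Quadrature Amplitude Modulation (QAM): Higher data rates, but increasingly susceptible to noise as the order increases (e.g., 16-QAM, 64-QAM, 256-QAM). Requires a higher signal-to-noise ratio, and therefore more transmit power, to achieve the same error rate.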

Advantages & Disadvantages Summary

- Higher-order modulation (like 64-QAM): Offers high spectral efficiency (more data per unit bandwidth) but is sensitive to noise and fading. Ideal for channels with a high signal-to-noise ratio and little fading.

- Lower-order modulation (like BPSK): More robust to noise and fading, but lower spectral efficiency. Better for noisy or fading channels.

Think of speaking slowly and distinctly (BPSK) versus talking very fast (64-QAM). Fast speech carries more words per second, but in a noisy room it is easily misheard. The best choice depends on the environment.
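The robustness trade-off can be quantified with the standard AWGN bit-error-rate expressions: for BPSK (and Gray-coded QPSK), Pb = Q(sqrt(2 Eb/N0)); for Gray-coded square M-QAM, a widely used nearest-neighbour approximation is Pb ≈ (4 / log2 M)(1 - 1/sqrt(M)) Q(sqrt(3 log2(M) Eb/N0 / (M - 1))). The sketch evaluates both; the 10 dB operating point is an arbitrary example.

```python
import math

def q_function(x):
    """Gaussian tail probability Q(x) = 0.5 * erfc(x / sqrt(2))."""
    return 0.5 * math.erfc(x / math.sqrt(2))

def ber_bpsk(ebn0_db):
    """Exact AWGN bit-error rate for BPSK (also Gray-coded QPSK)."""
    ebn0 = 10 ** (ebn0_db / 10)
    return q_function(math.sqrt(2 * ebn0))

def ber_square_qam(m, ebn0_db):
    """Nearest-neighbour approximation for Gray-coded square M-QAM."""
    k = math.log2(m)
    ebn0 = 10 ** (ebn0_db / 10)
    return (4 / k) * (1 - 1 / math.sqrt(m)) * q_function(math.sqrt(3 * k * ebn0 / (m - 1)))

for name, ber in [("BPSK/QPSK", ber_bpsk(10)),
                  ("16-QAM", ber_square_qam(16, 10)),
                  ("64-QAM", ber_square_qam(64, 10))]:
    print(f"{name:10s} BER at Eb/N0 = 10 dB: {ber:.2e}")
```

For M = 4 the QAM formula reduces exactly to the BPSK expression, which is a quick sanity check on the approximation.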

Q 24. Explain the concept of spread spectrum techniques.

Spread spectrum techniques spread a narrowband signal across a wider bandwidth, making it resistant to jamming and interference. This is achieved by modulating the signal with a pseudo-noise (PN) sequence – a seemingly random sequence that is known to both the transmitter and receiver.

Two primary types exist:

- Direct-Sequence Spread Spectrum (DSSS): The PN sequence is directly multiplied with the data signal, spreading it in the frequency domain. Think of it as spreading the signal’s energy thinly across a wider band.

- Frequency-Hopping Spread Spectrum (FHSS): The carrier frequency is hopped rapidly and pseudo-randomly across a range of frequencies. Imagine a radio constantly changing channels unpredictably.

Benefits:

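- Anti-jamming capability: Despreading at the receiver collapses the desired signal back into its original narrow band while spreading any narrowband jammer across the wide band, so most of the jammer’s power is filtered out. The ratio of the spread bandwidth to the original bandwidth is called the processing gain.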

- Low probability of intercept (LPI): The spread signal is difficult to distinguish from background noise.

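- Multipath resistance: Delayed copies of the signal decorrelate from the PN sequence once they lag by more than about one chip, so they can be rejected or, with a RAKE receiver, combined constructively.

Example: GPS uses DSSS. All satellites transmit on the same carrier frequency, each with its own pseudo-random code, and a receiver separates them by correlating against each satellite’s code. The same principle, code-division multiple access (CDMA), lets many transmitters share one band without mutual interference.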
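Direct-sequence spreading and despreading reduce to multiplication and correlation with the PN sequence. In this sketch, a 31-chip maximal-length sequence from a 5-stage linear-feedback shift register (recurrence a[n+5] = a[n+2] XOR a[n]) spreads each ±1 data bit, a narrowband sinusoidal jammer 3 dB stronger than the signal is added along with noise, and the receiver despreads by multiplying with the same code and summing over each bit. The processing gain is about 10·log10(31) ≈ 15 dB. The helper `m_sequence`, the jammer frequency, and the noise level are hypothetical choices for illustration.

```python
import numpy as np

def m_sequence(length=31):
    """Maximal-length sequence from a 5-stage Fibonacci LFSR (a[n+5] = a[n+2] XOR a[n])."""
    state = [1, 1, 1, 1, 1]
    out = []
    for _ in range(length):
        out.append(state[-1])                 # output the oldest stage
        feedback = state[4] ^ state[2]
        state = [feedback] + state[:-1]       # shift, inserting the feedback bit
    return np.array(out)

CHIPS_PER_BIT = 31
pn = 1.0 - 2.0 * m_sequence(CHIPS_PER_BIT)    # chips mapped to +/-1, shared by TX and RX

rng = np.random.default_rng(3)
bits = rng.integers(0, 2, size=200)
symbols = 1.0 - 2.0 * bits                    # 0 -> +1, 1 -> -1

# Spreading: every data symbol is multiplied by the whole PN code
tx = (symbols[:, None] * pn[None, :]).ravel()

# Channel: narrowband jammer (power 2 vs. signal power 1) plus AWGN
n = np.arange(tx.size)
jammer = 2.0 * np.cos(2 * np.pi * 0.01 * n)
rx = tx + jammer + 0.5 * rng.standard_normal(tx.size)

# Despreading: correlate each bit period with the code, then decide on the sign
despread = (rx.reshape(-1, CHIPS_PER_BIT) * pn).sum(axis=1)
decoded = (despread < 0).astype(int)
print("bit errors:", int(np.sum(decoded != bits)))
```

The desired signal adds coherently to ±31 after despreading, while the jammer, being uncorrelated with the code, contributes only a fraction of that.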

Q 25. How do you perform signal synchronization in a communication system?

Signal synchronization is crucial for proper demodulation and data recovery. It involves aligning the receiver’s timing and frequency with the transmitted signal. This is typically achieved in several stages:

- Coarse synchronization: Acquires an initial estimate of the carrier frequency and timing offset. Often involves correlating received signals with known training sequences.

- Fine synchronization: Refines the initial estimates to achieve precise alignment. Techniques like phase-locked loops (PLLs) are commonly used.

- Frame synchronization: Identifies the beginning and end of data frames in the received signal, using unique frame synchronization sequences.

Techniques:

- Correlation: Comparing the received signal with known training sequences to find the time and frequency alignment.

- Phase-locked loops (PLLs): Feedback control systems that lock onto the carrier frequency and track changes.

- Timing recovery algorithms: Algorithms that estimate and correct for timing errors.

Effective synchronization is vital for reliable communication; without it, the receiver samples symbols at the wrong instants and with a drifting phase reference, leading to errors.
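Correlation-based frame synchronization can be sketched as a sliding cross-correlation of the received samples against a known preamble: the correlation peak marks where the frame starts. The random ±1 preamble, its length, the unknown offset, and the noise level are hypothetical; practical systems use sequences with good autocorrelation properties (for example Barker or Zadoff-Chu sequences) and also estimate the frequency offset.

```python
import numpy as np

rng = np.random.default_rng(4)
preamble = rng.choice([-1.0, 1.0], size=63)         # known training sequence (assumed)
payload = rng.choice([-1.0, 1.0], size=500)
true_offset = 137                                    # unknown to the receiver

# Capture: noise before the frame, the frame itself, then idle samples
frame = np.concatenate([preamble, payload])
rx = np.concatenate([0.5 * rng.standard_normal(true_offset), frame, np.zeros(50)])
rx = rx + 0.5 * rng.standard_normal(rx.size)         # AWGN across the whole capture

# Sliding correlation: corr[k] = sum_i rx[k + i] * preamble[i]
corr = np.correlate(rx, preamble, mode="valid")
estimated_offset = int(np.argmax(np.abs(corr)))
print("estimated frame start:", estimated_offset)
```

Taking the magnitude of the correlation keeps the detector working if the received signal is inverted; with complex baseband samples the same idea also tolerates an unknown carrier phase.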

Q 26. Describe your experience with signal processing toolboxes (e.g., MATLAB, Python libraries).

I have extensive experience with both MATLAB and Python’s signal processing libraries (SciPy, NumPy). In MATLAB, I’ve used the Signal Processing Toolbox extensively for tasks like:

- Filter design and implementation: Designing FIR and IIR filters for various applications, including noise reduction and signal shaping.

- Spectral analysis: Using FFTs and spectrograms to analyze signal characteristics and identify frequencies.

- Modulation and demodulation: Implementing various modulation schemes like OFDM, QAM, and FSK.

- Signal detection and estimation: Developing algorithms for signal detection and parameter estimation in noisy environments.

In Python, I’ve leveraged SciPy and NumPy for similar tasks, often finding their flexibility and open-source nature advantageous for prototyping and for integrating with other libraries.

I’m proficient in using these tools to simulate communication systems, analyze experimental data, and develop signal processing algorithms from the ground up.
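As an illustration of the filter-design and spectral-analysis workflow described above, the sketch below uses SciPy to design a linear-phase FIR low-pass filter with `scipy.signal.firwin`, applies it with `scipy.signal.lfilter`, and checks the result with Welch’s power-spectral-density estimate from `scipy.signal.welch`. The sample rate, tone frequencies, cutoff, and tap count are hypothetical values chosen for the example.

```python
import numpy as np
from scipy import signal

fs = 8000.0                                              # sample rate in Hz (assumed)
t = np.arange(0, 2.0, 1 / fs)
x = np.sin(2 * np.pi * 300 * t) + 0.5 * np.sin(2 * np.pi * 2500 * t)   # wanted + unwanted tone

# Linear-phase FIR low-pass: 101-tap windowed sinc (Hamming window is firwin's default)
taps = signal.firwin(101, 1000.0, fs=fs)
y = signal.lfilter(taps, 1.0, x)

# Welch PSD estimates before and after filtering
f, pxx_in = signal.welch(x, fs=fs, nperseg=1024)
_, pxx_out = signal.welch(y, fs=fs, nperseg=1024)

def change_db(freq):
    """Output/input PSD ratio in dB at the frequency bin closest to freq."""
    k = np.argmin(np.abs(f - freq))
    return 10 * np.log10(pxx_out[k] / pxx_in[k])

gain_300 = change_db(300)
gain_2500 = change_db(2500)
print(f"300 Hz: {gain_300:.2f} dB, 2500 Hz: {gain_2500:.1f} dB")
```

Averaging over many overlapping segments, as Welch’s method does, gives a much smoother spectrum estimate than a single FFT of the whole record, at the cost of frequency resolution.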

Q 27. Explain your experience with hardware design related to signal processing.

My experience with hardware design related to signal processing centers around embedded systems and FPGA development. I’ve worked on projects involving:

- FPGA implementation of communication systems: Designing and implementing high-speed digital signal processing algorithms (e.g., OFDM modulation/demodulation) on FPGAs using VHDL or Verilog. This involved optimization for resource utilization and timing constraints.

- Designing interfaces for analog-to-digital converters (ADCs) and digital-to-analog converters (DACs): This includes ensuring proper data transfer and clock synchronization.

- Development of embedded firmware for signal processing tasks: Writing firmware in C/C++ to control ADCs/DACs, process data, and interface with other components.

This hands-on experience has allowed me to bridge the gap between theoretical signal processing concepts and practical hardware implementation. I understand the limitations and challenges associated with implementing algorithms in hardware, and can optimize designs for performance and power efficiency.

Q 28. Describe a challenging signal processing problem you solved and how you approached it.

One challenging problem I faced involved developing an algorithm for robust speech enhancement in a highly noisy environment. The challenge was to remove background noise effectively while preserving the intelligibility and naturalness of the speech signal. Standard noise reduction techniques were inadequate because the noise was non-stationary and contained significant interfering speech from other sources.

My approach involved a multi-stage process:

- Blind source separation: Used Independent Component Analysis (ICA) to separate the mixed audio signals into independent components, attempting to isolate the target speech from the noise sources.

- Spectral subtraction: Employed spectral subtraction to further reduce residual noise in the separated speech component. I carefully tuned the parameters to minimize speech distortion.

- Wiener filtering: Applied Wiener filtering to further refine the signal, leveraging a priori knowledge about the speech spectrum.

- Post-processing: Applied post-processing steps such as spectral smoothing and dynamic range compression to improve the quality and naturalness of the enhanced speech.

The solution involved a combination of existing techniques adapted and refined through experimentation and evaluation using objective metrics (like signal-to-noise ratio and perceptual evaluation of speech quality) and subjective listening tests. The result was a significant improvement in speech intelligibility in noisy environments, exceeding the performance of standard techniques.
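Of the stages above, spectral subtraction is the easiest to show in isolation. The sketch below is a generic magnitude spectral subtraction, not the pipeline described in this answer: it estimates the noise magnitude spectrum from an initial segment assumed to contain noise only, over-subtracts it from every STFT frame, clamps the result to a spectral floor to limit "musical noise", and resynthesizes with the noisy phase using `scipy.signal.stft` and `scipy.signal.istft`. The function name, the noise-only duration, the frame length, the over-subtraction factor `alpha`, the floor `beta`, and the test tone are all hypothetical.

```python
import numpy as np
from scipy import signal

def spectral_subtraction(noisy, fs, noise_seconds=0.25, nperseg=256, alpha=2.0, beta=0.02):
    """Magnitude spectral subtraction with over-subtraction and a spectral floor."""
    _, _, Z = signal.stft(noisy, fs=fs, nperseg=nperseg)
    mag, phase = np.abs(Z), np.angle(Z)
    # Noise estimate: average magnitude over frames assumed to be noise-only
    hop = nperseg // 2                                   # stft's default 50% overlap
    n_noise_frames = max(1, int(noise_seconds * fs / hop))
    noise_mag = mag[:, :n_noise_frames].mean(axis=1, keepdims=True)
    # Over-subtract the estimate, but never go below a small fraction of the input
    clean_mag = np.maximum(mag - alpha * noise_mag, beta * mag)
    _, enhanced = signal.istft(clean_mag * np.exp(1j * phase), fs=fs, nperseg=nperseg)
    return enhanced[: len(noisy)]

def snr_db(reference, estimate):
    return 10 * np.log10(np.sum(reference ** 2) / np.sum((estimate - reference) ** 2))

rng = np.random.default_rng(5)
fs = 8000
t = np.arange(0, 2.0, 1 / fs)
clean = np.where(t >= 0.5, np.sin(2 * np.pi * 440 * t), 0.0)   # stand-in "speech": a tone burst
noisy = clean + 0.3 * rng.standard_normal(t.size)
enhanced = spectral_subtraction(noisy, fs)
print(f"SNR before: {snr_db(clean, noisy):.1f} dB, after: {snr_db(clean, enhanced):.1f} dB")
```

Raising `alpha` removes more noise but also eats into weak speech components, which is the speech-distortion trade-off that parameter tuning has to balance.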

Key Topics to Learn for Signal Processing and Modulation Interview

- Signal Analysis Techniques: Mastering Fourier Transforms (DFT, FFT), Z-transforms, and Laplace transforms is crucial for understanding signal characteristics and manipulating them effectively. Consider exploring different transform properties and their applications.

- Digital Signal Processing (DSP) Fundamentals: Understand sampling, quantization, and the Nyquist-Shannon sampling theorem. Familiarize yourself with common DSP algorithms like filtering (FIR, IIR), convolution, and correlation.

- Modulation Techniques: Gain a solid grasp of Amplitude Modulation (AM), Frequency Modulation (FM), Phase Modulation (PM), and their variants (e.g., QAM, PSK). Understand the advantages and disadvantages of each technique in different communication scenarios.

- Practical Applications: Explore real-world applications of signal processing and modulation, such as telecommunications (cellular networks, satellite communication), radar systems, medical imaging (MRI, ultrasound), audio processing, and more. Being able to discuss specific examples demonstrates practical understanding.

- Noise and Interference: Understand the effects of noise on signals and various techniques for noise reduction and signal enhancement. This includes concepts like signal-to-noise ratio (SNR) and different types of noise.

- System Modeling and Analysis: Practice modeling communication systems using block diagrams and analyzing their performance characteristics. This includes concepts like channel capacity and bandwidth efficiency.

- Problem-Solving Approaches: Develop your ability to break down complex problems into smaller, manageable parts. Practice solving numerical problems related to signal analysis, modulation, and demodulation.

Next Steps

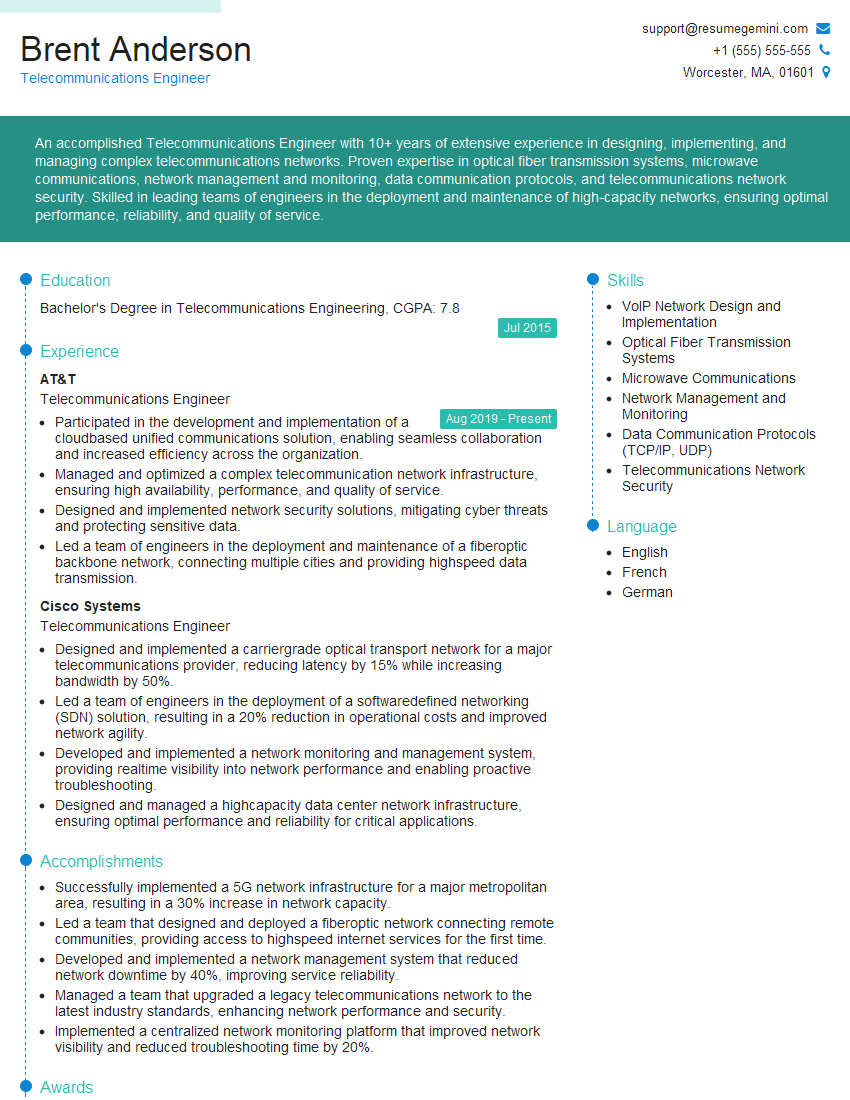

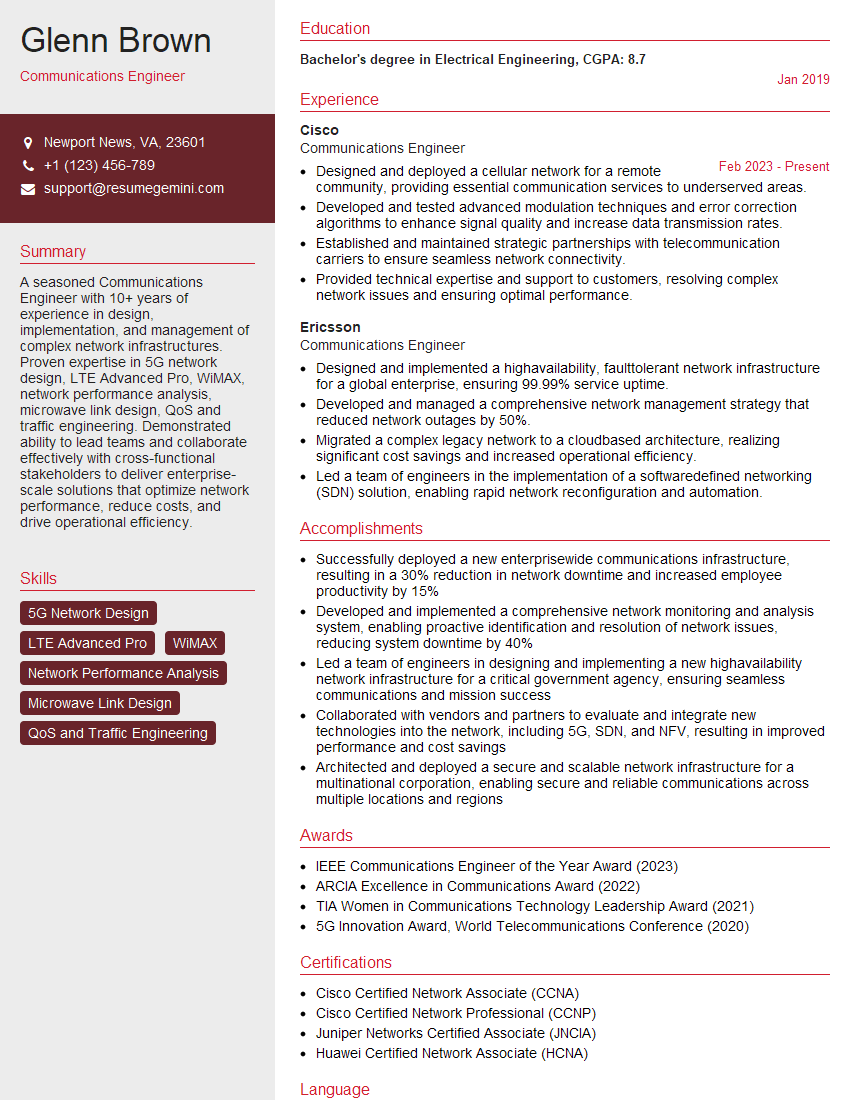

Mastering Signal Processing and Modulation is essential for a successful career in various high-demand fields, offering exciting opportunities for innovation and growth. A strong foundation in these areas significantly enhances your marketability and opens doors to advanced roles. To maximize your job prospects, crafting a compelling and ATS-friendly resume is crucial. ResumeGemini is a trusted resource that can help you build a professional and effective resume tailored to the specific requirements of your target roles. We provide examples of resumes specifically designed for candidates in Signal Processing and Modulation to guide you through the process. Take the next step towards your dream career today!
