Preparation is the key to success in any interview. In this post, we’ll explore crucial Simulation Software (ANSYS, Abaqus) interview questions and equip you with strategies to craft impactful answers. Whether you’re a beginner or a pro, these tips will elevate your preparation.

Questions Asked in Simulation Software (ANSYS, Abaqus) Interview

Q 1. Explain the difference between implicit and explicit solvers.

Implicit and explicit solvers are two fundamentally different approaches to solving finite element analysis (FEA) problems. The key difference lies in how they handle time and the solution of the governing equations.

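Implicit solvers solve the coupled system of equilibrium equations at the end of each time increment, which means assembling and solving the global stiffness matrix. Think of it like solving a complex puzzle all at once. They’re well suited to static, quasi-static, and low-frequency dynamic problems, like slowly applying a load to a structure. They can use relatively large time increments, but each increment is computationally expensive, and nonlinear problems require iterative methods like Newton-Raphson within each increment. Common implicit time-integration schemes (such as Newmark’s average-acceleration method) are unconditionally stable for linear problems, so the increment size is governed by accuracy and convergence rather than stability. An example would be simulating the deformation of a bridge under its own weight.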

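Explicit solvers advance the solution step by step, computing the state at the next time step directly from quantities already known at the current step (typically using central-difference time integration with a lumped mass matrix), so no global system of equations has to be solved. It’s like following a recipe precisely, one step at a time. They are ideal for short-duration, highly dynamic events, like a car crash or impact simulation, and they handle large deformations and complex contact robustly. Each time step is inexpensive, but the method is only conditionally stable: the time step must stay below a critical value set by the smallest element size and the material’s wave speed, which typically means very small time steps. An example would be simulating the impact of a dropped object onto a surface.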

In short: implicit solvers suit slow (static, quasi-static, or low-frequency) events and can take large increments, while explicit solvers are efficient for fast, dynamic events but must respect the stable time step.
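To make the stability difference concrete, here is a minimal Python sketch (not how ANSYS or Abaqus implement their solvers) for an undamped single-degree-of-freedom spring-mass system with natural frequency ω = √(k/m). The explicit central-difference scheme stays bounded only while Δt < 2/ω, whereas the implicit Newmark average-acceleration scheme (β = 1/4, γ = 1/2) stays bounded for any Δt. The helper `stable_dt` uses the common rough estimate Δt ≈ L_e / c with c = √(E/ρ) for a single bar-like element; real codes use more detailed, element-specific estimates. All function names are illustrative.

```python
import math


def simulate(dt, n_steps, scheme, k=1.0, m=1.0, u0=1.0):
    """Free vibration of an undamped spring-mass system released from u0 at rest.

    scheme="explicit": central difference, stable only for dt < 2/omega.
    scheme="implicit": Newmark average acceleration (beta=1/4, gamma=1/2),
    unconditionally stable for this linear problem.
    Returns the largest |u| reached during the run.
    """
    omega2 = k / m
    u, v = u0, 0.0
    a = -omega2 * u
    peak = abs(u)
    if scheme == "explicit":
        # Start-up value u(-dt) from a Taylor expansion about t = 0
        u_prev = u - dt * v + 0.5 * dt**2 * a
        for _ in range(n_steps):
            a = -omega2 * u  # acceleration from the known displacement
            u_prev, u = u, 2.0 * u - u_prev + dt**2 * a
            peak = max(peak, abs(u))
    elif scheme == "implicit":
        beta, gamma = 0.25, 0.5
        k_eff = k + m / (beta * dt**2)  # effective stiffness solved every step
        for _ in range(n_steps):
            rhs = m * (u / (beta * dt**2) + v / (beta * dt) + (0.5 / beta - 1.0) * a)
            u_new = rhs / k_eff
            a_new = (u_new - u) / (beta * dt**2) - v / (beta * dt) - (0.5 / beta - 1.0) * a
            v += dt * ((1.0 - gamma) * a + gamma * a_new)
            u, a = u_new, a_new
            peak = max(peak, abs(u))
    else:
        raise ValueError("scheme must be 'explicit' or 'implicit'")
    return peak


def stable_dt(element_length, E, rho):
    """Rough critical time step for a bar-like element: L_e / c, c = sqrt(E/rho)."""
    return element_length / math.sqrt(E / rho)


# With k = m = 1 the explicit limit is dt < 2/omega = 2.0
print(simulate(1.9, 50, "explicit"))  # stays bounded
print(simulate(2.1, 50, "explicit"))  # grows without bound
print(simulate(2.1, 50, "implicit"))  # stays bounded
print(stable_dt(0.005, 210e9, 7850.0))  # 5 mm steel element: about 9.7e-7 s
```

The implicit run stays bounded at the same Δt that destroys the explicit run, but it pays for this with a solve (here a single division, in a real model a matrix factorization) at every step.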

Q 2. Describe the various element types available in ANSYS and Abaqus and their applications.

Both ANSYS and Abaqus offer a wide variety of element types, each suited to specific applications. Choosing the correct element type is crucial for accurate and efficient simulations.

- ANSYS: ANSYS offers a vast library including solid elements (e.g., SOLID185, an 8-node linear brick; SOLID186, a 20-node quadratic brick; SOLID187, a 10-node quadratic tetrahedron), beam elements (e.g., BEAM188, for slender structures), shell elements (e.g., SHELL181, for thin structures), and many specialized elements for applications like fluids, acoustics, and electromagnetics. The choice depends on the geometry’s complexity and the desired level of detail.

- Abaqus: Similarly, Abaqus offers a range of elements including C3D8R (8-node linear brick element), C3D20R (20-node quadratic brick element), S4R (4-node linear shell element), and many more. The ‘R’ indicates reduced integration, which offers computational advantages; in first-order elements such as C3D8R and S4R, however, it admits hourglass modes (spurious zero-energy deformations) that need to be controlled with hourglass stabilization and a sufficiently fine mesh.
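Why reduced integration needs hourglass control can be checked numerically. The sketch below is a generic textbook 4-node plane-stress quadrilateral on a unit square (not Abaqus’s actual CPS4R or C3D8R formulation); it builds the element stiffness matrix with full (2×2) and reduced (1-point) Gauss integration and counts zero-energy modes. A well-behaved 2D element has only the 3 rigid-body modes; the reduced version shows 2 extra hourglass modes. Function names and material values are illustrative assumptions.

```python
import numpy as np


def q4_stiffness(n_gauss, E=1.0, nu=0.3, t=1.0):
    """Plane-stress stiffness matrix (8x8) of a 4-node quad on the unit square.

    n_gauss=2: full 2x2 Gauss integration; n_gauss=1: reduced 1-point rule.
    """
    xy = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])  # node coords
    xi_n = np.array([-1.0, 1.0, 1.0, -1.0])   # natural coordinates of the nodes
    eta_n = np.array([-1.0, -1.0, 1.0, 1.0])
    D = E / (1.0 - nu**2) * np.array([[1.0, nu, 0.0],
                                      [nu, 1.0, 0.0],
                                      [0.0, 0.0, (1.0 - nu) / 2.0]])
    if n_gauss == 1:
        points, weights = [0.0], [2.0]
    else:
        g = 1.0 / np.sqrt(3.0)
        points, weights = [-g, g], [1.0, 1.0]
    K = np.zeros((8, 8))
    for xi, w_xi in zip(points, weights):
        for eta, w_eta in zip(points, weights):
            # Derivatives of N_i = (1 + xi*xi_i)(1 + eta*eta_i)/4
            dN_dxi = 0.25 * xi_n * (1.0 + eta * eta_n)
            dN_deta = 0.25 * eta_n * (1.0 + xi * xi_n)
            J = np.array([dN_dxi @ xy, dN_deta @ xy])  # 2x2 Jacobian
            dN_dx, dN_dy = np.linalg.solve(J, np.vstack([dN_dxi, dN_deta]))
            B = np.zeros((3, 8))  # strain-displacement matrix, DOFs u0, v0, u1, ...
            B[0, 0::2] = dN_dx
            B[1, 1::2] = dN_dy
            B[2, 0::2] = dN_dy
            B[2, 1::2] = dN_dx
            K += B.T @ D @ B * np.linalg.det(J) * t * w_xi * w_eta
    return K


def zero_energy_modes(K):
    """Number of deformation modes that produce no strain energy."""
    return K.shape[0] - np.linalg.matrix_rank(K)


print(zero_energy_modes(q4_stiffness(2)))  # 3: rigid-body modes only
print(zero_energy_modes(q4_stiffness(1)))  # 5: 3 rigid-body + 2 hourglass modes
```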

Applications:

- Solid elements: Used for modeling 3D solid objects, capturing stress and strain throughout the volume. Examples include simulating an engine block or a thick-walled pressure vessel.

- Beam elements: Ideal for modeling slender structures like beams and columns, focusing on bending and axial effects. Examples include modeling building structures or aircraft wings.

- Shell elements: Used for modeling thin structures like plates and shells, considering in-plane and bending stresses. Examples include modeling thin-walled containers or car panels.

Choosing the right element type involves a thorough understanding of the problem and the underlying physics. A solid element is not always the best choice; selecting a simpler element like a beam or shell can significantly reduce computational time without losing accuracy in the right context.

Q 3. How do you handle convergence issues in a finite element analysis?

Convergence issues are common in FEA, often indicating a problem with the model, mesh, or solver settings. There’s no single solution, but a systematic approach is vital:

- Check the mesh: Poor mesh quality (e.g., excessively skewed elements, large aspect ratios) can cause convergence problems. Refinement in critical areas or remeshing is often necessary. Element size should be appropriate for the gradient of the solution; fine meshes are needed where stress concentrations are expected.

- Review boundary conditions: Incorrectly applied boundary conditions can lead to unrealistic stress states and prevent convergence. Carefully review the constraints and loads, ensuring they accurately represent the physical system.

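- Adjust solver settings: Experiment with different solver settings. In implicit solvers, adjusting parameters like convergence tolerances, the maximum number of equilibrium iterations, and the increment size can improve convergence. Explicit solvers do not iterate for equilibrium; instead, they become unstable when the time step exceeds the stable limit, so the time step must be kept below that limit.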

- Examine the material model: A poorly defined or inappropriate material model can also cause convergence problems. Ensure the material parameters are realistic and the model is suitable for the loading conditions.

- Nonlinearity: Nonlinear problems often require special handling. In implicit analyses, automatic time stepping, which cuts back the increment size when the equilibrium iterations fail to converge, is a standard remedy. Using an appropriate initial increment and load step size also helps.

- Contact conditions: If your problem includes contact, ensuring proper definition of the contact properties and algorithms is crucial. Issues such as penetration often require careful attention to surface mesh quality and contact parameters.
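An under-constrained model, one of the boundary-condition problems listed above, is a common cause of failed static solutions; solvers typically flag it with warnings about zero or very small pivots. The minimal NumPy sketch below counts near-zero eigenvalues of a stiffness matrix, each of which corresponds to a rigid-body (zero-energy) mode. The 1D bar chain and the helper names are hypothetical, and a dense eigenvalue check like this is only practical for small models.

```python
import numpy as np


def bar_stiffness(n_elem, k=1.0):
    """Assemble the stiffness matrix of a 1D chain of identical bar elements."""
    K = np.zeros((n_elem + 1, n_elem + 1))
    ke = k * np.array([[1.0, -1.0], [-1.0, 1.0]])
    for e in range(n_elem):
        K[e:e + 2, e:e + 2] += ke
    return K


def count_rigid_body_modes(K, rel_tol=1e-10):
    """Count (near-)zero eigenvalues of a symmetric stiffness matrix.

    Each one is a way the model can move without deforming, i.e. a missing
    constraint; a plain static solve has no unique solution until it is removed.
    """
    eigvals = np.linalg.eigvalsh(K)
    return int(np.sum(np.abs(eigvals) < rel_tol * np.abs(eigvals).max()))


K = bar_stiffness(4)
print(count_rigid_body_modes(K))          # 1: the unsupported bar can translate freely
print(count_rigid_body_modes(K[1:, 1:]))  # 0: node 0 fixed by removing its DOF
```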

Convergence issues require a detective approach. Systematically checking each aspect of the model will usually pinpoint the cause and allow for effective troubleshooting.

Q 4. What are the different types of boundary conditions and their application?

Boundary conditions define the interaction of the model with its surroundings. They are essential for a realistic simulation.

- Fixed Support/Constraint: Restricts the movement of nodes in one or more directions. For instance, fixing a node in all three directions (ux = 0, uy = 0, uz = 0) represents a completely fixed support.

- Displacement: Specifies the displacement of nodes in a particular direction. This can be used to simulate imposed movement or deformation. For instance, uy = 0.1 would specify a 0.1 unit displacement in the y-direction.

- Force/Pressure: Applies forces or pressures to nodes or surfaces. This is how external loads such as wind loads or fluid pressure are applied to the model; gravity is usually applied separately as a body load (acceleration).

- Temperature: Specifies the temperature at specific nodes or surfaces. This is crucial in thermal analysis simulations.

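- Symmetry: Exploits geometric and loading symmetry to reduce the model size and computational cost. Symmetry boundary conditions prevent nodes on the symmetry plane from moving normal to that plane (and, for shells and beams, from rotating about axes lying in the plane), while in-plane movement remains free.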

- Contact: Defines the interaction between surfaces in contact, accounting for friction and separation. This is often the most complex and challenging boundary condition.

Selecting the appropriate boundary conditions is paramount. Incorrect boundary conditions lead to inaccurate results and, in extreme cases, prevent convergence. Consider a simple beam example: if you forget to apply a fixed support, nothing prevents rigid-body motion, the stiffness matrix becomes singular, and a static solver will either fail or report meaningless, unbounded displacements (the beam effectively “floats away”).
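Fixed supports and prescribed displacements can both be handled by partitioning the degrees of freedom into free and prescribed sets: known displacements move to the right-hand side, and reaction forces are recovered afterwards. The sketch below uses a hypothetical two-element bar with one end fixed and the other end given a 0.1 unit displacement; commercial codes may instead enforce constraints with penalty or Lagrange-multiplier techniques. Names are illustrative.

```python
import numpy as np


def solve_with_prescribed(K, F, prescribed):
    """Solve K u = F when some DOFs have prescribed displacements.

    prescribed: dict {dof_index: value}; a value of 0.0 is a fixed support.
    Returns (u, reactions), where reactions are the forces the supports must
    supply at the prescribed DOFs, in sorted DOF order.
    """
    n = K.shape[0]
    p = np.array(sorted(prescribed))                            # prescribed DOFs
    f = np.array([i for i in range(n) if i not in prescribed])  # free DOFs
    u = np.zeros(n)
    u[p] = [prescribed[int(i)] for i in p]
    # Known displacements move to the right-hand side: K_ff u_f = F_f - K_fp u_p
    u[f] = np.linalg.solve(K[np.ix_(f, f)], F[f] - K[np.ix_(f, p)] @ u[p])
    reactions = K[np.ix_(p, f)] @ u[f] + K[np.ix_(p, p)] @ u[p] - F[p]
    return u, reactions


k = 1000.0  # stiffness of each of the two bar elements (hypothetical units)
K = np.array([[k, -k, 0.0], [-k, 2 * k, -k], [0.0, -k, k]])
u, R = solve_with_prescribed(K, np.zeros(3), {0: 0.0, 2: 0.1})
print(u)  # approximately [0, 0.05, 0.1]
print(R)  # approximately [-50, 50]: equal and opposite support reactions
```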

Q 5. Explain mesh refinement techniques and their impact on accuracy.

Mesh refinement involves increasing the density of elements in specific areas of the model. It’s a crucial technique to improve accuracy, particularly in regions with high stress gradients or complex geometries.

Techniques:

- Global refinement: Refines the entire mesh uniformly, increasing the element count everywhere. While simple, it can be computationally expensive and unnecessary in many cases.

- Local refinement (h-refinement): Refines only specific regions of the mesh, concentrating elements where higher accuracy is needed. This is more efficient than global refinement.

- p-refinement: Increases the order of the interpolation functions within the elements, improving accuracy without changing the element count (the number of degrees of freedom still grows). Each element becomes more expensive, but for smooth solutions p-refinement often converges faster per degree of freedom than h-refinement.

- Adaptive mesh refinement: Automatically refines the mesh based on the solution’s error estimate. This is more sophisticated but can lead to optimal mesh density.

Impact on accuracy:

Mesh refinement improves accuracy by reducing discretization error, the error introduced by approximating the continuous problem with a finite number of elements. However, excessive refinement can lead to diminishing returns and increased computational cost. A balance between accuracy and computational efficiency is essential. For example, in a stress concentration region, a finer mesh provides higher accuracy by capturing the steep stress gradient. A poorly refined mesh will often smooth out these gradients, leading to inaccurate predictions of stress levels and potentially failure locations.
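The smoothing effect of a coarse mesh can be reproduced with a hypothetical 1D example: a bar fixed at x = 0 under a uniform axial load q, modeled with linear elements. The exact axial force q(L - x) peaks at qL at the support, but each linear element carries a constant force equal to the element average, so the first element reports q(L - h/2). The error h/2 halves every time the element size is halved (h-refinement). This is a minimal NumPy sketch under those assumptions.

```python
import numpy as np


def max_axial_force(n_elem, L=1.0, q=1.0, EA=1.0):
    """Linear-element FE model of a bar fixed at x=0 under a uniform axial
    load q per unit length. Returns the largest element axial force."""
    h = L / n_elem
    n = n_elem + 1
    K = np.zeros((n, n))
    F = np.zeros(n)
    for e in range(n_elem):
        K[e:e + 2, e:e + 2] += EA / h * np.array([[1.0, -1.0], [-1.0, 1.0]])
        F[e:e + 2] += q * h / 2.0  # consistent nodal loads for uniform q
    u = np.zeros(n)
    u[1:] = np.linalg.solve(K[1:, 1:], F[1:])  # node 0 is fixed
    N = EA * np.diff(u) / h                    # constant axial force per element
    return N.max()


for n in (1, 2, 4, 8, 16):
    N_max = max_axial_force(n)
    print(n, N_max, 1.0 - N_max)  # exact peak is q*L = 1.0; error is h/2
```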

Q 6. What are the advantages and disadvantages of using ANSYS and Abaqus?

Both ANSYS and Abaqus are industry-standard FEA software packages, but they have distinct advantages and disadvantages:

ANSYS:

- Advantages: User-friendly interface, extensive element library, strong support for multiphysics simulations (combining different physical phenomena like structural, thermal, and fluid dynamics in one model), and extensive documentation and tutorials.

- Disadvantages: Can be expensive, potentially steeper learning curve for advanced features, licensing can be complex.

Abaqus:

- Advantages: Powerful nonlinear capabilities (for large deformations and complex material behavior), excellent for advanced material modeling, strong in explicit dynamics simulations.

- Disadvantages: Steeper learning curve compared to ANSYS, particularly for beginners, less intuitive interface for some users, often more computationally demanding for certain problems.

The best choice depends on the specific project needs. For simple linear static analysis, ANSYS might be a more accessible option. However, for highly nonlinear dynamic simulations with complex material models, Abaqus might be a better choice. Many engineers are proficient in both packages to leverage their individual strengths.

Q 7. How do you validate your simulation results?

Validating simulation results is crucial to ensure their reliability and accuracy. It involves comparing simulation predictions with experimental data or established analytical solutions.

- Experimental Validation: Ideally, you should compare your simulation results with experimental data obtained from physical testing. This provides a direct measure of the simulation’s accuracy. This might involve testing a prototype or using existing data from literature.

- Analytical Solutions: For simpler problems, analytical solutions might exist. Comparing your simulation results to these analytical solutions can provide a benchmark for accuracy. This is particularly valuable for validating the simulation setup and ensuring the model behaves as expected.

- Benchmarking: Comparing your results against published results from similar simulations can build confidence. This requires careful consideration of model details and assumptions to ensure a fair comparison.

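- Mesh Convergence Study: Performing a mesh convergence study demonstrates that the results no longer change significantly with further mesh refinement, within a reasonable tolerance. Strictly speaking, this is verification (checking that the equations are solved accurately) rather than validation (checking that the model represents reality), but it is a prerequisite for meaningful validation.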

- Sensitivity Studies: Performing sensitivity studies demonstrates the influence of model parameters on the results. This helps to understand uncertainties and their impact on the predictions.
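A mesh convergence study can be made quantitative with three meshes refined by a constant ratio r. From a quantity of interest computed on each mesh (for example, a peak stress), the observed order of convergence p and a Richardson-extrapolated estimate of the mesh-independent value follow directly. The sketch assumes monotonic convergence (otherwise the logarithm is undefined), and the values in the usage lines are synthetic, generated from f(h) = 2 + 0.3h².

```python
import math


def observed_order(f_coarse, f_medium, f_fine, r):
    """Observed order of convergence from three meshes with a constant
    refinement ratio r = h_coarse/h_medium = h_medium/h_fine (> 1).
    Assumes monotonic convergence so the ratio below is positive."""
    return math.log((f_coarse - f_medium) / (f_medium - f_fine)) / math.log(r)


def richardson_extrapolate(f_medium, f_fine, r, p):
    """Estimate of the mesh-independent value from the two finest meshes."""
    return f_fine + (f_fine - f_medium) / (r**p - 1.0)


# Synthetic results for h = 0.4, 0.2, 0.1 from f(h) = 2 + 0.3 h^2
f1, f2, f3 = 2.048, 2.012, 2.003
p = observed_order(f1, f2, f3, r=2.0)
print(p)                                       # close to 2
print(richardson_extrapolate(f2, f3, 2.0, p))  # close to 2.0
```

If the observed order is far from the element’s theoretical order, the meshes are probably not yet in the asymptotic range, and a further refinement is worth running before trusting the extrapolated value.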

Validation is an iterative process. Discrepancies between simulation and experimental results might require revisiting the model, mesh, material properties, or boundary conditions. A well-validated simulation provides high confidence in its predictions.

Q 8. Describe your experience with meshing complex geometries.

Meshing complex geometries is crucial for accurate Finite Element Analysis (FEA). It involves dividing a 3D model into smaller, simpler elements – like tiny bricks or tetrahedra – that the software can solve. The quality of the mesh directly impacts the accuracy and convergence of the simulation. A poorly meshed model can lead to inaccurate results or even simulation failure.

My experience includes meshing parts with intricate features, such as thin walls, sharp corners, and complex internal geometries. I’m proficient in using various meshing techniques in both ANSYS and Abaqus, including:

- Structured meshing: Ideal for simple geometries where element size and shape are easily controlled. Think of a simple rectangular block – perfect for structured meshing.

- Unstructured meshing: Best for complex shapes where adaptability is key. This approach allows for a more refined mesh in critical areas (e.g., stress concentrations) while maintaining a coarser mesh in less sensitive regions, saving computational resources. I’ve used this extensively for casting simulations involving intricate mold designs.

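- Mapped meshing: Maps a regular grid onto regions with simple topology (for example, four-sided faces or sweepable volumes), producing structured quadrilateral or hexahedral elements even on curved geometry. Complex parts are often partitioned into mappable regions, offering a good balance between control and adaptability. Excellent for parts with complex curves but mostly regular shapes.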

I also have significant experience using mesh refinement techniques, such as local mesh density control and adaptive meshing, to ensure accuracy in areas of high stress gradients. For instance, when simulating crack propagation, I would significantly refine the mesh around the crack tip to resolve the steep near-tip stress field; because the stress is theoretically singular there, focused meshes or quarter-point elements are often used rather than refinement alone.

In addition to mesh generation, I meticulously check mesh quality using various metrics like aspect ratio, skewness, and element quality, ensuring the mesh is suitable for the chosen analysis type. Identifying and resolving mesh issues is a critical part of my workflow, significantly impacting the reliability of the simulation results.
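Mesh-quality metrics are defined slightly differently in ANSYS and Abaqus, so the sketch below uses two common textbook definitions for a 2D triangle: aspect ratio as longest edge over shortest edge, and equiangle skewness, max((θmax - 60°)/120°, (60° - θmin)/60°), which is 0 for an equilateral triangle and approaches 1 as the element degenerates. The function name and these specific definitions are assumptions for illustration.

```python
import math


def triangle_quality(p1, p2, p3):
    """Return (aspect_ratio, equiangle_skewness) for a 2D triangle.

    aspect_ratio: longest edge / shortest edge (1.0 for equilateral).
    equiangle_skewness: 0.0 for equilateral, approaching 1.0 as it degenerates.
    """
    pts = [p1, p2, p3]
    edges = [math.dist(pts[i], pts[(i + 1) % 3]) for i in range(3)]
    angles = []
    for i in range(3):
        a, b, c = pts[i], pts[(i + 1) % 3], pts[(i + 2) % 3]
        v1 = (b[0] - a[0], b[1] - a[1])
        v2 = (c[0] - a[0], c[1] - a[1])
        cos_t = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
        angles.append(math.degrees(math.acos(max(-1.0, min(1.0, cos_t)))))
    theta_e = 60.0  # ideal angle for a triangle
    skew = max((max(angles) - theta_e) / (180.0 - theta_e),
               (theta_e - min(angles)) / theta_e)
    return max(edges) / min(edges), skew


print(triangle_quality((0, 0), (1, 0), (0.5, math.sqrt(3) / 2)))  # ideal element
print(triangle_quality((0, 0), (1, 0), (0, 1)))                   # right triangle
print(triangle_quality((0, 0), (1, 0), (0.5, 0.05)))              # sliver: poor quality
```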

Q 9. Explain your understanding of contact algorithms in FEA.

Contact algorithms in FEA are crucial for accurately modeling interactions between different parts or surfaces in a simulation. They define how these surfaces interact – from simple sliding to complex frictional behavior – and how forces are transmitted across the contact interface. Incorrectly modeling contact can drastically alter the simulation outcome.

My understanding encompasses various contact algorithms, including:

- Penalty method: This approach uses a penalty stiffness to resist penetration between contacting surfaces. It’s relatively simple to implement but can be sensitive to the penalty parameter choice: too low a value allows excessive penetration, while too high a value makes the equations ill-conditioned and can cause convergence difficulties.

- Lagrange multiplier method: Enforces the non-penetration condition exactly by introducing the contact pressure as an additional unknown, avoiding any sensitivity to a penalty parameter. It’s computationally more expensive, though, because of the extra unknowns.

- Augmented Lagrangian method: This combines aspects of both penalty and Lagrange multiplier methods, offering a good balance between robustness and computational efficiency. I often prefer this approach for its versatility.
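The trade-off between the penalty and augmented Lagrangian methods shows up even in a single-degree-of-freedom toy problem: a spring of stiffness k pushed by a force F toward a rigid wall a distance `gap` away. The pure penalty solution always leaves a penetration of (F - k·gap)/(k + k_pen), while augmented Lagrangian iterations update a contact-force estimate until the penetration falls below a tolerance. This is a conceptual sketch under those assumptions, not how ANSYS or Abaqus implement contact internally; all names are hypothetical.

```python
def penalty_contact(k, F, gap, k_pen):
    """Pure penalty method. Returns (u, penetration, contact_force)."""
    u = F / k
    if u <= gap:  # wall never reached: no contact
        return u, 0.0, 0.0
    # Contact active: k*u + k_pen*(u - gap) = F
    u = (F + k_pen * gap) / (k + k_pen)
    pen = u - gap
    return u, pen, k_pen * pen


def augmented_lagrangian_contact(k, F, gap, k_pen, tol=1e-10, max_iter=100):
    """Augmented Lagrangian method.
    Returns (u, penetration, contact_force, iterations)."""
    lam = 0.0  # current contact-force (multiplier) estimate
    for it in range(1, max_iter + 1):
        # Equilibrium for fixed lam: k*u + (lam + k_pen*(u - gap)) = F
        u = (F - lam + k_pen * gap) / (k + k_pen)
        if lam + k_pen * (u - gap) <= 0.0:  # contact force would be tensile: open
            u = F / k
        pen = max(0.0, u - gap)
        if pen < tol:
            return u, pen, lam + k_pen * pen, it
        lam += k_pen * pen  # augmentation: accumulate the contact force
    return u, pen, lam + k_pen * pen, max_iter


# k = 1000, F = 200, gap = 0.1: the exact contact force is F - k*gap = 100
print(penalty_contact(1000.0, 200.0, 0.1, 1e5))               # small but nonzero penetration
print(augmented_lagrangian_contact(1000.0, 200.0, 0.1, 1e5))  # penetration below tol
```

Each augmentation reduces the penetration by a factor of k/(k + k_pen), so a moderate penalty stiffness plus a few augmentations can reach the same accuracy as a very stiff, ill-conditioned penalty.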

Furthermore, I have experience modeling various contact behaviors, such as:

- Frictionless contact: Simplest scenario, where surfaces slide freely without friction. Useful for initial approximations or specific scenarios.

- Frictional contact: Models the effects of friction using Coulomb’s law, accounting for static and dynamic friction coefficients. This significantly impacts results in assemblies experiencing sliding or rubbing.

- Bonded contact: Models surfaces that are perfectly bonded together, with no sliding or separation. Used to represent welded or glued joints, or other scenarios where parts are rigidly connected.

Selecting the appropriate contact algorithm and defining the contact properties correctly is vital for obtaining realistic and accurate results. For example, improper modeling of friction in a gear simulation could significantly misrepresent the forces and wear experienced.

Q 10. How do you determine the appropriate material properties for your simulation?

Determining the appropriate material properties is paramount to a successful FEA simulation. Incorrect material properties lead directly to inaccurate and unreliable results. The selection process involves a combination of theoretical knowledge, experimental data, and engineering judgment.

My approach involves the following steps:

- Identify relevant material: The first step is to precisely define the material used in the component. Is it a standard grade of steel, a specific alloy, or a composite material? This requires thorough review of design documents and specifications.

- Gather material data: Once the material is identified, the necessary material properties are collected. Sources include material datasheets, technical literature, and experimental testing (when available). This usually includes Young’s modulus (elasticity), Poisson’s ratio, yield strength, ultimate tensile strength, density, and thermal properties. For nonlinear materials, further data might be required, such as stress-strain curves obtained from tensile tests.

- Validate material data: Obtained material data should be critically evaluated for relevance and consistency. Outliers or inconsistencies must be resolved. Experimental data might need statistical analysis for reliability.

- Select appropriate material model: The choice of material model depends on the material behavior and the analysis type. Linear elastic models are sufficient for simple scenarios, while nonlinear models (plasticity, hyperelasticity, viscoelasticity) are needed for more complex behaviors. This requires understanding the limitations of each model.

- Implement material properties in FEA software: The gathered and validated material properties and selected model are entered into the FEA software. This often involves defining material cards or using built-in material libraries.

For instance, simulating a crash test requires accurate data describing plastic deformation, which means using a nonlinear (elastic-plastic) material model calibrated against experimental stress-strain data. Approximations here can lead to vastly different energy absorption estimates.
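One common step when entering plasticity data is converting test results. Abaqus, for example, expects metal plasticity data as true stress versus true (logarithmic) plastic strain, while tensile-test reports usually give engineering values. The conversion sketched below assumes constant volume and is valid only up to the onset of necking (uniform elongation); the data points and E in the usage lines are hypothetical.

```python
import math


def to_true_plastic(eng_strain, eng_stress, E):
    """Convert engineering stress-strain points (valid up to necking) into
    (true plastic strain, true stress) pairs."""
    table = []
    for e_eng, s_eng in zip(eng_strain, eng_stress):
        s_true = s_eng * (1.0 + e_eng)   # constant-volume assumption
        e_true = math.log(1.0 + e_eng)   # logarithmic (true) strain
        e_plastic = e_true - s_true / E  # remove the elastic part
        # At the yield point the true/engineering conversion can make this
        # slightly negative; the first plastic strain should be exactly zero.
        table.append((max(e_plastic, 0.0), s_true))
    return table


# Hypothetical tensile data (MPa): yield point first, E = 200 GPa
for ep, st in to_true_plastic([0.00125, 0.05, 0.10], [250.0, 350.0, 400.0], 200000.0):
    print(f"{st:8.2f} MPa   plastic strain {ep:.5f}")
```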

Q 11. What is the difference between static and dynamic analysis?

Static and dynamic analyses are two fundamental types of FEA, differing primarily in how they handle time and inertia effects.

Static analysis assumes that loads are applied slowly enough (or remain constant) that inertia and damping effects are negligible, so the resulting displacements and stresses do not depend on time. It’s used for analyzing structures under steady-state conditions, such as a bridge under its own weight or a beam supporting a constant load. A linear static analysis is computationally efficient because it solves for a single equilibrium state.

Dynamic analysis considers the effect of time-dependent loads and inertia forces. It’s used when time-varying loads or inertial effects are significant, such as earthquake simulations, vehicle crash tests, or vibration analysis of machinery. This type of analysis is more complex computationally as it tracks the response of the structure over time. Techniques include:

- Modal analysis: Determining the natural frequencies and mode shapes of a structure, essential for understanding its vibration characteristics.

- Transient dynamic analysis: Solving for the response of a structure to time-varying loads, such as an impact.

- Harmonic analysis: Analyzing the steady-state response of a structure to sinusoidal loading, like the unbalance forces in a rotating machine.

Choosing between static and dynamic analysis depends heavily on the nature of the problem and the potential influence of time-dependent loads and inertial effects. A simple cantilever beam under a static load is well-suited for static analysis, whereas an impact event would necessitate a dynamic analysis.
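Whether inertia matters can be judged with the steady-state dynamic amplification factor of a damped single-degree-of-freedom system under harmonic loading, DAF = 1 / √((1 - r²)² + (2ζr)²), where r is the ratio of forcing frequency to natural frequency and ζ is the damping ratio. Well below resonance the response is essentially static (DAF ≈ 1), but near r = 1 it can be many times larger, which a static analysis cannot capture. The helper name is illustrative.

```python
import math


def dynamic_amplification(freq_ratio, damping_ratio):
    """Steady-state amplitude / static deflection for a damped SDOF system
    under a harmonic force; freq_ratio = forcing / natural frequency.
    (Undamped and exactly at resonance, the response is unbounded.)"""
    r, z = freq_ratio, damping_ratio
    return 1.0 / math.sqrt((1.0 - r**2) ** 2 + (2.0 * z * r) ** 2)


for r in (0.1, 0.5, 0.9, 1.0, 2.0):
    print(r, dynamic_amplification(r, 0.05))  # 5% damping
```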

Q 12. How do you handle nonlinearity in your simulations?

Nonlinearity in FEA arises when the response of a structure is not proportional to the applied loads. This contrasts with linear analysis, where proportionality exists. Nonlinearity can stem from various sources, such as material nonlinearity (plasticity, hyperelasticity), geometric nonlinearity (large displacements or rotations), and contact nonlinearity (interactions between surfaces).

Handling nonlinearity requires specialized techniques:

- Incremental loading: Applying the load in small increments to track the response gradually. This is fundamental in most nonlinear analyses.

- Iterative solution procedures: At each load increment, an iterative process is used to solve the nonlinear equations. Newton-Raphson methods are commonly employed, refining the solution until convergence criteria are met.

- Arc-length methods: These techniques help navigate through limit points (e.g., buckling) that can hinder convergence in traditional Newton-Raphson methods. They are especially helpful for highly nonlinear problems.

- Proper material model selection: Choosing an appropriate constitutive model that accurately captures the nonlinear behavior of the material is crucial. Using an incorrect model can lead to inaccurate or unstable solutions.

For example, simulating metal forming requires accounting for plasticity and large deformations, demanding advanced nonlinear techniques and suitable material models. Ignoring nonlinearity in this context would produce grossly wrong forces and stresses (a linear elastic model, for instance, predicts stresses far above the yield strength and no permanent deformation), leading to faulty design conclusions.
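The incremental Newton-Raphson procedure described above can be sketched for a single hypothetical hardening spring with internal force R(u) = k·u + k3·u³, a stand-in for a nonlinear structure rather than any ANSYS or Abaqus API. The load is applied in equal increments, and each increment iterates with the tangent stiffness until the out-of-balance force is small. With k = k3 = 1 and a total load of 10, the converged displacement is 2 (since 2³ + 2 = 10), whereas a linear analysis using the initial stiffness would predict 10.

```python
def incremental_newton(k, k3, F_total, n_increments=10, tol=1e-10, max_iter=25):
    """Trace the load-displacement path of a spring with R(u) = k*u + k3*u**3.

    The load is applied in equal increments; each increment is solved with
    Newton-Raphson iterations. Returns [(load, displacement), ...].
    """
    u = 0.0
    path = [(0.0, 0.0)]
    for i in range(1, n_increments + 1):
        F = F_total * i / n_increments  # target load for this increment
        for _ in range(max_iter):
            residual = F - (k * u + k3 * u**3)  # out-of-balance force
            if abs(residual) < tol * max(1.0, abs(F)):
                break
            K_t = k + 3.0 * k3 * u**2  # tangent stiffness dR/du
            u += residual / K_t
        else:
            # A real solver would cut back the increment here
            raise RuntimeError(f"increment {i} did not converge")
        path.append((F, u))
    return path


for F, u in incremental_newton(k=1.0, k3=1.0, F_total=10.0):
    print(f"F = {F:5.1f}   u = {u:.6f}")
```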

Q 13. Explain your experience with different solution strategies (e.g., direct, iterative).

Solution strategies in FEA refer to the methods used to solve the large system of equations that arise from the finite element discretization. The choice between direct and iterative solvers influences computational efficiency and memory requirements.

Direct solvers perform a complete factorization of the global stiffness matrix (modern implementations use sparse factorization). They are generally robust and reliable, delivering a solution whenever the problem is well-posed, even when the matrix is ill-conditioned (for example, models with thin shells, poorly shaped elements, or large stiffness contrasts). However, memory and computational cost grow quickly with model size, which can become limiting for very large 3D models.

Iterative solvers generate a sequence of approximate solutions, refining them until a convergence criterion is satisfied. They are memory-efficient and well suited to large-scale problems, especially bulky 3D solid models with well-conditioned matrices. However, they may converge slowly or not at all for ill-conditioned problems, and they often depend on a good preconditioner for efficiency and robustness.

My experience includes using both direct (e.g., sparse direct and frontal solvers) and iterative (e.g., conjugate gradient, GMRES) solvers in ANSYS and Abaqus. The choice between them depends on factors such as problem size, matrix conditioning, and available memory. For smaller or ill-conditioned problems, a direct solver offers a quick and reliable solution, while for very large models, iterative solvers are often the more practical choice. I often employ preconditioning techniques for iterative solvers to accelerate convergence; the convergence tolerance then determines the accuracy of the final solution.
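The two solver families can be compared on a small symmetric positive-definite system: a hypothetical 1D bar stiffness matrix fixed at one end. The sketch below implements the preconditioned conjugate gradient method with the simplest possible (Jacobi, or diagonal) preconditioner and compares it with NumPy’s dense direct solver. Commercial iterative solvers use sparse storage and more sophisticated preconditioners; this only illustrates the algorithm.

```python
import numpy as np


def pcg(A, b, tol=1e-10, max_iter=1000):
    """Conjugate gradient with a Jacobi (diagonal) preconditioner, for SPD A.
    Stops when ||r|| <= tol * ||b||. Returns (x, iterations)."""
    x = np.zeros_like(b)
    r = b - A @ x
    M_inv = 1.0 / np.diag(A)  # Jacobi preconditioner
    z = M_inv * r
    p = z.copy()
    rz = r @ z
    b_norm = np.linalg.norm(b)
    for it in range(1, max_iter + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) <= tol * b_norm:
            return x, it
        z = M_inv * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p  # new search direction
        rz = rz_new
    return x, max_iter


# Hypothetical bar: 50 free DOFs, fixed at one end, unit load at every node
n = 50
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
A[-1, -1] = 1.0  # free end
b = np.ones(n)
x_it, iters = pcg(A, b, tol=1e-12)
x_direct = np.linalg.solve(A, b)  # dense direct solve (LU factorization)
print(iters, np.max(np.abs(x_it - x_direct)))
```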

Q 14. Describe your experience with post-processing and visualization of results.

Post-processing and visualization are crucial for extracting meaningful information from FEA results. It involves analyzing the computed data and presenting it in a clear and understandable manner. This includes examining stress and strain distributions, displacements, reaction forces, and other quantities of interest.

My experience involves using the post-processing capabilities of both ANSYS and Abaqus to generate various visualizations, such as:

- Contour plots: Displaying the distribution of stress, strain, or other variables over the model’s surface or cross-sections, enabling quick identification of critical areas.

- Deformed shapes: Showing the magnitude and direction of displacements to understand structural deformation.

- X-Y plots: Graphing force-displacement curves, stress-strain curves, or time histories of various variables for detailed analysis.

- Animations: Creating animations of the model’s behavior over time to visualize dynamic events like impact or vibration.

Beyond visualization, I perform quantitative analysis of the results, extracting specific data points, calculating maximum stress values, and generating reports. Data extraction often involves using scripting capabilities within the FEA software for automation and efficiency. This ensures the results can be used for design modifications and decision-making. For example, in a pressure vessel design, post-processing helps to identify areas of high stress and guide design adjustments to mitigate potential failure.
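As a minimal sketch of scripted post-processing, assume the nodal stress components have already been exported (for example, to a CSV file) as rows of [sxx, syy, szz, sxy, syz, szx]; that column order and the helper names are assumptions, not a format used by ANSYS or Abaqus. The snippet computes the von Mises stress and reports where it peaks. Both programs already report von Mises stress directly; a script like this is useful for custom checks or batch reporting.

```python
import numpy as np


def von_mises(stresses):
    """Von Mises stress from an (n, 6) array of [sxx, syy, szz, sxy, syz, szx]."""
    s = np.atleast_2d(np.asarray(stresses, dtype=float))
    sxx, syy, szz, sxy, syz, szx = s.T
    return np.sqrt(0.5 * ((sxx - syy) ** 2 + (syy - szz) ** 2 + (szz - sxx) ** 2)
                   + 3.0 * (sxy**2 + syz**2 + szx**2))


def peak_von_mises(node_ids, stresses):
    """Return (node_id, value) of the largest von Mises stress."""
    vm = von_mises(stresses)
    i = int(np.argmax(vm))
    return node_ids[i], float(vm[i])


# Hypothetical exported data (MPa) for three nodes
nodes = [11, 12, 13]
stress = [[100, 0, 0, 0, 0, 0],    # uniaxial tension
          [0, 0, 0, 100, 0, 0],    # pure shear
          [50, 50, 50, 0, 0, 0]]   # hydrostatic: zero von Mises
print(peak_von_mises(nodes, stress))
```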

Q 15. How do you perform modal analysis using ANSYS or Abaqus?

Modal analysis is a crucial technique used to determine the natural frequencies and mode shapes of a structure. Imagine plucking a guitar string: it vibrates at specific frequencies, which are its natural frequencies. Modal analysis helps us find these frequencies and the corresponding shapes (mode shapes) for a given structure, crucial for understanding its dynamic behavior under vibration.

In ANSYS, we typically use the Modal analysis type within the Mechanical APDL or Workbench environment. This involves defining the geometry, material properties (including density, which defines the mass matrix), and boundary conditions. Then, we solve the eigenvalue problem: the eigenvalues are the squared circular natural frequencies (ω², with f = ω/2π in Hz) and the eigenvectors are the mode shapes. For example, analyzing a bridge’s structure helps identify resonant frequencies to avoid potential failures under dynamic loads like wind or traffic.

Abaqus employs a similar approach using its Frequency procedure. Both ANSYS and Abaqus allow for different solution methods, such as subspace iteration or Lanczos methods, depending on the model’s size and complexity. Post-processing involves visualizing the mode shapes to identify regions of high relative displacement or stress, aiding in design optimization; because mode shapes are normalized, their amplitudes are relative rather than absolute values.
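The eigenvalue problem behind modal analysis, Kφ = ω²Mφ, can be sketched in a few lines of NumPy for a small model with a lumped (diagonal) mass matrix. This is a toy dense solve for illustration, not the Lanczos or subspace eigensolvers used by ANSYS and Abaqus; the 2-DOF spring-mass chain in the usage lines is hypothetical.

```python
import numpy as np


def natural_frequencies(K, m_diag):
    """Natural frequencies (Hz) and mass-normalized mode shapes (columns)
    of K phi = omega^2 M phi, for a lumped (diagonal) mass matrix M."""
    m_inv_sqrt = 1.0 / np.sqrt(np.asarray(m_diag, dtype=float))
    # Symmetric standard form: (M^-1/2 K M^-1/2) psi = omega^2 psi
    K_t = K * np.outer(m_inv_sqrt, m_inv_sqrt)
    omega2, psi = np.linalg.eigh(K_t)     # eigenvalues in ascending order
    omega2 = np.clip(omega2, 0.0, None)   # remove tiny negative round-off
    phi = psi * m_inv_sqrt[:, None]       # back to physical DOFs
    return np.sqrt(omega2) / (2.0 * np.pi), phi


# Hypothetical 2-DOF chain: ground -k- m1 -k- m2, with k = 1, m1 = 2, m2 = 1
K = np.array([[2.0, -1.0], [-1.0, 1.0]])
f, phi = natural_frequencies(K, [2.0, 1.0])
print(f)    # natural frequencies in Hz, lowest first
print(phi)  # each column is a mode shape
```

The mode shapes come out mass-normalized (φᵀMφ = I), which is one common convention; it is another reason their amplitudes should be read as relative, not absolute.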

Q 16. Explain your experience with fatigue analysis.

Fatigue analysis is a critical aspect of engineering design, focusing on predicting the lifespan of a component subjected to cyclic loading. Imagine a metal component repeatedly bending – eventually, it will fail due to fatigue. Fatigue analysis helps determine how many cycles a component can endure before failure, considering various factors like stress amplitude, mean stress, and material properties.

My experience includes utilizing both ANSYS and Abaqus for fatigue analysis. We typically employ the stress-life approach (S-N curves), usually for high-cycle fatigue, or the strain-life approach (ε-N curves), usually for low-cycle fatigue with significant plasticity, depending on the material and loading conditions. In ANSYS, I’ve used the Fatigue Tool in Mechanical along with the results from a static or dynamic analysis. Abaqus offers related capabilities through user subroutines and its direct cyclic procedure for low-cycle fatigue, and its results are often passed to dedicated fatigue software such as fe-safe. I’ve worked with various S-N curves obtained from experimental testing or literature, incorporating them into the analysis. For example, I successfully predicted the fatigue life of a turbine blade subjected to varying thermal and centrifugal loads, leading to modifications that significantly increased its service life.

Critical to successful fatigue analysis is proper meshing, particularly in high-stress regions, and selecting appropriate material models that capture the material’s fatigue behavior. Accurate load definitions are also paramount. Post-processing often involves generating fatigue life contours or cumulative damage plots to understand component life under complex loading histories.
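The stress-life workflow can be sketched by hand: Basquin’s relation σa = σf′(2Nf)^b gives the life for a fully reversed stress amplitude, a Goodman correction converts an amplitude with nonzero mean stress into an equivalent fully reversed one, and the Palmgren-Miner rule sums damage over load blocks (failure is predicted when the sum reaches 1). These are common textbook choices, not necessarily what a given ANSYS or Abaqus workflow uses, and the material constants in the usage lines are hypothetical.

```python
def cycles_to_failure(stress_amp, mean_stress, sigma_f, b, sigma_u):
    """Cycles to failure from Basquin's law with a Goodman mean-stress correction.

    sigma_f: fatigue strength coefficient, b: Basquin exponent (negative),
    sigma_u: ultimate tensile strength; all stresses in the same units.
    """
    if mean_stress >= sigma_u:
        raise ValueError("mean stress must be below the ultimate strength")
    # Goodman: equivalent fully reversed stress amplitude
    sigma_ar = stress_amp / (1.0 - mean_stress / sigma_u)
    # Basquin: sigma_ar = sigma_f * (2 N_f)**b, solved for N_f (cycles)
    return 0.5 * (sigma_ar / sigma_f) ** (1.0 / b)


def miner_damage(blocks, **material):
    """Palmgren-Miner damage sum over (n_cycles, stress_amp, mean_stress) blocks."""
    return sum(n / cycles_to_failure(sa, sm, **material) for n, sa, sm in blocks)


mat = dict(sigma_f=1000.0, b=-0.1, sigma_u=800.0)  # hypothetical constants (MPa)
print(cycles_to_failure(200.0, 0.0, **mat))        # fully reversed, 200 MPa amplitude
print(miner_damage([(1e6, 200.0, 0.0), (1e4, 300.0, 100.0)], **mat))  # damage sum
```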

Q 17. How do you perform a buckling analysis?

Buckling analysis predicts the critical load at which a structural component loses stability and undergoes significant deformation. Think of a slender column – when subjected to sufficient compressive load, it will suddenly buckle, rather than simply compressing further. Buckling analysis identifies this critical load and the associated buckling mode shape.

In ANSYS, we use the Eigenvalue Buckling analysis type, which is a linear eigenvalue analysis. The eigenvalues are load multipliers: multiplying the applied reference load by an eigenvalue gives a critical buckling load, and the corresponding eigenvectors are the buckling mode shapes. Abaqus performs the same type of analysis with its Buckle step, a linear perturbation procedure. We define the geometry, material properties, boundary conditions, and apply the compressive reference load(s). The software then calculates the critical load multipliers and shows the mode shapes, which help engineers understand which areas are prone to buckling. For instance, in designing a tall building, buckling analysis is paramount to ensure structural integrity against wind and seismic loads.

Accurate meshing, particularly in regions with potential stress concentrations, is vital for reliable results. Additionally, proper application of boundary conditions accurately representing the support system is crucial. Post-processing usually involves visualizing the buckling mode shape to identify areas most vulnerable to buckling, allowing for design adjustments to enhance structural stability.
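A classic hand check for an eigenvalue buckling result is Euler’s formula for an ideal column, P_cr = π²EI/(KL)², where K is the effective-length factor for the end conditions. Like linear eigenvalue buckling in ANSYS or Abaqus, it assumes a perfect, elastic column, so it tends to overestimate the capacity of real, imperfect structures. The function name and the example column are illustrative.

```python
import math


def euler_critical_load(E, I, L, K=1.0):
    """Euler buckling load of an ideal elastic column, P_cr = pi^2 E I / (K L)^2.

    K is the effective-length factor: 1.0 pinned-pinned, 2.0 fixed-free
    (cantilever), 0.5 fixed-fixed, about 0.7 fixed-pinned.
    """
    return math.pi**2 * E * I / (K * L) ** 2


# Hypothetical steel rod: d = 50 mm, L = 2 m, pinned at both ends (SI units)
d = 0.05
I = math.pi * d**4 / 64.0  # second moment of area of a solid circle
P_cr = euler_critical_load(210e9, I, 2.0)
print(P_cr)  # about 1.6e5 N
# If the FE model matches these ideal conditions, an eigenvalue buckling run
# with a 1000 N reference load should report a first multiplier near P_cr / 1000.
```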

Q 18. Describe your experience with thermal analysis.

Thermal analysis involves predicting the temperature distribution within a component or system under thermal loads and boundary conditions, such as internal heat generation, convection, and radiation, with heat conducted through the solid itself. Imagine heating a metal plate – the temperature won’t be uniform; thermal analysis helps determine this temperature distribution.

My experience includes extensive use of ANSYS’s thermal analysis capabilities within its Mechanical APDL or Workbench environment, and Abaqus’s Heat Transfer procedure. I’ve modeled various scenarios, including steady-state and transient heat transfer, involving conduction, convection, and radiation. For instance, I performed thermal analysis of an electronic component to predict its temperature rise under operating conditions, optimizing its cooling system. Specific approaches involved defining material properties, thermal loads, boundary conditions, and selecting appropriate elements for the finite element method (FEM) mesh.

A key aspect is selecting the right element types based on the problem complexity and selecting appropriate boundary conditions representing realistic heat exchange with the environment. For transient problems, accurate time-step selection is crucial. Post-processing commonly involves generating temperature contour plots, temperature gradients, and heat flux maps to understand the temperature distribution and heat transfer mechanisms within the component or system. This aids in determining potential hotspots or areas of thermal stress.
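The time-step point can be illustrated with a minimal 1D transient conduction model: linear elements, a lumped heat capacity, fixed temperatures at both ends, and backward Euler time integration. Backward Euler is unconditionally stable, so the time step is chosen for accuracy rather than stability. All parameter values are hypothetical and nondimensional; after enough steps the temperature approaches the linear steady-state profile.

```python
import numpy as np


def transient_conduction_1d(n_elem=10, L=1.0, k=1.0, rho_c=1.0,
                            T_left=100.0, T_right=0.0, dt=0.01, n_steps=2000):
    """1D linear-element heat conduction with fixed end temperatures,
    integrated in time with backward Euler. Returns (x, T) at the final time."""
    h = L / n_elem
    n = n_elem + 1
    K = np.zeros((n, n))  # conductivity matrix
    C = np.zeros(n)       # lumped heat capacity per node
    for e in range(n_elem):
        K[e:e + 2, e:e + 2] += k / h * np.array([[1.0, -1.0], [-1.0, 1.0]])
        C[e:e + 2] += rho_c * h / 2.0
    T = np.zeros(n)  # initial temperature 0 everywhere
    T[0], T[-1] = T_left, T_right
    free = np.arange(1, n - 1)
    # Backward Euler: (C/dt + K) T_new = (C/dt) T_old, restricted to free nodes
    A = np.diag(C[free] / dt) + K[np.ix_(free, free)]
    bc_load = -K[np.ix_(free, [0, n - 1])] @ np.array([T_left, T_right])
    for _ in range(n_steps):
        T[free] = np.linalg.solve(A, C[free] / dt * T[free] + bc_load)
    return np.linspace(0.0, L, n), T


x, T = transient_conduction_1d()
print(np.round(T, 3))  # close to the linear profile 100 * (1 - x)
```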

Q 19. How do you perform CFD simulations using ANSYS Fluent or Abaqus CFD?

Computational Fluid Dynamics (CFD) simulates fluid flow and heat transfer. Imagine air flowing over an airplane wing – CFD helps predict the pressure distribution, velocity, and temperature fields. ANSYS Fluent is a widely used CFD solver.

My experience using ANSYS Fluent focuses on simulating various fluid flow phenomena, including incompressible and compressible flows, laminar and turbulent flows, and heat transfer. The process generally begins with defining the geometry, meshing the domain, defining material properties (density, viscosity, etc.), specifying boundary conditions (inlet, outlet, walls), and selecting a turbulence model. We then solve the governing Navier-Stokes equations to obtain the velocity, pressure, and temperature fields. Examples of simulations include analyzing the flow around an airfoil to optimize its design or simulating flow in a pipe to understand pressure drop and heat transfer. Abaqus CFD, while capable, is less commonly used for general-purpose CFD compared to ANSYS Fluent.

Fluent offers various solvers and turbulence models to handle different flow regimes. Post-processing involves visualizing the flow field using contour plots, streamlines, and vector plots to analyze pressure and velocity distributions, and assess heat transfer rates. Meshing is critical; a fine mesh is needed in regions with high flow gradients to obtain accurate results.
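For the pipe-flow example, a hand calculation is a useful sanity check on the CFD pressure drop. The sketch below assumes fully developed flow in a smooth pipe. It applies the Darcy-Weisbach equation, Δp = f·(L/D)·ρV²/2, with f = 64/Re for laminar flow and the Blasius correlation f = 0.316·Re^(-1/4) for turbulent flow. The fluid properties and dimensions in the usage line are assumptions, roughly water at room temperature.

```python
def reynolds(rho, velocity, diameter, mu):
    """Pipe Reynolds number based on diameter."""
    return rho * velocity * diameter / mu


def darcy_friction_factor(re):
    """Smooth-pipe friction factor: 64/Re if laminar, Blasius if turbulent."""
    if re < 2300.0:
        return 64.0 / re
    if re < 4000.0:
        raise ValueError("Transitional flow: hand correlations are unreliable here")
    if re > 1.0e5:
        raise ValueError("Blasius correlation is intended for Re up to about 1e5")
    return 0.316 * re ** -0.25


def pressure_drop(rho, velocity, diameter, mu, length):
    """Darcy-Weisbach pressure drop (Pa) for fully developed flow, plus Re."""
    re = reynolds(rho, velocity, diameter, mu)
    f = darcy_friction_factor(re)
    return f * (length / diameter) * 0.5 * rho * velocity**2, re


# Usage (assumed values): water, 20 mm bore, 1 m/s mean velocity, 1 m of pipe
dp, re = pressure_drop(rho=998.0, velocity=1.0, diameter=0.02, mu=1.0e-3, length=1.0)
print(f"Re = {re:.0f}, pressure drop = {dp:.0f} Pa per meter")
```

Entrance effects, wall roughness, and fittings are ignored here. The estimate is therefore a plausibility check for the fully developed region, not a replacement for the simulation.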

Q 20. Explain your understanding of turbulence modeling.

Turbulence modeling is crucial in CFD simulations because most real-world flows are turbulent, characterized by chaotic, fluctuating flow patterns. Imagine a river’s flow – it’s rarely smooth; it’s turbulent. Turbulence models are mathematical approximations that capture the effects of turbulence on the mean flow characteristics.

My understanding includes using various turbulence models in ANSYS Fluent, such as the k-ε model, the k-ω SST model, and the Reynolds Stress Model (RSM). The k-ε model is a relatively simple two-equation model suitable for many high-Reynolds-number flows, while the k-ω SST model offers improved accuracy near walls. RSM solves transport equations for the individual Reynolds stresses, which can improve accuracy in strongly swirling or anisotropic flows at a higher computational cost. The choice of model depends on factors such as the flow regime, geometric complexity, and available computational power. For instance, I’ve used the k-ω SST model for boundary layer flows over complex geometries because its near-wall accuracy is crucial for predicting drag and lift.
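The near-wall advantage of k-ω SST depends on resolving the viscous sublayer, for which a first-cell y+ of about 1 is commonly recommended. The sketch below shows a rough way to size that first cell before meshing. It assumes the turbulent flat-plate skin-friction correlation Cf = 0.026·Re_x^(-1/7), which is only one of several empirical fits. The air properties, speed, and reference length are assumed values, and the actual y+ should still be checked in the solved result.

```python
import math


def first_cell_height(y_plus, rho, velocity, length, mu):
    """Estimate the wall distance (m) of the first cell for a target y+.

    Assumes a turbulent flat-plate skin-friction correlation (an empirical fit).
    """
    re_x = rho * velocity * length / mu
    cf = 0.026 * re_x ** (-1.0 / 7.0)        # skin-friction coefficient
    tau_wall = 0.5 * cf * rho * velocity**2   # wall shear stress (Pa)
    u_tau = math.sqrt(tau_wall / rho)         # friction velocity (m/s)
    return y_plus * mu / (rho * u_tau)        # from y+ = rho * u_tau * y / mu


# Usage (assumed values): sea-level air at 50 m/s over a 1 m chord, target y+ = 1
h = first_cell_height(1.0, rho=1.225, velocity=50.0, length=1.0, mu=1.81e-5)
print(f"First cell height for y+ = 1: {h:.2e} m")
```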

Understanding the limitations of each model is critical. No model is perfect, and the choice often involves a trade-off between accuracy and computational cost. Results must be carefully interpreted and validated with experimental data or other simulations whenever possible. Accurate selection of turbulence models is critical for obtaining reliable CFD results.

Q 21. Describe your experience with multiphysics simulations.

Multiphysics simulations involve coupling different physical phenomena within a single simulation. Imagine a heated pipe carrying a fluid – the fluid flow affects the temperature distribution, and vice versa. Multiphysics simulations account for these interactions.

My experience encompasses various multiphysics simulations, including fluid-structure interaction (FSI), thermal-structural analysis, and electro-thermal analysis. I’ve used ANSYS Workbench’s multiphysics capabilities extensively to couple different physics solvers, such as Fluent (for CFD) and Mechanical (for structural analysis). For example, I conducted FSI analysis to study the interaction between a flowing fluid and a flexible pipe, assessing the structural integrity under dynamic fluid loading. Abaqus also provides capabilities for multiphysics simulations through its coupled field analysis features.

Key aspects include proper definition of interfaces between different physics domains and selection of appropriate coupling schemes. Convergence can be challenging in multiphysics simulations, requiring careful attention to solver settings and mesh resolution. Post-processing involves visualizing the coupled fields to understand the interplay between different physics, providing a holistic view of the system’s behavior. Multiphysics simulations are invaluable in realistically modeling complex engineering systems.
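The following toy sketch shows why the coupling scheme and solver settings matter. It sets up a two-way partitioned coupling between a "fluid" and a "structure" solver, each reduced to a one-line hypothetical model. The fluid load drops as the wall deflects (p = p0 - c·u), and the structure is a linear spring (u = p/k). When c > k, plain fixed-point iteration between the two solvers diverges, but constant under-relaxation of the displacement restores convergence. The functions and coefficients are illustrative assumptions, not any product's coupling API.

```python
def fluid_pressure(u, p0=1.0, c=2.0):
    """Hypothetical fluid model: the load on the wall falls as it deflects."""
    return p0 - c * u


def structure_displacement(p, k=1.0):
    """Hypothetical structural model: a linear spring."""
    return p / k


def couple(omega, tol=1e-10, max_iter=200):
    """Partitioned two-way coupling with constant under-relaxation factor omega.

    Returns (converged displacement, iterations used); raises if not converged.
    """
    u = 0.0
    for it in range(1, max_iter + 1):
        u_new = structure_displacement(fluid_pressure(u))  # one fluid + one structure solve
        u_next = (1.0 - omega) * u + omega * u_new        # relaxed interface update
        if abs(u_next - u) < tol:
            return u_next, it
        u = u_next
    raise RuntimeError(f"Coupling did not converge with omega = {omega}")


u, n = couple(omega=0.5)
print(f"omega = 0.5: u = {u:.6f} after {n} iterations (exact value 1/3)")
try:
    couple(omega=1.0)
except RuntimeError as err:
    print(err)
```

For this linear toy problem, the ideal factor is ω = k/(k + c) = 1/3, which converges almost immediately. Adaptive schemes such as Aitken relaxation try to estimate a good factor during the iterations instead of fixing it in advance.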

Q 22. How do you handle uncertainties in your input parameters?

Handling uncertainties in input parameters is crucial for reliable simulation results, because real-world materials and geometries rarely match idealized models perfectly. We address this through several techniques. One common approach is probabilistic analysis. Instead of using single values for parameters such as material properties (Young’s modulus, Poisson’s ratio) or dimensions, we define them as probability distributions (e.g., normal, uniform). Tools used with ANSYS and Abaqus, for example ANSYS DesignXplorer or SIMULIA Isight, can sample parameter sets from these distributions and run a simulation for each set. The result is a distribution of outputs that provides a range of possible outcomes and quantifies the uncertainty.

For example, in simulating a bridge structure, the concrete’s compressive strength might be uncertain. Instead of using a single value, we’d use a normal distribution centered around the mean strength with a standard deviation reflecting the variability. The simulation then produces a distribution of stress and deflection values, telling us the probability of exceeding a critical threshold.
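The following minimal Monte Carlo sketch in plain Python illustrates the probabilistic approach. A closed-form simply supported beam, δ = 5wL^4/(384EI), stands in for the finite element model. The uncertain input here is the elastic modulus rather than the compressive strength. The mean and standard deviation of E, the span, load, second moment of area, and the L/250 deflection limit are all assumptions chosen for illustration.

```python
import random
import statistics

# All numbers below are illustrative assumptions, not data from a real bridge.
SPAN = 10.0            # m
LOAD = 20e3            # uniformly distributed load, N/m
INERTIA = 0.0025       # second moment of area, m^4
LIMIT = SPAN / 250.0   # serviceability deflection limit, m


def midspan_deflection(e_modulus):
    """Closed-form stand-in for an FE run: simply supported beam, uniform load."""
    return 5.0 * LOAD * SPAN**4 / (384.0 * e_modulus * INERTIA)


def monte_carlo(n_samples=20000, e_mean=30e9, e_std=3e9, seed=1):
    """Sample E from a normal distribution and collect deflection statistics."""
    rng = random.Random(seed)  # fixed seed makes the run repeatable
    deflections = []
    for _ in range(n_samples):
        e = max(rng.gauss(e_mean, e_std), 0.1 * e_mean)  # clip non-physical tail samples
        deflections.append(midspan_deflection(e))
    p_exceed = sum(d > LIMIT for d in deflections) / n_samples
    return statistics.mean(deflections), statistics.stdev(deflections), p_exceed


mean_d, std_d, p_exceed = monte_carlo()
print(f"mean = {mean_d * 1e3:.1f} mm, std = {std_d * 1e3:.1f} mm, "
      f"P(deflection > L/250) = {p_exceed:.3f}")
```

With these assumed numbers, the nominal deflection is below the limit, yet a noticeable fraction of the samples exceeds it. A single deterministic run would not reveal this.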

Another technique is sensitivity analysis, which identifies the parameters with the most significant impact on the output. This lets us focus on accurately characterizing the critical parameters and potentially simplify the modeling of less influential ones. Design of experiments (DOE) with space-filling sampling schemes such as Latin Hypercube Sampling explores the parameter space efficiently.
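The following sketch implements Latin Hypercube Sampling from scratch, followed by a simple sensitivity ranking based on the correlation between each input and the output. The response is a closed-form cantilever tip deflection, δ = FL³/(3EI) with I = bh³/12, standing in for a simulation. The parameter ranges are assumptions. Correlation coefficients only capture roughly linear, monotonic effects, and variance-based sensitivity indices are a more thorough alternative.

```python
import random


def latin_hypercube(n_samples, bounds, seed=0):
    """One sample in each of n equal-probability strata, in every dimension."""
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        # one uniform point inside each stratum, then shuffle the stratum order
        points = [(i + rng.random()) / n_samples for i in range(n_samples)]
        rng.shuffle(points)
        columns.append([low + p * (high - low) for p in points])
    return [list(row) for row in zip(*columns)]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def tip_deflection(force, e_modulus, width, height, length=1.0):
    """Cantilever tip deflection with a rectangular section (stand-in for an FE run)."""
    inertia = width * height**3 / 12.0
    return force * length**3 / (3.0 * e_modulus * inertia)


names = ["force", "e_modulus", "width", "height"]
bounds = [(900.0, 1100.0), (190e9, 210e9), (0.019, 0.021), (0.045, 0.055)]  # assumed
samples = latin_hypercube(200, bounds)
outputs = [tip_deflection(*s) for s in samples]
for j, name in enumerate(names):
    r = pearson([s[j] for s in samples], outputs)
    print(f"{name:>10}: r = {r:+.2f}")
```

Because the height enters cubed, it should dominate the ranking here. That is the kind of result that tells you which parameter deserves careful measurement.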

Finally, robust design optimization methodologies combine uncertainty quantification and optimization, seeking designs that perform well even with parameter variations. This aims to create designs that are less sensitive to uncertainties, leading to more reliable performance.

Q 23. Explain your experience with scripting in ANSYS or Abaqus (APDL, Python).

Scripting is essential for automating repetitive tasks and extending the capabilities of ANSYS and Abaqus. I’m proficient in both APDL (ANSYS Parametric Design Language) and Python. In ANSYS, APDL allows me to create complex models, automate mesh generation, and control the solution process programmatically. For instance, I’ve used APDL to generate parameterized models of turbine blades, iterating through different design variables to optimize for performance.

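! APDL example: 1 m cantilever meshed with 10 BEAM188 elements (steel properties assumed)
/PREP7
ET,1,BEAM188            ! 3-D two-node beam element
MP,EX,1,200E9           ! Young's modulus (Pa)
MP,PRXY,1,0.3           ! Poisson's ratio
SECTYPE,1,BEAM,RECT     ! rectangular cross-section
SECDATA,0.1,0.1         ! section width and height (m)
K,1,0,0,0               ! keypoint at the fixed end
K,2,1,0,0               ! keypoint at the free end
L,1,2                   ! line along the beam axis
LESIZE,ALL,,,10         ! divide the line into 10 elements
TYPE,1                  ! element type, material and section used for meshing
MAT,1
SECNUM,1
LMESH,ALL               ! beam elements are meshed on lines, not areas
FINISH

Python provides a more versatile scripting environment, through PyAnsys packages such as PyMAPDL for ANSYS and the Abaqus Scripting Interface for Abaqus. I’ve used Python for post-processing, data analysis, and integrating simulation workflows with other software tools. For example, I developed a Python script to automate the extraction of stress results from Abaqus, process the data, and generate custom visualizations using Matplotlib. This eliminates manual data handling, reducing errors and saving significant time.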

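# Python example: extract the maximum von Mises stress from an Abaqus output database
# Run with Abaqus's own interpreter, e.g. "abaqus python extract_stress.py"
from odbAccess import openOdb

odb = openOdb(path='path/to/your/odb')
last_frame = odb.steps['Step-1'].frames[-1]  # 'Step-1' is Abaqus's default step name; adjust as needed
stress = last_frame.fieldOutputs['S']         # stress field output
max_mises = max(value.mises for value in stress.values)
print('Maximum von Mises stress:', max_mises)
odb.close()

In short, scripting significantly increases efficiency and allows for advanced automation in my simulation work, ultimately contributing to a more streamlined and robust workflow.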

Q 24. Describe your experience with optimization techniques in simulation.

Optimization techniques play a pivotal role in design engineering. My experience encompasses several methods, including gradient-based optimizers, genetic algorithms, and topology optimization. I’ve used these methods extensively in both ANSYS and Abaqus.

Gradient-based methods, like the optimization features within ANSYS DesignXplorer or Abaqus’ built-in optimization capabilities, are efficient for smooth, well-behaved objective functions. They work by iteratively searching for the optimum design by calculating the gradient (the rate of change) of the objective function with respect to design variables. For example, I optimized the design of a pressure vessel to minimize weight while satisfying stress constraints.
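The following minimal sketch shows a gradient-based sizing loop for a thin-walled pressure vessel. It rests on stated assumptions: there is a single design variable (wall thickness t), and hoop stress σ = p·r/t is the only constraint. The constraint is handled with an exterior quadratic penalty, the gradient comes from a central finite difference, and steepest descent uses a backtracking (Armijo) line search. This is not how DesignXplorer or Abaqus implement optimization. The closed-form answer t = p·r/σ_allow is used only to check the result, and the pressure, radius, and allowable stress are assumed values.

```python
import math

P, R, SIGMA_ALLOW = 2.0e6, 0.5, 200.0e6   # assumed pressure (Pa), radius (m), allowable (Pa)
T_REF = 0.01                              # assumed thickness scale (m) for normalization


def objective(u, mu=1.0e3):
    """Normalized wall mass (proportional to t) plus a quadratic constraint penalty.

    The design variable is u = ln(t), which keeps the thickness positive.
    """
    t = math.exp(u)
    violation = max(0.0, (P * R / t) / SIGMA_ALLOW - 1.0)   # hoop stress constraint
    return t / T_REF + mu * violation**2


def gradient(f, u, h=1e-6):
    """Central finite-difference derivative."""
    return (f(u + h) - f(u - h)) / (2.0 * h)


def minimize(f, u0, tol=1e-9, max_iter=500):
    """Steepest descent with an Armijo backtracking line search."""
    u = u0
    for _ in range(max_iter):
        g = gradient(f, u)
        step = 1.0
        while f(u - step * g) > f(u) - 1e-4 * step * g * g:
            step *= 0.5
            if step < 1e-12:
                return u
        u_new = u - step * g
        if abs(u_new - u) < tol:
            return u_new
        u = u_new
    return u


t_opt = math.exp(minimize(objective, math.log(0.02)))
print(f"optimized t = {t_opt * 1e3:.3f} mm, closed form = {P * R / SIGMA_ALLOW * 1e3:.3f} mm")
```

An exterior penalty leaves the result slightly on the infeasible side of the constraint. Increasing `mu` tightens this at the cost of a stiffer optimization problem.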

Genetic algorithms are well suited to problems with non-smooth or discontinuous objective functions. These are common in engineering, for example when some design variables are discrete, such as material choices or component positions. Genetic algorithms mimic natural selection: a population of designs evolves toward better solutions through selection, crossover, and mutation operators. I’ve used this approach to optimize the layout of components within a complex assembly, maximizing stiffness while minimizing material usage.

Topology optimization alters the material distribution within a design space to achieve optimal performance under specified constraints (stress, displacement, natural frequency). This allows us to explore novel and highly efficient designs that are difficult to conceive manually. I’ve used this extensively to optimize lightweight designs of structural parts like automotive components and aerospace structures.
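Density-based topology optimization often relies on the SIMP (Solid Isotropic Material with Penalization) interpolation, E(ρ) = E_min + ρ^p·(E0 - E_min), with p = 3 a common choice. The penalty exponent makes intermediate densities give little stiffness for their mass, which pushes the design toward solid or void elements. The following sketch covers only that interpolation and its derivative, which is used in the sensitivity analysis. It is not a full optimizer, and the material values are assumptions.

```python
E0 = 200e9          # assumed modulus of the solid material (Pa)
E_MIN = 1e-9 * E0   # small floor keeps the stiffness matrix non-singular for void elements
PENALTY = 3.0       # SIMP exponent p


def simp_modulus(rho, p=PENALTY):
    """Interpolated Young's modulus for an element density rho in [0, 1]."""
    return E_MIN + rho**p * (E0 - E_MIN)


def simp_sensitivity(rho, p=PENALTY):
    """Derivative dE/drho, needed when computing compliance sensitivities."""
    return p * rho ** (p - 1.0) * (E0 - E_MIN)


for rho in (0.25, 0.5, 0.75, 1.0):
    print(f"density {rho:.2f}: mass fraction {rho:.2f}, "
          f"stiffness fraction {simp_modulus(rho) / E0:.3f}")
```

At half density, an element carries half the mass but only about one-eighth of the stiffness. This imbalance is what discourages gray regions in the final layout.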

Q 25. How do you ensure the accuracy and reliability of your simulations?

Ensuring accuracy and reliability is paramount, and it starts with model validation. We compare simulation results with experimental data from tests or real-world observations, and any discrepancies highlight where the model needs improvement. A basic example is validating a beam-bending simulation against a physical test that measures the beam’s deflection under load. We also run mesh convergence studies to ensure the results are independent of mesh size. The mesh is refined systematically, and if the results still change significantly, a finer mesh is needed to capture the solution accurately.
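Mesh convergence studies are often summarized with Richardson extrapolation. Given results on three meshes refined by a constant ratio r, you can estimate the observed order of convergence p and an extrapolated "zero element size" value. The following sketch assumes monotonic convergence, meaning successive differences have the same sign. The three tip-deflection values are hypothetical, not results from a real model.

```python
import math


def richardson(f_fine, f_medium, f_coarse, ratio):
    """Observed order, extrapolated value and fine-mesh error from three meshes.

    ratio = h_coarse / h_medium = h_medium / h_fine (constant refinement ratio > 1).
    """
    d_coarse = f_coarse - f_medium
    d_fine = f_medium - f_fine
    if d_coarse * d_fine <= 0.0:
        raise ValueError("Results are not converging monotonically; "
                         "Richardson extrapolation does not apply")
    p = math.log(d_coarse / d_fine) / math.log(ratio)
    f_exact = f_fine + (f_fine - f_medium) / (ratio**p - 1.0)
    rel_error_fine = abs((f_exact - f_fine) / f_exact)
    return p, f_exact, rel_error_fine


# Hypothetical tip deflections (mm) for element sizes of 8, 4 and 2 mm
p, f_ext, err = richardson(f_fine=1.9950, f_medium=1.9800, f_coarse=1.9200, ratio=2.0)
print(f"observed order p = {p:.2f}, extrapolated = {f_ext:.4f} mm, fine-mesh error = {err:.2%}")
```

An observed order close to the element's theoretical order is a good sign that the meshes are in the asymptotic range. A very different order suggests the study needs finer meshes.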

Material model selection is crucial. We choose models appropriate to the material’s behavior, including nonlinear effects such as plasticity and creep where necessary, and validate the selected model against experimental data. Boundary conditions and loading scenarios must also represent the real system accurately, because errors at this stage can cause large inaccuracies. We therefore check for problems such as over-constrained models and incorrect symmetry assumptions.

Finally, using independent verification methods is important. This involves having a second engineer independently review the model, assumptions, and results. This provides an additional layer of quality control and helps identify potential errors. Documenting all assumptions, processes, and results helps immensely for future audits and troubleshooting.

Q 26. What are your experiences with different types of load cases?

My experience spans various load cases, including static, dynamic, thermal, and coupled field analyses (e.g., thermal-structural).

Static loads represent time-independent forces and pressures. I’ve extensively used these for structural analysis of buildings, bridges, and mechanical components under constant loads. Dynamic loads encompass time-varying forces, such as impact, shock, or vibration. In simulating crashworthiness of automotive structures or earthquake response of buildings, I’ve utilized explicit and implicit dynamic solvers in both ANSYS and Abaqus. These include modal analysis to determine resonant frequencies and transient dynamic analysis to simulate the system’s time-dependent response.
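Modal analysis solves the eigenproblem (K - ω²M)φ = 0. For a two-degree-of-freedom spring-mass chain, the characteristic polynomial is quadratic, so the natural frequencies can be computed in closed form. This makes a convenient sanity check on a solver's modal output. The following sketch assumes a fixed base, lumped masses, and assumed mass and stiffness values.

```python
import math


def two_dof_frequencies(m1, m2, k1, k2):
    """Natural frequencies (Hz) of the chain ground-k1-m1-k2-m2.

    K = [[k1 + k2, -k2], [-k2, k2]], M = diag(m1, m2); solve det(K - lam*M) = 0.
    """
    k11, k12, k22 = k1 + k2, -k2, k2
    a = m1 * m2
    b = -(k11 * m2 + k22 * m1)
    c = k11 * k22 - k12**2
    disc = math.sqrt(b * b - 4.0 * a * c)
    lams = sorted(((-b - disc) / (2.0 * a), (-b + disc) / (2.0 * a)))  # lam = omega^2
    return [math.sqrt(lam) / (2.0 * math.pi) for lam in lams]


# Usage (assumed values): two 10 kg masses joined by 1e5 N/m springs
f1, f2 = two_dof_frequencies(m1=10.0, m2=10.0, k1=1.0e5, k2=1.0e5)
print(f"f1 = {f1:.2f} Hz, f2 = {f2:.2f} Hz")
```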

Thermal loads involve temperature variations and heat transfer. I’ve performed thermal analyses of electronics to predict temperature distributions and stress from thermal expansion. Coupled field problems account for interaction between different physical fields. For instance, in simulating a nuclear reactor core, we’d need coupled thermal-structural analysis to consider thermal stresses resulting from temperature gradients.
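A simple bounding check on a coupled thermal-structural result is a member whose free thermal expansion is completely prevented. For a uniaxial bar, the thermal strain αΔT is fully suppressed, giving σ = -EαΔT, which is compressive on heating. For a thin plate restrained in-plane in both directions, the stress is σ = -EαΔT/(1 - ν). Real models have temperature gradients and only partial restraint, so this sketch is a hand calculation, not a substitute for the coupled analysis. The material values are assumptions.

```python
def restrained_thermal_stress(e_modulus, alpha, delta_t, nu=None):
    """Thermal stress (Pa) when free expansion is fully prevented (negative = compressive).

    nu=None: uniaxial bar, sigma = -E*alpha*dT.
    nu given: thin plate restrained in both in-plane directions,
              sigma = -E*alpha*dT / (1 - nu).
    """
    sigma = -e_modulus * alpha * delta_t
    return sigma if nu is None else sigma / (1.0 - nu)


# Usage (assumed steel-like values): E = 200 GPa, alpha = 12e-6 1/K, heated by 50 K
print(f"bar:   {restrained_thermal_stress(200e9, 12e-6, 50.0) / 1e6:.1f} MPa")
print(f"plate: {restrained_thermal_stress(200e9, 12e-6, 50.0, nu=0.3) / 1e6:.1f} MPa")
```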

Moreover, I’m familiar with different load application methods, such as concentrated loads, distributed loads, pressure loads, and body forces (like gravity). The choice of loading method depends on the specific nature of the problem and the accuracy of the simulation required.
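In a finite element model, a distributed load is converted into equivalent nodal forces by integrating it against the element shape functions. The following sketch does this with 3-point Gauss quadrature for a uniform line load q on a 2-node (linear) and a 3-node (quadratic) edge. The edge length and load magnitude are assumed values. This is a generic finite element illustration, not the internal routine of ANSYS or Abaqus.

```python
import math

# 3-point Gauss-Legendre rule on [-1, 1]: exact for polynomials up to degree 5
GAUSS_POINTS = (-math.sqrt(3.0 / 5.0), 0.0, math.sqrt(3.0 / 5.0))
GAUSS_WEIGHTS = (5.0 / 9.0, 8.0 / 9.0, 5.0 / 9.0)


def shape_functions(xi, order):
    """1D Lagrange shape functions at natural coordinate xi."""
    if order == 1:
        return [(1 - xi) / 2, (1 + xi) / 2]
    if order == 2:  # node order: end (xi=-1), end (xi=+1), mid-side (xi=0)
        return [xi * (xi - 1) / 2, xi * (xi + 1) / 2, 1 - xi**2]
    raise ValueError("order must be 1 or 2")


def consistent_nodal_loads(q, length, order):
    """Integrate N_i * q along the edge; the Jacobian dx/dxi is L/2."""
    loads = [0.0] * (order + 1)
    for xi, w in zip(GAUSS_POINTS, GAUSS_WEIGHTS):
        for i, n in enumerate(shape_functions(xi, order)):
            loads[i] += w * n * q * length / 2.0
    return loads


print(consistent_nodal_loads(q=1000.0, length=0.3, order=1))  # 2-node edge
print(consistent_nodal_loads(q=1000.0, length=0.3, order=2))  # 3-node edge
```

The quadratic edge receives qL/6 at each corner node and 2qL/3 at the mid-side node. For this reason, spreading a pressure as equal point loads over every node of a quadratic mesh is not equivalent to applying it as a distributed load.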

Q 27. Explain your understanding of model reduction techniques.

Model reduction techniques are essential for analyzing large and complex systems, which are computationally expensive to simulate directly. These techniques create simplified models that capture the essential dynamics of the original system while significantly reducing computational cost. Common methods include:

- Modal superposition: This technique utilizes the system’s natural vibration modes to represent the response, focusing on the dominant modes contributing to the system’s behavior. It works well for linear systems undergoing harmonic or transient vibrations.

- Component mode synthesis (CMS): CMS simplifies the system by dividing it into smaller substructures (components). These are individually analyzed, and their reduced-order models are coupled to generate a reduced-order model for the entire system. This is often advantageous for systems with repetitive structures or easily separable components.

- Krylov subspace methods: These methods build a low-dimensional subspace, typically by matching moments of the system’s transfer function around a chosen expansion point, that accurately represents the system’s response to a specific set of inputs. They are particularly effective for large linear systems in transient and frequency-response analyses.

- Proper orthogonal decomposition (POD): POD is a data-driven technique that extracts the most energetic patterns from simulation snapshots to build a reduced-order model. It can also be applied to nonlinear problems, although the reduced model is reliable only within the range of conditions covered by the snapshots.

The choice of method depends on the problem’s specifics, the level of accuracy required, and the computational resources available. Model reduction techniques are critical for situations where full-scale simulations are impractical due to size and computational limitations.
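The following sketch shows snapshot POD with NumPy. Solution snapshots are stacked as columns, an SVD is taken, and the leading left singular vectors that capture a chosen fraction of the snapshot "energy" (the sum of squared singular values) are kept. The snapshots here are synthetic, built from two spatial patterns so that two modes should suffice. In practice they would come from full-order simulations, and the 99.99% energy threshold is an assumption.

```python
import numpy as np


def pod_basis(snapshots, energy=0.9999):
    """POD modes capturing the requested fraction of snapshot energy."""
    u, s, _ = np.linalg.svd(snapshots, full_matrices=False)
    cumulative = np.cumsum(s**2) / np.sum(s**2)
    n_modes = int(np.searchsorted(cumulative, energy) + 1)
    return u[:, :n_modes]


# Synthetic snapshots: 200 spatial points x 50 time instants, two spatial patterns
x = np.linspace(0.0, 1.0, 200)
t = np.linspace(0.0, 2.0, 50)
snapshots = (np.outer(np.sin(np.pi * x), np.cos(2 * np.pi * t))
             + 0.3 * np.outer(np.sin(3 * np.pi * x), np.sin(5 * np.pi * t)))

basis = pod_basis(snapshots)
reduced = basis.T @ snapshots          # reduced coordinates (n_modes x 50)
reconstruction = basis @ reduced
rel_error = np.linalg.norm(snapshots - reconstruction) / np.linalg.norm(snapshots)
print(f"{basis.shape[1]} modes, relative reconstruction error {rel_error:.1e}")
```

In a complete reduced-order model, the governing equations would then be projected onto this basis. The sketch stops at building and checking the basis.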

Q 28. Describe a challenging simulation project you worked on and how you overcame the challenges.

One challenging project involved simulating the crashworthiness of a novel vehicle design with complex composite materials and geometries. The challenge stemmed from the material’s highly non-linear behavior and the computational cost of modeling the intricate geometry. We needed accurate predictions of energy absorption and passenger compartment intrusion during a frontal impact.

Initially, a full-scale, high-fidelity model was impractical due to computational cost. To overcome this, we employed several strategies. First, we utilized a multi-scale modeling approach. We performed detailed microscopic simulations of the composite material to extract effective material properties for a macroscale model of the vehicle structure. This gave us an accurate representation of the material’s non-linear response without the need for excessive mesh refinement at the microscopic level.

Second, we used adaptive mesh refinement, focusing computational resources on areas experiencing high stress and deformation. This significantly reduced the computational cost while maintaining accuracy in critical regions. Finally, we applied model reduction techniques based on Proper Orthogonal Decomposition (POD) to reduce computational complexity in subsequent simulations.

This combination of techniques allowed us to accurately predict the vehicle’s crash behavior within acceptable computational times. The project highlighted the importance of tailoring simulation strategies to the problem’s specific challenges and leveraging the full range of available tools and techniques. The results were successfully used for design iterations that improved vehicle safety without compromising weight or other performance criteria.

Key Topics to Learn for Simulation Software (ANSYS, Abaqus) Interview

- Finite Element Method (FEM): Understand the fundamental principles of FEM, including meshing techniques, element types, and solution procedures. Be prepared to discuss its application in various engineering problems.

- Material Modeling: Gain a strong understanding of material properties and constitutive models used in ANSYS and Abaqus. Practice selecting appropriate models for different materials and loading conditions.

- Structural Analysis: Master the application of static and dynamic analysis techniques, including linear and nonlinear analyses. Be ready to discuss concepts like stress, strain, deflection, and buckling.

- Pre-processing and Post-processing: Develop proficiency in mesh generation, boundary condition application, and result interpretation. Practice visualizing and analyzing simulation results effectively.

- ANSYS Workbench/Abaqus CAE: Familiarize yourself with the user interface, workflow, and functionalities of at least one of these software packages. Demonstrate your ability to navigate and utilize its features.

- Nonlinear Analysis Techniques: Explore advanced topics such as contact analysis, large deformation analysis, and material nonlinearity. Understand their applications and limitations.

- Verification and Validation: Learn the importance of validating simulation results against experimental data or analytical solutions. Understand methods for ensuring accuracy and reliability.

- Specific Applications (Choose one or two relevant to your experience): Deepen your understanding of applications relevant to your background, such as thermal analysis, fluid-structure interaction (FSI), or fatigue analysis.

- Problem-solving methodologies: Develop your ability to systematically approach and solve engineering problems using simulation software. Practice breaking down complex scenarios into manageable steps.

Next Steps

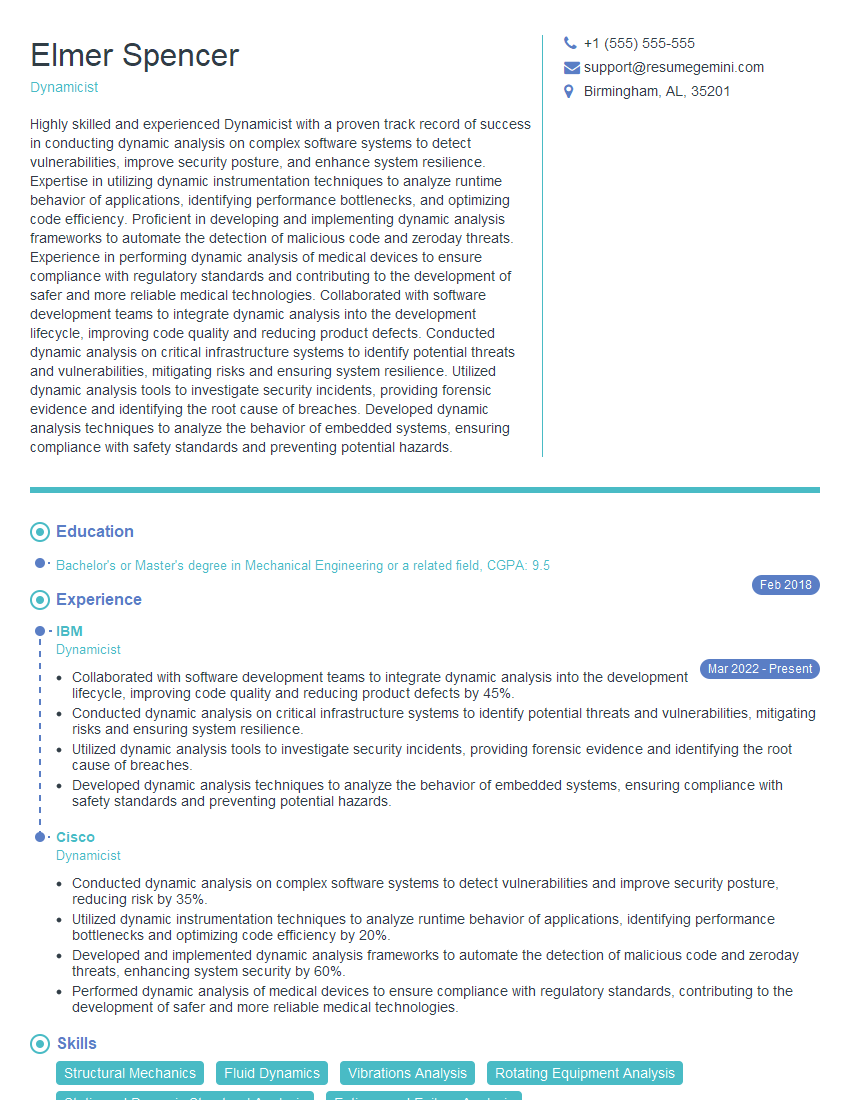

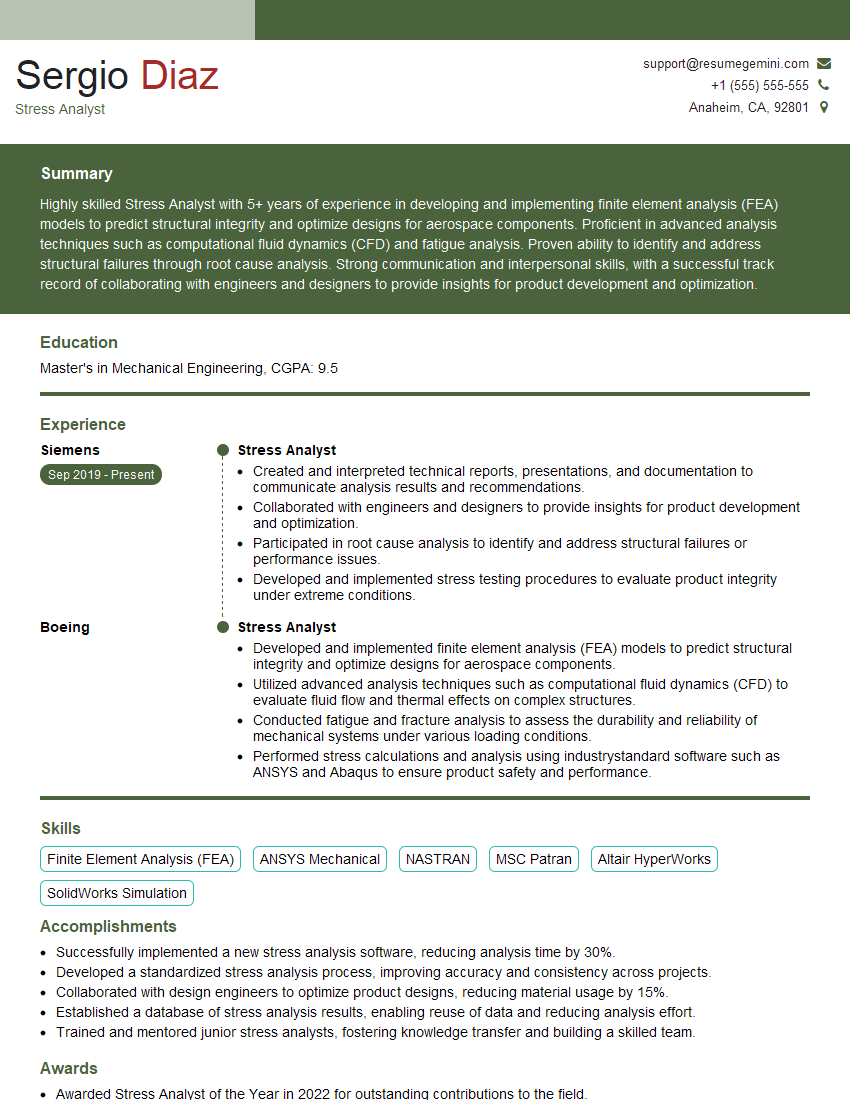

Mastering simulation software like ANSYS and Abaqus is crucial for career advancement in engineering and related fields. These skills are highly sought after, opening doors to challenging and rewarding roles. To maximize your job prospects, focus on crafting an ATS-friendly resume that clearly highlights your expertise. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. We provide examples of resumes tailored to Simulation Software (ANSYS, Abaqus) professionals to guide you in showcasing your skills effectively.
