Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important Size Measurement interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in Size Measurement Interview

Q 1. Explain the difference between accuracy and precision in size measurement.

Accuracy and precision are crucial concepts in size measurement, often confused but distinct. Accuracy refers to how close a measurement is to the true value. Think of it like aiming for the bullseye on a dartboard – a highly accurate measurement hits the center. Precision, on the other hand, refers to how close repeated measurements are to each other. High precision means your measurements cluster tightly together, even if they’re not necessarily close to the true value (like all darts hitting close to each other, but off-center).

For example, imagine measuring a 10 cm rod. An accurate measurement would be very close to 10 cm. A precise set of measurements might consistently read 9.90 cm, 9.91 cm, and 9.90 cm: the readings show high repeatability even though they are all about 0.1 cm short of the true length. A measurement can be precise without being accurate, and vice versa. Ideally, we want both high accuracy and high precision.
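The distinction can be made concrete with a little arithmetic. The following is a minimal sketch, assuming two hypothetical sets of readings of a 10.00 cm rod; the function name `bias_and_spread` and the data are illustrative only. Bias (mean minus true value) stands in for accuracy, and the sample standard deviation stands in for precision.

```python
import statistics

def bias_and_spread(readings, true_value):
    """Return (bias, spread) for a list of repeated readings.

    bias   = mean of readings minus the true value (accuracy indicator)
    spread = sample standard deviation (precision indicator)
    """
    mean = statistics.mean(readings)
    bias = mean - true_value
    spread = statistics.stdev(readings)
    return bias, spread

# Hypothetical readings of a 10.00 cm rod, in cm
precise_but_biased = [9.90, 9.91, 9.90, 9.89, 9.90]
accurate_but_scattered = [9.80, 10.20, 10.05, 9.95, 10.00]

for name, data in [("precise but biased", precise_but_biased),
                   ("accurate but scattered", accurate_but_scattered)]:
    bias, spread = bias_and_spread(data, true_value=10.00)
    print(f"{name}: bias = {bias:+.3f} cm, spread = {spread:.3f} cm")
```

The first data set has a small spread but a bias of about -0.10 cm; the second averages out to the true value but scatters widely.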

Q 2. What are the common sources of error in size measurement?

Errors in size measurement stem from various sources. These can be broadly classified as:

- Instrument error: This includes inherent limitations of the measuring instrument like calibration drift, wear and tear, or design flaws. For example, a worn-out caliper might give consistently inaccurate readings.

- Environmental error: Temperature fluctuations, humidity, and vibrations can all affect measurement accuracy. For instance, a steel ruler expands slightly with increasing temperature, leading to inaccurate readings.

- Observer error: Human error is a significant factor. This includes parallax error (reading the scale from an angle), misinterpretation of the scale, incorrect handling of the instrument, or improper recording of the measurement. For example, failing to zero a micrometer before measuring adds the same offset to every reading.

- Method error: Incorrect measurement techniques or inappropriate methodologies can lead to errors. For example, applying inconsistent measuring force with a caliper changes the reading from one measurement to the next.

- Part error: The part itself might have imperfections or irregularities that affect the measurement. For instance, surface roughness can make accurate thickness measurement difficult.
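To give a sense of scale for the environmental error mentioned above, here is a rough sketch of the linear thermal expansion formula ΔL = α · L · ΔT. The expansion coefficient used for steel (11.5 × 10⁻⁶ per kelvin) is an assumed typical value, not a figure from this article; real alloys vary. The 20 °C reference temperature is the standard reference temperature for dimensional measurement.

```python
def thermal_length_change(length_mm, alpha_per_k, delta_t_k):
    """Linear thermal expansion: change in length = alpha * length * temperature change."""
    return length_mm * alpha_per_k * delta_t_k

ALPHA_STEEL_PER_K = 11.5e-6   # assumed typical coefficient for steel, per kelvin
REFERENCE_TEMP_C = 20.0       # standard reference temperature for dimensional measurement

ruler_length_mm = 1000.0      # hypothetical 1 m steel ruler
shop_temp_c = 25.0            # hypothetical workshop temperature

change_mm = thermal_length_change(
    ruler_length_mm, ALPHA_STEEL_PER_K, shop_temp_c - REFERENCE_TEMP_C
)
print(f"Length change over {ruler_length_mm:.0f} mm: {change_mm:.4f} mm")
```

Under these assumptions, a 5 °C rise stretches a 1 m steel ruler by roughly 0.06 mm, which matters little for a tape measure but a great deal for micrometer-level work.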

Q 3. Describe different methods for measuring length, diameter, and thickness.

Measuring length, diameter, and thickness utilizes various methods and instruments:

- Length: Rulers, measuring tapes, laser distance meters, optical comparators are commonly used depending on the length and precision required. For very precise length measurements, interferometry might be employed.

- Diameter: Vernier calipers, micrometers, and optical comparators are frequently used. For large diameters, laser-based systems are utilized. CMMs (Coordinate Measuring Machines) provide precise diameter measurements for complex shapes.

- Thickness: Micrometers, dial indicators, thickness gauges, and CMMs are common choices depending on the material and required precision. Ultrasonic thickness gauges are employed for measuring thickness without direct contact, useful for materials that cannot be easily accessed.

Q 4. How do you select the appropriate measuring instrument for a given task?

Selecting the appropriate measuring instrument is critical for accurate results. The choice depends on several factors:

- Required accuracy: A high-precision measurement requires a more sophisticated instrument like a micrometer or CMM compared to a simple ruler.

- Size and shape of the object: The instrument must be suitable for the object’s size and geometry. A caliper is suitable for relatively small objects, while a laser distance meter is better for large distances.

- Material of the object: The instrument should be compatible with the material’s properties. For example, a specialized gauge might be needed for measuring soft materials to avoid damaging them.

- Measurement environment: Environmental factors such as temperature and humidity need to be considered, especially when high precision is needed. This dictates whether certain instruments need to be temperature-compensated or are used in a controlled environment.

- Cost and availability: Budget limitations and the availability of appropriate equipment also play a role in instrument selection.

Q 5. Explain the concept of calibration and its importance in size measurement.

Calibration is the process of comparing a measuring instrument’s readings to a known standard to determine its accuracy. It’s crucial because instruments drift over time due to wear, temperature changes, or other factors. Regular calibration ensures that measurements are reliable and traceable to national or international standards.

Imagine a kitchen scale. Over time, it might become inaccurate. Calibration involves using a known weight (the standard) to check if the scale gives the correct reading. If not, adjustments are made to correct the readings, ensuring future measurements are more accurate. Without calibration, measurements become progressively less reliable, leading to potential errors in manufacturing, quality control, or scientific research.
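The comparison step can be sketched in a few lines. This is an illustrative example only: the reference values, the readings, and the acceptance limit are hypothetical, and a real calibration procedure would also account for the uncertainty of the reference standards themselves.

```python
def calibration_errors(reference_values, readings):
    """Error at each calibration point: instrument reading minus reference value."""
    return [reading - ref for ref, reading in zip(reference_values, readings)]

def within_limit(errors, max_permissible_error):
    """True if every calibration point is inside the acceptance limit."""
    return all(abs(e) <= max_permissible_error for e in errors)

# Hypothetical check of a caliper against gauge blocks (all values in mm)
gauge_blocks = [10.0, 25.0, 50.0]
caliper_readings = [10.01, 25.01, 50.03]
limit_mm = 0.02  # assumed acceptance limit for this example

errors = calibration_errors(gauge_blocks, caliper_readings)
for ref, err in zip(gauge_blocks, errors):
    print(f"reference {ref:.2f} mm: error {err:+.3f} mm")
print("pass" if within_limit(errors, limit_mm) else "fail: adjust or repair")
```

Here the 50 mm point exceeds the assumed limit, so the instrument would be adjusted or taken out of service.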

Q 6. What is the purpose of a calibration certificate?

A calibration certificate is a document that verifies the accuracy of a measuring instrument at a specific point in time. It provides evidence that the instrument has been checked against a traceable standard and meets specified accuracy requirements. The certificate usually includes details about the instrument, the calibration procedure, the results, and the date of calibration. It’s a legal and essential record demonstrating the reliability of the measurements obtained using that instrument. This is crucial for quality control, regulatory compliance, and legal proceedings. Without a valid calibration certificate, results might be challenged.

Q 7. Describe your experience with different types of measuring instruments (e.g., calipers, micrometers, CMMs).

Throughout my career, I have extensively used various measuring instruments, including:

- Vernier Calipers: I’ve utilized these for measuring external and internal dimensions, depths, and step heights with a resolution of typically 0.02 mm. I’m adept at handling various types of calipers and understanding their limitations.

- Micrometers: I’m experienced in using both outside and inside micrometers for highly precise measurements down to 0.001mm or 0.0001 inches. I understand the importance of proper handling to avoid damage to the instrument or the workpiece.

- CMMs (Coordinate Measuring Machines): My experience with CMMs extends to various applications, from simple dimensional checks to complex surface measurements on intricate parts. I’m proficient in using CMM software for programming measurement routines, data analysis, and report generation. I’ve worked with both touch-trigger and non-contact scanning CMMs, adapting my techniques based on the specific application and the material being measured.

I am also experienced in using other instruments such as dial indicators, optical comparators, laser scanners, and profile projectors. My proficiency encompasses understanding instrument limitations, selecting appropriate methods, and interpreting the results to ensure high-quality measurements in a variety of industries.

Q 8. How do you handle measurement uncertainty?

Measurement uncertainty is inherent in any measurement process. It represents the doubt surrounding a measured value due to limitations in the measuring instrument, the measuring process itself, and the environment. Handling it effectively involves understanding its sources and quantifying its impact.

We typically address measurement uncertainty using a combination of techniques. First, we carefully select appropriate measurement instruments calibrated to traceable standards. Second, we meticulously control environmental factors like temperature and humidity, which can influence readings. Third, we perform multiple measurements to assess the variability and use statistical methods (like calculating standard deviations) to estimate the uncertainty. For instance, if I’m measuring the diameter of a shaft, I wouldn’t just take one reading. I’d take multiple measurements at different orientations and locations, averaging the results to minimize random errors. The standard deviation of these measurements would give a strong indication of the uncertainty in the shaft diameter.

Furthermore, we document uncertainty using a standardized format, often expressing it as a plus/minus value (e.g., 10.00 ± 0.05 mm). This allows others to understand the reliability of our measurements. Understanding and quantifying uncertainty is crucial for making informed decisions based on the data and ensuring the quality of manufactured parts.
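The statistical part of this approach can be sketched as follows. This is a minimal example covering only the uncertainty estimated from repeated readings; a full uncertainty budget would also combine contributions such as instrument calibration and temperature. The readings are hypothetical, and the coverage factor of 2 is an assumed, commonly used choice.

```python
import math
import statistics

def repeatability_uncertainty(readings, coverage_factor=2.0):
    """Estimate uncertainty from repeated readings.

    Returns (mean, standard uncertainty of the mean, expanded uncertainty).
    """
    n = len(readings)
    mean = statistics.mean(readings)
    s = statistics.stdev(readings)        # sample standard deviation
    u = s / math.sqrt(n)                  # standard uncertainty of the mean
    return mean, u, coverage_factor * u   # expanded uncertainty U = k * u

# Hypothetical shaft diameter readings at different orientations, in mm
diameters = [25.01, 24.99, 25.00, 25.02, 24.98]
mean, u, expanded = repeatability_uncertainty(diameters)
print(f"diameter = {mean:.3f} mm ± {expanded:.3f} mm (k = 2, repeatability only)")
```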

Q 9. Explain the significance of GD&T (Geometric Dimensioning and Tolerancing).

Geometric Dimensioning and Tolerancing (GD&T) is a symbolic language used on engineering drawings to define the size, form, orientation, location, and runout of features. It’s crucial because it allows engineers to specify precisely what is acceptable for a part, going beyond simple plus/minus tolerances. It helps to avoid ambiguity and ensures that parts meet the required functionality.

The significance of GD&T lies in its ability to clearly communicate design intent. Traditional plus/minus tolerances only address size; GD&T addresses how features relate to each other and their ideal geometric forms. For example, you might need to specify that a hole needs to be within a certain size range (diameter), but also perfectly centered on a particular surface and perpendicular to it. GD&T provides the tools to do that clearly and unambiguously.

Without GD&T, reliance on purely numerical tolerances can lead to parts being rejected despite meeting functional requirements, or worse, parts that meet numerical specifications but fail to function correctly because their geometric features don’t align properly. GD&T significantly reduces this risk by specifying the geometric relationship among features in a precise way.

Q 10. How do you interpret GD&T symbols on engineering drawings?

Interpreting GD&T symbols requires understanding their meaning and how they are applied on the drawing. Each symbol represents a specific geometric control. For example:

- ⌖ (Position) indicates the permissible deviation of a feature’s location from its ideal position. It might specify a circular zone within which the center of a hole must lie.

- ⏥ (Flatness) specifies the allowable deviation from a perfectly flat surface. This is important for ensuring surface quality.

- ⊥ (Perpendicularity) indicates the allowable deviation of a feature’s orientation from perpendicularity to a datum feature.

- ↗ (Circular Runout) indicates that the variation of a circular feature’s surface, measured as the part rotates about a datum axis, must be within a specified tolerance.

The interpretation also involves understanding the associated tolerance values and the datum references (usually denoted by A, B, C) that establish the coordinate system for the geometric control. It’s a highly technical skill, but thorough training and experience are key to correct understanding and application.
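As an illustration of how a position control is evaluated, here is a simplified sketch for a hole whose axis must lie within a cylindrical tolerance zone. It assumes the coordinates are already expressed in the datum reference frame and ignores material condition modifiers (such as bonus tolerance at MMC); the function names and numbers are hypothetical.

```python
import math

def position_deviation(measured_xy, nominal_xy):
    """Diametral position deviation: twice the radial offset from the nominal location."""
    dx = measured_xy[0] - nominal_xy[0]
    dy = measured_xy[1] - nominal_xy[1]
    return 2 * math.hypot(dx, dy)

def within_position_tolerance(measured_xy, nominal_xy, zone_diameter):
    """True if the measured center lies inside the circular tolerance zone."""
    return position_deviation(measured_xy, nominal_xy) <= zone_diameter

# Hypothetical hole: nominal at (50, 30) mm from datums, measured slightly off
nominal = (50.0, 30.0)
measured = (50.03, 29.96)

deviation = position_deviation(measured, nominal)
print(f"position deviation: {deviation:.3f} mm")
print("zone 0.15 mm:", within_position_tolerance(measured, nominal, 0.15))
print("zone 0.08 mm:", within_position_tolerance(measured, nominal, 0.08))
```

The deviation is doubled because the tolerance value on the drawing is the diameter of the zone, while the measured offset is a radius.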

Interpreting GD&T symbols on engineering drawings is not merely about reading symbols; it’s about understanding their context and relation to design intent. This requires knowledge of manufacturing processes and the functional aspects of the part. I always refer to GD&T standards (like ASME Y14.5) to ensure accurate interpretation.

Q 11. What are your experiences with different Coordinate Measuring Machines (CMMs)?

My experience with Coordinate Measuring Machines (CMMs) spans various types, including bridge-type, horizontal arm, and articulated arm CMMs. I’ve worked extensively with both contact and non-contact CMMs. I’m proficient in operating and programming each type, understanding their strengths and limitations.

Bridge-type CMMs are typically used where high accuracy is required. I’ve used these for inspecting castings and machined components. Horizontal arm CMMs offer open access to large parts and to features that must be reached from the side, usually at somewhat lower accuracy; I’ve used these on automotive parts with complex geometries. Articulated arm CMMs are very flexible and mobile; these were useful during onsite inspections for quality assurance.

My experience also includes utilizing different probing systems (e.g., touch probes, scanning probes) and software packages for data acquisition, analysis, and report generation. I’ve had experience with Renishaw, Hexagon, and Zeiss CMMs, and I’m comfortable adapting to various software interfaces and measurement procedures for diverse part types and applications. The choice of CMM depends heavily on part size, complexity, and the required measurement accuracy. I always ensure I use the most appropriate CMM for the job.

Q 12. Describe your proficiency in using statistical process control (SPC) techniques for size measurement data analysis.

Statistical Process Control (SPC) is essential for analyzing size measurement data and identifying trends indicating potential problems in the manufacturing process. I’m proficient in using various SPC techniques, including control charts (X-bar and R charts, X-bar and s charts, etc.), process capability analysis (Cp, Cpk), and other statistical tools to assess process performance and identify assignable causes of variation.

For example, I might use X-bar and R charts to monitor the average and range of a critical dimension during the production run. If the data points fall outside the control limits, it suggests a process shift, requiring investigation. Process capability analysis helps to determine if the process is capable of meeting the specifications. Cp and Cpk values help to identify whether the process is centered and capable of producing parts that meet the requirements.
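The calculations behind these charts are straightforward. Below is a small sketch, assuming subgroups of five parts and hypothetical data and specification limits. The constants A2, D3, D4, and d2 are the standard published control chart factors for a subgroup size of 5; a different subgroup size needs different factors.

```python
import statistics

# Standard control chart factors for subgroup size n = 5
A2, D3, D4, D2 = 0.577, 0.0, 2.114, 2.326

def xbar_r_limits(subgroups):
    """Center lines and control limits for X-bar and R charts."""
    xbars = [statistics.mean(g) for g in subgroups]
    ranges = [max(g) - min(g) for g in subgroups]
    grand_mean = statistics.mean(xbars)
    r_bar = statistics.mean(ranges)
    return {
        "xbar_cl": grand_mean,
        "xbar_ucl": grand_mean + A2 * r_bar,
        "xbar_lcl": grand_mean - A2 * r_bar,
        "r_cl": r_bar,
        "r_ucl": D4 * r_bar,
        "r_lcl": D3 * r_bar,
        "sigma_within": r_bar / D2,  # within-subgroup sigma estimate
    }

def cp_cpk(mean, sigma, lsl, usl):
    """Cp compares spec width to process spread; Cpk also penalizes off-center processes."""
    cp = (usl - lsl) / (6 * sigma)
    cpk = min(usl - mean, mean - lsl) / (3 * sigma)
    return cp, cpk

# Hypothetical diameter data in mm: four subgroups of five parts each
subgroups = [
    [10.01, 9.99, 10.00, 10.02, 9.98],
    [10.02, 10.00, 10.01, 10.03, 9.99],
    [10.00, 9.98, 9.99, 10.01, 9.97],
    [10.01, 9.99, 10.00, 10.02, 9.98],
]
limits = xbar_r_limits(subgroups)
cp, cpk = cp_cpk(limits["xbar_cl"], limits["sigma_within"], lsl=9.90, usl=10.10)
print(f"X-bar limits: {limits['xbar_lcl']:.4f} to {limits['xbar_ucl']:.4f} mm")
print(f"R chart UCL: {limits['r_ucl']:.4f} mm")
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}")
```

In practice far more than four subgroups are collected before limits are set; the short data set here only keeps the example readable.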

I also utilize software packages like Minitab and JMP for data analysis. Beyond simple chart generation, I utilize more advanced techniques like ANOVA (Analysis of Variance) to investigate the sources of variation, and regression analysis to model relationships between process parameters and measurement results. This allows me to identify specific factors influencing measurement variability. The insights gained from SPC analysis are critical in preventing defects, improving process efficiency, and enhancing overall product quality.

Q 13. Explain your understanding of measurement traceability.

Measurement traceability is the ability to demonstrate that a measurement result is consistent with national or international standards. It establishes an unbroken chain of comparisons to a known reference standard. This is paramount for ensuring the accuracy and reliability of our measurements.

We achieve traceability by using calibrated instruments whose calibration certificates demonstrate traceability to national standards (e.g., NIST in the US, NPL in the UK). This means the instrument’s accuracy has been verified by comparison to a known standard, and that standard has been verified against another, and so on, all the way up to the national standard. This ensures that measurements made with the instrument are consistent and comparable with measurements made elsewhere using similar traceable standards.

Without traceability, measurement results would lack credibility. Imagine a scenario where different parts are manufactured in different locations using uncalibrated or inconsistently calibrated instruments. It becomes impossible to confirm whether those parts meet the design specifications consistently. Traceability prevents ambiguity and provides confidence in measurement results. It is a fundamental requirement for many quality management systems like ISO 9001.

Q 14. How do you ensure the reliability and repeatability of your measurements?

Ensuring the reliability and repeatability of measurements is crucial for consistent, trustworthy results. We achieve this through a multi-pronged approach focused on both equipment and procedure.

First, we use properly calibrated and maintained measurement equipment. Regular calibration against traceable standards is crucial, and we maintain detailed records of these calibrations. Second, we adhere to strict measurement procedures, ensuring that measurements are conducted consistently and in a controlled environment. This involves standardized methods, proper handling of equipment, and minimization of environmental influences.

Third, we employ statistical methods to assess the variability of our measurements. Having several operators repeatedly measure the same parts lets us run gauge repeatability and reproducibility (R&R) studies, which quantify the variation due to the measuring equipment and the operators. If the R&R values are excessively high, it signals a need for improvement in procedures or equipment. We also utilize control charts to monitor the stability of the measurement process over time. By actively managing these aspects, we build confidence in the reliability and consistency of our size measurement data.

Q 15. Describe a situation where you had to troubleshoot a measurement issue.

One time, we were experiencing inconsistencies in the dimensions of a newly manufactured injection-molded part. The initial measurements showed some parts were outside the specified tolerance range, leading to concerns about functionality and potential scrap. To troubleshoot, we first verified the calibration of our CMM (Coordinate Measuring Machine) using certified standards. Then, we systematically investigated potential sources of error. This included checking the injection molding machine’s settings (temperature, pressure, injection speed), examining the mold itself for wear or damage, and analyzing the raw material properties. We found that variations in the molding temperature were the primary culprit. By carefully controlling this parameter, we reduced the dimensional variations and brought the parts within the required tolerances.

Q 16. How do you document and report measurement results?

Documenting and reporting measurement results requires meticulous attention to detail and a standardized approach. We typically use a combination of digital measurement systems, spreadsheets, and dedicated metrology software. Each measurement is recorded with the following essential information: date and time, measuring instrument used (including its identification number and calibration status), operator’s initials, sample identification, and the measured values along with associated uncertainties. For example, a dimension might be reported as 25.00 ± 0.05 mm, clearly indicating the measured value and its uncertainty. This data is then summarized in tables and reports, often including statistical analysis like mean, standard deviation, and histograms, which highlight the distribution of the measured values. These reports are reviewed by quality control personnel before being forwarded to relevant stakeholders.
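A record holding the fields listed above might be structured like this. It is only a sketch: the class name, field names, and sample values are hypothetical, and real metrology software defines its own formats.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass
class MeasurementRecord:
    """One measurement with the traceability details needed for a report."""
    timestamp: datetime
    instrument_id: str
    calibration_valid: bool
    operator_initials: str
    sample_id: str
    value_mm: float
    uncertainty_mm: float

    def formatted(self):
        """Render the result as 'value ± uncertainty mm'."""
        return f"{self.value_mm:.2f} ± {self.uncertainty_mm:.2f} mm"

# Hypothetical example entry
record = MeasurementRecord(
    timestamp=datetime(2024, 1, 15, 9, 30),
    instrument_id="MIC-0042",
    calibration_valid=True,
    operator_initials="JD",
    sample_id="LOT7-003",
    value_mm=25.0,
    uncertainty_mm=0.05,
)
print(record.sample_id, record.formatted())
```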

Q 17. What are the limitations of various measurement techniques?

Different measurement techniques have inherent limitations. For instance, calipers, while simple and versatile, have limited accuracy compared to CMMs due to human error and the resolution of the instrument itself. CMMs, although highly accurate, are susceptible to thermal drift and require precise setup and calibration. Optical methods, like laser scanning, are excellent for capturing complex shapes but can be affected by surface reflectivity and environmental factors like dust or vibrations. Furthermore, contact methods can deform soft materials, leading to inaccurate measurements. Choosing the right technique is crucial, and understanding its limitations is paramount to obtaining reliable and meaningful data.

Q 18. How do you handle discrepancies between measured values and specifications?

Discrepancies between measured values and specifications trigger a thorough investigation. First, we verify the measurement process, re-checking the calibration of instruments and the measurement technique. If the measurement is confirmed to be sound and the part itself is out of specification, we identify the root cause. This might involve examining the manufacturing process, material properties, or even the design itself. Depending on the significance of the discrepancy and the contract specifications, actions may range from adjusting the manufacturing process to re-evaluating the design tolerances or accepting a controlled deviation. Documentation of the investigation, the corrective actions taken, and the final resolution is crucial for maintaining quality control and preventing future issues. Statistical process control (SPC) charts are often used to monitor deviations over time.

Q 19. What software packages are you familiar with for analyzing size measurement data?

I’m proficient in several software packages for analyzing size measurement data. PolyWorks and Geomagic Control X are frequently used for processing CMM data, allowing for complex surface analysis, dimensional reporting, and tolerance verification. We also utilize statistical software like Minitab for performing gauge R&R studies, analyzing process capability, and generating control charts. Spreadsheets like Excel are commonly used for basic data entry, organization, and initial analysis. The choice of software is dictated by the complexity of the measurement task and the level of analysis required.

Q 20. Explain your experience with laser scanning or other advanced measurement technologies.

I have extensive experience with laser scanning technology, specifically using Creaform and FARO scanners. Laser scanning provides rapid, non-contact measurements of complex geometries, enabling us to quickly assess part dimensions, surface quality, and deviations from CAD models. This technology is especially useful for reverse engineering, quality inspection of complex parts (like automotive body panels or turbine blades), and dimensional inspection in applications where contact methods are impractical or destructive. I’m also familiar with structured light scanning, which provides similar advantages. My experience includes data processing using dedicated software to generate point clouds, mesh models, and perform precise dimensional comparisons against CAD models. The accuracy and speed of these techniques are invaluable for numerous applications.
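At its simplest, a comparison against a CAD model boils down to computing how far each scanned point lies from the nominal surface. The sketch below does this for a flat nominal face only, with a handful of hypothetical points; commercial inspection software handles alignment, arbitrary surfaces, and millions of points, none of which is shown here.

```python
import math

def signed_distances_to_plane(points, plane_point, plane_normal):
    """Signed distance of each 3D point from a nominal plane.

    Positive values lie on the side the normal points toward.
    """
    length = math.sqrt(sum(c * c for c in plane_normal))
    unit = [c / length for c in plane_normal]
    return [
        sum((p[i] - plane_point[i]) * unit[i] for i in range(3))
        for p in points
    ]

# Hypothetical scanned points (mm) on a face whose nominal surface is the plane z = 0
scan = [(0.0, 0.0, 0.02), (1.0, 0.0, -0.01), (0.0, 1.0, 0.00), (1.0, 1.0, 0.03)]
deviations = signed_distances_to_plane(scan, plane_point=(0, 0, 0), plane_normal=(0, 0, 2))

print("deviations (mm):", [round(d, 3) for d in deviations])
print(f"max deviation: {max(deviations):+.3f} mm, min deviation: {min(deviations):+.3f} mm")
```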

Q 21. Describe your understanding of gauge R&R studies.

Gauge R&R (repeatability and reproducibility) studies are crucial for evaluating the variability of a measurement system. Such a study determines how much of the observed variation in measurements is attributable to the gauge itself (repeatability: variation from repeated measurements by the same operator) and how much is due to differences between operators (reproducibility). These studies involve multiple operators measuring the same parts multiple times. The data is then analyzed using statistical methods (ANOVA is commonly employed) to quantify the repeatability and reproducibility components of variation. A high gauge R&R variation relative to the total variation indicates an unreliable measurement system requiring recalibration, improved training, or even replacement of the measuring instrument. A well-conducted gauge R&R study ensures that the measurement system is capable of accurately capturing the variations in the measured parts, allowing us to trust the collected data for making informed decisions.
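The ANOVA calculation can be sketched in plain Python for a balanced, crossed study (every operator measures every part the same number of times). This is an illustrative implementation of the usual variance-component formulas, not the output of any particular software package; the function name and the tiny synthetic data set are hypothetical, and negative variance estimates are clipped to zero.

```python
import math
from statistics import mean

def gauge_rr_anova(data):
    """Variance components for a crossed gauge R&R study.

    data[i][j][k] is the reading for part i, operator j, trial k.
    Requires at least 2 parts, 2 operators, and 2 trials.
    """
    p, o, r = len(data), len(data[0]), len(data[0][0])
    values = [x for part in data for cell in part for x in cell]
    grand = mean(values)
    part_means = [mean([x for cell in part for x in cell]) for part in data]
    oper_means = [mean([x for part in data for x in part[j]]) for j in range(o)]
    cell_means = [mean(cell) for part in data for cell in part]

    # Sums of squares for the two-way ANOVA with interaction
    ss_part = o * r * sum((m - grand) ** 2 for m in part_means)
    ss_oper = p * r * sum((m - grand) ** 2 for m in oper_means)
    ss_cell = r * sum((m - grand) ** 2 for m in cell_means)
    ss_total = sum((x - grand) ** 2 for x in values)
    ss_inter = ss_cell - ss_part - ss_oper
    ss_error = ss_total - ss_cell

    # Mean squares
    ms_part = ss_part / (p - 1)
    ms_oper = ss_oper / (o - 1)
    ms_inter = ss_inter / ((p - 1) * (o - 1))
    ms_error = ss_error / (p * o * (r - 1))

    # Variance components (negative estimates clipped to zero)
    repeatability = ms_error
    interaction = max(0.0, (ms_inter - ms_error) / r)
    operator = max(0.0, (ms_oper - ms_inter) / (p * r))
    part_var = max(0.0, (ms_part - ms_inter) / (o * r))
    reproducibility = operator + interaction
    grr = repeatability + reproducibility
    return {
        "repeatability_var": repeatability,
        "reproducibility_var": reproducibility,
        "part_var": part_var,
        "pct_grr": 100 * math.sqrt(grr / (grr + part_var)),
    }

# Synthetic study: 5 parts, 2 operators, 2 trials, small repeatability scatter only
nominal = [10.0, 10.1, 10.2, 10.3, 10.4]
study = [[[v + 0.002, v - 0.002] for _ in range(2)] for v in nominal]
result = gauge_rr_anova(study)
print(f"%GRR of total variation: {result['pct_grr']:.2f}%")
```

A commonly cited guideline treats a gauge R&R below about 10% of total variation as acceptable and above about 30% as unacceptable, though the thresholds applied in practice depend on the customer and the application.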

Q 22. How do you ensure the proper maintenance and care of measurement instruments?

Proper maintenance of measurement instruments is crucial for accurate and reliable results. It involves a combination of preventative measures and regular calibration checks. Think of it like maintaining a high-precision watch – regular cleaning and careful handling are essential for its longevity and accuracy.

- Regular Cleaning: Instruments should be cleaned regularly using appropriate cleaning solutions and materials to remove dust, debris, and contaminants that can affect measurements. The specific cleaning method will depend on the instrument type (e.g., wiping with a lint-free cloth for calipers, ultrasonic cleaning for complex probes).

- Calibration: Calibration against traceable standards is vital. This ensures the instrument readings are accurate and within acceptable tolerances. The frequency of calibration depends on the instrument’s use and the required accuracy level. Calibration certificates should be meticulously maintained and kept in a safe place.

- Storage: Instruments should be stored in a controlled environment, protected from extreme temperatures, humidity, and physical damage. This often involves using protective cases or storage solutions.

- Handling: Careful handling is paramount. Avoid dropping or subjecting instruments to impacts that can damage sensitive components. Use the instruments as per the manufacturer’s instructions.

- Documentation: All maintenance and calibration activities should be meticulously documented. This creates an audit trail, ensuring traceability and facilitating compliance with quality standards.

For example, in a quality control setting for a microchip manufacturer, even a tiny amount of dust on a micrometer could render measurements inaccurate, leading to rejected components and significant financial loss. Consistent calibration and careful cleaning are essential for preventing such scenarios.

Q 23. How familiar are you with ISO standards related to size measurement?

I’m very familiar with ISO standards related to size measurement, particularly ISO 10012, which addresses measurement management systems. This standard sets out requirements and guidance for establishing, implementing, and maintaining a measurement management system to ensure the accuracy and reliability of measurement results. It also covers aspects like calibration, traceability, and competency of personnel.

Other relevant ISO standards include those that specify particular methods for dimensional measurement, such as those focusing on coordinate measuring machines (CMMs) or optical techniques. Understanding these standards is crucial for ensuring that measurement processes are robust, compliant, and produce reliable data. I’ve utilized these standards in previous roles to develop and implement measurement procedures that meet industry best practices, and I’m adept at interpreting their requirements and ensuring compliance within diverse manufacturing contexts.

Q 24. Explain your experience with different types of sensors used in size measurement.

My experience encompasses a wide range of sensors used in size measurement, from traditional mechanical methods to advanced optical and digital technologies.

- Mechanical Sensors: I have extensive experience with dial indicators, micrometers, calipers, and other mechanical instruments. These are often preferred for simple, direct measurements, offering ease of use and cost-effectiveness, but their accuracy is limited by the operator’s skill.

- Optical Sensors: My experience includes utilizing optical sensors like laser scanners and vision systems. These offer high accuracy and speed, particularly for complex geometries and automated measurements. For example, I’ve used laser triangulation systems for precise surface profiling and vision systems for automated part inspection.

- Digital Sensors: I’m proficient with various digital sensors integrated into CMMs, automated inspection systems and handheld digital calipers. These sensors provide digital readout, often with data logging and statistical analysis capabilities, improving both accuracy and data management.

- Tactile Probes (CMMs): I have used various types of tactile probes on coordinate measuring machines (CMMs), including touch-trigger and scanning probes. These are essential for precise, three-dimensional measurements of complex parts, and understanding their specific characteristics and limitations is vital for accurate data acquisition.

The choice of sensor depends on the application, required accuracy, complexity of the part, and budget constraints. I can assess the specific needs of a project and select the most appropriate sensing technology.

Q 25. How do you address non-conformances related to size in a manufacturing process?

Addressing non-conformances related to size in a manufacturing process requires a systematic approach combining immediate corrective action and root cause analysis to prevent recurrence. My approach utilizes a structured problem-solving methodology, such as the 8D report process or similar quality tools.

- Identify and Isolate: The first step is to clearly identify and isolate the non-conforming parts or processes. This involves careful inspection and documentation of the affected items.

- Immediate Corrective Action: Implement immediate corrective actions to contain the problem and prevent further production of non-conforming items. This might involve temporarily stopping the production line.

- Root Cause Analysis: Conduct a thorough root cause analysis to identify the underlying factors contributing to the size non-conformances. Tools like the ‘5 Whys’ or Fishbone diagrams can be helpful.

- Corrective Actions: Develop and implement permanent corrective actions to address the root cause(s). This may involve adjustments to the manufacturing process, machine calibration, operator training, or material changes.

- Verification: Verify the effectiveness of the corrective actions through repeated measurements and process monitoring.

- Preventative Actions: Implement preventative actions to avoid recurrence of the problem. This may involve enhanced process controls, improved operator training, or adjustments to maintenance schedules.

- Documentation: Document all aspects of the non-conformances, corrective actions, and preventative actions. This is crucial for traceability and continuous improvement.

- Management Review: A management review should take place to assess the effectiveness of the response and to learn from the experience.

For example, if a batch of parts consistently falls outside the specified tolerance, the root cause might be due to machine wear, incorrect tool settings, or variations in material properties. The corrective action could involve recalibrating the machine, replacing worn tools, or adjusting the material specification.

Q 26. What is your experience with automated size measurement systems?

I have significant experience with automated size measurement systems, including those incorporating CMMs (Coordinate Measuring Machines), vision systems, and laser scanners. These systems offer significant advantages in terms of speed, accuracy, and repeatability compared to manual measurement methods. They’re particularly valuable for high-volume manufacturing or applications requiring high precision.

My experience includes programming and operating these systems, analyzing the resultant data, and integrating them into overall quality control processes. I’m familiar with various software packages used for data acquisition, analysis, and reporting. I understand the importance of system calibration and maintenance to ensure the accuracy and reliability of automated measurements. I’ve also worked on projects involving the integration of automated measurement systems into larger production lines, improving efficiency and reducing human error.

For instance, I was involved in a project where we implemented an automated vision system to inspect the dimensions of micro-electronics components. This significantly increased throughput and reduced the number of human errors associated with manual inspection, leading to improved product quality and reduced costs.

Q 27. Describe a time you had to explain complex measurement data to a non-technical audience.

I once had to explain the results of a complex dimensional analysis of a newly designed aircraft component to a team of non-technical project managers. The data involved intricate 3D measurements, statistical process control charts, and tolerance analysis. Instead of relying heavily on technical jargon, I used clear visuals and analogies to convey the key information.

I started by presenting a simplified overview of the component’s design and its critical dimensions. Then, instead of presenting the statistical data directly, I used a simple bar chart to show how the measurements were distributed around the target values. I explained tolerance as acceptable variation, using the analogy of fitting a door frame: a frame that is slightly larger or smaller than nominal isn’t a problem unless the difference affects how the door works.

I further emphasized the key findings using simple language and focused on the implications of the measurements for the project timeline and budget. This ensured everyone understood the crucial aspects, regardless of their technical expertise. This approach facilitated efficient communication and effective decision-making, highlighting the importance of tailoring communication to the audience’s background.
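The simplified distribution view described in this answer can be produced with very little code. The following is a minimal sketch, assuming the measurements are already available as a list of numbers; the helper names `deviation_histogram` and `render`, the target value, the bin width, and the data are all hypothetical.

```python
from collections import Counter

def deviation_histogram(measurements, target, bin_width):
    """Count measurements per deviation bin; bin 0 is centered on the target."""
    counts = Counter()
    for m in measurements:
        # round() assigns each deviation to the nearest bin index
        counts[round((m - target) / bin_width)] += 1
    return dict(sorted(counts.items()))

def render(hist, bin_width):
    """Render the histogram as one text bar per bin."""
    lines = []
    for index, count in hist.items():
        lines.append(f"{index * bin_width:+.2f} mm | {'#' * count}")
    return "\n".join(lines)

# Hypothetical readings (mm) for a dimension with a 25.00 mm target
readings = [24.99, 25.00, 25.00, 25.01, 25.00,
            25.02, 24.99, 25.01, 25.00, 24.98]

hist = deviation_histogram(readings, target=25.00, bin_width=0.01)
chart = render(hist, bin_width=0.01)
print(chart)
```

Labelling the bars by deviation from target, rather than by raw value, keeps the audience focused on the one question they care about: how far off the parts are.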

Key Topics to Learn for Size Measurement Interview

- Fundamental Measurement Principles: Understanding accuracy, precision, and error analysis in various measurement systems (metric, imperial).

- Dimensional Metrology: Practical application of techniques like coordinate measuring machines (CMM), laser scanning, and optical methods for precise dimensional measurements.

- Statistical Process Control (SPC) in Size Measurement: Applying statistical methods to analyze measurement data, identify trends, and control variation in manufacturing processes.

- Calibration and Traceability: Understanding calibration procedures, standards, and maintaining traceability to national or international standards for reliable measurement results.

- Measurement Uncertainty: Evaluating and quantifying the uncertainty associated with measurement results, and understanding its impact on decision-making.

- Data Acquisition and Analysis: Proficiency in using measurement software and tools for data collection, processing, and interpretation. Experience with various data formats and analysis techniques.

- Specific Measurement Techniques: Knowledge of techniques relevant to the specific industry or role (e.g., surface roughness measurement, geometrical tolerancing).

- Problem-Solving and Troubleshooting: Ability to diagnose and resolve issues related to measurement equipment, processes, and data analysis.

- Relevant Standards and Specifications: Familiarity with industry-specific standards and specifications related to size measurement and quality control (e.g., ISO standards).
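As a worked example for the measurement uncertainty topic above, the sketch below estimates the statistical (Type A) component of uncertainty from repeated readings. It is a simplified illustration, not a full uncertainty budget: the function name `type_a_uncertainty` and the readings are hypothetical, and contributions such as instrument calibration or temperature (Type B components) are deliberately left out. The coverage factor k = 2 corresponds to roughly 95% coverage only if the results are approximately normally distributed.

```python
from math import sqrt
from statistics import mean, stdev

def type_a_uncertainty(readings, coverage_factor=2.0):
    """Return (mean, standard uncertainty of the mean, expanded uncertainty)."""
    n = len(readings)
    avg = mean(readings)
    s = stdev(readings)          # sample standard deviation of the readings
    u = s / sqrt(n)              # standard uncertainty of the mean
    return avg, u, coverage_factor * u

# Hypothetical repeated readings (mm) of the same feature
readings = [10.01, 9.99, 10.00, 10.02, 9.98]

avg, u, expanded = type_a_uncertainty(readings)
print(f"result: {avg:.3f} mm +/- {expanded:.3f} mm (k=2)")
```

Reporting the result together with its expanded uncertainty makes it clear how close a reading can sit to a tolerance limit before an accept or reject decision becomes doubtful.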

Next Steps

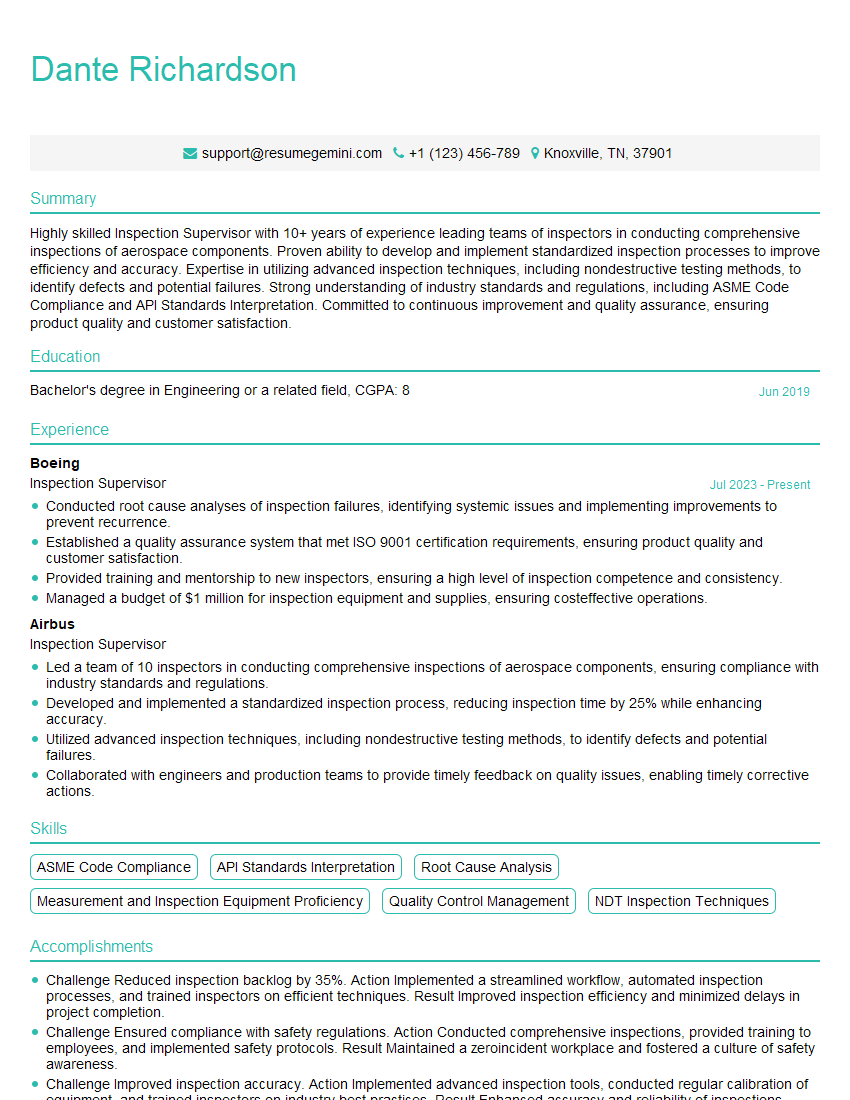

Mastering size measurement opens doors to exciting career opportunities in manufacturing, engineering, quality control, and various other technical fields. A strong understanding of these principles is highly valued by employers and significantly enhances your career prospects. To stand out, create an ATS-friendly resume that effectively showcases your skills and experience. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. They provide examples of resumes tailored specifically to Size Measurement roles, assisting you in crafting a compelling application that gets noticed.
