The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to Skill in Using Audio Equipment and Sound Systems interview questions is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Skill in Using Audio Equipment and Sound Systems Interview

Q 1. Explain the difference between XLR and 1/4-inch connectors.

XLR and 1/4-inch connectors both carry audio signals, but they differ in design and typical use. XLR connectors (originally made by Cannon, hence the older name "Cannon connectors") are professional-grade connectors, and the three-pin version is the standard for balanced audio. A balanced connection uses three conductors: a shield/ground (pin 1) and two signal conductors (pins 2 and 3) that carry the same audio in opposite polarity. Interference picked up along the cable lands on both signal conductors almost equally, so when the receiving input takes the difference between them, the audio adds and the noise largely cancels. This makes balanced connections ideal for longer cable runs and professional audio applications. Think of it like a sophisticated three-lane highway for your audio.

1/4-inch connectors come in two common forms. TS (tip-sleeve) connectors carry unbalanced signals, which use only two conductors: one for the audio signal and one for ground. Unbalanced connections are simpler but more prone to noise interference, especially over longer distances, so they suit shorter cable runs or situations where noise isn’t a major concern. Imagine this as a simpler, two-lane road for your audio. TRS (tip-ring-sleeve) connectors add a third conductor, so a 1/4-inch TRS cable can carry a balanced signal just like an XLR cable (or a stereo signal, as on headphones).

In practice, you’ll find XLR connectors used extensively with microphones, high-end audio equipment, and professional stage setups. 1/4-inch TS connectors are common on instruments like guitars and basses, while 1/4-inch TRS jacks often serve as balanced line inputs and outputs on audio interfaces and studio monitors.
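To make the noise-cancelling idea concrete, here is a minimal Python sketch of a balanced line. It assumes ideal conditions: interference couples identically onto both signal conductors, and the receiver is a perfect differential input. Real cables and inputs only approximate this (their common-mode rejection is finite), and the function names are illustrative rather than any library's API.

```python
# Minimal sketch: why a balanced (differential) connection rejects interference.
# Assumption: the noise is purely common-mode (identical on both conductors).

def send_balanced(signal):
    """Drive pin 2 ("hot") with the signal and pin 3 ("cold") with its inverse."""
    hot = list(signal)
    cold = [-s for s in signal]
    return hot, cold

def pick_up_noise(hot, cold, noise):
    """Interference induced along the cable lands equally on both conductors."""
    return [h + n for h, n in zip(hot, noise)], [c + n for c, n in zip(cold, noise)]

def receive_balanced(hot, cold):
    """Differential input: hot minus cold doubles the audio and cancels the noise;
    halve the result to return to the original level."""
    return [(h - c) / 2 for h, c in zip(hot, cold)]

signal = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5]
noise = [0.3, -0.2, 0.1, 0.4, -0.3, 0.2]  # e.g. hum picked up near a power cable

hot, cold = pick_up_noise(*send_balanced(signal), noise)
recovered = receive_balanced(hot, cold)
unbalanced = [s + n for s, n in zip(signal, noise)]  # a TS cable has no second conductor to compare

print("balanced:  ", [round(x, 6) for x in recovered])
print("unbalanced:", [round(x, 6) for x in unbalanced])
```

The balanced output matches the original signal, while the unbalanced version carries the noise straight through.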

Q 2. Describe the function of a phantom power supply.

A phantom power supply provides DC power to condenser microphones over the same XLR cable that carries the audio signal. Most condenser microphones need an external power source to operate, unlike dynamic microphones, which generate their own signal. Phantom power is typically +48 volts DC, applied equally to pins 2 and 3 with pin 1 as the return. Because both audio pins sit at the same voltage, the balanced audio signal is unaffected, and the microphone can run without a separate power cable.

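Think of it as a hidden power source within the cable itself. It simplifies setup by eliminating the need for additional power supplies, but it should be used with care. A properly wired balanced dynamic microphone is unaffected by phantom power, but some ribbon microphones (especially older designs), unbalanced or miswired devices, and damaged cables can be harmed by it, and connecting or disconnecting microphones while phantom power is on can cause loud pops that stress both the microphone and the speakers.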

Before connecting a microphone to a device with phantom power, always check the microphone’s specifications to ensure compatibility.

Q 3. What is the purpose of a compressor in audio engineering?

A compressor is a dynamics processor used to reduce the dynamic range of an audio signal. In simpler terms, it narrows the difference between the loudest and quietest parts of a sound: when the signal rises above a set level, the compressor turns it down, and makeup gain can then raise the whole signal so that quieter parts sit closer to the peaks. This makes the sound more consistent and helps prevent clipping, which introduces distortion; for a hard ceiling on peaks, a limiter (a compressor with a very high ratio and fast attack) is the usual tool.

Imagine a sound engineer recording a singer. The singer might have some quiet verses and some really powerful, loud choruses. A compressor can be used to reduce the volume difference between these sections, making the overall recording sound more even and less jarring. Compressors are essential tools for producing professional-sounding recordings and live performances. They’re also used to add punch and presence to certain instruments.

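Key parameters of a compressor include threshold (the level above which compression starts), ratio (how strongly the signal above the threshold is reduced), attack (how quickly the compressor reacts), and release (how quickly it recovers). These settings allow the user to finely tune how the compressor affects the audio.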
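The four parameters above can be sketched in Python: a hard-knee static curve handles threshold and ratio (plus makeup gain), and one-pole smoothing approximates attack and release. This is a simplified model with illustrative names and default values; real compressors add level detection (peak or RMS), soft knees, and sometimes lookahead.

```python
import math

def static_curve_db(level_db, threshold_db=-18.0, ratio=4.0, makeup_db=0.0):
    """Hard-knee transfer curve: output level (dB) for a given input level (dB)."""
    if level_db <= threshold_db:
        out = level_db                                        # below threshold: untouched
    else:
        out = threshold_db + (level_db - threshold_db) / ratio  # above: overshoot divided by ratio
    return out + makeup_db

def smooth_gain_reduction(target_gr_db, sample_rate=48000, attack_ms=10.0, release_ms=100.0):
    """One-pole attack/release smoothing of a per-sample gain-reduction signal (dB, <= 0)."""
    a_att = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    a_rel = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    gr, out = 0.0, []
    for target in target_gr_db:
        coeff = a_att if target < gr else a_rel  # more reduction requested -> attack, less -> release
        gr = coeff * gr + (1.0 - coeff) * target
        out.append(gr)
    return out

for level in (-30.0, -18.0, -6.0, 0.0):
    print(f"in {level:6.1f} dB -> out {static_curve_db(level):6.1f} dB")
```

With a 4:1 ratio and a -18 dB threshold, an input at -6 dB (12 dB over) comes out at -15 dB (3 dB over). The attack time here is the time constant of the smoothing, so after one attack period the gain reduction has reached about 63% of its target.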

Q 4. How do you troubleshoot feedback in a sound system?

Feedback, that ear-piercing squeal, occurs when a sound loop forms between a microphone and a loudspeaker. The microphone picks up sound from the loudspeaker, the system amplifies it and sends it back out of the loudspeaker, and the microphone picks it up again. When the gain around this loop reaches unity (0 dB) at some frequency, that frequency grows with each pass and quickly escalates into a loud, unpleasant tone.

Troubleshooting feedback involves systematically identifying and eliminating the problem. Here’s a step-by-step approach:

- Reduce microphone gain: Lowering the input sensitivity on the mixer is the first step; less gain means less sound for the system to amplify.

- Check microphone placement: Move the microphone away from the loudspeakers and never point it directly at them. Position microphones to minimize pickup of sound reflected from nearby surfaces.

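- EQ adjustments: Use an equalizer to cut the frequency causing the feedback; a graphic EQ works, and a parametric EQ allows a narrower, more precise cut. This usually means cutting specific frequencies (often in the midrange) in the signal feeding the loudspeakers, so the system no longer reinforces the tones the microphone picks up.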

- Use directional microphones: Cardioid or supercardioid microphones pick up sound from a narrower angle, reducing the chance of picking up unwanted sounds from the speakers.

- Proper speaker placement: Place the main speakers in front of (downstage of) the microphones, so the microphones sit behind the speakers’ coverage rather than inside it.

- Reduce overall system gain: If you’re still experiencing feedback after making the above adjustments, consider reducing the overall volume in the system.

It often involves a combination of these techniques, requiring a keen ear and experience to pinpoint the exact frequency causing the feedback.
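The loop-gain idea behind feedback can be sketched numerically. Below, hypothetical per-band loop-gain readings (the total gain a sound experiences going microphone, console, amplifier, loudspeaker, and back into the microphone) are checked to see which bands would grow, and the EQ cut needed to leave a stability margin is computed. The 6 dB margin is an assumed rule of thumb, and the readings are invented for illustration.

```python
# Hypothetical loop-gain readings (dB) per frequency band.
LOOP_GAIN_DB = {125: -14.0, 250: -9.5, 500: -4.0, 1000: -7.2, 2500: 1.5, 4000: -12.0}

def level_after_passes(loop_gain_db, passes=10, start=1.0):
    """Linear level of a sound after circulating round the loop `passes` times."""
    return start * (10 ** (loop_gain_db / 20)) ** passes

def cuts_for_stability(loop_gain_db, margin_db=6.0):
    """EQ cut (dB) per band needed to keep loop gain at least margin_db below 0 dB."""
    return {f: round(g + margin_db, 1) for f, g in loop_gain_db.items() if g > -margin_db}

for freq, gain in LOOP_GAIN_DB.items():
    trend = "grows (feedback)" if level_after_passes(gain) > 1 else "dies away"
    print(f"{freq:>5} Hz: {gain:+5.1f} dB loop gain -> {trend}")

print("suggested cuts (dB):", cuts_for_stability(LOOP_GAIN_DB))
```

Lowering the overall system gain moves every band down equally, whereas EQ targets only the bands closest to 0 dB, which is why the two techniques are usually combined.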

Q 5. Explain the concept of equalization (EQ).

Equalization (EQ) is the process of adjusting the balance of different frequencies within an audio signal. The bass and treble controls or graphic equalizer on a home stereo are simple examples; professional EQs are far more precise and nuanced. EQ allows you to boost or cut certain frequencies to shape the overall sound, enhance particular aspects, or remedy problems.

For instance, you might boost the bass frequencies to add warmth to a recording, cut harsh high frequencies to make a vocal sound smoother, or sculpt the tone of an instrument to make it sit better in a mix. Common types include parametric and graphic EQs, along with shelving and high-pass/low-pass filters. Parametric EQs offer the most control, letting you set each band’s frequency, gain, and Q (which sets the bandwidth: the higher the Q, the narrower the band).

Imagine a painter adjusting the colors in a painting. EQ is similar; it allows a sound engineer to “color” the sound, enhancing its overall aesthetic and clarity. EQ is crucial in mixing, mastering, and live sound reinforcement to achieve a desirable and balanced sonic outcome.
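A parametric band's three controls (frequency, gain, and Q) map directly onto a digital filter. The Python sketch below computes a peaking (bell) biquad using the widely used formulas from Robert Bristow-Johnson's Audio EQ Cookbook, evaluates its response, and filters a list of samples. The function names are illustrative; a production EQ would add parameter smoothing and other filter shapes.

```python
import cmath
import math

def peaking_eq(fs, f0, gain_db, q):
    """Peaking-EQ biquad coefficients (Audio EQ Cookbook), normalized so a[0] == 1."""
    A = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)          # higher Q -> smaller alpha -> narrower bell
    b = [1 + alpha * A, -2 * math.cos(w0), 1 - alpha * A]
    a = [1 + alpha / A, -2 * math.cos(w0), 1 - alpha / A]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def response_db(b, a, fs, f):
    """Magnitude response (dB) of the biquad at frequency f."""
    z1 = cmath.exp(-2j * math.pi * f / fs)  # z^-1 evaluated on the unit circle
    h = (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return 20 * math.log10(abs(h))

def biquad(x, b, a):
    """Filter a list of samples (Direct Form I)."""
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y

# Example: tame vocal harshness with a 4 dB cut at 3 kHz, Q = 2, at 48 kHz
b, a = peaking_eq(48000, 3000, -4.0, 2.0)
for f in (100, 1500, 3000, 6000, 12000):
    print(f"{f:>6} Hz: {response_db(b, a, 48000, f):+.2f} dB")
```

The response is exactly the requested gain at the center frequency and returns to 0 dB far from it, which is what makes a bell band useful for surgical corrections.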

Q 6. What are the different types of microphones and their applications?

There are many types of microphones, each with its own unique characteristics and best applications. The most common categories include:

- Dynamic Microphones: Rugged, reliable, and, because of their lower sensitivity, generally less prone to feedback. Ideal for live performances, loud instruments (like drums and guitar amps), and broadcast applications. They don’t require phantom power.

- Condenser Microphones: More sensitive and detailed, capturing a wider frequency range. Excellent for recording vocals, acoustic instruments, and delicate sounds. They require power, usually phantom power (some models use internal batteries or a dedicated power supply).

- Ribbon Microphones: Offer a unique, warm sound with a natural presence. Often used for recording vocals, guitar amps, and orchestral instruments.

- Boundary Microphones (PZM): Designed to be mounted on a flat surface, these microphones are particularly useful for conferences or recording in situations where traditional microphone placement is impractical.

- Lapel (Lavalier) Microphones: Small microphones clipped onto clothing, ideal for presentations, interviews, and video recording.

The choice of microphone depends heavily on the application, the sound source’s characteristics, and the desired sonic outcome.

Q 7. Describe your experience with digital audio workstations (DAWs).

I have extensive experience with various Digital Audio Workstations (DAWs), including Pro Tools, Logic Pro X, Ableton Live, and Cubase. My proficiency encompasses recording, editing, mixing, and mastering audio projects. In professional settings, I’ve used DAWs for a variety of tasks, from recording and mixing music albums to producing podcasts and creating soundtracks for videos.

For example, on a recent music project, I used Pro Tools to record a full band in a studio, then carefully edited and mixed the individual tracks into a cohesive, polished final product. My experience extends to DAW plugins for effects processing such as compression, equalization, and reverb, which enhance the quality and creative impact of audio projects. I am also adept with virtual instruments, MIDI editing, and automation for building sophisticated musical arrangements.

Furthermore, I’m comfortable collaborating on larger-scale productions using cloud-based collaborative platforms integrated with DAWs. I can efficiently manage large sessions, handle audio file organization, and ensure effective communication throughout the workflow.

Q 8. How do you test and calibrate a sound system?

Testing and calibrating a sound system is crucial for achieving optimal audio quality. It involves a systematic approach ensuring all components work together harmoniously. This process typically begins with a visual inspection, checking all cables for damage and ensuring all equipment is properly connected. Then, we move to signal flow verification – ensuring the signal path is clear from the source to the output.

Next comes the calibration phase. This involves using test tones (pink noise is frequently used) and a sound level meter to measure the output level of each speaker. This allows us to adjust the individual speaker levels to achieve consistent volume throughout the listening area. This step often requires careful adjustment, paying attention to acoustic issues such as room modes and reflections. We might also use specialized software for room correction, analyzing the acoustic response and making adjustments accordingly. Finally, we perform a listening test to evaluate the overall sound quality, making fine-tuning adjustments as needed. The goal is balanced sound reproduction, accurate frequency response, and minimal distortion.

For example, in a recent outdoor concert setup, I noticed a significant dip in the low frequencies at the back of the venue. By adjusting the subwoofer EQ and positioning, we evened out the bass response across the entire area.
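The level-matching step described above reduces to simple arithmetic once each speaker has been measured. The Python sketch below uses hypothetical pink-noise readings, taken with the same meter weighting and each at a reference seat in that speaker's own coverage area, and computes a trim for each speaker. Matching everything to the quietest speaker is an assumption chosen so that every trim is a cut and no amplifier channel is pushed harder.

```python
# Hypothetical pink-noise readings in dB SPL (same meter weighting throughout).
SPL_READINGS = {"left main": 96.5, "right main": 94.0, "center fill": 91.2, "delay tower": 97.8}

def level_trims(readings_db, target_db=None):
    """Trim (dB) per speaker so each reaches target_db.
    Default target: the quietest speaker, so every trim is a cut (or zero)."""
    if target_db is None:
        target_db = min(readings_db.values())
    return {name: round(target_db - spl, 1) for name, spl in readings_db.items()}

for name, trim in level_trims(SPL_READINGS).items():
    print(f"{name:>12}: {trim:+.1f} dB")
```

In practice the trims are a starting point; room modes and reflections still call for the EQ and listening checks described above.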

Q 9. What are some common issues with speaker placement and how do you solve them?

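Speaker placement significantly impacts sound quality. Incorrect placement can lead to several problems, including uneven sound distribution, unwanted reflections (leading to muddy sound), and comb filtering (a series of frequency cancellations that occurs when the same sound reaches the listener from two sources, or directly and via a reflection, at slightly different times).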

- Problem: Uneven sound distribution – some areas are too loud, others too quiet.

- Solution: Strategic positioning, considering the room’s acoustics and audience coverage.

- Problem: Excessive bass buildup in corners or near walls.

- Solution: Moving speakers away from boundaries, using bass traps, or employing digital room correction.

- Problem: Sound reflections creating a muddy or unclear sound.

- Solution: Employing sound diffusers or absorbers, and positioning speakers to minimize reflections from hard surfaces.

Imagine setting up speakers for a small meeting room. Placing speakers too close to the walls results in a boomy bass and a less clear midrange. By moving them slightly away from the walls and angling them towards the listening area, you create a much clearer and more evenly distributed sound.
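Comb filtering from the list above can be predicted from geometry. When two equal-level copies of the same sound arrive with a path difference d, the later copy is delayed by Δt = d / c, and cancellations fall where that delay equals an odd number of half-periods, f = (2k + 1) / (2Δt). The sketch assumes c ≈ 343 m/s (air at about 20 °C) and equal levels; when one arrival is quieter, as with most reflections, the dips are shallower rather than full nulls.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 °C

def comb_notches(path_difference_m, max_freq_hz=20000.0):
    """Notch frequencies (Hz) for two equal-level arrivals separated by path_difference_m."""
    if path_difference_m <= 0:
        return []  # identical arrival times: no comb filtering
    dt = path_difference_m / SPEED_OF_SOUND_M_S  # arrival-time difference in seconds
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) / (2 * dt)  # delay equals an odd number of half-periods
        if f > max_freq_hz:
            return notches
        notches.append(f)
        k += 1

# Hypothetical case: a wall reflection travels 34.3 cm farther than the direct sound.
print([round(f) for f in comb_notches(0.343, max_freq_hz=5000.0)])
```

A 1 ms delay places the first notch at 500 Hz and repeats every 1 kHz above it, which is why moving a speaker even slightly away from a wall shifts where the holes in the response fall.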

Q 10. How do you manage multiple audio sources in a live sound setting?

Managing multiple audio sources in a live sound setting requires meticulous organization and precise control. This often involves using a mixing console, a central hub for routing and mixing various audio signals. Each source (microphone, instrument, pre-recorded track) is routed to its own channel on the console.

The key is creating a well-defined signal flow, ensuring that each source has its dedicated channel. Then, we set each source’s input gain, shape it with EQ (equalization), position it in the stereo field with pan, and balance it with the channel fader to achieve the desired mix. It’s crucial to use aux sends effectively for monitoring and effects processing. For instance, vocals might have one send for the monitor mix (what the performer hears) and another for reverb (a common effect). Finally, we use the main output to send the final mix to the main speakers.

Efficient use of subgroups can be a game-changer, allowing for streamlined control of large numbers of channels. For example, in a concert with multiple backing vocalists, grouping their channels onto a subgroup simplifies overall volume control and equalization.

Q 11. Explain the difference between pre-fader and post-fader sends.

Pre-fader and post-fader sends are two different ways to send audio signals from a mixing console channel to an auxiliary (aux) bus, usually for monitoring or effects processing.

- Pre-fader send: The signal sent to the aux bus is taken before the channel fader, so moving the fader doesn’t change the send level. This is commonly used for monitor mixes, ensuring performers hear a consistent level regardless of how the channel fader is moved for the main mix.

- Post-fader send: The signal sent to the aux bus is dependent on the channel fader. Adjusting the channel fader affects the aux send level. This is often used for effects sends, allowing the effect’s intensity to be adjusted along with the main signal level.

Think of a live band: the pre-fader send is ideal for a musician’s monitor mix, because their level in the wedge or in-ear mix stays steady even when the front-of-house engineer rides the channel fader. The post-fader send works well for a reverb effect on the vocals; when the engineer pushes the vocal fader up, the reverb rises with it, so the balance between the dry vocal and its reverb stays the same.
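The difference between the two send types comes down to where the signal is tapped, which a few lines of Python can model in decibels. This is a simplified sketch with illustrative names: it ignores mutes, pre/post-EQ tap options, and console-specific fader laws.

```python
def send_level_db(channel_level_db, fader_db, send_db, pre_fader):
    """Level (dB) a channel delivers to an aux bus.
    Pre-fader: tapped before the channel fader, so fader moves are ignored.
    Post-fader: tapped after the fader, so the send follows every fader move."""
    tap_db = channel_level_db if pre_fader else channel_level_db + fader_db
    return tap_db + send_db

# Hypothetical vocal channel: monitor send at -5 dB (pre), reverb send at -12 dB (post).
for fader in (0.0, -10.0):
    monitor = send_level_db(0.0, fader, -5.0, pre_fader=True)
    reverb = send_level_db(0.0, fader, -12.0, pre_fader=False)
    print(f"fader {fader:+.0f} dB -> monitor {monitor:+.0f} dB, reverb {reverb:+.0f} dB")
```

Pulling the fader down 10 dB leaves the monitor send unchanged but lowers the reverb send by the same 10 dB, keeping the wet/dry balance in the main mix.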

Q 12. What are some techniques for noise reduction in audio recordings?

Noise reduction in audio recordings is vital for achieving a clean and professional sound. Several techniques can be employed, ranging from simple editing techniques to sophisticated noise reduction plugins.

- Gate: This tool automatically reduces or cuts off audio below a specified threshold, effectively removing background noise during silent parts.

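- Compressor: Reduces the dynamic range, making quiet parts louder relative to loud parts. It does not remove noise by itself; because makeup gain raises quiet passages, it can make background hiss more noticeable, so it works best after the noise has been dealt with by other means.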

- Equalization (EQ): Can be used to attenuate (reduce) frequencies where noise is prominent, such as low-frequency rumble or high-frequency hiss.

- Noise Reduction Plugins: Advanced software plugins analyze noise profiles and intelligently reduce background noise while preserving the original audio signal. These tools work best when you have a dedicated section of recording containing only noise for the software to analyze.

For instance, when recording vocals, a gate can eliminate the background hum of air conditioners during pauses. Careful use of EQ can help reduce the rumble of traffic sounds in recordings captured in outdoor environments.
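The gate described above can be sketched in a few lines of Python. This is a deliberately simplified, sample-by-sample version with hypothetical parameter names: it compares each sample's absolute value with the threshold and holds the gate open for a few samples after the level drops. Real gates use an envelope follower plus attack and release ramps to avoid clicks and chatter.

```python
def noise_gate(samples, threshold=0.05, hold=2, floor_gain=0.0):
    """Pass samples at or above threshold; keep the gate open for `hold` further samples
    after the signal drops below it; otherwise scale by floor_gain (0.0 = full mute)."""
    out = []
    countdown = 0
    for x in samples:
        if abs(x) >= threshold:
            countdown = hold      # signal present: open (or re-open) the gate
            gain = 1.0
        elif countdown > 0:
            countdown -= 1        # hold period: stay open briefly so note tails aren't chopped
            gain = 1.0
        else:
            gain = floor_gain     # closed: attenuate the background noise
        out.append(x * gain)
    return out

# Hypothetical vocal track: low-level hum around two sung phrases
track = [0.01, 0.5, 0.02, 0.01, 0.02, -0.4, 0.01]
print(noise_gate(track))
```

Setting `floor_gain` to something like 0.1 (about 20 dB of attenuation) instead of full muting often sounds more natural, because the background does not switch abruptly on and off.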

Q 13. Describe your experience with various audio mixing consoles.

Throughout my career, I’ve worked extensively with various audio mixing consoles, from small analog boards like the Soundcraft Signature 12 MTK to larger digital consoles such as the Yamaha RIVAGE PM10 and Avid S6. Each console has its own strengths and weaknesses. Analog consoles offer immediate tactile feedback and a certain warmth to their sound, but they are less flexible. Digital consoles offer far greater flexibility, with extensive routing options, full scene recall, and advanced built-in processing.

My experience with these different consoles has sharpened my skills in audio signal flow, channel management, and efficient mixing techniques. I’ve learned to adapt my workflow to different interfaces, optimizing my approach for the specific capabilities of each console.

For instance, using the advanced automation features of the Avid S6 for a large theatrical production significantly reduced the time required for mixing and sound recall during different scenes. Working with analog consoles improved my intuitive understanding of the audio signal path and dynamics.

Q 14. How do you handle microphone bleed during recording?

Microphone bleed, where unwanted sounds are picked up by a microphone intended to capture a specific source, is a common issue in multi-microphone recordings. The solution often involves a multi-pronged approach.

- Strategic Microphone Placement: Careful placement is key. Position microphones close to the intended sound source and angle them to minimize pickup from other sources. Employing directional microphones (cardioid, supercardioid) helps limit pickup from the sides and rear.

- Microphone Shielding: Physical barriers, such as gobo panels or isolation shields placed between sources, can help block unwanted sound from reaching the microphone.

- Acoustic Treatment: Treating the recording space with sound absorption helps minimize reflections and reduce the overall ambient noise level, reducing the relative prominence of bleed.

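- Post-Production Techniques: EQ, gating, and editing out sections where a track carries only bleed can reduce it in post-production, and checking the phase relationship between microphones keeps the remaining bleed from causing cancellations. However, these fixes are usually less effective than taking proactive measures during the recording phase.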

For example, when recording a drum kit, close-miking each drum with directional microphones aimed away from neighboring drums, and placing gobos between the kit and other instruments in the room, can significantly reduce bleed.
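Close placement works largely because of the inverse-square law: in a free field, direct sound falls by about 6 dB per doubling of distance. The sketch below, which assumes free-field conditions and ignores room reflections and proximity effect, estimates how much a close-miked source gains relative to a neighboring source when the microphone is moved closer. The distances are hypothetical.

```python
import math

def level_change_db(old_distance_m, new_distance_m):
    """Direct-sound level change when a mic moves, assuming inverse-square (free-field) spreading."""
    return 20 * math.log10(old_distance_m / new_distance_m)

# Hypothetical move: snare mic from 30 cm to 10 cm; the nearest tom goes from 45 cm to 40 cm away.
snare_gain = level_change_db(0.30, 0.10)
tom_gain = level_change_db(0.45, 0.40)
improvement = snare_gain - tom_gain  # better snare-to-bleed ratio once the preamp gain is trimmed back

print(f"snare +{snare_gain:.1f} dB, tom bleed +{tom_gain:.1f} dB, ratio improves {improvement:.1f} dB")
```

Once the preamp gain is lowered to bring the snare back to its previous level, the tom bleed in that channel drops by roughly the same improvement.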

Q 15. Explain your workflow for setting up a live sound reinforcement system.

Setting up a live sound reinforcement system is a systematic process that requires meticulous planning and execution. My workflow begins with a thorough site survey, assessing the venue’s acoustics, power availability, and stage dimensions. This informs my decisions on speaker placement, microphone selection, and cable routing.

Next, I create a detailed signal flow diagram, visualizing the path of audio from each source (instruments and vocals) to the speakers. The diagram makes clear how each component interacts and speeds up troubleshooting.

Then comes the physical setup. I position speakers for even coverage across the audience and keep them in front of the microphones to reduce the risk of feedback. Microphone placement is equally important; I draw on my experience and knowledge of mic techniques to get the best sound from each instrument and vocal.

Once everything is connected according to the diagram, I run system checks, including gain staging (setting input and output levels) to prevent clipping and distortion. Finally, I sound check with the performers to fine-tune the mix, ensuring clear and balanced audio for the audience. For example, at a recent outdoor concert, strong wind required careful speaker placement and windscreens on the microphones to reduce wind noise.

Q 16. What are the key considerations for choosing the right microphone for a particular application?

Choosing the right microphone is paramount for capturing high-quality audio. Key considerations include the type of sound source (e.g., vocals, acoustic guitar, drums), the intended distance from the source (close miking vs. distant miking), the acoustic environment (room characteristics that affect reflections and resonance), and the desired frequency response (how the microphone captures different frequencies).

For example, a dynamic microphone is robust and well suited to loud sources like drums or amplified guitars because it handles high sound pressure levels, and its lower sensitivity means it picks up less background noise. A condenser microphone, by contrast, is more sensitive and captures more detail, particularly in the high frequencies, making it suitable for delicate instruments and vocals that need a nuanced sound.

Polar patterns (cardioid, omnidirectional, figure-8) are also critical. A cardioid microphone is most sensitive at the front, rejects sound most strongly from directly behind, and picks up sound from the sides at a reduced level (about 6 dB down). An omnidirectional microphone picks up sound roughly equally from all directions, and a figure-8 microphone picks up the front and back while rejecting the sides. The choice depends on whether the goal is to isolate the sound source or to capture ambient sound.
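The rejection figures above follow from the idealized first-order polar equation, sensitivity(θ) = a + (1 − a)·cos θ, where a sets the pattern. The Python sketch below uses textbook values of a; they are idealizations, and real microphones deviate from them, especially at high and low frequencies.

```python
import math

# Idealized first-order patterns: sensitivity(theta) = a + (1 - a) * cos(theta).
PATTERN_A = {"omni": 1.0, "cardioid": 0.5, "supercardioid": 0.37,
             "hypercardioid": 0.25, "figure-8": 0.0}

def sensitivity_db(pattern, angle_deg):
    """Relative pickup (dB) at angle_deg off-axis; 0 dB on-axis, -inf at a perfect null."""
    a = PATTERN_A[pattern]
    s = abs(a + (1 - a) * math.cos(math.radians(angle_deg)))
    return 20 * math.log10(s) if s > 1e-9 else float("-inf")

for pattern in PATTERN_A:
    row = ", ".join(f"{ang}°: {sensitivity_db(pattern, ang):.1f}" for ang in (90, 135, 180))
    print(f"{pattern:>13}  {row}")
```

The table shows the trade-off: super- and hypercardioids reject the sides more strongly than a cardioid but have a small rear lobe, which matters when placing wedge monitors.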

Q 17. How do you use a spectrum analyzer to diagnose audio problems?

A spectrum analyzer is a valuable tool for visualizing the frequency content of an audio signal. I use it to identify and diagnose audio problems like feedback, frequency clashes, and unwanted resonances. The analyzer displays frequency along the horizontal axis and level (amplitude) along the vertical axis. If I see a narrow peak at a particular frequency that keeps growing during a sound check, it may indicate feedback, which is usually caused by a microphone picking up sound from a nearby speaker. To remedy this, I might lower the microphone gain, cut that frequency with EQ (equalization), or reposition the microphone or speaker. Similarly, if the mix sounds muddy and the analyzer shows a buildup in the low or low-mid frequencies, cutting those frequencies can reduce the muddiness and improve clarity. The spectrum analyzer allows for precise visual identification and correction of these issues, enabling a balanced and clear audio output.
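At its core, a spectrum analyzer computes a discrete Fourier transform of short frames of audio. The Python sketch below uses a naive DFT so that it needs only the standard library (numpy.fft.rfft would do the same job far faster) and finds the tallest peak, as you might when hunting a ringing frequency. The test signal is synthetic, and a real analyzer would also apply a window function and average over many frames.

```python
import cmath
import math

def magnitude_spectrum(samples, sample_rate):
    """Naive DFT magnitudes for bins 0..N/2 (O(N^2), fine for short frames).
    Scaled so a sine of amplitude A centred on a bin between DC and Nyquist reads about A."""
    n = len(samples)
    freqs, mags = [], []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        freqs.append(k * sample_rate / n)
        mags.append(2 * abs(acc) / n)
    return freqs, mags

def strongest_frequency(samples, sample_rate):
    """Frequency and magnitude of the tallest peak, ignoring the DC bin."""
    freqs, mags = magnitude_spectrum(samples, sample_rate)
    k = max(range(1, len(mags)), key=lambda i: mags[i])
    return freqs[k], mags[k]

# Synthetic frame: a quiet 300 Hz tone plus a strong 1 kHz "ring"
fs, n = 8000, 256
frame = [0.2 * math.sin(2 * math.pi * 300 * i / fs) + 0.8 * math.sin(2 * math.pi * 1000 * i / fs)
         for i in range(n)]
print(strongest_frequency(frame, fs))
```

With 256 samples at 8 kHz the bins are 31.25 Hz apart; longer frames give finer frequency resolution at the cost of slower response, which is the same trade-off analyzer software exposes as its FFT size setting.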

Q 18. What are your strategies for effective audio monitoring?

Effective audio monitoring is crucial for maintaining a consistent and high-quality sound throughout a performance. My strategies involve using a combination of monitoring techniques. For stage monitoring, I typically utilize in-ear monitors (IEMs) which provide a personalized mix for each performer, reducing stage volume and feedback issues. I also employ stage wedges or floor monitors that project sound directly to the performers. A crucial aspect is creating individual mixes for each performer to address their specific needs. For example, a vocalist might need a prominent vocal mix, while a guitarist may require a stronger instrumental mix. Additionally, I use a control room monitor for the overall mix, allowing me to adjust levels and balance the entire system. Constant attention to gain staging, EQ, and dynamic processing ensures that the monitor levels are comfortable and don’t lead to fatigue or discomfort for performers. Regular communication with performers during the sound check is key to optimizing their individual monitor mixes and addressing any feedback or imbalance concerns.

Q 19. Describe your experience with signal flow diagrams.

Signal flow diagrams are essential tools for understanding and troubleshooting audio systems. They visually represent the path of the audio signal, from its source to the output. These diagrams illustrate how different components, such as microphones, mixers, equalizers, compressors, and amplifiers, are interconnected and how the signal is processed at each stage. I use them extensively during the design, setup, and troubleshooting phases of sound systems. For instance, I create a signal flow diagram before a live event, meticulously noting each connection and the signal processing at each point, which helps me plan the setup and predict potential problems. If a problem occurs during a show, the signal flow diagram serves as a roadmap for pinpointing the source of the issue. For example, if a specific instrument isn’t audible, the diagram quickly allows me to trace the signal path to check connections, levels, and processing settings at each point.
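A signal flow diagram is essentially a directed graph, so the tracing described above can be sketched as a path search. The device names and connections below are hypothetical; the function simply returns, in order, the points to check between a source and an output.

```python
# A signal flow diagram as a directed graph (hypothetical device names).
SIGNAL_FLOW = {
    "vocal mic": ["stage box ch 1"],
    "stage box ch 1": ["console ch 1"],
    "console ch 1": ["main L/R bus", "aux 1 (monitor)"],
    "main L/R bus": ["system processor"],
    "system processor": ["amp A"],
    "amp A": ["main speakers"],
    "aux 1 (monitor)": ["amp B"],
    "amp B": ["wedge 1"],
}

def trace(graph, source, destination, path=None):
    """Depth-first search returning the first path from source to destination, or None."""
    path = (path or []) + [source]
    if source == destination:
        return path
    for nxt in graph.get(source, []):
        if nxt not in path:  # guard against loops in the diagram
            found = trace(graph, nxt, destination, path)
            if found:
                return found
    return None

# The vocalist can't hear themselves in wedge 1: which points should be checked?
print(" -> ".join(trace(SIGNAL_FLOW, "vocal mic", "wedge 1")))
```

Checking each point along the returned path in order (connections, levels, processing) mirrors the manual walk through a printed diagram.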

Q 20. Explain the concept of impedance matching.

Impedance refers to the opposition to the flow of alternating current in an electrical circuit and is measured in ohms (Ω). Despite the name, most modern audio connections are not impedance-matched in the strict sense (equal source and load impedance); strict matching maximizes power transfer but costs 6 dB of signal level. Microphone and line-level connections instead use impedance bridging: the input (load) impedance is typically ten times or more the output (source) impedance, so nearly all of the signal voltage reaches the input. Problems arise when this relationship is violated. A high-impedance source, such as a passive guitar pickup, plugged into a low-impedance input is heavily loaded, producing a weaker, duller signal; this is why a DI box or a dedicated high-impedance (instrument) input is used. A low-impedance microphone plugged into a high-impedance input usually suffers mainly from insufficient gain and the loss of a balanced connection rather than from the impedance itself. At the power-amplifier stage, the total speaker load must not fall below the amplifier’s rated minimum impedance; driving too low a load (for example, too many speakers wired in parallel) can cause the amplifier to overheat, clip, or shut down. To ensure efficient signal transfer, I always check the impedance specifications of each component and use DI boxes, transformers, or appropriate speaker wiring when necessary. Understanding impedance is fundamental for designing and troubleshooting audio systems efficiently.
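Treating the source and input impedances as a simple voltage divider shows how much signal level survives a connection. The Python sketch below compares a matched connection (equal impedances) with bridging connections. It assumes purely resistive impedances; real guitar pickups are partly inductive, so in practice their loss varies with frequency and shows up mostly as lost treble. The impedance values are illustrative, not specifications of particular products.

```python
import math

def input_level_db(source_ohms, load_ohms):
    """Level (dB) reaching an input relative to the source's open-circuit voltage,
    treating both impedances as plain resistances: V_in = V_s * Z_load / (Z_source + Z_load)."""
    return 20 * math.log10(load_ohms / (source_ohms + load_ohms))

cases = {
    "150 ohm mic -> 1.5 kohm preamp (bridging)": (150, 1500),
    "150 ohm source -> 150 ohm load (matched)": (150, 150),
    "10 kohm passive pickup -> 1.5 kohm mic input": (10_000, 1500),
    "10 kohm passive pickup -> 1 Mohm instrument input": (10_000, 1_000_000),
}
for label, (zs, zl) in cases.items():
    print(f"{label}: {input_level_db(zs, zl):.1f} dB")
```

Bridging loses under 1 dB, strict matching loses about 6 dB, and a high-impedance source into a low-impedance input loses far more, which is the case a DI box addresses.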

Q 21. How do you troubleshoot a failing audio device?

Troubleshooting a failing audio device begins with a systematic approach. First, I isolate the problem by checking all connections, making sure cables are securely plugged into the correct ports. Then, I visually inspect the device for any physical damage. Next, I move on to signal tracing, using the signal flow diagram to pinpoint where the problem might lie. I check signal levels at various points with a meter (for example, a multimeter on its AC voltage range while a test tone plays) or by listening to the audio at different stages. If the problem persists, I swap cables or substitute the suspect component with a known good one to see whether the replacement solves the problem. If the issue stems from an internal fault in the device, I contact a qualified technician for repair or arrange a replacement. For example, if a speaker isn’t working, I’d first check the speaker cable, then the amplifier output to the speaker, and finally the amplifier itself before concluding that the speaker is internally faulty. Careful documentation of every step of the troubleshooting process is crucial for efficient problem solving.
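The signal-tracing step can be sketched as a walk along the chain in signal-flow order, comparing measured levels with expected ones while a steady test tone plays. The test points, expected levels, and 3 dB tolerance below are hypothetical; the point is that the fault lies between the last good reading and the first bad one.

```python
# Hypothetical readings (dBu) in signal-flow order, taken while a steady test tone plays:
# (test point, expected level, measured level)
TEST_POINTS = [
    ("console main out", 4.0, 3.8),
    ("system processor out", 4.0, 4.1),
    ("amplifier input", 4.0, 3.9),
    ("amplifier output", 30.0, -40.0),
    ("speaker terminals", 30.0, -40.0),
]

def locate_fault(readings, tolerance_db=3.0):
    """Return (last good point, first bad point); the fault lies between them.
    Returns None if every reading is within tolerance."""
    last_good = "source"
    for point, expected, measured in readings:
        if abs(measured - expected) > tolerance_db:
            return last_good, point
        last_good = point
    return None

print(locate_fault(TEST_POINTS))
```

Here the signal arrives at the amplifier input but not at its output, so the amplifier itself is the prime suspect, matching the order of checks in the speaker example above.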

Q 22. What are your skills in using audio editing software?

My audio editing skills encompass a wide range of software, including industry-standard applications like Pro Tools, Logic Pro X, Ableton Live, and Audacity. I’m proficient in tasks such as waveform editing, noise reduction, equalization (EQ), compression, reverb, delay, and automation. For instance, in a recent project, I used noise reduction plugins in Pro Tools to eliminate unwanted background hum from a live recording, significantly improving the final audio quality. My experience extends to mastering techniques, including loudness maximization and dynamic range control, ensuring a professional and polished sound. I’m also adept at using plugins from various developers to achieve specific sonic effects, tailoring the sound to the project’s needs. I understand the importance of non-destructive editing, preserving the original audio material for potential future modifications.

Beyond basic editing, I’m skilled in advanced techniques like spectral editing, which allows for precise frequency manipulation, and using MIDI editors for manipulating and automating musical instruments within the DAW. My workflow is efficient and organized, ensuring projects are completed within deadlines and to a high standard. I’m constantly learning about new plugins and techniques, remaining up-to-date with the latest industry trends.

Q 23. Explain the role of gain staging in audio production.

Gain staging is the process of setting the optimal signal levels at each stage of the audio chain, from the microphone preamp to the final output. Think of it like adjusting the water flow in a plumbing system: you need the right pressure at each point to avoid overflows or weak flow. In audio, proper gain staging prevents clipping (distortion caused by exceeding the maximum signal level) and maximizes the dynamic range and signal-to-noise ratio of your recordings or mixes. Poor gain staging can lead to a weak, noisy signal or harsh, distorted audio.

In practice, this involves carefully adjusting the gain on your microphone preamps, compressors, and other processing units to achieve a balanced signal throughout the entire chain. You want a strong but not overwhelming signal at each stage. Monitor your levels closely, ideally with a peak meter (a VU meter shows average level and can miss fast transients), making sure the signal never clips but stays loud enough to sit well above the noise floor. For example, I often start recording with a slightly lower preamp gain to leave headroom for dynamic peaks and avoid clipping during loud passages. Then, I use compression to control the dynamic range and keep the audio level consistent. This ensures a clean and professional sound, which is far easier to mix and master than an improperly staged signal.
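Meters and headroom are easy to reason about numerically. This Python sketch computes peak and RMS levels in dBFS for a block of floating-point samples (full scale = 1.0) and the gain change needed to bring the peak to a target. The -12 dBFS peak target is an assumption, a common rule of thumb for tracking at 24-bit rather than a standard, and the plain RMS convention used here reads a full-scale sine at about -3 dBFS (some meters add 3 dB so that it reads 0).

```python
import math

def peak_dbfs(samples):
    """Highest absolute sample value relative to full scale (1.0), in dBFS."""
    peak = max(abs(x) for x in samples)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")

def rms_dbfs(samples):
    """Plain RMS level in dBFS."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")

def gain_to_target(samples, target_peak_dbfs=-12.0):
    """Gain change (dB) that would put the current peak at target_peak_dbfs."""
    return target_peak_dbfs - peak_dbfs(samples)

# One cycle of a sine at half of full scale, 48 samples long
tone = [0.5 * math.sin(2 * math.pi * i / 48) for i in range(48)]
print(f"peak {peak_dbfs(tone):.1f} dBFS, RMS {rms_dbfs(tone):.1f} dBFS, "
      f"change gain by {gain_to_target(tone):+.1f} dB for a -12 dBFS peak")
```

The gap between peak and RMS (the crest factor, 3 dB for a sine and much larger for drums or vocals) is why headroom has to be judged on the peak meter.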

Q 24. What are your experiences in different audio formats?

My experience with audio formats is extensive, covering uncompressed, lossless compressed, and lossy formats. I’m highly familiar with uncompressed formats like WAV and AIFF, ideal for studio work where preserving the highest audio fidelity is critical, and with lossless compressed formats such as FLAC, which reduce file size without discarding any audio data. I also work regularly with lossy formats such as MP3 and AAC. I understand the trade-offs involved in choosing a specific format: for example, FLAC preserves the audio exactly while saving storage, whereas MP3 produces much smaller files at the cost of some audio fidelity. This understanding guides my choice of format based on the project’s specific needs. I’m also versed in more niche formats, like DSD and MQA, which are used in high-resolution audio applications. My experience extends to metadata embedding and file management to maintain the best possible organization for archiving and retrieval.

I’m well-versed in the technical specifications of each format, including bit depth, sample rate, and compression algorithms. This understanding allows me to make informed decisions about which format is appropriate for a given application, whether it’s distributing a final mix for streaming platforms or archiving high-quality recordings for future use. This knowledge has been essential for many projects, including mastering albums for distribution to various streaming services, where optimized file sizes and high-quality audio are crucial.
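The specifications mentioned above translate directly into data rates. For uncompressed PCM (the audio payload of WAV or AIFF files, ignoring headers), the bit rate is sample rate × bit depth × channels, and the ideal dynamic range of an N-bit converter for a full-scale sine is about 6.02N + 1.76 dB. A short Python sketch:

```python
def pcm_bitrate_kbps(sample_rate_hz, bit_depth, channels):
    """Uncompressed PCM data rate in kilobits per second (1 kbps = 1000 bit/s)."""
    return sample_rate_hz * bit_depth * channels / 1000

def pcm_megabytes_per_minute(sample_rate_hz, bit_depth, channels):
    """Approximate size per minute in megabytes (10^6 bytes), headers ignored."""
    return sample_rate_hz * bit_depth * channels * 60 / 8 / 1_000_000

def theoretical_dynamic_range_db(bit_depth):
    """Ideal quantization SNR for a full-scale sine: about 6.02 * N + 1.76 dB."""
    return 6.02 * bit_depth + 1.76

for sr, bits in ((44100, 16), (48000, 24), (96000, 24)):
    print(f"{sr} Hz / {bits}-bit stereo: {pcm_bitrate_kbps(sr, bits, 2):.1f} kbps, "
          f"{pcm_megabytes_per_minute(sr, bits, 2):.1f} MB/min, "
          f"~{theoretical_dynamic_range_db(bits):.0f} dB dynamic range")
```

CD-quality stereo works out to 1411.2 kbps, so a 320 kbps MP3 uses less than a quarter of that data rate; lossless FLAC sits in between, with a ratio that depends on the material.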

Q 25. Describe your experience with different types of audio cables.

My experience with audio cables spans a variety of types, each suited for different applications and signal types. I’m familiar with XLR cables, commonly used for balanced microphone and line-level signals, ensuring a low-noise and interference-resistant transmission. I know the importance of using high-quality XLR cables to minimize signal degradation. I’m equally adept with TRS (Tip-Ring-Sleeve) cables, often used for balanced line-level signals, and TS (Tip-Sleeve) cables, used for unbalanced instrument signals. For digital audio transmission, I’ve worked with optical cables (Toslink) and S/PDIF coaxial cables. The choice of cable depends on the application; for example, long cable runs often necessitate the use of balanced connections with XLR or TRS to reduce noise and interference.

I have hands-on experience troubleshooting cable issues such as faulty connections, grounding problems, and signal interference. For instance, I once identified a grounding issue causing hum in a live sound system; careful inspection traced it to a damaged XLR cable, and replacing the cable cured it. My understanding extends to how cable gauge affects signal integrity, particularly the resistance of long speaker runs, so I can choose cables for optimal performance in each application. I’m also knowledgeable about connector compatibility: knowing the connector series offered by manufacturers such as Neutrik and Switchcraft (for example, locking speakON connectors versus 1/4-inch plugs for speaker lines) helps ensure secure and reliable connections.
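For speaker runs, gauge matters because the cable's resistance forms a divider with the loudspeaker's impedance. The sketch below uses the standard AWG diameter formula and the resistivity of annealed copper, and assumes a purely resistive load and a solid-equivalent conductor. Real loudspeaker impedance varies with frequency, so treat the results as estimates.

```python
import math

COPPER_RESISTIVITY_OHM_M = 1.72e-8  # annealed copper at about 20 °C

def awg_diameter_m(awg):
    """Conductor diameter from the standard AWG formula."""
    return 0.127e-3 * 92 ** ((36 - awg) / 39)

def ohms_per_metre(awg):
    """Resistance per metre of one conductor of the given gauge."""
    area_m2 = math.pi * (awg_diameter_m(awg) / 2) ** 2
    return COPPER_RESISTIVITY_OHM_M / area_m2

def cable_loss_db(awg, length_m, load_ohms=8.0):
    """Level lost in a speaker run: current flows out and back, so the loop is 2 x length."""
    r_cable = 2 * length_m * ohms_per_metre(awg)
    return 20 * math.log10(load_ohms / (load_ohms + r_cable))

for gauge in (16, 14, 12):
    print(f"{gauge} AWG, 30 m into 8 ohms: {cable_loss_db(gauge, 30):.2f} dB")
```

Halving the load to 4 Ω roughly doubles the loss for a given run, which is why low-impedance loads on long runs call for heavier cable.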

Q 26. What is your experience using acoustic treatment in a recording studio or live setting?

Acoustic treatment plays a crucial role in achieving optimal sound quality in recording studios and live performance venues. My experience includes designing and implementing acoustic treatments in both settings. This involves using materials such as acoustic panels, bass traps, diffusers, and absorbers to control reflections, reduce unwanted resonances, and optimize the sound environment. For example, in a recent studio project, we placed bass traps in the corners of the room to reduce low-frequency build-up, resulting in a cleaner, more accurate low-end response. In live settings, I often combine absorptive and diffusive elements to manage reflections and create a more even sound field for the audience.
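
Corner placement works because every room mode has a pressure maximum in the room's corners. A first estimate of where low-frequency build-up will occur is the axial modes of each dimension, f = n × c / (2L). The sketch below assumes a rectangular room with rigid walls and c = 343 m/s (air at about 20 °C); it ignores tangential and oblique modes, so it is a rough guide rather than a substitute for measurement. The room dimensions and function names are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # air at roughly 20 °C


def axial_modes(length_m: float, count: int = 4) -> list[float]:
    """First `count` axial mode frequencies (Hz) between two parallel surfaces."""
    return [n * SPEED_OF_SOUND_M_S / (2 * length_m) for n in range(1, count + 1)]


def room_axial_modes(length_m: float, width_m: float, height_m: float,
                     count: int = 4) -> dict[str, list[float]]:
    """Axial modes along each of the three room dimensions."""
    return {
        "length": axial_modes(length_m, count),
        "width": axial_modes(width_m, count),
        "height": axial_modes(height_m, count),
    }


if __name__ == "__main__":
    # Hypothetical 6 m x 4.5 m x 3 m control room
    for axis, freqs in room_axial_modes(6.0, 4.5, 3.0).items():
        print(axis, [round(f, 1) for f in freqs])
```

In this example the second length mode and the first height mode both land near 57 Hz; coinciding modes like this tend to produce the most pronounced build-up, which is where bass trapping helps most.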

I understand the importance of analyzing the room’s acoustic properties using tools such as room measurement software and performing acoustic modeling. This helps me design an effective treatment plan that addresses specific acoustic problems. For instance, I often utilize software that analyzes the reverberation time of a space, allowing precise targeting of absorption materials to optimize that space’s response. This systematic approach ensures that the acoustic treatment enhances the listening experience and improves audio recording or playback quality.
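
Reverberation-time measurements are usually reported as RT60, the time for sound to decay by 60 dB. The classic estimate is Sabine's equation, RT60 ≈ 0.161 × V / A in metric units, where V is the room volume and A is the total absorption (each surface area multiplied by its absorption coefficient). The sketch below uses hypothetical surface areas and coefficients purely for illustration; Sabine's formula is least reliable in very absorptive rooms, and real coefficients vary with frequency.

```python
def sabine_rt60(volume_m3: float, surfaces: list[tuple[float, float]]) -> float:
    """RT60 in seconds from room volume and (area_m2, absorption_coefficient) pairs."""
    total_absorption = sum(area * alpha for area, alpha in surfaces)  # metric sabins
    return 0.161 * volume_m3 / total_absorption


if __name__ == "__main__":
    volume = 6.0 * 4.5 * 3.0  # 81 m^3
    # Hypothetical surfaces: (area in m^2, absorption coefficient at one frequency)
    bare = [(27.0, 0.02), (27.0, 0.02), (63.0, 0.05)]           # floor, ceiling, walls
    treated = [(27.0, 0.02), (27.0, 0.02), (51.0, 0.05),        # 12 m^2 of wall...
               (12.0, 0.9)]                                      # ...covered with panels
    print(f"Untreated: {sabine_rt60(volume, bare):.2f} s")
    print(f"Treated:   {sabine_rt60(volume, treated):.2f} s")
```

Because A is a simple sum, the formula shows directly how much absorption has to be added to bring RT60 down to a target value.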

Q 27. How do you ensure consistent audio levels throughout a performance or recording?

Maintaining consistent audio levels throughout a performance or recording is essential for a balanced, professional-sounding result. This involves a combination of careful gain staging (as previously discussed), dynamic processing (using compressors and limiters), and monitoring. I watch levels in real time on VU meters, which show average level, and on peak meters, which show transient peaks. I always aim to avoid clipping while keeping enough signal level to make full use of the available dynamic range and stay well above the noise floor. In a live setting, I use a combination of digital mixing consoles and feedback suppression systems. During recording sessions, I use automation and editing tools to keep levels within a consistent range.
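
The difference between peak and average readings can be shown on a block of samples normalized so that ±1.0 is full scale (0 dBFS). This is a minimal sketch, assuming floating-point samples and a simple "at or above full scale" clip test. Hardware VU meters also apply defined ballistics (integration time) that this omits, and some digital meters offset RMS readings so that a full-scale sine wave reads 0 dB. Function names are hypothetical.

```python
import math


def to_dbfs(level: float) -> float:
    """Convert a linear level (1.0 = full scale) to dBFS; silence maps to -inf."""
    return 20 * math.log10(level) if level > 0 else float("-inf")


def meter(samples: list[float], clip_level: float = 1.0) -> dict[str, float]:
    """Peak and RMS levels, crest factor, and a count of samples at or above full scale."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    peak_db, rms_db = to_dbfs(peak), to_dbfs(rms)
    return {
        "peak_dbfs": peak_db,
        "rms_dbfs": rms_db,
        "crest_factor_db": peak_db - rms_db if rms > 0 else 0.0,
        "clipped_samples": sum(1 for s in samples if abs(s) >= clip_level),
    }


if __name__ == "__main__":
    # One cycle of a half-scale sine, 48 samples long
    sine = [0.5 * math.sin(2 * math.pi * k / 48) for k in range(48)]
    print(meter(sine))  # peak about -6.02 dBFS, RMS about -9.03 dBFS
```

The gap between the two readings (the crest factor) is why a signal can look comfortable on an average-reading meter while its transients are already close to clipping.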

For instance, in live concerts, I use dynamic processing to control the peaks and troughs in a vocalist’s performance, preventing sudden volume spikes and dips. When mixing, I use automation to adjust levels throughout the song, ensuring that each instrument or vocal track has an appropriate balance in relation to the overall mix. I’m also adept at using techniques such as riding faders manually to maintain consistent levels throughout a performance.
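
The peak control described above comes from a compressor's gain computer. Below is a minimal static, hard-knee version working in decibels. The threshold and ratio defaults are hypothetical, and it deliberately leaves out the attack/release smoothing, knee shaping, and make-up gain that a real compressor applies.

```python
def compressed_level_db(input_db: float, threshold_db: float = -18.0, ratio: float = 4.0) -> float:
    """Static hard-knee compression: above threshold, output rises 1 dB per `ratio` dB of input."""
    if input_db <= threshold_db:
        return input_db
    return threshold_db + (input_db - threshold_db) / ratio


def gain_reduction_db(input_db: float, threshold_db: float = -18.0, ratio: float = 4.0) -> float:
    """Gain change applied at this input level (zero or negative)."""
    return compressed_level_db(input_db, threshold_db, ratio) - input_db


if __name__ == "__main__":
    # A hypothetical vocal phrase swinging from -30 dBFS to -6 dBFS
    for level in (-30.0, -18.0, -12.0, -6.0):
        print(f"in {level:6.1f} dB -> out {compressed_level_db(level):6.1f} dB")
```

With these settings, a 24 dB swing at the input (-30 to -6 dBFS) becomes a 15 dB swing at the output (-30 to -15 dBFS), which is the levelling effect described above.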

Q 28. Describe your experience with audio networking protocols (e.g., Dante, AES67).

My experience with audio networking protocols includes Dante and AES67. These protocols transmit high-quality digital audio over standard Ethernet networks. Dante, in particular, is widely used in professional audio applications for its low latency and reliability. I’ve implemented Dante networks in large-scale live sound systems and recording studios, managing multiple audio channels across several devices. I know how to configure devices and route audio signals between them in Dante Controller, and how to troubleshoot network issues. AES67 is an interoperability standard that lets equipment from different manufacturers exchange audio, providing a more flexible and scalable audio networking solution. This interoperability is particularly important in complex environments where equipment from different vendors is used.

I understand the advantages and limitations of each protocol, including bandwidth requirements and latency considerations. My experience includes setting up and managing complex Dante networks with multiple devices, including microphones, mixers, and digital signal processors. I’ve also used AES67 in situations where interoperability with non-Dante devices was required, demonstrating adaptability and problem-solving skills in varied network configurations. Troubleshooting network issues is a critical part of this, and my experience includes pinpointing network glitches in large-scale setups.
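
Bandwidth planning for Dante or AES67 starts with the raw audio payload: channels × sample rate × bit depth. At 48 kHz with a 1 ms packet time (the AES67 baseline), each packet carries 48 samples per channel. The sketch below computes only the audio payload and deliberately ignores RTP/UDP/IP/Ethernet header overhead and clock-synchronization traffic, so the real load on the wire is higher; treat the result as a lower bound. Function names and defaults are hypothetical.

```python
def payload_mbps(channels: int, sample_rate_hz: int = 48_000, bit_depth: int = 24) -> float:
    """Raw audio payload in megabits per second, excluding all protocol overhead."""
    return channels * sample_rate_hz * bit_depth / 1e6


def samples_per_packet(sample_rate_hz: int = 48_000, packet_time_ms: float = 1.0) -> int:
    """Samples per channel carried in one packet at the given packet time."""
    return round(sample_rate_hz * packet_time_ms / 1000)


if __name__ == "__main__":
    for channels in (8, 32, 64, 128):
        print(f"{channels:4d} ch @ 48 kHz / 24-bit: {payload_mbps(channels):7.2f} Mbit/s payload")
    print("Samples per channel per 1 ms packet:", samples_per_packet())
```

Even before overhead, 128 channels at 48 kHz/24-bit exceed what a 100 Mbit/s link can carry, which is one reason larger systems rely on Gigabit switching.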

Key Topics to Learn for Skill in Using Audio Equipment and Sound Systems Interview

- Microphone Techniques: Understanding different microphone types (dynamic, condenser, ribbon), polar patterns (cardioid, omnidirectional, figure-8), and their applications in various recording and live sound scenarios. Practical application: Explain how to choose the right microphone for a specific recording task (e.g., vocals, instruments, ambient sound).

- Mixing Consoles: Familiarity with analog and digital mixing consoles, including channel strips (EQ, compression, gain), routing, aux sends, and mastering principles. Practical application: Describe your experience setting up and operating a mixing console for a live performance or recording session, highlighting problem-solving techniques.

- Audio Signal Flow: A comprehensive understanding of how audio signals travel from source to output, including the roles of preamps, equalizers, compressors, effects processors, and digital audio workstations (DAWs). Practical application: Illustrate your understanding by tracing the signal path in a given audio setup.

- Sound System Setup and Troubleshooting: Knowledge of PA systems, speaker placement and configuration, sound reinforcement techniques, and common troubleshooting issues (feedback, low volume, distorted sound). Practical application: Explain your approach to setting up a sound system for an event, including considerations for room acoustics and audience size.

- Audio Software and DAWs: Proficiency in using common DAWs (e.g., Pro Tools, Logic Pro, Ableton Live) for recording, editing, mixing, and mastering audio. Practical application: Describe your experience using DAW software for a specific project, highlighting your workflow and problem-solving skills.

- Acoustic Principles: Understanding basic acoustics, including concepts like reverberation, reflection, absorption, and their impact on sound quality. Practical application: Explain how to improve the acoustics of a room for optimal sound.
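
The three polar patterns listed under Microphone Techniques are all first-order patterns, which share one idealized formula: sensitivity(θ) = a + (1 - a) × cos θ, where a = 1 gives omnidirectional, a = 0.5 cardioid, and a = 0 figure-8. The sketch below evaluates that idealized formula; real microphones deviate from it, especially at high frequencies, so the numbers are a guide to choosing a pattern rather than a specification. Names are hypothetical.

```python
import math

# Idealized first-order patterns: sensitivity = a + (1 - a) * cos(theta)
PATTERNS = {"omnidirectional": 1.0, "cardioid": 0.5, "figure-8": 0.0}


def sensitivity_db(pattern: str, angle_deg: float) -> float:
    """Relative pickup (dB re on-axis) at a given angle; -inf marks an ideal null."""
    a = PATTERNS[pattern]
    # abs(): the figure-8 rear lobe has inverted polarity but the same magnitude
    gain = abs(a + (1 - a) * math.cos(math.radians(angle_deg)))
    return 20 * math.log10(gain) if gain > 1e-12 else float("-inf")


if __name__ == "__main__":
    for name in PATTERNS:
        row = ", ".join(f"{angle}°: {sensitivity_db(name, angle):.1f} dB" for angle in (0, 90, 180))
        print(f"{name:16s} {row}")
```

The output shows, for example, why a cardioid's rear null is pointed at stage monitors, and why a figure-8's side nulls can reject a neighbouring source.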

Next Steps

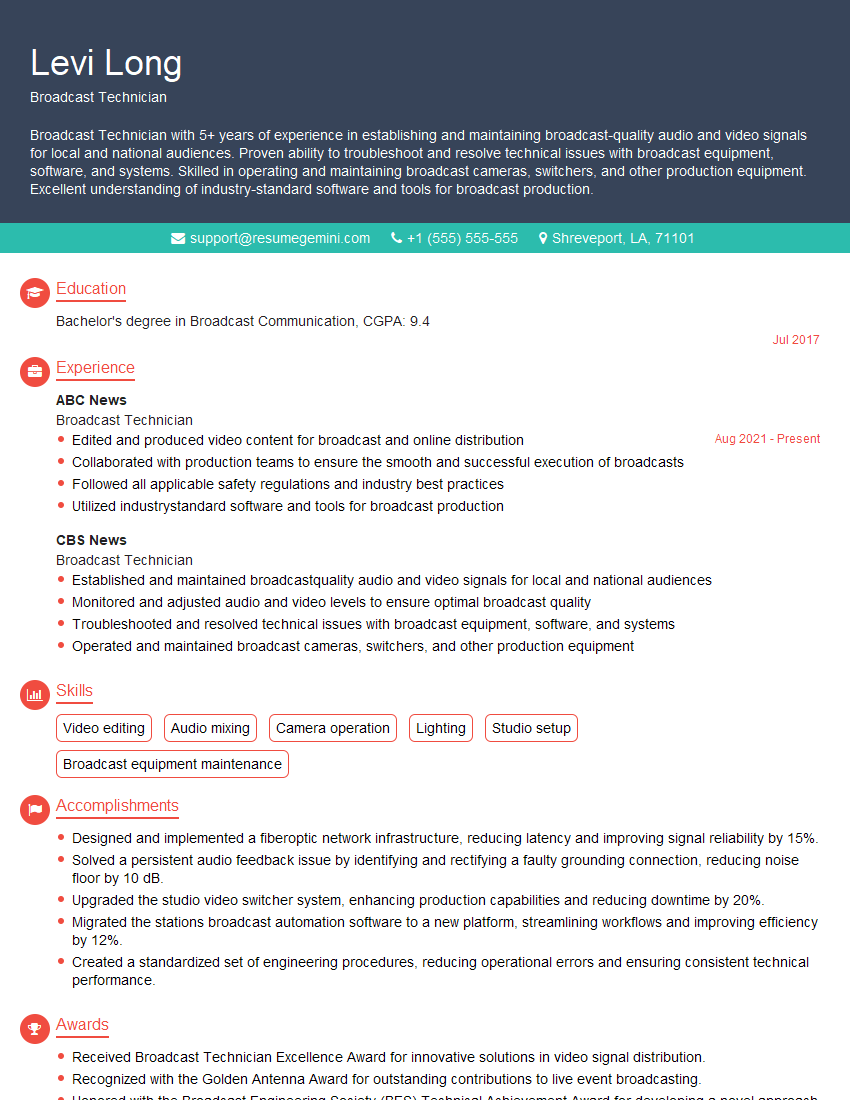

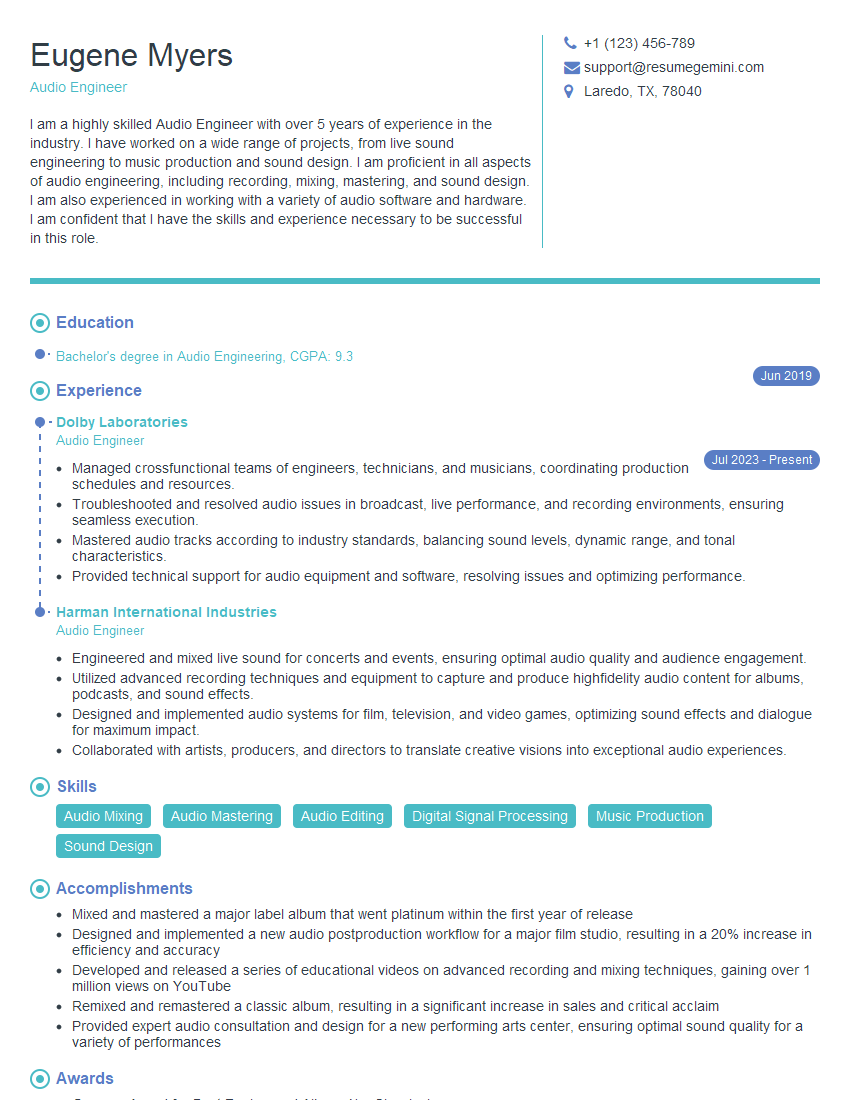

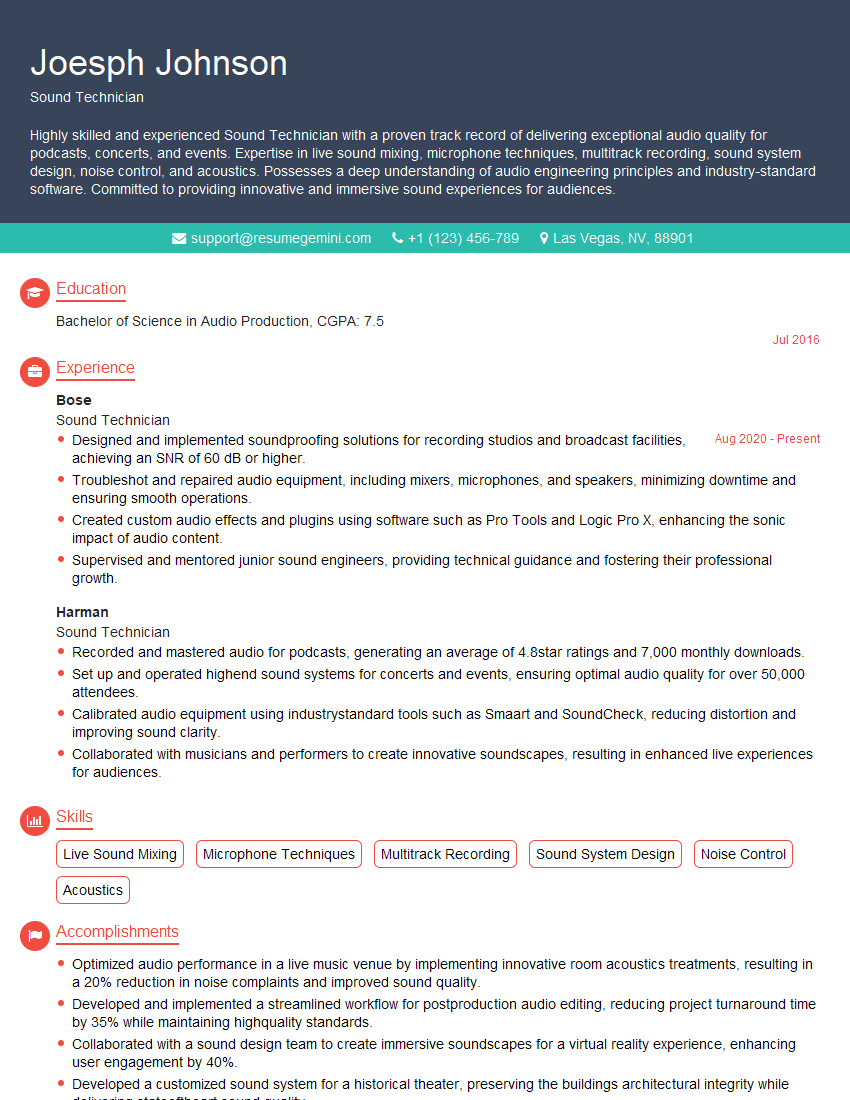

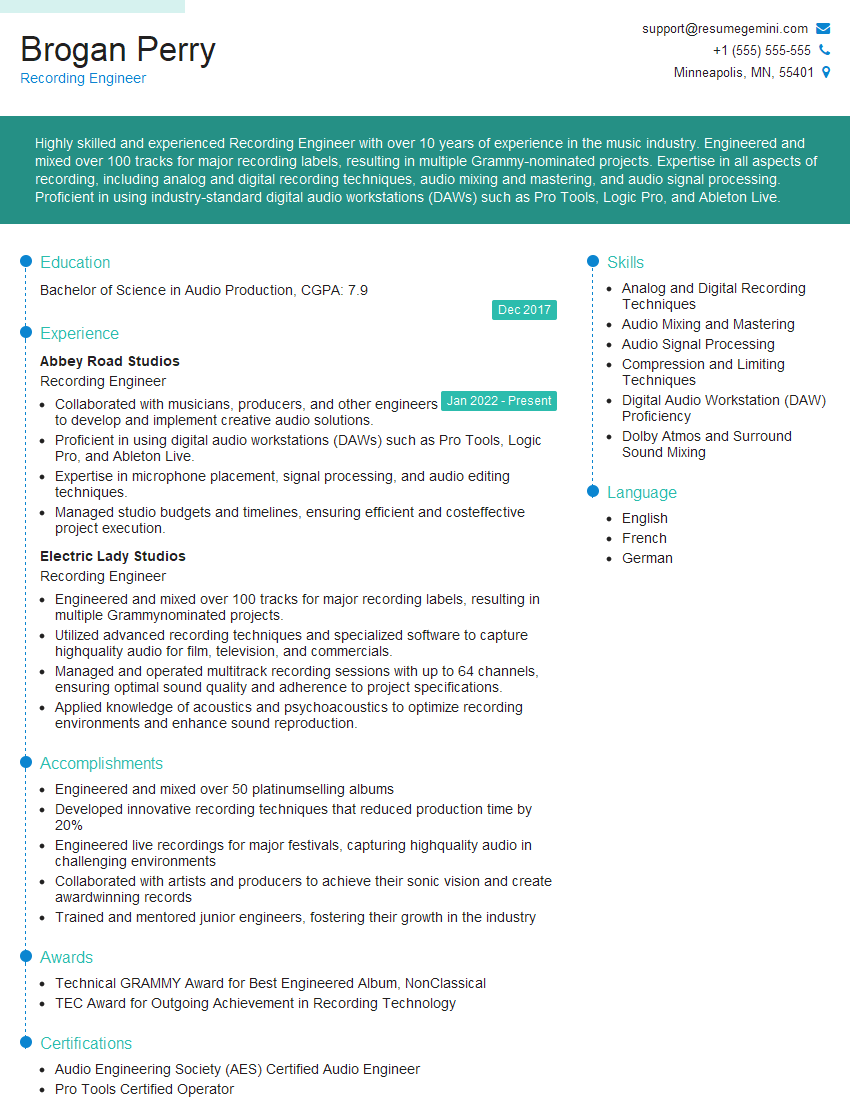

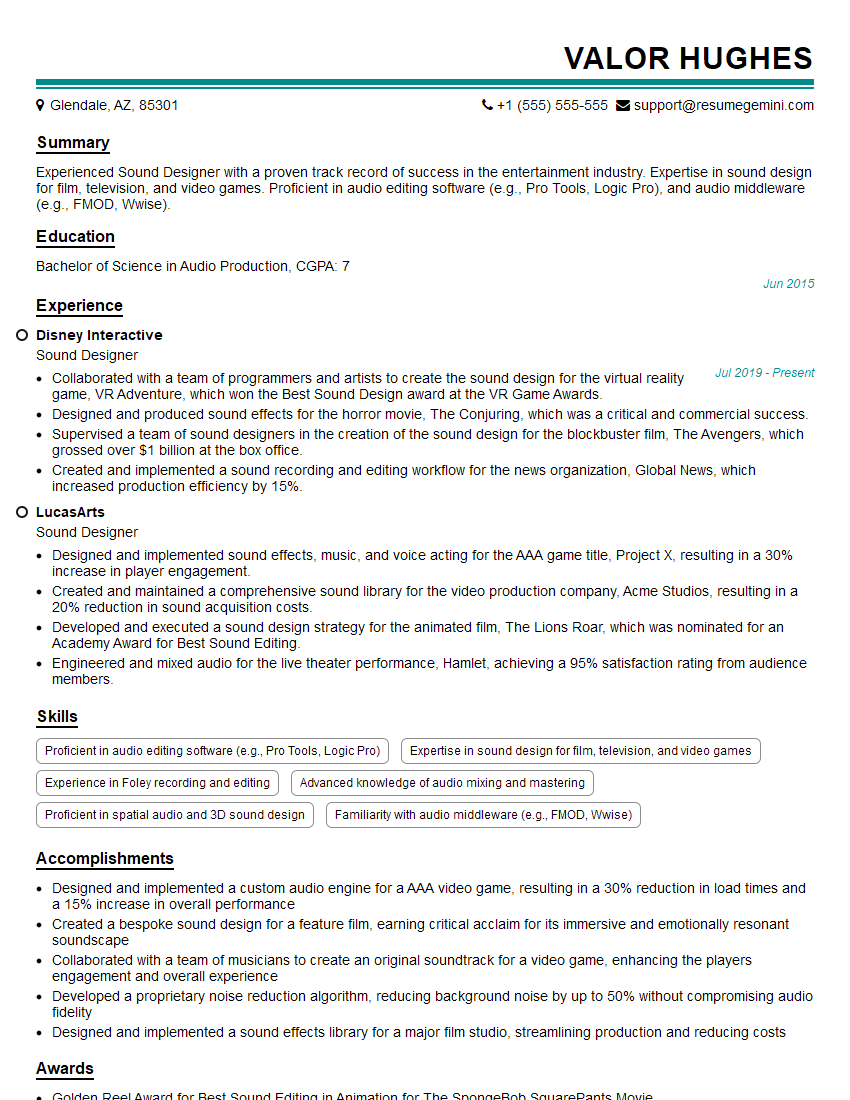

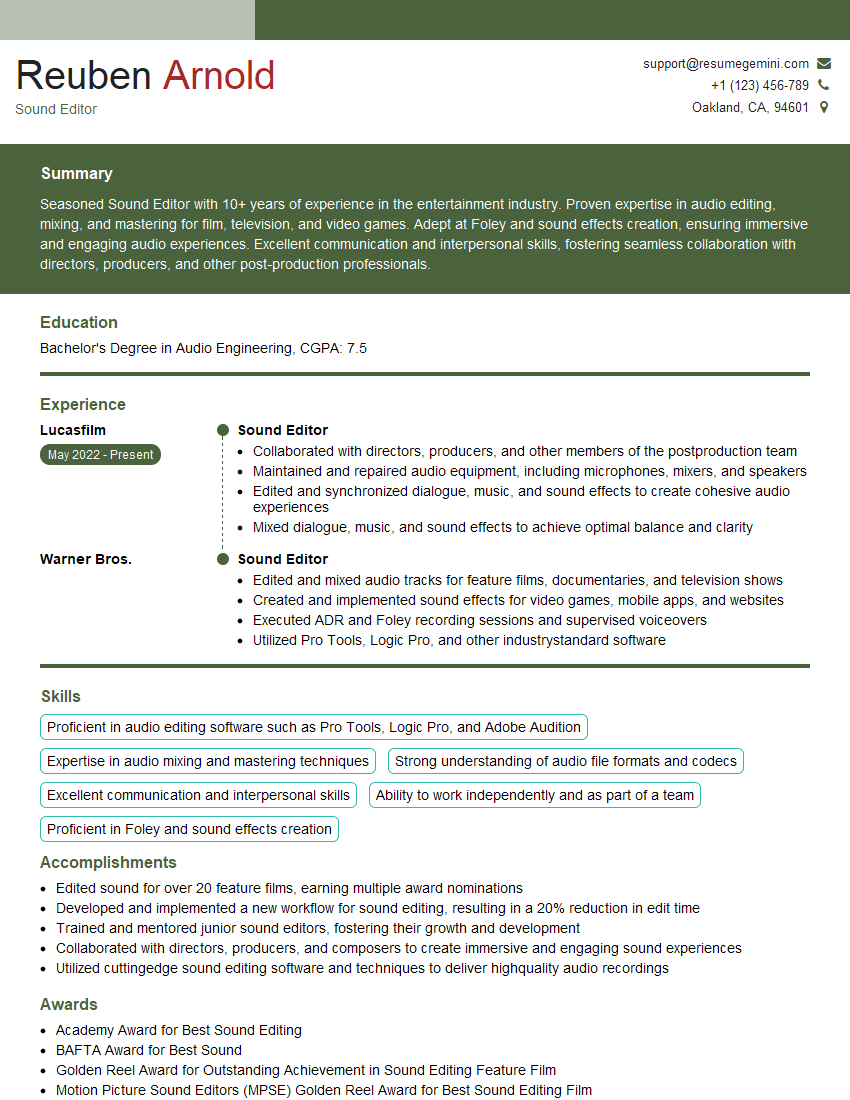

Mastering skills in audio equipment and sound systems is crucial for career advancement in various fields, including music production, live sound engineering, broadcasting, and post-production. A strong foundation in these areas demonstrates technical proficiency and problem-solving abilities highly valued by employers. To enhance your job prospects, create an ATS-friendly resume that effectively highlights your skills and experience. ResumeGemini is a trusted resource that can help you build a professional resume tailored to your specific skills. Examples of resumes tailored to Skill in Using Audio Equipment and Sound Systems are available to guide you.
