Interviews are opportunities to demonstrate your expertise, and this guide is here to help you shine. Explore the essential Special Effects interview questions that employers frequently ask, paired with strategies for crafting responses that set you apart from the competition.

Questions Asked in Special Effects Interview

Q 1. Explain the difference between keyframing and procedural animation.

Keyframing and procedural animation are two fundamental approaches to animation in VFX. Keyframing is like drawing individual frames of a flipbook; you manually set the position, rotation, and other properties of an object at specific points in time (keyframes), and the software interpolates the motion between them. Procedural animation, on the other hand, defines the animation through algorithms and rules. You set parameters and the software calculates the animation based on those parameters, often creating more organic and unpredictable results.

Keyframing is excellent for precise control over character animation or complex movements requiring specific poses and timing. Think of a character walking – you’d keyframe the major poses (foot placement, arm swing) and the software fills in the gaps. However, it’s time-consuming for complex simulations.

Procedural animation shines when simulating natural phenomena like fire, smoke, or cloth. You set parameters like density, wind speed, and gravity, and the software generates the animation automatically. It’s efficient but offers less direct control. Imagine simulating a realistic ocean wave – procedural techniques are much more efficient than keyframing every single drop of water.

In practice, many VFX shots utilize a combination of both. For instance, you might use procedural methods to generate a realistic fire effect, then keyframe a character’s interaction with it for precise timing and visual storytelling.
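
To make the contrast concrete, here is a minimal Python sketch, not tied to any particular package. The function names `keyframe_value` and `procedural_bob` are hypothetical, and linear interpolation stands in for the spline curves most animation software uses by default.

```python
import math
from bisect import bisect_right

def keyframe_value(keys, t):
    """Linearly interpolate between (time, value) keyframes sorted by time."""
    times = [k[0] for k in keys]
    if t <= times[0]:
        return keys[0][1]
    if t >= times[-1]:
        return keys[-1][1]
    i = bisect_right(times, t)      # index of the first key strictly after t
    (t0, v0), (t1, v1) = keys[i - 1], keys[i]
    u = (t - t0) / (t1 - t0)        # 0..1 position between the two keys
    return v0 + u * (v1 - v0)

def procedural_bob(t, amplitude=1.0, frequency=0.5):
    """Rule-driven motion: the value comes from parameters, no keys are stored."""
    return amplitude * math.sin(2.0 * math.pi * frequency * t)

# Keyframed: the artist authored three poses; the software fills the gaps.
keys = [(0.0, 0.0), (1.0, 2.0), (3.0, 0.0)]
print(keyframe_value(keys, 0.5))

# Procedural: changing a parameter changes the whole motion at once.
print(procedural_bob(0.5, amplitude=1.0, frequency=0.5))
```

The keyframed curve changes only where an artist edits a key, while the procedural one changes everywhere when a parameter changes, which is the control-versus-efficiency trade-off described above.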

Q 2. Describe your experience with various compositing software (e.g., Nuke, After Effects).

I have extensive experience with both Nuke and After Effects, each excelling in different areas of compositing. Nuke is my go-to for high-end compositing, especially for feature films. Its node-based workflow provides immense flexibility and control over complex shots, allowing for intricate layering, color correction, and effects. I’ve used it extensively for rotoscoping, keying, and more advanced work such as 3D camera tracking.

After Effects, while less powerful for very complex scenes, is fantastic for quick turnaround projects, motion graphics, and simpler compositing tasks. Its intuitive interface and powerful effects library make it ideal for situations where speed and efficiency are prioritized. I’ve used it frequently for tasks like creating lower-thirds, integrating simple VFX elements, and performing basic compositing.

For example, on a recent project involving a spaceship flying through an asteroid field, I used Nuke to handle the complex compositing of the spaceship model, its reflections, and the particle effects simulating the asteroid field. After Effects was then used for some simple text elements and visual effects that needed quick adjustments during post-production.

Q 3. What are your preferred methods for creating realistic lighting and shadows?

Creating realistic lighting and shadows relies on understanding the physics of light and its interaction with surfaces. My preferred method involves a combination of techniques. I start by using global illumination (GI) solvers in rendering software like Arnold or RenderMan to accurately calculate indirect lighting and realistic shadow interactions. This ensures that the scene looks believable and properly lit, considering light bouncing off different surfaces.

Next, I utilize HDRI (High Dynamic Range Imaging) maps for environment lighting. These provide incredibly realistic lighting conditions and reflections, creating a sense of depth and immersion. Finally, I utilize post-processing techniques in compositing software (Nuke or After Effects) to fine-tune the lighting, add subtle highlights and shadows, and ensure consistent lighting across the entire shot.

For example, on a recent project featuring an outdoor scene, I used an HDRI map of a sunset to create realistic lighting on characters and objects. Arnold’s GI solver helped to add realistic indirect lighting and subtle shadows, bringing depth and realism to the scene. Post-processing allowed me to add a slight lens flare for a more cinematic look without overdoing it.

Q 4. How do you handle complex character rigging challenges?

Complex character rigging requires a deep understanding of anatomy, weight painting, and animation principles. I approach these challenges by breaking them down into smaller, manageable parts. First, I meticulously create a robust skeleton, ensuring a good balance between articulation and performance. This includes considering the character’s pose, movement capabilities, and overall design.

Next, I perform meticulous weight painting, ensuring that the skin deforms naturally over the underlying skeleton. A common strategy is to focus on individual muscle groups to enable more realistic deformations during animation. If necessary, I add secondary deformation techniques, such as blend shapes for complex facial animation and corrective shapes for areas where skinning alone collapses or stretches.

Finally, I thoroughly test the rig by animating various sequences, focusing on areas prone to errors (such as fingers or facial expressions). Advanced tools and techniques like inverse kinematics (IK) and forward kinematics (FK) are used where necessary to achieve the desired movement and control. For particularly challenging rigging tasks, I often collaborate with other artists and utilize industry-standard rigging software like Maya or Houdini.
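
As an illustration of how IK and FK relate, the sketch below solves a planar two-bone chain (think upper arm and forearm) analytically with the law of cosines, then verifies the result with FK. It is a simplified 2D example under my own assumptions; the function names are hypothetical, and production rigs add pole vectors, joint limits, and 3D orientation.

```python
import math

def solve_two_bone_ik(l1, l2, tx, ty):
    """Return (root_angle, bend_angle) in radians so the chain tip reaches (tx, ty).

    The root joint sits at the origin; bend_angle is relative to the first bone.
    """
    dist = math.hypot(tx, ty)
    # Clamp to the reachable range so out-of-reach targets fully extend the chain.
    dist = min(max(dist, abs(l1 - l2)), l1 + l2)
    cos_bend = (dist * dist - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    bend = math.acos(min(max(cos_bend, -1.0), 1.0))
    root = math.atan2(ty, tx) - math.atan2(l2 * math.sin(bend),
                                           l1 + l2 * math.cos(bend))
    return root, bend

def forward_kinematics(l1, l2, root, bend):
    """FK: accumulate joint rotations down the chain to find the tip position."""
    jx, jy = l1 * math.cos(root), l1 * math.sin(root)
    return (jx + l2 * math.cos(root + bend), jy + l2 * math.sin(root + bend))

root, bend = solve_two_bone_ik(1.0, 1.0, 1.0, 1.0)
print(forward_kinematics(1.0, 1.0, root, bend))
```

With FK the animator sets the angles and the tip follows; with IK the animator places the tip and the angles are solved, which is why IK suits planted feet and hands while FK suits arcs such as arm swings.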

Q 5. Explain your experience with different particle systems.

My experience with particle systems spans a wide range of software, including Houdini, Maya, and After Effects. Each system has its strengths and weaknesses. Houdini, for instance, offers the most powerful and flexible particle systems, allowing for complex simulations and intricate controls over particle behavior. I frequently use it to create realistic effects, like smoke, fire, water, or even crowds of people.

Maya’s particle system is more user-friendly, suitable for simpler effects and quicker turnarounds. I’ve used it successfully in situations needing less computational power. After Effects’ particle systems are ideal for motion graphics and simpler visual effects. The strength of After Effects lies in quick iterations and compositing-focused effects.

The selection of the particle system depends heavily on the complexity of the simulation and the project’s requirements. For realistic simulations like explosions or massive crowds, I rely on Houdini’s robust capabilities. For simpler effects or quick iterations in compositing, Maya or After Effects’ particle systems are efficient choices. Understanding the nuances of each system is crucial for optimizing workflows and achieving the desired level of realism.
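
Whatever the package, most particle systems share the same core loop: apply forces, integrate, age, and cull. The following is a minimal Python sketch of that loop, not the API of Houdini, Maya, or After Effects. The `Particle` class, the `step` function, and the simple drag-toward-wind force model are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Particle:
    position: tuple
    velocity: tuple
    age: float = 0.0

def step(particles, dt, gravity=(0.0, -9.81, 0.0), wind=(0.0, 0.0, 0.0),
         drag=0.0, lifetime=2.0):
    """Advance every particle by dt seconds and drop the ones past their lifetime."""
    survivors = []
    for p in particles:
        # Acceleration: gravity plus a drag term pulling velocity toward the wind.
        accel = tuple(g + drag * (w - v)
                      for g, w, v in zip(gravity, wind, p.velocity))
        # Semi-implicit Euler: update velocity first, then move with the new velocity.
        velocity = tuple(v + a * dt for v, a in zip(p.velocity, accel))
        position = tuple(x + v * dt for x, v in zip(p.position, velocity))
        age = p.age + dt
        if age < lifetime:
            survivors.append(Particle(position, velocity, age))
    return survivors

particles = [Particle((0.0, 0.0, 0.0), (0.0, 5.0, 0.0))]
for _ in range(10):
    particles = step(particles, dt=0.1, wind=(2.0, 0.0, 0.0), drag=0.5)
print(particles[0].position)
```

Parameters such as `drag`, `wind`, and `lifetime` are the kind of controls an artist tunes; the differences between packages lie mostly in how many such forces exist and how well the solver scales.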

Q 6. Describe your process for creating believable simulations (e.g., fire, water, smoke).

Creating believable simulations, such as fire, water, and smoke, involves understanding the underlying physics and employing appropriate simulation techniques. My process starts with researching the physical properties of the element I’m simulating. For fire, for example, I would study how it reacts to wind, gravity, and fuel sources. This research helps me choose the right simulation software and parameters.

Next, I use specialized software, such as Houdini or RealFlow (for fluids), to set up the simulation. This involves defining parameters like density, viscosity, temperature, and interaction with other elements in the scene. I use iterative refinement, constantly adjusting parameters and observing the results until the simulation aligns with my vision.

Finally, I refine the simulation in compositing software. This stage might include adding details, improving color accuracy, and integrating the simulation into the final scene. For instance, I might add subtle glowing embers to a fire simulation or adjust the color and transparency of a smoke plume to create more visual appeal while maintaining realism.

It’s a very iterative process. I frequently adjust parameters, render tests, and compare them to reference material to achieve the desired level of realism. The goal is not just a technically correct simulation but also one that enhances the storytelling and complements the overall visual style of the project.

Q 7. How do you approach troubleshooting technical issues in a VFX pipeline?

Troubleshooting technical issues in a VFX pipeline requires a systematic approach. My first step is always to isolate the problem. I start by identifying the exact point of failure—is it in the modeling, rigging, animation, rendering, or compositing stage? This helps focus the troubleshooting efforts.

Next, I carefully examine the error messages or symptoms. Many times the software provides hints as to the root cause. I review logs and look for inconsistencies in the scene or workflow.

If the problem isn’t immediately obvious, I systematically check each step of the pipeline, looking for potential sources of error. This might involve testing different render settings, checking for data corruption, or ensuring that all software versions are compatible.

Collaboration is also crucial. I often consult with other artists or technical experts to get a fresh perspective and benefit from their experience. And finally, if all else fails, I document the issue thoroughly and look for solutions online, consult the software documentation, or seek assistance from vendor support.

A methodical and detailed approach ensures that problems are resolved efficiently, avoiding costly delays and ensuring the project remains on schedule.

Q 8. What are your preferred methods for creating realistic textures and materials?

Creating realistic textures and materials is paramount in VFX. My approach involves a multi-faceted strategy, combining procedural techniques with photogrammetry and hand-painting where necessary.

Procedural Techniques: I heavily utilize software like Substance Designer to create intricate textures based on algorithms. This allows for incredibly detailed and repeatable results, perfect for things like creating realistic wood grain, stone, or fabric. For example, I might use noise functions combined with various filters to simulate the subtle variations in a piece of weathered wood, then apply displacement maps to add depth and realism. This offers superior control and scalability compared to purely hand-painted textures.

Photogrammetry: For ultra-realistic results, I frequently employ photogrammetry. This process involves capturing hundreds of photographs of a real-world object from various angles, which are then processed using specialized software (like RealityCapture or Meshroom) to generate a 3D model complete with highly accurate textures. This is invaluable for creating detailed assets like rocks, plants, or even characters.

Hand-Painting: While procedural and photogrammetry are my go-to methods, hand-painting in programs like Mari or Photoshop often proves crucial for achieving fine details, subtle imperfections, or unique artistic styles. Imagine adding intricate scratches to a metal surface or hand-painting realistic dirt and grime – this level of artistry is hard to achieve without manual intervention. I often blend these techniques for optimal results.

Example: In a recent project involving a scene with a heavily worn leather jacket, I used Substance Designer to create a base leather texture, refined it with hand-painted details in Mari to add wear and tear, and then incorporated a photogrammetry scan of actual leather wrinkles for added realism.

Q 9. Describe your experience with matchmoving and camera tracking.

Matchmoving and camera tracking are crucial for seamlessly integrating CG elements into live-action footage. My experience spans various software packages, including PFTrack, Boujou, and SynthEyes. The process typically begins with analyzing the footage to identify key features and creating a 3D camera solve. This involves identifying and tracking points in the footage which correspond to real-world locations. This 3D camera data is then used to accurately position and move virtual objects within the scene, ensuring they align correctly with the live-action elements.

Challenges and Solutions: Challenges arise with difficult footage – rapid camera movement, lack of distinct features, or reflective surfaces can make tracking challenging. I overcome these by employing different tracking methods, adjusting tracking parameters, and sometimes even using multiple trackers simultaneously. When necessary, I will manually keyframe the camera movement to maintain accuracy and continuity.

Example: In a project requiring the integration of a digital spaceship into an aerial shot, careful matchmoving was paramount. I used PFTrack to track several identifiable landmarks in the background, resulting in a highly accurate camera solve, ensuring seamless integration of the spaceship without any jarring inconsistencies.
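
A useful way to explain what a camera solve is checking: each solved 3D point is projected back through the solved camera and compared with its 2D track. The Python sketch below shows that idea with an ideal pinhole camera. It is an illustrative assumption, not how PFTrack, Boujou, or SynthEyes are implemented; the function names are hypothetical, and lens distortion and camera rotation are left out.

```python
import math

def project(point, focal_px, principal):
    """Project a camera-space point (x, y, z), with z > 0 in front of the camera, to pixels."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point is behind the camera")
    cx, cy = principal
    return (focal_px * x / z + cx, focal_px * y / z + cy)

def rms_reprojection_error(points, tracks, focal_px, principal):
    """Root-mean-square pixel distance between projected points and 2D tracks."""
    total = 0.0
    for p, (u, v) in zip(points, tracks):
        pu, pv = project(p, focal_px, principal)
        total += (pu - u) ** 2 + (pv - v) ** 2
    return math.sqrt(total / len(points))

points = [(1.0, 0.5, 2.0), (-0.5, 0.25, 4.0)]
tracks = [(1460.0, 790.0), (836.0, 603.5)]
print(rms_reprojection_error(points, tracks, focal_px=1000.0, principal=(960.0, 540.0)))
```

A low error across the whole shot suggests the solved camera is consistent with the tracked features; a spike on particular frames usually points to a bad track or motion blur.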

Q 10. Explain your understanding of color grading and color spaces.

Color grading involves adjusting the color and tone of an image or video to achieve a specific look and feel, aligning with the overall artistic vision of the project. Understanding color spaces is fundamental to this process. Different color spaces represent colors in different ways, each with its own advantages and disadvantages.

Common Color Spaces: Some common color spaces include:

- Rec. 709: The standard color space for HDTV.

- ACES (Academy Color Encoding System): A color management framework built around wide-gamut, scene-referred color spaces, designed to preserve high dynamic range (HDR) imagery and a large color volume throughout the pipeline.

- sRGB: The standard color space for the internet and most computer monitors.

Color Grading Workflow: My typical workflow starts with choosing the appropriate color space. I then use tools like DaVinci Resolve or Autodesk Flame to adjust the color balance, contrast, saturation, and other parameters. I pay close attention to the overall mood and tone, ensuring the final product is visually appealing and consistent.

Importance of Color Management: Accurate color management is crucial to prevent color shifts during the post-production process. This involves managing and maintaining the color space consistently throughout the pipeline. If a shot is rendered in ACES but then displayed on a monitor using sRGB, it could appear significantly different – potentially washed out or oversaturated. Therefore, I must account for those differences, employing LUTs (Look-Up Tables) to translate between color spaces as needed.
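
One concrete piece of this is the difference between scene-linear values and display-encoded values. The Python sketch below implements the standard sRGB transfer function in both directions. It covers only the encoding curve for a single channel; converting between gamuts (for example from an ACES working space) or applying a tone-mapping view transform is a separate step that this sketch does not attempt.

```python
def linear_to_srgb(c):
    """Encode a linear-light channel value in [0, 1] with the sRGB transfer function."""
    c = min(max(c, 0.0), 1.0)
    if c <= 0.0031308:
        return 12.92 * c                      # linear toe near black
    return 1.055 * c ** (1.0 / 2.4) - 0.055   # power-law segment

def srgb_to_linear(v):
    """Decode an sRGB-encoded channel value in [0, 1] back to linear light."""
    v = min(max(v, 0.0), 1.0)
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4

mid_gray = 0.18                               # 18% scene-linear gray
encoded = linear_to_srgb(mid_gray)
print(encoded, srgb_to_linear(encoded))
```

Viewing linear data without this encoding makes an image look too dark and contrasty, while applying it twice makes it look washed out, which is the kind of shift consistent color management is meant to prevent.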

Q 11. How do you collaborate effectively with other members of a VFX team?

Effective collaboration is the backbone of successful VFX projects. My approach is built around clear communication, proactive problem-solving, and a deep understanding of each team member’s role.

Communication: I maintain open and consistent communication with the team, including directors, producers, supervisors, animators, modelers, and other artists. I actively participate in dailies, provide regular updates, and clearly articulate my needs and challenges. Utilizing project management software and shared cloud storage for assets helps enhance this collaboration.

Problem-Solving: When problems arise (and they inevitably do!), I approach them proactively, working collaboratively with team members to find optimal solutions. Rather than isolating problems, I advocate for open discussion to address them efficiently and effectively, often brainstorming alongside my colleagues.

Respect for Roles: I respect the expertise and contribution of each team member, recognizing that our individual skills complement each other to achieve a common goal. This understanding fosters mutual respect and facilitates efficient workflows.

Example: In one project, a conflict arose between the animation and lighting departments regarding character visibility. By facilitating communication between the leads, identifying the root cause, and suggesting a compromise, I managed to prevent delays and maintain the project’s timeline.

Q 12. What is your experience with version control systems (e.g., Git)?

Version control is indispensable in VFX. I have extensive experience using Git, both locally and through platforms like GitHub and Bitbucket. I understand branching strategies (like Gitflow), merging, conflict resolution, and commit best practices.

Workflow: My workflow usually involves creating branches for specific tasks, committing changes frequently with descriptive messages, and pushing updates to the remote repository. This ensures that all changes are tracked, easily reverted if necessary, and collaborative workflows are smooth. It allows for parallel development and reduces the risk of overwriting each other’s work.

Benefits: Git provides a robust system for managing the many iterations of assets in a typical VFX project. It’s crucial for tracking changes, managing revisions, collaborating with multiple artists, and ensuring that the project remains organized and manageable.

Example: During a particularly complex shot, I used Git to track numerous iterations of the lighting setup and camera movements. This allowed me to easily revert to an earlier version when a lighting change inadvertently affected other elements, saving significant time and effort.

Q 13. Describe your experience with rendering software (e.g., Arnold, RenderMan).

I have extensive experience with various rendering software packages, including Arnold, RenderMan, and V-Ray. My proficiency extends beyond merely operating these tools; I understand the underlying rendering principles and optimization techniques vital to efficient production.

Arnold: I’m highly proficient in Arnold’s physically-based rendering engine, utilizing its strengths in handling complex scenes and producing high-quality images. I often leverage its procedural shaders for creating intricate materials and its advanced features for creating realistic effects such as subsurface scattering and global illumination.

RenderMan: RenderMan’s strengths lie in its versatility and ability to handle massive datasets. My understanding of its shader language allows me to create custom shaders tailored to specific needs. I’ve used it on projects demanding photorealistic imagery.

V-Ray: V-Ray’s ease of use and extensive plugin support have made it invaluable for many projects, particularly in architectural visualization. I’m comfortable utilizing its features for efficient rendering of large-scale models and scenes.

Choice of Renderer: The choice of renderer depends on the specific project’s requirements; some projects necessitate the realism of Arnold, while others benefit from the flexibility of V-Ray. I’m comfortable selecting and using the most appropriate rendering engine for each project.

Q 14. How do you optimize scenes for faster rendering times?

Optimizing scenes for faster rendering times is crucial for efficient VFX production. My strategies are multi-pronged, focusing on both scene complexity and rendering settings.

Scene Optimization:

- Geometry Reduction: Simplifying models by reducing polygon count, using level of detail (LOD) systems, or employing proxies can dramatically improve rendering speed without significantly compromising visual quality.

- Texture Optimization: Using renderer-friendly texture files (tiled and mipmapped, which formats such as OpenEXR support) and optimizing texture resolutions can significantly reduce memory usage and rendering time. I often use smaller, lower-resolution textures where the level of detail is not critical.

- Light Optimization: Reducing the number of lights, strategically placing lights, using light linking, and utilizing light portals can vastly enhance rendering speed. Unnecessary lights are a common performance bottleneck.

- Culling and Occlusion: Employing techniques to cull invisible geometry and utilize occlusion culling can significantly reduce the amount of geometry the renderer needs to process.

Rendering Settings Optimization:

- Sample Reduction: While higher sample counts produce cleaner images, reducing sample counts (while maintaining acceptable noise levels) can improve rendering times significantly. Adaptive sampling often provides the best balance between speed and quality.

- Ray Depth: Lowering ray bounce depth (especially in global illumination) will accelerate rendering while potentially reducing some subtle light reflections and refractions.

- Render Passes: Only render the necessary passes. Avoid rendering unnecessarily high-resolution passes.

Example: In a large-scale outdoor scene, I used LODs to render highly detailed models only when close to the camera. I also reduced the number of lights and optimized textures to reduce rendering times from several hours down to approximately 30 minutes, maintaining a high quality of results.
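
To show why adaptive sampling saves time, here is a minimal Python sketch of the idea for a single pixel: keep sampling until the estimated noise falls below a tolerance, so flat regions stop early and noisy regions get more samples. This is a simplified assumption of how such a scheme can work, not the algorithm of Arnold, RenderMan, or V-Ray. `adaptive_pixel` and its parameters are hypothetical names, and `min_samples` must be at least 2.

```python
import math
import random

def adaptive_pixel(sample_fn, min_samples=16, max_samples=1024, tolerance=0.01):
    """Average samples until the standard error of the mean drops below tolerance.

    Returns (mean, samples_used).
    """
    n = 0
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations (Welford's algorithm)
    while n < max_samples:
        x = sample_fn()
        n += 1
        delta = x - mean
        mean += delta / n
        m2 += delta * (x - mean)
        if n >= min_samples:
            std_error = math.sqrt(m2 / (n - 1) / n)
            if std_error <= tolerance:
                break
    return mean, n

rng = random.Random(1)
flat = adaptive_pixel(lambda: 0.5)            # noise-free region of the image
noisy = adaptive_pixel(lambda: rng.random())  # high-variance region
print(flat, noisy)
```

The flat pixel stops at the minimum sample count while the noisy one keeps going, so the render budget is spent where the noise actually is.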

Q 15. What are some common challenges in creating realistic digital characters?

Creating realistic digital characters is a complex process fraught with challenges. The uncanny valley – that unsettling feeling when a character looks almost human but not quite – is a major hurdle. This often arises from subtle inconsistencies in details like skin texture, subtle muscle movements, and realistic eye rendering. Another significant challenge lies in accurately simulating the interplay of light and shadow on a character’s surface, especially considering factors like subsurface scattering (how light penetrates and scatters beneath the skin). Furthermore, creating believable hair and clothing that interacts realistically with the character’s movements and the environment is incredibly demanding, often requiring advanced simulation techniques.

For instance, achieving realistic skin requires meticulous texturing and the use of advanced shaders that can simulate pores, blemishes, and the subtle variations in skin tone and reflectivity. Similarly, simulating realistic hair necessitates sophisticated physics simulations and rendering techniques to prevent it from looking stiff or unnatural. The challenge is not just in creating the individual components but in seamlessly integrating them to create a cohesive, believable whole.

Q 16. How do you maintain consistency in visual style across a project?

Maintaining visual consistency throughout a project is crucial for believability and immersion. This requires a comprehensive approach starting from the pre-production phase. Establishing a detailed style guide is essential, defining color palettes, lighting styles, character designs, and texture details. This guide serves as a reference point for the entire team, including modelers, texture artists, lighters, and compositors. Regular review meetings and shared resources like texture libraries and lighting setups further reinforce consistency.

A practical example is in film VFX. Let’s say we are creating a fantasy film. We need to maintain a consistent look for magical effects. We would define a specific color scheme (e.g., blues and purples for ice magic, fiery oranges and reds for fire magic) and particle effect styles to ensure that all magic spells in the film share a recognizable visual identity. This requires strict adherence to the style guide and close collaboration between artists throughout the production pipeline.

Q 17. Describe your experience with creating believable crowd simulations.

Creating believable crowd simulations involves more than just populating a scene with numerous characters. It requires careful consideration of individual character behaviors, group dynamics, and realistic interactions with the environment. Software like Massive, Golaem, or Houdini’s crowd tools allows for the creation of complex crowd simulations, where individual agents are given parameters governing their movement, interactions, and reactions. Key factors include pathfinding, collision avoidance, realistic animation, and variations in character behaviors. Simply using repeated animation loops won’t work; the illusion of a crowd requires a high degree of randomness and unpredictability within a structured framework.

In one project, I worked on simulating a large, bustling marketplace scene. We used procedural generation to create variations in character models and behaviors. We also incorporated realistic reactions to environmental stimuli – for example, characters stepping aside to avoid obstacles or reacting to events happening around them. This ensured the crowd felt natural and responsive, adding depth and realism to the scene.

Q 18. Explain your understanding of different compositing techniques.

Compositing is the art of combining multiple visual elements into a single image or sequence. Several key techniques exist. Keying involves isolating an element from its background, usually using color information (chroma keying) or brightness (luma keying). Tracking uses motion analysis to align elements accurately across multiple frames. Rotoscoping is a more labor-intensive technique involving manually outlining elements frame by frame. Color correction and grading involve adjusting color balance, contrast, and saturation to match different elements and create a cohesive look. Finally, advanced techniques like depth compositing utilize depth information to create realistic layering and blurring effects.

For example, in compositing a spaceship flying through a nebula, I’d use keying to separate the spaceship model from its background, tracking to maintain its position relative to the moving nebula, and color correction to ensure the spaceship’s lighting is consistent with the nebula’s colors. Depth compositing would then allow the nebula to be blurred appropriately behind the spaceship, enhancing the sense of depth and realism.
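
Underneath all of these techniques sits one basic operation: layering a foreground over a background using its alpha channel. The Python sketch below shows the standard "over" operation for a single pixel, assuming premultiplied RGBA values (color already multiplied by alpha). The function name `over` is just an illustrative choice.

```python
def over(fg, bg):
    """Composite premultiplied RGBA pixel fg over premultiplied RGBA pixel bg."""
    fr, fgreen, fb, fa = fg
    br, bgreen, bb, ba = bg
    k = 1.0 - fa  # how much of the background shows through the foreground
    return (fr + br * k, fgreen + bgreen * k, fb + bb * k, fa + ba * k)

# A 50%-opaque red element (premultiplied) over an opaque blue background.
print(over((0.5, 0.0, 0.0, 0.5), (0.0, 0.0, 1.0, 1.0)))
```

A key or a roto shape is ultimately a way of producing the foreground alpha that this operation consumes, which is why edge quality in the matte shows up directly in the final composite.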

Q 19. How do you approach creating convincing effects that blend seamlessly with live-action footage?

Creating convincing effects that blend seamlessly with live-action footage demands careful planning and execution. Prior to shooting, pre-visualization (previs) helps determine camera angles, lighting, and the overall integration of the effects into the scene. During the shoot, careful attention must be paid to lighting and camera matching, ensuring that the virtual elements are lit and photographed consistently with the live-action components. Accurate camera tracking and matchmoving are crucial to ensure that the virtual elements are correctly positioned and animated in relation to the live-action environment. Finally, the compositing process involves careful color correction and grading to harmonize the visual styles.

Imagine adding a digital dragon to a live-action landscape. We would need to meticulously match the lighting and shadows on the dragon model to those in the live-action scene. The dragon’s scale and reflectivity must be subtly matched to the surrounding rocks, trees and sky, to ensure a natural appearance. This ensures a believable integration and enhances immersion.

Q 20. What is your experience with different types of shaders?

Shaders are programs that determine how surfaces appear on screen, controlling properties like color, reflectivity, roughness, and transparency. Different shader types cater to specific visual needs. Diffuse shaders simulate simple surface reflection. Specular shaders handle reflective highlights. Subsurface scattering shaders model how light penetrates and scatters under the surface (crucial for skin and other translucent materials). Principled BSDF shaders offer a versatile framework unifying many common surface properties. Volume shaders are used for creating fog, smoke, and other volumetric effects.

For example, a realistic skin shader might combine diffuse, specular, and subsurface scattering components to accurately represent skin’s complex interaction with light. The choice of shader depends entirely on the specific visual requirements of the scene and the desired level of realism.
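
As a small illustration of how diffuse and specular components combine, here is a Python sketch of a classic Lambert diffuse term plus a Blinn-Phong specular highlight, returning a single intensity value. This is a deliberately simple, non-physically-based model chosen for clarity; it is not how a Principled BSDF or a production skin shader is built, and the function and parameter names are hypothetical.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        return v  # leave a zero vector unchanged rather than divide by zero
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def shade(normal, to_light, to_view, albedo, specular_strength=0.5, shininess=32.0):
    """Return Lambert diffuse plus Blinn-Phong specular intensity for one light."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_view)
    n_dot_l = max(dot(n, l), 0.0)
    diffuse = albedo * n_dot_l
    if n_dot_l == 0.0:
        return diffuse  # surface faces away from the light: no highlight either
    half = normalize(tuple(a + b for a, b in zip(l, v)))  # half vector
    specular = specular_strength * max(dot(n, half), 0.0) ** shininess
    return diffuse + specular

print(shade((0, 0, 1), (0, 1, 1), (0, 0, 1), albedo=0.8))
```

Raising `shininess` tightens the highlight, which is a rough stand-in for the roughness control found in physically based shaders.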

Q 21. Describe your experience with creating believable facial animation.

Creating believable facial animation is among the most challenging aspects of visual effects. It involves a deep understanding of human anatomy, facial musculature, and the subtle nuances of expression. Techniques range from traditional keyframing to advanced performance capture and procedural animation. Performance capture uses motion capture data from actors’ facial performances to drive the animation, but often requires substantial clean-up and refinement. Blendshapes are another key technique; they use morph targets to blend between different facial expressions. Advanced techniques like muscle simulation offer even greater control and realism but require significant computing power.

For example, in animating a character’s emotional response, I might blend different blendshapes to create a subtle transition between neutral, surprise, and joy, making sure these nuances are appropriate to the context of the scene and character’s personality. Maintaining natural timing and flow is paramount for believability. Excessive or unnatural movements can quickly break the illusion.
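
The blendshape idea can be sketched in a few lines of Python: each target stores the same vertices as the neutral face in a different pose, and the final position is the neutral position plus a weighted sum of each target's offset. The two-vertex "mesh" and the target names below are made up purely for illustration.

```python
def blend(base, targets, weights):
    """Return base vertices offset by the weighted deltas of each blendshape target.

    base: list of (x, y, z); targets: {name: list of (x, y, z)}; weights: {name: float}.
    """
    result = [list(v) for v in base]
    for name, w in weights.items():
        target = targets[name]
        for i, (b, t) in enumerate(zip(base, target)):
            for axis in range(3):
                result[i][axis] += w * (t[axis] - b[axis])
    return [tuple(v) for v in result]

neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = {
    "smile":    [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)],
    "surprise": [(0.0, 0.0, 2.0), (1.0, 0.0, 0.0)],
}
print(blend(neutral, targets, {"smile": 0.5, "surprise": 0.25}))
```

Animating a transition between expressions then amounts to keyframing the weights over time rather than moving vertices directly.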

Q 22. How do you optimize assets for game engines?

Optimizing assets for game engines is crucial for performance and visual fidelity. It involves a multi-pronged approach focusing on reducing polygon count, optimizing textures, and minimizing draw calls. Think of it like decluttering your house – you want to keep only what’s essential while ensuring it’s organized efficiently.

- Polygon Reduction: High-polygon models are visually rich but demand significant processing power. Techniques like decimation (reducing the number of polygons while preserving the overall shape) and level of detail (LOD) systems (using lower-poly models at greater distances) are critical. For example, a character model might have a high-poly version for close-ups and several progressively lower-poly versions for further distances.

- Texture Optimization: Textures are the ‘skin’ of your 3D models. Large, high-resolution textures consume considerable memory. Optimizations include using smaller textures where appropriate, compressing textures (e.g., using DXT or BC formats), and using texture atlases (combining multiple smaller textures into a single larger texture to reduce draw calls).

- Draw Call Reduction: A draw call is a command issued by the game engine to render an object. Minimizing these calls drastically improves performance. Techniques include instancing (rendering multiple identical objects with a single draw call), batching (grouping objects to render together), and optimizing your scene hierarchy.

- Material Optimization: Materials define the visual properties of your assets. Using efficient shaders (programs that determine how objects are rendered) is crucial. Simple shaders consume less processing power, particularly important for mobile games or low-end hardware.

For instance, in a project I worked on involving a large city environment, we implemented LOD systems for buildings and vehicles, significantly reducing the draw call count and improving frame rates, especially at longer view distances.
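
The LOD selection logic itself is simple and can be sketched in Python as a lookup against distance thresholds. This is an engine-agnostic illustration; the threshold values and mesh names are invented, and real engines typically switch on projected screen size rather than raw distance and add hysteresis to avoid popping.

```python
from bisect import bisect_right

def select_lod(distance, thresholds):
    """Return the LOD index for a camera distance.

    thresholds is a sorted list of switch distances; index 0 is the most detailed
    mesh, and anything at or beyond the last threshold gets the coarsest mesh.
    """
    return bisect_right(thresholds, distance)

lod_meshes = ["building_lod0", "building_lod1", "building_lod2", "building_lod3"]
thresholds = [10.0, 30.0, 80.0]  # one fewer threshold than meshes

for d in (5.0, 25.0, 50.0, 200.0):
    print(d, lod_meshes[select_lod(d, thresholds)])
```

Keeping the thresholds as data rather than code makes them easy to tune per asset or per platform.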

Q 23. What are your preferred techniques for creating realistic hair and fur?

Creating realistic hair and fur is a complex undertaking, requiring a blend of artistic skill and technical know-how. My preferred techniques center around using advanced hair and fur simulation software coupled with grooming tools. Imagine trying to style a real-life wig – it takes precision and patience!

- Procedural Generation: Tools like XGen (Maya) or HairFX (3ds Max) allow for the procedural generation of hair and fur strands. This approach allows for a high degree of control over density, length, and styling, all while being very efficient for animation.

- Simulation: Physically-based simulation adds realism by modeling how hair interacts with wind, gravity, and character movement, so that it flows naturally in the wind or reacts believably to a character’s head movements.

- Grooming: This is the artistic process of styling and shaping the generated hair/fur. It involves comb tools, guide curves, and other techniques to achieve the desired look. Think of it as a virtual hairdresser perfecting the hairstyle.

- Rendering: Shaders that model how light scatters through and reflects off individual strands are important for rendering realistic hair and fur; most renderers provide dedicated hair shading models for this purpose.

In one project, I used XGen to create a realistic lion mane, combining wind simulation with combing techniques for natural-looking movement and styling. The result gave the mane a lively quality, and it reacted believably to the character’s actions and environment.
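To make the simulation step more concrete, here is a toy Python sketch of a single strand. It is not how XGen or any production solver works: it assumes a 2D strand pinned at the root, Verlet integration, wind treated as a constant acceleration, and a simple root-to-tip length constraint. All parameter values and the name `simulate_strand` are illustrative assumptions.

```python
import math


def simulate_strand(num_points=8, segment=0.1, steps=600, dt=1.0 / 60.0,
                    gravity=(0.0, -9.81), wind=(2.0, 0.0), damping=0.98):
    """Simulate one 2D hair strand pinned at its root and return its points."""
    # Start with the strand hanging straight down from the root at the origin.
    pos = [(0.0, -i * segment) for i in range(num_points)]
    prev = list(pos)
    # Wind is modelled as a constant extra acceleration (a deliberate simplification).
    ax, ay = gravity[0] + wind[0], gravity[1] + wind[1]

    for _ in range(steps):
        # Verlet step: carry over the previous motion, then add acceleration.
        for i in range(1, num_points):  # index 0 is the pinned root
            x, y = pos[i]
            px, py = prev[i]
            prev[i] = (x, y)
            pos[i] = (x + (x - px) * damping + ax * dt * dt,
                      y + (y - py) * damping + ay * dt * dt)
        # Length constraint, root to tip: keep each point one segment from its parent.
        for i in range(1, num_points):
            x0, y0 = pos[i - 1]
            dx, dy = pos[i][0] - x0, pos[i][1] - y0
            dist = math.hypot(dx, dy)
            if dist > 0.0:
                scale = segment / dist
                pos[i] = (x0 + dx * scale, y0 + dy * scale)
    return pos


if __name__ == "__main__":
    tip_x, tip_y = simulate_strand()[-1]
    print(f"strand tip after settling: x={tip_x:.3f}, y={tip_y:.3f}")
```

With these settings the strand settles leaning downwind instead of hanging straight down. Production grooms simulate a sparse set of guide curves in 3D with collisions and stiffness, then interpolate the remaining strands from those guides.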

Q 24. Describe your experience with virtual production workflows.

My experience with virtual production workflows encompasses the entire pipeline from pre-visualization to final compositing. It’s like filming a movie on a virtual set – but with much greater control and flexibility.

- Pre-visualization: Utilizing software like Unreal Engine or Unity to create virtual sets and camera paths beforehand greatly helps with planning and visualization.

- On-set Virtual Production: Working with LED walls, motion capture, and real-time rendering during filming to provide actors with immediate feedback in the virtual environment. This improves performance and allows for better creative decisions.

- Post-production: Integrating the virtual elements with live-action footage, requiring meticulous tracking, compositing, and rotoscoping techniques to create seamless blends.

In a recent project, we used an LED wall to display a realistic cityscape behind the actors. A real-time engine combined with camera tracking kept the background perspective matched to the camera, which let the actors interact convincingly with the virtual environment and saved significant post-production time and cost.

Q 25. What is your understanding of different camera projection methods?

Different camera projection methods dictate how 3D geometry is mapped onto a 2D screen. The choice depends on the desired effect and the characteristics of the lens used. Think of it like choosing a lens for a camera – each provides a unique perspective.

- Perspective Projection: This is the most common method, mimicking how the human eye perceives depth. Objects farther from the camera appear smaller. It is the default for nearly all film and game rendering.

- Orthographic Projection: This method ignores perspective: parallel lines remain parallel and objects keep the same size regardless of distance, resulting in a flat projection without foreshortening. It’s often used for technical drawings, blueprints, or situations where true proportions must be preserved.

- Fisheye Projection: This extreme wide-angle projection introduces strong barrel distortion, bending straight lines into curves in exchange for a very wide field of view, often around 180 degrees.

Understanding these differences is vital for accurate camera matching and creating realistic visuals. For example, a perspective projection is usually preferred for creating a sense of realism in a film, while an orthographic projection might be used to create a technical schematic or a map.
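The three projections can be compared side by side with a short Python sketch. It assumes a camera at the origin looking along the +z axis, and it uses the equidistant model for the fisheye case, which is only one of several fisheye mappings. The function names are illustrative.

```python
import math


def perspective(point, focal_length=1.0):
    """Pinhole projection: divide by depth, so distant objects shrink."""
    x, y, z = point
    return (focal_length * x / z, focal_length * y / z)


def orthographic(point):
    """Drop the depth coordinate, so size is independent of distance."""
    x, y, _ = point
    return (x, y)


def fisheye_equidistant(point, focal_length=1.0):
    """Equidistant fisheye: image radius is proportional to the off-axis angle."""
    x, y, z = point
    r_xy = math.hypot(x, y)
    if r_xy == 0.0:
        return (0.0, 0.0)  # point lies on the optical axis
    theta = math.atan2(r_xy, z)  # angle between the ray and the optical axis
    r = focal_length * theta
    return (r * x / r_xy, r * y / r_xy)


if __name__ == "__main__":
    near, far = (1.0, 1.0, 2.0), (1.0, 1.0, 4.0)
    print("perspective: ", perspective(near), perspective(far))
    print("orthographic:", orthographic(near), orthographic(far))
    # A point 90 degrees off-axis (z = 0) breaks the pinhole model,
    # but the fisheye mapping still places it at a finite position.
    print("fisheye:     ", fisheye_equidistant((1.0, 0.0, 0.0)))
```

Doubling the depth halves the perspective coordinates but leaves the orthographic ones unchanged, which is exactly the difference described above.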

Q 26. How do you manage your time effectively when working on multiple projects simultaneously?

Managing time effectively across multiple projects requires a robust organizational system and disciplined approach. Think of it like conducting an orchestra – each section needs attention and coordination.

- Prioritization: Identifying the most urgent and critical tasks across projects is paramount. Using tools like a Kanban board or a prioritized task list helps.

- Time Blocking: Allocating specific time blocks for each project ensures focused work. This minimizes context switching and boosts efficiency.

- Communication: Maintaining clear communication with clients and team members is crucial to avoid delays and conflicts.

- Delegation: If possible, delegate tasks to team members to manage workload effectively.

I use project management software to track progress and deadlines and to allocate resources effectively. This keeps all projects moving forward and on schedule.

Q 27. Explain your experience with motion capture data and its application in VFX.

Motion capture (mocap) data is instrumental in VFX, providing realistic character animation and movement. It is like creating a skeleton for your character’s movement.

- Data Acquisition: Mocap involves capturing an actor’s movement using various technologies, such as optical markers, inertial sensors, or even specialized suits.

- Data Processing: The captured data needs to be cleaned and then retargeted to fit the digital character’s skeletal structure. Cleaning removes noise and smooths out inconsistencies; retargeting maps the motion onto a character whose proportions may differ from the actor’s.

- Animation Integration: The processed mocap data is used to drive the character’s animation in 3D software, providing realistic and nuanced movement.

In one project, we used mocap to animate a complex fight scene. Capturing the actors’ performance in a mocap suit and then applying it to our digital characters yielded significantly more realistic and engaging results compared to traditional keyframing techniques.
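As a small illustration of the cleaning step, the Python sketch below smooths one noisy animation channel (for example, a joint angle sampled once per frame) with a centred moving average. This is a deliberately simple stand-in: production tools typically use more sophisticated filters, and the function name and sample values here are assumptions.

```python
def moving_average(samples, window=5):
    """Smooth a list of per-frame values with a centred moving average."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    smoothed = []
    for i in range(len(samples)):
        # Shrink the window at the start and end of the clip.
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        smoothed.append(sum(samples[lo:hi]) / (hi - lo))
    return smoothed


if __name__ == "__main__":
    # Hypothetical elbow angle in degrees with a one-frame spike at index 3.
    elbow_angle = [30.0, 31.0, 32.0, 55.0, 34.0, 35.0, 36.0]
    print(moving_average(elbow_angle, window=3))
```

A wider window removes more jitter but also softens fast, intentional motion such as impacts in a fight scene, so the window size is a trade-off the artist has to judge per shot.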

Q 28. Describe your understanding of the VFX pipeline from pre-production to final delivery.

The VFX pipeline is a complex, multi-stage process, from initial concept to final delivery. Think of it like a relay race – each stage depends on the previous one.

- Pre-production: This involves concept art, storyboarding, and planning. It’s like the blueprint for the entire process.

- Production: This is where the assets are created: 3D modeling, texturing, rigging, animation, and lighting. This is the construction phase.

- Post-production: This includes compositing (combining different elements), visual effects, color correction, and final rendering. This is where all the pieces come together.

- Delivery: Delivering the final product to the client, in the required format and resolution.

A clear understanding of each stage is essential for efficient workflow and project management. In my work, I consistently ensure each step is properly documented and reviewed, guaranteeing seamless transition between departments and minimizing potential errors or delays.

Key Topics to Learn for Your Special Effects Interview

- 3D Modeling & Animation: Understand the principles of 3D modeling software (e.g., Maya, Blender, 3ds Max), character animation techniques, and rigging. Be prepared to discuss your experience with different modeling workflows and your approach to problem-solving during the animation process.

- VFX Compositing: Showcase your knowledge of compositing software (e.g., Nuke, After Effects), keying techniques, rotoscoping, and color correction. Discuss your experience in integrating CGI elements seamlessly into live-action footage.

- Simulation & Dynamics: Explain your understanding of fluid simulation, particle effects, rigid body dynamics, and cloth simulation. Be ready to discuss how you’ve utilized these techniques to create realistic and engaging effects in your previous projects.

- Lighting & Rendering: Demonstrate familiarity with lighting techniques (e.g., global illumination, ray tracing) and rendering processes. Be prepared to discuss different render engines and your approach to optimizing render times while maintaining quality.

- Texture & Material Creation: Explain your expertise in creating realistic and stylized textures and materials using software such as Substance Painter or Mari. Be prepared to discuss your workflow and techniques for achieving specific surface properties.

- Pipeline & Workflow: Discuss your understanding of the overall VFX pipeline, from asset creation to final compositing. Highlight your experience with different project management methodologies and your ability to collaborate effectively within a team.

- Problem-Solving & Technical Proficiency: Be prepared to discuss troubleshooting techniques, overcoming technical challenges, and optimizing performance within your chosen software.

Next Steps

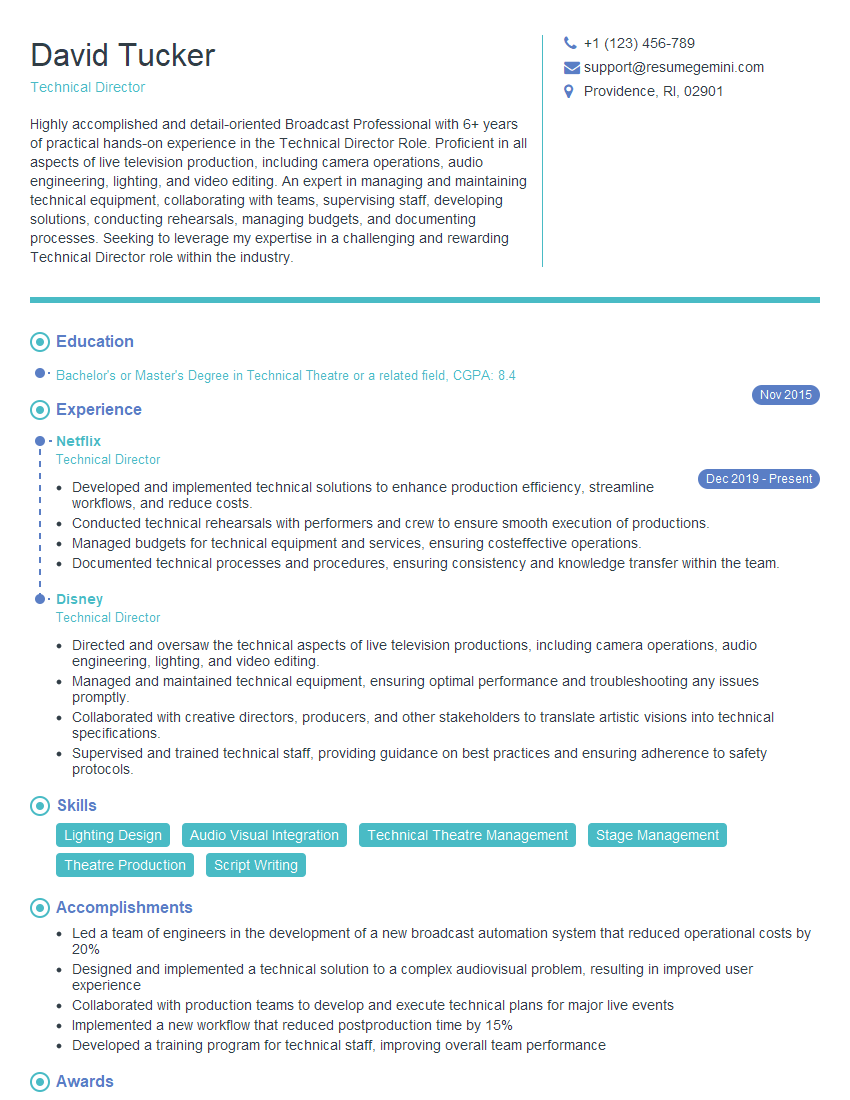

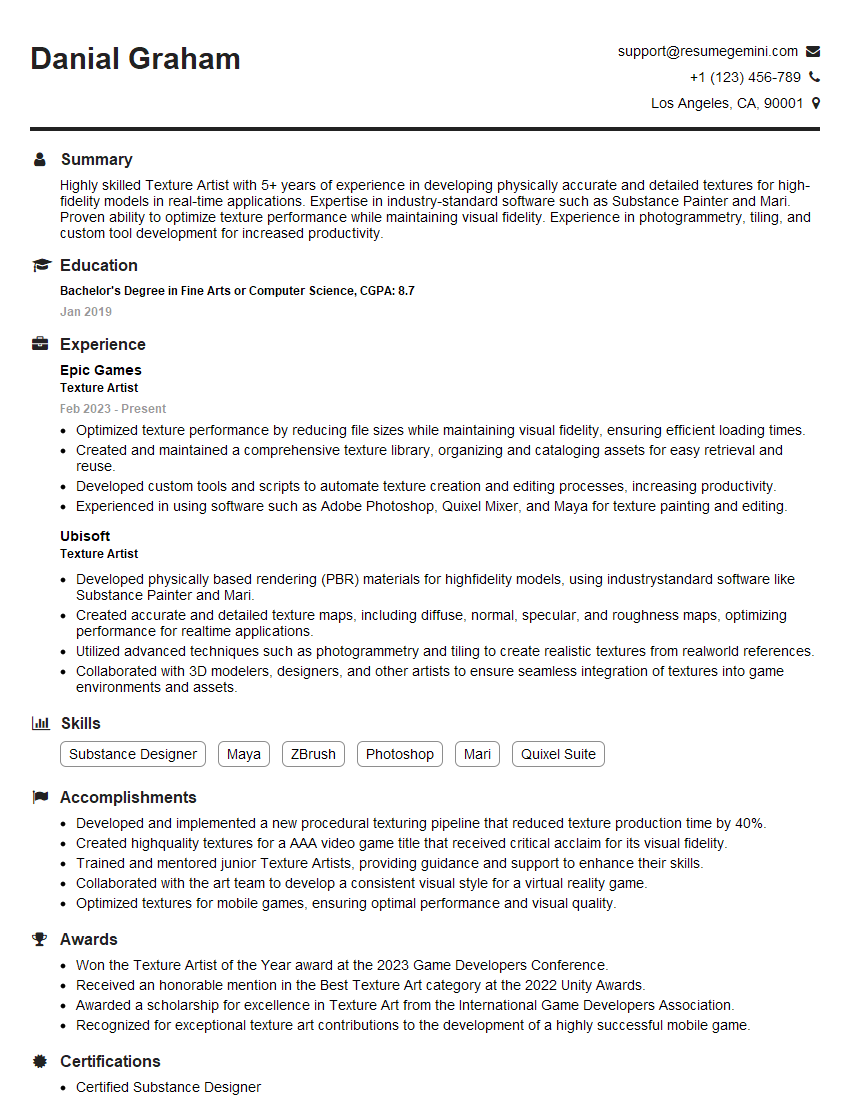

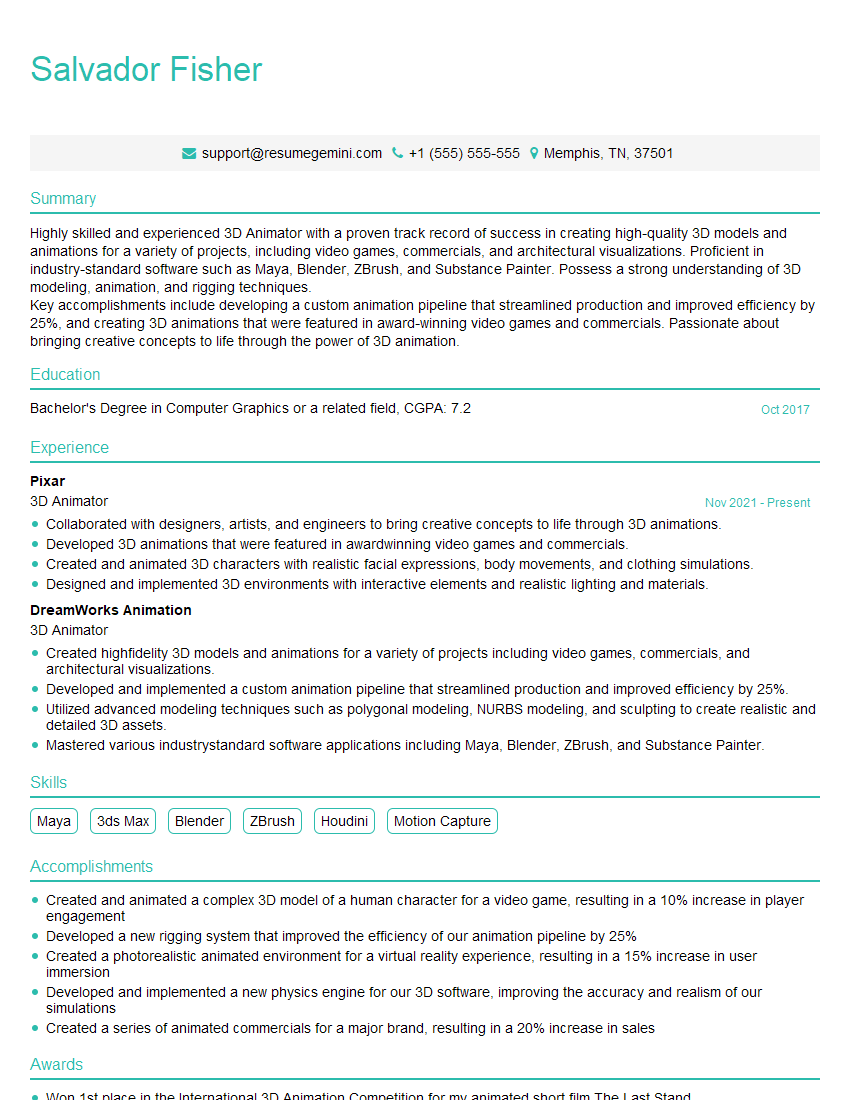

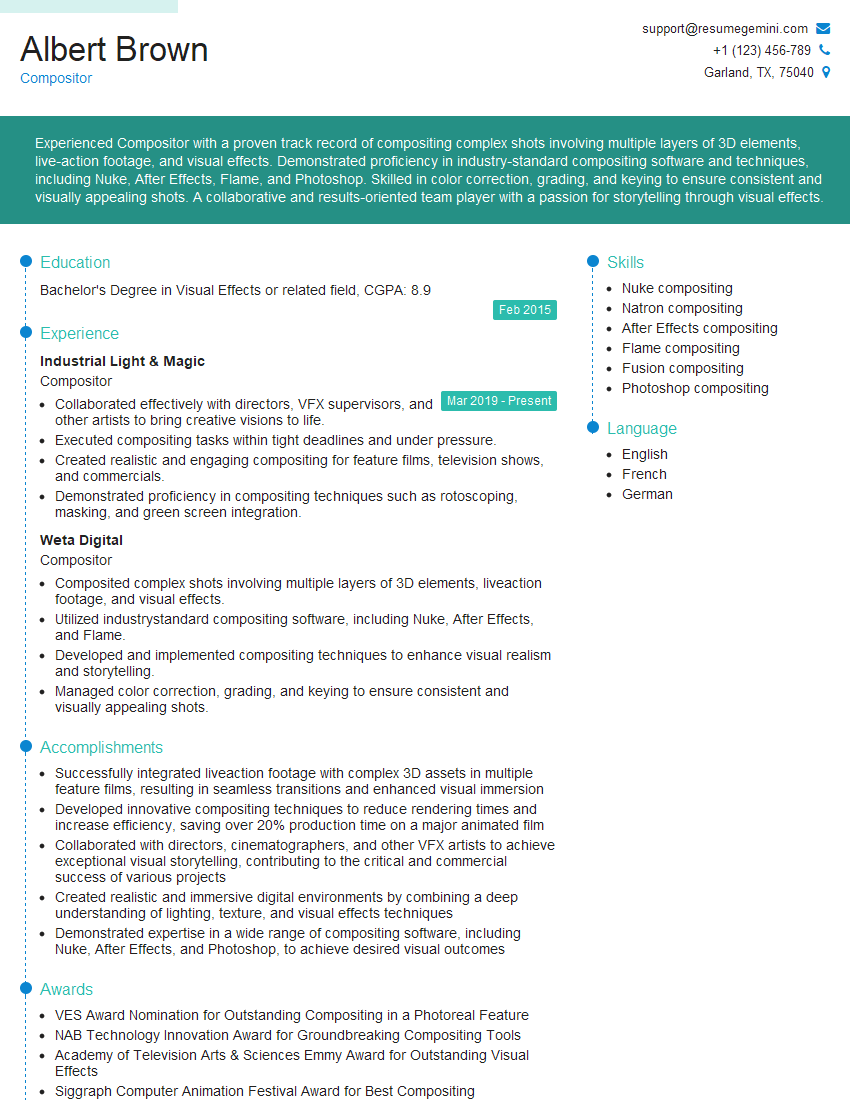

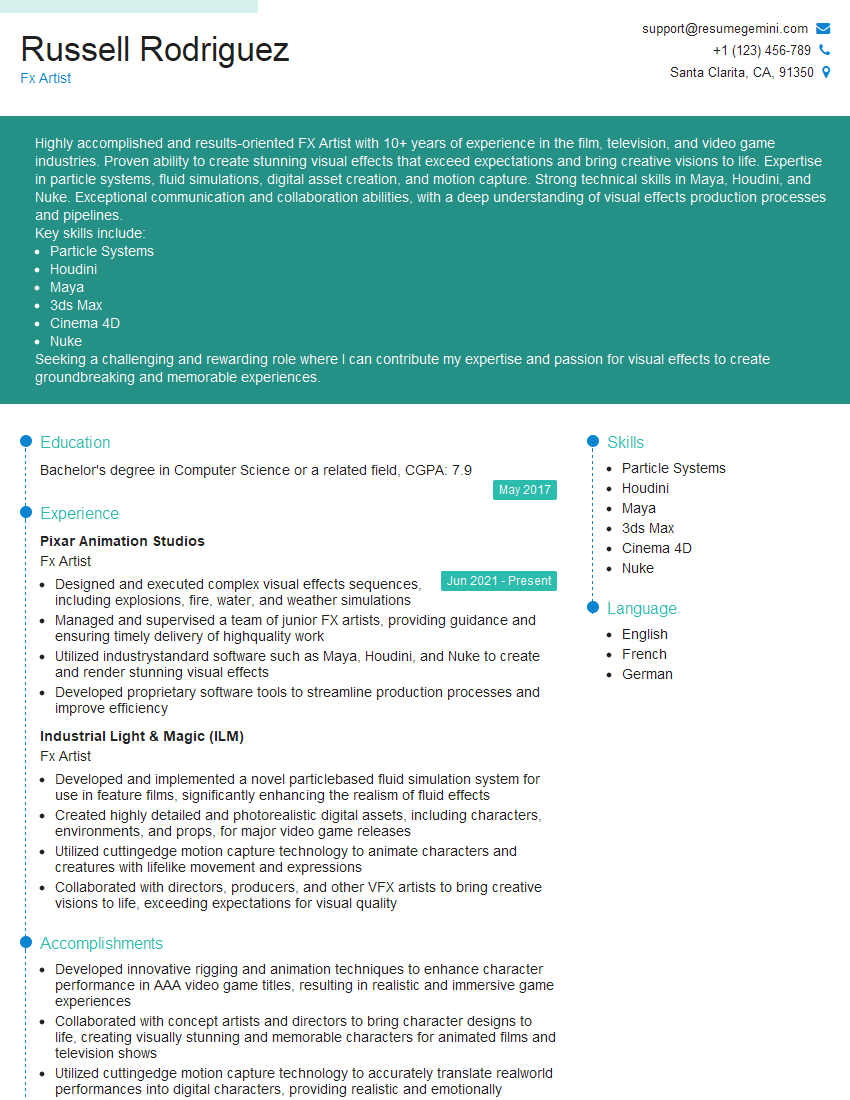

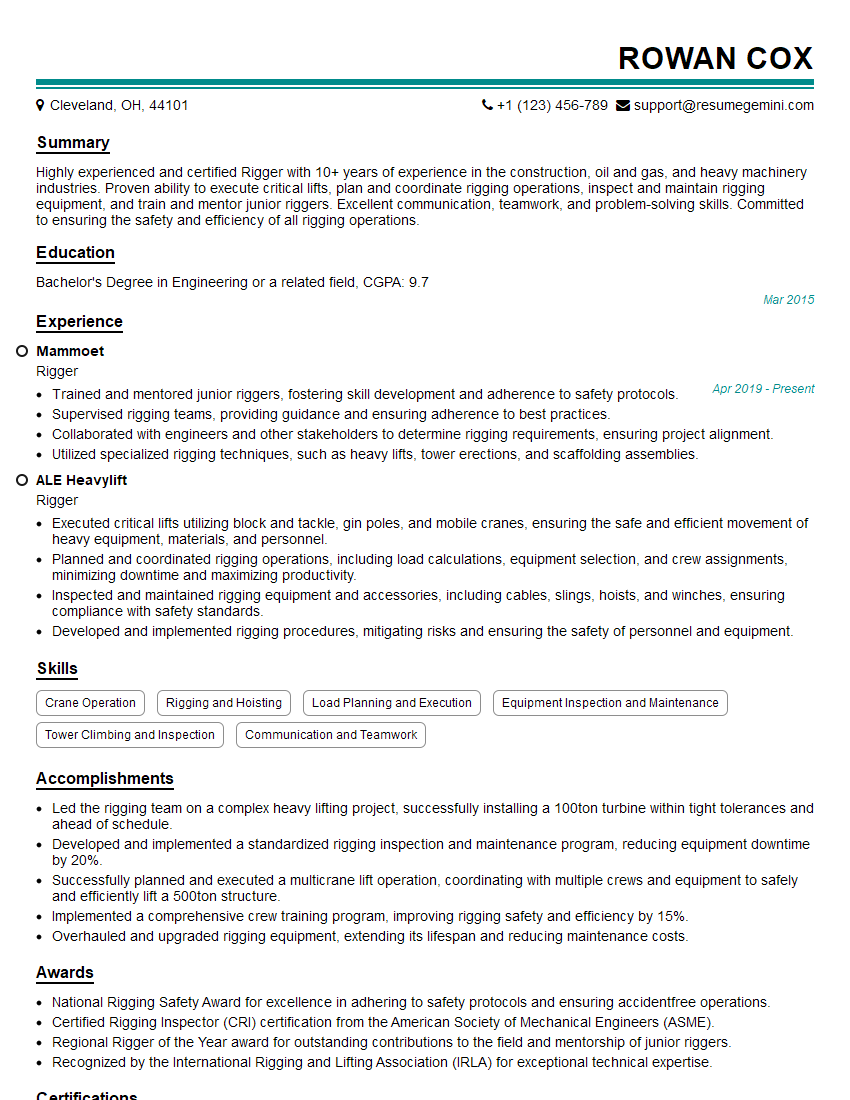

Mastering Special Effects opens doors to exciting and rewarding careers in film, television, gaming, and beyond. To maximize your job prospects, a strong, ATS-friendly resume is crucial. ResumeGemini is a trusted resource that can help you craft a compelling resume that highlights your skills and experience effectively. ResumeGemini provides examples of resumes specifically tailored to the Special Effects industry to help guide you in showcasing your unique qualifications. Take the next step towards your dream career – create a standout resume with ResumeGemini today.
