Cracking a skill-specific interview, like one for System Design and Optimization, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in System Design and Optimization Interview

Q 1. Explain the CAP theorem and its implications for system design.

The CAP theorem, also known as Brewer’s theorem, is a fundamental constraint on distributed data stores. It states that a distributed data store can provide at most two of the following three guarantees simultaneously: Consistency, Availability, and Partition tolerance.

- Consistency: All nodes see the same data at the same time. Every read receives the most recent write or an error.

- Availability: Every request receives a non-error response, without a guarantee that it contains the most recent write.

- Partition tolerance: The system continues to operate despite arbitrary message loss or network failures between nodes.

In practice, partition tolerance is almost always a necessity for a robust distributed system, because network partitions are a fact of life. The real choice therefore arises during a partition: the system must give up either Consistency or Availability. A system prioritizing CP (Consistency and Partition tolerance) will ensure data integrity even during network issues, but may temporarily be unavailable. A system prioritizing AP (Availability and Partition tolerance) remains available even during partitions, but might present inconsistent data to different clients until the partition is resolved. Choosing the right balance depends heavily on the application’s needs. For example, a banking system would prioritize CP for data integrity, while a social media feed might prioritize AP to ensure users can always see (potentially slightly outdated) information.

Q 2. Describe different database architectures (SQL, NoSQL) and their use cases.

Database architectures are broadly categorized into SQL (Relational) and NoSQL (Non-Relational) databases. The choice depends greatly on the application’s requirements.

- SQL Databases (e.g., MySQL, PostgreSQL, SQL Server): These databases organize data into tables with rows and columns, enforcing relationships between tables. They are excellent for structured data, provide ACID properties (Atomicity, Consistency, Isolation, Durability) that guarantee transaction integrity, and offer powerful querying capabilities through SQL. Use cases include applications requiring strong consistency, complex relationships between data, and transactional integrity, such as banking systems, ERP systems, and e-commerce platforms managing inventory and orders.

- NoSQL Databases: These databases are more flexible and scalable, designed for handling large volumes of unstructured or semi-structured data. They typically prioritize availability over strict consistency. Various types exist:

- Key-value stores (e.g., Redis, Memcached): Simple, fast, and ideal for caching and session management.

- Document databases (e.g., MongoDB): Store data in JSON-like documents, suitable for applications with flexible schemas, like content management systems.

- Column-family stores (e.g., Cassandra): Optimized for handling massive datasets and high-throughput writes, suitable for large-scale analytics and time-series data.

- Graph databases (e.g., Neo4j): Ideal for managing relationships between data, suitable for social networks, recommendation engines, and knowledge graphs.

Imagine designing a social media platform: you might use a SQL database for user accounts and relationships (requiring strong consistency), while leveraging a NoSQL document database like MongoDB to store user posts and comments (allowing for flexible schemas and high volume).

Q 3. How would you design a URL shortening service?

A URL shortening service maps long URLs to shorter, memorable aliases. The core components include:

- URL Shortening Logic: This generates a unique short code for each long URL, for example by Base62-encoding a numeric ID. Base62 uses the characters 0-9, a-z, and A-Z, so a 7-character code can represent 62^7 (about 3.5 trillion) distinct URLs. The code is stored alongside the long URL in a database.

- Database: A key-value store (like Redis for speed) or a database with a unique key and associated long URL would be ideal. For massive scale, sharding might be necessary.

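- Redirect Service: When a user accesses the short URL, the service looks up the corresponding long URL in the database and performs a 301 (permanent) redirect. Because browsers may cache a 301, a 302 (temporary) redirect can be preferable when every click needs to reach the service, for example to support analytics.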

- Analytics (optional): Tracking click data on shortened links can be valuable for users. This requires additional storage and processing.

Example using Base62 encoding (simplified):

    function shortenURL(longURL) {
      let shortCode = generateUniqueShortCode();
      // Store {shortCode: longURL} in database
      return shortCode;
    }

Challenges include managing the mapping, handling collisions (duplicate short codes), ensuring scalability (handling millions of requests per second), and providing analytics.
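The short-code generation and storage steps can be made concrete with a small sketch. This is only an illustration under stated assumptions: the counter-based ID, the in-memory `Map` standing in for the database, and the names `encodeBase62`, `codeToUrl`, and `resolveShortCode` are all hypothetical and not part of any particular service.

```javascript
// Base62 alphabet: digits, then lowercase, then uppercase (62 symbols).
const ALPHABET = "0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ";

// Encode a non-negative integer as a Base62 string.
function encodeBase62(n) {
  if (!Number.isInteger(n) || n < 0) {
    throw new RangeError("n must be a non-negative integer");
  }
  if (n === 0) return ALPHABET[0];
  let out = "";
  while (n > 0) {
    out = ALPHABET[n % 62] + out; // prepend the least significant digit
    n = Math.floor(n / 62);
  }
  return out;
}

// Assumption: an in-memory Map stands in for the database, and a simple
// counter stands in for a distributed ID generator.
const codeToUrl = new Map();
let nextId = 1;

function shortenURL(longURL) {
  const shortCode = encodeBase62(nextId++); // unique IDs avoid collisions
  codeToUrl.set(shortCode, longURL);
  return shortCode;
}

// Look up the long URL for a short code, or null if it is unknown.
function resolveShortCode(shortCode) {
  return codeToUrl.get(shortCode) ?? null;
}
```

Deriving codes from a unique counter sidesteps collisions entirely, at the cost of making codes guessable; a hash-based or random scheme would need an explicit collision check before storing.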

Q 4. Design a rate limiter for an API.

A rate limiter controls the rate of API requests to prevent abuse and ensure fair usage. Several strategies exist:

- Sliding Window: Tracks requests within a rolling time window (e.g., the last 60 seconds), unlike a fixed window that resets on a schedule. If the number of requests within the window exceeds the limit, subsequent requests are throttled.

- Token Bucket: Maintains a bucket of tokens that replenish at a fixed rate up to a maximum capacity. Each request consumes a token. If no tokens are available, the request is throttled. Because unused tokens accumulate, this allows short bursts of requests up to the bucket capacity while still bounding the long-term average rate.

- Leaky Bucket: Incoming requests are placed in a fixed-size bucket (a queue) that drains at a constant rate. Requests that arrive when the bucket is full are discarded. This smooths traffic to a constant output rate but doesn’t allow bursts.

Implementation could involve:

- Data Structures: Using Redis or in-memory data structures (like a hashmap in your backend) to store request counts or tokens.

- Algorithms: Implementing time-based algorithms (e.g., timestamps) to manage sliding windows or token replenishment rates.

For example, a sliding window implementation might use Redis to store the request count for a user within a specific time window. If the count exceeds the limit, the API returns a rate-limiting error.
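As an illustration of one of the strategies above, here is a minimal token bucket sketch. It keeps state in memory for a single client; a production limiter would more likely keep per-user state in a shared store such as Redis. The class name, constructor parameters, and the injectable `now` clock are assumptions made for this example.

```javascript
// Minimal in-memory token bucket for one client.
class TokenBucket {
  // capacity: maximum burst size; refillPerSecond: sustained request rate.
  // now: clock function returning milliseconds (injectable for testing).
  constructor(capacity, refillPerSecond, now = () => Date.now()) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.now = now;
    this.tokens = capacity; // start with a full bucket
    this.lastRefill = now();
  }

  // Returns true if the request is allowed, false if it should be throttled.
  tryConsume() {
    const current = this.now();
    const elapsedSeconds = (current - this.lastRefill) / 1000;
    // Add the tokens earned since the last call, capped at capacity.
    this.tokens = Math.min(
      this.capacity,
      this.tokens + elapsedSeconds * this.refillPerSecond
    );
    this.lastRefill = current;
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}
```

With `new TokenBucket(2, 1)`, a client can send two requests back to back and is then limited to roughly one request per second. An API would typically respond to a throttled request with HTTP 429.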

Q 5. How would you design a system to handle high-volume traffic?

Handling high-volume traffic involves several strategies:

- Horizontal Scaling: Adding more servers to distribute the load. This is typically more cost-effective and scalable than vertical scaling.

- Load Balancing: Distributing incoming requests across multiple servers to prevent overload on any single server. Strategies include round-robin, least connections, and IP hash (described in the next question).

- Caching: Storing frequently accessed data in a cache (e.g., Redis, Memcached) to reduce the load on the main database.

- Content Delivery Network (CDN): Distributing static content (images, CSS, JavaScript) closer to users geographically, improving performance and reducing server load.

- Database Optimization: Using appropriate database technology (SQL or NoSQL), indexing effectively, and optimizing queries to improve database performance.

- Asynchronous Processing: Handling non-critical tasks (e.g., email notifications) asynchronously using message queues (e.g., RabbitMQ, Kafka) to improve responsiveness.

Imagine a news website during a major breaking news event: horizontal scaling and load balancing are crucial to ensure the website remains available. Caching reduces database load, while a CDN speeds up content delivery.

Q 6. Explain different load balancing strategies and their trade-offs.

Load balancing strategies distribute incoming requests across multiple servers. Common strategies include:

- Round Robin: Distributes requests sequentially to each server. Simple to implement but doesn’t account for server load.

- Least Connections: Directs requests to the server with the fewest active connections. Adapts better to uneven load than round robin but requires the balancer to track active connections per server.

- IP Hash: Uses the client’s IP address to determine the server, ensuring that requests from the same client always go to the same server. Useful for applications requiring session affinity (e.g., maintaining user session state across requests).

- Weighted Round Robin: Assigns weights to servers, allowing for directing a higher proportion of requests to more powerful servers.

Trade-offs: Round robin is simple but may not distribute load evenly when requests vary in cost or servers vary in capacity. Least connections adapts to actual load but requires the balancer to track connection counts. IP hash provides session affinity but can lead to uneven load when a few clients (or many clients behind one shared IP address) generate a disproportionate share of traffic. The choice depends on application requirements. A system dealing with persistent sessions would benefit from IP hash or sticky sessions, while a stateless API might prefer least connections.
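The selection logic behind three of these strategies can be sketched in a few lines. This is a simplified illustration, not how any specific load balancer is implemented: the function names are hypothetical, and the string hash is a simple polynomial hash chosen for brevity.

```javascript
// Round robin: cycle through the servers in order.
function makeRoundRobin(servers) {
  let index = 0;
  return () => servers[index++ % servers.length];
}

// Least connections: pick the server with the fewest active connections.
// activeConnections is a Map of server name -> current connection count.
function pickLeastConnections(activeConnections) {
  let best = null;
  for (const [server, count] of activeConnections) {
    if (best === null || count < best.count) best = { server, count };
  }
  return best === null ? null : best.server;
}

// Simple polynomial string hash, kept within unsigned 32-bit range.
function hashString(s) {
  let h = 0;
  for (let i = 0; i < s.length; i++) {
    h = (h * 31 + s.charCodeAt(i)) >>> 0;
  }
  return h;
}

// IP hash: the same client IP always maps to the same server,
// as long as the server list does not change.
function pickByIpHash(servers, clientIp) {
  return servers[hashString(clientIp) % servers.length];
}
```

Note that with plain modulo hashing, adding or removing a server remaps most clients; consistent hashing is the usual remedy when that matters.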

Q 7. How would you design a system for real-time chat?

Designing a real-time chat system requires handling concurrent users, message delivery, and presence updates efficiently.

- WebSockets: A persistent, bidirectional communication channel between the client and server, ideal for real-time interactions. Libraries like Socket.IO simplify WebSocket implementation.

- Message Queue (e.g., RabbitMQ, Kafka): Used for asynchronous message delivery and handling. This helps decouple the chat application components.

- Presence System: Tracks which users are online and available for chatting. This typically involves a database or a distributed cache to store user status.

- Scalability: The system needs to handle a large number of concurrent users. This requires horizontal scaling, load balancing, and potentially sharding (splitting the user base across different servers).

- Database: A database is needed to store chat history. The choice between SQL and NoSQL depends on scalability needs.

A simplified architecture might involve clients connecting via WebSockets to a chat server, which uses a message queue to forward messages to other clients or to persist chat history. The presence system updates user online status, allowing for efficient visibility of available chat partners.
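The routing and presence logic in that simplified architecture can be sketched without any networking. In this illustration, a callback stands in for each client's WebSocket connection and an array stands in for the chat-history database; the `ChatHub` class and its method names are hypothetical.

```javascript
// In-memory chat hub: tracks presence and routes direct messages.
class ChatHub {
  constructor() {
    this.connections = new Map(); // userId -> send callback (stand-in for a WebSocket)
    this.history = [];            // stand-in for persistent chat history
  }

  // Register a connected user; marks them as online.
  connect(userId, send) {
    this.connections.set(userId, send);
  }

  // Remove a user's connection; marks them as offline.
  disconnect(userId) {
    this.connections.delete(userId);
  }

  // Presence check.
  isOnline(userId) {
    return this.connections.has(userId);
  }

  // Persist the message, then deliver it if the recipient is online.
  // Returns true if delivered immediately, false if the recipient is offline.
  sendMessage(from, to, text) {
    const message = { from, to, text };
    this.history.push(message);
    const deliver = this.connections.get(to);
    if (deliver) {
      deliver(message);
      return true;
    }
    return false;
  }
}
```

In a multi-server deployment, the `connections` map would only know about locally connected users, which is where a message queue or pub/sub layer comes in to forward messages between chat servers.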

Q 8. Design a system for storing and retrieving user preferences.

Designing a system for storing and retrieving user preferences requires a balance between scalability, performance, and ease of access. We need a solution that can handle a large number of users and preferences efficiently while providing a simple interface for both reading and updating preferences.

One approach uses a NoSQL database like MongoDB or Cassandra. These databases excel at handling unstructured data and are highly scalable. We can store preferences as JSON documents, with each document representing a user’s preferences. The user ID would be the primary key for easy retrieval.

{ "userId": "user123", "preferences": { "theme": "dark", "notifications": true, "language": "en" } }

For enhanced performance, we can add a caching layer using a key-value store such as Redis or Memcached to hold frequently accessed preferences. This reduces the load on the database and speeds up retrieval, but it requires careful cache invalidation: the cached entry must be invalidated or refreshed whenever a user’s preferences are updated, to keep the data consistent.

Another approach involves using a relational database such as PostgreSQL or MySQL. We could create a table with columns for userId, preference_key, and preference_value. This provides better data integrity and allows for complex queries but might be less scalable than NoSQL for very large datasets. Proper indexing is crucial for optimal performance.

Regardless of the database choice, a well-defined API is necessary for applications to interact with the preference storage system. This API should handle CRUD (Create, Read, Update, Delete) operations securely and efficiently.

Q 9. Explain different caching strategies and their benefits.

Caching strategies are crucial for improving the performance and responsiveness of applications by storing frequently accessed data in a faster, temporary storage medium. Think of it like having a well-organized desk – you keep the things you use most often within easy reach.

- Cache-Aside: This is the most common strategy. The application first checks the cache. If the data is present (a cache hit), it’s returned. Otherwise (a cache miss), the application fetches the data from the main data store (database), populates the cache, and then returns the data. This is simple to implement but requires careful handling of cache invalidation.

- Write-Through: Data is written to both the cache and the main data store simultaneously. This ensures data consistency but can be slower than other methods because of the dual write operation.

- Write-Back: Data is written only to the cache initially, and asynchronously written to the main data store later. This provides the best performance but risks data loss if the cache fails. It’s often used for less critical data.

- Read/Write-Through with Write-Back for Bulk Updates: Combines the benefits of Write-Through for consistency and Write-Back for better performance when updating large datasets.

Choosing the right strategy depends on factors such as data update frequency, data consistency requirements, and performance expectations. For example, a system that cannot tolerate stale reads or lost writes might benefit from a write-through approach, while a write-heavy system that can tolerate a short window of inconsistency or potential data loss might choose a write-back approach.
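To make the cache-aside flow concrete, here is a minimal sketch with time-based expiry and explicit invalidation. The `CacheAside` class, the `loadFromStore` callback (standing in for a database query), and the default TTL are assumptions for illustration; a real deployment would typically use Redis or Memcached rather than a local `Map`.

```javascript
// Cache-aside: check the cache first, fall back to the data store on a miss.
class CacheAside {
  // loadFromStore: function (key) -> value, e.g. a database lookup.
  // ttlMs: how long an entry stays valid; now: injectable clock in ms.
  constructor(loadFromStore, ttlMs = 60000, now = () => Date.now()) {
    this.loadFromStore = loadFromStore;
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map(); // key -> { value, expiresAt }
  }

  get(key) {
    const hit = this.entries.get(key);
    if (hit && hit.expiresAt > this.now()) {
      return hit.value; // cache hit
    }
    // Cache miss (or expired entry): load from the store and populate the cache.
    const value = this.loadFromStore(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }

  // Call this after writing to the data store so the next read is fresh.
  invalidate(key) {
    this.entries.delete(key);
  }
}
```

The TTL bounds how stale an entry can get if an invalidation is missed, which is the usual safety net for cache-aside.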

Q 10. How would you design a system for recommending products?

Designing a product recommendation system involves leveraging various techniques to suggest relevant products to users. Imagine a smart bookstore recommending books based on your past purchases and browsing history.

A collaborative filtering approach analyzes user interactions (ratings, purchases, reviews) to identify users with similar tastes and recommend products liked by those users. This is excellent for discovering items a user might not have otherwise found.

Content-based filtering focuses on the characteristics of products (e.g., genre, keywords, features) to recommend items similar to those the user has previously interacted with. It’s great for recommending niche items but can suffer from limited diversity.

Hybrid approaches combine collaborative and content-based filtering for a more robust system. This leverages strengths from both methods to provide broader and more relevant recommendations. For instance, it could recommend items similar to what the user liked (content-based) and items liked by similar users (collaborative).

For scalability, we could employ a distributed architecture. Consider using tools like Apache Spark or Hadoop to process large datasets of user interactions and product features. A machine learning model (trained offline) would predict user preferences, which could be stored in a database for quick retrieval during the recommendation process. A real-time recommendation engine might use technologies like Apache Kafka to handle streaming data and update recommendations dynamically.

Metrics such as precision, recall, and NDCG (Normalized Discounted Cumulative Gain) are crucial for evaluating the effectiveness of the recommendation system.
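As a rough illustration of the collaborative filtering idea described above, the following sketch scores unseen items by the ratings of similar users, using cosine similarity. It is a toy, in-memory version under stated assumptions: the `ratings` shape (user to item to rating), the function names, and the scoring formula are choices made for this example, not a production algorithm.

```javascript
const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Cosine similarity between two users' rating objects ({ itemId: rating }).
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (const [item, rating] of Object.entries(a)) {
    normA += rating * rating;
    if (has(b, item)) dot += rating * b[item];
  }
  for (const rating of Object.values(b)) normB += rating * rating;
  if (normA === 0 || normB === 0) return 0;
  return dot / Math.sqrt(normA * normB);
}

// Recommend up to k items the user has not rated, weighted by how similar
// each other user is and how highly that user rated the item.
function recommend(ratings, userId, k = 3) {
  const mine = ratings[userId] || {};
  const scores = new Map();
  for (const [other, theirs] of Object.entries(ratings)) {
    if (other === userId) continue;
    const similarity = cosineSimilarity(mine, theirs);
    if (similarity <= 0) continue; // ignore users with nothing in common
    for (const [item, rating] of Object.entries(theirs)) {
      if (has(mine, item)) continue; // skip items the user already rated
      scores.set(item, (scores.get(item) || 0) + similarity * rating);
    }
  }
  return [...scores.entries()]
    .sort((x, y) => y[1] - x[1])
    .slice(0, k)
    .map(([item]) => item);
}
```

At scale, the pairwise similarity computation is what gets moved offline into a batch job, with the resulting top-k lists stored for quick lookup at request time.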

Q 11. Describe the different types of databases and when to use each.

Databases are categorized into various types based on their data model and functionality. Choosing the right type depends on the specific application requirements.

- Relational Databases (RDBMS): These databases use a structured, tabular format (tables with rows and columns). They enforce data integrity through relationships between tables. Examples include MySQL, PostgreSQL, and Oracle. They are ideal for applications requiring structured data, complex queries, and ACID properties (Atomicity, Consistency, Isolation, Durability).

- NoSQL Databases: These databases offer more flexible schemas and are well-suited for handling large volumes of unstructured or semi-structured data. Examples include MongoDB (document database), Cassandra (wide-column store), and Redis (key-value store). They excel at scalability and handling high-volume reads and writes. The choice between different NoSQL types depends on the data model and application needs.

- Graph Databases: These databases store data as nodes and edges, making them ideal for representing relationships between data points. Examples include Neo4j. They are used in social networks, recommendation engines, and knowledge graphs.

- Column-Family Databases: A type of NoSQL database that stores data in column families, with tables designed around the queries they need to serve. Cassandra is a prominent example. They are best suited for write-heavy workloads with predictable access patterns, such as time-series data and event logging.

In summary, the choice between database types depends on the application’s data characteristics, query patterns, scalability requirements, and data consistency needs. For example, an e-commerce site might use an RDBMS for order management and a NoSQL database for storing product catalogs and user reviews.

Q 12. How would you design a system for handling user authentication?

Designing a robust user authentication system is critical for security. Imagine a bank’s online system – security is paramount.

A multi-factor authentication (MFA) approach strengthens security significantly. It requires users to provide multiple forms of verification, such as a password and a one-time code from a mobile app. This adds an extra layer of protection against unauthorized access.

For password storage, use a strong hashing algorithm (like bcrypt or Argon2) to store password hashes instead of plain text. Salting and peppering add further security against rainbow table attacks. Implement measures to prevent brute-force attacks, such as account lockout after multiple failed login attempts.

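Session management is crucial. Use secure session cookies (with the Secure and HttpOnly attributes) with short expiration times and implement HTTPS to encrypt communication between the client and server. Consider using JWT (JSON Web Tokens) for stateless authentication: because the server does not need to look up session state, it is easier to scale horizontally, although revoking a token before it expires requires extra machinery such as short lifetimes or a denylist.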

Centralize authentication logic using a dedicated authentication service. This service manages user accounts, passwords, and authentication tokens. This approach is scalable and allows for easy integration with various applications.

Regular security audits and penetration testing are essential to identify and address vulnerabilities. Keep your authentication system updated with the latest security patches.

Q 13. How would you design a system for processing large datasets?

Processing large datasets requires a distributed computing approach, leveraging the power of multiple machines to work together. Think of it like a team of workers collaboratively assembling a complex product.

A common approach involves using a distributed data processing framework like Apache Hadoop or Apache Spark. Hadoop provides a robust infrastructure for storing and processing large datasets in a distributed manner. Spark offers faster processing speeds by keeping data in memory as much as possible.

Data partitioning is crucial. The dataset is divided into smaller chunks (partitions) that are processed in parallel by different machines. This significantly reduces processing time. Partitioning strategies should be chosen carefully based on data characteristics and query patterns.
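The partition-then-combine pattern can be illustrated on a single machine. In this sketch, records are hash-partitioned by key, each partition is counted independently (the step that would run in parallel on different machines), and the partial results are merged. All function names and the hash function are assumptions for illustration; frameworks like Spark handle this partitioning internally.

```javascript
// Simple polynomial string hash, kept within unsigned 32-bit range.
function hashKey(key) {
  let h = 0;
  for (let i = 0; i < key.length; i++) {
    h = (h * 31 + key.charCodeAt(i)) >>> 0;
  }
  return h;
}

// Split records into numPartitions chunks; equal keys land in the same chunk.
function partitionByKey(records, keyOf, numPartitions) {
  const partitions = Array.from({ length: numPartitions }, () => []);
  for (const record of records) {
    partitions[hashKey(keyOf(record)) % numPartitions].push(record);
  }
  return partitions;
}

// Per-partition work: count records per key. Each call is independent,
// so in a real cluster these would run on different machines.
function countByKey(partition, keyOf) {
  const counts = new Map();
  for (const record of partition) {
    const key = keyOf(record);
    counts.set(key, (counts.get(key) || 0) + 1);
  }
  return counts;
}

// Combine the partial counts from all partitions into one result.
function mergeCounts(partials) {
  const total = new Map();
  for (const partial of partials) {
    for (const [key, count] of partial) {
      total.set(key, (total.get(key) || 0) + count);
    }
  }
  return total;
}
```

Partitioning by the aggregation key means no key is split across partitions, so the merge step is a simple union; a skewed key distribution would still produce uneven partitions, which is why the partitioning strategy depends on the data.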

Data serialization formats like Avro (row-oriented) or Parquet (columnar) are efficient ways to store and exchange data between different components. These formats support compression and carry schema information, which improves processing efficiency.

For querying large datasets, tools like Hive (on top of Hadoop) or Presto provide SQL-like interfaces for querying data stored in distributed storage. These tools abstract away the complexities of distributed data processing, making querying more straightforward.

Consider data optimization techniques such as compression, indexing, and columnar storage for improving query performance and reducing storage costs.

Q 14. Explain different message queuing systems and their use cases.

Message queuing systems (MQ) are crucial for building asynchronous and decoupled systems, enabling applications to communicate without direct dependencies. Think of it as a post office, where messages are delivered reliably.

- RabbitMQ: A versatile, open-source message broker supporting various messaging protocols (AMQP, MQTT, STOMP). It offers features like message persistence, routing, and clustering, making it suitable for various use cases.

- Kafka: A high-throughput, distributed streaming platform designed for handling massive volumes of real-time data streams. It’s excellent for applications requiring high-speed data ingestion and processing, such as real-time analytics and event streaming.

- ActiveMQ: A mature, open-source message broker that supports various messaging protocols and features like message persistence, transactions, and security.

- Amazon SQS (Simple Queue Service): A fully managed cloud-based message queue service that simplifies the management and scaling of message queues.

Use cases vary widely. In e-commerce, an MQ can handle order processing (asynchronously updating inventory, shipping status, and sending notifications). In microservices architectures, MQ decouples services, allowing them to communicate without tight dependencies. Real-time analytics applications utilize MQ for high-speed data ingestion and processing.

Choosing the right message queue depends on factors such as message volume, throughput requirements, messaging protocols, and management overhead. Kafka is ideal for high-throughput streaming applications while RabbitMQ offers greater flexibility and features for a wider range of applications.

Q 15. How would you design a system for handling user payments?

Designing a robust user payment system requires careful consideration of security, reliability, and scalability. We’ll need multiple components working together seamlessly.

- Payment Gateway Integration: This is the heart of the system. We’ll integrate with established payment gateways like Stripe or PayPal, leveraging their secure infrastructure for processing transactions. This offloads much of the complexity of handling various payment methods (credit cards, debit cards, digital wallets).

- Transaction Processing: A microservice will handle the actual processing of payments. This service should be highly available and fault-tolerant, using techniques like message queues (e.g., Kafka) to ensure transactions are processed even if part of the system is down. Idempotency is crucial – ensuring that duplicate payment requests are handled correctly, preventing double charges.

- Database: A relational database (like PostgreSQL or MySQL) will store transaction details, including payment status, amounts, and user information. We’ll need robust indexing to ensure quick retrieval of data for reporting and reconciliation.

- Security: Security is paramount. We’ll employ encryption (HTTPS, TLS) for all communication, PCI DSS compliance for handling sensitive card data, and robust authentication mechanisms to protect user accounts.

- Notifications and Reconciliation: The system needs to notify users of transaction status (success, failure). A reconciliation process will be necessary to ensure all transactions are accurately accounted for and match the gateway’s records.

Example: Imagine a user purchasing a product. Their payment request goes to our payment gateway integration. This integration forwards the request to the chosen payment gateway (e.g., Stripe). Stripe processes the payment, and sends a response back to our transaction processing service. The service updates the database, sends a confirmation email to the user, and logs the event. If the payment fails, the user receives an error message.
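The idempotency requirement mentioned above can be sketched with an idempotency key supplied by the client. This is a minimal illustration: the `PaymentProcessor` class, the `chargeGateway` callback (a stand-in for a real gateway call), and the in-memory `Map` (a stand-in for a durable table with a unique constraint on the key) are all hypothetical.

```javascript
// Processes each idempotency key at most once and replays the stored result
// for any duplicate request carrying the same key.
class PaymentProcessor {
  // chargeGateway: function (userId, amountCents) -> result object.
  constructor(chargeGateway) {
    this.chargeGateway = chargeGateway;
    this.results = new Map(); // idempotencyKey -> stored result
  }

  charge(idempotencyKey, userId, amountCents) {
    if (this.results.has(idempotencyKey)) {
      // Duplicate request (e.g. a client retry): do not charge again.
      return this.results.get(idempotencyKey);
    }
    const result = this.chargeGateway(userId, amountCents);
    this.results.set(idempotencyKey, result);
    return result;
  }
}
```

A client would generate one key per purchase attempt and reuse it on retries, so a timeout followed by a retry cannot produce a double charge. In a concurrent system, the check-and-store step would need to be atomic, for example by relying on a unique database constraint.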

Q 16. Explain the concept of consistency and availability in distributed systems.

Consistency and availability are fundamental concepts in distributed systems, often presented as a trade-off. They represent different guarantees a system makes about data.

- Consistency: This refers to the agreement on data across all nodes in the distributed system. A consistent system guarantees that all clients see the same data at any given time. Think of it like a perfectly synchronized clock across multiple locations.

- Availability: This is the ability of the system to respond to requests in a timely manner, even with partial failures. A highly available system continues to operate even if some nodes go down. Think of a system working 24/7.

The CAP theorem illustrates this trade-off: In a distributed data store, you can only simultaneously provide two out of three guarantees: Consistency, Availability, and Partition tolerance (the ability to continue operating despite network partitions).

Example: A simple database replicated across multiple servers. If we prioritize consistency, every write operation must be propagated to all servers before the operation is considered successful. This might impact availability during network hiccups as writes might be temporarily blocked. If we prioritize availability, a write operation might be successful on one server before being propagated to others, resulting in temporary inconsistencies.

Q 17. How would you design a system for detecting and handling failures?

Designing for failure is crucial in any system, especially a distributed one. Our approach should be proactive, anticipating potential issues and building mechanisms to handle them gracefully.

- Redundancy: Replicate critical components (databases, servers) across multiple availability zones. This ensures that if one component fails, others can take over seamlessly.

- Health Checks: Implement regular health checks on all components. If a component fails a health check, it should be automatically taken out of service and replaced by a healthy instance.

- Circuit Breakers: Use circuit breakers to prevent cascading failures. If a service consistently fails, the circuit breaker will prevent further requests from being sent until the service recovers.

- Retry Mechanisms: Implement retry mechanisms for transient failures. Network glitches or temporary database unavailability might cause operations to fail. Retrying after a short delay often resolves such issues.

- Monitoring and Alerting: Establish robust monitoring and alerting systems to quickly identify failures and notify the appropriate teams.

Example: If a database server goes down, the system should automatically switch to a replica server. Users should experience minimal downtime. If a payment gateway becomes unresponsive, the circuit breaker prevents requests from being sent to it until it’s back online, preventing further errors. Retry mechanisms might be used for temporary network glitches during payment processing.
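The circuit breaker behavior in that example can be sketched as a small wrapper around a call. This is a simplified, synchronous illustration under stated assumptions: the class name, the option names `failureThreshold` and `resetTimeoutMs`, and the injectable `now` clock are hypothetical, and real implementations usually wrap asynchronous calls.

```javascript
// Wraps an action and stops calling it after repeated failures.
class CircuitBreaker {
  constructor(action, options = {}) {
    this.action = action;
    this.failureThreshold = options.failureThreshold ?? 3;
    this.resetTimeoutMs = options.resetTimeoutMs ?? 10000;
    this.now = options.now ?? (() => Date.now());
    this.failures = 0;
    this.openedAt = null; // null means the circuit is closed
  }

  call(...args) {
    if (this.openedAt !== null) {
      if (this.now() - this.openedAt < this.resetTimeoutMs) {
        // Open: fail fast without touching the downstream service.
        throw new Error("circuit open");
      }
      // Timeout elapsed: fall through and allow one trial call (half-open).
    }
    try {
      const result = this.action(...args);
      // Success closes the circuit and resets the failure count.
      this.failures = 0;
      this.openedAt = null;
      return result;
    } catch (err) {
      this.failures += 1;
      if (this.failures >= this.failureThreshold) {
        this.openedAt = this.now(); // open (or re-open) the circuit
      }
      throw err;
    }
  }
}
```

Failing fast while the circuit is open gives the struggling service time to recover and keeps callers from piling up on timeouts, which is how cascading failures are avoided.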

Q 18. How would you design a system for monitoring and alerting?

Monitoring and alerting are crucial for maintaining system health and responding to issues proactively.

- Metrics Collection: Collect system metrics like CPU usage, memory consumption, request latency, and error rates. Prometheus is commonly used to collect and store these metrics, and Grafana to visualize them.

- Logging: Implement detailed logging across all system components. Logs should contain relevant information for debugging and troubleshooting.

- Alerting System: Set up an alerting system to notify relevant teams when critical thresholds are exceeded or errors occur. Tools like PagerDuty or Opsgenie are effective solutions.

- Dashboards: Create dashboards to visualize key metrics and provide a high-level overview of system health. This makes identifying potential problems easier.

- Alerting Logic: Define clear alerting thresholds and escalation paths to ensure timely responses. Consider different severity levels for alerts (critical, warning, informational).

Example: If the CPU utilization on a server exceeds 90%, an alert is triggered. If a specific API endpoint starts returning errors, an alert is generated, and an on-call engineer is notified. Dashboards provide a visual representation of system health indicators like request latency and error rates, allowing for proactive identification of performance issues.
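The threshold logic in that example can be expressed as a small rule evaluator. This sketch is illustrative only: the rule format, the metric names, and the 5% error-rate threshold are assumptions (only the 90% CPU threshold comes from the example above), and real systems such as Prometheus define alert rules in their own configuration language.

```javascript
// Hypothetical alert rules: fire when a metric exceeds its threshold.
const alertRules = [
  { metric: "cpu_percent", threshold: 90, severity: "critical" },
  { metric: "error_rate", threshold: 0.05, severity: "warning" },
];

// Return one alert per rule whose metric is present and above the threshold.
function evaluateAlerts(rules, metrics) {
  return rules
    .filter((rule) => rule.metric in metrics && metrics[rule.metric] > rule.threshold)
    .map((rule) => ({
      metric: rule.metric,
      value: metrics[rule.metric],
      severity: rule.severity,
    }));
}
```

The severity field is what an alerting tool would use to decide the escalation path, for example paging the on-call engineer for critical alerts and only posting warnings to a channel.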

Q 19. Explain different strategies for optimizing database queries.

Optimizing database queries is critical for application performance. Slow queries can lead to poor user experience and scalability problems.

- Indexing: Create indexes on frequently queried columns to speed up data retrieval. Indexes are like a book’s index; they allow the database to quickly locate specific rows.

- Query Optimization: Use EXPLAIN (EXPLAIN PLAN in Oracle, EXPLAIN ANALYZE in PostgreSQL, or similar tools) to analyze query execution plans and identify bottlenecks. Rewriting queries to reduce I/O operations and improve selectivity can significantly improve performance.

- Caching: Cache frequently accessed data in memory (e.g., Redis, Memcached). This avoids expensive database lookups.

- Database Tuning: Tune database parameters, like buffer pool size and connection pool size, to optimize resource utilization.

- Connection Pooling: Use connection pooling to reuse database connections, reducing the overhead of establishing new connections for each request.

- Data Modeling: Proper data modeling ensures efficient data storage and retrieval. Normalize your data to minimize redundancy and improve data integrity.

Example: Instead of a full table scan, adding an index on a ‘user_id’ column in a ‘users’ table dramatically speeds up queries that filter by user ID. Caching frequently accessed user profiles in memory minimizes database queries.

Q 20. How would you design a system for handling user data privacy?

Designing for user data privacy requires a multi-faceted approach, adhering to relevant regulations (like GDPR, CCPA).

- Data Minimization: Collect only the necessary data. Avoid collecting unnecessary user information.

- Data Encryption: Encrypt data both in transit (HTTPS) and at rest (database encryption).

- Access Control: Implement strict access control measures to limit who can access user data. Use role-based access control (RBAC).

- Data Anonymization: When possible, anonymize data to remove personally identifiable information (PII).

- Consent Management: Implement a clear consent management system, allowing users to control what data is collected and how it’s used.

- Data Retention Policies: Establish clear data retention policies and procedures for securely deleting data when it’s no longer needed.

- Privacy by Design: Integrate privacy considerations throughout the entire design and development process.

Example: We might only collect the email address and password for user authentication, avoiding unnecessary information like their address or phone number. All data is encrypted at rest and in transit. Access to user data is restricted to authorized personnel only, tracked using detailed audit logs.

Q 21. How would you design a system for handling geographically distributed data?

Handling geographically distributed data requires strategies to ensure low latency, high availability, and data consistency across regions.

- Data Replication: Replicate data across multiple data centers in different geographic locations. This reduces latency for users in various regions.

- Content Delivery Network (CDN): Use a CDN to cache static content (images, videos) closer to users, reducing latency and bandwidth costs.

- Database Sharding: Partition the database across multiple servers in different regions, improving scalability and reducing database load on a single location.

- Multi-region deployments: Deploy application components across multiple regions for higher availability. This enables the system to continue functioning even if a region experiences an outage.

- Data Synchronization: Implement a robust data synchronization mechanism to ensure consistency across replicated data stores. This might involve technologies like Kafka or database replication tools.

Example: A social media platform with users worldwide might replicate its user data across multiple data centers (e.g., North America, Europe, Asia). Static content (images, videos) is cached on a CDN, reducing loading times for users globally. Database sharding distributes database load across servers in different regions, improving performance and resilience.
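
One small piece of a multi-region design is deciding which replica serves a given user. The sketch below picks the lowest-latency healthy region and falls back to the next best one during an outage. The region names, the latency table, and the `pick_region` helper are all invented for illustration; real deployments usually delegate this to DNS-based or anycast routing.

```python
def pick_region(user_region, latency_ms, healthy_regions):
    """Return the healthy region with the lowest latency from user_region."""
    candidates = {
        region: ms
        for region, ms in latency_ms[user_region].items()
        if region in healthy_regions
    }
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)

# Hypothetical round-trip latencies in milliseconds (illustrative numbers only).
LATENCY_MS = {
    "eu": {"eu-west": 20, "us-east": 90, "ap-south": 150},
    "us": {"eu-west": 90, "us-east": 15, "ap-south": 220},
}

all_regions = {"eu-west", "us-east", "ap-south"}
print(pick_region("eu", LATENCY_MS, all_regions))
print(pick_region("eu", LATENCY_MS, all_regions - {"eu-west"}))
```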

Q 22. Explain different approaches to microservices architecture.

Microservices architecture involves breaking down a large application into smaller, independent services that communicate with each other. This contrasts with monolithic architectures where everything is in one large codebase. Several approaches exist for structuring these microservices:

- Decomposition by Business Capability: Services are organized around specific business functions, like order processing or user management. This is often the most intuitive approach, aligning services with clear business responsibilities. For example, an e-commerce platform might have separate services for catalog management, shopping cart, and payment processing.

- Decomposition by Subdomain: Based on the bounded contexts in Domain-Driven Design (DDD), this approach groups services around distinct areas of the business domain. This approach aims for strong encapsulation and independence, reducing inter-service dependencies.

- Decomposition by Data Ownership: Services are defined around the data they own and manage. Each service is responsible for its data consistency and integrity. This method can streamline data management and ensure better data control.

- API-centric Approach: Focusing on well-defined APIs (Application Programming Interfaces) that are the contract for service interaction. This approach promotes loose coupling and flexibility, allowing services to evolve independently without impacting others.

Choosing the right approach depends on factors like the complexity of the application, the team structure, and the business needs. Often, a hybrid approach combining elements from multiple strategies is the most effective.

Q 23. How would you design a system for handling data replication?

Designing a system for data replication involves choosing a replication strategy that balances data consistency, availability, and performance. Here’s a breakdown:

- Master-Slave Replication: A central master database handles writes, and multiple slave databases replicate data from the master. This is simple to implement, but the master is a single point of failure for writes unless a slave can be promoted to take its place. Read operations can be distributed across slaves, improving performance.

- Master-Master Replication: Multiple databases act as masters, allowing writes to any of them. This improves availability but requires conflict resolution mechanisms to maintain data consistency. A common technique is last-write-wins, which keeps the version with the latest write timestamp.

- Multi-Master Replication with Conflict Detection and Resolution: This approach extends master-master by employing sophisticated algorithms to detect and resolve data conflicts. This is more complex but provides higher availability and fault tolerance.

- Asynchronous Replication: The master acknowledges a write before the replicas have applied it, and the change is shipped to them afterward. This trades immediacy for lower write latency and better scalability, at the cost of replication lag. It is often sufficient for applications with less stringent real-time requirements.

- Synchronous Replication: A write is acknowledged only after the replicas have confirmed it, ensuring strong consistency but potentially impacting write performance. This is suitable for applications requiring high data consistency, like financial systems.

The choice depends on the specific application requirements. Consider factors like acceptable write latency, consistency requirements, and how much replication lag the application can tolerate.
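
The last-write-wins technique mentioned above can be sketched as follows. This is a simplified illustration, not a production conflict resolver: the `Versioned` record, the node-ID tie-breaker, and the `merge_replicas` helper are assumptions, and real systems also have to cope with clock skew between nodes.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Versioned:
    value: str
    timestamp: float   # time of the write as recorded by the writing node
    node_id: str       # used only to break ties deterministically

def lww_merge(a: Versioned, b: Versioned) -> Versioned:
    """Keep the write with the later timestamp; break ties by node ID."""
    return max(a, b, key=lambda v: (v.timestamp, v.node_id))

def merge_replicas(left: dict, right: dict) -> dict:
    """Merge two replicas' key/value maps using last-write-wins per key."""
    merged = dict(left)
    for key, incoming in right.items():
        current = merged.get(key)
        merged[key] = incoming if current is None else lww_merge(current, incoming)
    return merged

# Two masters accepted conflicting writes for the same key.
replica_a = {"cart:1": Versioned("2 items", 100.0, "a")}
replica_b = {"cart:1": Versioned("3 items", 105.0, "b"),
             "cart:2": Versioned("1 item", 90.0, "b")}

print(merge_replicas(replica_a, replica_b)["cart:1"].value)
```

Because the merge is deterministic and order-independent, both masters reach the same state regardless of which direction the merge runs. The trade-off is that the losing write is silently discarded.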

Q 24. Explain different techniques for optimizing network performance.

Optimizing network performance involves addressing several key areas:

- Content Delivery Networks (CDNs): Distributing content closer to users geographically reduces latency and improves loading times. CDNs cache static content like images and videos, minimizing server load.

- Load Balancing: Distributing network traffic across multiple servers prevents overload on individual machines. Different load balancing algorithms (round-robin, least connections, etc.) exist, each with its advantages and disadvantages.

- Caching: Storing frequently accessed data in memory or on faster storage (e.g., Redis) reduces database load and speeds up response times. This can be implemented at various levels: browser cache, CDN cache, server-side cache, and database cache.

- Compression: Reducing the size of data transmitted reduces bandwidth consumption and improves transfer speeds. Techniques like gzip compression are commonly used.

- Protocol Optimization: Choosing the right network protocol (e.g., HTTP/2 instead of HTTP/1.1) and optimizing its settings can significantly improve performance.

- Network Topology Optimization: Carefully designing the network infrastructure to minimize latency and improve throughput. This involves considerations like network routing and placement of servers.

The optimal combination of these techniques depends on the application and its performance bottlenecks. Thorough monitoring and performance testing are crucial for effective optimization.
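
As a quick way to see what compression buys, the following sketch measures the gzip compression ratio of a repetitive JSON-like payload with Python's standard `gzip` module. The sample payload and the helper names are made up for illustration, and real-world ratios depend heavily on the data.

```python
import gzip

def compress_payload(data: bytes, level: int = 6) -> bytes:
    """Compress a response body with gzip at the given level (0-9)."""
    return gzip.compress(data, compresslevel=level)

def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower is better)."""
    return len(compress_payload(data)) / len(data)

# Hypothetical, highly repetitive payload; text like this compresses well.
sample = b'{"user_id": 42, "status": "active", "plan": "free"}\n' * 500
compressed = compress_payload(sample)

print(len(sample), len(compressed), round(compression_ratio(sample), 3))
```

Already-compressed formats such as JPEG images or most video gain little from a second pass, so compression is usually enabled only for text-based content types.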

Q 25. How would you design a system for handling asynchronous tasks?

Handling asynchronous tasks efficiently is crucial for responsiveness and scalability. Several approaches exist:

- Message Queues (e.g., RabbitMQ, Kafka): Tasks are submitted as messages to a queue. Workers consume these messages and process them asynchronously. This decouples task submission from task execution, improving system resilience and scalability.

- Task Queues (e.g., Celery, Redis Queue): Similar to message queues, but often provide more advanced features like task scheduling, retry mechanisms, and monitoring.

- Background Jobs (e.g., Sidekiq, Resque): Dedicated background processes that handle asynchronous tasks. This is often simpler to implement than message queues for smaller applications but may lack the advanced features and scalability of dedicated message queues.

- Event-driven Architecture: Systems communicate via asynchronous events. When an event occurs, subscribed components are notified, triggering the execution of asynchronous tasks. This enables loose coupling and high scalability.

The choice depends on the scale and complexity of the tasks, the level of required reliability, and the overall architecture of the system. For instance, a simple system might use background jobs, while a complex, high-throughput system would benefit from a robust message queue.
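
The queue-and-worker pattern behind these tools can be sketched in-process with Python's standard `queue` and `threading` modules. This is only a toy model of what a broker such as RabbitMQ provides (no persistence, no retries, a single process), and `run_workers` and its parameters are hypothetical names.

```python
import queue
import threading

_STOP = object()  # sentinel telling a worker to shut down

def run_workers(tasks, handler, num_workers=4):
    """Process tasks on worker threads; return (task, result_or_error) pairs."""
    task_queue = queue.Queue()
    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            item = task_queue.get()
            try:
                if item is _STOP:
                    return
                try:
                    outcome = handler(item)
                except Exception as exc:   # record the failure, keep the worker alive
                    outcome = exc
                with results_lock:
                    results.append((item, outcome))
            finally:
                task_queue.task_done()

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for thread in threads:
        thread.start()
    for task in tasks:          # the producer only enqueues; it does not wait per task
        task_queue.put(task)
    for _ in threads:
        task_queue.put(_STOP)
    task_queue.join()
    for thread in threads:
        thread.join()
    return results

squares = run_workers(range(5), lambda n: n * n, num_workers=2)
print(sorted(squares))
```

The producer and the workers share only the queue, which is the decoupling described above. Swapping the in-memory queue for a durable broker is what adds resilience across process restarts.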

Q 26. How would you design a system for handling large-scale data ingestion?

Handling large-scale data ingestion requires a robust and scalable system. Key considerations include:

- Batch Processing: Ingesting data in large batches using tools like Apache Spark or Hadoop. This is efficient for large datasets but may introduce latency.

- Stream Processing: Processing data in real-time or near real-time using frameworks like Kafka Streams or Apache Flink. This is ideal for applications that require immediate processing of data streams.

- Data Lakes: Storing raw data in its original format in a centralized repository. Data lakes provide flexibility in processing and analyzing data but require careful management.

- Data Warehouses: Storing structured and processed data optimized for analytical queries. Data warehouses are efficient for analytical processing but require data transformation and loading.

- Distributed Systems: Employing distributed storage and processing techniques to handle the volume and velocity of data. This often involves using cloud-based services or clusters of servers.

- Schema-on-Read vs. Schema-on-Write: Choosing whether to enforce the data schema when data is written (schema-on-write) or to apply it when data is queried (schema-on-read). Schema-on-read offers greater flexibility but pushes more processing to query time; schema-on-write imposes constraints up front but simplifies downstream processing.

A typical system might combine batch and stream processing, using a data lake for raw data and a data warehouse for processed data, leveraging distributed systems for scalability and employing appropriate schema management.
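
A small sketch of two of these ideas, micro-batching and a schema-on-write check, is shown below. The required field names and the helper functions `valid_records` and `batched` are assumptions for illustration; frameworks such as Spark or Flink provide their own batching and validation facilities.

```python
from itertools import islice

def valid_records(records, required_fields, rejected):
    """Yield records containing every required field; collect the rest."""
    for record in records:
        if required_fields.issubset(record):
            yield record
        else:
            rejected.append(record)   # e.g. route to a dead-letter store later

def batched(records, batch_size):
    """Group an arbitrarily long stream of records into fixed-size batches."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

# Hypothetical incoming events; the second one is missing its timestamp.
events = [
    {"id": 1, "ts": 100},
    {"id": 2},
    {"id": 3, "ts": 102},
    {"id": 4, "ts": 103},
]
rejected = []
batches = list(batched(valid_records(events, {"id", "ts"}, rejected), batch_size=2))
print(len(batches), len(rejected))
```

Because both helpers are generators, records flow through one at a time and only the current batch is held in memory, which is the property that matters when the input is too large to load at once.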

Q 27. Explain the concept of eventual consistency and its implications.

Eventual consistency is a consistency model in distributed systems in which updates propagate to all replicas over time: if no new updates are made, all replicas eventually converge to the same value, but until then different parts of the system may return different data.

Imagine a distributed database with multiple replicas. If you update a record on one replica, it won’t be immediately reflected on all others. It takes time for the updates to propagate, creating a temporary inconsistency. Once the propagation completes, the system becomes consistent again.

Implications:

- Less Coordination: Replicas do not need to coordinate synchronously on every write, which enables higher availability and scalability than strong consistency.

- Increased Availability: If one replica goes down, others can still serve data, albeit potentially stale data.

- Potential for Data Conflicts: Requires mechanisms to handle conflicts if multiple updates occur concurrently.

- Complexity in Application Logic: Applications must be designed to handle eventual consistency, potentially requiring retry mechanisms or conflict resolution strategies.

Eventual consistency is suitable for applications where strict real-time consistency isn’t critical, such as social media updates or email systems. However, for applications demanding immediate consistency, like financial transactions, strong consistency models are preferred.
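
The replica scenario described above can be simulated in a few lines. This toy model is a sketch under strong assumptions: a single shared counter stands in for version numbers, delivery happens only when `propagate` is called, and the `Replica` and `Cluster` classes are invented for illustration.

```python
class Replica:
    def __init__(self, name):
        self.name = name
        self.data = {}   # key -> (version, value)

    def read(self, key):
        entry = self.data.get(key)
        return entry[1] if entry else None

    def apply(self, key, version, value):
        """Accept an update only if it is newer than what is stored."""
        current = self.data.get(key)
        if current is None or version > current[0]:
            self.data[key] = (version, value)

class Cluster:
    def __init__(self, names):
        self.replicas = {name: Replica(name) for name in names}
        self.pending = []   # updates not yet delivered to other replicas
        self.clock = 0      # simplification: one global version counter

    def write(self, replica_name, key, value):
        """Apply the write locally and queue it for the other replicas."""
        self.clock += 1
        self.replicas[replica_name].apply(key, self.clock, value)
        for name in self.replicas:
            if name != replica_name:
                self.pending.append((name, key, self.clock, value))

    def propagate(self):
        """Deliver all queued updates, after which replicas agree."""
        for target, key, version, value in self.pending:
            self.replicas[target].apply(key, version, value)
        self.pending.clear()

cluster = Cluster(["a", "b"])
cluster.write("a", "profile:1", "new bio")
stale = cluster.replicas["b"].read("profile:1")    # not yet propagated
cluster.propagate()
fresh = cluster.replicas["b"].read("profile:1")    # converged
print(stale, fresh)
```

The window between `write` and `propagate` is the period of temporary inconsistency; application code that reads from replica `b` during that window has to tolerate the stale result.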

Q 28. Describe different approaches to designing fault-tolerant systems.

Designing fault-tolerant systems involves anticipating potential failures and implementing mechanisms to mitigate their impact. Several approaches exist:

- Redundancy: Having multiple instances of critical components (servers, databases, network connections) so that if one fails, others can take over. This can be active-active (all components are active and processing requests) or active-passive (one component is active, others are on standby).

- Failover Mechanisms: Automatic mechanisms that switch to a backup component in case of failure. This often involves techniques like load balancing and health checks.

- Error Handling and Recovery: Implementing robust error handling and recovery procedures to minimize the impact of errors. This includes techniques like retry mechanisms, circuit breakers, and graceful degradation.

- Data Replication: Replicating data across multiple locations to protect against data loss. This ensures that data is available even if one location fails.

- Self-Healing Systems: Systems that automatically detect and recover from failures without human intervention. This often involves automated monitoring, alerting, and recovery processes.

- Blue/Green Deployments: Running the new version of the software in a separate environment alongside the old one and then switching traffic over. If the new version fails, traffic can be switched back to the old version quickly.

The optimal strategy depends on the criticality of the system, the acceptable downtime, and the cost of implementation. A combination of these techniques is frequently employed to achieve high levels of fault tolerance.
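
Of the error-handling techniques listed above, the circuit breaker is the least obvious, so here is a minimal sketch. The class name, thresholds, and the injectable `clock` parameter are assumptions made for illustration; production libraries add per-endpoint state, metrics, and more careful half-open handling.

```python
import time

class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""

class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock          # injectable so the behaviour can be tested
        self.failures = 0
        self.opened_at = None       # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: fall through and allow one trial call.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()   # open (or re-open) the circuit
            raise
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result

breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0)
print(breaker.call(lambda: "ok"))
```

While the circuit is open, callers fail immediately instead of waiting on a dependency that is known to be unhealthy, which is what allows graceful degradation elsewhere in the system.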

Key Topics to Learn for System Design and Optimization Interview

- Scalability and Capacity Planning: Understanding how to design systems that can handle increasing loads and user demands. Practical application includes designing a system to handle peak traffic during holiday shopping seasons.

- Database Design and Optimization: Choosing the right database technology (SQL, NoSQL) and optimizing queries for performance. Practical application involves designing a database schema for a social media platform to efficiently manage user data and posts.

- Caching Strategies: Implementing various caching mechanisms (e.g., CDN, memcached, Redis) to reduce latency and improve response times. Practical application involves optimizing a web application’s performance using appropriate caching layers.

- API Design and Microservices Architecture: Designing robust and scalable APIs and understanding the principles of microservices architecture. Practical application includes designing RESTful APIs for a mobile application.

- Load Balancing and Fault Tolerance: Implementing strategies to distribute traffic evenly across servers and ensure system availability even in the face of failures. Practical application includes designing a load balancing solution for a high-traffic website.

- System Monitoring and Logging: Setting up comprehensive monitoring and logging systems to track performance and identify potential issues. Practical application involves implementing monitoring and alerting to proactively identify performance bottlenecks.

- Algorithm and Data Structure Optimization: Choosing appropriate algorithms and data structures to optimize performance of critical components. Practical application includes optimizing search algorithms for a large dataset.

- Security Considerations: Understanding and addressing security concerns throughout the design process, including authentication, authorization, and data protection. Practical application involves designing secure authentication flows for a user login system.

Next Steps

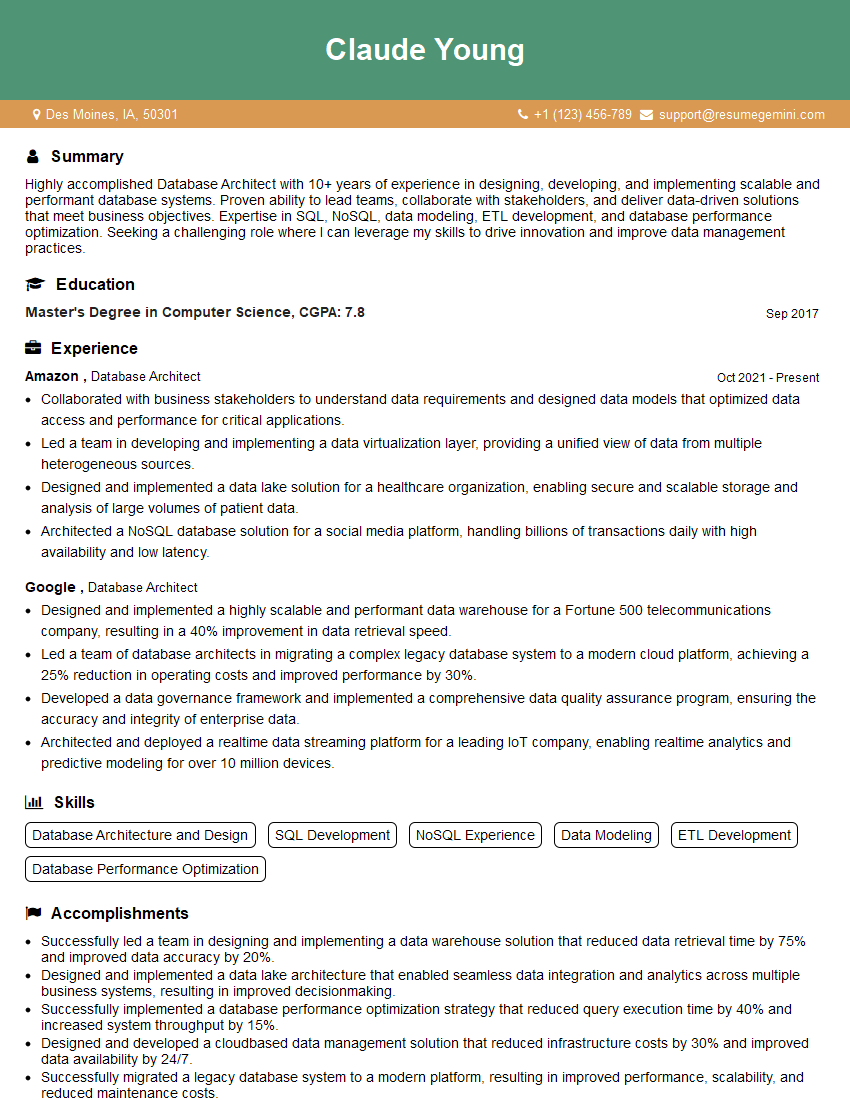

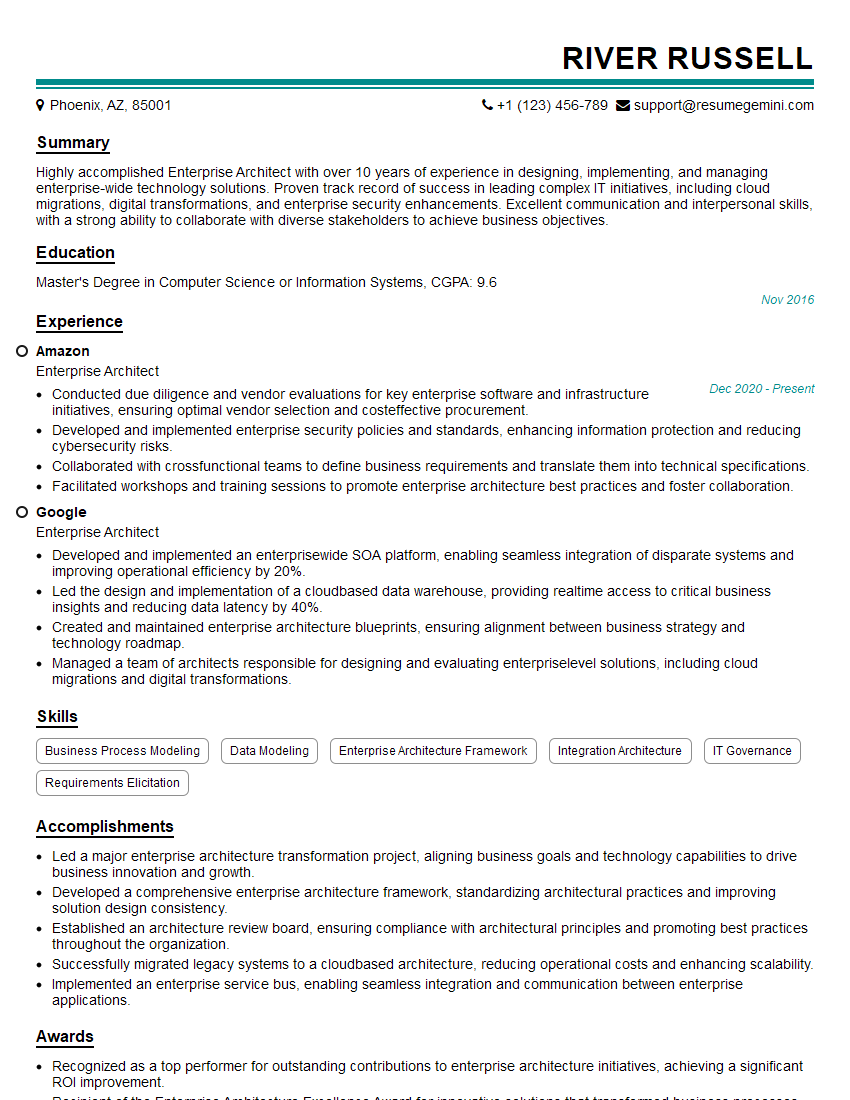

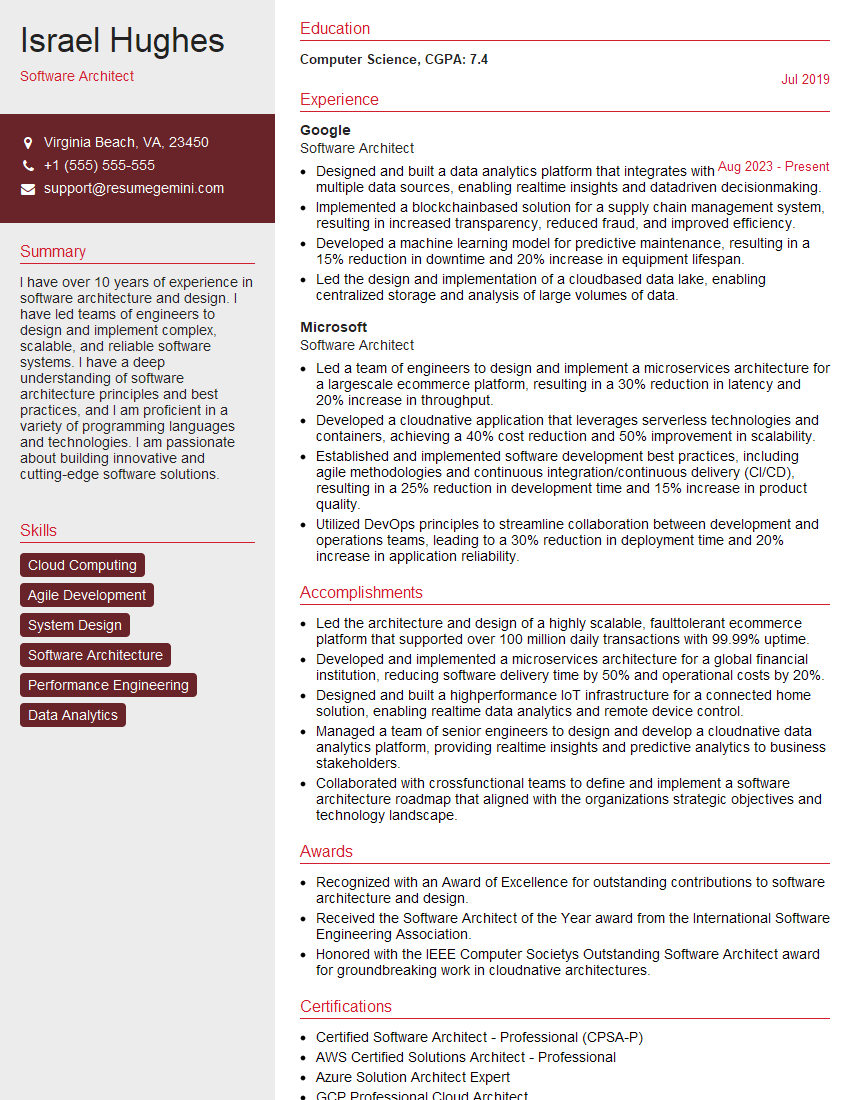

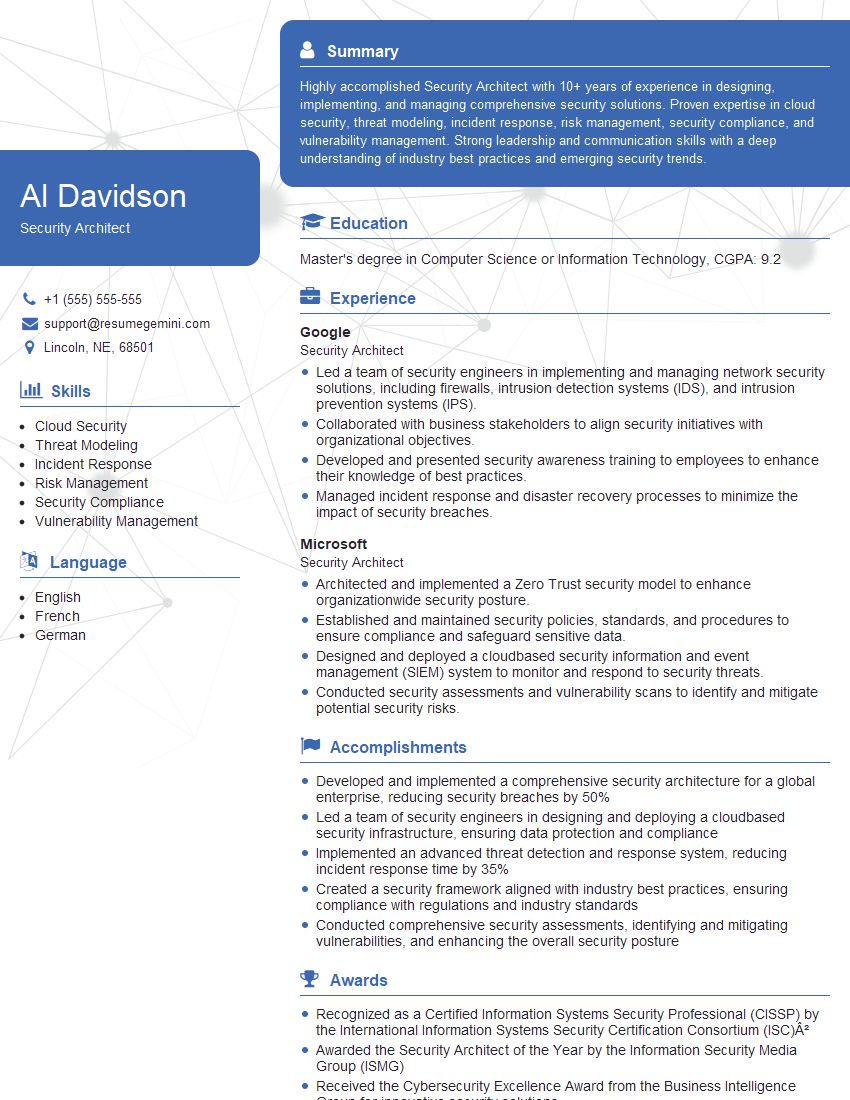

Mastering System Design and Optimization is crucial for career advancement in the tech industry, opening doors to leadership roles and higher compensation. A strong, ATS-friendly resume is your first step in showcasing these skills to potential employers. To make your resume stand out, leverage ResumeGemini, a trusted resource for creating professional and impactful resumes. ResumeGemini provides examples of resumes tailored specifically to System Design and Optimization roles, helping you present your qualifications effectively.
