Every successful interview starts with knowing what to expect. In this blog, we’ll take you through the top Tables and Data Extraction interview questions, breaking them down with expert tips to help you deliver impactful answers. Step into your next interview fully prepared and ready to succeed.

Questions Asked in Tables and Data Extraction Interview

Q 1. Explain the difference between structured, semi-structured, and unstructured data.

Data comes in three main forms: structured, semi-structured, and unstructured. Think of it like organizing your closet. Structured data is like neatly folded clothes in labeled drawers – it’s organized, consistent, and easily searchable. Semi-structured data is like a pile of clothes sorted by color but not neatly folded – it has some organization but lacks the rigid structure of the folded clothes. Unstructured data is like a messy pile of clothes – it’s disorganized and difficult to search.

- Structured Data: Resides in a predefined format, typically relational databases. Examples include data in SQL databases with tables and columns, CSV files, and spreadsheets. Each data point has a specific place and type.

- Semi-structured Data: Doesn’t conform to a rigid table structure but contains tags or markers that separate data elements. Examples include JSON and XML files, log files, and NoSQL databases. The data is organized, but not as strictly as in relational databases.

- Unstructured Data: Lacks a predefined format or organization. Examples include text documents, images, audio files, and videos. It’s difficult to query or analyze without significant pre-processing.

In a real-world scenario, customer information in a CRM system is structured, whereas social media posts about your product are unstructured.

Q 2. What are some common data extraction techniques?

Data extraction techniques vary depending on the data source and its format. Some common methods include:

- Screen Scraping: Extracting data from websites using tools that mimic browser behavior. This is useful for gathering information from public websites but can be fragile and prone to errors if the website structure changes.

- API Integration: Using application programming interfaces (APIs) provided by data sources. This is the most reliable and efficient method when APIs are available, as it’s designed for data access.

- Database Queries (SQL): Using SQL commands to retrieve data from relational databases. This is crucial for working with structured data in databases.

- Parsing techniques (e.g., regular expressions): Using pattern matching (regular expressions or regex) to extract specific data from text files or semi-structured data like log files. This is powerful for handling complex text formats.

- Optical Character Recognition (OCR): Converting images of text (like scanned documents) into machine-readable text. This is essential for digitizing paper-based data.

Choosing the right technique depends on the data source and the desired outcome. For instance, scraping might be suitable for a one-time data extraction from a website, while API integration is preferable for ongoing data synchronization.
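To make the regex-based parsing technique above concrete, here is a minimal sketch that pulls named fields out of a log line. The log layout and the names `LOG_PATTERN` and `parse_log_line` are assumptions made for illustration; a real log format would need its own pattern.

```python
import re

# Hypothetical log layout: "<date> <time> <LEVEL> <message>"
LOG_PATTERN = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2}) "
    r"(?P<time>\d{2}:\d{2}:\d{2}) "
    r"(?P<level>[A-Z]+) "
    r"(?P<message>.*)$"
)

def parse_log_line(line):
    """Return the named fields as a dict, or None if the line does not match."""
    match = LOG_PATTERN.match(line.strip())
    return match.groupdict() if match else None

record = parse_log_line("2024-01-15 08:30:02 ERROR Connection timed out")
print(record)
```

Returning `None` for non-matching lines lets the caller count or log them instead of silently dropping them.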

Q 3. Describe your experience with ETL processes.

ETL (Extract, Transform, Load) is a crucial data integration process. I have extensive experience with the entire ETL lifecycle, from requirements gathering and design to implementation and testing. My experience involves designing and implementing ETL pipelines using tools like Informatica PowerCenter and cloud-based ETL services (AWS Glue, Azure Data Factory), with Apache Kafka handling streaming ingestion where near-real-time data is needed.

In a recent project, I built an ETL pipeline to consolidate customer data from multiple disparate systems (CRM, marketing automation, and e-commerce platforms). This involved extracting data from various sources, transforming it to ensure data consistency (e.g., standardizing addresses, handling null values), and loading it into a data warehouse for reporting and analysis. I focused on improving data quality and efficiency by implementing error handling and logging mechanisms, along with performance optimization techniques.

Q 4. How do you handle data cleansing and transformation?

Data cleansing and transformation are vital steps in ETL and data preparation. Cleansing involves identifying and correcting inaccuracies and inconsistencies, while transformation involves converting data into a usable format.

My approach involves several steps: First, I analyze the data to identify issues like missing values, duplicates, and outliers. Then, I apply appropriate techniques, such as imputation for missing values (using mean, median, or mode), deduplication techniques, and outlier treatment (removal or transformation). Transformation includes data type conversion, data normalization, and aggregation. I often use scripting languages like Python with libraries like Pandas for these tasks, leveraging its capabilities for data manipulation. For example, I might use regular expressions to standardize date formats or replace inconsistent spellings in a dataset.
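The steps described above (deduplication, imputation, and date standardization) can be sketched with the standard library alone. The sample rows, field names, and the choice of median imputation are assumptions for illustration; in practice the same logic is often written with pandas.

```python
import re
from statistics import median

# Hypothetical raw records with mixed date formats, a missing amount, and a duplicate.
raw_rows = [
    {"id": 1, "order_date": "2024-03-05", "amount": 120.0},
    {"id": 2, "order_date": "05/03/2024", "amount": None},
    {"id": 3, "order_date": "2024-03-07", "amount": 80.0},
    {"id": 3, "order_date": "2024-03-07", "amount": 80.0},  # duplicate
]

def standardize_date(value):
    """Convert DD/MM/YYYY to ISO YYYY-MM-DD; leave other values unchanged."""
    match = re.fullmatch(r"(\d{2})/(\d{2})/(\d{4})", value)
    if match:
        day, month, year = match.groups()
        return f"{year}-{month}-{day}"
    return value

def clean(rows):
    # Deduplicate on id, keeping the first occurrence (copies avoid mutating input).
    seen, unique = set(), []
    for row in rows:
        if row["id"] not in seen:
            seen.add(row["id"])
            unique.append(dict(row))
    # Impute missing amounts with the median of the known amounts.
    fill = median(r["amount"] for r in unique if r["amount"] is not None)
    for row in unique:
        row["order_date"] = standardize_date(row["order_date"])
        if row["amount"] is None:
            row["amount"] = fill
    return unique

cleaned = clean(raw_rows)
```

Whether to impute, drop, or flag a missing value depends on how the column is used downstream, so the median here is only one reasonable default.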

Q 5. What SQL commands are most useful for data extraction?

Several SQL commands are essential for data extraction. Here are some of the most useful ones:

- `SELECT`: The fundamental command for retrieving data from one or more tables. `SELECT * FROM Customers;` retrieves all columns from the Customers table.

- `WHERE`: Filters the data based on specific conditions. `SELECT * FROM Customers WHERE Country = 'USA';` retrieves only customers from the USA.

- `JOIN`: Combines data from multiple tables based on a related column. `SELECT * FROM Customers INNER JOIN Orders ON Customers.CustomerID = Orders.CustomerID;` combines customer and order data.

- `GROUP BY` and `HAVING`: Used for aggregation and for filtering aggregated data. `SELECT CustomerID, COUNT(*) FROM Orders GROUP BY CustomerID HAVING COUNT(*) > 5;` finds customers with more than 5 orders.

- `ORDER BY`: Sorts the retrieved data. `SELECT * FROM Customers ORDER BY LastName;` sorts customers by last name.

- `LIMIT` (or `TOP` in some systems): Restricts the number of rows returned. `SELECT * FROM Customers LIMIT 10;` returns at most 10 rows; pair it with `ORDER BY` when "top 10" needs to mean something specific, since the row order is otherwise not guaranteed.

The combination of these commands allows for flexible and powerful data extraction from relational databases.
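As a rough illustration of how these commands combine, the sketch below runs them against an in-memory SQLite database from Python. The table contents are made up for the example, and SQLite stands in for whichever database the interview scenario involves.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, LastName TEXT, Country TEXT);
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER);
    INSERT INTO Customers VALUES (1, 'Smith', 'USA'), (2, 'Garcia', 'Mexico'), (3, 'Adams', 'USA');
    INSERT INTO Orders VALUES (10, 1), (11, 1), (12, 3);
""")

# WHERE + ORDER BY + LIMIT: US customers sorted by last name, at most 10 rows.
us_customers = conn.execute(
    "SELECT CustomerID, LastName FROM Customers "
    "WHERE Country = ? ORDER BY LastName LIMIT 10",
    ("USA",),
).fetchall()

# JOIN + GROUP BY + HAVING: customers with more than one order.
repeat_buyers = conn.execute(
    "SELECT c.LastName, COUNT(*) AS order_count "
    "FROM Customers AS c INNER JOIN Orders AS o ON c.CustomerID = o.CustomerID "
    "GROUP BY c.CustomerID, c.LastName HAVING COUNT(*) > 1"
).fetchall()

print(us_customers)
print(repeat_buyers)
```

Passing `"USA"` as a bound parameter rather than formatting it into the SQL string is a small habit that also guards against SQL injection.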

Q 6. Explain your experience with different database systems (e.g., MySQL, PostgreSQL, Oracle).

I have experience with several database systems, including MySQL, PostgreSQL, and Oracle. My expertise spans database design, query optimization, and data management.

MySQL is a popular open-source relational database, well-suited for web applications. I’ve used it extensively in projects requiring high performance and scalability. PostgreSQL, another robust open-source option, offers advanced features like JSON support and more robust data types, which I’ve leveraged in projects demanding more complex data modeling. Oracle is a commercial, enterprise-grade database known for its high availability and performance capabilities; I’ve worked with it in large-scale enterprise data warehousing projects. In each case, my focus has been on efficient database design to ensure data integrity and optimal query performance.

Q 7. How do you ensure data quality during extraction?

Ensuring data quality during extraction is critical. My approach is multi-faceted:

- Data Validation: Implementing checks at each stage of the extraction process. This includes validating data types, checking for null values, and comparing extracted data against expected values (referencing a data dictionary or schema).

- Source Data Assessment: Understanding the quality of the source data before extraction is crucial. This involves analyzing data profiling reports and identifying potential issues in the source data.

- Error Handling and Logging: Implementing mechanisms to handle errors during extraction gracefully and to log all errors and exceptions. This allows for quick identification and resolution of data quality issues.

- Data Transformation Rules: Defining and applying data transformation rules to standardize data formats and values. For example, using regular expressions to standardize addresses or data type conversions.

- Regular Data Quality Monitoring: Setting up monitoring processes to continuously track data quality metrics (completeness, accuracy, consistency) over time.

Proactive data quality checks at each step, coupled with detailed logging and monitoring, help identify and address issues early in the process, preventing inaccurate or incomplete data from impacting downstream analysis.
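The validation and logging points above can be sketched as a small schema check. The `SCHEMA` data dictionary, the field names, and the helper names are hypothetical; the idea is only to show rows being checked for type and nullability, with rejects logged rather than silently dropped.

```python
import logging

logging.basicConfig(level=logging.WARNING)
logger = logging.getLogger("extraction")

# Hypothetical data dictionary: field name -> (expected type, nullable?)
SCHEMA = {
    "customer_id": (int, False),
    "email": (str, False),
    "age": (int, True),
}

def validate_row(row):
    """Return a list of human-readable problems found in one extracted row."""
    problems = []
    for field, (expected_type, nullable) in SCHEMA.items():
        value = row.get(field)
        if value is None:
            if not nullable:
                problems.append(f"{field}: missing required value")
        elif not isinstance(value, expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
    return problems

def split_valid_invalid(rows):
    """Separate clean rows from rejected ones, logging the reason for each reject."""
    valid, rejected = [], []
    for row in rows:
        problems = validate_row(row)
        if problems:
            logger.warning("Rejected row %r: %s", row, "; ".join(problems))
            rejected.append(row)
        else:
            valid.append(row)
    return valid, rejected

valid_rows, rejected_rows = split_valid_invalid([
    {"customer_id": 1, "email": "a@example.com", "age": 34},
    {"customer_id": "2", "email": None, "age": None},
])
```

Keeping rejected rows in a separate list (or table) makes it possible to report a reject rate as one of the monitored quality metrics.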

Q 8. What are some common challenges you face during data extraction?

Data extraction, while seemingly straightforward, often presents numerous challenges. These can range from simple issues like inconsistent data formats to complex problems involving data security and scalability. Some common hurdles include:

- Data Inconsistency: Different data sources often employ varying formats, structures, and naming conventions. Imagine trying to combine sales data from two different departments – one might use ‘SalesAmount’ while the other uses ‘Revenue’. This requires meticulous cleaning and transformation.

- Data Quality Issues: Missing values, duplicates, and erroneous data are prevalent. For instance, a dataset might contain addresses with incomplete zip codes or sales figures with obvious typos. Handling these requires robust data validation and cleaning procedures.

- Data Volume and Velocity: Extracting from massive datasets can be incredibly time-consuming and resource-intensive. Dealing with high-velocity streams of data (think real-time sensor readings) demands efficient processing and storage solutions.

- Data Source Complexity: Extracting data from diverse sources, such as databases, APIs, web pages, and legacy systems, requires specialized knowledge and tools. Each source might demand a unique approach.

- Access Restrictions: Permissions and security protocols can hinder data access. Navigating these security measures often involves working closely with database administrators and adhering to strict compliance guidelines.

Overcoming these challenges often necessitates a combination of robust scripting skills, familiarity with various data formats and tools, and a methodical approach to data cleansing and transformation.

Q 9. How do you handle large datasets during extraction?

Handling large datasets during extraction involves strategic planning and the use of efficient techniques. Simply loading everything into memory isn’t feasible for datasets exceeding available RAM. Here’s a breakdown of my approach:

- Chunking: Instead of loading the entire dataset at once, I process it in smaller, manageable chunks. This allows for efficient memory management and reduces the risk of system crashes.

- Parallel Processing: Leveraging multi-core processors through techniques like multiprocessing or multithreading significantly accelerates processing time. This involves splitting the task across multiple cores, working on different chunks concurrently.

- Database Interactions: For database sources, I use optimized SQL queries with appropriate indexing and filtering to retrieve only the necessary data. I avoid `SELECT *` and always specify the exact columns needed.

- Streaming: For very large or continuously updated data sources, streaming techniques are crucial. This approach processes data as it arrives, without the need to store everything in memory. Platforms like Apache Kafka and frameworks like Apache Spark are excellent tools for this.

- Data Compression: Compressing data before or during extraction minimizes storage space and improves transfer speeds. Popular compression formats include gzip and bz2.

Imagine extracting millions of customer records. Chunking the data into 100,000-record segments and processing them in parallel using multiple cores would greatly reduce extraction time compared to loading all the data at once.
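A minimal sketch of the chunking idea, using only the standard library: the generator below yields fixed-size batches of rows so the whole file is never held in memory. The in-memory sample and the chunk size of 3 are assumptions chosen to keep the example small.

```python
import csv
import io
from itertools import islice

def read_in_chunks(file_obj, chunk_size):
    """Yield lists of at most chunk_size rows so the full file never sits in memory."""
    reader = csv.DictReader(file_obj)
    while True:
        chunk = list(islice(reader, chunk_size))
        if not chunk:
            break
        yield chunk

# Small in-memory stand-in for a large CSV export (hypothetical data).
sample = io.StringIO("id,amount\n" + "\n".join(f"{i},{i * 10}" for i in range(1, 8)))

chunk_sizes = []
total = 0
for chunk in read_in_chunks(sample, chunk_size=3):
    chunk_sizes.append(len(chunk))
    total += sum(int(row["amount"]) for row in chunk)

print(chunk_sizes, total)
```

Each yielded chunk is independent, so the same generator could feed a worker pool such as `concurrent.futures.ProcessPoolExecutor` when the per-chunk work is CPU-bound.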

Q 10. Describe your experience with data profiling.

Data profiling is a critical step in understanding the data’s characteristics before extraction and transformation. It involves analyzing data quality, identifying patterns, and assessing the overall data structure. My experience includes:

- Data Quality Assessment: I identify missing values, outliers, inconsistencies, and duplicates. For example, detecting inconsistent date formats or duplicate customer IDs. This informs data cleansing strategies.

- Data Type Identification: I determine the data type of each attribute (e.g., integer, string, date). This is crucial for proper data transformation and database loading.

- Distribution Analysis: I examine the distribution of values within each attribute to understand data variability and identify potential biases. Histograms and frequency tables are useful tools here.

- Data Range and Cardinality: I determine the minimum and maximum values and the number of unique values for each attribute. This helps in database schema design and identifying potential constraints.

- Pattern Recognition: I look for patterns and correlations within the data, which can provide insights into relationships between attributes and facilitate decision-making.

In a recent project, data profiling revealed a high percentage of missing values in a crucial column, which necessitated imputation strategies and careful consideration during the data analysis phase.
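The profiling checks listed above (missing values, cardinality, range, and distribution) can be approximated per column with a few lines of Python. The function name `profile_column` and the sample `ages` column are illustrative assumptions, not part of any specific profiling tool.

```python
from collections import Counter

def profile_column(values):
    """Summarize one column: missing count, cardinality, range, and most common value."""
    present = [v for v in values if v is not None and v != ""]
    counts = Counter(present)
    return {
        "rows": len(values),
        "missing": len(values) - len(present),
        "distinct": len(counts),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "most_common": counts.most_common(1)[0][0] if counts else None,
    }

ages = [34, 41, None, 34, 29, None]  # hypothetical column
summary = profile_column(ages)
print(summary)
```

A missing share of one third, as in this sample, is the kind of finding that would trigger the imputation discussion mentioned above.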

Q 11. How do you optimize data extraction queries for performance?

Optimizing data extraction queries for performance is essential for efficiency, especially with large datasets. My approach focuses on several key strategies:

- Using appropriate indexes: Database indexes significantly speed up data retrieval. Ensuring indexes exist on frequently queried columns is crucial. This is analogous to having a well-organized library catalog.

- Filtering data early: Applying filters (`WHERE` clauses in SQL) early in the query reduces the amount of data processed. This avoids unnecessary computations.

- Avoiding `SELECT *`: Always explicitly list the columns needed. Selecting all columns (`SELECT *`) is inefficient and increases processing time.

- Using appropriate join types: Choosing the correct join type (INNER JOIN, LEFT JOIN, etc.) is critical for performance. Unnecessary joins can significantly slow down extraction.

- Optimizing database connections: Efficiently managing database connections reduces overhead. Using connection pooling and proper connection management is important.

- Batch processing: Processing data in batches rather than individual records reduces the number of database interactions, leading to significant performance gains.

For example, instead of SELECT * FROM customers WHERE country = 'USA', a better optimized query would be SELECT customerID, name, email FROM customers WHERE country = 'USA', assuming only these columns are needed.
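One way to check whether an index actually helps is to inspect the query plan before and after creating it. The sketch below does this with SQLite's `EXPLAIN QUERY PLAN`; the table contents and the index name `idx_customers_country` are assumptions, and other databases expose plans through their own commands.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (customerID INTEGER PRIMARY KEY, name TEXT, email TEXT, country TEXT)"
)
conn.executemany(
    "INSERT INTO customers (name, email, country) VALUES (?, ?, ?)",
    [(f"user{i}", f"user{i}@example.com", "USA" if i % 2 else "Canada") for i in range(100)],
)

QUERY = "SELECT customerID, name, email FROM customers WHERE country = 'USA'"

def query_plan(connection, sql):
    """Return SQLite's plan description for a query as one string."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    # The last column of each plan row holds the human-readable detail.
    return " | ".join(row[-1] for row in rows)

plan_before = query_plan(conn, QUERY)  # no index on country yet, so a table scan
conn.execute("CREATE INDEX idx_customers_country ON customers (country)")
plan_after = query_plan(conn, QUERY)   # the planner can now use the index

print(plan_before)
print(plan_after)
```

The exact plan wording varies between SQLite versions, so it is safer to look for the index name in the output than to compare the full text.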

Q 12. Explain your experience with various data formats (e.g., CSV, JSON, XML).

I have extensive experience working with diverse data formats, each requiring specific handling techniques:

- CSV (Comma Separated Values): A simple and widely used format, easily parsed using libraries in most programming languages. Challenges can arise with inconsistent delimiters or escape characters, requiring careful handling.

- JSON (JavaScript Object Notation): A lightweight, human-readable format, ideal for web applications and APIs. JSON libraries provide efficient parsing and manipulation. Handling nested structures requires careful consideration.

- XML (Extensible Markup Language): A more complex, hierarchical format used for data interchange. XML parsing often involves navigating through nested elements and attributes, potentially requiring DOM (Document Object Model) or SAX (Simple API for XML) parsing techniques.

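- Parquet and Avro: Binary formats designed for large-scale data processing. Parquet is a columnar storage format that offers strong compression and fast reads when only some columns are needed, which makes it well suited to analytics. Avro is a row-oriented format with an embedded schema, better suited to write-heavy and streaming workloads. Both are more compact and faster to parse than text formats like CSV.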

My experience includes using Python’s csv, json, and xml modules, along with libraries like pandas for efficient data manipulation across these formats. The choice of parsing method depends on the complexity of the data structure and the desired level of performance.
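As a small illustration of the differences described above, the sketch below reads the same hypothetical customer record from CSV, JSON, and XML with Python's standard modules. The record itself is invented; note the quoted comma in the CSV name field, which is the kind of detail that breaks naive string splitting.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# The same hypothetical customer record in three formats.
csv_text = 'id,name,city\n1,"Doe, Jane",Berlin\n'
json_text = '{"id": 1, "name": "Doe, Jane", "address": {"city": "Berlin"}}'
xml_text = (
    '<customer id="1"><name>Doe, Jane</name>'
    "<address><city>Berlin</city></address></customer>"
)

# CSV: the csv module handles the quoted comma inside the name field.
csv_row = next(csv.DictReader(io.StringIO(csv_text)))

# JSON: nested objects become nested dicts.
json_obj = json.loads(json_text)

# XML: attributes and nested elements are reached separately.
root = ET.fromstring(xml_text)

cities = (csv_row["city"], json_obj["address"]["city"], root.findtext("address/city"))
print(cities)
```

`ElementTree` loads the whole document into memory; for very large XML files an incremental approach such as `ET.iterparse` is usually the safer choice.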

Q 13. How do you handle data security and privacy during data extraction?

Data security and privacy are paramount during data extraction. My approach incorporates several key measures:

- Access Control: I adhere strictly to least privilege principles, requesting only the necessary access permissions to complete the extraction task. This minimizes the risk of unauthorized data access.

- Data Encryption: Both data at rest and data in transit should be encrypted. This ensures confidentiality even if data is intercepted.

- Secure Connections: I utilize secure protocols like HTTPS and SSH for communication with data sources to prevent eavesdropping.

- Data Masking and Anonymization: Sensitive data should be masked or anonymized before extraction or storage to protect privacy. Techniques include data obfuscation and replacing identifying information with pseudonyms.

- Compliance Adherence: I am familiar with relevant data privacy regulations (e.g., GDPR, CCPA) and ensure that all extraction processes adhere to these regulations.

- Logging and Auditing: Detailed logs of all data extraction activities are maintained for auditing and security analysis.

For instance, before extracting customer data, I would ensure all personally identifiable information (PII) is appropriately masked or anonymized before storing or transferring it. I would also implement encryption throughout the process and strictly adhere to the company’s security policies and relevant regulations.
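A minimal sketch of masking and pseudonymization, assuming a keyed hash (HMAC-SHA256) is acceptable for the use case. The key, field names, and helper names are hypothetical, and whether this level of protection satisfies a given regulation is a question for the organization's privacy and security teams.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value):
    """Replace an identifier with a keyed hash so records stay joinable but not readable."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]

def mask_email(email):
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

record = {"customer_id": "C-1001", "email": "jane.doe@example.com", "total": 59.90}
safe_record = {
    "customer_id": pseudonymize(record["customer_id"]),
    "email": mask_email(record["email"]),
    "total": record["total"],
}
print(safe_record)
```

Because the same input always maps to the same pseudonym, records from different extracts can still be joined without exposing the original identifier.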

Q 14. What is your experience with data integration tools?

My experience with data integration tools encompasses a range of technologies, each with its strengths and weaknesses. This includes:

- ETL (Extract, Transform, Load) tools: I’m proficient with tools like Informatica PowerCenter and Talend Open Studio, which offer robust capabilities for extracting, transforming, and loading data from various sources into target systems. I have also used Apache Kafka, which is a streaming platform rather than an ETL tool, to feed pipelines with real-time data.

- Cloud-based data integration platforms: I have experience with cloud services like AWS Glue, Azure Data Factory, and Google Cloud Dataflow. These provide scalable and cost-effective solutions for large-scale data integration tasks.

- Business intelligence tools: My work includes experience with tools like Tableau, Power BI, and Qlik Sense, which typically consume data that has been extracted and integrated from multiple sources.

- Scripting languages for data integration: I’m proficient in Python and its related libraries (pandas, SQLAlchemy) for custom data integration scripts and pipeline development. This provides flexibility and control over the entire process.

The choice of tool depends heavily on the complexity of the integration task, the volume and velocity of the data, and the organization’s existing infrastructure. For large-scale, complex integrations, ETL tools are often preferred; for simpler tasks, scripting languages might suffice. Cloud-based solutions provide scalability and elasticity for ever-changing data volumes.

Q 15. Describe your experience with scripting languages (e.g., Python, R) for data extraction.

I have extensive experience using scripting languages like Python and R for data extraction. Python, with its rich ecosystem of libraries such as Beautiful Soup, Scrapy, and requests, is my go-to for web scraping and API interaction. R, with packages like rvest and httr, offers powerful tools for data manipulation and analysis post-extraction. My experience includes everything from simple data retrieval to complex web scraping projects involving dynamic websites and handling of pagination. For example, I once used Python and Scrapy to extract product information from a large e-commerce website, processing thousands of pages and handling different product categories efficiently. This involved writing custom scraping rules, handling pagination, and implementing robust error handling. In another project, I utilized R’s data manipulation capabilities to clean and transform extracted data from APIs into a format suitable for analysis and reporting.

Q 16. How do you test the accuracy of extracted data?

Testing the accuracy of extracted data is crucial. My approach involves a multi-step process. First, I perform visual inspection of a sample of the extracted data to check for obvious errors. Then, I employ data validation techniques. This can involve comparing extracted data against known values from a reliable source (e.g., a smaller, manually verified dataset). If I’m extracting numerical data, I can check for range validity, identifying any impossible or unlikely values. I also use checksums or hash functions to verify data integrity during transfer and storage. Finally, I employ statistical analysis, looking for anomalies and inconsistencies in the data. For instance, if I’m extracting sales data, I might compare the extracted totals to known overall sales figures. This comprehensive approach helps to ensure data quality and reliability.
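Three of the checks mentioned above (range validity, reconciliation against a known total, and checksums) can be sketched as follows. The valid range, tolerance, and sample figures are assumptions; real thresholds would come from the business context.

```python
import hashlib

def file_checksum(data):
    """SHA-256 digest of a payload, used to confirm it was not altered in transit."""
    return hashlib.sha256(data).hexdigest()

def check_extract(rows, expected_total, valid_range=(0, 10_000)):
    """Return a list of issues: out-of-range amounts and a mismatch against a known total."""
    low, high = valid_range
    issues = [
        f"row {i}: amount {r['amount']} out of range"
        for i, r in enumerate(rows)
        if not low <= r["amount"] <= high
    ]
    extracted_total = sum(r["amount"] for r in rows)
    if abs(extracted_total - expected_total) > 0.01:
        issues.append(f"total {extracted_total} != expected {expected_total}")
    return issues

payload = b"id,amount\n1,100\n2,250\n"
rows = [{"amount": 100}, {"amount": 250}]

issues_ok = check_extract(rows, expected_total=350)
issues_bad = check_extract(rows + [{"amount": -5}], expected_total=350)
same_bytes = file_checksum(payload) == file_checksum(bytes(payload))
```

Returning a list of issues instead of raising on the first one gives a complete picture of what is wrong with an extract in a single run.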

Q 17. How do you document your data extraction processes?

Thorough documentation of data extraction processes is paramount for reproducibility and maintainability. My documentation strategy includes detailed descriptions of data sources, extraction methods, transformation steps, and error handling procedures. I use a combination of text-based documentation (e.g., Markdown files) and code comments to explain my scripts. The documentation clearly outlines the assumptions made, potential limitations, and any dependencies. I also maintain a version control system (like Git) to track changes to the extraction scripts and documentation. For example, my documentation for a recent project included a data dictionary, specifying the meaning and data type of each extracted field, along with a flowchart illustrating the data extraction workflow. This meticulous approach ensures clarity and allows others (or myself in the future) to understand and maintain the extraction process easily.

Q 18. Explain your experience with different data extraction methods (e.g., APIs, web scraping).

I have extensive experience with diverse data extraction methods. APIs are a preferred method when available, as they provide a structured and efficient way to access data. I’ve worked with various APIs, including RESTful APIs, SOAP APIs, and GraphQL APIs, using appropriate libraries in Python or R to interact with them. For instance, I used the Twitter API to extract tweet data for sentiment analysis. When APIs are unavailable, I resort to web scraping, employing libraries like Beautiful Soup and Scrapy (in Python) or rvest (in R). I’m proficient at handling different website structures, including dynamic content loaded via JavaScript, using browser automation tools like Selenium or Playwright. I also have experience with database extraction using SQL queries and various database connectors. Each method has its strengths and weaknesses, and the choice depends on the specific data source and context.

Q 19. What is your experience with data warehousing?

My experience with data warehousing involves designing and implementing data warehousing solutions to store and manage large volumes of extracted data. I understand the importance of data modeling, ETL (Extract, Transform, Load) processes, and data governance. I’m familiar with various data warehousing architectures, including star schemas and snowflake schemas. I have used tools like SQL Server Integration Services (SSIS) and Apache Spark for ETL processes. In a previous role, I was involved in building a data warehouse for a telecommunications company, where I designed the data model, implemented the ETL processes, and ensured data quality. My experience also extends to cloud-based data warehouses such as Snowflake and Google BigQuery.

Q 20. How do you manage data versioning during extraction?

Data versioning is crucial to maintain the history and traceability of extracted data. I leverage version control systems like Git to track changes in extraction scripts, which indirectly manages data versions. Each commit represents a snapshot of the extraction process at a particular point in time. Furthermore, I incorporate timestamps and version numbers into the extracted data itself. This allows me to reconstruct past versions if needed and trace changes. If I’m dealing with very large datasets, I might use incremental extraction and update mechanisms, only extracting and updating changes since the last run, improving efficiency. This way, each version of the data is associated with a specific version of the script that generated it, allowing me to reproduce and compare various extractions over time.
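Incremental extraction with a version tag can be sketched using a "watermark": the timestamp of the newest row seen in the previous run. The table layout, the `updated_at` column, and the `extract_version` label are assumptions for illustration.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT);
    INSERT INTO orders VALUES
        (1, 10.0, '2024-01-01T09:00:00'),
        (2, 20.0, '2024-01-02T09:00:00'),
        (3, 30.0, '2024-01-03T09:00:00');
""")

def extract_since(conn, watermark, extract_version):
    """Fetch rows changed after the watermark and tag each with the extract version."""
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    tagged = [
        {"id": r[0], "amount": r[1], "updated_at": r[2], "extract_version": extract_version}
        for r in rows
    ]
    # The newest timestamp seen becomes the watermark for the next run.
    new_watermark = rows[-1][2] if rows else watermark
    return tagged, new_watermark

batch, watermark = extract_since(source, "2024-01-01T09:00:00", extract_version="v2")
print(batch, watermark)
```

This relies on the source maintaining a trustworthy `updated_at` value; where that is not available, change data capture or full snapshots with diffing are the usual alternatives.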

Q 21. How do you handle errors and exceptions during data extraction?

Handling errors and exceptions is a critical aspect of robust data extraction. My approach involves implementing comprehensive error handling mechanisms within my scripts. This includes using try-except blocks (in Python) or similar constructs to catch and handle potential errors, such as network issues, invalid data formats, and API rate limits. I log errors meticulously, providing detailed information about the error type, location, and time. For web scraping, I implement mechanisms to handle dynamic content and website changes gracefully, such as retries with exponential backoff and proxy rotation to prevent being blocked. For APIs, I use appropriate methods for handling rate limits and authentication errors. In addition to error handling, I implement validation checks at different stages of the process to identify and address data inconsistencies early on. This combination of proactive and reactive error management ensures data quality and the smooth operation of the extraction process.
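The retry-with-exponential-backoff pattern mentioned above can be sketched as a small wrapper. The function names, the retry limits, and the simulated `flaky_fetch` source are hypothetical; the `sleep` parameter is injectable so the example runs instantly.

```python
import logging
import time

logger = logging.getLogger("extraction")

def with_retries(func, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call func(); on a transient failure wait base_delay * 2**attempt and try again."""
    for attempt in range(max_attempts):
        try:
            return func()
        except (ConnectionError, TimeoutError) as exc:
            if attempt == max_attempts - 1:
                logger.error("Giving up after %d attempts: %s", max_attempts, exc)
                raise
            delay = base_delay * (2 ** attempt)
            logger.warning("Attempt %d failed (%s); retrying in %.1fs", attempt + 1, exc, delay)
            sleep(delay)

# Hypothetical flaky source: fails twice, then succeeds.
calls = {"count": 0}

def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network issue")
    return {"status": "ok"}

delays = []
result = with_retries(flaky_fetch, sleep=delays.append)  # record delays instead of sleeping
print(result, delays)
```

Only errors that are plausibly transient are retried here; a malformed response or an authentication failure should usually fail fast instead.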

Q 22. What is the difference between a JOIN and a UNION in SQL?

Both JOIN and UNION combine data from multiple tables, but they do so in fundamentally different ways. A JOIN is a clause that combines rows from two or more tables based on a related column between them, creating a single, wider result set. A UNION, on the other hand, is a set operator that stacks the result sets of two or more SELECT statements vertically; the statements must return the same number of columns with compatible data types. UNION removes duplicate rows from the combined result, while UNION ALL keeps them.

Think of it like this: A JOIN is like merging two spreadsheets based on a common identifier (e.g., customer ID), aligning related information. A UNION is like stacking two spreadsheets on top of each other.

Example: JOIN

Let’s say we have two tables: Customers (CustomerID, Name) and Orders (OrderID, CustomerID, OrderDate). A JOIN would allow us to retrieve customer names along with their order details:

`SELECT Customers.Name, Orders.OrderID, Orders.OrderDate FROM Customers INNER JOIN Orders ON Customers.CustomerID = Orders.CustomerID;`

Example: UNION

If we have two tables, Employees and Customers, both with columns Name and Address, a UNION would combine all names and addresses into a single result set:

`SELECT Name, Address FROM Employees UNION SELECT Name, Address FROM Customers;`

In short, use JOIN when you need to combine related data from different tables and UNION when you need to combine the results of multiple SELECT statements into a single dataset.
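To see the difference in practice, the sketch below runs both operations against a small in-memory SQLite database. The rows are invented for the example, and one person appears as both an employee and a customer so that the duplicate handling of UNION is visible.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customers (CustomerID INTEGER, Name TEXT, Address TEXT);
    CREATE TABLE Orders (OrderID INTEGER, CustomerID INTEGER, OrderDate TEXT);
    CREATE TABLE Employees (Name TEXT, Address TEXT);
    INSERT INTO Customers VALUES (1, 'Ana', '1 Main St'), (2, 'Ben', '2 Oak Ave');
    INSERT INTO Orders VALUES (100, 1, '2024-05-01'), (101, 1, '2024-05-09');
    INSERT INTO Employees VALUES ('Ana', '1 Main St'), ('Cy', '3 Elm Rd');
""")

# JOIN: widens rows by matching on CustomerID; Ben has no orders and drops out.
joined = conn.execute(
    "SELECT c.Name, o.OrderID FROM Customers c "
    "INNER JOIN Orders o ON c.CustomerID = o.CustomerID ORDER BY o.OrderID"
).fetchall()

# UNION: stacks rows and removes the duplicate ('Ana', '1 Main St').
union_rows = conn.execute(
    "SELECT Name, Address FROM Employees "
    "UNION SELECT Name, Address FROM Customers ORDER BY Name"
).fetchall()

# UNION ALL: stacks rows and keeps duplicates.
union_all_count = conn.execute(
    "SELECT COUNT(*) FROM (SELECT Name, Address FROM Employees "
    "UNION ALL SELECT Name, Address FROM Customers)"
).fetchone()[0]

print(joined, union_rows, union_all_count)
```

When duplicates are impossible or acceptable, UNION ALL is generally the cheaper choice because the database can skip the de-duplication step.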

Q 23. Explain the concept of normalization in database design.

Database normalization is a process of organizing data to reduce redundancy and improve data integrity. It involves dividing larger tables into smaller ones and defining relationships between them. The goal is to isolate data so that additions, deletions, and modifications of a field can be made in just one table and then propagated through the rest of the database via the defined relationships.

Normalization is typically achieved through a series of normal forms, each addressing specific types of redundancy. The most common are:

- First Normal Form (1NF): Eliminate repeating groups of data within a table. Each column should contain only atomic values (indivisible values).

- Second Normal Form (2NF): Be in 1NF and eliminate redundant data that depends on only part of the primary key (in tables with composite keys).

- Third Normal Form (3NF): Be in 2NF and eliminate transitive dependencies, so that every non-key column depends directly on the primary key rather than on another non-key column.

Example: Imagine a table storing customer information with multiple phone numbers. This violates 1NF because phone numbers are a repeating group. Normalizing this would involve creating a separate table for phone numbers with a foreign key linking back to the customer table.

Proper normalization significantly improves database efficiency, reducing storage space, enhancing data integrity, and making data management easier. It’s a crucial aspect of robust database design.
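The phone-number example above can be sketched as a schema, again using SQLite from Python. The table and column names are assumptions; the point is that each phone number becomes its own row, linked to the customer by a foreign key.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    -- One row per phone number instead of a repeating group in customers.
    CREATE TABLE customer_phones (
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        phone TEXT NOT NULL,
        PRIMARY KEY (customer_id, phone)
    );
    INSERT INTO customers VALUES (1, 'Ana');
    INSERT INTO customer_phones VALUES (1, '555-0100'), (1, '555-0101');
""")

phones = [row[0] for row in conn.execute(
    "SELECT phone FROM customer_phones WHERE customer_id = ? ORDER BY phone", (1,)
)]

# The foreign key rejects a phone number for a customer that does not exist.
try:
    conn.execute("INSERT INTO customer_phones VALUES (99, '555-0199')")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

print(phones, orphan_rejected)
```

The trade-off is that reading a customer with all phone numbers now needs a join, which is why analytical systems sometimes denormalize deliberately.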

Q 24. How do you identify and resolve data inconsistencies?

Identifying and resolving data inconsistencies requires a multi-step approach. It starts with understanding the potential sources of inconsistencies and then employing appropriate techniques for detection and correction.

Identification:

- Data Profiling: Analyzing data to understand its structure, content, and quality. This helps identify anomalies and inconsistencies like unexpected data types, missing values, or outliers.

- Data Validation: Setting rules and constraints to ensure data integrity. This might involve checking for valid ranges, formats, or relationships between different data points.

- Data Comparison: Comparing data across multiple sources or versions to highlight discrepancies. This is particularly useful when merging datasets or tracking data changes over time.

Resolution:

- Data Cleaning: Addressing inconsistencies by correcting errors, handling missing values (imputation or removal), and transforming data into a consistent format.

- Data Reconciliation: Resolving conflicting data entries by prioritizing reliable sources or applying business rules to determine the most accurate value.

- Data De-duplication: Identifying and removing duplicate records to maintain data accuracy and consistency.

For example, if customer addresses are stored inconsistently (e.g., some with abbreviations, some without), a data cleaning process would standardize them. Similarly, conflicting order dates from different sources would require reconciliation using a defined process to decide which date is correct.

Q 25. Describe your experience with data visualization tools.

I have extensive experience with various data visualization tools, including Tableau, Power BI, and Python libraries like Matplotlib and Seaborn. My experience encompasses data preparation, visualization creation, and report generation.

In my previous role, I used Tableau to create interactive dashboards that tracked key performance indicators (KPIs) for marketing campaigns. This involved connecting to various data sources, performing data cleaning and transformations within Tableau’s environment, and creating visualizations such as bar charts, line graphs, and maps to showcase campaign effectiveness. I leveraged Power BI to build similar reports for sales data, incorporating features like drill-downs and interactive filtering to facilitate deeper data analysis by stakeholders.

My Python experience focuses on creating customized visualizations based on specific analytical needs. For example, I used Matplotlib and Seaborn to generate publication-ready figures showcasing complex statistical analyses. The choice of tool is highly dependent on the data’s size, the complexity of the analysis, and the target audience of the visualizations.

Q 26. How do you handle data conflicts when merging datasets?

Handling data conflicts when merging datasets requires a well-defined strategy. The approach depends on the nature of the conflict and the context of the data. Common strategies include:

- Prioritization: Assigning weights or priorities to different datasets based on reliability or recency. Data from a more trusted or updated source would take precedence.

- Manual Resolution: For complex or critical conflicts, manual inspection and resolution are necessary. This might involve subject-matter experts reviewing conflicting records and making informed decisions.

- Automated Conflict Resolution Rules: Defining rules based on business logic to automatically resolve conflicts. This might involve choosing the maximum value, taking the average, or applying other relevant criteria.

- Creating a New Field: Adding a new field to indicate the presence of a conflict and the source of each conflicting value. This preserves all information and allows for further analysis or resolution later.

For instance, if two datasets contain conflicting customer addresses, a prioritization approach would choose the address from the more recently updated dataset. Complex conflicts involving multiple attributes might need manual review and decision-making.
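As a rough sketch, prioritization by recency and the conflict-tracking field can be combined in one small function. The record layout (`source`, `updated`), the `merge_records` name, and the sample values are assumptions made for this example, not a standard API.

```python
from datetime import date

def merge_records(rec_a: dict, rec_b: dict, fields: list[str]) -> dict:
    """Merge two versions of one record, preferring the more recently updated one.

    Conflicting values are preserved in a `conflicts` field, keyed by source,
    so they can be reviewed manually later.
    """
    if rec_a["updated"] >= rec_b["updated"]:
        newer, older = rec_a, rec_b
    else:
        newer, older = rec_b, rec_a
    merged = {"updated": newer["updated"], "conflicts": {}}
    for field in fields:
        new_val, old_val = newer.get(field), older.get(field)
        # Fall back to the older value when the newer record has none.
        merged[field] = new_val if new_val is not None else old_val
        if new_val is not None and old_val is not None and new_val != old_val:
            merged["conflicts"][field] = {newer["source"]: new_val, older["source"]: old_val}
    return merged

# Made-up sample records for the same customer from two systems.
crm = {"source": "crm", "updated": date(2024, 3, 1),
       "address": "12 Main Street", "phone": None}
billing = {"source": "billing", "updated": date(2024, 5, 9),
           "address": "98 Oak Avenue", "phone": "555-0100"}

merged = merge_records(crm, billing, ["address", "phone"])
```

Here the billing address wins because that record is newer, while the CRM address is kept under `conflicts` rather than discarded. A missing value on one side is treated as a gap to fill, not as a conflict.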

Q 27. What are some best practices for data extraction?

Best practices for data extraction focus on efficiency, accuracy, and data integrity. Key considerations include:

- Understanding Data Sources: Thoroughly understanding the structure, content, and limitations of the data source before initiating extraction. This includes examining data schemas, documentation, and any relevant metadata.

- Choosing Appropriate Tools: Selecting extraction tools appropriate for the data source and desired format. This might involve using SQL for relational databases, APIs for web services, or specialized ETL (Extract, Transform, Load) tools.

- Data Validation and Transformation: Implementing data validation steps to ensure data quality and consistency during extraction. Transformations might be necessary to clean, format, or convert the data into a usable format.

- Error Handling and Logging: Implementing robust error handling to manage and report extraction errors, and maintaining detailed logs for auditing and troubleshooting.

- Security and Compliance: Adhering to security protocols and compliance requirements during data extraction. This includes protecting sensitive data and complying with relevant regulations.

For example, before extracting data from a customer database, one should examine the table schema and field types to ensure that the extraction process correctly handles different data types. Robust error handling is crucial to prevent data loss or corruption during the process.
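A minimal sketch of these practices, using Python's built-in `sqlite3` and `logging` modules, might look like the following. The `customers` table, its columns, the email check, and the helper names are hypothetical and exist only to make the example self-contained.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("extract")

def table_schema(conn: sqlite3.Connection, table: str) -> dict:
    """Return {column_name: declared_type} using SQLite's PRAGMA table_info."""
    # The table name is interpolated into the statement, so pass trusted names only.
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    return {row[1]: row[2] for row in rows}

def extract_customers(conn: sqlite3.Connection):
    """Extract rows, logging and skipping any that fail a simple validation rule."""
    good, rejected = [], 0
    try:
        cursor = conn.execute("SELECT id, email, signup_date FROM customers")
        for cust_id, email, signup in cursor:
            if email is None or "@" not in email:
                log.warning("Rejected customer %s: invalid email %r", cust_id, email)
                rejected += 1
                continue
            good.append({"id": cust_id, "email": email, "signup_date": signup})
    except sqlite3.Error:
        # Record the failure with a traceback, then let the caller decide what to do.
        log.exception("Extraction failed")
        raise
    log.info("Extracted %d rows, rejected %d", len(good), rejected)
    return good, rejected

# Hypothetical in-memory source database with made-up rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT)")
conn.executemany(
    "INSERT INTO customers (email, signup_date) VALUES (?, ?)",
    [("ana@example.com", "2024-01-05"), ("not-an-email", "2024-02-11"), (None, "2024-03-02")],
)

schema = table_schema(conn, "customers")
rows, rejected = extract_customers(conn)
```

Inspecting the schema first shows which types to expect, and rejected rows are logged instead of being silently dropped, which leaves an audit trail.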

Q 28. How do you stay up-to-date with the latest data extraction technologies?

Staying up-to-date with data extraction technologies involves a multi-faceted approach:

- Following Industry Blogs and Publications: Regularly reading industry blogs, publications, and newsletters focused on data management, big data, and cloud computing. These often highlight new tools, techniques, and trends.

- Attending Conferences and Workshops: Participating in relevant conferences and workshops to learn about the latest advancements from industry experts and interact with other professionals.

- Online Courses and Certifications: Enrolling in online courses and pursuing certifications in data extraction, ETL processes, and related technologies to deepen technical skills.

- Experimenting with New Tools: Gaining hands-on experience with new tools and technologies through personal projects or contributions to open-source initiatives.

- Networking with Peers: Engaging with colleagues and experts in the field through professional networks and online communities to learn about best practices and share experiences.

By combining these methods, I can ensure my knowledge and skills remain current in the rapidly evolving landscape of data extraction.

Key Topics to Learn for Tables and Data Extraction Interview

- Relational Database Concepts: Understanding database structures, schemas, and relationships between tables is fundamental. This includes knowledge of primary and foreign keys, normalization, and different database models.

- SQL for Data Extraction: Mastering SQL queries, including `SELECT`, `FROM`, `WHERE`, `JOIN`, `GROUP BY`, and `HAVING` clauses, is crucial for efficiently extracting data. Practice writing complex queries to handle various data manipulation scenarios.

- Data Cleaning and Transformation: Learn techniques to handle missing values, outliers, and inconsistencies in extracted data. Understanding data types and their implications is essential for accurate analysis.

- Data Extraction from Various Sources: Explore extracting data not only from relational databases but also from APIs, CSV files, JSON files, and other common data formats. Understanding the nuances of each format is vital.

- Data Validation and Integrity: Learn methods to ensure the accuracy and reliability of extracted data. This includes implementing checks and validations to detect and correct errors.

- Practical Application: Consider projects involving data extraction for reporting, analysis, machine learning, or data warehousing. Thinking through the entire process, from data source to final output, will strengthen your understanding.

- Optimization Techniques: Explore methods for optimizing data extraction processes for speed and efficiency, particularly when dealing with large datasets. This might include indexing, query optimization, and parallel processing.
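To practice the SQL clauses and the indexing idea from the list above, a small self-contained exercise can be run with Python's built-in `sqlite3` module. The two tables, their columns, and the sample rows are invented for illustration. Whether the index actually speeds up a query depends on the database engine and data volume, so treat it as a pattern to experiment with rather than a guaranteed gain.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount REAL NOT NULL
    );
    -- Index on the foreign key used in the join below.
    CREATE INDEX idx_orders_customer ON orders(customer_id);
    INSERT INTO customers (id, name) VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cho');
    INSERT INTO orders (customer_id, amount)
    VALUES (1, 40.0), (1, 75.0), (2, 20.0), (3, 60.0), (3, 45.0);
""")

# WHERE filters individual rows before grouping; HAVING filters the groups.
query = """
    SELECT c.name, COUNT(o.id) AS order_count, SUM(o.amount) AS total
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    WHERE o.amount > ?
    GROUP BY c.id, c.name
    HAVING SUM(o.amount) >= ?
    ORDER BY total DESC, c.name
"""
# Parameters are bound with placeholders rather than string formatting.
results = conn.execute(query, (10.0, 100.0)).fetchall()
```

With this sample data, only customers whose orders total at least 100 remain after the `HAVING` filter, so Ben's single order of 20 is excluded.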

Next Steps

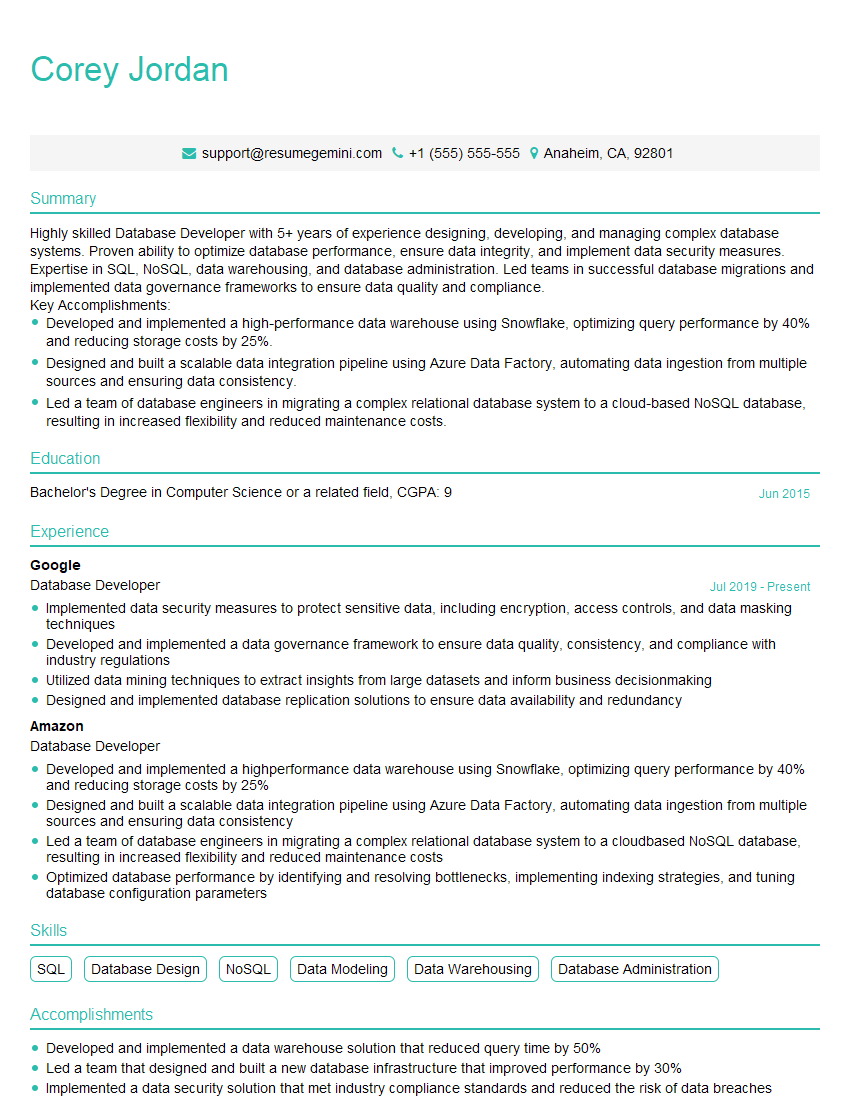

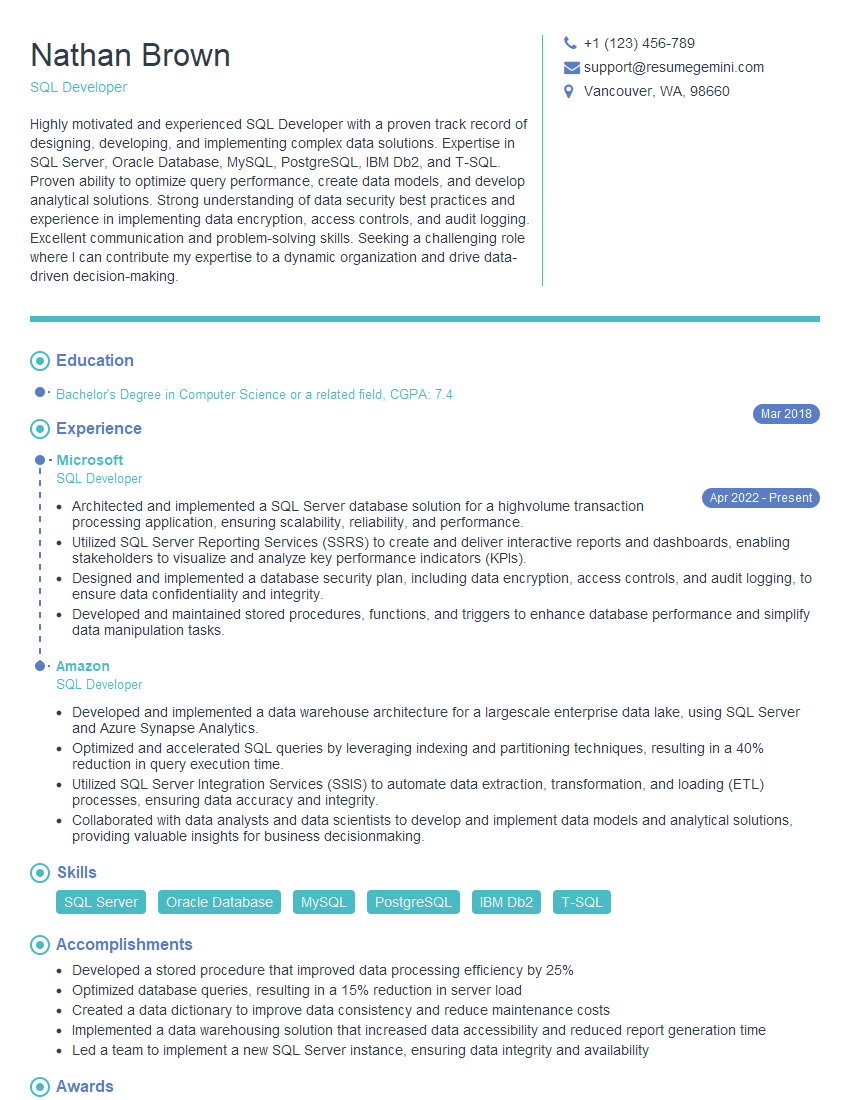

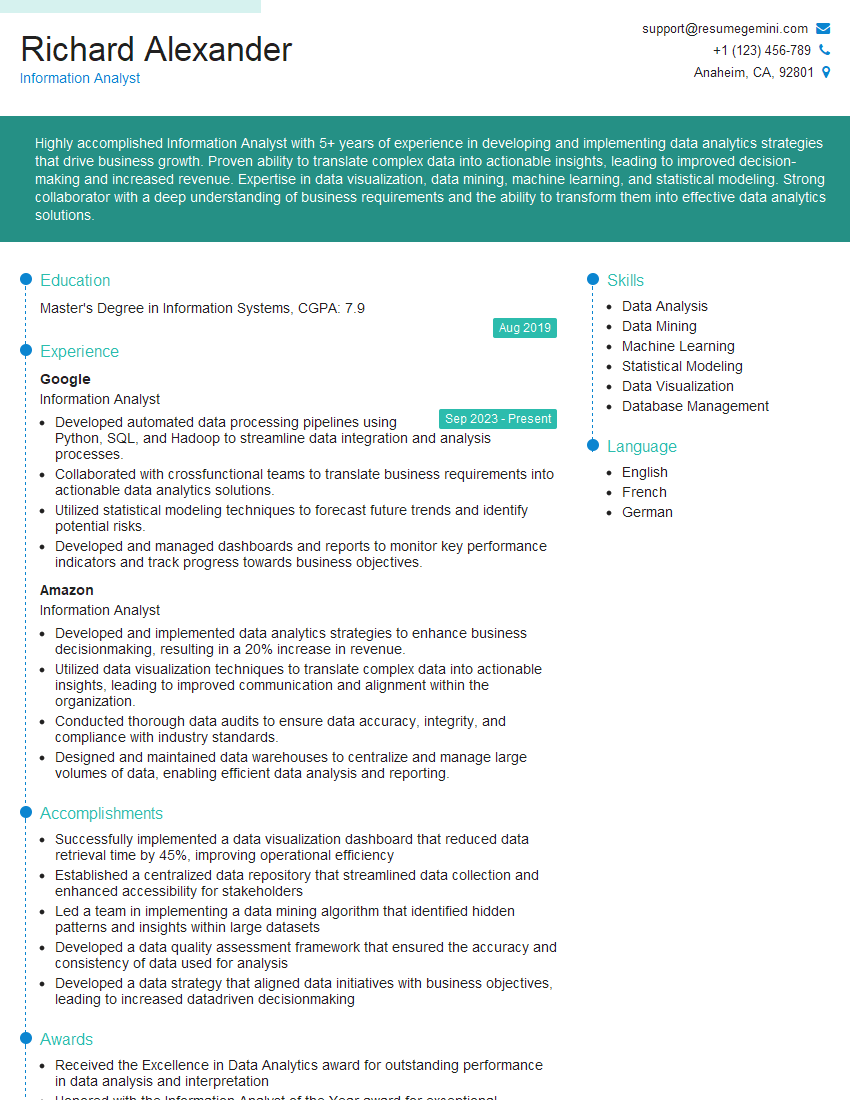

Mastering Tables and Data Extraction is vital for success in many data-driven roles, opening doors to exciting career opportunities with significant growth potential. A strong understanding of these concepts significantly enhances your problem-solving abilities and demonstrates valuable technical skills to potential employers. To maximize your job prospects, create an ATS-friendly resume that highlights your expertise. ResumeGemini is a trusted resource that can help you build a professional resume that effectively showcases your skills and experience. We provide examples of resumes tailored to Tables and Data Extraction to guide you in crafting a compelling application.
