Cracking a skill-specific interview, like one for Test Plan and Test Case Design, requires understanding the nuances of the role. In this blog, we present the questions you’re most likely to encounter, along with insights into how to answer them effectively. Let’s ensure you’re ready to make a strong impression.

Questions Asked in Test Plan and Test Case Design Interview

Q 1. Explain the difference between a test plan and a test strategy.

Think of a test strategy as the overarching plan for how you’ll approach testing a product, while a test plan is the detailed roadmap for executing that strategy. The strategy defines the ‘what’ and ‘why,’ while the plan defines the ‘how’ and ‘when’.

For example, a test strategy might state: “We will employ a risk-based testing approach, focusing on critical functionalities and prioritizing user stories with the highest business value. We’ll use a combination of automated and manual testing.”

A test plan, on the other hand, will detail the specific steps to achieve that strategy. This includes defining test environments, scheduling test activities, assigning responsibilities, outlining specific test cases, identifying required resources, and setting success criteria.

In essence, the strategy provides the high-level direction, whereas the plan lays out the concrete actions needed to execute that direction effectively.

Q 2. Describe your process for creating a test plan.

My process for creating a test plan follows a structured approach:

- Scope Definition: Clearly define the scope of testing – which features, modules, and functionalities will be included, and what will be excluded. This includes understanding the requirements, user stories, and acceptance criteria.

- Risk Assessment: Identify potential risks that could impact the testing process, such as tight deadlines, limited resources, or complex functionalities. This helps prioritize testing efforts.

- Test Strategy Selection: Choose a suitable testing strategy (e.g., risk-based, requirements-based, or exploratory) that fits the project’s development methodology (e.g., Agile or Waterfall) and goals. This informs the overall testing approach.

- Test Environment Setup: Detail the necessary hardware, software, and network configurations for the testing environment. This ensures consistency and avoids unexpected issues during testing.

- Test Case Design and Development: Create detailed test cases covering various aspects of the software, including functional, performance, security, and usability. I leverage various test case design techniques (discussed in a later question).

- Test Data Planning: Define the test data required for executing the test cases, ensuring data integrity and compliance with data governance policies.

- Resource Allocation: Assign roles and responsibilities to team members, including testers, developers, and other stakeholders. This ensures accountability and efficient task management.

- Schedule Definition: Create a realistic schedule for the testing activities, including milestones and deadlines. This guides the testing process and helps in tracking progress.

- Reporting and Communication: Define how test results will be reported, tracked, and communicated to stakeholders. Regular updates keep everyone informed about progress and potential issues.

Q 3. How do you prioritize test cases?

Test case prioritization is crucial for efficient testing, especially when faced with limited time or resources. I employ a multi-faceted approach:

- Risk-Based Prioritization: Test cases covering functionalities with high business impact or high risk of failure are prioritized. This ensures critical features are thoroughly tested.

- Dependency-Based Prioritization: Test cases that other tests depend on are run first. For example, login tests run before checkout tests, so a failure in a prerequisite is caught before the dependent tests are attempted.

- Criticality-Based Prioritization: Test cases covering critical functionalities, such as login, payment gateways, or data integrity, are given top priority. This ensures the stability of core functions.

- Coverage-Based Prioritization: Prioritize test cases to maximize test coverage. This approach ensures comprehensive testing of different aspects of the system.

- MoSCoW Method: This involves categorizing requirements as Must have, Should have, Could have, and Won’t have. Test cases aligning with the ‘Must have’ and ‘Should have’ categories are prioritized.

I often use a combination of these techniques to create a comprehensive prioritization scheme. For example, a test case related to a critical payment function (high risk, criticality) and dependent on the user login (dependency) will get a higher priority than a test case for a minor UI element (low risk, low criticality).
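
As a rough illustration, the combined scoring described above could be sketched in Python as follows. The 1 to 5 scales, the weighting formula, the prerequisite bonus, and the sample test names are all assumptions made for this example, not a standard.

```python
from dataclasses import dataclass


@dataclass
class CaseInfo:
    name: str
    risk: int                      # 1 (low) to 5 (high), hypothetical scale
    criticality: int               # 1 (low) to 5 (high), hypothetical scale
    is_prerequisite: bool = False  # True when other tests depend on this one


def priority_score(case: CaseInfo) -> int:
    """Illustrative weighting: risk times criticality, plus a bonus for prerequisites."""
    score = case.risk * case.criticality
    if case.is_prerequisite:
        score += 5
    return score


cases = [
    CaseInfo("Footer link colour", risk=1, criticality=1),
    CaseInfo("Payment with saved card", risk=5, criticality=5),
    CaseInfo("User login", risk=3, criticality=5, is_prerequisite=True),
]

# Highest score first: this is the order in which the tests would be run.
ordered = sorted(cases, key=priority_score, reverse=True)
for case in ordered:
    print(f"{priority_score(case):>3}  {case.name}")
```

In practice the weights would be agreed with the team and revisited as the risk picture changes.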

Q 4. What are the different types of test cases?

There’s a wide range of test case types, each serving a specific purpose:

- Functional Test Cases: Verify that the software performs its intended functions correctly. Examples include verifying login functionality, calculating totals, or processing payments.

- Non-Functional Test Cases: Assess aspects beyond functionality, such as performance (response time, load testing), security (vulnerability checks, authentication), usability (ease of use, intuitive design), and reliability (error handling, system stability).

- Unit Test Cases: Test individual components or modules of the software in isolation. Developers typically perform these tests.

- Integration Test Cases: Verify the interactions between different modules or components of the system. They ensure that integrated parts work together correctly.

- System Test Cases: Test the entire system as a whole, including all integrated components. This is a crucial stage for verifying end-to-end functionality.

- Regression Test Cases: Re-run tests after code changes or bug fixes to ensure that new changes haven’t introduced new defects or broken existing functionality.

- User Acceptance Test Cases: Verify that the system meets the user’s requirements and expectations. This often involves end-users testing the software.

Q 5. How do you handle changes in requirements during testing?

Handling requirement changes during testing is a common challenge. My approach involves:

- Impact Assessment: Analyze the change to determine its potential impact on the existing test cases and overall testing strategy. This is critical to understand the scope of work.

- Prioritization: If necessary, re-prioritize existing test cases based on the impact of the change. Critical changes will need testing sooner than others.

- Test Case Update: Update or create new test cases to accommodate the changed requirements. This ensures that the new functionality is tested thoroughly.

- Retesting: Re-run affected test cases to verify that the changes have been implemented correctly and haven’t introduced new defects. Regression testing is important here.

- Communication: Maintain transparent communication with the development team, project managers, and other stakeholders about the changes, their impact on the testing schedule, and any potential delays. This ensures everyone is on the same page.

- Documentation: Update the test plan and related documentation to reflect the changes in requirements and the adjustments made to the testing process. This ensures traceability and accountability.

Working within a flexible, iterative process such as Agile makes it much easier to accommodate changing requirements.

Q 6. Explain the importance of test case traceability.

Test case traceability is essential for ensuring that all requirements are adequately tested and for facilitating defect tracking and reporting. It provides a clear link between requirements, test cases, and defects.

Importance:

- Requirement Coverage: It demonstrates that all requirements have been covered by test cases. This ensures comprehensive testing.

- Defect Tracking: It enables efficient tracing of each defect back to the test case that exposed it and the requirement it affects. This speeds up triage and debugging.

- Risk Management: By identifying untested requirements, it helps mitigate risks and potential issues.

- Auditability: Traceability makes the testing process transparent and auditable, demonstrating compliance with quality standards.

- Regression Testing: Helps to identify which test cases need to be re-executed when changes are made to the software.

For example, a traceability matrix can be used to map requirements to test cases, showing which tests cover which requirements. This provides a clear and concise view of test coverage.
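
A minimal sketch of such a matrix is shown below. The requirement and test case IDs are hypothetical, and a real project would normally keep this data in a test management tool rather than in code.

```python
# Hypothetical requirement and test case IDs, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
test_cases = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-1", "REQ-2"],
}


def build_matrix(requirements, test_cases):
    """Map each requirement to the sorted list of test cases that cover it."""
    matrix = {req: [] for req in requirements}
    for tc_id, covered in test_cases.items():
        for req in covered:
            matrix.setdefault(req, []).append(tc_id)
    return {req: sorted(tcs) for req, tcs in matrix.items()}


matrix = build_matrix(requirements, test_cases)

# Requirements with no linked test case are the coverage gaps.
uncovered = [req for req, tcs in matrix.items() if not tcs]

for req, tcs in matrix.items():
    print(req, "->", ", ".join(tcs) or "NOT COVERED")
```

Here `REQ-3` has no test case, which is exactly the kind of gap the matrix is meant to surface.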

Q 7. What are some common test case design techniques?

Several test case design techniques help create effective and comprehensive test cases:

- Equivalence Partitioning: Dividing input data into groups (partitions) that are expected to be treated similarly by the software. Testing one representative value from each partition reduces the number of test cases while covering a broad range of inputs.

- Boundary Value Analysis: Focusing on the boundaries or edge cases of input values. These are often areas where defects are more likely to occur.

- Decision Table Testing: Used to test complex logic with multiple conditions and actions. This technique organizes the conditions and resulting actions in a tabular format.

- State Transition Testing: Used to test systems that transition through different states. Test cases are designed to cover all possible transitions and ensure correct behavior in each state.

- Use Case Testing: Based on user scenarios and workflows. Test cases are designed to cover all possible user interactions and workflows.

- Error Guessing: Based on the tester’s experience and intuition to identify potential areas where defects are likely to occur. This technique is often used in conjunction with other techniques.

The choice of technique depends on the nature of the software and the specific requirements being tested. Often, a combination of techniques is used to create a robust and effective set of test cases.
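
To make the first two techniques concrete, here is a small sketch. The validation rule (ages 18 to 65 inclusive) and the function name `is_valid_age` are assumptions chosen purely for the example.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical rule under test: ages 18 to 65 inclusive are accepted."""
    return 18 <= age <= 65


# Equivalence partitioning: one representative value per partition.
partition_cases = [
    (10, False),  # below the valid range
    (40, True),   # inside the valid range
    (80, False),  # above the valid range
]

# Boundary value analysis: values on and directly next to each boundary.
boundary_cases = [
    (17, False), (18, True), (19, True),
    (64, True), (65, True), (66, False),
]

for value, expected in partition_cases + boundary_cases:
    assert is_valid_age(value) == expected, f"unexpected result for age {value}"
print("all partition and boundary checks passed")
```

Nine inputs are enough here to cover every partition and both boundaries, instead of testing every possible age.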

Q 8. How do you ensure test coverage?

Ensuring comprehensive test coverage is crucial for delivering high-quality software. It’s about making sure we’ve tested all aspects of the application, minimizing the risk of undiscovered bugs. We achieve this through a multi-pronged approach.

- Requirement Traceability Matrix (RTM): This is a table that links requirements to test cases, ensuring every requirement has at least one corresponding test case. Think of it as a map, guiding us to ensure we cover every feature and functionality.

- Test Case Design Techniques: We use various techniques like Equivalence Partitioning (dividing input data into groups that are expected to behave similarly), Boundary Value Analysis (focusing on edge cases), and State Transition Testing (covering different states and transitions of the application) to systematically design test cases that maximize coverage.

- Code Coverage Analysis (for unit and integration testing): Tools can measure the percentage of code lines, branches, or paths executed during testing. This provides an objective measure of how thoroughly the code itself has been exercised. For example, 90% statement coverage does not guarantee that the functionality is correct, but it does show how much of the code the tests actually run.

- Risk-Based Testing: We prioritize testing based on the criticality of features and potential impact of failures. If a feature is crucial to the application’s core function and a failure would have severe consequences, we allocate more testing resources to that area.

Imagine building a house. You wouldn’t just test the walls; you’d test the foundation, plumbing, electrical wiring, and roof—each element contributing to the overall structure’s stability. Similarly, comprehensive test coverage ensures the stability and reliability of our software.
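
Coverage can also be measured against a model of the application rather than its code. The sketch below does this for the State Transition Testing mentioned above; the login session states, the events, and the two test sequences are hypothetical.

```python
# Hypothetical state model for a login session: (state, event) -> next state.
transitions = {
    ("logged_out", "login_ok"): "logged_in",
    ("logged_out", "login_fail"): "logged_out",
    ("logged_in", "logout"): "logged_out",
    ("logged_in", "timeout"): "logged_out",
}

# Each test is a sequence of events applied from the initial state.
tests = {
    "happy_path": ["login_ok", "logout"],
    "bad_password_then_ok": ["login_fail", "login_ok"],
}


def covered_transitions(transitions, tests, start="logged_out"):
    """Return the set of (state, event) pairs exercised by the tests."""
    covered = set()
    for events in tests.values():
        state = start
        for event in events:
            key = (state, event)
            if key not in transitions:
                raise ValueError(f"invalid transition {key}")
            covered.add(key)
            state = transitions[key]
    return covered


covered = covered_transitions(transitions, tests)
missing = sorted(set(transitions) - covered)
coverage_pct = 100 * len(covered) / len(transitions)

print(f"transition coverage: {coverage_pct:.0f}%")
print("untested transitions:", missing)
```

With these two tests the session timeout is never exercised, so a third test would be needed to reach full transition coverage.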

Q 9. How do you estimate the time required for testing?

Estimating testing time is a critical skill. It’s not just about guessing; it’s about a structured approach combining experience, data, and analysis. The main factors I consider are:

- Size of the application: A larger application naturally requires more testing time.

- Complexity of the application: The more intricate the logic and interactions, the longer it takes to test thoroughly.

- Number of features and functionalities: Each feature needs to be tested individually and in combination with others.

- Test case design: The complexity and number of test cases influence testing duration.

- Defect density from past projects: Past projects give insight into the probable number of bugs, influencing testing efforts.

- Team size and expertise: A larger or more experienced team can usually complete the same testing in less time.

We often use techniques like Three-Point Estimation (optimistic, most likely, pessimistic) to account for uncertainty. We also break down testing into smaller tasks and estimate each individually, which offers a more accurate overall estimate. For example, we might estimate the time for unit testing, integration testing, and user acceptance testing separately before combining them. The final estimate should also include contingency time for unexpected issues and unforeseen delays.
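
One common form of three-point estimation is the PERT weighted average, E = (O + 4M + P) / 6, with a rough standard deviation of (P - O) / 6. The sketch below applies it per testing phase; the hour figures and the 15% contingency are made-up values for illustration.

```python
def pert_estimate(optimistic, most_likely, pessimistic):
    """Return the PERT expected value and an approximate standard deviation."""
    expected = (optimistic + 4 * most_likely + pessimistic) / 6
    std_dev = (pessimistic - optimistic) / 6
    return expected, std_dev


# Hypothetical estimates in hours: (optimistic, most likely, pessimistic).
tasks = {
    "unit testing": (16, 24, 38),
    "integration testing": (24, 40, 80),
    "user acceptance testing": (8, 16, 30),
}

CONTINGENCY = 0.15  # assumed buffer for unexpected issues

estimates = {name: pert_estimate(*values) for name, values in tasks.items()}
total = sum(expected for expected, _ in estimates.values())
with_contingency = total * (1 + CONTINGENCY)

for name, (expected, std_dev) in estimates.items():
    print(f"{name}: {expected:.1f} h (+/- {std_dev:.1f})")
print(f"total: {total:.1f} h, with contingency: {with_contingency:.1f} h")
```

Estimating each phase separately and then summing keeps the uncertainty of each phase visible instead of hiding it in a single number.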

Q 10. What metrics do you use to track testing progress?

Tracking testing progress is essential for managing resources and ensuring timely delivery. We rely on several key metrics:

- Test Case Execution Status: The number of test cases executed versus the total number planned, expressed as a percentage. This gives a clear overview of progress.

- Defect Density: The number of defects found per thousand lines of code (KLOC) or per feature. A high defect density might indicate areas needing more testing.

- Defect Severity and Priority: Categorizing defects based on their impact helps prioritize fixes. Critical bugs naturally take precedence.

- Test Coverage: As discussed earlier, this metric quantifies the extent to which the application has been tested.

- Test Execution Time: This metric, when tracked across different phases, can help in identifying bottlenecks and areas for improvement.

- Cycle Time: Time taken to complete one test iteration. This helps evaluate the team’s efficiency.

We use dashboards and reporting tools to visualize these metrics, enabling proactive identification of potential problems and timely adjustments to the test plan.
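
Two of these metrics are simple ratios, sketched below. The function names and the sample figures are assumptions for the example; a reporting tool would normally compute them from live data.

```python
def execution_progress(executed: int, planned: int) -> float:
    """Percentage of planned test cases that have been executed."""
    return 100 * executed / planned if planned else 0.0


def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


# Hypothetical snapshot of a test cycle.
progress = execution_progress(executed=180, planned=240)
density = defect_density(defects=12, lines_of_code=48_000)

print(f"execution progress: {progress:.1f}%")
print(f"defect density: {density:.2f} defects per KLOC")
```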

Q 11. How do you report bugs effectively?

Effective bug reporting is critical for developers to understand and fix issues quickly. A well-written bug report should be clear, concise, and contain all necessary information.

- Clear and concise title: A title should briefly describe the bug. For example, “Login button unresponsive on Chrome”.

- Steps to reproduce: A step-by-step guide allowing anyone to reproduce the bug. The more detailed, the better.

- Expected result: What should have happened.

- Actual result: What actually happened.

- Environment details: Operating system, browser, hardware specs, etc.

- Screenshots or videos: Visual aids provide powerful evidence.

- Severity and priority: Assessing the impact and urgency of the bug.

We often use bug tracking tools that allow for assigning bugs to developers, tracking progress, and generating reports. A good bug report is like a well-written recipe: it lists every ingredient and step, so anyone can reproduce the result, which in this case is the bug the developer needs to fix.
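
The checklist above maps naturally onto a small data structure. The sketch below is one hypothetical way to model it, with a helper that flags empty required fields before a report is filed; the class and field names are not taken from any particular bug tracker.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    title: str
    steps_to_reproduce: list[str]
    expected_result: str
    actual_result: str
    environment: str
    severity: str
    priority: str
    attachments: list[str] = field(default_factory=list)  # screenshots, videos

    def missing_fields(self) -> list[str]:
        """Names of required fields that were left empty."""
        required = [
            "title", "steps_to_reproduce", "expected_result",
            "actual_result", "environment", "severity", "priority",
        ]
        return [name for name in required if not getattr(self, name)]


report = BugReport(
    title="Login button unresponsive on Chrome",
    steps_to_reproduce=["Open the login page", "Enter valid credentials", "Click Login"],
    expected_result="User is taken to the dashboard",
    actual_result="Nothing happens when the button is clicked",
    environment="",  # left empty on purpose to show the check
    severity="High",
    priority="P1",
)

print("missing:", report.missing_fields())
```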

Q 12. What is the difference between verification and validation?

Verification and validation are often confused but represent distinct processes in software testing:

- Verification: Are we building the product right? This focuses on ensuring that the software conforms to the specifications. It involves activities like code reviews, inspections, and walkthroughs, checking if the software meets its defined requirements.

- Validation: Are we building the right product? This focuses on ensuring that the software meets the user’s needs and expectations. This involves activities like user acceptance testing (UAT) and beta testing.

Think of building a house: verification ensures the house is built according to the blueprint (specifications), while validation ensures the house meets the client’s needs (e.g., number of bedrooms, kitchen size, etc.). Both are essential for delivering a successful product.

Q 13. Describe your experience with different testing methodologies (e.g., Waterfall, Agile).

I have experience working within both Waterfall and Agile methodologies. Each has its own strengths and weaknesses:

- Waterfall: In a Waterfall project, testing is typically a distinct phase occurring towards the end of the development lifecycle. This approach is highly structured and well-documented but can be less flexible and responsive to changing requirements. Test planning is detailed upfront, and changes require significant rework.

- Agile: In Agile, testing is integrated throughout the development process. Testing activities happen in short sprints, allowing for rapid feedback and iterative improvements. This approach is more adaptable to changing requirements but requires a high level of collaboration and communication between developers and testers. We utilize techniques like Test Driven Development (TDD) and Behavior Driven Development (BDD).

I’ve found that Agile methodologies are better suited for projects with evolving requirements, while Waterfall can be more suitable for projects with well-defined, stable requirements. My approach adapts to the chosen methodology, focusing on ensuring effective testing regardless of the process.

Q 14. How do you handle conflicting priorities among different stakeholders?

Conflicting priorities among stakeholders are common, and skillful negotiation is key. My approach involves:

- Clearly defined goals and objectives: Ensuring everyone understands the project’s goals helps prioritize features and align expectations.

- Prioritization matrix: Using a matrix to rank features based on factors like business value, risk, and dependencies helps to objectively determine priorities.

- Open communication and collaboration: Facilitating open discussions among stakeholders allows for transparent exchange of perspectives and finding common ground.

- Compromise and negotiation: Finding solutions that balance competing interests, understanding that some compromises are necessary.

- Documentation and agreement: Clearly documenting the agreed-upon priorities ensures everyone is on the same page, reducing future misunderstandings.

It’s about actively listening to everyone’s concerns, understanding their perspectives, and working towards a solution that satisfies the most critical needs within the project constraints. Sometimes, it’s about explaining the tradeoffs involved in different approaches and helping stakeholders understand the potential implications of their choices. Ultimately, successful conflict resolution requires skillful communication and a collaborative mindset.

Q 15. Describe your experience with test management tools.

My experience with test management tools spans several years and encompasses a variety of platforms. I’m proficient in tools like Jira, Azure DevOps, and TestRail. These tools are essential for managing the entire software testing lifecycle, from requirement gathering and test planning to execution, defect tracking, and reporting.

For instance, in a recent project using Jira, I leveraged its features to create and assign test cases, track progress against deadlines, and generate comprehensive reports that highlighted testing coverage and bug density. My experience also includes configuring workflows, customizing dashboards, and integrating these tools with other project management systems to ensure seamless collaboration across teams. This integrated approach enables efficient tracking of test progress, identification of bottlenecks, and timely resolution of issues.

Beyond these specific tools, I understand the importance of selecting a tool that aligns with the project’s specific needs and the team’s familiarity. The choice involves considering factors such as scalability, integration capabilities, reporting features, and the overall cost of ownership.

Q 16. How do you incorporate risk management into your test planning?

Risk management is an integral part of my test planning process. I approach it systematically, identifying potential risks early and developing mitigation strategies to prevent them from impacting the project. This proactive approach involves several key steps.

- Risk Identification: I start by brainstorming potential problems, considering factors like schedule constraints, technical complexities, resource limitations, and the potential for defects. For example, a tight deadline could risk insufficient testing, while complex integration points might increase the chance of compatibility issues.

- Risk Assessment: Once identified, risks are evaluated based on their likelihood and potential impact. A risk matrix, often visually represented, helps prioritize the most critical risks.

- Mitigation Planning: For each significant risk, I develop specific mitigation strategies. This might involve adding buffer time to the schedule, dedicating additional resources to critical areas, or implementing rigorous testing techniques to address potential integration problems.

- Contingency Planning: I also develop contingency plans to handle unforeseen events or situations where mitigation efforts are unsuccessful. This might include identifying alternative approaches or fallback strategies.

- Monitoring and Reporting: Throughout the testing process, I monitor the identified risks and report on their status regularly to the project team. This ensures that any emerging risks are addressed promptly.

This structured approach allows me to proactively address potential problems, minimizing disruption and ensuring the project’s success. It’s like having a safety net for the project, allowing for flexibility and problem-solving ahead of time.
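
The risk assessment step can be sketched as a simple likelihood times impact score. The 1 to 5 scales, the thresholds for high and medium, and the sample risks below are assumptions for illustration; each team would calibrate its own.

```python
def risk_level(likelihood: int, impact: int) -> str:
    """Classify a risk from 1-5 likelihood and impact ratings (assumed thresholds)."""
    score = likelihood * impact
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


# Hypothetical risk register entries.
risks = [
    {"name": "Test environment unavailable", "likelihood": 2, "impact": 3},
    {"name": "Tight deadline limits regression testing", "likelihood": 4, "impact": 4},
    {"name": "Minor UI text changes", "likelihood": 2, "impact": 1},
    {"name": "Complex integration points cause compatibility issues", "likelihood": 3, "impact": 5},
]

# Highest score first, so mitigation planning starts with the biggest risks.
ranked = sorted(risks, key=lambda r: r["likelihood"] * r["impact"], reverse=True)

for r in ranked:
    level = risk_level(r["likelihood"], r["impact"])
    print(f"{level:<6} {r['likelihood'] * r['impact']:>2}  {r['name']}")
```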

Q 17. What is your approach to test data management?

Test data management is crucial for ensuring the effectiveness and reliability of testing. My approach involves several key aspects, ensuring the data used for testing is relevant, representative of real-world scenarios, and secure.

- Data Identification and Analysis: I begin by carefully analyzing the application’s requirements and identifying the types of data needed for comprehensive testing. This could include customer data, product information, transaction details, and various configurations.

- Data Acquisition: The data can come from various sources – production systems (with appropriate anonymization and masking), staging environments, or specially created synthetic datasets. The choice depends on security, privacy, and data availability considerations.

- Data Preparation and Cleansing: Raw data often requires substantial preparation. This might involve data transformations, anonymization techniques, and data cleansing to remove inconsistencies or errors. This step is crucial to ensure data quality and integrity.

- Data Subsetting and Masking: To manage large datasets efficiently and protect sensitive information, I often create subsets of the data for different testing phases. Data masking techniques are employed to safeguard sensitive data while retaining data structure and integrity.

- Data Security and Compliance: Strict adherence to data security and compliance regulations is paramount. This includes implementing appropriate access controls, encryption, and data retention policies.

I often use a combination of manual and automated techniques to manage and process test data, leveraging scripting and specialized tools wherever possible. For example, I might use SQL scripts to create and populate test databases, or utilize data masking tools to anonymize sensitive information before using it for testing.
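
As a small illustration of masking, the sketch below replaces email addresses with deterministic pseudonyms and hides all but the last four digits of a card number. The function names and the salt are hypothetical, and salted hashing of this kind is pseudonymization rather than full anonymization, so it would still need review against the applicable privacy rules.

```python
import hashlib


def mask_email(email: str, salt: str = "test-salt") -> str:
    """Replace an email with a deterministic pseudonym on a reserved example domain."""
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()[:12]
    return f"user_{digest}@example.com"


def mask_card(card_number: str) -> str:
    """Keep only the last four digits of a card number."""
    digits = [c for c in card_number if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


# Hypothetical record, using a well-known test card number.
record = {"email": "Jane.Doe@company.test", "card": "4111 1111 1111 1111"}
masked = {"email": mask_email(record["email"]), "card": mask_card(record["card"])}

print(masked)
```

Because the same input always yields the same pseudonym, relationships between tables are preserved after masking.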

Q 18. How do you ensure the quality of your test cases?

Ensuring the quality of test cases is paramount for effective testing. My approach is multifaceted and includes several key steps:

- Clear and Concise Test Case Design: Each test case should have a clear objective, well-defined preconditions, detailed steps, and expected results. Ambiguity needs to be eliminated. I use a standardized template to ensure consistency.

- Peer Reviews: Before execution, test cases undergo peer reviews to identify any inconsistencies, missing steps, or areas of improvement. This collaborative approach enhances the quality and improves coverage.

- Test Case Reusability: I strive to design test cases that are modular and reusable across multiple test cycles or even projects, reducing redundancy and improving efficiency.

- Regular Updates: Test cases should be updated to reflect any changes in the application’s functionality or requirements. This prevents outdated tests and ensures continued relevance.

- Traceability: Each test case should be linked to the relevant requirements, ensuring full test coverage. This traceability is crucial for demonstrating test completeness.

Using a combination of these methods ensures that the test cases are reliable, repeatable, and contribute effectively to identifying defects. A well-structured test case is like a well-written recipe: easy to follow, and producing the same result no matter who follows it.

Q 19. Explain the concept of test automation and its benefits.

Test automation is the use of software tools to automate repetitive testing tasks. This allows testers to focus on more complex and critical aspects of testing, rather than manual execution of simple tests.

Benefits:

- Increased Efficiency: Automation significantly reduces testing time and effort, allowing for faster release cycles.

- Improved Accuracy: Automated tests are less prone to human error, resulting in more reliable results.

- Increased Test Coverage: Automation allows for executing a larger number of tests, leading to more comprehensive coverage.

- Faster Feedback: Automated tests provide immediate feedback, allowing for quicker identification and resolution of defects.

- Cost Savings: While initial investment might be needed, automation ultimately reduces long-term costs associated with manual testing.

For example, automating regression testing, which involves repeatedly testing existing functionality after code changes, greatly reduces the time and cost required for this essential process. Imagine manually testing hundreds of user flows – automation is the key to efficiency in such scenarios.

Q 20. How do you select appropriate test automation tools?

Selecting appropriate test automation tools is a crucial decision with long-term implications. My selection criteria include:

- Project Needs: The primary factor is the specific requirements of the project, including the application’s technology stack, testing types (functional, performance, UI), and the level of automation required.

- Tool Capabilities: I evaluate tools based on their features, including ease of use, scripting capabilities, reporting features, integration with other tools, and support for various testing frameworks (e.g., Selenium, Appium, JUnit).

- Team Expertise: The team’s existing skills and experience play a vital role. Choosing a tool that aligns with the team’s capabilities minimizes training overhead and ensures efficient adoption.

- Cost and Licensing: Both the initial cost of the tool and the ongoing licensing fees need to be factored into the decision, considering the project budget and long-term sustainability.

- Community Support and Documentation: Strong community support and comprehensive documentation are crucial for addressing any challenges or questions that might arise during implementation.

I often conduct proof-of-concept exercises to evaluate the suitability of different tools before committing to a specific choice. This hands-on approach provides valuable insights into the tool’s capabilities and how well it aligns with our specific needs.

Q 21. Describe your experience with performance testing.

Performance testing is crucial for ensuring that a system can handle expected workloads without performance degradation. My experience encompasses various types of performance testing:

- Load Testing: This involves simulating a large number of users accessing the application simultaneously to determine its behavior under high load.

- Stress Testing: This involves pushing the system beyond its expected limits to identify its breaking point and determine its resilience.

- Endurance Testing (Soak Testing): This involves running the system under a constant load for an extended period to assess its stability and identify any performance degradation over time.

- Spike Testing: This involves suddenly increasing the load to a very high level to assess the system’s ability to handle sudden traffic surges.

I use a variety of tools for performance testing, including JMeter and LoadRunner. My approach involves:

- Defining Performance Goals: Clearly establishing performance goals and metrics is critical, such as response times, throughput, and error rates.

- Test Planning: Planning the test scenarios, data sets, and tools used is essential for effective testing.

- Test Execution and Monitoring: Rigorous monitoring is critical to identify performance bottlenecks and issues.

- Analysis and Reporting: Analyzing the test results and producing comprehensive reports that highlight key findings and recommendations is crucial.

For example, in a recent project, we used JMeter to simulate thousands of concurrent users on an e-commerce platform. This helped identify a slow database query that was causing response time delays. Once the query was fixed, overall system performance improved.
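
Tools like JMeter handle load generation at scale, but the underlying idea can be shown in a few lines. The sketch below runs a stand-in request from several threads and reports the mean and 95th percentile response time; `fake_request` and its 10 ms delay are placeholders, not a real service call.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request() -> float:
    """Stand-in for a real HTTP call; returns the elapsed time in seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # simulated server work
    return time.perf_counter() - start


def run_load(concurrent_users: int, requests_per_user: int) -> list[float]:
    """Run the stub from several threads and collect all response times."""
    def user_session(_):
        return [fake_request() for _ in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        sessions = pool.map(user_session, range(concurrent_users))
        return [t for session in sessions for t in session]


timings = run_load(concurrent_users=5, requests_per_user=4)

# quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
p95 = statistics.quantiles(timings, n=20)[-1]
mean_ms = statistics.mean(timings) * 1000

print(f"requests: {len(timings)}, mean: {mean_ms:.1f} ms, p95: {p95 * 1000:.1f} ms")
```

Percentiles are usually more informative than the mean, because a small number of very slow responses can hide behind a healthy average.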

Q 22. How do you handle situations where testing deadlines are tight?

Tight deadlines are a common challenge in software testing. First, I prioritize tasks strategically using risk-based testing: I identify the most critical functionalities and focus testing efforts there. I use techniques like risk assessment matrices to objectively assign priorities. For example, if a feature is central to the user experience and has a high likelihood of causing major problems if it fails, it receives top priority.

Secondly, I advocate for clear communication with the development team and stakeholders. This ensures everyone understands the constraints and works collaboratively towards a viable solution. This might involve suggesting a reduction in scope, if feasible, or discussing potential compromises to meet the deadline. Open communication prevents misunderstandings and helps everyone manage expectations.

Finally, efficient test execution is crucial. This includes optimizing test cases, leveraging automation wherever possible, and effectively utilizing the available testing resources. For instance, parallel testing can significantly reduce the overall testing time. Ultimately, it’s a balancing act between thoroughness and speed, but prioritization and communication are key to navigating tight deadlines successfully.

Q 23. What is your experience with different types of testing (e.g., unit, integration, system, regression)?

My experience spans the main test levels and types, including unit, integration, system, and regression testing.

- Unit Testing: I’m proficient in writing and executing unit tests, typically using frameworks like JUnit or pytest. I focus on testing individual components of the software in isolation to identify and resolve bugs early. For example, in a recent project, unit tests ensured that individual database interactions within our application functioned correctly before integration.

- Integration Testing: I have extensive experience verifying the interactions between different modules or components. This involves testing the interfaces and data flow between integrated parts of the system. For instance, I’ve used mocking techniques to simulate dependencies and isolate the integration points during testing.

- System Testing: This involves testing the entire system as a whole, focusing on the end-to-end functionality and user experience. I use various testing techniques, such as black-box testing, to ensure all components work seamlessly together. For example, I’ve designed and executed comprehensive system tests for e-commerce platforms to verify features like order placement, payment processing and shipping.

- Regression Testing: I understand the importance of regression testing to verify that newly added code doesn’t negatively impact existing functionality. I frequently utilize automated regression testing suites to ensure efficiency and consistency. This helps to minimize the risk of introducing new bugs while fixing or adding features.

My experience with different testing types allows me to select the most appropriate strategies depending on the project’s specific needs and context.
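To make the mocking idea concrete, here is a minimal sketch that isolates a component from its dependency using Python's standard `unittest.mock`. `OrderService` and its payment gateway are hypothetical names invented for the example. The test functions use plain assertions, so they could also be collected by pytest.

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical service that depends on a payment gateway."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        # Delegate the charge to the injected dependency.
        return "confirmed" if self.gateway.charge(amount) else "declined"


def test_order_confirmed_when_charge_succeeds():
    gateway = Mock()                    # stands in for the real gateway
    gateway.charge.return_value = True
    assert OrderService(gateway).place_order(50) == "confirmed"
    # Verify the interaction at the integration point.
    gateway.charge.assert_called_once_with(50)


def test_order_declined_when_charge_fails():
    gateway = Mock()
    gateway.charge.return_value = False
    assert OrderService(gateway).place_order(50) == "declined"
```

Injecting the gateway through the constructor is what makes the substitution possible. The same tests can later be run against a real or sandboxed gateway as an integration check.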

Q 24. How do you deal with ambiguity in requirements?

Ambiguity in requirements is a common challenge. My strategy is to proactively clarify any unclear points with stakeholders, particularly the product owner or business analysts. I use various techniques to address ambiguity:

- Requirement Clarification Meetings: I actively participate in meetings to discuss and clarify any vague requirements. This is crucial to eliminate misunderstandings and ensure everyone is on the same page.

- Documentation Review: I thoroughly review all related documentation, including user stories, use cases, and design documents, to identify potential ambiguities.

- Questioning and Probing: Asking clarifying questions to understand the intent behind the requirement. This might involve asking “what if” scenarios or edge cases to ensure the requirements cover various possibilities.

- Creating a Traceability Matrix: This matrix traces requirements to test cases, ensuring all requirements are covered and making gaps easier to identify.

By actively addressing ambiguity early in the process, I prevent costly rework and ensure that the testing process focuses on the correct aspects of the application. Documenting these clarifications is also crucial for future reference and to maintain a clear understanding of the project’s requirements.
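A traceability matrix can be as simple as a mapping from requirements to the test cases that cover them. The sketch below uses made-up requirement and test case IDs to show how such a mapping exposes coverage gaps.

```python
# Hypothetical requirement IDs and the test cases that claim to cover them.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
test_cases = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-1", "REQ-2"],
}


def build_matrix(requirements, test_cases):
    """Map each requirement to the list of test cases covering it."""
    matrix = {req: [] for req in requirements}
    for tc_id, covered in sorted(test_cases.items()):
        for req in covered:
            if req in matrix:
                matrix[req].append(tc_id)
    return matrix


def uncovered(matrix):
    """Return requirements that no test case traces back to."""
    return [req for req, tcs in matrix.items() if not tcs]


matrix = build_matrix(requirements, test_cases)
print(matrix)
print("Uncovered:", uncovered(matrix))
```

Here REQ-3 has no test case, which makes it a good candidate for a clarification question or a new test.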

Q 25. Explain your experience with different bug tracking tools.

I have experience using several bug tracking tools, including Jira, Bugzilla, and Azure DevOps. Each has its strengths and weaknesses. For example, Jira is known for its flexibility and extensive plugin ecosystem, which allows for customization to fit various workflows. Bugzilla is a free, open-source option, well suited to projects built on open collaboration. Azure DevOps integrates closely with other Microsoft products, making it efficient for teams already using the Microsoft ecosystem.

My experience extends beyond simply logging defects; I also understand how to use these tools to manage the entire defect lifecycle. This includes prioritizing bugs based on their severity and impact, assigning them to developers, tracking their progress, and verifying fixes. Effective use of these tools contributes significantly to efficient and transparent bug management, improving overall software quality.
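The defect lifecycle can be pictured as a small state machine. The sketch below is a simplified, hypothetical workflow. Real tools such as Jira, Bugzilla, and Azure DevOps each define their own statuses and transitions, so the state names here are assumptions.

```python
# Assumed, simplified workflow: each state maps to the states it may move to.
ALLOWED = {
    "new": {"assigned"},
    "assigned": {"fixed"},
    "fixed": {"verified", "reopened"},
    "reopened": {"assigned"},
    "verified": {"closed"},
    "closed": set(),
}


def transition(current: str, target: str) -> str:
    """Return the new state, or raise if the workflow forbids the move."""
    if target not in ALLOWED.get(current, set()):
        raise ValueError(f"cannot move defect from {current!r} to {target!r}")
    return target


state = "new"
for step in ["assigned", "fixed", "verified", "closed"]:
    state = transition(state, step)
print(state)
```

A fix that fails verification would instead go from "fixed" to "reopened" and back to "assigned".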

Q 26. How do you contribute to continuous improvement in testing processes?

Continuous improvement in testing is a key aspect of my approach. I actively contribute to this by:

- Test Process Reviews: Regularly reviewing our testing processes to identify areas for improvement. This often involves analyzing past testing cycles to pinpoint bottlenecks and areas where inefficiencies occurred.

- Automation of Test Cases: Identifying opportunities to automate repetitive test cases, reducing testing time and improving efficiency. This can significantly impact time to market and resource utilization.

- Test Case Optimization: Regularly reviewing and optimizing existing test cases to ensure they remain relevant, efficient, and effective. Outdated or redundant test cases can waste valuable time and resources.

- Knowledge Sharing and Training: Sharing best practices and conducting training sessions to enhance the testing skills within the team. This fosters a collaborative environment and leads to a more skilled and efficient team.

- Metrics Tracking and Analysis: Monitoring key testing metrics, such as defect density and test coverage, to identify trends and areas for improvement. This data-driven approach supports informed decision-making and helps track our progress over time.

Continuous improvement is an iterative process, and I believe in fostering a culture of continuous learning and adaptation within the testing team.
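The two metrics named above can be computed in a few lines. This sketch assumes defect density is measured per thousand lines of code (KLOC) and coverage as the share of requirements with at least one executed test. Teams define both differently, so treat these formulas as one common convention rather than the only one.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def coverage_percent(covered: int, total: int) -> float:
    """Percentage of items (e.g. requirements) covered by tests."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * covered / total


# Illustrative numbers only, not data from a real project.
print(defect_density(defects=12, lines_of_code=24_000))  # 0.5 defects per KLOC
print(coverage_percent(covered=45, total=60))            # 75.0 percent
```

Tracking these values per release, rather than as one-off numbers, is what makes trends visible.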

Q 27. Describe a challenging testing scenario and how you overcame it.

In a recent project involving a complex e-commerce platform, we encountered a critical performance issue just before the release. The system was experiencing significant delays during peak hours, impacting the user experience and potentially affecting sales. The initial diagnosis proved difficult as the cause wasn’t immediately apparent.

To overcome this, I employed a multi-pronged approach:

- Performance Testing: We conducted thorough performance testing using tools like JMeter, identifying specific bottlenecks in the system architecture.

- Root Cause Analysis: We collaborated with the development team to analyze the performance test results and pinpoint the underlying causes of the delays. This involved examining database queries, network latency, and server resource utilization.

- Code Optimization: The development team optimized database queries, improved code efficiency, and implemented caching strategies to enhance performance.

- Load Testing: After code optimization, we performed load testing to simulate peak user traffic and verify the improvements made.

By combining systematic testing, collaboration with developers, and methodical root cause analysis, we resolved the performance issue successfully, ensuring a smooth launch and positive user experience. This experience highlighted the value of performance testing and proactive collaboration to address critical issues.

Key Topics to Learn for Test Plan and Test Case Design Interview

- Test Planning Fundamentals: Understanding the scope, objectives, and deliverables of a test plan. Defining test strategies, methodologies (e.g., Waterfall, Agile), and entry/exit criteria.

- Test Case Design Techniques: Mastering various techniques like equivalence partitioning, boundary value analysis, decision table testing, state transition testing, and use case testing. Knowing when to apply each technique effectively.

- Risk Management in Testing: Identifying and mitigating potential risks throughout the testing lifecycle. Understanding risk assessment and prioritization for efficient resource allocation.

- Test Data Management: Strategies for creating, managing, and securing test data. Understanding the importance of realistic and representative test data.

- Test Environment Setup: Understanding the requirements for setting up a suitable test environment, including hardware, software, and network configurations.

- Defect Reporting and Tracking: Knowing how to effectively report and track defects, including providing clear and concise descriptions, steps to reproduce, and expected versus actual results.

- Test Metrics and Reporting: Understanding key testing metrics (e.g., defect density, test coverage) and how to present them in a clear and concise manner to stakeholders.

- Test Automation Considerations: Discussing the role of automation in testing and its potential benefits and limitations. Understanding different automation frameworks and tools.

- Practical Application: Be prepared to discuss how you would approach designing test plans and test cases for various software applications, considering different types of testing (functional, non-functional, etc.).
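As a small worked example of two of the test case design techniques listed above, the sketch below derives boundary values for a numeric range and picks one representative per equivalence partition. The age rule (18 to 65 inclusive) is a hypothetical requirement chosen for illustration.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Values just below, on, and just above each boundary of [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def is_valid_age(age: int) -> bool:
    """Hypothetical rule under test: accept ages 18 to 65 inclusive."""
    return 18 <= age <= 65


# Boundary value analysis: test around the edges of the valid range.
for value in boundary_values(18, 65):
    print(value, is_valid_age(value))

# Equivalence partitioning: one representative per partition is enough.
partitions = {"below range": 10, "within range": 40, "above range": 80}
for name, value in partitions.items():
    print(name, is_valid_age(value))
```

Only 17 and 66 among the boundary values should be rejected. An off-by-one error in the comparison would surface immediately at 18 or 65.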

Next Steps

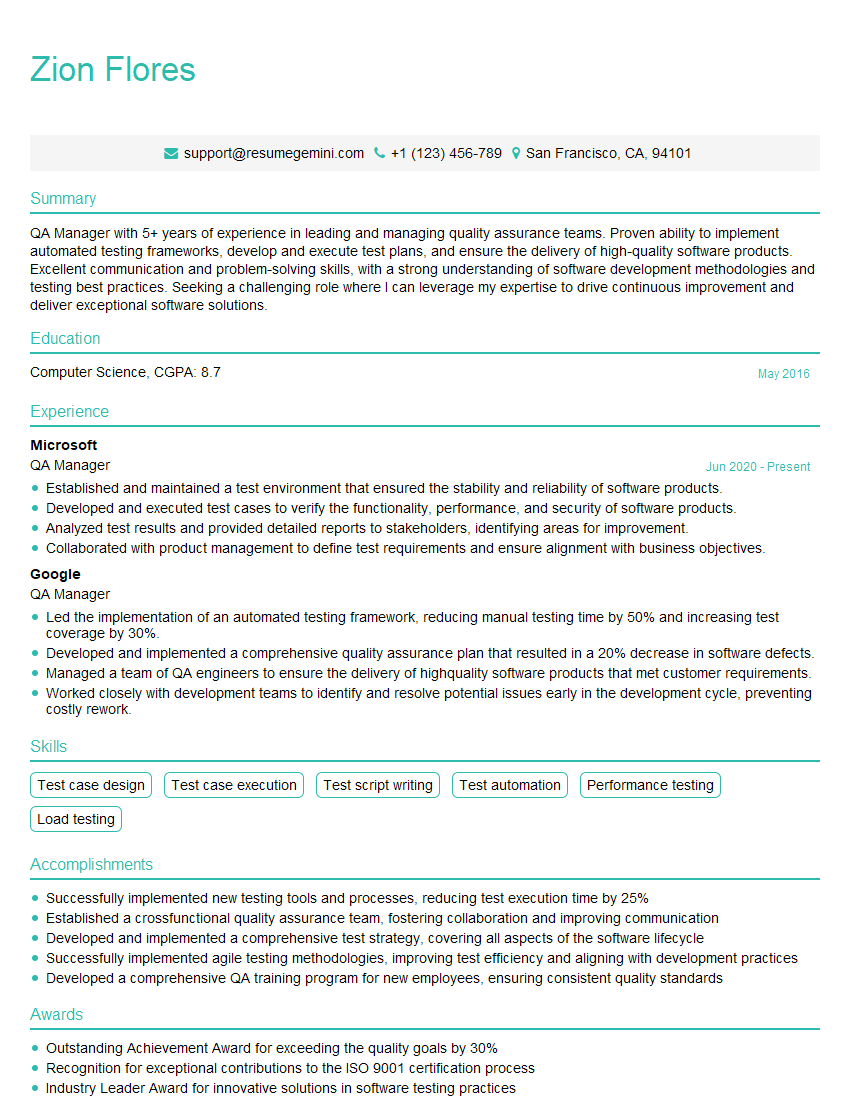

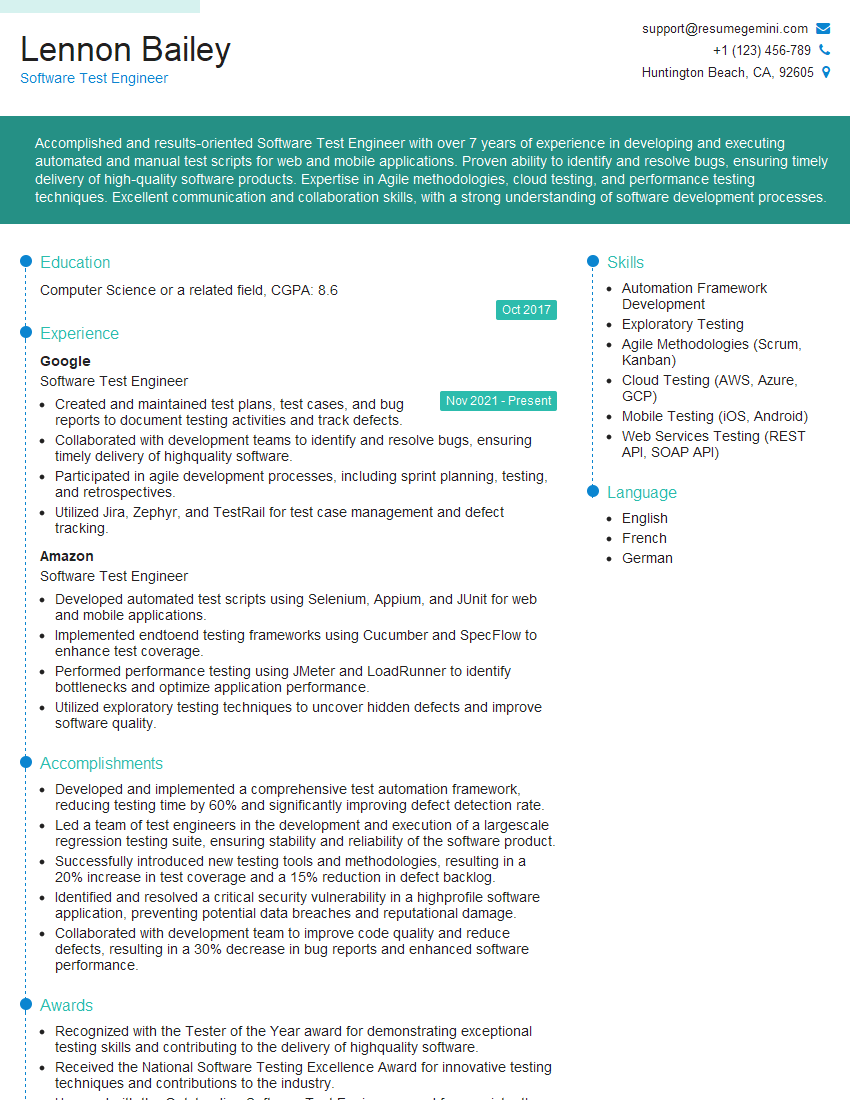

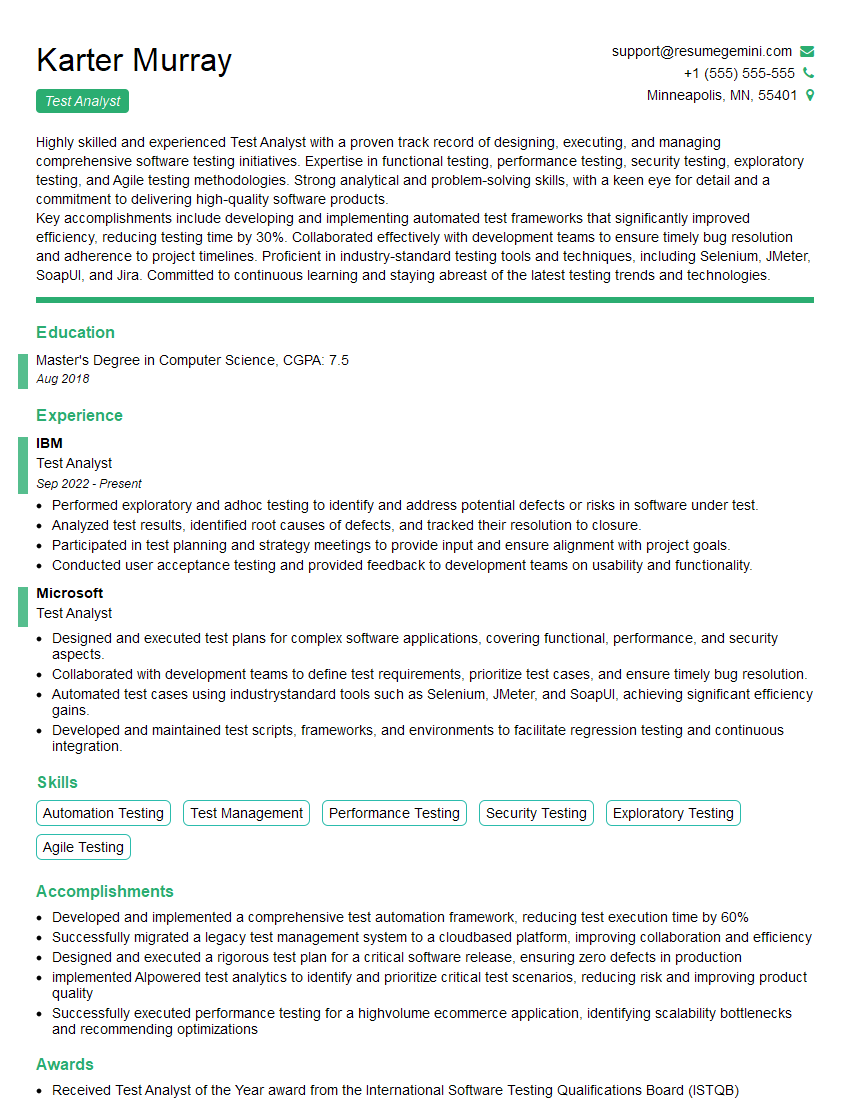

Mastering Test Plan and Test Case Design is crucial for career advancement in software quality assurance. A strong understanding of these concepts demonstrates your ability to contribute significantly to project success and showcases your analytical and problem-solving skills. To maximize your job prospects, create an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. We offer examples of resumes tailored specifically to Test Plan and Test Case Design roles to guide you through the process.
