Unlock your full potential by mastering the most common Thread Handling interview questions. This blog offers a deep dive into the critical topics, ensuring you’re not only prepared to answer but to excel. With these insights, you’ll approach your interview with clarity and confidence.

Questions Asked in Thread Handling Interviews

Q 1. Explain the difference between a process and a thread.

Imagine a restaurant kitchen. A process is like the entire kitchen operation: it encompasses everything from receiving ingredients to preparing and serving food. It’s a self-contained unit with its own resources (staff, ovens, etc.). A thread, on the other hand, is like an individual chef or cook within that kitchen. Multiple threads (chefs) can work concurrently within the same process (kitchen) to prepare different dishes simultaneously, sharing the same resources (ovens, counters). In technical terms, each process has its own address space and operating-system resources (open files, handles), while the threads of a process share that address space and those resources; each thread has only its own stack, registers, and program counter. Processes are therefore heavier and better isolated from one another; threads are lighter but can interfere with each other’s data. Creating a new process is resource-intensive, while creating a new thread within an existing process is faster and cheaper.
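To make the shared-versus-private distinction concrete, here is a small Java sketch (the class and field names are illustrative). Two threads in the same process write into one shared array on the heap, while each thread’s local variable lives on its own stack and is invisible to the other.

```java
import java.util.Arrays;

class SharedMemoryDemo {
    // Lives on the process's heap, so every thread in this process can see it.
    static final int[] sharedSlots = new int[2];

    static int[] run() {
        Thread[] workers = new Thread[2];
        for (int i = 0; i < workers.length; i++) {
            final int slot = i;
            workers[i] = new Thread(() -> {
                // 'local' lives on this thread's own stack; no other thread can touch it.
                int local = (slot + 1) * 10;
                sharedSlots[slot] = local; // each thread writes a different slot, so no race
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join(); // join() also guarantees the worker's writes are visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new RuntimeException(e);
            }
        }
        return sharedSlots.clone();
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(run())); // prints [10, 20]
    }
}
```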

Q 2. What is context switching, and how does it impact thread performance?

Context switching is the operating system saving the state of a running thread (its registers, program counter, stack pointer, etc.) and loading the state of another thread, which allows multiple threads to share the same processor core. Think of it like a chef quickly switching between preparing several dishes. The performance cost comes from the switch itself (saving and restoring state, usually inside the kernel) and from indirect effects: the newly scheduled thread often finds the CPU caches full of the previous thread’s data. Switching between threads of the same process is cheaper than switching between processes, because the address space stays the same. When many more threads are runnable than there are cores, the scheduler must switch more often, and this overhead can noticeably increase execution time; threads that are blocked (for example, waiting on I/O) do not add switches while they wait. This is why keeping the number of active threads close to what the hardware and workload need is crucial for good performance.

Q 3. Describe the different thread lifecycle states.

A thread typically goes through several states during its lifetime:

- New: The thread has been created but hasn’t started executing.

- Runnable: The thread is ready to run but might be waiting for its turn on the processor.

- Running: The thread is currently executing on a processor.

- Blocked/Waiting: The thread is waiting for an event, such as I/O completion or a resource to become available.

- Dead: The thread has finished execution.

These states are managed by the operating system’s thread scheduler, which determines which thread runs when.
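In Java, the same lifecycle is visible through Thread.getState(), which returns a Thread.State value (NEW, RUNNABLE, BLOCKED, WAITING, TIMED_WAITING, TERMINATED). Java folds "runnable" and "running" into a single RUNNABLE state and splits waiting into untimed and timed variants. The sketch below observes three of these states; the CountDownLatch is used only to hold the thread in WAITING long enough to see it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;

class LifecycleDemo {
    static List<Thread.State> observeStates() {
        CountDownLatch gate = new CountDownLatch(1);
        Thread worker = new Thread(() -> {
            try {
                gate.await();                   // untimed wait: the thread is WAITING
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        List<Thread.State> seen = new ArrayList<>();
        seen.add(worker.getState());            // NEW: created, start() not yet called

        worker.start();
        Thread.State state;
        while ((state = worker.getState()) != Thread.State.WAITING) {
            Thread.yield();                     // poll until the worker is parked in await()
        }
        seen.add(state);                        // WAITING: blocked until the latch opens

        gate.countDown();                       // the awaited event happens
        try {
            worker.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        seen.add(worker.getState());            // TERMINATED: run() has returned
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(observeStates());    // [NEW, WAITING, TERMINATED]
    }
}
```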

Q 4. Explain the concept of thread synchronization.

Thread synchronization is a mechanism that ensures that multiple threads access and manipulate shared resources (like variables or files) in a controlled manner, preventing data corruption or unexpected behavior. Without synchronization, multiple threads modifying the same data concurrently can lead to race conditions (explained later). Synchronization mechanisms ensure that only one thread accesses a shared resource at a time or that access is coordinated in a specific way.

Q 5. What are mutexes, semaphores, and condition variables? Explain their use cases and differences.

These are common synchronization primitives:

- Mutexes (Mutual Exclusion): A mutex acts like a key to a shared resource. Only the thread that holds the key (acquires the mutex) can access the resource. Other threads must wait until the key is released. This prevents concurrent access. Example: Protecting a bank account balance from concurrent updates.

- Semaphores: A semaphore is a counter that controls access to a resource. It can be initialized to a specific value representing the number of available resources. A thread decrements the counter before accessing the resource and increments it after use. If the counter is zero, the thread must wait. Useful for controlling access to a limited number of resources. Example: Controlling the number of threads accessing a database connection pool.

- Condition Variables: These let threads wait for a specific condition to become true before continuing execution. They are used together with a mutex: a thread checks the condition while holding the mutex, and waiting atomically releases the mutex until another thread signals that the condition may have changed. Example: A thread producing data and another thread consuming it. The consumer waits on a condition variable until data is available.

The key difference is what they coordinate: mutexes provide exclusive access to a resource; semaphores allow a bounded number of threads to use a resource concurrently; and condition variables let threads wait efficiently until a specific condition holds.
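A sketch of the semaphore case in Java, using java.util.concurrent.Semaphore to let at most MAX_CONCURRENT threads (an illustrative value of 2, standing in for the size of a connection pool) into a section at once. The "inside" and "peak" counters exist only to check that the limit holds.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

class PoolLimiter {
    static final int MAX_CONCURRENT = 2; // illustrative pool size

    // Starts 'workers' threads and returns the highest number seen inside at once.
    static int peakConcurrency(int workers) {
        Semaphore permits = new Semaphore(MAX_CONCURRENT);
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < workers; i++) {
            Thread t = new Thread(() -> {
                try {
                    permits.acquire();          // takes a permit; blocks while none are left
                    try {
                        peak.accumulateAndGet(inside.incrementAndGet(), Math::max);
                        Thread.sleep(20);       // simulate using a connection
                    } finally {
                        inside.decrementAndGet();
                        permits.release();      // returns the permit, waking a waiter
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            threads.add(t);
            t.start();
        }
        for (Thread t : threads) {
            try { t.join(); } catch (InterruptedException e) { throw new RuntimeException(e); }
        }
        return peak.get(); // never more than MAX_CONCURRENT
    }
}
```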

Q 6. Explain deadlock and how to prevent it.

A deadlock occurs when two or more threads are blocked indefinitely, waiting for each other to release the resources that they need. Imagine two trains on a single-track railway approaching each other from opposite directions – neither can proceed until the other moves, resulting in a standstill.

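Preventing Deadlocks:

A deadlock can occur only when all four of the following conditions (the Coffman conditions) hold at the same time, so prevention means breaking at least one of them:

- Mutual Exclusion: Resources can be held by only one thread at a time. This is often unavoidable, but it can be reduced by using immutable data or lock-free structures where possible.

- Hold and Wait: A thread holds one resource while waiting for another. Avoid this, for example by acquiring all required resources at once.

- No Preemption: Resources cannot be forcibly taken away from a thread; they are only released voluntarily. Breaking this condition means letting a thread give up what it holds, for example by releasing its locks and retrying when it cannot acquire the next one.

- Circular Wait: Thread A waits for B, B waits for C, and C waits for A. Imposing a global order in which resources are acquired prevents such cycles.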

Techniques like acquiring locks in a predefined order for multiple resources or using timeouts to avoid indefinite waiting can help prevent deadlocks.
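A sketch of the lock-ordering technique with a hypothetical Account class: transfer() always locks the account with the smaller id first, so two opposite transfers (A to B and B to A) can never each hold one lock while waiting for the other. This removes the circular wait. The ids, balances, and round counts are illustrative.

```java
class Account {
    final int id;   // unique; used only to define a global lock order
    long balance;

    Account(int id, long balance) { this.id = id; this.balance = balance; }
}

class Transfers {
    static void transfer(Account from, Account to, long amount) {
        // Always acquire locks in ascending id order, regardless of transfer direction.
        Account first = from.id < to.id ? from : to;
        Account second = from.id < to.id ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    // Runs many transfers in both directions at once; returns the total balance.
    static long runOpposingTransfers(int rounds) {
        Account a = new Account(1, 1_000);
        Account b = new Account(2, 1_000);
        Thread t1 = new Thread(() -> { for (int i = 0; i < rounds; i++) transfer(a, b, 1); });
        Thread t2 = new Thread(() -> { for (int i = 0; i < rounds; i++) transfer(b, a, 1); });
        t1.start();
        t2.start();
        try {
            t1.join(); // completes: the consistent lock order rules out this deadlock
            t2.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return a.balance + b.balance; // money is neither created nor lost: 2000
    }
}
```

Acquiring the locks in from/to order instead would let t1 hold a while t2 holds b, which is exactly the two-train standoff described above.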

Q 7. What is a race condition? How can you identify and prevent race conditions?

A race condition occurs when multiple threads access and manipulate shared data concurrently, and the final outcome depends on the unpredictable order in which the threads execute. Imagine two chefs simultaneously trying to add the final ingredient to a dish; the result could be unexpected if they don’t coordinate their actions.

Identifying and Preventing Race Conditions:

- Careful Code Review: Examine code for potential concurrent access to shared data.

- Use Synchronization Primitives: Employ mutexes, semaphores, or condition variables to control access to shared resources and ensure that operations are atomic (indivisible).

- Testing and Debugging: Thorough testing, including stress testing with multiple threads, can help reveal race conditions. Debugging tools can assist in tracking thread execution and identifying conflicts.

- Thread-safe Data Structures: Use data structures designed for concurrent access (e.g., concurrent hashmaps).

Prevention is key. Careful design and rigorous testing are crucial to avoid race conditions.
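To illustrate the thread-safe data structure point: a check-then-act sequence such as reading a value with get() and writing it back with put() is a race even on a concurrent map, because another thread can update the key in between. ConcurrentHashMap.merge performs the whole read-modify-write for one key atomically. A sketch counting page hits from several threads (the key and counts are illustrative):

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentHashMap;

class HitCounter {
    static int countHits(int threads, int hitsPerThread) {
        ConcurrentHashMap<String, Integer> hits = new ConcurrentHashMap<>();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < hitsPerThread; i++) {
                    // Racy alternative: hits.put("home", hits.getOrDefault("home", 0) + 1);
                    // merge() does the read, the add, and the write as one atomic step.
                    hits.merge("home", 1, Integer::sum);
                }
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try { w.join(); } catch (InterruptedException e) { throw new RuntimeException(e); }
        }
        return hits.get("home");
    }
}
```

With 4 threads and 10,000 hits each, the result is always 40,000; the commented-out get/put version can lose updates.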

Q 8. Explain the concept of thread safety.

Thread safety ensures that multiple threads can access and manipulate shared resources (like variables or data structures) concurrently without causing data corruption or unexpected behavior. Think of it like a well-organized library: multiple people can borrow and return books simultaneously without chaos, as long as there are proper procedures in place.

If a program isn’t thread-safe, it might suffer from race conditions, where the final state of the shared resource depends on the unpredictable order in which threads execute. This often leads to inconsistent or incorrect results.

Q 9. How do you ensure thread safety in your code?

Ensuring thread safety means either coordinating access to shared resources with synchronization mechanisms or avoiding shared mutable state altogether. Common techniques include:

- Mutexes (Mutual Exclusion): Allow only one thread at a time into a critical section of code. In C#, for example:

```csharp
lock (sharedResource)
{
    // Access shared resource
}
```

This prevents race conditions by ensuring exclusive access. Java’s equivalent is a synchronized (sharedResource) { ... } block.

- Semaphores: Control access to a resource by a limited number of threads. Useful when you want to allow multiple threads but limit concurrency.

- Condition Variables: Allow threads to wait for specific conditions to be met before proceeding, improving efficiency in producer-consumer scenarios.

- Thread-Local Storage (TLS): Each thread gets its own copy of a variable, eliminating the need for synchronization.

- Immutable Objects: If your shared objects are immutable (their state cannot be changed after creation), then no synchronization is needed as they are inherently thread-safe.

Careful design and coding practices are crucial. Avoid shared mutable state whenever possible. Prefer immutable data structures where applicable.
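A sketch of the immutable-object approach in Java, using an illustrative ImmutablePoint class: every field is final and set in the constructor, and "modifying" methods return a new object instead of changing the existing one. Instances can therefore be shared between threads without locks, and Java’s final-field semantics guarantee that other threads see the constructed values once they obtain a reference, provided the object does not leak "this" during construction.

```java
final class ImmutablePoint {            // final: no subclass can add mutable state
    private final int x;
    private final int y;

    ImmutablePoint(int x, int y) {
        this.x = x;
        this.y = y;
    }

    int x() { return x; }
    int y() { return y; }

    // Returns a new point; the original is never changed.
    ImmutablePoint withX(int newX) {
        return new ImmutablePoint(newX, y);
    }

    @Override
    public String toString() {
        return "(" + x + ", " + y + ")";
    }

    public static void main(String[] args) {
        ImmutablePoint origin = new ImmutablePoint(0, 0);
        ImmutablePoint moved = origin.withX(5);
        System.out.println(origin + " " + moved); // (0, 0) (5, 0)
    }
}
```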

Q 10. Describe different approaches to inter-thread communication.

Inter-thread communication allows threads to exchange data and coordinate their activities. Common approaches include:

- Shared Memory: Threads communicate by accessing shared variables or data structures. Requires careful synchronization to prevent race conditions (as discussed above).

- Message Queues: Threads communicate by sending and receiving messages asynchronously. This decouples threads, making the system more robust and scalable. Examples include Java’s BlockingQueue or similar constructs in other languages.

- Pipes: Similar to message queues but usually used for streams of data. One thread writes to the pipe, and another reads from it.

- Sockets: For communication between threads in different processes or even on different machines.

The choice of method depends on the specific needs of the application. Message queues often provide better scalability and fault tolerance compared to shared memory.
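In Java, the pipe approach maps to PipedOutputStream and PipedInputStream in java.io, which are designed for one thread writing and another thread reading. A minimal sketch that sends a short text message from a writer thread to the calling thread (the method name is illustrative):

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.PipedInputStream;
import java.io.PipedOutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

class PipeDemo {
    static String sendThroughPipe(String message) {
        try (PipedInputStream in = new PipedInputStream();
             PipedOutputStream out = new PipedOutputStream(in)) { // connects the two ends
            Thread writer = new Thread(() -> {
                try {
                    out.write(message.getBytes(StandardCharsets.UTF_8));
                    out.close(); // signals end-of-stream to the reader
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
            writer.start();

            // The reading end runs on the calling thread; read() blocks until data arrives.
            ByteArrayOutputStream received = new ByteArrayOutputStream();
            byte[] buf = new byte[64];
            int n;
            while ((n = in.read(buf)) != -1) {
                received.write(buf, 0, n);
            }
            writer.join();
            return received.toString(StandardCharsets.UTF_8.name());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException(e);
        }
    }
}
```

Each pipe connects exactly one writer to one reader, so pipes suit point-to-point streams rather than work distribution among many threads.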

Q 11. Explain the producer-consumer problem and its solutions.

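The producer-consumer problem describes a common scenario where one or more producer threads generate data and place it in a shared buffer, and one or more consumer threads retrieve data from the buffer. The challenge is to ensure that producers don’t add data when the buffer is full (overwriting items that haven’t been consumed yet) and that consumers don’t try to remove data when the buffer is empty, while keeping concurrent accesses to the buffer itself consistent.

Solutions typically involve synchronization mechanisms. A bounded buffer with semaphores or with condition variables is a common approach. With semaphores, one counts the empty slots and another counts the filled slots (plus a mutex around the buffer itself), so producers block when the buffer is full and consumers block when it is empty. With condition variables, producers wait while the buffer is full and are signaled when a consumer frees a slot, and consumers wait while the buffer is empty and are signaled when a producer adds an item. Implemented correctly, either approach avoids busy-waiting and deadlock.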

Using a BlockingQueue in Java simplifies the implementation considerably. The put() method blocks the producer if the queue is full, and take() blocks the consumer if the queue is empty.
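A sketch of the BlockingQueue version with illustrative sizes: an ArrayBlockingQueue of capacity 10 is the bounded buffer, and the producer ends the stream with a sentinel value (a "poison pill", which is a common convention rather than part of the API) so the consumer knows when to stop.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class ProducerConsumer {
    private static final int POISON_PILL = -1; // sentinel meaning "no more items"

    // Produces 1..items, consumes them on another thread, returns the consumed sum.
    static long run(int items) {
        BlockingQueue<Integer> buffer = new ArrayBlockingQueue<>(10); // bounded buffer
        long[] consumedSum = new long[1];

        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= items; i++) {
                    buffer.put(i);            // blocks while the buffer is full
                }
                buffer.put(POISON_PILL);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread consumer = new Thread(() -> {
            try {
                while (true) {
                    int item = buffer.take(); // blocks while the buffer is empty
                    if (item == POISON_PILL) break;
                    consumedSum[0] += item;
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();                  // join() makes consumedSum visible here
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return consumedSum[0];
    }
}
```

With several consumers, the producer would need to send one poison pill per consumer.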

Q 12. What are the advantages and disadvantages of multithreading?

Multithreading offers several advantages:

- Improved responsiveness: The application remains responsive even during long-running tasks because other threads continue execution.

- Resource sharing: Threads can share resources efficiently, reducing memory footprint.

- Parallelism: On multi-core processors, threads can truly run in parallel, significantly speeding up execution.

However, there are also disadvantages:

- Increased complexity: Threading adds complexity to design, implementation, and debugging.

- Synchronization overhead: Synchronization mechanisms introduce overhead and can become a bottleneck.

- Race conditions and deadlocks: These errors are difficult to reproduce and debug.

- Resource contention: Multiple threads competing for the same resources can lead to performance degradation.

The decision to use multithreading should be based on a careful assessment of the trade-offs. It’s not always the best solution; sometimes, asynchronous programming or other approaches might be more appropriate.

Q 13. How do you handle exceptions in multithreaded applications?

Handling exceptions in multithreaded applications requires careful planning. Exceptions thrown in one thread shouldn’t bring down the entire application. Several strategies are commonly used:

- Thread-local exception handling: Catch and handle exceptions within each thread, potentially logging errors or taking corrective actions locally.

- Centralized exception handling: Gather exceptions from various threads in a central location (e.g., a queue) for processing or logging. This can simplify monitoring and management of errors across the application.

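- UncaughtExceptionHandler: In Java, you can use Thread.setDefaultUncaughtExceptionHandler() (or setUncaughtExceptionHandler() on an individual thread) to handle exceptions that aren’t caught within a thread. By default, such an exception only terminates that thread and prints a stack trace, so a handler is useful for making sure failures in worker threads are logged or reported rather than going unnoticed.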

It is crucial to design your application’s error handling to maintain resilience even in the face of unforeseen exceptions. Robust logging is essential for troubleshooting and debugging.
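A Java sketch of two of these strategies: a per-thread UncaughtExceptionHandler that records what terminated a worker, and an ExecutorService, where an exception thrown by a task is captured and rethrown to whoever calls Future.get(), wrapped in an ExecutionException. The shared "reported" list is an illustrative stand-in for centralized logging.

```java
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ThreadErrors {
    // Central collection point; a real handler would typically log instead.
    static final List<String> reported = new CopyOnWriteArrayList<>();

    static void runWithHandler() {
        Thread worker = new Thread(() -> {
            throw new IllegalStateException("worker failed");
        }, "worker-1");
        // Invoked on the dying thread with the exception that escaped run().
        worker.setUncaughtExceptionHandler(
            (t, e) -> reported.add(t.getName() + ": " + e.getMessage()));
        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
    }

    static String runInPool() {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            Callable<Integer> task = () -> { throw new IllegalArgumentException("bad input"); };
            Future<Integer> result = pool.submit(task);
            result.get();                     // rethrows the task's failure here
            return "no error";
        } catch (ExecutionException e) {
            return "caught: " + e.getCause().getMessage(); // the original exception
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();
        }
    }
}
```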

Q 14. What is the difference between join() and wait()?

Both join() and wait() are used for thread synchronization, but they serve different purposes:

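- join(): Makes the calling thread wait until the target thread completes execution. Think of it as waiting for a task to finish before moving on. For example: thread.join();

- wait(): Must be called while holding the object’s lock (in Java, inside a block synchronized on that object). It releases that lock and blocks until another thread calls notify() or notifyAll() on the same object, then reacquires the lock before returning. wait() is used for inter-thread communication and coordination when threads need to synchronize based on conditions. It requires careful usage: because a thread can wake up spuriously, and a notification sent before any thread is waiting is lost, wait() should always be called in a loop that re-checks the condition.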

In essence, join() is used for waiting on a thread’s completion, while wait() is used for conditional synchronization between threads.
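A sketch contrasting the two, built around a hypothetical one-slot Mailbox: take() calls wait() inside a synchronized method and re-checks its condition in a loop, put() changes the state and calls notifyAll(), and the caller uses join() simply to wait for the sending thread to finish.

```java
class Mailbox {
    private String message; // null means "empty"

    synchronized void put(String m) {
        message = m;
        notifyAll();                   // wake any thread waiting in take()
    }

    synchronized String take() throws InterruptedException {
        while (message == null) {      // loop: re-check the condition after every wakeup
            wait();                    // releases this object's lock while waiting
        }
        String m = message;
        message = null;
        return m;
    }

    static String demo() {
        Mailbox box = new Mailbox();
        Thread sender = new Thread(() -> box.put("ready"));
        sender.start();
        try {
            String received = box.take(); // condition-based wait (wait/notifyAll)
            sender.join();                // completion-based wait
            return received;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException(e);
        }
    }
}
```

The loop also covers the case where the sender runs first: take() then finds the message already present and never calls wait().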

Q 15. Explain the concept of thread pools and their benefits.

Imagine a restaurant kitchen: instead of having one cook handle all orders, you have a team of cooks (threads) working simultaneously. A thread pool is like a team of pre-assigned cooks, ready to tackle incoming orders (tasks). Instead of creating a new cook for each order, which is resource-intensive, you efficiently utilize the existing pool.

Benefits:

- Resource Efficiency: Reduces the overhead of creating and destroying threads constantly. Creating a thread takes time and resources. A pool reuses threads.

- Improved Responsiveness: Threads are readily available to handle incoming tasks, ensuring faster response times.

- Controlled Concurrency: Prevents the creation of too many threads, which can lead to system overload.

Example: In a web server, a thread pool handles incoming requests. Each request is assigned to an available thread in the pool, processed, and the thread returns to the pool for reuse.
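A sketch of that pattern with java.util.concurrent: a fixed pool of 4 threads (an arbitrary size) handles 20 submitted tasks, so 20 "requests" are processed while only 4 threads are ever created. Each task records the name of the pool thread that ran it, which shows the reuse; the doubling stands in for real request handling.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class PoolDemo {
    // Returns {sum of results, number of distinct threads used}.
    static int[] handleRequests(int requests, int poolSize) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        Set<String> threadNames = ConcurrentHashMap.newKeySet();
        List<Future<Integer>> results = new ArrayList<>();
        try {
            for (int i = 0; i < requests; i++) {
                final int requestId = i;
                results.add(pool.submit(() -> {
                    threadNames.add(Thread.currentThread().getName());
                    return requestId * 2;          // stand-in for real request handling
                }));
            }
            int sum = 0;
            for (Future<Integer> f : results) {
                sum += f.get();                    // waits for each task's result
            }
            return new int[] { sum, threadNames.size() };
        } catch (InterruptedException | ExecutionException e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();                       // stop accepting work; threads exit when idle
        }
    }
}
```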


Q 16. How do you manage resources in a multithreaded environment?

Managing resources in a multithreaded environment is crucial to prevent deadlocks, race conditions, and other concurrency issues. Think of it as managing shared toys among multiple children – you need rules to avoid conflicts.

Synchronization Mechanisms: These are like the rules for sharing toys. Common techniques include:

- Mutexes (Mutual Exclusion): Only one thread can access a shared resource at a time. It’s like having a single key to a toy box.

- Semaphores: Control access to a resource by a limited number of threads. Imagine a toy box with only two keys.

- Condition Variables: Allow threads to wait for specific conditions before accessing a resource. This is like a child waiting for another child to finish playing before using the toy.

- Monitors: Higher-level constructs that encapsulate shared resources and synchronization mechanisms. Think of a designated play area with clear rules.

Resource Management Strategies:

- Proper Resource Allocation: Assign resources to threads carefully, avoiding over-allocation and resource starvation.

- Resource Deallocation: Release resources promptly when they are no longer needed to prevent leaks and deadlocks.

- Error Handling: Implement robust error handling to gracefully handle exceptions and resource failures.

Example: In a database application, you’d use mutexes to protect database connections from concurrent access, ensuring data consistency.

Q 17. What are atomic operations, and why are they important in multithreaded programming?

Atomic operations are like indivisible actions; they’re either completed entirely or not at all – no partially done work. Think of it as a single, uninterrupted move of a chess piece: you can’t move it halfway and then change your mind.

Importance in Multithreading: Atomic operations are vital for preventing race conditions, where multiple threads concurrently access and modify shared data, leading to unpredictable results. Because an atomic operation completes without interference from other threads, it keeps the variable it operates on consistent; invariants that span several variables still need a lock or another higher-level mechanism.

Example: A plain increment such as counter++ is not atomic in most languages, because it is a read, an add, and a write. Many languages provide an atomic increment operation that performs all three as one indivisible step.

```
// Example (conceptual; language-specific syntax may vary)
atomic_increment(sharedCounter);
```

Without atomicity, two threads might both read the same value, each increment it, and each write it back, so one of the two increments is lost.
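In Java, the conceptual atomic_increment corresponds to AtomicInteger.incrementAndGet() (or getAndIncrement()). The sketch below runs the same increments against a plain int field and an AtomicInteger. The atomic total is always exact; the plain total can come up short on a multi-core machine, although a lost update is not guaranteed on any particular run.

```java
import java.util.concurrent.atomic.AtomicInteger;

class AtomicCounterDemo {
    static int plainCounter = 0;                          // ++ here is read-add-write
    static final AtomicInteger atomicCounter = new AtomicInteger();

    // Returns {plain total, atomic total}.
    static int[] run(int threads, int incrementsPerThread) {
        plainCounter = 0;
        atomicCounter.set(0);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    plainCounter++;                      // not atomic: updates can be lost
                    atomicCounter.incrementAndGet();     // atomic: no update is ever lost
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try { w.join(); } catch (InterruptedException e) { throw new RuntimeException(e); }
        }
        return new int[] { plainCounter, atomicCounter.get() };
    }
}
```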

Q 18. Describe different thread scheduling algorithms.

Thread scheduling algorithms determine which thread gets to run on a CPU core at a given time. Different algorithms offer varying performance characteristics.

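- First-Come, First-Served (FCFS): Threads are scheduled in the order they arrive and run until they finish or block. Simple, but short threads can wait a long time behind long-running ones (the convoy effect).

- Shortest Job First (SJF): Threads with shorter execution times run first. This minimizes average waiting time but requires predicting execution time, and long jobs can starve if short ones keep arriving.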

- Priority Scheduling: Threads are assigned priorities, and higher-priority threads are favored. Can lead to starvation of low-priority threads.

- Round Robin: Each thread gets a fixed time slice (quantum) before being preempted. Fair but can lead to context-switching overhead.

- Multilevel Queue Scheduling: Threads are assigned to different queues with varying priorities. Offers flexibility but can be complex to manage.

The choice of algorithm depends on the application’s requirements and the characteristics of the threads. For example, a real-time system might prioritize threads with strict deadlines (priority scheduling), while a general-purpose application might use round robin for fairness.
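To make the FCFS versus round-robin trade-off concrete, here is a small simulation (a teaching sketch, not how a real OS scheduler is implemented) of three threads that all arrive at time 0 with CPU bursts of 5, 3, and 1 time units. Under FCFS the shortest thread finishes last, at t=9; under round robin with a quantum of 2 it finishes at t=5, at the cost of more switches.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;

class SchedulingSim {
    // First-come, first-served: each thread runs to completion in arrival order.
    static int[] fcfs(int[] bursts) {
        int[] finish = new int[bursts.length];
        int clock = 0;
        for (int i = 0; i < bursts.length; i++) {
            clock += bursts[i];
            finish[i] = clock;
        }
        return finish;
    }

    // Round robin: run each thread for at most 'quantum' units, then move it to the back.
    static int[] roundRobin(int[] bursts, int quantum) {
        int[] remaining = bursts.clone();
        int[] finish = new int[bursts.length];
        Deque<Integer> ready = new ArrayDeque<>();
        for (int i = 0; i < bursts.length; i++) ready.addLast(i);
        int clock = 0;
        while (!ready.isEmpty()) {
            int id = ready.pollFirst();
            int slice = Math.min(quantum, remaining[id]);
            clock += slice;
            remaining[id] -= slice;
            if (remaining[id] > 0) {
                ready.addLast(id);   // preempted: back of the queue
            } else {
                finish[id] = clock;  // finished within this slice
            }
        }
        return finish;
    }

    public static void main(String[] args) {
        int[] bursts = {5, 3, 1};
        System.out.println(Arrays.toString(fcfs(bursts)));          // [5, 8, 9]
        System.out.println(Arrays.toString(roundRobin(bursts, 2))); // [9, 8, 5]
    }
}
```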

Q 19. Explain the concept of starvation in multithreading.

Starvation in multithreading occurs when a thread is perpetually denied the resources it needs to make progress (CPU time, a lock) because other threads keep getting them first, even though the system as a whole keeps running. Imagine a child constantly being interrupted when trying to play with a toy.

Causes:

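- Priority Inversion: A lower-priority thread holds a resource needed by a higher-priority thread; if medium-priority threads keep preempting the low-priority holder, the high-priority thread can wait indefinitely.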

- Unfair Scheduling Algorithms: Algorithms that favor certain threads might starve others.

- Deadlocks: A set of threads are blocked indefinitely, waiting for each other to release resources.

Consequences: Starvation can lead to performance degradation, application unresponsiveness, and even application crashes. It’s critical to design multithreaded applications carefully to avoid this.

Example: In a multithreaded application where high-priority threads constantly occupy the CPU cores, lower-priority threads might never get to execute, resulting in starvation.

Q 20. How can you measure the performance of a multithreaded application?

Measuring the performance of a multithreaded application requires careful consideration of multiple factors.

- Throughput: The total amount of work completed per unit of time. Measures how quickly tasks are processed.

- Latency: The time it takes to complete a single task. Measures the responsiveness of the system.

- Resource Utilization: How efficiently the application uses CPU, memory, and other resources. Identifies bottlenecks.

- Scalability: How well the application performs as the number of threads or tasks increases. Tests the robustness of the application under load.

Tools and Techniques:

- Profilers: Provide detailed information on thread execution times, resource usage, and potential bottlenecks.

- Performance Counters: System-level metrics that can track CPU usage, memory usage, and other resource metrics.

- Benchmarking: Running controlled tests under various load conditions to measure performance under different scenarios.

Example: Using a profiler to identify CPU-bound threads or memory leaks in a multithreaded application can greatly improve performance.
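A minimal benchmarking sketch using System.nanoTime(): it runs a fixed number of identical tasks on a pool and reports throughput in tasks per second, so different pool sizes can be compared. The workload and sizes are placeholders; for serious measurements, a harness such as JMH (which handles JIT warm-up and other pitfalls) is the usual choice.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ThroughputBench {
    // Placeholder CPU-bound work.
    static long work(int seed) {
        long acc = seed;
        for (int i = 0; i < 10_000; i++) acc = acc * 31 + i;
        return acc;
    }

    // Returns completed tasks per second for the given pool size.
    static double measure(int poolSize, int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            long start = System.nanoTime();
            List<Future<Long>> futures = new ArrayList<>();
            for (int i = 0; i < tasks; i++) {
                final int seed = i;
                futures.add(pool.submit(() -> { return work(seed); }));
            }
            for (Future<Long> f : futures) f.get(); // wait until every task is done
            long elapsedNanos = System.nanoTime() - start;
            return tasks / (elapsedNanos / 1e9);
        } catch (InterruptedException | ExecutionException e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        for (int threads : new int[] {1, cores, cores * 4}) {
            System.out.printf("%d threads: %.0f tasks/s%n", threads, measure(threads, 2_000));
        }
    }
}
```

Comparing 1, "number of cores", and several times that many threads typically shows throughput rising and then flattening, which ties back to the context-switching and scalability points above.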

Q 21. What are some common thread-related debugging techniques?

Debugging multithreaded applications can be significantly more complex than single-threaded applications due to the non-deterministic nature of concurrent execution.

- Debuggers with Multithreading Support: Use debuggers that allow you to step through threads individually, inspect their states, and set breakpoints on specific threads.

- Logging: Implement comprehensive logging to track thread activities, resource accesses, and other relevant information. Timestamp entries precisely to understand the order of events.

- Synchronization Debugging Tools: Use tools that can detect race conditions, deadlocks, and other concurrency issues.

- Reproducible Test Cases: Create test cases that reliably reproduce the errors you are observing. This simplifies debugging significantly.

- Thread-Specific IDs and Names: Assign unique IDs or names to threads to make it easier to track their actions within logs and debugging tools.

- Using Thread-Local Storage: If data is thread specific, store it in ThreadLocal variables to avoid unnecessary synchronization.

Example: A log might show multiple threads accessing a shared variable at nearly the same time, which could indicate a race condition.
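As one example of a synchronization debugging tool, the JDK’s ThreadMXBean (java.lang.management) can report threads that are deadlocked on object monitors. The sketch below deliberately creates the classic two-lock deadlock between two named daemon threads (daemon so the JVM can still exit) and polls findMonitorDeadlockedThreads() until it reports them. The descriptive thread names are what make the report readable, which is the point of the naming advice in the list above.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.ArrayList;
import java.util.List;

class DeadlockDetection {
    static void sleepQuietly(long ms) {
        try { Thread.sleep(ms); } catch (InterruptedException e) { Thread.currentThread().interrupt(); }
    }

    // Takes 'first', pauses so the other thread can take its first lock, then wants 'second'.
    static void lockBoth(Object first, Object second) {
        synchronized (first) {
            sleepQuietly(100);
            synchronized (second) {
                // reached only if the two threads did not deadlock
            }
        }
    }

    static List<String> findDeadlockedNames() {
        Object lockA = new Object();
        Object lockB = new Object();
        Thread t1 = new Thread(() -> lockBoth(lockA, lockB), "worker-1");
        Thread t2 = new Thread(() -> lockBoth(lockB, lockA), "worker-2"); // opposite order
        t1.setDaemon(true);
        t2.setDaemon(true);
        t1.start();
        t2.start();

        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        List<String> names = new ArrayList<>();
        for (int attempt = 0; attempt < 50 && names.isEmpty(); attempt++) {
            sleepQuietly(100);
            long[] ids = mx.findMonitorDeadlockedThreads(); // null when no deadlock is found
            if (ids != null) {
                for (ThreadInfo info : mx.getThreadInfo(ids)) {
                    if (info != null) names.add(info.getThreadName());
                }
            }
        }
        return names;
    }
}
```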

Q 22. Describe your experience with different concurrency models (e.g., fork-join).

Concurrency models define how multiple tasks execute seemingly simultaneously. I have extensive experience with several, including the fork-join framework. Fork-join excels at dividing large tasks into smaller, independent subtasks that can be executed concurrently. Imagine processing a huge image: fork-join allows splitting it into smaller sections, processed by different threads, and then rejoined to form the final result. This significantly improves performance, especially on multi-core processors. Other models I’ve worked with include thread pools, which manage a set of worker threads to efficiently handle incoming tasks, preventing the overhead of constantly creating and destroying threads. I’ve also utilized coroutines and asynchronous programming in Python’s asyncio library and Go’s goroutines for handling I/O-bound operations efficiently without blocking the main thread.

In a previous project, I optimized a large-scale data processing pipeline by implementing a fork-join approach. The original sequential code took hours; the fork-join implementation reduced processing time to under 30 minutes. The key was effectively partitioning the data and managing the merging of results back into a cohesive whole. I also addressed potential bottlenecks by carefully considering the size of the subtasks to balance the workload between threads and minimize synchronization overhead.
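A sketch of the fork-join pattern using Java’s ForkJoinPool and RecursiveTask to sum an array: ranges larger than THRESHOLD (an arbitrary cut-off here) are split in half, one half is forked, the other is computed in the current thread, and the results are joined. Tuning that threshold is the subtask-size trade-off mentioned in the answer: too small and task overhead dominates, too large and cores sit idle.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class SumTask extends RecursiveTask<Long> {
    private static final int THRESHOLD = 10_000; // below this size, just loop
    private final long[] data;
    private final int from, to;                  // half-open range [from, to)

    SumTask(long[] data, int from, int to) {
        this.data = data;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from <= THRESHOLD) {
            long sum = 0;
            for (int i = from; i < to; i++) sum += data[i];
            return sum;
        }
        int mid = (from + to) >>> 1;
        SumTask left = new SumTask(data, from, mid);
        SumTask right = new SumTask(data, mid, to);
        left.fork();                       // schedule the left half asynchronously
        long rightSum = right.compute();   // compute the right half in this thread
        return left.join() + rightSum;     // wait for the forked half and combine
    }

    static long parallelSum(long[] data) {
        return ForkJoinPool.commonPool().invoke(new SumTask(data, 0, data.length));
    }
}
```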

Q 23. How do you handle thread priorities?

Thread priorities influence how the scheduler allocates CPU time: higher-priority threads generally get more CPU time than lower-priority threads, although how strictly the operating system honors them varies by platform. This is crucial for managing resource allocation in multithreaded applications. For instance, a real-time system might assign high priority to threads responsible for handling critical sensor data, ensuring timely responses. I’ve used thread priorities in several projects to ensure that time-sensitive operations don’t get starved by less important tasks. This often involves careful analysis of the application’s requirements to determine appropriate priority levels for different threads.

However, over-reliance on thread priorities can be problematic. Improperly assigned priorities can lead to priority inversion, where a high-priority thread is blocked by a lower-priority thread holding a resource it needs. Therefore, careful design and testing are essential. I’ve encountered situations where adjusting thread priorities significantly improved application responsiveness, but in other cases, relying solely on priorities wasn’t the most effective solution, and other synchronization mechanisms were preferred. It’s important to understand the limitations and potential pitfalls associated with thread priorities.
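In Java, priorities are set per thread with setPriority(), on a scale from Thread.MIN_PRIORITY (1) to Thread.MAX_PRIORITY (10), with NORM_PRIORITY (5) as the usual default. They are hints only: how, or whether, they map to operating-system priorities depends on the JVM and OS, so correctness must never depend on them. A sketch with illustrative thread names:

```java
class PriorityDemo {
    // Returns the priorities actually assigned to the two threads.
    static int[] configure() {
        Thread sensor = new Thread(() -> { /* time-sensitive work, e.g. reading a sensor */ },
                                   "sensor-reader");
        Thread reporter = new Thread(() -> { /* background work, e.g. building a report */ },
                                     "report-builder");

        // Set before start() so the hint applies from the beginning.
        // setPriority() silently caps the value at the thread group's maximum.
        sensor.setPriority(Thread.MAX_PRIORITY);   // a scheduling hint, not a guarantee
        reporter.setPriority(Thread.MIN_PRIORITY);
        int[] priorities = { sensor.getPriority(), reporter.getPriority() };

        sensor.start();
        reporter.start();
        try {
            sensor.join();
            reporter.join();
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }
        return priorities;
    }
}
```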

Q 24. Explain the concept of thread local storage.

Thread Local Storage (TLS) provides each thread with its own independent copy of a variable. This is incredibly useful for managing per-thread data without the need for explicit synchronization mechanisms. Think of it like each thread having its own private locker for storing its specific data. This eliminates race conditions and simplifies code significantly. For example, if you have a logging function that needs to store thread-specific information like the thread ID, TLS is ideal. Each thread can store its ID in its TLS slot without worrying about conflicts with other threads.

In a project involving database connections, I used TLS to store each thread’s database connection object. This prevented threads from accidentally using each other’s connections, leading to more robust and easier to maintain code. The use of TLS significantly simplified the management of database connections, and avoided complex synchronization patterns. While TLS is convenient, it’s crucial to remember that the data stored in TLS is not shared across threads; therefore careful consideration should be given to data sharing mechanisms if needed across threads.
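A Java sketch of the logging case using ThreadLocal: ThreadLocal.withInitial gives every thread its own lazily created StringBuilder, so each worker appends to its private log buffer without any locking. The thread names and log format are illustrative. The remove() call matters in practice, because threads reused by a pool would otherwise keep their old values.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class ThreadLocalDemo {
    // Each thread that calls logBuffer.get() receives its own StringBuilder.
    private static final ThreadLocal<StringBuilder> logBuffer =
        ThreadLocal.withInitial(StringBuilder::new);

    static void log(String event) {
        // No lock needed: this StringBuilder belongs to the calling thread only.
        logBuffer.get()
                 .append('[').append(Thread.currentThread().getName()).append("] ")
                 .append(event).append('\n');
    }

    static Map<String, String> runWorkers() {
        Map<String, String> perThreadLogs = new ConcurrentHashMap<>();
        List<Thread> workers = new ArrayList<>();
        for (String name : new String[] {"worker-a", "worker-b"}) {
            Thread t = new Thread(() -> {
                log("start");
                log("done");
                perThreadLogs.put(Thread.currentThread().getName(), logBuffer.get().toString());
                logBuffer.remove(); // clean up the per-thread value
            }, name);
            workers.add(t);
            t.start();
        }
        for (Thread t : workers) {
            try { t.join(); } catch (InterruptedException e) { throw new RuntimeException(e); }
        }
        return perThreadLogs;
    }
}
```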

Q 25. What are some common challenges in multithreaded programming?

Multithreaded programming presents several challenges. Race conditions are a major concern. These occur when multiple threads access and modify the same shared resource concurrently, leading to unpredictable results. Deadlocks happen when two or more threads are blocked indefinitely, waiting for each other to release resources. Starvation arises when a thread is repeatedly denied access to a resource, preventing it from making progress. These issues can be notoriously difficult to debug because they often manifest only under specific timing conditions. Other challenges include ensuring data consistency across threads and managing thread synchronization effectively without introducing performance bottlenecks.

I’ve encountered all these problems firsthand. For example, a deadlock in a previous project was resolved by carefully examining the order of resource acquisition and release using a resource ordering scheme. To manage race conditions, I typically employ synchronization mechanisms like mutexes, semaphores, or condition variables. The choice of synchronization mechanism depends on the specific requirements of the application. Profiling tools are crucial for pinpointing performance bottlenecks related to thread synchronization.

Q 26. Explain your experience using thread-safe data structures.

Thread-safe data structures are designed to handle concurrent access from multiple threads without data corruption or race conditions. I have extensive experience using various thread-safe data structures, including the concurrent maps, queues, and lists in Java’s java.util.concurrent package, along with the synchronization facilities of C++’s standard library (such as std::mutex and std::atomic), which, unlike Java, does not itself provide concurrent containers. These structures use internal locking or lock-free (compare-and-swap based) algorithms to keep individual operations atomic and protect data integrity. For instance, ConcurrentHashMap in Java performs much better under contention than a regular HashMap guarded by a single lock, because threads working on different parts of the map rarely block each other: Java 7 and earlier split the map into independently locked segments, and since Java 8 it uses compare-and-swap operations and per-bin locking. This minimizes contention and improves throughput.

In a recent project that involved processing a large volume of user data concurrently, I implemented a thread-safe queue using ConcurrentLinkedQueue to manage incoming requests, preventing data loss and ensuring efficient task distribution amongst the worker threads. This significantly improved the system’s scalability and reliability. The choice of appropriate thread-safe data structures is crucial for performance and correctness in concurrent applications. Understanding the properties and limitations of each structure is critical for selecting the best one for a specific application.
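A sketch of the queue pattern described: several producer threads offer() requests into a shared ConcurrentLinkedQueue (a non-blocking, lock-free queue), and once they finish, a worker drains it with poll(), which returns null instead of blocking when the queue is empty. The request strings and sizes are illustrative. Because poll() never blocks, a worker that should sleep until work arrives is usually better served by a BlockingQueue.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

class RequestQueueDemo {
    // Returns how many requests the worker drained from the queue.
    static int enqueueAndDrain(int producers, int requestsEach) {
        Queue<String> requests = new ConcurrentLinkedQueue<>();
        List<Thread> threads = new ArrayList<>();
        for (int p = 0; p < producers; p++) {
            final int producerId = p;
            Thread t = new Thread(() -> {
                for (int i = 0; i < requestsEach; i++) {
                    requests.offer("user-" + producerId + "-req-" + i); // safe from many threads
                }
            });
            threads.add(t);
            t.start();
        }
        for (Thread t : threads) {
            try { t.join(); } catch (InterruptedException e) { throw new RuntimeException(e); }
        }

        // Drain: poll() returns null once the queue is empty.
        int processed = 0;
        String request;
        while ((request = requests.poll()) != null) {
            processed++; // a real worker would handle 'request' here
        }
        return processed;
    }
}
```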

Q 27. Discuss the trade-offs between using threads and asynchronous programming.

Threads and asynchronous programming both provide concurrency, but differ significantly in their approach. Threads create multiple execution paths within a single process, each scheduled by the operating system, while asynchronous programming typically uses one thread (an event loop) or a small pool of threads to interleave many tasks, switching between them whenever a task would otherwise wait on I/O. Threads are generally better suited for CPU-bound tasks where the goal is to use multiple cores for calculations, while asynchronous programming excels at I/O-bound tasks, such as network requests or disk access, where a dedicated thread would spend most of its time blocked waiting for I/O completion. Each thread carries its own stack and incurs operating-system context switches, so thousands of threads cost far more memory and scheduling overhead than thousands of pending asynchronous operations, which are typically lightweight objects managed in user space.

The choice depends on the application’s nature. A CPU-intensive video encoding application might benefit from multithreading, using each core to process a portion of the video, while a web server dealing with numerous concurrent connections would likely be more efficient using asynchronous I/O to handle requests without blocking the main thread. I’ve seen projects use a hybrid approach, combining threads with asynchronous I/O to handle both CPU-bound and I/O-bound tasks effectively.
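On the JVM, CompletableFuture is one way to write the asynchronous style: supplyAsync starts work without blocking the caller, and thenCombine describes what happens once both results arrive. The two fetch methods below are hypothetical stand-ins for I/O such as network calls. Note that, by default, supplyAsync runs work on the common ForkJoinPool rather than on a single event-loop thread.

```java
import java.util.concurrent.CompletableFuture;

class AsyncDemo {
    // Hypothetical stand-ins for I/O-bound calls (e.g., HTTP requests).
    static String fetchUser(int id) { return "user" + id; }
    static int fetchOrderCount(int id) { return id * 3; }

    static String summary(int id) {
        CompletableFuture<String> user = CompletableFuture.supplyAsync(() -> fetchUser(id));
        CompletableFuture<Integer> orders = CompletableFuture.supplyAsync(() -> fetchOrderCount(id));
        // Both calls are in flight at once; the combiner runs when both have completed.
        CompletableFuture<String> combined =
            user.thenCombine(orders, (u, n) -> u + " has " + n + " orders");
        return combined.join(); // block only at the outer edge (e.g., in main or a test)
    }
}
```

AsyncDemo.summary(7) returns "user7 has 21 orders". In a real server, the combined future would usually be returned to the framework instead of being joined.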

Q 28. How would you design a thread-safe counter?

Designing a thread-safe counter requires careful consideration to prevent race conditions. A simple approach uses a mutex (or similar locking mechanism) to protect the counter variable. When a thread wants to increment the counter, it acquires the mutex, increments the counter, and releases the mutex. This ensures that only one thread can access and modify the counter at a time, preventing inconsistencies.

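```cpp
// Example (C++): a counter guarded by a mutex
#include <mutex>

class ThreadSafeCounter {
private:
    int counter = 0;
    std::mutex mutex;  // protects counter

public:
    void increment() {
        std::lock_guard<std::mutex> lock(mutex);  // locked here, unlocked at end of scope
        ++counter;
    }
};
```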

However, this approach can lead to performance bottlenecks with high concurrency. More advanced techniques, such as atomic operations or lock-free data structures, can provide better performance by minimizing the time spent holding locks. Atomic operations provide a way to perform operations on a variable in an uninterruptible manner, while lock-free data structures employ clever algorithms to avoid locks entirely. The choice depends on factors such as performance requirements and the level of complexity one is comfortable with.
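As one example of the atomic alternative in Java: java.util.concurrent.atomic.LongAdder is designed for counters that many threads update often. It spreads increments across internal cells to reduce contention and adds them up when sum() is called, trading a slightly more expensive read for cheaper writes. A sketch (the class name is illustrative):

```java
import java.util.concurrent.atomic.LongAdder;

class AdderCounter {
    private final LongAdder count = new LongAdder();

    void increment() {
        count.increment();  // no lock; contention is spread across internal cells
    }

    // Exact once updates have stopped; only an estimate while increments are in flight.
    long get() {
        return count.sum();
    }

    static long demo(int threads, int perThread) {
        AdderCounter counter = new AdderCounter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) counter.increment();
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try { w.join(); } catch (InterruptedException e) { throw new RuntimeException(e); }
        }
        return counter.get();
    }
}
```

If callers need an exact value while other threads are still incrementing, or need compare-and-set semantics, AtomicLong is the simpler choice.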

Key Topics to Learn for Thread Handling Interviews

- Thread Creation and Lifecycle: Understand the different ways to create threads (e.g., extending the Thread class, implementing the Runnable interface), their states (new, runnable, blocked, waiting, timed waiting, terminated), and how to manage their lifecycle effectively.

- Synchronization and Concurrency: Grasp the concepts of race conditions, deadlocks, and livelocks. Explore synchronization mechanisms like mutexes, semaphores, monitors, and the importance of thread safety in multithreaded applications. Practice using these techniques to prevent concurrency issues.

- Inter-thread Communication: Learn how threads communicate with each other using mechanisms like wait/notify, condition variables, and shared memory. Understand the challenges and best practices for efficient and reliable inter-thread communication.

- Thread Pools and Executors: Familiarize yourself with thread pools and executors for efficient thread management. Understand their benefits: reusing a fixed set of worker threads avoids the cost of creating a new thread per task and caps how many tasks run concurrently.

- Practical Applications: Consider real-world scenarios where thread handling is crucial, such as parallel processing, concurrent data access, responsive user interfaces, and high-throughput systems. Be prepared to discuss your experience or understanding of these scenarios.

- Debugging and Troubleshooting: Develop skills in identifying and resolving common thread-related issues. Learn to use debugging tools, such as thread dumps and race detectors, to analyze thread behavior and diagnose problems effectively.

- Thread Safety and Immutability: Understand how to design thread-safe classes and utilize immutable objects to minimize concurrency problems. This is crucial for robust and reliable multithreaded systems.

Next Steps

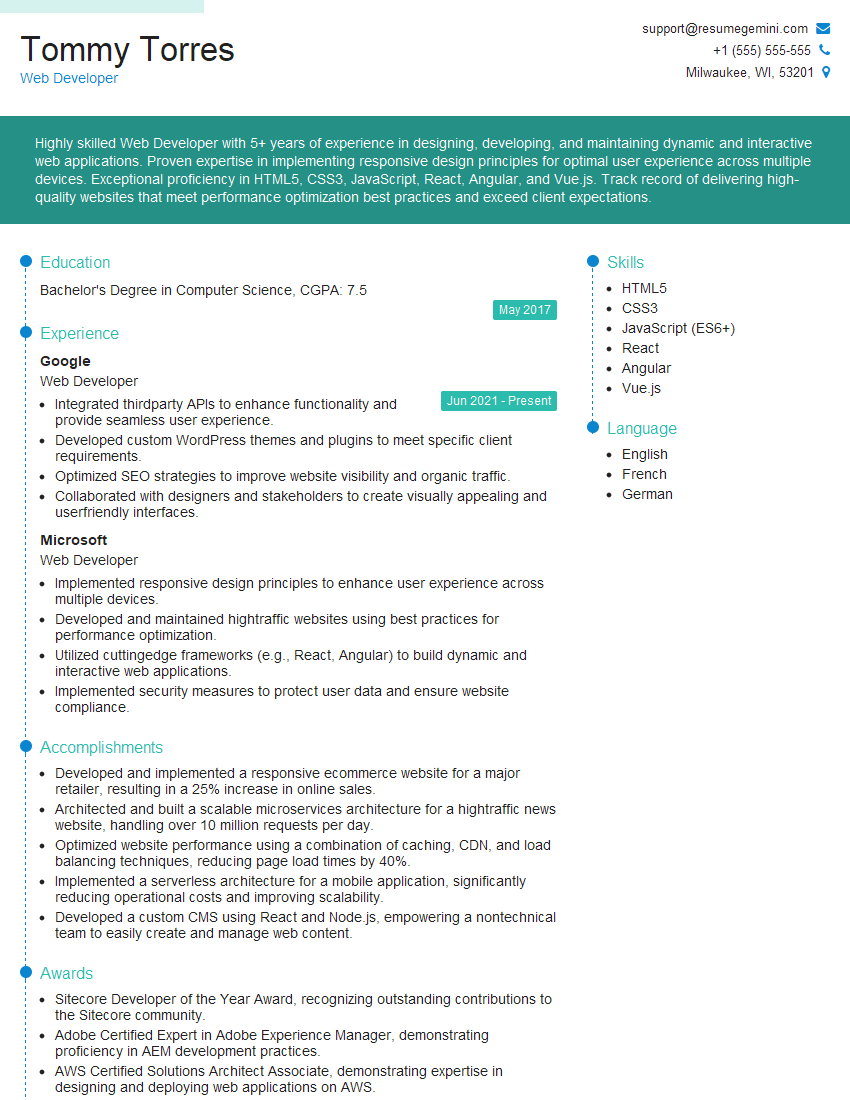

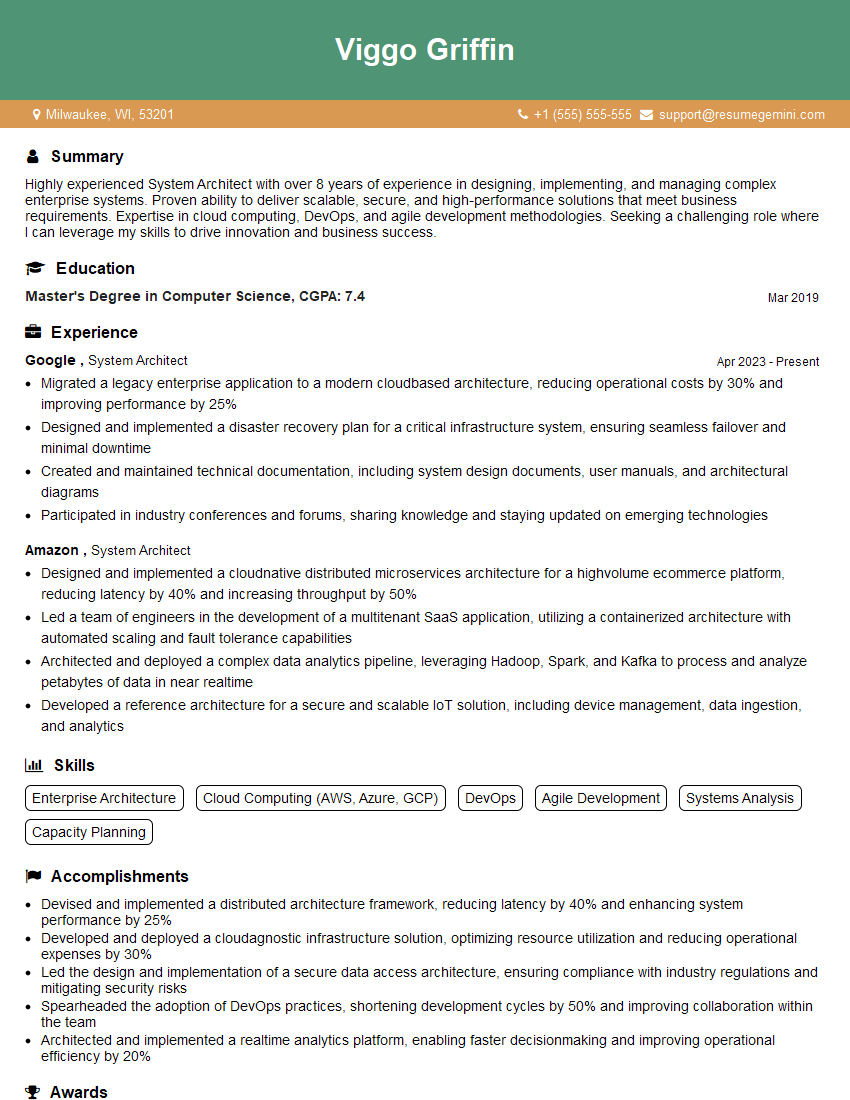

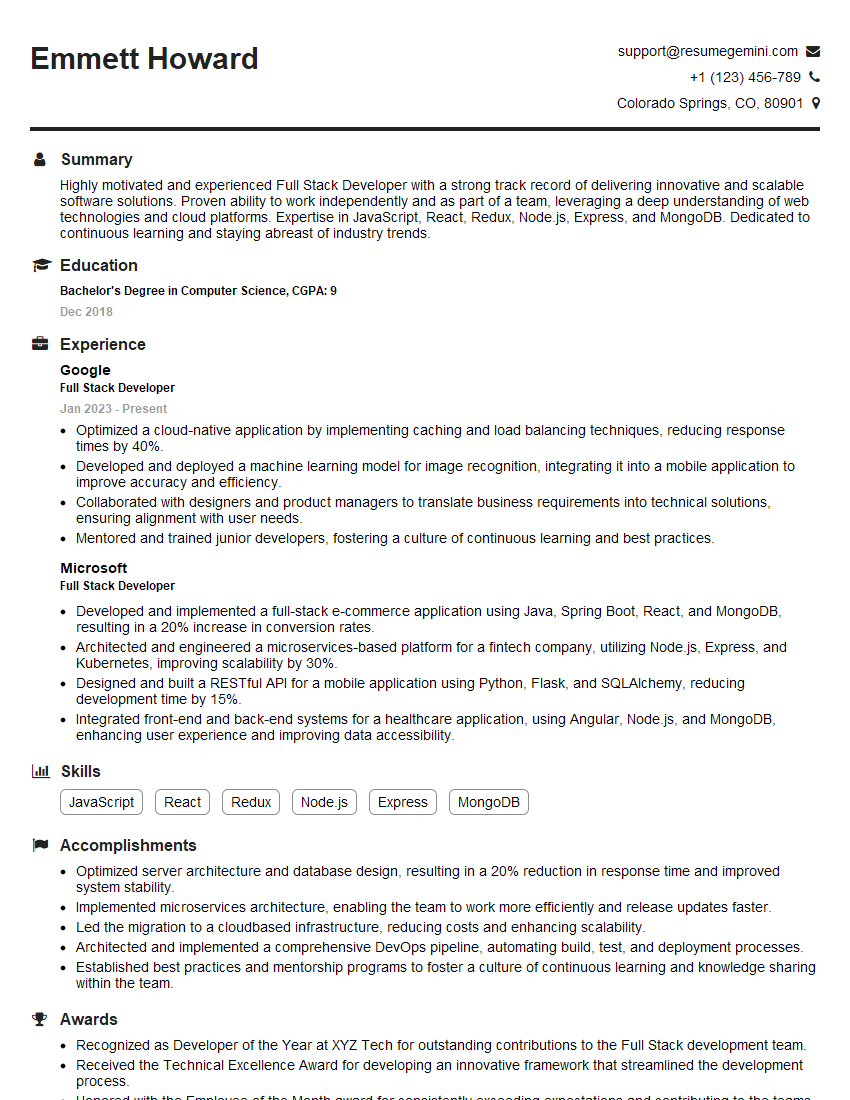

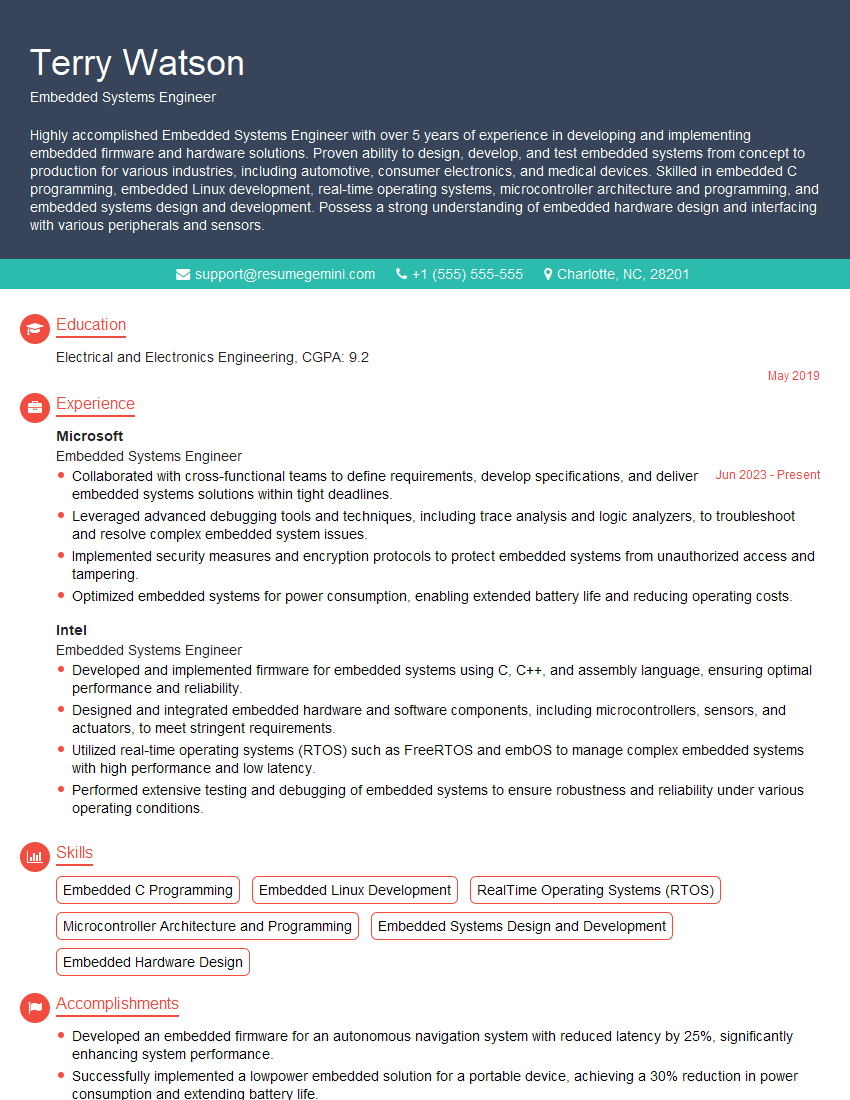

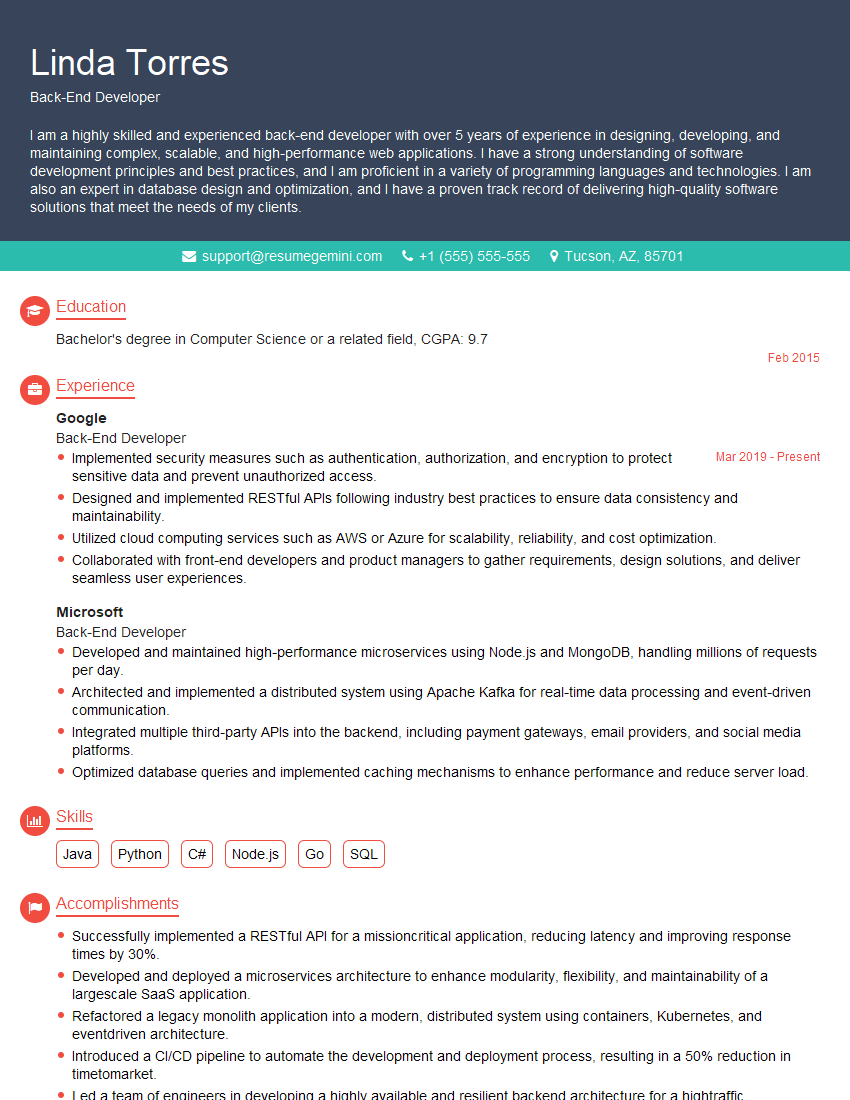

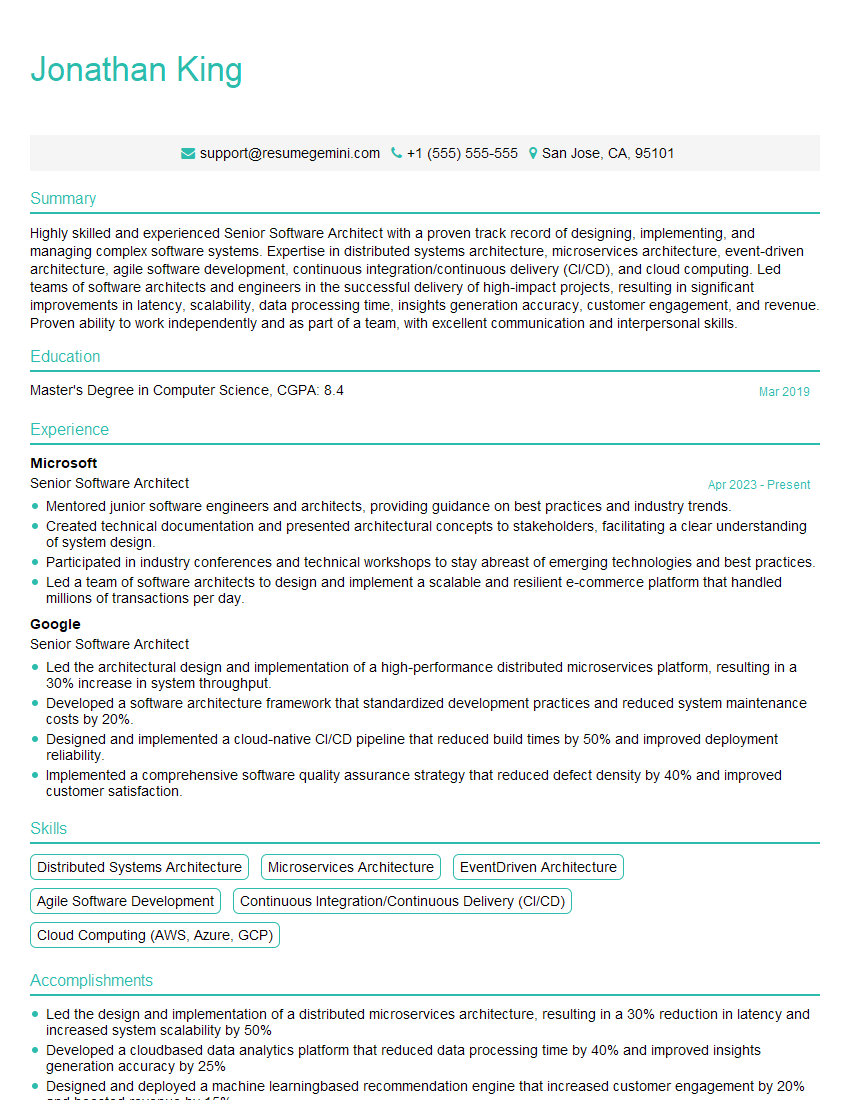

Mastering thread handling is crucial for career advancement in software development, opening doors to high-demand roles requiring advanced concurrency skills. To maximize your job prospects, create an ATS-friendly resume that highlights your expertise. ResumeGemini is a trusted resource for building professional and effective resumes that get noticed. Use ResumeGemini to craft a compelling narrative showcasing your thread handling capabilities. Examples of resumes tailored to Thread Handling are available to guide you.

