The right preparation can turn an interview into an opportunity to showcase your expertise. This guide to interview questions on quality control and testing methodologies is your ultimate resource, providing key insights and tips to help you ace your responses and stand out as a top candidate.

Questions Asked in Quality Control and Testing Methodologies Interviews

Q 1. Explain the difference between Verification and Validation.

Verification and validation are two crucial aspects of quality control, often confused but distinct. Think of verification as checking if we built the product right, while validation checks if we built the right product.

Verification focuses on the process. It’s about ensuring that each stage of development adheres to specifications and standards. This involves reviews, inspections, and walkthroughs of code, design documents, and test plans to confirm they meet requirements. For example, a code review might confirm that a function computes the area of a circle using the specified formula (πr²).

Validation, on the other hand, focuses on the outcome. It confirms that the finished product meets the user’s needs and requirements. This involves testing the software with real-world data and scenarios to ensure it performs as expected and satisfies the defined objectives. For example, validation might confirm that a user can successfully complete an online purchase on an e-commerce website.

In short: Verification is about process adherence, while validation is about meeting the intended goals.
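To make the distinction concrete, here is a minimal Python sketch. The `circle_area` function and the in-memory `Shop` class are hypothetical stand-ins, and the checks are pytest-style `test_` functions using plain `assert`, so they can also be called directly. The first check verifies the code against its specification (the formula πr²); the second walks through a user goal end to end, which is only an automated proxy for validation, since true validation also depends on real users and their needs.

```python
import math


def circle_area(radius: float) -> float:
    """Specification: area = pi * r**2; negative radii are rejected."""
    if radius < 0:
        raise ValueError("radius must be non-negative")
    return math.pi * radius ** 2


class Shop:
    """Hypothetical stand-in for an e-commerce back end."""

    def __init__(self) -> None:
        self.cart: list[str] = []
        self.orders: list[list[str]] = []

    def add_to_cart(self, item: str) -> None:
        self.cart.append(item)

    def checkout(self) -> int:
        if not self.cart:
            raise ValueError("cart is empty")
        self.orders.append(self.cart)
        self.cart = []
        return len(self.orders)  # order number


# Verification ("did we build it right?"): compare the code against its spec.
def test_circle_area_matches_spec() -> None:
    for r in (0.0, 1.0, 2.5):
        assert math.isclose(circle_area(r), math.pi * r * r)


# Validation proxy ("did we build the right thing?"): complete a user goal end to end.
def test_user_can_complete_purchase() -> None:
    shop = Shop()
    shop.add_to_cart("book")
    order_id = shop.checkout()
    assert order_id == 1
    assert shop.orders[0] == ["book"]
```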

Q 2. Describe the SDLC (Software Development Life Cycle) and the role of QA within it.

The Software Development Life Cycle (SDLC) is a structured process for planning, creating, testing, and deploying software. There are various SDLC models, including Waterfall, Agile, Spiral, and Iterative. QA plays a vital role in every model, but when and how it gets involved differs:

Waterfall: QA is usually a distinct phase, happening after development is complete. This can lead to late detection of defects.

Agile: QA is integrated throughout the development process, with continuous testing and feedback loops. This early involvement helps to catch bugs quickly and reduce costs.

Regardless of the SDLC model, QA’s responsibilities generally include:

- Requirement Analysis: Understanding the requirements to define testing scope and objectives.

- Test Planning: Creating a comprehensive test plan that outlines the testing strategy, resources, and timeline.

- Test Case Design: Developing detailed test cases covering various aspects of the software.

- Test Execution: Running the test cases and documenting the results.

- Defect Reporting: Reporting and tracking defects found during testing.

- Test Closure: Summarizing the testing activities and reporting on overall quality.

Effective QA is essential for delivering high-quality software that meets user expectations and business needs.

Q 3. What are the different types of software testing?

Software testing encompasses a wide variety of techniques, categorized in many ways. Here are some key types:

- Unit Testing: Testing individual components or modules of the software.

- Integration Testing: Testing the interaction between different modules or components.

- System Testing: Testing the entire system as a whole.

- Acceptance Testing: Testing the system to ensure it meets the user’s requirements and is ready for deployment (UAT – User Acceptance Testing is a common type).

- Regression Testing: Retesting the software after making changes to ensure that existing functionality still works.

- Performance Testing: Evaluating the system’s response time, stability, and scalability under various loads (Load Testing, Stress Testing, Endurance Testing are sub-types).

- Security Testing: Identifying vulnerabilities and weaknesses in the system’s security.

- Usability Testing: Assessing how easy and user-friendly the software is.

- Compatibility Testing: Verifying the software’s compatibility with different browsers, operating systems, and devices.

The specific types of testing used will depend on the project’s requirements and complexity.
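As a rough illustration of the first two levels, the Python sketch below tests a hypothetical `PriceCalculator` in isolation (a unit test, with its `TaxService` dependency replaced by a `unittest.mock.Mock`) and then together with the real `TaxService` (an integration test). Both classes and the tax rates are made up for the example, and the tests are pytest-style functions.

```python
from unittest import mock


class TaxService:
    """Hypothetical dependency; in a real system it might call a remote service."""

    RATES = {"EU": 0.20, "US": 0.07}

    def rate_for(self, region: str) -> float:
        return self.RATES[region]


class PriceCalculator:
    """Unit under test: adds tax to a net price."""

    def __init__(self, tax_service: TaxService) -> None:
        self.tax_service = tax_service

    def gross(self, net: float, region: str) -> float:
        return round(net * (1 + self.tax_service.rate_for(region)), 2)


# Unit test: the calculator alone, with its dependency replaced by a mock.
def test_gross_unit() -> None:
    tax = mock.Mock()
    tax.rate_for.return_value = 0.10
    assert PriceCalculator(tax).gross(100.0, "XX") == 110.0
    tax.rate_for.assert_called_once_with("XX")


# Integration test: the calculator and the real TaxService working together.
def test_gross_integration() -> None:
    assert PriceCalculator(TaxService()).gross(100.0, "EU") == 120.0
```

Re-running both tests after every code change is, in miniature, what regression testing does.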

Q 4. Explain the difference between black-box, white-box, and gray-box testing.

These testing methods differ in their approach to testing the software’s internal structure:

- Black-box testing: Treats the software as a ‘black box,’ focusing solely on inputs and outputs without knowledge of the internal code. Testers design test cases based on requirements and specifications, without seeing the underlying code. This approach is useful for finding functional errors and usability issues.

- White-box testing: Requires in-depth knowledge of the software’s internal structure, code, and algorithms. Testers can create test cases that target specific code paths and internal states. This approach is excellent for finding logic errors, code coverage issues and security vulnerabilities.

- Gray-box testing: A combination of black-box and white-box testing. Testers have partial knowledge of the internal structure, such as system architecture or design documents, to guide their testing but don’t have access to the source code. This approach offers a good balance between thoroughness and practicality.

Think of it like a car mechanic: black-box testing is like checking if the car starts and runs, white-box testing is like examining the engine parts and wiring to find potential problems, and gray-box testing is like checking the engine’s performance using diagnostic tools without complete disassembly.
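A small, hypothetical example shows how the approaches lead to different tests. Assume a public specification for `apply_discount` that only documents the `SAVE20` code, while the implementation also contains a legacy code table the spec does not mention. All names and values here are invented for illustration; the tests are pytest-style functions.

```python
# Internal table of old promo codes; the public spec does not mention it.
_LEGACY_CODES = {"SUMMER10": 0.10}


def apply_discount(price: float, code: str) -> float:
    """Spec: 'SAVE20' takes 20% off; unknown codes leave the price unchanged."""
    if code == "SAVE20":
        return round(price * 0.80, 2)
    if code in _LEGACY_CODES:  # path visible only by reading the code
        return round(price * (1 - _LEGACY_CODES[code]), 2)
    return price


# Black-box: derived from the spec alone (inputs and expected outputs).
def test_black_box_documented_behaviour() -> None:
    assert apply_discount(50.0, "SAVE20") == 40.0
    assert apply_discount(50.0, "NOSUCHCODE") == 50.0


# White-box: written after reading the code, to exercise the undocumented
# legacy branch (and to raise the question of whether the spec should mention it).
def test_white_box_legacy_branch() -> None:
    assert apply_discount(50.0, "SUMMER10") == 45.0
```

A gray-box tester who learned from a design document that a legacy code table exists, without reading the code itself, might also think to try old promotional codes.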

Q 5. What is test-driven development (TDD)?

Test-Driven Development (TDD) is an agile software development approach where test cases are written before the code they are intended to test. This “test-first” approach ensures that code is written to meet specific requirements and that the code is testable from the beginning.

The basic cycle of TDD involves these steps:

- Write a failing test: Before writing any code, a test is written that defines the expected behavior of the functionality to be implemented.

- Write the minimal code necessary to pass the test: The simplest possible code is written to satisfy the test.

- Refactor the code: Improve the code’s design, structure, and readability without changing its functionality. The test should still pass after refactoring.

TDD promotes cleaner, more maintainable code by enforcing a clear definition of requirements and ensuring that the code works as expected. It’s particularly effective in preventing bugs and improving code quality.
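A minimal sketch of two red-green-refactor cycles in Python, using a leap-year function as an assumed example; the tests are pytest-style functions. In the first cycle, a minimal `return year % 4 == 0` would satisfy the ordinary-year test; adding the century test in the second cycle forces the full rule.

```python
# Cycle 1, red: this test is written first and fails until is_leap_year exists.
def test_ordinary_years() -> None:
    assert is_leap_year(2024)
    assert not is_leap_year(2023)


# Cycle 2, red: century rules are added once the simple case passes.
def test_century_years() -> None:
    assert not is_leap_year(1900)  # divisible by 100: not a leap year...
    assert is_leap_year(2000)      # ...unless also divisible by 400


# Cycle 1, green: `return year % 4 == 0` passed test_ordinary_years only.
# Cycle 2, green + refactor: this version passes both tests and states the
# whole rule in a single expression.
def is_leap_year(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)
```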

Q 6. Describe your experience with Agile methodologies and QA.

In my experience, Agile methodologies and QA are deeply intertwined. I’ve worked extensively in Scrum and Kanban environments, where QA is not a separate phase but an integrated part of each sprint or iteration.

My contributions in Agile QA include:

- Participating in sprint planning: Estimating testing efforts and defining acceptance criteria for user stories.

- Daily stand-ups: Providing updates on testing progress and reporting any roadblocks.

- Sprint reviews: Demonstrating the tested functionality and providing feedback to the development team.

- Continuous testing: Performing various types of testing (unit, integration, system, etc.) throughout the sprint.

- Close collaboration with developers: Working closely with developers to understand the code and identify potential issues early on.

Agile’s emphasis on iterative development and continuous feedback aligns perfectly with QA’s role in ensuring high-quality software delivery. The iterative nature allows for early detection and resolution of issues, minimizing risks and reducing development costs.

Q 7. How do you prioritize test cases?

Prioritizing test cases is crucial for efficient and effective testing, especially when time and resources are limited. I use a combination of methods to prioritize test cases, depending on the project’s context and risk profile:

- Risk-based prioritization: Test cases that cover high-risk areas (critical functionality, security features, etc.) are given higher priority.

- Business value prioritization: Test cases that cover functionalities crucial to the business value are prioritized higher. Features that users will use most frequently or those which contribute the greatest value should be tested first.

- Test coverage prioritization: Test cases that improve test coverage are prioritized to ensure comprehensive testing.

- Dependency prioritization: Test cases are ordered so that components other features depend on are tested first; a test that relies on another component runs only after that component’s own tests.

- Criticality/Severity prioritization: Tests addressing high-severity issues (potential for major system failure, data loss, etc.) are given the highest priority.

I often use a prioritization matrix or a risk assessment model to systematically analyze and rank test cases. The process also involves regular review and adjustment to reflect changes in project requirements and priorities.
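One way to combine a priority score with dependency ordering is a topological sort that always picks the highest-priority test whose prerequisites have already run. The sketch below assumes Python 3.9+ for the standard `graphlib` module; the test names and priority numbers are hypothetical.

```python
from graphlib import TopologicalSorter


def order_tests(priorities: dict[str, int],
                depends_on: dict[str, set[str]]) -> list[str]:
    """Return tests in dependency order, breaking ties by higher priority.

    priorities: test name -> priority score (higher runs earlier)
    depends_on: test name -> names of tests that must run before it
    """
    sorter = TopologicalSorter(depends_on)
    for name in priorities:
        sorter.add(name)  # include tests that have no dependencies
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies

    ready: list[str] = []
    order: list[str] = []
    while sorter.is_active():
        ready.extend(sorter.get_ready())
        # Highest priority first; the name is a deterministic tie-breaker.
        ready.sort(key=lambda name: (-priorities.get(name, 0), name))
        next_test = ready.pop(0)
        order.append(next_test)
        sorter.done(next_test)
    return order


tests = {"login": 3, "add_to_cart": 4, "checkout": 5, "banner_text": 1}
deps = {"add_to_cart": {"login"}, "checkout": {"login", "add_to_cart"}}
print(order_tests(tests, deps))
# ['login', 'add_to_cart', 'checkout', 'banner_text']
```

Circular dependencies make `prepare()` raise `graphlib.CycleError`, which is itself a useful signal that the suite’s assumptions need review.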

Q 8. What are some common software testing metrics?

Software testing metrics are crucial for evaluating the effectiveness of testing efforts and the overall quality of a software product. They provide quantifiable data to track progress, identify areas needing improvement, and demonstrate the value of testing to stakeholders.

- Defect Density: This metric measures the number of defects per thousand lines of code (KLOC) or per function point. A lower defect density indicates higher quality. For example, 5 defects per 1000 LOC suggests a better quality level than 20 defects per 1000 LOC.

- Defect Removal Efficiency (DRE): DRE is the percentage of all known defects that were found before release: defects found during testing divided by the sum of defects found during testing and defects found later in production. A high DRE signifies effective testing. A DRE of 80% means 80% of defects were caught before reaching the end-user.

- Test Coverage: This metric indicates the extent to which the software has been tested. It can include code coverage (percentage of code executed during testing), requirement coverage (percentage of requirements verified), or functional coverage (percentage of functionalities tested). Achieving 90% code coverage is often a target, although the ideal percentage varies depending on project criticality and risks.

- Test Execution Time: This metric measures the time taken to execute the test suite. It helps identify areas where testing can be optimized for efficiency. A decrease in test execution time, without compromising coverage, reflects improved test automation and efficiency.

- Mean Time To Failure (MTTF): This metric focuses on the reliability of the software by measuring the average operating time before a failure occurs. A higher MTTF is desirable. If a system crashes on average every 2 hours, its MTTF is about 2 hours; a more reliable system might have an MTTF of 20 hours or more. For repairable systems, the closely related Mean Time Between Failures (MTBF) is often reported instead.

These metrics, used in conjunction, give a comprehensive picture of the software’s quality and the efficiency of the testing process. Regular monitoring and analysis of these metrics are key to continuous improvement.
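The arithmetic behind three of these metrics, sketched in Python with the example figures from above. The function names are illustrative, and DRE is computed as defects found before release divided by all known defects (before plus after release).

```python
from statistics import fmean


def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def defect_removal_efficiency(found_before_release: int,
                              found_after_release: int) -> float:
    """Percentage of all known defects that were caught before release."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return 100.0 * found_before_release / total


def mean_time_to_failure(hours_until_each_failure: list[float]) -> float:
    """Average operating time before a failure, in hours."""
    return fmean(hours_until_each_failure)


print(defect_density(5, 1000))                # 5.0
print(defect_removal_efficiency(80, 20))      # 80.0
print(mean_time_to_failure([1.5, 2.0, 2.5]))  # 2.0
```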

Q 9. How do you handle defects/bugs found during testing?

Handling defects effectively is critical for delivering high-quality software. My approach involves a structured process:

- Reproduce the bug: First, I meticulously try to reproduce the defect using the steps provided in the bug report. This ensures I understand the issue accurately.

- Isolate the root cause: Once I’ve reproduced the bug, I delve into the code and analyze the logs to determine the exact cause of the failure. This may involve debugging, reviewing code changes, or analyzing system logs.

- Report the bug: I then document the bug in a bug tracking system (like Jira or Bugzilla), providing clear details: steps to reproduce, actual vs. expected behavior, screenshots or videos, severity level, and potential impact on other functionalities.

- Verify the fix: After the developer fixes the bug, I thoroughly retest to ensure the issue is resolved and that the fix hasn’t introduced any new problems. Regression testing is vital here.

- Close the bug: Once I confirm the fix, I update the bug status in the system and close the report.

Throughout this process, effective communication with developers and stakeholders is paramount. I proactively share updates, collaborate on solutions, and ensure everyone is informed about the bug’s status and resolution.

Q 10. Explain your experience with different testing tools (e.g., Selenium, JUnit, TestNG).

I have extensive experience with several testing tools, each serving different purposes:

- Selenium: I’ve used Selenium extensively for automating web application testing. Its cross-browser compatibility and support for multiple programming languages (Java, Python, C#) make it ideal for comprehensive UI testing. For example, I automated tests for a large e-commerce website, ensuring smooth functionality across Chrome, Firefox, and Safari.

- JUnit and TestNG: These are Java-based testing frameworks that I utilize heavily for unit and integration testing. JUnit is known for its simplicity, while TestNG has long offered built-in features like data providers for data-driven testing and parallel test execution (JUnit 5 now supports parameterized tests and parallel execution as well). I use these frameworks to write automated unit tests for individual components, ensuring code correctness and early detection of defects.

Beyond these, I am familiar with other tools, such as REST Assured and Postman for API testing and exploration, and JMeter for performance testing. The choice of tool depends on the specific testing needs and the nature of the application under test.

Q 11. What is a test plan, and what are its key components?

A test plan is a formal document that outlines the scope, approach, resources, and schedule for software testing. It serves as a roadmap for the entire testing process, ensuring a structured and efficient approach.

Key components of a test plan include:

- Test Objectives: What are we trying to achieve with this testing effort?

- Test Scope: What parts of the software will be tested and which will be excluded?

- Test Strategy: Which testing methods (unit, integration, system, acceptance) will be used, and how will they be performed?

- Test Environment: Description of the hardware, software, and network configuration required for testing.

- Test Schedule: Timeline for completing different testing phases.

- Resource Allocation: Identifying the testers, tools, and other resources needed.

- Risk Assessment: Identifying potential risks and outlining mitigation strategies.

- Test Deliverables: Defining the reports, documentation, and other outputs of the testing process.

A well-defined test plan is crucial for successful software testing, minimizing risks and ensuring effective resource utilization. It’s a living document that can be adjusted as the project evolves.

Q 12. How do you create effective test cases?

Creating effective test cases requires careful planning and attention to detail. My approach emphasizes clarity, completeness, and reusability.

- Understand Requirements: Thoroughly review user stories, requirements specifications, and design documents to fully grasp the intended functionality.

- Identify Test Scenarios: Determine different scenarios that will test the software under various conditions, including positive (valid inputs) and negative (invalid inputs) test cases.

- Define Test Cases: Structure each test case clearly, including: a unique ID, test case name, objective, preconditions, test steps (clear, concise instructions), expected results, postconditions, and any relevant data.

- Prioritize Test Cases: Focus on critical functionalities and high-risk areas first. This prioritization helps manage time effectively and reduces the risk of missing major defects.

- Review and Update: Regularly review and update test cases as requirements change or new functionalities are added. Version control helps track changes and maintains consistency.

Effective test cases are not merely a checklist; they should provide detailed, reproducible steps that clearly demonstrate the functionality and identify potential defects. A well-written test case is crucial for consistent, reliable testing.
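The fields listed above map naturally onto a small record type. The Python sketch below is one hypothetical way to capture a positive and a negative test case and to flag cases that are not yet executable; the class name, field names, and login scenario are assumptions, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class ManualTestCase:
    """Hypothetical record mirroring the test case fields described above."""
    case_id: str
    name: str
    objective: str
    preconditions: list[str]
    steps: list[str]
    expected_result: str
    postconditions: list[str] = field(default_factory=list)
    test_data: dict[str, str] = field(default_factory=dict)

    def problems(self) -> list[str]:
        """Return reasons this case is not ready for execution."""
        issues = []
        if not self.case_id.strip():
            issues.append("missing ID")
        if not self.steps:
            issues.append("no test steps")
        if not self.expected_result.strip():
            issues.append("no expected result")
        return issues


positive = ManualTestCase(
    case_id="LOGIN-001",
    name="Login with valid credentials",
    objective="Registered user can sign in",
    preconditions=["User 'alice' exists"],
    steps=["Open login page", "Enter valid username and password", "Click Sign in"],
    expected_result="Dashboard is shown with 'Welcome, alice'",
    test_data={"username": "alice"},
)
negative = ManualTestCase(
    case_id="LOGIN-002",
    name="Login with wrong password",
    objective="Invalid credentials are rejected",
    preconditions=["User 'alice' exists"],
    steps=["Open login page", "Enter 'alice' and a wrong password", "Click Sign in"],
    expected_result="Error 'Invalid credentials' is shown; user stays on login page",
)
print(positive.problems(), negative.problems())  # [] []
```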

Q 13. Describe your experience with different testing environments.

I’ve worked with diverse testing environments, ranging from simple local setups to complex, cloud-based infrastructure. My experience includes:

- Local Development Environments: I often use local machines for initial unit and integration testing, configuring the necessary software and databases as needed. This allows for rapid iteration and immediate feedback during development.

- Staging Environments: These mirror the production environment as closely as possible. I use staging environments to perform system testing, ensuring the application behaves as expected in a near-production setting before deployment.

- Cloud-Based Environments (AWS, Azure): I have experience setting up and utilizing cloud-based environments for testing, especially for scalability and performance testing. Cloud environments enable simulating large user loads and testing applications across various geographical locations.

- Virtual Machines (VMs): VMs provide isolated test environments, preventing conflicts with other applications and ensuring consistent test results. This is particularly valuable for parallel testing.

Adapting to different environments is key. Understanding the specific configuration of each environment ensures the tests are relevant and the results are reliable. This includes managing different operating systems, databases, and network configurations.

Q 14. How do you manage your time effectively during testing?

Effective time management during testing is crucial for timely project delivery and high-quality results. My strategies include:

- Prioritization: I focus on high-priority test cases first, addressing critical functionalities and high-risk areas. This ensures that major defects are identified early.

- Test Automation: I automate repetitive tasks like regression tests, freeing up time for more complex testing activities like exploratory testing. Automation also speeds up the entire test cycle.

- Test Case Optimization: I regularly review and optimize existing test cases to improve efficiency and reduce redundancy. This ensures the test suite is concise and effective.

- Defect Tracking and Reporting: I meticulously track and report defects, ensuring timely resolution and reducing the need for extensive rework later in the cycle.

- Time Estimation: I accurately estimate time needed for different testing tasks, helping to create realistic schedules and avoid delays. I use time tracking tools and analyze past data to make better estimations.

- Collaboration and Communication: Effective communication with the team helps prevent bottlenecks and ensures efficient task allocation.

Continuous monitoring of progress, regular reviews of the test plan, and proactive adaptation to unforeseen challenges are key to staying on schedule and meeting quality goals.

Q 15. What are your preferred defect tracking systems?

My preferred defect tracking systems depend heavily on the project’s size and the development methodology used. For smaller projects, a lightweight setup such as a basic Jira project might suffice; its intuitive interface and Kanban board views make it easy to manage defects and track progress. For larger, more complex projects, however, I find that more heavily configured systems, such as Azure DevOps or even a custom-built system integrated with our CI/CD pipeline, are more beneficial. These systems allow for better traceability, custom workflows, and detailed reporting, which are crucial for managing a high volume of defects effectively.

In choosing a system, I prioritize features like robust searching and filtering capabilities, detailed reporting on defect trends, customizable workflows for different defect types (bugs, enhancements, tasks), and seamless integration with other project management tools. Ultimately, the ‘best’ system is the one that best supports the specific needs of the project and team.

Q 16. Explain the concept of risk-based testing.

Risk-based testing is a strategic approach to software testing where we prioritize testing efforts based on the potential impact and likelihood of defects. Instead of testing everything equally, we focus on areas and functionalities that pose the highest risk to the system’s success. Think of it like this: you wouldn’t spend the same amount of time checking the spelling on a welcome banner as you would checking the security of a payment gateway.

The process typically involves identifying potential risks through risk assessment (which could involve reviewing requirements, design documents, and past project data), analyzing the likelihood and impact of these risks, prioritizing test cases based on risk level, and then executing tests accordingly. This approach ensures that the most critical areas are thoroughly tested, even under tight deadlines, maximizing our return on investment in testing.

For example, if we’re testing an e-commerce site, a high-risk area would be the checkout process, given the potential for financial loss if it fails. We’d dedicate significantly more testing resources to this area than, say, testing the aesthetic aspects of the product images.
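A common way to express this is a risk score of likelihood × impact. The sketch below assumes 1-to-5 scales for both factors and illustrative thresholds for the high, medium, and low bands; the areas and ratings are hypothetical and mirror the e-commerce example.

```python
from dataclasses import dataclass


@dataclass
class RiskItem:
    area: str
    likelihood: int  # assumed scale: 1 (rare) .. 5 (almost certain)
    impact: int      # assumed scale: 1 (cosmetic) .. 5 (financial loss or outage)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def level(self) -> str:
        # Thresholds are illustrative assumptions, not a standard.
        if self.score >= 12:
            return "high"
        if self.score >= 6:
            return "medium"
        return "low"


def prioritize(items: list[RiskItem]) -> list[RiskItem]:
    """Highest risk first; the area name breaks ties deterministically."""
    return sorted(items, key=lambda item: (-item.score, item.area))


areas = [
    RiskItem("product image styling", likelihood=2, impact=1),
    RiskItem("checkout and payment", likelihood=3, impact=5),
    RiskItem("search filters", likelihood=3, impact=2),
]
for item in prioritize(areas):
    print(f"{item.area:25} score={item.score:2} level={item.level}")
```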

Q 17. How do you ensure test coverage?

Ensuring test coverage requires a multi-pronged approach. It’s not just about the quantity of tests, but also the quality and the extent to which they cover different aspects of the software. We aim for both high code coverage and high functional coverage.

For code coverage, we use tools that track which code executes during testing. Coverage tools such as JaCoCo (for Java) generate reports showing which parts of the code have been exercised and which haven’t, and platforms like SonarQube can import and display those reports alongside other quality metrics. A high percentage doesn’t always mean perfect testing, but it helps identify gaps. For functional coverage, we use techniques like requirement traceability matrices to ensure each requirement has corresponding test cases. Test case design techniques, such as equivalence partitioning and boundary value analysis, ensure systematic coverage of different input ranges and scenarios.

Beyond these methods, we use risk-based testing to guide our coverage efforts, focusing on high-risk areas. Regular reviews of test coverage reports and ongoing discussions with developers help to identify and address any gaps in coverage.
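The two test design techniques and the traceability check can be sketched in a few lines of Python. The example assumes a hypothetical quantity field that accepts integers from 1 to 100, plus made-up requirement and test case IDs.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Boundary value analysis for an inclusive integer range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def equivalence_representatives(minimum: int, maximum: int) -> dict[str, int]:
    """One representative input per partition: below, inside, above the range."""
    return {
        "below (invalid)": minimum - 10,
        "inside (valid)": (minimum + maximum) // 2,
        "above (invalid)": maximum + 10,
    }


def uncovered_requirements(requirements: set[str],
                           traceability: dict[str, set[str]]) -> set[str]:
    """Requirements that no test case traces back to."""
    covered = set().union(*traceability.values())
    return requirements - covered


requirements = {"REQ-1", "REQ-2", "REQ-3"}
matrix = {"TC-1": {"REQ-1"}, "TC-2": {"REQ-1", "REQ-2"}}
print(boundary_values(1, 100))                       # [0, 1, 2, 99, 100, 101]
print(equivalence_representatives(1, 100))
print(uncovered_requirements(requirements, matrix))  # {'REQ-3'}
```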

Q 18. Describe your experience with performance testing.

I have extensive experience in performance testing, encompassing load testing, stress testing, endurance testing, and spike testing. I’ve used tools like JMeter and LoadRunner to simulate realistic user loads on various applications, from small web applications to large-scale enterprise systems. My experience includes designing and executing performance tests, analyzing results, identifying performance bottlenecks, and working with developers to implement performance improvements.

In one project, I used JMeter to simulate 10,000 concurrent users accessing a newly developed e-commerce platform. The tests revealed a significant bottleneck in the database query performance, which was subsequently optimized by the development team. This resulted in a considerable improvement in the system’s responsiveness and overall performance under heavy load.

My performance testing approach is always data-driven. I collect baseline performance metrics and track improvements post-optimization, ensuring that any changes made don’t negatively impact other areas.
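Comparing a run against a baseline often focuses on a high percentile rather than the average, because averages hide slow outliers. Here is a minimal sketch using the standard `statistics.quantiles` function; the 95th percentile, the 10% tolerance, and the sample latencies are assumptions chosen for illustration.

```python
from statistics import quantiles


def p95(latencies_ms: list[float]) -> float:
    """95th percentile latency (needs at least two samples)."""
    # n=100 yields 99 cut points; index 94 is the 95th percentile.
    return quantiles(latencies_ms, n=100, method="inclusive")[94]


def is_regression(baseline_ms: list[float], current_ms: list[float],
                  tolerance: float = 0.10) -> bool:
    """True if the current p95 exceeds the baseline p95 by more than `tolerance`."""
    return p95(current_ms) > p95(baseline_ms) * (1 + tolerance)


baseline = [float(ms) for ms in range(100, 200)]  # hypothetical samples
after_change = [ms * 1.25 for ms in baseline]      # 25% slower across the board
print(is_regression(baseline, baseline))      # False
print(is_regression(baseline, after_change))  # True
```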

Q 19. What is load testing and how is it different from stress testing?

Load testing and stress testing are both types of performance testing, but they serve different purposes. Load testing aims to determine the application’s behavior under expected user load. We try to simulate the typical number of users accessing the application simultaneously during peak hours. The goal is to verify that the system handles the expected load while still meeting its performance targets, such as response time and error rate.

Stress testing, on the other hand, pushes the application beyond its expected limits to find its breaking point. We increase the load far beyond normal expectations to identify the system’s capacity and resilience under extreme conditions. The goal is to determine how the system behaves when overloaded and to identify vulnerabilities and potential failure points. For example, if we expect 1000 concurrent users, load testing would simulate that, while stress testing might involve simulating 10,000 or even 100,000 to find where the system crashes or becomes unresponsive.
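Real load and stress tests generate traffic with tools such as JMeter, but the difference in intent can be shown with a deterministic toy model. In the Python sketch below, `simulated_error_rate` is a hypothetical stand-in for the system under test with an assumed capacity; the load test checks one expected level, while the stress test keeps doubling the load until an assumed 1% error budget is exceeded.

```python
from typing import Optional


def simulated_error_rate(users: int, capacity: int) -> float:
    """Hypothetical model: requests beyond `capacity` concurrent users fail."""
    if users <= capacity:
        return 0.0
    return (users - capacity) / users


def load_test(expected_users: int, capacity: int,
              max_error_rate: float = 0.01) -> bool:
    """Load test: does the system stay within budget at the expected load?"""
    return simulated_error_rate(expected_users, capacity) <= max_error_rate


def stress_test(start_users: int, capacity: int, max_error_rate: float = 0.01,
                growth: int = 2, ceiling: int = 1_000_000) -> Optional[int]:
    """Stress test: raise the load until the error budget is exceeded."""
    users = start_users
    while users <= ceiling:
        if simulated_error_rate(users, capacity) > max_error_rate:
            return users  # first load level at which the system "breaks"
        users *= growth
    return None  # no breaking point found below the ceiling


print(load_test(1000, capacity=5000))    # True
print(stress_test(1000, capacity=5000))  # 8000
```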

Q 20. How do you handle conflicts with developers during the testing process?

Conflicts with developers are inevitable during the testing process, but they can be effectively managed through open communication and collaboration. The key is to maintain a professional and respectful atmosphere while focusing on the common goal of delivering high-quality software.

When a conflict arises, I always start by calmly presenting my findings and the evidence supporting my observations. I avoid blaming and instead focus on the objective impact of the bug or issue. I explain the impact on the user experience and the potential risks. Then, I work collaboratively with the developers to reproduce the issue and brainstorm solutions. I find that a joint debugging session, where we work together to understand the root cause, is often very effective in resolving the conflict. Utilizing the chosen defect tracking system to document the issue and its resolution ensures accountability and transparency.

In situations where disagreement persists, involving a senior team member or project manager can help to mediate and provide an objective perspective. The goal is always to find a constructive solution that satisfies both testing and development requirements.

Q 21. What are some common challenges in software testing?

Software testing presents numerous challenges, varying from project to project. Some common ones include:

- Time constraints: Testing deadlines are often tight, leaving insufficient time for thorough testing.

- Resource limitations: Limited budget and personnel can restrict testing scope and depth.

- Changing requirements: Late changes in requirements can impact test plans and necessitate extensive rework.

- Testing complex systems: Testing intricate systems with numerous interdependencies can be extremely challenging.

- Lack of clear test specifications: Ambiguous or incomplete test specifications can lead to misunderstandings and inconsistencies.

- Difficult-to-reproduce defects: Some defects occur intermittently and are hard to reproduce, making them difficult to track and fix.

- Keeping up with technology: The rapid pace of technological advancements requires continuous learning and adaptation.

Addressing these challenges requires proactive planning, effective communication, collaboration with developers, and utilization of appropriate testing tools and methodologies.

Q 22. How do you ensure the quality of your test data?

Ensuring high-quality test data is crucial for reliable testing. It’s like building a house – you wouldn’t use substandard materials, would you? Poor test data leads to inaccurate results and can mask real defects. My approach involves several key steps:

Data Generation Strategies: I leverage various techniques depending on the application. This can include using data generation tools that mimic real-world data patterns, or even writing custom scripts to produce specific data sets. For instance, for testing an e-commerce application, I might use a tool to generate realistic customer profiles, product details, and order information.

Data Cleansing and Validation: Once the data is generated, it needs thorough cleansing. This involves checking for duplicates, inconsistencies, and invalid data points. I employ automated scripts and validation rules to ensure data accuracy and integrity. For example, I would check for realistic address formats, valid credit card numbers (using checksum validation), and correct date ranges.

Data Subsetting and Masking: To protect sensitive information, I use techniques like data masking to replace sensitive data with realistic but non-sensitive values. For example, I would replace real credit card numbers with fake ones that still follow the necessary formatting and validation rules. Subsetting allows us to work with manageable datasets representing diverse scenarios without the overhead of the full dataset.

Test Data Management Tools: To streamline the process, I utilize test data management (TDM) tools. These tools help automate data generation, cleansing, and masking, improving efficiency and consistency.

By following these steps, I ensure that the test data accurately reflects real-world scenarios and provides reliable results, ultimately leading to higher-quality software.
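The checksum validation and format-preserving masking described above can be sketched in Python with the Luhn (mod 10) algorithm that payment card numbers use. The function names are hypothetical; `4111111111111111` is a widely used test card number that passes the check. Random Luhn-valid numbers are not guaranteed to be unassigned, so some teams prefer issuer-published test numbers instead.

```python
import random


def luhn_valid(number: str) -> bool:
    """Luhn (mod 10) checksum used by payment card numbers."""
    if not number.isdigit():
        return False
    total = 0
    for position, char in enumerate(reversed(number)):
        digit = int(char)
        if position % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def mask_card_number(real_number: str, rng: random.Random) -> str:
    """Replace a card number with a random one of the same length that still passes Luhn."""
    if len(real_number) < 2 or not real_number.isdigit():
        raise ValueError("expected a numeric card number")
    body = "".join(str(rng.randrange(10)) for _ in range(len(real_number) - 1))
    # Exactly one check digit makes the total divisible by 10.
    for check_digit in "0123456789":
        if luhn_valid(body + check_digit):
            return body + check_digit
    raise AssertionError("unreachable: one check digit is always valid")


rng = random.Random(42)  # seeded so generated test data is reproducible
print(luhn_valid("4111111111111111"))  # True
print(luhn_valid("4111111111111112"))  # False
masked = mask_card_number("4111111111111111", rng)
print(len(masked), luhn_valid(masked))  # 16 True
```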

Q 23. Explain your experience with security testing.

Security testing is a critical aspect of software development, and I have extensive experience in various methodologies. My experience includes:

Static Application Security Testing (SAST): I’ve used SAST tools to analyze code for vulnerabilities without actually executing it. Think of it like a spell-checker for security – it helps identify potential issues early in the development lifecycle.

Dynamic Application Security Testing (DAST): DAST involves testing the running application to find vulnerabilities that may not be apparent in the code. This is like actually trying to break into a system – discovering weaknesses through real-world attacks (simulated, of course!).

Penetration Testing: I have experience conducting penetration tests, simulating real-world attacks to identify security vulnerabilities. It’s like hiring ethical hackers to test the defenses of a system. This requires a strong understanding of common attack vectors and how to exploit them responsibly.

Security Scanning Tools: I am proficient in using various security scanning tools to automate vulnerability detection. These tools can scan code, databases, and network infrastructure for known weaknesses, giving a more comprehensive assessment.

I ensure that all security testing activities are performed in accordance with established best practices and industry standards, and always prioritize responsible disclosure of any discovered vulnerabilities.
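To illustrate the idea behind SAST, here is a deliberately tiny Python scanner that parses source code with the standard `ast` module and flags calls to `eval` and `exec` without ever running the code. Real tools (for Python, Bandit is one example) apply much larger rule sets and often data-flow analysis; the rule set here is an assumption for illustration.

```python
import ast

# Illustrative rule set only.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Statically scan Python source (without executing it) for risky builtin calls."""
    tree = ast.parse(source)
    findings = []
    for node in ast.walk(tree):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id in RISKY_CALLS):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)  # ast.walk is breadth-first, so sort by line


sample = """
user_input = input()
result = eval(user_input)
print(result)
"""
print(find_risky_calls(sample))  # [(3, 'eval')]
```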

Q 24. How do you stay up-to-date with the latest testing methodologies and technologies?

The field of software testing is constantly evolving, so continuous learning is essential. I stay updated through several strategies:

Professional Certifications: Pursuing certifications like ISTQB (International Software Testing Qualifications Board) demonstrates commitment and keeps my skills current.

Online Courses and Workshops: Platforms like Coursera, Udemy, and LinkedIn Learning offer courses on the latest testing methodologies and tools.

Industry Conferences and Webinars: Attending conferences and webinars provides opportunities to learn from industry experts and network with other professionals. It’s a great way to learn about cutting-edge techniques and trends.

Technical Blogs and Publications: Following reputable blogs and publications keeps me informed about new tools, techniques, and industry best practices.

Hands-on Practice: I actively seek out opportunities to work with new tools and technologies to gain practical experience. Nothing beats real-world application.

This multifaceted approach ensures I remain knowledgeable and proficient in the latest testing advancements.

Q 25. What is your experience with automation frameworks?

I possess extensive experience with various automation frameworks, including Selenium, Appium, and Cypress. My expertise extends beyond simply using these tools; I understand the underlying principles of test automation and how to choose the right framework for a specific project.

Selenium: I’ve used Selenium WebDriver to automate web application testing, creating robust and maintainable automated tests across different browsers and platforms.

Appium: My experience with Appium allows me to automate testing for mobile applications (both Android and iOS).

Cypress: I’ve used Cypress for its ease of use and strong debugging support, especially for modern web applications. It works particularly well for end-to-end testing.

Framework Design and Implementation: I understand the importance of designing efficient and maintainable automation frameworks. This includes the use of Page Object Model (POM) to improve code reusability and reduce maintenance costs, and TestNG or JUnit for effective test management and reporting.

I can also integrate these frameworks with CI/CD pipelines to automate the testing process and enable faster feedback loops.
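The Page Object Model mentioned above can be sketched without a real browser. In this minimal sketch, `FakeDriver` is a hypothetical stand-in for a browser driver that only records typed values and clicks. The `LoginPage` class, its locators, and the password check are also invented for illustration. What matters is the structure: tests call page methods, and locators live in one class. If the UI changes, you edit that one class rather than every test.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDriver:
    """Hypothetical stand-in for a browser driver; it only records interactions."""
    fields: dict = field(default_factory=dict)
    clicks: list = field(default_factory=list)
    current_url: str = "/login"

    def type_into(self, locator: str, text: str) -> None:
        self.fields[locator] = text

    def click(self, locator: str) -> None:
        self.clicks.append(locator)
        # Simulated application behavior: a correct password opens the dashboard.
        if locator == "#login-button" and self.fields.get("#password") == "s3cret":
            self.current_url = "/dashboard"


class LoginPage:
    """Page object: the only place that knows the login page's locators."""
    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#login-button"

    def __init__(self, driver: FakeDriver) -> None:
        self.driver = driver

    def login(self, username: str, password: str) -> None:
        self.driver.type_into(self.USERNAME, username)
        self.driver.type_into(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)


def test_valid_login_reaches_dashboard() -> None:
    driver = FakeDriver()
    LoginPage(driver).login("alice", "s3cret")
    assert driver.current_url == "/dashboard"


def test_invalid_login_stays_on_login_page() -> None:
    driver = FakeDriver()
    LoginPage(driver).login("alice", "wrong")
    assert driver.current_url == "/login"


if __name__ == "__main__":
    test_valid_login_reaches_dashboard()
    test_invalid_login_stays_on_login_page()
    print("page-object tests passed")
```

With Selenium, the two driver methods would wrap element lookups and `send_keys`/`click` calls on a real WebDriver. The tests themselves would not change.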

Q 26. Describe a time you had to troubleshoot a complex testing issue.

During a recent project involving a complex e-commerce platform, we encountered a frustrating issue where automated tests intermittently failed. The error messages were vague, providing little insight into the root cause.

My troubleshooting approach was systematic:

Reproduce the Error: I first focused on consistently reproducing the error. This involved running the failing tests multiple times under varying conditions to identify any patterns.

Log Analysis: I thoroughly examined the logs generated during test execution. This revealed that the failure was related to network latency issues, occurring only when the server load was high.

Environment Analysis: This led me to investigate the test environment. We discovered that the test environment wasn’t accurately mirroring the production environment’s load capacity. This was a significant factor in the intermittent failures.

Solution Implementation: To resolve this, we implemented strategies to simulate higher loads on the test environment. This allowed us to uncover and fix the underlying code defects causing the failures under stress.

Root Cause Analysis: Beyond fixing the immediate issue, I conducted a root cause analysis to prevent similar situations in the future. We implemented better monitoring of test environment performance and adjusted our testing strategies to simulate realistic load conditions more effectively.

This experience highlighted the importance of thorough investigation, systematic troubleshooting, and the need for test environments that accurately mimic real-world conditions.
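The "Reproduce the Error" step can be sketched as a small harness. It reruns a test many times under each condition and tallies the failure rate per condition, which is how a pattern such as "fails only under high load" becomes visible. `flaky_checkout_test`, its latency model, and the 500 ms timeout are all hypothetical. A seeded random generator keeps the sketch deterministic.

```python
import random
from collections import Counter

TIMEOUT_MS = 500  # hypothetical client timeout used by the test


def flaky_checkout_test(load: str, rng: random.Random) -> bool:
    """Hypothetical test: passes if a simulated response beats the timeout.

    Latency is modeled as higher and more variable under high load.
    """
    base, jitter = (100, 100) if load == "low" else (350, 300)
    latency_ms = base + rng.uniform(0, jitter)
    return latency_ms < TIMEOUT_MS


def rerun(test, conditions, runs_per_condition: int, seed: int = 42) -> dict:
    """Run `test` repeatedly for each condition and return its failure rate."""
    rng = random.Random(seed)
    failures = Counter()
    for condition in conditions:
        for _ in range(runs_per_condition):
            if not test(condition, rng):
                failures[condition] += 1
    return {c: failures[c] / runs_per_condition for c in conditions}


if __name__ == "__main__":
    rates = rerun(flaky_checkout_test, ["low", "high"], runs_per_condition=200)
    for condition, rate in rates.items():
        print(f"{condition:>4} load: {rate:.1%} failures")
```

Under this model, low load never fails, while high load fails about half the time. That split points toward the environment rather than the test logic.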

Q 27. How do you contribute to continuous improvement in the testing process?

Contributing to continuous improvement in the testing process is an ongoing commitment. My contributions involve several key actions:

Test Process Optimization: I regularly analyze the testing process to identify inefficiencies and bottlenecks. This involves reviewing test case execution times, defect densities, and overall cycle times. For instance, I might suggest implementing parallel test execution or adopting more efficient test case design techniques.

Defect Tracking and Analysis: I meticulously track and analyze defects. This involves identifying recurring issues and patterns which helps us focus improvement efforts on the most problematic areas.

Test Automation Enhancement: I constantly look for ways to improve our test automation strategy. This might include adopting new automation tools or improving our test framework’s design and architecture.

Knowledge Sharing and Training: I actively share my knowledge and expertise with other team members. This includes mentoring junior testers and providing training on new technologies or best practices.

Feedback and Collaboration: I actively seek and provide feedback on the testing process and share suggestions for improvement. This ensures that the entire team is involved in the continuous improvement effort.

This proactive approach fosters a culture of continuous improvement, resulting in more efficient and effective testing processes.
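Defect density, mentioned under Test Process Optimization, is commonly computed as the number of defects divided by code size, often measured in thousands of lines of code (KLOC). The sketch below computes density per module. It also counts recurring defect categories, which is the kind of pattern-spotting described under Defect Tracking and Analysis. The module names, sizes, and defect records are hypothetical sample data.

```python
from collections import Counter

# Hypothetical sample data: one (module, category) pair per logged defect,
# plus module sizes in lines of code.
DEFECTS = [
    ("checkout", "validation"),
    ("checkout", "validation"),
    ("checkout", "timeout"),
    ("search", "ui"),
    ("payments", "validation"),
    ("payments", "timeout"),
]
MODULE_LOC = {"checkout": 4_000, "search": 10_000, "payments": 2_000}


def defect_density(defects, module_loc) -> dict[str, float]:
    """Defects per KLOC for each module (modules with no defects get 0.0)."""
    counts = Counter(module for module, _ in defects)
    return {m: counts[m] / (loc / 1000) for m, loc in module_loc.items()}


def recurring_categories(defects, min_count: int = 2) -> list[tuple[str, int]]:
    """Defect categories seen at least `min_count` times, most frequent first."""
    counts = Counter(category for _, category in defects)
    return [(c, n) for c, n in counts.most_common() if n >= min_count]


if __name__ == "__main__":
    density = defect_density(DEFECTS, MODULE_LOC)
    for module, value in sorted(density.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{module:<9} {value:.2f} defects/KLOC")
    print("recurring:", recurring_categories(DEFECTS))
```

Here `payments` has the fewest defects in absolute terms after `checkout`, yet it has the highest density (1.00 defects/KLOC). This is why normalizing by size helps direct improvement effort.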

Q 28. What is your experience with non-functional testing?

Non-functional testing is crucial for ensuring the overall quality and usability of an application. It focuses on aspects beyond the basic functionality, such as performance, security, and usability. My experience in this area covers:

Performance Testing: I have conducted various performance tests including load testing (determining the application’s behavior under various user loads), stress testing (pushing the application beyond its limits), and endurance testing (assessing its stability over extended periods). I utilize tools like JMeter and LoadRunner to simulate realistic user loads and analyze performance metrics.

Usability Testing: I have experience planning and executing usability tests, observing users as they interact with the application to identify areas for improvement. This includes gathering feedback, identifying pain points, and suggesting design changes to improve the user experience.

Security Testing: As discussed earlier, security testing is a vital component of non-functional testing. It ensures the application is protected against various security threats.

Compatibility Testing: I have experience in verifying compatibility across different browsers, devices, and operating systems. This ensures the application functions correctly in various environments.

Understanding and addressing these non-functional aspects are crucial for delivering a high-quality user experience and a robust application.
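Tools like JMeter and LoadRunner handle load testing at scale. The core idea can still be sketched in a few lines: fire many concurrent requests, record each latency, and report percentiles rather than a single average. `handle_request` is a hypothetical stand-in for the system under test and simply sleeps briefly. In a real test it would be an HTTP call, and the request and user counts here are arbitrary.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(i: int) -> None:
    """Hypothetical system under test: simulate 10-18 ms of work."""
    time.sleep(0.01 + (i % 5) * 0.002)


def timed_call(i: int) -> float:
    """Invoke the system under test once and return the elapsed time in ms."""
    start = time.perf_counter()
    handle_request(i)
    return (time.perf_counter() - start) * 1000


def load_test(total_requests: int, concurrent_users: int) -> dict[str, float]:
    """Send requests through `concurrent_users` worker threads; summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    # quantiles(n=100) returns 99 cut points; index k-1 approximates the k-th percentile.
    cuts = statistics.quantiles(latencies, n=100)
    return {
        "requests": float(len(latencies)),
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "max_ms": max(latencies),
    }


if __name__ == "__main__":
    summary = load_test(total_requests=100, concurrent_users=10)
    for key, value in summary.items():
        print(f"{key}: {value:.1f}")
```

Increasing `concurrent_users` or `total_requests` step by step turns the same harness into a crude stress test. Running it for a long duration approximates an endurance test.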

Key Topics to Learn for Understanding of Quality Control and Testing Methodologies Interview

- Software Development Life Cycle (SDLC) Models: Understanding different SDLC models (Waterfall, Agile, DevOps) and how quality control and testing are integrated at each stage. Practical application: Explain how testing activities differ in Agile vs. Waterfall projects.

- Testing Types and Methodologies: Mastering various testing types (unit, integration, system, acceptance, regression, performance, security) and methodologies (black-box, white-box, grey-box). Practical application: Describe a scenario where you would use each testing type and explain your choice.

- Test Case Design Techniques: Familiarize yourself with techniques like equivalence partitioning, boundary value analysis, decision table testing, and state transition testing. Practical application: Design test cases for a specific functionality using at least two different techniques.

- Defect Tracking and Management: Understand the process of identifying, reporting, tracking, and resolving defects using bug tracking tools (Jira, Bugzilla, etc.). Practical application: Describe your experience with a defect life cycle and the tools you’ve used.

- Quality Metrics and Reporting: Learn how to collect, analyze, and present quality metrics (defect density, test coverage, etc.) to stakeholders. Practical application: Explain how you would measure the effectiveness of a testing process.

- Automation Testing Frameworks: Gain familiarity with popular automation frameworks (Selenium, Appium, Cypress) and their applications. Practical application: Discuss your experience with test automation and the benefits it offers.

- Risk Management in Testing: Understand how to identify and mitigate risks associated with software quality and testing. Practical application: Explain how you’d approach risk assessment in a project with tight deadlines.
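Here is a worked example of two of the test case design techniques listed above. Consider a hypothetical rule that an age field accepts whole numbers from 18 to 65 inclusive. Equivalence partitioning picks one representative per class: below the range, inside it, and above it. Boundary value analysis adds the values at and just beside each edge. The `is_valid_age` validator and its range are assumptions for illustration.

```python
MIN_AGE, MAX_AGE = 18, 65  # hypothetical valid range, inclusive


def is_valid_age(age: int) -> bool:
    """Hypothetical function under test."""
    return MIN_AGE <= age <= MAX_AGE


def equivalence_partition_cases() -> list[tuple[int, bool]]:
    """One representative per partition: below range, in range, above range."""
    return [(10, False), (40, True), (70, False)]


def boundary_value_cases(low: int, high: int) -> list[tuple[int, bool]]:
    """Values just below, at, and just above each boundary, with expected results."""
    return [
        (low - 1, False), (low, True), (low + 1, True),
        (high - 1, True), (high, True), (high + 1, False),
    ]


def run_cases(cases: list[tuple[int, bool]]) -> list[int]:
    """Return the inputs whose actual result differs from the expected one."""
    return [value for value, expected in cases if is_valid_age(value) != expected]


if __name__ == "__main__":
    cases = equivalence_partition_cases() + boundary_value_cases(MIN_AGE, MAX_AGE)
    failures = run_cases(cases)
    print(f"{len(cases)} cases, {len(failures)} failures")
```

An off-by-one bug such as writing `MIN_AGE < age` would pass all three partition cases but fail the boundary case at 18. That is why the two techniques are usually combined.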

Next Steps

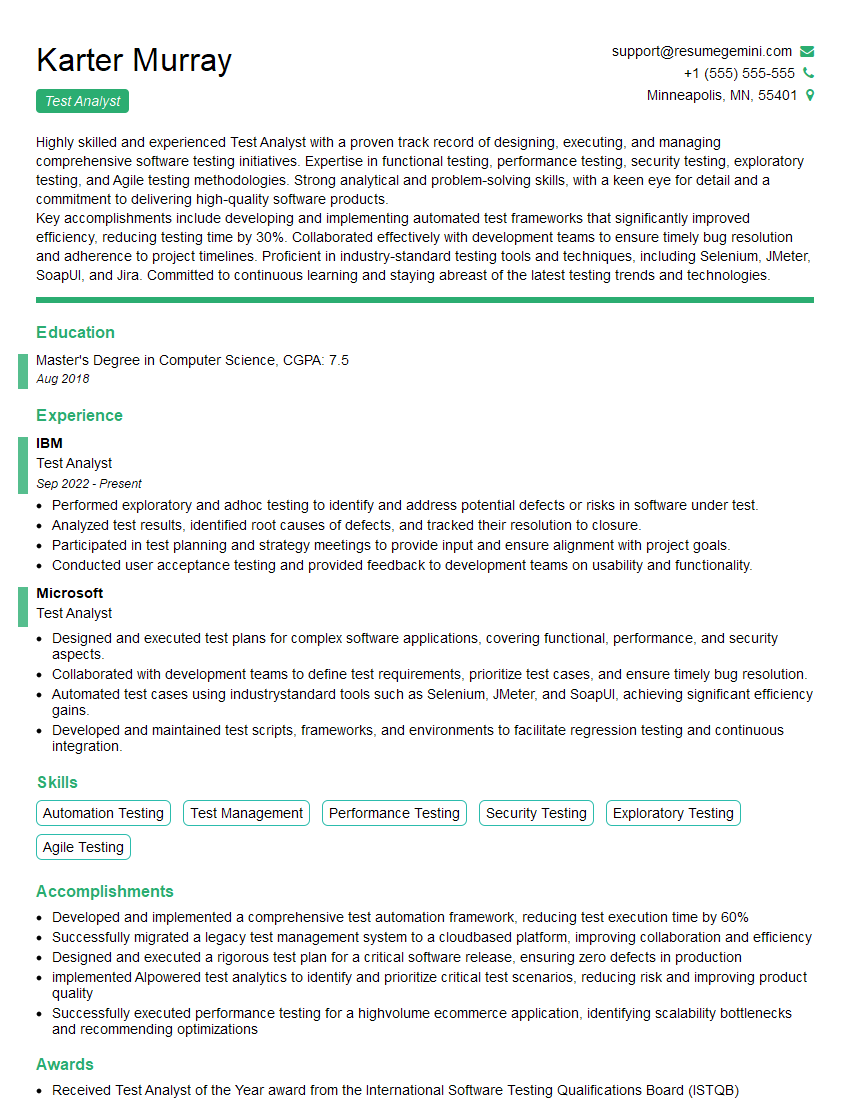

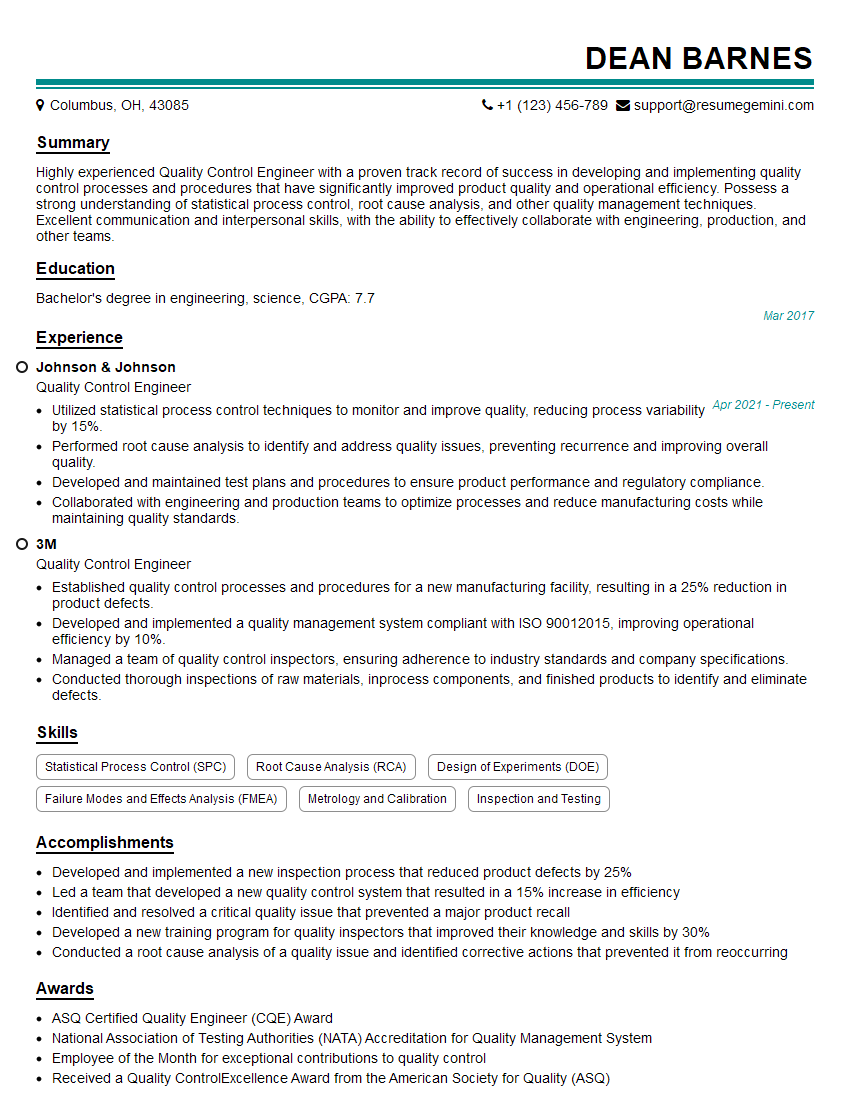

Mastering quality control and testing methodologies is crucial for career advancement in the software industry. A strong understanding of these concepts opens doors to higher-paying roles and more challenging projects. To significantly boost your job prospects, create an ATS-friendly resume that highlights your skills and experience effectively. ResumeGemini is a trusted resource that can help you build a professional and impactful resume. We provide examples of resumes tailored to highlight expertise in quality control and testing methodologies; use these examples to craft your winning resume!
