Are you ready to stand out in your next interview? Understanding and preparing for Web interview questions is a game-changer. In this blog, we’ve compiled key questions and expert advice to help you showcase your skills with confidence and precision. Let’s get started on your journey to acing the interview.

Questions Asked in Web Interview

Q 1. Explain the difference between HTTP and HTTPS.

HTTP (Hypertext Transfer Protocol) and HTTPS (Hypertext Transfer Protocol Secure) are the foundation of data communication on the web. The key difference lies in security. HTTP is an unencrypted protocol, meaning data transmitted between a client (like your browser) and a server is sent in plain text. Anyone intercepting this communication can read the data. Think of it like sending a postcard: everyone can see what’s written on it. HTTPS, on the other hand, uses TLS (Transport Layer Security, the successor to the now-deprecated SSL, Secure Sockets Layer) to encrypt the communication and to verify the server’s identity through a certificate. This encryption ensures that only the sender and receiver can understand the data, much like sending a letter in a sealed envelope.

In practice, this means that using HTTPS is crucial for websites handling sensitive information like passwords, credit card details, or personal data. The ‘s’ in HTTPS signifies this added security layer, and you’ll usually see a padlock icon in your browser’s address bar to indicate a secure connection.

Q 2. What are REST APIs and how do they work?

REST (Representational State Transfer) is an architectural style for building web services, and REST APIs are the interfaces that follow it. They allow different software systems to communicate and exchange data over the internet using standard HTTP methods. Imagine a restaurant; the waiter (API) takes your order (request) and sends it to the kitchen (server). The kitchen prepares the food (processes data) and sends it back to the waiter, who then delivers it to you (response).

REST APIs use standard HTTP methods like GET, POST, PUT, and DELETE to perform different actions on resources. Each resource is identified by a unique URI (Uniform Resource Identifier), and the response is usually in a standard format like JSON or XML. This standardized approach makes REST APIs highly flexible and easy to integrate with various systems.

For example, a social media API might use a GET request to retrieve a user’s profile information, a POST request to create a new post, a PUT request to update an existing post, and a DELETE request to delete a post. These are all done through URLs designed to specifically target those resources.
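The mapping from HTTP methods to actions described above can be sketched without a real server. This is a minimal in-memory illustration, not a production API: the `handle` function, the `/posts` paths, and the response shape are hypothetical names chosen for this example.

```javascript
// Hypothetical in-memory store standing in for a database.
const posts = new Map();
let nextId = 1;

// Dispatch a request to an action based on its HTTP method and path.
function handle(method, path, body) {
  const match = path.match(/^\/posts(?:\/(\d+))?$/);
  if (!match) return { status: 404 };
  const id = match[1] ? Number(match[1]) : null;

  // POST /posts creates a new resource and assigns it an id.
  if (method === "POST" && id === null) {
    const post = { ...body, id: nextId++ };
    posts.set(post.id, post);
    return { status: 201, body: post };
  }
  if (id === null || !posts.has(id)) return { status: 404 };

  switch (method) {
    case "GET": // read the resource
      return { status: 200, body: posts.get(id) };
    case "PUT": // replace the resource with the supplied data
      posts.set(id, { ...body, id });
      return { status: 200, body: posts.get(id) };
    case "DELETE": // remove the resource
      posts.delete(id);
      return { status: 204 };
    default:
      return { status: 405 };
  }
}

const created = handle("POST", "/posts", { text: "Hello" });
console.log(created.status, handle("GET", `/posts/${created.body.id}`).status);
```

Each URL identifies one resource, and the method alone decides what happens to it, which is the core idea the restaurant analogy is describing.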

Q 3. Describe the different types of HTTP methods (GET, POST, PUT, DELETE).

HTTP methods define the type of action a client wants to perform on a server resource. They’re like verbs in a sentence, describing what to do with the data.

- GET: Retrieves data from the server. It’s used to read information; think of it as asking a question. Example: Getting a list of products from an e-commerce website.

- POST: Sends data to the server to create or update a resource. This is like making a statement or submitting information. Example: Submitting a new user registration form.

- PUT: Updates an existing resource on the server. Think of it as replacing the entire resource with new data. Example: Completely replacing a user profile with updated details.

- DELETE: Deletes a resource from the server. This is like removing something. Example: Deleting a user account.

While these are the most common, other methods exist such as PATCH (for partial updates) and HEAD (for retrieving only headers).
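The difference between a full replacement (PUT) and a partial update (PATCH) is easy to miss, so here is a small sketch of the two behaviors. The helper names `applyPut` and `applyPatch` are hypothetical, and PATCH is modeled as a shallow merge, which is one common convention rather than the only possible one.

```javascript
// PUT semantics: the stored resource becomes exactly what the client sent.
function applyPut(_existing, replacement) {
  return { ...replacement };
}

// PATCH semantics (modeled here as a shallow merge): only the sent fields change.
function applyPatch(existing, changes) {
  return { ...existing, ...changes };
}

const user = { name: "Ada", email: "ada@example.com" };
console.log(applyPut(user, { name: "Ada L." }));   // email is dropped
console.log(applyPatch(user, { name: "Ada L." })); // email is kept
```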

Q 4. What is the difference between a cookie and local storage?

Both cookies and local storage are mechanisms for storing data in a user’s web browser, but they have key differences.

- Cookies: Usually set by the server through the Set-Cookie response header (they can also be set from JavaScript) and stored by the browser. They’re primarily used for session management and tracking user preferences. The browser sends them back automatically with every request to the matching domain, which is how the server that set them can read them. They are limited in size (around 4KB per cookie). Cookies can also carry an expiration date; without one, they are session cookies that last only until the browser is closed.

- Local Storage: Client-side storage that’s entirely managed by the browser. The server cannot access it directly. Local storage offers larger storage capacity (typically 5MB or more). Data persists even after the browser is closed.

In essence, cookies are designed for server-side interaction, whereas local storage is focused on enhancing the user experience on the client-side. A common use for local storage is storing application data that does not require server interaction, like user settings or game progress.
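To make the cookie side concrete, the sketch below builds the value of a `Set-Cookie` response header. The function name `serializeCookie` and its option names are hypothetical; the attributes it emits (`Path`, `Max-Age`, `Secure`, `HttpOnly`, `SameSite`) are standard cookie attributes.

```javascript
// Build the value of a Set-Cookie response header.
// Omitting maxAgeSeconds produces a session cookie.
function serializeCookie(name, value, options = {}) {
  const { maxAgeSeconds, httpOnly = true, secure = true, sameSite = "Lax" } = options;
  const parts = [`${name}=${encodeURIComponent(value)}`, "Path=/"];
  if (maxAgeSeconds !== undefined) parts.push(`Max-Age=${maxAgeSeconds}`);
  if (secure) parts.push("Secure");       // only send over HTTPS
  if (httpOnly) parts.push("HttpOnly");   // hide from page JavaScript
  parts.push(`SameSite=${sameSite}`);
  return parts.join("; ");
}

console.log(serializeCookie("theme", "dark", { maxAgeSeconds: 3600 }));
console.log(serializeCookie("sid", "abc123"));
```

By contrast, local storage is read and written only from page scripts in the browser, for example with `localStorage.setItem("theme", "dark")` and `localStorage.getItem("theme")`, and nothing is attached to outgoing requests.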

Q 5. Explain the concept of AJAX and its uses.

AJAX (Asynchronous JavaScript and XML) is a technique for updating parts of a web page without reloading the entire page. Think of it as sending a quick message to the server in the background while you continue using the webpage. This is achieved using JavaScript to make asynchronous HTTP requests.

The primary use of AJAX is to improve the user experience by making web applications more responsive. For example, auto-suggest features in search bars or real-time chat applications heavily rely on AJAX to fetch and display data without interrupting user interaction. It allows for dynamic updates and enhances interactivity without the lag of full page refreshes.
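An auto-suggest request of the kind mentioned above might look roughly like this. It is a sketch under assumptions: the `/api/suggest` endpoint and the `fetchSuggestions` name are hypothetical, and a stand-in for the browser’s `fetch` is passed in so the example runs without a network.

```javascript
// Fetch search suggestions in the background and return them as an array.
// fetchImpl is injectable so the function can be exercised without a network.
async function fetchSuggestions(query, fetchImpl = globalThis.fetch) {
  const url = `/api/suggest?q=${encodeURIComponent(query)}`; // hypothetical endpoint
  const response = await fetchImpl(url, { headers: { Accept: "application/json" } });
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  return response.json();
}

// Stand-in for the browser's fetch, used here only to make the sketch runnable.
const fakeFetch = async () => ({
  ok: true,
  status: 200,
  json: async () => ["react", "redux"],
});

fetchSuggestions("re", fakeFetch).then((items) => console.log(items));
```

In a real page, the resolved list would be rendered into the suggestion dropdown while the rest of the page stays untouched, which is the behavior AJAX is known for.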

Q 6. What are some common JavaScript frameworks or libraries you’ve used (React, Angular, Vue, etc.)?

I have extensive experience with several JavaScript frameworks. React is my go-to for building user interfaces because of its component-based architecture and virtual DOM for efficient updates. I’ve also used Angular extensively for large-scale enterprise applications, appreciating its structured approach and powerful features. Vue.js is another framework I’m familiar with, often preferred for its ease of learning and integration into existing projects. The choice of framework often depends on project requirements and team expertise. For example, a small-scale project might benefit from Vue’s simplicity, while a large-scale project would likely need the robust structure and tooling of Angular or React.

Q 7. How do you handle cross-browser compatibility issues?

Cross-browser compatibility is a critical aspect of web development. Different browsers (Chrome, Firefox, Safari, Edge, etc.) render web pages slightly differently, leading to inconsistencies in how elements are displayed or how features behave. This is mostly due to differences in rendering engines and JavaScript implementations.

To address this, I use several strategies:

- CSS Reset/Normalize: Employing a CSS reset or normalize library (like normalize.css) helps standardize the default styles of different browsers, minimizing inconsistencies.

- Feature Detection: Instead of relying on browser sniffing (detecting the browser type), I employ feature detection. This involves checking if a browser supports a particular feature before using it. This ensures compatibility across various browsers and versions.

- Testing across browsers: Thorough testing in different browsers and on different devices is crucial to ensure the application functions as intended across various platforms.

- Using polyfills: Polyfills are pieces of code that provide functionality missing in older browsers. They ensure consistency across different browser versions.

- Responsive Design Principles: Following responsive design principles ensures the application adapts gracefully to different screen sizes and devices.

By using a combination of these techniques, I aim for consistent and reliable performance across various browsers, delivering a seamless user experience.
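Feature detection, as opposed to browser sniffing, can be sketched in a few lines. The function name `chooseLazyLoadStrategy` and the two strategy labels are hypothetical; `IntersectionObserver` is a real browser API used here only as the feature being checked.

```javascript
// Decide how to lazy-load images based on what the environment supports,
// rather than on which browser it claims to be.
function chooseLazyLoadStrategy(env = globalThis) {
  if ("IntersectionObserver" in env) return "intersection-observer";
  return "scroll-listener"; // fallback for environments without the API
}

console.log(chooseLazyLoadStrategy({ IntersectionObserver: function () {} }));
console.log(chooseLazyLoadStrategy({}));
```

Passing the environment as a parameter is a design choice for testability; in a page you would simply call the function with no arguments.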

Q 8. Describe your experience with responsive web design.

Responsive web design is the practice of building websites that adapt seamlessly to different screen sizes and devices. Instead of creating separate websites for desktops, tablets, and smartphones, responsive design uses techniques like flexible layouts, flexible images, and media queries to ensure a consistent and optimal user experience across all platforms.

My experience includes working with various responsive design frameworks, such as Bootstrap and Tailwind CSS. I’m proficient in using CSS media queries to adjust layouts based on screen width, viewport height, and device orientation. For example, I’ve used media queries to stack elements vertically on smaller screens while maintaining a horizontal layout on larger screens. I also have experience optimizing images for different screen densities using techniques like responsive images (<img src="image-small.jpg" srcset="image-small.jpg 1x, image-medium.jpg 2x, image-large.jpg 3x" alt="Description of the image">) to reduce page load times. In a recent project, I redesigned a client’s website, utilizing a flexible grid system and media queries to ensure it displayed perfectly on everything from small mobile phones to large desktop monitors, resulting in a significant increase in user engagement and mobile conversions.

Q 9. Explain the importance of SEO best practices.

SEO (Search Engine Optimization) best practices are crucial for increasing a website’s visibility in search engine results pages (SERPs). They involve a range of techniques to improve organic (non-paid) search rankings. The importance lies in driving more relevant traffic to your website, leading to increased brand awareness, lead generation, and ultimately, higher sales or conversions.

- Keyword Research: Identifying relevant keywords that users search for related to your website’s content is the foundation. Tools like Google Keyword Planner help in finding the right terms.

- On-Page Optimization: Optimizing website content and HTML source code to improve its relevance to specific keywords. This involves using keywords in titles, headings, meta descriptions, and image alt text.

- Off-Page Optimization: Building the website’s authority and reputation through activities like link building (getting other reputable websites to link to yours), social media engagement, and online reputation management.

- Technical SEO: Ensuring the website’s technical aspects are search engine friendly – sitemaps, robots.txt, fast loading speed, mobile-friendliness etc.

In a project, implementing a comprehensive SEO strategy improved organic traffic by 40% within six months, significantly increasing leads and conversions.

Q 10. What are some common web security vulnerabilities and how can they be mitigated?

Web security vulnerabilities are weaknesses in a website’s design, code, or configuration that can be exploited by attackers. Common vulnerabilities include:

- SQL Injection: Malicious SQL code inserted into input fields to manipulate or access database data. Mitigation involves parameterized queries and input sanitization.

- Cross-Site Scripting (XSS): Injecting malicious scripts into a website to steal user data or redirect users to phishing sites. Prevention involves input validation and output encoding.

- Cross-Site Request Forgery (CSRF): Tricking users into performing unwanted actions on a website. Prevention involves using CSRF tokens.

- Broken Authentication and Session Management: Weak password policies or insecure session handling allowing unauthorized access. Mitigation involves strong password policies, multi-factor authentication, and secure session management.

To mitigate these, I employ secure coding practices, regularly update software and plugins, use web application firewalls (WAFs), and implement robust authentication and authorization mechanisms. I also regularly conduct security audits and penetration testing to identify and address potential vulnerabilities proactively. Thinking of security as a layered defense is key; no single measure guarantees complete protection.
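Output encoding, one of the XSS mitigations listed above, can be illustrated with a small helper. This is a minimal sketch assuming the untrusted value is placed in HTML text or a quoted attribute; the name `escapeHtml` is hypothetical, and other contexts (URLs, inline scripts, CSS) need different handling.

```javascript
// Replace characters that have special meaning in HTML with entities.
const HTML_ESCAPES = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" };

function escapeHtml(untrusted) {
  // A single pass avoids double-escaping the ampersands it introduces.
  return String(untrusted).replace(/[&<>"']/g, (ch) => HTML_ESCAPES[ch]);
}

const comment = '<script>alert("xss")</script>';
console.log(`<p>${escapeHtml(comment)}</p>`);
```

In practice, most templating systems and UI frameworks perform this encoding by default, so a hand-written helper like this is mainly useful for understanding what they do.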

Q 11. How do you optimize web pages for performance?

Optimizing web pages for performance is critical for user experience and SEO. A slow-loading website leads to higher bounce rates and lower search rankings. My approach focuses on several key areas:

- Image Optimization: Compressing images without significant loss of quality using tools like TinyPNG or ImageOptim. Using appropriate image formats (WebP for better compression) and responsive images.

- Minification and Bundling: Reducing the size of CSS and JavaScript files by removing unnecessary characters and combining multiple files into fewer ones. Tools like Webpack and Gulp can automate this process.

- Caching: Implementing browser caching and server-side caching to reduce the number of requests to the server. CDNs (Content Delivery Networks) can further improve this.

- Code Optimization: Writing efficient JavaScript and CSS code to avoid performance bottlenecks. Profiling tools can help identify areas for improvement.

- Lazy Loading: Only loading images and other resources when they are needed, rather than all at once. This improves initial page load time.

In a recent project, these optimizations reduced page load time by over 50%, significantly improving user experience and boosting conversion rates.
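The caching idea above can be sketched as a small time-based (TTL) cache on the server side. This is an illustration only: `createTtlCache` and `loadProducts` are hypothetical names, and a real deployment would more likely rely on HTTP cache headers, a CDN, or a dedicated cache store.

```javascript
// Small time-based cache. `now` is injectable so expiry can be tested deterministically.
function createTtlCache(ttlMs, now = Date.now) {
  const entries = new Map();
  return {
    get(key, compute) {
      const hit = entries.get(key);
      if (hit && hit.expiresAt > now()) return hit.value; // still fresh
      const value = compute(); // missing or expired: recompute and store
      entries.set(key, { value, expiresAt: now() + ttlMs });
      return value;
    },
  };
}

let calls = 0;
const cache = createTtlCache(60000);
const loadProducts = () => { calls += 1; return ["keyboard", "mouse"]; };
cache.get("products", loadProducts);
cache.get("products", loadProducts); // served from the cache
console.log(calls);
```

The expensive work runs once per TTL window instead of once per request, which is the same trade-off browser and CDN caches make: fewer round trips in exchange for data that may be slightly stale.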

Q 12. Explain your experience with version control systems (Git).

I’m proficient in using Git for version control. I’m familiar with all the core Git commands, including git init, git clone, git add, git commit, git push, git pull, git branch, git merge, and git rebase. I’ve extensively used Git in both individual and team projects, employing branching strategies like Gitflow for managing different features and releases.

My experience includes using Git platforms like GitHub and GitLab for collaborative development, code reviews, and issue tracking. I understand the importance of writing clear and concise commit messages to maintain a clean and understandable project history. In one project, my team used Git’s branching and merging capabilities to develop and deploy multiple features concurrently without conflicts, resulting in a much faster development cycle.

Q 13. What is your experience with databases (SQL, NoSQL)?

I have experience with both SQL and NoSQL databases. SQL databases, like MySQL and PostgreSQL, are relational databases with structured data organized in tables with rows and columns. They’re suitable for applications requiring data integrity and complex queries. I’m proficient in writing SQL queries for data retrieval, manipulation, and management.

NoSQL databases, such as MongoDB and Cassandra, are non-relational and offer more flexibility for handling large volumes of unstructured or semi-structured data. I’ve used MongoDB for projects requiring scalability and flexibility, such as storing user profiles or product catalogs. Choosing between SQL and NoSQL depends on the specific needs of the application; SQL is better for structured data requiring ACID properties, while NoSQL is better for large, unstructured data and high scalability.

For example, in one project, we used MySQL for storing structured user data requiring strict data integrity, and MongoDB for storing unstructured user-generated content like images and comments.

Q 14. Describe your approach to debugging JavaScript code.

Debugging JavaScript code requires a systematic approach. My strategy generally involves:

- Console Logging: Using console.log() to inspect variable values, function inputs/outputs, and program flow at different stages. This is the most basic, yet effective debugging technique.

- Browser Developer Tools: Utilizing the built-in developer tools in modern browsers (Chrome DevTools, Firefox Developer Tools) to set breakpoints, step through code execution, inspect the call stack, and examine the DOM.

- Linters and Code Formatters: Using tools like ESLint and Prettier to identify potential code errors and improve code readability, preventing bugs before they happen.

- Debuggers: Using advanced debuggers to step through code, inspect variables, and analyze the execution flow in detail.

- Error Messages: Carefully examining error messages provided by the browser’s console. These are often very helpful clues.

I also prioritize writing clean, well-documented code to make debugging easier. If a bug is persistent, I’ll break down the problem into smaller parts, employing a process of elimination to find the root cause. A systematic approach, combined with the use of the right tools, is crucial for effective JavaScript debugging.

Q 15. How do you handle conflicting priorities or tight deadlines?

Conflicting priorities and tight deadlines are a common reality in web development. My approach involves a combination of proactive planning, effective communication, and prioritization techniques. First, I clarify all project goals and dependencies with stakeholders, ensuring everyone’s on the same page. Then, I employ a prioritization matrix, such as MoSCoW (Must have, Should have, Could have, Won’t have), to rank tasks based on their importance and urgency. This helps me focus on the most critical tasks first. If necessary, I’ll break down larger tasks into smaller, more manageable chunks, facilitating better progress tracking and identification of potential roadblocks early on. Open and honest communication is key; I proactively inform stakeholders of any potential delays or challenges, proposing solutions and collaboratively adjusting priorities as needed. Finally, I leverage project management tools to track progress and ensure transparency throughout the development process.

For example, on a recent project with overlapping deadlines for both a website redesign and a critical bug fix, I used the MoSCoW method to prioritize the bug fix as a ‘Must have’, addressing user disruption immediately. The website redesign, while important, was categorized as ‘Should have’ and its launch was slightly postponed to ensure the stability and functionality of the existing site.

Q 16. Explain the difference between client-side and server-side scripting.

Client-side scripting and server-side scripting are two distinct approaches to web development, differing primarily in where the code executes. Client-side scripting refers to code that runs on the user’s web browser (e.g., JavaScript), interacting directly with the user interface. This allows for dynamic updates and enhanced user experience without requiring a page reload. Server-side scripting, on the other hand, involves code executed on a web server (e.g., Python, PHP, Node.js), processing data, interacting with databases, and generating dynamic content that’s then sent to the client’s browser. Think of it like this: client-side code is like the front-end of a restaurant, directly interacting with customers, while server-side code is like the kitchen, handling the preparation of the food (data processing) before serving it to the customer.

A simple example illustrates the difference: A form submission. Client-side JavaScript can validate the form data before sending it to the server, improving user experience by providing immediate feedback on incorrect inputs. Server-side code then processes the validated data, perhaps storing it in a database or performing other actions before responding to the client.

//Client-side JavaScript (example)
function validateForm() {
  //Validation logic...
  return true; //or false
}

Q 17. What are your preferred testing methodologies for web applications?

My preferred testing methodologies for web applications are multifaceted and incorporate different approaches at various stages of the development lifecycle. I firmly believe in a multi-layered testing strategy encompassing unit testing, integration testing, system testing, and user acceptance testing (UAT).

- Unit Testing: This involves testing individual components or modules of the code in isolation to ensure they function correctly. I use frameworks like Jest (JavaScript) or pytest (Python) for this.

- Integration Testing: This verifies the interaction between different modules or components.

- System Testing: This tests the entire system as a whole, focusing on functionality, performance, and security. This often involves automated tests and manual exploratory testing.

- User Acceptance Testing (UAT): This involves end-users testing the application to ensure it meets their requirements and expectations.

Furthermore, I advocate for automated testing as much as possible to ensure regression prevention and faster feedback loops. Tools such as Selenium for UI testing and Cypress for end-to-end testing are frequently employed. I also strongly believe in the importance of performance testing using tools like JMeter or Gatling to identify bottlenecks and optimize the application’s responsiveness under various load conditions.
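As an illustration of unit testing a single function in isolation, here is a minimal sketch. It uses Node’s built-in `assert` module rather than Jest so that it runs without installing anything; the `slugify` function is a hypothetical unit under test.

```javascript
const { strictEqual } = require("assert");

// Unit under test: turn a title into a URL-friendly slug.
function slugify(title) {
  return title
    .toLowerCase()
    .trim()
    .replace(/[^a-z0-9]+/g, "-") // collapse runs of other characters into one dash
    .replace(/^-+|-+$/g, "");    // strip leading and trailing dashes
}

// Each assertion checks one behavior in isolation.
strictEqual(slugify("Hello, World!"), "hello-world");
strictEqual(slugify("  Spaces  everywhere "), "spaces-everywhere");
strictEqual(slugify("---"), "");
console.log("all slugify tests passed");
```

A framework such as Jest adds structure on top of the same idea (named test cases, reporting, mocking), but the core of a unit test is still a call followed by an assertion on the result.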

Q 18. Explain your understanding of web accessibility (WCAG).

Web Content Accessibility Guidelines (WCAG) are a set of international standards for making web content accessible to people with disabilities. My understanding of WCAG encompasses its four guiding principles: Perceivable, Operable, Understandable, and Robust (POUR).

- Perceivable: Information and user interface components must be presentable to users in ways they can perceive. This includes providing alternative text for images, captions for videos, and sufficient color contrast.

- Operable: User interface components and navigation must be operable. This means ensuring keyboard navigation is possible, giving users enough time to read and use content, and avoiding content that flashes in ways known to trigger seizures.

- Understandable: Information and the operation of the user interface must be understandable. This includes clear and concise language, predictable behavior, and error prevention.

- Robust: Content must be robust enough that it can be interpreted reliably by a wide variety of user agents, including assistive technologies.

In practical terms, this means using semantic HTML, providing alternative text for all non-text content, ensuring sufficient color contrast ratios, building keyboard-accessible interfaces, and following best practices for ARIA attributes where needed. I regularly use accessibility testing tools like WAVE and Lighthouse to identify and address potential accessibility issues during development.
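The “sufficient color contrast” requirement is defined numerically, so it can be checked in code. The sketch below follows the relative luminance and contrast ratio formulas from WCAG 2.x; the function names are hypothetical, and colors are assumed to be given as `[r, g, b]` arrays with values from 0 to 255.

```javascript
// Relative luminance of an sRGB color, per the WCAG 2.x definition.
function relativeLuminance([r, g, b]) {
  const [R, G, B] = [r, g, b].map((channel) => {
    const c = channel / 255;
    return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
  });
  return 0.2126 * R + 0.7152 * G + 0.0722 * B;
}

// Contrast ratio between two colors, from 1 (identical) up to 21 (black on white).
function contrastRatio(colorA, colorB) {
  const [lighter, darker] = [relativeLuminance(colorA), relativeLuminance(colorB)]
    .sort((x, y) => y - x);
  return (lighter + 0.05) / (darker + 0.05);
}

const ratio = contrastRatio([255, 255, 255], [118, 118, 118]); // white vs. #767676
console.log(ratio.toFixed(2), ratio >= 4.5 ? "passes AA for body text" : "fails AA for body text");
```

WCAG level AA asks for a ratio of at least 4.5:1 for normal-size text, which is the threshold used in the last line. Tools like WAVE and Lighthouse run this same kind of check automatically.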

Q 19. How familiar are you with different web server technologies (Apache, Nginx)?

I have significant experience with both Apache and Nginx web servers. Apache, known for its maturity and extensive module support, is a robust and versatile choice for a wide range of applications. I’ve used it extensively for hosting websites, managing virtual hosts, and configuring various security settings. On the other hand, Nginx, renowned for its speed and efficiency, is particularly well-suited for high-traffic websites and applications. I’ve leveraged its capabilities for reverse proxying, load balancing, and caching, significantly improving application performance. My experience extends to configuring both servers for SSL/TLS encryption, fine-tuning performance parameters, and troubleshooting various server-related issues.

For instance, on a high-traffic e-commerce platform, I chose Nginx due to its superior performance capabilities under load, utilizing its caching mechanisms to reduce server load and improve page response times. For smaller projects with less stringent performance needs, Apache’s ease of configuration and extensive module ecosystem often made it the more efficient choice.

Q 20. What experience do you have with cloud platforms (AWS, Azure, GCP)?

I have hands-on experience with AWS, Azure, and GCP, having deployed and managed web applications on each platform. My experience on AWS involves leveraging services like EC2 for compute, S3 for storage, RDS for databases, and CloudFront for content delivery. I’ve worked with various AWS services to build scalable, reliable, and cost-effective web solutions. Similarly, I’ve utilized Azure’s comparable services, including Azure Virtual Machines, Azure Blob Storage, Azure SQL Database, and Azure CDN. On GCP, my experience encompasses Compute Engine, Cloud Storage, Cloud SQL, and Cloud CDN. My proficiency includes configuring infrastructure as code using tools like Terraform, automating deployments using CI/CD pipelines, and monitoring application performance using cloud-based monitoring tools.

A specific example includes a project where I migrated a legacy on-premise application to AWS. This involved designing a scalable architecture, migrating databases, configuring security groups, and setting up automated backups and monitoring. The migration resulted in improved scalability, availability, and reduced operational overhead.

Q 21. Describe your experience with CI/CD pipelines.

My experience with CI/CD pipelines is extensive, covering the entire lifecycle from code commit to deployment. I’ve implemented and managed CI/CD pipelines using various tools such as Jenkins, GitLab CI, and GitHub Actions. My approach emphasizes automation at every stage, including automated testing, code building, deployment, and monitoring. I use version control systems like Git for code management, ensuring that all changes are tracked and easily reversible. The pipelines incorporate automated testing suites to catch errors early in the process, reducing the likelihood of bugs reaching production. Deployment is automated to various environments (development, staging, production) using techniques like blue-green deployments or canary deployments, minimizing downtime and risk. Finally, the pipelines include monitoring and logging to track application performance and identify potential issues after deployment.

On a recent project, we implemented a CI/CD pipeline using Jenkins that automated the build, testing, and deployment process. This resulted in a significant reduction in deployment time and increased the frequency of releases, allowing us to quickly iterate and deliver new features to users.

Q 22. How do you stay up-to-date with the latest web development trends?

Staying current in the rapidly evolving world of web development requires a multi-pronged approach. I actively engage in several key strategies:

- Following industry blogs and publications: Sites like CSS-Tricks, Smashing Magazine, and A List Apart provide insightful articles and tutorials on the latest trends and best practices. I also subscribe to newsletters from reputable sources.

- Participating in online communities: I actively engage on platforms like Stack Overflow, Reddit (r/webdev), and Discord servers dedicated to web development. These communities are a goldmine of information, allowing me to learn from others’ experiences and stay abreast of emerging technologies.

- Attending conferences and webinars: Conferences like Web Summit and smaller, niche events offer valuable opportunities to network with other professionals and learn directly from experts. Webinars often provide more focused training on specific technologies.

- Experimenting with new tools and technologies: I dedicate time to exploring new frameworks, libraries, and tools, even if it’s just for personal projects. This hands-on experience solidifies my understanding and allows me to assess their practical value.

- Continuous learning through online courses: Platforms such as Udemy, Coursera, and Codecademy offer a vast array of courses covering all aspects of web development, allowing for focused learning on specific areas of interest.

This combination of passive and active learning ensures that I remain at the forefront of web development trends.

Q 23. Explain your understanding of design patterns in web development.

Design patterns are reusable solutions to commonly occurring problems in software design. In web development, they promote code reusability, maintainability, and scalability. They are like blueprints for structuring your code, providing a proven framework to handle specific situations. Some examples relevant to web development include:

- Model-View-Controller (MVC): This classic pattern separates the application’s concerns into three interconnected parts: the Model (data), the View (presentation), and the Controller (logic). This improves organization and testability. Think of a blog post: the model is the post data, the view is the HTML displaying it, and the controller handles user interactions like commenting.

- Singleton: This ensures that only one instance of a class is created. Useful for managing database connections or global configuration settings, preventing conflicts and ensuring consistency.

- Factory: This pattern creates objects without specifying their concrete classes. It’s incredibly useful for decoupling code and allowing for flexible object creation. Imagine dynamically creating different types of user accounts based on user roles.

- Observer: This pattern allows objects to be notified of changes in other objects. This is crucial for real-time updates in applications, for instance, updating a chat interface when a new message arrives.

Choosing the right design pattern depends on the specific problem and project requirements. Understanding these patterns allows for building cleaner, more maintainable, and scalable web applications.
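To make one of these patterns concrete, here is a minimal sketch of the Observer pattern using the chat example above. The `createSubject` name and its `subscribe`/`notify` methods are hypothetical choices for this illustration, not a specific library’s API.

```javascript
// Subject that notifies subscribed observers when something happens.
function createSubject() {
  const observers = new Set();
  return {
    subscribe(observer) {
      observers.add(observer);
      return () => observers.delete(observer); // call to unsubscribe
    },
    notify(payload) {
      observers.forEach((observer) => observer(payload));
    },
  };
}

const chat = createSubject();
const received = [];
const unsubscribe = chat.subscribe((message) => received.push(message));
chat.notify("Hi there");
unsubscribe();
chat.notify("Anyone home?"); // no observers left, so nothing is recorded
console.log(received);
```

The subject knows nothing about what its observers do with a message, which is what keeps the chat data source decoupled from the interface that displays it.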

Q 24. Describe your experience with different front-end build tools (Webpack, Parcel).

I have extensive experience with both Webpack and Parcel, two popular front-end build tools. They both handle tasks like bundling, minification, and code splitting, but they differ significantly in their approach:

- Webpack: Webpack is highly configurable and powerful. This allows for fine-grained control over the build process, but it can also lead to complex configuration files. Its flexibility makes it suitable for large-scale projects with intricate build requirements. I’ve used Webpack extensively in projects needing custom loaders, plugins, and sophisticated optimization strategies. For instance, I used it to optimize the loading of images and code splitting to improve page load times in a large e-commerce application.

- Parcel: Parcel is known for its simplicity and zero-configuration approach. It automatically handles many common tasks without needing extensive configuration. This makes it ideal for quick prototyping and smaller projects where ease of use is prioritized. I found Parcel extremely beneficial for smaller projects and rapid prototyping, allowing me to focus on development rather than wrestling with complex configuration files. Its builds can also be faster than a comparable Webpack setup, although this depends on the project and the tool versions involved.

The choice between Webpack and Parcel often depends on project size and complexity. For large, complex projects requiring fine-tuned control, Webpack shines. For smaller projects or rapid prototyping, Parcel’s ease of use is a major advantage.

Q 25. Explain your experience with GraphQL.

GraphQL is a query language for APIs and a runtime for fulfilling those queries with your existing data. It empowers clients to request precisely the data they need, minimizing over-fetching (receiving more data than necessary) and under-fetching (requiring multiple requests to get all the data). This results in more efficient and faster data fetching compared to traditional REST APIs.
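The idea of requesting only the fields you need can be illustrated in plain JavaScript. To be clear, this is not a GraphQL implementation and does not use any GraphQL library; the `select` helper and the sample `fullUser` object are hypothetical and only mimic the shape of a response to a query such as `{ user { name posts { title } } }`.

```javascript
// What a REST-style endpoint might return for a user: everything it has.
const fullUser = {
  id: 7,
  name: "Ada",
  email: "ada@example.com",
  posts: [{ id: 1, title: "Hello", body: "First post" }],
};

// Return only the fields named in `selection`, recursing into nested selections.
function select(value, selection) {
  if (Array.isArray(value)) return value.map((item) => select(item, selection));
  const result = {};
  for (const [field, sub] of Object.entries(selection)) {
    result[field] = sub === true ? value[field] : select(value[field], sub);
  }
  return result;
}

// Roughly the shape a client would get back for: { user { name posts { title } } }
console.log(JSON.stringify(select(fullUser, { name: true, posts: { title: true } })));
```

The client states the shape it wants and receives exactly that shape, so the unused `email` and post `body` fields never travel over the network.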

My experience with GraphQL includes:

- Building GraphQL schemas: I’ve designed and implemented GraphQL schemas defining the types, queries, and mutations available to clients.

- Using GraphQL resolvers: I’ve written resolvers to fetch data from various sources (databases, external APIs) and format it for the client.

- Implementing GraphQL with different frameworks: I’ve utilized various frameworks like Apollo Server (Node.js) and others to build GraphQL APIs. I’ve used these to build APIs for mobile and web applications.

- Utilizing GraphQL clients: I’m familiar with using GraphQL clients like Apollo Client and Relay to interact with GraphQL APIs in front-end applications.

GraphQL has significantly improved the efficiency and maintainability of data fetching in the projects I’ve worked on. Its declarative nature and ability to reduce network traffic made it a valuable asset.
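To make the over-fetching point concrete, here is a minimal sketch of field selection in plain Python. It is not a GraphQL implementation and does not use any GraphQL library; the `USER` record, the `select` helper, and the dictionary-shaped query are all hypothetical names made up for illustration. The idea it shows is the one described above: the client names the fields it wants, and only those fields come back.

```python
from typing import Any

# Hypothetical record as a backend might hold it (all fields are made up).
USER = {
    "id": 1,
    "name": "Ada",
    "email": "ada@example.com",
    "posts": [
        {"id": 10, "title": "Hello", "body": "First post"},
        {"id": 11, "title": "Again", "body": "Second post"},
    ],
}


def select(data: Any, selection: dict) -> Any:
    """Return only the fields named in `selection`, recursing into nested selections."""
    if isinstance(data, list):
        return [select(item, selection) for item in data]
    result = {}
    for field, sub_selection in selection.items():
        value = data[field]
        # An empty sub-selection means "return this field as-is".
        result[field] = select(value, sub_selection) if sub_selection else value
    return result


# Roughly the shape of the GraphQL query: { user { name posts { title } } }
query = {"name": {}, "posts": {"title": {}}}
response = select(USER, query)
print(response)
```

The response contains the name and the post titles only; `email`, `id`, and each post's `body` never leave the server. A real GraphQL server adds a typed schema, validation, and per-field resolvers on top of this basic idea.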

Q 26. What is your experience with serverless architectures?

Serverless architectures involve deploying and running code without managing servers. The cloud provider handles the infrastructure, scaling, and maintenance. Functions are triggered by events (e.g., HTTP requests, database changes). This approach offers significant advantages in terms of cost-effectiveness, scalability, and ease of maintenance.

My experience includes:

- Developing and deploying serverless functions: I have built and deployed functions using AWS Lambda, Google Cloud Functions, and Azure Functions. These functions often handle tasks like image processing, serving API endpoints behind an API gateway, and background jobs.

- Integrating serverless functions with other services: I’ve integrated serverless functions with various other services, including databases (e.g., DynamoDB, Cloud Firestore), message queues (e.g., SQS, Pub/Sub), and other cloud-based services.

- Designing serverless applications: I’ve designed and implemented applications specifically leveraging the benefits of a serverless architecture. This included considerations for event-driven design, asynchronous operations, and efficient resource utilization.

I found serverless architectures exceptionally beneficial for projects requiring rapid scaling and efficient cost management, especially those with unpredictable traffic patterns.
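As a rough illustration of an event-triggered function, the sketch below follows the `handler(event, context)` shape that AWS Lambda uses for Python functions, with an event loosely modeled on an HTTP request arriving through an API gateway. The greeting logic, the `name` query parameter, and the hand-built `sample_event` are assumptions for the example, not part of any project described here.

```python
import json


def handler(event, context):
    """Respond to an HTTP-style event with a JSON greeting.

    `event` carries the request data; `context` carries runtime metadata
    and is unused in this sketch.
    """
    # The parameters entry may be absent or None when no query string is sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation with a hand-built event; once deployed, the platform
# supplies both arguments.
sample_event = {"queryStringParameters": {"name": "Ada"}}
result = handler(sample_event, None)
print(result["body"])
```

Because the function keeps no state between calls, the platform can run as many copies in parallel as traffic requires, which is where the scaling and cost benefits come from.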

Q 27. How would you approach building a secure authentication system?

Building a secure authentication system is critical for any web application. My approach involves several key components:

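- Strong password policies: Enforce strong passwords, prioritizing minimum length and checks against known-breached passwords. Current NIST guidance (SP 800-63B) advises against mandatory periodic password changes and arbitrary composition rules unless there is evidence of compromise. Encourage users to use a password manager.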

- Multi-factor authentication (MFA): Implement MFA to add an extra layer of security. This could involve using time-based one-time passwords (TOTP), push notifications, or hardware security keys.

- Secure storage of credentials: Never store passwords in plain text. Store only salted hashes produced by an algorithm designed for password hashing (like bcrypt or Argon2). For other secrets, such as signing or encryption keys, consider a dedicated key management service.

- Input validation and sanitization: Always validate and sanitize user inputs to prevent injection attacks (SQL injection, cross-site scripting – XSS). Use parameterized queries for database access and encode output for the context in which it is rendered, so that user input is never executed as code.

- Secure session management: Use secure cookies with appropriate flags (HttpOnly, Secure, SameSite) to reduce the risk of session hijacking. Implement short session timeouts, and if you use token-based authentication (JWT), give tokens a short expiry as well.

- Regular security audits and penetration testing: Conduct regular security audits and penetration testing to identify and address vulnerabilities.

- HTTPS: Always use HTTPS to encrypt communication between the client and the server. This protects sensitive data from eavesdropping.

Following these best practices goes a long way toward a robust and secure authentication system that protects user data and application integrity. The specific implementation details may vary depending on the technology stack used, but the principles remain consistent.
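The credential-storage point can be sketched with Python's standard library alone. Note the substitution: bcrypt and Argon2 need third-party packages, so this example uses PBKDF2-HMAC-SHA256 from `hashlib` instead. The function names, the stored string format, and the iteration count are assumptions chosen for illustration; a production system would normally rely on a maintained password-hashing library.

```python
import hashlib
import hmac
import secrets

# Assumed work factor for this sketch; tune it for your own hardware.
ITERATIONS = 600_000


def hash_password(password: str, iterations: int = ITERATIONS) -> str:
    """Hash a password with a fresh random salt; return a storable string."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    # Hypothetical storage format: scheme$iterations$salt$digest
    return f"pbkdf2_sha256${iterations}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    _scheme, iterations, salt_hex, digest_hex = stored.split("$")
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), int(iterations)
    )
    return hmac.compare_digest(candidate, bytes.fromhex(digest_hex))


stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", stored))
print(verify_password("wrong guess", stored))
```

Storing the salt and iteration count next to the digest lets the work factor be raised later without invalidating existing hashes, and `hmac.compare_digest` avoids leaking information through comparison timing.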

Q 28. Describe a time you had to overcome a significant technical challenge in web development.

In a previous project, we encountered a significant performance bottleneck with a large-scale image processing task. The application was using a monolithic architecture, and image processing was a computationally intensive operation causing significant delays and impacting user experience.

To overcome this, we employed a multi-pronged strategy:

- Microservice architecture: We refactored the application into microservices, separating the image processing task into its own independent service. This allowed for independent scaling and improved resource utilization.

- Asynchronous processing: We implemented asynchronous processing using message queues (RabbitMQ), decoupling the image processing task from the main application flow. This prevented blocking operations and improved responsiveness.

- Optimization of image processing pipeline: We optimized the image processing pipeline by employing techniques such as image compression, caching, and using parallel processing. This significantly reduced processing time.

- Load balancing and scaling: We implemented load balancing to distribute the traffic across multiple instances of the image processing service and used auto-scaling to automatically adjust the number of instances based on demand.

This multi-faceted approach dramatically improved the application’s performance. The transition to a microservice architecture, coupled with asynchronous processing and optimization, resolved the bottleneck and enhanced user experience. It highlighted the importance of understanding system architecture and applying appropriate techniques for performance optimization.
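The asynchronous-processing step can be sketched in a few lines. This is only an in-process stand-in: it uses Python's `queue.Queue` and a worker thread where the project used RabbitMQ and a separate service, and `process_image`, `submit`, and the file names are hypothetical. What it shows is the decoupling itself: the request path enqueues a job and returns immediately, while a worker does the slow part.

```python
import queue
import threading

jobs: queue.Queue = queue.Queue()
results: list[str] = []


def process_image(name: str) -> str:
    # Placeholder for the expensive work (resizing, compression, and so on).
    return f"{name}:thumbnail"


def worker() -> None:
    """Consume jobs until a None sentinel arrives."""
    while True:
        job = jobs.get()
        if job is None:
            jobs.task_done()
            break
        results.append(process_image(job))
        jobs.task_done()


def submit(name: str) -> str:
    """Request path: enqueue the job and return without waiting for it."""
    jobs.put(name)
    return "accepted"


thread = threading.Thread(target=worker, daemon=True)
thread.start()

statuses = [submit(name) for name in ("a.png", "b.png", "c.png")]
jobs.join()      # wait until every submitted job has been processed
jobs.put(None)   # tell the worker to stop
thread.join()
print(statuses, results)
```

With a real message broker the queue lives outside the application process, so workers can run on separate machines and be scaled independently of the web tier.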

Key Topics to Learn for Web Interview

- Front-End Development: Understanding HTML, CSS, and JavaScript fundamentals; practical application in building responsive and user-friendly websites; exploring frameworks like React, Angular, or Vue.js.

- Back-End Development: Mastering server-side languages (e.g., Python, Node.js, Java, PHP); experience with databases (SQL, NoSQL); building and deploying RESTful APIs; understanding server architecture and deployment strategies.

- Databases and Data Modeling: Designing efficient database schemas; understanding relational and NoSQL databases; working with SQL and performing data manipulation; experience with ORM frameworks.

- Version Control (Git): Proficiency in using Git for code management; understanding branching strategies; collaborating effectively on projects using Git repositories.

- Testing and Debugging: Writing unit tests and integration tests; using debugging tools to identify and resolve issues; understanding different testing methodologies.

- Web Security: Understanding common web vulnerabilities (e.g., XSS, SQL injection); implementing secure coding practices; applying security best practices to web applications.

- Problem-Solving and Algorithm Design: Approaching coding challenges with a structured approach; applying data structures and algorithms to solve real-world problems; practicing your problem-solving skills on platforms like LeetCode or HackerRank.

- Cloud Computing (AWS, Azure, GCP): Understanding cloud infrastructure and services; experience with deploying and managing web applications in the cloud; familiarity with cloud-based databases and services.

Next Steps

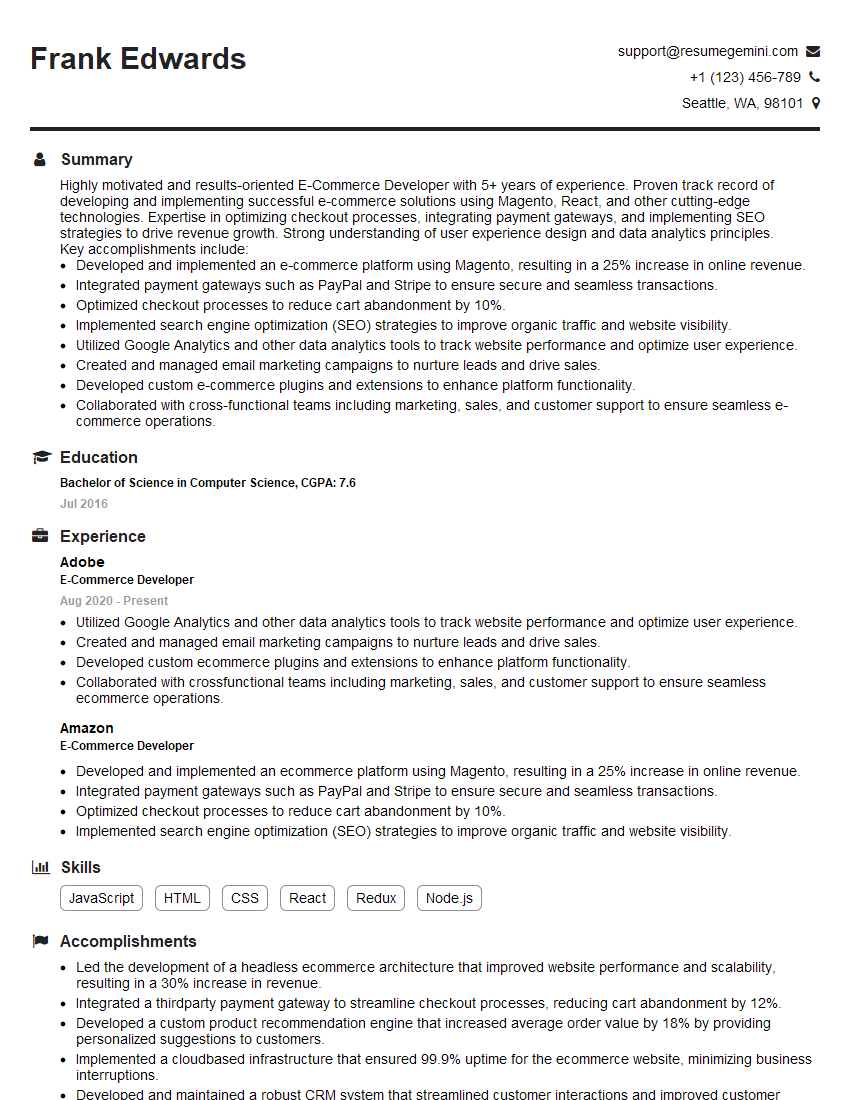

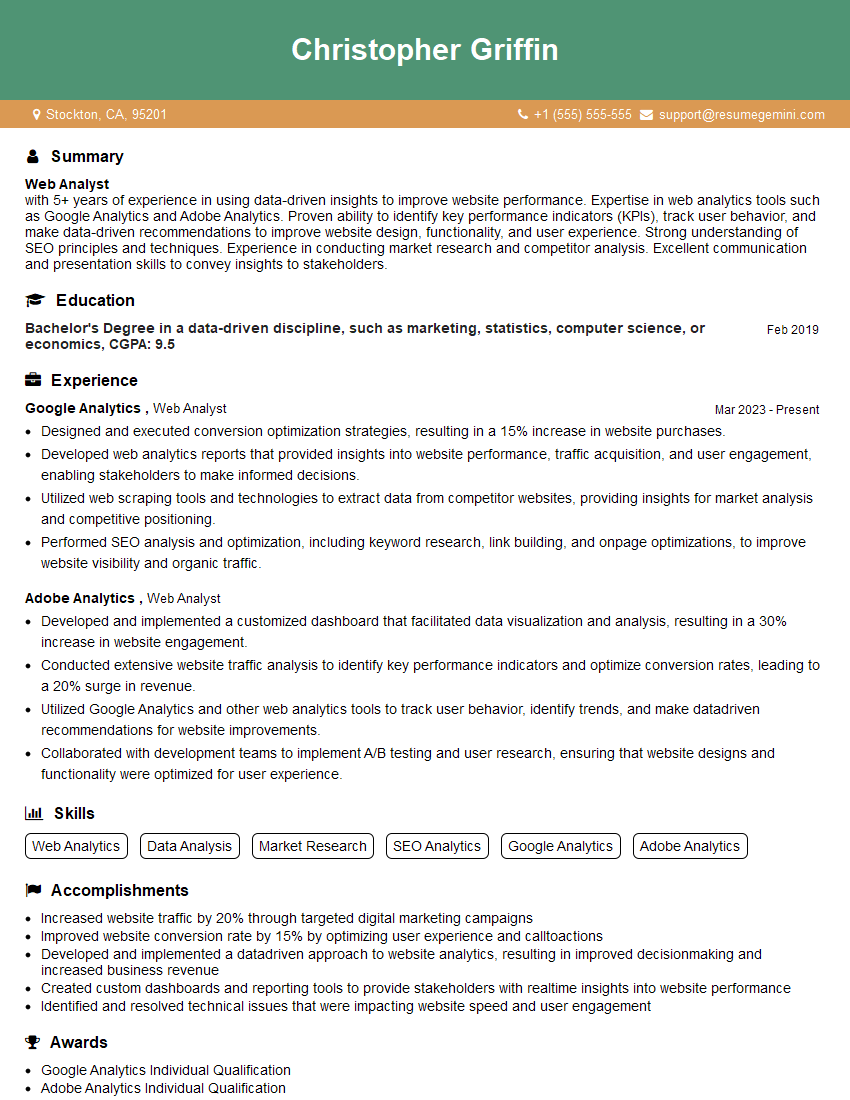

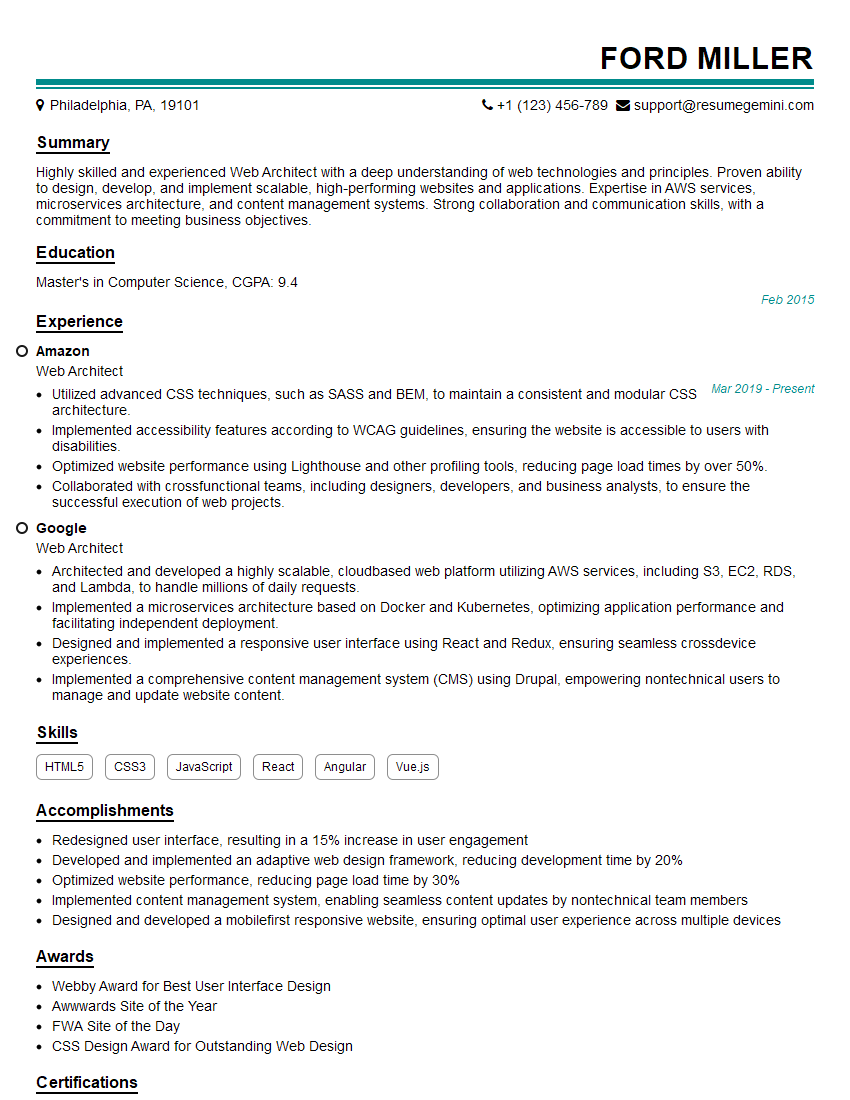

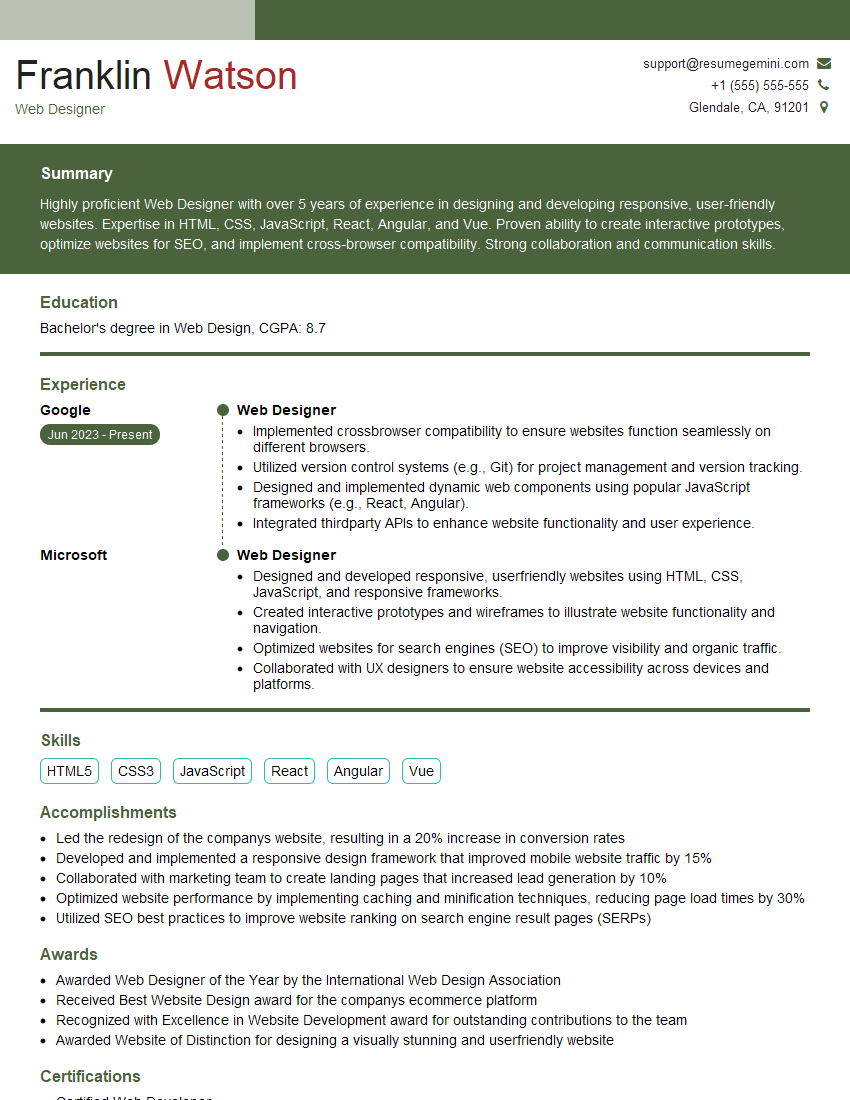

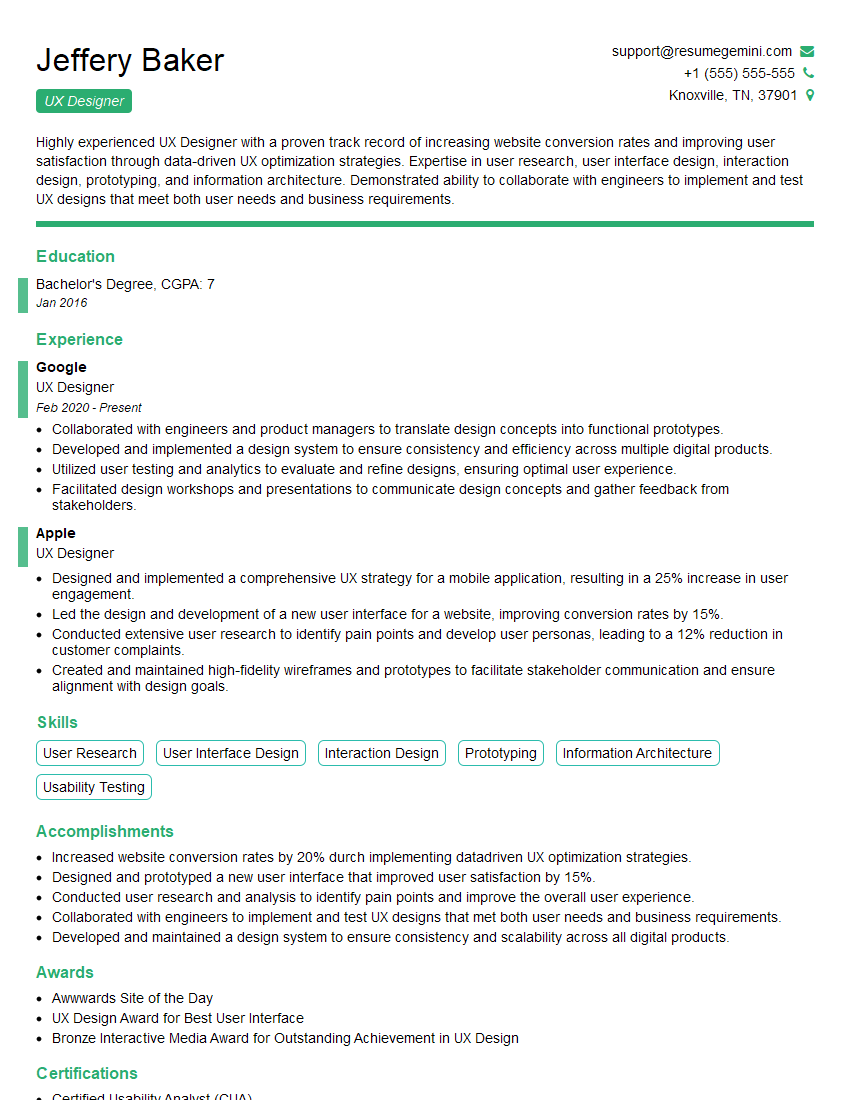

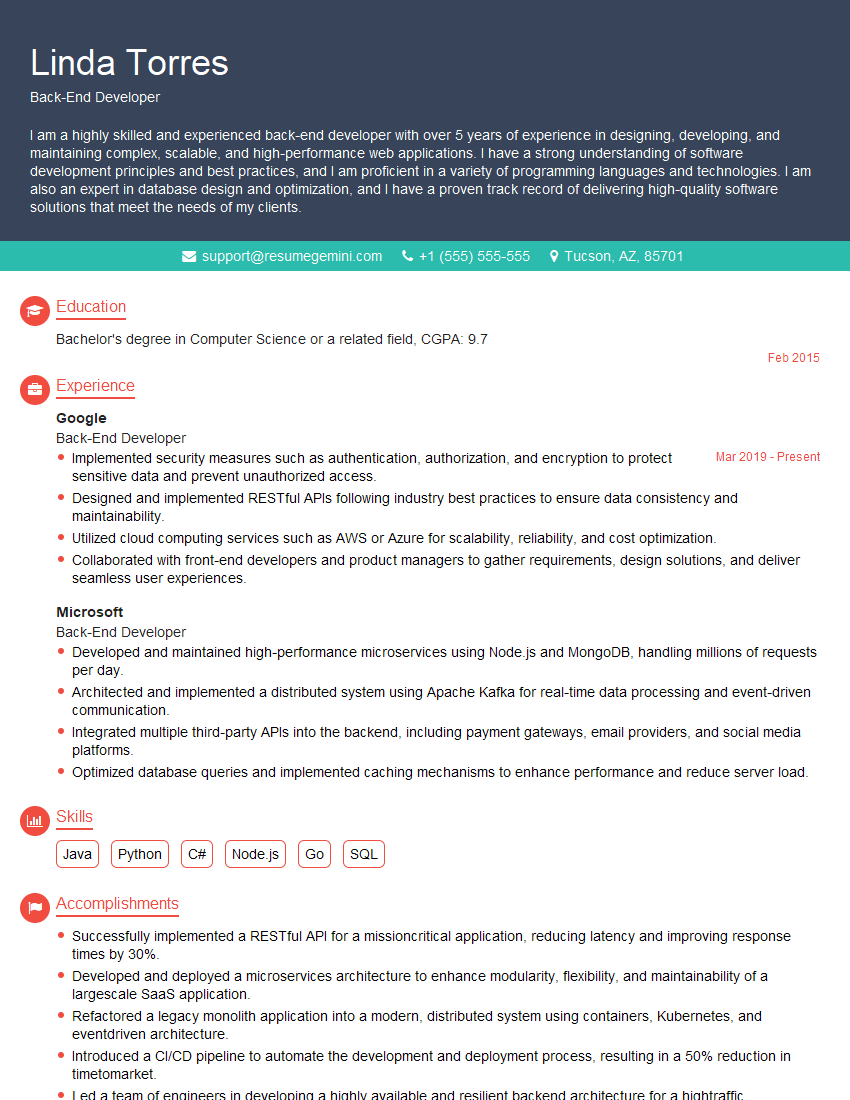

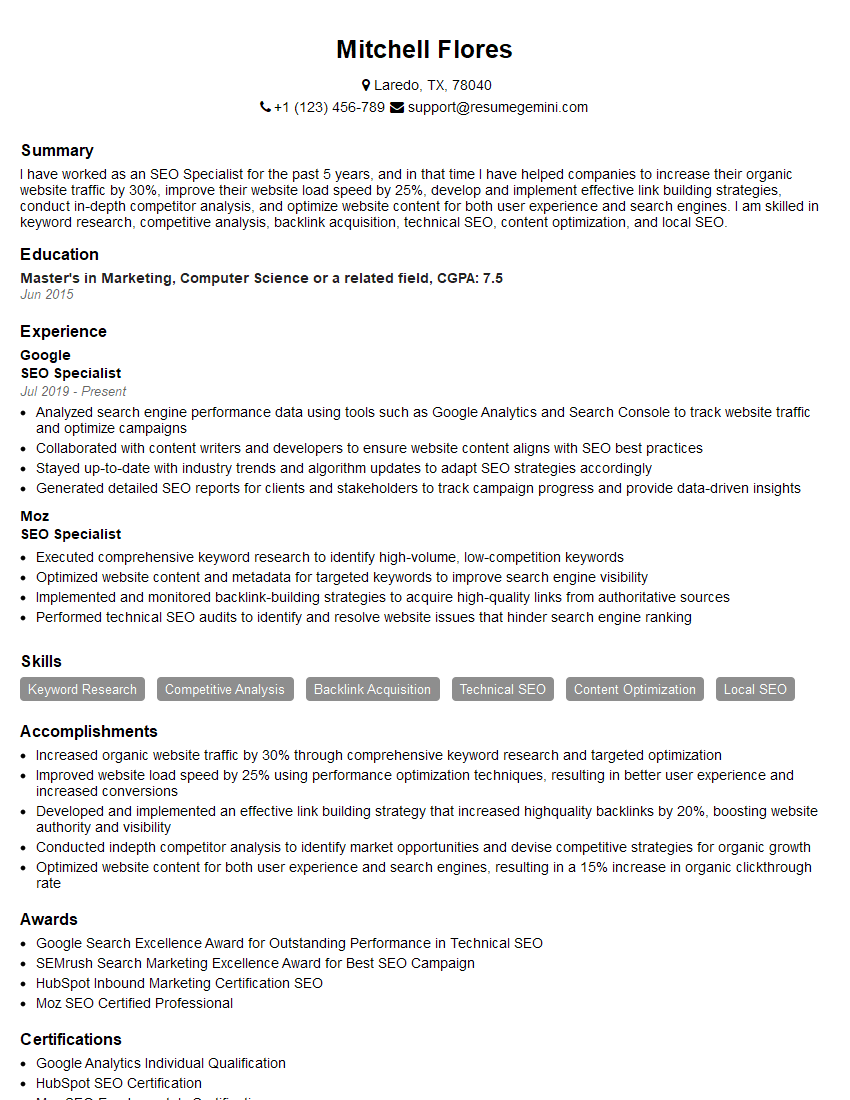

Mastering web development skills opens doors to exciting and rewarding careers. To maximize your job prospects, invest time in crafting a compelling and ATS-friendly resume that showcases your abilities. ResumeGemini is a trusted resource to help you build a professional resume that stands out. They provide examples of resumes tailored to Web development roles, helping you present your skills and experience effectively.
