Feeling uncertain about what to expect in your upcoming interview? We’ve got you covered! This blog highlights the most important DevOps Configuration Management interview questions and provides actionable advice to help you stand out as the ideal candidate. Let’s pave the way for your success.

Questions Asked in DevOps Configuration Management Interview

Q 1. Explain the difference between imperative and declarative configuration management.

The core difference between imperative and declarative configuration management lies in how you instruct the system to achieve a desired state.

Imperative Configuration Management: This approach focuses on how to achieve the desired state. You explicitly define the steps the system must take. Think of it like providing a detailed recipe: you specify each action in sequence. Ansible playbooks and Chef recipes, which run their tasks in the order you write them, are often placed in this category, although individual Ansible modules and Chef resources themselves describe a desired state rather than raw commands. For example, you might instruct Ansible to first install package X, then configure service Y, and finally start process Z. This is very explicit and step-by-step.

Declarative Configuration Management: This approach focuses on what the desired end state should be. You define the target configuration, and the tool figures out the steps needed to get there. It’s like giving someone the finished dish’s photo – they’ll find the best way to make it happen. Terraform and Puppet are strong examples. You’d describe the desired number of servers, their specifications, and network configurations, and the tool will manage the creation and provisioning.

Analogy: Imagine building a LEGO castle. Imperative is like giving instructions: “Place brick A on brick B, then add brick C…”. Declarative is like showing a picture of the finished castle; the builder figures out the steps.

In practice: Imperative is great for highly customized tasks or situations needing precise control over each step. Declarative excels in managing complex infrastructure where the overall goal is clear, but the exact path isn’t as crucial.
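To make the contrast concrete, here is a minimal Python sketch (not how any particular tool is implemented): the imperative function runs every listed step, while the declarative function compares a desired state with the current one and derives only the actions needed. The package names and the `set`/`remove` action strings are illustrative assumptions.

```python
from typing import Dict, List


def imperative_run(steps: List[str]) -> List[str]:
    """Imperative: execute every step, in the order written, on every run."""
    executed = []
    for step in steps:
        # A real tool would run a command here; we only record it.
        executed.append(step)
    return executed


def declarative_plan(desired: Dict[str, str], actual: Dict[str, str]) -> List[str]:
    """Declarative: derive only the actions that move `actual` to `desired`."""
    actions = []
    for name, state in sorted(desired.items()):
        if actual.get(name) != state:
            actions.append(f"set {name} -> {state}")
    # Anything present but not declared is removed (a policy choice; some tools leave it alone).
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(f"remove {name}")
    return actions


if __name__ == "__main__":
    # Hypothetical package states used only for illustration.
    desired = {"nginx": "running", "redis": "installed"}
    actual = {"nginx": "running", "apache2": "running"}
    print(declarative_plan(desired, actual))  # ['set redis -> installed', 'remove apache2']
    print(declarative_plan(desired, desired))  # [] : already converged, nothing to do
```

Running the declarative plan a second time against the converged state yields no actions, which is why declarative tools pair naturally with the idempotency discussed in Q 8.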

Q 2. What are the benefits of using Infrastructure as Code (IaC)?

Infrastructure as Code (IaC) offers numerous benefits, significantly improving efficiency, reliability, and consistency in managing infrastructure:

- Increased Automation: IaC automates infrastructure provisioning, reducing manual effort and human error. This means faster deployment and reduced time to market.

- Improved Consistency and Repeatability: IaC ensures that infrastructure deployments are consistent across environments (development, testing, production), and you can reliably recreate infrastructure from scratch.

- Version Control and Collaboration: Because infrastructure is defined as code, it can be kept in version control (Git, for example), which facilitates collaboration and allows rollback to previous versions.

- Enhanced Agility: Quickly spin up and tear down environments, enabling rapid experimentation and iterative development.

- Cost Optimization: IaC helps optimize cloud resource usage by defining autoscaling policies in code and making it easy to tear down environments that are no longer needed, minimizing wasted resources.

- Improved Security and Compliance: IaC allows for automated security checks and the implementation of compliance policies, reducing the risk of security breaches.

- Enhanced Documentation: IaC provides a single source of truth for infrastructure configuration. Your code is your documentation.

Real-world example: Instead of manually creating 10 virtual machines in a cloud provider, you can define this in a Terraform configuration file and deploy them automatically. If you need to add 5 more, you simply change the configuration and re-run it.

Q 3. Describe your experience with popular IaC tools like Terraform, Ansible, Chef, Puppet, or SaltStack.

I have extensive experience with several IaC tools, each with its strengths and weaknesses. My experience includes:

- Terraform: My go-to for multi-cloud and complex infrastructure. Its declarative approach and state management are invaluable for managing large deployments. I’ve used it to provision resources across AWS, Azure, and GCP, leveraging its rich provider ecosystem.

- Ansible: Excellent for configuration management and automation tasks within existing infrastructure. Its agentless architecture simplifies deployment and is ideal for managing application deployments and configurations on existing servers.

- Chef: Used extensively in larger organizations with a need for robust infrastructure management and centralized configuration. Its focus on cookbooks and recipes makes it powerful for complex, repeatable configurations.

- Puppet: Similar to Chef, but with its own strengths and nuances. Puppet’s focus on declarative configurations and its strong community support make it another robust option for managing complex environments.

Example: In a recent project, I used Terraform to provision a complete Kubernetes cluster on AWS, automating the setup of nodes, networking, and security groups. Then, Ansible was employed to deploy and configure the applications running within the cluster. This combined approach leverages the strengths of each tool.

Q 4. How do you manage configuration drift in your infrastructure?

Configuration drift occurs when the actual state of your infrastructure diverges from your desired state as defined in your IaC. This is a serious problem as it can lead to inconsistencies, security vulnerabilities, and outages.

My strategy for managing configuration drift involves a multi-pronged approach:

- Regular Audits: Regularly compare the desired state (as defined in IaC) against the actual state of the infrastructure. Many tools offer this built in, such as Ansible’s check mode (`ansible-playbook --check`) or AWS Config rules; otherwise, custom scripts can perform the comparison.

- Configuration Management Tools: Leveraging the built-in features of IaC tools to detect and correct drift. For example, `terraform plan` refreshes Terraform’s state from the real resources and reports any differences from the configuration, including changes made outside Terraform.

- Automated Remediation: Integrating automated remediation workflows into my CI/CD pipeline. When drift is detected, automated scripts correct the infrastructure to match the desired state.

- Immutable Infrastructure: Adopting immutable infrastructure principles where instead of modifying existing infrastructure, you create new instances with the desired configuration and replace the old ones.

- Monitoring and Alerting: Monitoring tools are vital to detect anomalies that might indicate configuration drift before it escalates into a major issue.

Example: If an automated check reveals a server missing a security patch, the remediation workflow could automatically apply the patch, or even replace the affected server with a new one that includes the patch.
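At its core, a drift check compares the desired state with what is observed. The Python sketch below assumes both have already been collected into nested dictionaries (gathering the actual state, e.g. from a cloud API or the host itself, is out of scope); `detect_drift` and the sample package versions are hypothetical.

```python
from typing import Any, Dict, List, Tuple

Drift = Tuple[str, Any, Any]  # (key path, desired value, actual value)


def detect_drift(desired: Dict[str, Any], actual: Dict[str, Any], path: str = "") -> List[Drift]:
    """Recursively compare two nested configurations and list every difference."""
    drift: List[Drift] = []
    for key in sorted(desired.keys() | actual.keys()):
        full_key = f"{path}.{key}" if path else key
        want, have = desired.get(key), actual.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            drift.extend(detect_drift(want, have, full_key))
        elif want != have:
            # Missing keys show up as None on the side where they are absent.
            drift.append((full_key, want, have))
    return drift


if __name__ == "__main__":
    desired = {"packages": {"openssl": "3.0.13"}, "sshd": {"PermitRootLogin": "no"}}
    actual = {"packages": {"openssl": "3.0.2"}, "sshd": {"PermitRootLogin": "no"}}
    for key, want, have in detect_drift(desired, actual):
        print(f"DRIFT {key}: desired={want!r} actual={have!r}")
```

Each reported entry could then feed an alert or an automated remediation job.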

Q 5. Explain the concept of version control for infrastructure.

Version control for infrastructure is essential for tracking changes, collaboration, and managing deployments. It’s the practice of managing infrastructure configurations using a version control system like Git. This means that every change made to the infrastructure code is tracked, allowing you to revert to previous versions if needed.

Benefits:

- Auditing and Traceability: Track every change made to the infrastructure configuration.

- Collaboration: Enables multiple engineers to work on the infrastructure code simultaneously.

- Rollback: Easily revert to previous versions in case of errors or issues.

- Branching and Merging: Develop new features and configurations in isolation before merging them into the main branch.

- Improved Code Quality: Encourages code reviews and improves the overall quality of infrastructure code.

In practice: Infrastructure code (e.g., Terraform configuration files) is stored in a Git repository. Changes are made through pull requests and reviewed before merging. This ensures that only reviewed and approved changes are applied to the infrastructure.

Q 6. How do you handle rollbacks in case of failed deployments?

Handling rollbacks effectively during failed deployments is crucial to minimizing downtime and preventing further issues. My approach combines several strategies:

- Idempotency: Ensure that infrastructure code is idempotent, meaning it can be run multiple times without causing unintended side effects. This makes rollbacks cleaner.

- Version Control: Leverage version control to revert to a previous known good state. This is the most straightforward and effective rollback strategy.

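- State Management: Use the state management features of IaC tools (like Terraform’s state file). Because the state records what is currently deployed, re-applying an earlier version of the configuration lets the tool compute exactly which changes take the infrastructure back to it. Remote state backends with versioning (for example, an S3 bucket with versioning enabled) also make it possible to recover an earlier state file if the state itself is damaged.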

- Rollback Scripts: Create specific scripts dedicated to rolling back deployments, defining the exact steps to undo changes.

- Automated Rollbacks: Integrate automated rollback procedures within CI/CD pipelines to enable rapid and efficient rollbacks.

- Blue/Green Deployments: Deploy the new version to a separate, identical environment (‘blue’ or ‘green’) and switch traffic once it is verified; rolling back is then as simple as switching traffic back.

- Canary Deployments: Gradually roll out changes to a small subset of users to monitor impact before full deployment. This limits exposure if something goes wrong.

Example: If a new application deployment fails, a rollback script could automatically revert the changes by deleting the new deployment and restoring the previous version from a snapshot.
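An automated rollback step in a pipeline often boils down to "deploy, check health, revert on failure". A minimal sketch, assuming the deploy action and health check are supplied as callables (in practice they might wrap a Helm release, an Ansible run, or a load balancer probe); the function and version names are hypothetical.

```python
from typing import Callable


def deploy_with_rollback(
    current: str,
    candidate: str,
    deploy: Callable[[str], None],
    healthy: Callable[[], bool],
) -> str:
    """Deploy `candidate`; if deployment or the health check fails, redeploy `current`.

    Returns the version that is live when the function returns.
    """
    try:
        deploy(candidate)
        ok = healthy()
    except Exception:
        ok = False  # treat a crash during deploy or health check as a failure
    if ok:
        return candidate
    deploy(current)  # roll back to the last known good version
    return current


if __name__ == "__main__":
    log = []
    live = deploy_with_rollback("v1", "v2", deploy=log.append, healthy=lambda: False)
    print(live, log)  # v1 ['v2', 'v1']
```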

Q 7. Describe your experience with different configuration management methodologies (e.g., GitOps).

I’m familiar with various configuration management methodologies, and GitOps is a particularly effective approach. GitOps treats the Git repository as the single source of truth for the desired state of the infrastructure. All changes are made through pull requests, and the infrastructure is automatically updated to reflect the changes in the repository.

Key features and benefits of GitOps:

- Declarative approach: Defines the desired state, not the steps to get there.

- Version control: All changes are tracked in Git, simplifying auditing and rollbacks.

- Automation: Uses tools to synchronize the infrastructure with the Git repository automatically.

- Collaboration and workflow: Leverages standard Git workflows for review and approvals.

- Observability: Provides tools to monitor the state of the infrastructure and detect discrepancies.

Other methodologies: I’m also experienced with applying Infrastructure as Code (IaC) outside a GitOps workflow, for example running Terraform or Ansible by hand or from a CI/CD pipeline. GitOps builds on IaC rather than replacing it, and it has become my preferred methodology for its focus on collaboration, version control, and automation, which leads to improved reliability and reduced risks.

Example: Whenever a change (e.g., adding a new server) is made in a pull request and merged into the main Git branch, a GitOps tool (for Kubernetes workloads, for example Argo CD or Flux) detects the new commit and updates the infrastructure to match the new configuration.

Q 8. How do you ensure idempotency in your configuration management scripts?

Idempotency in configuration management means that running a script multiple times produces the same result as running it once. This is crucial because it ensures consistency and prevents unintended changes during deployments or updates. Imagine painting a wall: you don’t want to repaint it accidentally after it’s already the desired color. Similarly, an idempotent script ensures that infrastructure remains in the target state, even if the script is executed repeatedly due to failures or retries.

To achieve idempotency, we use several strategies:

- Checking for Existence: Before creating a resource (e.g., a user, a file, a service), the script first checks if it already exists. If it does, the script proceeds without creating it again.

- Relying on state-aware tools: Ansible modules and Chef resources inspect the current state of the managed system on each run, compare it with the desired state defined in the configuration files, and make changes only when there is a difference. This built-in comparison is what makes most of their operations idempotent.

- Using conditional statements: Scripts use conditional statements (`if`/`else`) to perform actions only when needed. For instance, a Bash script might install a package only if its binary isn’t already present: `if ! [ -x "/usr/bin/somepackage" ]; then apt-get install -y somepackage; fi`. The `-y` flag lets `apt-get` run unattended instead of stopping at a confirmation prompt.

- Version control: Managing configuration as code (e.g., in Git) provides a history of changes, ensuring we can revert to previous states if needed and enabling reproducible deployments.

For example, in Ansible, a task that manages a file (such as one using the `copy` or `template` module) creates the file only if it doesn’t exist and, if it does exist, updates its content only when it differs from the desired content. This is built into Ansible’s design and is what makes such tasks idempotent.
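The existence-check strategy can be sketched in a few lines of Python. This is a simplified, hypothetical helper in the spirit of Ansible’s `lineinfile` module, not its actual implementation: it appends a line only if it is missing and reports whether anything changed.

```python
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` appears in the file at `path`.

    Returns True if the file was changed and False if it was already in the
    desired state, so running it twice has the same effect as running it once.
    """
    lines = path.read_text().splitlines() if path.exists() else []
    if line in lines:
        return False  # already converged: do nothing
    lines.append(line)
    path.write_text("\n".join(lines) + "\n")
    return True
```

The boolean return mirrors the way Ansible reports a task as `changed` or `ok`.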

Q 9. Explain your experience with different orchestration tools (e.g., Kubernetes, Docker Swarm).

I have extensive experience with Kubernetes and Docker Swarm, both powerful orchestration tools for containerized applications. My experience spans from setting up clusters to managing deployments and scaling applications.

Kubernetes: I’ve worked on several projects using Kubernetes, leveraging features like Deployments, StatefulSets, and DaemonSets for managing applications at scale. I’m proficient in using YAML to define Deployments, Services, and other Kubernetes resources. I have experience managing persistent volumes and network policies for data persistence and enhanced security. A recent project involved migrating a monolithic application to microservices deployed on a Kubernetes cluster, significantly improving scalability and resilience.

Docker Swarm: I’ve used Docker Swarm in smaller-scale projects where Kubernetes’ complexity wasn’t necessary. Swarm’s simplicity makes it easier to set up and manage, ideal for quick prototyping and simpler deployments. I’ve utilized Swarm’s service model for load balancing and managing application replicas. However, for complex, large-scale deployments, Kubernetes offers much richer feature sets and better scalability.

In summary, my experience shows a strategic choice between these orchestration tools based on the specific project needs and scale. I have the practical skills to handle both and am able to make informed decisions about which tool would be the most efficient and effective for a given project.

Q 10. How do you manage secrets in your infrastructure?

Managing secrets—sensitive information like passwords, API keys, and certificates—is paramount for security. Storing them directly in configuration files is a major risk. Instead, I leverage several best practices:

- Dedicated Secret Management Tools: Tools like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault provide secure storage and management of secrets. These services handle encryption, access control, and auditing, minimizing the risk of exposure.

- Environment Variables: For secrets that need to be available to applications, I use environment variables instead of hardcoding them in configuration files. These are often injected during deployment through orchestration tools like Kubernetes or Docker.

- Secure Configuration Repositories: I ensure that configuration repositories are protected with access controls. Only authorized personnel have access, limiting the risk of unauthorized modification or exposure of secrets within the configurations.

- Principle of Least Privilege: Applications should only have access to the secrets they absolutely require. This limits the impact if a secret is compromised.

- Regular Audits and Rotation: Regularly auditing access to secrets and rotating secrets (changing passwords and keys periodically) is crucial for maintaining security.

For example, in a Kubernetes deployment, secrets are often stored in Kubernetes Secret objects, and applications consume them as environment variables or mounted files, while RBAC rules limit which service accounts can read them.
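On the application side, consuming an injected secret safely mostly means failing fast when it is missing and never echoing it. A minimal Python sketch; the variable name `DB_PASSWORD` and both helpers are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret has not been injected."""


def get_secret(name: str) -> str:
    """Read a secret injected as an environment variable; fail fast if absent."""
    value = os.environ.get(name)
    if not value:
        # Never include the value itself in error messages or logs.
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


def mask(value: str, visible: int = 4) -> str:
    """Return a log-safe form of a secret revealing at most `visible` trailing characters."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


if __name__ == "__main__":
    password = get_secret("DB_PASSWORD")  # hypothetical variable name
    print("loaded DB password:", mask(password))
```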

Q 11. Describe your experience with monitoring and logging in a DevOps environment.

Monitoring and logging are essential for maintaining the health and stability of a DevOps environment. They provide real-time insights into application performance, infrastructure health, and security events. Effective monitoring and logging allow for proactive problem detection and faster resolution times.

My experience includes implementing comprehensive monitoring and logging solutions using tools like Prometheus, Grafana, Elasticsearch, Logstash, and Kibana (ELK stack), as well as cloud-based monitoring services like Datadog or CloudWatch.

I use Prometheus for metrics collection and Grafana for visualizing those metrics, enabling us to track critical system parameters like CPU usage, memory consumption, and network traffic. The ELK stack provides a powerful platform for centralized log management, allowing us to search, analyze, and visualize logs from various sources. This helps in identifying errors, debugging applications, and tracking security events.

Alerting is a key component of my monitoring approach. I set up alerts based on critical thresholds so that the operations team is notified immediately when issues arise, allowing for faster responses and minimizing downtime. For example, if CPU usage exceeds 90%, an alert notifies the team to investigate before the issue causes performance degradation.
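A bare threshold tends to fire on short spikes, so alert rules usually require the condition to hold for a while (Prometheus alerting rules express this with a `for` duration). A minimal Python sketch of that idea over a series of CPU samples; the 90% threshold comes from the example above, and the three-sample window is an assumption.

```python
from typing import Iterable


def should_alert(samples: Iterable[float], threshold: float = 90.0, consecutive: int = 3) -> bool:
    """Alert only if the metric stays above `threshold` for `consecutive` samples in a row."""
    run = 0
    for value in samples:
        # Extend the streak while above threshold; any dip resets it.
        run = run + 1 if value > threshold else 0
        if run >= consecutive:
            return True
    return False
```

With this rule, a single 97% spike followed by a drop does not page anyone, while a sustained climb does.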

Q 12. How do you troubleshoot configuration management issues?

Troubleshooting configuration management issues is a core competency in DevOps. My approach involves a structured, systematic process:

- Reproduce the Issue: First, I try to reproduce the issue consistently in a controlled environment (e.g., a staging or development environment). This helps rule out transient problems.

- Gather Logs and Metrics: I collect relevant logs from servers, applications, and configuration management tools. Monitoring data provides valuable context about the system’s state before and during the failure.

- Check Configuration: I review the configuration files, paying close attention to syntax, variable values, and permissions. Errors in configuration are a common source of problems.

- Review Infrastructure: I ensure that the underlying infrastructure (network, storage, compute) is operating correctly and meets the requirements of the application.

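- Use Debugging Tools: I leverage debugging aids to pinpoint the exact point of failure, for example running shell scripts with `bash -x` to trace each command, raising Ansible’s verbosity with `ansible-playbook -vvv`, enabling Terraform’s detailed logs with `TF_LOG=DEBUG`, or adding logging statements to track variable values.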

- Test Changes Incrementally: Once a potential solution is identified, I test it in a controlled environment before deploying it to production. This minimizes the risk of introducing further problems.

- Version Control: All configuration changes are tracked in version control to allow for rollbacks if necessary.

By following these steps, I can efficiently diagnose and resolve configuration management problems, ensuring system stability and minimizing downtime. Experience has shown me that a methodical approach is key to troubleshooting effectively.

Q 13. Explain your understanding of CI/CD pipelines and their role in configuration management.

CI/CD pipelines are integral to modern configuration management. They automate the process of building, testing, and deploying applications and infrastructure changes. This automation ensures consistency, reduces human error, and speeds up the delivery process.

In my experience, CI/CD pipelines are closely tied to configuration management. Configuration as code is centrally managed in a version control system (like Git). The CI pipeline then picks up these changes, validates them (e.g., syntax checks, linting), builds artifacts, runs tests (unit, integration), and packages them for deployment. The CD pipeline then automates the deployment process to various environments (development, staging, production), using tools like Ansible, Puppet, Chef, or cloud-native deployment mechanisms.

For example, a change to a server’s configuration file is committed to Git. The CI pipeline then triggers, validating the change, building the new configuration, and testing it in a simulated environment. Once tests pass, the CD pipeline deploys the changes to the target servers, potentially utilizing a blue-green deployment strategy for zero-downtime updates. This integration of CI/CD with configuration management makes deployments reliable, repeatable, and efficient.
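The gating logic that makes this safe, where each stage must pass before the next one runs, can be sketched in Python. Real pipelines are defined in the CI system’s own format (a Jenkinsfile, `.gitlab-ci.yml`, and so on); the stage names and `run_pipeline` here are hypothetical.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stopping at the first failure.

    Returns the names of the stages that passed; a deploy stage placed last
    therefore only runs if validation and tests succeeded.
    """
    passed: List[str] = []
    for name, step in stages:
        if not step():
            print(f"stage {name!r} failed; skipping remaining stages")
            break
        passed.append(name)
    return passed


if __name__ == "__main__":
    # Hypothetical stages; real ones would call linters, test runners, and deploy tools.
    print(run_pipeline([
        ("validate", lambda: True),
        ("test", lambda: False),
        ("deploy", lambda: True),
    ]))
```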

Q 14. How do you ensure security best practices in your configuration management processes?

Security is paramount in configuration management. My approach incorporates several best practices to ensure secure configurations and deployments:

- Principle of Least Privilege: Users and processes should only have the minimum necessary permissions. This limits the impact of a compromise.

- Regular Security Audits: Conduct regular security audits of configurations to identify vulnerabilities and misconfigurations.

- Secure Coding Practices: Follow secure coding practices when writing configuration management scripts, avoiding common vulnerabilities such as command injection (passing unsanitized input to a shell), hardcoded credentials, and overly permissive file permissions.

- Input Validation: Always validate inputs to scripts to prevent injection attacks.

- Strong Passwords and Encryption: Use strong, unique passwords and encryption for sensitive data.

- Secure Configuration Storage: Store configurations securely, using tools like HashiCorp Vault or cloud-based secret management services.

- Regular Updates and Patching: Keep configuration management tools and underlying infrastructure up-to-date with security patches.

- Secure Deployment Processes: Use secure deployment strategies, such as blue-green deployments, to minimize downtime and risk.

By implementing these security best practices, I aim to minimize the risk of vulnerabilities and ensure the security and integrity of our systems.
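To illustrate the input-validation and injection points, here is a Python sketch of a helper that runs `ping` against a user-supplied host: it validates the input against a hostname pattern and passes it as a separate argument instead of building a shell string. The helper names are hypothetical, and `ping -c 1` assumes a Linux or macOS host.

```python
import re
import subprocess
from typing import List

# One DNS label: letters, digits, and hyphens, not starting or ending with a hyphen.
LABEL = r"[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?"
HOSTNAME_RE = re.compile(rf"{LABEL}(?:\.{LABEL})*")


def validate_hostname(host: str) -> str:
    """Reject anything that is not a plain hostname (spaces, ';', '$', a leading '-', ...)."""
    if len(host) > 253 or not HOSTNAME_RE.fullmatch(host):
        raise ValueError(f"invalid hostname: {host!r}")
    return host


def build_ping_command(host: str) -> List[str]:
    # Argument list, not a shell string: the host is passed as exactly one argument.
    return ["ping", "-c", "1", validate_hostname(host)]


def ping(host: str) -> bool:
    # subprocess.run with a list and the default shell=False never invokes a shell.
    return subprocess.run(build_ping_command(host), capture_output=True).returncode == 0
```

Rejecting a leading `-` also stops the input from being interpreted as an extra command-line option.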

Q 15. Describe your experience with different cloud platforms (e.g., AWS, Azure, GCP) and their configuration management tools.

My experience spans the major cloud platforms: AWS, Azure, and GCP. Each offers unique configuration management tools, and my approach adapts to the specific platform’s strengths. In AWS, I’ve extensively used AWS Config for tracking and assessing resource configurations, and have automated deployments with tools like AWS CloudFormation and AWS OpsWorks. These allow me to define infrastructure as code (IaC), ensuring consistency and repeatability. On Azure, I’m proficient with Azure Resource Manager (ARM) templates, enabling similar IaC capabilities, and leverage Azure Automation for managing runbooks and automating tasks. Finally, in GCP, I utilize Google Cloud Deployment Manager and Terraform, again prioritizing IaC to manage infrastructure efficiently and predictably. Choosing the right tool hinges on the specific project requirements and existing infrastructure.

For example, in one project involving a large-scale migration to AWS, we used CloudFormation to define and deploy the entire infrastructure – from EC2 instances and VPCs to databases and load balancers. This automated process drastically reduced deployment time and minimized human error, a significant improvement over manual configuration.

Q 16. How do you manage dependencies between different components in your infrastructure?

Managing dependencies between infrastructure components is crucial for reliable deployments. I employ a combination of techniques to achieve this, often starting with a well-defined infrastructure architecture diagram that clearly illustrates the relationships between various components. This serves as a roadmap for configuration management. Then, I leverage Infrastructure-as-Code (IaC) tools like Terraform or CloudFormation. These allow me to define the entire infrastructure in code, specifying dependencies explicitly. For instance, a database instance might need to be created before an application server can connect to it; Terraform infers this ordering from references between resources or from an explicit `depends_on`, and CloudFormation does the same from references or a `DependsOn` attribute.

Furthermore, I use version control (like Git) to track changes to these definitions and enable rollback. Imagine a scenario where a new web server depends on a specific version of a database. Pinning the database version in the IaC code, versioned together with the web server definition, ensures that a known-compatible combination is deployed, with the database created before the web server. This prevents deployment failures and simplifies troubleshooting.
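Underneath, ordering resources by their dependencies is a topological sort; Terraform builds a comparable dependency graph, which also lets it create independent resources in parallel. A minimal Python sketch (Python 3.9+ for `graphlib`) over a hypothetical set of resources; a circular dependency is reported as an error rather than silently ordered.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Hypothetical resources, each mapped to the resources it depends on.
DEPENDENCIES: Dict[str, Set[str]] = {
    "vpc": set(),
    "subnet": {"vpc"},
    "database": {"subnet"},
    "web_server": {"database", "subnet"},
}


def creation_order(deps: Dict[str, Set[str]]) -> List[str]:
    """Return an order in which every resource comes after its dependencies.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    print(creation_order(DEPENDENCIES))  # ['vpc', 'subnet', 'database', 'web_server']
    try:
        creation_order({"a": {"b"}, "b": {"a"}})
    except CycleError as err:
        print("circular dependency:", err.args[1])
```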

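```hcl
# Example Terraform snippet illustrating dependency management

resource "aws_db_instance" "example" {
  # ... database configuration ...
}

resource "aws_instance" "web_server" {
  # Explicit ordering: Terraform creates the database before this instance.
  # Referencing one of the database's attributes (for example, its address)
  # in this resource would create the same dependency implicitly.
  depends_on = [aws_db_instance.example]

  # ... web server configuration ...
}
```

Q 17. How do you automate the deployment and configuration of applications?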

Automating deployment and configuration is paramount in DevOps. My approach revolves around Infrastructure-as-Code (IaC) and Continuous Integration/Continuous Deployment (CI/CD) pipelines. IaC tools (Terraform, CloudFormation, Ansible, Puppet, Chef) allow me to define the desired state of infrastructure and applications in code, making deployments repeatable and consistent. CI/CD pipelines, often built with tools like Jenkins, GitLab CI, or CircleCI, automate the building, testing, and deployment process, triggered by code commits.

For example, a change to the application code automatically triggers the CI/CD pipeline, which builds a new container image, runs tests, and deploys it to the target environment. This ensures rapid delivery and reduces manual intervention. Furthermore, tools like Ansible or Chef can be integrated into the pipeline to configure the application servers, installing necessary software and adjusting settings according to the defined IaC templates. This holistic approach ensures seamless and automated deployments, improving efficiency and reducing errors.

Q 18. Explain your understanding of different deployment strategies (e.g., blue/green, canary).

Different deployment strategies cater to various needs regarding risk mitigation and downtime. Blue/green deployment involves maintaining two identical environments: ‘blue’, which serves production traffic, and ‘green’, which stands by idle. New deployments are rolled out to the green environment, thoroughly tested, and then traffic is switched from blue to green. If issues arise, traffic can be quickly switched back to the blue environment with minimal disruption. Canary deployment is a more gradual approach. A small subset of users is routed to the new version (the ‘canary’), allowing for monitoring and feedback before a full rollout. This minimizes the risk of widespread issues.

Consider a website update. A blue/green deployment ensures a seamless transition in which users should see little or no downtime. Conversely, a canary deployment allows for iterative feedback, identifying potential issues before they affect the entire user base. The best strategy depends on factors like application complexity, risk tolerance, and user base size.
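The routing decision behind a canary can be sketched as hash-based bucketing. This is one common approach, not the only one (service meshes and load balancers often split traffic by weight per request); the function name and the user-ID input are assumptions.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically send roughly `canary_percent`% of users to the canary.

    Hashing the user ID (instead of choosing randomly per request) keeps each
    user on the same version across requests.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < canary_percent
```

Raising `canary_percent` step by step (say 1, 10, 50, 100) widens the rollout, and users already on the canary stay there because their bucket never changes.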

Q 19. How do you handle configuration management in a microservices architecture?

Configuration management in a microservices architecture requires decentralized ownership: each microservice typically manages its own configuration independently. Tools like Consul, etcd, or Kubernetes (through ConfigMaps and Secrets) are often used for service discovery and configuration storage. They provide a shared, centrally operated store from which each service can dynamically retrieve its own settings instead of hardcoding values.

Configuration as code principles remain essential. Each microservice’s configuration is defined in code (e.g., YAML, JSON) and version controlled. This enables consistent deployments and simplifies rollbacks. Centralized logging and monitoring are also vital to understand the overall health and configuration of the microservices ecosystem. For instance, a change in a specific microservice’s configuration can be tracked and rolled back if necessary without affecting other services, thanks to this decentralized yet coordinated approach.
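A common pattern behind "each service owns its configuration but shares some defaults" is layering: shared defaults, then service-specific settings, then per-environment overrides. A minimal Python sketch, assuming each layer has already been loaded (for example, from version-controlled YAML or a key-value store) into a dictionary; the layer names and keys are hypothetical.

```python
from typing import Any, Dict


def deep_merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict with `override` layered on top of `base` (override wins)."""
    result = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = value
    return result


def load_config(*layers: Dict[str, Any]) -> Dict[str, Any]:
    """Merge layers from lowest to highest precedence, e.g. shared -> service -> environment."""
    config: Dict[str, Any] = {}
    for layer in layers:
        config = deep_merge(config, layer)
    return config


if __name__ == "__main__":
    shared = {"logging": {"level": "INFO", "format": "json"}}
    orders_service = {"port": 8080, "db": {"pool_size": 10}}
    staging_overrides = {"logging": {"level": "DEBUG"}}
    print(load_config(shared, orders_service, staging_overrides))
```

Because `deep_merge` never mutates its inputs, each service can reuse the shared layer without one service’s overrides leaking into another.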

Q 20. Explain your experience with infrastructure automation frameworks.

My experience with infrastructure automation frameworks is extensive, including Ansible, Puppet, Chef, and Terraform. Ansible excels in its simplicity and agentless architecture, making it ideal for quick deployments and configuration changes. Puppet and Chef are more feature-rich, suited for larger, more complex environments requiring strong centralized management. Terraform stands out as a provisioning tool, allowing infrastructure definition and deployment across multiple cloud providers.

Choosing the right framework depends on several factors such as team expertise, project scale, infrastructure complexity, and specific cloud provider preferences. For example, if you need to manage a large enterprise environment with a strong focus on compliance and auditing, Puppet or Chef’s centralized approach might be preferred. However, for a smaller project with a rapid deployment need, Ansible’s simplicity and ease of use can be more advantageous.

Q 21. How do you ensure compliance with security and regulatory standards in your configuration management processes?

Security and compliance are integral to my configuration management practices. I use various methods to ensure adherence to standards. First, keeping IaC in version control makes every configuration change auditable, providing a clear audit trail. This is vital for compliance reporting. Second, security best practices such as least-privilege access and defense in depth are implemented throughout the infrastructure. This minimizes the impact of any security compromise. Third, I regularly incorporate security scanning and penetration testing into the CI/CD pipeline to identify and address vulnerabilities before they reach production.

For example, incorporating tools like Chef InSpec (`inspec`, for compliance testing) or integrating with cloud provider security services within the CI/CD pipeline provides automated security checks as part of the deployment process. Regular security audits ensure that the configuration management system itself is secure and complies with the organization’s security policies.
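Compliance checks like these boil down to comparing observed settings with a policy. Chef InSpec expresses them in its own Ruby-based language; as a language-neutral illustration, here is a small Python sketch that checks `sshd_config` text against a hypothetical two-rule policy. It treats an unset keyword as a violation rather than reasoning about sshd’s defaults.

```python
from typing import Dict, List

# Hypothetical policy, loosely based on common SSH hardening guidance.
SSHD_POLICY: Dict[str, str] = {"PermitRootLogin": "no", "PasswordAuthentication": "no"}


def parse_sshd_config(text: str) -> Dict[str, str]:
    """Parse 'Keyword value' lines (simplified: ignores Match blocks and '=' separators)."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        parts = line.split(None, 1)
        key = parts[0].lower()  # sshd keywords are case-insensitive
        value = parts[1].strip() if len(parts) > 1 else ""
        settings.setdefault(key, value)  # sshd uses the first value it reads for a keyword
    return settings


def check_policy(text: str, policy: Dict[str, str] = SSHD_POLICY) -> List[str]:
    """Return one human-readable violation per policy setting that is not met."""
    settings = parse_sshd_config(text)
    violations = []
    for key, expected in policy.items():
        actual = settings.get(key.lower())
        if actual != expected:
            violations.append(f"{key}: expected {expected!r}, found {actual!r}")
    return violations
```

In a pipeline, a non-empty result would fail the compliance stage and block the deployment.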

Q 22. What are your preferred methods for testing and validating infrastructure configurations?

Validating infrastructure configurations is crucial for ensuring stability and reliability. My preferred methods involve a multi-layered approach combining automated testing with manual verification.

- Automated Testing: I leverage tools like `ansible-lint` for syntax and best-practice checks, and Testinfra for testing the actual state of the infrastructure. For example, after a deployment I might write a Testinfra test asserting `host.socket("tcp://0.0.0.0:80").is_listening`, using `testinfra.utils.ansible_runner.AnsibleRunner(inventory_path).get_hosts("all")` to run it against every host in the Ansible inventory. This ensures consistency across environments. I also use configuration drift detection tools that compare desired and actual states and alert on any discrepancies.

- Manual Verification: While automation is essential, manual checks remain vital. This includes logging into servers to perform basic health checks, ensuring services are running, and verifying application functionality. This ‘hands-on’ approach catches issues that automated checks might miss, like unexpected permission issues or environmental dependencies.

- Integration Testing: I always incorporate integration testing to check how different components of the infrastructure interact. For instance, I might test the interaction between a web server, a database, and a message queue to confirm data flows correctly.

By combining automated and manual methods, I ensure comprehensive validation, mitigating risks and enhancing confidence in the deployed infrastructure.
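Configuration drift detection comes down to comparing a desired state with the observed state. The sketch below shows only that comparison, on plain dictionaries; how the actual state is collected (Ansible facts, a cloud provider API, and so on) is left out, and the keys and values in the usage example are made up for illustration.

```python
"""Minimal configuration drift detector (illustrative sketch)."""
from typing import Any


def detect_drift(desired: dict[str, Any], actual: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return {key: (desired_value, actual_value)} for every key that differs.

    A key missing on either side is reported with None for the absent value.
    Nested dicts are compared recursively and reported with dotted keys.
    """
    drift: dict[str, tuple[Any, Any]] = {}
    for key in sorted(desired.keys() | actual.keys()):
        want, have = desired.get(key), actual.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            for sub_key, diff in detect_drift(want, have).items():
                drift[f"{key}.{sub_key}"] = diff
        elif want != have:
            drift[key] = (want, have)
    return drift


if __name__ == "__main__":
    desired = {"nginx": {"version": "1.24", "worker_processes": 4}, "ntp": "enabled"}
    actual = {
        "nginx": {"version": "1.24", "worker_processes": 2},
        "ntp": "enabled",
        "telnet": "installed",  # something nobody declared
    }
    for key, (want, have) in detect_drift(desired, actual).items():
        print(f"DRIFT {key}: desired={want!r} actual={have!r}")
```

Reporting undeclared items (like `telnet` here) as drift is a deliberate choice: anything present but not described in code is treated as a discrepancy worth alerting on.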

Q 23. Explain your experience with different scripting languages (e.g., Python, Bash, PowerShell).

I’m proficient in several scripting languages, each suited for different tasks within DevOps. My experience includes:

- Python: My go-to for complex automation tasks, especially when interacting with APIs, processing data, or building custom tools. I've used it extensively for building custom modules for Ansible and creating scripts to automate report generation and infrastructure monitoring. For instance, I wrote a Python script that automatically generates daily infrastructure health reports based on data collected from various monitoring tools.

- Bash: My workhorse for quick automation, shell scripting, and system administration. It's invaluable for automating deployments, managing system processes, and creating simple utilities. For example, I wrote a Bash script that automates backing up database instances, ensuring that daily backups are performed reliably and efficiently.

- PowerShell: My primary choice for automating Windows-based systems. I’ve used it extensively for managing Active Directory, configuring IIS servers, and managing Windows services. I created a PowerShell script to automate the installation and configuration of a web application on a Windows Server.

I choose the right tool for the job, understanding the strengths and weaknesses of each language.
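As an illustration of the daily health report script mentioned above, here is a small sketch. It assumes the monitoring data has already been collected into simple per-host records (CPU usage, disk usage, and a list of services that are down); the field names and alert thresholds are hypothetical, and real monitoring tools would each need an adapter to produce these records.

```python
"""Sketch of a daily infrastructure health report (field names are hypothetical)."""
from dataclasses import dataclass
from datetime import date


@dataclass
class HostMetrics:
    hostname: str
    cpu_percent: float
    disk_percent: float
    services_down: list[str]


# Hypothetical alert thresholds, in percent.
CPU_WARN = 85.0
DISK_WARN = 90.0


def host_issues(m: HostMetrics) -> list[str]:
    """List the problems found on a single host."""
    issues = []
    if m.cpu_percent >= CPU_WARN:
        issues.append(f"CPU at {m.cpu_percent:.0f}%")
    if m.disk_percent >= DISK_WARN:
        issues.append(f"disk at {m.disk_percent:.0f}%")
    issues.extend(f"service down: {s}" for s in m.services_down)
    return issues


def build_report(metrics: list[HostMetrics], day: date) -> str:
    """Render a plain-text report that lists only the unhealthy hosts."""
    problems = {m.hostname: host_issues(m) for m in metrics}
    unhealthy = sorted(h for h, found in problems.items() if found)
    lines = [
        f"Infrastructure health report for {day.isoformat()}",
        f"Hosts checked: {len(metrics)}, unhealthy: {len(unhealthy)}",
    ]
    for hostname in unhealthy:
        lines.append(f"- {hostname}: " + "; ".join(problems[hostname]))
    if not unhealthy:
        lines.append("All hosts healthy.")
    return "\n".join(lines)


if __name__ == "__main__":
    sample = [
        HostMetrics("web01", 42.0, 71.5, []),
        HostMetrics("db01", 91.2, 93.0, ["backup-agent"]),
    ]
    print(build_report(sample, date(2024, 1, 15)))
```

Keeping collection and rendering separate means the report logic can be tested without touching any monitoring system, and the text output can be mailed or posted to a chat channel by a scheduler.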

Q 24. How do you handle disaster recovery and business continuity in relation to configuration management?

Disaster recovery and business continuity are critical. My approach centers around redundancy and automated failover mechanisms, heavily reliant on configuration management.

- Redundancy: I design infrastructure with redundancy in mind. This includes using multiple availability zones, geographically dispersed servers, and redundant network paths. Configuration management tools ensure consistent configuration across all redundant systems.

- Automated Failover: I implement automated failover mechanisms using tools like HAProxy or Kubernetes. Configuration management plays a crucial role by ensuring that the failover process seamlessly transitions to the backup system with the exact same configuration as the primary.

- Regular Backups and Snapshots: I employ regular backups and snapshots of both the infrastructure configuration and the application data. Configuration management allows me to easily restore the infrastructure to a known good state in case of a disaster.

- DR Testing: Regular disaster recovery drills are paramount. These simulations allow us to test the effectiveness of our failover and restoration procedures, identify bottlenecks, and refine our processes.

Configuration management is not just about setting up the system; it is the backbone of ensuring the system can recover from failures.
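The requirement that a backup system run with exactly the same configuration as the primary can be checked mechanically before a failover. Below is a minimal sketch that fingerprints each node's rendered configuration with SHA-256 and selects only a standby that is both healthy and configured identically to the primary. The `Node` type, the `healthy` flag, and representing configuration as a JSON-serializable dictionary are assumptions for illustration; the actual traffic switch would still be done by a tool such as HAProxy or Kubernetes.

```python
"""Sketch: pick a failover target only if its configuration matches the primary."""
import hashlib
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    healthy: bool
    config: dict  # rendered configuration; must be JSON-serializable


def config_fingerprint(config: dict) -> str:
    """Stable SHA-256 over the config; sort_keys makes key order irrelevant."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def choose_failover_target(primary: Node, standbys: list[Node]) -> Optional[Node]:
    """Return the first healthy standby whose config matches the primary's."""
    expected = config_fingerprint(primary.config)
    for node in standbys:
        if not node.healthy:
            continue
        if config_fingerprint(node.config) != expected:
            print(f"skipping {node.name}: configuration drift detected")
            continue
        return node
    return None  # no safe target: alert a human instead of failing over blindly


if __name__ == "__main__":
    primary = Node("db-primary", healthy=False, config={"max_connections": 200, "ssl": True})
    standbys = [
        Node("db-standby-a", healthy=True, config={"max_connections": 100, "ssl": True}),
        Node("db-standby-b", healthy=True, config={"ssl": True, "max_connections": 200}),
    ]
    target = choose_failover_target(primary, standbys)
    print("fail over to:", target.name if target else None)
```

The same fingerprint comparison is useful during DR drills: a mismatch found in a rehearsal is far cheaper than one found during a real outage.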

Q 25. Describe your approach to managing infrastructure in different environments (development, testing, production).

Managing infrastructure across different environments requires a structured approach. I employ a consistent configuration management strategy, with variations tailored to each environment’s specific needs.

- Development: This environment prioritizes rapid iteration and experimentation. I use a flexible approach with quick deployments, allowing developers to test changes frequently. This often involves automated provisioning and ephemeral resources.

- Testing: The testing environment mirrors production as closely as possible, ensuring that the application behaves consistently across environments. I use the same configuration management tools as production but may utilize automated testing frameworks more extensively.

- Production: This environment prioritizes stability, security, and scalability. I leverage robust configuration management tools, rigorous change management processes, and comprehensive monitoring to maintain uptime and security.

Utilizing tools like Ansible, Puppet, or Chef, I create different configuration sets, or environments, within a single management framework. This enables consistency, reduces errors, and facilitates smooth transitions between environments.
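One common way to keep a single management framework while varying settings per environment is layering: shared defaults plus a small set of overrides per environment, similar in spirit to Ansible's `group_vars` or Puppet and Chef environments. The Python sketch below shows only the merge logic; the setting names and values are hypothetical.

```python
"""Sketch: base settings plus per-environment overrides (values are hypothetical)."""
import copy
from typing import Any

BASE = {
    "app": {"replicas": 1, "log_level": "debug"},
    "monitoring": {"enabled": True, "alerting": False},
}

OVERRIDES = {
    "development": {},
    "testing": {"app": {"replicas": 2}},
    "production": {
        "app": {"replicas": 6, "log_level": "warning"},
        "monitoring": {"alerting": True},
    },
}


def deep_merge(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Return a new dict: override wins, nested dicts are merged key by key."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


def config_for(environment: str) -> dict[str, Any]:
    """Resolve the full configuration for one environment; unknown names fail loudly."""
    if environment not in OVERRIDES:
        raise KeyError(f"unknown environment: {environment}")
    return deep_merge(BASE, OVERRIDES[environment])


if __name__ == "__main__":
    for env in OVERRIDES:
        print(env, config_for(env))
```

Because every environment is derived from the same base, a setting that differs between testing and production shows up as an explicit override rather than being hidden in a copied file.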

Q 26. How do you collaborate with other teams (e.g., development, security) in a DevOps environment?

Collaboration is key in a DevOps environment. I foster strong relationships with development and security teams through:

- Shared Tools and Processes: I utilize tools and processes that enable easy collaboration, such as Git for version control and collaborative issue trackers. Everyone has visibility into the infrastructure configurations.

- Communication and Feedback Loops: I actively solicit feedback from development and security on infrastructure configurations. Regular communication ensures that requirements are met and potential issues are identified early.

- Infrastructure as Code (IaC): With IaC, all configurations are stored in version control, which provides transparency and makes collaboration easier. Every change is reviewed before it is applied, reducing the risk of configuration errors.

- Security Integration: Security is integral to the process. I work with the security team to ensure all security best practices are implemented in the infrastructure configurations. This includes things like secure credential management, regular security audits, and penetration testing.

By working collaboratively, we minimize misunderstandings, improve overall efficiency, and deliver higher quality results.

Q 27. Explain how you would approach building a CI/CD pipeline for a new application.

Building a CI/CD pipeline involves a series of steps. My approach would involve the following:

- Source Code Management: Using Git (or another SCM), I establish a repository for both the application code and the infrastructure-as-code. This allows for version control and collaboration.

- Continuous Integration (CI): I set up a CI server (e.g., Jenkins, GitLab CI) to automate the build process. The CI server would run unit tests and build the application artifacts.

- Infrastructure as Code (IaC): I utilize IaC tools (e.g., Terraform, Ansible) to define the infrastructure. This would ensure consistent and repeatable deployments across environments.

- Automated Testing: I integrate automated tests (unit, integration, and system tests) into the pipeline to verify the application’s functionality before deployment.

- Deployment Automation: I automate the deployment process using tools such as Ansible, Chef, or Puppet. This ensures that the application is deployed consistently and reliably across environments.

- Continuous Delivery/Deployment (CD): The CD phase automates the release of the application to different environments (development, testing, production). This may involve strategies like blue/green deployments or canary deployments.

- Monitoring and Logging: I implement monitoring and logging to track the application’s performance and identify potential issues. This feedback loop is crucial for continuous improvement.

By automating these steps, we can achieve faster deployments, reduced errors, and increased efficiency. The specific tools used may vary depending on the project requirements and existing infrastructure.
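To illustrate the canary strategy mentioned in the CD step, here is a minimal control-loop sketch: traffic to the new version is increased in steps, the error rate is checked after each step, and the rollout is rolled back if the rate crosses a threshold. The step percentages, the threshold, and the `set_traffic` and `error_rate` callables are hypothetical stand-ins for whatever load balancer and monitoring APIs a real pipeline would use.

```python
"""Sketch of a canary rollout loop; the callables stand in for real platform APIs."""
from typing import Callable, Sequence


def canary_rollout(
    set_traffic: Callable[[int], None],  # route N% of traffic to the new version
    error_rate: Callable[[], float],     # observed error rate of the new version, 0.0 to 1.0
    steps: Sequence[int] = (5, 25, 50, 100),
    max_error_rate: float = 0.01,
) -> bool:
    """Return True if every step passed, False if the rollout was rolled back."""
    for percent in steps:
        set_traffic(percent)
        # A real pipeline would wait for a bake period here before measuring.
        rate = error_rate()
        if rate > max_error_rate:
            set_traffic(0)  # roll back: send all traffic to the stable version
            print(f"rolled back at {percent}%: error rate {rate:.2%}")
            return False
        print(f"{percent}% of traffic healthy (error rate {rate:.2%})")
    return True


if __name__ == "__main__":
    history: list[int] = []
    observed = iter([0.002, 0.004, 0.03])  # simulated: errors spike at the third step
    ok = canary_rollout(history.append, lambda: next(observed))
    print("promoted" if ok else "rolled back", history)
```

Passing the platform operations in as functions keeps the rollout policy testable on its own; a blue/green deployment is effectively the special case with a single step that switches 100% of traffic at once.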

Key Topics to Learn for DevOps Configuration Management Interview

- Infrastructure as Code (IaC): Understand the principles and practical application of IaC tools like Terraform, Ansible, Puppet, or Chef. Be prepared to discuss their strengths and weaknesses in various scenarios.

- Version Control Systems (VCS): Demonstrate proficiency in Git, including branching strategies, merging, resolving conflicts, and using Git for managing infrastructure code.

- Configuration Management Tools: Gain in-depth knowledge of at least one major configuration management tool (e.g., Ansible, Puppet, Chef, SaltStack). Practice implementing configurations, managing nodes, and troubleshooting issues.

- Continuous Integration/Continuous Delivery (CI/CD): Explain how configuration management integrates with CI/CD pipelines to automate deployments and infrastructure updates. Be ready to discuss tools like Jenkins, GitLab CI, or Azure DevOps.

- Cloud Platforms: Familiarize yourself with cloud-based infrastructure management using services like AWS, Azure, or GCP. Understand how configuration management tools interact with these platforms.

- Security Best Practices: Discuss security considerations in configuration management, including access control, secrets management, and compliance requirements.

- Monitoring and Logging: Explain how to monitor the health and performance of managed infrastructure and use logging to troubleshoot configuration issues.

- Problem-Solving and Troubleshooting: Be prepared to discuss your approach to troubleshooting configuration discrepancies, failed deployments, and other common challenges.

- Automation and Scripting: Showcase your skills in scripting languages like Python or Bash to automate tasks related to configuration management.

Next Steps

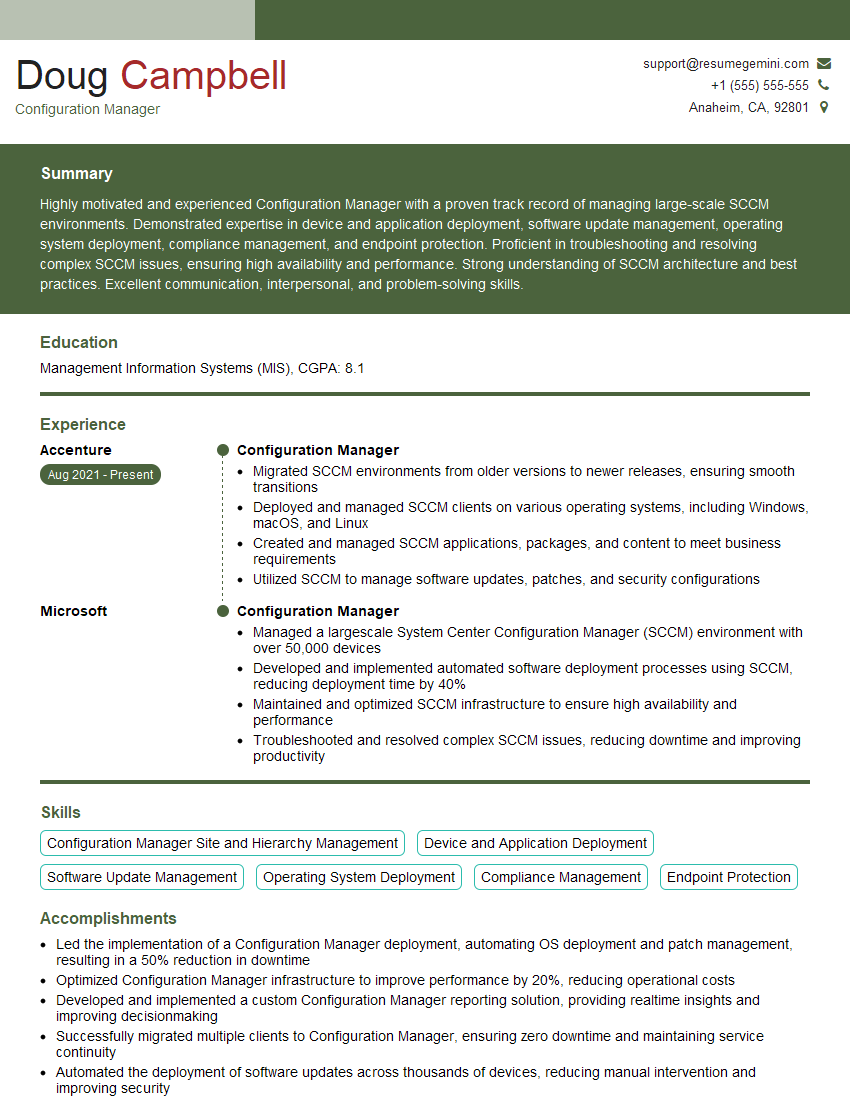

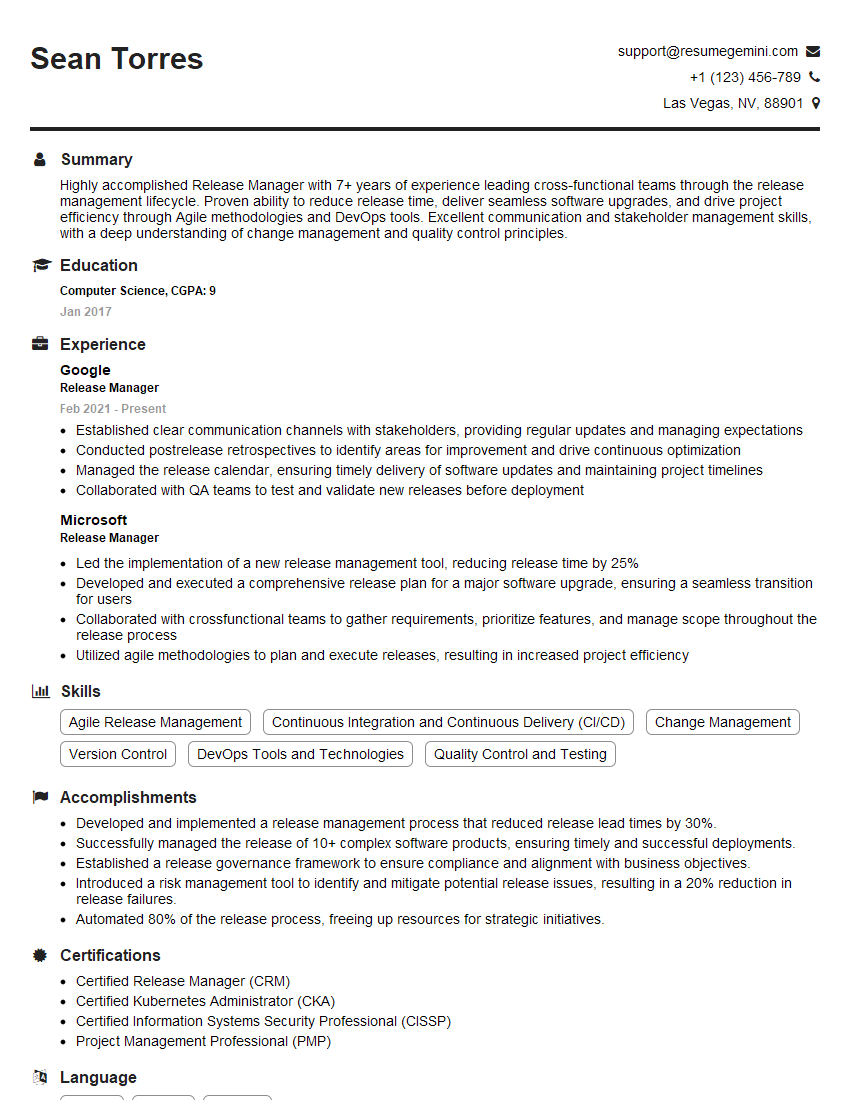

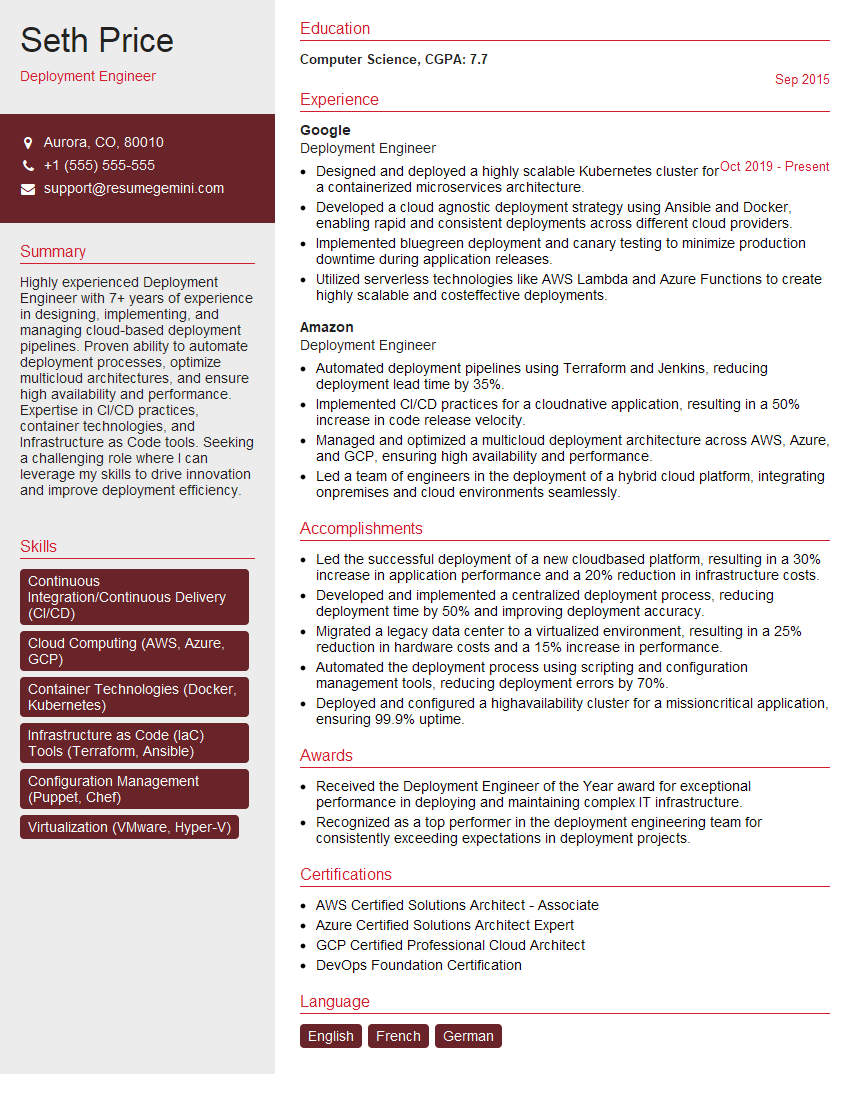

Mastering DevOps Configuration Management opens doors to exciting and high-demand roles within the technology industry. It significantly enhances your ability to build, deploy, and manage robust and scalable systems. To maximize your job prospects, creating a strong, ATS-friendly resume is crucial. ResumeGemini can help you build a professional and impactful resume that highlights your skills and experience effectively. They offer examples of resumes tailored to DevOps Configuration Management roles, helping you present yourself in the best possible light. Invest time in crafting a compelling resume – it’s your first impression to potential employers.
